2022

[2]\fnmLei \surBai

1]\orgnameThe University of Sydney, \orgaddress\postcode2006, \stateNSW, \countryAustralia [2]\orgnameShangai AI Laboratory, \postcode200232, \stateShanghai, \countryChina 3]\orgnameSenseTime Group Ltd., \countryChina

Towards Frame Rate Agnostic Multi-Object Tracking

Abstract

Multi-Object Tracking (MOT) is one of the most fundamental computer vision tasks that contributes to various video analysis applications. Despite the recent promising progress, current MOT research is still limited to a fixed sampling frame rate of the input stream. They are neither as flexible as humans nor well-matched to industrial scenarios which require the trackers to be frame rate insensitive in complicated conditions. In fact, we empirically found that the accuracy of all recent state-of-the-art trackers drops dramatically when the input frame rate changes. For a more intelligent tracking solution, we shift the attention of our research work to the problem of Frame Rate Agnostic MOT (FraMOT), which takes frame rate insensitivity into consideration. In this paper, we propose a Frame Rate Agnostic MOT framework with a Periodic training Scheme (FAPS) to tackle the FraMOT problem for the first time. Specifically, we propose a Frame Rate Agnostic Association Module (FAAM) that infers and encodes the frame rate information to aid identity matching across multi-frame-rate inputs, improving the capability of the learned model in handling complex motion-appearance relations in FraMOT. Moreover, the association gap between training and inference is enlarged in FraMOT because those post-processing steps not included in training make a larger difference in lower frame rate scenarios. To address it, we propose Periodic Training Scheme (PTS) to reflect all post-processing steps in training via tracking pattern matching and fusion. Along with the proposed approaches, we make the first attempt to establish an evaluation method for this new task of FraMOT. Besides providing simulations and evaluation metrics, we try to solve new challenges in two different modes, i.e., known frame rate and unknown frame rate, aiming to handle a more complex situation. The quantitative experiments on the challenging MOT17/20 dataset (FraMOT version) have clearly demonstrated that the proposed approaches can handle different frame rates better and thus improve the robustness against complicated scenarios.

keywords:

Frame Rate Agnostic, Multi-Object-Tracking, Multi-Frame-Rate, Frame Rate Agnostic MOT Framework, Frame Rate Information Inference and Encoding, Frame Rate Agnostic Association, Periodic Training Scheme

1 Introduction

Multi-Object Tracking (MOT) is one of the most fundamental computer vision tasks that help machines recognize the world automatically and intelligently, contributing to a great number of industrial applications of video analysis. Along with the development of deep learning, the MOT methods evolve from motion-color-texture tracking (Takala and Pietikainen, 2007; Zhang et al, 2008) to motion-appearance-mixed tracking (Chu et al, 2017; Sadeghian et al, 2017), and are jointly trained with detection to simplify the procedures and reduce computational cost (Kieritz et al, 2018; Zhou et al, 2020; Zhang et al, 2021).

Despite the aforementioned promising progress, we argue that current MOT algorithms are still not intelligent enough and cannot well satisfy industrial demands since they all work on videos with a fixed frame rate. On the one hand, humans can track objects in videos with diverse frame rates, even in a situation lacking any information on the streaming frame rate. However, the recent State-Of-The-Art (SOTA) trackers (Zhou et al, 2020; Wu et al, 2021; Wang et al, 2021; Zhang et al, 2021, 2022) were not robust to frame rate changes. As shown in Fig. 1, we evaluated several recent trackers (dashed lines in Fig. 1) on MOT17 and MOT20 challenges with different input frame rates and have found that their tracking capability decreases a lot when the frame rate is reduced. The ByteTrack (Zhang et al, 2022) is much better than CenterTrack (Zhou et al, 2020) in the normal frame rate setting but is not advanced in lower frame rate settings, showing that some tracking techniques are frame rate sensitive. On the other hand, different applications require MOT models to work on different sampling rates. Some applications (e.g., autonomous driving) require the tracker to have low latency and provide input videos of very high sampling frame rates, but more others (e.g., large-scale trajectory retrieval and tracking-based crowd counting, Han et al (2022)) only require the MOT algorithms to work on different lower frame rates. These applications care less about the frequent perception of object locations at every single time step but focus more on trajectory integrity along a greater temporal range. Although training and deploying a separate tracker for each frame rate is feasible, this trivial solution is neither convenient nor efficient because developing, selecting, and deploying the best tracker for each application and frame rate is laborious and expensive for large systems. Besides, it assumes that the frame rate is available during testing, which may not always be true. Thus, it is necessary to propose trackers that can comprehend videos with different frame rates like humans. These trackers should be general, unified, and frame rate independent. This will be not only a reasonable objective for a smarter perception system but also an efficient solution for many real-world situations in terms of bandwidth, storage, and computation.

To train a unified frame rate agnostic tracker, a straightforward manner is training a model of the classical design on the dataset with multiple diverse frame rates (i.e., frame rate agnostic training). However, this vanilla design does not work well due to the following two challenges.

First, the optimal matching rules of motion-appearance relations are different at different input frame rates. For example, motion cues are usually more reliable when the input frame rate is high, because of a smaller movement between adjacent frames. While in lower-frame-rate settings, appearance cues become more important. Two detections with similar appearances may be unlikely to be judged as the same object in higher frame rate videos given the large spatial distance but are more likely to be the same object in lower frame rate videos. Classical association models lack the mechanisms to handle these complex motion-appearance relations well.

Second, involving multi-frame-rate data in traditional frame-pair association training schemes leads to a larger gap between training and inference. Specifically, post-processing procedures that are not included in the training stage but applied in the inference stage will change the detected object locations, resulting in the input data of the association networks in the training stage being different from that in the inference stage. These changes are small in normal (higher) frame rates and thus can be ignored in the traditional training scheme. However, these changes are enlarged in the lower frame rates and are not negligible in the multi-frame-rate training.

To tackle these challenges and achieve a unified frame rate agnostic tracker, we propose the Frame Rate Agnostic MOT framework with a Periodic training Scheme (FAPS), which consists of two effective techniques. For the first challenge, a unified Frame Rate Agnostic Association Module (FAAM) is proposed to handle various frame rate settings. Specifically, FAAM first employs the available frame rate information (e.g., the exact frame rate) to generate the frame rate embedding and infer the frame rate aware attention, which will further be multiplied with the prediction embedding in a channel-wise manner to predict final association scores. For the cases where the exact frame rate is known, the frame rate embedding can be directly obtained by the frame rate cosine embedding. For the cases where the exact frame rate is unknown during testing, we propose to use the Inter-frame Best-matched Distance Vector (IBDV) to infer the frame rate information. To calculate the IBDV of two adjacent frames, we first calculate the normalized positional distance matrix, and then select the minimum distance of each row in the matrix, sorting them to form a vector. IBDV encodes the distribution of object movements and thus provides information to infer the frame rate. For the second challenge, a Periodic Training Scheme (PTS) is designed to enhance the frame rate agnostic training. Before starting the training, we sample the tracking patterns via running previous model checkpoints on the real inference pipeline including all post-processing steps. The tracking patterns record all information we need (i.e., positions, motion predictions, and cached features) to simulate the inference stage environment during training. We assume these patterns of the tracker to have negligible variation within a short period, and thus we divide the whole training procedure into several training periods and only update patterns between periods. During training, instances not matching those patterns will be discarded because they probably do not appear in inference time, thus reducing the difficulties of frame rate agnostic training. The remaining instances will be fused with the recorded patterns to reduce the input variance and will be turned into affinity features. With the proposed approaches, we successfully improved the accuracy of the tracker, especially at lower frame rate settings.

To make a fair evaluation for the FraMOT task, we simulate the multi-frame-rate settings using existing benchmarking MOT datasets and develop metrics for the evaluation. Moreover, we test the methods of FraMOT task in two modes, i.e., known-frame-rate mode and unknown-frame-rate mode, reflecting different deployment demands and bringing a more challenging yet practical MOT problem to the community. Section 5.1.1 shows more details of the evaluation method.

In summary, our contributions are four-fold:

-

•

We, for the first time, raise the problem of Frame Rate Agnostic Multi-Object-Tracking (FraMOT), which targets on learning a unified model to track objects in videos with agnostic frame rates. Compared with the classical MOT, FraMOT is more intelligent and also practical for large vision systems.

-

•

We propose a Frame Rate Agnostic MOT framework with a Periodic training Scheme (FAPS), which is the first frame rate agnostic MOT baseline attempting to effectively handle various input frame rates with a single unified model for a more robust MOT tracker in industrial scenarios.

-

•

We propose a Frame Rate Agnostic Association Module (FAAM) to make use of given or inferred frame rate cues for aiding the identity matching, leading to a more intelligent tracker.

-

•

We propose a Periodic Training Scheme (PTS) for frame rate agnostic MOT model training, providing the simulation of inference environment and thus reducing the training-inference gap of data association.

Quantitative experiments on the challenging MOT17 and MOT20 (FraMOT versions) datasets clearly demonstrate the effectiveness of our proposed approaches at various input frame rates, which provides new insights for a more intelligent tracking solution. Code is available at https://github.com/Helicopt/FraMOT.

2 MOT in the Industry: A Context

2.1 Scenarios

Low Latency MOT. In specific contexts, MOT trackers must function with minimal latency, provided that there is adequate computational capacity accessible. This classification encompasses use cases where automated assessments have the potential to result in significant outcomes. For example, real-time surveillance systems that require immediate response to potential security threats and autonomous vehicles that must react promptly to changes in their environment. In these scenarios, the ability to perform MOT with low latency can greatly enhance the effectiveness and safety of the system.

Balanced MOT. In specific instances of MOT applications, certain errors may not result in catastrophic consequences. For such use cases, it is crucial that applications achieve a satisfactory level of accuracy while simultaneously maximizing their processing throughput capacity. The best approach in these scenarios is to strike a balance between processing speed and accuracy. This usually leads to the use of low frame-rate MOT systems. For example, in crowd monitoring applications, the goal may be to track the overall movement patterns and density of a crowd rather than identifying individual people with perfect trajectories. In traffic monitoring applications, the focus may be on detecting traffic flow and congestion rather than identifying every single vehicle. Similarly, in warehouse or logistics applications, the priority may be on tracking the overall movement of goods rather than identifying every single item with perfect accuracy. In these scenarios, a satisfactory level of accuracy coupled with high processing throughput can still provide useful insights and enable effective decision-making.

Even for applications that require a detailed path of an object, a high perception frequency may not always be necessary. For instance, in large-scale video surveillance databases, it may be sufficient to determine the location of a specific target every few seconds rather than obtaining 25 locations per second. Another example could be in sports analysis, where the movement of players and the ball needs to be tracked. However, not every frame of the video needs to be analyzed, and a lower frame rate may suffice to capture the key moments of the game.

2.2 Robustness

Deployment Robustness. Deploying MOT algorithms is a challenging task, as the trackers involve numerous hyperparameters that must be tailored for different use cases. The frame rate is a crucial factor that impacts these hyperparameters. However, adjusting a large number of hyperparameters for a diverse range of cameras is impractical. To ensure ease of large-scale deployment, which we refer to as deployment robustness, the MOT trackers need to exhibit greater intelligence and adaptability to diverse scenarios, camera views, and frame rates.

Hardware Robustness. In addition to deployment concerns, hardware-related issues are also crucial factors that can impact the robustness of MOT algorithms. For video collection hubs located far away from the data center, network issues caused by limited bandwidth or overloaded hardware capacity are unavoidable. These issues often manifest as lost packets and video lag. Furthermore, in some cases, cameras may need to be manually configured to adopt different sampling rates to accommodate temporary computational outages and recovery. All of these situations can result in dynamic changes to the sampling frame rates in the video streams. The need for hardware robustness, which involves addressing hardware-related issues, underscores the importance of frame-rate agnostic MOT algorithms. These algorithms should be capable of adapting to changes in sampling frame rates caused by hardware-related issues, while maintaining their ability to track objects robustly.

2.3 Frame Rate Intelligence

The variation in frame rate results in changes in the motions of objects. The challenge of handling different frame rates ultimately lies in managing different motion-appearance relationships. In some cases, the frame rate may not accurately reflect the motion distribution. For instance, a 25 fps video of a highway may have a similar motion distribution to a 1 fps pedestrian video. In such situations, using motion descriptors instead of relying solely on the frame rate may be more effective. However, in some cases, the motion descriptors may not be meaningful, such as when there are only a few objects with large covariance of motions, and the exact frame rate number may provide precise information. Therefore, we believe that an MOT solution with frame rate intelligence should be developed under two different modes: known frame rate mode and unknown frame rate mode. These modes can address the issues of different motion-appearance relationships and allow for more effective tracking.

3 Related Works

3.1 MOT Trackers

Most methods for MOT follow the tracking-by-detection (TBD) paradigm (Wojke et al, 2017; Tang et al, 2017; Milan et al, 2017; Sadeghian et al, 2017; Chu et al, 2017; Xu et al, 2019; Zhou et al, 2020; Zhang et al, 2021). Based on the TBD paradigm, recent MOT methods could be categorized into some different groups.

Motion Tracking. Some methods (Milan et al, 2017; Saleh et al, 2021) focused on motion tracking. Milan et al (2017) proposed to model the target motion states using RNNs and predict target motions in future frames. Saleh et al (2021) proposed a motion model to score the tracklets, and was able to improve the performance by tracklet inpainting.

Complex Feature Learning. Other than motion features, learning appearance features is also an important part of modern MOT methods (Wojke et al, 2017; Sadeghian et al, 2017; Chu et al, 2017). Wojke et al (2017) proposed to borrow techniques from the Re-ID task to aid the MOT task. Sadeghian et al (2017) proposed to encode motion, appearance, and interaction information for data association. Chu et al (2017) proposed to use target-specific trackers to model the appearance of each target.

Joint Detection and Tracking. Recently joint learning of detection and tracking is also a trending branch of MOT (Zhou et al, 2020; Zhang et al, 2021). Zhou et al (2020) proposed a point-based framework for joint detection and tracking. Zhang et al (2021) proposed to fairly balance the detection and tracking part in the joint task. Some other methods focus on the optimization of the association of trajectories.

Association as Graph Partition. While the Hungarian Algorithm is commonly used in online MOT data association, some other graph-based association methods are developed for near-online or offline MOT. For example, Tang et al (2016, 2017); Hornakova et al (2020) developed different graph partition methods for offline data association. Yoon et al (2019) further proposed to add structural constraints on the data association. Hu et al (2020) formulates the data association as a rank-1 tensor approximation problem.

Cross-Camera MOT. Besides single-camera MOT, Wen et al (2017); Ma et al (2021) contributed to the cross-camera MOT problem. The cross-camera MOT problem focuses on building up relationships between different cameras so that identities can be associated among multiple different cameras.

Nevertheless, all these methods have the common assumption of a fixed input frame rate, which makes the methods vulnerable in some industrial scenarios where input frame rate sampling strategies may be various. Our proposed approaches aim to establish a frame rate agnostic MOT solution that is robust to frame rate changing via unified frame rate inferring and encoding mechanisms.

3.2 MOT Training

There are mainly two types of MOT training, i.e., with the association in end-to-end training and without association in end-to-end training. Most recent research on joint detection and tracking separates the feature training and the association training. Typically, Wojke et al (2017); Zhang et al (2021) utilized deep models to perform detection and generate appearance features for tracking, and the tracker itself was not data-driven. Some hand-crafted rules were used for target association. These rules might work well in some situations, but would be difficult to be transferred to new environments. Brasó and Leal-Taixé (2020); Li et al (2020); Sadeghian et al (2017); Chu and Ling (2019) presented their methods with association in end-to-end training. However, most methods still had a post-processing stage, with hand-crafted target management. These post-processing steps were not considered in the association training. It might have minor impacts when the streaming frame rate is high and the movements of targets are small, but we found that it actually has large impacts when running a mixed frame rate training. This problem leads to a gap between association training and inference, which is even enlarged in FraMOT. The post-processing including target management and smoothing techniques like Kalman Filter also helps reduce the training difficulties. If we still follow the traditional training scheme and ignore the impacts of the post-processing, we can hardly achieve a promising tracking performance. Therefore, in this paper, we propose a new training scheme that includes the impacts from post-processing into the training stage of association, in a way of periodically updating. We thus make the training and inference environment almost the same and reduce the challenge of mixed frame rate training. Maksai and Fua (2019) also proposed to train a tracker in an iterative way, which aims to enrich the training data by adding wrongly tracked samples of the previous checkpoint. Our method differs from this work in two aspects: first, our method is for the online FraMOT problem and improvements are mainly from low frame rate scenarios, while Maksai and Fua (2019) works on normal frame rate multiple hypothesis tracking (MHT), which is for a totally different purpose; second, our approach is not enriching the training data but doing the opposite, aiming to reduce the gap and reduce unnecessary challenges in frame rate agnostic tracking. Our work is technically complementary to this work.

3.3 MOT Evaluation

CLEAR metrics (Bernardin and Stiefelhagen, 2008), the most popular MOT evaluation metrics up to now, propose to use Multi-Object-Tracking Accuracy (MOTA) as the performance indicator for MOT. MOTA considers the False Positive (FP), False Negative (FN), and IDentity SWitch (IDSW) as three critical terms for MOT. However, the IDSW number is usually far smaller than the FN number, which makes detection quality dominate the task. Later then, the Identity metrics (Ristani et al, 2016) and Higher Order Tracking Accuracy (HOTA) (Luiten et al, 2021) metrics are proposed to solve such a problem. The core metric of Identity metrics, IDF1 score, is designed to evaluate MOT in the multi-camera MOT task. IDF1 basically indicates the largest ratio of consistent tracking. Due to the optimization problem in Identity metrics, IDF1 takes a long time to compute, especially when the video to be evaluated contains thousands of frames and hundreds of objects. HOTA is then proposed to solve these drawbacks of MOTA and IDF1. In the experiment of the HOTA metric, surveys were conducted and it shows that HOTA can reflect the judgments of human beings about tracking quality in a more accurate way.

Although these metrics provide a general definition of tracking quality, it is still difficult to predict the actual performance in some complicated scenarios, e.g., a so-called better tracker may become much worse when the input frame rate changes. Unlike the change of resolution size, changing the frame rate leads to a more complicated setting which usually involves dozens of modifications of the method details. We believe that a robust tracker should have the capability of handling different frame rates. As current evaluation methods are not sufficient for frame rate agnostic MOT evaluation, we attempt to propose new evaluation methods and provide a wider analysis of tracking quality in application scenarios.

4 Frame Rate Agnostic MOT Framework with a Periodic Training Scheme

In order to tackle the emerging challenges associated with the FraMOT problem, it is imperative to develop a novel framework that incorporates multi-frame-rate inputs and mechanisms to handle dynamic motion-appearance relationships. Additionally, the training regime of the framework should prevent an augmented gap between the training and inference stages caused by non-parametric post-processing steps under a low-frame-rate setting in the training phase.

This section presents our Frame Rate Agnostic MOT framework with a Periodic training Scheme (FAPS), which has been specifically designed to address the aforementioned objectives and overcome the challenges associated with FraMOT.

4.1 Overview

There are three different modules in the framework, i.e., Joint Extractor Module (JEM), Association Module (AM), and Trajectory Management Module (TM). The JEM generates the detection results and corresponding appearance feature embeddings from raw images. The AM associates the new detection results with existing trajectories. The TM determines the initiation and termination of all trajectories, making them smoother and handling their status.

The core module of the proposed framework is the Association Module. We design a new Frame Rate Agnostic Association Module with mechanisms to encode frame rate information, providing the capability to handle complex motion-appearance relations of the various frame rates. At the same time, the framework is trained with the proposed Periodic Training Scheme (PTS), which takes all post-processing steps into consideration, providing a simulation of the inference stage environment and thus reducing the gap of data association between training and inference.

Fig. 2 illustrates the overview of the proposed framework. The training pipeline follows the proposed PTS consisting of several training periods, each period contains two stages, i.e., tracking patterns generation stage, and tracking module training stage. Specifically, the tracking patterns generation stage conducts a forward pass using the model checkpoints from the last period and generates the tracking patterns. The tracking patterns include some inference stage information that helps simulate the inference run-time (details in 4.2). In the module training stage, the Association Model takes the output of the JEM and the tracking patterns as input, generates the affinity features, predicts the association scores using the proposed Frame Rate Agnostic Association Module (FAAM), and is supervised by the corresponding association ground-truth signals, as shown in Fig. 3. Especially, during training, instead of passing the input data to the FAAM directly, we design an affinity feature generation module to adjust the affinity features utilizing the generated tracking patterns via pattern matching and fusion. Then the adjusted affinity features will be passed through the FAAM. The FAAM networks utilize the frame rate information to infer the frame rate aware attention and enhance the association prediction. During Inference, the model checkpoints of the last period will be used. The association model only takes the output of the JEM as input, the tracking patterns are no longer needed and the pattern matching and fusion step is removed. The inference pipeline is the same as the tracking patterns generation pipeline.

4.2 Periodic Training Scheme

As mentioned before, one of the challenges that FraMOT brings to us is that the diverse video frame rates enlarge the gap between frame-pair association training and actual inference run-time. The most straightforward solution is adding these inference stage post-processing procedures to the training stage, which is hard to implement since most of these procedures can not be backward propagated. To address this issue, we propose to reflect the inference time post-processing steps during training by considering the tracking patterns in the association network (detailed in Section 4.3.1), which is generated via the Periodic Training Scheme.

Here, the tracking patterns are some records that can reflect the inference time patterns, which depend on the post-processing steps of the tracker. In this paper, the tracking patterns , where , include the location of each tracked target at every frame (denoted by ), the Kalman-Filter-predicted movement based on the previous trajectory (denoted by ), the cached appearance feature embedding (denoted by ), as well as the level index (denoted by ) of the two-stage association strategy because we use a cascade association strategy as some previous works (Yu et al, 2016; Zhang et al, 2021). Other patterns such as the tracklet occlusion status can also be included if the tracker does have a carefully-designed occlusion management.

The Periodic Training Scheme aims at generating these tracking patterns in a periodic manner considering that the inference stage tracking behaviors are not going to change significantly within a short training period. As shown in Fig. 2, we set up multiple training periods and re-sample the tracking patterns before each period starts. In the tracking pattern generation stage of a new period, we utilize the trained checkpoints of the previous period to build an MOT tracker, conduct tracking on full training videos, and generate the tracking patterns that will be used in the affinity feature generation pipeline (see Section 4.3.1). Then we train all the models using the training data and tracking patterns. When a training period is done, we then have the updated checkpoints to generate tracking patterns for the next period. For the first period, we use a pre-trained joint extractor and an IoU-based hand-crafted association module to generate the patterns instead of using randomly initialized checkpoints. For example, in this paper, we use the pre-trained YOLOX detection framework (Ge et al, 2021) with appearance embedding branch as the JEM, a linear combination of the spatial distances and appearance similarities as the AM, and the commonly used Hungarian Algorithm and Kalman Filter with thresholding strategies for target initiation and termination as the TM (detailed in Section 4.4). For the later periods, we use the proposed Frame Rate Agnostic Association Module (FAAM), and the architecture of JEM and TM remains the same.

The whole pipeline is shown in Procedure 1. is the overall loss which combines detection losses and id loss from the JEM, and association loss from the AM (see Section 5). and are learning rates.

4.3 Frame Rate Agnostic Association

The Association Model (AM) takes the detection results and corresponding appearance feature embeddings from the JEM and the tracking patterns (for training only) provided by the PTS as inputs and generates the affinity scores supervised by ground-truth labels. As shown in Fig. 3, there are two parts in the AM, i.e., Affinity Feature Generation Module and Frame Rate Agnostic Association Module.

4.3.1 Affinity Feature Generation

Training Stage. Given a frame pair of frame and , where is not a constant (i.e., the sampling interval is not fixed), the JEM generates the detection results , , and the corresponding appearance features , , where denotes the numbers of proposals of frame , contains the bounding box and confidence score of proposal in frame , and represents the appearance feature vector of proposal in frame , respectively. At the same time, we have the tracking patterns from frame , denoted by , where consists of a bounding box location , an inference stage temporal prediction based on the inference environment, a saved appearance feature embedding from the inference time, as well as the matching level index in the two-stage association. We then construct a pattern matching and fusing pipeline to simulate the inference stage tracking environment for frame rate agnostic training. As shown in the left part of Fig. 3, only detections that are matched with a certain will be chosen for the training, otherwise, they will be discarded because they do not appear in the inference stage (e.g., being filtered out). In this work, we define a is matched with a pattern of the same frame if and only if has the largest Intersection Over Union (IoU) with among all available patterns, and their IoU () is larger than a threshold (e.g., 0.7 as used in our work). Then the matched instances are fused with the tracking patterns, and it generates

| (1) |

The corresponding appearance features of are denoted by . Then the corresponding embedding of is

| (2) |

After matching and fusing, the and are then used to calculate some affinity features for the association networks. We include three distance measurements and one environment variable as the affinity features, denoted by , where

| (3) |

denotes the normalized euclidean distance and is the matching level index in the two stage association where appears in the tracking patterns.

Inference Stage. In the inference process, it is not mandatory to match the tracking patterns with the observations of the previous frame. The primary goal of the matching is to mimic the environment during inference time, including post-processing. However, during the actual inference stage, such a matching is unnecessary. Consequently, the generation of affinity features becomes simpler since the tracking patterns can be directly employed:

| (4) |

where is the tracking patterns collected from the previous frame and is the detection results of current frame . To put it differently, the tracked trajectories can be viewed as cached in the tracking patterns. The association results of the tracking patterns and the new observations are exactly the association results of the tracked trajectories and the new observations.

4.3.2 Frame Rate Agnostic Association Module

The affinity features (in shape of , is the sample amount and is the dimension of each sample) are then fed into a 4-layer neural network and transformed to more discriminative features (in the shape of ), as shown in the right part of Fig. 3. On the other branch generates the frame rate aware attention. The frame rate cues are first encoded into a frame rate embedding by the frame rate encoder (see the next paragraph), then the embedding is pooled into the shape of , followed by the 3-layer frame rate sub-net and finally the channel size is reduced to the same as the feature branch. The output of this branch is denoted by (in the shape of ). The final output prediction of the association module is

| (5) |

denotes the -th element of and denotes the -th element of . Finally, we apply a binary cross entropy loss for the prediction with label 1 for the same identity and 0 for a different one. Specially, the label of a false detection and a true detection is 0 (they are not the same identity); the pair of two false detections will be removed from the training (unable to judge whether they are the same background region). The overall loss , where and is the detection losses and id loss for the joint extractor, following the similar design of Zhang et al (2021).

Frame Rate Encoder. As discussed in Section 2.3, the frame rate could either be known or unknown during the deployment stage. Thus, two frame rate encoders are introduced correspondingly.

For known frame rate, we simply use the cosine embedding of the given frame rate. Specifically, the -th element of the frame rate embedding ), where is the frame rate number, is a constant scaling factor, and is the embedding dimension.

For unknown frame rate, we propose to utilize the Inter-frame Best-matched Distance Vector (IBDV) as the frame rate indicator. Specifically, IBDV describes the distribution of the normalized distances between instances in the two frames if the instances are best matched. This distribution roughly encodes the frame rate information, because best-matched distances will increase when the frame rate is reduced. As shown in Fig. 4, we first find the best matching object pairs through some pre-defined criteria, e.g., minimizing spatial distances or appearance feature distances, and then put all normalized distance values of these pairs into a vector. To make its dimension unchanged, we sort the values in the vector and interpolate them into a fixed shape. We investigate the effects of different criteria to find the best matching pairs in 5.4.5. In our final solution, according to the results on MOT datasets, we choose the ‘spatial distances” as our criterion, i.e., we choose the pairs minimizing the sum of the spatial distances as our best matching pairs. Mathematically, when the frame rate is unknown,

| (6) |

where is the -th matched pair, denotes the linear interpolation and denotes sorting the values of a vector in non-decreasing order.

4.4 Online Tracking

For online tracking, we adopt a simple but effective pipeline that consists of steps including detection filtering, Kalman-Filter-based movement prediction, and a two-stage association strategy. The target management cycle is stated in Procedure 2. We first filter out background objects with a threshold , then predict the movement for each tracked target using Kalman-Filter. In the next, we perform two different stages of association, which is a commonly used technique to reduce fragments (Yu et al, 2016; Zhang et al, 2021). For the first stage, only detection results of high confidence (confidence score greater than ) will be put into the matching pool. For the second stage, the remaining unmatched tracked targets will be again put into the matching pool and matched with the less confident detection results (confidence score less than but greater than ). The association step will find out the best matching pairs minimizing the sum of pair distances (or maximizing the sum of pair scores). This can be easily calculated by the Hungarian Algorithm. Finally, the remaining unmatched detection results of high confidence (confidence score greater than ) will be appended to the tracked target list. If a target in the tracked target list is unmatched for a long interval , then it will be dropped from the list.

5 Experiment

In this section, we provide quantitative experiment results, ablation studies, and in-deep analysis of the proposed approaches to tackle the FraMOT problem.

5.1 Evaluation Datasets and Metrics

5.1.1 Datasets

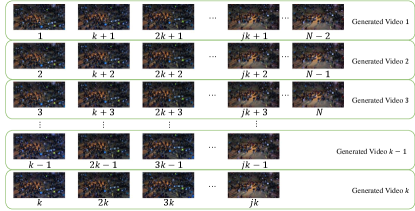

For evaluating FraMOT, a multi-frame-rate dataset is necessary for training and evaluating the algorithm. Instead of collecting and re-annotating extra data, we simulate the multi-frame-rate inputs from existing high-frame-rate MOT datasets. We do not consider a higher frame rate than 30 fps, because i) currently most cameras work at a frame rate of 30 fps, and streaming devices of higher frame rate are rare, and ii) to reduce computational cost most industrial scenarios tend to reduce the frame rate while not increase the frame rate, and iii) higher frame rate usually does not bring extra challenge. Given a video with frames per second and frames in total, the frames are denoted by . The target frame rate is frame per second and we assume that is divisible by . Our simulation method re-samples the original video and re-composes the frames into new videos, satisfying the target frame rate. Specifically, we generate new videos, the -th of which consists of frames where

| (7) |

We call the sampling factor of a simulation. As shown in Fig. 5, the original video is decomposed into a matrix of rows and columns, with each row generating a new video at the target frame rate.

| Dataset | =1 | =2 | =4 | =8 | =16 | =25 | =36 | =50 | ||||||||

| fr. | id. | fr. | id. | fr. | id. | fr. | id. | fr. | id. | fr. | id. | fr. | id. | fr. | id. | |

| MOT17-train | 759 | 78 | 380 | 78 | 190 | 77 | 95 | 76 | 47 | 73 | 30 | 71 | 21 | 68 | 15 | 64 |

| MOT17-test | 846 | - | 423 | - | 211 | - | 106 | - | 53 | - | 34 | - | 23 | - | 17 | - |

| MOT20-train | 2233 | 554 | 1116 | 553 | 558 | 552 | 279 | 551 | 140 | 547 | 89 | 543 | 62 | 539 | 45 | 534 |

| MOT20-test | 1120 | - | 560 | - | 280 | - | 140 | - | 70 | - | 45 | - | 31 | - | 22 | - |

Considering that most videos nowadays have a default frame rate of 25 fps, we start from 25 fps and sample videos with 12.5 fps, 6.25 fps, 3.125 fps, 1.5625 fps, 1 fps, 0.722 fps, and 0.5 fps for a thorough evaluation of the trackers’ performance. That means we choose 1, 2, 4, 8, 16, 25, 36, and 50 for in the Eq. 7, leading to eight different settings in total. By using a broader range of non-geometric acceleration coherence, it is possible to obtain a more comprehensive representation of the overall impact while maintaining a small evaluation time cost. Although it is possible to sample videos of frame rates lower than 0.5 fps, a 0.25 fps setting will generate videos with less than 10 frames, leading to weak test cases. This range may also be extended in the future as the demands change.

Table 1 shows the statistics of the simulation datasets. Every single frame from the original dataset is perfectly included in the new dataset. Lower target frame rate setting results in a larger number of videos but a shorter length for each generated video. However, the average number of different identities in each video remains stable.

5.1.2 Metrics

Following the CLEAR MOT metrics and HOTA metrics, we define the corresponding mean metrics over multi-frame-rate settings to reflect the overall performance of trackers on videos with diverse frame rates. Specifically, we use mean-HOTA (mHOTA) and mean-MOTA (mMOTA) as the primary indicators of frame rate agnostic tracking performance, where

| (8) |

| (9) |

is the set of all target frame rates. We also provide mean-IDF1 (mIDF1) in the main results for reference. Similarily, .

To further investigate the robustness of the trackers against multiple frame rates, we further propose a new metric named ‘Vulnerable Ratio” (VR):

| (10) |

where and denote the highest HOTA and lowest HOTA among all different frame rate settings. VR indicates the largest possible drop in extreme cases and will be helpful in reliability and validity evaluation.

5.2 Implementation Details

Joint extractor. We adopt YOLOX (Ge et al, 2021) detection framework with an extra identity branch as the joint extractor model. The backbone type is yolox-x. All baselines in the experiments share the same joint extractor architecture, including a detection branch and an identity branch (for tracking).

Training data. The joint extractor is first pre-trained on the COCO dataset without the identity branch and then trained on joint MOT17/20 (Milan et al, 2016; Dendorfer et al, 2021, 2020), CrowdHuman (Shao et al, 2018), CityScapes (Cordts et al, 2016) and HIE (Lin et al, 2020) datasets. For MOT datasets, we split the datasets into two different parts without overlapping with each other. The first part is for training, occupying about 60% of the original datasets, and the second part is for evaluation and ablation studies. The training part is further divided into two sets, i.e., Set-A and Set-B, which share some frames but also have their own independent data. Set-A and other non-MOT datasets are for the joint extractor training, and Set-B is for joint extractor fine-tuning (smaller learning rate) and association module training. Set-B samples 300 images from each video, with 200 images shared with Set-A. The design of Set-A and Set-B can help avoid the association module from overfitting to the seen data.

Data augmentation. We follow the same data augmentation techniques as YOLOX on non-tracking data (identity branch and association module will not be optimized on these data). On tracking data, we only apply random flip and random resize and remove other augmentations in YOLOX.

Optimization. Stochastic Gradient Descent (SGD) is used as our optimizer. We first train the joint extractor without association module on Set-A and other non-MOT datasets for 60 epochs. In this stage, we apply a cosine learning rate strategy with an initial learning rate of 0.00001. The batch size is set to 32. Then, we fine-tune the joint extractor and train the association module following the proposed Periodic Training Scheme (PTS) on Set-B for 10 more epochs each period. The period number is set to 3.

Other hyper-parameters. In the training stage, loss weights is set to 0.5 and is set to 1. During online tracking, is set to 0.6 and is set to 0.1. The drop interval is set to 30. A matching pair is formed only if the association score is greater than 0.1.

| Dataset | Method | known frame rate | unknown frame rate | ||||||

| mHOTA | mMOTA | mIDF1 | VR | mHOTA | mMOTA | mIDF1 | VR | ||

| MOT17 | ByteTrack(Zhang et al (2022)) | 52.5 | 64.4 | 61.3 | 38.3 | 51.0 | 62.9 | 59.3 | 43.9 |

| CenterTrack(Zhou et al (2020)) | - | - | - | - | 47.0 | 60.5 | 54.8 | 24.6 | |

| FairMOT(Zhang et al (2021)) | 51.0 | 60.1 | 56.9 | 32.3 | 49.7 | 58.0 | 56.0 | 35.5 | |

| GSDT(Wang et al (2021)) | 48.6 | 52.3 | 56.8 | 32.1 | 46.8 | 50.2 | 54.7 | 37.1 | |

| TraDeS(Wu et al (2021)) | - | - | - | - | 38.6 | 58.3 | 44.4 | 49.0 | |

| Ours | 57.0 | 70.0 | 67.3 | 14.6 | 53.8 | 65.6 | 62.1 | 22.4 | |

| MOT20 | ByteTrack(Zhang et al (2022)) | 43.5 | 48.6 | 49.9 | 58.1 | 40.7 | 46.6 | 46.9 | 63.3 |

| FairMOT(Zhang et al (2021)) | 42.2 | 36.6 | 48.4 | 49.9 | 41.0 | 34.2 | 47.1 | 53.1 | |

| GSDT(Wang et al (2021)) | 44.0 | 41.9 | 51.7 | 50.1 | 42.9 | 46.9 | 51.9 | 54.0 | |

| Ours | 50.8 | 59.3 | 58.3 | 38.8 | 48.0 | 56.1 | 53.5 | 41.0 | |

| Setting | Param-Scale | FAAM | PTS | known frame rate | unknown frame rate | ||||

| mHOTA | mMOTA | VR | mHOTA | mMOTA | VR | ||||

| Baseline (UM) | 1x | - | - | - | 56.5 | 74.6 | 34.6 | ||

| Baseline (MM) | 3x | 58.9 | 77.0 | 29.5 | - | - | - | ||

| UM + PTS | 1x | ✓ | - | - | - | 58.6 | 75.6 | 26.7 | |

| FAAM | 1x | ✓ | 59.3 | 77.4 | 28.5 | 59.1 | 75.8 | 29.1 | |

| FAAM + PTS | 1x | ✓ | ✓ | 61.1 | 77.6 | 24.8 | 61.0 | 77.3 | 24.9 |

5.3 Results

Table 2 shows the overall results of the recent open-source state-of-the-art MOT methods and our method on the challenging MOT17 and MOT20 datasets, with the aforementioned multiple frame rate simulation. ByteTrack (Zhang et al, 2022), FairMOT (Zhang et al, 2021), and GSDT (Wang et al, 2021) have design strategies using the provided frame rate to control cache length. CenterTrack and TraDeS do not design any frame-rate-relevant procedures so their known frame rate mode results are absent. Our method outperforms all other methods with all metrics in terms of frame rate agnostic tracking. In the known frame rate mode, we are 4.5% and 6.8% higher than the runner-up methods in MOT17 and MOT20 for mHOTA, respectively. For mMOTA, we are 5.6% and 10.7% higher. For mIDF1, we are 6.0% and 6.6% higher. Besides, we have the least Vulnerable Rate (VR), which means our performance is the least influenced by the change of frame rate. In unknown frame rate mode, our method still outperforms other methods. The improvement in this mode is smaller than those of the known frame rate mode due to the difficulty of obtaining accurate motion distribution. We have 2.8% and 5.1% improvement in the term of mHOTA for MOT17 and MOT20, respectively. We also have higher mHOTA and mIDF1 scores in this mode, and the Vulnerable Rate is also lower than other methods. The results show that our proposed method is effective for frame rate agnostic tracking.

Fig. 6 further shows the HOTA and MOTA curves of these methods w.r.t the sampling factor (larger means lower frame rate) on MOT20 FraMOT simulation datasets. Among these methods, ByteTrack is the first method making use of the YOLO-X detection baseline, and thus has more accurate detection results and outperforms all other trackers in normal frame rate setting (). To compare with ByteTrack fairly, we also develop our method based on YOLO-X baseline. However, ByteTrack does not use any appearance features for tracking, while our method has an extra tracking branch for exploiting appearance features. As can be observed in Fig. 6, our method is slightly lower than ByteTrack at in terms of HOTA, which confirms that appearance features do not help improve and may harm the tracking performance with high frame rates. However, when the frame rates are low (e.g., with larger ), the performance of ByteTrack drops significantly due to less reliable motion. At the same time, the performance of our method also drops slowly but is much higher than all compared methods. It shows that the tracking becomes more difficult when the frame rate is lowered, but our method has better capability to handle these complicated scenarios. Nevertheless, the overall performance of our method is the best, which reveals our design of FAAM and PTS training is effective for FraMOT.

Other than the MOTChallenge benchmarks, we also conducted experiments using the recent SOMPT22 dataset (simsek2023sompt22), and the results are presented in the applendix B.

5.4 Ablation Study and Analysis

To better understand the proposed methods, we further conduct quantitative experiments on the MOT20 validation set (which is split from the original training set and is not included in the real training set).

5.4.1 Effects of the Proposed Methods

Table 3 shows how different components affect the overall performance. In this experiment, we first present two straightforward baselines for the FraMOT problem, i.e., Unified Model (UM) and Multiple Model (MM). Baseline (UM) is a simple model which is directly trained from sampled frame pairs of all different frame rates without any extra strategy proposed in this work. In contrast, Baseline (MM) trains 3 separate models for 3 different ranges of sampling factor , i.e., high-frame-rate (), middle-frame-rate () and low-frame-rate (), and deploys each frame rate specific model independently for the corresponding frame rate during testing. The first baseline (UM) does not need a frame rate number during inference and thus is presented in the unknown frame rate mode. The second baseline (MM) requires a frame rate number for model switching, so its results are presented in known frame rate mode. All methods in this ablation study use the same joint extractor framework and checkpoint, with differences in the association modules. The mean-HOTA, mean-MOTA, and Vulnerable Ratio of the UM baseline are the worst among all settings in the table, indicating traditional unified association models are not capable of performing frame rate agnostic tracking. The MM baseline is better than the unified model baseline due to the prior knowledge of frame rate division. However, the results are still not satisfying and the method is not feasible when the frame rate number is not given, which is not comparable with the way that humans track objects. Our proposed methods are presented by ‘UM + PTS’, ‘FAAM’, and ‘FAAM + PTS’. ‘UM + PTS’ means applying the Periodic Training Scheme on the unified model baseline. With the help of the PTS strategy, the unified model baseline improves by 2.1% mHOTA and 1.0% mMOTA, as well as reduces the Vulnerable Ratio by 8.9%, showing that the PTS training successfully reduces the difficulty of frame rate agnostic tracking training. ‘FAAM’ means only applying the Frame Rate Agnostic Association Module, which can both work at known frame rate mode and unknown frame rate mode. This unified approach performs better than both baselines while using only 1/3 of the parameters compared with the MM baseline. More importantly, it can automatically infer the frame rate information. When we apply both the two proposed methods, presented as ‘FAAM + PTS’, we obtain the best results of 61.1% mHOTA and 77.6% mMOTA, with the lowest Vulnerable Ratio of 24.9% (known frame rate mode). The results in the known frame rate mode are also improved and are quite competitive compared with the known frame rate mode. The results above show that both two approaches are effective, improve the overall frame rate agnostic tracking accuracy and make the tracker wiser.

5.4.2 Results on Unseen Frame Rates

We have selected 8 different sampling factors in the training and testing dataset simulation. To better understand the behaviors of the proposed method about handling various frame rates, we conducted experiments on unseen frame rates, i.e., testing on frame rates that are different from the training set. Specifically, we select and rebuild the validation set based on these unseen . We still include the normal frame rate, i.e., , in the unseen set because it is a baseline for analysis. Table 4 shows the results of two baselines and our FAAM equipped with PTS training. All models drop slightly when tested on unseen frame rates. However, our FAAM still outperforms the two baselines. Note that the FAAM result here is from unknown-frame-rate mode, which does not need extra frame rate information, while the MM baseline must be provided with a frame rate number.

| Setting | unseen frame rate | ||

| mHOTA | mMOTA | VR | |

| Baseline (UM) | 54.9 | 72.1 | 36.7 |

| Baseline (MM) | 57.0 | 74.4 | 33.5 |

| FAAM + PTS | 59.6 | 75.0 | 29.6 |

5.4.3 Results under Dynamic Sampling Setting

We also tried testing the methods on dynamic sampling videos to simulate some scenarios with network instability, where some images are accidentally, randomly missing. We generated dynamic sampling videos by randomly sampling frames in the original videos. Given a video with frames and an integer , we randomly choose frames from the frame pool (initially includes all frames of the given video) and ensemble the chosen frames into a video. The chosen frames are then removed from the frame pool. We keep choosing frames randomly from the frame pool and generating videos until the frame pool is empty. In such way, we will obtain videos in total with approximately frames in each generated video. We chose . Fig. 7 shows the distribution of the sampling gap, with an average gap time of 15.7 frames (628 ms) and a median gap time of 11 frames (440 ms).

Table 5 shows the results of two baselines and our FAAM equipped with PTS training. Under dynamic sampling settings, our FAAM still outperforms the two baselines. Note that the FAAM result here is from unknown-frame-rate mode, and the baseline (MM) treats all dynamic videos as 1.6 fps (as the average gap time is 628 ms, which is approximately 1.6 fps).

| Setting | dynamic sampling | ||

| HOTA | MOTA | IDF1 | |

| Baseline (UM) | 50.5 | 76.2 | 58.3 |

| Baseline (MM) | 47.6 | 74.4 | 53.3 |

| FAAM + PTS | 55.5 | 77.5 | 64.8 |

5.4.4 Influence of Different Motions

| Model | Slow ( 4 ppf) | Normal (8-14 ppf) | Fast (19 ppf) | ||||||

| mHOTA | mMOTA | VR | mHOTA | mMOTA | VR | mHOTA | mMOTA | VR | |

| Baseline (UM) | 68.0 | 72.2 | 5.7 | 50.7 | 58.3 | 14.3 | 54.2 | 52.4 | 19.1 |

| Baseline (MM) | 68.7 | 73.3 | 5.1 | 51.5 | 58.9 | 18.5 | 44.1 | 38.5 | 51.9 |

| FAAM + PTS | 68.9 | 72.3 | 8.2 | 54.5 | 59.7 | 7.0 | 53.8 | 50.7 | 19.0 |

In order to investigate the effectiveness of our proposed method in different scenarios with varying object motions, we conducted additional experiments. To do this, we utilized the MOT17 validation set and divided it into three separate subsets based on the object motions present in each video sequence. To estimate the object motions, we computed the median motion per frame of the top 30% fastest objects (in pixels per frame, ppf), which provided a clear representation of the motion differences between the videos.

The first subset, which consisted of sequences MOT17-02 and MOT17-04, featured static camera views and slow object motions (with a median motion of less than 4 ppf for the top 30% fastest objects). The second subset included MOT17-05, MOT17-09, MOT17-10, and MOT17-11, which featured moving camera views and normal object motions (with a median motion of 8-14 ppf for the top 30% fastest objects). The third subset comprised MOT17-13, which featured a camera view that was turning significantly and fast object motions (with a median motion of 19 ppf for the top 30% fastest objects).

We evaluated two baseline methods and our proposed approach on each of these subsets and recorded the results in Table 6. Our findings demonstrated that the baseline method MM performed better under slow scenarios but was ineffective under fast object motions, while the baseline method UM performed better under fast object motions but struggled under slow object motions. In contrast, our proposed approach maintained a balanced performance across all three scenarios and achieved the highest overall performance.

| Group | threshold | criterion | mHOTA | mMOTA | VR | HOTA@k | |||

| Different | 0 | 0.9 | dist. | 59.1 | 75.8 | 29.1 | 65.5 | 60.0 | 46.4 |

| 1 | 0.9 | dist. | 60.6 | 77.4 | 26.9 | 65.6 | 62.3 | 48.3 | |

| 2 | 0.9 | dist. | 60.8 | 77.2 | 25.8 | 65.2 | 62.6 | 48.8 | |

| 3 | 0.9 | dist. | 61.0 | 77.3 | 24.9 | 65.3 | 62.7 | 49.4 | |

| 4 | 0.9 | dist. | 60.7 | 77.2 | 27.2 | 65.3 | 62.7 | 47.9 | |

| Different thresholds | 3 | 0.5 | dist. | 60.8 | 77.4 | 25.2 | 65.2 | 62.6 | 49.1 |

| 3 | 0.7 | dist. | 60.9 | 77.3 | 25.0 | 65.3 | 62.6 | 49.3 | |

| 3 | 0.9 | dist. | 61.0 | 77.3 | 24.9 | 65.3 | 62.7 | 49.4 | |

| 3 | 1.1 | dist. | 61.0 | 77.2 | 24.8 | 65.3 | 62.7 | 49.5 | |

| 3 | 1.3 | dist. | 61.1 | 77.1 | 24.7 | 65.4 | 62.8 | 49.6 | |

| Different criteria | 3 | 0.9 | random | 58.7 | 75.9 | 26.6 | 63.1 | 61.0 | 46.7 |

| 3 | 0.9 | sim. | 60.8 | 77.5 | 26.8 | 65.7 | 62.7 | 48.3 | |

| 3 | 0.9 | dist. | 61.0 | 77.3 | 24.9 | 65.3 | 62.7 | 49.4 | |

5.4.5 Influence of Hyper-Settings

Table 7 shows how the hyper-settings influence the overall performance.

Period number . In PTS training, we introduce the period number . To understand its influence, we conduct experiments with different from 0 to 4. 0 means PTS is not applied. We can see that when , we obtain the best performance, while the other settings are also obtaining better performance than the not applied case. The results indicate that a large is not bringing more gain. It also suggests different checkpoints at later periods do not change the inference environment a lot, the critical change is at the first period.

Matching threshold. Thresholding is sometimes affecting the results a lot. To ensure the matching threshold is stable and the gain is not from the thresholding strategy, we conduct experiments with different thresholds ranging from 0.5 to 1.3. We can see that our method obtains the best mHOTA at the threshold of 1.3, and obtains the best mMOTA at the threshold of 0.5. Although the thresholds vary, the performances are still stable with less than 0.3% difference. We choose 0.9 as the final threshold because we can obtain a balance between mHOTA and mMOTA at the threshold of 0.9.

IBDV criteria. In Section 4.3.2 we introduce Inter-frame Best-matched Distance Vector (IBDV) as the feature of frame rate embedding. We further design more different matching rules to understand how the rules affect the overall results. We present three different matching rules in the table. ‘random’ means we randomly sample pairs as matched pairs. ‘sim.’ means we choose the pairs with the highest appearance similarity as the matched pairs. ‘dist.’ means we choose the pairs with closest positional distance as the matched pairs. The results show that both ‘sim.’ and ‘dist.’ rules perform better than the ‘random’ rule, while the ‘dist.’ rule is slightly better than the ‘sim.’ rule. It might be because positional information is more reliable in general. It also reminds us that more complicated rules might be more effective.

5.4.6 Visualization and Analysis

In this section, we demonstrate the data simulation and our proposed method via visualization and analysis.

Visualization of FraMOT simulation data. Fig. 8 shows some selected simulation image data from sequence MOT17-13 and sequence MOT20-03. In MOT17-13, the concerned target (ID 133) has a small movement between adjacent frames when , while the movement becomes much larger at . In MOT20-03, the movements (ID 34 and 95) are moderated, but the numbers of matching candidates are still increased a lot in this crowded scenario.

Fig. 9 illustrates curves of candidate numbers during matching with respect to sampling factor and thresholding factor , i.e., how many possible candidates that are having an equal or closer distance to the concerned object, compared with the times distance to the correct ground-truth object. In other words, the curves show the average numbers of objects we must compare with during matching, under different and different thresholding strategies. Usually, is set to a number larger than 1 in order to make the correct object with the same identity number being compared for sure, or we are not able to recall the previously tracked object. If the candidate number is large, that means we have more chances to make mistakes and thus the task is more challenging. From the two figures we can see that when is increased, the candidate numbers increase significantly, especially in MOT20 dataset. In fact, in normal frame rate scenarios, the candidate number is slightly larger than 1, which means in most cases, finding the object with the closest distance will hit the ground-truth. It also well explained why introducing Re-ID branch in YOLO-X baseline did not improve the results at the normal frame rate, it is because positional information is so reliable to perform matching. Fig. 9 shows that the FraMOT task is much more difficult due to various candidate numbers.

Visualization of the PTS data. 10 visualizes the affinity features used in this paper. We both show PTS and non-PTS affinity features at sampling rates of and . The four sub-figures show that after applying PTS training, the boundary between the same and different identity pairs is clearer, which means the task is easier. At both sampling rates, there are more difficult data points in non-PTS counterparts. We can further find that at , the gap between PTS and non-PTS is enlarged compared with , which indicates PTS is more effective at larger sampling rates.

Visualization of the attention embedding of Frame Rate Agnostic Association Module. 11 illustrates the attention maps learned by the proposed Frame Rate Agnostic Association Module (FAAM) at different sampling rates (different -s). The channels of the attention map at are sorted in increasing order. Other attention maps are aligned with the attention map. When is small, e.g., , the right part of the affinity features are emphasized. Then, the right part becomes less important when goes large. Meanwhile, some critical points on the left part are focused on. These observations reflect that the module tends to focus on different dimensions of the affinity features at different sampling rates. We hypothesize that most of the right part is related to motion features, which well explains motion becomes less reliable when the frame rate is lowered. More interestingly, when the frame rate is slightly lower (e.g., ), the attention map focuses on more different channels, which indicates motion cues are still reliable but it is probably not reliable using the single feature of motion (e.g., IoU).

6 Conclusion and Future Work

In this paper, we introduce Frame Rate Agnostic Multi-Object-Tracking (FraMOT) as an extended challenge of the classical MOT task to seek a wiser solution to the tracking problem. We also present our initial attempt to address the new challenge via the Frame Rate Agnostic MOT Framework with a Periodic training Scheme (FAPS). In the proposed framework, the carefully designed Frame Rate Agnostic Association Module (FAAM) is able to infer frame rate information automatically and utilize it to aid the identity matching of more complicated motion-appearance relations; while the Periodic Training Scheme (PTS) aligns the training and inference environment and help reduce the enlarged gap between training and inference in FraMOT. The two proposed approaches successfully avoid the tracking performance from dropping dramatically when the input frame rate changes, and help build a more robust tracking algorithm. Experimental results and analysis have shown that the proposed methods are effective.

In the future, we may continue to focus on how to further improve the tracking stability against various frame rates. The core problem might be how to develop better frame rate perception methods to fully utilize the video information to aid the task. Besides, FraMOT makes it more difficult to utilize tricky strategies for obtaining improvement. We may find more robust strategies that work on multiple frame rates rather than work on the normal frame rate only, and thus improve the tracking robustness.

Acknowledgments Wanli Ouyang was supported by the Australian Research Council Grant DP200103223, Australian Medical Research Future Fund MRFAI000085, CRC-P Smart Material Recovery Facility (SMRF) – Curby Soft Plastics, and CRC-P ARIA - Bionic Visual-Spatial Prosthesis for the Blind.

Data Availability All data used in this paper are publicly available on corresponding websites.

MOT17/MOT20: motchallenge.net;

CrowdHuman: www.crowdhuman.org;

CityScapes: www.cityscapes-dataset.com;

HIE: humaninevents.org.

Appendix A Tacking results Demo

Fig. A1 and Fig. A2 show some selected tracking results of our approach on the MOT20 testing set. Different colors and different numbers represent different trajectories. We can see that at the lower frame rate scenarios (i.e., sampling factor ), our method keeps a stable performance. More importantly, the results are all from the same model checkpoint, showing that our method can handle various frame rates robustly. Among these scenarios, the movements of the targets between adjacent frames are much larger than those in normal frame rate scenarios.

| Methods | mHOTA | mMOTA | mIDF1 | VR |

| ByteTrack(Zhang et al (2022)) | 50.4 | 53.5 | 60.4 | 27.4 |

| FairMOT(Zhang et al (2021)) | 34.5 | 23.1 | 42.3 | 17.2 |

| GSDT(Wang et al (2021)) | 30.4 | 26.2 | 37.7 | 14.7 |

| Ours | 54.5 | 62.9 | 66.8 | 10.2 |

In Fig. A2, the targets of number 2, number 6 and number 19 do not have a large bounding box overlap between adjacent frames, leading to less reliable motion cues. In normal frame rate scenarios, such a large motion gap in adjacent frames usually indicates a different identity, which is quite different from lower frame rate scenarios. Thanks to the FAAM design and PTS strategy, Our method is able to make a correct prediction in multi-frame-rate settings simultaneously.

Appendix B Additional Experiments on SOMPT22

Table A1 shows the results of some recent SOTA methods and our approach on the multiple frame rate version of a new MOT dataset called SOMPT22 (simsek2023sompt22). SOMPT22 consists of 9 videos in the training set and 5 videos in the testing set. This new dataset has been created to enhance tracking annotations with videos of middle-level densities and static camera views. Compared to MOT20, which also comprises static camera views, SOMPT22 encompasses a wider range of scenarios with various object motions. All methods have used the same joint extractor configuration and checkpoints while testing on MOT20. We have used the same method as MOT17/20 splitting for dividing the SOMPT22 training set and creating the validation set. The reported outcomes have been tested on the validation set.

From Table A1, it is evident that our method continues to outperform the recent SOTA methods. Compared to the ByteTrack approach, we have gained improvements of 4.1%, 9.4%, and 6.4% in terms of mHOTA, mMOTA, and mIDF1, respectively. It is noteworthy that both ByteTrack and our method have a deeper backbone. Therefore, when a new dataset with vastly different scenarios is considered, these two methods perform better, while FairMOT and GSDT are less capable of transferring their detection and tracking ability to new environments.

References

- \bibcommenthead

- Bernardin and Stiefelhagen (2008) Bernardin K, Stiefelhagen R (2008) Evaluating multiple object tracking performance: the clear mot metrics. Journal on Image and Video Processing 2008:1

- Brasó and Leal-Taixé (2020) Brasó G, Leal-Taixé L (2020) Learning a neural solver for multiple object tracking. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 6247–6257

- Chu and Ling (2019) Chu P, Ling H (2019) Famnet: Joint learning of feature, affinity and multi-dimensional assignment for online multiple object tracking. In: The IEEE International Conference on Computer Vision (ICCV)

- Chu et al (2017) Chu Q, Ouyang W, Li H, et al (2017) Online multi-object tracking using cnn-based single object tracker with spatial-temporal attention mechanism. In: ICCV

- Cordts et al (2016) Cordts M, Omran M, Ramos S, et al (2016) The cityscapes dataset for semantic urban scene understanding. In: Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

- Dendorfer et al (2020) Dendorfer P, Rezatofighi H, Milan A, et al (2020) Mot20: A benchmark for multi object tracking in crowded scenes. 2003.09003

- Dendorfer et al (2021) Dendorfer P, Osep A, Milan A, et al (2021) Motchallenge: A benchmark for single-camera multiple target tracking. International Journal of Computer Vision 129(4):845–881

- Ge et al (2021) Ge Z, Liu S, Wang F, et al (2021) YOLOX: exceeding YOLO series in 2021. CoRR abs/2107.08430. URL https://arxiv.org/abs/2107.08430, https://arxiv.org/abs/2107.08430

- Han et al (2022) Han T, Bai L, Gao J, et al (2022) Dr. vic: Decomposition and reasoning for video individual counting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 3083–3092

- Hornakova et al (2020) Hornakova A, Henschel R, Rosenhahn B, et al (2020) Lifted disjoint paths with application in multiple object tracking. In: International conference on machine learning, PMLR, pp 4364–4375

- Hu et al (2020) Hu W, Shi X, Zhou Z, et al (2020) Dual l1-normalized context aware tensor power iteration and its applications to multi-object tracking and multi-graph matching. International Journal of Computer Vision 128(2):360–392

- Kieritz et al (2018) Kieritz H, Hubner W, Arens M (2018) Joint detection and online multi-object tracking. In: CVPRW

- Li et al (2020) Li J, Gao X, Jiang T (2020) Graph networks for multiple object tracking. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp 719–728

- Lin et al (2020) Lin W, Liu H, Liu S, et al (2020) Human in events: A large-scale benchmark for human-centric video analysis in complex events. 2005.04490

- Luiten et al (2021) Luiten J, Osep A, Dendorfer P, et al (2021) Hota: A higher order metric for evaluating multi-object tracking. International journal of computer vision 129(2):548–578

- Ma et al (2021) Ma C, Yang F, Li Y, et al (2021) Deep trajectory post-processing and position projection for single & multiple camera multiple object tracking. International Journal of Computer Vision 129(12):3255–3278

- Maksai and Fua (2019) Maksai A, Fua P (2019) Eliminating exposure bias and metric mismatch in multiple object tracking. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 4639–4648

- Milan et al (2016) Milan A, Leal-Taixé L, Reid I, et al (2016) MOT16: A benchmark for multi-object tracking. arXiv:160300831 [cs] URL http://arxiv.org/abs/1603.00831, arXiv: 1603.00831

- Milan et al (2017) Milan A, Rezatofighi SH, Dick AR, et al (2017) Online multi-target tracking using recurrent neural networks. In: AAAI

- Ristani et al (2016) Ristani E, Solera F, Zou R, et al (2016) Performance measures and a data set for multi-target, multi-camera tracking. In: European Conference on Computer Vision, Springer, pp 17–35

- Sadeghian et al (2017) Sadeghian A, Alahi A, Savarese S (2017) Tracking the untrackable: Learning to track multiple cues with long-term dependencies. In: ICCV

- Saleh et al (2021) Saleh F, Aliakbarian S, Rezatofighi H, et al (2021) Probabilistic tracklet scoring and inpainting for multiple object tracking. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 14,329–14,339

- Shao et al (2018) Shao S, Zhao Z, Li B, et al (2018) Crowdhuman: A benchmark for detecting human in a crowd. arXiv preprint arXiv:180500123

- Takala and Pietikainen (2007) Takala V, Pietikainen M (2007) Multi-object tracking using color, texture and motion. In: 2007 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, pp 1–7

- Tang et al (2016) Tang S, Andres B, Andriluka M, et al (2016) Multi-person tracking by multicut and deep matching. In: ECCV

- Tang et al (2017) Tang S, Andriluka M, Andres B, et al (2017) Multiple people tracking by lifted multicut and person reidentification. In: CVPR

- Wang et al (2021) Wang Y, Kitani K, Weng X (2021) Joint object detection and multi-object tracking with graph neural networks. In: 2021 IEEE International Conference on Robotics and Automation (ICRA), IEEE, pp 13,708–13,715

- Wen et al (2017) Wen L, Lei Z, Chang MC, et al (2017) Multi-camera multi-target tracking with space-time-view hyper-graph. International Journal of Computer Vision 122(2):313–333

- Wojke et al (2017) Wojke N, Bewley A, Paulus D (2017) Simple online and realtime tracking with a deep association metric. In: ICIP

- Wu et al (2021) Wu J, Cao J, Song L, et al (2021) Track to detect and segment: An online multi-object tracker. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12,352–12,361

- Xu et al (2019) Xu J, Cao Y, Zhang Z, et al (2019) Spatial-temporal relation networks for multi-object tracking. In: ICCV

- Yoon et al (2019) Yoon JH, Lee CR, Yang MH, et al (2019) Structural constraint data association for online multi-object tracking. International Journal of Computer Vision 127(1):1–21

- Yu et al (2016) Yu F, Li W, Li Q, et al (2016) Poi: Multiple object tracking with high performance detection and appearance feature. In: ECCV

- Zhang et al (2008) Zhang L, Li Y, Nevatia R (2008) Global data association for multi-object tracking using network flows. In: CVPr

- Zhang et al (2021) Zhang Y, Wang C, Wang X, et al (2021) Fairmot: On the fairness of detection and re-identification in multiple object tracking. International Journal of Computer Vision 129(11):3069–3087

- Zhang et al (2022) Zhang Y, Sun P, Jiang Y, et al (2022) Bytetrack: Multi-object tracking by associating every detection box. In: Proceedings of the European Conference on Computer Vision (ECCV)

- Zhou et al (2020) Zhou X, Koltun V, Krähenbühl P (2020) Tracking objects as points. ECCV