Towards Explainable Goal Recognition Using Weight of Evidence (WoE): A Human-Centered Approach

Abstract

Goal recognition (GR) involves inferring an agent’s unobserved goal from a sequence of observations. This is a critical problem in AI with diverse applications. Traditionally, GR has been addressed using ’inference to the best explanation’ or abduction, where hypotheses about the agent’s goals are generated as the most plausible explanations for observed behavior. Alternatively, some approaches enhance interpretability by ensuring that an agent’s behavior aligns with an observer’s expectations or by making the reasoning behind decisions more transparent. In this work, we tackle a different challenge: explaining the GR process in a way that is comprehensible to humans. We introduce and evaluate an explainable model for goal recognition (GR) agents, grounded in the theoretical framework and cognitive processes underlying human behavior explanation. Drawing on insights from two human-agent studies, we propose a conceptual framework for human-centered explanations of GR. Using this framework, we develop the eXplainable Goal Recognition (XGR) model, which generates explanations for both why and why not questions. We evaluate the model computationally across eight GR benchmarks and through three user studies. The first study assesses the efficiency of generating human-like explanations within the Sokoban game domain, the second examines perceived explainability in the same domain, and the third evaluates the model’s effectiveness in aiding decision-making in illegal fishing detection. Results demonstrate that the XGR model significantly enhances user understanding, trust, and decision-making compared to baseline models, underscoring its potential to improve human-agent collaboration.

1 Introduction

Goal Recognition (GR) is the problem of predicting an agent’s intent by observing its behavior. The task of GR has numerous potential and practical applications, such as smart homes (?) and workplace safety (?), among others (?, ?). Research on GR uses different inference techniques to predict the ultimate goals of the agents being observed. It advances with increasingly complex domain models and better approaches. However, understanding and fostering human trust in these systems is challenging due to their lack of explainability. This becomes particularly crucial in safety-critical applications like social care, military planning, and medical support, where the system’s decisions can have major consequences. Systems must be capable of explaining the decisions made and communicating their decisions in a way that is understandable to people (?, ?, ?).

The vast majority of work has focused on improving the explicability of agent behavior (?, ?, ?, ?, ?). This typically involves making the behavior more understandable to observers, either by aligning it with their expectations or ensuring the interpretability of the inference process. In shifting the focus from interpretability to justification, the goal is to develop an explainable GR agent that provides context and rationale for each predicted goal, rather than merely making the inference process interpretable.

This paper contributes to the ongoing work in eXplainable AI (XAI) by developing an explainable GR model from a human-centered perspective. Studies in social psychology indicate that people use the same conceptual framework that they apply to humans to explain artificial agents’ behavior, and they also expect artificial agents to adopt this framework (?). Therefore, our approach is to provide comprehensible explanations that align with how people rationalize outcomes and interpret information. This effort aims to bridge the gap between mere prediction and understandable explanation.

There are two main contributions of this work. First, we propose a conceptual framework for the explanation process in GR tasks and identify key concepts people use when reasoning about goal prediction. Using a bottom-up approach, the framework is derived from an analysis of human explanations of recognition tasks. We study this in two different domains to increase the generalizability of our model: Sokoban and StarCraft games. We examine the frequency, sequence, and relationships between the basic components of these explanations. Using the thematic analysis process, we identified 11 concepts from 864 explanations of agents operating in various scenarios. Incorporating insights from the folk theory of mind and behavior (?), we propose a human-centered model for GR explanations.

Our second contribution is building an eXplainable Goal Recognition (XGR) model based on the proposed conceptual framework. The model generates explanations for GR agents using the information theory concept of Weight of Evidence (WoE) (Good 1985; Melis et al. 2021). We define the problem of explanation selection using the main concept from our conceptual model, which we call an observational marker, i.e., the observation with the highest WoE. We computationally evaluate the XGR model on eight GR benchmark domains (?). We conducted three user studies to evaluate our model’s performance in different aspects: 1) Efficiency in Generating Human-Like Explanations: This study focused on assessing how well the model produces explanations that resemble those generated by humans, specifically within the Sokoban game domain; 2) Perceived Explainability: This study examined how users perceive the model’s ability to explain its actions and decisions, also within the Sokoban game domain. 3) Effectiveness in Supporting Decision-Making: This study evaluated the model’s effectiveness in supporting users’ decision-making process in the domain of illegal fishing detection. In the first study, our model aligns with human explanations in over 73% of scenarios. In the second and third studies, our model outperforms the tested baselines.

Part of this paper was published at the International Conference on Automated Planning and Scheduling (ICAPS) (?), where we presented our XGR model and its evaluation through the first two user studies. In this work, we introduce the conceptual framework for GR explanations, provide further details on our XGR model, and extend its evaluation to the context of decision-making support by conducting a third user study.

The structure of the paper is as follows. Section 2 reviews the related work on explainability in GR and human behavior explanation; Section 3 presents the human-agent experiment to build the conceptual framework; Section 4 provides the necessary background required to follow up with the proposed XGR model; Section 5 presents the XGR model; Section 6 describes experiments that evaluate the model; We then conclude with a summary and opportunities for further research in Section 7.

2 Related Work

2.1 Goal Recognition and Explainability

Goal recognition (GR) involves identifying an agent’s unobserved goal based on a sequence of observations. Various approaches exist to address the GR problem. Common methods include library-based GR algorithms, which use specialized plan recognition libraries to represent all known methods for achieving known goals (?); model-based GR algorithms (?, ?, ?), where GR agents leverage domain knowledge through planners to generate the necessary plans for achieving a goal (?); and machine learning GR approaches that rely on large training datasets from which algorithms learn domain constraints (?, ?, ?, ?). Well-established algorithms have shown high performance in labeling action sequences with corresponding goals (?, ?, ?), yet explaining why the algorithm arrived at a particular conclusion remains under-explored.

Prior work has focused on explaining GR in the form of answering the question: what goal is the agent trying to achieve? A long line of work has suggested explaining goal inference, which is a form of ’inference to the best explanation’, also called abduction (?, ?, ?, ?, ?). This process formulates hypotheses about the agent’s goals which are identified as the most plausible explanations for the observed actions. That would assist in making sense of actions and attributing appropriate goals or intentions to them, enhancing our understanding of the agent’s behavior. Another approach is improving the explicability of agent behavior (?, ?, ?, ?, ?, ?, ?). This involves ensuring that the behavior of the agent is self-explanatory to an observer by either aligning its actions with the observer’s expectations or making the reasoning behind its decisions interpretable. These approaches often assume optimal or simplified sub-optimal actions, neglecting the inherent challenges in agent planning. ? (?) introduced the Goal Recognition Design (GRD) approach, which facilitates the process of inferring an agent’s goals. This approach aims to analyze and redesign the underlying domain environment to ensure early and accurate detection of the agent’s objectives.

However, previous approaches assume that the agent’s behavior or domain is controlled to make its actions explicable. In this work, we address a different problem: explaining the GR process in a way that is understandable to humans. In complex and dynamic environments where agent behavior may not neatly align with human expectations or optimal action models, understanding the reasoning behind goal predictions is crucial for building trust in GR systems. There is a need for an explanation model that justifies the GR output, ensuring that the reasons behind goal predictions are clear and understandable. Instead of merely making the inference process transparent by controlling the domain or agent’s actions, a model should provide context and rationale for each predicted goal. This includes accounting for sub-optimal behavior that might arise due to planning difficulties or environmental constraints, thereby offering a more nuanced and realistic understanding of agent actions.

Additionally, fostering a solid understanding of an agent’s behavior presents a significant challenge for decision-makers. Human-AI team performance is influenced by scenarios where the AI system provides predictions while humans maintain the final decision-making authority. GR systems play an essential role in this context by accurately predicting and interpreting user intentions, which inform and guide subsequent actions (?, ?, ?, ?). While these systems demonstrate proficiency in high-stakes event prediction, they often lack the ability to provide justifications that clarify the motivations behind predicted intentions. The need for a human-like explanation should be considered to elevate system prediction toward a cognitive understanding of why certain outcomes are predicted and how they relate to the broader context of high-stakes situations (?). It will enhance decision-making by ensuring that AI predictions are effectively understood and appropriately used.

2.2 Human behavior explanation

We outline work on social attribution, which defines how people attribute and explain others’ behavior. Social attribution focuses not on the actual causes of human behavior but on how individuals attribute or explain the behavior of others. ? (?) defines social attribution as person perception, emphasizing the importance of intentions and intentionality. An intention is a mental state where a person commits to a specific action or goal. People consistently agree on classifying events as either ”intentional” or ”unintentional” (?). It is argued that while intentionality can be objective, it is also a social construct, as people ascribe intentions to one another, impacting social interactions.

In addition to intentions, research suggests that other factors, such as beliefs, desires, and traits, play a significant role in attributing social behavior. Researchers from various fields have converged on the insight that people’s everyday explanations of behavior are rooted in a basic conceptual framework, commonly referred to as folk psychology or theory of mind (?, ?, ?). Folk psychology involves attributing human behavior using everyday terms like beliefs, desires, intentions, emotions, and personality traits. This area of cognitive and social psychology acknowledges that, although these concepts may not genuinely cause human behavior, they are the ones people use to understand and predict each other’s actions (?).

Malle [110] presents a model grounded in the Theory of Mind to explain how people attribute behavior to others and themselves by assigning mental states such as desires, beliefs, values, and intentions. This model identifies different modes of behavior explanations and their cognitive processes by distinguishing between intentional and unintentional actions (Figure 1). Intentional behavior is typically explained by reasoning over key mental components—the reasons behind deliberate acts—based on the rationality principle, where agents are expected to act efficiently to achieve their desires given their beliefs and values. Sometimes, people explain intentional actions using two additional modes: causal history of reason explanations (CHR) and enabling factor explanations (EF). In CHR mode, people focus on factors that influence the reasons behind an action, such as unconscious motives, emotions, and cultural influences, which do not necessarily involve rationality or subjectivity. In EF mode, instead of explaining the intention, they explain how the intention led to the outcome, considering personal abilities or environmental conditions that facilitated the action. Unintentional behavior, on the other hand, is explained by referring to causes like habitual or physical phenomena, particularly those that prevent or prohibit intentional behavior.

Our proposed model is based on Malle’s framework, focusing on the mode of reasoning for intentions and intentional actions. Since people tend to attribute human-like traits to artificial agents, they expect explanations from these agents using the same conceptual framework (?). Therefore, we develop our model by incorporating insights from human-agent studies. To the best of our knowledge, this is the first model designed for explainable GR agents.

3 Human-Agent Study: Insights from Human Explanation

In this section, we present our conceptual model for explaining GR, grounded on empirical data from two different human-agent studies.

3.1 Study Objective

The goal of this study is to investigate how humans explain a GR agent’s behavior and identify the key concepts present in such explanations, their frequency, and the relationships among these concepts. Based on our findings, we construct a conceptual framework for GR explanation. We have two case studies with different scenarios and assumptions. The first case study is set in a general domain where no specific expertise is required, such as the Sokoban domain, a classic puzzle game where a player pushes boxes to designated storage locations within a grid. In contrast, the second case study involves explanations provided by domain experts, as seen in the StarCraft domain, a complex real-time strategy game that requires strategic planning, resource management, and tactical combat.

3.2 Goal Markov Decision Process (Goal MDP)

For both case studies, we employ the Goal Markov Decision Process (Goal MDP) framework to capture an observer’s view of the world. A Goal MDP (?) represents the possible actions that can be taken and the causal relationships of their effects on the world’s states. Formally, it is defined as a tuple , where is a non-empty state space, is a non-empty set of goal states, is a set of actions, is the probability of transitioning from state to state given action , and is the cost of that transition. The solution to a Goal MDP is a policy that maps states to actions with an overall minimum expected cost.

We describe an observer’s worldview as a Goal MDP. Our description is based on the following assumptions: (1) the observer perceives the world as a finite set of discrete states and actions; (2) the observer interprets transitions between states in a deterministic manner, while the probability reflects the observer’s confidence or uncertainty about the transition; (3) the observer values actions based on costs, aiming to minimize them over time; (4) the observer’s reasoning is based on their internal world state representation, which may not necessarily match the actual state observed by the player/agent; (5) the player’s preferences are not observable, meaning the observer must infer information and rely on observable actions to make judgments; and (6) the observer is assumed to have full observability, meaning they have access to the complete state of the environment.

3.3 Case Study 1: Sokoban Game

Sokoban is a classic puzzle game (Figure 2) set in a warehouse environment, where the player or agent navigates through a grid-like layout to move boxes onto designated storage locations. The objective is that each box must be pushed, one at a time, to its assigned spot. The challenge lies in navigating movement constraints and spatial limitations; boxes can only be pushed into empty spaces and cannot be pulled or pushed against walls or other boxes. We modified the Sokoban game rules to allow the player to push multiple boxes simultaneously. This modification transforms the game from a straightforward navigational task into a strategic challenge with multiple objectives, where the player aims to minimize the number of steps taken. We used a STRIPS-like discrete planner to generate plan hypotheses derived from the domain theory and observations as our ground truth.

3.3.1 Study Design

We defined the observer’s worldview within the framework of Goal MDPs as follows:

-

•

State Space : Represents the snapshot of the world, including the player’s position, walls, boxes, and storage locations at any given point in time.

-

•

Action Space : Encompasses the various actions the player can take, either moving or pushing the box(es) in one of the following directions: up, down, right, or left.

-

•

Cost Function : Assigns a cost for each action to encourage making the fewest amount of moves.

-

•

Goal State : Includes all possible goals that the player can achieve within the current state of the world. Each goal represents a desired configuration of boxes in relation to storage locations.

Table 1 presents different goal representations based on the original game version. In versions 1 and 2, the player can push one box at a time, whereas in version 3, they can push two boxes at a time to goal locations. The goal recognition problems include a number of competing goal hypotheses: multiple possible plans to achieve a goal, two sequential goals with interleaved plans to achieve them, or failed/unsolvable plans (See Appendix A for screenshots of different scenarios).

| Version | Player Task |

|---|---|

| Game 1 | Deliver one box to one of three possible goal locations; push one box at a time. |

| Game 2 | Deliver two boxes to two of four possible goal locations; push one box at a time. |

| Game 3 | Deliver two boxes to two of six possible goal locations; push multiple boxes at a time. |

We additionally varied scenarios to present different rationality levels, including rational (optimal or suboptimal) behaviors and irrational behaviors. For optimal behavior, we assumed that observers would form a simple notion of optimal behavior, where the player takes the shortest path toward a goal. In the suboptimal behavior scenario, the player either chooses a longer path toward a goal (suboptimal plan) or deviates from a rational action in an observed sequence of a particular goal plan (e.g., the agent’s goal may have changed). We also included irrational behaviors where the player fails to complete the task (e.g., getting stuck in a dead-end state) to observe how these actions would be interpreted by participants.

The participant’s task was divided into the following phases:

-

1.

Watch an instructional video to introduce the task and game rules.

-

2.

For each of the 18 different scenarios (three games, six scenarios per game):

-

•

Watch a video clip in which a player tries to achieve the task (Figure 2).

-

•

After watching the observed actions sequence (plan’s completion percentage ), predict which goal location the player is trying to get to, and, accordingly, assign a likelihood (with one as the least likely and five as the most likely) of each goal. This prediction task is not central to the objectives of this study but was used to engage participants in reasoning about behavior.

-

•

Provide reasons for your prediction. Participants were required to answer specific questions based on the condition they were in.

-

•

Each participant was randomly assigned to one of three conditions:

-

•

‘Why’ condition: participants were asked to: ”Explain why you have rated that/those goal(s) as the most likely?”

-

•

‘Why-not’ condition: participants were asked to: ”Explain why you have not rated that/those goal(s) as the most likely?”

-

•

‘Dual’ condition: participants were asked to explain both why and why you have not in that order.

We collected data for the first and second conditions to analyze the differences between why and why not, and for the third condition to analyze how people answer why not if they have already answered why, and how the answer of why differs if they know there is a why not.

3.3.2 Data

We recruited 36 participants (22 male, 14 female), allocated evenly and randomly to each condition, aged between 20 and 65, with a mean age of 38. We limited the study to participants from the United States who are fluent in English. Recruitment was conducted via Amazon Mechanical Turk. Participants were compensated $6.50 for completing the task and a bonus of $3.50 for providing more thoughtful answers.

With three different game versions, six scenarios per game, and 12 participants per condition, a total of 864 textual data points were collected (Table 2). We used several methods to filter out deceptive participants. We excluded explanations with fewer than three words or containing gibberish. We also used the time taken to complete the survey as a threshold. This left us with a total of 828 explanations.

We used participants’ open-ended explanations to better identify the concepts they used to explain the player’s predicted goal. The word count of given answers within the dataset is between 1 and 98 words (, ) for the first condition, 3 and 81 words (, ) for the second condition, and 1 and 64 words (, ) for the third condition.

| #Questions per game | |||||

|---|---|---|---|---|---|

| Conditions | G1 | G2 | G3 | Participants | Explanations |

| Why | 6 | 6 | 6 | 12 | 216 |

| Why-not | 6 | 6 | 6 | 12 | 216 |

| Dual | 12 | 12 | 12 | 12 | 432 |

3.4 Case Study 2: StarCraft Game

StarCraft is a real-time strategy (RTS) game (Figure 3) where players manage an economy, produce units and buildings, and compete for control of the map with the ultimate aim of defeating all opponents. As an RTS game, StarCraft has several defining characteristics (?):

-

•

Players engage in a Simultaneous Move Game, where they execute actions concurrently—such as moving units, building structures, and managing resources—demanding effective multitasking skills;

-

•

The Partially Observable Domain limits players’ visibility of the game map and opponents’ setups, necessitating strategic reconnaissance for informed decisions;

-

•

Real-time gameplay adds urgency, requiring rapid thinking and reflexes to outmaneuver opponents within time constraints;

-

•

Non-Deterministic Actions introduce uncertainty, challenging players to adapt strategies dynamically;

-

•

The game’s High Complexity stems from its vast state space and diverse strategic options involving units, buildings, and technologies, compelling players to consider multiple factors when planning strategies.

These elements combine to create a deeply strategic and challenging gaming experience, where success depends on a player’s ability to react at strategic, economic, and tactical levels.

During gameplay, shoutcasters (commentators) in esports deliver real-time explanations and commentary to audiences, elucidating the intricate strategies and tactics of the game to enhance accessibility and engagement. Their expert insights can potentially benefit XAI tools by analyzing their commentary in the RTS domain (?).

3.4.1 Study Design

We defined the worldview of shoutcasters, i.e. observers, within the framework of Goal MDPs as follows:

-

•

State Space : Encapsulates the game environment’s configurations and conditions at any given time. This includes the positions of units, resources, and other relevant game elements.

-

•

Action Space : Includes low-level actions of specific game units and high-level actions related to game strategies and tactics (sequences of actions).

-

•

Cost Function : Quantifies the value of states and actions in terms of progress toward winning.

-

•

Goal State : Comprises both subgoals (intermediate objectives that players or teams aim to achieve) and the main goal (the ultimate objective, typically winning the game).

We analyzed a dataset comprising professional StarCraft tournament videos (?), where expert shoutcasters provided commentary. We identified key instances where shoutcasters made predictions about players’ goals and strategies and coded these instances to capture the underlying concepts used in their explanations. We clustered the dataset to ensure representative sampling.

3.4.2 Data

We obtained the dataset from ? (?), which was collected from professional StarCraft tournaments available as videos on demand from 2016 and 2017. They selected 10 matches and then randomly chose one game from each match. Each of the 10 videos features two shoutcasters (expert commentators) providing commentary.

To obtain representative samples, we identified six clusters within the dataset (1387 instances divided by 6 clusters equals approximately 231 instances per cluster). We then randomly selected one sample of 50 instances from each cluster. The resulting sample size was 300 instances (6 clusters * 50 instances each). We only considered instances involving predictions, specifically when shoutcasters explain their recognition process of what the agents/players aim to achieve (their goals) and so ended up having a total of 132 instances out of the six samples. As the data source is public, we provided supplementary material of coded data to support future research.

3.5 Method

We used a hybrid approach of deductive and inductive reasoning, employing thematic analysis as outlined by ? (?) to analyze our data. The analysis process was divided into six phases: familiarization with the collected data, development of codes, sorting different codes into potential themes, reviewing themes, defining and naming themes, and writing up the report.

Initially, the collected data was re-read multiple times to ensure immersion before proceeding to the coding phase. The coding process was inductive, aiming to identify basic concepts regarding how people explain goals, and deductive by relating them to the existing literature on explaining human behavior (?). Malle’s explanation model (?) shows that people reason over others’ beliefs, values, and desires to explain intentional actions. Following that model, we apply these concepts to our coded data. Subsequently, the codes were grouped into defined themes based on their similarities.

In the context of this study, the proposed themes are linked to our research topic of explaining human behavior in goal recognition scenarios. After establishing a set of candidate themes, the refinement process focused on ensuring internal homogeneity—i.e., a cohesive pattern within each candidate theme to accurately reflect the overall data set. Relationships, links, and distinctions between themes were identified during this phase.

The next steps involved naming and describing the themes and illustrating the thematic elements with examples. To ensure consistency, four authors independently coded 10% of the data, achieving approximately 75% inter-rater reliability, as measured by percentage agreement. After achieving this level of agreement, the first and fourth authors continued to code the remainder of the data.

3.6 Results

3.6.1 The Conceptual Model of Goal Recognition Explanation

We developed the conceptual model by integrating insights from both studies. Initially, we built a model based on the findings from the Sokoban study. This model was then extended to encompass the complexities of the Starcraft study, ensuring that it accurately reflects both studies’ dynamics and unique aspects. We present our conceptual model of an explainable Goal Recognition (GR) agent in Figure 4. The figure highlights the common elements (concepts) that encode an observer’s view of the world and the different representations of given explanations. The model process is guided by two levels; situational awareness and situational conveyance. The situational awareness model, presented by Endsley’s in (?), is a widely used situation awareness model consisting of three consecutive stages:

-

1.

Perceived input: In this stage, the observer perceives the basic elements and their properties in the environment—object, quantity, quality, spatial and temporal information.

-

2.

Reasoning process: Based on the perceived inputs, the observer makes causal inferences between their beliefs (including the actor’s mental model) and goals, generates counterfactuals, and associates them with their preferences.

-

3.

Projection: This stage presents the observer’s predictions of future actions guided by their expected goals and the uncertainty level based on the understanding of the previous stages.

In this level, two types of reasoning occur due to the two mental model representations (?). The first type is stereotypical reasoning, in which the observer reasons about others’ mental states (what the observer would have done). An example from the data corpus is: “It might just do old classic seven gate [a game strategy].” The second type is empathetic reasoning, where the observer casts themselves into the actor’s mental model and reasons as they would (what the actor would have done). An example is: “This is exactly what I was talking about, you do something to try to force them.” In practice, the observer often contrasts both views in a single explanation—what they think should happen vs. what the actor is likely to do. For example: “It’s actually going to look for a run by here with this scan it looks like but unfortunately unable to find it with the ravager here poking away.” This was observed when explanations were provided for either a failed plan or a sub-optimal one toward achieving a goal. For simplicity, we assume a local perspective in this work, where the actor’s mental state is equal to the observer’s through the data coding and model implementation process.

The second level is a situational conveyance, where different explanations are formed and communicated by the observer. At this level of the model, the explaining process requires additional strategic knowledge. This includes reasoning about contrastive, conditional, temporal, and spatial cases of problem-solving tasks, allowing for more than just a causal representation of a given explanation.

| Code | Description | Example |

|---|---|---|

| Causal | A cause and effect relationship | “because it was positioned closer” |

| Conditional | A conditional relationship | “if jjakji takes a 3rd” |

| Contrastive | A contrastive relationship | “blocked for any goal but 1” |

| Temporal | A series of events over time (order, repetition, opportunity chain, timing) | “a very strong timing that he can hit where he might be able to kill” |

| Spatial | Refer to places or distances | “he has moved above it” |

| Preference/Judgement | Assessment of actions or outcomes | “he gets the perfect split” |

| Goal | Refer to a goal state(s) | ”it likely wanted to go to goal 1” |

| Plan | Refer to a future action or sequence of actions | “he is going to snipe the warp prism” |

| Counterfactual goal | Refer to counterfactual goal(s) that could have occurred under different conditions | “boxes have been moved away from goal one” |

| Counterfactual Plan | Refer to a counterfactual action or sequence of actions | “instead of building a robo in a prism” |

| Belief | Refer to general domain knowledge | “No vision on the left-hand side of the map” |

| Observational Marker | Refer to the observed precondition(s) that supports the hypothesized goal(s) | “Given the player’s last move, box 1 belongs on goal 3” |

| Counterfactual Observational Marker | Refer to the observed precondition(s) that is against the hypothesized counterfactual goal(s) | “The player would have taken different steps if position 1 was the goal” |

| Object | An object in the domain | “to build a gate” |

| Quantitative | Refer to some quantity or measured value of an object | “a turret at 90% complete” |

| Qualitative | Refer to some quality or characteristic of an object | “here with inferior roaches” |

| Uncertainty | Refer to a state of being uncertain | “I suspect it is to push the block to goal 2” |

3.6.2 Concepts

Table 3 shows the different codes and concepts that emerged from across the two studies. The given explanations include factual and experiential knowledge (‘belief’), subjective likes and objective assessments (‘preference’), the desired state to be achieved (‘goal’), and possible future actions (‘plan’). When people explain others’ actions, they infer their goals to provide better explanations (?). When explaining a recognized goal, people infer the most relevant evidence from their belief state. Thus, we break down the belief concept into an ‘observational marker’, an observed precondition that most influences goal prediction. This concept applies not only to optimal behavior, measured by traditional efficiency metrics such as time and shortest route, but also to suboptimal behavior.

Since recognition problems activate counterfactual thinking (?), explanations of GR reasoning also include ‘counterfactuals’ — observational markers, plans, and goals. Explaining counterfactual plans implies having counterfactual goals where the actor has no plan to achieve them. We introduced an uncertainty code as we found that observers use words expressing uncertainty to indicate their confidence level. Additionally, as the problem is to explain goals, ‘goal’ and ‘counterfactual goal’ codes were also included. Finally, our data show the presence of different reasoning processes in the explanations. Observers associate a simple causal, conditional, contrastive, temporal, or spatial relationship when generating explanations. A combination of different concepts was used to form the given explanations.

Given that we conducted two distinct studies, we developed specific codes tailored to each. In the context of the Sokoban game, the code ‘counterfactual observational marker’ is explicitly used in the explanations. This code refers to observed preconditions that are against the counterfactual goals. However, observers in the StarCraft game did not incorporate this concept in their explanations, likely due to time constraints and the assumed expertise level of the StarCraft audience.

In the StarCraft game, observers added additional object properties, such as quality and quantity, to describe the characteristics and measured values of objects. This detailed level of description is attributed to the greater complexity of the StarCraft game compared to Sokoban. Furthermore, observers included general domain knowledge (referred to as ”belief”) in their explanations to clarify facts that were inaccessible to the audience due to the partial observability of the game domain.

3.6.3 Frequencies

Figure 5 illustrates the frequency distribution of 17 codes across two case studies. Given the navigational nature of both domains, observers predominantly referenced objects and their spatial properties to reflect the players’ strategies.

Among the various codes, the observational marker emerges as the most significant finding. This code, which ranks fourth in frequency, provides crucial insights into how observers infer players’ intentions and strategies. For instance, in the Sokoban game dataset, an observer noted, ”The player positioned itself on top of the box, leading me to believe it is going to push down on the box to reach goal 2.” Here, the action ”positioned itself on top of the box” is used to explain the entire observed sequence, forming a critical precondition for achieving the predicted goal. This demonstrates how a single observed action can be pivotal in understanding the player’s overall strategy. The counterfactual observational marker was coded only in the Sokoban game, as participants tended to use it when responding to ’why not’ questions. In the dynamic and time-constrained environment of live StarCraft commentary, shoutcasters were observed to focus heavily on observational markers in their explanations, even when addressing ’why not’ questions.

In addition, the third most frequently occurring code is the ‘plan’ code, where the actor’s goal is explained in terms of how future actions (plans) contribute to achieving that goal. This explanation, provided in terms of future actions, offers insights into potential actions and their execution (?). We believe that people tend to explain in terms of future actions as a way to resolve uncertainty. Causality is also frequently involved, as observers often associate causal relationships to generate explanations.

3.6.4 Questions

In human studies, two forms of causal reasoning are used to answer certain questions (?): retrospective reasoning involves explaining past events through counterfactual reasoning, which considers what could have happened if the observed facts were different, prospective reasoning involves explaining future events through transfactual reasoning, which considers what could happen in the future if certain conditions are met.

In the Sokoban game, we asked two questions: ‘Why?’ and ‘Why Not’, since it has been proved that they are the most demanded explanatory questions (?). ”Why” questions typically demand contrastive explanations, which are addressed through counterfactual reasoning (?). In such explanations, people answer ‘Why A’ in the form of ‘Why A instead of B?’, where B is some counterfactual goal(s) that did not happen. From the data collected, we classified the provided contrastive explanations into three categories: implicit, where observers implicitly contrast and identify relevant causes for A (the predicted goal(s)); explicit, where observers explicitly contrast and identify relevant causes for B (the counterfactual goal(s)); and extensive, where observers provide explanations for both by identifying relevant causes for A and also for B. It is important to note that the observer answered a “why” question in the ‘why’ condition, a “why not” question in the ‘why not’ condition, and both questions sequentially in the ’dual’ condition.

Figure 6 illustrates the differences between conditions. In the dual condition, observers tended to adopt an implicit mode when they answered the why question–after having answered why-not—more frequently compared to the why’ condition. A similar trend was observed for the why-not question in the dual condition, where observers tended to adopt an explicit mode when they answered the why-not question–after having answered why —more frequently compared to the Why-not condition. The contrastive nature of these explanations becomes particularly evident in the ’dual’ condition, where observers can differentiate between the two types of questions.

Participants primarily engaged in transfactual reasoning when they were highly uncertain about the agent’s goal. This uncertainty is a result of the agent’s observed behavior being suboptimal (irrational) to all goal hypotheses. For example, from the dataset: ”If the player keeps pushing the two boxes together, it would be impossible for box 2 to be put back onto a goal.”

The key codes highlight the important concepts used to explain goal recognition. Additionally, we coded implicit questions that shoutcasters tried to answer through their predictions.

In the StarCraft game, there are no pre-specified questions for the observers (shoutcasters) to answer. Instead, they gather information and craft explanations to address the audience’s implicit questions. We coded implicit questions that shoutcasters tried to answer through their predictions. Table 7 shows these questions and their frequencies. The shoutcasters are primarily focused on reasoning prospectively, addressing the ”What could happen?” question allows them to anticipate future events. Despite the time constraints, they managed to provide reasoning for their predictions by answering other questions of interest.

The most frequently answered question is the “Why?” question, involving retrospective reasoning over past events that mostly influence goal prediction. They further support their predictions by anticipating factors that control the context, allowing them to prospectively answer the “How?” question through future projections.

3.6.5 Discussion

Causal reasoning is essential for constructing mental representations of events (?, ?, ?). These representations form causal chains that illustrate how a sequence of causes leads to an outcome. In the realm of explainable AI, an agent aiming to explain observed events may need to use abductive reasoning to identify a plausible set of causes (?). While numerous causes can contribute to an event, individuals typically select a subset they deem most relevant for their explanation (?).

Our human studies focus on understanding how people explain others’ predicted goals based on observed behavior. By coding these explanations, we identify a key concept, referred to as an ’observational marker’ that participants use to build their explanations. Our findings align with social and cognitive research indicating that people prefer explanatory causes that seem sufficient in the given context for the event to occur (?, ?, ?, ?).

People make causal inferences about others’ beliefs and goals based on their observed behavior and prior domain knowledge (?). A key aspect to explain those inferences is the ability to decide to what degree the observed evidence from a causal chain supports a goal hypothesis. To this end, we propose an explanation model for GR agents based on concepts such as causality and observational markers.

4 Preliminaries

In this section, we provide the essential background needed to follow the rest of the paper.

4.1 Planning

Planning aims to find a sequence of actions given an environment model, a current situation, and the goal to be achieved (?). The concept of planning is key to understanding GR algorithms that use planners in the recognition process. We build upon the following planning problem definition as defined in (?):

Definition 4.1.

A planning task is represented by a triple , in which is a planning domain definition that consists of a finite set of facts that define the state of the world, and a finite set of actions; I is the initial state, and is the goal state. A solution to a planning task is a plan that reaches a goal state from the initial state I by following transitions defined in . Since actions have an associated cost, we assume that this cost is 1 for all actions.

4.2 Goal Recognition (GR)

Goal recognition (GR) involves identifying an agent’s goal by observing its interactions within an environment (?). We consider GR definition as defined by (?).

Definition 4.2.

A goal recognition problem is a tuple , in which is a planning domain definition where and are sets of facts and actions, respectively; is the initial state; is the goals set, and is a sequence of observations such that each is a pair composed of an observed action and a fact set that represent the state . A solution to a GR problem is a probability distribution over giving the corresponding likelihood of each goal, i.e. the posterior probability for each . The most likely goal is the one whose generated plan “best satisfies” the observations.

4.2.1 The Mirroring GR Algorithm

We focus on providing explanations for the output of the Mirroring GR algorithm (?, ?). However, our approach is agnostic of the underlying GR algorithm and will work for any GR algorithm that fits Definition 4.2. The Mirroring algorithmis inspired by people’s ability to perform online GR, originating from the brain’s mirror neuron system, which is responsible for matching the observation and execution of actions (?). The approach falls under the plan recognition as planning GR methods (?, ?) and uses a planner within the recognition process to compute alternative plans.

Specifically, the Mirroring algorithm uses a planner to compute optimal plans from an initial state to each goal and to compute suffix plans from the last observation to each goal . Observations are processed and evaluated incrementally. These suffix plans are then concatenated with a prefix plan (the observation sequence at time step ) to generate new plan hypotheses. The algorithm subsequently provides a likelihood distribution, i.e., posterior probabilities for each by evaluating which of the generated plans, incorporating the observations , best matches the optimal plan. The following example illustrates The Mirroring approach to solving a GR task.

Example 4.1.

Figure 8 presents a navigational domain where an agent can navigate through the unblocked grid to reach one of 3 possible goal locations. The GR task is composed of an initial state where an agent at the start is located (marked ), a set of goal hypotheses, , and a sequence of observations (represented as blue arrows). The domain definition includes a fact set comprising the cells (45 states in total) and an action set defined by four types of moves: up, down, left, and right, all with equal cost. The domain model is deterministic and discrete, meaning each action has only one possible outcome — although our model does not assume deterministic actions. A goal state specification is defined as the agent being in one of the three possible goal cells. Thus, the Mirroring GR would infer that, most likely the agent’s goal is to reach since the observed sequence confirms the optimal plan to achieve this goal.

4.3 Weight of Evidence (WoE)

The principle of rational action (?) states that people explain goal hypotheses by assessing the extent to which each observed action contributes to a specific goal hypothesis over others. Building on this idea, ? (?) defines a causal explanation as the set of features most responsible for an outcome. By incorporating this approach, we model our explanation framework using the Weight of Evidence (WoE) concept.

Weight of Evidence (WoE) is a statistical concept used to describe the effects of variables in prediction models (?). It is defined in terms of log-odds (see supplementary material) to measure the strength of evidence supporting a hypothesis against an alternative hypothesis , conditioned on additional information . Assuming uniform prior probabilities111The derivation of the formula for non-uniform priors is provided in Appendix B., WoE is expressed as:

| (1) |

Melis et al. (?) propose a framework based on WoE for explaining machine learning classification problems, arguing that it aligns with how people naturally explain phenomena to one another (?). They found that WoE effectively captures contrastive statements, such as evidence for or against a particular outcome. This helps answer questions like why a goal is predicted, why not goal , and what should have happened instead if the goal is . We adopt this concept and apply it to GR problems.

5 eXplainable Goal Recognition (XGR) Model

Building on insights from the human studies discussed in Section 3, we propose a simple and elegant explainability model for goal recognition algorithms called eXplainable Goal Recognition (XGR). This model extends the WoE framework to generate explanations for goal hypotheses. In the following section, we use the navigational GR example (Example 4.1) as a running example to support the definitions.

5.1 Overview

Extending ?’s (?) WoE framework, our model addresses ‘why’ and ‘why not’ questions, which are the most demanded explanatory questions (?). ? (?) compared a range of intelligibility-type questions and showed that explanations describing why a system behaved in a certain way resulted in better understanding and increased trust in the system, which is also supported by our findings in Section 3.6.4 (Figure 7).

The model accepts four components as an input, which any GR model can provide:

-

1.

The observed action sequence .

-

2.

The set of predicted goals, .

-

3.

The set of counterfactual (not predicted) goals, , where and .

-

4.

The posterior probabilities and for each , , and .

We define an explanation as a pair containing: (1) an explanandum, the event to be explained (i.e., goal hypotheses); and (2) an explanan, a list of causes provided as the explanation (?). The model answers two questions: ‘Why goal ?’, where is a predicted goal hypothesis, and ‘Why not goal ?’, where is a counterfactual goal hypothesis. Assuming a full observation sequence, the explanation is given as a list of an observed action and its WoE value. Given that list, the explanation selection is based on the type of question to be answered.

5.2 Explanation Generation

We generate explanations by extending the WoE framework presented by ? (?). By generating explanation lists using WoE, we can measure the relative importance of each observation to the goal hypotheses. This approach enables us to explain using the observational marker, which is the predominant concept used in the explanation of the agent’s goals (refer to Section 3.6.3).

Referring to Equation 1, we substitute the hypotheses and with a predicted goal and counterfactual goal hypotheses, and . The evidence is replaced by an observed action and the posterior probabilities are represented as and . A complete explanation is defined as follows:

Definition 5.1.

A complete explanan for a goal is a list of pairs , in which the conditional for each paired hypothesis and is computed for each added observation to the observed sequence . The WoE is computed as follows:

| (2) |

Input: , , , and over

Output: Explanation list of all paired with

Informally, this defines a complete explanan for a goal as the complete list of computed WoE scores for each observation. An algorithm for extracting this is shown below (Algorithm 1).

In the navigational GR scenario presented previously (Figure 8), the WoE would be the same for all goal hypotheses after the first three observations, to . This is because the Mirroring GR algorithm predicts them as equally likely since the first three observations are part of the optimal plan to achieve all three goals. However, this uniformity does not hold for the rest of the observation sequence. For the observations to , the Mirroring GR outputs would be goal and as equally likely since the observed actions are consistent with the optimal actions needed to reach either goal, with the counterfactual goal being . Table 4 presents the posterior probabilities and WoE values associated with either or as the leading goal candidate. The model computes the WoE value of each observed action for the pair of the predicted and counterfactual goals.

5.3 Explanation Selection

Explaining the output of a GR algorithm in terms of the complete observation sequence can be tedious or even impossible, especially in scenarios where the domain model contains hundreds of thousands of states and actions. XAI best practice deems that for explanations to be effective they should be selective, focusing on one or two possible causes instead of all possible causes for a decision or recommendation (?). In the context of GR explanations, we found that people pointed to the observational marker and the counterfactual observational marker when they answered ‘why’ and ‘why not’ questions (refer to Sections 3.6.2, and 3.6.3). To this end, we focus on selecting explanations to answer the ‘Why g?’ and ‘Why not g’?’ questions.

| 0.36 | 0.27 | 0.28 | |||

| 0.36 | 0.27 | 0.28 | |||

| 0.38 | 0.23 | 0.51 | |||

| 0.38 | 0.23 | 0.51 | |||

| 0.40 | 0.20 | 0.69 | |||

| 0.40 | 0.20 | 0.69 |

5.3.1 ‘Why’ Questions

Answering why goal ? questions, such as Why was goal predicted as the most likely goal over all other alternatives?, rely on identifying the most important observation(s) that support the achievement of that goal. We call such observations observational markers (OMs).

Definition 5.2 (Observational Marker).

Given a complete explanan of , the observational markers (OMs) are the observed actions that have the highest WoE value:

| (3) |

It is generated for every possible alternative and in case of having multiple such actions, we select them all. Consider the navigational GR scenario presented in Figure 8. Let us answer the question Why ?. From the complete explanan of , shown in Table 4:

After ranking them from highest to lowest, we obtain that has the highest value. This indicates that this observation is the , as in the observation that best explains the predicted goal hypothesis instead of the counterfactual goal hypotheses, . Therefore the explanation would be Because the agent has moved up from cell 26 to cell 17.

5.3.2 ‘Why Not’ Questions

The question of why not ? relies on identifying the most important observation(s) related to , which are called counterfactual observational markers.

Definition 5.3.

Given a complete explanan of , the counterfactual observational markers (counterfactual OMs) are the observation(s) that have the lowest WoE value:

| (4) |

There may be multiple such observations, in which case we select them all. Consider the navigational GR scenario (Figure 8) and the question Why not and ? From the complete explanation of and , shown in Table 4:

After ranking them from lowest to highest, we obtain as the lowest value for and as the lowest value for . This indicates that these observations are the counterfactualOM, the observations that best explain the counterfactual goal hypotheses, . Therefore, the explanation would be: Because the agent moved right from cell 23 to cell 24, away from , and up from cell 26 to cell 17, away from .

Counterfactual Action

Pointing to the lowest WoE action is not enough to answer why not . Part of answering ‘why not’ questions is the ability to reason about the counterfactual plan that should have occurred instead of counterfactual OM for to be the predicted goal (refer to Section 3.6.2).

Building on this idea, we obtain the counterfactual action that should have happened instead of the observed one by planning the agent’s route to and simply taking the first action. We approach this problem by generating a plan for from the state that precedes obtaining the counterfactual OM, the state from which the lowest WoE is measured. We define the counterfactual action as follows.

Definition 5.4.

Given a counterfacual OM at state for counterfactual goal , a counterfactual action is the first action from the plan that is generated by solving the planning problem , where is the planning domain.

Consider again the example from Figure 8. The counterfactual action to would be the move up action from cell 23 to 14, and to would be the move right action from cell 26 to 27 (as indicated by the red arrows). Verbally, the answer to ”Why not goal ?” would be: Because the agent moved right from cell 23 to cell 24. It would have moved up from cell 23 to 14 if the goal was , and to ”Why not goal ?” would be: Because the agent moved up from cell 26 to cell 17. It would have moved right from cell 26 to 27 if the goal was

As noted in the navigational example (Figure 8), the counterfactual action for which is moving right from 26 to 27 (represented as a red arrow) is part of a suboptimal plan to (moving right to 27, up to 18, up to 9, and left to ). The framework operates by identifying the lowest observed evidence for a goal against goal at time step , and generating the counterfactual action from that point towards , even if that action is part of a plan for .

6 Evaluation

In this section, we present a comprehensive analysis of the XGR framework as obtained through a combination of a computational study and three user studies to assess the effectiveness of our model.

6.1 Computational Evaluation

We evaluate the computational cost of the XGR model over eight online GR benchmark domains (?) to determine whether the cost of our approach is suitable for real-time explainability. The benchmark domains vary in levels of complexity and size, including different numbers of observations and goal hypotheses. We measure the overall time taken to run the XGR model. As the explanation model uses an off-the-shelf planner for counterfactual planning, we also separate the planner’s cost and the explanation generation and show its effect on overall model performance.

Table 5 presents the run time performance of the XGR model over the benchmark domains. The run times vary greatly depending on the complexity of the domain, ranging from an average of 0.15 seconds over the 15 problems in the relatively simple Kitchen domain, to 221.77 seconds over the 16 problems in the complex Zeno-Travel domain (column 1). Regardless of the run time, adding our explainability model to the GR approach is typically not expensive, adding an increase of between 0.2%-45% (column 3). However, most of this increase can be attributed to calling the planner to generate counterfactual explanations (column 4). We can see that between 70%-99% of the XGR model run time is spent on planning. The varying percentage increases between domains like Zeno-Travel and Kitchen emphasize the relationship between domain complexity and planning time: the higher the domain complexity, the greater the influence the planner has. This highlights the significant impact of planner selection on the model performance. Since our model is independent of the underlying GR model, it has the potential to scale effectively with the integration of more efficient planners, such as domain-specific planners.

|

|

|

|

|

||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

0.21 (0.08) | 0.019 (0.017) | 10.15 | 87.11 | ||||||||||

|

71.22 (36.16) | 6.276 (8.070) | 09.66 | 99.69 | ||||||||||

|

0.69 (0.36) | 0.215 (0.087) | 44.61 | 70.18 | ||||||||||

|

0.14 (0.07) | 0.014 (0.002) | 11.12 | 73.61 | ||||||||||

|

135.23 (73.11) | 3.710 (7.271) | 02.82 | 99.64 | ||||||||||

|

16.76 (10.05) | 1.794 (10.052) | 11.98 | 99.27 | ||||||||||

|

109.12 (22.61) | 1.636 (2.861) | 01.52 | 98.72 | ||||||||||

|

221.77 (68.85) | 8.856 (11.721) | 04.15 | 99.65 |

6.2 Empirical Evaluation

We consider human studies experiments essential to the XGR model evaluation and conduct three human studies. The studies were conducted after obtaining institutional HREC approval.

6.3 Study 1 - Generating Human-Like Explanations

Our first study evaluates whether the model output is grounded on human-like explanations.

6.3.1 Methodology and Experiment Design

We used the method of annotator agreement and ground truth, where human annotations of representative features provided the ground truth for quantitative evaluation of explanation quality (?).

Task Setup

In this study, participants interacted with the output of the Mirroring GR algorithm across a series of problems within the Sokoban game domain (refer to Section 3.3 for additional details). The game was divided into three versions:

-

•

Game Version 1: Required the delivery of a single box to a single destination.

-

•

Game Versions 2 and 3: Involved delivering two boxes to two sequential destinations, with interleaved plans to achieve each goal. The key distinction between these versions was that, in version 3, the agent could push multiple boxes, whereas in version 2, the agent could only push one box at a time.

This progression shifted the task from a straightforward navigation challenge to a more strategic one, where the player needed to manage multiple goals while striving to minimize the number of steps taken. Each game version included five scenarios of varying complexity, for a total of 15 scenarios. Each scenario presented a different goal recognition problem, with multiple competing goal hypotheses. Participants were required to answer ”why” and ”why not” questions regarding the predicted and counterfactual goal sets.

Procedure

Each experiment lasted approximately 60 minutes and included the following four stages:

-

1.

The game instructions were introduced to the annotators, along with a training scenario to help them understand the task.

-

2.

The annotators watched a partial scenario (video clip) in which a Sokoban player attempted to achieve a goal (see Figure 9). The goals involved either delivering/pushing a box to a single destination cell or delivering/pushing two boxes to two different destination cells.

-

3.

After watching the incomplete observation sequence, annotators were given the set of predicted and counterfactual goals generated by the Mirroring goal recognition algorithm.

-

4.

The annotators were asked to identify the most important action, or optionally the two most important actions, from the observation sequence that addressed the questions: ’Why goal ?’ and ’Why not goal ?’ Here, was the predicted goal, and was the counterfactual goal. Additionally, participants were asked to annotate a counterfactual action for ’Why not goal ?’. This involved proposing a non-observed action that they believed would indicate a move towards the alternative goal (see Appendix C1. for an example scenario screenshot).

Participants

We recruited three annotators (one male, two female) from the graduate student cohort at our university. Participants were aged between 29 and 40, with a mean age of 33. No prior knowledge of the task was required.

Metrics

Using a majority vote, we combined participants’ annotations into a single ground truth. Disagreements between annotations were typically resolved by an extra annotator. Then we calculated the mean absolute error (MAE) to assess how closely the obtained explanation of the XGR model matched the ground truth, defined by the agreement between annotators. The MAE was calculated as the average difference between each ground truth value () and the corresponding XGR value () over the length of the observation sequence (). These values will be discussed in the following section.

| (5) |

For evaluating the selection of a counterfactual action, since there is only one counterfactual action per plan, this was obtained by a binary agreement between the ground truth and the XGR model. For each domain, we calculated the percentage of agreements using the method described by (?):

| (6) |

6.3.2 Results

We applied our XGR model to the online Mirroring implementation for each of the 15 scenarios. To determine the value of (), we obtained the ranked explanation list from the XGR model based on the Weight of Evidence (WoE) values. For the question ‘Why goal ?’, we ranked the explanations in descending order of their WoE values, assigning a rank of 1 to the explanation with the highest WoE (Observational Marker, OM). Similarly, for the question ”Why not goal ?”, we ranked the explanations in ascending order of their WoE values, assigning a rank of 1 to the explanation with the lowest WoE (counterfactual OM). Using ground truth obtained through human annotation, we matched these ranks with their equivalents in the ranked explanation list for each question to determine . We then calculated the Mean Absolute Error (MAE) for each question and across all 15 scenarios.

| WhyQ | WhyNotQ | |

|---|---|---|

| 1 | 6 | |

| 2 | 5 | |

| 3 | 4 | |

| 4 | 4 | |

| 5 | 3 | |

| 6 | 2 | |

| 7 | 1 | |

| 0 | 0 |

Example 6.1.

Consider the example in Figure 9. We obtained the ranked explanation list for both questions from our model (Table 6). The annotated actions from the ground truth are and , which explain ‘Why AND ?’, and , which explains ‘Why not ( AND ) OR ( AND )?’. We then determined (), which is the equivalent rank of the annotated action in the list. For the ”Why” question, these values are and , and for the ”Why not” question, the value is .

The Mean Absolute Error (MAE) is the average of the errors; hence, the larger the number, the larger the error. An error of 0 indicates full agreement between the models.

The results of the comparison are presented in Table 7. Each row shows the MAE calculated for each game scenario. The ‘Why’ and ‘Why not’ columns represent the MAE for our model compared to the human ground truth, while the CF(%) column represents the percentage of counterfactual action explanations that agree with the human ground truth.

For the majority of instances, the XGR model agreed with the ground truth obtained through human annotation. When answering ‘Why ?’ questions, the model had a full agreement with the ground truth in 11 out of the 15 scenarios (73.3%). For ‘Why not ?’ questions, the model had a full agreement with the ground truth in 14 out of the 15 scenarios (93.3%). By ‘full agreement’, we mean that the human annotators identify the same two actions as the primary explanation.

The CF column represents the percentage of counterfactual action explanations that agree with the human ground truth. Higher values are better, with 100% indicating full agreement with the ground truth counterfactual actions. The model achieved full agreement in 11 out of the 15 scenarios (73.3%), demonstrating excellent performance.

| Game | Scenario | Why | Why Not | CF (%) |

| 1 | S1 | 0.00 | 0.00 | 100 |

| S2 | 0.00 | 0.00 | 100 | |

| S3 | 0.37 | 0.00 | 100 | |

| S5 | 0.25 | 0.00 | 100 | |

| S5 | 0.00 | 0.00 | 100 | |

| 2 | S1 | 0.00 | 0.00 | 66.6 |

| S2 | 0.00 | 0.12 | 33.3 | |

| S3 | 0.00 | 0.00 | 100 | |

| S4 | 0.00 | 0.00 | 100 | |

| S5 | 0.00 | 0.00 | 33.3 | |

| 3 | S1 | 0.50 | 0.00 | 100 |

| S2 | 0.00 | 0.00 | 50 | |

| S3 | 0.00 | 0.00 | 100 | |

| S4 | 0.00 | 0.00 | 100 | |

| S5 | 0.44 | 0.00 | 100 | |

| Mean | 0.10 | 0.008 | 89.40 | |

| SD | 0.65 | 0.031 | 00.25 |

To better understand the performance of our model we investigated scenario 5 in game 3, which has a relatively high Mean Absolute Error (MAE) for the Why goal ? question, we refer to Figure 9. In this scenario, the agent delivers 2 boxes to 2 different locations and can push 2 boxes simultaneously. The blue arrows in the figure represent the observation sequence, indicating that the agent started at the blue circle and followed the arrows to its current location.

In this scenario, the most likely goal candidate, as predicted by the Mirroring GR algorithm, was delivering Box1 to and Box2 to , i.e., . The counterfactual goal candidates involved delivering the boxes to either and or and , with . It is important to note that to push both boxes simultaneously to goal , the agent would need to stand to the right of the boxes. Conversely, to push both boxes simultaneously to either or , the agent would need to position itself to the left of the boxes.

The XGR model’s explanation for the Why goal ? question is highlighted by the green, hollow circle in the figure. This observation suggests that the agent aims to position itself to the right of both boxes, confirming the hypothesis that the goal is . Considering the agent’s ability to push multiple boxes, this observation constitutes the Observation Model (OM) with the highest Weight of Evidence (WoE).

On the other hand, the annotators established the ground truth explanation by choosing the second observation, , for both the Why goal ? and Why not ? questions. According to our model, this observation is the one with the lowest Weight of Evidence (WoE), actually making it the counterfactual observation and the answer to the question Why not ? This is because this observation moves away from both goals and .

Participants choosing to use the same answer for both Why goal ? and Why not ? questions can also be found in other instances of discrepancies between the output of our model and the ground truth. The difference in explanations can be attributed to some confusion and/or preference between why? and why not? questions on the part of the participants.

To address this, we conducted a follow-up experiment where we presented the participants with the scenarios they were confused with (Table 7, scenarios with bold values). For each scenario, we provided them with explanations from two systems: the first system’s explanation from our model, and the second system’s explanation from the ground truth. We then asked them which system provided a better explanation. All three participants preferred the explanations provided by our model which leads us to our model’s explainability as perceived by users.

6.4 Study 2 - Perceived Explainability

The second human subject experiment aimed to evaluate the explainability of our model. We considered the following two hypotheses for our evaluation: 1) Our model (XGR) leads to a better understanding of a GR agent; and 2) A better understanding of a GR agent fosters user trust.

6.4.1 Experiment Design and Methodology

Conditions

We conducted a between-subjects study in which participants were randomly assigned to one of two conditions: 1) the explanation model (XGR), where an explanation was provided for the GR output; 2) the No Explanation model, where no explanation was provided for the GR output. We did not include a baseline for another explanation method due to the lack of existing alternatives.

Task Setup

We used the Sokoban game as our test bed, following the same task setup as in our previous study (refer to Section 6.3). Participants engaged with the output of the Mirroring GR algorithm across a series of problems within the Sokoban game domain. For each problem, we used a STRIPS-like discrete planner to generate ground truth plan hypotheses based on the domain theory and observations. Participants were then tasked with predicting the player’s possible goal based on the observed behavior. Following this, they rated their trust on a 5-point Likert scale (?). In the XGR condition, participants also used a 5-point Likert scale to rate the given explanation according to their satisfaction with it (?).

Procedure

Participants were presented with six partial scenarios (video clips) showing a Sokoban player attempting to deliver boxes to designated locations. The experiment was divided into four phases:

-

1.

Phase 1: Collection of demographic information and participant training. Using two video clips, the participant is trained to understand the player task and how to use GR and explainable system outputs.

-

2.

Phase 2: Presentation of a 10-second video clip of the Sokoban player’s actions, along with the GR system output. Participants were asked to predict the agent’s goals. For the No Explanation condition, participants made predictions without receiving any explanations. In the XGR condition, explanations for ‘why’ and ‘why not’ questions were presented (see Appendix C2. for example scenarios of the two conditions). Explanations were pre-generated by our algorithm and displayed on an annotated image of the video clip’s final frame. The explanations were converted into natural language using a template, as exemplified in Figure 10.

-

3.

Phase 3: Completion of the trust scale by participants.

-

4.

Phase 4 (XGR condition only): Completion of the explanation satisfaction scale.

Participants

Prior to running the study, we performed a power analysis to determine the needed sample size. We calculated Cohen’s F and obtained an effect size of 0.35. Using a power of 0.80 and a significance alpha of 0.05, this resulted in a total sample size of 60 for the two conditions. We therefore recruited a total of 70 participants from Amazon MTurk, allocated randomly and evenly to each condition. To ensure data quality, we recruited only ’master class’ workers whose first language is English and who have at least a 98% approval rate on previous submissions. After excluding inattentive participants, we obtained 65 valid responses (No Explanation: 33, XGR: 32). Demographics included 28 males and 37 females, aged between 20 and 69, with a mean age of 40. Participants were compensated $4.00 USD, with an additional bonus of $0.20 USD for each correct prediction, up to a total of $1.20 USD.

Metrics

To test the first hypothesis, we used the task prediction method as described by ? (?), which acts as a proxy measure for user understanding. Participants were scored one point for correct prediction and penalized one point for incorrect prediction. For the second hypothesis, we used the trust scale from ? (?), where participants rated their trust on a 5-point Likert scale ranging from 0 (Strongly Disagree) to 100 (Strongly Agree) based on four metrics. To evaluate the subjective quality of the explanations, participants used the Explanation Satisfaction Scale from ? (?), a 5-point Likert scale ranging from 0 (Strongly Disagree) to 100 (Strongly Agree) based on four metrics.

Analysis Method

After conducting a homogeneity test to assess the variance of the collected data, we proceeded with Welch’s t-test.

6.4.2 Results

Hypothesis 1: Our model (XGR) leads to a better understanding of a GR agent

Figure (11) presents the variance in task prediction scores for the two models. A Welch’s t-test indicated a significant difference (p-value ) in favor of the XGR cohort and the No Explanation cohort. These results suggest that our model (XGR) provides a significantly better understanding of the agent’s behavior compared to the baseline model, as evidenced by the task prediction scores. Therefore, we accept our first hypothesis.

| Understanding | Satisfying | Sufficient Detail | Complete |

| 88.93 (10.8) | 86.09 (12.2) | 87.93 (13.1) | 86.15 (19.6) |

Table 8 shows the mean and standard deviation of the explanation quality metrics for the XGR model on a Likert scale. Higher values indicate stronger agreement. These results suggest a satisfactory level across all four metrics.

Hypothesis 2: A better understanding of a GR agent fosters user trust

Figure (12) illustrates the Likert scale data distribution of the participant’s perceived level of trust across the two models. A Welch t-test yielded p-values () for the trust metrics (Confident, Predictable, Reliable, and Safe) respectively. These results indicate a significant difference in the Reliable and Safe, and a marginally significant difference for the Confident and Predictable metrics. Thus, we also accept our second hypothesis.

6.5 Study 3 - Effectiveness in Supporting Decision-Making

This study aims to evaluate our model within the context of human-AI decision-making, focusing on several key hypotheses for empirical assessment. Specifically, we intend to investigate the impact of incorporating counterfactual explanations into our model. Our hypotheses are as follows: 1) Decision Accuracy: We hypothesize that our model enhances decision-making performance; 2) Decision Efficiency: We anticipate that the model will contribute to greater overall efficiency in task completion; 3) Appropriate Reliance on the GR Model: We propose that our model will promote more appropriate reliance on the GR model; and 4) User Trust: We expect that our model will lead to increased trust in the GR agent. For this hypothesis, the aim is to further validate our findings (refer to Section 6.4.2) in a different context. Additionally, we seek to address the research question: How do participants’ reasoning processes vary across the four conditions?

Conditions

We conducted a between-subjects study where participants were randomly assigned to one of four conditions:

-

•

NoGR: Participants made their decisions with no goal recognition output received.

-

•

GR: Participants made their decisions with goal recognition output received.

-

•

XGR_WoE: Participants made their decisions with goal recognition output received along with explanations of our XGR model based on WoE only.

-

•

XGR_WoE_CF: Participants made their decisions with goal recognition output received along with explanations of our XGR model based on both WoE and counterfactual explanations.

We have the NoGR condition to understand the impact of AI assistance on decision-making processes. In addition, we decompose our model into XGR_WoE, where the explanation is generated based on observational markers only, and XGR_WoE_CF, where explanations are generated based on both observational markers and counterfactuals, to facilitate comparison.

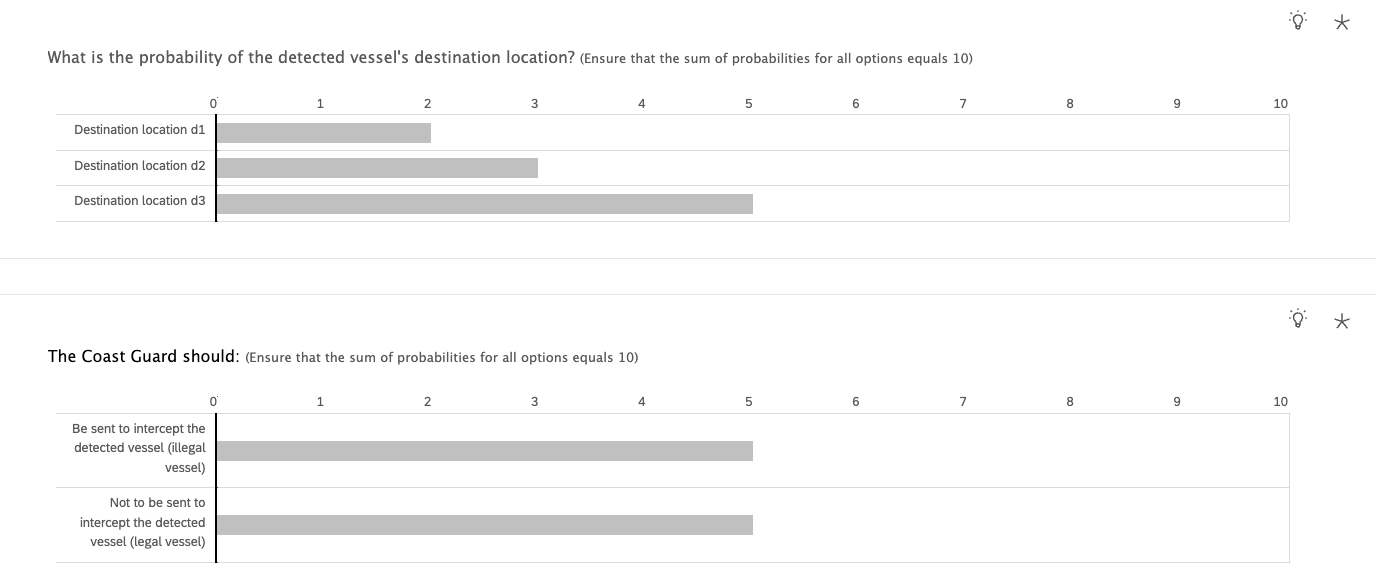

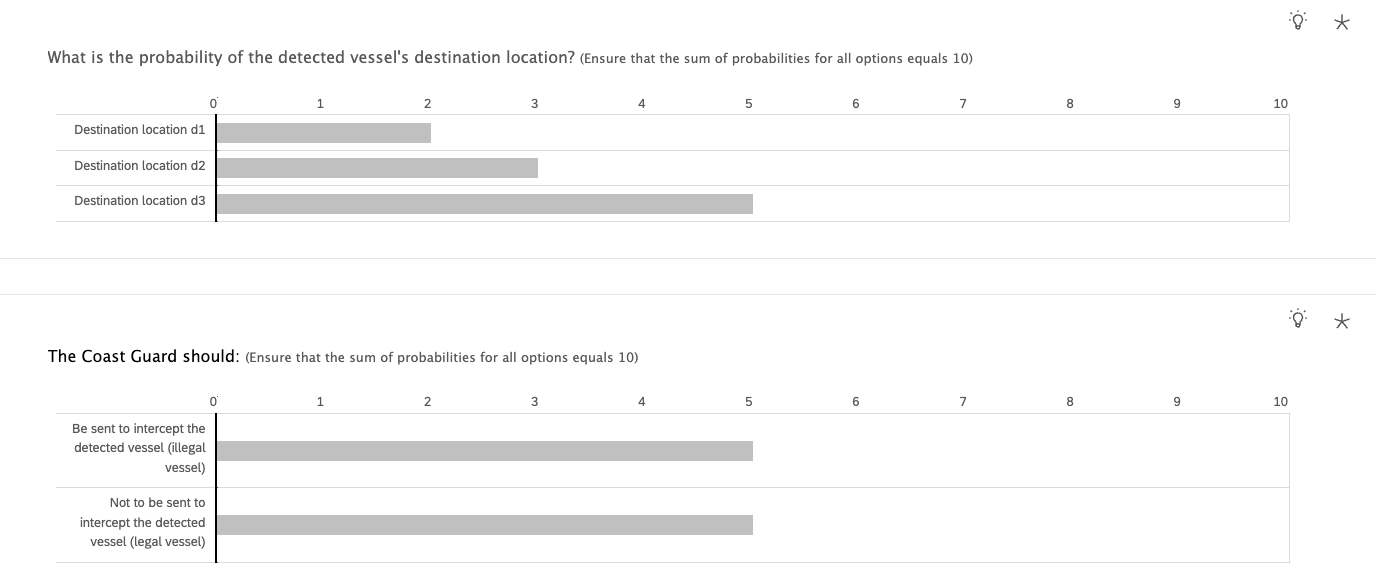

Task Setup

This time we opted to test our model on a more complex domain, inspired by real-world applications (?, ?). We used a maritime domain as our test bed and designed eight scenarios to assess whether a vessel was involved in illegal maritime activities. In the eighth scenario, we introduced two detected vessels (8a, 8b), adding a level of complexity in dealing with multiple vessel interactions within the same environment. The scenarios include invading prohibited areas, deliberately avoiding surveillance areas, or concealing illegal operations by turning off signals.