Towards Carbon-Neutral Edge Computing: Greening Edge AI by Harnessing Spot and Future Carbon Markets

Abstract

Provisioning dynamic machine learning (ML) inference as a service for artificial intelligence (AI) applications of edge devices faces many challenges, including the trade-off among accuracy loss, carbon emission, and unknown future costs. Besides, many governments are launching carbon emission rights (CER) for operators to reduce carbon emissions further to reverse climate change. Facing these challenges, to achieve carbon-aware ML task offloading under limited carbon emission rights thus to achieve green edge AI, we establish a joint ML task offloading and CER purchasing problem, intending to minimize the accuracy loss under the long-term time-averaged cost budget of purchasing the required CER. However, considering the uncertainty of the resource prices, the CER purchasing prices, the carbon intensity of sites, and ML tasks’ arrivals, it is hard to decide the optimal policy online over a long-running period time. To overcome this difficulty, we leverage the two-timescale Lyapunov optimization technique, of which the -slot drift-plus-penalty methodology inspires us to propose an online algorithm that purchases CER in multiple timescales (on-preserved in carbon future market and on-demanded in the carbon spot market) and makes decisions about where to offload ML tasks. Considering the NP-hardness of the -slot problems, we further propose the resource-restricted randomized dependent rounding algorithm to help to gain the near-optimal solution with no help of any future information. Our theoretical analysis and extensive simulation results driven by the real carbon intensity trace show the superior performance of the proposed algorithms.

Index Terms:

carbon-aware task offloading, inference accuracy loss minimization, carbon emission rights purchasing, -slot drift-plus-penalty methodologyI Introduction

With the rapid development of machine learning (ML) technique and the spread of mobile and Internet of Things (IoT) devices running artificial intelligence (AI) applications, using Deep Neural Network (DNN) model to infer ML tasks becomes increasingly popular [1, 2, 3]. However, devices usually have limited energy in the battery and are restricted by the physical size [4], thus failing to support the inference execution of ML tasks that need more computation resources. To efficiently compute ML tasks, one way is to utilize the resource-rich cloud server. However, transferring ML tasks through the wide area network (WAN) will incur extravagant energy consumption, let alone the expensive price of energy and high carbon intensity in the cloud server. Another way is offloading ML tasks to edge servers, which can take advantage of the green energy to achieve low-carbon computation at the network edge [5, 6, 7]. Unfortunately, the achieved inference accuracy using edge resources is usually worse than that in the cloud server owing to the limited resource. Hence, neither an edge-only nor a cloud-only solution is the best to support the low-carbon and high-accuracy ML inference services.

Additionally, to mitigate the problem of global warming incurred by carbon emissions, carbon emission rights (CER) trading (e.g., UN Carbon Offset Platform [8]), which can be viewed as an offsetting mechanism for trading carbon credits, has been adopted globally [9]. As for a CER trading scheme, the purchaser needs to purchase CER from a central authority or government body, and can only discharge a specific quantity of pollutants during a specific time. For example, there is a purchaser who is an operator owning multiple edge servers and a cloud server, and its’ annual CER is 6 million tons. Therefore, the operator’s all servers can only emit a total of 6 million tons of carbon per year. When the CER is not sufficient, the operator needs to purchase additional CER. Usually, the operator can purchase CER in the following two ways, including but not limited to: one is purchasing CER in the spot market in a timely manner for what the system needs (on-demanded) in a shorter period (e.g., seconds or minutes); the other is to purchase in the carbon future market in advance (on-preserved) in a specified period (e.g., minutes or hours). Obviously, for the same value of CER, the cost of the latter method is cheaper, which is consistent with the real situations in the market [10]. Usually, the operator has a limited budget to purchase CER, e.g. annual budget. Therefore, a proper policy that can be integrated with the above two methods is needed to obey the CER purchasing budget.

Carbon-aware ML task offloading in the collaborative edge computing framework, illustrated in Fig. 1, which coordinates resource-constrained but carbon-intensity lower edge servers and the resource-rich but carbon-intensity higher cloud server, will provide low-carbon and high-accuracy inference services. In this framework, ML tasks can be computed by edge servers or the cloud server. Considering the computing resource capacity, at each edge server, a tiny neural network (TNN) model (i.e., a lightweight compressed DNN model) [11] is deployed to reduce carbon emission, at the expense of higher accuracy loss. Edge servers can use green energy, e.g., solar energy [12], and thus the mixture of energy supplied to edge servers varies from site to site caused by the volatility of green energy [13]. As a result, the carbon intensity of such mixed energy fluctuates over time and across locations [14, 15]. At the cloud server, an uncompressed DNN model is deployed to achieve lower accuracy loss at the cost of higher carbon emissions. Usually, the cloud server is more carbon-intensive than edge servers. Clearly, total carbon emission in this framework can not exceed the purchased CER, thus the cost of purchasing CER should be below the operator’s budget (e.g., pre-defined hourly or daily). Therefore, it is imperative to design a proper policy to coordinate the two methods (i.e., on-demanded and on-preserved) to control the cost of purchasing CER. Besides, through ML tasks offloading between devices to servers, the performance (i.e., carbon emission and accuracy loss) of inference services will be enhanced.

Given the above intuition, we design carbon-aware ML task offloading with the CER purchasing budget to achieve the minimization of all ML tasks’ inference accuracy loss in a long-running time. Therefore, here comes our challenge: for the collaborative edge computing inference tasks, how to offload each of them (e.g., to which edge server or to the cloud server) and how to use diversified CER purchasing methods to minimize the total inference accuracy loss under the long-term time-averaged CER purchasing budget.

Unfortunately, it is tough to tackle such a problem. On the one hand, the accuracy loss with the cost of purchasing CER should be carefully weighed in an efficient manner, due to the dilemma that offloading ML tasks to edge servers may emit less carbon dioxide with the cost of higher accuracy loss, while choosing the cloud server may get better performance in inference accuracy at the expense of carbon emission. On the other hand, coordinating various ways of purchasing CER at multiple timescales is challenging, due to the fact that it is cheaper to on-preserved purchase CER but requires future information; in contrast, purchasing CER on-demanded is in a real-time manner to support the operator but with the higher price. What is more, the uncertainty and fluctuating on prices of CER bring a plethora of difficulties in obeying the long-term time-averaged CER purchasing budget.

To cope with these challenges and achieve green edge AI, we will make carbon-aware ML task offloading decisions and CER purchasing decisions to minimize accuracy loss under the CER purchasing budget only using current system statistics. To the best of our knowledge, our work is a low-carbon and high-accuracy early effort to green edge AI by harnessing spot and future carbon markets that optimizes ML tasks in the collaborative edge computing setup with an offloading function, facing the uncertainty and fluctuating of the carbon intensity in edge servers and CER purchasing prices within the constraint of a long time average CER procurement cost budget. The contributions are summarized below:

-

1.

Carbon-aware ML task offloading model: We propose a carbon-aware ML task offloading model in a collaborative edge computing environment, and formulate the long-term problem for low-carbon and high-accuracy inference services for ML tasks to achieve greening edge AI. Our goal is to minimize the total inference accuracy loss by making proper offloading decisions as well as efficient CER purchasing policies on the fly, subject to a long-term time-averaged CER purchasing budget.

-

2.

An online algorithm in multi-timescale to deal with coupled formulation: We extend the two-timescale Lyapunov optimization technique [16] to propose an efficient approximate dynamic optimization scheme of the two-timescale online algorithm (TTOA). It can properly coordinate with the multiple ways of CER purchasing through spot and future carbon markets and the carbon-aware ML tasks offloading in the collaborative edge computing framework. Considering the NP-hardness of the -slot problems, we further propose the resource-restricted randomized dependent rounding algorithm (R3DRA) to help to gain the near-optimal solution.

-

3.

Detailed theoretical analysis and trace-driven performance evaluation: The detailed theoretical analysis gives the rounding gap of R3DRA which is proportional to the optimal value, and TTOA achieves a fine-tuned trade-off between the accuracy loss and the CER purchasing cost. Extensive simulations show the excellent performance of our algorithms. Specifically, compared with the non-trivial baselines, our TTOA can achieve up to cost of purchase cost reduction with at most performance loss incurred based on the real carbon intensity trace.

The rest of this article is structured as follows. Related work of machine learning (ML) tasks offloading and CER purchasing is presented in Sec. II and the long-term problem of low-carbon and high-accuracy inference services for ML task offloading is shown in Sec. III. In Sec. IV, we give the illustration of the approximated dynamic optimization algorithm. The performance analysis including the rounding gap of optimal value and the achieved bound is presented in Sec. V. Performance evaluation driven by the real carbon intensity trace is performed in Sec. VI while the conclusion is illustrated in Sec. VII.

II Related work

There are many works toward achieving high inference accuracy in ML tasks offloading [17, 18, 19, 20]. Yang et al. [17] studied accurate live video analytics by introducing the coordination framework of end-edge-cloud with the aim of low latency and accurate analytics. To achieve high-quality and real-time performance through the synergy of device-edge, Li et al. [18] proposed a framework named Edgent which contains DNN partitioning and right-sizing. Wu et al. [19] focus on studying the sampling rate adaption. By exploiting deep reinforcement learning, Zhang et al. [20] proposed a new scheme of resource management to efficiently improve inference accuracy. The above-mentioned works enhance the inference performance but lack consideration of the varies of the CER purchasing price and the carbon intensity of edge servers and the cloud server.

Orthogonally, low-carbon computing has been widely studied to mitigate the problem of global warming caused by increasing carbon emissions. To reduce the carbon emission in a scheduling policy, Yang et al. [21] devoted to leveraging Lyapunov optimization to adapt to renewable energy’s nature of variable and unpredictable and design a scheduling policy based on carbon intensity. In [22], Yu et al. studied the minimization of the carbon footprint of the task offloading oriented by carbon footprint considering energy sharing as well as battery charging. Savazzi et al. [23] aimed at designing green federated learning which is distributed and the proposed framework can quantify the energy footprints and the carbon equivalent emissions. Lannelongue et al. [24] researched a methodological framework to help welly achieve the estimation of the computational task’s carbon footprint standardized and reliably. Works of [21] and [22] mainly focused on exploring the advantages of renewable energy and may be overly dependent on green energy, while works of [23] and [24] looked into the quantization of carbon footprints, the results of which may be less accurate and fail to be used in reality.

Carbon emission rights is proposed as a tradable commodity to achieve emission reduction target [25]. and the research efforts in this area are increasing. Guo et al. [26] researched the comparison of trading generation lights and carbon emission and found a correlation between these two. To exploit the determinants of the carbon emission price, Guan et al. [27] conducted an empirical analysis based on the VEC model. These studies, however, the first look of which is not buyers. From the perspective of buyers, i.e., operators, these works focused on the minimization of CER purchasing costs while achieving better service performance. In [28], Wu et al. studied the microgrid’s market model to trade electricity and CER while ensuring the total CER constraint. Zhang et al. [29] devoted to studying the closed-loop supply chain network’s equilibrium decision. These efforts fall short of exploring the advantages of the lower price in the carbon future market and the convenience in the spot market.

III System model and problem formulation

III-A System Model

The considered situation is shown in Fig. 1, where multiple Internet of Things (IoT) devices are offloading their machine learning (ML) tasks to edge servers and the cloud server. In analogy with the assumption in work [30][31], edge servers may have multiple energy supplies including the power of grid and green. Thus carbon intensity in edge servers may be cheaper and lower than in the cloud server and changes dynamically because it relatives to the weather. According to the resource capacity of edge servers, we assume that there is a tiny neural network (TNN) model maintained at each edge server to process ML tasks, and a deep neural network (DNN) model is placed in the cloud server to achieve higher inference accuracy. Intuitively, processing ML tasks at the cloud server may incur higher carbon emissions due to the high carbon intensity, and high energy consumption of transmission and processing energy. Although executing ML tasks in edge servers causes less carbon emission, the accuracy loss may be higher which reduces the performance. Besides, to reduce carbon emission, the operator has its carbon emission rights (CER) issued by the government or government agency which is not free of charge and would be bought in a timely manner (in the carbon spot market with a higher price) or in a specified period (in the carbon future market with a relatively cheaper price) 111https://en.wikipedia.org/wiki/Carbon emission trading.

The operator is assumed to run the system in a discrete-time horizon in which is the number of time frames (e.g., minutes or hours) each of which contains time slots (e.g., seconds or minutes). Therefore, the operator can purchase CER at each time slot on demand and at the beginning of each time frame on preserved. Generally, each ML task arrives in each time slot . Similar to the study [32], we construct each computation-intensive ML tasks ( is the set of arriving tasks at with ) with a two-parameter tuple, i.e., . Specifically, represents the input data size, and denotes the computation workload. Through respective wireless channels, devices offload their tasks to edge servers and the cloud server, here we denote as the total offloading locations, in which 0 denotes the cloud server and others denote edge servers. For each server, i.e., location , we denote as the processed accuracy loss. Note that is the accuracy loss processed by the TNN model in edge server or by the DNN model in the cloud server , and each location only maintains one model. As we have illustrated above, the accuracy loss achieved by the cloud server is lower than in edge servers. Besides, and are denoted to illustrate the energy consumption for ML task processing (in terms of processing per input data bit) in the edge server and the cloud server. The carbon intensity of edge server is denoted as while the carbon intensity of the cloud server is denoted as . We use and to denote the price of CER purchased on-preserved and on-demanded accordingly. Furthermore, we introduce , to illustrate the multi-timescale purchased CER amount in our collaboration system, respectively. We summarize the notations used in Table I.

| Notation | Description |

|---|---|

| the number of time frames | |

| the length of each time frame | |

| , | the indicator of each time slot |

| the total number of ML tasks at time slot | |

| the indicator of ML tasks | |

| the indicator of offloading locations | |

| the input data size of ML task at time slot | |

| the computation workload of ML task at time slot | |

| the total offloading locations | |

| the accuracy loss processed by location | |

| the resource price in edge server | |

| the resource price in cloud server | |

| the price of on-preserved CER | |

| the price of on-demanded CER | |

| the carbon intensity of edge server | |

| the carbon intensity of cloud server | |

| long-term time-averaged CER purchasing budget | |

| Lyapunov control parameter | |

| Variable | Definition |

| the on-preserved CER purchasing policy | |

| the on-demanded CER purchasing policy | |

| the ML task offloading decision |

III-B Online Control Decisions of CER Purchasing and ML Task Offloading

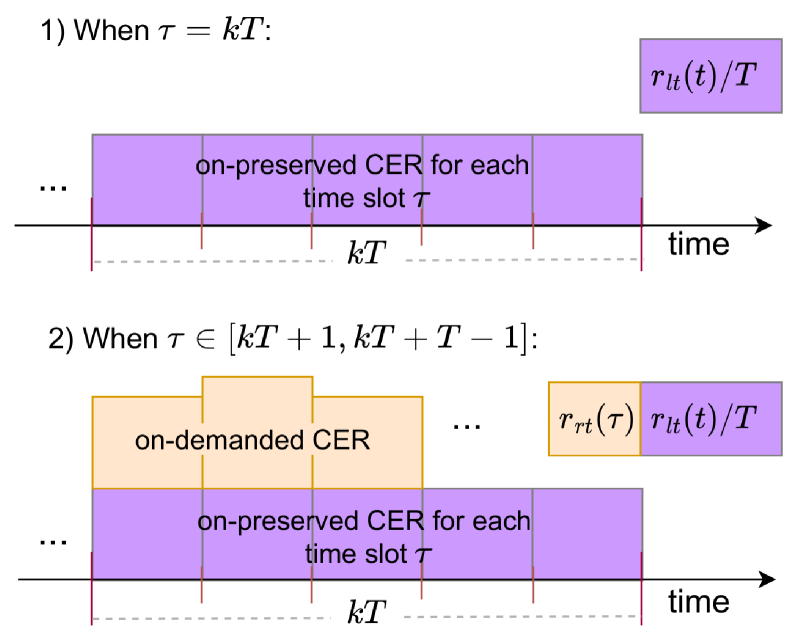

For each time frame , in the beginning, i.e., , the operator will adopt the system information including the energy consumption metrics of each edge server and of the cloud server, the carbon intensity of each edge server and of the cloud server, and the on-preserved CER purchasing price , then makes the decisions of CER purchasing on-preserved for the running of real-time slots in carbon future market. To avoid complicated deployment based on accurate but cost-significant forecast methods for on-preserved CER usage planning over multiple time slots in each time frame, the on-preserved CER in a time frame will be divided evenly, which means at time slot , there is preserved CER. The purchased amount of on-preserved CER can be optimized adaptively over time frames and the CER in need at each time slot can also be well supplemented by optimizing the on-demanded CER purchase. After the operator gets the information mentioned above and the on-demanded CER purchasing price , it decides how much amount of CER purchased in the carbon spot market. Note that, the price of CER at different timescales is not the same, i.e., , and it is difficult to maintain the right scale of CER in advance because of the difficulty of accurately capturing future demand.

Let be the binary indicator of ML task offloading () the task arriving at the beginning of to edge server or the cloud server .

In each time slot , for edge servers, according to the ML task ’s input size , the energy consumption is given by ; while for the cloud server, the energy consumption is given by . As we have mentioned, we use and to denote the carbon intensity of edge server and the cloud server, respectively. So the carbon emission is

| (1) |

And the cost of purchasing CER can be expressed as

| (2) |

The inference accuracy loss of all ML tasks can be expressed as

| (3) |

Our objective mathematical problem P1 is

| (4) |

And the constraints are shown below:

| (5) |

| (6) |

| (7) |

| (8) |

Constraint (5) denotes that is a binary variable that is one if and only if task is offloaded to location in time slot . Constraint (6) indicates that each ML task can be offloaded to only one location and constraint (7) shows that the physical resources in the edge server are restricted by . Constraint (8) indicates that the real needed CER cannot surplus the available CER. Besides, the cost of buying CER should not be beyond the following constraint:

| (9) |

However, it is not easy to minimize the inference accuracy loss (4) under the long-term time-averaged CER purchasing budget due to the unknown future system information and the coupling influence caused by the budget. Also, both the computing resource and CER constraints in (7) and (8) make the combinatorial nature of the ML task offloading decisions much more involved. Hence, an online algorithm is designed to coordinate the on-demanded and on-preserved CER purchasing methods in our work of minimizing problem P1, i.e., minimizing (4) subject to (5), (6), (7), (8) and (9) only using the current known information. Moreover, an efficient approximate scheme based on resource-restricted randomized dependent rounding is integrated into the two-timescale Lyapunov optimization to gain a near-optimal solution.

IV Approximated dynamic optimization algorithm

IV-A Problem Transformation

We first apply the Lyapunov optimization technique [16] to satisfy the carbon emission rights (CER) purchasing cost constraint in (9). Introducing a virtual queue for the operator:

| (10) |

Obviously, a larger value of means that the cost of purchasing CER exceeded the budget . Then we introduce the quadratic Lyapunov function

| (11) |

To further preserves system stability, the -slot conditional Lyapunov drift is defined as

| (12) |

Inspired by the Lyapunov optimization technique [16] which minimizes -slot drift-plus-penalty term, we further get:

| (13) |

here evaluates how much attention is paid to the minimization of inference accuracy loss compared with the queue stability. The motivation for such a control parameter is as follows: Keeping towards a lower congestion state means to bug fewer CER, and consequently leads to a larger accuracy loss. On the contrary, keeping at a smaller value to help us achieve a better performance in accuracy at the cost of the bigger value of queue. In the following, we will give Theorem 1 to further present the upper bound of (13).

Theorem 1.

For any value of , task offloading policy and CER purchasing policy , , the upper bound of (13) is

| (14) |

where and .

Due to the page limit, we leave the proof of Theorem 1 in Appendix A of [33].

According to the deterministic bound shown in Theorem 1, therefore, solving our original problem equals minimizing the right hand of (13) subject to (5), (6), (7) and (8).

Unfortunately, solving this problem needs knowing future information, e.g., the resource’s price and carbon intensity as well as the queue length information. Though the operator can predict future statistics, the results may be less accurate and the prediction errors may degrade the operator’s achieved performance which may need to be considered. Looking deeper into the , it depends on the CER purchasing decisions and . Hence, we propose to address the unknown information by making an approximation to set the future queue backlog values as the current value, i.e., when . After taking this approximation, the “loosened -slot drift-plus-penalty bound” is shown in the following theorem.

Theorem 2.

Let , and . The loosened -slot drift-plus-penalty bound of Theorem 1 satisfies

| (15) |

where .

We prove Theorem 2 in Appendix B of [33].

Substituting and into inequality (15), we finally get the final optimization objective P2

| (16) |

In the following, we first decompose problem P2 then design an online algorithm to deal with it.

| (17) | ||||

| (18) | ||||

IV-B Two-timescale online algorithm for P2

Algorithm 1 presents our algorithm explicitly. We ignore the term in Eq. (17) and Eq. (18) because these terms are constants at each real-time slot and have no influence on decisions. Besides, the variable and the price are ignored in Eq. (17) since we assumed that the price of CER purchasing on-preserved is always lower than on-demanded. That is to say, the operator will buy enough on-preserved CER which is cheaper, and does not need to bug any on-demanded CER at time slot . Since each slot’s is already known, and the expectation is conditioned on it, the expectation of Eq. (17) and Eq. (18) can be eliminated. As presented in Fig. 2, for each time frame , at the beginning , the operator devotes to solving problem P3 to obtain the on-preserved CER for each time slot in frame ; while at each time slot , the operator intends to solve problem P4 to get the on-demanded CER at each time slot.

Unfortunately, problem P3 and P4 both can be proven are NP-hard [34], to solve it, we will introduce an efficient approximated algorithm to get the near-optimal solution.

IV-C Approximate Algorithm for P3 and P4

There comes the idea that once the integral constraint (5) is relaxed into the linear constraint, the relaxed problem P3 (P4) becomes the standard linear programming (LP) problem and thus using simplex method [35] or other linear programming optimization technique such as [36] to easily solve. After getting the optimal fractional policies, we need a proper rounding method to get binary solutions while maintaining the capacity constraint (7) and CER constraint (8). Algorithm 2 shows our rounding algorithm in detail.

We first relax the integral constraint (5), and obtain the relaxed-P3 named problem as follows:

| (19) | ||||

In the same way, problem (relaxed-P4) is presented by:

| (20) | ||||

Then we invoke simplex method on solving () thus get fractional offloading decisions (i.e., ) and purchase amount of CER decisions (i.e., and ). For fractional offloading solutions , a straightforward way is use Pr to obtain binary decisions . However, using this method will sometimes incur infeasible solutions. For example, there may be a situation that all fractional solutions where equals all are rounded to 1, incurring the high cost of purchasing CER in cloud or violating constraint (7) of edge servers . Considering this deficiency, we are encouraged to exploit the dependence of fractional solutions to improve optimally while obeying the computing resource and CER constraints in (7) and (8) to design a proper resource-restricted randomized dependent rounding algorithm (R3DRA). Therefore, we will explore the dependence of decisions randomly. The key idea of R3DRA is the existence of correspondence between one rounded-up variable and another rounded-down variable, which means that there exists a compensatory between these two random variables to ensure the capacity constraint in the edge server.

Input:

The current system information;

Output:

Integral solution and CER purchasing decision ;

We give a simple illustration of R3DRA for solving problem (). After we get the optimal fractional solutions , then we denote two sets for each ML task : one floating set and one rounded set . We further denote a probability coefficient as well as a weight coefficient for each fractional . The probability coefficient is initialized as while the weight coefficient is initialized as . R3DRA runs in a series iteration to round each fractional element in set . Specifically, in each iteration, there are two elements and randomly selected from set , and we further introduce the coupled coefficient and in order to adjust the value of and . Pay attention to that, after each adjustment, one of and is at least 0 or 1, and then we set or . Therefore, in each round, fractional elements in set will at least decrease by 1. Eventually, when there is only one element in , we set if the capacity is not longer than and otherwise set . As we have mentioned, the detailed expression can be seen in Algorithm 2.

It’s worth noting that R3DRA is cost-efficient because it avoids the occurrence to offload all ML tasks to clouds to avoid incurring the high cost of purchasing CER, and is computation-efficient with the complexity only of . Therefore, the complexity of TTOA is .

V Performance analysis

In the next, through rigorous analysis, we present the rounding gap caused by applying R3DRA to problem P2 (i.e., P3 for each frame’s beginning and P4 in each frame) and performance analysis of TTOA algorithm for problem P1 after involving -slot drift-plus-penalty methodology [16] to our objective.

Note that since we build upon the approximate solutions by R3DRA algorithm and hence the TTOA algorithm is an approximate dynamic optimization solution, our performance analysis results are different from the standard cases which require obtaining the optimal solutions for all time scales.

V-A Rounding Gap of R3DRA

Theorem 3.

By applying R3DRA to get policy , and , we have:

| (21) |

where is P2’s value under rounded solutions and (), is P2’s optimal value, , and are constants.

Due to space limits, the proof is given in Appendix C of our online technical report [33]. The theorem above indicates that our proposed R3RDA solution is efficient, and can guarantee an approximate solution within a bounded neighborhood of the optimal solution for problem P2.

V-B Performance Analysis of TTOA

Theorem 4.

After designing TTOA to deal with problem P1 and R3DRA to address problem P2, the time-average accuracy loss satisfies:

| (22) |

and the CER purchasing cost queue length satisfies:

| (23) |

and the cost of CER purchasing is bounded:

| (24) |

where is the optimal time-average accuracy loss, is the largest accuracy loss, and is a constant.

We prove Theorem 3 and Theorem 4 in detail in Appendix C and D of [33], respectively. From the above theorems, we can adjust the value of thus to balance the performance gap of inference accuracy well. Next, we will give the performance evaluation driven by the real carbon intensity trace to verify our theoretical analysis.

| Parameters | Value Range |

|---|---|

| simulation timespan | 1500 |

| number of offloading locations | {5,10,15,20} |

| number of tasks | {5,10,20,50} |

| task input size | bits |

| the amount of computing resources | cycles |

| resource price in edge server | bit/J |

| resource price in cloud server | bit/J |

| computation capacity of edge server | CPU cycles |

| accuracy loss of TNN model | |

| accuracy loss of DNN model | |

| price of purchasing on-preserved CER | |

| price of purchasing on-demanded CER | |

| CER purchasing budget | |

| Lyapunov control parameter |

VI Performance evaluation

VI-A Simulation Setup

We consider there are offloading locations consisting of edge servers and one cloud server and the number of ML tasks . Similar to many existing studies which researched the model compressing influenced the accuracy loss such as [37], the diverse inference accuracy loss of the TNN models of edge servers is set by randomly generating over the range . In contrast, the inference accuracy loss of the DNN model in the cloud server is set as . The input data of each ML task is set as bits, and the workload of each ML task is set to consist of cycles both of them can be referenced to [38]. We consider the resource price in each edge server to be in bit/J while in the cloud server is in bit/J both of which are similar to [39]. And the computation capacity of edge servers is assumed to be CPU cycles. The number of arriving ML tasks in the system is randomly generated over the range [1,10]. The carbon intensity of edge servers and the cloud server is according to the regional carbon intensity data in the UK (per 30 mins) of National Grid ESO [40], and we use the 5 regions’ data to represent the carbon intensity of edge servers and the cloud server. We assume the price of purchasing CER and are subject to uniform random distribution and satisfy , we also assume that , . We evaluate our algorithms’ performance by setting the control parameter under the long-term time-averaged CER purchasing budget cost units. The simulation runs on Matlab, where a total of (in which and ) randomized realizations are averaged for the current given system information. Table II gives the simulation parameters in detail.

We compare our algorithm’s performance with three benchmarks which are shown below:

-

1.

All to Cloud algorithm (ACloud): Always choose the cloud server to execute ML tasks, which will achieve the best performance in inference accuracy loss but incur the highest CER purchasing cost and is an extreme contrast.

-

2.

One Time-scale algorithm (OTime): We zoom in on our smaller timescale, which means making our two-timescale time slot units into one. In our following experiments, the one-time slot is set as 30 minutes in this algorithm, and thus we need to decide the offloading decisions and each slot’s CER purchasing amount.

-

3.

Greedy algorithm (Greedy): The core idea of this algorithm is to achieve performance greedily while obeying the constraint of CER purchasing, which compares the cost of each possible offloading mode and then selects the better performance one greedily to offload the ML tasks under the CER purchasing constraint.

VI-B The Accuracy Loss vs Queue Backlog trade-off

We first show the performance of our TTOA with different values of with (7.5 hours a time frame). As shown in Fig. 3(a), our proposed TTOA achieves excellent performance. For instance, it can reduce of the inference accuracy loss compared to Greedy and gets at most performance loss compared with ACloud when . Besides, with the control parameter increasing, the accuracy loss will reduce, this verifies the analytical analysis in Eq. (22). From Fig. 3(b), we can see the average queue backlog is approximately proportional to the control parameter which verifies the analytical analysis in Eq. (23), and our TTOA achieves the lowest queue backlog size. Obviously, a larger value of means that we pay more attention to minimizing accuracy loss than obeying the purchasing CER cost budget. From Fig. 3(c), it is clearly seen that with a larger value of , more ML tasks will be influenced by the cloud server hence achieving lower accuracy loss in Fig. 3(a) and higher queue backlog size in Fig. 3(b).

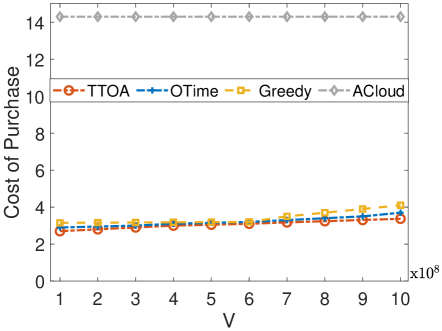

The performance of the cost of purchase with different values of control parameter can be seen in Fig. 4(a), in which we can see the cost of purchasing CER grows with the values of increasing, and our TTOA uses the lowest cost while the ACloud causes the highest cost. For instance, when , our TTOA achieves an up to cost of purchase reduction over the ACloud. And we further evaluate the performance of the cost of purchasing CER with different time slots in Fig. 4(b). We can know that as the time slot increases, the cost of the CER purchase in our TTOA decreases and finally blows the long-term CER purchasing budget . Therefore, the performances as shown in Fig. 4(a) and Fig. 4(b) have verified the analytical analysis in Eq. (24), i.e., the time-average carbon emission purchasing cost bound is roughly proportional to and lower than CER purchasing budget . It is obvious to know that our TTOA achieves the lowest carbon emission. As can be seen in Fig. 4(c), to verify the robustness of our TTOA, we further evaluate the performance while the CER purchasing price is in the Gaussian distribution in which the mean emission right price equals the uniform distribution above. It is clear to know that TTOA still performs the best performance in terms of the accuracy loss of the implementation which is the same as in the uniform case above.

VI-C Impact of budget , time frame length and CER purchasing prices on algorithms’ performance

There is an intuition that a larger budget may supply more effort for ML tasks offloading. In Fig. 5(a), Low, Middle, and Large are set separately as , , and cost units while the control parameter . We see that with the long-term CER purchasing budget increasing, compared to OTime and Greedy, our TTOA is significantly improved. For example, given the long-term CER purchasing budget cost units, the accuracy loss of TTOA is reduced by .

In Fig. 5(b), we increase the value of each time frame from 2.5 hours to 15 hours with (i.e., and each single time slot is set as 30min in coordinate with the carbon intensity data trace), finding out that though the fluctuation of accuracy loss is not large, our TTOA still gains better performance than OTime. Especially, in our simulation, the accuracy loss is minimized when . The accuracy loss of the OTime is always higher than TTOA no matter what ’s value is. This further evaluates TTOA which contains a two-timescale and is robust to the change of . Therefore though setting the value of appropriately, we can as far as possible meet the on-preserved CER period and minimize the accuracy loss. We realize that although the values of are inappropriate, we can still make real-time CER purchasing to offset supply and demand nicely.

We conduct the performance of accuracy loss and queue backlog size under different CER purchasing prices and in Fig. 5(c) where . Particularly, we increase the price of from to (i.e., increase the price of from to ). Obviously, when the price is small, we can not only get a lower accuracy loss but also gain a lower purchase cost. Besides, when changes from to the accuracy loss increase seriously, while changes from to the queue backlog increase a lot. This is due to the limitation of the pre-defined long-term CER purchasing budget.

VI-D The impact of the number of ML tasks and the number of offloading locations on algorithms’ performance

As shown in Fig. 6(a), the accuracy loss in different algorithms with and the number of ML tasks is presented. Our TTOA always achieves the best accuracy loss under different values of , while the performance in the Greedy is the worst because it ignores the coupling influence of each time slot’s decisions when making the policy. As we have illustrated that we zoom in on our smaller timescale in OTime algorithm to make two-timescale time slot units into one. We can see that OTime’s performance is not better than TTOA’s all the time, this verifies the validity of our harnessing spot and future carbon markets.

To further show the scalability of our proposed TTOA, we illustrate the accuracy loss in different algorithms with and the number of offloading locations in Fig. 6(b). It is clear to know that TTOA always achieves the best performance in terms of inference accuracy, and with the number of edge servers increasing, more computing resources are provided, thus the accuracy loss of all algorithms decreases. Though the computing resources of edge servers become rich, the CER purchasing budget is the same as before, this gives the reason that the accuracy loss of and in TTOA is approximate equality.

VI-E R3DRA’s performance in TTOA

To show the performance of our proposed rounding algorithm R3DRA, we compare R3RDR with two other rounding benchmark algorithms: the first one is OPT which uses the MILP solver to obtain the policy; the other one is IRR, i.e., uses Pr to obtain binary decisions while ignoring the capacity of edge servers. As presented in Fig. 7, it is obvious to see that R3DRA achieves near-optimal performance in accuracy loss compared with OPT the running time of which is nearly 6 times longer than R3DRA. Besides, though IRR’s running time is not long, it violates the edge servers’ capacity. To sum up, our rounding algorithm R3DRA is efficient and achieves near-optimal performance.

VII Conclusion

In this paper, we harness spot and future carbon markets for greening edge AI towards carbon-neutral edge computing. We propose an online carbon-aware algorithm in order to minimize ML tasks inference accuracy loss under the long-term time-averaged carbon emission rights purchasing budget in a collaborative edge computing framework. We formulate ML tasks offloading problem P1 with the goal of optimizing inference accuracy loss under the carbon emission rights purchasing budget. We transform and loosen the original time coupling problem to get -slot loosened problem P2. To solve it, we develop an online algorithm TTOA that aims to solve mixed-integer linear programming problems P3 and P4. In TTOA, by relaxing the feasible region to the real range, we get problems and , to solve which we design a resource-restricted randomized dependent rounding algorithm R3DRA. We finally show the superior performance of TTOA and R3DRA through rigorous theoretical analysis and detailed experiments driven by the real carbon intensity trace.

In the future, we intend to add the prediction of green energy into the whole algorithm design so as to further contribute to achieving carbon-neutral edge computing.

References

- [1] S. Han, X. Liu, H. Mao, J. Pu, A. Pedram, M. A. Horowitz, and W. J. Dally, “Eie: Efficient inference engine on compressed deep neural network,” in 2016 ACM/IEEE 43rd Annual International Symposium on Computer Architecture (ISCA), 2016, pp. 243–254.

- [2] C. Hu, W. Bao, D. Wang, and F. Liu, “Dynamic adaptive dnn surgery for inference acceleration on the edge,” in IEEE INFOCOM 2019 - IEEE Conference on Computer Communications, 2019, pp. 1423–1431.

- [3] H. Yang, A. Alphones, Z. Xiong, D. Niyato, J. Zhao, and K. Wu, “Artificial-intelligence-enabled intelligent 6g networks,” IEEE Network, vol. 34, no. 6, pp. 272–280, 2020.

- [4] Y. Jiang, Q. Ye, B. Sun, Y. Wu, and D. H. Tsang, “Data-driven coordinated charging for electric vehicles with continuous charging rates: A deep policy gradient approach,” IEEE Internet of Things Journal, vol. 9, no. 14, pp. 12 395–12 412, 2022.

- [5] S. Ulukus, A. Yener, E. Erkip, O. Simeone, M. Zorzi, P. Grover, and K. Huang, “Energy harvesting wireless communications: A review of recent advances,” IEEE Journal on Selected Areas in Communications, vol. 33, no. 3, pp. 360–381, 2015.

- [6] D. Liu, Y. Chen, K. K. Chai, T. Zhang, and M. Elkashlan, “Two-dimensional optimization on user association and green energy allocation for hetnets with hybrid energy sources,” IEEE Transactions on Communications, vol. 63, no. 11, pp. 4111–4124, 2015.

- [7] Z. Xiong, Y. Zhang, D. Niyato, R. Deng, P. Wang, and L.-C. Wang, “Deep reinforcement learning for mobile 5g and beyond: Fundamentals, applications, and challenges,” IEEE Vehicular Technology Magazine, vol. 14, no. 2, pp. 44–52, 2019.

- [8] “United nations carbon offset platform,” https://unfccc.int/climate-action/climate-neutral-now/united-nations-carbon-offset-platform, Accessed: 2022-12-22.

- [9] C. Batlle and J. Barquin, “Emission rights allocation criteria,” in 2007 IEEE Power Engineering Society General Meeting, 2007, pp. 1–3.

- [10] “Eu carbon permits,” https://tradingeconomics.com/commodity/carbon, Accessed: 2022-12-24.

- [11] S. Teerapittayanon, B. McDanel, and H. Kung, “Branchynet: Fast inference via early exiting from deep neural networks,” in 2016 23rd International Conference on Pattern Recognition (ICPR), 2016, pp. 2464–2469.

- [12] B. Martinez and X. Vilajosana, “Exploiting the solar energy surplus for edge computing,” IEEE Transactions on Sustainable Computing, vol. 7, no. 1, pp. 135–143, 2022.

- [13] P. D. Mitcheson, E. M. Yeatman, G. K. Rao, A. S. Holmes, and T. C. Green, “Energy harvesting from human and machine motion for wireless electronic devices,” Proceedings of the IEEE, vol. 96, no. 9, pp. 1457–1486, 2008.

- [14] Z. Zhou, F. Liu, Y. Xu, R. Zou, H. Xu, J. C. Lui, and H. Jin, “Carbon-aware load balancing for geo-distributed cloud services,” in 2013 IEEE 21st International Symposium on Modelling, Analysis and Simulation of Computer and Telecommunication Systems, 2013, pp. 232–241.

- [15] M. A. Islam, H. Mahmud, S. Ren, and X. Wang, “A carbon-aware incentive mechanism for greening colocation data centers,” IEEE Transactions on Cloud Computing, vol. 8, no. 1, pp. 4–16, 2020.

- [16] M. J. Neely, “Stochastic network optimization with application to communication and queueing systems,” Synthesis Lectures on Communication Networks, vol. 3, no. 1, pp. 1–211, 2010.

- [17] P. Yang, F. Lyu, W. Wu, N. Zhang, L. Yu, and X. Shen, “Edge coordinated query configuration for low-latency and accurate video analytics,” IEEE Transactions on Industrial Informatics, vol. 16, no. 7, pp. 4855–4864, 2020.

- [18] E. Li, L. Zeng, Z. Zhou, and X. Chen, “Edge ai: On-demand accelerating deep neural network inference via edge computing,” IEEE Transactions on Wireless Communications, vol. 19, no. 1, pp. 447–457, 2020.

- [19] W. Wu, P. Yang, W. Zhang, C. Zhou, and X. Shen, “Accuracy-guaranteed collaborative dnn inference in industrial iot via deep reinforcement learning,” IEEE Transactions on Industrial Informatics, vol. 17, no. 7, pp. 4988–4998, 2021.

- [20] W. Zhang, D. Yang, H. Peng, W. Wu, W. Quan, H. Zhang, and X. Shen, “Deep reinforcement learning based resource management for dnn inference in industrial iot,” IEEE Transactions on Vehicular Technology, vol. 70, no. 8, pp. 7605–7618, 2021.

- [21] C. Yang, C. Huang-Fu, and I. Fu, “Carbon-neutralized task scheduling for green computing networks,” CoRR, vol. abs/2209.02198, 2022. [Online]. Available: https://doi.org/10.48550/arXiv.2209.02198

- [22] Z. Yu, Y. Zhao, T. Deng, L. You, and D. Yuan, “Less carbon footprint in edge computing by joint task offloading and energy sharing,” CoRR, vol. abs/2211.05529, 2022. [Online]. Available: https://doi.org/10.48550/arXiv.2211.05529

- [23] S. Savazzi, V. Rampa, S. Kianoush, and M. Bennis, “An energy and carbon footprint analysis of distributed and federated learning,” CoRR, vol. abs/2206.10380, 2022. [Online]. Available: https://doi.org/10.48550/arXiv.2206.10380

- [24] L. Lannelongue, J. Grealey, and M. Inouye, “Green algorithms: Quantifying the carbon footprint of computation,” Advanced Science, vol. 8, no. 12, p. 2100707, 2021. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/advs.202100707

- [25] M. Jiang, X. Feng, and L. Li, “Market power, intertemporal permits trading, and economic efficiency,” Frontiers in Energy Research, vol. 9, 2021. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fenrg.2021.704556

- [26] Z. Fuwei, G. Ying, and Y. Jing, “Comparison of generation rights trade and carbon emissions trading,” in World Automation Congress 2012, 2012, pp. 1–3.

- [27] H. Guan, “The determinants of carbon emission price—empirical analysis based on the vec model,” in 2021 2nd International Conference on Big Data Economy and Information Management (BDEIM), 2021, pp. 68–71.

- [28] C. Mu, T. Ding, S. Zhu, O. Han, P. Du, F. Li, and P. Siano, “A decentralized market model for a microgrid with carbon emission rights,” IEEE Transactions on Smart Grid, pp. 1–1, 2022.

- [29] C. Zhang, J. Xu, and F. Cheng, “Equilibrium decision research on closed-loop supply chain network based upon carbon emission rights constraint and risk aversion,” in 2018 5th International Conference on Information Science and Control Engineering (ICISCE), 2018, pp. 206–215.

- [30] C.-S. Yang, C.-C. Huang-Fu, I. Fu et al., “Carbon-neutralized task scheduling for green computing networks,” arXiv preprint arXiv:2209.02198, 2022.

- [31] R. Li, Z. Zhou, X. Chen, and Q. Ling, “Resource price-aware offloading for edge-cloud collaboration: A two-timescale online control approach,” IEEE Transactions on Cloud Computing, vol. 10, no. 1, pp. 648–661, 2022.

- [32] W. Fan, Z. Chen, Z. Hao, Y. Su, F. Wu, B. Tang, and Y. Liu, “Dnn deployment, task offloading, and resource allocation for joint task inference in iiot,” IEEE Transactions on Industrial Informatics, vol. 19, no. 2, pp. 1634–1646, 2023.

- [33] R. Mahui, Z. Zhou, and X. Chen, “Low-carbon edge: A carbon-aware machine learning task offloading under limited carbon emission rights purchasing cost budget,” https://bit.ly/3H051mC.

- [34] R. Li, Z. Zhou, X. Zhang, and X. Chen, “Joint application placement and request routing optimization for dynamic edge computing service management,” IEEE Transactions on Parallel and Distributed Systems, vol. 33, no. 12, pp. 4581–4596, 2022.

- [35] J. A. Nelder and R. Mead, “A simplex method for function minimization comput,” Computer Journal, no. 4, p. 4, 1965.

- [36] S. Singer and J. Nelder, “Nelder-mead algorithm,” Scholarpedia, vol. 4, no. 7, p. 2928, 2009.

- [37] “Angus galloway. 2018. tensorflow xnor-bnn,” https://github.com/AngusG/tensorflow-xnor-bnn.

- [38] F. Wang, S. Cai, and V. K. N. Lau, “Decentralized dnn task partitioning and offloading control in mec systems with energy harvesting devices,” IEEE Journal of Selected Topics in Signal Processing, pp. 1–16, 2022.

- [39] H. Ma, P. Huang, Z. Zhou, X. Zhang, and X. Chen, “Greenedge: Joint green energy scheduling and dynamic task offloading in multi-tier edge computing systems,” IEEE Transactions on Vehicular Technology, vol. 71, no. 4, pp. 4322–4335, 2022.

- [40] “Regional carbon intensity forecast.” https://data.nationalgrideso.com/carbon-intensity1/regional-carbon-intensity-forecast, Accessed: 2022-12-06.