Towards Better Data Exploitation in Self-Supervised Monocular Depth Estimation

Abstract

Depth estimation plays an important role in robotic perception systems. The self-supervised monocular paradigm has gained significant attention since it can free training from the reliance on depth annotations. Despite recent advancements, existing self-supervised methods still underutilize the available training data, limiting their generalization ability. In this paper, we take two data augmentation techniques, namely Resizing-Cropping and Splitting-Permuting, to fully exploit the potential of training datasets. Specifically, the original image and the generated two augmented images are fed into the training pipeline simultaneously and we leverage them to conduct self-distillation. Additionally, we introduce the detail-enhanced DepthNet with an extra full-scale branch in the encoder and a grid decoder to enhance the restoration of fine details in depth maps. Experimental results demonstrate our method can achieve state-of-the-art performance on the KITTI and Cityscapes datasets. Moreover, our KITTI models also show superior generalization performance when transferring to Make3D, NYUv2 and Cityscapes datasets. Our codes are available at https://github.com/LiuJF1226/BDEdepth.

Index Terms:

Deep learning for visual perception, deep learning methods, visual Learning.I Introduction

Depth estimation is one of the critical tasks in robotics, which can help robots perceive the scene structure and navigate in the 3D world. Due to the simplicity, flexibility and low cost of monocular cameras, monocular depth estimation recently has become a research hotspot in the realm of robotics and computer vision. Nonetheless, amassing extensive annotated training datasets for supervised learning [1, 2, 3, 4, 5, 6, 7, 8] is extremely expensive. To reduce the reliance on labeled data, self-supervised paradigm [9, 10, 11] has emerged as a viable approach, leveraging monocular videos, stereo pairs, or even both to provide supervisory signals via novel view synthesis. This paradigm frames training as minimizing photometric reprojection error, which is also the core idea of unsupervised flow [12] and direct visual odometry (DVO) in simultaneous localization and mapping (SLAM) systems.

Recent self-supervised monocular depth estimation methods [13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37] have achieved remarkable results, but they still fail to make full use of the potential information within the training datasets. Consequently the generalization ability is limited, which is significant in robotics since robots should adapt to diverse scenarios. Data augmentation is a common and cost-effective way in computer vision to improve the generalization ability of the model. However, most existing methods just simply take horizontal flipping and color jittering. Although some [24, 28] also use resizing and cropping, they only train with augmented images and discard the original images. In order to fully dig into the potential of training datasets for various robotic applications, we use two data augmentation approaches, aforementioned Resizing-Cropping and novel Splitting-Permuting. The latter first splits an image into patches and then permutes them to generate a new image with context broken. Hence, there are two augmented images generated from each target image. Each of the three image views participates in the view synthesis of the self-supervised training process. And depth maps from the augmented views are also used for self-distillation with the original one.

On the other hand, most approaches follow Monodepth2 [16] to adopt a prevalent encoder-decoder architecture for depth estimation. The encoder extracts five-level features from the input image. The features encapsulate both global and local information of the image, with varying receptive fields at different scales, which are subsequently fed into a U-Net [38] decoder to generate the depth map. This architecture empowers Monodepth2 to gain advanced performance. However, it still struggles to recover fine details in depth maps. If the depth map is rough, robots could miss details or misinterpret object relationships when mapping, leading to inaccurate environmental perception. To tackle this limitation, we propose a detail-enhanced DepthNet in this paper, with an extra full-scale branch in the encoder and a grid decoder.

Our main contributions can be summarized as follows:

-

•

To fully excavate the potential of datasets for better generalization performance, we use classic Resizing-Cropping and novel Splitting-Permuting augmentation to generate another two augmented views for self-distillation.

-

•

To enhance the fine details of depth maps, we propose to add a full-scale branch and adopt a grid decoder, forming our detail-enhanced DepthNet.

- •

II Related Work

II-A Supervised Monocular Depth Estimation

Fully supervised methods for depth estimation in the field of robotics or autonomous driving require depth ground truth when training. Among this category of methods, Eigen et al. [1] first propose to use convolutional neural network (CNN) for monocular depth estimation. They employ a multi-scale neural network to estimate the depth map. Liu et al. [2] combine a depth CNN with continuous conditional random fields (CRFs) for depth estimation from a single image. Li et al. [3] propose a multi-scale approach, which uses a deep neural network to regress depth at the super-pixel scale and applies CRFs for post-processing. Laina et al. [4] introduce an innovative up-sampling module and utilize the reverse Huber loss to improve training. And Fu et al. [5] employ a multi-scale network and regard depth estimation as an ordinal regression task, resulting in improved accuracy and faster convergence.

II-B Self-supervised Monocular Depth Estimation

The high expense of collecting large annotated datasets for depth estimation has led to the emergence of self-supervised methods that do not rely on the ground truth. Garg et al. [9] first consider the training of monocular depth estimation as a view synthesis problem with a photometric-consistency loss between stereo pairs. Building upon this idea, Godard et al. [10] introduce a left-right disparity consistency loss, which can improve the accuracy of depth estimation. For training with monocular video frames, Zhou et al. [11] propose a pioneering method which jointly learns monocular depth and ego-motion from monocular videos in a self-supervised way. They also use an explainability prediction network to exclude pixels that violate view synthesis assumptions, to improve the robustness when meeting with occlusion and moving objects. For dynamic scenes, other works introduce multi-task learning such as optical flow estimation [13] and semantic segmentation [14], or introduce additional constraints, such as uncertainty estimation [15]. Then Godard et al. [16] propose Monodepth2, which employs a minimum reprojection loss to mitigate occlusion issues and an auto-masking loss to filter out moving objects with the similar velocity as the camera. This work achieves competitive results without introducing extra tasks, which presents promising prospects for robotic perception. Therefore many subsequent methods including ours follow and base on it.

II-C Data Augmentation and Self-distillation

For data augmentation, many existing methods just simply utilize horizontal flipping and color jittering. Some approaches [28, 24, 27] additionally use resizing and cropping. And Peng et al. [29] proposes a data augmentation approach called data grafting, which forces the model to explore more cues to infer depth besides the vertical image position. Among them, [24], [28] and [29] only utilize augmented images for training. Especially, PlaneDepth [24] achieves striking performance when using stereo pairs, but poor with monocular videos. The most similar to our work is RA-Depth [27], which uses resizing and cropping to generate images with arbitrary scales for the same scene. It modifies the camera intrinsics to keep the world coordinates, whereas we follow the assumption in [24] that all input images, including augmented views and original one, are captured by the same camera system.

Self-distillation is commonly used in self-supervised learning which means using a pretrained teacher network to generate pseudo labels for training a better student network. In depth estimation, for example, Pilzer et al. [30] obtain multiple predictions from teacher network to generate pseudo labels for the student network training. Self-distillation can also be used to reduce the uncertainty of depth prediction [31], and solve the artifact problem caused by occlusion especially under stereo training [24], which actually feeds a flipped version of the original image into a depth network to synthesize pseudo labels. In our work, we also use two data augmentation methods and generate pseudo labels from augmented images.

III Methodology

III-A Preliminaries

Self-supervised monocular depth estimation paradigm aims at training a depth network to predict a per-pixel depth map from the input RGB image , without providing any depth ground truth. Specifically, considering a target view in a consecutive image sequence from a monocular video, the neighbour frames are used as the source views to carry out view synthesis. For each source view , an auxiliary pose network is utilized to estimate the relative camera pose , where and represent the rotation and translation component respectively. Note that the pose network is only used for training and could be discarded in the inference, and it is not needed when training with only stereo pairs since the stereo baseline is already known. Then the target depth map and the relative camera pose from the target view to the source view can be derived from:

| (1) |

And the scene at the viewpoint of can be synthesized from the source view as:

| (2) |

where is the synthesized image, is the sampling operator, returns the 2D coordinates of the depths in when reprojected into the viewpoint of , and is the camera intrinsics matrix assumed to be known. Finally the per-pixel photometric loss between and is used to optimize the full network. We follow [16] to use the combinaton of structural similarity (SSIM) [43] and L1 distance as the photometric error function and take the minimum across all the source views at each pixel, which is formulated as:

| (3) |

| (4) |

where . And also, the edge-aware smoothness loss is used to cope with depth discontinuities:

| (5) |

where is the mean-normalized inverse depth from [32] to discourage shrinking of the estimated depth.

III-B Data Augmentation for Self-distillation

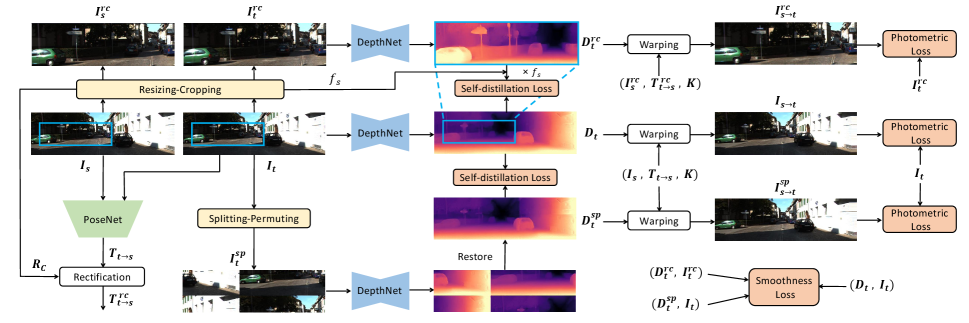

In this paper, We adopt two data augmentation strategies, Resizing-Cropping and Splitting-Permuting, to generate another two augmented views and from the original target image for self-distillation. It is worth noting that both the original image and augmented images are involved in the training process. The augmentation detail for a single image view is provided in Algorithm 1 and the overview of our training pipeline is depicted in Fig. 1.

III-B1 Resizing-Cropping

We follow [24] to leverage an essential monocular cue that the closer an object is, the larger its relative size is. Therefore, it is assumed that when a image is scaled by a factor , the relative size of an object also increases by and its depth decreases by . This augmentation is applied to both target image and source images, generating from and from . As proposed in [24], given the original relative pose , the pose from to should be rectified as:

| (6) |

where is the rectification matrix which transforms the original world coordinates to the new coordinates originated at the augmented image. Denote the center coordinate in the original image coordinate system as , the center of cropped patch as , the camera focal length as , then can be written as:

| (7) |

And the corresponding synthesized image from and the photometric loss can be obtained by:

| (8) |

| (9) |

where is the predicted depth map from .

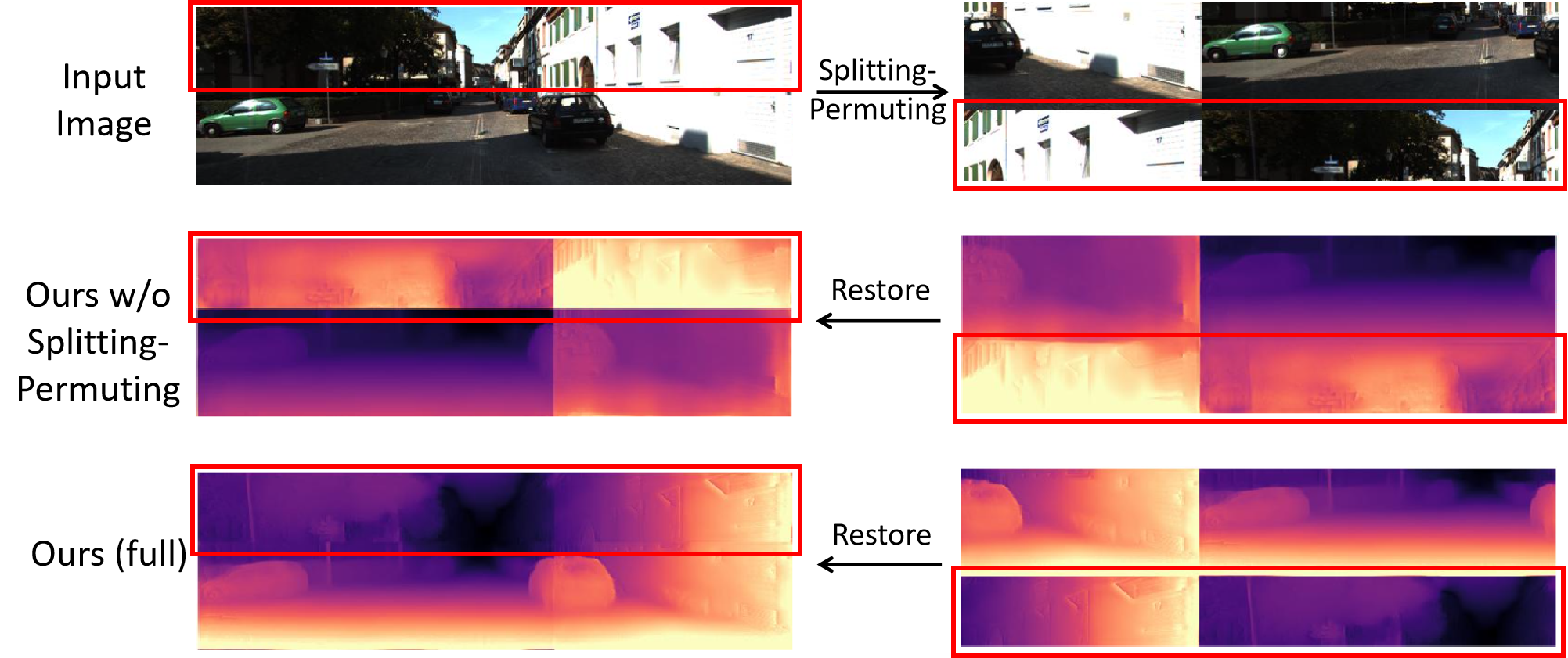

III-B2 Splitting-Permuting

Monocular depth estimation can easily get stuck in overfitting since its excessive dependence on the vertical image position [29]. To improve the generalization ability, we propose a novel augmentation approach, Splitting-Permuting, which provides perturbation and breaks the image context both vertically and horizontally. Specifically, the original image is firstly split into top part and bottom part with a random split ratio and the two parts are permuted to generate a new image. Then it is successively split into left and right parts and permuted, finally generating another augmented view. This is only conducted on the target image to produce , from which we can obtain the depth map as . The operation can restore the predicted map to the original context structure for reprojection, as shown in Fig. 1. And we use the original and the restored to do view synthesis and calculate photometric loss as:

| (10) |

| (11) |

III-B3 Self-distillation

To fully leverage the valid information from data augmentation, the depth maps predicted from augmented views are used to conduct self-distillation, which means we utilize and from Resizing-Cropping and Splitting-Permuting respectively as pseudo labels to provide more supervisory signals. Specifically, for , we first select the corresponding area in , from which the is cropped. And then is resized to the same resolution as , denoted as . Therefore, this part of self-distillation loss is formulated as:

| (12) |

where is the widely used scale-invariant error function proposed in [1]. Here the scale factor is due to aforementioned assumption that depth from original image is times of that from resized image. Then let and denote the pixel of , the scale-invariant loss can be calculated as:

| (13) |

where and is the number of pixels. And for , we can similarly obtain the self-distillation loss as:

| (14) |

III-B4 Loss Function

The standard self-supervised loss for the original image contains the photometric loss and the edge-aware smoothness loss:

| (15) |

where and is the auto-masking introduced in [16]. This loss is applied to both two augmented views, formuating and as:

| (16) |

| (17) |

where and are the corresponding smoothness loss terms for the augmented views and can be calculated similarly as (5). Considering both self-supervised losses and self-distillation losses, we can write the total loss function as:

| (18) |

where is set to 0.07 in our experiments.

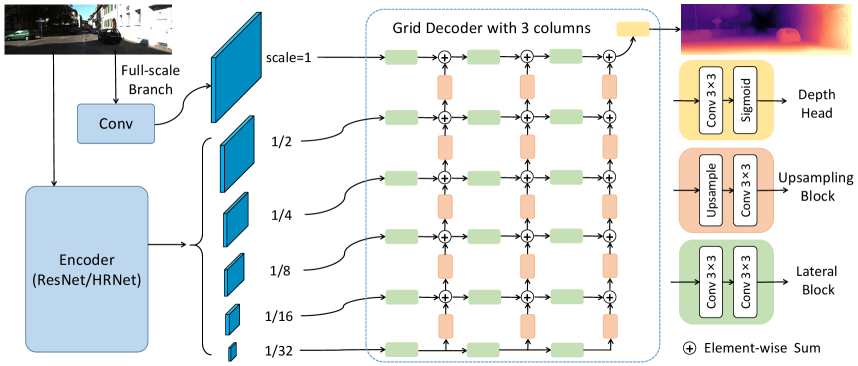

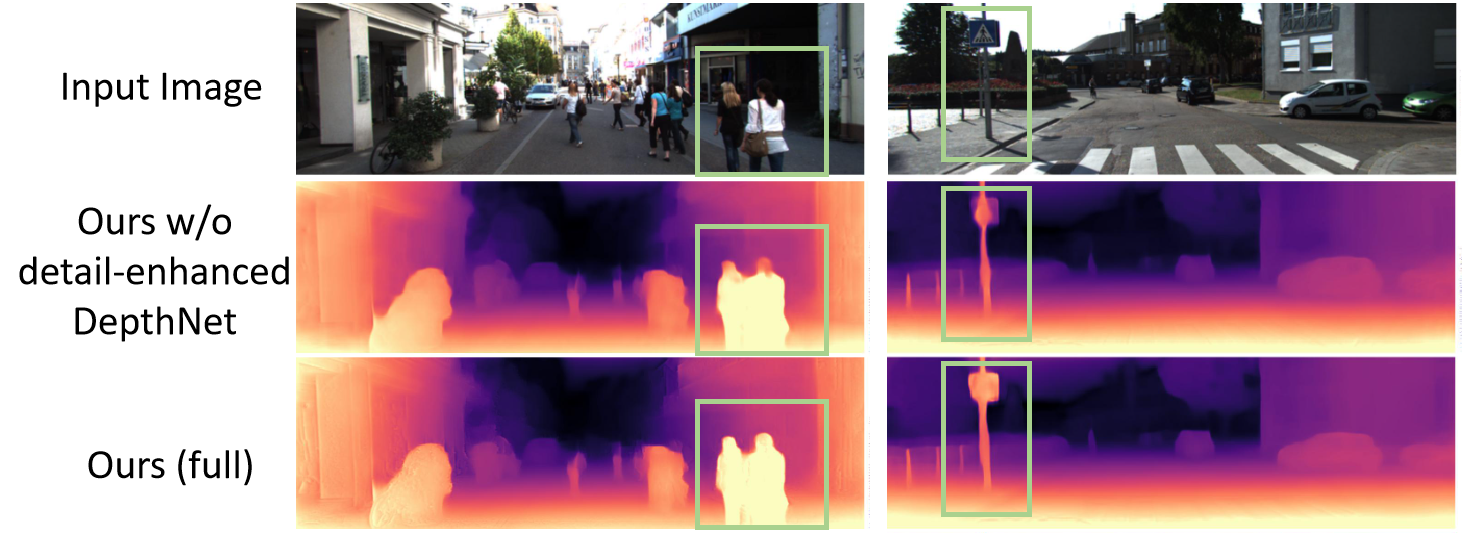

III-C Detail-enhanced DepthNet

To enhance the fine details in depth maps, in this paper we propose to add a full-scale branch to the encoder and use grid-style decoder, forming the detail-enhanced DepthNet, as illustated in Fig. 2.

III-C1 Full-scale Branch

One reason can cause the loss of details is that the encoder preserves features with a maximum scale of and the decoder upsamples from scale to get the depth map at the full scale. BRNet [21] obtains detailed information by reducing the stride of the first convolution, to maintain the full scale at the first feature level. Nevertheless, this will double calculation amount. For less computational overhead, we introduce a full-scale branch separated from the original encoder, which is actually a convolution layer copied from the stem convolution of the encoder backbone, with the stride adjusted to 1. Hence, we can additionally preserve a feature at the full scale, constructing a six-level feature pyramid, which can provide more detailed information for the decoder to restore the depth map.

III-C2 Grid Decoder

Another point lies in that we replace the U-Net [38] decoder with a grid-style decoder, inspired from GridNet [44]. As shown in Fig. 2 , each row consists several lateral blocks to form a stream, within which the feature resolution keeps constant. Each row operates at a different scale, and the columns of the decoder connect these row streams, allowing information transfer through upsampling blocks. Compared to U-Net architecture, our grid decoder can autonomously learn how to integrate information at different scales and use low-resolution information to guide high-resolution predictions, which is beneficial to the detail restoration in pixel-wise tasks like depth estimation. And the columns (or lateral blocks at each row) of the grid decoder is set to 3 in this paper.

| Method | Backbone | #Params | Type | Abs Rel | Sq Rel | RMSE | RMSE log | |||

|---|---|---|---|---|---|---|---|---|---|---|

| Monodepth2 [16] | ResNet18 | 14.3M | M | 0.115 | 0.903 | 4.863 | 0.193 | 0.877 | 0.959 | 0.981 |

| Monodepth2 [16] | ResNet50 | 32.5M | M | 0.110 | 0.831 | 4.642 | 0.187 | 0.883 | 0.962 | 0.982 |

| PackNet-SfM [17] | PackNet | 128M | M | 0.111 | 0.785 | 4.601 | 0.189 | 0.878 | 0.960 | 0.982 |

| HR-Depth [18] | ResNet18 | 14.6M | M | 0.109 | 0.792 | 4.632 | 0.185 | 0.884 | 0.962 | 0.983 |

| R-MSFM6 [19] | ResNet18 | 3.8M | M | 0.112 | 0.806 | 4.704 | 0.191 | 0.878 | 0.960 | 0.981 |

| ADAADepth [20] | ResNet18 | - | M | 0.111 | 0.817 | 4.685 | 0.188 | 0.883 | 0.961 | 0.982 |

| BRNet [21] | ResNet18 | 19M | M | 0.105 | 0.698 | 4.462 | 0.179 | 0.890 | 0.965 | 0.984 |

| Cha et al. [22] | ResNet18 | - | M | 0.110 | 0.763 | 4.648 | 0.184 | 0.877 | 0.961 | 0.983 |

| Lite-Mono [23] | Lite-Mono | 3.1M | M | 0.107 | 0.765 | 4.561 | 0.183 | 0.886 | 0.963 | 0.983 |

| PlaneDepth [24] | ResNet50 | - | M | 0.113 | 1.049 | 4.943 | - | 0.859 | - | - |

| DevNet [25] | ResNet50 | - | M | 0.100 | 0.699 | 4.412 | 0.174 | 0.893 | 0.966 | 0.985 |

| DIFFNet [26] | HRNet18 | 10.9M | M | 0.102 | 0.764 | 4.483 | 0.180 | 0.896 | 0.965 | 0.983 |

| RA-Depth [27] | HRNet18 | 10M | M | 0.096 | 0.632 | 4.216 | 0.171 | 0.903 | 0.968 | 0.985 |

| Ours | ResNet18 | 18M | M | 0.102 | 0.697 | 4.454 | 0.178 | 0.890 | 0.965 | 0.984 |

| Ours | HRNet18 | 10.2M | M | 0.095 | 0.621 | 4.183 | 0.170 | 0.904 | 0.968 | 0.985 |

| Monodepth2 [16] | ResNet18 | - | MS | 0.106 | 0.818 | 4.750 | 0.196 | 0.874 | 0.957 | 0.979 |

| HR-Depth [18] | ResNet18 | - | MS | 0.107 | 0.785 | 4.612 | 0.185 | 0.887 | 0.962 | 0.982 |

| R-MSFM6 [19] | ResNet18 | - | MS | 0.111 | 0.787 | 4.625 | 0.189 | 0.882 | 0.961 | 0.981 |

| DIFFNet [26] | HRNet18 | - | MS | 0.101 | 0.749 | 4.445 | 0.179 | 0.898 | 0.965 | 0.983 |

| BRNet [21] | ResNet18 | - | MS | 0.099 | 0.685 | 4.453 | 0.183 | 0.885 | 0.962 | 0.983 |

| Ours | ResNet18 | - | MS | 0.104 | 0.717 | 4.433 | 0.178 | 0.890 | 0.964 | 0.984 |

| Ours | HRNet18 | - | MS | 0.096 | 0.644 | 4.166 | 0.170 | 0.905 | 0.968 | 0.985 |

For the training type, M means monocular setting and MS means monocular plus stereo setting. In each category, the best results are in bold.

IV Experiments

IV-A Datasets

Our models are trained and evaluated on the KITTI [39] and Cityscapes [40] datasets, which are two widely used outdoor datasets for depth estimation. KITTI consists of 200 street scene videos captured with RGB cameras. For fair comparison, we use the data split in [6] and follow pre-processing operation in [11] to remove static frames, resulting in 39,810 monocular triplets for training and 697 for evaluation. For Cityscapes, we pre-process and train on 69,731 images from the monocular sequences following [11, 37]. And We evaluate on the 1,525 test images using the provided groundtruth by [37].

Furthermore, we use Make3D [41] and NYUv2 [42] datasets for evaluation to validate the generalization ability of the models trained on KITTI. Specifically, Make3D is a small outdoor dataset containing 134 test images with aligned depth information. And NYUv2 is an indoor scene for depth estimation with 654 labeled test images.

IV-B Implementation Details

For the PoseNet to estimate camera ego-motion when using monocular video sequences, we follow [16] to construct it from ResNet18 [45] and modify it to accept a pair of color images (or six channels) as input and to predict a 6-DoF relative pose. For the encoder of our DepthNet, we experiment with two kinds of backbone, ResNet18 and HRNet18 [46], to validate the effectiveness of our methods. Each of the two backbones is initialized with a pretrained model on ImageNet [47]. Different from [16], we only predict a single depth map at the full scale to save memory and accelerate training. Our experiment framework is implemented in PyTorch [48]. And we train our models on KITTI with two types, monocular (M), and monocular plus stereo (MS). For each type, the models are trained for 20 epochs using AdamW [49] optimizer, with a learning rate of for the first 15 epochs and for the remainder. The training resolution for KITTI is set to , the batch size is set to 10, and the maximum depth is 100m. As for Cityscapes, only the monocular (M) setting is trainable. And all the training settings are the same as KITTI, except for a different resolution of following [37]. All the experiments are conducted on a single NVIDIA RTX 3090 GPU. For evaluation, we adopt error-based metrics for which lower is better (Abs Rel, Sq Rel, RMSE, RMSE log, ) and accuracy-based metrics for which higher is better ( , , ). And we employ median scaling before evaluation to tackle the scale ambiguity when using monocular videos.

IV-C Comparison on KITTI Benchmark

For evaluation on KITTI [39], we cap the depth to 80m following [16]. We compare the results of our models on KITTI benchmark with several recent methods, which are listed in Table I. On the raw ground truth under the monocular (M) type, our model with ResNet18 backbone already performs better than several approaches [16, 17, 18, 19, 21, 23, 24]. Furthermore, when using a more powerful backbone, HRNet18, our model can surpass them by a large margin. Besides, under the same backbone of HRNet18, our method also shows superior performance than DIFFNet [26] and RA-Depth [27]. Overall, whether the training is under monocular (M) or monocular plus stereo (MS) type, our method can always achieve state-of-the-art performance.

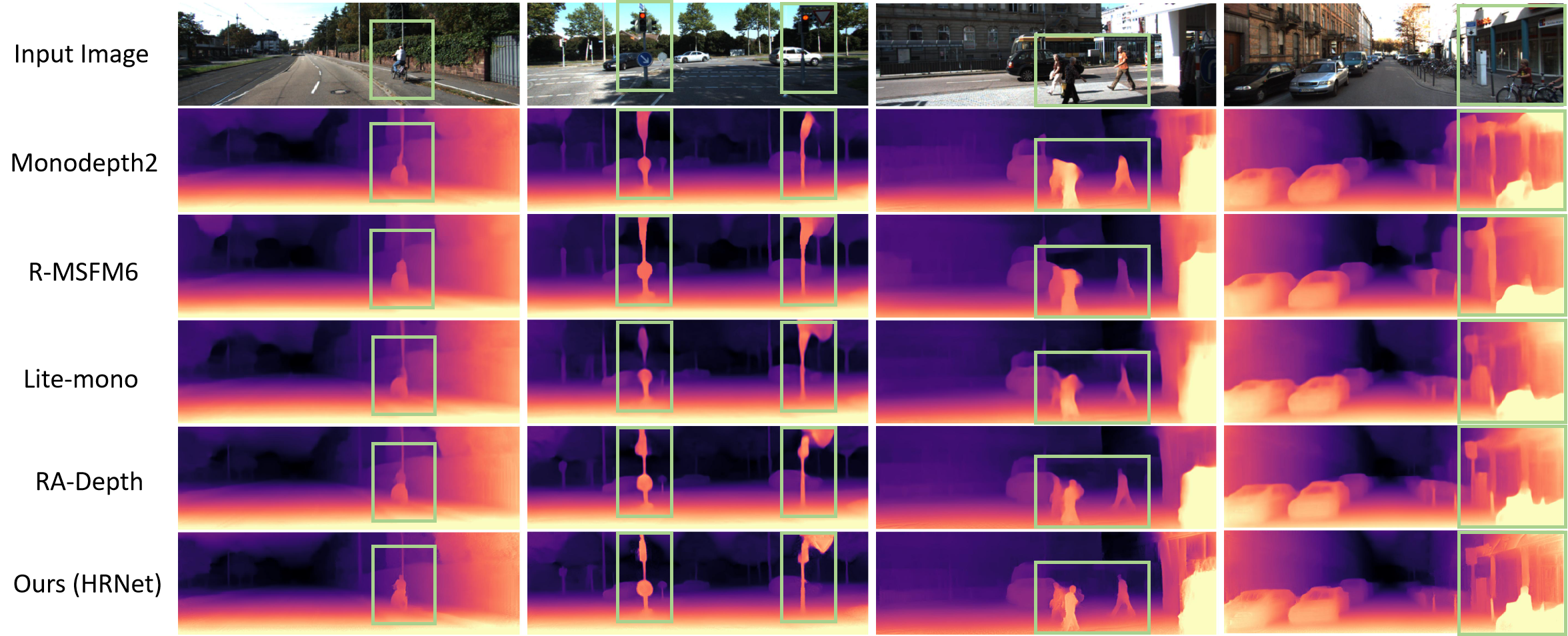

We also provide some qualitative comparison results on different scenes of the KITTI dataset, which are shown in Fig. 3. Our model is qualitatively compared with Monodepth2 [16], R-MSFM6 [19], Lite-mono [23] and RA-Depth [27]. It can be seen in the box region of each scene that our model can obtain higher quality depth maps with finer depth edges.

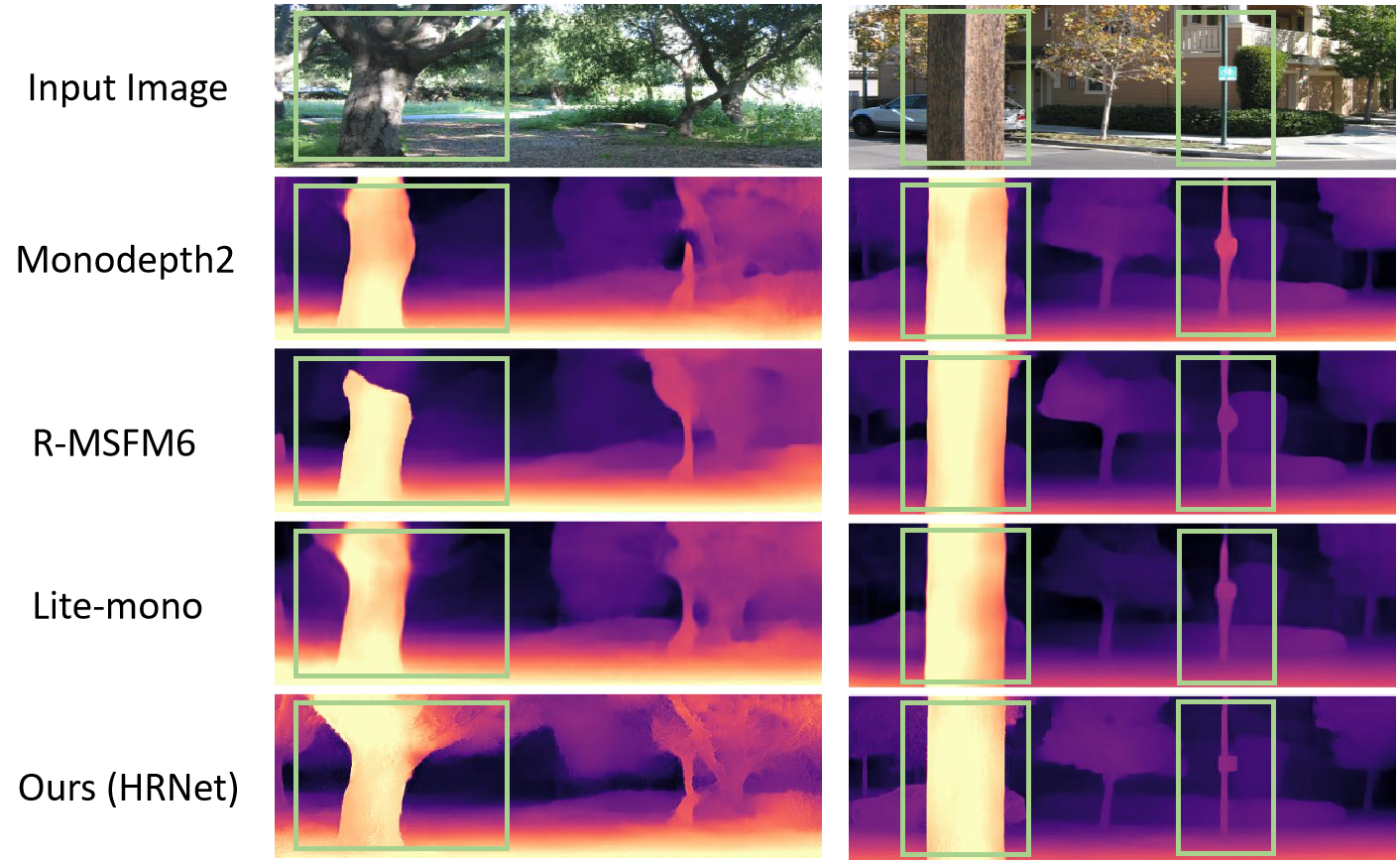

IV-D Generalization to Make3D and NYUv2

To validate the generalization ability of our models trained on KITTI, we directly evaluate them on Make3D [41] and NYUv2 [42] datasets without any fine-tuning. The maximum detph is set to 70m and 10m for Make3D and NYUv2 respectively as common practice. The results, as summarized in Table II and Table III, demonstrate that our models still outperform other methods when transfering to out-of-distribution datasets, including outdoor scenes different from KITTI and indoor scenes. It is worth noting that monocular plus stereo (MS) training has poorer generalization performance than monocular (M). We also show the qualitative results on Make3D in Fig. 4 and It can be seen that our model can perceive objects more accurately.

| Method | Backbone | Type | Abs Rel | Sq Rel | RMSE | |

|---|---|---|---|---|---|---|

| Monodepth2 [16] | ResNet18 | M | 0.322 | 3.589 | 7.417 | 0.163 |

| HR-Depth [18] | ResNet18 | M | 0.315 | 3.208 | 7.024 | 0.159 |

| R-MSFM6 [19] | ResNet18 | M | 0.334 | 3.285 | 7.212 | 0.169 |

| DIFFNet [26] | HRNet18 | M | 0.309 | 3.313 | 7.008 | 0.155 |

| Lite-Mono [23] | Lite-Mono | M | 0.305 | 3.060 | 6.981 | 0.158 |

| BRNet [21] | ResNet18 | M | 0.302 | 3.133 | 7.068 | 0.156 |

| Cha et al. [22] | ResNet18 | M | 0.316 | 2.938 | 6.863 | 0.160 |

| Ours | ResNet18 | M | 0.282 | 2.626 | 6.650 | 0.149 |

| Ours | HRNet18 | M | 0.284 | 2.711 | 6.592 | 0.147 |

| Monodepth2 [16] | ResNet18 | MS | 0.374 | 3.792 | 8.238 | 0.201 |

| Ours | ResNet18 | MS | 0.285 | 2.659 | 6.640 | 0.148 |

| Ours | HRNet18 | MS | 0.282 | 2.664 | 6.546 | 0.147 |

| Method | Backbone | Type | Abs Rel | Sq Rel | RMSE | |

|---|---|---|---|---|---|---|

| Monodepth2 [16] | ResNet18 | M | 0.388 | 0.839 | 1.441 | 0.434 |

| R-MSFM6 [19] | ResNet18 | M | 0.372 | 0.803 | 1.344 | 0.494 |

| DevNet [25] | ResNet50 | M | 0.333 | 0.605 | 1.142 | 0.541 |

| Ours | ResNet18 | M | 0.321 | 0.512 | 1.111 | 0.511 |

| Ours | HRNet18 | M | 0.284 | 0.382 | 0.943 | 0.573 |

| Monodepth2 [16] | ResNet18 | MS | 0.419 | 1.031 | 1.611 | 0.425 |

| Ours | ResNet18 | MS | 0.308 | 0.448 | 1.033 | 0.508 |

| Ours | HRNet18 | MS | 0.301 | 0.420 | 1.010 | 0.523 |

IV-E Cityscapes Results

In Table IV we train and evaluate our models on the Cityscapes [40] dataset. We also check the generalization ability to Cityscapes of our models trained on KITTI, without any finetuning. When evaluating on Cityscapes, we cap the depth to 80m as with KITTI. Note that all models in Table IV are trained under monocular (M) setting. And the evaluating resolution is the same as the training ( for KITTI and for Cityscapes). It can be seen that out models still show significant improvement in the two cases.

| Method | Backbone | Train | Test | Abs Rel | Sq Rel | RMSE | |

|---|---|---|---|---|---|---|---|

| Monodepth2 [16] | ResNet18 | CS | CS | 0.129 | 1.569 | 6.876 | 0.849 |

| ManyDepth [37] | ResNet18 | CS | CS | 0.114 | 1.193 | 6.223 | 0.875 |

| Ours | ResNet18 | CS | CS | 0.116 | 1.107 | 6.061 | 0.868 |

| Ours | HRNet18 | CS | CS | 0.112 | 1.027 | 5.862 | 0.874 |

| Monodepth2 [16] | ResNet18 | K | CS | 0.164 | 1.890 | 8.985 | 0.756 |

| Lite-Mono [23] | Lite-Mono | K | CS | 0.158 | 1.715 | 8.432 | 0.777 |

| Ours | ResNet18 | K | CS | 0.151 | 1.633 | 8.415 | 0.778 |

| Ours | HRNet18 | K | CS | 0.135 | 1.354 | 7.557 | 0.815 |

CS means Cityscapes and K means KITTI.

IV-F Ablation Study

In this section, we verify the effectiveness of each component in our method. All ablation experiments are trained with ResNet18 backbone and monocular setting, and are evaluated on the KITTI raw ground truth. We add each component independently to the baseline model, Monodepth2 [16], and also experiment with each component removed individually on the basis of our full method. The ablation results are listed in Table V, which demonstrate that all the components can bring significant gains individually. One thing to note is that the self-distillation loss plays the most important part in our method. However, it cannot do without data augmentation.

| Method | Abs Rel | Sq Rel | RMSE | |

|---|---|---|---|---|

| Baseline (Monodepth2) | 0.115 | 0.903 | 4.863 | 0.877 |

| Baseline + resizing-cropping | 0.108 | 0.778 | 4.568 | 0.885 |

| Baseline + splitting-permuting | 0.109 | 0.807 | 4.581 | 0.885 |

| Baseline + full-scale branch | 0.112 | 0.804 | 4.704 | 0.878 |

| Baseline + grid decoder | 0.111 | 0.835 | 4.752 | 0.880 |

| Ours w/o resizing-cropping | 0.107 | 0.762 | 4.539 | 0.888 |

| Ours w/o splitting-permuting | 0.106 | 0.733 | 4.501 | 0.888 |

| Ours w/o self-distillation loss | 0.110 | 0.828 | 4.638 | 0.884 |

| Ours w/o detail-enhanced DepthNet | 0.106 | 0.730 | 4.474 | 0.887 |

| Ours | 0.102 | 0.697 | 4.454 | 0.890 |

Models are trained with ResNet18 backbone and monocular (M) setting.

Besides, we explore more about the two data augmentation strategies and detail-enhanced DepthNet in our method.

| Method | Backbone | Test Res. | Abs Rel | Sq Rel | RMSE | |

|---|---|---|---|---|---|---|

| Monodepth2 [16] | ResNet18 | 0.184 | 1.365 | 6.146 | 0.719 | |

| Ours w/o RC | ResNet18 | 0.155 | 1.109 | 6.209 | 0.780 | |

| Ours | ResNet18 | 0.116 | 0.803 | 5.146 | 0.855 | |

| RA-Depth [27] | HRNet18 | 0.111 | 0.723 | 4.768 | 0.874 | |

| Ours | HRNet18 | 0.107 | 0.709 | 4.721 | 0.876 | |

| Monodepth2 [16] | ResNet18 | 0.193 | 1.335 | 6.059 | 0.673 | |

| Ours w/o RC | ResNet18 | 0.265 | 2.060 | 6.862 | 0.565 | |

| Ours | ResNet18 | 0.108 | 0.694 | 4.272 | 0.890 | |

| RA-Depth [27] | HRNet18 | 0.097 | 0.608 | 4.131 | 0.901 | |

| Ours | HRNet18 | 0.099 | 0.610 | 4.049 | 0.901 |

Models are trained with resolution and monocular (M) setting. RC means Resizing-Cropping and Res. means resolution.

IV-F1 Resizing-Cropping

We claim that our models also have the trait of resolution adaption like RA-Depth [27] due to the Resizing-Cropping augmentation. To verify this, we evaluate the models trained with on two other resolutions, and , which is listed in Table VI. When adapted to resolutions different from training, our method has comparable performance to RA-Depth. And the adaption ability becomes much worse without this augmentation.

IV-F2 Splitting-Permuting

To better understand how Splitting-Permuting makes sense, we estimate and then restore the depth of an image augmented by this strategy, both with and without this augmentation method, as shown in Fig. 5. Without Splitting-Permuting, the model fails to perceive the scene structure when the image context is broken, especially in the regions (see the box area in Fig. 5) where there are no roads to find clues from. When incorporating Splitting-Permuting, our method can restore depths of these regions even with context broken and clues missing. That accounts for our models’ generalization capacity to diverse scenes.

IV-F3 Detail-enhanced DepthNet

We additionally provide the qualitative comparison results between our full method and that without detail-enhanced DepthNet, as shown in Fig. 6. It is obviously that detail-enhanced DepthNet can help to restore more fine details in the estimated depth maps.

V Conclusion

In conclusion, this paper presents a novel approach for self-supervised monocular depth estimation which utilizes data augmentation and self-distillation techniques. By incorporating Resizing-Cropping and Splitting-Permuting, we can fully and effectively exploit the potential of training datasets and improve the generalization ability of the models. Moreover, we introduce the detail-enhanced DepthNet with an additional full-scale branch in the encoder and a grid decoder, which significantly improves the restoration of fine details in depth maps. Experimental results demonstrate the effectiveness and superior generalization capability of our method.

References

- [1] D. Eigen, C. Puhrsch, and R. Fergus, “Depth map prediction from a single image using a multi-scale deep network,” in Proc. Adv. Neural Inf. Process. Syst., 2014, pp. 2366–2374.

- [2] F. Liu, C. Shen, and G. Lin, “Deep convolutional neural fields for depth estimation from a single image,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2015, pp. 5162–5170.

- [3] B. Li, C. Shen, Y. Dai, A. Hengel, and M. He, “Depth and surface normal estimation from monocular images using regression on deep features and hierarchical CRFs,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2015, pp. 1119–1127.

- [4] I. Laina, C. Rupprecht, V. Belagiannis, F. Tombari, and N. Navab, “Deeper depth prediction with fully convolutional residual networks,” in Proc. IEEE Int. Conf. 3D Vis., 2016, pp. 239–248.

- [5] H. Fu, M. Gong, C. Wang, K. Batmanghelich, and D. Tao, “Deep ordinal regression network for monocular depth estimation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2018, pp. 2002–2011.

- [6] D. Eigen and R. Fergus, “Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture,” in Proc. IEEE Int. Conf. Comput. Vis., 2015, pp. 2650–2658.

- [7] H. Zhang, C. Shen, Y. Li, Y. Cao, Y. Liu, and Y. Yan, “Exploiting temporal consistency for real-time video depth estimation,” in Proc. IEEE Int. Conf. Comput. Vis., 2019, pp. 1725–1734.

- [8] J. Liu, L. Kong, and Y. Jie, “Designing and searching for lightweight monocular depth network,” in Proc. Int. Conf. Neural Inf. Process., 2021, pp. 477–488.

- [9] R. Garg, V. K. B.G., G. Carneiro, and I. Reid, “Unsupervised CNN for single view depth estimation: Geometry to the rescue,” in Proc. Eur. Conf. Comput. Vis., 2016, pp. 740–756.

- [10] C. Godard, O. M. Aodha, and G. J. Brostow, “Unsupervised monocular depth estimation with left-right consistency,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2017, pp. 6602–6611.

- [11] T. Zhou, M. Brown, N. Snavely, and D. G. Lowe, “Unsupervised learning of depth and ego-motion from video,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2017, pp. 6612–6619.

- [12] L. Kong and Y. Jie, “MDFlow: Unsupervised optical flow learning by reliable mutual knowledge distillation,” in IEEE Trans. Circuits Syst. Video Tech., 2023, pp. 677–688.

- [13] Z. Yin and J. Shi, “GeoNet: Unsupervised learning of dense depth, optical flow and camera pose,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2018, pp. 1983–1992.

- [14] M. Klingner, J. Termöhlen, J. Mikolajczyk, and T. Fingscheidt, “Self-supervised monocular depth estimation: Solving the dynamic object problem by semantic guidance,” in Proc. Eur. Conf. Comput. Vis., 2020, pp. 582–600.

- [15] M. Poggi, F. Aleotti, F. Tosi, and S. Mattoccia, “On the uncertainty of self-supervised monocular depth estimation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2020, pp. 3224–3234.

- [16] C. Godard, O. Mac Aodha, M. Firman, and G. J. Brostow, “Digging into self-supervised monocular depth estimation,” in Proc. IEEE Int. Conf. Comput. Vis., 2019, pp. 3828–3838.

- [17] V. Guizilini, R. Ambrus, S. Pillai, A. Raventos, and A. Gaidon, “3D packing for self-supervised monocular depth estimation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2020, pp. 2482–2491.

- [18] X. Lyu et al., “HR-Depth: High resolution self-supervised monocular depth estimation,” in Proc. AAAI Conf. Artif. Intell., 2021, pp. 2294–2301.

- [19] Z. Zhou, X. Fan, P. Shi, and Y. Xin, “R-MSFM: Recurrent multi-scale feature modulation for monocular depth estimating,” in Proc. IEEE Int. Conf. Comput. Vis., 2021, pp. 12757–12766.

- [20] V. Kaushik, K. Jindgar, and B. Lall, “ADAADepth: Adapting data augmentation and attention for self-supervised monocular depth estimation,” IEEE Robot. Automat. Lett., vol. 6, no. 4, pp. 7791–7798, Oct. 2021.

- [21] W. Han, J. Yin, X. Jin, X. Dai, and J. Shen, “BRNet: Exploring comprehensive features for monocular depth estimation,” in Proc. Eur. Conf. Comput. Vis., 2022, pp. 586–602.

- [22] G. Cha, H.-D. Jang, and D. Wee, “Self-supervised depth estimation with isometric-self-sample-based learning,” IEEE Robot. Automat. Lett., vol. 8, no. 4, pp. 2173–2180, Apr. 2023.

- [23] N. Zhang, F. Nex, G. Vosselman, and N. Kerle, “Lite-Mono: A lightweight CNN and Transformer architecture for self-supervised monocular depth estimation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2023, pp. 18537–18546.

- [24] R. Wang, Z. Yu, and S. Gao, “PlaneDepth: Self-supervised depth estimation via orthogonal planes,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2023, pp. 21425–21434.

- [25] K. Zhou et al., “DevNet: Self-supervised monocular depth learning via density volume construction,” in Proc. Eur. Conf. Comput. Vis., 2022, pp. 125–142.

- [26] H. Zhou, D. Greenwood, and S. Taylor, “Self-supervised monocular depth estimation with internal feature fusion,” in Proc. Bri. Mach. Vis. Conf., 2021.

- [27] M. He, L. Hui, Y. Bian, J. Ren, J. Xie, and J. Yang, “RA-Depth: Resolution adaptive self-supervised monocular depth estimation,” in Proc. Eur. Conf. Comput. Vis., 2022, pp. 565–581.

- [28] J. Bian et al., “Unsupervised scale-consistent depth and ego-motion learning from monocular video,” in Proc. Adv. Neural Inf. Process. Syst., 2019, pp. 35–45.

- [29] R. Peng, R. Wang, Y. Lai, L. Tang, and Y. Cai, “Excavating the potential capacity of self-supervised monocular depth estimation,” in Proc. IEEE Int. Conf. Comput. Vis., 2021, pp. 15540–15549.

- [30] A. Pilzer, S. Lathuiliere, N. Sebe, and E. Ricci, “Refine and distill: Exploiting cycle-inconsistency and knowledge distillation for unsupervised monocular depth estimation,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2019, pp. 9760–9769.

- [31] J. Bello and M. Kim, “Self-supervised deep monocular depth estimation with ambiguity boosting,” in IEEE Trans. Pattern Anal. Mach. Intell., vol. 44, no. 12, pp. 9131–9149, Dec. 2022.

- [32] C. Wang, J. M. Buenaposada, R. Zhu, and S. Lucey, “Learning depth from monocular videos using direct methods,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2018, pp. 2022–2030.

- [33] S. Pillai, R. Ambru¸s, and A. Gaidon, “Superdepth: Self-supervised, super-resolved monocular depth estimation,” in Proc. IEEE Int. Conf. Robot. Automat, 2019, pp. 9250–9256.

- [34] H. Jiang, L. Ding, Z. Sun, and R. Huang, “Dipe: Deeper into photometric errors for unsupervised learning of depth and ego-motion from monocular videos,” in Proc. IEEE. Int. Conf. Intell. Robot. Syst., 2020, pp. 10061–10067.

- [35] H. Li, A. Gordon, H. Zhao, V. Casser, and A. Angelova, “Unsupervised monocular depth learning in dynamic scenes,” in n Proc. Conf. Robot Learn., 2021, pp. 1908–1917.

- [36] B. Wagstaff, V. Peretroukhin, and J. Kelly, “On the coupling of depth and egomotion networks for self-supervised structure from motion,” IEEE Robot. Automat. Lett., vol. 7, no. 3, pp. 6766–6773, Jul. 2022.

- [37] J. Watson, O. Mac Aodha, V. Prisacariu, G. Brostow, and M. Firman, “The temporal opportunist: Self-supervised multi-frame monocular depth,” Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2021, pp. 1164–1174.

- [38] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” in Proc. Med. Image Comput. and Computer-Assisted Interv., 2015, pp. 234–241.

- [39] A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, “Vision meets robotics: The kitti dataset,” Int. J. Robot. Res., vol. 32, no. 11, pp. 1231–1237, Sept. 2013.

- [40] M. Cordts et al., “The cityscapes dataset for semantic urban scene understanding,” Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2016, pp. 3213–3223.

- [41] A. Saxena, M. Sun, and A. Y. Ng, “Make3d: Learning 3d scene structure from a single still image,” in IEEE Trans. Pattern Anal. Mach. Intell., vol. 31, no. 5, pp. 824–840, May. 2009.

- [42] N. Silberman et al., “Indoor segmentation and support inference from RGBD images,” in Proc. Eur. Conf. Comput. Vis., 2012, pp. 746–760.

- [43] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: From error visibility to structural similarity,” IEEE Trans. Image Process., vol. 13, no. 4, pp. 600–612, Apr. 2004.

- [44] D. Fourure, R. Emonet, E. Fromont, D. Muselet, A. Tremeau, and C. Wolf, “Residual conv-deconv grid network for semantic segmentation,” in Proc. Bri. Mach. Vis. Conf., 2017.

- [45] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2016, pp. 770–778.

- [46] J. Wang et al., “Deep high-resolution representation learning for visual recognition,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 43, no. 10, pp. 3349–3364, Oct. 2021.

- [47] J. Deng et al., “Imagenet: A large-scale hierarchical image database,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2009, pp. 248–255.

- [48] A. Paszke et al., “PyTorch: An imperative style, high-performance deep learning library,” in Proc. Adv. Neural Inf. Process. Syst., 2019, pp. 8026–8037.

- [49] I. Loshchilov and F. Hutter, “Decoupled weight decay regularization,” in Proc. Int. Conf. Learn. Representations, 2019.