Towards an Awareness of Time Series Anomaly Detection Models’ Adversarial Vulnerability

Abstract.

Time series anomaly detection is extensively studied in statistics, economics, and computer science. Over the years, numerous methods have been proposed for time series anomaly detection using deep learning-based methods. Many of these methods demonstrate state-of-the-art performance on benchmark datasets, giving the false impression that these systems are robust and deployable in many practical and industrial real-world scenarios. In this paper, we demonstrate that the performance of state-of-the-art anomaly detection methods is degraded substantially by adding only small adversarial perturbations to the sensor data. We use different scoring metrics such as prediction errors, anomaly, and classification scores over several public and private datasets ranging from aerospace applications, server machines, to cyber-physical systems in power plants. Under well-known adversarial attacks from Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD) methods, we demonstrate that state-of-the-art deep neural networks (DNNs) and graph neural networks (GNNs) methods, which claim to be robust against anomalies and have been possibly integrated in real-life systems, have their performance drop to as low as 0%. To the best of our understanding, we demonstrate, for the first time, the vulnerabilities of anomaly detection systems against adversarial attacks. The overarching goal of this research is to raise awareness towards the adversarial vulnerabilities of time series anomaly detectors.

1. Introduction

Machine learning and deep learning have profoundly impacted numerous fields of research and society over the last decade (LeCun et al., 2015; Goodfellow et al., 2016). Medical imaging (Litjens et al., 2017), speech recognition (Kumar et al., 2018), environmental sciences (Tariq et al., 2021b; Loy-Benitez et al., 2022) and smart manufacturing systems (Wang et al., 2018) are a few of these areas. With the proliferation of smart sensors, massive advances in data collection and storage, and the ease with which data analytics and predictive modeling can be applied, multivariate time series data obtained from collections of sensors can be analyzed to identify particular patterns that can be interpreted and exploited. Numerous researchers have been interested in time series anomaly detection (Pang et al., 2021; Tariq et al., 2019; Shin et al., 2020, 2019; Cho et al., 2019; Kim et al., 2021; Yun et al., 2022; Park et al., 2020). For instance, time series anomaly detection methods are used in the aerospace industry for satellite health monitoring (Tariq et al., 2019; Shin et al., 2020; Su et al., 2019). These deep neural network-based solutions outperform the competition on a variety of benchmark datasets. However, as deep learning became more prevalent, researchers began to investigate the vulnerability of deep neural networks, particularly to adversarial attacks. In the context of image recognition, an adversarial attack entails modifying an original image in such a way that the modifications are nearly imperceptible to the human eye (Yuan et al., 2019). The modified image is referred to as an adversarial image, as it will be classified incorrectly by the neural network, whereas the original image will be classified correctly. One of the most well-known real-world attacks involves manipulating the image of a traffic sign in such a way that it is misinterpreted by an autonomous vehicle (Eykholt et al., 2018). The most common type of attack is gradient-based, in which the attacker modifies the image in the direction of the gradient of the loss function relative to the input image, thereby increasing the rate of misclassification (Yuan et al., 2019; Goodfellow et al., 2014; Madry et al., 2017).

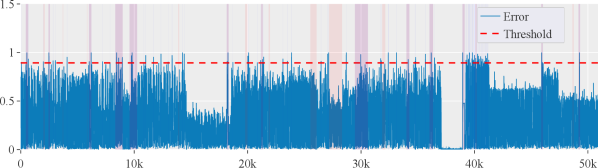

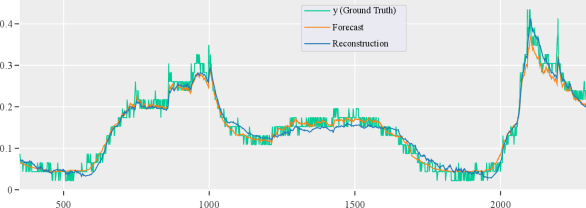

While adversarial attacks have been extensively studied in the context of computer vision areas, they have not been extensively investigated for anomaly detection systems with time-series data. It is surprising to see much less research performed, given the increasing popularity of deep learning models for classifying time series (Ma et al., 2018; Zheng et al., 2017; Wang et al., 2017). Additionally, adversarial attacks are possible in a large number of applications that require the use of time series data. For instance, Figure 1 (top) depicts the original and perturbed time series for the Korean Aerospace Research Institute’s KOMPSAT-5 satellite (KARI) (Tariq et al., 2019). The prediction error (see Figure 1, right) is generated by the Convolutional LSTM with Mixtures of Probabilistic Principal Component Analyzers (CLMPPCA) method (Tariq et al., 2019), which is currently incorporated at KARI, to predict anomalies. While CLMPPCA accurately predicts the anomaly for the original time series, adding small perturbations in the form of FGSM and PGD attacks causes the entire input samples to be classified as an anomaly. This attack can have a severe impact on the satellite health monitoring.

We present, transfer, and apply adversarial attacks that have been demonstrated to work well on images to time series data (containing anomalies) in this work. Additionally, we present an experimental study utilizing benchmark datasets from the aerospace and power plant industries and server machines, demonstrating that state-of-the-art anomaly detection methods are vulnerable to adversarial attacks. We highlight specific real-world use cases to emphasize the critical nature of such attacks in real-world scenarios. Our key findings indicate that deep networks for time series data, similar to their computer vision counterparts, are vulnerable to adversarial attacks. As a result, this paper emphasizes the importance of protecting against such attacks, particularly when anomaly detection systems are used in sensitive industries such as aerospace and power plants. Finally, we discuss some mechanisms for avoiding these attacks while strengthening the models’ resistance to adversarial examples.

Aim, Scope and Contribution. In this work, we do not propose any novel adversarial attack method. However, we apply and demonstrate the threat of well-known existing adversarial attacks such as FGSM and PGD towards state-of-the-art anomaly detection methods for multivariate time-series data. In comparison to the computer vision domain, where adversarial attack has been extensively studied and investigated, the literature on novelty detection, and particularly on anomaly detection, is noticeably devoid of such studies. The purpose of this paper is to bring attention to this critical issue, whereas time series anomaly detection models also play pivotal roles in real-world scenarios as other vision tasks. Additionally, we hope to encourage researchers to consider robustness to adversarial attacks when evaluating time-series based future detectors. The paper’s scope was limited to analyzing SOTA anomaly detectors. Finally, we successfully degraded the detection performance of deployed systems in the power plant and aerospace industries by employing adversarial attacks. It highlights how vulnerable the current generation of anomaly detectors is to adversarial attacks. Our source code and other implementation details are available here: https://github.com/shahroztariq/Adversarial-Attacks-on-Timeseries.

2. Related Work

In this section, we present background information, notations, and related works, with a particular emphasis on time series anomaly detection and adversarial attacks.

Background and Notations. When performing a supervised learning task, we define to represent a dataset containing N data samples. Each data sample is composed of a -dimensional multivariate time series and a single target value for classification. However, the majority of anomaly detection occurs in an unsupervised setting. As a result, we take a slightly different approach from the supervised task. Hence, for unsupervised learning, each data sample is again composed of a -dimensional multivariate time series however, is an -dimensional multivariate time series obtained from an autoregressive model, predicting the future. In most cases, however, they can be different as well. Moreover, we define any deep learning method as and loss function (e.g., cross entropy or mean squared error) as . Finally, generating an adversarial instance can be described as an optimization problem given a trained deep learning model and an original input time series , as follows:

| (1) |

Adversarial Attacks. In 2014, Szegedy et al. (2013) introduced adversarial examples against deep neural networks for image recognition tasks for the first time. Following these inspiring discoveries, an enormous amount of research has been devoted to generating, understanding, and preventing adversarial attacks on deep neural networks (Eykholt et al., 2018; Goodfellow et al., 2014; Madry et al., 2017). Adversarial attacks can be broadly classified into two types: White-box and Black-box attacks. As White-box attacks presume access to the model’s design and parameters, they can attack the model effectively and efficiently using gradient information. By contrast, Black-box attacks do not require access to the output probabilities or even the label, making them more practical in real-world situations. However, Black-box attacks frequently take hundreds, if not millions, of model queries to calculate a single adversarial case.

The majority of adversarial attack techniques have been proposed for use in image recognition. For instance, a Fast Gradient Sign Method attack was developed by Goodfellow et al. (2014) as a substitute for expensive optimization techniques (Szegedy et al., 2013). Madry et al. (2017) proposed Projected Gradient Descent (PGD) in response to the success of FGSM. PGD seeks to find the perturbation that maximizes a model’s loss on a particular input over a specified number of iterations while keeping the perturbation’s size below a specified value called epsilon (). This constraint is typically expressed as the perturbation’s or norm. It is added to ensure that the content of the adversarial example is identical to that of the unperturbed sample — or even to ensure that the adversarial example is imperceptibly different from the unperturbed sample. Carlini-Wagner is another well-known attack (Carlini and Wagner, 2017). However, it is primarily intended for norm-based attacks, whereas this study focuses exclusively on norm-based attacks.

Adversarial Attacks on Time Series Anomaly Detectors. Surprisingly, limited efforts have been made to extend computer vision-based adversarial attacks to time series anomaly detection domain. However, a few adversarial attack approaches have been proposed recently for the time series classification task, which are tangentially related to our work. For instance, in their work on adopting a soft K Nearest Neighbors (KNN) classifier with Dynamic Time Warping (DTW), Oregi et al. (2018) demonstrated that adversarial examples could trick the proposed nearest neighbors classifier on a single simulated synthetic control dataset from the UCR archive (Dau et al., 2019). Given that the KNN classifier is no longer considered the state-of-the-art classifier for time series data (Bagnall et al., 2017), Fawaz et al. (2019) extend this work by examining the effect of adversarial attack on the more recent and commonly used ResNet classifier (He et al., 2016). Fawaz et al. (2019), on the other hand, focused mainly on univariate datasets from the UCR repository. As a result, Harford et al. (2020) investigate the influence of adversarial attacks on multivariate time series classification using the multivariate dataset from UEA repository (Bagnall et al., 2018). However, Harford et al. (2020) only consider basic methods such as 1-Nearest Neighbor Dynamic Time Warping (Seto et al., 2015) (1-NN DTW) and a Fully Convolutional Network (FCN). Karim et al. (2020) and Harford et al. (2020) attacked models using Gradient Adversarial Transformation Networks (GATNs). However, they examined just transfer attacks, a relatively weak sort of Black-box attack. Only Siddiqui et al. (2019) demonstrated the effectiveness of gradient-based adversarial attacks on time series classification and regression networks. However, they considered a very simple baseline for the attack, containing only 3 convolutional, 2 max-pooling, and 1 dense layer.

Note: Our study differs from previous research in that we focus on time series anomaly detection rather than the broader classification problem. More precisely, we explore autoregressive models that have been mostly overlooked in prior works. Additionally, rather than targeting generic deep neural networks KNN with DTW or ResNet, we investigate state-of-the-art anomaly detection methods. For instance, when it comes to anomaly detection, we focus on the most contemporary and commonly used techniques, such as MSCRED (Zhang et al., 2019), CLMPPCA (Tariq et al., 2019), and MTAD-GAT (Zhao et al., 2020). Section 5 will cover these methods in further depth.

3. Threat Model

To fully define the adversary, we divide the threat model into three subsections based on the adversary’s capabilities, knowledge, and goals.

Adversary’s Capabilities. We consider an adversary whose objective is to reduce the effectiveness of a victim model. The attacker can apply the perturbations by modifying the victim’s test-time samples, for example, by compromising a sensor or the data link that collects the data for inference. We investigate a norm threat model with a epsilon. Due to the variable input range of time series data, there are no box constraints, in contrast to the visual image, where the pixels take on a definite value between . As a result, the data was standardized in our case using a zero-mean and unit standard deviation which justified the choice of as the epsilon value.

Adversary’s Knowledge. To evaluate the vulnerability of anomaly detection systems, we examine non-targeted White-box and Black-box scenarios. Typically, the attacker is given complete knowledge of the victim model, including its training data and the model’s tunable parameters and weights. However, we believe it to be unpractical in our scenario. As most of the system in our analysis are behind some layer of firewall or defense protection and most of the model parameters are hidden. Therefore, we consider two types of adversary’s knowledge as follows:

1) Complete Knowledge: The attacker understands how the model and its parameters works. We can consider a White-box attack to be the most appropriate method for this type of adversary.

2) Partial or No Knowledge: Given that the attacker has no or limited knowledge of the system, a Black-box attack is the most appropriate method in this case. As a result, strategies such as transfer-based priors (Cheng et al., 2019) can be applied by the adversary.

Adversary’s Goals. The adversary considers two cases: (i) normal to anomaly and (ii) anomaly to normal. In (i), the adversary creates a for each test sample so that the models interpret it as an anomaly, thereby generating a false-positive. However, in (ii), the adversary fabricates to achieve the inverse effect, namely, to cause the model to predict an anomaly as normal, hence generating false-negative examples. As anomalies are rare events, even a few misclassifications caused by the adversary can have a detrimental effect on the model’s performance.

4. Adversarial Attack Generation

The Fast Gradient Sign Method (FGSM) attack was proposed for the first time by Goodfellow et al. (2014). The training of neural networks entails minimizing a loss function by adjusting the network weights. FGSM, on the other hand, does the opposite by adjusting the input samples in the direction opposite to the loss function’s minimum. Thus, the FGSM attack is concerned with the computation of optimal perturbation series , which can be added/summed to an input sample pointwise (i.e., a point refers to a single timestep) in order to maximize the classification loss function, i.e., cause misclassifications. This is mathematically expressed as:

| (2) |

where denotes the derivative of the network’s loss, , with respect to each timestep in (calculated for an input datapoint and it’s true output ). To control the magnitude of the perturbation (i.e., to keep it imperceptibly small), is used as a multiplier factor. After that, the perturbed sample can be computed as . Note that FGSM requires the attacker to compute the loss function gradient with respect to a given input, which may not be possible directly. Due to the fact that FGSM requires knowledge of the internal workings of the network it is therefore referred to as a White-box attack. However, a surrogate model can be used to simulate the target model. An FGSM attack can be applied to the surrogate to generate adversarial examples (Papernot et al., 2017), allowing for the use of such White-box attacks in practical scenarios (Kurakin et al., 2016b).

Madry et al. (2017) proposed a more robust adversarial attack called Projected Gradient Descent (PGD). This attack employs a multi-step procedure and a negative loss function. It overcomes the problem of network overfitting and the shortcomings of the FGSM attack. It is more robust than first-order network information-based FGSM, and it performs well under large-scale constraints. Gradient Descent is essentially identical to the Basic Iterative Method (BIM) (Kurakin et al., 2016b) or the Iterative FGSM (IFGSM) (Kurakin et al., 2016a) attacks. The only difference is that PGD initializes the example at a random location within the ball of interest (determined by the norm) and performs random restarts, whereas BIM initializes at the original location.

| (3) |

where is a nonempty compact topological space, is the total number of iterations, and is the control rate. An illustration of the overall pipeline is provided in Figure 2.

5. Experimental Setup

This section contains information on the benchmark datasets, evaluation metrics, criteria for selecting baselines, and chosen baselines.

Datasets For anomaly detection we employ three public datasets: (i) Mars Science Laboratory rover (MSL) (Hundman et al., 2018), (ii) Soil Moisture Active Passive satellite (SMAP) (Hundman et al., 2018), and (iii) Server Machine Dataset (SMD) (Su et al., 2019), as well as one private dataset: (vi) Korean Aerospace Research Institute KOMPSAT-5 satellite (KARI) (Tariq et al., 2019) and one synthetic dataset: (v) from the MSCRED paper (Zhang et al., 2019). The datasets were chosen based on our baselines’ shown ability to provide state-of-the-art performance on them. Table 1 summarize these datasets.

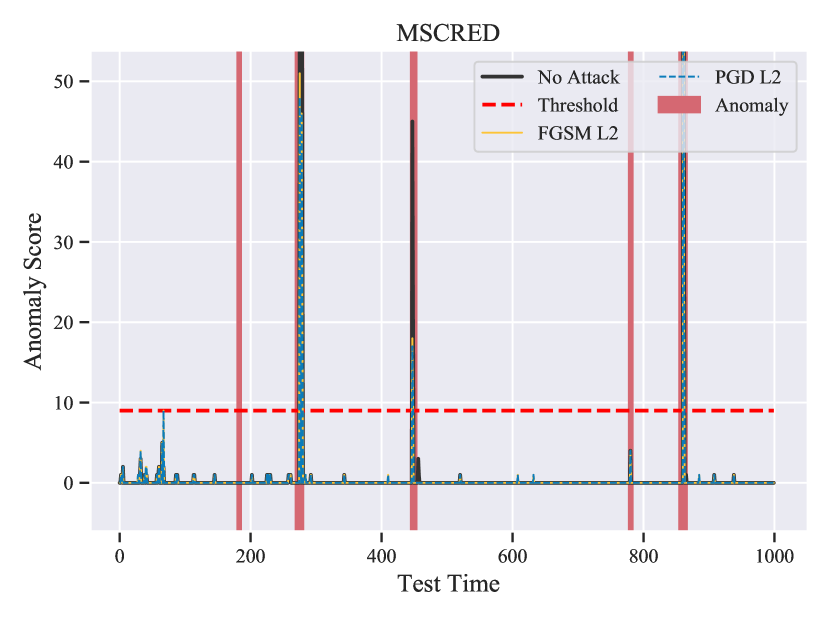

Evaluation Metrics To obtain the final classification result for anomaly detection methods, we observed that the majority of detectors use a thresholding method on top of the neural network’s predictions, which are expressed as an anomaly score or prediction error. The precision, recall, and F1-score are then calculated using the results from thresholding methods. While these metrics are beneficial, the true impact of the adversarial attack is visible primarily in anomaly detectors’ anomaly score and prediction errors. Therefore, we include Figure 1, 3(b) and 3(a), as illustrations of this impact. Additionally, we include more related figures in Appendix B– D.

| Statistics | SMAP | MSL | SMD | KARI | Synthetic |

|---|---|---|---|---|---|

| Dimensions | 55 | 27 | 28 | 4-35 | 30 |

| Anomalies | 13.13% | 10.27% | 4.16% | 1.00% | 1.10% |

| Train Size | 135,183 | 58,317 | 708,405 | 4,405,636 | 8,000 |

| Test Size | 427,617 | 73,729 | 708,420 | 17,622,546 | 10,000 |

5.1. Anomaly Detection Baselines

5.1.1. Selection Criteria

We conduct experiments on the following baselines to demonstrate that the vulnerability to adversarial attacks is common among several state-of-the-art anomaly detection architectures. Anomaly detectors based on Deep Neural Networks (DNNs) are the most frequently used method. However, some methods based on Graph Neural Networks (GNNs) have also been proposed recently. As a result, we evaluated both DNNs- and GNNs-based anomaly detectors. We used the following criteria to determine the baseline:

-

(1)

Diverse Architecture: To ensure that we cover a broad range of methods, we decide that the baselines should be diverse, i.e., no two baselines have similar model architecture.

-

(2)

Diverse pre-processing: They should consider a different pre-processing technique (e.g., using raw data or feature vectors).

-

(3)

Diverse post-processing: They should take into account various post-processing techniques for prediction (e.g., anomaly score, prediction error, or classification score).

-

(4)

Peer-reviewed: The method is widely accepted and peer-reviewed. For this criterion, we take into account GitHub Forks, paper citations, and publication venues.

-

(5)

Open-source: The source code is freely available or can be obtained upon request.

5.1.2. Selected Baselines

We choose the following baselines based on the aforementioned criteria:

MSCRED (Zhang et al., 2019) [AAAI’19]: Taking advantage of the temporal dependencies inherent in multivariate time series, Zhang et al. (2019) proposed a Multi-Scale Convolutional Recurrent Encoder-Decoder (MSCRED) for anomaly detection on two datasets: (i) synthetic and (ii) power plant. Sidenote: Shen et al. (2020) demonstrated that MSCRED outperforms all SOTA anomaly detection methods except Temporal Hierarchical One-Class (THOC), but we were unable to evaluate THOC as the code is not available (see more details below this list). As a result, we chose the second best method (i.e., MSCRED) among recently developed SOTA anomaly detection methods. Because the power plant dataset is not publicly available, we compare MSCRED with and without adversarial attack using the synthetic dataset used by Zhang et al. (2019) in their work.

CLMPPCA (Tariq et al., 2019) [KDD’19]: Tariq et al. (2019) proposed a hybrid approach for anomaly detection in multivariate satellite telemetry data. Based on the accomplishments of Convolutional LSTM-based networks in understanding spatiotemporal data in various domains (Tariq et al., 2020b, 2021a; Kim et al., 2017; Tariq et al., 2022), they propose a Convolutional LSTM with Mixtures of Probabilistic Principal Component Analyzers (CLMPPCA) method for transforming the time window containing several telemetry data samples into a feature vector that is used to train the model and to predict the future data instances. To make final classification, the prediction errors calculated from the prediction and ground truth are combined with a moving average-based threshold method. In their work Tariq et al. (2019) used a private dataset from the Korean Aerospace Research Institute’s (KARI) KOMPSAT-5 satellite for evaluation. We were able to obtain the same private dataset and demonstrate how adversarial attacks affect the performance of CLMPPCA. One of the primary reasons for selecting CLMPPCA is that it is currently deployed at KARI. Thus, successfully demonstrating an attack on this method will demonstrate its applicability in a practical scenario.

MTAD-GAT (Zhao et al., 2020) [ICDM’20]: Zhao et al. (2020) proposed a multivariate time series anomaly detector based on Graph Attention Networks. The authors treat each univariate time series as a separate feature and employ two parallel graph attention layers to learn the complex dependencies between multivariate time series in both temporal and feature dimensions by jointly optimizing a forecasting-based and reconstruction-based model. MTAD-GAT outperformed several recent time series anomaly detectors such as OmniAnomaly (Su et al., 2019), MAD-GAN (Li et al., 2019), and DAGMM (Zong et al., 2018) from ICLR 2018, on three publicly available anomaly datasets (SMAP, MSL, and SMD). As a result, MTAD-GAT is one of the best SOTA methods currently available. We evaluate MTAD-GAT with and without adversarial attacks on all three datasets (i.e., SMAP, MSL, and SMD).

Note: We chose these three baselines based on their compliance with our defined criteria. Additionally, we were unable to evaluate some recent methods, such as Temporal Hierarchical One-Class (THOC) published at NeurIPS 2020 because the source code is not publicly available and our request to obtain the source code from the author was not answered. We discuss this further in Section A.

5.1.3. White- and Black-box Attack Settings.

As the attacker will have complete knowledge of the underlying system in a White-box attack, we create attack vectors using the same selected baselines, namely MSCRED, CLMPPCA, and MTAD-GAT. Whereas for the Black-box attack, we build attack vectors using a model that is similar to but simpler than the victim model. For example, we utilise a vanilla recurrent autoencoder to create attack vectors for MSCRED, a simple CNN+LSTM model for CLMPPCA, and a vanilla GNN for MTAD-GAT.

6. Empirical Evaluation

We present results for the FGSM and PGD attacks against three SOTA anomaly detection methods—MSCRED, CLMPPCA, and MTAD-GAT. The Appendix includes additional details about the , , and attacks results (Appendix B); more details on impact of adversarial attacks on MTAD-GAT (Appendix C); some original vs. perturbed time series samples (Appendix D. Moreover, results from the FGSM, PGD, BIM, Carlini-Wagner, and Momentum Iterative Method (MIM) (Dong et al., 2018) attacks on 71 datasets from the UCR repository are available on our GitHub repository. In general, we observe that perturbations that are -bounded are more effective. This could be explained by optimization challenges, as and attacks are typically more difficult to optimize (Carlini and Wagner, 2017; Tramer and Boneh, 2019).

6.1. Adversarial Attack on MSCRED

6.1.1. MSCRED (White-box)

We employ non-targeted FGSM and PGD methods to attack MSCRED. As a result, only from the test set is made available to the attack methods. The is set to for the FGSM attack, and is set to for the PGD attack with . The MSCRED method determines the appropriate threshold between normal and anomalous data points based on the training data. As a result, any modification to the test samples should not affect the threshold. As shown in Table 2, the victim model (MSCRED) has no efficacy on the samples perturbed by FGSM and PGD attacks and thus fails to detect all anomalies. Additionally, MSCRED classifies all instances of normal data as anomalies. We demonstrate in Figure 3(a) that MSCRED (No Attack) can accurately predict the majority of anomalies with an F1 score of (see Table 2). According to Figure 3(a), the anomaly scores under FGSM (yellow) and PGD (blue) attacks are always higher than the threshold (red dashed line), which means that MSCRED is predicting everything as an anomaly, resulting in an F1 score of less than . It is intriguing that such a small amount of change in the time series, which is primarily imperceptible to the naked eye, can greatly affect the MSCRED’s anomaly scores, even when the perturbations are so minute. The results in Table 2 are demonstrating that MSCRED is not robust against adversarial attacks.

6.1.2. MSCRED (Black-box)

As with the White-box attack, the Black-box attack significantly reduced MSCRED’s F1-score. This reduction, however, is slightly less than that caused by a White-box attack. Moreover, the PGD attack reduced F1-scores more than the FGSM attack, as shown in Table 2. This experiment demonstrates that even when we build the attack vector using a different backbone model, we can still achieve significant success by transferring the adversarial attack.

6.2. Adversarial Attack on MTAD-GAT

6.2.1. MTAD-GAT (White-box)

As with MSCRED, we attack MTAD-GAT using a non-targeted FGSM and PGD method with , , and . The results of adversarial attacks against MTAD-GAT trained on the MSL, SMAP, and SMD datasets are shown in Table 3. MTAD-GAT demonstrates state-of-the-art performance for anomaly detection in the absence of an adversarial attack (No Attack). However, when adversarial examples from FGSM and PGD are used to evaluate it, the detection performance drops to as low as 66%. The impact of the PGD attack is more significant than that of the FGSM attack, which is understandable given that PGD is a more powerful attack than FGSM. It leads us to ponder that if more sophisticated attacks are explicitly developed for time series data, they will have a significantly greater impact on SOTA anomaly detectors. As a result, future anomaly detection methods should take adversarial examples into account.

| Method | White-box | Black-box | ||||

|---|---|---|---|---|---|---|

| Pre. | Rec. | F1 | Pre. | Rec. | F1 | |

| No Attack | 1.000 | 0.800 | 0.890 | 1.000 | 0.800 | 0.890 |

| FGSM | 0.487 | 0.500 | 0.493 | 0.651 | 0.693 | 0.671 |

| PGD | 0.485 | 0.500 | 0.492 | 0.634 | 0.677 | 0.655 |

| Method | White-box | Black-box | ||||

|---|---|---|---|---|---|---|

| MSL | SMAP | SMD | MSL | SMAP | SMD | |

| No Attack | 0.950 | 0.894 | 0.999 | 0.950 | 0.894 | 0.999 |

| FGSM | 0.719 | 0.804 | 0.803 | 0.751 | 0.847 | 0.852 |

| PGD | 0.687 | 0.775 | 0.665 | 0.727 | 0.815 | 0.749 |

Additionally, Figure 3(b) illustrates the effect of adversarial examples from FGSM (yellow) and PGD (blue) attacks on the MTAD-GAT anomaly score for the MSL dataset. We can see that the anomaly scores for FGSM and PGD frequently exceed the threshold (red dashed line), resulting in a large number of false positives and lowering the F1 score from 94.98% to 71.90% for FGSM and 68.69% for PGD.

6.2.2. MTAD-GAT (Black-box)

Like the Black-box attack on MSCRED, the attack on MTAD-GAT has a similar effect, lowering the F1-score for MSL, SMAP, and SMD to 0.751, 0.847, and 0.852 with the FGSM attack and 0.727, 0.815, and 0.749 with the PGD attack, as shown in Table 3. As with the Autoencoder, this experiment indicates that it is possible to transfer adversarial attack to graph neural networks. Thus, demonstrating that the adversary may not require extensive knowledge of the backbone to launch a successful attack.

6.3. Adversarial Attack on CLMPPCA

6.3.1. CLMPPCA (White-box)

The KARI dataset is divided into ten subsystems. As a result, we trained the CLMPPCA model on each subsystem separately, as described in the original paper. We then used FGSM and PGD attacks to evaluate each of these trained models. For FGSM, we use , for PGD, we use , and . Table 4 summarizes the prediction errors for each subsystem prior to and following the attack. We can see that when adversarial attacks are used, the prediction error increases up to twentyfold. Note: For brevity and space constraints, we omit the F1 score from Table 4, as it is for all subsystems. CLMPPCA fails to predict any anomalies under FGSM and PGD attacks because the prediction error is always higher than the threshold (see Figure 1). We believe that by employing these straightforward yet effective attacks, an adversary can easily introduce false positives into CLMPPCA’s predictions at will, posing significant difficulties for satellite operators.

| Methods | SS1 | SS2 | SS3 | SS4 | SS5 | SS6 | SS7 | SS8 | SS9 | SS10 |

|---|---|---|---|---|---|---|---|---|---|---|

| No Attack | 0.025 | 0.020 | 0.646 | 0.018 | 0.078 | 0.081 | 0.028 | 0.015 | 0.043 | 0.106 |

| FGSM | 0.306 | 0.327 | 5.657 | 0.153 | 1.744 | 1.708 | 0.246 | 0.201 | 1.303 | 0.314 |

| (0.132) | (0.159) | (3.163) | (0.092) | (0.680) | (0.616) | (0.115) | (0.098) | (0.724) | (0.191) | |

| PGD | 0.688 | 0.748 | 11.20 | 0.205 | 2.459 | 3.391 | 0.430 | 0.231 | 1.798 | 0.555 |

| (0.333) | (0.382) | (5.216) | (0.135) | (1.301) | (1.630) | (0.206) | (0.139) | (1.105) | (0.249) |

6.3.2. CLMPPCA (Black-box)

We generated the attack vector using a CNNLSTM surrogate model and evaluated the CLMPPCA model in a Black-box scenario. As with the other two Black-box experiments (i.e., MSCRED and MTAD-GAT), we saw a similar trend. The CLMPPCA model’s prediction error does increase consistently for all subsystems when the attack vector is constructed using the surrogate model, as shown in Table 4; in round brackets. We can deduce from the CLMPPCA Black-box results that all three types of models investigated in this work (i.e., Autoencoder, DNNs, GNNs) are relatively equally susceptible to transferable adversarial attacks via surrogate models.

6.4. Summary of Results

Our findings indicate that the majority of SOTA anomaly detectors prioritized performance over robustness. This could have dire consequences if such systems are deployed in real-world systems. CLMPPCA is one such example, which is currently being deployed at KARI. Please note that we have informed KARI of the vulnerability in CLMPPCA; additional information is available in our Ethics Statement (see Section 7). Additionally, leveraging a surrogate model to conduct a Black-box attack can have a severe effect on the performance of the victim model. However, there are several limitations to surrogate, which we shall discuss in Section 7.

7. Discussion

Adversarial Time Series Defense. Adversarial training is one of the most commonly used defense methods against adversarial examples. However, as Kang et al. (2019) suggest, training a network to withstand one type of attack may weaken it against others. Additionally, Tramer et al. (2020) outline various methods to conduct an adaptive attack and demonstrate that none of the 13 recently developed defense methods can withstand all types of adaptive attacks. Recently, a few techniques for defending against adversarial time series have been proposed. For example, Goodge et al. (2020) propose an Approximate Projection Autoencoder (APAE) resistant to IFGSM attacks. However, it only considers autoencoder-based anomaly detectors. Moreover, the performance of several SOTA baselines reported in the paper is significantly lower than that reported in their original paper using the same publicly available benchmark dataset. As a result, a thorough examination of the defense methods is required.

In order to encourage the studies of adversarial robustness for time series anomaly detection models, we will discuss here some possible approaches that are primarily motivated by computer vision areas. From the perspective of adversarial generation, perturbations created by attackers have mainly relied on gradients of model predictions w.r.t its invaded inputs. We can apply the input-output Jacobian regularization in order to agnostically silent the model’s gradients regardless of its input as was shown in (Co et al., 2021; Hoffman et al., 2019). On the other hand, when we have multiple classes in the training dataset, we can focus on aligning distributions of adversarial samples to be resembling to clean ones in the latent space, namely adversarial training (Zhang and Wang, 2019; Bai et al., 2021; Bouniot et al., 2021). In the one-class training manner, we expect our defense model to learn the intrinsic representative features from the training dataset and be more robust to adding noise in the test set. Therefore, regularizing the embedding space to be more compact is an appealing approach that so far has not been investigated in time-series anomaly detection areas thoroughly. This objective can be achieved via sparing the latent space with principal component analysis as demonstrated in (Lai et al., 2019; Lo et al., 2021).

Limitations and Future Work. There are some limitations to our work, and future work will try to solve them. For instance, we could not evaluate all of the recent anomaly detectors in our work due to the following reasons: (i) The most important reason is that the codes are not publicly available in many cases or the code is outdated, making it hard to compare (we discuss this in detail in reproducibility section). (ii) It is hard to reproduce the same results as demonstrated by the paper, mainly when the codes are not from the original authors but developed by the community. Therefore, future work should look for more methods. Moreover, we have only applied FGSM, PGD, and SL1D (see Appendix B) attacks on the detectors. We do provide results from other attacks such as Carlini-Wagner L2 and MIM on the UCR dataset on our GitHub repository. Another future work will be to transfer these and new adversarial attacks to anomaly detectors.

We assumed that the training data for the surrogate model is either publicly available or obtained by probing the simulation results at multiple intelligently chosen places in the design parameter space. However, such an assumption may not hold true in a closed loop system. As a result, future research should focus on developing a more comprehensive strategy for acquiring training data for surrogate models. Finally, developing robust detectors should be considered in future studies.

New Adversarial Attacks. We have not developed a novel type of adversarial attack in this study and have instead utilised some of the more prevalent adversarial methods for the following reasons: (i) We believe that if a simple attack can demonstrate a system’s vulnerability, then developing a new more complex attack solely to increase the novelty of the paper is futile, as the primary objective of this paper is to expose anomaly detector’s vulnerabilities, not to develop new adversarial attacks. (ii) At the time the baselines reviewed in this study were published, the FGSM and PGD attacks were already well-known; thus, establishing that those baselines are not resilient against FGSM and PGD adversarial attacks provides a fair comparison.

Attack on Intrusion Detection System. Intrusion detection is frequently associated with anomaly detection and, more broadly, novelty detection systems. In contrast to the realm of anomaly detection, numerous attempts have been made to investigate adversarial attacks against intrusion detection systems (Apruzzese and Colajanni, 2018; Apruzzese et al., 2019; Corona et al., 2013). As a proof of concept, we also deployed similar adversarial attacks (i.e., FGSM and PGD) to an intrusion detection system for Controller Area Networks and discovered that the attacks are just as effective against them. Owing to the fact that this experiment requires extensive background information, and due to a shortage of space, we provide further details on our GitHub111https://github.com/shahroztariq/Adversarial-Attacks-on-Timeseries and more context here (Tariq and Woo, 2022).

Ethics Statement. Our study, in our opinion, raises only one significant ethical issue (i.e., presenting the vulnerabilities of a deployed system). Now, we will describe how we deal with it. To begin, we downloaded the CLMPPCA code from GitHub. Second, we contacted the authors of the CLMPPCA paper and requested the dataset. Following KARI’s security clearance. We were able to obtain access to the dataset and some code associated with the driver, which was kept private on purpose. We contacted the authors and informed them of our findings after identifying the vulnerabilities in CLMPPCA. The authors replicated our findings on the deployed system using the same attacks. For the time being, the system is offline, and the authors of the CLMPPCA paper and other KARI developers are investigating possible defense methods. We believe that adhering to this entire procedure resolves any ethical concerns regarding this matter.

8. Conclusion

The concept of adversarial attacks on deep learning models for time series anomaly detection was considered in this paper. We defined and adapted adversarial attacks initially proposed for image recognition to time series data. On several benchmark datasets, we demonstrated how adversarial perturbations could reduce the accuracy of state-of-the-art anomaly detectors. As data scientists and developers increasingly implement deep neural network-based solutions for time series related real-world critical decision-making systems (e.g., in aerospace industries), we shed light on several critical use cases where adversarial attacks could have severe and dangerous repercussions. Additionally, we demonstrate empirically that White- and Black-box attacks are both conceivable and can result in significant performance deterioration. Finally, we discuss several defense strategies and possible future directions for adversarially resilient anomaly detector development.

Acknowledgements.

This work was partially supported by the Basic Science Research Program through National Research Foundation of Korea (NRF) grant funded by the Korean Ministry of Science and ICT (MSIT) under No. 2020R1C1C1006004 and Institute for Information & communication Technology Planning & evaluation (IITP) grants funded by the Korean MSIT: (No. 2022-0-01199, Graduate School of Convergence Security at Sungkyunkwan University), (No. 2022-0-01045, Self-directed Multi-Modal Intelligence for solving unknown, open domain problems), (No. 2022-0-00688, AI Platform to Fully Adapt and Reflect Privacy-Policy Changes), (No. 2021-0-02068, Artificial Intelligence Innovation Hub), (No. 2019-0-00421, AI Graduate School Support Program at Sungkyunkwan University), and (No. 2021-0-02309, Object Detection Research under Low Quality Video Condition).References

- (1)

- Apruzzese and Colajanni (2018) Giovanni Apruzzese and Michele Colajanni. 2018. Evading botnet detectors based on flows and random forest with adversarial samples. In 2018 IEEE 17th International Symposium on Network Computing and Applications (NCA). IEEE, 1–8.

- Apruzzese et al. (2019) Giovanni Apruzzese, Michele Colajanni, and Mirco Marchetti. 2019. Evaluating the effectiveness of adversarial attacks against botnet detectors. In 2019 IEEE 18th International Symposium on Network Computing and Applications (NCA). IEEE, 1–8.

- Bagnall et al. (2018) Anthony Bagnall, Hoang Anh Dau, Jason Lines, Michael Flynn, James Large, Aaron Bostrom, Paul Southam, and Eamonn Keogh. 2018. The UEA multivariate time series classification archive, 2018. arXiv preprint arXiv:1811.00075 (2018).

- Bagnall et al. (2017) Anthony Bagnall, Jason Lines, Aaron Bostrom, James Large, and Eamonn Keogh. 2017. The great time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data mining and knowledge discovery 31, 3 (2017), 606–660.

- Bai et al. (2021) Yang Bai, Yuyuan Zeng, Yong Jiang, Shu-Tao Xia, Xingjun Ma, and Yisen Wang. 2021. Improving adversarial robustness via channel-wise activation suppressing. International Conference on Learning Representations (ICLR) (2021).

- Bouniot et al. (2021) Quentin Bouniot, Romaric Audigier, and Angelique Loesch. 2021. Optimal transport as a defense against adversarial attacks. In 2020 25th International Conference on Pattern Recognition (ICPR). IEEE, 5044–5051.

- Carlini and Wagner (2017) Nicholas Carlini and David Wagner. 2017. Towards evaluating the robustness of neural networks. In 2017 ieee symposium on security and privacy (sp). IEEE, 39–57.

- Cheng et al. (2019) Shuyu Cheng, Yinpeng Dong, Tianyu Pang, Hang Su, and Jun Zhu. 2019. Improving black-box adversarial attacks with a transfer-based prior. Advances in neural information processing systems 32 (2019).

- Cho et al. (2019) Jinwoo Cho, Shahroz Tariq, Sangyup Lee, Young Geun Kim, Jeong-Han Yun, Jonguk Kim, Hyoung Chun Kim, and Simon S Woo. 2019. Robust Anomaly Detection in Cyber Physical System using Kullback-Leibler Divergence in Error Distributions. In 5th Workshop on Mining and Learning from Time Series (MileTS’19), Anchorage, Alaska, USA.

- Co et al. (2021) Kenneth T Co, David Martinez Rego, and Emil C Lupu. 2021. Jacobian regularization for mitigating universal adversarial perturbations. In International Conference on Artificial Neural Networks. Springer, 202–213.

- Corona et al. (2013) Igino Corona, Giorgio Giacinto, and Fabio Roli. 2013. Adversarial attacks against intrusion detection systems: Taxonomy, solutions and open issues. Information Sciences 239 (2013), 201–225.

- Dau et al. (2019) Hoang Anh Dau, Anthony Bagnall, Kaveh Kamgar, Chin-Chia Michael Yeh, Yan Zhu, Shaghayegh Gharghabi, Chotirat Ann Ratanamahatana, and Eamonn Keogh. 2019. The UCR time series archive. IEEE/CAA Journal of Automatica Sinica 6, 6 (2019), 1293–1305.

- Dong et al. (2018) Yinpeng Dong, Fangzhou Liao, Tianyu Pang, Hang Su, Jun Zhu, Xiaolin Hu, and Jianguo Li. 2018. Boosting adversarial attacks with momentum. In Proceedings of the IEEE conference on computer vision and pattern recognition. 9185–9193.

- Eykholt et al. (2018) Kevin Eykholt, Ivan Evtimov, Earlence Fernandes, Bo Li, Amir Rahmati, Chaowei Xiao, Atul Prakash, Tadayoshi Kohno, and Dawn Song. 2018. Robust physical-world attacks on deep learning visual classification. In Proceedings of the IEEE conference on computer vision and pattern recognition. 1625–1634.

- Fawaz et al. (2019) Hassan Ismail Fawaz, Germain Forestier, Jonathan Weber, Lhassane Idoumghar, and Pierre-Alain Muller. 2019. Adversarial attacks on deep neural networks for time series classification. In 2019 International Joint Conference on Neural Networks (IJCNN). IEEE, 1–8.

- Goodfellow et al. (2016) Ian Goodfellow, Yoshua Bengio, Aaron Courville, and Yoshua Bengio. 2016. Deep learning. Vol. 1. MIT Press.

- Goodfellow et al. (2014) Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. 2014. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572 (2014).

- Goodge et al. (2020) Adam Goodge, Bryan Hooi, See-Kiong Ng, and Wee Siong Ng. 2020. Robustness of Autoencoders for Anomaly Detection Under Adversarial Impact.. In IJCAI. 1244–1250.

- Harford et al. (2020) Samuel Harford, Fazle Karim, and Houshang Darabi. 2020. Adversarial attacks on multivariate time series. arXiv preprint arXiv:2004.00410 (2020).

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

- Hoffman et al. (2019) Judy Hoffman, Daniel A Roberts, and Sho Yaida. 2019. Robust learning with jacobian regularization. arXiv preprint arXiv:1908.02729 (2019).

- Hundman et al. (2018) Kyle Hundman, Valentino Constantinou, Christopher Laporte, Ian Colwell, and Tom Soderstrom. 2018. Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery & data mining. 387–395.

- Kang et al. (2019) Daniel Kang, Yi Sun, Dan Hendrycks, Tom Brown, and Jacob Steinhardt. 2019. Testing robustness against unforeseen adversaries. arXiv preprint arXiv:1908.08016 (2019).

- Karim et al. (2020) Fazle Karim, Somshubra Majumdar, and Houshang Darabi. 2020. Adversarial attacks on time series. IEEE transactions on pattern analysis and machine intelligence (2020).

- Kim et al. (2017) Seongchan Kim, Seungkyun Hong, Minsu Joh, and Sa-kwang Song. 2017. Deeprain: Convlstm network for precipitation prediction using multichannel radar data. arXiv preprint arXiv:1711.02316 (2017).

- Kim et al. (2021) Young Geun Kim, Jeong-Han Yun, Siho Han, Hyoung Chun Kim, and Simon S Woo. 2021. Revitalizing Self-Organizing Map: Anomaly Detection Using Forecasting Error Patterns. In IFIP International Conference on ICT Systems Security and Privacy Protection. Springer, 382–397.

- Kumar et al. (2018) Akshi Kumar, Sukriti Verma, and Himanshu Mangla. 2018. A survey of deep learning techniques in speech recognition. In 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN). IEEE, 179–185.

- Kurakin et al. (2016a) Alexey Kurakin, Ian Goodfellow, and Samy Bengio. 2016a. Adversarial machine learning at scale. arXiv preprint arXiv:1611.01236 (2016).

- Kurakin et al. (2016b) Alexey Kurakin, Ian Goodfellow, Samy Bengio, et al. 2016b. Adversarial examples in the physical world.

- Lai et al. (2019) Chieh-Hsin Lai, Dongmian Zou, and Gilad Lerman. 2019. Robust subspace recovery layer for unsupervised anomaly detection. arXiv preprint arXiv:1904.00152 (2019).

- LeCun et al. (2015) Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. 2015. Deep learning. nature 521, 7553 (2015), 436–444.

- Li et al. (2019) Dan Li, Dacheng Chen, Baihong Jin, Lei Shi, Jonathan Goh, and See-Kiong Ng. 2019. MAD-GAN: Multivariate anomaly detection for time series data with generative adversarial networks. In International Conference on Artificial Neural Networks. Springer, 703–716.

- Litjens et al. (2017) Geert Litjens, Thijs Kooi, Babak Ehteshami Bejnordi, Arnaud Arindra Adiyoso Setio, Francesco Ciompi, Mohsen Ghafoorian, Jeroen Awm Van Der Laak, Bram Van Ginneken, and Clara I Sánchez. 2017. A survey on deep learning in medical image analysis. Medical image analysis 42 (2017), 60–88.

- Lo et al. (2021) Shao-Yuan Lo, Poojan Oza, and Vishal M Patel. 2021. Adversarially Robust One-class Novelty Detection. arXiv preprint arXiv:2108.11168 (2021).

- Loy-Benitez et al. (2022) Jorge Loy-Benitez, Shahzeb Tariq, Hai Tra Nguyen, Usman Safder, KiJeon Nam, and ChangKyoo Yoo. 2022. Neural circuit policies-based temporal flexible soft-sensor modeling of subway PM2. 5 with applications on indoor air quality management. Building and Environment 207 (2022), 108537.

- Ma et al. (2018) Tengfei Ma, Cao Xiao, and Fei Wang. 2018. Health-atm: A deep architecture for multifaceted patient health record representation and risk prediction. In Proceedings of the 2018 SIAM International Conference on Data Mining. SIAM, 261–269.

- Madry et al. (2017) Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. 2017. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083 (2017).

- Oregi et al. (2018) Izaskun Oregi, Javier Del Ser, Aritz Perez, and Jose A Lozano. 2018. Adversarial sample crafting for time series classification with elastic similarity measures. In International Symposium on Intelligent and Distributed Computing. Springer, 26–39.

- Pang et al. (2021) Guansong Pang, Chunhua Shen, Longbing Cao, and Anton Van Den Hengel. 2021. Deep learning for anomaly detection: A review. ACM Computing Surveys (CSUR) 54, 2 (2021), 1–38.

- Papernot et al. (2018) Nicolas Papernot, Fartash Faghri, Nicholas Carlini, Ian Goodfellow, Reuben Feinman, Alexey Kurakin, Cihang Xie, Yash Sharma, Tom Brown, Aurko Roy, Alexander Matyasko, Vahid Behzadan, Karen Hambardzumyan, Zhishuai Zhang, Yi-Lin Juang, Zhi Li, Ryan Sheatsley, Abhibhav Garg, Jonathan Uesato, Willi Gierke, Yinpeng Dong, David Berthelot, Paul Hendricks, Jonas Rauber, and Rujun Long. 2018. Technical Report on the CleverHans v2.1.0 Adversarial Examples Library. arXiv preprint arXiv:1610.00768 (2018).

- Papernot et al. (2017) Nicolas Papernot, Patrick McDaniel, Ian Goodfellow, Somesh Jha, Z Berkay Celik, and Ananthram Swami. 2017. Practical black-box attacks against machine learning. In Proceedings of the 2017 ACM on Asia conference on computer and communications security. 506–519.

- Park et al. (2020) Seoyoung Park, Siho Han, and Simon S Woo. 2020. Forecasting Error Pattern-Based Anomaly Detection in Multivariate Time Series. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, 157–172.

- Seto et al. (2015) Skyler Seto, Wenyu Zhang, and Yichen Zhou. 2015. Multivariate time series classification using dynamic time warping template selection for human activity recognition. In 2015 IEEE Symposium Series on Computational Intelligence. IEEE, 1399–1406.

- Shen et al. (2020) Lifeng Shen, Zhuocong Li, and James Kwok. 2020. Timeseries anomaly detection using temporal hierarchical one-class network. Advances in Neural Information Processing Systems 33 (2020), 13016–13026.

- Shin et al. (2019) Youjin Shin, Sangyup Lee, Shahroz Tariq, Myeong Shin Lee, Daewon Chung, Simon Woo, et al. 2019. Integrative Tensor-based Anomaly Detection System For Satellites. (2019).

- Shin et al. (2020) Youjin Shin, Sangyup Lee, Shahroz Tariq, Myeong Shin Lee, Okchul Jung, Daewon Chung, and Simon S. Woo. 2020. ITAD: Integrative Tensor-Based Anomaly Detection System for Reducing False Positives of Satellite Systems. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management (Virtual Event, Ireland) (CIKM ’20). Association for Computing Machinery, New York, NY, USA, 2733–2740. https://doi.org/10.1145/3340531.3412716

- Siddiqui et al. (2019) Shoaib Ahmed Siddiqui, Dominique Mercier, Mohsin Munir, Andreas Dengel, and Sheraz Ahmed. 2019. Tsviz: Demystification of deep learning models for time-series analysis. IEEE Access 7 (2019), 67027–67040.

- Su et al. (2019) Ya Su, Youjian Zhao, Chenhao Niu, Rong Liu, Wei Sun, and Dan Pei. 2019. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 2828–2837.

- Szegedy et al. (2013) Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. 2013. Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199 (2013).

- Tariq et al. (2022) Shahroz Tariq, Sowon Jeon, and Simon S Woo. 2022. Am I a Real or Fake Celebrity? Evaluating Face Recognition and Verification APIs under Deepfake Impersonation Attack. In Proceedings of the ACM Web Conference 2022. 512–523.

- Tariq et al. (2018) Shahroz Tariq, Sangyup Lee, Huy Kang Kim, and Simon S Woo. 2018. Detecting in-vehicle CAN message attacks using heuristics and RNNs. In International Workshop on Information and Operational Technology Security Systems. Springer, 39–45.

- Tariq et al. (2020c) Shahroz Tariq, Sangyup Lee, Huy Kang Kim, and Simon S Woo. 2020c. CAN-ADF: The controller area network attack detection framework. Computers & Security 94 (2020), 101857.

- Tariq et al. (2019) Shahroz Tariq, Sangyup Lee, Youjin Shin, Myeong Shin Lee, Okchul Jung, Daewon Chung, and Simon S Woo. 2019. Detecting anomalies in space using multivariate convolutional LSTM with mixtures of probabilistic PCA. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 2123–2133.

- Tariq et al. (2021a) Shahroz Tariq, Sangyup Lee, and Simon Woo. 2021a. One detector to rule them all: Towards a general deepfake attack detection framework. In Proceedings of the Web Conference 2021. 3625–3637.

- Tariq et al. (2020a) Shahroz Tariq, Sangyup Lee, and Simon S Woo. 2020a. CANTransfer: transfer learning based intrusion detection on a controller area network using convolutional LSTM network. In Proceedings of the 35th Annual ACM Symposium on Applied Computing. 1048–1055.

- Tariq et al. (2020b) Shahroz Tariq, Sangyup Lee, and Simon S Woo. 2020b. A convolutional LSTM based residual network for deepfake video detection. arXiv preprint arXiv:2009.07480 (2020).

- Tariq et al. (2021b) Shahzeb Tariq, Jorge Loy-Benitez, KiJeon Nam, Gahye Lee, MinJeong Kim, DuckShin Park, and ChangKyoo Yoo. 2021b. Transfer learning driven sequential forecasting and ventilation control of PM2. 5 associated health risk levels in underground public facilities. Journal of Hazardous Materials 406 (2021), 124753.

- Tariq and Woo (2022) Shahroz Tariq and Simon S. Woo. 2022. Evaluating the Robustness of Time Series Anomaly and Intrusion Detection Methods against Adversarial Attacks. https://openreview.net/forum?id=C5u6Z9voQ1

- Tramer and Boneh (2019) Florian Tramer and Dan Boneh. 2019. Adversarial training and robustness for multiple perturbations. arXiv preprint arXiv:1904.13000 (2019).

- Tramer et al. (2020) Florian Tramer, Nicholas Carlini, Wieland Brendel, and Aleksander Madry. 2020. On Adaptive Attacks to Adversarial Example Defenses. In Advances in Neural Information Processing Systems, H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin (Eds.), Vol. 33. Curran Associates, Inc., 1633–1645. https://proceedings.neurips.cc/paper/2020/file/11f38f8ecd71867b42433548d1078e38-Paper.pdf

- Wang et al. (2018) Jinjiang Wang, Yulin Ma, Laibin Zhang, Robert X Gao, and Dazhong Wu. 2018. Deep learning for smart manufacturing: Methods and applications. Journal of manufacturing systems 48 (2018), 144–156.

- Wang et al. (2017) Zhiguang Wang, Weizhong Yan, and Tim Oates. 2017. Time series classification from scratch with deep neural networks: A strong baseline. In 2017 International joint conference on neural networks (IJCNN). IEEE, 1578–1585.

- Yuan et al. (2019) Xiaoyong Yuan, Pan He, Qile Zhu, and Xiaolin Li. 2019. Adversarial examples: Attacks and defenses for deep learning. IEEE transactions on neural networks and learning systems 30, 9 (2019), 2805–2824.

- Yun et al. (2022) Jeong-Han Yun, Jonguk Kim, Won-Seok Hwang, Young Geun Kim, Simon S Woo, and Byung-Gil Min. 2022. Residual size is not enough for anomaly detection: improving detection performance using residual similarity in multivariate time series. In Proceedings of the 37th ACM/SIGAPP Symposium on Applied Computing. 87–96.

- Zhang et al. (2019) Chuxu Zhang, Dongjin Song, Yuncong Chen, Xinyang Feng, Cristian Lumezanu, Wei Cheng, Jingchao Ni, Bo Zong, Haifeng Chen, and Nitesh V Chawla. 2019. A deep neural network for unsupervised anomaly detection and diagnosis in multivariate time series data. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33. 1409–1416.

- Zhang and Wang (2019) Haichao Zhang and Jianyu Wang. 2019. Defense against adversarial attacks using feature scattering-based adversarial training. Advances in Neural Information Processing Systems (NeurIPS) 32 (2019), 1831–1841.

- Zhao et al. (2020) Hang Zhao, Yujing Wang, Juanyong Duan, Congrui Huang, Defu Cao, Yunhai Tong, Bixiong Xu, Jing Bai, Jie Tong, and Qi Zhang. 2020. Multivariate time-series anomaly detection via graph attention network. In 2020 IEEE International Conference on Data Mining (ICDM). IEEE, 841–850.

- Zheng et al. (2017) Zibin Zheng, Yatao Yang, Xiangdong Niu, Hong-Ning Dai, and Yuren Zhou. 2017. Wide and deep convolutional neural networks for electricity-theft detection to secure smart grids. IEEE Transactions on Industrial Informatics 14, 4 (2017), 1606–1615.

- Zong et al. (2018) Bo Zong, Qi Song, Martin Renqiang Min, Wei Cheng, Cristian Lumezanu, Daeki Cho, and Haifeng Chen. 2018. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In International conference on learning representations.

Appendix A Reproducibility

Issues in Baselines. According to our research, the majority of recent anomaly detection methods do not make their source code publicly available. Additionally, many methods whose source code was made publicly available by their authors (or implemented unofficially) were outdated. As a result, we were unable to run them directly on the most recent machines. For instance, in our experiment, we used an Nvidia RTX 3090 GPU. We discovered that, due to some issues with the CUDA version, we could not run an older version of TensorFlow optimally. As a result, the code either takes an eternity to execute or does not execute at all.

Our Solution. We chose to port the baselines to the latest versions of TensorFlow and PyTorch, respectively, which were 2.5.0 for TensorFlow and 1.9.0 for PyTorch at the time of our experiments. We used the CleverHans Library’s (Papernot et al., 2018) FGSM, PGD, BIM, Carlini-Wagner L2, SL1D, and MIM attacks, which were recently ported to TensorFlow2 and PyTorch in version 4.0.0. As a result, our workflows are compatible with the latest libraries. Additionally, after cleaning the code, we will include some tutorial attacks (similar to those included in the CleverHans library for image datasets) that can be used to assess future detectors to adversarial attacks.

Guidelines for Baseline. Note that it is difficult to port or implement all of the most recent methods on our own. Therefore, we tried our best with the limited resources that we had to make the baselines compatible with the latest version of libraries. We will provide some guidelines for creating new baselines and evaluating them against adversarial attacks on our GitHub page. We will leave it up to the community to add additional methods in the future.

Links to Baselines and Datasets. We will include the updated codes for each baseline in our repository as well. We obtained the code of the baselines from the following repositories:

-

•

MSCRED (Zhang et al., 2019): https://github.com/Zhang-Zhi-Jie/Pytorch-MSCRED

-

•

MTAD-GAT (Zhao et al., 2020): https://github.com/ML4ITS/mtad-gat-pytorch

-

•

CLMPPCA (Tariq et al., 2019): https://github.com/shahroztariq/CL-MPPCA

-

•

CAN-ADF (Tariq et al., 2020c, 2018): https://github.com/shahroztariq/CAN-ADF

-

•

CANTransfer (Tariq et al., 2020a): https://github.com/shahroztariq/CANTransfer

Note that we are unable to share the KARI dataset as it is proprietary and requires security clearance to access. The link to rest of the dataset used in our evaluation are as follows:

-

•

SMAP and MSL: https://s3-us-west-2.amazonaws.com/telemanom/data.zip

- •

- •

- •

Appendix B Supplementary Results: MSCRED

Details on FGSM and PGD Attacks. In Figure 4(a), we detail MSCRED’s performance against -norm FGSM and PGD attacks. Under normal conditions, we can see that the model correctly predicted three large anomalies but missed two minor ones. As a result, an F1 score of is obtained. However, when attacked with either FGSM or PGD, the MSCRED model produces no meaningful results because it predicts everything as an anomaly. Furthermore, the patterns of anomaly score under FGSM and PGD attack are very similar to those observed during non-anomalous (or normal) periods. As a result, adjusting the threshold to account for changes in the anomaly score will not be as effective.

SL1D and FGSM Attack. In Figure 4(b), we present the results from two attacks: (i) FGSM and (ii) Sparse Descent (SL1D) attacks. As discussed previously in the main paper, optimizing and -based attacks can be challenging. We can see an excellent illustration of this with the FGSM attack, where adversarial examples from the -based FGSM attack produce nearly identical results to the No Attack data samples (with a few minor differences). However, the SL1D attack, also an -based attack, performs similar to the attack discussed previously. Although the range of anomaly scores produced by SL1D attacks is slightly less than that produced by attacks, it is still significantly higher than the threshold making the MSCRED model to predict the whole input time series as an anomaly.

FGSM and PGD Attack. The results of the -based FGSM and PGD attacks are shown in Figure 4(c). Almost identical to the -based FGSM attack, the -based FGSM attack produces adversarial samples that have no effect on the anomaly score and are thus deemed ineffective. Similar results are obtained using the -based PGD attack. As illustrated in Figure 4(c), the Anomaly scores for No Attack, FGSM , and PGD all overlap significantly.

Appendix C Supplementary Results: MTAD-GAT

Detailed view of MTAD-GAT Results on MSL Dataset. In this section, we discuss the MTAD-GAT results on the MSL dataset in greater detail. Figure 5(a)– 5(c) show No Attack, FGSM attack, and PGD attack results on the entire test data, respectively. We can see that MTAD-GAT predicts fewer anomalies under FGSM and PGD attacks than normal conditions (i.e., No Attack), resulting in a higher rate of false negatives. We have now discussed both of these scenarios in detail in this work: (i) adversarial attack to generate false positives and (ii) adversarial attack to generate false negatives. Additionally, consistent with our previous findings, PGD performs better than FGSM and generates more false negatives than FGSM.

Results on SMD Dataset for MTAD-GAT. We present additional details on the MTAD-GAT results using the Server Machine Dataset (SMD) in Figure 6(a), 6(b) and 6(c) . In the figures, the top row (in red) represents the Anomaly scores, the middle row (in brown) represents the MTAD-GAT predictions, and the bottom row (in blue) represents the ground truth. We can see that MTAD-GAT performs at a state-of-the-art level under normal conditions. However, when subjected to FGSM and PGD attacks, it generates a large number of false positives, resulting in a significant decrease in overall performance. Also, we can observe that when PGD is used, MTAD-GAT produces more false positives than when FGSM is used.

Effects of FGSM and PGD attacks on MTAD-GAT’s Features. As previously stated, MTAD-GAT is composed of two components (i.e., forecasting and reconstruction). We demonstrate in Figure 7(a)– 7(c) that both components become equally ineffective when subjected to adversarial attacks. For example, in normal circumstances (as illustrated in Figure 7(a)), the forecast and reconstruction are quite close to the (ground truth). However, when attacked by FGSM, they deviate from the ground truth, fooling the system into believing it is an anomaly. Additionally, forecast and reconstruction are more chaotic during a PGD attack. As a result, detection performance is even lower than that of a FGSM attack.

Appendix D Supplementary Results: CLMPPCA

Original vs. Perturbed Samples. We compare some samples of original and perturbed time series in this section. The ground truth (in black), the FGSM (in yellow), and the PGD (in Blue) are depicted in Figure 8. We can easily see that all three of the time series overlap, rendering them largely indistinguishable to the naked eye. Additionally, Figure 8(a)– 8(c) show an expanded version of the time series depicted in Figure 1. Each of the three time series (i.e., No Attack, FGSM, and PGD) appears identical. Here, we demonstrate that even simpler adversarial attacks such as FGSM and PGD can be highly effective on time series data. Such perturbations will go unnoticed by a human observer.

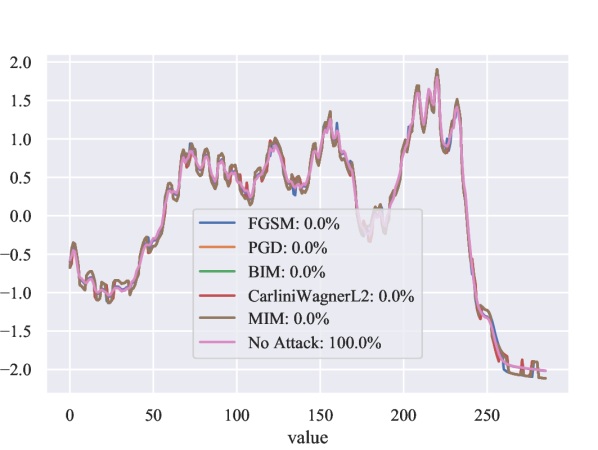

Appendix E UCR Dataset Results

In addition to all the experiment on state-of-the-art anomaly and intrusion detection system. We also cover general time series classification task where we attack a multilayer perception (MLP), a fully convolutional network and ResNet trained on different dataset from the UCR repository. We conduct an analysis of 71 datasets from the University of California, Riverside (UCR) repository. In future work, we will expand on this experiment by including additional neural networks (MobileNet, EfficientNet, DenseNet, and Inception Time) and datasets (the remainder of the UCR dataset, datasets from the UEA repository).

We find that the Carlini-Wagner attack provides the best adversarial examples, resulting in the most significant performance degradation. In Figure 9, we show some original samples and the corresponding perturbed samples generated by FGSM, PGD, BIM, Carlini-Wagner , and MIM attacks on UCR datasets. Additionally, we present the ResNet classification results in Figure 9. Finally, in Table LABEL:app_tab:MLP– LABEL:app_tab:ResNet, we present the classification results for MLP, FCN, and ResNet.

| MLP | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Datasets | FGSM | PGD | BIM |

|

MIM |

|

|||||

| 50words | 44±0.8 | 42±1.3 | 42±1.3 | 35±1 | 43±1 | 63±1.1 | |||||

| Adiac | 14±1.8 | 15±1.6 | 15±1.6 | 16±1.3 | 16±1.8 | 53±2.7 | |||||

| ArrowHead | 29±3.9 | 27±3.3 | 27±3.3 | 24±4.3 | 27±3.1 | 74±2.6 | |||||

| Beef | 32±5.1 | 26±3.9 | 26±3.9 | 29±3.9 | 27±3.4 | 78±3.9 | |||||

| BeetleFly | 74±7.7 | 74±7.7 | 74±7.7 | 70±5 | 74±7.7 | 75±13.3 | |||||

| BirdChicken | 62±5.8 | 62±5.8 | 62±5.8 | 57±10.5 | 62±5.8 | 69±2.9 | |||||

| Car | 49±1 | 35±2.9 | 35±2.9 | 52±1 | 45±1 | 83±1 | |||||

| CBF | 76±2.6 | 76±2.4 | 76±2.4 | 63±3.5 | 76±2.6 | 94±2.6 | |||||

|

24±0.3 | 24±0.5 | 24±0.5 | 24±0.7 | 24±0.4 | 65±0.4 | |||||

| Coffee | 9±2.1 | 9±2.1 | 9±2.1 | 9±4.2 | 9±2.1 | 100±0 | |||||

| Computers | 46±1.1 | 45±1.1 | 45±1.1 | 45±1.1 | 45±1.1 | 58±0.9 | |||||

| Cricket_X | 26±0.7 | 25±0.7 | 25±0.7 | 21±1 | 26±0.9 | 45±1 | |||||

| Cricket_Y | 30±0.8 | 29±1.7 | 29±1.7 | 24±0.6 | 29±1.7 | 48±1.6 | |||||

| Cricket_Z | 32±0.7 | 30±1.1 | 30±1.1 | 25±1 | 31±0.2 | 44±1.2 | |||||

|

40±1.2 | 37±1.4 | 37±1.4 | 31±4 | 38±1.5 | 95±2.4 | |||||

|

16±0.9 | 16±1 | 16±1 | 16±1 | 16±0.9 | 83±0.8 | |||||

|

29±1.4 | 28±1.8 | 28±1.8 | 25±0.9 | 29±1.7 | 77±0.9 | |||||

|

13±0.8 | 12±1.1 | 12±1.1 | 12±0.9 | 12±1.2 | 78±0.7 | |||||

| Earthquakes | 69±1.5 | 69±1.5 | 69±1.5 | 52±4.2 | 69±1.5 | 73±1.1 | |||||

| ECG200 | 60±1.8 | 60±2.1 | 60±2.1 | 29±5.8 | 60±2.1 | 84±0.6 | |||||

| ECG5000 | 65±0.2 | 64±0.3 | 64±0.3 | 61±0.3 | 64±0.4 | 93±0.2 | |||||

| ECGFiveDays | 48±2.3 | 46±2.1 | 46±2.1 | 35±4.8 | 47±2.1 | 95±3.3 | |||||

| ElectricDevices | 22±0.4 | 21±0.5 | 21±0.5 | 21±0.6 | 21±0.6 | 55±0.8 | |||||

| FaceAll | 57±0.3 | 56±0.4 | 56±0.4 | 39±0.9 | 56±0.2 | 74±0.6 | |||||

| FaceFour | 79±2.4 | 77±2 | 77±2 | 76±1.8 | 79±1.4 | 88±0.7 | |||||

| FacesUCR | 67±1.7 | 63±1.6 | 63±1.6 | 55±1.6 | 65±1.8 | 83±1.2 | |||||

| FISH | 16±2.1 | 8±1.2 | 8±1.2 | 14±1.9 | 12±1.2 | 85±0.4 | |||||

| Gun_Point | 48±6.1 | 47±6.2 | 47±6.2 | 34±5.4 | 47±6.2 | 92±1.4 | |||||

| Ham | 34±2.4 | 34±2.6 | 34±2.6 | 48±3.5 | 34±2.6 | 70±2 | |||||

| Haptics | 21±0.9 | 21±0.8 | 21±0.8 | 21±1.2 | 20±0.7 | 41±0.7 | |||||

| Herring | 50±1.9 | 50±1.9 | 50±1.9 | 50±1.9 | 50±1.9 | 51±1.9 | |||||

| InlineSkate | 21±1.1 | 19±0.8 | 19±0.8 | 20±1.4 | 20±1.4 | 34±0.7 | |||||

|

37±0.7 | 30±0.3 | 30±0.3 | 42±0.3 | 34±0.4 | 62±0.7 | |||||

|

82±0.8 | 82±0.9 | 82±0.9 | 11±1.4 | 82±0.9 | 96±0.2 | |||||

|

33±2.2 | 32±1.3 | 32±1.3 | 34±0.6 | 33±2.1 | 51±0.5 | |||||

| Lighting2 | 70±2.6 | 70±2.6 | 70±2.6 | 58±3.8 | 70±2.6 | 65±3.5 | |||||

| Lighting7 | 53±4.2 | 53±3.7 | 53±3.7 | 35±3.7 | 53±3.7 | 64±2.4 | |||||

| Meat | 26±1 | 26±1 | 26±1 | 25±1.7 | 26±1 | 74±1 | |||||

| MedicalImages | 39±1.9 | 36±2.2 | 36±2.2 | 26±0.5 | 37±2.2 | 67±0.5 | |||||

|

32±10.7 | 26±4.8 | 26±4.8 | 20±0.8 | 27±5.7 | 73±1.5 | |||||

|

46±1.5 | 46±1.6 | 46±1.6 | 45±1.5 | 46±1.6 | 56±1.5 | |||||

|

18±2.9 | 18±2.8 | 18±2.8 | 18±1.7 | 18±2.9 | 56±2.4 | |||||

| MoteStrain | 79±0.7 | 79±0.7 | 79±0.7 | 53±2.3 | 79±0.7 | 84±1.1 | |||||

| OliveOil | 28±2 | 28±2 | 28±2 | 28±2 | 28±2 | 59±2 | |||||

| OSULeaf | 29±0.7 | 29±1.1 | 29±1.1 | 29±0.9 | 30±0.7 | 45±0.3 | |||||

|

33±3.2 | 33±2.6 | 33±2.6 | 33±2.3 | 33±2.7 | 68±2.4 | |||||

| Plane | 89±2 | 87±1.1 | 87±1.1 | 85±4.3 | 88±1.1 | 98±1.1 | |||||

|

18±2 | 18±2.3 | 18±2.3 | 18±1.8 | 18±2.3 | 81±1.9 | |||||

|

36±1.4 | 34±1.1 | 34±1.1 | 33±1.6 | 34±0.9 | 68±1.6 | |||||

|

41±3.9 | 42±4 | 42±4 | 42±4 | 42±3.9 | 53±4.1 | |||||

|

36±1.8 | 36±1.6 | 36±1.6 | 36±1.3 | 36±1.9 | 43±1.2 | |||||

| ScreenType | 39±1.4 | 38±1.8 | 38±1.8 | 38±1 | 39±1.6 | 36±0.3 | |||||

| ShapeletSim | 50±1.7 | 50±1.4 | 50±1.4 | 49±1.7 | 50±1.4 | 48±0.9 | |||||

| ShapesAll | 49±1.6 | 42±1.1 | 42±1.1 | 43±1.3 | 46±1.8 | 70±0.2 | |||||

|

33±1.4 | 34±1 | 34±1 | 36±1.6 | 34±1.1 | 49±2.2 | |||||

|

68±2.6 | 68±2.6 | 68±2.6 | 62±7.3 | 68±2.6 | 68±4.6 | |||||

|

81±0.8 | 81±0.8 | 81±0.8 | 71±0.6 | 81±0.8 | 83±0.8 | |||||

| Strawberry | 7±0.3 | 6±0.3 | 6±0.3 | 9±0.7 | 7±0.2 | 96±0.3 | |||||

| SwedishLeaf | 32±1.2 | 26±2.1 | 26±2.1 | 25±0.8 | 29±1.4 | 82±0.3 | |||||

| Symbols | 76±1.5 | 74±1.2 | 74±1.2 | 76±1.4 | 75±1 | 89±0.2 | |||||

| synthetic_control | 80±1.6 | 80±1.7 | 80±1.7 | 37±3.6 | 80±1.6 | 95±1 | |||||

| ToeSegmentation1 | 51±1.5 | 51±1.5 | 51±1.5 | 50±1.2 | 51±1.5 | 57±0.7 | |||||

| ToeSegmentation2 | 63±1.8 | 63±1.8 | 63±1.8 | 55±5.5 | 63±1.8 | 67±3 | |||||

| Trace | 29±2.7 | 29±2.4 | 29±2.4 | 29±2.4 | 29±2.9 | 89±1.8 | |||||

| TwoLeadECG | 45±2.2 | 44±2.3 | 44±2.3 | 37±1.8 | 45±2.2 | 77±0.7 | |||||

| Two_Patterns | 32±1.8 | 31±1.6 | 31±1.6 | 12±0.2 | 31±1.7 | 96±0.4 | |||||

| wafer | 39±1.5 | 39±1.5 | 39±1.5 | 21±1.5 | 39±1.5 | 96±0.9 | |||||

| Wine | 45±0 | 45±0 | 45±0 | 45±0 | 45±0 | 56±0 | |||||

| WordsSynonyms | 40±1.2 | 38±0.5 | 38±0.5 | 32±1 | 39±1.1 | 53±0.4 | |||||

| Worms | 28±0.4 | 27±0.9 | 27±0.9 | 24±1.5 | 28±0.6 | 36±1.2 | |||||

| WormsTwoClass | 49±1.2 | 49±1 | 49±1 | 47±1.4 | 49±1 | 60±1 | |||||

| FCN | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Datasets | FGSM | PGD | BIM |

|

MIM |

|

|||||

| 50words | 3±0.5 | 6±1.4 | 6±1.4 | 18±3.6 | 4±1.3 | 29±16 | |||||

| Adiac | 5±1.8 | 7±3.8 | 7±3.8 | 11±2.1 | 7±3.5 | 24±17.7 | |||||

| ArrowHead | 40±0 | 14±6.2 | 14±6.2 | 14±6.5 | 15±6 | 80±6.6 | |||||

| Beef | 26±10.2 | 23±9.7 | 23±9.7 | 23±12.7 | 22±7.7 | 52±9.7 | |||||

| BeetleFly | 50±0 | 20±5 | 20±5 | 20±5 | 20±5 | 80±5 | |||||

| BirdChicken | 50±0 | 15±10 | 15±10 | 7±2.9 | 22±2.9 | 94±2.9 | |||||

| Car | 22±0 | 40±27.5 | 40±27.5 | 40±26.2 | 40±25.1 | 47±23.4 | |||||

| CBF | 83±1.2 | 79±1.6 | 79±1.6 | 1±0.1 | 81±1.3 | 100±0.2 | |||||

|

39±19.5 | 39±19.8 | 39±19.8 | 38±19.1 | 39±19.8 | 54±18.5 | |||||

| Coffee | 0±0 | 0±0 | 0±0 | 0±0 | 0±0 | 100±0 | |||||

| Computers | 44±10 | 19±5.7 | 19±5.7 | 16±6.1 | 28±11 | 85±6.1 | |||||

| Cricket_X | 16±5.7 | 11±1.8 | 11±1.8 | 13±2.3 | 11±3 | 72±3.7 | |||||

| Cricket_Y | 19±1.9 | 16±3.1 | 16±3.1 | 16±2.9 | 16±3.3 | 69±7.5 | |||||

| Cricket_Z | 13±1.1 | 11±3.2 | 11±3.2 | 14±3.5 | 11±2.1 | 72±5.1 | |||||

|

16±4.9 | 6±0.9 | 6±0.9 | 7±0.5 | 7±0.7 | 93±0.7 | |||||

|

19±4.7 | 19±4.4 | 19±4.4 | 19±4.4 | 19±4.4 | 80±4.3 | |||||

|

38±9.6 | 32±6.1 | 32±6.1 | 32±6.2 | 33±6.6 | 69±6.1 | |||||

|

15±1.1 | 17±1.2 | 17±1.2 | 17±1.1 | 17±1.1 | 73±2.1 | |||||

| Earthquakes | 36±4.1 | 34±3.2 | 34±3.2 | 25±2.5 | 35±3.3 | 76±2.5 | |||||

| ECG200 | 49±6.5 | 16±3.1 | 16±3.1 | 11±1.8 | 24±5 | 89±1.8 | |||||

| ECG5000 | 69±6.9 | 33±24.7 | 33±24.7 | 4±0.4 | 51±12.5 | 94±0.4 | |||||

| ECGFiveDays | 38±9.5 | 2±0.2 | 2±0.2 | 2±0.3 | 2±0.3 | 99±0.3 | |||||

| ElectricDevices | 43±1.3 | 32±2.7 | 32±2.7 | 14±3.3 | 35±2.9 | 70±3.7 | |||||

| FaceAll | 66±0.7 | 41±0.4 | 41±0.4 | 8±2.7 | 57±0.4 | 90±2.8 | |||||

| FaceFour | 6±2.3 | 3±1.8 | 3±1.8 | 5±1.8 | 3±1.2 | 94±0.7 | |||||

| FacesUCR | 68±2.4 | 40±7.9 | 40±7.9 | 4±0.7 | 56±4.4 | 93±0.8 | |||||

| FISH | 13±0.4 | 19±11.5 | 19±11.5 | 22±11.9 | 18±11 | 60±2.9 | |||||

| Gun_Point | 51±2.7 | 2±0.7 | 2±0.7 | 1±0.4 | 4±2.4 | 100±0.4 | |||||

| Ham | 37±3.4 | 37±3.5 | 37±3.5 | 37±3.5 | 37±3.5 | 64±3.5 | |||||

| Haptics | 23±3.1 | 18±4.8 | 18±4.8 | 19±5 | 18±4.8 | 29±3.4 | |||||

| Herring | 60±0 | 46±8.2 | 46±8.2 | 49±11.9 | 54±5.5 | 60±0 | |||||

| InlineSkate | 16±0.5 | 13±5.2 | 13±5.2 | 16±6.7 | 13±4.5 | 22±7.6 | |||||

|

13±1.8 | 11±1.3 | 11±1.3 | 12±1.5 | 11±1.4 | 23±4.4 | |||||

|

84±1 | 81±1.7 | 81±1.7 | 5±0.5 | 83±1.5 | 96±0.3 | |||||

|

50±4.9 | 32±23.7 | 32±23.7 | 21±17.5 | 45±13.9 | 74±16 | |||||

| Lighting2 | 40±1.7 | 29±1 | 29±1 | 29±1 | 30±1.7 | 72±1 | |||||

| Lighting7 | 32±7.6 | 19±2.9 | 19±2.9 | 17±3.5 | 23±4.2 | 74±1.6 | |||||

| Meat | 34±0 | 45±13.7 | 45±13.7 | 52±24.9 | 47±11.7 | 34±0 | |||||

| MedicalImages | 23±6.8 | 14±2 | 14±2 | 14±3.1 | 16±1.2 | 77±2.8 | |||||

|

18±6.6 | 18±5.9 | 18±5.9 | 17±5.7 | 18±6.1 | 70±6.7 | |||||

|

44±22.5 | 43±21.6 | 43±21.6 | 45±24.2 | 43±21.6 | 58±21.4 | |||||

| MiddlePhalanxTW | 20±10 | 23±11 | 23±11 | 21±9 | 23±10.7 | 48±12.8 | |||||

| MoteStrain | 80±1 | 78±1.2 | 78±1.2 | 10±0.5 | 79±1.5 | 91±0.5 | |||||

| OliveOil | 18±19.3 | 16±21.2 | 16±21.2 | 18±19.3 | 18±19.3 | 56±15.1 | |||||

| OSULeaf | 14±0 | 12±4 | 12±4 | 12±4.4 | 11±4.1 | 75±16.7 | |||||

|

36±2.5 | 36±2.5 | 36±2.5 | 36±2.6 | 36±2.5 | 65±2.6 | |||||

| Plane | 40±5.8 | 11±3.9 | 11±3.9 | 0±0 | 25±6.5 | 100±0 | |||||

|

32±23.7 | 22±8.8 | 22±8.8 | 25±10.7 | 22±8.8 | 64±18.9 | |||||

|

32±26.8 | 31±26.4 | 31±26.4 | 31±26.2 | 31±26.8 | 70±26.2 | |||||

|

18±8.2 | 14±3.1 | 14±3.1 | 15±4.7 | 14±2.9 | 75±2.9 | |||||

|

40±3.5 | 36±0.9 | 36±0.9 | 35±1.7 | 36±1 | 46±1.7 | |||||

| ScreenType | 33±3.3 | 28±3.6 | 28±3.6 | 27±3.6 | 29±4.3 | 62±5.2 | |||||

| ShapeletSim | 8±3.7 | 8±3.1 | 8±3.1 | 8±2.8 | 8±3.1 | 93±2.8 | |||||

| ShapesAll | 4±1.4 | 3±2.9 | 3±2.9 | 7±0.6 | 3±1.9 | 19±18 | |||||

|

53±16.7 | 37±18.1 | 37±18.1 | 39±22.6 | 41±11.1 | 43±12.3 | |||||

|

84±2.2 | 82±2.7 | 82±2.7 | 5±0.3 | 83±2.7 | 97±0.6 | |||||

|

86±1.5 | 84±2.1 | 84±2.1 | 3±0.5 | 85±1.7 | 98±0.5 | |||||

| Strawberry | 44±20.8 | 31±8.8 | 31±8.8 | 31±8.9 | 31±9.1 | 70±8.8 | |||||

| SwedishLeaf | 28±1.7 | 10±2.6 | 10±2.6 | 6±3.6 | 13±3.3 | 93±3.6 | |||||

| Symbols | 36±3.2 | 6±1.6 | 6±1.6 | 5±0.6 | 15±1.9 | 94±1.3 | |||||

| synthetic_control | 95±1 | 95±1.3 | 95±1.3 | 3±0.9 | 95±1.2 | 98±0.7 | |||||

| ToeSegmentation1 | 41±6.2 | 11±0.8 | 11±0.8 | 3±0.7 | 18±3 | 98±0.7 | |||||

| ToeSegmentation2 | 43±1.4 | 26±2.3 | 26±2.3 | 14±2.8 | 36±0.5 | 87±2.8 | |||||

| Trace | 52±18.6 | 18±8.9 | 18±8.9 | 1±0.6 | 43±2.9 | 100±0.6 | |||||

| TwoLeadECG | 7±3.1 | 2±0.4 | 2±0.4 | 1±0.1 | 3±0.7 | 100±0.1 | |||||

| Two_Patterns | 34±7.3 | 15±0.7 | 15±0.7 | 15±0.7 | 19±2.3 | 86±0.7 | |||||

| wafer | 8±3.2 | 3±0.9 | 3±0.9 | 1±0.2 | 3±1.3 | 100±0.2 | |||||

| Wine | 50±0 | 50±0 | 50±0 | 50±0 | 50±0 | 50±0 | |||||

| WordsSynonyms | 5±2.2 | 9±3.3 | 9±3.3 | 12±1.5 | 6±1.9 | 30±10.2 | |||||

| Worms | 17±1.7 | 21±3.6 | 21±3.6 | 21±5.3 | 21±3.4 | 48±7.3 | |||||

| WormsTwoClass | 48±5 | 39±2.3 | 39±2.3 | 39±2.5 | 40±4.2 | 62±2.3 | |||||

| ResNet | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Datasets | FGSM | PGD | BIM |

|

MIM |

|

|||||

| 50words | 8±2.3 | 10±1 | 10±1 | 13±1.5 | 9±1.5 | 67±0.7 | |||||

| Adiac | 5±0.2 | 10±1.2 | 10±1.2 | 10±0.2 | 10±0.4 | 82±0.7 | |||||

| ArrowHead | 34±11.5 | 13±0.9 | 13±0.9 | 13±1.5 | 15±1 | 79±2.3 | |||||

| Beef | 24±8.9 | 19±5.1 | 19±5.1 | 18±3.9 | 22±3.9 | 74±3.4 | |||||