Toward the nonequilibrium thermodynamic analog of complexity and the Jarzynski identity

Abstract

The Jarzynski identity can describe small-scale nonequilibrium systems through stochastic thermodynamics by considering fluctuating trajectories in a phase space. Complexity geometry frames the discussion of quantum computational complexity in the language of Riemannian geometry, building a bridge between optimal quantum circuits and classical geodesics in the space of unitary operators. It thereby allows the methods of classical physics to be applied to purely quantum problems. By combining the two frameworks, i.e., the Jarzynski identity and complexity geometry, we derived a complexity analog of the Jarzynski identity, considering a set of geodesics in the space of unitary operators instead of trajectories in a phase space. The resulting complexity version of the Jarzynski identity strengthens the evidence for a well-defined resource theory of uncomplexity and enables an extended discussion of the second law of complexity. Furthermore, in analogy with the thermodynamic fluctuation-dissipation theorem, we proposed a fluctuation-dissipation theorem for complexity. Although this study does not focus on holographic fluctuations, we found that the results are surprisingly well suited to capturing information about them. The results obtained using nonequilibrium methods may contribute to understanding the nature of complexity and the features of holographic fluctuations.

1 Introduction

After Wheeler proposed “It from bit” 1 , an increasing number of concepts from information theory have been introduced into every corner of physics, where they play important roles. One fascinating and novel example is quantum computational complexity (hereafter simply “complexity”), defined as the minimal number of primitive quantum gates required to generate a given unitary operator $U$:

$$\mathcal{C}(U) = \min\left\{ N \;:\; U = g_N g_{N-1}\cdots g_1,\ g_i \in \mathcal{G} \right\}, \tag{1}$$

where the fixed gate set $\mathcal{G}$ comprises the primitive gates used to generate $U$. Complexity was introduced as a theoretical tool for quantifying the difficulty of implementing a desired quantum computational task. It measures the difficulty of constructing a given unitary operator (unitary complexity) or of approximating a target quantum state starting from a reference state (state complexity).
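Eq. (1) can be made concrete with a toy search. The sketch below is a minimal illustration, not the method of this paper: it computes the gate complexity of a single-qubit unitary by breadth-first search over products of primitive gates. The gate set $\{H, T\}$, the identification of unitaries up to a global phase, and the depth cutoff are assumptions chosen only for illustration. The search space grows exponentially with depth and qubit number, which is one motivation for the geometric reformulation reviewed in Section 2.

```python
import numpy as np

# Hypothetical primitive gate set {H, T}; the paper does not fix a gate set.
H_GATE = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
T_GATE = np.diag([1.0, np.exp(1j * np.pi / 4)])
GATE_SET = [H_GATE, T_GATE]


def same_up_to_phase(a, b, tol=1e-9):
    """For unitaries, |tr(a^dagger b)| equals the dimension iff b = exp(i phi) a."""
    return abs(abs(np.trace(a.conj().T @ b)) - a.shape[0]) < tol


def phase_free_key(u):
    """Hashable key that ignores the global phase of u, used to skip revisits."""
    flat = u.ravel()
    pivot = flat[np.argmax(np.abs(flat) > 1e-9)]
    v = flat * (abs(pivot) / pivot)  # make the first nonzero entry real and positive
    return tuple(np.round(np.concatenate([v.real, v.imag]), 8))


def gate_complexity(target, max_depth=10):
    """Breadth-first search for the fewest gates whose product equals target, as in Eq. (1)."""
    current = np.eye(target.shape[0], dtype=complex)
    if same_up_to_phase(current, target):
        return 0
    frontier, seen = [current], {phase_free_key(current)}
    for depth in range(1, max_depth + 1):
        next_frontier = []
        for u in frontier:
            for g in GATE_SET:
                w = g @ u  # append one more primitive gate
                if same_up_to_phase(w, target):
                    return depth
                key = phase_free_key(w)
                if key not in seen:
                    seen.add(key)
                    next_frontier.append(w)
        frontier = next_frontier
    return None  # target not reached within max_depth


print(gate_complexity(np.diag([1, 1j])))  # S = T T, prints 2
print(gate_complexity(np.diag([1, -1])))  # Z = T T T T, prints 4
```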

In the context of the AdS/CFT correspondence 12 ; 13 ; 14 , several information-theoretic quantities have natural duals in terms of geometric objects, which are considered to encode features of the holographic spacetime (e.g., entanglement entropy is dual to the area of extremal surfaces) 15 . Similar to entanglement entropy, complexity was recently conjectured to have a holographic dual. The two main holographic proposals for complexity are “Complexity=Volume” 16 ; 17 ; 18 and “Complexity=Action” 19 ; 20 . Chemissany and Osborne 21 developed a procedure to directly associate a pseudo-Riemannian manifold, namely the dual AdS space (bulk spacetime), arising from a natural causal set induced by local quantum circuits. Additionally, they studied fluctuations of the AdS space caused by the dynamics of the dual boundary quantum system via the principle of minimal complexity (explained in the next section), and argued that Brownian motion in the space of unitary operators might simulate such fluctuations. Furthermore, they defined a partition function through a path integral in the space of unitary operators to capture information on holographic fluctuations.

To obtain a more quantitative understanding of complexity, a geometric treatment was proposed in 2 and developed further in 3 ; 4 ; 5 . Building on these works, a framework called “complexity geometry” has gradually been established 6 ; 7 . Its main idea can be summarized as follows. A Riemannian (or Finsler) metric is introduced in the space of unitary operators, whose elements act on a given number of qubits. (Throughout this article, the group manifold, the space of unitary operators, and the configuration space all refer to the special unitary group endowed with a metric structure.) The distance and the action functional obtained from the metric then serve as two measures of complexity. Therefore, purely quantum complexity-related problems (quantum scenario) are turned into geometric problems that can be solved with classical mechanics (classical scenario) 8 . In particular, geodesics in the space of unitary operators can be obtained from the geodesic equations. Recently, complexity and its geometry have been used as efficient tools to study a wide range of topics, such as the second law of complexity 8 , black hole thermodynamics 9 , the accelerated expansion of the universe 10 , and quantum gravity 11 .

In the past few decades, the study of nonequilibrium systems in high-energy physics has become increasingly popular, and several remarkable theoretical frameworks have been developed to capture their features. One of the most striking is the Jarzynski identity 22 ; 23 , which connects equilibrium quantities with nonequilibrium processes. In particular, it relates the equilibrium free energy difference, $\Delta F$, to the work $W$ done on the system during a nonequilibrium process:

$$\left\langle e^{-\beta W}\right\rangle = e^{-\beta\Delta F}, \tag{2}$$

where $\beta$ denotes the inverse temperature and the bracket $\langle\cdot\rangle$ represents the ensemble average over all possible values of $W$. There are several proofs of the Jarzynski identity 24 ; 25 ; 26 ; 27 ; 28 . Hummer and Szabo proposed an elegant path integral proof 27 based on the Feynman-Kac formula 29 ; 30 ; 31 ; 32 , which has played a pivotal role in stochastic thermodynamics. As a novel tool, the Jarzynski identity has also been applied in settings as diverse as the renormalization group 33 , out-of-time-order correlators (OTOCs) 34 , and the Rényi entropy 35 in holography and quantum information. It is therefore natural to ask whether the Jarzynski identity can be generalized to another important information-theoretic quantity, complexity, which may provide deeper insights into high-energy physics.
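As a numerical reading of Eq. (2), the sketch below estimates $\Delta F$ from sampled work values through $\Delta F = -\beta^{-1}\ln\langle e^{-\beta W}\rangle$, using a log-sum-exp form for numerical stability. It assumes a Gaussian work distribution with arbitrary illustrative parameters, for which the exact result $\Delta F = \mu - \beta\sigma^2/2$ is known. It also exhibits $\langle W\rangle \geq \Delta F$, the second-law inequality that follows from Eq. (2) via Jensen's inequality.

```python
import numpy as np


def jarzynski_free_energy(work, beta):
    """Estimate Delta F = -(1/beta) ln < exp(-beta W) > from work samples.

    The log-sum-exp form avoids underflow when beta * W is large.
    """
    w = -beta * np.asarray(work, dtype=float)
    m = w.max()
    log_mean = m + np.log(np.mean(np.exp(w - m)))
    return -log_mean / beta


rng = np.random.default_rng(0)
beta, mu, sigma = 1.0, 2.0, 0.8               # illustrative values
work = rng.normal(mu, sigma, size=200_000)    # assumed Gaussian work distribution

dF_est = jarzynski_free_energy(work, beta)
dF_exact = mu - beta * sigma**2 / 2           # exact for Gaussian work
print(dF_est, dF_exact, work.mean())          # <W> >= Delta F (second law)
```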

The Jarzynski identity not only implies the second law of thermodynamics but also yields several fluctuation relations, including the fluctuation-dissipation theorem, which is closely connected with entropy. Based on this, one of the core ideas explored in this study was the derivation of a Jarzynski identity for complexity using the path integral approach 27 . In addition, we generalized the discussion in 8 to systems with time-dependent Hamiltonians and derived a fluctuation-dissipation relation for complexity. Because 21 found that the fluctuations of a boundary quantum system are directly associated with those of the bulk spacetime, we suggest that the proposed fluctuation-dissipation theorem is a feasible tool for quantitatively exploring holographic fluctuations. (We do not delve into this issue or invoke any gravitational model in this paper; we mainly focus on the relationship between complexity itself and the Jarzynski identity.)

In this study, we derived a complexity version of the Jarzynski identity, which is our main result. We also argue that the obtained identity may provide insights into several topics concerning complexity, in particular uncomplexity as a computational resource, a generalization of the second law of complexity, a complexity fluctuation-dissipation theorem, and holographic fluctuations. The remainder of this paper is organized as follows. In Section 2, we briefly review the central concepts of complexity geometry and the complexity version of the least action principle, namely the principle of minimal complexity, and we review a derivation of the Jarzynski identity based on the path integral method. In Section 3, we introduce the path integral in the space of unitary operators and apply it to obtain the complexity version of the Jarzynski identity; the Hamilton-Jacobi equation then helps us rewrite the identity in a more intuitive form. In Section 4, we discuss four issues concerning complexity based on the obtained identity: the resource theory of uncomplexity, a generalization of the second law of complexity to the stochastic auxiliary system $\mathcal{A}$, a fluctuation-dissipation theorem in the context of quantum complexity, and holographic fluctuations. In Section 5, we perform a numerical simulation of the transverse-field Ising model to support our discussion of the second law of complexity, in which the complexity version of the Jarzynski identity plays a vital role. Finally, in Section 6, we summarize our results and present our conclusions and outlook. The structure of this paper follows the flowchart presented in Fig. 1.

2 Preliminaries

In this section, we first briefly review complexity geometry, focusing on two key concepts: the $\mathcal{Q}$-$\mathcal{A}$ correspondence and the principle of minimal complexity. Second, we review the derivation of the Jarzynski identity presented in 27 , which relies on the Feynman-Kac formula and the path integral method. Finally, we generalize the derivation for use in our later discussion.

2.1 Complexity geometry

Complexity geometry is a powerful tool for quantifying the difficulty of generating a specific unitary operator $U$ from the identity $\mathbb{1}$, where $D$ denotes the dimension of the Hilbert space of the quantum system (i.e., $D = 2^n$) comprising a fixed number $n$ of qubits.

Instead of counting gates, we minimize a smooth function (the cost) on a smooth manifold (the space of unitary operators) 12 . Our task then changes from determining the optimal quantum circuit built from quantum gates to generating the target unitary operator

$$U(t) = \mathcal{T}\exp\left(-i\int_0^{t} H(t')\,dt'\right) \tag{3}$$

from a given Hamiltonian $H(t)$ with minimal cost (the central idea is illustrated in Fig. 2) in a time interval $[0, t_f]$, where $\mathcal{T}$ denotes the time-ordering operator and $U(t)$ denotes the trajectory in the space of unitary operators. The cost of a trajectory with fixed boundary conditions $U(0) = \mathbb{1}$ and $U(t_f) = U$ is defined as

$$\mathcal{D}[U(t)] = \int_0^{t_f} F_A\big(U(t), \dot U(t)\big)\,dt, \tag{4}$$

where $F_A$ is a local functional on the space of unitary operators (the subscript “A” indicates the auxiliary system described in Section 2.1.2) with the following four properties:

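(1) Continuity: $F_A$ is a continuous function of $U$ and $\dot U$.

(2) Non-negativity: $F_A(U, \dot U) \geq 0$, with equality if and only if $\dot U = 0$.

(3) Positive homogeneity: $F_A(U, c\,\dot U) = c\,F_A(U, \dot U)$ for all $c > 0$.

(4) Triangle inequality: for all tangent vectors $\dot U_1$ and $\dot U_2$ at $U$, $F_A(U, \dot U_1 + \dot U_2) \leq F_A(U, \dot U_1) + F_A(U, \dot U_2)$.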

If we regard $F_A$ in Eq. (4) as a Lagrangian, $\mathcal{D}$ becomes the action functional of the trajectory connecting the endpoints $\mathbb{1}$ and $U$ in the space of unitary operators. Complexity is then defined as the minimal value of $\mathcal{D}$,

$$\mathcal{C}(U) = \inf_{U(t)} \mathcal{D}[U(t)], \tag{5}$$

where the infimum is taken over all possible trajectories. The four properties of $F_A$ define a smooth manifold $\mathcal{M}$, more precisely a Finsler manifold (a generalization of a Riemannian manifold; see Chapter 8 of 36 for Finsler geometry), equipped with a local metric called the complexity metric,

$$F_A(U, \cdot\,): T_U\mathcal{M} \to [0, \infty), \tag{6}$$

where $T_U\mathcal{M}$ is the tangent space at $U$. In this study, $\mathcal{M}$ is simply the group manifold, which we denote by $SU(2^n)$ (the space of unitary operators) throughout. More details can be found in 2 ; 3 ; 4 ; 5 ; 6 ; 7 .

2.1.1 Real quantum system “$\mathcal{Q}$”

The quantum system $\mathcal{Q}$ we consider comprises $n$ qubits whose interactions are $k$-local, meaning that the Hamiltonian contains interaction terms acting on no more than $k$ qubits. For example, a 2-local Hamiltonian reads

$$H = \sum_{i<j} h_{ij}, \tag{7}$$

where $h_{ij}$ is a Hermitian operator acting on two arbitrary qubits $i$ and $j$. The general expression of a time-dependent $k$-local Hamiltonian is

$$H(t) = \sum_{i_1<i_2<\cdots<i_k} h_{i_1 i_2\cdots i_k}(t). \tag{8}$$

Schematically, it can be written as

$$H(t) = J^I(t)\,\sigma_I, \tag{9}$$

where $\sigma_I$ and $J^I(t)$ are the generalized Pauli matrices and the coupling functions, respectively. The index $I$ runs over all Pauli matrices with nonzero couplings; there are at most $4^n - 1$ of them, which is also the dimension of the group $SU(2^n)$. We follow the Einstein summation convention here and hereafter. This time-dependent Hamiltonian generates the unitary operator in Eq. (3) and determines the dynamics of the quantum system.

What are the dynamics of the quantum system $\mathcal{Q}$? Since $\mathcal{Q}$ is a standard quantum system, its dynamics usually refers to the evolution of states in its $D$-dimensional Hilbert space (the space of states). We choose a reference state $|\psi_R\rangle$ and a target state $|\psi_T\rangle$ as the initial and final states, respectively, and define a moving point $|\psi(t)\rangle$. In the Schrödinger picture, the evolution from the initial state at $t = 0$ to the final state at $t = t_f$ is achieved by applying a particular unitary operator $U(t)$,

$$|\psi(t)\rangle = U(t)\,|\psi_R\rangle, \tag{10}$$

and the time evolution of $U(t)$ itself (with constraints $U(0) = \mathbb{1}$ and $U(t_f) = U$) satisfies the Schrödinger equation

$$i\,\frac{dU(t)}{dt} = H(t)\,U(t), \tag{11}$$

where $H(t)$ is the traceless Hermitian operator of Eq. (9).

In this study, we mainly focus on the time-dependent Hamiltonian of Eq. (9), which generalizes the case presented in 8 . The only difference is that the couplings $J^I$ vary with time here but are constant in 8 . Two typical examples with time-independent Hamiltonians are the SYK model 37 ; 38 ; 39 ; 40 and the thermofield-double (TFD) state 41 ; 42 .
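Eqs. (3) and (11) can be illustrated by approximating the time-ordered exponential with a product of short-time propagators, with later times multiplied on the left. The sketch below assumes a hypothetical two-qubit, 2-local Hamiltonian with one time-dependent coupling and $\hbar = 1$; each short-time exponential is computed from an eigendecomposition, so every factor is exactly unitary. Because this $H(t)$ is traceless, the product also has unit determinant, i.e., it stays in $SU(4)$.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2, dtype=complex)


def hamiltonian(t):
    """Hypothetical traceless 2-local H(t) = cos(t) Z1 Z2 + 0.5 (X1 + X2)."""
    return np.cos(t) * np.kron(Z, Z) + 0.5 * (np.kron(X, I2) + np.kron(I2, X))


def expm_hermitian(h, dt):
    """exp(-i h dt) for a Hermitian matrix h, via its eigendecomposition."""
    e, v = np.linalg.eigh(h)
    return (v * np.exp(-1j * e * dt)) @ v.conj().T


def time_ordered_unitary(h_of_t, t_f, steps=2000):
    """Approximate T exp(-i int H dt) by prod_k exp(-i H(t_k) dt), later times on the left."""
    dt = t_f / steps
    u = np.eye(h_of_t(0.0).shape[0], dtype=complex)
    for k in range(steps):
        t_mid = (k + 0.5) * dt  # midpoint of the k-th time slice
        u = expm_hermitian(h_of_t(t_mid), dt) @ u
    return u


U = time_ordered_unitary(hamiltonian, t_f=1.0)
print(np.allclose(U.conj().T @ U, np.eye(4)), np.linalg.det(U))
```

For a time-independent Hamiltonian all factors commute and the product reduces to the ordinary exponential $e^{-iHt_f}$.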

2.1.2 Auxiliary classical system “$\mathcal{A}$”

To apply complexity geometry, we define, along the lines of 8 , a classical auxiliary system $\mathcal{A}$ that describes the evolution of the unitary operators of the quantum system $\mathcal{Q}$. Defining such a system requires answering the following questions:

(1) What does system $\mathcal{A}$ look like?

(2) How do we define the distance (metric) in the configuration space of $\mathcal{A}$?

(3) What is the equation of motion?

We answer these questions one by one. The classical auxiliary system $\mathcal{A}$ describes the evolution of the unitary operators in $\mathcal{Q}$; thus, its configuration space is the space of unitary operators, and each point in it corresponds to an element of the special unitary group $SU(2^n)$. The number of degrees of freedom of system $\mathcal{A}$ equals the dimension of $SU(2^n)$. Moreover, the evolution of a unitary operator can be regarded as the motion of a fictitious nonrelativistic free particle with unit mass in the configuration space. The particle velocity is a tangent vector along the trajectory in $SU(2^n)$, whose dimension equals the dimension of $SU(2^n)$ (the number of degrees of freedom of system $\mathcal{A}$). For instance, for a system $\mathcal{Q}$ comprising $n$ qubits, the configuration space is $SU(2^n)$, the dual auxiliary system has $4^n - 1$ degrees of freedom, and its tangent vectors are described by $(4^n - 1)$-dimensional variables.

Because any traceless Hermitian operator can be expanded in generalized Pauli matrices, as in Eq. (9), we can use the Pauli matrices as a basis of the tangent space of $SU(2^n)$. The generalized Pauli matrices satisfy

$$\mathrm{tr}\left(\sigma_I\,\sigma_J\right) = \delta_{IJ}, \tag{12}$$

where the trace “tr” is always normalized such that $\mathrm{tr}\,\mathbb{1} = 1$, and $\delta_{IJ}$ denotes the Kronecker delta. The couplings can therefore be obtained as

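$$J^I(t) = \mathrm{tr}\big(H(t)\,\sigma_I\big) = i\,\mathrm{tr}\big(\dot U(t)\,U^\dagger(t)\,\sigma_I\big). \tag{13}$$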

If we set $U(0) = \mathbb{1}$, then at $t = 0$ Eq. (13) takes the form

$$J^I(0) = i\,\mathrm{tr}\big(\dot U(0)\,\sigma_I\big), \tag{14}$$

where the right-hand side is the projection of the initial velocity onto the tangent-space axes oriented along the Pauli basis. Brown and Susskind regarded $J^I(0)$ as the initial velocity of a fictitious particle of system $\mathcal{A}$, i.e., $J^I(0) = \dot x^I(0)$; this is called the velocity-coupling correspondence 8 . Hence, $J^I(t)$ plays the role of a time-dependent velocity, and the couplings can be written in terms of general (local) coordinates as $J^I(t) = \dot x^I(t)$, where $\{x^I\}$ denotes the coefficients (components) of a vector expanded in the Pauli basis. Notably, the choice of local coordinates is not unique; an example of a different choice is presented in 43 .
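The expansion in Eqs. (9), (12), and (13) can be checked numerically. The sketch below builds the $4^n - 1$ non-identity generalized Pauli matrices as tensor products of $I$, $X$, $Y$, $Z$, uses the normalized trace assumed in the text, and recovers the couplings $J^I$ of a random traceless Hermitian matrix, which stands in for $H(t)$ at one fixed time. The random matrix and the choice $n = 2$ are illustrative assumptions.

```python
import itertools
from functools import reduce

import numpy as np

PAULI = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def pauli_basis(n):
    """The 4**n - 1 non-identity generalized Pauli matrices on n qubits."""
    return [reduce(np.kron, [PAULI[c] for c in label])
            for label in itertools.product("IXYZ", repeat=n)
            if set(label) != {"I"}]


def ntr(a):
    """Normalized trace, so that ntr(identity) = 1 (the convention behind Eq. (12))."""
    return np.trace(a) / a.shape[0]


def couplings(h, basis):
    """J^I = tr(H sigma_I) with the normalized trace, as in Eq. (13)."""
    return np.array([ntr(h @ s).real for s in basis])


rng = np.random.default_rng(1)
n = 2
basis = pauli_basis(n)
a = rng.normal(size=(2**n, 2**n)) + 1j * rng.normal(size=(2**n, 2**n))
h = (a + a.conj().T) / 2
h -= ntr(h) * np.eye(2**n)   # drop the identity component: h is now traceless
J = couplings(h, basis)      # one real coupling per Pauli direction
h_rebuilt = sum(j * s for j, s in zip(J, basis))  # H = J^I sigma_I, Eq. (9)
print(len(basis), np.allclose(h, h_rebuilt))
```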

Next, we introduce the standard (bi-invariant) inner-product metric on $SU(2^n)$,

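$$ds^2 = \delta_{IJ}\,\mathrm{tr}\big(i\,dU\,U^\dagger\sigma_I\big)\,\mathrm{tr}\big(i\,dU\,U^\dagger\sigma_J\big), \tag{15}$$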

which treats all tangent directions $\sigma_I$ equally. Mathematically, equipping $SU(2^n)$ with a bi-invariant metric makes it homogeneous and isotropic. Such a metric gives system $\mathcal{A}$ characteristics similar to those of a classical system in Euclidean space, in the sense that no direction is preferred.

Recall that complexity measures how difficult it is to generate a target unitary $U$. Following the complexity-geometry construction 6 , we generalize $\delta_{IJ}$ in Eq. (15) to a symmetric positive-definite penalty factor $\mathcal{I}_{IJ}$. The metric then becomes

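$$ds^2 = \mathcal{I}_{IJ}\,\mathrm{tr}\big(i\,dU\,U^\dagger\sigma_I\big)\,\mathrm{tr}\big(i\,dU\,U^\dagger\sigma_J\big), \tag{16}$$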

which is a right-invariant local metric on $SU(2^n)$. In terms of general coordinates, it reads

$$ds^2 = G_{IJ}(x)\,dx^I dx^J. \tag{17}$$

Eq. (17) describes a homogeneous but anisotropic curved configuration space (with negative curvature for a large number of qubits 6 ; 44 ). “Anisotropic” means that it is hard for the particle of system $\mathcal{A}$ to move in some directions. In quantum computation, this means that it is costly to apply quantum gates along these directions to generate the unitary operator $U$, because these directions are severely penalized. A more detailed discussion can be found in 6 . In the following sections, we mainly consider Markov processes in $SU(2^n)$ (all Markov chains in this study are assumed to be irreducible). The state space of these processes consists of the possible trajectories $U(t_0), U(t_1), \ldots, U(t_N)$ that start at the origin $U(t_0) = \mathbb{1}$ and end at a fixed point $U(t_N) = U$, where $t_i$ labels each moment, with $t_0 = 0$ and $t_N = t_f$. Using the metric in Eq. (17), the action functional of trajectories in $SU(2^n)$ reads

$$S_A[x(t)] = \frac{1}{2}\int_0^{t_f} G_{IJ}(x)\,\dot x^I\dot x^J\,dt, \tag{18}$$

where the subscript “A” denotes a quantity of system $\mathcal{A}$. Eq. (18) is Eq. (4) rewritten for the Lagrangian

$$L_A = \frac{1}{2}\,G_{IJ}(x)\,\dot x^I\dot x^J. \tag{19}$$

Next, the complexity is calculated by minimizing $S_A$ in Eq. (18):

$$\mathcal{C}(U) = \min_{x(t)} S_A[x(t)], \tag{20}$$

which is an explicit form of Eq. (5).
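To illustrate the penalized metric in Eq. (16) together with the functionals behind Eqs. (18)-(20), the sketch below evaluates, for a path specified by its right-invariant velocity components (the couplings $J^I(t)$, via the velocity-coupling correspondence), both the length $\int\sqrt{J^I\mathcal{I}_{IJ}J^J}\,dt$ and the energy-type action $\tfrac12\int J^I\mathcal{I}_{IJ}J^J\,dt$. The penalty schedule (factor 1 for Pauli strings acting on at most two qubits, $\mu$ otherwise) is a hypothetical choice made in the spirit of 6 . Any particular path only gives an upper bound on the minimum in Eq. (20).

```python
import itertools

import numpy as np


def pauli_weights(n):
    """Number of non-identity tensor factors of each non-identity Pauli string."""
    labels = [s for s in itertools.product("IXYZ", repeat=n) if set(s) != {"I"}]
    return np.array([sum(c != "I" for c in s) for s in labels])


def penalty_factors(n, mu=10.0):
    """Hypothetical diagonal penalty I_IJ: 1 for directions of weight <= 2, mu otherwise."""
    return np.diag(np.where(pauli_weights(n) <= 2, 1.0, mu))


def path_functionals(velocities, dt, penalty):
    """Length sum_k sqrt(J.I.J) dt and action (1/2) sum_k J.I.J dt of a sampled path.

    velocities[k] holds the right-invariant components J^I(t_k) of the path.
    """
    quad = np.einsum("ki,ij,kj->k", velocities, penalty, velocities)
    return float(np.sum(np.sqrt(quad)) * dt), float(0.5 * np.sum(quad) * dt)


n, t_f, steps = 3, 1.0, 100
dt = t_f / steps
penalty = penalty_factors(n, mu=10.0)
weights = pauli_weights(n)
easy = np.zeros(4**n - 1)
easy[np.argmax(weights == 1)] = 1.0  # unit coupling along a 1-local direction
hard = np.zeros(4**n - 1)
hard[np.argmax(weights == 3)] = 1.0  # unit coupling along a 3-local direction
for v in (easy, hard):
    path = np.tile(v, (steps, 1))  # constant Hamiltonian over [0, t_f]
    print(path_functionals(path, dt, penalty))
```

Moving with unit speed along a penalized direction costs $\sqrt{\mu}$ times more length (and $\mu$ times more action) than along an unpenalized one, which is the anisotropy described above.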

For any classical system, the equation of motion follows from the action functional via the Euler-Lagrange equation. Thus, for the auxiliary system $\mathcal{A}$, the equation of motion reads

$$\frac{d}{dt}\frac{\partial L_A}{\partial \dot x^I} - \frac{\partial L_A}{\partial x^I} = 0. \tag{21}$$

Substituting the right-hand side of Eq. (19) into Eq. (21), we obtain

$$\ddot x^I + \Gamma^I_{JK}\,\dot x^J\dot x^K = 0, \tag{22}$$

where the Christoffel symbol is defined as

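$$\Gamma^I_{JK} = \frac{1}{2}\,G^{IL}\left(\partial_J G_{LK} + \partial_K G_{LJ} - \partial_L G_{JK}\right), \tag{23}$$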

and $G^{IL}$ is the inverse metric, $G^{IL}G_{LM} = \delta^I_M$. Eq. (22) is the geodesic equation in $SU(2^n)$, which is equivalent to the equation of motion (another equivalent form commonly used for Lie groups is the Euler-Arnold equation 45 ).
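Eq. (23) can be evaluated numerically for any metric given in coordinates. The sketch below uses central finite differences; as a test case it takes the two-dimensional hyperbolic metric $G = (dx^2 + dy^2)/y^2$, a simple negatively curved space used here as an illustrative stand-in rather than the complexity metric itself. Its nonzero symbols are known in closed form: $\Gamma^x_{xy} = \Gamma^x_{yx} = -1/y$, $\Gamma^y_{xx} = 1/y$, and $\Gamma^y_{yy} = -1/y$.

```python
import numpy as np


def christoffel(metric, x, h=1e-5):
    """Gamma^I_{JK} = 1/2 G^{IL} (d_J G_{LK} + d_K G_{LJ} - d_L G_{JK}) at point x."""
    x = np.asarray(x, dtype=float)
    d = x.size
    dG = np.empty((d, d, d))  # dG[L, J, K] = partial_L G_{JK} (central differences)
    for l in range(d):
        e = np.zeros(d)
        e[l] = h
        dG[l] = (metric(x + e) - metric(x - e)) / (2 * h)
    g_inv = np.linalg.inv(metric(x))
    # bracket[L, J, K] = d_J G_{LK} + d_K G_{LJ} - d_L G_{JK}
    bracket = np.einsum("jlk->ljk", dG) + np.einsum("klj->ljk", dG) - dG
    return 0.5 * np.einsum("il,ljk->ijk", g_inv, bracket)


def hyperbolic_metric(p):
    """Upper half-plane metric (dx^2 + dy^2) / y^2 in coordinates p = (x, y)."""
    return np.eye(2) / p[1] ** 2


gamma = christoffel(hyperbolic_metric, [0.3, 2.0])  # gamma[I, J, K] = Gamma^I_{JK}
print(np.round(gamma, 6))
```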

We now extend the discussion of Brown and Susskind 8 . Consider a system $\mathcal{Q}$ governed by a time-dependent Hamiltonian $H(t)$ whose couplings fluctuate randomly. The dual auxiliary system $\mathcal{A}$ is then a stochastic classical system, and the evolution of the unitary operator corresponds to a Markov process in $SU(2^n)$. The equation of motion becomes a stochastic differential equation, e.g., for the Quantum Brownian Circuit 46 ,

| (24) |

where the noise terms are independent Wiener processes with unit variance per unit time, and $n$ denotes the number of qubits in the quantum system $\mathcal{Q}$. The configuration space is $SU(2^n)$ (equipped with a complexity metric), and system $\mathcal{A}$ has $4^n - 1$ degrees of freedom.
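A Brownian circuit of this kind can be sampled by composing short-time unitaries whose traceless generators have Gaussian-random Pauli couplings. The sketch below assumes the simple discretization $U_{k+1} = \exp(-i\,\sigma_I\,\Delta B^I_k)\,U_k$ with independent increments $\Delta B^I_k \sim \mathcal{N}(0, \Delta t)$ on $n = 2$ qubits; this noise normalization is an assumption and need not coincide with Eq. (24). Because every generator is traceless and Hermitian, each sampled $U$ stays in $SU(2^n)$, so the trajectory is a random walk on the configuration space of system $\mathcal{A}$.

```python
import itertools
from functools import reduce

import numpy as np

PAULI = {"I": np.eye(2), "X": np.array([[0, 1], [1, 0]]),
         "Y": np.array([[0, -1j], [1j, 0]]), "Z": np.diag([1, -1])}


def pauli_basis(n):
    """Stack of the 4**n - 1 non-identity Pauli strings, shape (4**n - 1, 2**n, 2**n)."""
    return np.array([reduce(np.kron, [PAULI[c] for c in s]).astype(complex)
                     for s in itertools.product("IXYZ", repeat=n) if set(s) != {"I"}])


def brownian_circuit(n, t_f, steps, rng):
    """One sampled trajectory U(t_0), ..., U(t_steps) starting at the identity."""
    basis = pauli_basis(n)
    dt = t_f / steps
    u = np.eye(2**n, dtype=complex)
    path = [u]
    for _ in range(steps):
        dB = rng.normal(0.0, np.sqrt(dt), size=len(basis))  # assumed Wiener increments
        gen = np.tensordot(dB, basis, axes=1)  # traceless Hermitian generator
        e, v = np.linalg.eigh(gen)
        u = (v * np.exp(-1j * e)) @ v.conj().T @ u  # U_{k+1} = exp(-i gen) U_k
        path.append(u)
    return path


rng = np.random.default_rng(2)
path = brownian_circuit(n=2, t_f=1.0, steps=200, rng=rng)
U = path[-1]
print(np.allclose(U.conj().T @ U, np.eye(4)), np.linalg.det(U))
```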

In summary, although our understanding of complexity geometry is still incomplete, it turns purely quantum problems (finding optimal circuits) into classical geometric problems (finding geodesics in $SU(2^n)$). This quantum-classical duality is called the $\mathcal{Q}$-$\mathcal{A}$ correspondence.

2.1.3 Principle of minimal complexity

The principle of minimal complexity is the complexity version of the principle of least action, i.e., the principle of least action applied to the auxiliary system $\mathcal{A}$ 21 . It can be stated by rephrasing the principle of least action 47 : “A true dynamical trajectory of the system between an initial and a final configuration in a specified time interval is found by imagining all possible trajectories that the system could conceivably take. Then, we compute the complexity for each of these trajectories and select one that makes the complexity stationary (or ‘minimal’). Thus, true trajectories are those that have the minimal complexity.”

Requiring the first-order variation of Eq. (20) to vanish yields the Euler-Lagrange equation for the principle of minimal complexity. However, Eq. (21) is only a necessary condition for the minimum of complexity. To determine whether a trajectory is minimal, we must consider its second-order variation: a trajectory with minimal complexity has a positive second-order variation.

A similar statement arises from the complexity=action conjecture, namely the principle of least computation 20 . The conjecture states that the complexity of the boundary state is proportional to the on-shell action of the bulk spacetime. Therefore, applying the principle of least action to obtain the equations of motion in the bulk spacetime also minimizes the complexity.

2.2 Jarzynski identity

As one of the most remarkable achievements of recent decades, the Jarzynski identity can be derived by various means, for example from microscopic 22 or stochastic 27 dynamics. In this study, our discussion is mainly based on the path integral derivation presented by Hummer and Szabo 27 .

2.2.1 Path integral derivation

First, consider a system whose phase space is denoted by $\Gamma$. Its evolution follows the Liouville equation

$$\frac{\partial f(\Gamma,t)}{\partial t} = \mathcal{L}_t\,f(\Gamma,t), \tag{25}$$

where $f(\Gamma,t)$ is the phase-space density and $\mathcal{L}_t$ is a time-dependent operator whose stationary solution is the Boltzmann distribution 48 . We therefore consider the (unnormalized) distribution $e^{-\beta H(\Gamma,t)}$ at time $t$, which satisfies the stationarity condition $\mathcal{L}_t\,e^{-\beta H(\Gamma,t)} = 0$ and, at the same time, obeys

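$$\frac{\partial}{\partial t}e^{-\beta H(\Gamma,t)} = -\beta\,\frac{\partial H(\Gamma,t)}{\partial t}\,e^{-\beta H(\Gamma,t)}. \tag{26}$$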

Combining the stationarity condition for Eq. (25) with Eq. (26), we obtain a Fokker-Planck-type equation with a sink term,

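$$\frac{\partial g(\Gamma,t)}{\partial t} = \mathcal{L}_t\,g(\Gamma,t) - \beta\,\frac{\partial H(\Gamma,t)}{\partial t}\,g(\Gamma,t), \qquad g(\Gamma,t) = e^{-\beta H(\Gamma,t)}. \tag{27}$$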

Now consider a system that evolves from an equilibrium state at $t = 0$ to a nonequilibrium state at time $t$ under an arbitrary external driving. Hummer and Szabo showed that the solution of Eq. (27) can then be expressed using the Feynman-Kac formula 29 ; 30 ; 31 as

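$$\frac{e^{-\beta H(\Gamma,t)}}{Z_0} = \left\langle \delta(\Gamma - \Gamma_t)\,e^{-\beta W_t}\right\rangle, \tag{28}$$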

where the bracket represents the ensemble average over trajectories $\Gamma_t$ that start from the initial equilibrium distribution. Each trajectory in phase space is weighted by the factor $e^{-\beta W_t}$, where $W_t$ is the external work done on the system,

$$W_t = \int_0^t \frac{\partial H(\Gamma_{t'},t')}{\partial t'}\,dt'. \tag{29}$$

Using the well-known relation between the free energy and the partition function in statistical mechanics, $F = -\beta^{-1}\ln Z$, the exponential of the free energy difference is

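$$e^{-\beta\Delta F} = \frac{Z_t}{Z_0} = \frac{\int d\Gamma\,e^{-\beta H(\Gamma,t)}}{\int d\Gamma\,e^{-\beta H(\Gamma,0)}}. \tag{30}$$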

The initial Boltzmann distribution reads

$$f(\Gamma,0) = \frac{e^{-\beta H(\Gamma,0)}}{Z_0}. \tag{31}$$

In Eq. (31), the numerator is divided by $Z_0$ so that the initial distribution is normalized; this is why $Z_0$ appears on the left-hand side of Eq. (28). Thus, the Jarzynski identity

$$\left\langle e^{-\beta W_t}\right\rangle = e^{-\beta\Delta F} \tag{32}$$

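is derived by integrating both sides of Eq. (28) over $\Gamma$: the left-hand side gives $Z_t/Z_0 = e^{-\beta\Delta F}$, while $\int d\Gamma\,\delta(\Gamma - \Gamma_t) = 1$ on the right-hand side.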
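The Hummer-Szabo setting can be checked on the simplest driven system: an overdamped Brownian particle in a harmonic trap $H(x,t) = \tfrac{k}{2}(x - vt)^2$ whose center is dragged at constant speed. Because the trap only translates, $Z_t = Z_0$ and $\Delta F = 0$, so Eq. (32) predicts $\langle e^{-\beta W}\rangle = 1$ even though $\langle W\rangle > 0$. The sketch below, with illustrative parameters, unit mobility, and an Euler-Maruyama integrator (all assumptions), accumulates the work of Eq. (29) as the change of $H$ when the trap moves with $x$ held fixed.

```python
import numpy as np


def dragged_trap_work(n_traj=20_000, k=1.0, beta=1.0, v=1.0, t_f=1.0,
                      steps=1_000, seed=3):
    """Work W = sum_k [H(x_k, lam_{k+1}) - H(x_k, lam_k)] for each trajectory."""
    rng = np.random.default_rng(seed)
    dt = t_f / steps
    # Initial equilibrium (Boltzmann) distribution of the trap centered at 0
    x = rng.normal(0.0, 1.0 / np.sqrt(beta * k), size=n_traj)
    lam, work = 0.0, np.zeros(n_traj)
    for _ in range(steps):
        lam_new = lam + v * dt
        work += 0.5 * k * ((x - lam_new) ** 2 - (x - lam) ** 2)  # (dH/dt) dt at fixed x
        lam = lam_new
        # Overdamped Langevin step at the new trap position (unit mobility)
        x += -k * (x - lam) * dt + np.sqrt(2.0 * dt / beta) * rng.normal(size=n_traj)
    return work


beta = 1.0
W = dragged_trap_work(beta=beta)
print(W.mean(), np.mean(np.exp(-beta * W)))  # <W> > 0 while <exp(-beta W)> is close to 1
```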

2.2.2 Generalized form

To generalize this formalism to a configuration space, we must reinterpret each symbol in Eq. (25): the phase-space point and the density become a random variable and its distribution function, respectively, and the time-dependent operator becomes the Fokker-Planck operator of a Fokker-Planck equation 49 . Consequently, the stationary solution becomes a Gaussian, which can be expressed as

| (33) |

where $\lambda$ is a positive constant. The quadratic action is given by

| (34) |

where $x^i$ and $x^j$ are components of $x$. For a curved space, we only need to replace the Kronecker delta $\delta_{ij}$ with a general metric tensor $g_{ij}$. Then, Eq. (26) becomes

| (35) |

Similarly, combining this equation with Eq. (33) yields

| (36) |

We apply the Feynman-Kac formula to Eq. (36) to obtain

| (37) |

We can further define the generalized work (we sometimes refer to the action functional as the energy functional, which is why the generalized work is defined in this way; see, e.g., 33 ) as

| (38) |

Integrating both sides of Eq. (37), we obtain a Jarzynski-like identity,

| (39) |

where $Z$ denotes the partition function and the integrals are taken with suitable path integral measures in the configuration space. Note that if we regard the constant $\lambda$ as the inverse temperature $\beta$ of the system, identify the action with the Hamiltonian, and use the relation $F = -\beta^{-1}\ln Z$, Eq. (39) reduces to the Jarzynski identity, Eq. (32).
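As a concrete, assumed example of a Fokker-Planck operator with a Gaussian stationary solution, consider the linear Langevin dynamics $dx = -g\,x\,dt + \sqrt{2/\lambda}\,dB$ with a constant symmetric positive-definite matrix $g$. Its stationary density is proportional to $\exp(-\tfrac{\lambda}{2}x^{\mathsf T}g\,x)$, so the stationary covariance should equal $(\lambda g)^{-1}$. The sketch below solves the stationary Lyapunov equation with NumPy alone and compares the result with this prediction; $g$ and $\lambda$ are arbitrary illustrative values.

```python
import numpy as np


def stationary_covariance(A, D):
    """Solve A S + S A^T + D = 0, the stationary covariance of dx = A x dt + noise
    with diffusion matrix D (= B B^T), using the Kronecker-product form."""
    d = A.shape[0]
    eye = np.eye(d)
    K = np.kron(A, eye) + np.kron(eye, A)
    return np.linalg.solve(K, -D.reshape(-1)).reshape(d, d)


lam = 2.0                                 # plays the role of the positive constant
g = np.array([[2.0, 0.5], [0.5, 1.0]])    # illustrative SPD "metric"
cov = stationary_covariance(-g, (2.0 / lam) * np.eye(2))
print(np.allclose(cov, np.linalg.inv(lam * g)))  # Gaussian exp(-lam/2 x^T g x)
```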

To apply this generalized method to the space of unitary operators, we will introduce the Haar measure and ergodicity in the subsequent section. Furthermore, we will use the Hamilton-Jacobi (HJ) equation to rewrite the complexity version of the Jarzynski identity.

3 Jarzynski identity in the context of complexity geometry

In Section 3.1, we introduce the Haar measure and the path integral in $SU(2^n)$. Then, following the same logic as the path integral derivation in the previous section, we obtain the Jarzynski identity for complexity. We argue that the Hamilton-Jacobi equation can be used to rewrite the obtained identity in a more meaningful form, which raises several nonequilibrium analogs of issues in complexity dynamics; these are presented in the next section.

3.1 Path integral in $SU(2^n)$

3.1.1 Path integral

Before discussing the path integral in $SU(2^n)$ (mathematically, this path integral is closely related to the Wiener measure; see 50 for its definition), we must clarify a prerequisite for integrating over the group manifold. The Haar measure is the unique (normalized) measure that is invariant under translations by group elements. In terms of general coordinates, it is expressed as

$$d\mu(U) = \mathcal{N}\,\left|\det J(x)\right|\,\prod_I dx^I. \tag{40}$$

Here, $d\mu(U)$ denotes the Haar measure (see 43 for an example of a specific parameterization of a normalized Haar measure), $\mathcal{N}$ is the normalization coefficient, and $\det J(x)$ is the determinant of the Jacobian matrix $J(x)$, which makes the measure invariant under coordinate transformations. Following the notation of Eq. (17), $x^I$ denotes the coefficients (components) of $U$ expanded in a local basis throughout the rest of this paper. The Haar measure must meet two requirements:

(1) Normalization condition: $\int d\mu(U) = 1$; and

(2) Orthogonal completeness condition: $\int d\mu(U)\,|U\rangle\langle U| = \mathbb{1}$.

Here, $|U\rangle$ is regarded as the group representation of pseudo-quantum states satisfying the conditions above. Note that the system described by these pseudo-quantum states is not a real quantum system, but a hypothetical quantum system obtained by applying “stochastic quantization” 51 to the classical system $\mathcal{A}$.

The pseudo-quantum states serve only to help us derive the path integral. In quantum mechanics, the evolution kernel is derived by repeatedly inserting the orthogonal completeness condition, a procedure familiar to most physicists. We can therefore assume the existence of a hypothetical quantum system with such a completeness condition and derive the path integral by inserting it, instead of introducing the less familiar concept of stochastic quantization 51 . In this system, a stochastic differential equation, e.g., a Langevin equation, is analogous to the Heisenberg operator equation in quantum mechanics. Although introducing pseudo-quantum states is not mathematically rigorous, it is a convenient way to introduce a path integral in $SU(2^n)$; a more rigorous discussion of stochastic quantization can be found in 51 . We emphasize that the hypothetical quantum system is essentially a classical stochastic system whose uncertainty comes from stochastic motion, not from the Heisenberg uncertainty principle. Therefore, the system described here is purely classical even though we use Dirac notation.
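Sampling from the Haar measure is a standard way to probe such group integrals numerically. The sketch below uses the well-known QR-based recipe (often attributed to Mezzadri): QR-decompose a complex Gaussian matrix and absorb the phases of the diagonal of $R$ into $Q$. It samples $U(D)$, which differs from $SU(D)$ only by a global phase, and checks two consequences of invariance and normalization, $\mathbb{E}|U_{11}|^2 = 1/D$ and $\mathbb{E}[U] = 0$. The sample size and tolerances are illustrative.

```python
import numpy as np


def haar_unitary(d, rng):
    """Haar-distributed d x d unitary via QR of a complex Gaussian (Ginibre) matrix."""
    z = (rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases  # multiply column j of q by the phase of r_jj


rng = np.random.default_rng(4)
d = 4
samples = np.array([haar_unitary(d, rng) for _ in range(20_000)])
print(np.mean(np.abs(samples[:, 0, 0]) ** 2), 1 / d)  # second moment of one entry
print(np.abs(samples.mean(axis=0)).max())               # first moment, close to 0
```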

Once the Haar measure is defined, we can integrate over $SU(2^n)$ and derive the evolution kernel. Consider a Markov chain in $SU(2^n)$ with a finite state space $\{U_0, U_1, \ldots, U_N\}$, with $U_0 = \mathbb{1}$ and $U_N = U$. We set the unit time interval as $\epsilon = t_{i+1} - t_i$ for any $i$. The propagator is then defined as

| (41) |

We obtain the evolution kernel by repeatedly inserting the orthogonal completeness condition, as in Feynman path integrals. Taking $N \to \infty$ such that $\epsilon \to 0$ with $N\epsilon = t_f$ fixed, the evolution kernel (or heat kernel) from $U_0$ at $t_0$ to $U_N$ at $t_N$ is

| (42) | ||||

The sum of the Lagrangians over all time slices can be written in terms of the complexity

| (43) |

However, a path integral in a curved configuration space inevitably introduces a correction term involving the scalar curvature, which is needed to keep the path integral covariant under coordinate transformations. Consequently, we must deal with an additional curvature term when calculating the path integral.
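The step of inserting the completeness condition between time slices is the Chapman-Kolmogorov composition of short-time kernels. To keep the check simple, the sketch below assumes a flat one-dimensional space, where the curvature correction just mentioned is absent: the heat kernel $K(x,y;t) = (4\pi\kappa t)^{-1/2}\exp[-(x-y)^2/(4\kappa t)]$ composes exactly, $\int dz\,K(x,z;t_1)K(z,y;t_2) = K(x,y;t_1+t_2)$. The diffusion constant $\kappa$ and the grid are illustrative assumptions.

```python
import numpy as np


def heat_kernel(x, y, t, kappa=0.5):
    """Flat one-dimensional heat kernel with (assumed) diffusion constant kappa."""
    return np.exp(-(x - y) ** 2 / (4 * kappa * t)) / np.sqrt(4 * np.pi * kappa * t)


z = np.linspace(-20.0, 20.0, 8001)  # grid for the inserted intermediate point
dz = z[1] - z[0]
x, y, t1, t2 = 0.3, -0.7, 0.4, 0.9

# One "insertion of completeness": integrate over the intermediate point z
composed = np.sum(heat_kernel(x, z, t1) * heat_kernel(z, y, t2)) * dz
print(composed, heat_kernel(x, y, t1 + t2))
```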

Fortunately, an elegant proposal 52 provides a form without any curvature modification by introducing a factor called the Van Vleck-Morette determinant 53 ; 54 into Eq. (43):

| (44) |

where $\mathcal{N}_i$ denotes the normalization factor of the $i$th Haar measure and $\Delta$ is the Van Vleck-Morette determinant:

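$$\Delta(U, U') = \frac{\det\left(-\partial_I\partial_{J'}\sigma(U,U')\right)}{\sqrt{\det G(U)}\,\sqrt{\det G(U')}}, \tag{45}$$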

where $\sigma(U,U')$ is the geodesic interval (Synge's world function) 55 ; 56 between a fixed point $U'$ and $U$. Note that

$$\sigma(U,U') = \frac{1}{2}\,\ell^2(U,U'), \tag{46}$$

where $\ell(U,U')$ is the length of the geodesic connecting $U'$ to $U$. With the corresponding replacement, the evolution kernel becomes

| (47) |

The detailed derivation is presented in Appendix B (see also 52 ). In the continuum-time limit, we continue time to the complex plane and apply a Wick rotation, $t \to -i\tau$, to Eq. (47), so that the evolution kernel can be rewritten as

| (48) |

where $Z$ is the partition function of system $\mathcal{A}$ and $\lambda$ is a positive constant that has two meanings: a Lagrange multiplier and the inverse temperature of the system 8 . A remark on this equation is in order. In principle, one can consider a trajectory with a large complexity in $SU(2^n)$. Its contribution to Eq. (48), however, is exponentially small, because the complexity enters Eq. (48) through the factor $e^{-\lambda\mathcal{C}}$. We will further consolidate this fact in Appendix C by discussing the relationship between the principle of minimal complexity and the second law of complexity.

3.1.2 Ergodicity

Ergodic motion in the configuration space ensures that the contributions of all possible trajectories are included in Eq. (48). Ergodicity for system $\mathcal{A}$ can be realized in two ways: partial ergodicity (chaotic evolution under a time-independent Hamiltonian 8 ) and complete ergodicity (a stochastic process under a time-dependent Hamiltonian 21 ).

A time-independent $k$-local Hamiltonian generates partially ergodic motion in $SU(2^n)$. To see this, consider a quantum system of $n$ qubits evolving under the unitary operator

$$U(t) = e^{-iHt} = \sum_{k=1}^{D} e^{-iE_k t}\,|E_k\rangle\langle E_k|, \tag{49}$$

where $|E_k\rangle$ are the eigenstates of the Hamiltonian $H$ with eigenvalues $E_k$. In this case, ergodicity is equivalent to the incommensurability of the energy eigenvalues; since $H$ has $D = 2^n$ eigenvalues, the unitary operator moves ergodically on a $D$-dimensional torus (a subspace of $SU(2^n)$) 8 .
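The torus picture can be visualized with just two eigenphases. The sketch below is an illustration with assumed energies, not a simulation of a $k$-local model: it records the phases $(E_1 t, E_2 t) \bmod 2\pi$ and measures the fraction of cells of a $50 \times 50$ grid that the orbit visits. For incommensurate energies $(1, \sqrt{2})$ the orbit fills the torus, whereas for commensurate energies $(1, 2)$ it stays on a closed curve.

```python
import numpy as np


def torus_coverage(energies, t_max=3000.0, dt=0.005, bins=50):
    """Fraction of cells of a bins x bins grid on the 2-torus visited by (E_k t mod 2 pi)."""
    t = np.arange(0.0, t_max, dt)
    phases = np.mod(np.outer(t, energies), 2 * np.pi)
    cells = np.minimum((phases / (2 * np.pi) * bins).astype(int), bins - 1)
    occupied = np.unique(cells[:, 0] * bins + cells[:, 1])
    return occupied.size / bins**2


print(torus_coverage([1.0, np.sqrt(2.0)]))  # incommensurate energies: close to 1
print(torus_coverage([1.0, 2.0]))           # commensurate energies: a closed curve
```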

Complete ergodicity can be achieved with a time-dependent $k$-local Hamiltonian, i.e., Eq. (8). In this case, the motion in $SU(2^n)$ is an ergodic Markov process that fills all $4^n - 1$ dimensions of $SU(2^n)$. An ergodic Markov process must be irreducible and aperiodic, with all states persistent (positive recurrent) 57 . For an irreducible chain, a stationary distribution exists if and only if the states are positive recurrent, and aperiodicity further guarantees that the chain converges to it. Following our settings, we can write the stationary distribution as

| (50) |

where $\mathcal{N}$ is the normalization constant. Thus, ergodicity is satisfied. In conclusion, the existence of a stationary distribution indicates that the motion of the unitary operator in $SU(2^n)$ is ergodic when system $\mathcal{Q}$ is governed by a time-dependent Hamiltonian.
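For a finite-state chain, the stationary distribution can be computed directly. The sketch below uses a hypothetical three-state transition matrix with all entries positive (hence irreducible and aperiodic, i.e., ergodic), obtains $\pi$ as the left eigenvector of $P$ with eigenvalue 1, and checks that every row of $P^N$ converges to $\pi$, which is the ergodic behavior the argument above relies on.

```python
import numpy as np

P = np.array([[0.5, 0.3, 0.2],     # hypothetical transition matrix; rows sum to 1
              [0.1, 0.6, 0.3],
              [0.2, 0.2, 0.6]])


def stationary_distribution(P):
    """Left eigenvector of P for eigenvalue 1, normalized to a probability vector."""
    w, v = np.linalg.eig(P.T)
    pi = np.real(v[:, np.argmin(np.abs(w - 1.0))])
    return pi / pi.sum()


pi = stationary_distribution(P)
print(pi, np.allclose(pi @ P, pi))
print(np.allclose(np.linalg.matrix_power(P, 200), np.tile(pi, (3, 1))))
```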

3.2 Complexity version of the Jarzynski identity

3.2.1 Derivation of the Jarzynski identity

In this section, we derive the complexity version of the Jarzynski identity from the Fokker-Planck equation with a sink term in $SU(2^n)$. Consider a stochastic auxiliary system $\mathcal{A}$ describing a particle moving from the fixed point $\mathbb{1}$ to another fixed point $U$, whose dual quantum system $\mathcal{Q}$ is governed by a time-dependent Hamiltonian over the time interval $[0, t_f]$. Here, the fixed endpoints of the trajectories in $SU(2^n)$ play a role similar to that of the two fixed states in the usual Jarzynski setting. The evolution equation of system $\mathcal{A}$ is the Fokker-Planck equation

| (51) |

where represents the distribution function and the time-dependent operator denotes the Fokker-Planck operator (Footnote 13: The derivation of the Fokker-Planck equation is presented in Appendix B.). Using Eqs. (50) and (48), and proceeding as in Section 2.2, we can construct a distribution that is the stationary solution of Eq. (51) at :

| (52) |

where refers to the partition function at and complexity plays the role of the action in Section 2.2, such that satisfies . Moreover, one can check that

| (53) |

Hence, using this equation and , we can obtain the Fokker-Planck equation with a sink term

| (54) |

Solving this equation with the constraint , we obtain

| (55) |

The ensemble average runs over all possible trajectories that depart from the identity and reach the fixed point at ; the Dirac delta function imposes this termination condition. Equating this equation with Eq. (52) gives

| (56) |

Integrating both sides of this equality and defining a quantity called the computational work, a type of generalized work as defined in Eq. (38), by

| (57) |

we obtain the complexity version of the Jarzynski identity,

| (58) |

which is one of the main results of this paper.

To simplify Eq. (58), we introduce a complexity analog of the thermodynamic free energy, the “computational free energy”:

| (59) |

If we take as the inverse temperature of the system and set , can be regarded as the thermodynamic free energy of system , and takes the same form as the partition function of a free particle (Footnote 14: Essentially, this is the relationship between the partition function obtained from the path-integral approach and the thermodynamic free energy 58 .). A similar comparison between complexity-related and thermodynamic quantities was made in 8 . Substituting Eq. (59) into Eq. (58) puts the equality in a more familiar form:

| (60) |

where depends on the two endpoints of the evolution in system .
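As a sanity check on the structure of such an identity, the sketch below verifies the standard thermodynamic Jarzynski equality, Eq. (2), numerically; it does not implement Eq. (60) itself, whose explicit form depends on the complexity geometry. It assumes a Gaussian work distribution with hypothetical parameters `beta`, `delta_F`, and `sigma`. For Gaussian work the equality holds exactly when the mean work exceeds ΔF by βσ²/2, so the exponential average of the sampled work should recover ΔF even though the average work is larger.

```python
import numpy as np

rng = np.random.default_rng(0)

beta = 1.0        # hypothetical inverse temperature
delta_F = 2.0     # hypothetical free-energy difference
sigma = 1.0       # hypothetical width of the work distribution

# For Gaussian work, <exp(-beta W)> = exp(-beta delta_F) holds exactly
# when the mean work is delta_F + beta * sigma**2 / 2.
W = rng.normal(delta_F + beta * sigma**2 / 2, sigma, size=1_000_000)

# Jarzynski estimator of the free-energy difference from nonequilibrium work.
delta_F_est = -np.log(np.mean(np.exp(-beta * W))) / beta
print(delta_F_est, W.mean())
```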

Although we have defined the computational work , its physical meaning is still not intuitive, and we expect a more instructive interpretation to emerge from the complexity version of the Jarzynski identity. Because Eq. (57) shows that the definition of the computational work contains the time derivative of complexity, we rewrite the identity using the Hamilton-Jacobi (HJ) equation, which describes how complexity changes.

3.2.2 Hamilton-Jacobi equation

To explore the Jarzynski identity further in the context of complexity, we need to rewrite it in a suitable form. In this section, we first derive the HJ equation in the complexity geometry and then use it to rewrite Eq. (60). This rewriting facilitates the development of the thermodynamic analog of complexity, particularly the second law of complexity and the related topic of uncomplexity as a resource 8 .

Recall that the trajectories in follow the principle of minimal complexity, that is,

| (61) |

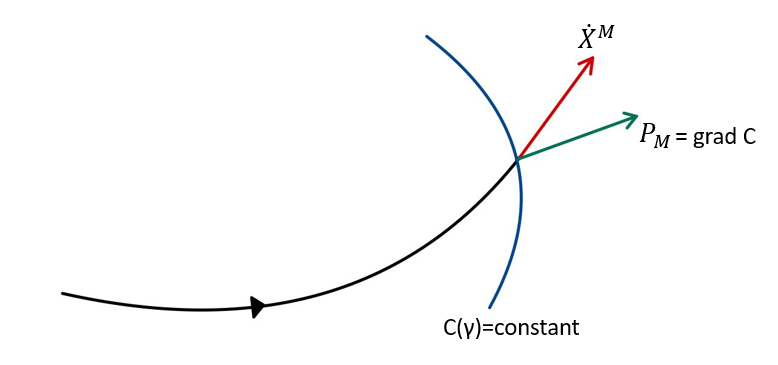

Now, let us consider the principle of minimal complexity from the perspective of Hamiltonian mechanics. We first fix the starting and ending points in the configuration space (i.e., and , respectively) and assume that the trajectories connecting them are obtained from Eq. (61) and thus satisfy Eq. (21). The generalized momentum is defined as

| (62) |

whose direction is depicted in Fig. 3. We then rewrite Eq. (61) as

| (63) |

By substituting Eq. (21) into this equation and assuming a small variation at the endpoint, namely , Eq. (61) is reformulated as

| (64) | ||||

We take the limit (Footnote 15: In Lagrangian mechanics, the variations at the endpoints are always assumed to vanish when deriving the Euler-Lagrange equation. Why, then, do we consider an infinitesimal nonzero variation at the endpoint when deriving the HJ equation, although the Euler-Lagrange equation and the HJ equation can describe the same classical system? The difference lies in the underlying settings. In Lagrangian mechanics, we identify the equations of motion by varying trajectories between two fixed endpoints. When deriving the HJ equation, by contrast, we assume that the trajectory already satisfies the equation of motion; instead of varying the trajectory, we infinitesimally vary its endpoint and study the corresponding change in the action. The same holds in the complexity setting. In particular, 59 ; 60 investigated a specific dynamical law, the first law of complexity, by studying the variation of a trajectory’s endpoint in the complexity geometry.), such that . Thus, the complexity can be regarded as a functional of , and its infinitesimal variation is written as

| (65) |

Dividing both sides by and assuming that , we obtain

| (66) |

where is the Hamiltonian of the system; Eq. (66) is the HJ equation in the complexity setting. A new question then arises: what is the form of ? Returning to the auxiliary Lagrangian, Eq. (19), we find that takes the following form:

| (67) | ||||

The last equality holds because the metric tensor is Hessian 2 , that is, . From this construction, we have

| (68) |

for which we have

| (69) |

Substituting this into Eq. (57), the computational work is recast as

| (70) |

We then obtain the equivalent expression of the Jarzynski identity as

| (71) |

This equality directly relates the computational free energy difference to the complexity.

4 On the nonequilibrium thermodynamic analog of complexity

The Jarzynski identity provides a theoretical framework for exploring the thermodynamics of nonequilibrium systems, including stochastic ones. Having derived the Jarzynski identity, Eq. (71), for a stochastic system, we now use it to construct thermodynamic analogs that deepen our understanding of complexity and to discuss several related issues. For each topic, we first review its content and development and then explore it further using the results obtained above.

4.1 Uncomplexity as a computational resource

To understand this statement, we must first clarify what a resource is. Resource theories have a wide range of applications in quantum physics 61 ; 62 ; 63 ; 64 ; 65 ; 66 , but for this paper the essential idea can be summarized in one sentence: a resource is something one needs to do X 67 . For example, negentropy, defined as the difference between the maximal and actual entropies, is a resource needed for doing work 61 ; 62 ; 63 :

$$\text{negentropy} = S_{\max} - S \tag{72}$$

A system that does work (to achieve some goal) must expend negentropy; negentropy is therefore a resource for doing work. Because complexity behaves analogously to classical entropy 8 , the complexity version of negentropy, called uncomplexity, is defined as

$$\Delta\mathcal{C} = \mathcal{C}_{\max} - \mathcal{C} \tag{73}$$

where is the possible maximal complexity and denotes the actual complexity of the system at a certain moment. This quantity is a resource that can be expended for doing direct computations 8 ; 67 . The central idea is expressed as

| (74) |

where refers to the thermodynamic free energy of system 8 ; 67 obtained by assuming as the inverse temperature of the system and setting in Eq. (59). Equivalently,

| (75) |

where the resource is denoted by .

Suppose that a particle in system is initialized at with zero complexity; equivalently, no gate has yet been applied to any qubit in system . Recalling that uncomplexity is the room available for complexity to grow 8 , we can write

| (76) |

because represents the ability of the system to do computation (analogous to the thermodynamic case) (Footnote 16: always takes non-negative values because .). Substituting Eqs. (75) and (76) into Eq. (71), we obtain

| (77) |

This equation provides new evidence supporting the existence of a well-defined resource theory of uncomplexity 87 .

4.2 Second law of complexity and fluctuation theorem

The second law of complexity was obtained by applying thermodynamic methods to the auxiliary system and has been studied for chaotic systems 8 . By the same logic, stochastic thermodynamics should be used to study the second law of complexity when the system is stochastic rather than chaotic. Two important pieces are needed to complete this “puzzle”: the second law of thermodynamics and trajectory thermodynamics 28 . In Section 4.2.1, we first review these two pieces in the framework of nonequilibrium thermodynamics. In Section 4.2.2, we then discuss the second law of complexity for stochastic auxiliary systems by analogy with Section 4.2.1. To avoid confusion, we first fix some notation and make a clarification.

Notations: in the following content, we use

| (78) |

to represent the stationary distribution of a trajectory in the forward process (starting from to ). A tilde denotes quantities related to the reverse process. Applying time reversal, such that and for , we define the stationary distribution of a time-reversed trajectory (starting from to ) as

| (79) |

where is the partition function satisfying the initial conditions of the reverse process. In standard thermodynamics, the stationary distribution of a forward trajectory in phase space is 70

| (80) |

where the integral is over phase space. Analogously to Eq. (79), the counterpart of Eq. (80), , represents the stationary distribution of a time-reversed trajectory in phase space.

represents the relative entropy between any two distributions and

$$D(p\,\|\,q) = \int \mathrm{d}x\; p(x)\ln\frac{p(x)}{q(x)}, \tag{81}$$

which is non-negative and equals zero if and only if . This quantity measures the distinguishability of two distributions and is a useful tool for quantifying time asymmetry in thermodynamics.
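For discrete distributions the integral in the relative entropy becomes a sum. The short sketch below, with hypothetical distributions `p` and `q`, illustrates the two properties used in the text: the relative entropy vanishes only for identical distributions, and it is not symmetric in its arguments, which is what allows it to distinguish a process from its time reverse.

```python
import numpy as np

def relative_entropy(p, q):
    """D(p||q) = sum_x p(x) ln(p(x)/q(x)) for discrete distributions.
    Terms with p(x) = 0 contribute zero; q must be positive wherever p is."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

p = np.array([0.5, 0.3, 0.2])   # hypothetical distributions
q = np.array([0.4, 0.4, 0.2])
print(relative_entropy(p, q), relative_entropy(q, p), relative_entropy(p, p))
```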

Clarification: our discussion of the second law of complexity extends that of Brown and Susskind 8 , with two main differences. First, the auxiliary system they discussed was a classical chaotic system, and the Hamiltonian of the corresponding quantum system was time-independent; in contrast, our auxiliary system is a classical stochastic system whose dual quantum system has a time-dependent Hamiltonian. Second, we use a different method: the trajectory thermodynamics developed by Van den Broeck and Esposito 28 , with which we explore the second law of complexity by analogy with their discussion of the second law of thermodynamics for nonequilibrium systems.

4.2.1 Second law of thermodynamics and trajectory thermodynamics

This section briefly reviews the second law of thermodynamics for nonequilibrium systems and trajectory thermodynamics 28 . The most common expression of the second law of thermodynamics is the Clausius inequality,

$$\Delta S \ge \beta Q, \tag{82}$$

where is the heat absorbed by the system during a process and and represent the constant inverse temperature and the system’s thermodynamic entropy, respectively. We define the free energy of the system as

$$F = U - \beta^{-1} S, \tag{83}$$

where denotes the system’s internal energy. By combining this definition with the first law of thermodynamics,

$$\Delta U = Q + W, \tag{84}$$

we obtain

$$\langle W\rangle \ge \Delta F, \tag{85}$$

which corresponds to the Kelvin-Planck statement of the second law of thermodynamics: it is impossible to extract heat from a single heat bath and convert all of it into work without any other effect. Equivalently, Eq. (85) can be written as

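$$\langle \Sigma\rangle = \beta\left(\langle W\rangle - \Delta F\right) = \beta\langle W_{\mathrm{diss}}\rangle \ge 0, \tag{86}$$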

where denotes the combined entropy change of the system and environment 70 and is the average dissipated work for the forward process (Footnote 17: Because is a physical measure of dissipation, Eq. (86) is also a measure of dissipation.). The quantity is defined as the cumulative entropy production along a trajectory 28 , that is, the time integral of the entropy production . Therefore, the non-negativity of can be recast as

| (87) |

which is one of the basic features of the second law of thermodynamics 28 . Eq. (85) can also be derived from Eq. (2) by directly applying Jensen’s inequality, that is, . Thus, Eq. (2) is closely related to the second law of thermodynamics.
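The Jensen step can be seen directly on data. In the sketch below (hypothetical parameters, deliberately non-Gaussian work samples drawn from a shifted gamma distribution), ΔF is defined through the exponential average as in the Jarzynski equality; Jensen's inequality, ⟨e^{-βW}⟩ ≥ e^{-β⟨W⟩}, then forces the mean dissipated work ⟨W⟩ - ΔF to be non-negative for any sample, not only on average.

```python
import numpy as np

rng = np.random.default_rng(1)
beta = 0.5   # hypothetical inverse temperature

# Hypothetical, deliberately non-Gaussian work samples (shifted gamma).
W = 1.0 + rng.gamma(shape=2.0, scale=0.8, size=100_000)

# Free-energy difference implied by the Jarzynski equality for this sample.
delta_F = -np.log(np.mean(np.exp(-beta * W))) / beta

# Jensen: <exp(-beta W)> >= exp(-beta <W>), hence <W> >= delta_F, i.e.,
# the mean dissipated work <W> - delta_F is non-negative.
W_diss = W.mean() - delta_F
print(W.mean(), delta_F, W_diss)
```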

Next, we review the second piece of the “puzzle”: trajectory thermodynamics 28 . The cumulative entropy production along a forward trajectory in phase space is defined as the logarithm of the ratio between the probabilities of observing the trajectory in the forward process and its time reverse in the reverse process:

$$\Sigma[x] = \ln\frac{P[x]}{\tilde{P}[\tilde{x}]} = \beta\left(W[x] - \Delta F\right), \tag{88}$$

where denotes the work done on the system in a forward experiment. Rather than working with phase-space trajectories directly, we treat the cumulative entropy production, which is assigned to each trajectory, as a random variable. Its distribution is then given by a path integral over phase space combined with Eq. (80):

| (89) | ||||

and because and , the cumulative entropy production along a reverse trajectory is obtained by

| (90) |

Furthermore, because the Jacobian of the transformation to the time-reversed variables equals one 28 , Eq. (89) implies that

$$\frac{P(\Sigma)}{\tilde{P}(-\Sigma)} = e^{\Sigma}, \tag{91}$$

which is called the detailed fluctuation theorem 77 . Eq. (91) states 28 that an increase of the stochastic entropy in the forward process is exponentially more probable than the corresponding decrease in the reverse process. Eq. (91) can be rewritten as

$$\left\langle e^{-\Sigma}\right\rangle = 1 \tag{92}$$

by integrating Eq. (91) over all values of the cumulative entropy production. Applying Jensen’s inequality directly to Eq. (92) then yields Eq. (86). Finally, the average cumulative entropy production is given by

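$$\langle \Sigma\rangle = \beta\left(\langle W\rangle - \Delta F\right) = D\big(P[x]\,\|\,\tilde{P}[\tilde{x}]\big) \ge 0, \tag{93}$$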

where represents the relative entropy between and .
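On a finite set of trajectories, the chain of results in Eqs. (88), (92), and (93) reduces to a few lines of arithmetic. The sketch below assumes hypothetical forward probabilities `P_fwd` and reverse probabilities `P_rev` for 8 trajectories paired with their time reverses by index. Because P_fwd · e^{-Σ} = P_rev trajectory by trajectory, the integral fluctuation theorem holds exactly, and the mean entropy production coincides with the relative entropy.

```python
import numpy as np

rng = np.random.default_rng(2)
n_paths = 8

# Hypothetical probabilities of n_paths forward trajectories x and of their
# time-reversed partners x~ in the reverse process (paired by index).
P_fwd = rng.dirichlet(np.ones(n_paths))
P_rev = rng.dirichlet(np.ones(n_paths))

# Cumulative entropy production assigned to each trajectory, cf. Eq. (88).
Sigma = np.log(P_fwd / P_rev)

integral_ft = np.sum(P_fwd * np.exp(-Sigma))     # <e^{-Sigma}>, cf. Eq. (92)
mean_sigma = np.sum(P_fwd * Sigma)               # <Sigma>
kl = np.sum(P_fwd * np.log(P_fwd / P_rev))       # D(P || P~), cf. Eq. (93)
print(integral_ft, mean_sigma, kl)
```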

4.2.2 Discussions on the second law of complexity

The second law of complexity was first conjectured in 44 and developed in 8 ; 67 . This conjecture has two equivalent statements:

1. Conditioning on the complexity being less than maximal, it will most likely increase, both into the future and into the past (Statement 1).
2. Decreasing complexity is unstable (Statement 2).

These statements originally described complexity growth for a chaotic auxiliary system, which is dual to a quantum system with a time-independent Hamiltonian. We mainly focus on Statement 2. By analogy with the discussion in Section 4.2.1, we extend the second law of complexity to the case in which system is stochastic (i.e., dual to a quantum system with a time-dependent Hamiltonian). In particular, we argue that the complexity version of the Jarzynski identity and trajectory thermodynamics provide a “Kelvin-Planck-like” statement and a new version of Statement 2 of the second law of complexity for stochastic auxiliary systems.

The distribution of a forward trajectory in is given by Eq. (78), and that of its reverse by Eq. (79). By analogy with Eq. (88), we introduce a quantity analogous to the cumulative entropy production:

| (94) |

which we call the cumulative complexity production along the forward trajectory . Replacing , the work , and the free energy difference in Eq. (93) with Eq. (94), the computational work , and the computational free energy difference , respectively, we obtain

| (95) |

This inequality can also be obtained by applying Jensen’s inequality to Eq. (71). The complexity version of the Clausius inequality then follows:

| (96) |

Because Eqs. (95) and (96) parallel Eqs. (86) and (85), respectively, we identify them as mathematical expressions of the second law of complexity for stochastic auxiliary systems. Eq. (87), an equivalent form of Eq. (86), describes the increase of entropy in nonequilibrium systems. By analogy with Eq. (86), Eq. (95) corresponds to a “Kelvin-Planck-like” statement of the second law of complexity and describes the increasing nature of complexity; it is the stochastic generalization of the second law of complexity.

Let us treat as a random variable. As in Eq. (89), its distribution is obtained from a path integral over :

| (97) | ||||

which can be rewritten as

| (98) |

Eq. (98) is the complexity version of the detailed fluctuation theorem, analogous to Eq. (91), and the mathematical expression of the new version of Statement 2 for the stochastic system . In words: an increase in the stochastic complexity production is exponentially more probable than the corresponding decrease. The average cumulative complexity production vanishes if and only if , . Thus, time asymmetry vanishes only when all possible trajectories in are reversible (i.e., time-reversal symmetry is maintained). This condition is extremely hard to satisfy because, for a stochastic system , the probability of reversing even a small fraction of a trajectory in the space of unitary operators is negligible, as discussed in Section 9 of 67 .
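The content of a detailed fluctuation theorem such as Eq. (98) can be made concrete in the simplest analytically tractable case. The sketch below assumes, purely for illustration, that the production variable is Gaussian and that the reverse distribution coincides with the forward one (as for a time-symmetric protocol); under these assumptions, P(σ)/P(-σ) = e^{σ} holds exactly when the variance equals twice the mean, which the code checks on a grid of values. The mean `mu` is a hypothetical parameter.

```python
import numpy as np

def gaussian_pdf(x, mean, var):
    return np.exp(-(x - mean) ** 2 / (2 * var)) / np.sqrt(2 * np.pi * var)

mu = 1.5          # hypothetical mean production
var = 2 * mu      # Gaussian case: the detailed FT requires variance = 2 * mean
s = np.linspace(-3.0, 3.0, 13)

# ln[P(s) / P(-s)] = 2 * s * mu / var, which equals s when var = 2 * mu.
log_ratio = np.log(gaussian_pdf(s, mu, var) / gaussian_pdf(-s, mu, var))
print(np.max(np.abs(log_ratio - s)))
```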

We end this section by exploring the probability of observing a complexity value above the upper bound obtained by applying Jensen’s inequality to Eq. (71), that is, . As before, we first consider the problem in thermodynamics and then construct the complexity analog. In statistical mechanics, the average work is obtained experimentally by measuring the fluctuating work of a single trajectory in each run of the experiment 69 . A protocol defines a family of Hamiltonians governing the evolution of the system from to , and the experiments are run by controlling a parameter, for example,

| (99) |

where is a set of externally controlled parameters that change in time. A similar situation can be considered in the complexity context, because the trajectories in are generated by time-dependent Hamiltonians and each trajectory corresponds to a specific value of complexity. Specifically, we consider a protocol that defines a family of and evolves the unitary operator (Footnote 18: Note that we evolve a unitary operator, rather than a quantum state, using a time-dependent Hamiltonian.) over a time interval . We then repeat the experiment times and compute the complexity in each run of the quantum system (Footnote 19: The system must be reinitialized to the same initial state after each run.). Collecting these values into a complexity ensemble (i.e., ), we can construct a probability distribution of complexity in the limit , and the average complexity is obtained as

| (100) |

Note that the Hamiltonians considered here are time-dependent and the evolution of the system is a Markov process (Footnote 20: If the system has a time-independent Hamiltonian (e.g., the SYK model), the randomness in the distribution function comes from the random couplings . We leave this case for future study.). Now suppose that the ensemble contains several experiments with . Combined with Eq. (71), the probability of observing them satisfies

| (101) | ||||

where is an arbitrary positive number. Eq. (101) behaves like its thermodynamic counterpart 70 , in which the left tail of the work distribution is exponentially suppressed in the forbidden region . Consequently, it is hard to observe a complexity value that exceeds by more than a few multiples of , which can be regarded as a manifestation of the second law of complexity. Unlike in nonequilibrium thermodynamics 76 , the lower limit of the integral in Eq. (101) is zero rather than negative infinity, because the complexity metric is non-negative 2 .
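The thermodynamic counterpart of this suppression follows from the Jarzynski equality in one line: P(W < ΔF - ζ) ≤ e^{β(ΔF-ζ)}⟨e^{-βW}⟩ = e^{-βζ}. The sketch below checks this bound on simulated repeated runs of one protocol, in the spirit of the repeated experiments described above. It assumes a Gaussian work distribution with hypothetical parameters chosen so that the Jarzynski equality holds exactly; it illustrates the thermodynamic bound, not Eq. (101) itself.

```python
import numpy as np

rng = np.random.default_rng(3)
beta, delta_F, sigma = 1.0, 2.0, 1.5   # hypothetical parameters
n_runs = 1_000_000                     # repeated runs of the same protocol

# Gaussian work whose mean makes the Jarzynski equality hold exactly.
W = rng.normal(delta_F + beta * sigma**2 / 2, sigma, size=n_runs)

zetas = np.array([0.5, 1.0, 2.0, 3.0])
# Empirical probability of landing at least zeta below delta_F ("forbidden" region).
tails = np.array([np.mean(W < delta_F - z) for z in zetas])
# Bound implied by the Jarzynski equality: P(W < delta_F - zeta) <= exp(-beta * zeta).
bounds = np.exp(-beta * zetas)
for z, p, b in zip(zetas, tails, bounds):
    print(f"zeta={z}: tail={p:.2e}, bound={b:.2e}")
```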

4.3 Fluctuation-dissipation theorem and complexity

Having obtained a fluctuation theorem for the complexity in Eq. (98), it is natural to ask whether the complexity fluctuations can be related to the cumulative complexity production, which represents the dissipation of the system, by analogy with the thermodynamic fluctuation-dissipation theorem. To answer this question, we propose a complexity version of the fluctuation-dissipation theorem in this section.

We start with thermodynamics. Using Eq. (2) to express the equilibrium free energy difference in terms of the nonequilibrium work, we obtain

| (102) |

Expanding the left-hand side of this equation up to the second-order cumulant gives

| (103) |

where the second cumulant is

| (104) |

that is, the variance of the entropy . Eq. (104) thus represents the fluctuation of . The discussion of entropy production can alternatively be phrased in terms of the dissipated work (i.e., ). From the Callen-Welton theorem 71 ,

| (105) |

the fluctuation-dissipation theorem can be obtained by combining Eq. (103) with Eq. (88), namely

| (106) |

This fluctuation-dissipation theorem was studied in 72 and has many potential applications, including the construction of some hydrodynamic approaches 57 .
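The second-order truncation behind this fluctuation-dissipation relation can be tested numerically. The sketch below (hypothetical parameters) compares the exact Jarzynski estimate of ΔF with the second-order cumulant estimate κ1 - βκ2/2. For Gaussian work the cumulant series terminates at second order, so the two agree and the mean dissipated work equals βVar(W)/2; for a skewed work distribution (here a shifted exponential, whose exact ΔF is 2 + ln 2 at β = 1), higher cumulants contribute and the truncation is visibly off.

```python
import numpy as np

rng = np.random.default_rng(4)
beta = 1.0   # hypothetical inverse temperature

def free_energy_estimates(W, beta):
    """Return (exact Jarzynski estimate, second-order cumulant estimate)."""
    exact = -np.log(np.mean(np.exp(-beta * W))) / beta
    second_order = W.mean() - beta * W.var() / 2   # kappa_1 - beta * kappa_2 / 2
    return exact, second_order

# Gaussian work: the cumulant series terminates at second order.
W_gauss = rng.normal(3.0, 1.0, size=1_000_000)
# Skewed work: higher cumulants contribute, so the truncation is approximate.
W_skew = rng.exponential(1.0, size=1_000_000) + 2.0

results = {}
for name, W in (("gaussian", W_gauss), ("skewed", W_skew)):
    results[name] = free_energy_estimates(W, beta)
    print(name, results[name])
```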

We now return to complexity. Eq. (71) gives

| (107) |

where the average value of the exponential function on the right-hand side is obtained using the probability :

| (108) |

We expand the left-hand side of Eq. (107) into an infinite series:

| (109) |

where denotes the -order cumulant (Footnote 21: Note the distinction between and the positive number in Section 4.2.). The first- and second-order cumulants are

| (110) |

and the second-order cumulant is the variance (fluctuation) of the complexity. Recall that the state space is , which contains mutually independent random variables. In the limit , the central limit theorem implies that is approximately Gaussian:

| (111) |

In this case, it suffices to expand Eq. (107) to second order, and Eqs. (109) and (95) then imply

| (112) |

This is the version of the fluctuation-dissipation theorem for complexity that connects the fluctuation of complexity with the dissipation of the auxiliary system during the evolution.
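The central-limit argument used above can be illustrated with a toy model. The sketch below assumes, hypothetically, that the total is a sum of n independent and individually skewed (exponential) contributions, loosely mimicking a sum over independent components; it shows that the standardized third cumulant, κ3/κ2^{3/2}, falls off as 2/√n, so the distribution of the sum approaches a Gaussian as n grows.

```python
import numpy as np

rng = np.random.default_rng(5)

def sample_skewness(x):
    """Standardized third cumulant kappa_3 / kappa_2**1.5 of a sample."""
    d = x - x.mean()
    return np.mean(d**3) / np.mean(d**2) ** 1.5

# Hypothetical model: the total is a sum of n independent, skewed (exponential)
# contributions; the standardized skewness of the sum is 2 / sqrt(n).
skew = {}
for n in (1, 10, 100):
    totals = rng.exponential(1.0, size=(50_000, n)).sum(axis=1)
    skew[n] = sample_skewness(totals)
print(skew)
```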

Eq. (112) essentially links the fluctuation of the trajectories in (each trajectory corresponds to a specific complexity value) with the time-dependent perturbation applied to the quantum system , because every trajectory in is generated by the Hamiltonian of system . This connection suggests that Eq. (112) may play an important role in quantifying holographic fluctuations, as discussed in Section 4.4.

4.4 Remarks on holographic fluctuations and complexity

The discussion in this section is inspired by the remarkable work of Chemissany and Osborne 21 , who developed a method, based on the principle of minimal complexity, for relating the fluctuation of the bulk geometry to the perturbation applied to the boundary quantum system. We argue that the Jarzynski framework obtained above provides a potential tool for quantitatively investigating holographic fluctuations. This section has two parts: we first briefly review the settings and main contribution of 21 and then comment on them from the perspective of the Jarzynski framework derived in the previous sections.

4.4.1 Basic settings and construction of the bulk spacetime

The boundary system is a -local quantum system comprising distinct subsystems (qubits) (Footnote 22: For simplicity, we only consider systems with a -local Hamiltonian; in principle, one can consider a -local Hamiltonian for any .), initialized in a trivial reference state . Different subsystems are labeled by different numbers, which form a point set . A unitary operator that diagonalizes a Hamiltonian of the boundary system is generated over a certain time interval . We focus on the evolution of the unitary operator from to , which is equivalent to the motion of a fictitious particle with unit mass (of the system ) on from to . The time interval forms another set , and a topological space is taken as the bulk spacetime, i.e., , where and is an undetermined topology encoding the causality of the bulk spacetime. The point set corresponds to a holographic spacetime with discrete boundary spatial coordinates and a “radial” holographic time coordinate . Once the topology is determined, the bulk spacetime can be completely identified from the trajectories via the principle of minimal complexity 21 .

The target unitary of the boundary system is given in Eq. (3). It can be approximated by a discrete quantum circuit , where denote gates acting on one or two qubits at a time. The set then becomes , which forms the simple graph shown in Fig. 4.

Two vertices are joined by an edge if a two-qubit gate acts nontrivially on the corresponding pair of qubits. To obtain the topology (causality) of the bulk spacetime, we first sample points from a Poisson distribution on the set with density , which gives a new finite set . The causal relation on is then constructed by sending a detectable signal from one spacetime point to another via the unitary process , whose evolution we are allowed to interrupt by arbitrarily fast local interventions (Footnote 23: These interventions are introduced by local unitary operations 21 .) at any holographic time . This construction of causal structure from the trajectory provides the topology of the topological space regarded as the bulk spacetime.
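The graph-and-sprinkling construction can be sketched in code under strong simplifying assumptions. The sketch below is not the construction of 21: it assumes a hypothetical brickwork circuit (`gates`), treats a point as a pair (qubit, holographic time), assumes that a signal spreads only through two-qubit gates, and replaces the Poisson sampling by a Poisson-distributed number of distinct points drawn uniformly from the vertex set with a hypothetical density `rho`. All helper names are illustrative.

```python
import numpy as np

# Hypothetical brickwork circuit on K qubits with T layers: gates[t] lists the
# qubit pairs hit by two-qubit gates in layer t (single-qubit gates omitted).
K, T = 6, 4
gates = {
    0: [(0, 1), (2, 3), (4, 5)],
    1: [(1, 2), (3, 4)],
    2: [(0, 1), (2, 3), (4, 5)],
    3: [(1, 2), (3, 4)],
}

# Simple graph: one edge (t, a, b) for each two-qubit gate.
edges = {(t, a, b) for t, pairs in gates.items() for a, b in pairs}

def causally_precedes(p, q):
    """True if a signal injected at p = (qubit, time) can reach q = (qubit, time),
    assuming it spreads only through two-qubit gates applied between the two times."""
    (qa, ta), (qb, tb) = p, q
    if tb <= ta:
        return False
    reached = {qa}
    for t in range(ta, tb):
        for a, b in gates.get(t, []):
            if a in reached or b in reached:
                reached |= {a, b}
    return qb in reached

# Poisson sprinkling on the vertex set with a hypothetical density rho.
rng = np.random.default_rng(6)
rho = 0.5
sites = [(q, t) for q in range(K) for t in range(T + 1)]
n_points = min(int(rng.poisson(rho * len(sites))), len(sites))
chosen = rng.choice(len(sites), size=n_points, replace=False)
sample = [sites[i] for i in chosen]
relations = [(p, q) for p in sample for q in sample if causally_precedes(p, q)]
print(len(sample), len(relations))
```

The resulting list of ordered pairs is a finite causal relation on the sprinkled points; in this toy version the "light cone" of a point simply grows by one gate per layer.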

4.4.2 Holographic fluctuations and Jarzynski identity

According to the above discussion, any geodesic gives rise to a bulk spacetime. Therefore, the fluctuating trajectories in (i.e., trajectories with near-minimal complexity) can be interpreted as fluctuations of the bulk geometry, that is, holographic fluctuations. To capture the structure of holographic fluctuations, it suffices to describe the structure of the fluctuating trajectories in , based on the following three premises:

1. The complexity is sensitive to the applied 2-local interactions (quantum gates) between an arbitrary pair of qubits, but not to which particular pair of qubits the unitary gate is applied to 21 .
2. A complexity functional determines a geodesic in , just as an action functional specifies a geodesic in classical mechanics.
3. Any trajectory in arises from the boundary system via the - correspondence; thus, perturbing the boundary system by inserting quantum gates is equivalent to perturbing the trajectory in .

Fig. 5 depicts the structure of the holographic fluctuations, which can be summarized as follows: the trajectories coincide with for all , except at one moment when a local unitary gate (Footnote 24: This gate can be regarded as the arbitrarily fast local intervention.) is applied to an arbitrary pair of qubits and and is immediately followed by its inverse 21 . Because the gate generates an instantaneous interaction between qubits and and the inverse gate cancels its effect, a “wormhole” that immediately “evaporates” is created between the two points and in the dual bulk spacetime. In 21 , Eq. (48) was introduced to model these fluctuations. Because the complexity enters Eq. (48) as a quadratic action, the fluctuating trajectories in can be understood as Brownian trajectories in ; they are solutions of Eq. (24), which was invoked as a toy model of black holes in 46 . In summary, the bulk geometry modeled by Eq. (48) is a spacetime in which “wormholes” fluctuate in and out of existence between all pairs of spacetime points 21 .
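The statement that a gate followed immediately by its inverse leaves no trace on the trajectory can be checked directly with matrices. The sketch below uses hypothetical random unitaries: `U` stands for the circuit up to the moment of the intervention on three qubits, and `G` is a random two-qubit gate acting on qubits 0 and 1. The intermediate operator differs from `U` (the transient interaction, or "wormhole"), but applying the inverse gate restores `U` exactly.

```python
import numpy as np

rng = np.random.default_rng(7)

def random_unitary(dim, rng):
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))      # fix column phases

n_qubits = 3
U = random_unitary(2**n_qubits, rng)                 # hypothetical circuit up to time t
G = np.kron(random_unitary(4, rng), np.eye(2))       # two-qubit gate on qubits 0 and 1

U_with_gate = G @ U                                  # the "wormhole" opens
U_after = G.conj().T @ U_with_gate                   # the inverse gate: it "evaporates"
print(np.allclose(U_after, U), np.allclose(U_with_gate, U))
```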

We now comment on holographic fluctuations from the perspective of the Jarzynski framework. Several observations suggest that the results of the previous sections can give a better quantitative description of holographic fluctuations. First, holographic fluctuations follow a stochastic process in , so their structure can be modeled by the partition function Eq. (48) constructed via the path-integral approach. Given this partition function, the Jarzynski identity, Eq. (71), follows, and with it the complexity version of the fluctuation theorem, Eq. (98), which describes complexity fluctuations. Second, by the second premise, complexity fluctuations equivalently describe the fluctuations of trajectories in , and these fluctuating trajectories give rise to the bulk spacetime; therefore, the fluctuation theorem describes fluctuations of both the complexity and the bulk geometry. Third, the fluctuation-dissipation theorem, which is usually used to study the response of a system to external influences, connects the average cumulative complexity production with the complexity fluctuations. Thus, Eq. (112) may be applicable to detecting the response of the bulk geometry to perturbations applied to the boundary quantum system; in particular, it could be used to quantify complexity fluctuations through the average cumulative complexity production.

5 Example: transverse field Ising model

The Jarzynski framework obtained above leads to a few interesting conclusions that call for testing. Camilo and Teixeira 73 studied the complexity of the transverse field Ising model (TFIM). We follow their steps to numerically test two of our main proposals, Eqs. (95) and (96). For simplicity, we only consider two phases: the phase with ferromagnetic order along the direction (FMZ) and the paramagnetic phase (PM). We do not reproduce the detailed derivation here but only briefly review it; one can refer to 71 for a detailed derivation.

5.1 Model settings

The TFIM is defined by the time-dependent Hamiltonian

| (113) |

where denotes the coupling constants, and and are the Pauli matrices acting on the th lattice site. The transverse field is the perturbation, comprising a constant term and a monochromatic driving term with frequency . Assuming a closed lattice with periodic boundary conditions , restricting to be even, and applying the Fourier transformation, we can rewrite the Hamiltonian in terms of Jordan-Wigner fermions as :

| (114) |

where denotes the Brillouin zone, , , and the trivial contribution is neglected. Eq. (114) is the Bogoliubov-de Gennes (BdG) Hamiltonian, which conserves momentum and parity; the latter implements the symmetry that decomposes the Hilbert space into a direct sum of Neveu-Schwarz (NS) sectors. The system evolves according to the Schrödinger equation, and the dynamics is confined to the two-level Nambu subspace spanned by . The state of the system at any time takes the form

| (115) |

where the coefficients obey the Schrödinger equation and the spinor is denoted by .
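The decomposition into independent two-level momentum sectors can be checked numerically in the simplest setting. The sketch below assumes the static, isotropic Ising chain H = -J Σ σ^x_j σ^x_{j+1} - h Σ σ^z_j with periodic boundaries and even N; this is a special case chosen for illustration, not the driven Hamiltonian of Eq. (113). In this case the ground state lies in the even-parity (NS) sector with momenta k = (2n-1)π/N, and its energy is minus the sum of the BdG quasiparticle energies ε_k = 2√(J² + h² - 2Jh cos k) over positive k. The code compares this with exact diagonalization of the spin chain.

```python
import numpy as np
from functools import reduce

sx = np.array([[0, 1], [1, 0]], dtype=float)
sz = np.array([[1, 0], [0, -1]], dtype=float)
I2 = np.eye(2)

def site_op(op, j, N):
    """Operator `op` acting on site j of an N-site chain (identity elsewhere)."""
    return reduce(np.kron, [op if k == j else I2 for k in range(N)])

def tfim_ground_energy_ed(N, J, h):
    """Exact diagonalization of H = -J sum sx_j sx_{j+1} - h sum sz_j with PBC."""
    H = np.zeros((2**N, 2**N))
    for j in range(N):
        H -= J * site_op(sx, j, N) @ site_op(sx, (j + 1) % N, N)
        H -= h * site_op(sz, j, N)
    return np.linalg.eigvalsh(H)[0]

def tfim_ground_energy_bdg(N, J, h):
    """Minus the sum of BdG quasiparticle energies over positive NS momenta."""
    k = (2 * np.arange(1, N // 2 + 1) - 1) * np.pi / N
    eps = 2 * np.sqrt(J**2 + h**2 - 2 * J * h * np.cos(k))
    return -eps.sum()

for N in (4, 6):
    for h in (0.5, 1.0, 1.5):
        print(N, h, tfim_ground_energy_ed(N, 1.0, h), tfim_ground_energy_bdg(N, 1.0, h))
```

The agreement reflects the fact that, after the Jordan-Wigner and Fourier transformations, each pair of momenta (k, -k) contributes an independent 2×2 problem.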

Suppose that the system is initialized in the state at and evolves during a time interval to a target state through a specific unitary operator , where represents the th momentum sector of . The boundary conditions and are fixed. Applying the Bogoliubov transformation, the complexity metric for each momentum sector can be written in terms of Hopf coordinates as

| (116) |

where and correspond to two phases and

| (117) |

denotes the linear profile, where . The anisotropy parameter 74 ; 75 and the eigenvalues of the BdG Hamiltonian are given by

| (118) |

respectively. They are obtained from the Bogoliubov transformation and the high-frequency driving approximation 73 . Here, denotes the Bessel function and is called the detuning parameter. Summing Eq. (116) over all and using Eq. (20), we obtain the complexity in the following form:

| (119) |

where denotes the complexity of the th momentum sector alone.

We perform a numerical simulation with the parameters , , , and and the value () fixed. Because the motion on is ergodic, we use time averages instead of ensemble averages in the simulation. In the limit , the ensemble average can be replaced exactly by the time average:

| (120) |

where stands for either of two related quantities: the complexity or the cumulative complexity production .
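Replacing ensemble averages with time averages in Eq. (120) relies on ergodicity. The Python sketch below illustrates the idea on a toy system chosen here purely for illustration: the irrational rotation x ↦ x + α (mod 1) on the unit interval, which is ergodic with respect to the uniform measure. It is not the dynamics on the unitary group used in the simulation. The function names and the observable are hypothetical.

```python
import math


def time_average(observable, x0, alpha, n_steps):
    """Time average of an observable along the toy ergodic map x -> x + alpha (mod 1)."""
    x, total = x0, 0.0
    for _ in range(n_steps):
        total += observable(x)
        x = (x + alpha) % 1.0
    return total / n_steps


def ensemble_average(observable, n_points):
    """Average over the invariant (uniform) measure on [0, 1), midpoint rule."""
    return sum(observable((i + 0.5) / n_points) for i in range(n_points)) / n_points


f = lambda x: math.cos(2 * math.pi * x) ** 2
golden = (math.sqrt(5) - 1) / 2  # irrational rotation number
print(time_average(f, 0.1, golden, 100_000), ensemble_average(f, 1000))
```

Both averages approach 1/2. The time average converges only as the number of steps grows, which mirrors the requirement that Eq. (120) holds strictly only in the long-time limit.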

5.2 Numerical results

Two features of the numerical simulation support our previous analytical results:

1. The computational free energy difference provides an upper bound on the average complexity, and this bound is not violated in the simulation. This supports Eq. (96).

2. The average cumulative complexity production is non-negative. This supports Eq. (95), the mathematical expression of the “Kelvin-Planck-like” statement of the second law of complexity.
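Both checks rest on Jensen's inequality applied to the Jarzynski identity. The Python sketch below uses standard thermodynamic notation: work W and temperature T, with k_B = 1. It shows how a free energy difference is estimated from samples through ΔF = -T ln⟨e^{-W/T}⟩, and why the sample's average dissipated work ⟨W⟩ - ΔF is never negative. The exponentially distributed "work" data are hypothetical. The mapping of W, T and ΔF onto the complexity quantities in Eqs. (95) and (96) is fixed by the main text and is not reproduced here.

```python
import math
import random


def jarzynski_free_energy(work_samples, temperature):
    """Estimate ΔF = -T ln <exp(-W/T)> from samples, using log-sum-exp for stability."""
    beta = 1.0 / temperature
    exponents = [-beta * w for w in work_samples]
    m = max(exponents)
    log_mean = m + math.log(sum(math.exp(e - m) for e in exponents) / len(exponents))
    return -log_mean / beta


def mean(xs):
    return sum(xs) / len(xs)


random.seed(0)
T = 1.0
# Hypothetical work samples: W = E - 0.3 with E exponentially distributed (rate 1)
works = [random.expovariate(1.0) - 0.3 for _ in range(10_000)]
dF = jarzynski_free_energy(works, T)
dissipated = mean(works) - dF  # >= 0 by Jensen's inequality
```

For this distribution the exact value at T = 1 is ΔF = ln 2 - 0.3 ≈ 0.393, so the estimate can be checked directly.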

The Hamiltonian in Eq. (113) realizes different regimes depending on the value of the detuning parameter . Varying from to and then to drives the system from the FMZ phase through a quantum critical point (QCP) to the PM phase 73 . For simplicity, we do not consider the critical behavior at the QCP. Instead, we use Eq. (120) to calculate the time-averaged complexity-related quantities for the FMZ and PM phases.

Recall that correspond to the FMZ and PM phases, respectively. In Figs. 6(a) and 6(b), we plot the complexity , the time-averaged complexity , the computational free energy difference , and the average cumulative complexity production as functions of the time interval .

In both the FMZ and PM phases the complexity initially grows approximately linearly, but the two phases subsequently behave differently. The FMZ phase is susceptible to the time-dependent transverse field, so its complexity does not stabilize. Instead, it fluctuates strongly around a certain value over long time intervals (Fig. 6(a)). In contrast, the complexity of the PM phase stabilizes around a certain value as the time interval grows (Fig. 6(b)). The average cumulative complexity production of the PM phase gradually decreases and eventually vanishes. Physically, this follows from the disordered character of the PM phase: “non-local operations are required to create order in a state of the PM phase, but local operations would maintain disorder of such a state. Consequently, the influence of the transverse field is suppressed to prevent the system from creating non-local gates to order the system when is large 73 .” For the FMZ phase, by contrast, the average cumulative complexity production decreases gradually but does not drop to zero, even over long periods. The computational free energy differences for the FMZ and PM phases, shown in Figs. 6(a) and 6(b) respectively, are obtained by applying the Jarzynski identity to the corresponding changes.

Having simulated the dissipation for the FMZ (Fig. 6(a)) and PM (Fig. 6(b)) phases, we can use the fluctuation-dissipation theorem for the complexity, Eq. (112), to discuss the dissipative behaviors of the two phases further and their relation to holographic fluctuations. First, consider the evolution of the average cumulative complexity production (the dissipative behavior) in the large regime, where is Gaussian by the central limit theorem. Fig. 6(a) shows that the FMZ phase reaches no steady state within a short period (its complexity fluctuates strongly) and that its average cumulative complexity production remains large. Meanwhile, the average cumulative complexity production of the PM phase (Fig. 6(b)) decreases and tends to zero for , indicating that the dissipation vanishes for large . Physically, this means that the quantum system reaches its average complexity upper bound (i.e., ). As a result, no resource can be extracted from the system, because the time asymmetry is broken for every possible trajectory in 28 , and reversibility holds for all possible trajectories. Additionally, since can only be attained when , we regard as a quasi-static limit for the complexity, in analogy with thermodynamics. In this limit, Eq. (96) holds with equality and the average complexity of the system reaches its upper bound. (Footnote 25: Brown and Susskind called this “complexity equilibrium” 8 . However, because “equilibrium” usually describes macroscopic quantities in thermodynamics, we avoid the term in this study to prevent confusion.) As mentioned in Section 4.4.2, complexity fluctuations can be regarded as bulk geometry fluctuations, and the fluctuation-dissipation theorem states that ; hence, in principle, we can construct a bulk spacetime from a topological space and simulate its fluctuations using Eq. (112). 
In particular, let the TFIM be our boundary quantum system with a time-dependent perturbation (the transverse field). The transverse field causes the geodesics in to fluctuate. We can then use the method of 21 to construct a dual topological space from the TFIM to serve as the bulk spacetime. Because the transverse field makes the complexity vary, it also changes the geometric structure of the bulk spacetime. We leave this exploration to future work.
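In the Gaussian regime invoked above, the fluctuation-dissipation relation takes a closed form. In the ordinary thermodynamic setting (k_B = 1), a Gaussian work distribution with mean μ and variance σ² gives ⟨e^{-W/T}⟩ = exp(-μ/T + σ²/(2T²)), hence ⟨W⟩ - ΔF = σ²/(2T). The Python sketch below checks this thermodynamic analog by sampling. The precise complexity counterpart is Eq. (112), whose prefactors are not reproduced here, and the parameter values are arbitrary choices.

```python
import math
import random


def gaussian_dissipation(mu, sigma, temperature, n_samples, seed=1):
    """Return (sampled <W> - ΔF, predicted sigma^2 / (2T)) for W ~ N(mu, sigma^2)."""
    rng = random.Random(seed)
    beta = 1.0 / temperature
    samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
    mean_w = sum(samples) / n_samples
    # Jarzynski estimate of the free energy difference from the same samples
    delta_f = -math.log(sum(math.exp(-beta * w) for w in samples) / n_samples) / beta
    predicted = sigma**2 / (2.0 * temperature)
    return mean_w - delta_f, predicted


sampled, predicted = gaussian_dissipation(mu=1.0, sigma=0.5, temperature=1.0, n_samples=100_000)
```

The dissipated part depends only on the variance of the distribution. This is the sense in which the fluctuations alone control the dissipation in the Gaussian regime.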

We end this section with a remark. As an averaging procedure, Eq. (120) may not describe the small regime accurately, because it holds strictly only when . Time averaging might therefore not be the best approach for such simulations. Ensemble averaging with the Metropolis algorithm over Monte Carlo sweeps may be more practical. This approach has already been used to model an analogous scenario for the ordinary Jarzynski identity 76 .
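The Monte Carlo alternative can be sketched for the ordinary Jarzynski identity. The Python example below uses a toy model chosen here for illustration: a particle in a harmonic potential U(x) = k x²/2 whose stiffness is switched from k0 to k1 in discrete steps. It is neither the model of 76 nor the complexity version of the identity. Each switch contributes work ΔU at fixed x, and Metropolis moves then relax x at the new stiffness. The exponential average is compared with the exact ΔF = (T/2) ln(k1/k0). All parameter names and values are assumptions.

```python
import math
import random


def metropolis_jarzynski(k0=1.0, k1=2.0, T=1.0, n_traj=20_000, n_lambda=10,
                         sweeps=2, step=0.8, seed=2):
    """Return (Jarzynski estimate of ΔF, exact ΔF) for U(x) = k x^2 / 2, k: k0 -> k1."""
    rng = random.Random(seed)
    beta = 1.0 / T
    ks = [k0 + (k1 - k0) * i / n_lambda for i in range(n_lambda + 1)]
    acc = 0.0
    for _ in range(n_traj):
        x = rng.gauss(0.0, math.sqrt(T / k0))  # equilibrium initial state at k0
        work = 0.0
        for k_old, k_new in zip(ks[:-1], ks[1:]):
            work += 0.5 * (k_new - k_old) * x * x  # work done by the parameter switch
            for _ in range(sweeps):  # Metropolis relaxation at fixed k_new
                x_trial = x + rng.uniform(-step, step)
                dU = 0.5 * k_new * (x_trial**2 - x**2)
                if dU <= 0 or rng.random() < math.exp(-beta * dU):
                    x = x_trial
        acc += math.exp(-beta * work)
    dF_jarzynski = -T * math.log(acc / n_traj)
    dF_exact = 0.5 * T * math.log(k1 / k0)
    return dF_jarzynski, dF_exact


dF_j, dF_exact = metropolis_jarzynski()
```

Every Metropolis move satisfies detailed balance with respect to the current potential, so the identity holds for any number of sweeps, even when the system never equilibrates between switches. Only the statistical error depends on these choices.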

6 Conclusions and Outlooks

This study is motivated by Nielsen’s complexity geometry and the elegant proof of the Jarzynski identity by Hummer and Szabo. We introduced the path integral in the context of complexity geometry and used it to derive a complexity version of the Jarzynski identity. In addition, we made remarks on several complexity-related topics based on the obtained identity. The first remark is that provides new evidence for the existence of a well-defined resource theory of uncomplexity 8 ; 87 . The second and most crucial remark is an extension of the proposal made by Brown and Susskind, namely the second law of complexity 8 . Our focus differs slightly from theirs. The quantum system we considered is governed by a time-dependent Hamiltonian that forms a classical auxiliary system with stochastic features, whereas Brown and Susskind considered a quantum system with a time-independent Hamiltonian forming a chaotic auxiliary system. We extended their original second law to the case involving a stochastic auxiliary system. Based on trajectory thermodynamics, we argued that the complexity version of the Jarzynski identity provides two mathematical expressions of the second law of complexity. Third, we derived a fluctuation-dissipation theorem for complexity by analogy with thermodynamics. It links the fluctuation of complexity to a crucial quantity, namely the average cumulative complexity production. The last remark concerns holographic fluctuations. Any geodesic in the space of unitary operators encodes a bulk spacetime with an extra dimension via the principle of minimal complexity 21 , and any such geodesic corresponds to a value of complexity. Therefore, complexity fluctuations can play the role of bulk geometry fluctuations. 
Furthermore, because our framework describes complexity fluctuations, applying the complexity version of the Jarzynski identity may offer a new perspective on the exploration of holographic fluctuations.

We have only touched on some aspects of these issues, and many topics remain to be tackled. Several of them are listed below:

- To explore holographic fluctuations, one must sample points from a Poisson distribution on the point set , which is a discrete process. Consequently, integrals in are hard to evaluate. An important question is whether a proper continuum limit exists. In that limit, the resulting bulk spacetime for CFTs should converge to AdS 21 .

- In our discussion, the boundary system is an ordinary quantum system of qubits rather than a standard quantum field theory. Path integral complexity 78 ; 79 is a candidate for generalizing our formalism to quantum field theory. Choosing a suitable definition of quantum complexity would make it possible to link quantum computational complexity directly with holographic complexity 80 . This generalization may provide deeper insights into the AdS/CFT duality, e.g., into the Complexity=Action 19 ; 20 and Complexity=Volume 16 ; 17 ; 18 conjectures.

- We chose the TFIM as an example. It would be interesting to know whether our results apply to other models, such as the SYK model 37 ; 38 ; 39 ; 40 , or whether the Quantum Brownian Circuit can be simulated directly. The Quantum Brownian Circuit is quite complicated, and a quantum simulation might be needed. For reference, Ref. 81 recently proposed a quantum simulation for calculating the Jarzynski identity.

Acknowledgements.

We would like to thank Peng Cheng, Shao-Feng Wu and Yu-qi Lei for helpful discussions. This work is partly supported by NSFC (No. 11875184).

Appendix A Fokker-Planck equations

The time-dependent operators in Eqs. (25) and (51) are Fokker-Planck operators, so it is helpful to understand how the Fokker-Planck equations are derived 82 . We first review the standard derivation of the Fokker-Planck equation and then generalize it to a curved space equipped with a non-Euclidean metric 83 . The latter can be used directly in Eq. (25).

Let us consider the stochastic differential equations (SDEs)

| (121) |

where denotes a stochastic variable, and are functions of , and denotes an independent Brownian motion with unit variance per unit time. We introduce an arbitrary function and use Ito’s rule to derive the Fokker-Planck equation:

| (122) |

Taking averages on both sides, we immediately obtain

| (123) |

where represents the distribution function satisfying and . Using integration by parts together with the conditions and , we obtain

| (124) |

where is independent of . Because the function is arbitrary, this equality implies the Fokker-Planck equation, i.e.

| (125) |

where the time-dependent operator is the Fokker-Planck operator and denotes the nabla operator. Eq. (25) is the Fokker-Planck equation for a vector Ito stochastic equation, in which , and become a matrix with the same dimension as .
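The derivation above can be checked numerically in one dimension. For illustration only, the Python sketch below assumes the Ornstein-Uhlenbeck choice of drift -θx and constant noise amplitude b in Eq. (121). It integrates the SDE with the Euler-Maruyama scheme and compares the sample variance with the variance of the Gaussian solution of the corresponding Fokker-Planck equation, ∂_t p = ∂_x(θ x p) + (b²/2) ∂_x² p, started from p(x, 0) = δ(x). The names theta and b and the step sizes are assumptions, not quantities defined in the text.

```python
import math
import random


def euler_maruyama_ou(theta, b, t_final, dt, n_paths, seed=3):
    """Simulate dx = -theta * x dt + b dW from x(0) = 0; return the final positions."""
    rng = random.Random(seed)
    n_steps = int(round(t_final / dt))
    sqrt_dt = math.sqrt(dt)
    finals = []
    for _ in range(n_paths):
        x = 0.0
        for _ in range(n_steps):
            x += -theta * x * dt + b * sqrt_dt * rng.gauss(0.0, 1.0)
        finals.append(x)
    return finals


def fokker_planck_variance(theta, b, t):
    """Variance of the Gaussian Fokker-Planck solution with p(x, 0) = δ(x)."""
    return b**2 / (2 * theta) * (1 - math.exp(-2 * theta * t))


finals = euler_maruyama_ou(theta=1.0, b=1.0, t_final=1.0, dt=0.01, n_paths=4000)
sample_var = sum(x * x for x in finals) / len(finals)  # the mean is zero by symmetry
```

The Euler-Maruyama step carries an O(dt) bias, so the agreement improves as dt is reduced.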

In complexity geometry, the configuration space is the group manifold equipped with a non-Euclidean metric, Eq. (17). We must therefore introduce some modifications to derive the Fokker-Planck equation governing the time evolution of distributions on a Riemannian manifold. The first modification concerns the volume element

| (126) |

where denotes the Haar measure, namely Eq. (40), which makes the volume element independent of the choice of coordinate system. The gradient, divergence, and Laplacian (or Laplace-Beltrami operator 83 ) are modified accordingly.

| (127) |

| (128) |

where denotes the component of vector and

| (129) |

represents an arbitrary function. Applying these modifications to the Fokker-Planck equation yields

| (130) |

as the Fokker-Planck equation for the group manifold , where is a vector in . More generally, Eq. (130) can be regarded as the Fokker-Planck equation governing the time evolution of distributions in any curved space, with taken as a vector in that space.
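To experiment with covariant operators of the kind in Eqs. (127)-(129), the Python sketch below evaluates the standard coordinate expression of the Laplace-Beltrami operator, Δf = (1/√g) ∂_i(√g g^{ij} ∂_j f), by central finite differences. For brevity it is restricted to diagonal metrics (an assumption). It is checked on the unit 2-sphere rather than on the group manifold. There, f = cos θ is an ℓ = 1 spherical harmonic, so Δf = -2 cos θ.

```python
import math


def laplace_beltrami(f, metric_diag, q, h=1e-4):
    """Δf = (1/√g) Σ_i ∂_i(√g g^{ii} ∂_i f) for a diagonal metric, by central differences.

    metric_diag(p) returns the diagonal components g_ii at the coordinate point p.
    """
    def sqrt_g(p):
        return math.sqrt(math.prod(metric_diag(p)))

    def shifted(p, i, s):
        return [a + (s if j == i else 0.0) for j, a in enumerate(p)]

    def flux(p, i):
        # √g g^{ii} ∂_i f evaluated at p
        dfi = (f(shifted(p, i, h)) - f(shifted(p, i, -h))) / (2 * h)
        return sqrt_g(p) / metric_diag(p)[i] * dfi

    total = 0.0
    for i in range(len(q)):
        total += (flux(shifted(q, i, h), i) - flux(shifted(q, i, -h), i)) / (2 * h)
    return total / sqrt_g(q)


sphere = lambda p: (1.0, math.sin(p[0]) ** 2)  # unit 2-sphere, p = (theta, phi)
f = lambda p: math.cos(p[0])                   # l = 1 spherical harmonic
print(laplace_beltrami(f, sphere, [0.7, 0.3]), -2 * math.cos(0.7))
```

The factor √g inside and outside the derivative is what makes the result independent of the coordinate choice. This plays the same role as the Haar measure in Eq. (126).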

To better understand the Fokker-Planck equation, we consider a special case, Eq. (24). Note that is independent of ; we therefore denote it by . Recall that is a generalized Pauli matrix, which is anti-Hermitian. Hence, its square is

| (131) |

Note that . Substituting and Eq. (131) into Eq. (130), we obtain the Fokker-Planck equation governing the time evolution of the distributions of the Quantum Brownian Circuit in .
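The property of anti-Hermitian generators used around Eq. (131) can be verified directly. As an illustration, the Python sketch below assumes that the anti-Hermitian generalized Pauli matrices are i times n-qubit Pauli strings; the normalization used in Eq. (131) may differ. It checks that each such generator squares to minus the identity, using plain nested lists so that no external library is needed. The helper names are hypothetical.

```python
import itertools

I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]
Z = [[1, 0], [0, -1]]


def kron(a, b):
    """Kronecker product of two square matrices stored as nested lists."""
    n, m = len(a), len(b)
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(n * m)] for i in range(n * m)]


def matmul(a, b):
    """Plain matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def anti_hermitian_generator(labels):
    """Return i * P for the Pauli string P given by labels, e.g. "XZ"."""
    table = {"I": I2, "X": X, "Y": Y, "Z": Z}
    op = [[1]]
    for c in labels:
        op = kron(op, table[c])
    return [[1j * v for v in row] for row in op]


def squares_to_minus_identity(labels):
    sq = matmul(anti_hermitian_generator(labels), anti_hermitian_generator(labels))
    dim = len(sq)
    return all(abs(sq[i][j] - (-1 if i == j else 0)) < 1e-12
               for i in range(dim) for j in range(dim))


all_two_qubit_ok = all(squares_to_minus_identity("".join(p))
                       for p in itertools.product("IXYZ", repeat=2))
```

Because every Pauli string squares to the identity, multiplying by i makes the generator anti-Hermitian and fixes its square at -1, independent of the string.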

Appendix B Path integral in

Performing path integrals in , a curved manifold equipped with the complexity metric, requires a path integral measure. This measure generally contains an additional curvature correction in its exponent, because the derivation of the path integral involves vector operations between two points on . However, because this additional term cannot be absorbed into the measure, the resulting partition function takes a form different from Eq. (48). Ref. 52 provided a method for performing the path integral in a curved space without any additional curvature correction in the exponent, by introducing a factor that is contained in the measure. We briefly review this method here.

For consistency, we take as the eigenstates of the position operators, which are denoted by .

| (132) |

Consider a particle in the system that evolves from at to at . Before deriving the general case, we first assume that is flat, so that the complexity metric reduces to the standard inner-product metric. We also suppose that a source term is added to the complexity, which then becomes .

| (133) |