Toward Fine-Grained 3D Visual Grounding through Referring Textual Phrases

Abstract

Recent progress in 3D scene understanding has explored visual grounding (3DVG) to localize a target object through a language description. However, existing methods only consider the dependency between the entire sentence and the target object, ignoring fine-grained relationships between contexts and non-target ones. In this paper, we extend 3DVG to a more fine-grained and interpretable task, called 3D Phrase Aware Grounding (3DPAG). The 3DPAG task aims to localize the target objects in a 3D scene by explicitly identifying all phrase-related objects and then conducting the reasoning according to contextual phrases. To tackle this problem, we manually labeled about 227K phrase-level annotations using a self-developed platform, from 88K sentences of widely used 3DVG datasets, i.e., Nr3D, Sr3D and ScanRefer. By tapping on our datasets, we can extend previous 3DVG methods to the fine-grained phrase-aware scenario. It is achieved through the proposed novel phrase-object alignment optimization and phrase-specific pre-training, boosting conventional 3DVG performance as well. Extensive results confirm significant improvements, i.e., previous state-of-the-art method achieves 3.9%, 3.5% and 4.6% overall accuracy gains on Nr3D, Sr3D and ScanRefer respectively.

1 Introduction

Hitherto, vision-language referring achieves significant progress in 2D computer vision community [19, 29, 41, 26, 30], and has more and more applications for 3D scene understanding [5, 2, 7]. At the initial stage of 3D visual-language understanding, several approaches are proposed for 3D Visual Grounding (3DVG) [4, 1, 13, 44], i.e., localizing a target object in the 3D scene based on an object-related description. However, 3DVG follows the mode of ‘one sentence to one object’, ignoring fine-grained cues of the 3D scene, i.e., multiple object entities are generally mentioned in the query sentence. Different from a limited number of objects in images for 2DVG, the object number in a real-world 3D scene is much larger and the spatial relationship is usually more complicated. As shown in Figure 1 (a), when a neural listener aims to localize the target “cabinet”, it is difficult to simultaneously capture the “door” at a long-distance “opposite” position of the room. Existing methods learn the above relationship only through data-driven ways, i.e., implicitly exploiting positional encoding[43] or graph’s edges[44]. Thus, they are difficult to capture fine-grained cues of non-target objects. Moreover, these methods cannot give an explainable result when the 3DVG model fails due to the inference error of the non-target objects. Hence, there is still a great demand for a more fine-grained 3DVG paradigm.

In this work, we address the above problem following the principle of ‘one sentence to multiple objects’. To this end, we propose the novel Phrase-Aware 3D Grounding (3DPAG) task as an extension of the existing 3DVG. Compared with 3DVG only focusing on the described target object, 3DPAG also simultaneously localizes non-target objects in the 3D scene by referring to contextual phrases from the description. As illustrated in Figure 1 (b), 3DPAG requires the neural listener to localize all the objects mentioned in the sentence. Such a scheme enables the model to explore the complicated relationships between multiple objects and language much easier, thus achieving a more comprehensive understanding. To further facilitate the task, we extend the previous 3DVG datasets, i.e., ReferIt3D [1] and ScanRefer [4], to their phrase-annotated versions. Specifically, we develop three new datasets and name them as Sr3D++, Nr3D++ and ScanRefer++, respectively. The former two are built upon ReferIt3D [1], which contains 174.2K phrases annotations from 83.6K template sentences and 116.3K phrases annotations from 41.5K natural sentences, respectively. Similarly, ScanRefer++ has 110.5K phrase annotations from 46.2K natural descriptions.

Based on these newly annotated datasets, we modify previous approaches with proposed techniques and make them accessible to the more challenging 3DPAG task, benchmarking the new task as well. There are two plug-and-play techniques proposed: 1) Optimizing the phrase-object alignment (POA) map, which is obtained from the multi-head cross-attention layer. 2) Conducting phrase-specific pre-training strategy. Several phrase-specific masks alternate the target object to other mentioned objects, making the model easier to capture fine-grained contexts in the 3D scene. Experimental results confirm that adopting 3DPAG can effectively boost the performance of several existing 3DVG methods. By applying our proposed datasets and methodology, even state-of-the-art method [14] gains great improvements on standard 3DVG task, i.e., achieving 59.0%, 68.0%, and 59.9% accuracies on Nr3D, Sr3D, and ScanRefer datasets, respectively.

Our main contributions can be summarized as follows:

-

-

We introduce the phrase-aware 3D visual grounding (3DPAG) task based on the fine-grained 3DVG datasets, containing about 227K newly phrase-level manual annotations from 88K sentences.

-

-

We propose novel techniques, named phrase-object alignment map optimization and phrase-specific pre-training. They make previous 3DVG models accessible to the more challenging 3DPAG task.

-

-

The developed dataset and components confirm that the 3DPAG greatly improves the 3DVG performance, and the enhanced approach achieves state-of-the-art performance on several benchmarks.

2 Related Work

2.1 3D Visual Grounding

3D visual grounding (3DVG) is an emerging research field. ScanRefer [4] and ReferIt3D [1] are pioneers for the 3DVG, in which both datasets and baseline methods are concurrently proposed. Previous studies [4, 1, 13, 44] formulate 3DVG as a matching problem. They first generate multiple 3D object proposals through either ground truths [1] or a 3D object detector [4], and then fuse 3D proposals with the language embeddings to predict proposals’ similarity scores. The proposal with the highest score will be selected as the target object. Recent works [37, 43, 47, 15] use Transformer [40] architecture to encode the relationship between language and objects’ proposals, where [43] and [37] exploit additional 2D images and orientation annotation during the training stage to boost the performance, respectively. Recently, [48, 3] combine the task of 3DVG with the task of 3D dense captioning to mutually enhance the performance of the two tasks. MVT [14] explores multi-view information in the 3D scene to make a more reliable grounding. Nevertheless, all of these methods exploit the entire sentence to identify a single target object. Such a scheme overlooks the fine-grained relationships between the language and all objects, especially non-target objects, making the grounding process unexplainable as well.

2.2 Phrase Grounding on Images

Different from visual grounding which adopts the whole sentence to localize the target object on images [41], phrase grounding is defined as spatial localization with a textual phrase. Existing approaches exploit different techniques to realize phrase grounding on 2D images, where some of them map the visual and textual modalities onto the same feature space [32, 41], and the others aim to reconstruct the corresponding phrase [38]. For the development of architecture, Hinami & Satoh [12] train the object detectors with an open vocabulary, Plummer et al. [31] condition the textual representation on the phrase category. Recent approaches integrate object proposals with Transformer models [22, 28]. Besides, some previous works focus on utilizing unsupervised [38], weakly supervised [46, 42, 6] manners to achieve phrase grounding.

Different from image-based phrase grounding, our 3D phrase aware grounding (3DPAG) has the following properties: 1) In the dataset aspect, sentences used in 2D phrase grounding are generally captions for the entire scene. In contrast, since our datasets are initiated for visual grounding, the majority of sentences are descriptions of the target object. 2) In the methodology aspect, approaches in 2D phrase grounding only take a textual phrase and the corresponding image as inputs. By contrast, we utilize the entire sentence and the 3D scene as inputs to localize the target object, while identifying all the phrase-related objects mentioned in the context is an auxiliary task.

2.3 Vision-Language Pre-training

Vision-language pre-training has become a hotspot in cross-modal learning [39, 23, 16, 36]. CLIP [35] uses independent encoders to extract features of language and vision separately and then aligns textual and visual information into a joint semantic space with a contrastive criterion. Existing methods usually pre-train transformer models on large-scale image-text paired datasets [25, 20] and fine-tune on downstream tasks. These approaches have achieved state-of-the-art results across various multi-modal tasks such as visual question answering [21], image captioning [24], phrase grounding [18] and image-text retrieval [45]. Nevertheless, due to the limited number of available 3D data and the difficulty of 3D representation learning, there is no pre-training study for 3DVG till now.

3 Problem Statement

The problem of 3DVG is to localize a specific object in a 3D scene using natural language. In line with previous studies, the 3D scene is represented by object proposals , which can be extracted either through ground truth objects [1] or a 3D object detector [4]. Language query with token length is embedded to word features by the pre-trained text encoder, e.g., BERT [9]. Previous 3DVG approaches conduct cross-modal fusion between and to generate confidence scores for each object:

| (1) | ||||

| (2) |

where are object features with dimensions, and are confidence scores of objects. The proposal that has the highest score will be selected as the target object.

Since previous works only focus on localizing a single target object, other non-target objects mentioned in the input sentence are overlooked. Such information about all objects is important for humans to identify the target objects, but it is hard for neural networks to understand without direct supervision. To address this problem, we propose a task, namely 3D phrase-aware grounding (3DPAG), that grounding the non-target objects along with the target one:

| (3) |

To achieve this goal, we simultaneously predict a phrase-object alignment (POA) map together with confidence scores . In a POA map, the element in the -th row and -th column represents the alignment score between -th object proposal and -th language token, as shown in Figure 2(b). Since not all object proposals are mentioned in the sentence, we set an extra [NoObj] token besides the original tokens to represent the proposal without the corresponding phrase.

In this manner, we can not only obtain the target object by ranking the confidence scores , but also gain fine-grained correspondence of non-target ones through the proposed POA map. In practice, if we want to find out an object related to a certain token, we can rank the corresponding column and select the object that has the highest score. If there are multiple tokens describing the same object, we average the scores of these columns before ranking them.

4 Methodology

In this section, we introduce our proposed components for 3DPAG. We introduce our baseline in Sec. 4.1. In Sec. 4.2, we demonstrate the phrase-object alignment (POA) map optimization, which makes the grounding more fine-grained and effective. In Sec. 4.3, we design a phrase-specific pre-training, which utilizes the phrase annotations and fine-grained cues, further boosting the performance.

4.1 3D Visual Grounding Baseline

Figure 2 (a) illustrates how we can make a typical architecture for 3D visual grounding accessible to 3D phrase aware grounding (3DPAG). Generally, the network consists of two encoders to independently deal with the object proposals and language descriptions. On the one hand, for the visual encoder, the global features for objects are extracted by a feature extractor such as PointNet++ [34]. Then the fine-grained classes of the global features are predicted. Afterward, the position and size information, i.e., center and bounding box, are embedded into a positional encoding vector through linear transformation, which is subsequently concatenated with global features. On the other hand, the language encoder directly embeds the text input with a pre-trained BERT model [9], where an extra [CLS] token is set to predict the target category through the input sentence. After that, vision and language tokens are fed into a 3DVG model, obtaining updated object and language features, respectively. In practice, previous approaches design different 3DVG models through diverse architectures, such as Transformer [43] and language-guided GCN [44]. All of these methods can be directly adopted in our architecture. Moreover, we add an extra cross-attention layer [40] to fuse the language and object predictions. Thus the obtained attention maps can be used to predict the subsequently introduced phrase-object alignment (POA) map. Finally, the output features are fed into two fully connected layers, producing a set of confidence scores. The object proposal with the highest grounding score is selected as the final grounding prediction.

| Dataset | Nr3D++ | Sr3D++ | ScanRefer++ | |||

|---|---|---|---|---|---|---|

| train | val | train | val | train | val | |

| Number of sentences | 32,919 | 8,584 | 65,846 | 17,726 | 36,665 | 9,508 |

| Number of phrases | 92,691 | 23,620 | 137,158 | 37,124 | 87,391 | 23,103 |

| Number of phrases per sentence | 2.82 | 2.75 | 2.08 | 2.09 | 2.38 | 2.43 |

| Average length of phrases | 2.34 | 2.37 | 1.17 | 1.17 | 1.76 | 1.77 |

4.2 Phrase-Object Alignment Optimization

During the training phase, the phrase-object alignment (POA) map is not predicted directly as depicted in Eqn. (3). Intuitively, we find that the attention map in the last cross-attention layer is a nature POA map, which can be directly optimized. As shown in Figure 2 (b), we extract the multi-head attention maps from the last cross-attention layer and average them along the head dimension. It shares the same property as the POA map described in Eqn. (3). The ground truth (GT) map in Figure 2 (b) can be generated by phrase annotations. Assume there are object proposals and language tokens, the GT map should have the shape of , where the additional dimension in the column represents [NoObj] correspondence.

For each object proposal (i.e., the row of the map), if it is mentioned by any token, the column of the corresponding token should be 1 (0 for other columns). If there is no correspondence, the column of [NoObj] is set to 1. For example, for the last two tokens, “the monitor” in Figure 2, which correspond to the third object, the last two columns in the third row should be set to 1. After that, we optimize the POA map using a multi-class cross entropy (CE) loss. During the inference, the POA map conducts a fine-grained 3DVG. If one wants to know which object corresponds to “the monitor” in Figure 2, the score for each object can be gained by averaging the last two columns and the object that has the highest score is the desired output.

4.3 Phrase-Specific Training

Except for utilizing phrase information in the training stage, we also propose a phrase-specific pre-training strategy, which is demonstrated in Figure 3. The pre-training strategy is trained only on the 3DVG task but can be applied to both 3DVG and 3DPAG models. Specifically, we manually set several phrase-specific masks according to the number of objects mentioned in the sentence for pre-training. Suppose there are phrases, represented as , in a query sentence, we define their phrase-specific masks as follows:

| (4) |

where each is a phrase-specific mask corresponding to the phrase . Taking Figure 3 (a) as an example, since there are three objects mentioned in the sentence, i.e., “the office chair”, “the one” (another chair) and “the monitor”, we set three masks () whose length are equal to the number of tokens in the sentence. For each mask, only positions that are related to the specific object are equal to 1. After that, we use these masks as extra positional encoding and concatenate them with language features . In each iteration, we randomly choose one mask and object features to identify the object referred by via

| (5) |

where means the model generates confidence scores for the object related to the phrase . Through such a manner, fine-grained information can be exploited, making the model easier to capture non-target objects.

After pre-training, we fine-tune our model on either 3DVG or 3DPAG tasks, as shown in Figure 3 (b). Specifically, we add a linear layer to predict the mask that is only related to the target object, supervised by the ground truth.

5 Dataset

To extend 3D visual grounding (3DVG) to 3D phrase-aware grounding (3DPAG), another contribution of this paper lies in the development of 3DVG datasets with extra phrase-level annotations. In this section, we first introduce the original 3DVG datasets in Sec. 5.1. Then, the data labeling process and statistics of the proposed 3DPAG datasets are demonstrated in Sec. 5.2.

5.1 3D Visual Grounding Datasets

- -

-

-

ReferIt3D [1] uses the same train/valid split with ScanRefer on ScanNet and contains two sub-datasets, where Sr3D (Spatial Reference in 3D) has 83.5K synthetic expressions generated by templates and Nr3D (Natural Reference in 3D) consists of 41.5K human annotations collected similarly as ReferItGame [19].

5.2 3D Phrase Aware Grounding Datasets

Data Annotations. Since the Sr3D dataset is generated from language templates, we can acquire the corresponding phrase annotations. For the other two datasets, we deploy a web-based annotation interface modified by ScanRefer Browser111github.com/daveredrum/ScanRefer_Browser on Amazon Mechanical Turk (AMT) to identify objects’ phrases. During the annotation process, annotators need to identify the phrases in the original sentence and find out the corresponding object in the 3D scene.

The 3D web-based UI allows users to select arbitrary texts, and it will automatically save the phrase by recording the start and end positions. When the annotators search for an object, the selected object is highlighted while others are faded out. Meanwhile, a set of captured image frames are shown to compensate for incomplete details of the 3D reconstruction. The position and viewpoint of the virtual camera are allowed for adjusting flexibility to examine the target object better. To ensure the annotation quality, we allow users to select a “not make sure” button during the annotation, and these suspicious sentences will go through a double-check by us. Each sentence will be labeled by two annotators. After labeling, the annotators will verify the results according to each other’s annotations. When labeling ScanRefer dataset, it takes an average of 40 minutes for an annotator to complete a scene. There are 3664 man-hours to complete the whole labeling.

| Method | Nr3D | Sr3D | ScanRefer |

|---|---|---|---|

| ScanRefer [4] | - | 44.5 | |

| ReferIt3D [1] | 40.8 | 46.9 | |

| TGNN [13] | 37.6 | 45.2 | - |

| InstanceRefer [44] | 48.0 | 49.2 | |

| 3DVG-Trans. [47] | 40.8 | 51.4 | - |

| FFL-3DOG [10] | - | - | |

| TransRefer3D [11] | 42.1 | 57.4 | - |

| LanguageRefer [37] | 43.9 | 56.0 | - |

| BUTD-DETR [15] | 54.6 | 67.0 | - |

| SAT [43] | 49.2 | 57.9 | 53.8 |

| MVT [14] | 55.1 | 64.5 | 55.3 |

| SAT + Ours | |||

| MVT + Ours |

Data Statistics. Thanks to the high-quality data annotations, we can extend the previous 3DVG datasets to phrase-aware versions, i.e., Nr3D++, Sr3D++, and ScanRefer++. As shown in Table 1, among three 3DPAG datasets, Sr3D++ has the largest number of sentences and phrases since it is originally generated by machine templates. Nr3D++ has the smallest number of sentences, but the number of phrases per sentence achieves 2.82 in the training split, surpassing that of Sr3D++ (2.08) and ScanRefer++ (2.38). Also, the average length of phrases in Nr3D++ is the longest.

6 Experiments

6.1 Experiment settings

Baselines. There are several evaluation modes in 3DVG. The 3DVG models are tested with (1) detector-generated proposals (i.e., 3DVG-Det); and (2) the ground truth proposals (i.e., 3DVG-GT) to prevent the experimental bias via a more powerful detector. We rigorously benchmark the baseline and enhanced model in this paper and tested them in both modes. Foremost, we follow the settings [1, 43, 37] of using the ground truth proposals on each dataset, where SAT [43] and MVT [14] are used as our baseline. After that, we evaluate models with detector-generated proposals on ScanRefer dataset, spanning different detectors, e.g., SAT [43] with VoteNet [33] and D3Net [48] with more powerful PointGroup [17]. The comprehensive experiments show our proposed datasets and methods significantly improve 3DVG.

| Method | Detector | Uni. | Mul. | Overall |

| ScanRefer [4] | VN | 53.51 | 21.11 | 27.40 |

| TGNN [13] | PG | 56.80 | 23.18 | 29.70 |

| InsRefer [44] | PG | 66.83 | 24.77 | 32.93 |

| FFL-3DOG [10] | VN | 67.94 | 25.70 | 34.01 |

| 3DVG-Trans. [47] | Other | 60.64 | 28.42 | 34.67 |

| SAT [43] | VN | 50.83 | 25.16 | 30.14 |

| D3Net* [48] | PG | 71.04 | 27.40 | 35.62 |

| B-DETR [15] | GF | 66.30 | 35.10 | 39.80 |

| SAT + Ours | VN | 58.21 | 26.76 | |

| D3Net + Ours | PG | 72.50 | 28.84 | |

| B-DETR + Ours | GF | 67.16 | 37.05 |

Evaluation Metric. In existing 3DVG-GT, models are evaluated by the overall accuracy , i.e., whether the model correctly selects the target object among proposals given a language query. For the mode of 3DVG-Det, it calculates the 3D intersection over union (IoU) between the predicted bounding box and ground truth. The [email protected] is adopted as the evaluation metric. Moreover, the accuracy is reported in “unique”(19%) and “multiple” (81%) categories, respectively.

In this paper, we also design a novel metric for the 3DPAG task. Specifically, for each sentence, we simultaneously evaluate the accuracy of a target object and mentioned non-target objects, and the final result is gained by multiplication of two accuracies:

| (6) |

For each sentence, is 1 if the target object is identified correctly, otherwise 0. is the grounding accuracy of non-target but mentioned objects (excluding the target one). If multiple objects are related by a phrase, we allow the model to choose an arbitrary one (less than 0.1%). The on the whole dataset is the averaged accuracy of all sentences, and thus it can be treated as the weighted version of . By using this weighted accuracy, we can prevent the model from only predicting the right result through learning data distribution, i.e., equals to 1 only if the model correctly identifies the target object and all non-target objects.

Implementation Details. During the pre-training stage, the model is trained with the Adam optimizer with a batch size of 16. We set an initial learning rate of and reduce the learning rate by a multiplicative factor of every 10 epochs for a total of 100 epochs. During the fine-tuning stage, we reduce the initial learning rate to and keep other hyperparameters the same.

6.2 3D Visual Grounding Results

3DVG-GT. The results of 3D visual grounding (3DVG) with ground truth proposals are shown in Table 2. By effectively utilizing fine-grained phrase annotations in both pre-training and training processes, our proposed dataset and components improve the SoTA method MVT [14], from 55.1/64.5/55.3% to 59.0/68.0/59.9% on Nr3D/Sr3D/ScanRefer datasets in terms of overall accuracy, respectively. Moreover, there is a considerable improvement on the other baseline SAT [43] with lower initial performance. Our dataset and proposed components boost its performance by 5.2%, 5.1%, and 3.7%, respectively. The performance boosts on 3DVG-GT are more reliable, since it only considers the referring ability of the model and ignores the effect of different detection architectures.

3DVG-Det. 3D visual grounding with detected proposals is a more complicated task since it also considers detection results. Table 3 reports the 3DVG on the ScanRefer validation set [4] with detected object proposals. After equipping our POA optimization and pre-training strategy, the enhanced model achieves an accuracy of 41.5%, outperforming the previous state-of-the-arts [48, 43, 15]. Moreover, we find out that our proposed components and dataset work well across different object detectors, i.e., VoteNet [33], PointGroup [17] and GroupFree [27], which further shows the effectiveness.

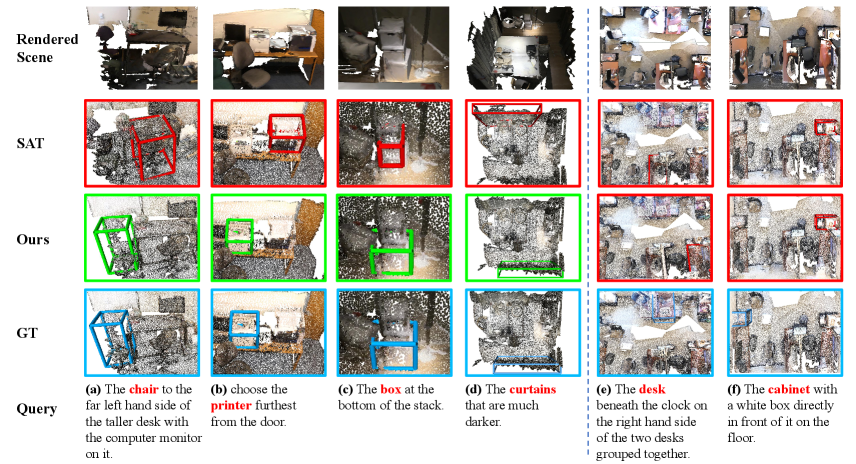

Visualization. In Figure 4, we show some grounding predictions on Nr3D with SAT baseline. As shown in examples (a-d), our method corrects failure cases made by baseline. For instance (a, b), our method correctly understands the relationships between mentioned objects “chair” and “monitor”, “printer” and “door”. Besides, our method also distinguishes the phrases related to the spatial position, e.g., “far left” and “furthest”. In (c, d), our method reasons the target from multiple similar objects by their position and color information, i.e., “bottom” and “darker”. The (e, f) are failure cases, which are caused by severe distractors of the same classes in a complicated scene.

6.3 3D Phrase Aware Grounding Results

Fully supervised 3DPAG-GT. We extend previous 3DVG methods to the newly proposed 3DPAG task by optimizing POA maps, as depicted in Sec. 4.1, whose attention maps are used for phrase-object alignment map generation. The fully supervised 3DPAG results are shown in the upper part of Table 4. Besides computing introduced in Eqn. 6 (PAG), we also provide the overall accuracy of all phrases (PG).

As shown in Table 4, MVT equipping our pre-training strategy (i.e., PSP) achieves the highest results in all metrics, especially on ScanRefer++ dataset which contains more ambiguous phrases as distractors. Specifically, it respectively improves and by 7.4% and 6.0% compared with naive extension of MVT. Moreover, after phrase-specific pre-training (PSP) with phrase-level annotations, SAT also significantly improves the performance for 3DPAG, increasing 8.6% and 6.2% for PAG and PG.

| Nr3D++ | Sr3D++ | ScanRefer++ | ||||

| Method | PAG | PG | PAG | PG | PAG | PG |

| ScanRefer [4] | 23.8 | 30.3 | 31.4 | 36.8 | 24.4 | 33.1 |

| ReferIt3D [1] | 25.6 | 33.7 | 35.5 | 43.2 | 25.8 | 34.2 |

| InstanceRefer [44] | 28.7 | 40.2 | 37.4 | 51.1 | 27.8 | 35.4 |

| SAT [43] | 33.2 | 48.4 | 48.2 | 62.8 | 32.3 | 40.3 |

| MVT [14] | 36.3 | 52.6 | 53.1 | 66.2 | 36.2 | 43.1 |

| SAT + PSP | 37.9 | 52.8 | 55.5 | 67.4 | 40.9 | 46.5 |

| MVT + PSP | 42.1 | 56.9 | 61.0 | 72.6 | 43.6 | 49.1 |

| RandSelect | 8.6 | 12.8 | 13.9 | 21.7 | 10.5 | 15.0 |

| SAT + PSP | 13.1 | 20.4 | 19.6 | 28.6 | 13.3 | 20.1 |

| MVT + PSP | 17.1 | 24.7 | 22.5 | 32.2 | 21.2 | 24.2 |

Weakly Supervised 3DPAG-GT. Since labeling phrase-level annotation is time-consuming as well, in this paper, we also explore whether we can conduct 3DPAG predictions using only 3DVG data. By exploiting our phrase-specific pre-training technique, our method can also conduct weakly supervised 3DPAG. Specifically, we first identify all object-related phrases through rule-based language parser [10], and then construct the phrase-specific mask only for the target object (as shown in Sec. 4.3) during the training. During the inference, we manually provide phrase-specific masks for non-target objects, which do not appear in training. In other words, we only use a subset of our 3DPAG annotations (only target phrases) in training, which can be extracted from the existing VG dataset with high precision, and evaluate on the whole dataset (both target and non-target phrases). We also demonstrate the results through random selection (RandSelect), and the final results are shown in the lower part of Table 4.

3DPAG-Det. We also evaluate our PAG task with detected proposals. Specifically, we evaluate the previous state-of-the-art in Table 3, i.e., BUTD-DETR [15]. Although BUTD-DETR utilizes phrase-object alignment scheme during the training, it only aligns the target object due to the lack of annotation. During our experiment, it achieves 22.6 score on ScanRefer++ dataset, and gain a boost to 32.3 with our component. It indicates that explicit supervision of phrase-object alignment is necessary for an accurate localization.

Fine-grained Grounding. Figure 5 shows the 3DPAG prediction on Nr3D++ dataset, which predicts the corresponding object of each phrase (each object is shown with the same color of the phrase). Compared with traditional 3DVG, 3DPAG provides fine-grained results which would give inherent cues for explainable VG in the future.

| Method | Trans. layer | PSP | POA | SAT | MVT |

|---|---|---|---|---|---|

| Baseline | 49.2 | 55.1 | |||

| model A | ✓ | 48.3 | 54.8 | ||

| model B | ✓ | ✓ | 50.4 | 56.0 | |

| model C | ✓ | ✓ | 53.0 | 56.4 | |

| full model | ✓ | ✓ | ✓ | 54.4 | 59.0 |

6.4 Ablation studies

In this section, we investigate the effect of different components proposed in our paper, as shown in Table 5. The baseline indicates the origin SAT and MVT models, which achieve 49.2% and 55.1% on Nr3D, respectively. Purely exploiting an additional transformer layer leads to a slight performance decrease in both baselines (model A). With POA optimization, model B increases the accuracy to 50.4% and 56.0%, which outperforms model A by 2.1% and 1.2%, respectively. Exploiting phrase-specific pre-training (model C) also improves from 48.3/54.8% to 53.0/56.4%. Both model B and model C demonstrate the effectiveness of phrase-level annotations. Finally, by merging all proposed components, the full architecture obtains the highest score, i.e., 54.4% and 59.0%, beyond our baseline by 5.2% and 3.9%, respectively.

7 Conclusion

In this paper, we introduce the task of 3D phrase aware grounding (3DPAG), which aims to localize the target object in the 3D scenes while identifying non-target ones mentioned in the sentence. Furthermore, we label about 227K phrase-level annotations from 88K sentences in existing 3DVG datasets. We exploit newly proposed datasets and introduce phrase-object alignment optimization and phrase-specific pre-training for learning object-level, phrase-aware representations. In the experiment, we extend previous 3DVG methods to the phrase-aware scenario and prove that 3DPAG can effectively boost the 3DVG results. Moreover, our enhanced models achieve state-of-the-art results on three datasets for both 3DVG and 3DPAG tasks.

References

- [1] Panos Achlioptas, Ahmed Abdelreheem, Fei Xia, Mohamed Elhoseiny, and Leonidas Guibas. Referit3d: Neural listeners for fine-grained 3d object identification in real-world scenes. In European Conference on Computer Vision, pages 422–440. Springer, 2020.

- [2] Panos Achlioptas, Judy Fan, Robert Hawkins, Noah Goodman, and Leonidas J Guibas. Shapeglot: Learning language for shape differentiation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 8938–8947, 2019.

- [3] Daigang Cai, Lichen Zhao, Jing Zhang, Lu Sheng, and Dong Xu. 3djcg: A unified framework for joint dense captioning and visual grounding on 3d point clouds. In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 16464–16473, 2022.

- [4] Dave Zhenyu Chen, Angel X Chang, and Matthias Nießner. Scanrefer: 3d object localization in rgb-d scans using natural language. In European Conference on Computer Vision, pages 202–221. Springer, 2020.

- [5] Kevin Chen, Christopher B Choy, Manolis Savva, Angel X Chang, Thomas Funkhouser, and Silvio Savarese. Text2shape: Generating shapes from natural language by learning joint embeddings. In Asian Conference on Computer Vision, pages 100–116. Springer, 2018.

- [6] Kan Chen, Jiyang Gao, and Ram Nevatia. Knowledge aided consistency for weakly supervised phrase grounding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4042–4050, 2018.

- [7] Zhenyu Chen, Ali Gholami, Matthias Nießner, and Angel X Chang. Scan2cap: Context-aware dense captioning in rgb-d scans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3193–3203, 2021.

- [8] Angela Dai, Angel X Chang, Manolis Savva, Maciej Halber, Thomas Funkhouser, and Matthias Nießner. Scannet: Richly-annotated 3d reconstructions of indoor scenes. pages 5828–5839, 2017.

- [9] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding, 2018.

- [10] Mingtao Feng, Zhen Li, Qi Li, Liang Zhang, XiangDong Zhang, Guangming Zhu, Hui Zhang, Yaonan Wang, and Ajmal Mian. Free-form description guided 3d visual graph network for object grounding in point cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 3722–3731, 2021.

- [11] Dailan He, Yusheng Zhao, Junyu Luo, Tianrui Hui, Shaofei Huang, Aixi Zhang, and Si Liu. Transrefer3d: Entity-and-relation aware transformer for fine-grained 3d visual grounding. In Proceedings of the 29th ACM International Conference on Multimedia, pages 2344–2352, 2021.

- [12] Ryota Hinami and Shin’ichi Satoh. Discriminative learning of open-vocabulary object retrieval and localization by negative phrase augmentation. arXiv preprint arXiv:1711.09509, 2017.

- [13] Pin-Hao Huang, Han-Hung Lee, Hwann-Tzong Chen, and Tyng-Luh Liu. Text-guided graph neural networks for referring 3d instance segmentation. 35th AAAI Conference on Artificial Intelligence, 2021.

- [14] Shijia Huang, Yilun Chen, Jiaya Jia, and Liwei Wang. Multi-view transformer for 3d visual grounding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 15524–15533, 2022.

- [15] Ayush Jain, Nikolaos Gkanatsios, Ishita Mediratta, and Katerina Fragkiadaki. Bottom up top down detection transformers for language grounding in images and point clouds. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXVI, pages 417–433. Springer, 2022.

- [16] Chao Jia, Yinfei Yang, Ye Xia, Yi-Ting Chen, Zarana Parekh, Hieu Pham, Quoc Le, Yun-Hsuan Sung, Zhen Li, and Tom Duerig. Scaling up visual and vision-language representation learning with noisy text supervision. In International Conference on Machine Learning, pages 4904–4916. PMLR, 2021.

- [17] Li Jiang, Hengshuang Zhao, Shaoshuai Shi, Shu Liu, Chi-Wing Fu, and Jiaya Jia. Pointgroup: Dual-set point grouping for 3d instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4867–4876, 2020.

- [18] Aishwarya Kamath, Mannat Singh, Yann LeCun, Gabriel Synnaeve, Ishan Misra, and Nicolas Carion. Mdetr-modulated detection for end-to-end multi-modal understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1780–1790, 2021.

- [19] Sahar Kazemzadeh, Vicente Ordonez, Mark Matten, and Tamara Berg. Referitgame: Referring to objects in photographs of natural scenes. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pages 787–798, 2014.

- [20] Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A Shamma, et al. Visual genome: Connecting language and vision using crowdsourced dense image annotations. International journal of computer vision, 123(1):32–73, 2017.

- [21] Chenliang Li, Ming Yan, Haiyang Xu, Fuli Luo, Wei Wang, Bin Bi, and Songfang Huang. Semvlp: Vision-language pre-training by aligning semantics at multiple levels. arXiv preprint arXiv:2103.07829, 2021.

- [22] Liunian Harold Li, Mark Yatskar, Da Yin, Cho-Jui Hsieh, and Kai-Wei Chang. Visualbert: A simple and performant baseline for vision and language. arXiv preprint arXiv:1908.03557, 2019.

- [23] Wei Li, Can Gao, Guocheng Niu, Xinyan Xiao, Hao Liu, Jiachen Liu, Hua Wu, and Haifeng Wang. Unimo: Towards unified-modal understanding and generation via cross-modal contrastive learning. arXiv preprint arXiv:2012.15409, 2020.

- [24] Xiujun Li, Xi Yin, Chunyuan Li, Pengchuan Zhang, Xiaowei Hu, Lei Zhang, Lijuan Wang, Houdong Hu, Li Dong, Furu Wei, et al. Oscar: Object-semantics aligned pre-training for vision-language tasks. In European Conference on Computer Vision, pages 121–137. Springer, 2020.

- [25] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft coco: Common objects in context. In European conference on computer vision, pages 740–755. Springer, 2014.

- [26] Xihui Liu, Zihao Wang, Jing Shao, Xiaogang Wang, and Hongsheng Li. Improving referring expression grounding with cross-modal attention-guided erasing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1950–1959, 2019.

- [27] Ze Liu, Zheng Zhang, Yue Cao, Han Hu, and Xin Tong. Group-free 3d object detection via transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 2949–2958, 2021.

- [28] Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan Lee. Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. Advances in Neural Information Processing Systems, 2019.

- [29] Junhua Mao, Jonathan Huang, Alexander Toshev, Oana Camburu, Alan L Yuille, and Kevin Murphy. Generation and comprehension of unambiguous object descriptions. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 11–20, 2016.

- [30] Aditya Mogadala, Marimuthu Kalimuthu, and Dietrich Klakow. Trends in integration of vision and language research: A survey of tasks, datasets, and methods. arXiv preprint arXiv:1907.09358, 2019.

- [31] Bryan A Plummer, Paige Kordas, M Hadi Kiapour, Shuai Zheng, Robinson Piramuthu, and Svetlana Lazebnik. Conditional image-text embedding networks. In Proceedings of the European Conference on Computer Vision (ECCV), pages 249–264, 2018.

- [32] Bryan A Plummer, Arun Mallya, Christopher M Cervantes, Julia Hockenmaier, and Svetlana Lazebnik. Phrase localization and visual relationship detection with comprehensive image-language cues. In Proceedings of the IEEE International Conference on Computer Vision, pages 1928–1937, 2017.

- [33] Charles R Qi, Or Litany, Kaiming He, and Leonidas J Guibas. Deep hough voting for 3d object detection in point clouds. In proceedings of the IEEE/CVF International Conference on Computer Vision, pages 9277–9286, 2019.

- [34] Charles Ruizhongtai Qi, Li Yi, Hao Su, and Leonidas J Guibas. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Advances in neural information processing systems, 30, 2017.

- [35] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning, pages 8748–8763. PMLR, 2021.

- [36] Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. Zero-shot text-to-image generation. In International Conference on Machine Learning, pages 8821–8831. PMLR, 2021.

- [37] Junha Roh, Karthik Desingh, Ali Farhadi, and Dieter Fox. Languagerefer: Spatial-language model for 3d visual grounding. In 5th Annual Conference on Robot Learning, 2021.

- [38] Anna Rohrbach, Marcus Rohrbach, Ronghang Hu, Trevor Darrell, and Bernt Schiele. Grounding of textual phrases in images by reconstruction. In European Conference on Computer Vision, pages 817–834. Springer, 2016.

- [39] Hao Tan and Mohit Bansal. Lxmert: Learning cross-modality encoder representations from transformers. arXiv preprint arXiv:1908.07490, 2019.

- [40] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. pages 5998–6008, 2017.

- [41] Liwei Wang, Yin Li, Jing Huang, and Svetlana Lazebnik. Learning two-branch neural networks for image-text matching tasks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(2):394–407, 2018.

- [42] Fanyi Xiao, Leonid Sigal, and Yong Jae Lee. Weakly-supervised visual grounding of phrases with linguistic structures. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5945–5954, 2017.

- [43] Zhengyuan Yang, Songyang Zhang, Liwei Wang, and Jiebo Luo. Sat: 2d semantics assisted training for 3d visual grounding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1856–1866, 2021.

- [44] Zhihao Yuan, Xu Yan, Yinghong Liao, Ruimao Zhang, Zhen Li, and Shuguang Cui. Instancerefer: Cooperative holistic understanding for visual grounding on point clouds through instance multi-level contextual referring. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1791–1800, 2021.

- [45] Pengchuan Zhang, Xiujun Li, Xiaowei Hu, Jianwei Yang, Lei Zhang, Lijuan Wang, Yejin Choi, and Jianfeng Gao. Vinvl: Revisiting visual representations in vision-language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5579–5588, 2021.

- [46] Fang Zhao, Jianshu Li, Jian Zhao, and Jiashi Feng. Weakly supervised phrase localization with multi-scale anchored transformer network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5696–5705, 2018.

- [47] Lichen Zhao, Daigang Cai, Lu Sheng, and Dong Xu. 3dvg-transformer: Relation modeling for visual grounding on point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 2928–2937, 2021.

- [48] Dave Zhenyu Chen, Qirui Wu, Matthias Nießner, and Angel X Chang. D3net: A speaker-listener architecture for semi-supervised dense captioning and visual grounding in rgb-d scans. arXiv e-prints, pages arXiv–2112, 2021.