torchmSAT: A GPU-Accelerated Approximation To The Maximum Satisfiability Problem

Abstract

The remarkable achievements of machine learning techniques in analyzing discrete structures have drawn significant attention towards their integration into combinatorial optimization algorithms. Typically, these methodologies improve existing solvers by injecting learned models within the solving loop to enhance the efficiency of the search process. In this work, we derive a single differentiable function capable of approximating solutions for the Maximum Satisfiability Problem (MaxSAT). Then, we present a novel neural network architecture to model our differentiable function, and progressively solve MaxSAT using backpropagation. This approach eliminates the need for labeled data or a neural network training phase, as the training process functions as the solving algorithm. Additionally, we leverage the computational power of GPUs to accelerate these computations. Experimental results on challenging MaxSAT instances show that our proposed methodology outperforms two existing MaxSAT solvers, and is on par with another in terms of solution cost, without necessitating any training or access to an underlying SAT solver. Given that numerous NP-hard problems can be reduced to MaxSAT, our novel technique paves the way for a new generation of solvers poised to benefit from neural network GPU acceleration.

1 Introduction

In recent years, machine learning has emerged as a powerful method for solving complex combinatorial optimization problems, a class of problems that are notably difficult to solve due to their inherent combinatorial nature. These problems often fall within the realm of Karp’s NP-hard complexity class [18], requiring a substantial amount of computational resources to find exact solutions as the problem size increases. The application of machine learning, particularly deep neural networks, has demonstrated remarkable potential in tackling such problems [8]. Machine learning techniques encompass a diverse range of approaches, including those that substitute traditional heuristics within existing algorithms [13, 26, 44, 20], enhance solvers by incorporating learned configurations [14, 43, 37], or reformulate a problem as a Markov Decision Process and employ reinforcement learning strategies [30, 21, 19, 7]. These techniques have been successfully employed across various domains, from operations research and graph theory to constraint satisfaction and scheduling problems, offering a new perspective on addressing the challenges posed by NP-hard problems [8].

Satisfiability (SAT) and Maximum Satisfiability (MaxSAT) problems represent two prominent classes of combinatorial optimization problems. In the case of SAT, the objective is to determine whether there exists an assignment of truth values to a given set of Boolean variables that satisfies a given set of logical clauses, typically expressed in conjunctive normal form (CNF). When the problem is non-satisfiable, MaxSAT extends the SAT problem by seeking an assignment that maximizes the number of satisfied clauses, rather than trying to satisfy all clauses. MaxSAT problems are of particular interest due to their ability to model optimization scenarios where some degree of constraint violation is tolerable, capturing a broader range of real-world applications [39, 40, 17]. Both SAT and MaxSAT problems have been extensively studied, giving rise to a variety of algorithmic techniques and heuristics aimed at efficiently solving or approximating solutions to these problems [5].

Existing MaxSAT solvers employ a diverse range of techniques to efficiently tackle the Maximum Satisfiability problem. These techniques can generally be classified into two main categories: complete and incomplete methods. Complete methods, such as Branch and Bound algorithms [24], search exhaustively for an optimal solution, guaranteeing the best possible assignment of truth values to satisfy the maximum number of clauses. Some complete solvers utilize SAT solvers as a core engine, iteratively refining their search space by tightening the upper bound of unsatisfied clauses, as seen in iterative SAT-based MaxSAT algorithms [32, 33]. In contrast, incomplete methods, like local search algorithms, do not guarantee optimality but aim to find high-quality solutions in less time. These methods involve exploring the solution space by iteratively making small changes to the current assignment of truth values, guided by various heuristics and neighborhood search strategies [29, 31]. Both complete and incomplete MaxSAT solvers have been successfully applied to real-world problems, with the choice of solver being dependent on the problem size, required optimality guarantees, and available computational resources.

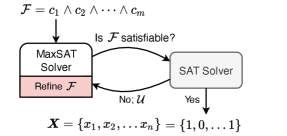

In this paper, we present a novel MaxSAT solver, called torchmSAT, that leverages neural networks and falls within the incomplete category. Departing from the conventional practice of incrementally enhancing existing SAT solvers for MaxSAT resolution, we propose a completely new algorithm developed from scratch. As depicted in Figure 1, our method forgoes the necessity for a traditional SAT solver as a fundamental component of the search algorithm in incomplete techniques for MaxSAT. Rather than training a neural network to predict assignments that maximize satisfied clauses, an inherently complex task, we develop a novel neural network architecture with a differentiable loss function for solving MaxSAT. The key intuition is that by relaxing the binary constraint of the problem and allowing the Boolean variables to be represented in a continuous domain, advances in deep learning libraries would allow this optimization to be executed efficiently. Accordingly, the training process can be seen as a process that iteratively explores the solution space, generating progressively improved variable assignments. Consequently, our approach eliminates the need for labeled training data, or the need to call an underlying SAT solver to testify (un)satisfiability. Moreover, since our proposed solver is natively built using a reliable deep learning library [35], we are able to run the solver on GPUs without any change to the data structure, the solving algorithm or the optimization process. Therefore, we investigate the advantages of hardware acceleration for solving MaxSAT, demonstrating that the acceleration of such computations enables the solver to traverse the feasible solution space more rapidly. In essence, torchmSAT presents a fresh approach for solving MaxSAT that could potentially open doors for a new generation of combinatorial optimization solvers.

2 Preliminaries

MaxSAT Formulation. In propositional logic, a Boolean formula is composed of Boolean variables, , and logical operators, including negations (), conjunctions (), and disjunctions (). A common representation for Boolean formulas is the conjunctive normal form (CNF), which is structured as a conjunction of multiple clauses, . Each clause is a disjunction of literals, where a literal can be either a variable or its negation.

| (1) |

This format facilitates the systematic analysis and manipulation of Boolean expressions for various computational tasks. A formula is deemed satisfiable if there is at least one assignment of Boolean variables, , that satisfies all clauses. In numerous applications, a formula may not be entirely satisfiable, and the objective of MaxSAT solvers is to identify an assignment that satisfies the greatest number of clauses. In this scenario, the number of unsatisfied clauses is referred to as the cost of the CNF – a lower cost corresponds to a better assignment. In some applications, (weighted) partial CNF formulas are considered. Clauses in a partial CNF formula are characterized as hard, , meaning that these must be satisfied, or soft, , meaning that these are to be satisfied, if at all possible.

SAT Oracle. Existing MaxSAT solvers employ SAT oracles to handle CNF formulas. A SAT oracle is any algorithm that can determine the satisfiability of any given Boolean formula in the conjunctive normal form (CNF). If the formula is satisfiable, the SAT oracle provides a satisfying assignment; if not, it returns additional information such as an unsatisfiable core, . The unsatisfiable core, , represents a subset of ’s clauses that are inherently unsatisfiable. The task of determining the satisfiability of a given CNF is indeed NP-complete [18].

Conflict-Driven Clause Learning (CDCL) is a method used by modern SAT solvers to efficiently search the solution space and resolve conflicts during the search process. State-of-the-art SAT solvers build upon the basic DPLL (Davis-Putnam-Logemann-Loveland) algorithm [34], which employs backtracking and unit propagation, by adding clause learning and non-chronological backtracking [10]. When a CDCL-based solver encounters a conflict, which occurs when the current partial assignment of leads to an unsatisfiable core , it analyzes the conflict to generate a new learned clause. This learned clause represents the assignments of variables contributing to the conflict and helps in preventing the solver from introducing similar conflicts in the future. The solver then performs jumping back several levels in the search tree instead of just one, using the learned clause to guide the process. The main advantage of CDCL-based solvers is their ability to learn from conflicts and use that knowledge to prune the search space more effectively. This results in faster and more efficient SAT solving for many real-world problem instances.

MaxSAT Solvers. In an unweighted MaxSAT problem, a typical MaxSAT algorithm proceeds by making several calls to an underlying SAT oracle such as CaDiCaL [12], Glucose [6] or Minisat [41]. The difference amongst algorithms is how they orchestrate the calls to the SAT solver. For example, the RC2 algorithm sends to the SAT solver, which reports that is unsatisfiable and returns an unsatisfiable core, . At least one of the clauses of the core will have to be disregarded in order to fix the core. So, the algorithm proceeds by relaxing each clause in (i.e. augmenting the clause with a fresh variable called relaxation variable) and constrains the sum of the relaxation variables to be at most one [16]. In other words, the algorithm starts by assuming all clauses can be satisfied and iteratively relaxes this assumption until it finds a satisfying assignment. Similarity, the FM algorithm [27] involves a sequence of calls to an unsatisfiability oracle, each of which generates an unsatisfiable core. The clauses that are part of this unsatisfiable core are then relaxed, followed by the introduction of a new constraint that pertains to the relaxation variables in the formula. On the other hand, the LSU algorithm runs a series of satisfiability oracle calls refining an upper bound on the MaxSAT cost, followed by one unsatisfiability call, which stops the algorithm [31]. In other words, it finds an initial assignment with a suboptimal cost, and iteratively search for better assignments to reduce the cost.

In our work, we present a novel MaxSAT algorithm that does not rely on a SAT oracle during the search process. Instead, it adopts a progressive strategy akin to the one described in [31] and relies entirely on the neural network for identifying unsatisfiable cores, , and discovering improved solutions through backpropagation.

3 Related Work

ML for MaxSAT. Several recent efforts have investigated the integration of machine learning in solving SAT and MaxSAT problems. Selsam et al. [38] train a graph neural network classifier to predict satisfiability of random SAT problems. The model learns to search for satisfying assignments during inference for problem instances that are larger than the ones seen during training. Similarily, Li and Si [25] present a framework for SAT solving utilizing Belief Propagation (BP). They introduce a Graph Neural Network (GNN) architecture that embeds BP in the latent space and use the trained model for marginal inference to obtain satisfying assignments for SAT. Other learning-based methods have been proposed for MaxSAT. For example, Kumar et al. [23] propose a method for learning combinatorial optimization problems from contextual examples, which indicate whether solutions are adequate in specific contexts. The framework uses the MaxSAT formulation and considers a specific setting where example solutions and negative solutions are context-specific. In the same way, Berden et al. [9] train MaxSAT models from examples and use a genetic algorithm that decreases the number of evaluations needed to find good models. Marino [28] uses a supervised learning approach to develop an algorithm that can fix Boolean variables based on local information from the Survey Propagation algorithm. In general, this line of research collects training data on MaxSAT instances, and trains a model that generalizes for bigger problems. Our method is different as it does not necessitate the gathering of any training data.

NN-based Combinatorial Optimization. An emerging approach to incorporating machine learning for tackling combinatorial optimization problems involves regarding the neural network’s training process as the problem-solving procedure for a given instance. In this paradigm, a neural network is dynamically generated based on the problem instance, and through multiple iterations of forward and backpropagation, a series of learnable parameters embody the ultimate solution to the problem instance. The absence of data requirements for training makes this method particularly attractive for deployment in diverse settings. For instance, Alkhouri et al. [4] introduce a technique that operates on graphs and addresses the Maximum Independent Set (MIS) problem [42]. The core concept involves representing the MIS problem as a single differentiable function, which facilitates the utilization of differentiable solutions. Schuetz et al. [36] present a Graph Neural Network (GNN)-based solver for approximately solving combinatorial optimization problems by encoding the optimization problem using a Hamiltonian (cost function) and associating binary decision variables with vertices in an undirected graph. A relaxation strategy is applied to generate a differentiable loss function for learning the GNN’s node representations. After several iterations, a softmax activation is applied to obtain one-dimensional probabilistic node assignments, which are then mapped back to integer variables using a projection heuristic. Both work can be viewed as a Linear Programming (LP) relaxation of the Mixed-Integer Linear Programming (MILP) formulation of the investigated problems, i.e. Maximum Independent Set (MIS), and Maximim Cut (MaxCUT). While our approach is inspired by the same ideas, we address a different problem (i.e. MaxSAT), and eliminate the use of GNNs.

4 Methodology

Key Idea. Our proposed method, torchmSAT, is conceptually inspired by the technique of Linear Programming (LP) relaxations often utilized in the field of Mixed Integer Linear Programming (MILP) [1]. In MILP, certain variables are constrained to take only integer values which makes the optimization problem NP-hard. A common strategy to tackle this issue is to apply LP relaxation, where the integer constraints on the variables are relaxed, allowing them to take on continuous values. Similarly, in our method, we treat the binary variables of the MaxSAT problem as continuous, allowing us to leverage the power of differentiable optimization methods. The challenge we try to address becomes constructing a single differentiable function capable of approximating solutions for MaxSAT problems. Once derived, implementing the optimization process using a neural network allows us to capitalize on existing deep learning libraries, and their acceleration capabilities.

In what comes next, we begin by deriving a single differentiable function for MaxSAT using a novel neural network architecture. We describe the key components, their interactions, and their roles in the solving process. Following this, we show how our solver is different in finding unsatisfiable cores and how the solving process proceeds.

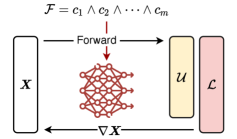

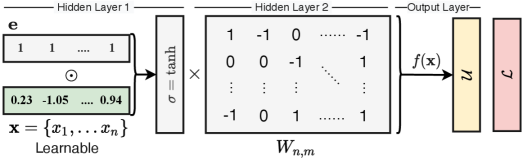

The Neural Network Architecture. Our proposed single differentiable function for MaxSAT is modeled as a neural network. Figure 2 presents a high-level overview of its architecture. At the heart of our solving algorithm lies a vector, , which represents the relaxations of the Boolean variables in a given MaxSAT instance (refer to Equation 4). The vector is the only trainable set of parameters within the neural network. Although consists of real numbers, at any given point in time during the solving process, we can project it back into the binary domain to derive the variable assignments. To reverse the relaxation, we interpret when , and otherwise. At the onset of the solving process, is initialized with random real values. Interpreting the values at this point merely corresponds to assigning random Boolean values to the variables. Throughout the solving process, the values of are incrementally updated in a direction that minimizes the loss. This specific design prompts the network to progressively learn to satisfy an increasing number of clauses.

In the context of backpropagation and the chain rule, the derivative of a multiplication operation with respect to its inputs distributes the gradients. Therefore, the first hidden layer performs a point-wise multiplication between and a vector of identical length containing all values set to 1, denoted as . This vector serves to propagate the gradient to the corresponding s during the backpropagation process. After that, and to stabilize backpropagation, a activation function is applied to constrain the values of the first layer within the range of -1 to 1. The activation prevents the output of the first layer from reaching excessively high or low values, which could potentially terminate the learning (i.e. solving) prematurely. The rest of the neural network is fixed (i.e. non-learnable) and architected to encode our novel single differentiable function.

The second layer uniquely encodes a given MaxSAT instance. A matrix is initialized at the start of the solving process, with rows representing variables ’s and columns representing clauses ’s. A value of is set if a variable appears in clause , while a value of is set if its negation appears in . All other entries in the matrix are set to 0. This formulation results in our unique differentiable function, , which generates a vector of length :

where is element-wise multiplication, and is a matrix multiplication. The entries of this vector, , indicate the (un)satisfiability status of each clause, serving as a dual-purpose: an evaluator for SAT and a guide for variable gradients.

Example. Consider the following MaxSAT problem in CNF:

This formula is not satisfiable. It is evident that any combination of binary assignments for and can satisfy, at most, two clauses. Assume that is initialized randomly as , which is interpreted as and since both values are positive. The output of the forward pass is:

Similar to the reverse relaxation operation of , the output of is interpreted similarly, i.e. is satisfied if its activation in is positive, and vice versa. In this case, the output indicates that the first two clauses are unsatisfied, while only the last clause is satisfied. This represents the first role of as a SAT evaluator. To satisfy the first two clauses, the values of need to become negative so that the activations of the first two clauses in are positive. This is where the role of the loss function comes in. The loss function, , computes the MSE between and a zero vector for the unsatisfied clauses. A single iteration of backpropagation updates the values of and in the negative direction of the gradient, moving their values closer to zero. For instance, with a learning rate of , the new values of will be . The process continues as the forward-loss-backward loop attempts to satisfy the first two clauses. However, once the first two clauses are satisfied (i.e., ’s are negative, representing a Boolean zero when reversing the relaxation), the third clause becomes unsatisfied. This is when the training process explores other ways of satisfying more clauses.

Unsatisfiable Cores Contrary to conventional MaxSAT solvers discussed in Section 3 that rely on a SAT oracle to identify the set of unsatisfied clauses, , if the formula is determined to be unsatisfiable, our proposed neural network model directly infers the unsatisfied clauses from . Nevertheless, for larger problems, the activations of alone might not be enough to ascertain satisfiability. The reason is that the matrix multiplication occurring in the first two layers is a non-injective operation. This implies that distinct assignments for might yield identical activations for . For instance, the inputs and would result in the same negative activation for the aforementioned third clause, despite the fact that the de-relaxed variable assignments are totally different; one corresponds to while the other corresponds to . To overcome this, we use an alternative method to establish (un)satisfiability of clauses. We construct a vector, , of length , where each entry corresponds to the negative of the number of variables present in its respective clause, i.e., . We also project onto its Boolean domain, such that if , and otherwise. This makes a clause in unsatisfiable if and only if . We use this projection to mask out satisfied clauses in the loss function, and only calculate it for unsatisfied clauses. Consequently, gradients are computed exclusively for those variables that contribute to the unsatisfied clauses, resulting in a more efficient optimization.

The Solving Process. In contrast to conventional MaxSAT solvers, which necessitate invoking a SAT oracle, pausing to await a result, and then coordinating the subsequent call, our algorithm operates differently. torchmSAT operates in a progressive manner, which implies that it incrementally improves the solutions as the search process unfolds. Algorithm 1 shows the main steps of our solving process. The solving process is an iterative procedure that alternates between a forward pass, loss calculation, and backward propagation. In Line 1, we construct the neural network layers based on the given problem instance. While the time limit has not been exhausted, the forward pass, in Line 3, takes the current assignments of the Boolean variables, , and computes the output of the network, . In addition, it calculates the reversed relaxation of , denoted as , which is used to determine clauses are currently satisfied by the assignment in Line 4. In Lines 5-7, if the current assignment satisfies more clauses than previously found solutions, it outputs this result and updates the value of the best cost (the lower, the better). In Line 8, the loss function calculates the MSE between and a zero vector of the same length, reflecting the number of unsatisfied clauses. The backpropagation step, in Line 9, adjusts the values of according to the gradient calculated by backpropagation, moving the assignments in a direction that reduces the number of unsatisfied clauses. This process continues until all clauses are satisfied, as indicated by a zero loss, or a given time limit is reached.

5 Experiments

In this section, we present a series of experiments designed to evaluate the performance of our proposed algorithm and compare it with existing state-of-the-art MaxSAT solvers. Our experiments aim to demonstrate the effectiveness of the method in various scenarios and showcase its strengths and limitations. We describe the experimental setup, including the problem instances used, the choice of benchmarks, and the evaluation metrics employed. Additionally, we provide a detailed analysis of the experimental results, highlighting key observations and insights. Through these experiments, we aim to validate the applicability of our approach and its potential impact on combinatorial optimization.

Input: MaxSAT instance in conjunctive normal form (CNF). See Equation 4

Parameters: Time limit ()

Output: Assignment for Boolean variables () that maximizes satisfiability

Setup. We use PyTorch (v1.10.2) [35]. No other dependencies are required to run torchmSAT (e.g. no calls to a SAT oracle is made). We use Adam optimizer for backprogation with a learning rate of 1e-4. For reproducibility and extensibility of our work, we use the PySAT toolkit (v0.1.8.dev1) [15] to synthesize hard problem instances and compare against existing methods. The toolkit provides a unified interface to various state-of-the-art SAT and MaxSAT solvers. The experimental results are obtained using a machine with Intel Xeon E5-2680 [email protected] GHz, 128GB RAM. For experiments on hardware acceleration, a Tesla P40 GPU is utilized to run torchmSAT.

|

|

|||

| T=1min. | T=5mins. | T=10mins. | |

|

CB |

|

|

|

|

GT |

|

|

|

|

PAR |

|

|

|

|

PHP |

|

|

|

Evaluation Criteria. We will use a multi-faceted approach to thoroughly assess the performance of our proposed method. First, we will investigate the cost obtained by our solver on problem instances of varying complexity and sizes, and under different time constraints. The imposition of a time limit in this scenario is crucial given the NP-hard nature of MaxSAT, which implies that exploring and assessing the entire feasible region would necessitate exponential time. Secondly, we will calculate the MaxSAT regret which quantifies the difference between the best solution found by any baseline solver and the solution obtained by our solver. Lastly, we will assess the impact of leveraging GPU acceleration on the performance of our solver.

Dataset. While the datasets of the popular MaxSAT evaluations 111https://maxsat-evaluations.github.io/ represent a set of non-trivial SAT instances, we opt to test our methods on smaller, yet hard, dataset to validate the applicability of the method. Although our method does not outperform one of the state-of-the-art SAT solvers, torchmSAT offers a completely revamped method to solve MaxSAT problems without the need for a SAT oracle. We employ PySAT to synthesize four representative datasets, encompassing combinatorial principles extensively examined in the context of propositional proof complexity. Specifically, they implement encodings for the pigeonhole principle (PHP) [11], the greater-than (ordering) principle (GT) [22], the mutilated chessboard principle (CB) [3], and the parity principle (PAR) [2]. For each principle, we synthesize 50 MaxSAT instances of increasing sizes where all problem instances are not satisfiable. In other words, there is at least one clause that cannot be satisfied, and the goal of MaxSAT solvers to find a feasible variable assignment that maximizes satisfiability. Synthesis scripts are available in Appendix A.

Comparing with Existing MaxSAT Solvers. As discussed in Section 2, existing MaxSAT algorithms proceed by making several calls to an underlying SAT oracle (i.e. solver). In PySAT, both the RC2 and the FM algorithms start their solving process by making a call to the underlying SAT solver, which reports that is unsatisfiable and returns an unsatisfiable core, . The algorithm proceeds by alternating between relaxing clauses and calling the underlying SAT solver. As depicted in Figure 3, both methods struggle to find any assignment for moderately large problem instances. This is primarily due to their initial step, which involves invoking the SAT solver to establish unsatisfiability. Conversely, the LSU algorithm identifies an initial assignment carrying a suboptimal cost, then incrementally searches for superior assignments to lower the cost. This explains its position as the top-performing algorithm on the dataset. Our proposed method, torchmSAT, excels at progressively generating viable solutions that surpass those of FM and RC2. Indeed, when considering the GT dataset, torchmSAT’s performance approaches that of LSU.

In Table 1, we compute the average regret of RC2, FM, and torchmSAT relative to LSU, the top-performing MaxSAT solver. For every problem instance, we calculate a solver’s regret as the difference between the cost it achieves and the cost LSU achieves. As the results clearly show, torchmSAT generally demonstrates a markedly lower average regret. Raw results for individual problem instances can be found in Appendix B.

GPU Acceleration. The main premise of our method is the novel application of contemporary GPUs and the evolving ecosystem of deep learning libraries and accelerators in solving MaxSAT instances. In this section, we aim to demonstrate that by merely altering where the neural network is initialized, without any modifications to its structure or the solving process described earlier. As shown in Figure 4, executing torchmSAT on a GPU yields solutions to larger MaxSAT instances with lower costs within the same time limit. This can be attributed to the enhanced speed of GPU computations, which accelerates the forward-loss-backward loop (Algorithm 1), thereby enabling more extensive exploration of the feasible region of variable assignments.

The use of GPUs in torchmSAT advances MaxSAT solving, offering an accelerated and efficient exploration of the solution space. This acceleration is particularly transformative given that the algorithm’s progressive nature means it benefits directly from more rapid computations, enabling it to find better solutions within the same time frame. In contrast, traditional MaxSAT solvers are inherently sequential and cannot take advantage of GPU acceleration.

6 Discussion

| RC2 | FM | torchmSAT | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Dataset | 1min | 5mins | 10mins | 1min | 5mins | 10mins | 1min | 5mins | 10mins |

| CB | 12396 | 12396 | 12396 | 12408 | 12408 | 12408 | 1981 | 1496 | 1148 |

| GT | 31852 | 31245 | 30425 | 32552 | 32467 | 32206 | 30 | 13 | 2 |

| PAR | 130013 | 130013 | 130013 | 130040 | 130013 | 130013 | 28272 | 13363 | 11777 |

| PHP | 16688 | 16676 | 16676 | 16688 | 16688 | 16688 | 1384 | 55 | 47 |

|

|

Limitations. While our proposed method presents several notable advantages, it also has some limitations. First, it currently only works for unweighted MaxSAT instances, meaning it cannot handle instances where different clauses have different weights or importance. Secondly, the algorithm lacks a definitive stopping criterion for backpropagation unless the optimal solution is zero. This means it can be difficult to determine when the algorithm has reached an optimal solution or when to halt the process except using timeouts. Lastly, the memory requirement for the method is , which can be prohibitive for large instances. Although the matrix W is sparse, which could potentially be leveraged to save memory, our current implementation does not take advantage of this sparsity.

Conclusions and Future Work. In conclusion, we have presented a new method for MaxSAT solving that capitalizes on the use of neural networks. Our method, named torchmSAT, is a progressive approach that continually refines and improves its solutions over time. One of the core advantages of torchmSAT is its independence from a SAT oracle, a feature that differentiates it from traditional MaxSAT solvers. This makes our method more self-sufficient and less reliant on external components. Experimental results show that our method outperforms two existing MaxSAT solvers, and is on par with another state-of-the-art solver for small to medium problem sizes. Additionally, torchmSAT is able to benefit from GPU acceleration, allowing for more rapid exploration of feasible solution regions. Despite some limitations, torchmSAT represents a promising step forward in MaxSAT problem solving. For future work, we aim to extend the capabilities of our method to handle weighted MaxSAT instances, develop a clear stopping criteria for backpropagation, and optimize memory usage by leveraging the sparsity of the matrix . This could lead to a more efficient, versatile and powerful solver that can tackle even more complex problems.

Reproducibility. The implementation of torchmSAT is provided as a Python package in the supplementary material. The dataset can be synthesized using scripts in Appendix A.

References

- Agmon [1954] Shmuel Agmon. The relaxation method for linear inequalities. Canadian Journal of Mathematics, 6:382–392, 1954.

- Ajtai [1990] Miklos Ajtai. Parity and the pigeonhole principle. In Feasible Mathematics: A Mathematical Sciences Institute Workshop, Ithaca, New York, June 1989, pages 1–24. Springer, 1990.

- Alekhnovich [2004] Michael Alekhnovich. Mutilated chessboard problem is exponentially hard for resolution. Theoretical Computer Science, 310(1-3):513–525, 2004.

- Alkhouri et al. [2022] Ismail R Alkhouri, George K Atia, and Alvaro Velasquez. A differentiable approach to the maximum independent set problem using dataless neural networks. Neural Networks, 155:168–176, 2022.

- Ansótegui et al. [2013] Carlos Ansótegui, Maria Luisa Bonet, and Jordi Levy. SAT-based MaxSAT algorithms. Artificial Intelligence, 196:77–105, 2013.

- Audemard and Simon [2018] Gilles Audemard and Laurent Simon. On the glucose SAT solver. International Journal on Artificial Intelligence Tools, 27(01):1840001, 2018.

- Bello et al. [2016] Irwan Bello, Hieu Pham, Quoc V Le, Mohammad Norouzi, and Samy Bengio. Neural combinatorial optimization with reinforcement learning. arXiv preprint arXiv:1611.09940, 2016.

- Bengio et al. [2021] Yoshua Bengio, Andrea Lodi, and Antoine Prouvost. Machine learning for combinatorial optimization: a methodological tour d’horizon. European Journal of Operational Research, 290(2):405–421, 2021.

- Berden et al. [2022] Senne Berden, Mohit Kumar, Samuel Kolb, and Tias Guns. Learning MAX-SAT models from examples using genetic algorithms and knowledge compilation. In 28th International Conference on Principles and Practice of Constraint Programming (CP 2022). Schloss Dagstuhl-Leibniz-Zentrum für Informatik, 2022.

- Biere et al. [2009] Armin Biere, Marijn Heule, and Hans van Maaren. Handbook of satisfiability, volume 185. IOS press, 2009.

- Cook and Reckhow [1979] Stephen A Cook and Robert A Reckhow. The relative efficiency of propositional proof systems. The journal of symbolic logic, 44(1):36–50, 1979.

- Fleury and Heisinger [2020] ABKFM Fleury and Maximilian Heisinger. CaDiCaL, kissat, paracooba, plingeling and treengeling entering the sat competition 2020. SAT COMPETITION, 2020:50, 2020.

- Gasse et al. [2019] Maxime Gasse, Didier Chételat, Nicola Ferroni, Laurent Charlin, and Andrea Lodi. Exact combinatorial optimization with graph convolutional neural networks. Advances in neural information processing systems, 32, 2019.

- Hu et al. [2022] Xinyi Hu, Jasper Lee, Jimmy Lee, and Allen Z Zhong. Branch & learn for recursively and iteratively solvable problems in predict+ optimize. Advances in Neural Information Processing Systems, 35:25807–25817, 2022.

- Ignatiev et al. [2018] Alexey Ignatiev, Antonio Morgado, and Joao Marques-Silva. PySAT: A Python toolkit for prototyping with SAT oracles. In SAT, pages 428–437, 2018. doi: 10.1007/978-3-319-94144-8_26. URL https://doi.org/10.1007/978-3-319-94144-8_26.

- Ignatiev et al. [2019] Alexey Ignatiev, Antonio Morgado, and Joao Marques-Silva. RC2: an efficient maxsat solver. Journal on Satisfiability, Boolean Modeling and Computation, 11(1):53–64, 2019.

- Ivančić et al. [2008] Franjo Ivančić, Zijiang Yang, Malay K Ganai, Aarti Gupta, and Pranav Ashar. Efficient sat-based bounded model checking for software verification. Theoretical Computer Science, 404(3):256–274, 2008.

- Karp [1972] Richard M. Karp. Reducibility among Combinatorial Problems. In Raymond E. Miller, James W. Thatcher, and Jean D. Bohlinger, editors, Complexity of Computer Computations, pages 85–103. Springer US, Boston, MA, 1972. ISBN 978-1-4684-2001-2. doi: 10.1007/978-1-4684-2001-2_9.

- Khalil et al. [2017a] Elias Khalil, Hanjun Dai, Yuyu Zhang, Bistra Dilkina, and Le Song. Learning combinatorial optimization algorithms over graphs. Advances in neural information processing systems, 30, 2017a.

- Khalil et al. [2017b] Elias B Khalil, Bistra Dilkina, George L Nemhauser, Shabbir Ahmed, and Yufen Shao. Learning to run heuristics in tree search. In IJCAI, pages 659–666, 2017b.

- Kool et al. [2018] Wouter Kool, Herke Van Hoof, and Max Welling. Attention, learn to solve routing problems! arXiv preprint arXiv:1803.08475, 2018.

- Krishnamurthy [1985] Balakrishnan Krishnamurthy. Short proofs for tricky formulas. Acta informatica, 22:253–275, 1985.

- Kumar et al. [2023] Mohit Kumar, Samuel Kolb, Stefano Teso, and Luc De Raedt. Learning MAX-SAT from contextual examples for combinatorial optimisation. Artificial Intelligence, 314:103794, 2023.

- Li et al. [2022] Chu-Min Li, Zhenxing Xu, Jordi Coll, Felip Manyà, Djamal Habet, and Kun He. Boosting branch-and-bound MaxSAT solvers with clause learning. AI Communications, 35(2):131–151, 2022.

- Li and Si [2022] Zhaoyu Li and Xujie Si. NSNet: A General Neural Probabilistic Framework for Satisfiability Problems. In S. Koyejo, S. Mohamed, A. Agarwal, D. Belgrave, K. Cho, and A. Oh, editors, Advances in Neural Information Processing Systems, volume 35, pages 25573–25585. Curran Associates, Inc., 2022. URL https://proceedings.neurips.cc/paper_files/paper/2022/file/a40462acc6959034c6aa6dfb8e696415-Paper-Conference.pdf.

- Li et al. [2018] Zhuwen Li, Qifeng Chen, and Vladlen Koltun. Combinatorial optimization with graph convolutional networks and guided tree search. Advances in neural information processing systems, 31, 2018.

- Manquinho et al. [2009] Vasco Manquinho, Joao Marques-Silva, and Jordi Planes. Algorithms for weighted boolean optimization. In Theory and Applications of Satisfiability Testing-SAT 2009: 12th International Conference, SAT 2009, Swansea, UK, June 30-July 3, 2009. Proceedings 12, pages 495–508. Springer, 2009.

- Marino [2021] Raffaele Marino. Learning from survey propagation: a neural network for max-e-3-sat. Machine Learning: Science and Technology, 2(3):035032, 2021.

- Martins et al. [2014] Ruben Martins, Saurabh Joshi, Vasco Manquinho, and Inês Lynce. Incremental cardinality constraints for maxsat. In Principles and Practice of Constraint Programming: 20th International Conference, CP 2014, Lyon, France, September 8-12, 2014. Proceedings 20, pages 531–548. Springer, 2014.

- Mazyavkina et al. [2021] Nina Mazyavkina, Sergey Sviridov, Sergei Ivanov, and Evgeny Burnaev. Reinforcement learning for combinatorial optimization: A survey. Computers & Operations Research, 134:105400, 2021.

- Morgado et al. [2013] António Morgado, Federico Heras, Mark Liffiton, Jordi Planes, and Joao Marques-Silva. Iterative and core-guided MaxSAT solving: A survey and assessment. Constraints, 18:478–534, 2013.

- Morgado et al. [2014a] António Morgado, Carmine Dodaro, and Joao Marques-Silva. Core-guided MaxSAT with soft cardinality constraints. In Principles and Practice of Constraint Programming: 20th International Conference, CP 2014, Lyon, France, September 8-12, 2014. Proceedings 20, pages 564–573. Springer, 2014a.

- Morgado et al. [2014b] Antonio Morgado, Alexey Ignatiev, and Joao Marques-Silva. MSCG: Robust core-guided MaxSAT solving. Journal on Satisfiability, Boolean Modeling and Computation, 9(1):129–134, 2014b.

- Nieuwenhuis et al. [2006] Robert Nieuwenhuis, Albert Oliveras, and Cesare Tinelli. Solving SAT and SAT modulo theories: From an abstract davis–putnam–logemann–loveland procedure to dpll (t). Journal of the ACM (JACM), 53(6):937–977, 2006.

- Paszke et al. [2019] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems, 32, 2019.

- Schuetz et al. [2022] Martin JA Schuetz, J Kyle Brubaker, and Helmut G Katzgraber. Combinatorial optimization with physics-inspired graph neural networks. Nature Machine Intelligence, 4(4):367–377, 2022.

- Selsam and Bjørner [2019] Daniel Selsam and Nikolaj Bjørner. Guiding high-performance SAT solvers with unsat-core predictions. In Theory and Applications of Satisfiability Testing–SAT 2019: 22nd International Conference, SAT 2019, Lisbon, Portugal, July 9–12, 2019, Proceedings 22, pages 336–353. Springer, 2019.

- Selsam et al. [2019] Daniel Selsam, Matthew Lamm, Benedikt Bünz, Percy Liang, Leonardo de Moura, and David L. Dill. Learning a SAT solver from single-bit supervision. In International Conference on Learning Representations, 2019. URL https://openreview.net/forum?id=HJMC_iA5tm.

- Shabani and Alizadeh [2018] Ahmad Shabani and Bijan Alizadeh. PMTP: A MAX-SAT-based approach to detect hardware trojan using propagation of maximum transition probability. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 39(1):25–33, 2018.

- Si et al. [2017] Xujie Si, Xin Zhang, Radu Grigore, and Mayur Naik. Maximum satisfiability in software analysis: Applications and techniques. In Computer Aided Verification: 29th International Conference, CAV 2017, Heidelberg, Germany, July 24-28, 2017, Proceedings, Part I 30, pages 68–94. Springer, 2017.

- Sorensson and Een [2005] Niklas Sorensson and Niklas Een. Minisat v1. 13-a sat solver with conflict-clause minimization. SAT, 53(2005):1–2, 2005.

- Tarjan and Trojanowski [1977] Robert Endre Tarjan and Anthony E Trojanowski. Finding a maximum independent set. SIAM Journal on Computing, 6(3):537–546, 1977.

- Vaezipoor et al. [2021] Pashootan Vaezipoor, Gil Lederman, Yuhuai Wu, Chris Maddison, Roger B Grosse, Sanjit A Seshia, and Fahiem Bacchus. Learning branching heuristics for propositional model counting. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, pages 12427–12435, 2021.

- Wang et al. [2021] Runzhong Wang, Zhigang Hua, Gan Liu, Jiayi Zhang, Junchi Yan, Feng Qi, Shuang Yang, Jun Zhou, and Xiaokang Yang. A bi-level framework for learning to solve combinatorial optimization on graphs. Advances in Neural Information Processing Systems, 34:21453–21466, 2021.

Appendices

Appendix A Dataset Details

We employ PySAT to synthesize four representative datasets, encompassing combinatorial principles extensively examined in the context of propositional proof complexity. Specifically, they implement encodings for the pigeonhole principle (PHP) [11], the greater-than (ordering) principle (GT) [22], the mutilated chessboard principle (CB) [3], and the parity principle (PAR) [2]. For each principle, we synthesize 50 MaxSAT instances of increasing sizes where all problem instances are not satisfiable. In other words, there is at least one clause that cannot be satisfied, and the goal of MaxSAT solvers to find a feasible variable assignment that maximizes satisfiability.

Below is the synthesis script.

For each dataset, we provide detailed statistics on the number of Boolean variables and clauses in Table 2.

| CB [3] | GT [22] | PAR [2] | PHP [11] | |||||

|---|---|---|---|---|---|---|---|---|

| # vars | # clauses | # vars | # clauses | # vars | # clauses | # vars | # clauses | |

| 1 | 20 | 32 | 2 | 3 | 3 | 6 | 2 | 3 |

| 2 | 56 | 90 | 6 | 12 | 10 | 35 | 6 | 9 |

| 3 | 108 | 176 | 12 | 34 | 21 | 112 | 12 | 22 |

| 4 | 176 | 290 | 20 | 75 | 36 | 261 | 20 | 45 |

| 5 | 260 | 432 | 30 | 141 | 55 | 506 | 30 | 81 |

| 6 | 360 | 602 | 42 | 238 | 78 | 871 | 42 | 133 |

| 7 | 476 | 800 | 56 | 372 | 105 | 1380 | 56 | 204 |

| 8 | 608 | 1026 | 72 | 549 | 136 | 2057 | 72 | 297 |

| 9 | 756 | 1280 | 90 | 775 | 171 | 2926 | 90 | 415 |

| 10 | 920 | 1562 | 110 | 1056 | 210 | 4011 | 110 | 561 |

| 11 | 1100 | 1872 | 132 | 1398 | 253 | 5336 | 132 | 738 |

| 12 | 1296 | 2210 | 156 | 1807 | 300 | 6925 | 156 | 949 |

| 13 | 1508 | 2576 | 182 | 2289 | 351 | 8802 | 182 | 1197 |

| 14 | 1736 | 2970 | 210 | 2850 | 406 | 10991 | 210 | 1485 |

| 15 | 1980 | 3392 | 240 | 3496 | 465 | 13516 | 240 | 1816 |

| 16 | 2240 | 3842 | 272 | 4233 | 528 | 16401 | 272 | 2193 |

| 17 | 2516 | 4320 | 306 | 5067 | 595 | 19670 | 306 | 2619 |

| 18 | 2808 | 4826 | 342 | 6004 | 666 | 23347 | 342 | 3097 |

| 19 | 3116 | 5360 | 380 | 7050 | 741 | 27456 | 380 | 3630 |

| 20 | 3440 | 5922 | 420 | 8211 | 820 | 32021 | 420 | 4221 |

| 21 | 3780 | 6512 | 462 | 9493 | 903 | 37066 | 462 | 4873 |

| 22 | 4136 | 7130 | 506 | 10902 | 990 | 42615 | 506 | 5589 |

| 23 | 4508 | 7776 | 552 | 12444 | 1081 | 48692 | 552 | 6372 |

| 24 | 4896 | 8450 | 600 | 14125 | 1176 | 55321 | 600 | 7225 |

| 25 | 5300 | 9152 | 650 | 15951 | 1275 | 62526 | 650 | 8151 |

| 26 | 5720 | 9882 | 702 | 17928 | 1378 | 70331 | 702 | 9153 |

| 27 | 6156 | 10640 | 756 | 20062 | 1485 | 78760 | 756 | 10234 |

| 28 | 6608 | 11426 | 812 | 22359 | 1596 | 87837 | 812 | 11397 |

| 29 | 7076 | 12240 | 870 | 24825 | 1711 | 97586 | 870 | 12645 |

| 30 | 7560 | 13082 | 930 | 27466 | 1830 | 108031 | 930 | 13981 |

| 31 | 8060 | 13952 | 992 | 30288 | 1953 | 119196 | 992 | 15408 |

| 32 | 8576 | 14850 | 1056 | 33297 | 2080 | 131105 | 1056 | 16929 |

| 33 | 9108 | 15776 | 1122 | 36499 | 2211 | 143782 | 1122 | 18547 |

| 34 | 9656 | 16730 | 1190 | 39900 | 2346 | 157251 | 1190 | 20265 |

| 35 | 10220 | 17712 | 1260 | 43506 | 2485 | 171536 | 1260 | 22086 |

| 36 | 10800 | 18722 | 1332 | 47323 | 2628 | 186661 | 1332 | 24013 |

| 37 | 11396 | 19760 | 1406 | 51357 | 2775 | 202650 | 1406 | 26049 |

| 38 | 12008 | 20826 | 1482 | 55614 | 2926 | 219527 | 1482 | 28197 |

| 39 | 12636 | 21920 | 1560 | 60100 | 3081 | 237316 | 1560 | 30460 |

| 40 | 13280 | 23042 | 1640 | 64821 | 3240 | 256041 | 1640 | 32841 |

| 41 | 13940 | 24192 | 1722 | 69783 | 3403 | 275726 | 1722 | 35343 |

| 42 | 14616 | 25370 | 1806 | 74992 | 3570 | 296395 | 1806 | 37969 |

| 43 | 15308 | 26576 | 1892 | 80454 | 3741 | 318072 | 1892 | 40722 |

| 44 | 16016 | 27810 | 1980 | 86175 | 3916 | 340781 | 1980 | 43605 |

| 45 | 16740 | 29072 | 2070 | 92161 | 4095 | 364546 | 2070 | 46621 |

| 46 | 17480 | 30362 | 2162 | 98418 | 4278 | 389391 | 2162 | 49773 |

| 47 | 18236 | 31680 | 2256 | 104952 | 4465 | 415340 | 2256 | 53064 |

| 48 | 19008 | 33026 | 2352 | 111769 | 4656 | 442417 | 2352 | 56497 |

| 49 | 19796 | 34400 | 2450 | 118875 | 4851 | 470646 | 2450 | 60075 |

| 50 | 20600 | 35802 | 2550 | 126276 | 5050 | 500051 | 2550 | 63801 |

Appendix B Raw Results

In Table 4, we provide the raw results discussed in the Experiments Section 5. It is a detailed expansion of Table 1. The goal is to give a deeper look into Figure 3, and see how numbers relate to each other.

| RC2 | FM | LSU | torchmSAT | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | ||

| CB | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 |

| 2 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | |

| 3 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 4 | 2 | 2 | |

| 4 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 11 | 7 | 6 | |

| 5 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 14 | 13 | 13 | |

| 6 | 1 | 1 | 1 | 602 | 602 | 602 | 2 | 2 | 2 | 27 | 21 | 21 | |

| 7 | 800 | 800 | 800 | 800 | 800 | 800 | 2 | 2 | 2 | 30 | 30 | 30 | |

| 8 | 1026 | 1026 | 1026 | 1026 | 1026 | 1026 | 2 | 2 | 2 | 55 | 54 | 53 | |

| 9 | 1280 | 1280 | 1280 | 1280 | 1280 | 1280 | 2 | 2 | 2 | 63 | 63 | 63 | |

| 10 | 1562 | 1562 | 1562 | 1562 | 1562 | 1562 | 2 | 2 | 2 | 74 | 74 | 74 | |

| 11 | 1872 | 1872 | 1872 | 1872 | 1872 | 1872 | 2 | 2 | 2 | 99 | 99 | 99 | |

| 12 | 2210 | 2210 | 2210 | 2210 | 2210 | 2210 | 2 | 2 | 2 | 105 | 105 | 105 | |

| 13 | 2576 | 2576 | 2576 | 2576 | 2576 | 2576 | 2 | 2 | 2 | 153 | 153 | 153 | |

| 14 | 2970 | 2970 | 2970 | 2970 | 2970 | 2970 | 2 | 2 | 2 | 132 | 132 | 132 | |

| 15 | 3392 | 3392 | 3392 | 3392 | 3392 | 3392 | 2 | 2 | 2 | 193 | 193 | 193 | |

| 16 | 3842 | 3842 | 3842 | 3842 | 3842 | 3842 | 2 | 2 | 2 | 230 | 230 | 230 | |

| 17 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 2 | 2 | 2 | 260 | 260 | 260 | |

| 18 | 4826 | 4826 | 4826 | 4826 | 4826 | 4826 | 2 | 2 | 2 | 304 | 304 | 304 | |

| 19 | 5360 | 5360 | 5360 | 5360 | 5360 | 5360 | 2 | 2 | 2 | 288 | 288 | 288 | |

| 20 | 5922 | 5922 | 5922 | 5922 | 5922 | 5922 | 2 | 2 | 2 | 317 | 317 | 317 | |

| 21 | 6512 | 6512 | 6512 | 6512 | 6512 | 6512 | 2 | 2 | 2 | 364 | 364 | 364 | |

| 22 | 7130 | 7130 | 7130 | 7130 | 7130 | 7130 | 2 | 2 | 2 | 391 | 391 | 391 | |

| 23 | 7776 | 7776 | 7776 | 7776 | 7776 | 7776 | 2 | 2 | 2 | 550 | 441 | 441 | |

| 24 | 8450 | 8450 | 8450 | 8450 | 8450 | 8450 | 2 | 2 | 2 | 661 | 489 | 489 | |

| 25 | 9152 | 9152 | 9152 | 9152 | 9152 | 9152 | 2 | 2 | 2 | 1426 | 502 | 502 | |

| 26 | 9882 | 9882 | 9882 | 9882 | 9882 | 9882 | 2 | 2 | 2 | 1569 | 605 | 605 | |

| 27 | 10640 | 10640 | 10640 | 10640 | 10640 | 10640 | 3 | 2 | 2 | 1687 | 698 | 698 | |

| 28 | 11426 | 11426 | 11426 | 11426 | 11426 | 11426 | 3 | 2 | 2 | 1833 | 725 | 725 | |

| 29 | 12240 | 12240 | 12240 | 12240 | 12240 | 12240 | 4 | 2 | 2 | 1995 | 787 | 787 | |

| 30 | 13082 | 13082 | 13082 | 13082 | 13082 | 13082 | 3 | 3 | 3 | 2116 | 799 | 799 | |

| 31 | 13952 | 13952 | 13952 | 13952 | 13952 | 13952 | 5 | 5 | 5 | 2280 | 887 | 887 | |

| 32 | 14850 | 14850 | 14850 | 14850 | 14850 | 14850 | 4 | 3 | 3 | 2423 | 941 | 941 | |

| 33 | 15776 | 15776 | 15776 | 15776 | 15776 | 15776 | 4 | 4 | 3 | 2614 | 920 | 920 | |

| 34 | 16730 | 16730 | 16730 | 16730 | 16730 | 16730 | 6 | 6 | 5 | 2790 | 1093 | 1050 | |

| 35 | 17712 | 17712 | 17712 | 17712 | 17712 | 17712 | 5 | 2 | 2 | 3030 | 1257 | 1117 | |

| 36 | 18722 | 18722 | 18722 | 18722 | 18722 | 18722 | 7 | 7 | 7 | 3227 | 1608 | 1265 | |

| 37 | 19760 | 19760 | 19760 | 19760 | 19760 | 19760 | 5 | 5 | 5 | 3433 | 1536 | 1234 | |

| 38 | 20826 | 20826 | 20826 | 20826 | 20826 | 20826 | 10 | 10 | 9 | 3669 | 1641 | 1364 | |

| 39 | 21920 | 21920 | 21920 | 21920 | 21920 | 21920 | 15 | 15 | 15 | 3797 | 3599 | 1426 | |

| 40 | 23042 | 23042 | 23042 | 23042 | 23042 | 23042 | 6 | 6 | 6 | 4036 | 3785 | 1502 | |

| 41 | 24192 | 24192 | 24192 | 24192 | 24192 | 24192 | 7 | 7 | 7 | 4268 | 4002 | 1688 | |

| 42 | 25370 | 25370 | 25370 | 25370 | 25370 | 25370 | 10 | 10 | 10 | 4465 | 4176 | 1849 | |

| 43 | 26576 | 26576 | 26576 | 26576 | 26576 | 26576 | 9 | 9 | 9 | 4701 | 4424 | 2097 | |

| 44 | 27810 | 27810 | 27810 | 27810 | 27810 | 27810 | 5 | 5 | 5 | 4855 | 4606 | 2242 | |

| 45 | 29072 | 29072 | 29072 | 29072 | 29072 | 29072 | 13 | 13 | 13 | 5109 | 4738 | 2307 | |

| 46 | 30362 | 30362 | 30362 | 30362 | 30362 | 30362 | 12 | 12 | 12 | 5449 | 5034 | 5025 | |

| 47 | 31680 | 31680 | 31680 | 31680 | 31680 | 31680 | 22 | 22 | 22 | 5621 | 5286 | 5243 | |

| 48 | 33026 | 33026 | 33026 | 33026 | 33026 | 33026 | 9 | 9 | 9 | 5857 | 5460 | 5460 | |

| 49 | 34400 | 34400 | 34400 | 34400 | 34400 | 34400 | 16 | 16 | 16 | 6182 | 5740 | 5736 | |

| 50 | 35802 | 35802 | 35802 | 35802 | 35802 | 35802 | 29 | 29 | 29 | 6297 | 5945 | 5936 | |

| Continued on next page | |||||||||||||

| RC2 | FM | LSU | torchmSAT | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | ||

| GT | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 6 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 7 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 8 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 9 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 10 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 11 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 12 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 13 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 14 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 15 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 16 | 1 | 1 | 1 | 4233 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 17 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 18 | 1 | 1 | 1 | 6004 | 6004 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 19 | 1 | 1 | 1 | 7050 | 7050 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 20 | 1 | 1 | 1 | 8211 | 8211 | 8211 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 21 | 1 | 1 | 1 | 9493 | 9493 | 9493 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 22 | 10902 | 10902 | 1 | 10902 | 10902 | 10902 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 23 | 12444 | 1 | 1 | 12444 | 12444 | 12444 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 24 | 14125 | 14125 | 1 | 14125 | 14125 | 14125 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 25 | 15951 | 15951 | 1 | 15951 | 15951 | 15951 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 26 | 17928 | 1 | 1 | 17928 | 17928 | 17928 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 27 | 20062 | 20062 | 20062 | 20062 | 20062 | 20062 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 28 | 22359 | 22359 | 22359 | 22359 | 22359 | 22359 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 29 | 24825 | 24825 | 24825 | 24825 | 24825 | 24825 | 1 | 1 | 1 | 9 | 1 | 1 | |

| 30 | 27466 | 27466 | 27466 | 27466 | 27466 | 27466 | 1 | 1 | 1 | 10 | 1 | 1 | |

| 31 | 30288 | 30288 | 30288 | 30288 | 30288 | 30288 | 1 | 1 | 1 | 17 | 1 | 1 | |

| 32 | 33297 | 33297 | 33297 | 33297 | 33297 | 33297 | 4 | 1 | 1 | 19 | 1 | 1 | |

| 33 | 36499 | 36499 | 36499 | 36499 | 36499 | 36499 | 9 | 1 | 1 | 24 | 1 | 1 | |

| 34 | 39900 | 39900 | 39900 | 39900 | 39900 | 39900 | 1 | 1 | 1 | 17 | 1 | 1 | |

| 35 | 43506 | 43506 | 43506 | 43506 | 43506 | 43506 | 3 | 1 | 1 | 31 | 1 | 1 | |

| 36 | 47323 | 47323 | 47323 | 47323 | 47323 | 47323 | 1 | 1 | 1 | 80 | 2 | 1 | |

| 37 | 51357 | 51357 | 51357 | 51357 | 51357 | 51357 | 2 | 2 | 2 | 17 | 5 | 1 | |

| 38 | 55614 | 55614 | 55614 | 55614 | 55614 | 55614 | 4 | 4 | 2 | 39 | 3 | 1 | |

| 39 | 60100 | 60100 | 60100 | 60100 | 60100 | 60100 | 3 | 3 | 3 | 26 | 26 | 1 | |

| 40 | 64821 | 64821 | 64821 | 64821 | 64821 | 64821 | 3 | 3 | 3 | 22 | 9 | 3 | |

| 41 | 69783 | 69783 | 69783 | 69783 | 69783 | 69783 | 1 | 1 | 1 | 59 | 59 | 1 | |

| 42 | 74992 | 74992 | 74992 | 74992 | 74992 | 74992 | 1 | 1 | 1 | 28 | 27 | 1 | |

| 43 | 80454 | 80454 | 80454 | 80454 | 80454 | 80454 | 2 | 2 | 2 | 121 | 13 | 2 | |

| 44 | 86175 | 86175 | 86175 | 86175 | 86175 | 86175 | 4 | 4 | 4 | 85 | 73 | 3 | |

| 45 | 92161 | 92161 | 92161 | 92161 | 92161 | 92161 | 6 | 6 | 6 | 157 | 67 | 2 | |

| 46 | 98418 | 98418 | 98418 | 98418 | 98418 | 98418 | 3 | 3 | 3 | 173 | 49 | 3 | |

| 47 | 104952 | 104952 | 104952 | 104952 | 104952 | 104952 | 3 | 3 | 3 | 121 | 51 | 17 | |

| 48 | 111769 | 111769 | 111769 | 111769 | 111769 | 111769 | 1 | 1 | 1 | 167 | 94 | 1 | |

| 49 | 118875 | 118875 | 118875 | 118875 | 118875 | 118875 | 3 | 3 | 3 | 84 | 84 | 30 | |

| 50 | 126276 | 126276 | 126276 | 126276 | 126276 | 126276 | 3 | 3 | 3 | 167 | 92 | 2 | |

| Continued on next page | |||||||||||||

| RC2 | FM | LSU | torchmSAT | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | ||

| PAR | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 3 | 1 | 1 | |

| 5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 3 | 3 | 3 | |

| 6 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 5 | 3 | 3 | |

| 7 | 1 | 1 | 1 | 1380 | 1 | 1 | 1 | 1 | 1 | 7 | 7 | 7 | |

| 8 | 2057 | 2057 | 2057 | 2057 | 2057 | 2057 | 1 | 1 | 1 | 7 | 7 | 7 | |

| 9 | 2926 | 2926 | 2926 | 2926 | 2926 | 2926 | 1 | 1 | 1 | 5 | 5 | 5 | |

| 10 | 4011 | 4011 | 4011 | 4011 | 4011 | 4011 | 1 | 1 | 1 | 9 | 9 | 9 | |

| 11 | 5336 | 5336 | 5336 | 5336 | 5336 | 5336 | 1 | 1 | 1 | 11 | 11 | 9 | |

| 12 | 6925 | 6925 | 6925 | 6925 | 6925 | 6925 | 1 | 1 | 1 | 15 | 10 | 10 | |

| 13 | 8802 | 8802 | 8802 | 8802 | 8802 | 8802 | 1 | 1 | 1 | 17 | 11 | 11 | |

| 14 | 10991 | 10991 | 10991 | 10991 | 10991 | 10991 | 1 | 1 | 1 | 17 | 17 | 15 | |

| 15 | 13516 | 13516 | 13516 | 13516 | 13516 | 13516 | 1 | 1 | 1 | 19 | 17 | 17 | |

| 16 | 16401 | 16401 | 16401 | 16401 | 16401 | 16401 | 1 | 1 | 1 | 23 | 19 | 19 | |

| 17 | 19670 | 19670 | 19670 | 19670 | 19670 | 19670 | 1 | 1 | 1 | 23 | 23 | 23 | |

| 18 | 23347 | 23347 | 23347 | 23347 | 23347 | 23347 | 1 | 1 | 1 | 32 | 28 | 25 | |

| 19 | 27456 | 27456 | 27456 | 27456 | 27456 | 27456 | 1 | 1 | 1 | 38 | 32 | 28 | |

| 20 | 32021 | 32021 | 32021 | 32021 | 32021 | 32021 | 1 | 1 | 1 | 134 | 33 | 31 | |

| 21 | 37066 | 37066 | 37066 | 37066 | 37066 | 37066 | 1 | 1 | 1 | 4553 | 30 | 30 | |

| 22 | 42615 | 42615 | 42615 | 42615 | 42615 | 42615 | 1 | 1 | 1 | 5085 | 34 | 34 | |

| 23 | 48692 | 48692 | 48692 | 48692 | 48692 | 48692 | 1 | 1 | 1 | 5745 | 31 | 31 | |

| 24 | 55321 | 55321 | 55321 | 55321 | 55321 | 55321 | 1 | 1 | 1 | 6344 | 38 | 38 | |

| 25 | 62526 | 62526 | 62526 | 62526 | 62526 | 62526 | 1 | 1 | 1 | 7490 | 45 | 40 | |

| 26 | 70331 | 70331 | 70331 | 70331 | 70331 | 70331 | 1 | 1 | 1 | 8380 | 72 | 46 | |

| 27 | 78760 | 78760 | 78760 | 78760 | 78760 | 78760 | 1 | 1 | 1 | 9294 | 51 | 38 | |

| 28 | 87837 | 87837 | 87837 | 87837 | 87837 | 87837 | 1 | 1 | 1 | 10574 | 107 | 55 | |

| 29 | 97586 | 97586 | 97586 | 97586 | 97586 | 97586 | 1 | 1 | 1 | 11300 | 89 | 73 | |

| 30 | 108031 | 108031 | 108031 | 108031 | 108031 | 108031 | 1 | 1 | 1 | 12677 | 4705 | 53 | |

| 31 | 119196 | 119196 | 119196 | 119196 | 119196 | 119196 | 1 | 1 | 1 | 14268 | 4693 | 44 | |

| 32 | 131105 | 131105 | 131105 | 131105 | 131105 | 131105 | 1 | 1 | 1 | 15063 | 15063 | 126 | |

| 33 | 143782 | 143782 | 143782 | 143782 | 143782 | 143782 | 1 | 1 | 1 | 17086 | 17086 | 258 | |

| 34 | 157251 | 157251 | 157251 | 157251 | 157251 | 157251 | 1 | 1 | 1 | 18917 | 18917 | 466 | |

| 35 | 171536 | 171536 | 171536 | 171536 | 171536 | 171536 | 1 | 1 | 1 | 20864 | 20864 | 1249 | |

| 36 | 186661 | 186661 | 186661 | 186661 | 186661 | 186661 | 1 | 1 | 1 | 21759 | 21759 | 21759 | |

| 37 | 202650 | 202650 | 202650 | 202650 | 202650 | 202650 | 1 | 1 | 1 | 24458 | 24458 | 24458 | |

| 38 | 219527 | 219527 | 219527 | 219527 | 219527 | 219527 | 1 | 1 | 1 | 29072 | 26754 | 26754 | |

| 39 | 237316 | 237316 | 237316 | 237316 | 237316 | 237316 | 1 | 1 | 1 | 37080 | 28506 | 28506 | |

| 40 | 256041 | 256041 | 256041 | 256041 | 256041 | 256041 | 1 | 1 | 1 | 44488 | 30846 | 30846 | |

| 41 | 275726 | 275726 | 275726 | 275726 | 275726 | 275726 | 24 | 1 | 1 | 51984 | 32926 | 32926 | |

| 42 | 296395 | 296395 | 296395 | 296395 | 296395 | 296395 | 1 | 1 | 1 | 57826 | 34824 | 34824 | |

| 43 | 318072 | 318072 | 318072 | 318072 | 318072 | 318072 | 1 | 1 | 1 | 63468 | 37285 | 37285 | |

| 44 | 340781 | 340781 | 340781 | 340781 | 340781 | 340781 | 12 | 1 | 1 | 75878 | 41174 | 41174 | |

| 45 | 364546 | 364546 | 364546 | 364546 | 364546 | 364546 | 92 | 1 | 1 | 92245 | 43744 | 43744 | |

| 46 | 389391 | 389391 | 389391 | 389391 | 389391 | 389391 | 1 | 1 | 1 | 111230 | 46042 | 46042 | |

| 47 | 415340 | 415340 | 415340 | 415340 | 415340 | 415340 | 3 | 1 | 1 | 130513 | 49045 | 49045 | |

| 48 | 442417 | 442417 | 442417 | 442417 | 442417 | 442417 | 58 | 1 | 1 | 157500 | 52467 | 52467 | |

| 49 | 470646 | 470646 | 470646 | 470646 | 470646 | 470646 | 77 | 1 | 1 | 174228 | 55854 | 55854 | |

| 50 | 500051 | 500051 | 500051 | 500051 | 500051 | 500051 | 83 | 1 | 1 | 173900 | 60406 | 60406 | |

| Continued on next page | |||||||||||||

| RC2 | FM | LSU | torchmSAT | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | 1m | 5m | 10m | ||

| PHP | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 6 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 7 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | |

| 8 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | |

| 9 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 3 | 3 | 3 | |

| 10 | 561 | 1 | 1 | 561 | 561 | 561 | 1 | 1 | 1 | 3 | 3 | 3 | |

| 11 | 738 | 738 | 738 | 738 | 738 | 738 | 1 | 1 | 1 | 5 | 5 | 5 | |

| 12 | 949 | 949 | 949 | 949 | 949 | 949 | 1 | 1 | 1 | 4 | 4 | 4 | |

| 13 | 1197 | 1197 | 1197 | 1197 | 1197 | 1197 | 1 | 1 | 1 | 7 | 6 | 6 | |

| 14 | 1485 | 1485 | 1485 | 1485 | 1485 | 1485 | 1 | 1 | 1 | 9 | 8 | 8 | |

| 15 | 1816 | 1816 | 1816 | 1816 | 1816 | 1816 | 1 | 1 | 1 | 10 | 8 | 8 | |

| 16 | 2193 | 2193 | 2193 | 2193 | 2193 | 2193 | 1 | 1 | 1 | 10 | 10 | 10 | |

| 17 | 2619 | 2619 | 2619 | 2619 | 2619 | 2619 | 1 | 1 | 1 | 13 | 10 | 10 | |

| 18 | 3097 | 3097 | 3097 | 3097 | 3097 | 3097 | 1 | 1 | 1 | 15 | 11 | 11 | |

| 19 | 3630 | 3630 | 3630 | 3630 | 3630 | 3630 | 1 | 1 | 1 | 16 | 12 | 12 | |

| 20 | 4221 | 4221 | 4221 | 4221 | 4221 | 4221 | 1 | 1 | 1 | 13 | 13 | 13 | |

| 21 | 4873 | 4873 | 4873 | 4873 | 4873 | 4873 | 1 | 1 | 1 | 16 | 16 | 15 | |

| 22 | 5589 | 5589 | 5589 | 5589 | 5589 | 5589 | 1 | 1 | 1 | 20 | 19 | 19 | |

| 23 | 6372 | 6372 | 6372 | 6372 | 6372 | 6372 | 1 | 1 | 1 | 32 | 23 | 17 | |

| 24 | 7225 | 7225 | 7225 | 7225 | 7225 | 7225 | 1 | 1 | 1 | 23 | 23 | 22 | |

| 25 | 8151 | 8151 | 8151 | 8151 | 8151 | 8151 | 1 | 1 | 1 | 36 | 27 | 27 | |

| 26 | 9153 | 9153 | 9153 | 9153 | 9153 | 9153 | 1 | 1 | 1 | 37 | 37 | 30 | |

| 27 | 10234 | 10234 | 10234 | 10234 | 10234 | 10234 | 1 | 1 | 1 | 34 | 34 | 34 | |

| 28 | 11397 | 11397 | 11397 | 11397 | 11397 | 11397 | 1 | 1 | 1 | 45 | 31 | 31 | |

| 29 | 12645 | 12645 | 12645 | 12645 | 12645 | 12645 | 1 | 1 | 1 | 43 | 43 | 35 | |

| 30 | 13981 | 13981 | 13981 | 13981 | 13981 | 13981 | 1 | 1 | 1 | 54 | 54 | 43 | |

| 31 | 15408 | 15408 | 15408 | 15408 | 15408 | 15408 | 1 | 1 | 1 | 106 | 47 | 42 | |

| 32 | 16929 | 16929 | 16929 | 16929 | 16929 | 16929 | 1 | 1 | 1 | 75 | 55 | 55 | |

| 33 | 18547 | 18547 | 18547 | 18547 | 18547 | 18547 | 1 | 1 | 1 | 64 | 64 | 57 | |

| 34 | 20265 | 20265 | 20265 | 20265 | 20265 | 20265 | 1 | 1 | 1 | 130 | 49 | 49 | |

| 35 | 22086 | 22086 | 22086 | 22086 | 22086 | 22086 | 1 | 1 | 1 | 84 | 80 | 66 | |

| 36 | 24013 | 24013 | 24013 | 24013 | 24013 | 24013 | 1 | 1 | 1 | 191 | 86 | 85 | |

| 37 | 26049 | 26049 | 26049 | 26049 | 26049 | 26049 | 1 | 1 | 1 | 538 | 117 | 103 | |

| 38 | 28197 | 28197 | 28197 | 28197 | 28197 | 28197 | 1 | 1 | 1 | 1651 | 70 | 70 | |

| 39 | 30460 | 30460 | 30460 | 30460 | 30460 | 30460 | 1 | 1 | 1 | 3596 | 111 | 100 | |

| 40 | 32841 | 32841 | 32841 | 32841 | 32841 | 32841 | 1 | 1 | 1 | 3985 | 115 | 111 | |

| 41 | 35343 | 35343 | 35343 | 35343 | 35343 | 35343 | 1 | 1 | 1 | 4231 | 146 | 128 | |

| 42 | 37969 | 37969 | 37969 | 37969 | 37969 | 37969 | 1 | 1 | 1 | 4607 | 86 | 86 | |

| 43 | 40722 | 40722 | 40722 | 40722 | 40722 | 40722 | 1 | 1 | 1 | 4936 | 145 | 145 | |

| 44 | 43605 | 43605 | 43605 | 43605 | 43605 | 43605 | 1 | 1 | 1 | 5171 | 108 | 98 | |

| 45 | 46621 | 46621 | 46621 | 46621 | 46621 | 46621 | 1 | 1 | 1 | 5399 | 128 | 128 | |

| 46 | 49773 | 49773 | 49773 | 49773 | 49773 | 49773 | 1 | 1 | 1 | 5924 | 161 | 115 | |

| 47 | 53064 | 53064 | 53064 | 53064 | 53064 | 53064 | 1 | 1 | 1 | 6370 | 56 | 56 | |

| 48 | 56497 | 56497 | 56497 | 56497 | 56497 | 56497 | 1 | 1 | 1 | 7023 | 145 | 88 | |

| 49 | 60075 | 60075 | 60075 | 60075 | 60075 | 60075 | 1 | 1 | 1 | 7133 | 355 | 194 | |

| 50 | 63801 | 63801 | 63801 | 63801 | 63801 | 63801 | 1 | 1 | 1 | 7552 | 233 | 233 | |

Appendix C GPU Acceleration

The application of GPU acceleration in computational tasks offers remarkable advantages, particularly when it comes to large-scale computations, such as those involved in combinatorial optimization. The parallel processing capabilities of GPUs allow for significant speed enhancements, enabling faster computation and consequently more rapid exploration of solution spaces. For our torchmSAT method, which is reliant on neural networks and iterative computation, GPU acceleration can significantly enhance the efficiency of the forward-loss-backward loop, enabling more rapid exploration of the feasible region of variable assignments. This implies that given a time limit, we can expect torchmSAT running on a GPU to find solutions of lower cost than it would when executed on a CPU. Future exploration of GPU acceleration could bring about further advancements in MaxSAT solvers and other similar combinatorial optimization problems, leading to more efficient and effective solutions. Figures 5, 6 and 7 show the scalability of our method under different time limits. As expected, the use of GPUs pays off on larger problem instances, especially when the time limit is tight.

|

|

|

|

|

|

|

|

|

|

|

|