Topology-Preserving Image Segmentation

with Spatial-Aware Persistent Feature Matching

Abstract

Topological correctness is critical for segmentation of tubular structures. Existing topological segmentation loss functions are primarily based on the persistent homology of the image. They match the persistent features from the segmentation with the persistent features from the ground truth and minimize the difference between them. However, these methods suffer from an ambiguous matching problem since the matching only relies on the information in the topological space. In this work, we propose an effective and efficient Spatial-Aware Topological Loss Function that further leverages the information in the original spatial domain of the image to assist the matching of persistent features. Extensive experiments on images of various types of tubular structures show that the proposed method has superior performance in improving the topological accuracy of the segmentation compared with state-of-the-art methods.

1 Introduction

Although great advancement in image segmentation of general objects was made by recent models [22, 19], the segmentation of special objects and structures remains a challenge. Tubular structures pervade our world, observed through digital images at various scales-from the twisting cell walls in microscopy view to the meandering rivers and roads in satellite imagery. Such structures usually span the whole image in a net-like pattern and feature many fine details, making them challenging to accurately segment. In general, the segmentation of tubular structures emphasizes the topological correctness over the pixel-level precision. For example, when segmenting the human retinal vessels [15], whether the continuity, branching and intersections are preserved is usually more important than whether a peripheral pixel is correctly classified. In major applications, such as automatic estimation of vessel tortuosity [21] and the artery-vein classification [34], the algorithm performance relies on the vessel topology.

The primary research direction in topology-aware segmentation is the loss function [24, 27, 13, 14, 12, 33, 25, 32, 2, 3, 29]. Typically, a topological constraint is used in addition to the pixel classification loss. The topological constraint can be either a direct measure [13, 12, 33, 25, 2, 3, 29] of the topology of the object or an indirect approximation [24, 27, 32]. The direct methods mostly rely on the persistent homology [8] of the images and attempt to match and minimize the difference between the persistent features computed from the ground truth and the segmentation. A brief introduction of persistent homology will be provided in Sec. 3.1.2. Although many matching algorithms were proposed [13, 12, 33, 25, 2], they only rely on the global information in the topological space, i.e., the lifespan of the persistent features, which is ambiguous since the binary ground truth masks yield overlapping persistent features. This could cause more incorrect topological features to be prolonged and correct topological features to be eliminated, making the topological loss function less effective.

To the best of our knowledge, the only existing work that tried to tackle this issue is the Betti-Matching Loss (BMLoss) [29], which enables a more accurate persistent feature matching via an induced matching method [1]. However, due to both complexity of the algorithm and the limitations in its implementation, the computational cost of the BMLoss is very high, limiting its applicability to practical tasks-the experiments in [29] can only be run on very small datasets with compromised image resolution.

In this paper, we propose a novel Spatial-Aware Topological Segmentation Loss Function (SATLoss) which leverages the spatial relationship between persistent features in the original image in addition to their global topological features (Fig. 1). While achieving comparable performance with the BMLoss, our method is significantly faster. The introduction of spatial-awareness considerably reduces the incorrect matching between persistent features. As a result, when compared with the other state-of-the-art (SOTA) methods on larger datasets, our proposed method achieves a major improvement in the topological accuracy.

2 Related Work

Recent topological segmentation loss functions can be generally divided into indirect methods and direct methods. Indirect methods use certain learned or hand-crafted image features which are proximity of the real topological features while direct methods use strict topological features based on persistent homology.

For indirect methods, an introductory work is [24], which proposed to use an ImageNet pretrained VGG [6, 18] network to extract the topological information of the segmentation and ground truth. It is shown that different layers of the extraction network incorporate perceptual features which are approximations of the image topological features. The loss is then computed between the perceptual features of the prediction and the ground truth. [32] proposed a topological loss more exclusive for gland segmentation. The loss is computed based on the iterations needed to derive the skeleton of the glands by the erosion operation. [27] proposed a clDice loss which approximates the topological features of tubular structures by a relationship between the segmentation and its soft skeleton. It is shown that such a relationship helps strengthen the connectivity within tubular structures, resulting in better topological accuracy in the segmentation.

[13] introduces the revolutionary work that for the first time uses the concept of persistent homology in deep image segmentation and opens the door to the direct methods. The idea of all the following works are similar, which is to match the features in the persistent barcodes or persistent diagrams from the prediction and the ground truth and minimize the difference between them. In [13], the Wasserstein distance [20] is used, which later became the most popular matching method. Another work [33] also uses the Wasserstein distance but apply on 3D point cloud data. [12] proposed to match the longest persistent barcodes from segmentation with barcodes from ground truth and minimize the difference. The matching result of this method is theoretically the same with the Wasserstein distance on 2D image segmentation task, while this work has a slightly different formulation of the final loss function. [29] proposed to use an induced matching method [1] to solve the ambiguous matching issue due to considering only the global topological features in the previous methods. The general idea of this method is to construct a common ambient space for the two images which maps the persistent features in between to find a better matching. [25] proposed to use a Hausdorff distance [11] to identify the outlier matchings of persistent diagrams, which focuses on persistent features with anomalous appearing and disappearing time.

Although out of the scope of this paper, improved segmentation networks and post processing methods were also proposed for topology-aware image segmentation. [25] proposed a Dynamic Snake Convolution, which is a variation of the deformable convolution [16] that learns convolutional kernels into different shapes to better adapt to the features of tubular structures. [10] uses the Discrete Morse Theory [8] to probabilistically estimate the missing tubular structure and refine the original segmentation.

3 Method

3.1 Background

We provide a brief and simplified introduction of related theory and terminologies in computational topology in this part and refer the readers to Edelsbrunner and Harer [8] for more details and strict definitions.

3.1.1 Topology in Digital Images

The topology in digital images are characterized by homology groups. Informally, the p-th homology group is an abelian group that describes the p-th dimensional holes in a topological space. In the context of digital images, the 0-homology group represents the connected components and the 1-homology group represents the loops. Each connected component or loop is called a homology feature. The best way to compute the homology of a digital image is to form the image into a cubical complex. A cubical complex [31, 17] is a set of building elements called cubes, each endowed with a dimension. A 0-cubes is a vertex, 1-cube is a line segment between two vertices and 2-cube is a square enclosed by four 1-cubes. In a common V-construction [31] of the cubical complex from a 2D image, the pixels are treated as 0-cubes (vertices). Additionally, an edge that connects two adjacent pixels is treated as a 1-cube and a square enclosed by four edges is treated as a 2-cube. Higher dimensional cubes are usually not considered for 2D images. Additionally, we say that the (n-1)-cubes which encloses the n-cube are the faces of the n-cube, and the n-cube is a coface of the (n-1)-cubes. The n-homology features (a connected component or a loop) can then be determined by the relationships between n-cubes and their cofaces. For example, a connected component can be constructed by a 0-cube and be destructed by filling a 1-cube between it and another 0-cube. Another useful concept is the n-Betti number, which is the rank of the n-homology group. In digital images, 0-Betti number is the number of connected components while 1-Betti number is the number of independent loops.

3.1.2 Persistent Homology

In practice, a digital image is filled with varying pixel values. To determine the relationship between cubes then further extract the topology from the image, we need to extend the concept of homology to persistent homology. Consider an image formed into a cubical complex , and a function that maps each cube to a value. In practice the values for 0-cubes are simply the pixel values and the values for higher-dimensional cubes are broadcasted from their faces with maximum or minimum values [31]. Furthermore, is monotonic such that if the cube is a face of the cube . Then the sub-level set is a sub-complex of the cubical complex . Suppose we have discrete (pixel) values mapped by , we have different sub-complexes where

| (1) |

This sequence of complexes is called the filtration of , or to be more specific, the sub-level filtration of . Alternatively, a super-level filtration is the sequence of sub-complexes considering an with and the super-level sets . The value is said to be the filtration value. An easier way to look at it is that, given an image with discrete pixel values in , we threshold the image from 1 to 0. The 0-cubes (pixels) and their cofaces larger than the threshold value are included in the cubical sub-complex. As the threshold value decreases, more cubes are included to form the current sub-complex. Finally, at the smallest threshold value, all cubes from the image are included and the final sub-complex becomes the full cubical complex (Fig. 2(b)). A homology feature can be born at a filtration value when a new cube, called the creator, is included into the current sub-complex, and it can be killed at a future filtration value when this feature is destroyed by the inclusion of another higher-dimensional cube, called the destroyer, into a future sub-complex. For example, a pixel whose value is a local maxima gives birth to a 0-homology feature (connected component) at a certain threshold value. As the value decreases, more and more surrounding pixels are included until sometime this 0-feature is connected to another 0-feature in the sub-complex by the introduction of an edge (1-cube) ‘filled’ between two pixels that connects the two areas. Then one of the features is killed (conventionally the newer one). The persistent homology is a method that describes this process where all the homology-features in the image are born and killed in the filtration. A homology-feature that lives in the filtration is said to be a persistent feature and the lifespan of that persistence feature () is said to be the persistence of that feature.

3.1.3 Persistent Barcodes and Diagrams

Persistent barcodes () are useful tools to describe and visualize the persistent homology of an image. As shown in Fig. 2(c), each barcode represents a persistent feature of the image, where the left edge corresponds to its birth filtration value and the right edge to its death filtration value. The persistent barcodes can be further transformed into a persistent diagram (, Fig. 2(d)). In , each persistent feature in the image is represented by a dot whose coordinate is .

3.2 Spatial-Aware Topological Loss Function

Recent topology-aware segmentation methods generally follow the idea of matching the persistent diagrams or barcodes between the target and prediction and try to minimize the difference between them. However, (except for BMLoss) the matching is solely based on the distances between persistent features within the diagram or barcodes, i.e., the birth, death values and the persistence of the feature. Given the segmentation model output-likelihood L, the binarized prediction P and the ground truth T, these methods can make P to have more similar Betti numbers with the T. However, there is no guarantee that each persistent feature from L is matched to the correct one from T. This issue is prominent in grid-like data. For example, in a 2D image segmentation task, the ground truth mask is usually a binary image, which leads all points in to overlap at the upper-left corner of the diagram, i.e., (0, 1). As a result, the distances between each point in and any point in are the same, which cause confusions when matching the diagrams. We therefore propose to reduce this problem by introducing spatial awareness that further leverages the spatial distance between two persistent features in the original image when matching persistent diagrams.

3.2.1 Spatial-Aware Matching and Loss Formulation

We introduce the location of creators as a reference for the spatial location of the persistent features. To be more specific, considering a super-level filtration, we compute the normalized spatial distance between the creators (if dimension of creator is larger than 0, then use its 0-dimensional face with the largest filtration value) of each pair of persistent features in and . Then, the distance is applied as a spatial weight to the distances between two points in the persistent diagram. We follow [13, 33, 4] and use the Wasserstein distance [20]:

| (2) |

as a base method to compute the best matchings between two persistent diagrams. is a persistent feature in and is a candidate matching of in . With the spatial-aware strategy, the modified Wasserstein distance becomes:

| (3) |

where and , are the pixel locations of the creator of and the creator of its matching in , respectively. In practice, we take and formulate the SATLoss as:

| (4) |

where is the optimal matching of in by Eq. 3, , are the birth and death filtration values of the persistent feature and is the corresponding spatial weight. The loss is further performed over the 0 and 1-persistent diagrams and summed. In reality, the number of persistent features in is usually larger than that in and the redundant persistent features in are matched to the diagonal (dotted line in Fig. 2(d)) of the persistent diagram. In another word:

| (5) |

Finally, the topological loss function is used with a conventional volumetric (pixel) loss function to jointly optimize the segmentation network:

| (6) |

Note that we only consider the location of creators but not destroyers for two reasons: (1) There are persistent features which have infinite persistence thus have no destroyer; (2) For 0-features, the destroyer location of one feature can be close to another 0-feature, which could make the spatial-aware matching less accurate. In comparison, the creator of a 0-feature is the maximum pixel in a feature and the creator of a 1-feature appears at where the loop is closed and can be spatially more informative.

Fig. 3 shows matching examples between T and L from a converged segmentation model. From the limited examples we manually compared, it seems the Betti-matching [29] is indeed a more accurate method. But our proposed method still significantly reduces the matching error compared with the Wasserstein matching at a much smaller computational cost than the Betti-matching. Unfortunately, there is no ‘ground truth’ available to compare the matching accuracy numerically. Moreover, it is worth mentioning that although the matching on segmentation from a converged model offers a partial insight on this problem, it does not provide a comprehensive understanding of how the matching precisely influences the whole training process, which can be less straightforward to see. Accordingly, the final segmentation quality on testing images serves as the gold standard for the evaluation and empirically it is shown that a less confused matching enables better topological accuracy in the segmentation ([29], Table 1).

3.2.2 Gradient of SATLoss

Similar with [13, 29], the SATLoss is not differentiable at all pixel locations but only on the critical cells (creators and destroyers) of the persistent features at each iteration. This is because the computation of persistent homology is not differentiable and a surrogate gradient needs to be used. After computing the persistent homology, instead of using the birth and death filtration value from the cubical complex, the pixel values of critical cells are taken from L to compute the loss function. This results in the gradient only acting on the critical cell pixels on L. By the chain rule, the gradient can be expressed as:

| (7) |

where is the model weights, are the pixel values of the creator and destroyer in L. In practice, the gradient pushes the value of creator pixel up and destroyer down if the persistent feature has a match in to prolong the persistence of the persistent feature. On contrary, if the persistent feature is matched to the diagonal, the gradient pushes the creator value down and the destroyer value up to shorten the persistence of this feature. The difference with Wasserstein matching is that our SATLoss enables the gradient to act more selectively on the persistent features and their critical cells, reducing the number of persistent features that are incorrectly prolonged or eliminated.

3.2.3 Computational Cost

Our SATLoss is more efficient than BMLoss [29] as it introduces no additional cost to computing the persistent homology of and . As explained in Sec. 3.2.2, the computation of the critical cell locations is a necessary step in the optimization, whether or not we use them to perform the spatial-aware matching. Additionally, the cost of retrieving spatial location of the critical cells is also small, which simply requires mapping the critical cells back to the unsorted cubical complex, and is trivial comparing with the computation of persistent homology [7, 5]. Given an image and , our SATLoss has a time complexity. In comparison, the BMLoss requires a lot more computationally heavy steps besides the computation of persistent homology of and and has a time complexity. We will show in Sec. 4.5 that even when training on a very small patch size of , there is a huge difference in the runtime. If extended to larger images, the difference can be even much larger.

4 Experiments

| Dataset | Volumetric (%) | Topology | |||||

|---|---|---|---|---|---|---|---|

| Acc. | Dice | clDice | |||||

| bce | - | 97.61 0.05 | 58.57 0.20 | 76.73 0.27 | 6.015 0.178 | 0.867 0.009 | |

| bce | TCLoss | 97.64 0.05 | 58.21 0.68 | 76.99 0.43 | 5.429 0.326 | 0.865 0.017 | |

| bce | WTLoss | 97.70 0.03 | 59.10 0.32 | 78.59 0.24 | 2.907 0.169 | 0.844 0.013 | |

| bce | He et al. | 97.72 0.01 | 59.10 0.14 | 78.93 0.62 | 2.473 0.046 | 0.824 0.039 | |

| soft-Dice | clDice Loss | 97.65 0.05 | 60.35 0.01 | 80.94 0.35 | 2.887 0.135 | 0.803 0.009 | |

| bce | SATLoss (ours) | 97.80 0.02 | 58.71 0.50 | 80.90 0.23 | 0.562 0.017 | 0.720 0.008 | |

| bce | - | 97.75 0.01 | 84.69 0.83 | 89.90 0.22 | 4.547 0.196 | 1.566 0.129 | |

| bce | TCLoss | 97.74 0.04 | 84.96 0.25 | 89.86 0.25 | 3.994 0.278 | 1.475 0.051 | |

| bce | WTLoss | 97.74 0.03 | 85.01 0.19 | 89.98 0.14 | 3.204 0.242 | 1.412 0.083 | |

| bce | He et al. | 97.78 0.01 | 85.21 0.14 | 90.13 0.06 | 3.155 0.159 | 1.475 0.030 | |

| soft-Dice | clDice Loss | 97.72 0.02 | 85.13 0.17 | 90.31 0.16 | 2.840 0.117 | 1.425 0.011 | |

| bce | SATLoss (ours) | 97.78 0.02 | 85.24 0.13 | 90.16 0.15 | 1.534 0.043 | 1.386 0.047 | |

| bce | - | 99.75 0.16 | 58.37 9.74 | 64.27 9.21 | 38.970 19.890 | 1.357 0.799 | |

| bce | TCLoss | 99.78 0.14 | 57.89 10.57 | 63.00 10.69 | 33.510 16.753 | 1.221 0.886 | |

| bce | WTLoss | 99.75 0.17 | 57.62 8.51 | 67.99 8.66 | 10.944 7.778 | 1.407 0.717 | |

| bce | He et al. | 99.76 0.18 | 58.25 6.98 | 72.74 3.93 | 9.044 7.642 | 1.335 0.748 | |

| soft-Dice | clDice Loss | 98.03 3.67 | 38.29 19.57 | 53.57 16.43 | |||

| bce | SATLoss (ours) | 99.77 0.16 | 58.97 9.42 | 73.23 4.59 | 6.191 3.508 | 0.901 0.953 | |

| bce | - | 95.60 0.48 | 73.54 3.01 | 73.92 4.07 | 25.089 6.216 | 7.667 1.702 | |

| bce | TCLoss | 95.76 0.29 | 74.03 3.92 | 73.91 4.62 | 23.208 3.859 | 7.094 1.773 | |

| bce | WTLoss | 95.94 0.26 | 75.46 4.46 | 76.56 5.70 | 6.352 1.030 | 6.218 1.778 | |

| bce | He et al. | 95.85 0.26 | 74.87 3.65 | 75.19 4.69 | 5.199 1.462 | 6.185 1.537 | |

| soft-Dice | clDice Loss | 95.06 0.67 | 74.12 3.02 | 74.79 3.78 | 10.193 2.409 | 6.958 1.118 | |

| bce | SATLoss (ours) | 95.95 0.23 | 75.66 4.12 | 76.69 5.40 | 4.131 0.315 | 6.004 1.546 | |

4.1 Datasets

Our main comparison with the SOTA topological segmentation loss functions excluding the BMLoss is performed over four datasets with diverse tubular structures:

-

•

Roads [23]: Segmentation of various styles of roads in remote images of Massachusetts.

-

•

CREMI [9]: Segmentation of cell boundaries of brain neurons in electronic microscopy images.

-

•

CrackTree [35] Segmentation of cracks on concrete roads in common photographical images.

-

•

DRIVE [28]: Segmentation of human vessels in color fundus retinal images.

Considering that the computational cost of persistent homology is still notably higher than conventional segmentation training objectives, it is a common practice in the literature of this field to crop the full image to small image patches for training and computing the evaluation metrics, for example, [27, 13]. However, too small patch size leads to limited semantic and topological information. In our experiments, we push it to the limit of our computational resources and use a patch size of around among training, validation and testing.

| Acc. | Dice(P) | clDice(P) | (P) | (P) | Dice(F) | clDice(F) | (F) | (F) | |

|---|---|---|---|---|---|---|---|---|---|

| TCLoss | 96.100.64 | 48.665.06 | 63.666.93 | 2.5910.87 | 0.4870.31 | 63.735.51 | 68.555.98 | 197.763.0 | 54.727.1 |

| clDice Loss | 95.500.52 | 46.713.04 | 66.043.11 | 1.9410.94 | 0.4880.30 | 61.043.74 | 68.884.15 | 179.485.2 | 52.327.3 |

| BMLoss | 94.910.87 | 46.953.40 | 64.934.83 | 0.8960.31 | 0.5200.28 | 60.933.69 | 69.103.65 | 61.523.1 | 40.823.0 |

| SATLoss (ours) | 96.120.53 | 47.733.91 | 66.173.16 | 0.8150.13 | 0.4810.31 | 63.344.09 | 68.953.95 | 88.917.9 | 51.925.6 |

| Acc. | Dice(P) | clDice(P) | (P) | (P) | Dice(F) | clDice(F) | (F) | (F) | |

|---|---|---|---|---|---|---|---|---|---|

| TCLoss | 98.660.04 | 90.951.63 | 94.640.84 | 0.4700.05 | 0.2640.09 | 92.650.52 | 96.140.50 | 1.5170.35 | 0.9060.25 |

| clDice Loss | 98.590.04 | 90.221.29 | 94.590.83 | 0.4810.06 | 0.2520.06 | 92.120.53 | 95.920.41 | 1.5000.06 | 0.9220.10 |

| BMLoss | 98.240.11 | 88.031.28 | 92.160.86 | 0.6270.20 | 0.2560.10 | 90.170.78 | 94.050.85 | 2.0720.92 | 0.9170.29 |

| SATLoss (ours) | 98.670.05 | 90.471.94 | 94.970.97 | 0.4350.14 | 0.2350.08 | 92.390.60 | 96.250.31 | 0.8390.19 | 0.8560.23 |

| Dataset | SATLoss (ours) | BMLoss |

|---|---|---|

| Roads-small | 1.60 0.08 hours | 101.64 1.82 hours |

| C.Elegan-small | 0.10 0.01 hours | 6.90 0.10 hours |

For comparison with the BMLoss, we follow the implementation details in [29] and use two much smaller datasets with reduced number of samples and downsampled images. Running BMLoss on the previous full datasets is impractical: It is estimated to cost over 20000 hours using an i9-13900K CPU + RTX 4090 GPU to run a one-time training of BMLoss on the Roads dataset, while the same training takes less than 45 hours using our method. The two datasets are picked from the six datasets used in [29] with the least loss in ground truth quality (especially topological accuracy) caused by the downsampling:

-

•

Roads-small: About 9% of the images are picked from the full Roads dataset and downsampled from to .

-

•

C.Elegan-small [30]: Segmentation of worm groups in microscopy images. All images in the dataset are used, downsampled from to .

The images are cropped to a patch size of to further reduce the computational cost. More details on the 6 datasets can be found in the supplementary materials (SM).

4.2 Implementation Details

We adopt a consistent U-Net [26] network structure and set of hyperparameters for all experiments. An Adam optimizer is used with a weight decay of 1e-3 and an initial learning rate of 1e-3, decayed to 1e-4 at half of the total number of epochs. We train 30 epochs for Roads, 50 epochs for CREMI and CrackTree and 100 epochs for DRIVE. For all experiments, the model with the best Dice score on the validation set is used for testing. We use the best pixel loss reported in the corresponding paper for each of the baseline methods and use the BCE loss as pixel loss with our proposed method. The best weights for the topological loss functions are determined by the ablation studies in Sec. 4.6. All experiments are repeated with 3 random seeds. For the smaller CrackTree and DRIVE datasets, we further adopt a 3-fold cross validation under each seeds. Our implementation is under the PyTorch framework, where the computation of persistent homology relies on the GUDHI (Geometry Understanding in Higher Dimensions) library. The GUDHI library is written in C++ and provides a Python interface.

For Roads-small and C.Elegan-small datasets, we follow [29] to determine the network structures and training hyperparameters. We train all loss functions using the same hyperparameters reported in [29] without finetuning in our method. Additionally, in [29] experiments were run for only once. Consider the small dataset size, we instead use a 3-fold cross validation where each fold is repeated with 3 random seeds to make the results statistically more convincing.

4.3 Evaluation Metrics

Two types of metrics: volumetric and topological are used to evaluate the general segmentation quality and the topological accuracy, respectively. For volumetric metrics, we adopt the pixel-wise accuracy and the Dice score. We also use a clDice score [27], which although is a pixel-based metric, indirectly implies the topological correctness. For topological metrics, we use the 0-th and 1-st Betti number error (, ), which are the most commonly-used measurements of topological accuracy. We quit using the Betti-matching metric proposed in [29] because the computational cost is too high given the amount of our experiments.

4.4 Comparison with SOTA Methods

For the main experiment performed on the four full datasets, we compare our method with the most commonly used WTLoss [13] and a most recent work He et al. [12] which is a slight variation of the WTLoss. We also compare with the clDice loss [27] and another most recent TCLoss [25] that matches the persistent diagram via a Hausdorff distance. As demonstrated in Table 1 and Fig. 4, our proposed method shows superior performance in topological accuracy as well as the overall segmentation quality over the SOTA methods. More discussions on the results can be found in SM.

4.5 Comparison with BMLoss

For comparison with BMLoss, we also include the clDice and TCLoss in the baseline, as the first method is the newest baseline in [29] and TCLoss is a newer method than BMLoss (which did not include BMLoss in their baseline). On another note, although the metrics are commonly computed on the image patches [13, 25, 27], in [29] they are computed over the full image. We therefore report the results on both the patch size P and full image size F. The result are shown in Table 2, Table 3 and Fig. 5. While our proposed method does not achieve the same performance level in global topological accuracy on full image size with BMLoss on the Roads-small dataset, on boarder evaluations which take the other results into consideration, our method demonstrates comparable, if not superior, performance. Furthermore, as reported in Table 4, our method achieves the results at a much smaller training time compared with BMLoss. Such a fundamental constraint of BMLoss is much less a problem of our method. It is important to note that in addition to the algorithm complexity, the coding language and implementation details can also influence the runtime. The bottom-level codes we use to compute persistent homology are written in C++, while the codes for BMLoss are written in Python. So this comparison is unfair in a strict sense. Given the complexity of the BMLoss, a reimplementation in C++ is challenging and is beyond the scope of this study. To the best of our knowledge, the Python version we used is the only and official implementation available. Therefore, despite the differences in implementation context, our proposed method still demonstrates superior computational efficiency.

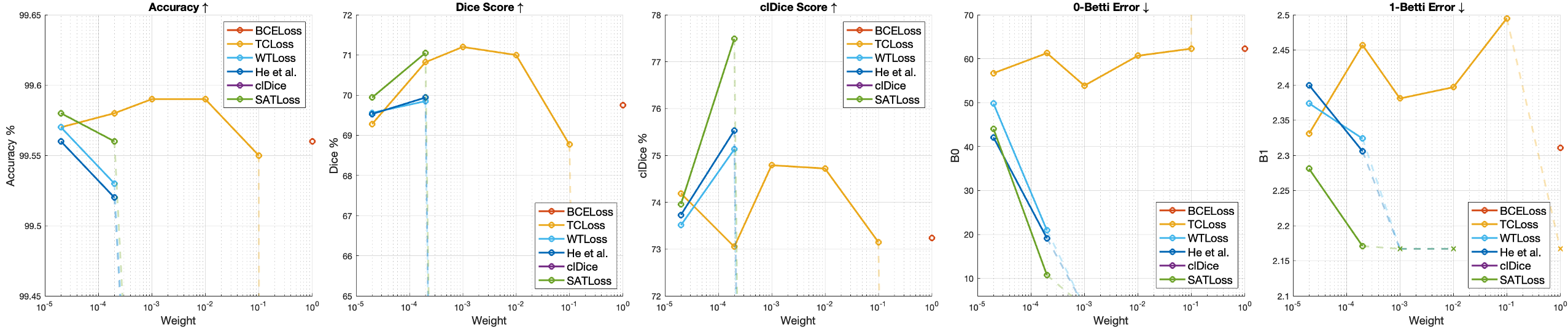

4.6 Ablation Studies on Weight

The results in Table 1, Table 2, Table 3 reports under the best weight (Eq. 6) for the topological loss functions studied in this section. We experiment the WTLoss, He et al. and our proposed method using weights from 1e-5 to 1e-2. For TCLoss whose value is from fewer persistent features, we extend the range to 1e-0. For clDice and BMLoss, we use the optimal weights since they are reported in their papers (0.3 for clDice on CREMI, 0.5 on other datasets; 0.5 for BMLoss on both datasets). All experiments in the ablation study are also repeated with 3 random seeds and the mean value is reported in the charts. For our method and the two methods without spatial-aware matching (WTLoss and He et al.), as reported in Fig. 6, we observe that the topological accuracy is higher if a larger is applied. However, if is too large, the training will either converges badly or produce results with many small holes on the foreground that significantly increase the 1-Betti error (which we denote by dotted lines and crosses in Fig. 6). Our proposed method usually allows using a higher weight to further reduce the topological error. This effect is observed in repeated experiments over different datasets. A potential explanation is that the normalized spatial weights decrease the magnitude of gradient of the loss function. Nevertheless, even under the same weight, our method still consistently outperforms the others.

5 Conclusions

In this paper, we proposed a novel Spatial-Aware Topological Segmentation Loss Function that leverages the spatial information of persistent features in addition to their global topological information to match the persistent diagrams. The introduction of spatial-awareness effectively reduces the incorrect matchings compared with conventional methods and achieves considerably better topological accuracy in the segmentation. When compared with another (and the only) Betti-matching method which tries to tackle the same problem, our proposed method achieves comparable performance at a much lower computational cost. Future works include investigating how to leverage more accurate spatial information of the persistent features without introducing large computational overhead.

References

- Bauer and Lesnick [2015] Ulrich Bauer and Michael Lesnick. Induced matchings of barcodes and the algebraic stability of persistence. In 13th Annual symposium on Computational geometry, pages 355–364, 2015.

- Byrne et al. [2023] Nick Byrne, James R. Clough, Israel Valverde, Giovanni Montana, and Andrew P. King. A persistent homology-based topological loss for cnn-based multiclass segmentation of cmr. IEEE Transactions on Medical Imaging, 42(1):3–14, 2023.

- Clough et al. [2022] James R. Clough, Nicholas Byrne, Ilkay Oksuz, Veronika A. Zimmer, Julia A. Schnabel, and Andrew P. King. A topological loss function for deep-learning based image segmentation using persistent homology. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(12):8766–8778, 2022.

- Cohen-Steiner et al. [2010] David Cohen-Steiner, Herbert Edelsbrunner, and John Harer. Lipschitz functions have l p-stable persistence. Foundations of computational mathematics, 10(2):127–139, 2010.

- Cohen-Steiner et al. [2011] David Cohen-Steiner, Herbert Edelsbrunner, and John Harer. Persistent cohomology and circular coordinates. Discrete & Computational Geometry, 45(4):737–759, 2011.

- Deng et al. [2009] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Li Kai, and Fei-Fei Li. Imagenet: A large-scale hierarchical image database. In IEEE Conference on Computer Vision and Pattern Recognition, pages 248–255, 2009.

- Dey et al. [2014] Tamal Dey, Fengtao Fan, and Yusu Wang. Computing topological persistence for simplicial maps. In Computing Topological Persistence for Simplicial Maps, page 345–354, 2014.

- Edelsbrunner and Harer [2022] Herbert Edelsbrunner and John Harer, editors. Computational Topology: An Introduction. American Mathematical Society, 2022.

- Funke et al. [2019] Jan Funke, Fabian Tschopp, William Grisaitis, Arlo Sheri-dan, Chandan Singh, Stephan Saalfeld, and Srinivas C. Turaga. Large scale image segmentation with structured loss based deep learning for connectome reconstruction. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(7):1669–1680, 2019.

- Gupta et al. [2023] Saumya Gupta, Yikai Zhang, Xiaoling Hu, Prateek Prasanna, and Chao Chen. Topology-aware uncertainty for image segmentation. In Advances in Neural Information Processing Systems, 2023.

- Hausdorff [1914] Felix Hausdorff, editor. Grundzüge der mengenlehre. Leipzig Viet, 1914.

- He et al. [2023] Hongliang He, Jun Wang, Pengxu Wei, Fan Xu, Xiangyang Ji, Chang Liu, and Jie Chen. Toposeg: Topology-aware nuclear instance segmentation. In IEEE/CVF International Conference on Computer Vision, pages 21307–21316, 2023.

- Hu et al. [2019] Xiaoling Hu, Li Fuxin, Dimitris Samaras, and Chao Chen. Topology-preserving deep image segmentation. In Advances in Neural Information Processing Systems, 2019.

- Hu et al. [2021] Xiaoling Hu, Yusu Wang, Li Fuxin, Dimitris Samaras, and Chao Chen. Topology-aware segmentation using discrete morse theory. In International Conference on Learning Representations, 2021.

- Ilesanmi et al. [2023] Ademola E. Ilesanmi, Taiwo Ilesanmi, and Gbenga A. Gbotoso. A systematic review of retinal fundus image segmentation and classification methods using convolutional neural networks. Healthcare Analytics, 4(3):100261, 2023.

- Jin et al. [2019] Qiangguo Jin, Zhaopeng Meng, Tuan D. Pham, Qi Chen, Leyi Wei, and Ran Su. Dunet: A deformable network for retinal vessel segmentation. Knowledge-Based Systems, 178:149–162, 2019.

- Kaczynski et al. [2004] Tomasz Kaczynski, Konstantin Mischaikow, and Marian Mrozek, editors. Computational Homology. Springer, 2004.

- Karen Simonyan [2014] Andrew Zisserman Karen Simonyan. Very deep convolutional networks for large-scale image recognition, 2014. arXiv preprint arXiv:1409.1556.

- Ke et al. [2023] Lei Ke, Mingqiao Ye, Martin Danelljan, Yifan liu, Yu-Wing Tai, Chi-Keung Tang, and Fisher Yu. Segment anything in high quality. In Advances in Neural Information Processing Systems, 2023.

- Kerber et al. [2016] Micheal Kerber, Dmitriy Morozov, and Arnur Nigmetov. Geometry helps to compare persistence diagrams, 2016. arXiv:1606.03357.

- Khanal and Estrada [2023] Aashis Khanal and Rolando Estrada. Fully automated artery-vein ratio and vascular tortuosity measurement in retinal fundus images, 2023. arXiv preprint arXiv:2301.01791.

- Kirillov et al. [2023] Alexander Kirillov, Nikhila Ravi Eric Mintun, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C. Berg, Wan-Yen Lo, Piotr Dollar, and Ross Girshick. Segment anything. In IEEE/CVF International Conference on Computer Vision, pages 4015–4026, 2023.

- Mnih [2013] Volodymyr Mnih. Machine learning for aerial image labeling. PhD thesis, University of Toronto (Canada), 2013.

- Mosinska et al. [2018] Agata Mosinska, Pablo Marquez-Neila, Mateusz Kozin ski, and Pascal Fua. Beyond the pixel-wise loss for topology-aware delineation. In IEEE/CVF International Conference on Computer Vision and Pattern Recognition, pages 3136–3145, 2018.

- Qi et al. [2023] Yaolei Qi, Yuting He, Xiaoming Qi, Yuan Zhang, and Guanyu Yang. Dynamic snake convolution based on topological geometric constraints for tubular structure segmentation. In IEEE/CVF International Conference on Computer Vision, pages 6070–6079, 2023.

- Ronneberger et al. [2015] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, pages 234–241, 2015.

- Shit et al. [2021] Suprosanna Shit, Johannes C. Paetzold, Anjany Sekuboyina, Ivan Ezhov, Alexander Unger, Andrey Zhylka, Josien P. W. Pluim, Ulrich Bauer, and Bjoern H. Menze. cldice - a novel topology-preserving loss function for tubular structure segmentation. In IEEE/CVF International Conference on Computer Vision and Pattern Recognition, pages 16560–16569, 2021.

- Staal et al. [2004] Joes Staal, Michael Abràmoff, Meindert Niemeijer abd Max Viergever, and Bram Ginneken. Ridge-based vessel segmentation in color images of the retina. IEEE Transactions on Medical Imaging, 23(4):501–509, 2004.

- Stucki et al. [2023] Nico Stucki, Johannes Paetzold, Suprosanna Shit, Bjoern Menze, and Ulrich Bauer. Topologically faithful image segmentation via induced matching of persistence barcodes. In International Conference on Machine Learning, PMLR, pages 32698–32727, 2023.

- Vebjorn et al. [2012] Ljosa Vebjorn, Katherine Sokolnicki, and Anne Carpenter. Annotated high-throughput microscopy image sets for validation. Nature methods, 9(7):637–637, 2012.

- Wagner et al. [2011] Hubert Wagner, Chao Chen, and Erald Vucini. Efficient computation of persistent homology for cubical data, 2011. In Topological methods in data analysis and visualization II: theory, algorithms, and applications.

- Wang et al. [2022] Haotian Wang, Min Xian, and Aleksandar Vakanski. Ta-net: Topology-aware network for gland segmentation. In IEEE/CVF Winter Conference on Applications of Computer Vision, pages 1556–1564, 2022.

- Wong and Vong [2021] Chi-Chong Wong and Chi-Man Vong. Persistent homology based graph convolution network for fine-grained 3d shape segmentation. In IEEE/CVF International Conference on Computer Vision, pages 7098–7107, 2021.

- Zhao et al. [2020] Yitian Zhao, Jianyang Xie, Huaizhong Zhang, Yalin Zheng, Yifan Zhao, Hong Qi, Yangchun Zhao, Pan Su, Jiang Liu, and Yonghuai Liu. Retinal vascular network topology reconstruction and artery/vein classification via dominant set clustering. IEEE Transactions on Medical Imaging, 39(2):341–356, 2020.

- Zou et al. [2012] Qin Zou, Yu Cao, Qingquan Li, Qingzhou Mao, and Song Wang. Cracktree: Automatic crack detection from pavement images. Pattern Recognition Letters, 33(3):227–238, 2012.

Supplementary Material

6 Details on Datasets

6.1 Roads

The (Massachusetts) Roads dataset [23] (Link) has in total 1171 images, and is split into 1108 training, 14 validation and 49 testing by the dataset author. We follow this dataset split in our experiments. Each image is cropped into 25 image patches. In total, there are 27700 for training, 350 for validation and 1225 for testing. The evaluation metrics are computed on the same patch size (the same for the following datasets).

6.2 CREMI

The CREMI dataset [9] (Link) has three volumes (A, B & C), volume A, B has 125 scans, volume C has 123 scans. Each scan is an 2D image. We use volume A,B and scan 1-14, 16-74, 76-81 in volume C for training, scan 82-92 in volume C for validation and scan 93-125 for testing. Each scan is cropped into 25 patches. In total, there are 8225 for training, 275 for validation and 825 for testing. The original CREMI dataset only provide the ground truth mask for each cell. We take the spaces between the cells to form the ground truth for the cell boundaries (extracellular matrix).

6.3 CrackTree

The CrackTree dataset [35] (Link) has 260 images, most of them have a resolution of , while a few others have a resolution of . For consistency, we pad all the images to the right to and crop the images from top and bottom (in equal) and right to . After sorting all the images by name: For fold 1, we used image 1-200 for training, 201-220 for validation, 221-260 for testing; For fold 2, we used image 1-116, 177-260 for training, 117-136 for validation, 137-176 for testing; For fold 3, we used image 61-260 for training, 1-20 for validation, 21-60 for testing. Each image is then cropped to 6 image patches. In total, there are 1200 for training, 120 for validation and 240 for testing.

6.4 DRIVE

The DRIVE dataset [28] (Link) has 20 images. We slightly remove the redundant blank margins around the circular effective imaging area and thus crop each image to . For fold 1, we use 21-32 for training, 33-34 for validation, 35-40 for testing; For fold 2, we use 29-40 for training, 27-28 for validation and 21-26 for testing; For fold 3, we use 23-28, 35-40 for training, 21-22 for validation and 29-34 for testing. Each image is then cropped into 4 image patches. In total, there are 48 for training, 8 for validation and 24 for testing.

6.5 Roads-small

We follow as much details as we can in [29] to prepare the Roads-small dataset. In total 134 images are selected from the original Roads dataset (mixing training, validation and testing, since we use 3-fold cross validation for this dataset). In [29], which images are selected is not provided so we pick image by ourselves. It is difficult to describe the selected images unless providing a long list here but the general rule is to select images with more complexed road networks and less blank regions. The selected images are then divided randomly into training, validation and testing for each fold. The images are then downsampled from to . Again, details of downsampling are not provided in [29], so we use (the same for C.Elegan-small) bicubic interpolation for all images and labels and then binarized the downsampled labels by thresholding them with a value of 0.5. No further manual correction is made to the downsampled labels. Before cropping, the images are padded to the right and bottom to . Then, each image is cropped to 64 patches. In total, there are 6400 for training, 640 for validation and 1536 for testing.

6.6 C.Elegan-small

The C.Elegan dataset [30] (Link) has 200 images. We crop the images and only keep the center effective imaging area and then downsample them to . For fold 1, we use A01-C22 for training, C23-D08 for validation and D09-E04 for testing; For fold 2, we use B07-E04 for training, A01-A10 for validation and A11-B06 for testing; For fold 3, we use A01-B16, C23-E04 for training, C13-C22 for validation and B17-C12 for testing. Each image is cropped into 4 patches. In total, there are 280 for training, 40 for validation and 80 for testing.

7 Additional Implementation Details

We train on Roads, CREMI and CrackTree datasets using a batch size of 16 and on DRIVE dataset using batch size of 2 (since bad convergence on larger batch size). During training, we follow [13] and pad the edges of the image patches with 1 (1 pixel width) to also take the 1-features enclosed by the image boundary into account. We run the main experiments on two computers, one with a i7-12700K CPU + RTX 3090 GPU, another with a i9-13900K CPU + 2 RTX 4090 GPUs. The experiments are randomly distributed into different computers. For comparison with BMLoss on Roads-small and C.Elegan-small datasets, we run on an older computer with a Xeno E5-2650 CPU + 3 GTX 1080Ti GPUs. This is because the implementation of BMLoss requires an old PyTorch and CUDA version where we find using newer GPU models encounters a random interruption issue during the training. Each experiment only takes one GPU. For implementation of the baseline, we use the official implementation for the respective methods if available (clDice [27]: Link, BMLoss [29]: Link). For methods where official implementations are unavailable (TCLoss [25], WTLoss [13], He et al. [12]), we use our own implementation similar with the SATLoss. The computations of persistent homology for these methods are exactly the same, and the difference only exists in the implementation of the loss functions, where we follow the mathematical expressions in the respective papers.

8 More Discussion on Experimental Results

One observation in the results that worth discussing is that the TCLoss [25], although a most recent method, appears at a very poor position in the baseline. On the one hand, unfortunately, in [25] the authors did not provide enough implementation details for us to reproduce their results. And they did not provide ablation studies on the topological loss weight, nor did they report the weight used for their method and the baseline methods. On the other hand, the results shown in [25] seems to have limited improvement over its baselines, including the BCELoss. And in our experiments we observe that the TCLoss has slight improvements over the BCELoss, which are similar with the results in [25]. In our opinion, the main limitation of the TCLoss is that the use of max and min functions in the Hausdorff distance makes only one pair of persistent features to have gradient from the loss function, which can make the loss less effective. In comparison, the other persistent homology-based methods optimize over all persistent features. We tried to increase the weight for TCLoss to 1e-0 until it diverges to compensate the small loss and gradient value (since they are computed from only one pair of persistent features, although it is an outlier which should usually have larger value than normal pairs). But the effort only seems to provide marginal improvement. Perhaps more investigations are needed to determine the performance and usefulness of this method.

Furthermore, we observe that the clDice loss [27] converges badly under some folds and random seeds when applied on the CrackTree dataset. It is potentially because of that the CrackTree dataset has very thin tubular labels (usually only one pixel width). And the labels, according to our examination, do contain inaccuracy and errors. This can make the overlap-based method (both clDice and the soft-Dice it used with) to act poorly. The CrackTree dataset was not used in [27]. Our experiments therefore might provide some insights into a limitation of the clDice method.

9 Additional Results

The ablation results on the other datasets are provided in Fig. 8, Fig. 9, Fig. 10, Fig. 11, Fig. 12, Fig. 13. Due to limited resources and for consistency between datasets, the ablation experiments were only run on the first fold. In addition, we provide more qualitative results in Fig. 14 for our main experiments. In Fig. 7, we also show more qualitative comparison with on the Roads-small dataset where our method fails to outperform the BMLoss in some metrics. In Fig. 15, we show more persistent feature matching results.