Time-aware Hyperbolic Graph Attention Network for Session-based Recommendation

Abstract

Session-based Recommendation (SBR) is to predict users’ next interested items based on their previous browsing sessions. Existing methods model sessions as graphs or sequences to estimate user interests based on their interacted items to make recommendations. In recent years, graph-based methods have achieved outstanding performance on SBR. However, none of these methods consider temporal information, which is a crucial feature in SBR as it indicates timeliness or currency. Besides, the session graphs exhibit a hierarchical structure and are demonstrated to be suitable in hyperbolic geometry. But few papers design the models in hyperbolic spaces and this direction is still under exploration.

In this paper, we propose Time-aware Hyperbolic Graph Attention Network (TA-HGAT) — a novel hyperbolic graph neural network framework to build a session-based recommendation model considering temporal information. More specifically, there are three components in TA-HGAT. First, a hyperbolic projection module transforms the item features into hyperbolic space. Second, the time-aware graph attention module models time intervals between items and the users’ current interests. Third, an evolutionary loss at the end of the model provides an accurate prediction of the recommended item based on the given timestamp. TA-HGAT is built in a hyperbolic space to learn the hierarchical structure of session graphs. Experimental results show that the proposed TA-HGAT has the best performance compared to ten baseline models on two real-world datasets.

Index Terms:

recommender system, graph neural network, hyperbolic embeddingI Introduction

Recommender systems have been an effective solution to help users overcome the information overload on the Internet. Many applications are developed based on this rationale, including online retail [1], music streaming [2], and content sharing [3]. To better understand users, modeling their browsing sessions is a useful solution as sessions indicate their current interests. Session-based recommendation (SBR) predicts the users’ next interested items by modeling users’ sessions. Deep learning models, including Recurrent Neural Networks (RNNs) [4, 5], Memory Networks [6], and Graph Neural Networks (GNNs) [7, 8] are applied to this problem and have achieved state-of-the-art performance.

Recently, the most influential works on dealing with SBR are GNN-based methods. The GNN-based methods [7, 8, 9, 10, 11, 12] take each session as a graph to learn the items’ internal relationship and their complex transitions. The most representative model is SR-GNN [7], which is the first work to apply GNN on session-based recommendation and achieve state-of-the-art performance. Based on SR-GNN, [10, 12] improve SR-GNN with attention layers. [11, 8] consider the item order in the session graph to build the models. [9, 13, 14] take additional information such as global item relationship, item categories, and user representations into account to devise more extensive models. HCGR [15] models session graphs into a hyperbolic space to extract hierarchical information.

Although the existing GNN-based methods have achieved satisfactory performance, they still suffer from two limitations. First, unlike sequence-based models, graph structure cannot explicitly show the temporal information between items. Time interval is a crucial feature and can significantly improve the recommendation performance [16, 17], but it is ignored in the existing graph-based SBR models. Moreover, though modeling sessions into graphs has the advantage of learning items complex transitions [7], the sequential relation between items is unclear in the session graph because the beginning and end of a session are ambiguous under the graph structure. Second, according to [18, 19], graph data exhibits an underlying non-Euclidean structure, and therefore, learning such geometry in Euclidean spaces is not a proper choice. As a result, some recent studies [20, 15, 21] reveal that the real-world datasets of recommender systems usually exhibit tree-like hierarchical structures, and hyperbolic spaces can effectively capture such hierarchical information. Therefore, it is worth trying to learn session graphs in hyperbolic spaces.

Hyperbolic spaces have the ability to model hierarchical structure data because they expand faster than Euclidean spaces. They can expand exponentially, but Euclidean spaces only expand polynomially. Existing work [15] demonstrates the hierarchical structure of session graphs. However, modeling session graphs in hyperbolic spaces is still under exploration. First, time intervals indicate the correlation between two items. Since hyperbolic embedding is a better match to session graphs, it is necessary to define a new framework to identify the time intervals in the edges of session graphs. Second, learning users’ current interests in the graph is crucial, but it is difficult to realize in hyperbolic spaces. Previous works [6, 7] devise models in Euclidean space based on the last item in the session. The last item plays an important role in predicting the next item because it represents the users’ current interest. However, it is more challenging to model this feature in a hyperbolic space as the operations in hyperbolic spaces are more complicated than the Euclidean space. Third, when taking the time information into consideration, we can not only make next-item recommendations, but also provide recommendations based on a specific timestamp.

To tackle the above challenges, we propose Time-aware Hyperbolic Graph Attention Network (TA-HGAT), a hyperbolic GNN considering the comprehensive time-relevant features. Specifically, we project the item’s original features into a Poincaré ball space via a hyperbolic projection layer. Then, we design a time-aware hyperbolic attention mechanism to learn the time intervals and users’ current interests together in a hyperbolic space. It includes two modules: hyperbolic self-attention with time intervals and hyperbolic soft-attention with users’ current interests. Finally, the model is trained via an evolutionary loss to predict which item the user may be interested in at a specific timestamp. All these three components are based on a fully hyperbolic graph neural network framework.

Here, we summarize our contributions as follows:

-

•

To the best of our knowledge, this is the first paper that models temporal information in a hyperbolic space to improve the performance of the recommender system. We go beyond the conventional Euclidean machine learning models to model users’ time-relevant features in a more delicate manner.

-

•

We propose TA-HGAT, a hyperbolic GNN-based framework with three main components: hyperbolic projection, time-aware hyperbolic attention, and evolutionary loss. These three components work together in an end-to-end GNN to model items’ time intervals and users’ current interests. In the end, our model provides a time-specific recommendation.

-

•

We conduct experiments on two real-world datasets and compare our model with ten baseline models. The experiment results demonstrate the effectiveness of the TA-HGAT in MRR and Precision.

II Preliminary

II-A Graph neural network

GNNs [22, 23] are designed to handle the structural graph data. In GNNs, aggregation is the core operation to extract structural knowledge. By aggregating neighboring information, the central node can gain knowledge from its neighbors passed through edges and learn the node embedding. GNNs have been demonstrated to be powerful in learning node embeddings, so they are widely used on many node-related tasks such as node classification[23], graph classification[24], and link prediction[22].

Based on the aggregation operation, the forward propagation of a GNN on graph is to learn the embedding of node via aggregating its neighboring nodes. We suppose that the initial node embedding of each node is , which generally is the feature of the node. In each hidden layer of a GNN, the embedding of the central node is learned from the aggregated embedding of the neighboring nodes in the previous hidden layer . The process is described in math as follows:

| (1) |

where represents the set of all neighbors of node in the graph, including the node itself. The aggregation function integrates the neighboring information together. A non-linear activation function , e.g., sigmoid or LeakyReLU, is applied to generate the embedding of node in the layer .

Based on the vanilla GNN we mentioned above, GAT [25] is proposed to improve GNNs with self-attention mechanism [26]. Specifically, for all the neighbors of node , we need to learn the attention coefficients for all its neighbors to calculate the importance of each neighbor node in the aggregation. Suppose the attention coefficient of the node pair is , the process of learning is

| (2) |

where is the correlation between node and . here can be the joint embeddings of node and , e.g., concatenation of node embeddings or similarity of the node pair.

II-B Hyperbolic spaces

In definition, hyperbolic space is a homogeneous space with negative curvature. It is a smooth Riemannian manifold, which can be modeled in several hyperbolic geometric models, including Poincaré ball model[27], Klein model[28], Lorentz model[29], etc. In this paper, we choose the Poincaré ball model because the distance between two points grows exponentially, which fits well with the hierarchical structure of the session graph. Formally, the space of the -dimensional Poincaré ball is defined as

| (3) |

where is the radius of the ball and is any point in manifold . If , then and the ball is equal to the Euclidean surface. In this paper, we set . The tangent space is a -dimensional vector space approximating around , which is isomorphic to the Euclidean space. With the exponential map, a vector in the Euclidean space can be mapped to the hyperbolic space. The logarithmic map is the inverse of the exponential map, which projects the vector back to the Euclidean space.

In hyperbolic spaces, the fundamental mathematical operations of neural networks (e.g., addition and multiplication) are different from those in Euclidean space. In this paper, we choose Möbius transformation as an algebraic operation for studying hyperbolic geometry. For a pair of random vectors , we list the operations that will be used in our model as follows:

-

•

Möbius addition [30] is to perform addition operation of and .

(4) -

•

Möbius matrix-vector multiplication [31] is employed to transform with matrix .

(5) -

•

Möbius scalar multiplication is the multiplication of a scalar with a vector .

(6) -

•

Exponential map transforms from the Euclidean space to a chosen point in a hyperbolic space.

(7) -

•

Logarithmic map projects the vector back to the Euclidean space.

(8) -

•

is the conformal factor.

(9)

III Model

In this section, we present the framework of our proposed Time-aware Hyperbolic Graph Attention Network (TA-HGAT), which is designed to model the temporal information in the hyperbolic session graph. First, we define the session-based recommendation task. Then we illustrate the three main components of the model: hyperbolic projection, time-aware hyperbolic attention, and hyperbolic evolutionary loss. These three components train the model with time-relevant features and provide the recommendation results given a specific timestamp. The overall structure of TA-HGAT is shown in Figure1.

III-A Problem definition

Session-based recommendation (SBR) is to predict the item a user will click next based on the user-item interaction sessions. Generally, it models the user’s short-term browsing session data to learn the user’s current interest. Here we formulate the SBR problem mathematically as below.

In the SBR problem, a session is denoted as ordered by timestamps. Each in is an item, and the item set is , which consists of all unique items in this session. To model the session into a directed graph, we take all items as nodes and the item-item sequential dependency as the edges to construct the session graph. The graph is denoted as , where are the node and edge sets, respectively. Each edge connects two consecutive items, which is formulated as . Our target is to learn the embeddings of items and the session and generate the ranking of the items that the user may be interested in at the next timestamp.

III-B Hyperbolic projection

In GNN, each node needs input as the initial embedding. Accommodated to SBR, the input of a GNN is the feature of items such as category or description. The initial embedding of item is . However, most feature embedding methods are based on the Euclidean space. To make the item features available in the hyperbolic space, we use the exponential map defined in Eq. 7 to project the initial item embeddings to the hyperbolic space. Specifically, the projection process is formulated as

| (10) |

where is the mapped embedding in the hyperbolic space and is the chosen point in the tangent space.

To achieve a high-level latent representation of the node features, we also add a linear transformation parameterized by a weight matrix , where is the dimension of and is the dimension of the node’s final embedding. Please note that is a shared weight matrix for all nodes. Möbius matrix-vector multiplication defined in Eq. 4 is employed to transform and the process is

| (11) |

where is the transformed embedding, which is also used as the initial node embedding in the following steps.

III-C Time-aware hyperbolic attention

According to [15, 20, 32, 33], embedding users and items in hyperbolic spaces is a significant improvement of graph-based recommender systems. However, none of these works model the time intervals and users’ current interests in hyperbolic spaces. Our proposed model TA-HGAT is the first attempt to solve the problem, in which time-aware hyperbolic attention is the core component. It is composed of two attention layers: 1) Hyperbolic self-attention in the aggregation process, which considers time intervals between items; 2) Hyperbolic soft-attention in the session embedding learning, which models the user’s current interest.

III-C1 Hyperbolic self-attention with time intervals

According to Section II-A, a key step in graph attention is to learn the attention coefficient for each node pair . means the importance of the neighbors to the central node. To learn the , unlike the traditional attention networks which apply linear transformation [25] or inner product[26], here we use the distance of the node embeddings in the hyperbolic space. Specifically, we denote the distance of node pair as , which is calculated as

| (12) |

Then with the node distances, we further learn the attention coefficient of node with all its neighbors (including itself) as

| (13) |

The reason that we use distance in the hyperbolic space to calculate attention coefficients is because of two advantages. First, attention coefficients in Euclidean spaces are usually calculated by linear transformation [25] or inner product[26], which fail to meet the triangle inequality. In hyperbolic space, the learned attention coefficients are able to meet this criterion and preserve the transitivity among nodes. Second, the attention coefficient of the node with itself is , so the effect of the central node itself will not affect the calculation of attention coefficients.

After we achieve attention coefficients, the next step is to aggregate the node embeddings to learn the central node embedding of the next layer. Here the learned attention coefficients serve as the weights applied to the embeddings of neighbor nodes. The process is formulated as

| (14) |

where is the Möbius addition of the weighted neighbor node embeddings and is a nonlinear function such as sigmoid and LeakyReLU. Different from Eq. 11, the in Eq. 14 is Möbius scalar multiplication defined in Eq. 6.

To integrate the temporal information into the attention layer, the core idea is to incorporate the time intervals into the aggregation process. Specifically, we transform the time intervals to the vectors in the hyperbolic space and combine the time vectors with the neighbor node embeddings for aggregation. As time intervals are continuous values, we project the time interval values into vectors with a mapping function. The mapping process is

| (15) |

where is the time interval, here is Möbius matrix-vector multiplication, and is the transition vector to project the time interval to a vector. In this paper, if two items have multiple time intervals between them, we choose the closest one. This process is done in the data preprocessing part before modeling.

Motivated by TransE [34], time-aware hyperbolic attention translates the neighbor node embedding to the central node embedding via temporal information, so the joint embedding of nodes embedding and time embedding is generated by Möbius addition, which is represented as .

In Eq. 14, all neighbors of the central node are aggregated by Möbius addition. As the Möbius addition is complicated and consumes more computation resources than the addition in the Euclidean space, here we simplify the calculation in Eq. 14 using the logarithmic map to project the embeddings into a tangent space (Euclidean space) to conduct aggregation operation. Then the embeddings are projected back to the hyperbolic manifold with the exponential map. Therefore, we can re-write the aggregation process in Eq. 14 as

| (16) |

III-C2 Hyperbolic soft-attention with users’ current interests

In the process above, we update the embedding of node with its neighbors and time intervals. To make recommendations based on the learned node embeddings, we also need to know the global embedding of the session graph by aggregating all node embeddings. Instead of simply adding all node embeddings together, we also provide another solution to learn the graph embedding while considering users’ current interests based on the most recent interacted items.

Understanding users’ current interests are one of the main tasks in SBR. In the previous studies [6, 7, 12], the last item in the session is the most related feature in this task. To learn from the correlation of the last item with each of the other items in the session, we adopt a soft-attention mechanism to generate attention coefficients for item with all other items, which represent the importance of items w.r.t. the current timestamp. The learning process of the global session embedding is

| (17) |

| (18) |

where is the attention coefficient of item to another item in the session . and are weight matrices. is the session embedding that contains the session graph structure, temporal information, and user’s current intent, so we can use to infer the user’s next interaction in our next step.

III-D Hyperbolic evolutionary loss

Here we introduce how to leverage evolutionary loss to provide recommendations given a specific timestamp. Unlike other works [3, 35], our evolutionary loss is also fully hyperbolic.

III-D1 Evolution formulas

The core idea of evolutionary loss is to predict the future session and next-item embeddings given a future timestamp and then make recommendations. The prediction results of evolutionary loss do not rely on the sequences like RNN-based models[4, 5] but are based on the final embeddings learned by the TA-HGAT.

As is the predicted session embedding in the future, we also need an estimated future session embedding to measure whether the predicted embedding is accurate. Assume that the growth of the session embedding is smooth. The embedding vector of the session evolves in a contiguous space. Therefore, we devise a projection function to infer the future session embedding based on the element-wise product of the previous embedding and the time interval. The embedding projection of session after current time to the future time is defined as follows:

| (19) |

where is a vector with all elements and is Möbius element-wise product. is the time interval vector, which is learned in the same way as Eq. 15. The vector is to provide the minimum difference between the last and next session embeddings. With this projection function, the future session embedding grows in a smooth trajectory w.r.t. the time interval.

After learning the projected embedding of the session , the next step is to apply another projection function to generate the future embedding of the next item , which is denoted as . The projected future item embedding is composed of three components: the projected session embedding, the last item embedding, and the time interval, which are learned in the previous steps. Here, we define the projection formula of next item as

| (20) |

where and denote the weight matrix.

III-D2 Loss function

With the above projection functions, we can achieve the estimated future embeddings of the session and the next item. They are utilized as ground truth embeddings in our loss function. To train the model, the loss function is designed to minimize the distances between model-generated embeddings , and estimated ground truth embeddings , at each interaction time . Also, another constraint for the item embeddings is necessary to avoid overfitting. We constrain the distance between the embeddings of the most recent two items and to ensure the last item embeddings are consistent with the previous one. This constraint assumes that the last and next items reflect similar user intent, and the session embedding tends to be stable in a short time. Finally, the loss function is as follows:

| (21) |

where denotes all sessions in the datasets, and and are smooth coefficients, which are used to prevent the embeddings of the session and items from deviating too much during the update process. is the hyperbolic distance function which is described in Eq. 12.

To make recommendations for a user, we calculate the hyperbolic distances between the predicted item embedding obtained from the loss function and all other item embeddings. Then the nearest top- items are what we predict for the user.

Compared with traditional BPR loss [36], the evolutionary loss is more suitable for time-aware recommendations because it takes time intervals into account. As a result, the changing trajectories are modeled by this loss [3], and it can make more precise recommendations for the next item given a specific timestamp.

IV Experiments

In this section, we describe the experimental results on two public datasets and compare our proposed TA-HGAT with ten state-of-the-art baseline models. Our experiments are designed to solve the following research questions:

-

•

RQ1: How does TA-HGAT compare with other state-of-the-art session-based recommendation models?

-

•

RQ2: How do the two modules of time-aware hyperbolic attention, i.e., hyperbolic self-attention with time intervals and hyperbolic soft-attention with users’ current interests, affect the performance of TA-HGAT?

-

•

RQ3: How does the hyperbolic evolutionary loss compare with other loss functions?

-

•

RQ4: How is the influence of different hyper-parameters, i.e. embedding dimensions?

IV-A Experiment settings

IV-A1 Datasets

We conduct our experiments on two widely used public datasets: Yoochoose and Diginetica. The statistics of these datasets are listed in Table I.

-

•

Yoochoose111https://www.kaggle.com/datasets/chadgostopp/recsys-challenge-2015 is a public dataset released by the RecSys Challenge 2015, which contains click streams from yoochoose.com within 6 months.

-

•

Diginetica222https://competitions.codalab.org/competitions/11161 is obtained from the CIKM Cup 2016. We use the item categories to initialize the item embeddings.

| Datasets | Items | train sessions | test sessions | Avg. len | clicks |

|---|---|---|---|---|---|

| Diginetica | 43,097 | 719,470 | 60,858 | 5.12 | 982,961 |

| Yoochoose1/64 | 16,766 | 369,859 | 55,898 | 6.16 | 557,248 |

| Yoochoose1/4 | 29,618 | 5,917,746 | 55,898 | 5.71 | 8,326,407 |

IV-A2 Evaluation Metrics

We evaluate the performance of our model with Mean Reciprocal Rank (MRR@K) and Precision (P@K) in the comparison experiments.

MRR@K considers the position of the target item in the list of recommended items. It is set to 0 if the target item is not in the top- of the ranking list, or otherwise is calculated as follows:

| (22) |

where is the target item and is the number of test sequences in the dataset.

P@K measures whether the target item is included in the top- list of recommended items, which is calculated as

| (23) |

IV-A3 Implementation

Our model 333The datasets and codes will be available after accepted is implemented with PyTorch 1.12.1 [37] and CUDA 10.2. In the testing phase, we take the interval between the session’s last timestamp and the testing item’s timestamp as a part of the input to obtain the recommendation list. This setting is different from other baseline models as they cannot deal with temporal information. In fact, this setting meets the actual situation in the industry because our model can provide recommendations as soon as the user logs into the website, and we can easily obtain the real-time time interval.

IV-B Performance comparison (RQ1)

To demonstrate the effectiveness of TA-HGAT, we conduct experiments on two public datasets and compare the model with ten state-of-the-art baseline models.

IV-B1 Baseline models

-

•

S-POP takes the most popular items of each session as the recommended list.

-

•

FPMC[38] is a Markov chain-method for sequential recommendation, which only takes the item sequences in session-based recommendation since user features are unavailable.

-

•

GRU4REC[39] is the first work that applies RNN to the session-based recommendation to learn the sequential dependency of items.

-

•

NARM[5] utilizes an attention mechanism to model the sequential behaviors and the user’s primary purpose with global and local encoders.

-

•

STAMP[6] employs an attention and memory mechanism to learn the user’s preference and takes the last item as recent intent in the session to make recommendations.

-

•

SR-GNN[7] is the first work that model a session into a graph. It resorts to the gated graph neural networks to learn the complex item transitions in the sessions.

-

•

TAGNN [12] improves SR-GNN by learning the interest representation vector with different target items to improve the performance of the model.

-

•

NISER+ [40] handles the long-tail problem in SBR with L2 normalization and dropout to alleviate the overfitting problem.

-

•

SGNN-HN [41] applies a star graph neural network to consider the items without direct connections.

-

•

HCGR [15] models the session graphs in hyperbolic space and makes use of multi-behavior information to improve performance. In our experiments, we don’t use the behavior information as the datasets didn’t provide it and we are modeling a more general scenario.

| Models | Diginetica | Yoochoose 1/64 | Yoochoose 1/4 | |||

|---|---|---|---|---|---|---|

| MRR@20 | P@20 | MRR@20 | P@20 | MRR@20 | P@20 | |

| S-POP | 13.68 | 21.06 | 18.35 | 30.44 | 17.75 | 27.08 |

| FPMC | 8.92 | 31.55 | 15.01 | 45.62 | - | - |

| GRU4REC | 8.33 | 29.45 | 22.89 | 60.64 | 22.60 | 59.53 |

| NARM | 16.17 | 49.70 | 28.63 | 68.32 | 29.23 | 69.73 |

| STAMP | 14.32 | 45.64 | 29.67 | 68.74 | 30.00 | 70.44 |

| SR-GNN | 17.59 | 50.73 | 30.94 | 70.57 | 31.89 | 71.36 |

| TAGNN | 18.03 | 51.31 | 31.12 | 71.02 | 32.03 | 71.51 |

| HCGR | 18.51 | 52.47 | 31.46 | 71.13 | 32.39 | 71.66 |

| NISER+ | 18.72 | 53.39 | 31.61 | 71.27 | 31.80 | 71.80 |

| SGNN-HN | 19.45 | 55.67 | 32.61 | 72.06 | 32.55 | 72.85 |

| TA-HGAT | 19.73 | 56.28 | 32.90 | 72.75 | 32.94 | 73.56 |

| Improv. | 1.44% | 1.10 % | 0.89% | 0.96% | 1.20% | 0.97% |

IV-B2 Result analysis

The complete experimental results of the comparison study are shown in Table II. From the results, we have the following observations:

-

•

Our proposed TA-HGAT outperforms all baseline models on all datasets and metrics, which demonstrates the effectiveness of the model. Besides, HCGR, which is another hyperbolic graph-based SBR model, has achieved better performance than the graph-based SBR model SR-GNN but worse than our model. Compared to HCGR, our model improves 4.3% and 4.1% on average over three datasets on metrics MRR@20 and P@20, respectively. HCGR is better than SR-GNN, indicating that hyperbolic embeddings match session graphs. And the improvement of TA-HGAT over HCGR shows the importance of temporal information in the SBR task.

-

•

In Table II, we also observe that our model has a better performance on dataset Diginetica than Yoochoose. On average, the performance of TA-HGAT on Diginetica outperforms Yoochoose for 37.8% and 14.0% on metrics MRR@20 and P@20, respectively. This phenomenon may result from the initial features of items. In Diginetica, each item has its category label, and we transform this feature into a one-hot vector as the initial embedding of the item. In HCGR, we model the initial feature to a feature vector in the hyperbolic space, which is shown in Eq. 10 and 11. Differences in performance between Diginetica and Yoochoose indicate that the hyperbolic embeddings have a better expression ability on the item features.

IV-C Ablation study (RQ2)

In the TA-HGAT, we have two main modules in time-aware hyperbolic attention: hyperbolic self-attention with time intervals and hyperbolic soft-attention with users’ current interests. In this section, we evaluate their effectiveness separately to show the improvement compared with the ablation models without these two modules.

We set up four separate ablation models to compare the effectiveness of each attention layer. The first ablation model is no-att, in which we remove both the attention layers and only conduct the aggregation operations directly. The second and third ones are self-att and soft-att, and these two ablation models only include the self-attention and soft-attention layers, respectively. The fourth one is TA-HGAT, which is the complete model. The comparison results of the ablation models on datasets Diginetica and Yoochoose are illustrated in Figure 2.

From Figure 2, we observe the following results:

-

•

On both Diginetica and Yoochoose datasets, the non-att performs worst, and TA-HGAT performs best. The results show the effectiveness of the attention layers. This is because the TA-HGAT makes full use of the temporal information. Compared to the GNNs without temporal information, our model builds the relations between items with time intervals and also considers users’ current interests. Hence, the rich information helps the model to achieve better results.

-

•

Self-att performs better than soft-att, which means time intervals are relatively more meaningful than users’ current interests. This phenomenon may be due to the fact that users’ current interests are more complicated, so the last item cannot fully represent them. In contrast, the time interval is a more straightforward feature, so our proposed hyperbolic self-attention layer can handle this information effectively.

IV-D Comparison of loss functions (RQ3)

In this section, we compare our proposed hyperbolic evolutionary loss to conventional loss functions, i.e., BPR [36] and softmax loss [7]. Because the learned session embeddings in the output of our model are in the hyperbolic space, we need to use the logarithmic map to project the embeddings back to the Euclidean space before applying BPR and softmax loss.

The comparison results are shown in Table III. The hyperbolic evolutionary loss is denoted as TA-HGAT in the table. From this table, we can find that the performance of BPR and softmax loss is similar, but our proposed hyperbolic evolutionary loss has a clear improvement compared to the other losses. This observation demonstrates that considering the specific timestamp is effective for the SBR task models designed in hyperbolic space.

| Loss | Diginetica | Yoochoose 1/64 | Yoochoose 1/4 | |||

|---|---|---|---|---|---|---|

| MRR@20 | P@20 | MRR@20 | P@20 | MRR@20 | P@20 | |

| Softmax | 19.38 | 55.81 | 32.67 | 72.10 | 32.72 | 72.95 |

| BPR | 19.43 | 55.97 | 32.53 | 72.28 | 32.66 | 72.84 |

| TA-HGAT | 19.73 | 56.28 | 32.90 | 72.75 | 32.94 | 73.56 |

IV-E Hyperparameter analysis (RQ4)

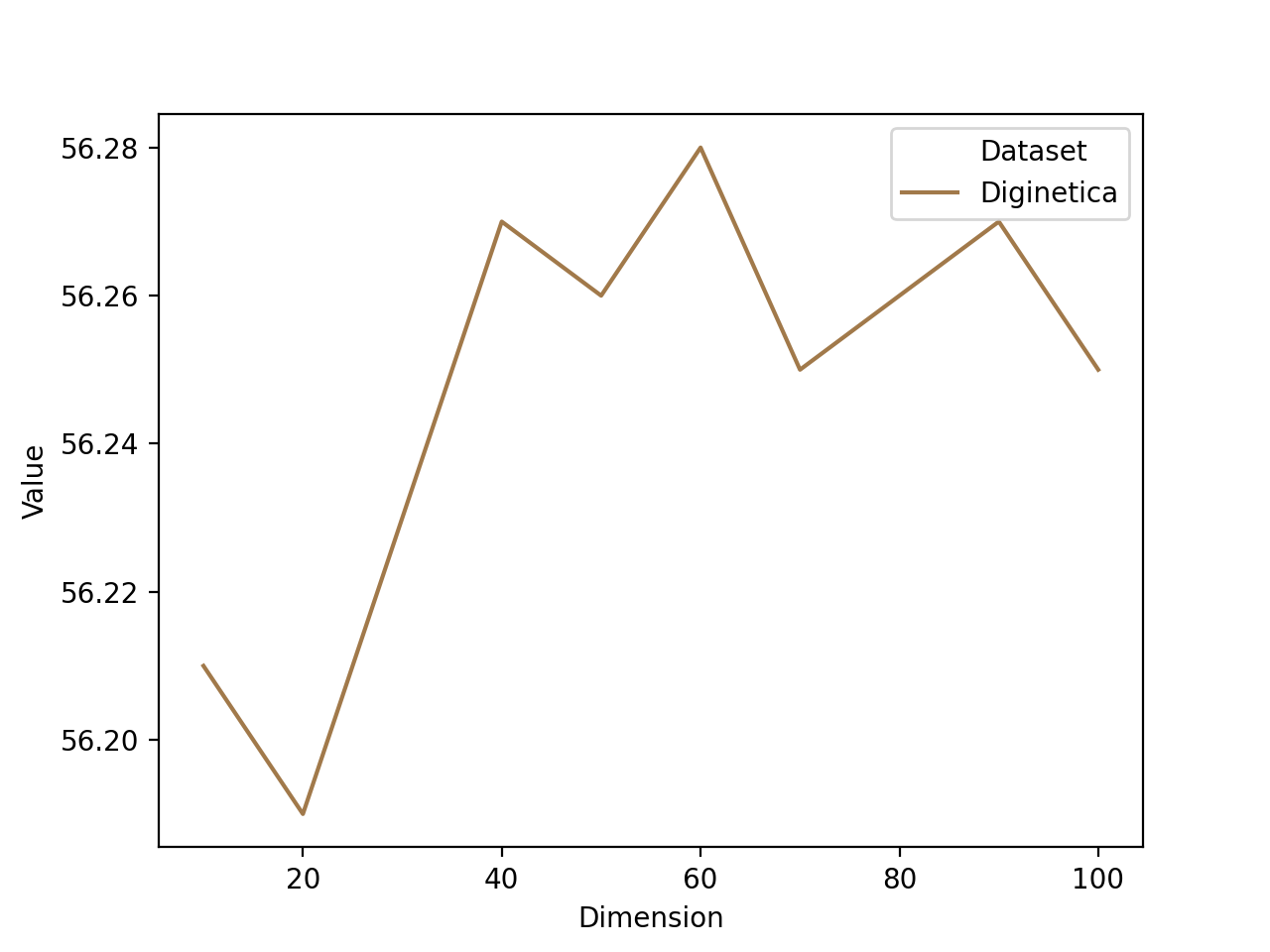

The embedding dimension is the hyperparameter in our proposed model, so we test the influence of different embedding dimensions in this section. The embedding dimensions range from 20 to 100. The results of the hyperparameter analysis are illustrated in Figure 3.

It is observed that a proper embedding dimension is essential for learning the item and session representations. From Figure 3, we can see that the Diginetica and Yoochoose 1/64 all achieve the best performance when the embedding dimension is 60, and the best result of Yoochoose 1/4 is 80. Because Yoochoose 1/4 is much larger than the other two datasets, it indicates that larger datasets need larger embedding space.

V Related works

V-A Hyperbolic spaces

Recent research has shown that many types of complex data exhibit a highly non-Euclidean structure[19]. In many domains, e.g., natural language [42], computer vision[43], and healthcare[44], data usually has a tree-like structure or can be represented hierarchically. Since this type of data contains an underlying hierarchical structure, capturing such representations in Euclidean space is difficult. To solve this problem, current studies are increasingly attracted by the idea of building neural networks in Riemannian space, such as the hyperbolic space, which is a homogeneous space with constant negative curvature [27]. Compared with Euclidean space, hyperbolic space in which the volume of a ball grows exponentially with radius instead of growing polynomially. Because of its powerful representation ability, hyperbolic space has been applied in many areas. For instance, [45] learns word and sentence embeddings in hyperbolic space in an unsupervised manner from text corpora. [46] demonstrates that hyperbolic embeddings are beneficial for visual data. [47] proposes Hyperbolic Graph Convolutional Neural Networks, which combines the expressiveness of GCNs and hyperbolic geometry to learn graph representations. These works show the potential and advantages of hyperbolic space in learning hierarchical structures of complex data.

Based on the performance of hyperbolic space in these fields, it is natural for researchers to think of applying hyperbolic learning to recommender systems. [48] justifies the use of hyperbolic representations for neural recommender systems. [49] proposes HyperML to bridge the gap between Euclidean and hyperbolic geometry in recommender systems through a metric learning approach. [21] proposes a hyperbolic GCN model for collaborative filtering. [50] presents HyperSoRec, a novel graph neural network (GNN) framework with multiple-aspect learning for social recommendation.

V-B Session-based Recommendation

Session-based Recommendation (SBR) has increasingly engaged attention in both industry and academia due to its effectiveness in modeling users’ current interests. In the recent SBR studies, there are mainly three types of methods that apply deep learning to SBR and have achieved state-of-the-art performance, which are sequence-based [4, 51], attention-based [5, 6] and graph-based models [7]. GRU4REC [4] is the most representative work in the sequence-based SBR models. It employs GRU, a variant of RNN, to model the item sequences and make the next-item prediction. Following GRU4REC, some other papers [51, 52, 39] improve it with data augmentation, hierarchical structure, and top- gains. Attention-based models aim to learn the importance of different items in the session and make the model focus on the important ones. NARM [5] utilizes an attention mechanism to model both local and global features of the session to learn users’ interests. STAMP [6] combines the attention model and memory network to learn the short-term priority of sessions. Graph-based models connect the items in a graph to learn their complex transitions. SR-GNN [7] is the first work that models the sessions into graphs. It leverages the Gated Graph Neural Network (GGNN) to model the session graphs and achieve state-of-the-art performance. Based on SR-GNN, [10, 12] improve SR-GNN with attention layers. [11, 8] consider the item order in the session graph in the model. [9, 13, 14] take additional information such as global item relationship, item categories, and user representations into account to design more extensive models. However, all these methods fail to consider the hierarchical geometry of the session graphs and the temporal information.

V-C GNN-based Recommendation Models

GNNs have proven to be useful in different research fields [53, 54, 55, 56, 57, 58]. There also exist many works considering the graph structures in data modeling of recommender systems. Generally, there are two main ways regarding the graph structure and embedding space. One way is to model the user-item interaction graph in Euclidean spaces. Among them, [59, 60, 61] perform graph convolution on the user-item graph to explore their interactions. [62, 63, 64] utilize layer-to-layer neighborhood aggregation in GNNs to capture the high-order connections. [65] pre-trains user-user and item-item graphs separately to learn the initial embeddings of the user-item interaction graph. [35] models the changes in user-item interactions with a dynamic graph and evolutionary loss. These works apply GNN to learn from high-dimensional graph data and generate low-dimensional node embeddings without feature engineering, but the learned embeddings are all in Euclidean spaces, while some graph data may be more suitable to other geometries in the representation learning.

The other way is to model the recommendation graph in hyperbolic space to learn the hierarchical geometry. HGCF [21] applies hyperbolic GCN to learn the node embeddings using a user-item graph. Wang et al. [20] propose a fully hyperbolic GCN where all operations are conducted in hyperbolic space. Xu et al. [32] model the product graph in a knowledge graph and learn the node embeddings in hyperbolic space. HCGR [15] is a novel hyperbolic contrastive graph representation learning method to make session-based recommendations. None of these models utilize the time-relevant information in the session graphs to improve the recommendation accuracy. In this paper, we propose a novel framework incorporating a time-aware graph attention mechanism in hyperbolic space, which is specifically devised for the session-based recommendation.

VI Conclusion

Session-based Recommendation (SBR) is to predict users’ next interested items based on their previous sessions. Existing works model the graph structure in the sessions and have achieved state-of-the-art performance. However, they fail to consider the hierarchical geometry and temporal information in the sessions. In this paper, we propose TA-HGAT, a hyperbolic GNN-based model that considers the time interval between items and users’ current interests. Experiment results demonstrate that TA-HGAT outperforms other SBR models on two real-world datasets.

For future work, we will extend our model to more general recommender systems. The time intervals are not only in the SBR problem, but also in almost all recommender systems. As a result, we want to test how our model performs on other recommendation problems, e.g., next-basket recommendation and point-of-interest recommendation, where temporal information plays a crucial role in providing recommendations.

VII Acknowledgement

This work is supported in part by NSF under grants III-1763325, III-1909323, III-2106758, and SaTC-1930941.

References

- [1] G. Zhou, X. Zhu, C. Song, Y. Fan, H. Zhu, X. Ma, Y. Yan, J. Jin, H. Li, and K. Gai, “Deep interest network for click-through rate prediction,” in SIGKDD. ACM, 2018, pp. 1059–1068.

- [2] A. Van den Oord, S. Dieleman, and B. Schrauwen, “Deep content-based music recommendation,” Advances in neural information processing systems, vol. 26, 2013.

- [3] S. Kumar, X. Zhang, and J. Leskovec, “Predicting dynamic embedding trajectory in temporal interaction networks,” in SIGKDD. ACM, 2019, pp. 1269–1278.

- [4] B. Hidasi, A. Karatzoglou, L. Baltrunas, and D. Tikk, “Session-based recommendations with recurrent neural networks,” arXiv preprint arXiv:1511.06939, 2015.

- [5] J. Li, P. Ren, Z. Chen, Z. Ren, T. Lian, and J. Ma, “Neural attentive session-based recommendation,” in CIKM. ACM, 2017, pp. 1419–1428.

- [6] Q. Liu, Y. Zeng, R. Mokhosi, and H. Zhang, “Stamp: short-term attention/memory priority model for session-based recommendation,” in SIGKDD. ACM, 2018, pp. 1831–1839.

- [7] S. Wu, Y. Tang, Y. Zhu, L. Wang, X. Xie, and T. Tan, “Session-based recommendation with graph neural networks,” in Proceedings of the AAAI conference on artificial intelligence, vol. 33, no. 01, 2019, pp. 346–353.

- [8] T. Chen and R. C.-W. Wong, “Handling information loss of graph neural networks for session-based recommendation,” in SIGKDD. ACM, 2020, pp. 1172–1180.

- [9] Z. Wang, W. Wei, G. Cong, X.-L. Li, X.-L. Mao, and M. Qiu, “Global context enhanced graph neural networks for session-based recommendation,” in SIGIR. ACM, 2020, pp. 169–178.

- [10] C. Xu, P. Zhao, Y. Liu, V. S. Sheng, J. Xu, F. Zhuang, J. Fang, and X. Zhou, “Graph contextualized self-attention network for session-based recommendation.” in IJCAI, vol. 19, 2019, pp. 3940–3946.

- [11] R. Qiu, J. Li, Z. Huang, and H. Yin, “Rethinking the item order in session-based recommendation with graph neural networks,” in CIKM. ACM, 2019, pp. 579–588.

- [12] F. Yu, Y. Zhu, Q. Liu, S. Wu, L. Wang, and T. Tan, “Tagnn: Target attentive graph neural networks for session-based recommendation,” in SIGIR. ACM, 2020, pp. 1921–1924.

- [13] L. Liu, L. Wang, and T. Lian, “Case4sr: Using category sequence graph to augment session-based recommendation,” Knowledge-Based Systems, vol. 212, p. 106558, 2021.

- [14] Y. Pang, L. Wu, Q. Shen, Y. Zhang, Z. Wei, F. Xu, E. Chang, B. Long, and J. Pei, “Heterogeneous global graph neural networks for personalized session-based recommendation,” in WSDM. ACM, 2022, pp. 775–783.

- [15] N. Guo, X. Liu, S. Li, Q. Ma, Y. Zhao, B. Han, L. Zheng, K. Gao, and X. Guo, “Hcgr: Hyperbolic contrastive graph representation learning for session-based recommendation,” arXiv preprint arXiv:2107.05366, 2021.

- [16] J. Li, Y. Wang, and J. McAuley, “Time interval aware self-attention for sequential recommendation,” in WSDM, 2020, pp. 322–330.

- [17] W. Ye, S. Wang, X. Chen, X. Wang, Z. Qin, and D. Yin, “Time matters: Sequential recommendation with complex temporal information,” in SIGIR, 2020, pp. 1459–1468.

- [18] R. C. Wilson, E. R. Hancock, E. Pekalska, and R. P. Duin, “Spherical and hyperbolic embeddings of data,” IEEE transactions on pattern analysis and machine intelligence, vol. 36, no. 11, pp. 2255–2269, 2014.

- [19] M. M. Bronstein, J. Bruna, Y. LeCun, A. Szlam, and P. Vandergheynst, “Geometric deep learning: going beyond euclidean data,” IEEE Signal Processing Magazine, vol. 34, no. 4, pp. 18–42, 2017.

- [20] L. Wang, F. Hu, S. Wu, and L. Wang, “Fully hyperbolic graph convolution network for recommendation,” in CIKM, 2021, pp. 3483–3487.

- [21] J. Sun, Z. Cheng, S. Zuberi, F. Pérez, and M. Volkovs, “Hgcf: Hyperbolic graph convolution networks for collaborative filtering,” in Proceedings of the Web Conference 2021, 2021, pp. 593–601.

- [22] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” ICLR, 2016.

- [23] W. Hamilton, Z. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” in Advances in neural information processing systems, 2017, pp. 1024–1034.

- [24] Z. Ying, J. You, C. Morris, X. Ren, W. Hamilton, and J. Leskovec, “Hierarchical graph representation learning with differentiable pooling,” Advances in neural information processing systems, vol. 31, 2018.

- [25] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Lio, and Y. Bengio, “Graph attention networks,” arXiv preprint arXiv:1710.10903, 2017.

- [26] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, pp. 5998–6008, 2017.

- [27] M. Nickel and D. Kiela, “Poincaré embeddings for learning hierarchical representations,” Advances in neural information processing systems, vol. 30, 2017.

- [28] C. Gulcehre, M. Denil, M. Malinowski, A. Razavi, R. Pascanu, K. M. Hermann, P. Battaglia, V. Bapst, D. Raposo, A. Santoro et al., “Hyperbolic attention networks,” arXiv preprint arXiv:1805.09786, 2018.

- [29] M. Nickel and D. Kiela, “Learning continuous hierarchies in the lorentz model of hyperbolic geometry,” in International Conference on Machine Learning. PMLR, 2018, pp. 3779–3788.

- [30] Q. Liu, M. Nickel, and D. Kiela, “Hyperbolic graph neural networks,” Advances in neural information processing systems, vol. 32, 2019.

- [31] O. Ganea, G. Bécigneul, and T. Hofmann, “Hyperbolic neural networks,” Advances in neural information processing systems, vol. 31, 2018.

- [32] D. Xu, C. Ruan, E. Korpeoglu, S. Kumar, and K. Achan, “Product knowledge graph embedding for e-commerce,” in WSDM. ACM, 2020, pp. 672–680.

- [33] Y. Li, H. Chen, X. Sun, Z. Sun, L. Li, L. Cui, P. S. Yu, and G. Xu, “Hyperbolic hypergraphs for sequential recommendation,” in CIKM, 2021, pp. 988–997.

- [34] A. Bordes, N. Usunier, A. Garcia-Duran, J. Weston, and O. Yakhnenko, “Translating embeddings for modeling multi-relational data,” in Advances in neural information processing systems, 2013, pp. 2787–2795.

- [35] X. Li, M. Zhang, S. Wu, Z. Liu, L. Wang, and S. Y. Philip, “Dynamic graph collaborative filtering,” in ICDM. IEEE, 2020, pp. 322–331.

- [36] S. Rendle, C. Freudenthaler, Z. Gantner, and L. Schmidt-Thieme, “Bpr: Bayesian personalized ranking from implicit feedback,” arXiv preprint arXiv:1205.2618, 2012.

- [37] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “Pytorch: An imperative style, high-performance deep learning library,” Advances in neural information processing systems, vol. 32, 2019.

- [38] S. Rendle, C. Freudenthaler, and L. Schmidt-Thieme, “Factorizing personalized markov chains for next-basket recommendation,” in Proceedings of the 19th international conference on World wide web, 2010, pp. 811–820.

- [39] B. Hidasi and A. Karatzoglou, “Recurrent neural networks with top-k gains for session-based recommendations,” in CIKM, 2018, pp. 843–852.

- [40] P. Gupta, D. Garg, P. Malhotra, L. Vig, and G. M. Shroff, “Niser: normalized item and session representations with graph neural networks,” arXiv preprint arXiv:1909.04276, 2019.

- [41] Z. Pan, F. Cai, W. Chen, H. Chen, and M. de Rijke, “Star graph neural networks for session-based recommendation,” in CIKM, 2020, pp. 1195–1204.

- [42] A. Tifrea, G. Bécigneul, and O.-E. Ganea, “Poincar’e glove: Hyperbolic word embeddings,” arXiv preprint arXiv:1810.06546, 2018.

- [43] M.-M. Yau and S. N. Srihari, “A hierarchical data structure for multidimensional digital images,” Communications of the ACM, vol. 26, no. 7, pp. 504–515, 1983.

- [44] S. J. Wilkens, J. Janes, and A. I. Su, “Hiers: hierarchical scaffold clustering using topological chemical graphs,” Journal of medicinal chemistry, vol. 48, no. 9, pp. 3182–3193, 2005.

- [45] B. Dhingra, C. Shallue, M. Norouzi, A. Dai, and G. Dahl, “Embedding text in hyperbolic spaces,” in Proceedings of the Twelfth Workshop on Graph-Based Methods for Natural Language Processing (TextGraphs-12), 2018, pp. 59–69.

- [46] V. Khrulkov, L. Mirvakhabova, E. Ustinova, I. Oseledets, and V. Lempitsky, “Hyperbolic image embeddings,” in CVPR, 2020, pp. 6418–6428.

- [47] I. Chami, Z. Ying, C. Ré, and J. Leskovec, “Hyperbolic graph convolutional neural networks,” Advances in neural information processing systems, vol. 32, 2019.

- [48] B. P. Chamberlain, S. R. Hardwick, D. R. Wardrope, F. Dzogang, F. Daolio, and S. Vargas, “Scalable hyperbolic recommender systems,” arXiv preprint arXiv:1902.08648, 2019.

- [49] L. Vinh Tran, Y. Tay, S. Zhang, G. Cong, and X. Li, “Hyperml: A boosting metric learning approach in hyperbolic space for recommender systems,” in WSDM, 2020, pp. 609–617.

- [50] H. Wang, D. Lian, H. Tong, Q. Liu, Z. Huang, and E. Chen, “Hypersorec: Exploiting hyperbolic user and item representations with multiple aspects for social-aware recommendation,” ACM Transactions on Information Systems (TOIS), vol. 40, no. 2, pp. 1–28, 2021.

- [51] Y. K. Tan, X. Xu, and Y. Liu, “Improved recurrent neural networks for session-based recommendations,” in Proceedings of the 1st workshop on deep learning for recommender systems, 2016, pp. 17–22.

- [52] M. Quadrana, A. Karatzoglou, B. Hidasi, and P. Cremonesi, “Personalizing session-based recommendations with hierarchical recurrent neural networks,” in proceedings of the Eleventh ACM Conference on Recommender Systems, 2017, pp. 130–137.

- [53] H. Peng, J. Li, Q. Gong, Y. Song, Y. Ning, K. Lai, and P. S. Yu, “Fine-grained event categorization with heterogeneous graph convolutional networks,” arXiv preprint arXiv:1906.04580, 2019.

- [54] Z. Liu, X. Li, Z. You, T. Yang, W. Fan, and P. Yu, “Medical triage chatbot diagnosis improvement via multi-relational hyperbolic graph neural network,” in SIGIR. ACM, 2021, pp. 1965–1969.

- [55] Y. Dou, Z. Liu, L. Sun, Y. Deng, H. Peng, and P. S. Yu, “Enhancing graph neural network-based fraud detectors against camouflaged fraudsters,” in CIKM. ACM, 2020, pp. 315–324.

- [56] Z. Liu, X. Li, H. Peng, L. He, and S. Y. Philip, “Heterogeneous similarity graph neural network on electronic health records,” in 2020 IEEE International Conference on Big Data (Big Data). IEEE, 2020, pp. 1196–1205.

- [57] J. Li, H. Peng, Y. Cao, Y. Dou, H. Zhang, P. Yu, and L. He, “Higher-order attribute-enhancing heterogeneous graph neural networks,” IEEE Transactions on Knowledge and Data Engineering, 2021.

- [58] Y. Hei, R. Yang, H. Peng, L. Wang, X. Xu, J. Liu, H. Liu, J. Xu, and L. Sun, “Hawk: Rapid android malware detection through heterogeneous graph attention networks,” IEEE Transactions on Neural Networks and Learning Systems, 2021.

- [59] L. Zheng, C.-T. Lu, F. Jiang, J. Zhang, and P. S. Yu, “Spectral collaborative filtering,” in Proceedings of the 12th ACM Conference on Recommender Systems, 2018, pp. 311–319.

- [60] R. v. d. Berg, T. N. Kipf, and M. Welling, “Graph convolutional matrix completion,” arXiv preprint arXiv:1706.02263, 2017.

- [61] W. Yu and Z. Qin, “Graph convolutional network for recommendation with low-pass collaborative filters,” in ICML. PMLR, 2020, pp. 10 936–10 945.

- [62] X. Wang, X. He, M. Wang, F. Feng, and T.-S. Chua, “Neural graph collaborative filtering,” in SIGIR. ACM, 2019, pp. 165–174.

- [63] X. He, K. Deng, X. Wang, Y. Li, Y. Zhang, and M. Wang, “Lightgcn: Simplifying and powering graph convolution network for recommendation,” in SIGIR. ACM, 2020, pp. 639–648.

- [64] Z. Liu, X. Li, Z. Fan, S. Guo, K. Achan, and S. Y. Philip, “Basket recommendation with multi-intent translation graph neural network,” in 2020 IEEE International Conference on Big Data (Big Data). IEEE, 2020, pp. 728–737.

- [65] X. Li, Z. Liu, S. Guo, Z. Liu, H. Peng, S. Y. Philip, and K. Achan, “Pre-training recommender systems via reinforced attentive multi-relational graph neural network,” in 2021 IEEE International Conference on Big Data (Big Data). IEEE, 2021, pp. 457–468.