Time-adaptive Lagrangian Variational Integrators

for Accelerated Optimization on Manifolds

Abstract.

A variational framework for accelerated optimization was recently introduced on normed vector spaces and Riemannian manifolds in Wibisono et al. [65] and Duruisseaux and Leok [19]. It was observed that a careful combination of time-adaptivity and symplecticity in the numerical integration can result in a significant gain in computational efficiency. It is however well known that symplectic integrators lose their near energy preservation properties when variable time-steps are used. The most common approach to circumvent this problem involves the Poincaré transformation on the Hamiltonian side, and was used in Duruisseaux et al. [20] to construct efficient explicit algorithms for symplectic accelerated optimization. However, the current formulations of Hamiltonian variational integrators do not make intrinsic sense on more general spaces such as Riemannian manifolds and Lie groups. In contrast, Lagrangian variational integrators are well-defined on manifolds, so we develop here a framework for time-adaptivity in Lagrangian variational integrators and use the resulting geometric integrators to solve optimization problems on vector spaces and Lie groups.

1. Introduction

Many machine learning algorithms are designed around the minimization of a loss function or the maximization of a likelihood function. Due to the ever-growing scale of data sets, there has been a lot of focus on first-order optimization algorithms because of their low cost per iteration. In 1983, Nesterov’s accelerated gradient method was introduced in [54], and was shown to converge in to the minimum of the convex objective function , improving on the convergence rate exhibited by the standard gradient descent methods. This convergence rate was shown in [55] to be optimal among first-order methods using only information about at consecutive iterates. This phenomenon in which an algorithm displays this improved rate of convergence is referred to as acceleration, and other accelerated algorithms have been derived since Nesterov’s algorithm. More recently, it was shown in [59] that Nesterov’s accelerated gradient method limits, as the time-step goes to 0, to a second-order differential equation and that the objective function converges to its optimal value at a rate of along the trajectories of this ordinary differential equation. It was later shown in [65] that in continuous time, the convergence rate of can be accelerated to an arbitrary convergence rate in normed spaces, by considering flow maps generated by a family of time-dependent Bregman Lagrangian and Hamiltonian systems which is closed under time-rescaling. This framework for accelerated optimization in normed vector spaces has been studied and exploited using geometric numerical integrators in [3; 31; 8; 22; 21; 20]. In [20], time-adaptive geometric integrators have been proposed to take advantage of the time-rescaling property of the Bregman family and design efficient explicit algorithms for symplectic accelerated optimization. It was observed that a careful use of adaptivity and symplecticity could result in a significant gain in computational efficiency, by simulating higher-order Bregman dynamics using the computationally efficient lower-order Bregman integrators applied to the time-rescaled dynamics.

More generally, symplectic integrators form a class of geometric integrators of interest since, when applied to Hamiltonian systems, they yield discrete approximations of the flow that preserve the symplectic 2-form and as a result also preserve many qualitative aspects of the underlying dynamical system (see [26]). In particular, when applied to conservative Hamiltonian systems, symplectic integrators exhibit excellent long-time near-energy preservation. However, when symplectic integrators were first used in combination with variable time-steps, the near-energy preservation was lost and the integrators performed poorly (see [7; 24]). There has been a great effort to circumvent this problem, and there have been many successes, including methods based on the Poincaré transformation [69; 25]: a Poincaré transformed Hamiltonian in extended phase space is constructed which allows the use of variable time-steps in symplectic integrators without losing the nice conservation properties associated to these integrators. In [20], the Poincaré transformation was incorporated in the Hamiltonian variational integrator framework which provides a systematic method for constructing symplectic integrators of arbitrarily high-order based on the discretization of Hamilton’s principle [49; 27], or equivalently, by the approximation of the generating function of the symplectic flow map. The Poincaré transformation was at the heart of the construction of time-adaptive geometric integrators for Bregman Hamiltonian systems which resulted in efficient, explicit algorithms for accelerated optimization in [20].

In [60; 42], accelerated optimization algorithms were proposed in the Lie group setting for specific choices of parameters in the Bregman family, and [2] provided a first example of Bregman dynamics on Riemannian manifolds. The entire variational framework was later generalized to the Riemannian manifold setting in [19], and time-adaptive geometric integrators taking advantage of the time-rescaling property of the Bregman family have been proposed in the Riemannian manifold setting as well using discrete variational integrators incorporating holonomic constraints [16] and projection-based variational integrators [18]. Note that both these strategies relied on exploiting the structure of the Euclidean spaces in which the Riemannian manifolds are embedded. Although the Whitney and Nash Embedding Theorems [64; 63; 53] imply that there is no loss of generality when studying Riemannian manifolds only as submanifolds of Euclidean spaces, designing intrinsic methods that would exploit and preserve the symmetries and geometric properties of the manifold could have advantages both in terms of computational efficiency and in terms of improving our understanding of the acceleration phenomenon on Riemannian manifolds. Developing an intrinsic extension of Hamiltonian variational integrators to manifolds would require some additional work, since the current approach involves Type II/III generating functions , , which depend on the position at one boundary point, and the momentum at the other boundary point. However, this does not make intrinsic sense on a manifold, since one needs the base point in order to specify the corresponding cotangent space. On the other hand, Lagrangian variational integrators involve a Type I generating function which only depends on the position at the boundary points and is therefore well-defined on manifolds, and many Lagrangian variational integrators have been derived on Riemannian manifolds, especially in the Lie group [38; 39; 5; 27; 56; 43; 28; 37; 36] and homogeneous space [40] settings. This gives an incentive to construct a mechanism on the Lagrangian side which mimics the Poincaré transformation, since it is more natural and easier to work on the Lagrangian side on more general spaces than on the Hamiltonian side. However, a first difficulty is that the Poincaré transformed Hamiltonian is degenerate and therefore does not have a corresponding Type I Lagrangian formulation. As a result, we cannot exploit the usual correspondence between Hamiltonian and Lagrangian dynamics and need to come up with a different strategy. A second difficulty is that all the literature to this day on the Poincaré transformation constructs the Poincaré transformed system by reverse-engineering, which does not provide much insight into the origin of the mechanism and how it can be extended to different systems.

Outline. We first review the basics of variational integration of Lagrangian and Hamiltonian systems, and the Poincaré transformation in Section 2. We then introduce a simple but novel derivation of the Poincaré transformation from a variational principle in Section 3.1. This gives additional insight into the transformation mechanism and provides natural candidates for time-adaptivity on the Lagrangian side, which we then construct both in continuous and discrete time in Sections 3.2 and 3.3. We then compare the performance of the resulting time-adaptive Lagrangian accelerated optimization algorithms to their Poincaré Hamiltonian analogues in Section 4. Finally, we demonstrate in Section 5 that our time-adaptive Lagrangian approach extends naturally to more general spaces without having to face the obstructions experienced on the Hamiltonian side.

Contributions. In summary, the main contributions of this paper are:

-

•

A novel derivation of the Poincaré transformation from a variational principle, in Section 3.1

- •

-

•

New explicit symplectic accelerated optimization algorithms on normed vector spaces

-

•

New intrinsic symplectic accelerated optimization algorithms on Riemannian manifolds

2. Background

2.1. Lagrangian and Hamiltonian Mechanics

Given a -dimensional manifold , a Lagrangian is a function . The corresponding action integral is the functional

| (2.1) |

over the space of smooth curves . Hamilton’s variational principle states that where the variation is induced by an infinitesimal variation of the trajectory that vanishes at the endpoints. Given local coordinates on the manifold , Hamilton’s variational principle can be shown to be equivalent to the Euler–Lagrange equations,

| (2.2) |

The Legendre transform of is defined fiberwise by , and we say that a Lagrangian is regular or nondegenerate if the Hessian matrix is invertible for every and , and hyperregular if the Legendre transform is a diffeomorphism. A hyperregular Lagrangian on induces a Hamiltonian system on via

| (2.3) |

where is the conjugate momentum of . A Hamiltonian is called hyperregular if defined by , is a diffeomorphism. Hyperregularity of the Hamiltonian implies invertibility of the Hessian matrix and thus nondegeneracy of . Theorem 7.4.3 in [48] states that hyperregular Lagrangians and hyperregular Hamiltonians correspond in a bijective manner. We can also define a Hamiltonian variational principle on the Hamiltonian side in momentum phase space which is equivalent to Hamilton’s equations,

| (2.4) |

These equations can also be shown to be equivalent to the Euler–Lagrange equations (2.2), provided that the Lagrangian is hyperregular.

2.2. Variational Integrators

Variational integrators are derived by discretizing Hamilton’s principle, instead of discretizing Hamilton’s equations directly. As a result, variational integrators are symplectic, preserve many invariants and momentum maps, and have excellent long-time near-energy preservation (see [49]).

Traditionally, variational integrators have been designed based on the Type I generating function commonly known as the discrete Lagrangian, . The exact discrete Lagrangian that generates the time- flow of Hamilton’s equations can be represented both in a variational form and in a boundary-value form. The latter is given by

| (2.5) |

where and satisfies the Euler–Lagrange equations over the time interval . A variational integrator is defined by constructing an approximation to , and then applying the discrete Euler–Lagrange equations,

| (2.6) |

where denotes a partial derivative with respect to the -th argument, and these equations implicitly define the integrator map . The error analysis is greatly simplified via Theorem 2.3.1 of [49], which states that if a discrete Lagrangian, , approximates the exact discrete Lagrangian to order , i.e.,

| (2.7) |

then the discrete Hamiltonian map , viewed as a one-step method, has order of accuracy . Many other properties of the integrator, such as momentum conservation properties of the method, can be determined by analyzing the associated discrete Lagrangian, as opposed to analyzing the integrator directly.

Variational integrators have been extended to the framework of Type II and Type III generating functions, commonly referred to as discrete Hamiltonians (see [34; 46; 57]). Hamiltonian variational integrators are derived by discretizing Hamilton’s phase space principle. The boundary-value formulation of the exact Type II generating function of the time- flow of Hamilton’s equations is given by the exact discrete right Hamiltonian,

| (2.8) |

where satisfies Hamilton’s equations with boundary conditions and . A Type II Hamiltonian variational integrator is constructed by using a discrete right Hamiltonian approximating , and applying the discrete right Hamilton’s equations,

| (2.9) |

which implicitly defines the integrator, .

Theorem 2.3.1 of [49], which simplified the error analysis for Lagrangian variational integrators, has an analogue for Hamiltonian variational integrators. Theorem 2.2 in [57] states that if a discrete right Hamiltonian approximates the exact discrete right Hamiltonian to order , i.e.,

| (2.10) |

then the discrete right Hamilton’s map , viewed as a one-step method, is order accurate. Note that discrete left Hamiltonians and corresponding discrete left Hamilton’s maps can also be constructed in the Type III case (see [46; 20]).

Examples of variational integrators include Galerkin variational integrators [46; 49], Prolongation-Collocation variational integrators [45], and Taylor variational integrators [58]. In many cases, the Type I and Type II/III approaches will produce equivalent integrators. This equivalence has been established in [58] for Taylor variational integrators provided the Lagrangian is hyperregular, and in [46] for generalized Galerkin variational integrators constructed using the same choices of basis functions and numerical quadrature formula provided the Hamiltonian is hyperregular. However, Hamiltonian and Lagrangian variational integrators are not always equivalent. In particular, it was shown in [57] that even when the Hamiltonian and Lagrangian integrators are analytically equivalent, they might still have different numerical properties because of numerical conditioning issues. Even more to the point, Lagrangian variational integrators cannot always be constructed when the underlying Hamiltonian is degenerate. This is particularly relevant in variational accelerated optimization since the time-adaptive Hamiltonian framework for accelerated optimization presented in [20] relies on a degenerate Hamiltonian which has no associated Lagrangian description. We will thus not be able to exploit the usual correspondence between Hamiltonian and Lagrangian dynamics and will have to come up with a different strategy to allow time-adaptivity on the Lagrangian side.

We now describe the construction of Taylor variational integrators as introduced in [58] as we will use them in our numerical experiments. A discrete approximate Lagrangian or Hamiltonian is constructed by approximating the flow map and the trajectory associated with the boundary values using a Taylor method, and approximating the integral by a quadrature rule. The Taylor variational integrator is generated by the implicit discrete Euler–Lagrange equations associated with the discrete Lagrangian or by the Hamilton’s equations associated with the discrete Hamiltonian. More explicitly, we first construct -order and -order Taylor methods and approximating the exact time- flow map corresponding to the Euler–Lagrange equation in the Type I case or the exact time- flow map corresponding to Hamilton’s equation in the Type II case. Let and . Given a quadrature rule of order with weights and nodes for , the Taylor variational integrators are then constructed as follows:

Type I Lagrangian Taylor Variational Integrator (LTVI):

-

(i)

Approximate by the solution of the problem

-

(ii)

Generate approximations via

-

(iii)

Apply the quadrature rule to obtain the associated discrete Lagrangian,

-

(iv)

The variational integrator is then defined by the implicit discrete Euler–Lagrange equations,

Type II Hamiltonian Taylor Variational Integrator (HTVI):

-

(i)

Approximate by the solution of the problem

-

(ii)

Generate approximations via

-

(iii)

Approximate via

-

(iv)

Use the continuous Legendre transform to obtain .

-

(v)

Apply the quadrature rule to obtain the associated discrete right Hamiltonian,

-

(vi)

The variational integrator is then defined by the implicit discrete right Hamilton’s equations,

Theorem 2.1.

Suppose the Lagrangian is Lipschitz continuous in both variables, and is sufficiently regular for the Taylor method to be well-defined.

Then approximates with at least order of accuracy .

By Theorem 2.3.1 in [49], the associated discrete Hamiltonian map has the same order of accuracy.

Theorem 2.2.

Suppose the Hamiltonian and its partial derivative are Lipschitz continuous in both variables, and is sufficiently regular for the Taylor method to be well-defined.

Then approximates with at least order of accuracy .

By Theorem 2.2 in [57], the associated discrete right Hamilton’s map has the same order of accuracy.

2.3. Time-adaptive Hamiltonian integrators via the Poincaré transformation

Symplectic integrators form a class of geometric numerical integrators of interest since, when applied to conservative Hamiltonian systems, they yield discrete approximations of the flow that preserve the symplectic 2-form [26], which results in the preservation of many qualitative aspects of the underlying system. In particular, symplectic integrators exhibit excellent long-time near-energy preservation. However, when symplectic integrators were first used in combination with variable time-steps, the near-energy preservation was lost and the integrators performed poorly [7; 24]. Backward error analysis provided justification both for the excellent long-time near-energy preservation of symplectic integrators and for the poor performance experienced when using variable time-steps (see Chapter IX of [26]): it shows that symplectic integrators can be associated with a modified Hamiltonian in the form of a formal power series in terms of the time-step. The use of a variable time-step results in a different modified Hamiltonian at every iteration, which is the source of the poor energy conservation. The Poincaré transformation is one way to incorporate variable time-steps in geometric integrators without losing the nice conservation properties associated with these integrators.

Given a Hamiltonian , consider a desired transformation of time described by the monitor function via

| (2.11) |

The time shall be referred to as the physical time, while will be referred to as the fictive time, and we will denote derivatives with respect to and by dots and apostrophes, respectively. A new Hamiltonian system is constructed using the Poincaré transformation,

| (2.12) |

in the extended phase space defined by and where is the conjugate momentum for with . The corresponding equations of motion in the extended phase space are then given by

| (2.13) |

Suppose are solutions to these extended equations of motion, and let solve Hamilton’s equations for the original Hamiltonian . Then

| (2.14) |

Therefore, the components in the original phase space of the augmented solutions satisfy

| (2.15) |

Then, and both satisfy Hamilton’s equations for the original Hamiltonian with the same initial values, so they must be the same. Note that the Hessian is given by

| (2.16) |

which will be singular in many cases. The degeneracy of the Hamiltonian implies that there is no corresponding Type I Lagrangian formulation. This approach works seamlessly with the existing methods and theorems for Hamiltonian variational integrators, but where the system under consideration is the transformed Hamiltonian system resulting from the Poincaré transformation. We can use a symplectic integrator with constant time-step in fictive time on the Poincaré transformed system, which will have the effect of integrating the original system with the desired variable time-step in physical time via the relation .

3. Time-adaptive Lagrangian Integrators

The Poincaré transformation for time-adaptive symplectic integrators on the Hamiltonian side presented in Section 2.3 with autonomous monitor function was first introduced in [69], and extended to the case where can also depend on time based on ideas from [25]. All the literature to date on the Poincaré transformation have constructed the Poincaré transformed system by reverse-engineering: the Poincaré transformed Hamiltonian is chosen in such a way that the corresponding component dynamics satisfy Hamilton’s equations in the original space.

3.1. Variational Derivation of the Poincaré Hamiltonian

We now depart from the traditional reverse-engineering strategy for the Poincaré transformation and present a new way to think about the Poincaré transformed Hamiltonian by deriving it from a variational principle. This simple derivation gives additional insight into the transformation mechanism and provides natural candidates for time-adaptivity on the Lagrangian side and for more general frameworks.

As before, we work in the extended space where and is the corresponding conjugate momentum, and consider a time transformation given by

| (3.1) |

We define an extended action functional by

| (3.2) | ||||

| (3.3) | ||||

| (3.4) |

where we have performed a change of variables in the integral. Then,

| (3.5) |

Computing the variation of yields

and using integration by parts and the boundary conditions and gives

Thus, the condition that is stationary with respect to the boundary conditions and is equivalent to satisfying Hamilton’s canonical equations corresponding to the Poincaré transformed Hamiltonian,

| (3.6) | |||

| (3.7) | |||

| (3.8) | |||

| (3.9) |

An alternative way to reach the same conclusion is by interpreting equation (3.5) as the usual Type II action functional for the modified Hamiltonian,

| (3.10) |

which coincides with the Poincaré transformed Hamiltonian.

3.2. Time-adaptivity from a Variational Principle on the Lagrangian side

We will now derive a mechanism for time-adaptivity on the Lagrangian side by mimicking the derivation of the Poincaré Hamiltonian. We will work in the extended space where and is a Lagrange multiplier used to enforce the time rescaling Consider the action functional given by

| (3.11) | ||||

| (3.12) | ||||

| (3.13) |

where, as before, we have performed a change of variables in the integral. This is the usual Type I action functional for the extended autonomous Lagrangian,

| (3.14) |

Theorem 3.1.

If satisfies the Euler–Lagrange equations corresponding to the extended Lagrangian , then its components satisfy and the original Euler–Lagrange equations

| (3.15) |

Proof.

Substituting the expression for into the Euler–Lagrange equations, and , gives

and

Now, so , and the chain rule gives

Using the equation and replacing by recovers the original Euler–Lagrange equations. ∎

We now introduce a discrete variational formulation of these continuous Lagrangian mechanics. Suppose we are given a partition of the interval , and a discrete curve in denoted by such that , , and . Consider the discrete action functional,

| (3.16) |

where is obtained by approximating the exact discrete Lagrangian, which is related to Jacobi’s solution of the Hamilton–Jacobi equation and is the generating function for the exact time- flow map. It is given by the extremum of the action integral from to over twice continuously differentiable curves satisfying the boundary conditions and :

| (3.17) |

In practice, we can obtain an approximation by replacing the integral with a quadrature rule, and extremizing over a finite-dimensional function space instead of . This discrete functional is a discrete analogue of the action functional given by

| (3.18) | ||||

| (3.19) |

We can derive the following result which relates a discrete Type I variational principle to a set of discrete Euler–Lagrange equations:

Theorem 3.2.

The Type I discrete Hamilton’s variational principle,

| (3.20) |

is equivalent to the discrete extended Euler–Lagrange equations,

| (3.21) |

| (3.22) |

| (3.23) | ||||

where denotes .

Proof.

See Appendix A.1. ∎

Defining the discrete momenta via the discrete Legendre transformations,

| (3.24) |

and using a constant time-step in , the discrete Euler–Lagrange equations can be rewritten as

| (3.25) | ||||

| (3.26) | ||||

| (3.27) | ||||

| (3.28) | ||||

| (3.29) |

3.3. A Second Time-Adaptive Framework obtained by Reverse-Engineering

As mentioned earlier, all the literature to date on the Poincaré transformation have constructed the Poincaré transformed system by reverse-engineering. The Poincaré transformed Hamiltonian is chosen in such a way that the corresponding component dynamics satisfy the Hamilton’s equations in the original space. We will follow a similar strategy to derive a second framework for time-adaptivity from the Lagrangian perspective.

Given a time-dependent Lagrangian consider a transformation of time ,

| (3.30) |

described by the monitor function . The time shall be referred to as the physical time, while will be referred to as the fictive time, and we will denote derivatives with respect to and by dots and apostrophes, respectively. We define the autonomous Lagrangian,

| (3.31) |

in the extended space with where , and where is a multiplier used to impose the constraint that the time evolution is guided by the monitor function . Note that in contrast to the earlier framework, the Lagrange multiplier term lacks an extra multiplicative factor of .

Theorem 3.3.

If satisfies the Euler–Lagrange equations corresponding to the extended Lagrangian , then its components satisfy and the original Euler–Lagrange equations

| (3.32) |

Proof.

Substituting the expression for in the Euler–Lagrange equations and gives

and

We can divide by and use the chain rule to get and

Using the equations and , and replacing by recovers the desired equations. ∎

We now introduce a discrete variational formulation of these continuous Lagrangian mechanics. Suppose we are given a partition of the interval , and a discrete curve in denoted by such that , , and . Consider the discrete action functional,

| (3.33) |

where,

| (3.34) |

This discrete functional is a discrete analogue of the action functional given by

| (3.35) | ||||

| (3.36) |

We can derive the following result which relates a discrete Type I variational principle to a set of discrete Euler–Lagrange equations:

Theorem 3.4.

The Type I discrete Hamilton’s variational principle,

| (3.37) |

is equivalent to the discrete extended Euler–Lagrange equations,

| (3.38) |

| (3.39) |

| (3.40) |

where denotes .

Proof.

See Appendix A.2. ∎

Defining the discrete momenta via the discrete Legendre transformations,

| (3.41) |

and using a constant time-step in , the discrete Euler–Lagrange equations can be rewritten as

| (3.42) | ||||

| (3.43) | ||||

| (3.44) | ||||

| (3.45) | ||||

| (3.46) |

3.4. Remarks on the Framework for Time-Adaptivity

Time-adaptivity comes more naturally on the Hamiltonian side through the Poincaré transformation. Indeed, in the Hamiltonian case, the time-rescaling equation emerged naturally through the change of time variable inside the extended action functional. By contrast, in the Lagrangian case, we need to impose the time-rescaling equation as a constraint via a multiplier, which we then consider as an extra position coordinate. This strategy can be thought of as being part of the more general framework for constrained variational integrators (see [49; 16]).

The Poincaré transformation on the Hamiltonian side was presented in [69; 25; 20] for the general case where the monitor function can depend on position, time and momentum, . For the accelerated optimization application which was our main motivation to develop a time-adaptive framework for geometric integrators, the monitor function only depends on time, . For the sake of simplicity and clarity, we have decided to only present the theory for time-adaptive Lagrangian integrators for monitor functions of the form in this paper. Note however that this time-adaptivity framework on the Lagrangian side can be extended to the case where the monitor function also depends on position, . The action integral remains the same with the exception that is now a function of . Unlike the case where , the corresponding Euler–Lagrange equation yields an extra term in the original phase-space,

| (3.47) |

The discrete Euler–Lagrange equations become more complicated and involve terms with partial derivatives of with respect to . Furthermore, when , the discrete Euler–Lagrange equations involve but the time-evolution of the Lagrange multiplier is not well-defined, so the discrete Hamiltonian map corresponding to the discrete Lagrangian is not well-defined, as explained in [49, page 440]. Although there are ways to circumvent this problem in practice, this adds some difficulty and makes the time-adaptive Lagrangian approach with less natural and desirable than the corresponding Poincaré transformation on the Hamiltonian side. It might also be tempting to generalize further and consider the case where . However, in this case, the time-rescaling equation becomes implicit and it becomes less clear how to generalize the variational derivation presented in this paper. There are examples where time-adaptivity with these more general monitor functions proved advantageous (see for instance Kepler’s problem in [20]). This motivates further effort towards developing a better framework for time-adaptivity on the Lagrangian side with more general monitor functions.

It might be more natural to consider these time-rescaled Lagrangian and Hamiltonian dynamics as Dirac mechanics [44; 66; 67] on the Pontryagin bundle . Dirac dynamics are described by the Hamilton-Pontryagin variational principle where the momentum acts as a Lagrange multiplier to impose the kinematic equation ,

| (3.48) |

This provides a variational description of both Lagrangian and Hamiltonian mechanics, yields the implicit Euler–Lagrange equations

| (3.49) |

and suggests the introduction of a more general quantity, the generalized energy

| (3.50) |

as an alternative to the Hamiltonian.

4. Application to Accelerated Optimization on Vector Spaces

4.1. A Variational Framework for Accelerated Optimization

A variational framework was introduced in [65] for accelerated optimization on normed vector spaces. The -Bregman Lagrangians and Hamiltonians are defined to be

| (4.1) |

| (4.2) |

which are scalar-valued functions of position , velocity or momentum , and time . In [65], it was shown that solutions to the -Bregman Euler–Lagrange equations converge to a minimizer of the convex objective function at a convergence rate of . Furthermore, this family of Bregman dynamics is closed under time dilation: time-rescaling a solution to the -Bregman Euler–Lagrange equations via yields a solution to the -Bregman Euler–Lagrange equations. Thus, the entire subfamily of Bregman trajectories indexed by the parameter can be obtained by speeding up or slowing down along the Bregman curve corresponding to any value of . In [20], the time-rescaling property of the Bregman dynamics was exploited together with a carefully chosen Poincaré transformation to transform the -Bregman Hamiltonian into an autonomous version of the -Bregman Hamiltonian in extended phase-space, where . This strategy allowed us to achieve the faster rate of convergence associated with the higher-order -Bregman dynamics, but with the computational efficiency of integrating the lower-order -Bregman dynamics. Explicitly, using the time rescaling within the Poincaré transformation framework yields the adaptive approach -Bregman Hamiltonian,

| (4.3) |

and when , the direct approach -Bregman Hamiltonian,

| (4.4) |

In [20], a careful computational study was performed on how time-adaptivity and symplecticity of the numerical scheme improve the performance of the resulting optimization algorithm. In particular, it was observed that time-adaptive Hamiltonian variational discretizations, which are automatically symplectic, with adaptive time-steps informed by the time-rescaling of the family of -Bregman Hamiltonians yielded the most robust and computationally efficient optimization algorithms, outperforming fixed-timestep symplectic discretizations, adaptive-timestep non-symplectic discretizations, and Nesterov’s accelerated gradient algorithm which is neither time-adaptive nor symplectic.

4.2. Numerical Methods

4.2.1. A Lagrangian Taylor Variational Integrator (LTVI)

We will now construct a time-adaptive Lagrangian Taylor variational integrator (LTVI) for the -Bregman Lagrangian,

| (4.5) |

using the strategy outlined in Section 2.2 together with the discrete Euler–Lagrange equations derived in Sections 3.2 and 3.3.

Looking at the form of the continuous -Bregman Euler–Lagrange equations,

| (4.6) |

we can note that appears in the expression for . Now, the construction of a LTVI as presented in Section 2.2 requires -order and -order Taylor approximations of . This means that if we take , then and higher-order derivatives of will appear in the resulting discrete Lagrangian , and as a consequence, the discrete Euler–Lagrange equations,

| (4.7) |

will yield an integrator which is not gradient-based. Keeping in mind the machine learning applications where data sets are very large, we will restrict ourselves to explicit first-order optimization algorithms, and therefore the highest value of that we can choose to obtain a gradient-based algorithm is .

With , the choice of quadrature rule does not matter, so we can take the rectangular quadrature rule about the initial point ( and ). We first approximate by the solution of the problem , that is . Then, applying the quadrature rule gives the associated discrete Lagrangian,

| (4.8) |

The variational integrator is then defined by the discrete extended Euler–Lagrange equations derived in Sections 3.2 and 3.3. In practice, we are not interested in the evolution of the conjugate momentum , and since it will not appear in the updates for the other variables, the discrete equations of motion from Sections 3.2 and 3.3 both reduce to the same updates,

| (4.9) | ||||

| (4.10) | ||||

| (4.11) |

Now, for the adaptive approach, substituting and

| (4.12) |

yields the adaptive LTVI algorithm,

| (4.13) | ||||

| (4.14) | ||||

| (4.15) |

In the direct approach, so and we obtain the direct LTVI algorithm,

| (4.16) | ||||

| (4.17) | ||||

| (4.18) |

4.2.2. A Hamiltonian Taylor Variational Integrator (HTVI)

In [20], a Type II Hamiltonian Taylor Variational Integrator (HTVI) was derived following the strategy from Section 2.2 with for the adaptive approach -Bregman Hamiltonian,

| (4.19) |

This adaptive HTVI is the most natural Hamiltonian analogue of the LTVI described in Section 4.2.1, and its updates are given by

| (4.20) | ||||

| (4.21) | ||||

| (4.22) |

When , it reduces to the direct HTVI,

| (4.23) | ||||

| (4.24) | ||||

| (4.25) |

4.3. Numerical Experiments

Numerical experiments using the numerical methods presented in the previous section have been conducted to minimize the quartic function,

| (4.26) |

where and . This convex function achieves its minimum value at .

As was observed in [20] for the HTVI algorithm, the numerical experiments showed that a carefully tuned adaptive approach algorithm enjoys a significantly better rate of convergence and requires a much smaller number of steps to achieve convergence than the direct approach, as can be seen in Figure 1 for the LTVI methods. Although the adaptive approach requires a smaller fictive time-step than the direct approach, the physical time-steps resulting from in the adaptive approach grow rapidly to values larger than the constant physical time-step of the direct approach. The results of Figure 1 also show that the adaptive and direct LTVI methods become more and more efficient as is increased, which was also the case for the HTVI algorithm in [20].

The LTVI and HTVI algorithms presented in Section 4.2 perform empirically almost exactly in the same way for the same parameters and time-step, as can be seen for instance in Figure 2. As a result, the computational analysis carried in [20] for the HTVI algorithm extends to the LTVI algorithm. In particular, it was shown in [20] that the HTVI algorithm is much more efficient than non-adaptive non-symplectic and adaptive non-symplectic integrators for the Bregman dynamics, and that it can be a competitive first-order explicit algorithm which can outperform certain popular optimization algorithms such as Nesterov’s Accelerated Gradient [54], Trust Region Steepest Descent, ADAM [33], AdaGrad [15], and RMSprop [61], for certain objective functions. Since the computational performance of the LTVI algorithm is almost exactly the same as that of the HTVI algorithm, this means that the LTVI algorithm is also much more efficient than non-symplectic integrators for the Bregman dynamics and can also be very competitive as a first-order explicit optimization algorithm.

5. Accelerated Optimization on More General Spaces

5.1. Motivation and Prior Work

The variational framework for accelerated optimization on normed vector spaces from [65; 20] was extended to the Riemannian manifold setting in [19] via a Riemannian -Bregman Lagrangian and a corresponding Riemannian -Bregman Hamiltonian , for , of the form

| (5.1) |

| (5.2) |

where and are constants having to do with the curvature of the manifold and the convexity of the objective function . These yield the associated -Bregman Euler–Lagrange equations,

| (5.3) |

Here, denotes the Riemannian gradient of , is the covariant derivative of along , and is the fiber metric on induced by the Riemannian metric on whose local coordinates representation is the inverse of the local representation of . See [1; 6; 48; 35; 32; 19] for a more detailed description of these notions from Riemannian geometry and of this Riemannian variational framework for accelerated optimization. Note that some work was done on accelerated optimization via numerical integration of Bregman dynamics in the Lie group setting [42; 60] before the theory for more general Bregman families on Riemannian manifolds was established in [19].

It was shown in [19] that solutions to the -Bregman Euler–Lagrange equations converge to a minimizer of at a convergence rate of , under suitable assumptions, and proven that time-rescaling a solution to the -Bregman Euler–Lagrange equations via yields a solution to the -Bregman Euler–Lagrange equations. As a result, the adaptive approach involving the Poincaré transformation was extended to the Riemannian manifold setting via the adaptive approach Riemannian Bregman Hamiltonian,

| (5.4) |

This adaptive framework was exploited using discrete variational integrators incorporating holonomic constraints [16] and projection-based variational integrators [18]. Both these strategies relied on embedding the Riemannian manifolds into an ambient Euclidean space. Although the Whitney and Nash Embedding Theorems [64; 63; 53] imply that there is no loss of generality when studying Riemannian manifolds only as submanifolds of Euclidean spaces, designing intrinsic methods that would exploit and preserve the symmetries and geometric properties of the Riemannian manifold and of the problem at hand could have advantages, both in terms of computation and in terms of improving our understanding of the acceleration phenomenon on Riemannian manifolds.

Developing an intrinsic extension of Hamiltonian variational integrators to manifolds would require some additional work, since the current approach involves Type II/III generating functions , , which depend on the position at one boundary point, and on the momentum at the other boundary point. However, this does not make intrinsic sense on a manifold, since one needs the base point in order to specify the corresponding cotangent space, and one should ideally consider a Hamiltonian variational integrator construction based on the discrete generalized energy in the context of discrete Dirac mechanics [44; 66; 67].

On the other hand, Lagrangian variational integrators involve a Type I generating function which only depends on the position at the boundary points and is therefore well-defined on manifolds, and many Lagrangian variational integrators have been derived on Riemannian manifolds, especially in the Lie group [38; 39; 5; 27; 56; 43; 28; 37; 36] and homogeneous space [40] settings. The time-adaptive framework developed in this paper now makes it possible to design time-adaptive Lagrangian integrators for accelerated optimization on these more general spaces, where it is more natural and easier to work on the Lagrangian side than on the Hamiltonian side.

5.2. Accelerated Optimization on Lie Groups

Although it is possible to work on Riemannian manifolds, we will restrict ourselves to Lie groups for simplicity of exposition since there is more literature available on Lie group integrators than Riemannian integrators. Note as well that prior work is available on accelerated optimization via numerical integration of Bregman dynamics in the Lie group setting [42; 60].

Here, we will work in the setting introduced in [42]. The setting of [60] can be thought of as a special case of the more general Lie group framework for accelerated optimization presented here. Consider a -dimensional Lie group with associated Lie algebra , and a left-trivialization of the tangent bundle of the group , via , where is the left action defined by for all . Suppose that is equipped with an inner product which induces an inner product on via left-trivialization,

With this inner product, we identify and via the Riesz representation. Let be chosen such that is positive-definite and symmetric as a bilinear form of . Then, the metric with serves as a left-invariant Riemannian metric on . The adjoint and ad operators are denoted by and , respectively. We refer the reader to [48; 41; 23] for a more detailed description of Lie group theory and mechanics on Lie groups.

As mentioned earlier, there is a lot of literature available on Lie group integrators. We refer the reader to [30; 11; 12; 13] for very thorough surveys of the literature on Lie group methods, which acknowledge all the foundational contributions leading to the current state of Lie group integrator theory. In particular, the Crouch and Grossman approach [14], the Lewis and Simo approach [47], Runge–Kutta–Munthe–Kaas methods [51; 52; 50; 9], Magnus and Fer expansions [68; 4; 29], and commutator-free Lie group methods [10] are outlined in these surveys. Variational integrators have also been derived on the Lagrangian side in the Lie group setting [38; 39; 5; 27; 56; 43; 28; 37; 36].

We now introduce a discrete variational formulation of time-adaptive Lagrangian mechanics on Lie groups. Suppose we are given a partition of the interval , and a discrete curve in denoted by such that , , and . The discrete kinematics equation is chosen to be

| (5.5) |

where represents the relative update over a single step.

Consider the discrete action functional,

| (5.6) |

where,

| (5.7) |

We can derive the following result which relates a discrete Type I variational principle to a set of discrete Euler–Lagrange equations:

Theorem 5.1.

The Type I discrete Hamilton’s variational principle,

| (5.8) |

where,

| (5.9) |

is equivalent to the discrete extended Euler–Lagrange equations,

| (5.10) |

| (5.11) |

| (5.12) | ||||

where denotes .

Proof.

See Appendix A.3. ∎

Now, define

| (5.13) |

and

| (5.14) |

Then,

| (5.15) |

With these definitions, if we use a constant time-step in fictive time and substitute , the discrete Euler–Lagrange equations can be rewritten as

| (5.16) | ||||

| (5.17) | ||||

| (5.18) | ||||

| (5.19) |

In the Lie group setting, the Riemannian -Bregman Lagrangian becomes

| (5.20) |

with corresponding Euler–Lagrange equation,

| (5.21) |

where is the left-trivialized derivative of , given by . We then consider the discrete Lagrangian,

| (5.22) |

where , which approximates

| (5.23) |

5.3. Numerical Experiment on

We work on the 3-dimensional Special Orthogonal group,

| (5.24) |

Its Lie algebra is

| (5.25) |

with the matrix commutator as the Lie bracket. We have an identification between and given by the hat map , defined such that for any . The inverse of the hat map is the vee map . The inner product on is given by

| (5.26) |

and the metric is chosen so that

| (5.27) |

where is a symmetric positive-definite matrix and .

On , for any and ,

| (5.28) |

Identifying , we have for any and that

| (5.29) |

On , the Riemannian -Bregman Lagrangian becomes

| (5.30) |

and the corresponding Euler–Lagrange equations are given by

| (5.31) |

The discrete kinematics equation is written as

| (5.32) |

where , and so we get the discrete Lagrangian,

| (5.33) |

As in [38; 42], the angular velocity is approximated by so we can take

| (5.34) |

Differentiating this equation and using the identity yields

| (5.35) |

Then, the discrete Euler–Lagrange equations for and become

| (5.36) | ||||

| (5.37) |

Now, equation (5.36) can be solved explicitly when as described in [42]:

| (5.38) |

Therefore, we get the Adaptive LLGVI (Adaptive Lagrangian Lie Group Variational Integrator)

| (5.39) | |||

| (5.40) | |||

| (5.41) | |||

| (5.42) |

We will use this integrator to solve the problem of minimizing the objective function,

| (5.43) |

over , where denotes the Frobenius norm. Its left-trivialized gradient is given by

| (5.44) |

Minimizing this objective function appears in the least-squares estimation of attitude, commonly known as Wahba’s problem [62]. The optimal attitude is explicitly given by

| (5.45) |

where is the singular value decomposition of with and diagonal.

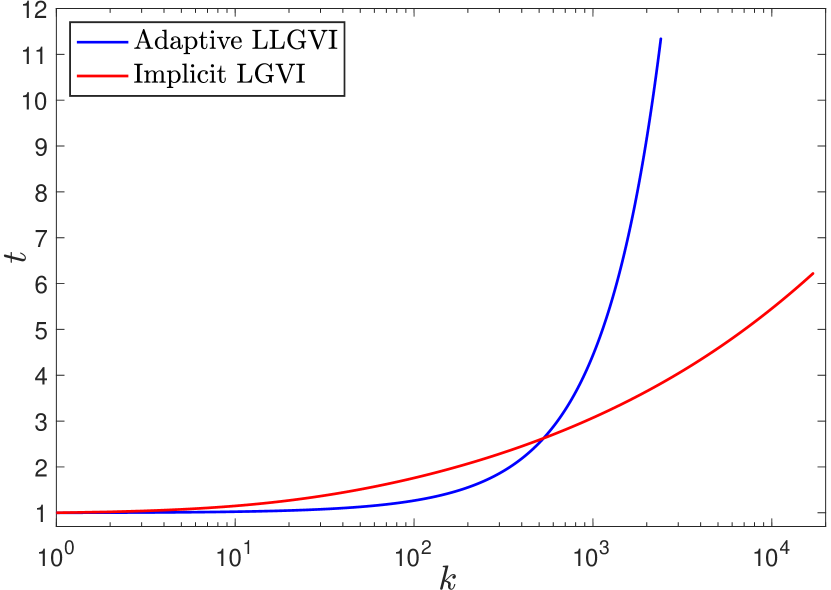

We have tested the Adaptive LLGVI integrator on Wahba’s problem against the Implicit Lie Group Variational Integrator (Implicit LGVI) from [42]. The Implicit LGVI is a Lagrangian Lie group variational integrator which adaptively adjusts the step size at every step. It should be noted that these two adaptive approaches use adaptivity in two fundamentally different ways: our Adaptive LLGVI method uses a priori adaptivity based on known global properties of the family of differential equations considered (i.e. the time-rescaling symmetry of the family of Bregman dynamics), while the implicit method from [42] adapts the time-steps in an a posteriori way, by solving a system of nonlinear equations coming from an extended variational principle. The results of our numerical experiments are presented in Figures 3 and 4. In these numerical experiments, we have used the termination criteria

| (5.46) |

We can see from Figure 3 that both algorithms preserve the orthogonality condition very well. Now, we can observe from Figure 3 that although both algorithms follow the same curve in time , they do not travel along this curve at the same speed. Despite the fact that the Adaptive LLGVI algorithm initially takes smaller time-steps, those time-steps eventually become much larger than for the Implicit LGVI algorithm, and as a result, the Adaptive LLGVI algorithm achieves the termination criteria in a smaller number of iterations, which can also be seen more explicitly in the table from Figure 4. Unlike the Implicit LGVI algorithm, the Adaptive LLGVI algorithm is explicit, so each iteration is much cheaper and is therefore significantly faster, as can be seen from the running times displayed in Figure 4. Furthermore, the Adaptive LLGVI algorithm is significantly easier to implement.

6. Conclusion

A variational framework for accelerated optimization on vector spaces was introduced [65] by considering a family of time-dependent Bregman Lagrangian and Hamiltonian systems which is closed under time-rescaling. This variational framework was exploited in [20] by using time-adaptive geometric Hamiltonian integrators to design efficient, explicit algorithms for symplectic accelerated optimization. It was observed that a careful use of adaptivity and symplecticity, which was possible on the Hamiltonian side thanks to the Poincaré transformation, could result in a significant gain in computational efficiency, by simulating higher-order Bregman dynamics using the computationally efficient lower-order Bregman integrators applied to the time-rescaled dynamics.

The variational framework and time-adaptive approach on the Hamiltonian side were later extended to the Riemannian manifolds setting in [19]. However, the current formulations of Hamiltonian variational integrators do not make sense intrinsically on manifolds, so this framework was only exploited using methods which take advantage of the structure of the Euclidean spaces in which the Riemannian manifolds are embedded [16; 18] instead of the structure of the Riemannian manifolds themselves. On the other hand, existing formulations of Lagrangian variational integrators are well-defined on manifolds, and many Lagrangian variational integrators have been derived on Riemannian manifolds, especially in the Lie group setting. This motivated exploring whether it is possible to construct a mechanism on the Lagrangian side which mimics the Poincaré transformation, since it is more natural and easier to work on the Lagrangian side on curved manifolds.

The usual correspondence between Hamiltonian and Lagrangian dynamics could not be exploited here since the Poincaré Hamiltonian is degenerate and therefore does not have a corresponding Lagrangian formulation. Instead, we introduced a novel derivation of the Poincaré transformation from a variational principle which gave us additional insight into the transformation mechanism and provided natural candidates for a time-adaptive framework on the Lagrangian side. Based on these observations, we constructed a theory of time-adaptive Lagrangian mechanics both in continuous and discrete time, and applied the resulting time-adaptive Lagrangian variational integrators to solve optimization problems by simulating Bregman dynamics, within the variational framework introduced in [65]. We observed empirically that our time-adaptive Lagrangian variational integrators performed almost exactly in the same way as the time-adaptive Hamiltonian variational integrators coming from the Poincaré framework of [20], whenever they are used with the same parameters and time-step. As a result, the computational analysis carried in [20] for the HTVI algorithm extends to the LTVI algorithm, and thus the LTVI algorithm is much more efficient than non-symplectic integrators for the Bregman dynamics and can be a competitive first-order explicit algorithm since it can outperform commonly used optimization algorithms for certain objective functions.

Finally, we showed that our time-adaptive Lagrangian approach extends naturally to more general spaces such as Riemannian manifolds and Lie groups without having to face the difficulties experienced on the Hamiltonian side, and we then applied time-adaptive Lie group Lagrangian variational integrators to solve an optimization problem on the three-dimensional Special Orthogonal group . In particular, the resulting Lie group accelerated optimization algorithms were significantly faster and easier to implement than other recently proposed time-adaptive Lie group variational integrators for accelerated optimization.

In future work, we will explore the issue of time-adaptive Lagrangian mechanics for more general monitor functions, using the primal-dual framework of Dirac mechanics. We will also study the convergence properties of the discrete-time algorithms, and try to better understand how to reconcile the Nesterov barrier theorem with the convergence properties of the continuous Bregman flows. It would also be useful to study the extent to which the practical considerations recently presented in [17], which significantly improved the computational performance of the symplectic optimization algorithms in the normed vector space setting, extend to the Riemannian manifold and Lie group settings with the Lagrangian Riemannian and Lie group variational integrators.

Acknowledgments

The authors would like to thank Taeyoung Lee and Molei Tao for sharing the code for the implicit Lie group variational integrator from [42], which was used in the numerical comparison with the method introduced in this paper. The authors would also like to thank the referees for their helpful comments and suggestions.

Funding

The authors were supported in part by NSF under grants DMS-1345013, DMS-1813635, CCF-2112665, by AFOSR under grant FA9550-18-1-0288, and by the DoD under grant HQ00342010023 (Newton Award for Transformative Ideas during the COVID-19 Pandemic).

Appendix A Proofs of Theorems

A.1. Proof of Theorem 3.2

Theorem A.1.

The Type I discrete Hamilton’s variational principle,

where,

is equivalent to the discrete extended Euler–Lagrange equations,

where denotes .

Proof.

We use the notation , and we will use the fact that throughout the proof. We have

Thus,

As a consequence, if

then . Conversely, if , then a discrete fundamental theorem of the calculus of variations yields the above equations. ∎

A.2. Proof of Theorem 3.4

Theorem A.2.

The Type I discrete Hamilton’s variational principle,

where,

is equivalent to the discrete extended Euler–Lagrange equations,

where denotes .

Proof.

We use the notation , and we will use the fact that throughout the proof. We have

Thus,

As a consequence, if

then . Conversely, if , then a discrete fundamental theorem of the calculus of variations yields the above equations. ∎

A.3. Proof of Theorem 5.1

Theorem A.3.

The Type I discrete Hamilton’s variational principle,

where,

is equivalent to the discrete extended Euler–Lagrange equations,

where denotes .

Proof.

We use the notation and we will use the fact that throughout the proof. We have

We can write as for some . Then, taking the variation of the discrete kinematics equation gives the equation and . Therefore,

so

Then,

As a consequence, if

then . Conversely, if , then a discrete fundamental theorem of the calculus of variations yields the above equations. ∎

The authors have no competing interests to declare that are relevant to the content of this article.

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

- Absil et al. [2008] P. A. Absil, R. Mahony, and R. Sepulchre. Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton, NJ, 2008. ISBN 978-0-691-13298-3.

- Alimisis et al. [2020] F. Alimisis, A. Orvieto, G. Bécigneul, and A. Lucchi. A continuous-time perspective for modeling acceleration in Riemannian optimization. In Proceedings of the 23rd International AISTATS Conference, volume 108 of PMLR, pages 1297–1307, 2020.

- Betancourt et al. [2018] M. Betancourt, M. I. Jordan, and A. Wilson. On symplectic optimization. 2018.

- Blanes et al. [2008] S. Blanes, F. Casas, J. A. Oteo, and J. Ros. The Magnus expansion and some of its applications. Physics Reports, 470:151–238, 11 2008. doi: 10.1016/j.physrep.2008.11.001.

- Bou-Rabee and Marsden [2009] N. Bou-Rabee and J. E. Marsden. Hamilton–Pontryagin integrators on Lie groups part I: Introduction and structure-preserving properties. Foundations of Computational Mathematics, 9(2):197–219, 2009.

- Boumal [2020] N. Boumal. An introduction to optimization on smooth manifolds, 2020. URL http://www.nicolasboumal.net/book.

- Calvo and Sanz-Serna [1993] J. P. Calvo and J. M. Sanz-Serna. The development of variable-step symplectic integrators, with application to the two-body problem. SIAM J. Sci. Comp., 14(4):936–952, 1993.

- Campos et al. [2021] C. M. Campos, A. Mahillo, and D. Martín de Diego. A discrete variational derivation of accelerated methods in optimization. 2021. URL https://arxiv.org/abs/2106.02700.

- Casas and Owren [2003] F. Casas and B. Owren. Cost efficient Lie group integrators in the RKMK class. BIT, 43:723–742, 11 2003. doi: 10.1023/B:BITN.0000009959.29287.d4.

- Celledoni et al. [2003] E. Celledoni, A. Marthinsen, and B. Owren. Commutator-free Lie group methods. Future Gener. Comput. Syst., 19:341–352, 2003.

- Celledoni et al. [2014] E. Celledoni, H. Marthinsen, and B. Owren. An introduction to Lie group integrators – basics, new developments and applications. Journal of Computational Physics, 257:1040–1061, 2014. ISSN 0021-9991. doi: 10.1016/j.jcp.2012.12.031.

- Celledoni et al. [2022] E. Celledoni, E. Çokaj, A. Leone, D. Murari, and B. Owren. Lie group integrators for mechanical systems. International Journal of Computer Mathematics, 99(1):58–88, 2022. doi: 10.1080/00207160.2021.1966772.

- Christiansen et al. [2011] S. H. Christiansen, H. Z. Munthe-Kaas, and B. Owren. Topics in structure-preserving discretization. Acta Numerica, 20:1–119, 2011. doi: 10.1017/S096249291100002X.

- Crouch and Grossman [1993] P. E. Crouch and R. L. Grossman. Numerical integration of ordinary differential equations on manifolds. Journal of Nonlinear Science, 3:1–33, 1993.

- Duchi et al. [2011] J. Duchi, E. Hazan, and Y. Singer. Adaptive subgradient methods for online learning and stochastic optimization. Journal of Machine Learning Research, 12(61):2121–2159, 2011.

- Duruisseaux and Leok [2022a] V. Duruisseaux and M. Leok. Accelerated optimization on Riemannian manifolds via discrete constrained variational integrators. Journal of Nonlinear Science, 32(42), 2022a. URL https://doi.org/10.1007/s00332-022-09795-9.

- Duruisseaux and Leok [2022b] V. Duruisseaux and M. Leok. Practical perspectives on symplectic accelerated optimization. 2022b. URL https://arxiv.org/abs/2207.1146.

- Duruisseaux and Leok [2022c] V. Duruisseaux and M. Leok. Accelerated optimization on Riemannian manifolds via projected variational integrators. 2022c. URL https://arxiv.org/abs/2201.02904.

- Duruisseaux and Leok [2022d] V. Duruisseaux and M. Leok. A variational formulation of accelerated optimization on Riemannian manifolds. SIAM Journal on Mathematics of Data Science, 4(2):649–674, 2022d. URL https://doi.org/10.1137/21M1395648.

- Duruisseaux et al. [2021] V. Duruisseaux, J. Schmitt, and M. Leok. Adaptive Hamiltonian variational integrators and applications to symplectic accelerated optimization. SIAM Journal on Scientific Computing, 43(4):A2949–A2980, 2021. URL https://doi.org/10.1137/20M1383835.

- [21] G. França, M. I. Jordan, and R. Vidal. On dissipative symplectic integration with applications to gradient-based optimization. Journal of Statistical Mechanics: Theory and Experiment, 2021(4):043402. doi: 10.1088/1742-5468/abf5d4.

- França et al. [2021] G. França, A. Barp, M. Girolami, and M. I. Jordan. Optimization on manifolds: A symplectic approach, 07 2021.

- Gallier and Quaintance [2020] J. Gallier and J. Quaintance. Differential Geometry and Lie Groups: A Computational Perspective. Geometry and Computing. Springer International Publishing, 2020. ISBN 9783030460402.

- Gladman et al. [1991] B. Gladman, M. Duncan, and J. Candy. Symplectic integrators for long-time integrations in celestial mechanics. Celestial Mech. Dynamical Astronomy, 52:221–240, 1991.

- Hairer [1997] E. Hairer. Variable time step integration with symplectic methods. Applied Numerical Mathematics, 25(2-3):219–227, 1997.

- Hairer et al. [2006] E. Hairer, C. Lubich, and G. Wanner. Geometric Numerical Integration, volume 31 of Springer Series in Computational Mathematics. Springer-Verlag, Berlin, second edition, 2006.

- Hall and Leok [2015] J. Hall and M. Leok. Spectral Variational Integrators. Numer. Math., 130(4):681–740, 2015.

- Hussein et al. [2006] I. I. Hussein, M. Leok, A. K. Sanyal, and A. M. Bloch. A discrete variational integrator for optimal control problems on SO(3). Proceedings of the 45th IEEE Conference on Decision and Control, pages 6636–6641, 2006.

- Iserles and Nørsett [1999] A. Iserles and S. P. Nørsett. On the solution of linear differential equations in Lie groups. Philosophical Transactions: Mathematical, Physical and Engineering Sciences, 357(1754):983–1019, 1999. ISSN 1364503X.

- Iserles et al. [2000] A. Iserles, H. Z. Munthe-Kaas, S. P. Nørsett, and A. Zanna. Lie group methods. In Acta Numerica, volume 9, pages 215–365. Cambridge University Press, 2000.

- [31] M. I. Jordan. Dynamical, symplectic and stochastic perspectives on gradient-based optimization. In Proceedings of the International Congress of Mathematicians (ICM 2018), pages 523–549. doi: 10.1142/9789813272880˙0022.

- Jost [2017] J. Jost. Riemannian Geometry and Geometric Analysis. Universitext. Springer, Cham, 7th edition, 2017.

- Kingma and Ba [2014] D. Kingma and J. Ba. Adam: A method for stochastic optimization. International Conference on Learning Representations, 12 2014.

- Lall and West [2006] S. Lall and M. West. Discrete variational Hamiltonian mechanics. J. Phys. A, 39(19):5509–5519, 2006.

- Lee [2018] J. M. Lee. Introduction to Riemannian Manifolds, volume 176 of Graduate Texts in Mathematics. Springer, Cham, second edition, 2018.

- Lee [2008] T. Lee. Computational geometric mechanics and control of rigid bodies. Ph.D. dissertation, University of Michigan, 2008.

- [37] T. Lee, N. H. McClamroch, and M. Leok. A Lie group variational integrator for the attitude dynamics of a rigid body with applications to the 3d pendulum. Proceedings of 2005 IEEE Conference on Control Applications. (CCA 2005), pages 962–967.

- Lee et al. [2007a] T. Lee, M. Leok, and N. H. McClamroch. Lie group variational integrators for the full body problem. Comput. Methods Appl. Mech. Engrg., 196(29-30):2907–2924, 2007a.

- Lee et al. [2007b] T. Lee, M. Leok, and N. H. McClamroch. Lie group variational integrators for the full body problem in orbital mechanics. Celestial Mechanics and Dynamical Astronomy, 98(2):121–144, 2007b.

- Lee et al. [2009] T. Lee, M. Leok, and N. H. McClamroch. Lagrangian mechanics and variational integrators on two-spheres. International Journal for Numerical Methods in Engineering, 79(9):1147–1174, 2009.

- Lee et al. [2017] T. Lee, M. Leok, and N. H. McClamroch. Global Formulations of Lagrangian and Hamiltonian Dynamics on Manifolds: A Geometric Approach to Modeling and Analysis. Interaction of Mechanics and Mathematics. Springer International Publishing, 2017. ISBN 9783319569536.

- Lee et al. [2021] T. Lee, M. Tao, and M. Leok. Variational symplectic accelerated optimization on Lie groups. 2021.

- Leok [2004] M. Leok. Generalized Galerkin variational integrators: Lie group, multiscale, and pseudospectral methods. (preprint, arXiv:math.NA/0508360), 2004.

- Leok and Ohsawa [2011] M. Leok and T. Ohsawa. Variational and geometric structures of discrete Dirac mechanics. Found. Comput. Math., 11(5):529--562, 2011.

- Leok and Shingel [2012] M. Leok and T. Shingel. Prolongation-collocation variational integrators. IMA J. Numer. Anal., 32(3):1194--1216, 2012.

- Leok and Zhang [2011] M. Leok and J. Zhang. Discrete Hamiltonian variational integrators. IMA Journal of Numerical Analysis, 31(4):1497--1532, 2011.

- Lewis and Simo [1994] D. Lewis and J. C. Simo. Conserving algorithms for the dynamics of Hamiltonian systems on Lie groups. Journal of Nonlinear Science, 4(1):253--299, December 1994. doi: 10.1007/BF02430634.

- Marsden and Ratiu [1999] J. E. Marsden and T. S. Ratiu. Introduction to mechanics and symmetry, volume 17 of Texts in Applied Mathematics. Springer-Verlag, New York, second edition, 1999.

- Marsden and West [2001] J. E. Marsden and M. West. Discrete mechanics and variational integrators. Acta Numer., 10:357--514, 2001.

- Munthe-Kaas [1995] H. Z. Munthe-Kaas. Lie-Butcher theory for Runge-Kutta methods. BIT, 35:572--587, 1995.

- Munthe-Kaas [1998] H. Z. Munthe-Kaas. Runge-Kutta methods on Lie groups. BIT Numerical Mathematics, 38:92--111, 1998.

- Munthe-Kaas [1999] H. Z. Munthe-Kaas. High order Runge-Kutta methods on manifolds. Applied Numerical Mathematics, 29:115--127, 1999.

- Nash [1956] J. Nash. The imbedding problem for Riemannian manifolds. Annals of Mathematics, 63(1):20--63, 1956. ISSN 0003486X.

- Nesterov [1983] Y. Nesterov. A method of solving a convex programming problem with convergence rate . Soviet Mathematics Doklady, 27(2):372--376, 1983.

- Nesterov [2004] Y. Nesterov. Introductory Lectures on Convex Optimization: A Basic Course, volume 87 of Applied Optimization. Kluwer Academic Publishers, Boston, MA, 2004.

- Nordkvist and Sanyal [2010] N. Nordkvist and A. K. Sanyal. A Lie group variational integrator for rigid body motion in SE(3) with applications to underwater vehicle dynamics. In 49th IEEE Conference on Decision and Control (CDC), pages 5414--5419, 2010. doi: 10.1109/CDC.2010.5717622.

- Schmitt and Leok [2017] J. M. Schmitt and M. Leok. Properties of Hamiltonian variational integrators. IMA Journal of Numerical Analysis, 38(1):377--398, 03 2017.

- Schmitt et al. [2018] J. M. Schmitt, T. Shingel, and M. Leok. Lagrangian and Hamiltonian Taylor variational integrators. BIT Numerical Mathematics, 58:457--488, 2018. doi: 10.1007/s10543-017-0690-9.

- Su et al. [2016] W. Su, S. Boyd, and E. Candes. A differential equation for modeling Nesterov’s Accelerated Gradient method: theory and insights. Journal of Machine Learning Research, 17(153):1--43, 2016.

- Tao and Ohsawa [2020] M. Tao and T. Ohsawa. Variational optimization on Lie groups, with examples of leading (generalized) eigenvalue problems. In Proceedings of the 23rd International AISTATS Conference, volume 108 of PMLR, 2020.

- Tieleman and Hinton [2012] T. Tieleman and G. Hinton. Coursera: Neural Networks for Machine Learning - Lecture 6.5: RMSprop. 2012.

- Wahba [1965] G. Wahba. A Least Squares Estimate of Satellite Attitude. SIAM Review, 7(3):409, July 1965. doi: 10.1137/1007077.

- Whitney [1944a] H. Whitney. The singularities of a smooth -manifold in -space. Annals of Mathematics, 45(2):247--293, 1944a. ISSN 0003486X.

- Whitney [1944b] H. Whitney. The self-intersections of a smooth -manifold in -space. Annals of Mathematics, 45(2):220--246, 1944b. ISSN 0003486X.

- Wibisono et al. [2016] A. Wibisono, A. Wilson, and M. Jordan. A variational perspective on accelerated methods in optimization. Proceedings of the National Academy of Sciences, 113(47):E7351--E7358, 2016.

- Yoshimura and Marsden [2006a] H. Yoshimura and J. E. Marsden. Dirac structures in Lagrangian mechanics Part I: Implicit Lagrangian systems. Journal of Geometry and Physics, 57(1):133--156, 2006a.

- Yoshimura and Marsden [2006b] H. Yoshimura and J. E. Marsden. Dirac structures in Lagrangian mechanics Part II: Variational structures. Journal of Geometry and Physics, 57(1):209--250, 2006b.

- [68] A. Zanna. Collocation and relaxed collocation for the Fer and the Magnus expansions. SIAM Journal on Numerical Analysis, 36(4):1145--1182. ISSN 00361429.

- Zare and Szebehely [1975] K. Zare and V. G. Szebehely. Time transformations in the extended phase-space. Celestial mechanics, 11:469--482, 1975.