TIFace: Improving Facial Reconstruction through Tensorial Radiance

Fields and Implicit Surfaces

Abstract.

This report describes the solution that secured the first place in the ”View Synthesis Challenge for Human Heads (VSCHH)” at the ICCV 2023 workshop.

Given the sparse view images of human heads, the objective of this challenge is to synthesize images from novel viewpoints.

Due to the complexity of textures on the face and the impact of lighting, the baseline method TensoRF yields results with significant artifacts, seriously affecting facial reconstruction.

To address this issue, we propose TI-Face, which improves facial reconstruction through tensorial radiance fields (T-Face) and implicit surfaces (I-Face), respectively.

Specifically, we employ an SAM-based approach to obtain the foreground mask, thereby filtering out intense lighting in the background.

Additionally, we design mask-based constraints and sparsity constraints to eliminate rendering artifacts effectively.

The experimental results demonstrate the effectiveness of the proposed improvements and superior performance of our method on face reconstruction.

The code will be available at https://github.com/RuijieZhu94/TI-Face.

1 Introduction

Reconstructing 3D objects from multi-view 2D images is a fundamental and long-standing challenge in the fields of computer vision and graphics, with critical applications in robotics, 3D modeling, virtual reality, and beyond. Traditional methods [12, 13] typically involve finding matching point pairs across multiple viewpoints and leveraging the principles of multi-view geometry to reconstruct 3D objects. However, these approaches face difficulties in handling scenes with a lack of texture or repetitive patterns and often struggle to generate dense reconstructions.

Therefore, some learning-based multi-view stereo (MVS) methods have been proposed [16, 3, 11], using plane assumptions to aggregate cost volumes and predict dense depth end-to-end for the recovery of 3D objects. However, they struggle to effectively handle occlusions and non-Lambertian surfaces. Recently, NeRF [9] and a plethora of subsequent works [14, 1, 10] have been introduced, demonstrating the significant power of neural networks in the implicit representation of 3D objects. By learning to map 3D coordinates to volume density and view-dependent colors, these methods efficiently achieve novel view synthesis and produce remarkably realistic rendering effects. However, they are often optimized for a specific scene, making the training of the network lack prior knowledge.

To explore efficient and realistic representations of 3D objects, a novel challenge [5] was introduced at the ICCV 2023 workshop111https://sites.google.com/view/vschh/home. Various methods are evaluated in a new public dataset [17] for their performance in the task of synthesizing new views of human heads. Given a set of sparse views of the human head, this challenge requires the reconstruction of images from specified new perspectives. As views are limited and sparse, and include uncertain halos as background noise, existing methods for novel view synthesis face significant challenges in this context.

To address this challenge, we propose TI-Face, which enhances facial reconstruction through tensorial radiance fields and implicit surfaces. Firstly, we analyze the shortcomings of the baseline method TensoRF [1], specifically the artifacts caused by background noise. To address this issue, we employ an SAM-based image segmentation algorithm, ViTMatte [15], which achieves high-precision segmentation of the background region with manual placement of a few prompt points. The proposed TI-Face further constrains the volume density sampling points in the background region based on the generated mask, ensuring that the color of the background region is transparent rather than black. On the other hand, we introduce I-Face, which reconstructs human heads by enhancing implicit surface reconstruction through the combination of InstantNGP [10] and NeuS [14]. By combining mask constraints and sparsity constraints, I-Face can rapidly and efficiently reconstruct the surface of human heads, rendering surface colors from new perspectives. By combining T-Face and I-Face, our approach faithfully reconstructs realistic human heads, particularly preserving fine details in the facial features. The proposed method surpassed the approaches of other participants in VCSHH, securing the first place and highlighting the outstanding performance of TI-Face in the task of novel view synthesis.

The main contributions of our work are as follows:

-

•

We propose a novel framework TI-Face, which improves facial reconstruction through tensorial radiance fields (T-Face) and implicit surfaces (I-Face).

-

•

To remove floating artifacts during rendering, we adopt an SAM-based mask generation method and make mask constraints on both T-Face and I-Face. Meanwhile, a sparsity loss is additionally applied to I-Face for better rendering quality.

-

•

Experiments on the ILSH dataset demonstrate the effectiveness of the proposed improvements. Furthermore, we win the first place in VSCHH at the ICCV 2023 workshop.

2 Methodology

2.1 Baseline

To address the View Synthesis Challenge for Human Heads (VSCHH), we use TensoRF [1] as our baseline. TensoRF utilizes 4D tensors to model the radiance field of a scene. The key idea mainly focuses on the low-rank factorization of 4D tensors to achieve better rendering quality and smaller model size with fast speed. Following the baseline model, we implement a vector-matrix decomposition version of TensoRF with some modifications to fit the ILSH dataset. As shown in Figure 3, although the baseline model achieves relatively accurate facial reconstruction, the reconstructed results often have floating artifacts, which severely affects the reconstruction quality of the edges of the face. We speculate that this is because there are light sources in the background area of the rendered image, which causes a sudden change in the background color. To avoid this, we use an efficient way to get the masks corresponding to the input images. Furthermore, we improve both explicit and implicit rendering methods and build additional constraints based on masks to improve rendering quality.

2.2 T-Face

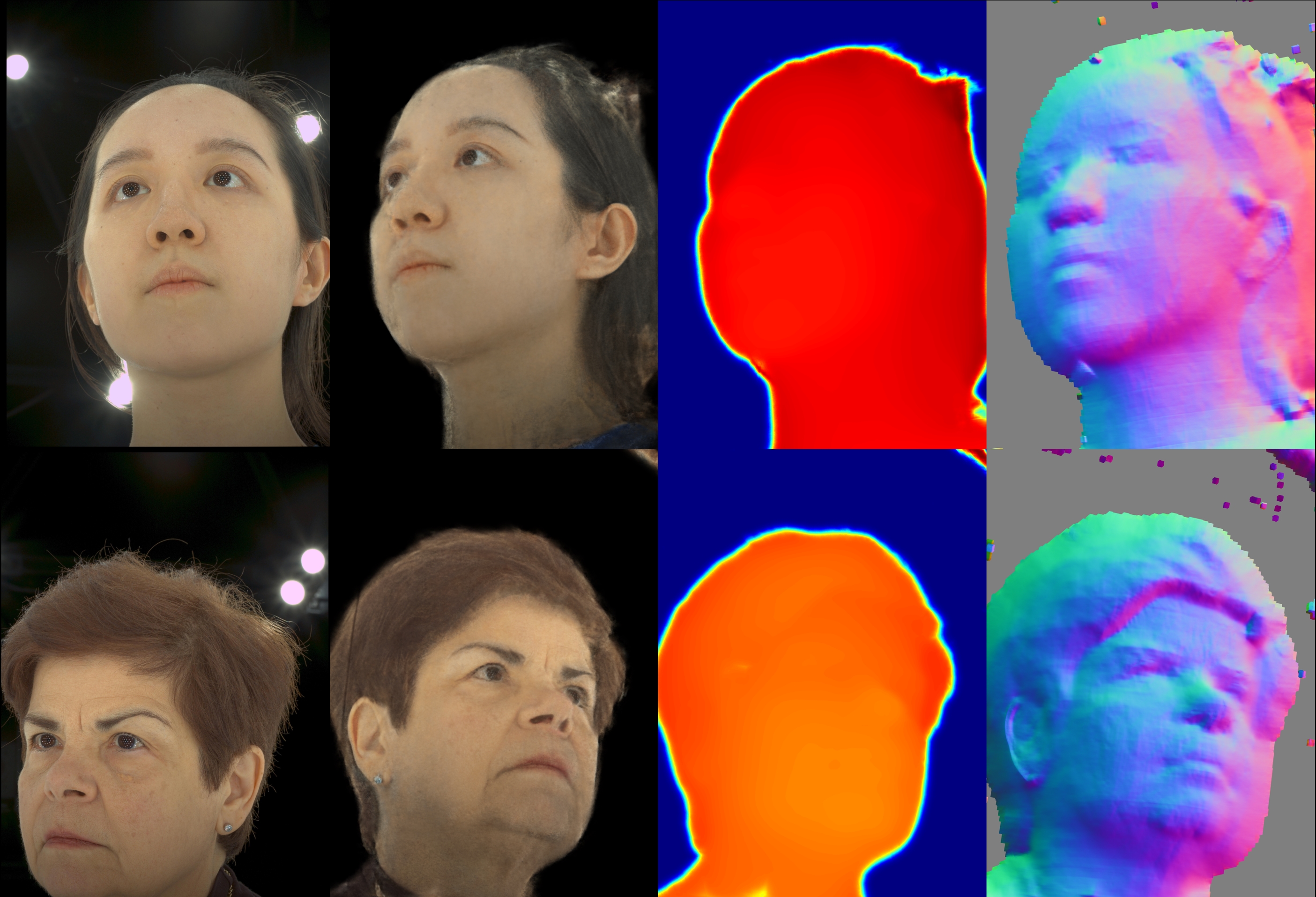

Recently, SAM-based image segmentation methods have attracted a lot of attention [7]. Following ViTMatte [15], we obtain the corresponding masks of the input images through a small number of label points as prompts. As shown in Figure 2, we get masks fine enough to distinguish the foregrounds and the backgrounds of the images, even in the hair strand region.

A natural way to utilize masks is to combine masks with RGB images into RGBA images, as implemented on the Blender dataset. This actually uses masks to set the values of background area in the image to a fixed color (e.g. black). Although it appears to remove the effect of cluttered backgrounds on rendering faces, this method still exhibits some artifacts in our experiments, as shown in Figure 4 and Figure 5. We speculate that the reason is that the generated mask is not completely accurate and that the model lacks constraints on the background, resulting in a not so fine sampling on the surface.

To address this issue, we propose a constraint on the mask and demonstrate its effectiveness through experiments. For each pixel, we march along a ray, sampling shading points along the ray and computing the accumulated density weights:

| (1) |

Here, is the density at the sample location , is the ray step size, and denotes the ray transmittance. The mask loss is:

| (2) |

where is the pixel corresponding to the ray, is the background areas according to the masks, is the indicator function. Here, we set in our experiments. Note that we also tried to use cross entropy in mask loss function, and found that it is not as effective as the proposed loss.

Finally, the total loss is defined as:

| (3) |

where is a MSE loss on RGB color, is the regularization term and is the total variation (TV) loss that measures the difference between neighboring values in the matrix or vector factors.

2.3 I-Face

To further improve the face rendering quality, we explore another way to render photo-realistic faces. We observe that although T-Face shows promising results, it does not perform well in facial reconstruction details. Inspired by InstantNGP [10] and NeuS [14], we use implicit surface rendering for face reconstruction based on Instant-NeuS implementation [4]. Before this, we also tried Instant-NeRF [4] (a combination of InstantNGP [10] and NeRF [9]) to complete face reconstruction, but gave up due to the difficulty of imposing constraints on neural radiance fields to remove artifacts. In the experiment, we use the binary cross entropy loss as the mask loss, as mentioned in NeuS [14]. To avoid floating artifacts, we also add sparsity loss:

| (4) |

where is the sampled point, is the corresponding SDF values, represents the collection of sampling points. Here we set . Finally, the total loss is defined as:

| (5) |

where is a MSE loss on RGB color and is the Eikonal regularization [2].

2.4 Model Ensemble

To further improve the results, we use a simple and effective linear weighted ensemble method to obtain the final results. In practice, we use a set of linear weights corresponding to the baseline, T-Face, and I-Face to group the rendered images.

3 Experiments

This section presents the experimental results during the challenge phase. We first compare our approach with baseline method and then analyse the effectiveness of the proposed improvements.

3.1 Implementation Details

We implement our T-Face and I-Face in PyTorch. In T-Face, we follow the baseline configurations, using Adam optimizer [6] with and initial learning rate of for tensor factors and for the MLP decoder. We train our model T-Face for 50000 steps with a batch size of 4096 rays on a single NVIDIA RTX 3090 (40-60 minutes per scene). Following TensoRF [1], we start from an initial coarse grid with voxels and then upsample them at steps 2000, 3000, 4000, 5500, and 7000 to a fine grid with voxels. In I-Face, we use AdamW optimizer [8] with and the learning rate . We train our model I-Face for 20000 steps with a dynamic batch size (256-8192) on a single NVIDIA RTX 3090 (10-20 minutes per scene).

| Methods | Full Region | Masked Region | ToI (Sec.) | Devices | ||

| PSNR | SSIM | PSNR | SSIM | |||

| DINER-SR* | 22.37 | 0.72 | 28.50 | 0.83 | 87.25 | V100 |

| MPFER-H* | 28.05 | 0.84 | 28.90 | 0.83 | 1.50 | V100 |

| KHAG | 22.14 | 0.64 | 23.39 | 0.79 | 2.58 | RTX A6000 |

| xoft | 20.01 | 0.64 | 25.02 | 0.80 | 727.00 | A10 |

| Y-KIST-NeRF | 20.73 | 0.71 | 25.54 | 0.82 | 15.10 | RTX 6000 ADA |

| CUBE | 21.07 | 0.66 | 25.72 | 0.81 | 95.00 | A100 & H100 |

| CogCoVi | 21.49 | 0.70 | 26.33 | 0.82 | 806.00 | A40 |

| NoNeRF | 20.37 | 0.69 | 26.43 | 0.82 | 175.58 | RTX3090 |

| TI-Face(Ours) | 21.66 | 0.68 | 27.02 | 0.83 | 76.88 | RTX 3090 |

3.2 Experimental Results

Comparison with other methods. We compare our TI-Face with other methods sumbitted to the VSCHH benchmark [5]. The VSCHH selects the PSNR metric of mask region to be the ranking indicator, which emphasize the rendering quality of high-value areas (i.e., face and hair). Masks for high-value areas are generated by the organizers and are invisible to participants in the challenge. As show in Table 1, the experimental results demonstrate that our TI-Face significantly outperforms methods from other challenge participants in the evaluation of masked image region. Meanwhile, compared with the second and third place methods NoNeRF and CogCoVi, our method TI-Face has a clear advantage in time of inference (ToI), reflecting the high efficiency of the proposed method.

Comparison with the baseline. We evaluate our TI-Face in the ILSH dataset. As shown in Table 2, the results obtained by emsembling baseline, T-Face and I-Face perform better than those rendered by either method alone. We also selected several examples to qualitatively compare these methods, as shown in Figures 6, LABEL: and 7. Interestingly, although I-Face appears to render clearer images, it does not perform as well as T-Face in actual evaluations. We speculate that the reason is that the results generated by I-Face have an overall deviation from the ground truth, as shown in the Figure 8, the rendering results of I-Face have unexpected color differences and geometric inconsistency.

| Methods | Full Region | Masked Region | ||

|---|---|---|---|---|

| PSNR | SSIM | PSNR | SSIM | |

| TensoRF [1] | 20.28 | 0.70 | 24.70 | 0.81 |

| T-Face(Ours) | 21.42 | 0.67 | 26.63 | 0.82 |

| I-Face(Ours) | 20.99 | 0.66 | 25.84 | 0.81 |

| TI-Face(Ours) | 21.66 | 0.68 | 27.02 | 0.83 |

| Settings | Masked Region | |

|---|---|---|

| PSNR | SSIM | |

| baseline | 24.03 | 0.83 |

| + SAM mask | 25.24 | 0.83 |

| + our mask | 25.39 | 0.84 |

| + our mask + (CE) | 25.52 | 0.84 |

| + our mask + (L2) | 26.77 | 0.84 |

3.3 Ablation Study

To demonstrate the effectiveness of the proposed improvement, we ablate our methods in the first three scenes of the ILSH dataset.

Ablation of T-Face. As shown in Table 3, the ablation experiments demonstrate the effectiveness of the proposed mask and corresponding loss function. When adding the mask into baseline, we set the background region to black to eliminate artifacts. However, the results indicated that if the mask is not properly constrained, floating artifacts still persist, as shown in Figures 3, LABEL: and 4. Furthermore, the mask generated by our approach outperforms the mask generated directly by SAM, demonstrating the effectiveness of refining the mask generated by SAM. Furthermore, in the choice of the mask loss function, we surprisingly find that the use of L2 loss performs better than the use of cross entropy (CE) loss, resulting in a 1.25dB improvement in the PSNR metric in the masked region.

Ablation of I-Face. The use of qualitative results to demonstrate the ablation experiment of I-Face is clearly more intuitive. As shown in Figure 9, the vanilla Instant-NeuS fails to reconstruct human head from unmasked sparse input views. After adding the generated mask, the proposed sparsity loss function further improves the quality of implicit surface reconstruction. Although I-Face exhibits finer details visually, the overall reconstruction quality of I-Face is still not as high as that of T-Face. Our speculation is that this issue is attributed to overall color discrepancies caused by geometric inaccuracies.. Therefore, improving the rendering quality of implicit surfaces remains a huge challenge.

4 Conclusion

This report introduces the TI-Face model, designed to reconstruct human heads for novel view synthesis on a novel public dataset. The proposed model consists of two components: T-Face and I-Face, leveraging Tensorial Radiance Fields and Implicit Surfaces, respectively, to eliminate visual artifacts and enhance image rendering from new perspectives. The experiments demonstrate the effectiveness of the generated mask and the proposed loss function constraints, resulting in the first-place achievement at ICCV 2023 workshop VSCHH. Our proposed approach represents an exploration of explicitly modeling masks in the task of novel view synthesis. Investigating how to model masks for specific objects in more complex scenes and achieving reconstruction will be a valuable avenue for future research.

References

- [1] Anpei Chen, Zexiang Xu, Andreas Geiger, Jingyi Yu, and Hao Su. Tensorf: Tensorial radiance fields. In European Conference on Computer Vision (ECCV), 2022.

- [2] Amos Gropp, Lior Yariv, Niv Haim, Matan Atzmon, and Yaron Lipman. Implicit geometric regularization for learning shapes. arXiv preprint arXiv:2002.10099, 2020.

- [3] Xiaodong Gu, Zhiwen Fan, Siyu Zhu, Zuozhuo Dai, Feitong Tan, and Ping Tan. Cascade cost volume for high-resolution multi-view stereo and stereo matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2495–2504, 2020.

- [4] Yuan-Chen Guo. Instant neural surface reconstruction, 2022. https://github.com/bennyguo/instant-nsr-pl.

- [5] Youngkyoon Jang, Jiali Zheng, Jifei Song, Helisa Dhamo, Eduardo Pérez-Pellitero, Thomas Tanay, Matteo Maggioni, Richard Shaw, Sibi Catley-Chandar, Yiren Zhou, Jiankang Deng, Ruijie Zhu, Jiahao Chang, Ziyang Song, Jiahuan Yu, Tianzhu Zhang, Khanh-Binh Nguyen, Joon-Sung Yang, Andreea Dogaru, Bernhard Egger, Heng Yu, Aarush Gupta, Joel Julin, László A. Jeni, Hyeseong Kim, Jungbin Cho, Dosik Hwang, Deukhee Lee, Doyeon Kim, Dongseong Seo, SeungJin Jeon, YoungDon Choi, Jun Seok Kang, Ahmet Cagatay Seker, Sang Chul Ahn, Ales Leonardis, and Stefanos Zafeiriou. Vschh 2023: A benchmark for the view synthesis challenge of human heads. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, pages 1121–1128, October 2023.

- [6] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [7] Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, et al. Segment anything. arXiv preprint arXiv:2304.02643, 2023.

- [8] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101, 2017.

- [9] Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. Nerf: Representing scenes as neural radiance fields for view synthesis. Communications of the ACM, 65(1):99–106, 2021.

- [10] Thomas Müller, Alex Evans, Christoph Schied, and Alexander Keller. Instant neural graphics primitives with a multiresolution hash encoding. ACM Transactions on Graphics (ToG), 41(4):1–15, 2022.

- [11] Rui Peng, Rongjie Wang, Zhenyu Wang, Yawen Lai, and Ronggang Wang. Rethinking depth estimation for multi-view stereo: A unified representation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- [12] Johannes Lutz Schönberger and Jan-Michael Frahm. Structure-from-motion revisited. In Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

- [13] Johannes Lutz Schönberger, Enliang Zheng, Marc Pollefeys, and Jan-Michael Frahm. Pixelwise view selection for unstructured multi-view stereo. In European Conference on Computer Vision (ECCV), 2016.

- [14] Peng Wang, Lingjie Liu, Yuan Liu, Christian Theobalt, Taku Komura, and Wenping Wang. Neus: Learning neural implicit surfaces by volume rendering for multi-view reconstruction. arXiv preprint arXiv:2106.10689, 2021.

- [15] Jingfeng Yao, Xinggang Wang, Shusheng Yang, and Baoyuan Wang. Vitmatte: Boosting image matting with pretrained plain vision transformers. arXiv preprint arXiv:2305.15272, 2023.

- [16] Yao Yao, Zixin Luo, Shiwei Li, Tian Fang, and Long Quan. Mvsnet: Depth inference for unstructured multi-view stereo. In Proceedings of the European conference on computer vision (ECCV), pages 767–783, 2018.

- [17] Jiali Zheng, Youngkyoon Jang, Athanasios Papaioannou, Christos Kampouris, Rolandos Alexandros Potamias, Foivos Paraperas Papantoniou, Efstathios Galanakis, Aleš Leonardis, and Stefanos Zafeiriou. Ilsh: The imperial light-stage head dataset for human head view synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, pages 1112–1120, October 2023.