Threshold-Based Fast Successive-Cancellation Decoding of Polar Codes

Abstract

Fast SC decoding overcomes the latency caused by the serial nature of the SC decoding by identifying new nodes in the upper levels of the SC decoding tree and implementing their fast parallel decoders. In this work, we first present a novel sequence repetition node corresponding to a particular class of bit sequences. Most existing special node types are special cases of the proposed sequence repetition node. Then, a fast parallel decoder is proposed for this class of node. To further speed up the decoding process of general nodes outside this class, a threshold-based hard-decision-aided scheme is introduced. The threshold value that guarantees a given error-correction performance in the proposed scheme is derived theoretically. Analysis and hardware implementation results on a polar code of length with code rates , , and show that our proposed algorithm reduces the required clock cycles by up to , and leads to a improvement in the maximum operating frequency compared to state-of-the-art decoders without tangibly altering the error-correction performance. In addition, using the proposed threshold-based hard-decision-aided scheme, the decoding latency can be further reduced by at dB.

Index Terms:

Polar codes, Fast successive-cancellation decoding, Sequence repetition node, Threshold-based hard-decision-aided scheme.I Introduction

Polar codes represent a channel coding scheme that can provably achieve the capacity of binary-input memoryless channels [1]. The explicit coding structure and low-complexity successive-cancellation (SC) decoding algorithm has generated significant interest in polar code research across both industry and academia. In particular, in the latest cellular standard of 5G [2], polar codes are adopted in the control channel of the enhanced mobile broadband (eMBB) use case. Although SC decoding provides a low-complexity capacity-achieving solution for polar codes with long block length, its sequential bit-by-bit decoding nature leads to high decoding latency, which constrains its application in low-latency communication scenarios such as the ultra-reliable low-latency communication (URLLC) [2] scheme of 5G. Therefore, the design of fast SC-based decoding algorithms for polar codes with low decoding latency has received a lot of attention [3].

A look-ahead technique was adopted to speed up SC decoding in [4, 5, 6] by pre-computing all the possible likelihoods of the bits that have not been decoded yet, and selecting the appropriate likelihood once the corresponding bit is estimated. Using the binary tree representation of SC decoding of polar codes, instead of working at the bit-level that corresponds to the leaf nodes of the SC decoding tree, parallel multi-bit decision is performed at the intermediate nodes of the SC decoding tree. An exhaustive-search decoding algorithm is used in [7, 8, 9, 10] to make multi-bit decisions and to avoid the latency caused by the traversal of the SC decoding tree to compute the intermediate likelihoods. However, due to the high complexity of the exhaustive search, this method is generally only suitable for nodes that represent codes of very short lengths.

It was shown in [11] that a node in the SC decoding tree that represents a code of rate (Rate-0 node) or a code of rate (Rate-1 node) can be decoded efficiently without traversing the SC decoding tree. In [12], fast decoders of repetition (REP) and single parity-check (SPC) nodes were proposed for the SC decoding. Using these nodes, a fully-unrolled hardware architecture was proposed in [13]. Techniques were developed in [14, 15, 16, 17, 18] to adjust the codes that are represented by the nodes in the SC decoding tree to increase the number of nodes that can be decoded efficiently. However, these methods result in a degraded error-correction performance. On the basis of the works in [11, 12], five new nodes (Type-I, Type-II, Type-III, Type-IV, and Type-V) were identified and their fast decoders were designed in [19]. In [20], a generalized REP (G-REP) node and a generalized parity-check (G-PC) node were proposed to reduce the latency of the SC decoding even further. In [21], seven of the most prevalent node patterns in short polar codes were analysed and efficient algorithms for processing these node patterns in parallel are proposed. However, the decoding of some of these node patterns leads to significant performance loss. Memory footprint optimization and operation merging were described in [22, 23] to improve the speed of SC decoding. The combination of fast SC and fast SC flip decoding algorithms was investigated in [24, 25]. Moreover, the identification and the utilization of the aforementioned special nodes were also extended to SC list (SCL) [26] decoding [27, 28, 29, 30, 31]. All these works require the design of a separate decoder for each class of node, which inevitably increases the implementation complexity. In addition, as shown in this work, the achievable parallelism in decoding can be further increased without degrading the error-correction performance.

For general nodes that do not fall in one of the above node categories, [32] proposed a hard-decision scheme based on node error probability. Specifically, in that work it was shown that extra latency reduction can be achieved when the communications channel has low noise. However, the hard-decision threshold is calculated empirically rather than for a desired error-correction performance. In [33], a hypothesis-testing-based strategy is designed to select reliable unstructured nodes for hard decision. However, additional operations are required to be performed to calculate the decision rule, thus, incurring extra decoding latency. For all the existing hard-decision schemes, a threshold comparison operation is required each time a general constituent code is encountered in the course of the SC decoding algorithm.

In this paper, a fast SC decoding algorithm with a higher degree of parallelism than the state of the art is proposed. First, a class of sequence repetition (SR) nodes is proposed which provides a unified description of most of the existing special nodes. This class of nodes is typically found at a higher level and has a higher frequency of occurrence in the decoding tree than other existing special nodes. 5G polar codes with different code lengths and code rates can be represented using only SR nodes. Utilizing this class of nodes, a simple and efficient fast simplified SC decoding algorithm called the SR node-based fast SC (SRFSC) decoding algorithm is proposed, which achieves a high degree of parallelism without degrading the error-correction performance. Employing only SR nodes, the SRFSC decoder requires a lower number of time steps than most existing works. Moreover, SR nodes can be implemented together with other operation mergers, such as node-branch and branch mergers, to outperform the state-of-the-art decoders in terms of the required number of time steps. In addition, if a realistic hardware implementation is considered, the proposed SRFSC decoder provides up to reduction in terms of the required number of clock cycles and improvement in terms of the maximum operating frequency with respect to the state-of-the-art decoders on a polar codes of length changedwith code rates , , and .

Second, a threshold-based hard-decision-aided (TA) scheme is proposed to speed up the decoding of the nodes that are not SR nodes for a binary additive white Gaussian noise (BAWGN) channel. Consequently, a TA-SRFSC decoding algorithm is proposed that adopts a simpler threshold for hard-decision than that in [32]. The effect of the defined threshold on the error-correction performance of the proposed TA-SRFSC decoding algorithm is analyzed. Moreover, a systematic way to derive the threshold value for a desired upper bound for its block error rate (BLER) is determined. Performance results show that, with the help of the proposed TA scheme, the decoding latency of SRFSC decoding can be further reduced by at dB on a polar code of length and rate . In addition, a multi-stage decoding strategy is introduced to mitigate the possible error-correction performance loss of TA-SRFSC decoding with respect to SC decoding, while achieving average decoding latency comparable to TA-SRFSC decoding.

The rest of this paper is organized as follows. Section II gives a brief introduction to the basic concept of polar codes and fast SC decoding. In Section III, the SRFSC decoding algorithm is introduced. With the help of the proposed TA scheme, the TA-SRFSC decoding is presented in Section IV. Section V analyzes the decoding latency and simulation results are shown in Section VI. Finally, Section VII gives a summary of the paper and concluding remarks.

II Preliminaries

II-A Notation Conventions

In this paper, blackboard letters, such as , denote a set and denotes the number of elements in . Bold letters, such as , denote a row vector, denotes the transpose of , and notation , represents a subvector . is used as the bitwise XOR operation and , . The Kronecker product of two matrices and is written as . represents an square matrix and denotes the -th Kronecker power of . Throughout this paper, denotes the natural logarithm and indicates the base- logarithm of , respectively.

II-B Polar Codes

A polar code with code length and information length is denoted by and has rate . The encoding can be expressed as , where is the input bit sequence and is the encoded bit sequence. is the generator matrix, where is a bit-reversal permutation matrix and .

The input bit sequence consists of information bits and frozen bits. The information bits form set , transmitting information bits, while the frozen bits form set , transmitting fixed bits known to the receiver. For symmetric channels, without loss of generality, all frozen bits are set to zero [1]. To distinguish between frozen and information bits, a vector of flags is used where each flag is assigned as

| (1) |

The codeword is transmitted through a channel after modulation. In this paper, non-systematic polar codes and binary phase-shift keying (BPSK) modulation that maps to are considered. Transmission takes place over an additive white Gaussian noise (AWGN) channel.

II-C SC Decoding and Binary Tree Representation

SC decoding can be illustrated on the factor graph of polar codes as shown in Fig. 2. The factor graph consists of levels and, by grouping all the operations that can be performed in parallel, SC decoding can be represented as the traversal of a binary tree. This traversal is shown in Fig. 2, starting from the left side of the binary tree. At level of the SC decoding tree with levels, there are nodes (), and the -th node at level () of the SC decoding tree is denoted as . The left and the right child nodes of are and , respectively, as illustrated in Fig. 2. For , , , indicates the -th input logarithmic likelihood ratio (LLR) value, and , , denotes the -th output binary hard-valued message. For the AWGN channel, the received vector from the channel can be used to calculate the channel LLR as , where is the variance of the Gaussian noise. SC decoding starts by setting . A node will be activated once all its inputs are available. When LLR messages pass through a node in the factor graph which is indicated by the sign, the function over the LLR domain is executed as

| (2) |

and when LLR messages pass through a node in the factor graph which is indicated by the sign, the function over the LLR domain is executed as

| (3) |

where

| (4) | ||||

| (5) |

The function can be approximated as [34]

| (6) |

When the LLR value of the -th bit at level zero , is calculated, the estimation of , denoted as , can be obtained as

| (7) |

The hard-valued messages are propagated back to the parent node as

| (8) |

After traversing all the nodes in the SC decoding tree, contains the decoding result. Thus the latency of SC decoding for a polar code of length in terms of the number of time steps can be represented by the number of nodes in the SC decoding tree as [1]

| (9) |

II-D Fast SC Decoding

The SC decoding has strong data dependencies that limit the amount of parallelism that can be exploited within the algorithm because the estimation of each bit depends on the estimation of all previous bits. This leads to a large latency in the SC decoding algorithm. It was shown in [9] that for a node , can be estimated without traversing the decoding tree as

| (10) |

where is the set of all the codewords associated with node . Multibit decoding can be performed directly in an intermediate level instead of bit-by-bit sequential decoding at level , in order to traverse fewer nodes in the SC decoding tree and consequently, to reduce the latency caused by data computation and exchange. However, the evaluation of (10) generally requires exhaustive search over all the codewords in the set which is computationally intensive in practice. In [12], a fast SC (FSC) decoding algorithm was proposed which performs fast parallel decoding when specific special node types are encountered. The sequences of information and frozen bits of these node types have special bit-patterns. Therefore, they can be decoded more efficiently without the need for exhaustive search. Using the vector , the five special node types proposed in [12] are described as:

-

•

Rate-0 node: all bits are frozen bits, .

-

•

Rate-1 node: all bits are non-frozen bits, .

-

•

REP node: all bits are frozen bits except the last one, .

-

•

SPC node: all bits are non-frozen bits except the first one, .

-

•

ML node: a code of length with .

By merging the aforementioned special nodes, five additional nodes were proposed, namely, the REP-SPC node, which is a REP node followed by a SPC node; the P-01/P-0SPC node, which is generated by merging a Rate-0 node with a Rate-1/SPC node; and the P-R1/P-RSPC node, which is generated by merging a function branch operation in (5) with the following Rate-1/SPC node. In [19], five additional special node types and their corresponding fast decoders were introduced. This enhanced FSC decoding algorithm can achieve a lower decoding latency than FSC decoding. The five special node types are:

-

•

Type-I node: all bits are frozen bits except the last two, .

-

•

Type-II node: all bits are frozen bits except the last three, .

-

•

Type-III node: all bits are non-frozen bits except the first two, .

-

•

Type-IV node: all bits are non-frozen bits except the first three, .

-

•

Type-V node: all bits are frozen bits except the last three and the fifth to last, .

A generalized FSC (GFSC) decoding algorithm was proposed in [20] by introducing the G-PC node and the G-REP node (previously identified as Multiple-G0 nodes in [23]). The G-PC node is a node at level having all its descendants as Rate-1 nodes except the leftmost one at a certain level , that is a Rate-0 node. The G-REP node is a node at level for which all its descendants are Rate-0 nodes, except the rightmost one at a certain level , which is a generic node of rate (Rate-C).

In [18], in addition to the nodes in [12], three special node types are added: the REP1 node, with the REP node on the left and the Rate-1 node on the right; the 0REPSPC node, which is a concatenation of the Rate-0, REP, and SPC nodes; and the 001 node, whose left of bits are frozen bits and right of bits are unfrozen bits.

To further reduce the latency, the operation merging scenarios are generalized in [22] and [23] where, in addition to merging special nodes, branch operation ((4),(5) and (8)) merging is also considered. Consequently, REP-REPSPC, REP-Rate1, and Rate0-ML nodes are adopted as the merging of special nodes, and F-REP node is adopted as a node-branch merger, which is generated by performing an function operation followed by a REP node. The following branch operation mergers are further introduced:

-

•

: two consecutive function operations in (4).

-

•

: two consecutive G0 operations, where G0 is the function in (5) assuming .

-

•

C×2, C×3: up to three consecutive operations in (8).

-

•

C0×2, C0×3: up to three consecutive operations in (8), assuming the estimations from the left branch are all zeros.

-

•

G-F: function operation followed by an function operation.

-

•

F-G0: function operation followed by a G0 operation.

The key advantage of using specific parallel decoders for the aforementioned special nodes is that, since the SC decoding tree is not traversed when one of these nodes is encountered, significant latency saving can be achieved. For example, if is a Rate-1 node, hard decision decoding can be used to immediately obtain the decoding result as

| (11) |

If is a SPC node, hard decision based on (11) is first derived followed by the calculation of the parity of the output using modulo-2 addition. The index of the least reliable bit is found as

| (12) |

Finally, the bits in a SPC node are estimated as

| (13) |

This operation can be performed in a single time step [19]. Finally, if is a G-PC node, the decoding can be viewed as a parallel decoding of several separate SPC nodes. The decoding of a G-PC node can generally be performed in one time step considering parallel SPC decoders [20].

All of the aforementioned fast SC decoding algorithms perform decoding at an intermediate level of the decoding tree in order to reduce the number of traversed nodes. A decoding algorithm that can decode a node at a higher level of the decoding tree generally results in more savings in terms of latency than one that decodes a node at a lower level of the decoding tree.

II-E Threshold-based Hard-decision-aided Scheme

For a binary AWGN channel with standard deviation , it was shown in [35] that, considering all the previous bits are decoded correctly, the LLR value , , input into node can be approximated as a Gaussian variable using a Gaussian approximation as

| (14) |

where is the expectation of [36] such that

| (15) |

and can be calculated recursively offline assuming the all-zero codeword is transmitted as

| (16) |

where

| (17) |

It was shown in [32] that, when the magnitude of the LLR values at a certain node in the SC decoding tree is large enough, the node has enough reliability to perform hard decision directly at the node without tangibly altering the error-correction performance. To determine the reliability of the node, a threshold is defined in [32] as

| (18) |

where is a constant that is selected empirically. The hard-decision estimate of the received LLR values is calculated using

| (19) |

The issue with the method in [32] is that the threshold defined in (18) contains complex calculations of complementary error functions , making the corresponding calculation inefficient. Moreover, the hard-decision threshold is calculated empirically rather than for a desired error-correction performance and the threshold comparison in (19) is performed every time a node with no specific structure is encountered in the SC decoding process.

III Fast SC Decoding with Sequence Repetition Nodes

This section introduces a class of nodes that is at a higher level of the SC decoding tree and shows how parallel decoding at this class of nodes can be exploited to achieve significant latency savings in comparison with the state of the art.

III-A Sequence Repetition (SR) Node

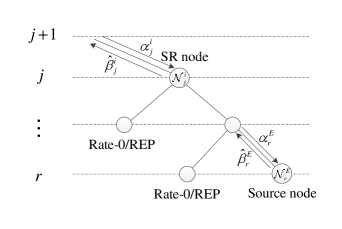

Let be a node at level of the tree representation of SC decoding as shown in Fig. 2. An SR node is any node at stage whose descendants are all either Rate-0 or REP nodes, except the rightmost one at a certain stage , , that is a generic node of rate . The structure of an SR node is depicted in Fig. 4. The rightmost node at stage is denoted as the source node of the SR node . Let so the source node can be denoted as .

An SR node can be represented by three parameters as , where is the level of the SC decoding tree in which is located, SNT is the source node type, and is a vector of length such that for the left child node of the parent node of at level , , is calculated as

| (20) |

Note that when , is a source node and thus is an empty vector denoted as .

III-B Source Node

To define the source node type, an extended class of G-PC (EG-PC) nodes is first introduced. The structure of the EG-PC node is depicted in Fig. 4. The EG-PC node is different from the G-PC node in its leftmost descendant node that can be either a Rate-0 or a REP node. The bits in an EG-PC node satisfy the following parity check constraint,

| (21) |

where , and is the parity. Unlike G-PC nodes whose parity is always even (), the EG-PC node can have either even parity () or odd parity (). The parity of the EG-PC node can be calculated as

| (22) |

where . After computing , Wagner decoders [37] can be used to decode the SPC codes with either even or odd parity constraints. A Wagner decoder performs hard decisions on the bits, and if the parity does not hold, it flips the bit with the lowest absolute LLR value.

SPC, Type-III, Type-IV, and G-PC nodes can be represented as special cases of EG-PC nodes. As a result, most of the common special nodes can be represented as SR nodes, as shown in Table I. Note that the node-branch mergers like P-RSPC and F-REP, and branch operation mergers such as , G‐F, , F‐G0, do not fit into the category of SR nodes. It is worth mentioning that other parameter choices for SR nodes may lead to the discovery of more types of special nodes that can be decoded efficiently, but this exploration is beyond the scope of this work.

| Node Type | SR Node Representation | Length of |

| Rate-0 | 0 | |

| REP | ||

| SPC | 0 | |

| Rate-1 | 0 | |

| P-01 | ||

| P-0SPC | ||

| Type-I | ||

| Type-II | ||

| Type-III | 0 | |

| Type-IV | 0 | |

| Type-V | ||

| G-PC | 0 | |

| G-REP | ||

| REP-SPC | ||

| 0REPSPC | ||

| 001 | ||

| REP-REPSPC | ||

| Rate0-ML | ||

| REP-Rate1(REP1) |

III-C Repetition Sequence

In this subsection, a set of sequences, called repetition sequences, is defined that can be used to calculate the output bit estimates of an SR node based on the output bit estimates of its source node. To derive the repetition sequences, is used to generate all the possible sequences that have to be XORed with the output of the source node to generate the output bit estimates of the SR node. Let denote the rightmost bit value of the left child node of the parent node of at level . When , the left child node is a Rate-0 node so . When , the left child node is a REP node, thus can take the value of either or . The number of repetition sequences is dependent on the number of different values that can take. Let denote the number of ‘’s in . The number of all possible repetition sequences is thus . Let denote the set of all possible repetition sequences.

The output bits of SR node have the property that their repetition sequence is repeated in blocks of length . Let denote the output bits of the source node of an SR node . The output bits for each block of length in with respect to the output bits of its source node can be written as

| (23) |

where and is the -th repetition sequence in . To obtain the repetition sequence and with a slight abuse of terminology and notation for convenience, the Kronecker sum operator is used, which is equivalent to the Kronecker product operator, except that addition in GF() is used instead of multiplication. For each set of values that ’s can take, can be calculated as

| (24) |

Example 1 (Repetition sequences for ).

Consider an example in which the SR node is located at level of the decoding tree and its source node is an EG-PC node located at level . Since , and . For and ,

∎

For a polar code with a given , the locations of SR nodes in the decoding tree are fixed and can be determined off-line. Therefore, the repetition sequences in of all of the SR nodes can be pre-computed and used in the course of decoding.

III-D Decoding of SR Nodes

To decode SR nodes, the LLR values of the source node are calculated based on the LLR values of the SR node for every repetition sequence as follows:

Proposition 1.

Let be the LLR values of the SR node and be the LLR values of its source node associated with the -th repetition sequence . For and ,

| (25) |

Proof:

See Appendix A. ∎

Using (23) and (25), (10) can be written as

| (26) | ||||

| (27) |

Thus, the bit estimates of an SR node can be calculated by finding the bit estimates of its source node using (26) and the repetition sequences as shown in (23).

The decoding algorithm of an SR node is described in Algorithm 1. It first computes for and generates new paths by extending the decoding path at the rightmost bits corresponding to . Note that the -th path is generated when the repetition sequence is and , , and are its soft and hard messages. Then, the source node is decoded under the rule of the SC decoding. If the source node is a special node, a hard decision is made directly. Parity check and bit flipping will be performed further using Wagner decoder if the source node is an EG-PC node. Finally, the optimal decoding path index can be selected according to the comparison in (28) below and the decoding result is obtained using (23).

Based on Algorithm 1, the SR node-based fast SC (SRFSC) decoding algorithm is proposed. It follows the SC decoding algorithm schedule until an SR node is encountered where Algorithm 1 is executed. Note that the paths can be processed simultaneously and the path selection operation in step 3 of Algorithm 1 can be performed in parallel with the decoding of the source node in step 2 and the following function operation. Once the selected index is obtained, only the -th decoding path corresponding to is retained and the remaining paths are deleted.

IV Hard-decision-aided Fast SC Decoding with Sequence Repetition Nodes

In this section, a novel threshold-based hard-decision-aided scheme is proposed, which speeds up the decoding of general nodes with no specific structure in the SRFSC decoding at high signal-to-noise ratios. Consequently, the threshold-based hard-decision-aided SRFSC (TA-SRFSC) decoding algorithm is proposed. In addition, a multi-stage decoding strategy is introduced to mitigate the possible error-correction performance loss of the proposed TA-SRFSC decoding.

IV-A Proposed Threshold-based Hard-decision-aided Scheme

In a binary AWGN channel, the LLR value of any node can be approximated as a Gaussian variable as shown in Fig. 5. The red area in Fig. 5 represents the approximate probability of correct hard decision and the blue area represents the approximate probability of incorrect hard decision when such that

| (29) |

where . The area between the two dashed lines represents the approximate probability that a hard decision is not performed.

To simplify the calculation of the threshold, we take a different approach than [32] by using the Gaussian distribution of and constraining the approximate probability of error when to be

| (30) |

where (and thus ) is a positive constant, whose selection method will be given in Observation 1. This is equivalent to . Therefore, the threshold is

| (31) |

where the absolute value ensures is positive for all values of with any .

Under the Gaussian approximation in (14), for a node that undergoes hard decision in (19), the proposed threshold leads to a bounded probability of correct hard decision as shown in the following observation. Note that the analytical derivations are accurate under the assumption of Gaussian approximation.

Observation 1.

Explanation: See Appendix B.

The proposed TA scheme performs hard decision on a node only if (33) is satisfied. Furthermore, a hard decision is performed on a node if all of its input LLR values satisfy (19). Otherwise, standard SC decoding is applied on to obtain the decoding result. Compared to the TA scheme in [32], the threshold values of both methods can be computed off-line. However, the proposed TA scheme in this paper has the following three advantages: 1) The proposed method can provide a higher latency reduction than the best case in [32] as shown in the simulation results; 2) The effect of the proposed threshold values on the error-correction performance is approximately predictable according to Observation 1; 3) According to (33), only a fraction of nodes undergo hard decision in the decoding process of the proposed TA scheme, which avoids unnecessary threshold comparisons. To speed up the decoding process, the proposed TA scheme is combined with SRFSC decoding that results in the TA-SRFSC decoding algorithm. In TA-SRFSC decoding, when one of the special nodes considered in SRFSC decoding is encountered, SRFSC decoding is performed and when a general node with no special structure is encountered, the proposed TA scheme is applied. The following observation provides an approximate upper bound on the BLER of the proposed TA-SRFSC decoding.

Observation 2.

Let and denote the BLER of the TA-SRFSC decoding and the SRFSC decoding respectively. We can have the following approximate inequality

| (34) |

Explanation: See Appendix C.

Observation 2 provides a method to derive the threshold value for a desired upper bound of the BLER for TA-SRFSC decoding approximately. In fact, a large threshold value results in a better error-correction performance than a small threshold value at the cost of lower decoding speed. Therefore, a trade-off between the error-correction performance and the decoding speed can be achieved with the proposed TA-SRFSC decoding algorithm.

IV-B Multi-stage Decoding

To mitigate the possible error-correction performance loss of the proposed TA-SRFSC decoding, a multi-stage decoding strategy is adopted in which a maximum of two decoding attempts is conducted. In the first decoding attempt, TA-SRFSC decoding is used. If this decoding fails and if there existed a node that underwent hard decision in this first decoding attempt, then a second decoding attempt using SRFSC decoding is conducted. To determine if the TA-SRFSC decoding failed, a cyclic redundancy check (CRC) is concatenated to the polar code and it is verified after the TA-SRFSC decoding. Additionally, the CRC is verified after the second decoding attempt by the SRFSC decoding to determine if the overall decoding process succeeded.

As shown in the next section, most of the received frames are decoded correctly by the proposed TA-SRFSC decoding in the first decoding attempt. As a result, the average decoding latency of the proposed multi-stage SRFSC decoding is very close to that of the TA-SRFSC decoding with CRC bits. However, the error-correction performance of the proposed multi-stage SRFSC decoding algorithm is slightly worse than that of SRFSC decoding due to the addition of CRC bits. As such, multi-stage SRFSC decoding trades off a slight degradation in error-correction performance to obtain a significant reduction in the average decoding latency. It is worth noting that, in order to improve the error-correction performance of the proposed scheme, the proposed multi-stage decoding strategy can be generalized to have more than two decoding attempts. In this scenario the first two attempts are the same as described above, i.e., TA-SRFSC decoding is used first followed by SRFSC decoding.111If the TA-SRFSC decoder does not satisfy the CRC, the SRFSC decoding either should start from the beginning or from the first node which underwent the hard decision. In this paper, the SRFSC decoder starts decoding from the first bit to avoid storing intermediate LLRs. The third and any subsequent attempts would use increasingly more powerful decoding techniques than the first two, such as SCL decoding.

V Decoding Latency

In this section, the decoding latency of the proposed fast decoders is analyzed under two cases: 1) with no resource limitation and 2) with hardware resource constraints. The former is to facilitate the comparisons with the works that do not consider hardware implementation constraints [19, 20], and the latter is for comparison with those that do [12, 18, 23].

V-A No Resource Limitation

In this case, the same assumptions as in [1, 19] are used and the decoding latency is measured in terms of the number of required time steps. More specifically: 1) there is no resource limitation so that all the parallelizable instructions are performed in one time step, 2) bit operations are carried out instantaneously, 3) addition/subtraction of real numbers and check-node operation consume one time step, and 4) Wagner decoding can be performed in one time step.

V-A1 SRFSC and TA-SRFSC

For any node that has no special structure, e.g. a parent node of an SR node, and that satisfies (33), the threshold comparison in (19) is performed in parallel with the calculation of the LLR values of its left child node. Therefore, the proposed hard decision scheme does not affect the latency requirements for the nodes that undergo hard decision.

The number of time steps required for the decoding of the SR node is calculated according to Algorithm 1. In Step 1, the calculation of the LLR values for the source node requires one time step if . If , then the LLR values of the source node are available immediately. Thus, the required number of time steps for Step 1 is

| (35) |

The time step requirement of Step 2 depends on the source node type. If , the time step requirement of Step 2 is the time step requirement of the Rate-C node. If or Rate-1, then there is no latency overhead in Step 2. If and in accordance with Section III-B, can be estimated in two time steps if the leftmost node is a REP node (one time step for performing the check-node operation and one time step for adding the LLR values). Also, Wagner decoding can be performed in parallel with the estimation of assuming or . As such, at most two time steps are required for parity check and bit flipping of the EG-PC node. The required number of time steps for Step 2 is

| (36) |

Step 3 consumes two time steps using an adder tree and a comparison tree if and no time steps otherwise. Thus, the number of required time steps for Step 3 is

| (37) |

Since path selection in Step 3 can be executed in parallel with the decoding of source node in Step 2 and the following function calculation, the total number of time steps required to decode an SR node can be expressed as

| (38) |

where indicates that at least one time step in can be reduced by parallelizing Step 3 and the function calculation. Therefore, is a variable that is dependent on its parameters. However, with a given polar code, the total number of time steps required for the decoding of the polar code using SRFSC decoding is fixed, regardless of the channel conditions.

V-A2 Multi-stage SRFSC

Let and denote the average decoding latency of the proposed TA-SRFSC decoding and the SRFSC decoding using CRC bits, respectively. The average decoding latency of multi-stage SRFSC decoding, , is

| (39) |

where indicates the probability that TA-SRFSC decoding fails and there is at least one node that undergoes hard decision. Note that is less than or equal to the probability that the output of TA-SRFSC decoding fails the CRC verification, which can be approximated by . The approximation is due to the fact that the undetected error probability of CRC is negligible when its length is long enough [38]. In accordance with Observation 2, the average decoding latency requirement for the proposed multi-stage SRFSC decoding is

| (40) |

Since the decoding latency of SRFSC decoding is fixed, the average decoding latency and the worst case decoding latency of SRFSC decoding are equivalent. The worst case decoding latency of the proposed TA-SRFSC decoding can be calculated when none of the nodes in the decoding tree undergo hard decision. This occurs when the channel has a high level of noise. Thus, the worst case decoding latency of the proposed TA-SRFSC decoding is equivalent to the decoding latency of the SRFSC decoding. Moreover, when the channel is too noisy, , because almost none of the nodes undergo hard decision. Thus, the worst case decoding latency of the proposed multi-stage SRFSC decoding is equivalent to the worst case decoding latency of TA-SRFSC decoding using a CRC, which is the latency of SRFSC decoding with a CRC.

V-B With Hardware Resource Constraints

In this case, the decoding latency is measured in terms of the number of clock cycles in the actual hardware implementation. Contrary to Section V-A, a time step may take more than one clock cycle in a realistic hardware implementation. First, the idea of a semi-parallel decoder in [39] is adopted in which at most processing elements can run at the same time. Thus, parallel function or function operations on more than processing elements require more than one clock cycle. Second, the adder tree used for the calculation of LLR values in step 1 of Algorithm 1, and the compare-and-select (CS) tree used by the Wagner decoder to find the index of the least reliable input bit in step 2 of Algorithm 1, both require pipeline registers to reduce the critical path delay and improve the operating frequency and the overall throughput. The addition of these pipeline registers increases the number of decoding clock cycles. The exact number depends on the implementation and the given polar code.

VI Results and Comparison

In this section, the average decoding latency and the error-correction performance of the proposed decoding algorithms are analyzed and compared with state-of-the-art fast SC decoding algorithms. To derive the results, polar codes of length , which are adopted in the 5G standard [40], are used. To do a fair comparison, for baseline decoding algorithms without hardware implementation, the latency is calculated under the assumptions in Section V-A. Otherwise, the hardware implementation results are reported.

To simulate the effect of on the error-correction performance and the latency of the proposed decoding algorithms, the assumption in [32] is adopted and three values of are selected. In accordance with (32), for , for , and for . According to (30), with the increasing of , decreases. At the same time, fewer nodes undergo hard decision and the decoding latency increases. To get a good trade-off between error-correction performance and latency, we set for , for , and for . Consequently, for , for , and for in accordance with (33). Using these values, the threshold defined in (31) and the BLER upper bound for the TA-SRFSC decoding in (34) can be calculated for different values of .

| SR | General | ||||||||

| Total | Total | ||||||||

| 1 | 2 | 4 | 8 | 16 | |||||

| 128 | 1 | 0 | 2 | 1 | 0 | 4 | 3 | ||

| 4 | 3 | 1 | 0 | 0 | 8 | 7 | |||

| 8 | 2 | 0 | 0 | 0 | 10 | 9 | |||

| 512 | 12 | 2 | 2 | 1 | 0 | 17 | 16 | ||

| 15 | 5 | 2 | 0 | 1 | 23 | 22 | |||

| 13 | 5 | 1 | 0 | 1 | 20 | 19 | |||

| 1024 | 17 | 6 | 2 | 2 | 1 | 28 | 27 | ||

| 25 | 8 | 2 | 3 | 1 | 39 | 38 | |||

| 29 | 8 | 2 | 1 | 0 | 40 | 39 | |||

| Length | ||||||

| 1 | 2 | 4 | 8 | 16 | ||

| 128 | 2 | 2 | 0 | 0 | 0 | |

| 0 | 1 | 1 | 0 | 0 | ||

| 2 | 0 | 0 | 0 | 0 | ||

| 512 | 7 | 3 | 0 | 0 | 0 | |

| 4 | 1 | 2 | 0 | 0 | ||

| 3 | 1 | 0 | 0 | 0 | ||

| 1 | 0 | 0 | 0 | 0 | ||

| 0 | 0 | 0 | 0 | 1 | ||

| 1024 | 10 | 6 | 0 | 0 | 0 | |

| 7 | 1 | 2 | 0 | 0 | ||

| 4 | 0 | 0 | 3 | 0 | ||

| 2 | 1 | 0 | 0 | 1 | ||

| 2 | 0 | 0 | 0 | 0 |

Table III reports the number of SR nodes with different , the total number of SR nodes, and the total number of general nodes with no special structure, at different code lengths and rates. It can be seen that when the code length is , , and , the codes with rate have the largest proportion of nodes with , respectively. This in turn results in more latency savings because a higher degree of parallelism can be exploited with these nodes. Thanks to the binary tree structure and the fact that SR nodes are always present at an intermediate level of the decoding tree, the number of traversed general nodes with no special structure equals the total number of SR nodes minus one, since they are the parent nodes of SR nodes. Table III shows the length of SR nodes with different at different code lengths when . The length of the SR nodes in the decoding tree corresponds to the level in the decoding tree that they are located. SR nodes with larger that are located on a higher level of the decoding tree contribute more in the overall latency reduction.

Table IV reports the number of time steps required to decode polar codes of lengths and rates with the proposed SRFSC decoding algorithm, and compares it with the required number of time steps of the decoders in [12, 18, 19, 20, 23]. Note that the decoder in [23] has the minimum required time steps amongst all the previous works considered in Table IV. Three versions of the proposed SRFSC decoder are considered in Table IV: the SRFSC decoder that only utilizes SR nodes; the SRFSC decoder that considers SR nodes and P-0SPC nodes that is adopted in all other baseline decoders in Table IV; and the SRFSC decoder that uses SR nodes, P-0SPC nodes, and the operation mergers and G-F. It can be seen that the SRFSC decoder requires fewer time steps with respect to other decoders, except for the case of and , which has a frequent occurrence of REP-SPC nodes that provides an advantage for [23]. This is due to the fact that the REP-SPC decoder in other works decodes REP and SPC nodes in parallel, consuming only a single time step, while two time steps are needed to decode the REP-SPC node in the proposed SRFSC decoder.

| [12] | [18] | [19] | [20] | [23] | SRFSC | SRFSC | SRFSC | ||

| 128 | 25 | 23 | 24 | 23 | 10 | 13 | 12 | 10 | |

| 33 | 31 | 24 | 24 | 21 | 25 | 24 | 20 | ||

| 22 | 22 | 22 | 22 | 16 | 29 | 25 | 20 | ||

| 512 | 73 | 70 | 63 | 63 | 42 | 57 | 52 | 40 | |

| 87 | 83 | 77 | 77 | 56 | 72 | 66 | 52 | ||

| 79 | 73 | 64 | 64 | 50 | 63 | 56 | 45 | ||

| 1024 | 122 | 115 | 110 | 108 | 68 | 92 | 85 | 66 | |

| 156 | 144 | 138 | 138 | 94 | 127 | 115 | 90 | ||

| 138 | 132 | 116 | 116 | 89 | 123 | 111 | 86 |

Table V compares the required number of clock cycles and the maximum operating frequency in the hardware implementation of different decoders for a polar code with , , and for the proposed SRFSC decoding algorithm (taken from [41]) and the decoders in [12, 22, 23, 18]. All FPGA results are for an Altera Stratix IV EP4SGX530KH40C2 FPGA device and all ASIC results use TSMC 65nm CMOS technology. The required number of clock cycles for the proposed SRFSC decoder can be further reduced if we consider node-branch merging and branch operation merging similar to [23]. We have verified that the introduction of P-RSPC node to the proposed SRFSC decoder lengthens the critical path of the decoder, but the merging of branch operations, and G-F, does not have an effect on the operating frequency. Thus, an enhanced SRFSC decoder with merging branch operations and G-F is implemented and denoted as SRFSC∗ in Table V. It can be seen that the SRFSC∗ decoder requires a smaller number of clock cycles compared to other decoders and the reduction with respect to [23] is , , and at , respectively. Moreover, the SRFSC decoder and the SRFSC∗ decoder both have the highest maximum operating frequency when implemented on the FPGA. Compared with the works that reported results for FPGA implementations, our decoders achieve an improvement of at least .

| clock cycles | |||||

| FPGA | ASIC | ||||

| SRFSC | 186 | 222 | 200 | 109.6 | — |

| 155 | 191 | 166 | 109.6 | — | |

| [23] | 168 | 198 | 181 | — | 430 |

| [22] | 189 | 214 | 185 | 89.6 | 420 |

| [18] | 221 | 252 | 216 | — | 450 |

| [12] | 225 | 270 | 225 | 99.8 | 450 |

Fig. 6 shows the BLER performance of different decoding algorithms when and , for different values of energy per bit to noise power spectral density ratio (). For each value of , the BLER of TA-SRFSC decoding is depicted together with the upper bound calculated in Observation 2. It can be seen that the introduction of the TA scheme results in BLER performance loss for the proposed TA-SRFSC decoding with respect to SC and SRFSC decoding, especially at higher values of . The simulations confirm that the BLER curves of TA-SRFSC decoding fall below their respective upper bounds. It can also be seen that, as the value increases beyond a specific point, the BLER performance of the TA-SRFSC decoding degrades. This is because of the difference in the performance of hard-decision and soft-decision decoding. In accordance with (33), more nodes undergo hard decision decoding for larger values of . Therefore, while the channel conditions improve, the hard decision decoding introduces errors that reduce the error-correction performance gain associated with these large values. As a result, the BLER performance degrades after a certain value of . This phenomenon exists as long as there are nodes that can undergo hard decision decoding. After all the nodes are decoded using hard decision, the BLER performance improves again as increases.

The proposed multi-stage SRFSC decoder is implemented using three different CRC lengths that are adopted in 5G to identify whether the decoding succeeded or failed: the CRC of length (CRC) with generator polynomial , the CRC of length (CRC) with generator polynomial , and the CRC of length (CRC) with generator polynomial . For all values of , the proposed multi-stage SRFSC decoding results in almost the same BLER performance. Therefore, only the curve with is plotted in Fig. 6 for the multi-stage SRFSC decoding. CRC provides a better error-correction performance compared to CRC and CRC, especially at high values of , due to high undetected probability of error for short CRC lengths. Hence, CRC is selected for the polar code of length in this paper. It can be seen that the multi-stage SRFSC decoding with CRC demonstrates a slight performance loss compared to the conventional SC and SRFSC decoders as a result of adding extra CRC bits. For polar codes of other lengths and rates, a similar trend can also be observed when comparing the BLER performance of different schemes.

Fig. 7 presents the average decoding latency in terms of the required number of time steps for the proposed TA-SRFSC decoding and multi-stage decoding with CRC. In particular, it compares them with the latency of SRFSC decoding and the decoder in [32] at different values of when and . It can be seen that the required number of time steps for the proposed TA-SRFSC decoding decreases as increases and is reduced by for , by for , and by for , compared to SRFSC decoding at dB. In addition, the proposed multi-stage SRFSC decoding reduces the required number of time steps by for , by for , and by for , with respect to SRFSC decoding at dB. The required number of time steps for the proposed multi-stage SRFSC decoding outperforms the method in [32] with by for at dB while providing a significantly better BLER performance. Fig. 7 also presents the approximate upper bound derived in (40). It can be seen in the figure that the upper bound in (40) becomes tighter as increases.

Fig. 8 compares the average number of threshold comparisons in (19) for the proposed TA-SRFSC decoder with , and the decoder in [32] with for the 5G polar code of length and rate . It can be seen that the proposed TA-SRFSC decoder shows significant benefits with respect to the decoder of [32] in terms of the average number of threshold comparisons. The TA-SRFSC decoder with provides at least reduction with respect to [32] with while having a lower decoding latency. This means the decoder in [32] executes many unnecessary threshold comparison operations, while TA-SRFSC decoding only makes hard decisions when a node satisfies the condition in Equation (33).

VII Conclusion

In this work, a new sequence repetition (SR) node is identified in the successive-cancellation (SC) decoding tree of polar codes and an SR node-based fast SC (SRFSC) decoder is proposed. In addition, to speed up the decoding of nodes with no specific structure, the SRFSC decoder is combined with a threshold-based hard-decision-aided (TA) scheme and a multi-stage decoding strategy. We show that this method further reduces the decoding latency at high SNRs. In particular, hardware implementation results on an FPGA for a polar code of length with code rates , , and show that the proposed SRFSC decoder with merging branch operations requires up to fewer clock cycles and achieves higher maximum operating frequency compared to state-of-the-art decoders. In addition, the proposed TA-SRFSC decoding reduces the average decoding latency by with respect to SRFSC decoding at dB on a polar code of length and rate . This average latency saving is particularly important in real-time applications such as video. Future work includes the design of a fast SC list decoder using SR nodes.

Appendix A Proof of Proposition 1

Let denote the identity matrix for . Since the source node is the rightmost node in an SR node, the function calculation in (3) can be used as

Using the identity with , , , and results in

which can be written as

where the identity is used. Repeating the above procedures results in

Thus for ,

Appendix B Explanation of Observation 1

To explain Observation 1, a lemma is first introduced as follows.

Lemma 1. Under the Gaussian approximation assumption in (14), for any node whose bits undergo a hard decision in (19), assuming all prior bits are decoded correctly, the probability of correct decoding can be calculated as .

Proof:

In accordance with Fig. 5, for any node , considering all the previous bits are decoded correctly, the probability that the -th bit () in the node undergoes a hard decision is . Moreover, The probability of a correct hard decision for the -th bit in the node is , regardless of the value of . Thus, the conditional probability that a hard decision on the -th bit is correct given that the -th bit undergoes a hard decision is . Since the LLR values of bits in a node are independent of each other, the conditional probability that hard decisions on all the bits of node are correct given that all its bits undergo hard decisions can be calculated as . ∎

To have a probability of correct decoding of at least for all the nodes that undergo hard decision in a polar code of length , any such node is assumed to have the probability of correct decoding of at least . Therefore and by using the result in Lemma 1, we have

| (41) |

which is equivalent to

| (42) |

If , then , , and . When , (42) can be written as

| (43) |

which requires

| (44) |

If , then , , and . Thus (42) can be written as

| (45) |

If (44) holds and by using the fact that , then

| (46) |

Thus , which always holds based on the initial assumption. Therefore, it suffices that

| (47) |

and (44) to ensure (42). In other words, assuming all previous bits are decoded correctly, the probability that any node that undergo hard decision (19) in the decoding process is decoded correctly is lower bounded by if (44) and (47) are satisfied.

Appendix C Explanation of Observation 2

Note that based on Observation 1, any node that is decoded using (19) has a probability of correct hard decision of approximately greater than or equal to . For any node that undergoes the SRFSC decoding, the probability of correct decoding is determined by the error rate of SRFSC decoding. Thus, the probability of correct decoding for TA-SRFSC decoding is approximately greater than or equal to . Consequently, .

References

- [1] E. Arıkan, “Channel polarization: A method for constructing capacity-achieving codes for symmetric binary-input memoryless channels,” IEEE Trans. Inf. Theory, vol. 55, no. 7, pp. 3051–3073, Jul. 2009.

- [2] 3GPP, “Final report of 3GPP TSG RAN WG1 #87 v1.0.0,” Reno, USA, Nov. 2016. [Online]. Available: http://www.3gpp.org/ftp/tsg_ran/WG1_RL1/TSGR1_87.

- [3] K. Niu, K. Chen, J. Lin, and Q. Zhang, “Polar codes: Primary concepts and practical decoding algorithms,” IEEE Commun. Mag., vol. 52, no. 7, pp. 192–203, 2014.

- [4] C. Zhang, B. Yuan, and K. K. Parhi, “Reduced-latency SC polar decoder architectures,” in Proc. IEEE Int. Conf. Commun. (ICC), Jun. 2012, pp. 3471–3475.

- [5] B. Yuan and K. K. Parhi, “Low-latency successive-cancellation polar decoder architectures using 2-bit decoding,” IEEE Trans. Circ. Syst. I: Regul., vol. 61, no. 4, pp. 1241–1254, April. 2014.

- [6] A. Mishra, A. J. Raymond, L. G. Amaru, G. Sarkis, C. Leroux, P. Meinerzhagen, A. Burg, and W. J. Gross, “A successive cancellation decoder ASIC for a 1024-bit polar code in 180nm cmos,” in Proc. Asian Solid-State Circuits Conf., Nov. 2012, pp. 205–208.

- [7] B. Yuan and K. K. Parhi, “Reduced-latency LLR-based SC list decoder for polar codes,” in Proc. 25th Ed. Great Lakes Symp. VLSI, May. 2015, pp. 107–110.

- [8] ——, “Low-latency successive-cancellation list decoders for polar codes with multibit decision,” IEEE Trans. VLSI Syst., vol. 23, no. 10, pp. 2268–2280, Oct. 2015.

- [9] G. Sarkis and W. J. Gross, “Increasing the throughput of polar decoders,” IEEE Commun. Lett., vol. 17, no. 4, pp. 725–728, Apr. 2013.

- [10] C. Husmann, P. C. Nikolaou, and K. Nikitopoulos, “Reduced latency ML polar decoding via multiple sphere-decoding tree searches,” IEEE Trans. Veh. Technol., vol. 67, no. 2, pp. 1835–1839, Feb. 2017.

- [11] A. Alamdar-Yazdi and F. R. Kschischang, “A simplified successive-cancellation decoder for polar codes,” IEEE Commun. Lett., vol. 15, no. 12, pp. 1378–1380, Dec. 2011.

- [12] G. Sarkis, P. Giard, A. Vardy, C. Thibeault, and W. J. Gross, “Fast polar decoders: Algorithm and implementation,” IEEE J. Sel. Areas Commun., vol. 32, no. 5, pp. 946–957, May. 2014.

- [13] P. Giard, G. Sarkis, C. Thibeault, and W. J. Gross, “Multi-mode unrolled architectures for polar decoders,” IEEE Trans. Circ. Syst. I: Regul., vol. 63, no. 9, pp. 1443–1453, Sep. 2016.

- [14] Z. Huang, C. Diao, and M. Chen, “Latency reduced method for modified successive cancellation decoding of polar codes,” Electron. Lett., vol. 48, no. 23, pp. 1505–1506, Nov. 2012.

- [15] A. Balatsoukas-Stimming, G. Karakonstantis, and A. Burg, “Enabling complexity-performance trade-offs for successive cancellation decoding of polar codes,” in Proc. IEEE Int. Symp. Inf. Theory Process. (ISIT), Jun. 2014, pp. 2977–2981.

- [16] L. Zhang, Z. Zhang, X. Wang, C. Zhong, and L. Ping, “Simplified successive-cancellation decoding using information set reselection for polar codes with arbitrary blocklength,” IET Commun., vol. 9, no. 11, pp. 1380–1387, Jul. 2015.

- [17] P. Giard, G. Sarkis, C. Thibeault, and W. J. Gross, “A 638 Mbps low-complexity rate 1/2 polar decoder on FPGAs,” in Proc. IEEE Int. Workshop Signal Process. Syst. (SiPS), Oct. 2015, pp. 1–6.

- [18] P. Giard, A. Balatsoukas-Stimming, G. Sarkis, C. Thibeault, and W. J. Gross, “Fast low-complexity decoders for low-rate polar codes,” J Sign Process Syst, vol. 90, no. 5, pp. 675–685, May. 2018.

- [19] M. Hanif and M. Ardakani, “Fast successive-cancellation decoding of polar codes: identification and decoding of new nodes,” IEEE Commun. Lett., vol. 21, no. 11, pp. 2360–2363, Nov. 2017.

- [20] C. Condo, V. Bioglio, and I. Land, “Generalized fast decoding of polar codes,” in Proc. IEEE Global Commun. Conf. (GLOBECOM), Dec. 2018, pp. 1–6.

- [21] H. Gamage, V. Ranasinghe, N. Rajatheva, and M. Latva-aho, “Low latency decoder for short blocklength polar codes,” arXiv preprint arXiv:1911.03201, 2019.

- [22] F. Ercan, C. Condo, and W. J. Gross, “Reduced-memory high-throughput Fast-SSC polar code decoder architecture,” in Proc. IEEE Int. Workshop Signal Process. Syst. (SiPS), Oct. 2017, pp. 1–6.

- [23] F. Ercan, T. Tonnellier, C. Condo, and W. J. Gross, “Operation merging for hardware implementations of fast polar decoders,” J Sign Process Syst, vol. 91, no. 9, pp. 995–1007, Sep. 2019.

- [24] P. Giard and A. Burg, “Fast-SSC-flip decoding of polar codes,” in Proc. IEEE Wireless Commun. Netw. Conf. Workshops (WCNCW), Apr. 2018, pp. 73–77.

- [25] F. Ercan, T. Tonnellier, and W. J. Gross, “Energy-efficient hardware architectures for fast polar decoders,” IEEE Trans. Circ. Syst. I: Regul., vol. 67, no. 1, pp. 322–335, Jan. 2020.

- [26] I. Tal and A. Vardy, “List decoding of polar codes,” IEEE Trans. Inf. Theory, vol. 61, no. 5, pp. 2213–2226, May. 2015.

- [27] G. Sarkis, P. Giard, A. Vardy, C. Thibeault, and W. J. Gross, “Fast list decoders for polar codes,” IEEE J. Sel. Areas Commun., vol. 34, no. 2, pp. 318–328, Feb. 2016.

- [28] S. A. Hashemi, C. Condo, and W. J. Gross, “A fast polar code list decoder architecture based on sphere decoding,” IEEE Trans. Circ. Syst. I: Regul., vol. 63, no. 12, pp. 2368–2380, Dec. 2016.

- [29] S. A. Hashemi, C. Condo, and W. J. Gross, “Fast and flexible successive-cancellation list decoders for polar codes,” IEEE Trans. Signal Process., vol. 65, no. 21, pp. 5756–5769, Nov. 2017.

- [30] S. A. Hashemi, C. Condo, M. Mondelli, and W. J. Gross, “Rate-flexible fast polar decoders,” IEEE Trans. Signal Process., vol. 67, no. 22, pp. 5689–5701, Nov. 2019.

- [31] M. H. Ardakani, M. Hanif, M. Ardakani, and C. Tellambura, “Fast successive-cancellation-based decoders of polar codes,” IEEE Trans. Commun., vol. 67, no. 7, pp. 4562–4574, Jul. 2019.

- [32] S. Li, Y. Deng, L. Lu, J. Liu, and T. Huang, “A low-latency simplified successive cancellation decoder for polar codes based on node error probability,” IEEE Commun. Lett., vol. 22, no. 12, pp. 2439–2442, Dec. 2018.

- [33] H. Sun, R. Liu, and C. Gao, “A simplified decoding method of polar codes based on hypothesis testing,” IEEE Commun. Lett., pp. 1–1, Jan. 2020.

- [34] C. Leroux, I. Tal, A. Vardy, and W. J. Gross, “Hardware architectures for successive cancellation decoding of polar codes,” in Proc. IEEE Int. Conf. Acoust., Speech Signal Process., May. 2011, pp. 1665–1668.

- [35] P. Trifonov, “Efficient design and decoding of polar codes,” IEEE Trans. Commun., vol. 60, no. 11, pp. 3221–3227, Nov. 2012.

- [36] Z. Zhang and L. Zhang, “A split-reduced successive cancellation list decoder for polar codes,” IEEE J. Sel. Areas Commun., vol. 34, no. 2, pp. 292–302, Feb. 2016.

- [37] R. Silverman and M. Balser, “Coding for constant-data-rate systems,” Trans. IRE Prof. Group Inf. Theory, vol. 4, no. 4, pp. 50–63, Sep. 1954.

- [38] M. El-Khamy, J. Lee, and I. Kang, “Detection analysis of CRC-assisted decoding,” IEEE Commun. Lett., vol. 19, no. 3, pp. 483–486, Mar. 2015.

- [39] C. Leroux, A. J. Raymond, G. Sarkis, and W. J. Gross, “A semi-parallel successive-cancellation decoder for polar codes,” IEEE Trans. Signal Process., vol. 61, no. 2, pp. 289–299, Jan. 2013.

- [40] 3GPP, “3GPP TS RAN 38.212 v1.2.1,” Dec. 2017. [Online]. Available: http://www.3gpp.org/ftp/Specs/archive/38_series/38.212/38212-f30.zip.

- [41] H. Zheng, A. Balatsoukas-Stimming, Z. Cao, and A. M. J. Koonen, “Implementation of a high-throughput Fast-SSC polar decoder with sequence repetition node,” arXiv:2007.11394, in IEEE Int. Workshop Signal Process. Syst. (SIPS), Oct. 2020.