Thomson’s Multitaper Method Revisited

Abstract

Thomson’s multitaper method estimates the power spectrum of a signal from equally spaced samples by averaging tapered periodograms. Discrete prolate spheroidal sequences (DPSS) are used as tapers since they provide excellent protection against spectral leakage. Thomson’s multitaper method is widely used in applications, but most of the existing theory is qualitative or asymptotic. Furthermore, many practitioners use a DPSS bandwidth and number of tapers that are smaller than what the theory suggests is optimal because the computational requirements increase with the number of tapers. We revisit Thomson’s multitaper method from a linear algebra perspective involving subspace projections. This provides additional insight and helps us establish nonasymptotic bounds on some statistical properties of the multitaper spectral estimate, which are similar to existing asymptotic results. We show using tapers instead of the traditional tapers better protects against spectral leakage, especially when the power spectrum has a high dynamic range. Our perspective also allows us to derive an -approximation to the multitaper spectral estimate which can be evaluated on a grid of frequencies using FFTs instead of FFTs. This is useful in problems where many samples are taken, and thus, using many tapers is desirable.

1 Introduction

Perhaps one of the most fundamental problems in digital signal processing is spectral estimation, i.e., estimating the power spectrum of a signal from a window of evenly spaced samples. The simplest solution is the periodogram, which simply takes the squared-magnitude of the discrete time Fourier transform (DTFT) of the samples. Obtaining only a finite number of samples is equivalent to multiplying the signal by a rectangular function before sampling. As a result, the DTFT of the samples is the DTFT of the signal convolved with the DTFT of the rectangular function, which is a slowly-decaying sinc function. Hence, narrow frequency components in the true signal appear more spread out in the periodogram. This phenomenon is known as “spectral leakage”.

The most common approach to mitigating the spectral leakage phenomenon is to multiply the samples by a taper before computing the periodogram. Since multiplying the signal by the taper is equivalant to convolving the DTFT of the signal with the DTFT of the taper, using a taper whose DTFT is highly concentrated around will help mitigate the spectral leakage phenomenon. Numerous kinds of tapers have been proposed [2] which all have DTFTs which are highly concentrated around . The Slepian basis vectors, also known as the discrete prolate spheroidal sequences (DPSSs), are designed such that their DTFTs have a maximal concentration of energy in the frequency band subject to being orthonormal [3]. The first of these Slepian basis vectors have DTFTs which are highly concentrated in the frequency band . Thus, any of the first Slepian basis vectors provides a good choice to use as a taper.

In 1982, David Thomson [4] proposed a multitaper method which computes a tapered periodogram for each of the first Slepian tapers, and then averages these periodograms. Due to the spectral concentration properties of the Slepian tapers, Thomson’s multitaper method also does an excellent job mitigating spectral leakage. Furthermore, by averaging tapered periodograms, Thomson’s multitaper method is more robust than a single tapered periodogram. As such, Thomson’s multitaper method has been used in a wide variety of applications, such as cognitive radio [5, 6, 7, 8, 9], digital audio coding [10, 11], as well as to analyze EEG [12, 13] and other neurological signals [14, 15, 16, 17, 18], climate data [19, 20, 21, 22, 23, 24, 25], breeding calls of Adélie penguins [26] and songs of other birds [27, 28, 29, 30], topography of terrestrial planets [31], solar waves[32], and gravitational waves [33].

The existing theoretical results regarding Thomson’s multitaper method are either qualitative or asymptotic. Here, we provide a brief summary of these results. See [4, 34, 35, 8, 36, 37] for additional details. Suppose that the power spectral density of the signal is “slowly varying”. Let denote the multitaper spectral estimate. Then, the following results are known.

-

•

The estimate is approximately unbiased, i.e., .

-

•

The variance is roughly .

-

•

For any two frequencies that are at least apart, and are approximately uncorrelated.

-

•

The multitaper spectral estimate has a concentration behavior about its mean which is similar to a scaled chi-squared random variable with degrees of freedom.

-

•

If the power spectral density is twice differentiable, then choosing a bandwidth of and using tapers minimizes the mean squared error of the multitaper spectral estimate .

These asymptotic results demonstrate that using more than a small constant number of tapers improves the quality of the multitaper spectral estimate. However, using more tapers increases the computational requirements. As a result, many practitioners often use significantly fewer tapers than what is optimal.

The main contributions of this work are as follows:

-

•

We revisit Thomson’s multitaper method from a linear algebra based perspective. Specifically, for each frequency , the multitaper spectral estimate computes the -norm of the projection of the vector of samples onto a -dimensional subspace. The subspace chosen can be viewed as the result of performing principle component analysis on a continuum of sinusoids whose frequency is between and .

-

•

Using this perspective, we establish non-asymptotic bounds on the bias, variance, covariance, and probability tails of the multitaper spectral estimate. These non-asymptotic bounds are comparable to the known asymptotic results which assume that the spectrum is slowly varying. Also, these bounds show that using tapers (instead of the traditional choice of or tapers) provides better protection against spectral leakage, especially in scenarios where the spectrum has a large dynamic range.

-

•

We also use this perspective to demonstrate a fast algorithm for evaluating an -approximation of the multitaper spectral estimate on a grid of equally spaced frequencies. The complexity of this algorithm is while the complexity of evaluating the exact multitaper spectral estimate on a grid of equally spaced frequencies is . Computing the -approximation is faster than the exact multitaper provided tapers are used.

The rest of this work is organized as follows. In Section 2 we formally introduce Thompson’s multitaper spectral estimate, both from the traditional view involving tapered periodograms as well as from a linear algebra view involving projections onto subspaces. In Section 3, we state non-asymptotic bounds regarding the bias, variance, and probability concentration of the multitaper spectral estimate. These bounds are proved in Appendix A. In Section 4, we state our fast algorithm for evaluating the multitaper spectral estimate at a grid of frequencies. The proofs of the approximation error and computational requirements are in Appendix B. In Section 5, we show numerical experiments which demonstrate that using tapers minimizes the impact of spectral leakage on the multitaper spectral estimate, and that our -approximation to the multitaper spectral estimate can be evaluated at a grid of evenly spaced frequencies significantly faster than the exact multitaper spectral estimate. We finally conclude the paper in Section 6.

2 Thomson’s Multitaper Method

2.1 Traditional view

Let , be a stationary, ergodic, zero-mean, Gaussian process. The autocorrelation and power spectral density of are defined by

and

respectively. The goal of spectral estimation is to estimate from the vector of equispaced samples for . 111We use the notation to denote the set . We will also use and in a similar manner.

One of the earliest, and perhaps the simplest estimator of is the periodogram [38, 39]

This estimator can be efficiently evaluated at a grid of evenly spaced frequencies via the FFT. However, the periodogram has high variance and suffers from the spectral leakage phenomenon [40].

A modification to the periodogram is to pick a data taper with , and then weight the samples by the taper as follows

If is small near and , then this “smoothes” the “edges” of the sample window. Note that the expectation of the tapered periodogram is given by a convolution of the true spectrum and the spectral window of the taper,

where

Hence, a good taper will have its spectral window concentrated around so that , i.e., the tapered periodogram will be approximately unbiased.

For a given bandwidth parameter , we can ask “Which taper maximizes the concentration of its spectral window, , in the interval ?” Note that we can write

where is the prolate matrix [41, 42] whose entries are given by

Hence, the taper whose spectral window, , is maximally concentrated in is the eigenvector of corresponding to the largest eigenvalue.

The prolate matrix was first studied extensively by David Slepian [3]. As such, we will refer to the orthonormal eigenvectors of as the Slepian basis vectors, where the corresponding eigenvalues are sorted in descending order. Slepian showed that all the eigenvalues of are distinct and strictly between and . Furthermore, the eigenvalues of exhibit a particular clustering behavior. Specifically, the first slightly less than eigenvalues are very close to , and the last slightly less than eigenvalues are very close to .

While is the taper whose spectral window is maximally concentrated in , any of the Slepian basis vectors for which will also have a high energy concentration in , and thus, make good tapers. Thomson [4] proposed a multitaper spectral estimate by using each of the first Slepian basis vectors as tapers, and taking an average of the resulting tapered periodograms, i.e.,

The expectation of the multitaper spectral estimate satisfies

where

is known as the spectral window of the multitaper spectral estimate. It can be shown that when , the spectral window approximates on . Thus, the multitaper spectral estimate behaves in expectation like a smoothed version of the true spectrum .

It can be shown that if the spectrum is slowly varying around a frequency , then the tapered spectral estimates are approximately uncorrelated, and . Hence, . Thus, Thomson’s multitaper method produces a spectral estimate whose variance is a factor of smaller than the variance of a single tapered periodogram.

As we increase , the width of the spectral window increases, which causes the expectation of the multitaper spectral estimate to be further smoothed. However, increasing also allows us to increase the number of tapers , which reduces the variance of the multitaper spectral estimate. Intuitively, Thomson’s multitaper method introduces a tradeoff between resolution and robustness.

2.2 Linear algebra view

Here we provide an alternate perspective on Thomson’s multitaper method which is based on linear algebra and subspace projections. Suppose that for each frequency , we choose a low-dimensional subspace , and form a spectral estimate by computing , i.e., the energy in the projection of onto the subspace . One simple choice is the one-dimensional subspace where

is a vector of equispaced samples from a complex sinusoid with frequency . For this choice of , we have

which is exactly the classic periodogram.

We can also choose a low-dimensional subspace which minimizes the average representation error of sinusoids with frequency for some small , i.e.,

where the dimension of the subspace is fixed. Using ideas from the Karhunen-Loeve (KL) transform [43], it can be shown that the optimal -dimensional subspace is the span of the top eigenvectors of the covariance matrix

The entries of this covariance matrix are

where again is the prolate matrix. Hence, we can write

where is a unitary matrix which modulates vectors by pointwise multiplying them by the sinusoid . Therefore, the eigenvectors of are the modulated Slepian basis vectors for , and the corresponding eigenvalues are . Hence, we can choose , i.e., the span of the first Slepian basis vectors modulated to the frequency . Since are orthonormal vectors, and is a unitary matrix, are orthonormal vectors. Hence, where , and thus,

Up to a constant scale factor, this is precisely the multitaper spectral estimate. Hence, we can view the the multitaper spectral estimate as the energy in after it is projected onto the -dimensional subspace which best represents the collection of sinusoids .

2.3 Slepian basis eigenvalues

Before we proceed to our main results, we elaborate on the clustering behavior of the Slepian basis eigenvalues, as they are critical to our analysis in the rest of this paper. For any , slightly fewer than eigenvalues lie in , slightly fewer than eigenvalues lie in , and very few eigenvalues lie in the so-called “transition region” . In Figure 1, we demonstrate this phenomenon by plotting the first Slepian basis eigenvalues for and (so ). The first eigenvalues lie in and the last eigenvalues lie in . Only eigenvalues lie in .

Slepian [3] showed that for any fixed and ,

From this result, it is easy to show that for any fixed and ,

In [44], the authors of this paper derive a non-asymptotic bound on the number of Slepian basis eigenvalues in the transition region. For any , , and ,

This shows that the width of the transition region grows logarithmically with respect to both the time-bandwidth product and the tolerance parameter . Note that when and are small, this result is a significant improvement over the prior non-asymptotic bounds in [45], [46], and [47], which scale like , , and respectively. In Section 4, we will exploit the fact that the number of Slepian basis eigenvalues in the transition region grows like to derive a fast algorithm for evaluating at a grid of evenly spaced frequencies.

Furthermore, in [44], the authors of this paper combine the above bound on the width of the transition region with the fact from [45] that to obtain the following lower bound on the first eigenvalues

This shows that as decreases from , the quantity decays exponentially to . In particular, this means that the first Slepian basis tapers have spectral windows which have very little energy outside . However, the spectral windows of the Slepian basis tapers for near will have a significant amount of energy outside . In Section 3, we show that the statistical properties of the multitaper spectral estimate are significantly improved when using tapers instead of the traditional tapers.

3 Statistical Properties and Spectral Leakage

For a given vector of signal samples , using Thomson’s multitaper method for spectral estimation requires selecting two parameters: the half-bandwidth of the Slepian basis tapers and the number of tapers which are used in the multitaper spectral estimate. The selection of these parameters can greatly impact the accuracy of the multitaper spectral estimate.

In some applications, a relatively small number of samples are taken, and the desired frequency resolution for the spectral estimate is , i.e., a small multiple of the fundamental Rayleigh resolution limit. In such cases, many practitioners [14, 17, 19, 20, 22, 25, 28, 29] choose the half-bandwidth parameter such that is between and , and then choose the number of Slepian basis tapers to be between and . However, in applications where a large number of samples are taken, and some loss of resolution is acceptable, choosing a larger half-bandwidth parameter can result in a more accurate spectral estimate. Furthermore, if the power spectral density has a high dynamic range (that is ), we aim to show that choosing tapers for some small (instead of tapers) can provide significantly better protection against spectral leakage.

For all the theorems in this section, we assume that is a vector of samples from a complex Gaussian process whose power spectral density is bounded and integrable. Note that the analogous results for a real Gaussian process would be similar, but slightly more complicated to state. To state our results, define

i.e., the global maximum of the power spectral density, and for each frequency we define:

i.e. the minimum, maximum, average, and root-mean-squared values of the power spectral density over the interval . We also define the quantities

Before we proceed to our results, we make note of the fact that , , , and are all “local” properties of the power spectral density, i.e., they depend only on values of for , whereas is a “global” property. Note that if the power spectral density is “slowly varying” over the interval , then . However, could be several orders of magnitude larger than , , , and if the power spectral density has a high dynamic range.

By using the bound on the Slepian basis eigenvalues from Section 2.3, we can obtain for some suitably small by choosing the number of tapers to be . This choice of guarantees that , i.e., , , and are all small, and thus, the global property of the power spectral density will have a minimal impact on the non-asymptotic results below. In other words, using tapers mitigates the ability for values of the power spectral density at frequencies to impact the estimate . However, if tapers are used, then the quantities , , and could be large enough for the global property of the power spectral density to significantly weaken the non-asymptotic results below. In other words, energy in the power spectral density at frequencies can “leak” into the estimate .

We begin with a bound on the bias of the multitaper spectral estimate under the additional assumption that the power spectral density is twice differentiable. Note this assumption is only used in Theorem 1.

Theorem 1.

For any frequency , if is twice continuously differentiable in , then the bias of the multitaper spectral estimate is bounded by

where

If tapers are used for some small , then this upper bound is slightly larger than , which is similar to the asymptotic results in [4, 35, 48, 36, 37] which state that the bias is roughly . However, if tapers are used, the term could dominate this bound, and the bias could be much larger than the asymptotic result.

If the power spectral density is not twice-differentiable, we can still obtain the following bound on the bias of the multitaper spectral estimate.

Theorem 2.

For any frequency , the bias of the multitaper spectral estimate is bounded by

If tapers are used for some small , then this upper bound is slightly larger than . This guarantees the bias is small when the power spectral density is “slowly varying” over . However, if tapers are used, the term could dominate this bound, and the bias could be much larger than the asymptotic result.

Next, we state our bound on the variance of the multitaper spectral estimate.

Theorem 3.

For any frequency , the variance of the multitaper spectral estimate is bounded by

If tapers are used for some small , then this upper bound is slightly larger than , which is similar to the asymptotic results in [4, 35, 48, 36, 37] which state that the variance is roughly . However, if tapers are used, the term could dominate this bound, and the variance could be much larger than the asymptotic result.

We also note that if the frequencies are more than apart, then the multitaper spectral estimates at those frequencies have a very low covariance.

Theorem 4.

For any frequencies such that , the covariance of the multitaper spectral estimates at those frequencies is bounded by

If tapers are used for some small , then the covariance is guaranteed to be small. However, if tapers are used, the upper bound is no longer guaranteed to be small, and the covariance could be large.

Finally, we also provide a concentration result for the multitaper spectral estimate.

Theorem 5.

For any frequency , the multitaper spectral estimate satisfies the concentration inequalities

and

where the frequency dependent constant satisfies

We note that these are identical to the concentration bounds for a chi-squared random variable with degrees of freedom. If tapers are used for some small and the power spectral density is “slowly varying” over , then this lower bound on is slightly less than . Hence, has a concentration behavior that is similar to a chi-squared random variable with degrees of freedom, as the asymptotic results in [4, 34] suggest. However, if tapers are used, then could be much smaller, and thus, the multitaper spectral estimate would have significantly worse concentration about its mean.

The proofs of Theorems 1-5 are given in Appendix A. In Section 5, we perform simulations demonstrating that using tapers results in a multitaper spectral estimate that is vulnerable to spectral leakage, whereas using tapers for a suitably small significantly reduces the impact of spectral leakage on the multitaper spectral estimate.

4 Fast Algorithms

Given a vector of samples , evaluating the multitaper spectral estimate at a grid of evenly spaced frequencies (where we assume ) can be done in operations and using memory via length- fast Fourier transforms (FFTs). In applications where the number of samples is small, the number of tapers used is usually a small constant, and so, the computational requirements are a small constant factor more than that of an FFT. However in many applications, using a large number of tapers is desirable, but the computational requirements make this impractical. As mentioned in Section 1, if the power spectrum is twice-differentiable, then the MSE of the multitaper spectral estimate is minimized when the bandwidth parameter is and tapers are used [36]. For medium to large scale problems, precomputing and storing tapers and/or performing FFTs may be impractical.

In this section, we present an -approximation to the multitaper spectral estimate which requires operations and memory. This is faster than the exact multitaper spectral estimation provided the number of tapers satisfies .

To construct this approximation, we first fix a tolerance parameter , and suppose that the number of tapers, , is chosen such that and . Note that this is a very mild assumption as it only forces to be slightly less than . Next, we partition the indices into four sets:

and define the approximate estimator

where

Both and are weighted sums of the single taper estimates for . Additionally, it can be shown that the weights are similar, i.e., the first weights are exactly or approximately , and the last weights are exactly or approximately . Hence, it is reasonable to expect that . The following theorem shows that is indeed the case.

Theorem 6.

The approximate multitaper spectral estimate defined above satisfies

Furthermore, we show in Lemma 8 that . This formula doesn’t involve any of the Slepian tapers. By exploiting the fact that the prolate matrix is Toeplitz, we also show in Lemma 8 that if , then

where is a matrix which zero-pads length- vectors to length-, is a length- FFT matrix, is the first column of the matrix formed by extending the prolate matrix to an circulant matrix, denotes the pointwise magnitude-squared, and denotes a pointwise multiplication. Hence, can be evaluated at a grid of evenly spaced frequencies in operations via three length- FFTs/inverse FFTs. Evaluating the other terms in the expression for at the grid frequencies can be done in operations via length- FFTs. Using these results, we establish the following theorem which states how quickly can be evaluated at the grid frequencies.

Theorem 7.

For any vector of samples and any number of grid frequencies , the approximate multitaper spectral estimate can be evaluated at the grid frequencies in operations and using memory.

5 Simulations

In this section, we show simulations to demonstrate three observations. (1) Using tapers instead of the traditional choice of tapers significantly reduce the effects of spectral leakage. (2) Using a larger bandwidth , and thus, more tapers can produce a more robust spectral estimate. (3) As the number of samples and the number of tapers grows, our approximation becomes significantly faster to use than the exact multitaper spectral estimate .

5.1 Spectral leakage

First, we demonstrate that choosing tapers instead of the traditional choice of tapers significantly reduces the effects of spectral leakage. We fix a signal length of , a bandwidth parameter of (so ) and consider four choices for the number of tapers: , , , and . Note that is the traditional choice as to how many tapers to use, while , , and are the largest values of such that is at least , , and respectively.

In Figure 2, we show three plots of the spectral window

of the multitaper spectral estimate for each of those values of . At the top of Figure 2, we plot over the entire range using a logarithmic scale. The lines outside appear thick due to the highly oscillatory behavior of . This can be better seen in the middle of Figure 2, where we plot over using a logarithmic scale. The behavior of inside can be better seen at the bottom of Figure 2, where we plot over using a linear scale.

All four spectral windows have similar behavior in that is small outside and large near . However, outside of the spectral windows using tapers are multiple orders of magnitude smaller than the spectral window using tapers. Hence, the amount of spectral leakage can be reduced by multiple orders of magnitude by trimming the number of tapers used from to .

We further demonstrate the importance of using tapers to reduce spectral leakage by showing a signal detection example. We generate a vector of samples of a Gaussian random process with a power spectral density function of

This simulates an antenna receiving signals from four narrowband sources with some background noise. Note that one source is significantly stronger than the other three sources. In Figure 3, we plot:

-

•

the periodogram of ,

-

•

the multitaper spectral estimate of with and tapers,

-

•

the multitaper spectral estimate of with and tapers (chosen so ).

We note that all three spectral estimates yield large values in the frequency band . However, in the periodogram and the multitaper spectral estimate with tapers, the energy in the frequency band “leaks” into the frequency bands occupied by the smaller three sources. As a result, the smaller three sources are hard to detect using the periodogram or the multitaper spectral estimate with tapers. However, all four sources are clearly visible when looking at the multitaper spectral estimate with tapers. For frequencies not within of the edges of the frequency band, the multitaper spectral estimate is within a small constant factor of the true power spectral density.

5.2 Comparison of spectral estimation methods

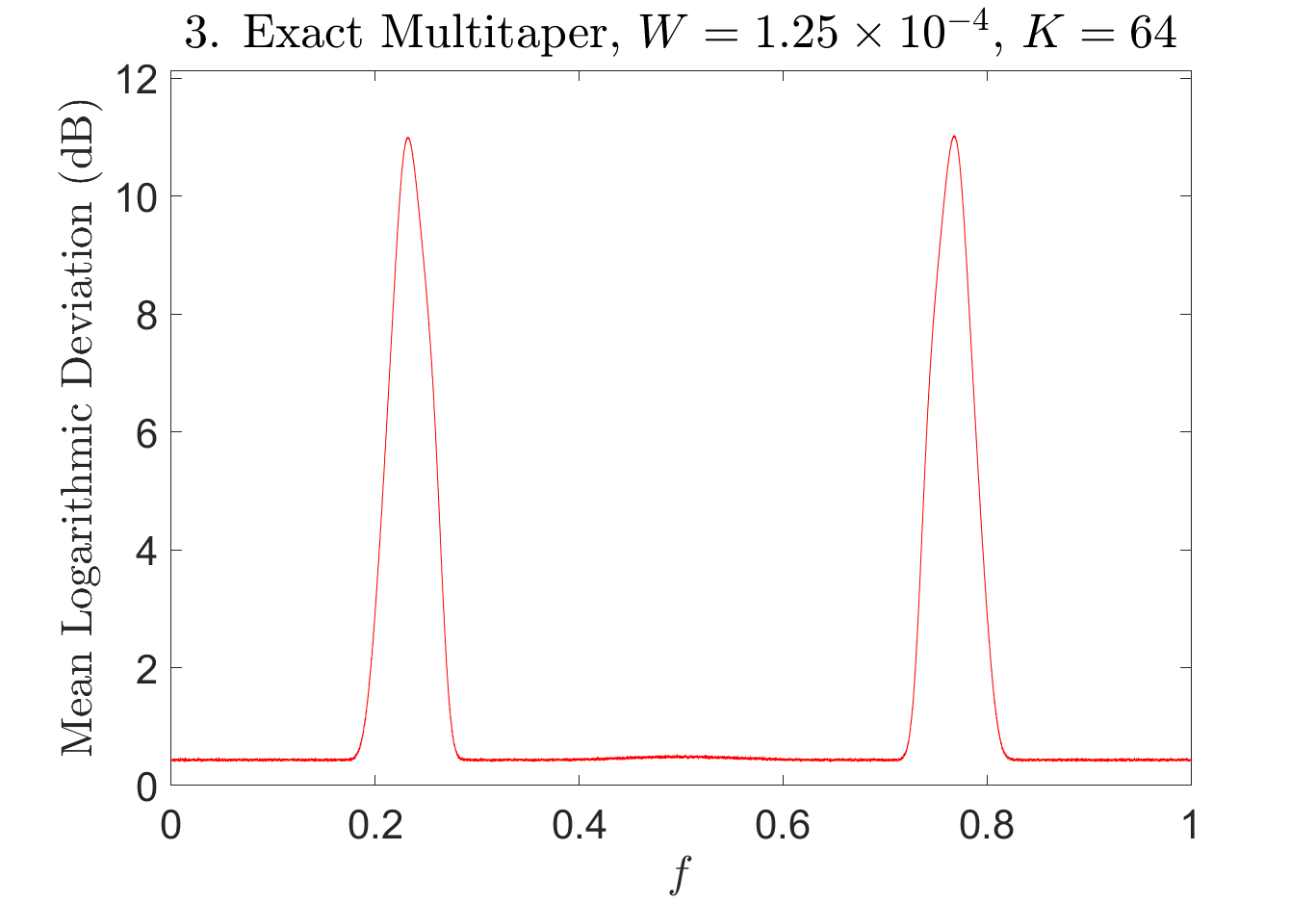

Next, we demonstrate a few key points about selecting the bandwidth parameter and the number of tapers used in the multitaper spectral estimate. We compare the following eight methods to estimate the power spectrum of a Gaussian random process from a vector of samples:

-

1.

The classic periodogram

-

2.

A tapered periodogram using a single DPSS taper with (chosen such that )

-

3.

The exact multitaper spectral estimate with a small bandwidth parameter of and tapers

-

4.

The exact multitaper spectral estimate with a small bandwidth parameter of and tapers (chosen such that )

-

5.

The approximate multitaper spectral estimate with a larger bandwidth parameter of , tapers, and a tolerance parameter of

-

6.

The approximate multitaper spectral estimate with a larger bandwidth parameter of , tapers (chosen such that ), and a tolerance parameter of

-

7.

The exact multitaper spectral estimate with the adaptive weighting scheme suggested by Thomson[4] with a small bandwidth parameter of and tapers

-

8.

The exact multitaper spectral estimate with the adaptive weighting scheme suggested by Thomson[4] with a larger bandwidth parameter of and tapers

The adaptive weighting scheme computes the single taper periodograms for , and then forms a weighted estimate

where the frequency dependent weights satisfy

where . Of course, solving for the weights directly is difficult, so this method requires initializing the weights and alternating between updating the estimate and updating the weights . This weighting procedure is designed to keep all the weights large at frequencies where is large and reduce the weights of the last few tapers at frequencies where is small. Effectively, this allows the spectrum to be estimated with more tapers at frequencies where is large while simultaneously reducing the spectral leakage from the last few tapers at frequencies where is small. The cost is the increased computation time due to setting the weights iteratively. For more details regarding this adaptive scheme, see [8].

In Figure 4, we plot the power spectrum and the eight estimates for a single realization of the Gaussian random process. Additionally, for realizations , of the Gaussian random process, we compute a spectral estimate using each of the above eight methods. In Figure 5, we plot the empirical mean logarithmic deviation in dB, i.e.,

for each of the eight methods. In Table 1, we list the average time needed to precompute the DPSS tapers, the average time to compute the spectral estimate after the tapers are computed, and the average of the empirical mean logarithmic deviation in dB across the frequency spectrum.

We make the following observations:

-

•

The periodogram and the single taper periodogram (methods 1 and 2) are too noisy to be useful spectral estimates.

-

•

Methods 3, 4, and 7 yield a noticeably noisier spectral estimate than methods 5, 6, and 8. This is due to the fact that methods 5, 6, and 8 use a larger bandwidth parameter and more tapers.

-

•

The spectral estimates obtained with methods 1, 3 and 5 suffer from spectral leakage, i.e., the error is large at frequencies where is small, as can be seen in Figure 5. This is due to the fact that they use tapers, and thus, include tapered periodograms for which is not very close to .

-

•

Methods 4 and 6 avoid using tapered periodograms for which and methods 7 and 8 use these tapered periodograms but assign a low weight to them at frequencies where is small. Hence, methods 4, 6, 7, and 8 are able to mitigate the spectral leakage phenomenon.

-

•

Methods 5 and 6 are slightly faster than methods 3 and 4 due to the fact that our approximate multitaper spectral estimate only needs to compute tapers and tapered periodograms.

-

•

Method 7 takes noticeably longer than methods 3 and 4, and method 8 takes considerably longer than methods 5 and 6. This is because the iterative method for computing the adaptive weights requires many iterations to converge when the underlying power spectral density has a high dynamic range.

-

•

Methods 6 and 8 exhibit very similar performance. This is to be expected, as using a weighted average of tapered periodograms is similar to using the unweighted average of the first tapered periodograms. The empirical mean logarithmic deviation is larger at frequencies where is rapidly varying and smaller at frequencies where is slowly varying. This is to be expected as the local bias (caused due to the smoothing effect of the tapers) dominates the variance at these frequencies.

| Method | Avg MLD (dB) | Avg Precomputation Time (sec) | Avg Run Time (sec) |

|---|---|---|---|

| 1 | 5.83030 | N/A | 0.0111 |

| 2 | 4.41177 | 0.1144 | 0.0111 |

| 3 | 1.48562 | 4.3893 | 0.3771 |

| 4 | 0.47836 | 3.6274 | 0.3093 |

| 5 | 1.56164 | 3.3303 | 0.2561 |

| 6 | 0.12534 | 3.3594 | 0.2533 |

| 7 | 0.45080 | 4.3721 | 1.4566 |

| 8 | 0.12532 | 78.9168 | 17.2709 |

From this, we can draw several conclusions. First, by slightly trimming the number of tapers from to , one can significantly mitigate the amount of spectral leakage in the spectral estimate. Second, using a larger bandwidth parameter and averaging over more tapered periodograms will result in a less noisy spectral estimate. Third, our fast approximate multitaper spectral estimate can yield a more accurate estimate in the same amount of time as an exact multitaper spectral estimate because our fast approximation needs to compute significantly fewer tapers and tapered periodograms. Fourth, a multitaper spectral estimate with tapers and adaptive weights can yield a slightly lower error than a multitaper spectral estimate with tapers and fixed weights, but as increases, this effect becomes minimal and not worth the increased computational cost.

5.3 Fast multitaper spectral estimation

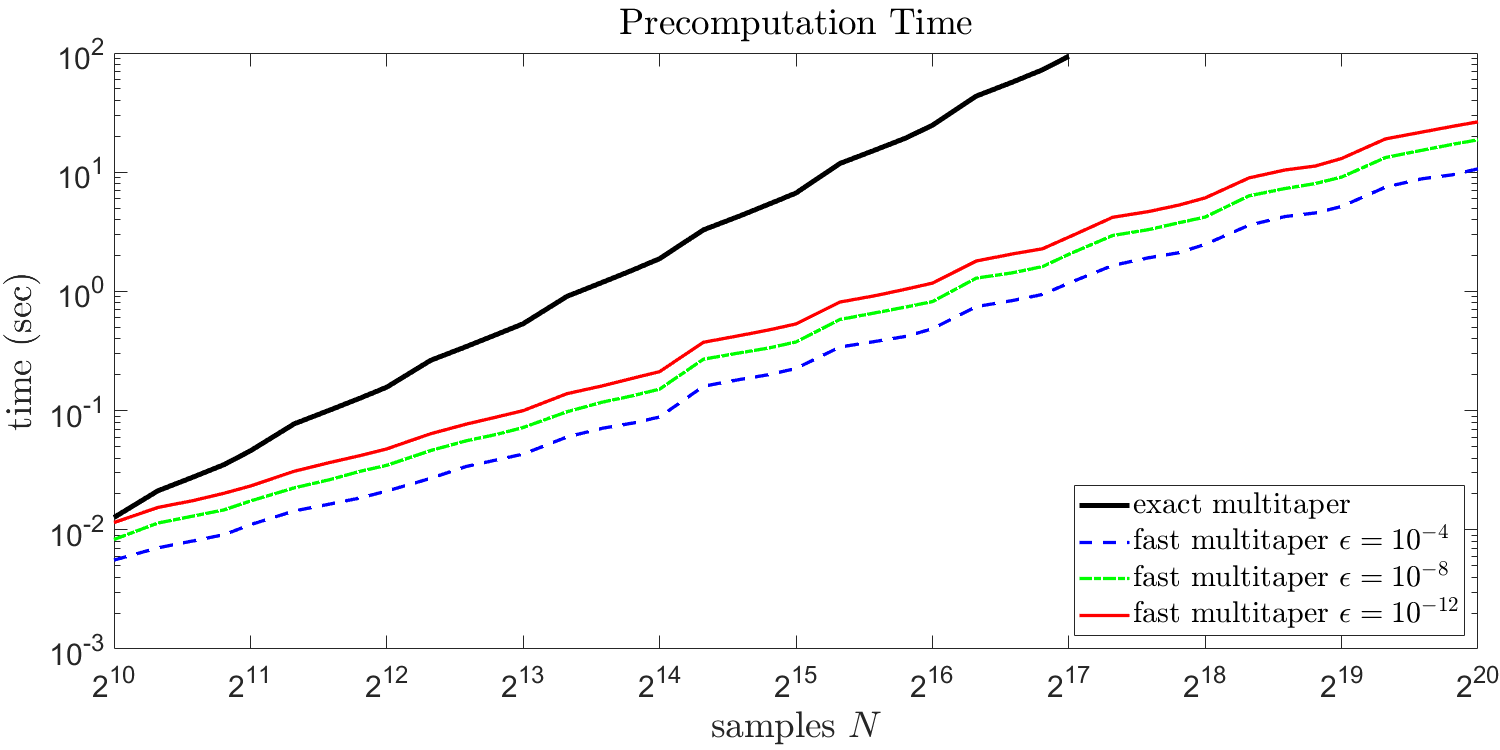

Finally, we demonstrate that the runtime for computing the approximate multitaper spectral estimate scales roughly linearly with the number of samples. We vary the signal length over several values between and . For each value of , we set the bandwidth parameter to be as this is similar to what is asymptotically optimal for a twice differentiable spectrum [36]. We then choose a number of tapers such that , and then compute the approximate multitaper spectral estimate at for each of the tolerances , , and . If , we also compute the exact multitaper spectral estimate at . For , we did not evaluate the exact multitaper spectral estimate due to the excessive computational requirements.

We split the work needed to produce the spectral estimate into a precomputation stage and a computation stage. The precomputation stage involves computing the Slepian tapers which are required for the spectral estimate. In applications where a multitaper spectral estimate needs to be computed for several signals (using the same parameters ), computing the tapers only needs to be done once. The exact multitaper spectral estimate requires computing for , while the approximate multitaper spectral estimate requires computing for . The computation stage involves evaluating or at with the required tapers already computed.

In Figures 6 and 7, we plot the precomputation and computation time respectively versus the signal length for the exact multitaper spectral estimate as well as the approximate multitaper spectral estimate for , , and . The times were averaged over trials of the procedure described above. The plots are on a log-log scale. We note that the precomputation and computation times for the approximate multitaper spectral estimate grow roughly linearly with while the precomputation and computation times for the exact multitaper spectral estimate grow significantly faster. Also, the precomputation and computation times for the approximate multitaper spectral estimate with the very small tolerance are only two to three times more than those for the larger tolerance .

6 Conclusions

Thomson’s multitaper method is a widely-used tool for spectral estimation. In this paper, we have presented a linear algebra based perspective of Thomson’s multitaper method. Specifically, the multitaper spectral estimate can be viewed as the -norm energy of the projection of the signal onto a -dimensional subspace . The subspace is chosen to be the optimal -dimensional subspace for representing sinusoids with frequencies between and .

We have also provided non-asymptotic bounds on the bias, variance, covariance, and probability tails of the multitaper spectral estimate. In particular, if the multitaper spectral estimate uses tapers, the effects of spectral leakage are mitigated, and our non-asymptotic bounds are very similar to existing asymptotic results. However, when the traditional choice of tapers is used, the non-asymptotic bounds are significantly worse, and our simulations show the multitaper spectral estimate suffers severely from spectral leakage.

Finally, we have derived an -approximation to the multitaper spectral estimate which can be evaluated at a grid of evenly spaced frequencies in operations. Since evaluating the exact multitaper spectral estimate at a grid of evenly spaced frequencies requires operations, our -approximation is faster when tapers are required. In problems involving a large number of samples, minimizing the mean-squared error of the multitaper spectral estimate requires using a large number of tapers, and thus, our -approximation can drastically reduce the required computation.

References

- [1] S. Karnik, J. Romberg, and M. A. Davenport. Fast multitaper spectral estimation. In 2019 13th International conference on Sampling Theory and Applications (SampTA), pages 1–4, Bordeaux, France, July 2019. IEEE.

- [2] J. H. McClellan, R. W. Schafer, and M. A. Yoder. Signal Processing First. Pearson, Upper Saddle River, NJ, 2003.

- [3] D. Slepian. Prolate spheroidal wave functions, Fourier analysis, and uncertainty. V – The discrete case. Bell Sys. Tech. J., 57(5):1371–1430, 1978.

- [4] D. J. Thomson. Spectrum estimation and harmonic analysis. Proc. IEEE, 70(9):1055–1096, September 1982.

- [5] S. Haykin. Cognitive radio: brain-empowered wireless communications. IEEE J. Sel. Areas Commun., 23(2):201–220, February 2005.

- [6] B. Farhang-Boroujeny. Filter Bank Spectrum Sensing for Cognitive Radios. IEEE Trans. Signal Process., 56(5):1801–1811, May 2008.

- [7] B. Farhang-Boroujeny and R. Kempter. Multicarrier communication techniques for spectrum sensing and communication in cognitive radios. IEEE Communications Magazine, 46(4):80–85, April 2008.

- [8] S. Haykin, D. J. Thomson, and J. H. Reed. Spectrum sensing for cognitive radio. Proc. IEEE, 97(5):849–877, May 2009.

- [9] E. Axell, G. Leus, E. Larsson, and H. Poor. Spectrum sensing for cognitive radio : State-of-the-art and recent advances. IEEE Signal Processing Magazine, 29(3):101–116, May 2012.

- [10] K. N. Hamdy, M. Ali, and A. H. Tewfik. Low bit rate high quality audio coding with combined harmonic and wavelet representations. In 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP) Conference Proceedings, volume 2, pages 1045–1048, Atlanta, GA, USA, 1996. IEEE.

- [11] T. Painter and A. Spanias. Perceptual coding of digital audio. Proc. IEEE, 88(4):451–515, April 2000.

- [12] A. Delorme and S. Makeig. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neuroscience Methods, 134(1):9–21, March 2004.

- [13] A. Delorme, T. Sejnowski, and S. Makeig. Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. NeuroImage, 34(4):1443–1449, February 2007.

- [14] R. R. Llinas, U. Ribary, D. Jeanmonod, E. Kronberg, and P. P. Mitra. Thalamocortical dysrhythmia: A neurological and neuropsychiatric syndrome characterized by magnetoencephalography. Proc. Nat. Acad. Sci., 96(26):15222–15227, December 1999.

- [15] B. Pesaran, J. S. Pezaris, M. Sahani, P. P. Mitra, and R. A. Andersen. Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nature Neuroscience, 5(8):805–811, August 2002.

- [16] J. Csicsvari, B. Jamieson, K. D. Wise, and G. Buzsáki. Mechanisms of gamma oscillations in the hippocampus of the behaving rat. Neuron, 37(2):311–322, January 2003.

- [17] P. P. Mitra and B. Pesaran. Analysis of dynamic brain imaging data. Biophysical Journal, 76(2):691–708, February 1999.

- [18] M. W. Jones and M. A. Wilson. Theta rhythms coordinate hippocampal–prefrontal interactions in a spatial memory task. PLoS Biology, 3(12):e402, November 2005.

- [19] G. Bond, W. Showers, M. Cheseby, R. Lotti, P. Almasi, P. de Menocal, P. Priore, H. Cullen, I. Hajdas, and G. Bonani. A pervasive millennial-scale cycle in north atlantic holocene and glacial climates. Science, 278(5341):1257–1266, November 1997.

- [20] M. Ghil, M. R. Allen, M. D. Dettinger, K. Ide, D. Kondrashov, M. E. Mann, A. W. Robertson, A. Saunders, Y. Tian, F. Varadi, and P. Yiou. Advanced spectral methods for climatic time series. Rev. Geophys., 40(1):3–1–3–41, 2002.

- [21] R. Vautard and M. Ghil. Singular spectrum analysis in nonlinear dynamics, with applications to paleoclimatic time series. Physica D: Nonlinear Phenomena, 35(3):395–424, May 1989.

- [22] M. E. Mann and J. M. Lees. Robust estimation of background noise and signal detection in climatic time series. Climatic Change, 33(3):409–445, July 1996.

- [23] S. Minobe. A 50-70 year climatic oscillation over the north pacific and north america. Geophys. Res. Lett., 24(6):683–686, March 1997.

- [24] J. Jouzel, N. I. Barkov, J. M. Barnola, M. Bender, J. Chappellaz, C. Genthon, V. M. Kotlyakov, V. Lipenkov, C. Lorius, J. R. Petit, D. Raynaud, G. Raisbeck, C. Ritz, T. Sowers, M. Stievenard, F. Yiou, and P. Yiou. Extending the Vostok ice-core record of palaeoclimate to the penultimate glacial period. Nature, 364(6436):407–412, July 1993.

- [25] D. J. Thomson. Time series analysis of holocene climate data. Philos. Trans. Roy. Soc. London. Ser. A, Math. and Physical Sci., 330(1615):601–616, 1990.

- [26] D. Brunton, A. Rodrigo, and E. Marks. Ecstatic display calls of the Adélie penguin honestly predict male condition and breeding success. Behaviour, 147(2):165–184, 2010.

- [27] S. Saar and P. P. Mitra. A technique for characterizing the development of rhythms in bird song. PLoS ONE, 3(1):e1461, January 2008.

- [28] M. Hansson-Sandsten, M. Tarka, J. Caissy-Martineau, B. Hansson, and D. Hasselquist. SVD-based classification of bird singing in different time-frequency domains using multitapers. In 2011 19th European Signal Processing Conference, pages 966–970, August 2011. ISSN: 2076-1465.

- [29] A. Leonardo and M. Konishi. Decrystallization of adult birdsong by perturbation of auditory feedback. Nature, 399(6735):466–470, June 1999.

- [30] O. Tchernichovski, F. Nottebohm, C. E. Ho, B. Pesaran, and P. P. Mitra. A procedure for an automated measurement of song similarity. Animal Behaviour, 59(6):1167–1176, June 2000.

- [31] M. A. Wieczorek. Gravity and topography of the terrestrial planets. In G. Schubert, editor, Treatise on Geophysics, pages 165–206. Elsevier, Amsterdam, 2nd edition, January 2007.

- [32] S. G. Claudepierre, S. R. Elkington, and M. Wiltberger. Solar wind driving of magnetospheric ULF waves: Pulsations driven by velocity shear at the magnetopause: ULF waves driven by velocity shear at the magnetopause. J. Geophys. Res.: Space Phys., 113(A5), May 2008.

- [33] B. Allen and J. D. Romano. Detecting a stochastic background of gravitational radiation: Signal processing strategies and sensitivities. Phys. Rev. D, 59(10):102001, March 1999.

- [34] D. B. Percival and A. T. Walden. Spectral analysis for physical applications: multitaper and conventional univariate techniques. Cambridge University Press, Cambridge ; New York, NY, USA, 1993.

- [35] A. T. Walden. A unified view of multitaper multivariate spectral estimation. Biometrika, 87(4):767–788, December 2000.

- [36] L. D. Abreu and J. L. Romero. MSE estimates for multitaper spectral estimation and off-grid compressive sensing. IEEE Trans. Inf. Theory, 63(12):7770–7776, December 2017.

- [37] C. L. Haley and M. Anitescu. Optimal bandwidth for multitaper spectrum estimation. IEEE Sig. Proc. Lett., 24(11):1696–1700, November 2017.

- [38] G. G. Stokes. On a method of detecting inequalities of unknown periods in a series of observations. Proc. Roy. Soc. London, 29:122–123, 1879.

- [39] A. Schuster. On the investigation of hidden periodicities with application to a supposed 26 day period of meteorological phenomena. Terr. Magnetism, 3(1):13–41, 1898.

- [40] F. J. Harris. On the use of windows for harmonic analysis with the discrete Fourier transform. Proc. IEEE, 66(1):51–83, 1978.

- [41] J. M. Varah. The prolate matrix. Linear Algebra and its Applications, 187:269–278, July 1993.

- [42] A. W. Bojanczyk, R. P. Brent, F. R. De Hoog, and D. R. Sweet. On the stability of the Bareiss and related Toeplitz factorization algorithms. SIAM J. Matrix Anal. Appl., 16(1):40–57, January 1995.

- [43] H. Stark and J. W. Woods. Probability, random processes, and estimation theory for engineers. Prentice-Hall, Englewood Cliffs, N.J, 1986.

- [44] S. Karnik, J. Romberg, and M. A. Davenport. Improved bounds for the eigenvalues of prolate spheroidal wave functions and discrete prolate spheroidal sequences. arXiv:2006.00427 [math], September 2020. arXiv: 2006.00427.

- [45] Z. Zhu and M. B. Wakin. Approximating sampled sinusoids and multiband signals using multiband modulated DPSS dictionaries. J. Fourier Anal. Appl., 23(6):1263–1310, December 2017.

- [46] M. Boulsane, N. Bourguiba, and A. Karoui. Discrete prolate spheroidal wave functions: Further spectral analysis and some related applications. Journal of Scientific Computing, 82(3):1–19, February 2020.

- [47] S. Karnik, Z. Zhu, M. B. Wakin, J. Romberg, and M. A. Davenport. The fast Slepian transform. Applied and Computational Harmonic Analysis, 46(3):624–652, May 2019.

- [48] K. S. Lii and M. Rosenblatt. Prolate spheroidal spectral estimates. Statist. & Probability. Lett., 78(11):1339–1348, August 2008.

- [49] P. H. M. Janssen and P. Stoica. On the expectation of the product of four matrix-valued Gaussian random variables. IEEE Trans. Autom. Control, 33(9):867–870, September 1988.

- [50] S. Janson. Tail bounds for sums of geometric and exponential variables. Statist. & Probability. Lett., 135:1–6, April 2018.

- [51] D. L. Hanson and F. T. Wright. A bound on tail probabilities for quadratic forms in independent random variables. Ann. Math. Statist., 42(3):1079–1083, June 1971.

- [52] F. T. Wright. A bound on tail probabilities for quadratic forms in independent random variables whose distributions are not necessarily symmetric. Ann. Probability, 1(6):1068–1070, December 1973.

- [53] D. M. Gruenbacher and D. R. Hummels. A simple algorithm for generating discrete prolate spheroidal sequences. IEEE Trans. Signal Process., 42(11):3276–3278, November 1994.

Appendix A Proof of Results in Section 3

A.1 Norms of Gaussian random variables

In this subsection, we develop results on the -norm of linear combinations of Gaussian random variables, which will be critical to our proofs of the theorems in Section 3.

First, we state a result from [49] regarding the product of four jointly complex Gaussian vectors.

Lemma 1.

It should be noted that [49] proves a more general result for the product of four joint complex Gaussian matrices, but this is a bit harder to state. So we give the result for vectors.

We now prove a result regarding the norm-squared of linear combinations of complex Gaussians.

Lemma 2.

Let for some positive semidefinite and let . Then, we have:

Proof.

The expectation of can be computed as follows

Since the entries of are jointly Gaussian with mean , the entries of and are also jointly Gaussian with mean . Then, by applying Lemma 1 (with and ), we find that

We proceed to evaluate each of these three terms.

Since , we can write where and is the unique positive semidefinite squareroot of . Then, by using the identity for appropriately sized matrices , we get

Since the entries of are i.i.d. , for all indices . (Note that if the entries of were i.i.d. instead of .) Hence, , and thus, . Similarly, , and so, .

Using the cyclic property of the trace operator, linearity of the trace and expectation operators, and the fact that , we find that

Finally, since , we have and . Hence,

Adding these three terms yields,

∎

A.2 Concentration of norms of Gaussian random variables

Next, we state a result from [50] regarding concentration bounds for sums of independent exponential random variables.

Lemma 3.

We now apply this lemma to derive concentration bounds for , where is a vector of Gaussian random variables and is a matrix.

Lemma 4.

Let for some positive semidefinite . Also, let . Then, the random variable satisfies

and

where

Proof.

Since , we can write where and is the unique positive semidefinite squareroot of . Then, using eigendecomposition, we can write where is unitary and . Since is unitary, . Then, we have:

Since , we have that are i.i.d. . Hence, , and are independent. If we apply Lemma 3, the fact that the trace and operator norm are invariant under unitary similarity transforms, and the matrix identities and , we obtain

and

where

∎

A.3 Intermediate results

We continue by presenting a lemma showing that certain matrices can be represented as an integral of a frequency dependent rank- matrix.

Lemma 5.

For any frequency , define a complex sinusoid by for . Then, we have:

where . Furthermore, if is a stationary, ergodic, zero-mean, Gaussian stochastic process with power spectral density , and is a vector of equispaced samples for , then the covariance matrix of can be written as

Proof.

For any , we have

and

We can put these into matrix form as

From this, it follows that

Finally, using the definition of the power spectral density, we have

for . Again, we can put this into matrix form as

∎

Next, we show that the expectation of the multitaper spectral estimate is the convolution of the power spectral density with the spectral window .

Lemma 6.

The expectation of the multitaper spectral estimate can be written as

where

is the spectral window of the multitaper estimate.

Proof.

where the last line follows since is -periodic (by definition) and is -periodic (because is -periodic for all ).

Finally, it’s easy to check that can be written in the following alternate forms:

∎

Lemma 7.

The spectral window defined in Lemma 6, satisfies the following properties:

-

•

for all

-

•

and where

-

•

Proof.

First, the spectral window is an even function since

for all .

As a consequence, we can set and write . Then, the integral of the spectral window over is

where we have used the notation . Similarly, the integral of the spectral window over is

Finally, the spectral window is bounded by

where we have used the facts that (because is orthonormal) and . ∎

A.4 Proof of Theorem 1

Since is twice continuously differentiable, for any , there exists a between and such that

Then, since , , and for all , we can bound the local bias as follows:

Since , we can bound the broadband bias as follows:

A.5 Proof of Theorem 2

Without the assumption that is twice differentiable, we can still obtain a bound on the bias. Using the bounds and along with the integrals and , we can obtain the following upper bound on :

Similarly, we can obtain the following lower bound on :

From the above two bounds, we have

which establishes Theorem 2.

A.6 Proof of Theorem 3

Since where , by Lemma 2, we have

We focus on bounding the Frobenius norm of . To do this, we first split it into two pieces - an integral over and an integral over :

We will proceed by bounding the Frobenius norm of the two pieces above, and then applying the triangle inequality. Since for all , we trivially have

Then, since implies , we have

Obtaining a good bound on the first piece requires a more intricate argument. We define if and if . For convenience, we also extend to such that is -periodic. With this definition, we can write

where the second line holds since , , and are -periodic. Now, for any , the vectors form an orthonormal basis of . Hence, we have

for any choice of coefficients and offset frequency . By applying this formula, along with the triangle inequality and the Cauchy-Shwarz Integral inequality, we obtain

Since is orthonormal, for any Hermitian matrix . Hence,

Finally, by applying the two bounds we’ve derived, we obtain

and thus,

A.7 Proof of Theorem 4

Since where , by Lemma 2, we have

We focus on bounding the Frobenius norm of . To do this, we first split it into three pieces - an integral over , an integral over , and an integral over :

By using the triangle inequality, the identity for vectors , the Cauchy-Shwarz Inequality, and the facts that and , we can bound the Frobenius norm of the first piece by

In a nearly identical manner, we can bound the second piece by

The third piece can also be bounded in a similar manner, but the details are noticeably different, so we show the derivation:

where and .

Finally, by applying the three bounds we’ve derived, we obtain

and thus,

A.8 Proof of Theorem 5

We can get an upper bound on in the Loewner ordering by splitting it into two pieces as done in the proof of Theorem 3, and then bounding each piece:

Then, by using the fact that for PSD matrices and , we can bound,

We can also get a lower bound on by using the formula for from Lemma 6 along with the properties of the spectral window derived in Lemma 7 as follows:

Combining the upper bound on with the lower bound on , yields

Appendix B Proof of Results in Section 4

B.1 Fast algorithm for computing at grid frequencies

To begin developing our fast approximations for , we first show that an eigenvalue weighted sum of tapered spectral estimates can be evaluated at a grid of frequencies where in operations and using memory.

Lemma 8.

For any vector and any integer , the quantity

can be evaluated at the grid frequencies in operations and using memory.

Proof.

Using eigendecomposition, we can write , where

and

For any , we let be a diagonal matrix with diagonal entries

With this definition, can be written as

This gives us a a formula for which does not require computing any of the Slepian tapers. We will now use the fact that is a Toeplitz matrix to efficiently compute for all .

First, note that we can “extend” to a larger circulant matrix, which is diagonalized by a Fourier Transform matrix. Specifically, define a vector of sinc samples by

and let be defined by

It is easy to check that , i.e., the upper-left submatrix of is . Hence, we can write

where

is a zeropadding matrix. Since is a circulant matrix whose first column is , we can write

where is an FFT matrix, i.e.,

Note that with this normalization, the inverse FFT satisfies

as well as the conjugation identity

Next, for any , let be a diagonal matrix with diagonal entries

and for each , let be a cyclic shift matrix, i.e.,

Since and are both diagonal matrices and for , we have

for all . Also, it is easy to check that cyclically shifting each column of by indices is equivalent to modulating the rows of , or more specifically

for all . Additionally, for any vectors , we will denote to be the pointwise multiplication of and , i.e.,

and to be the circluar cross-correlation of and , i.e.,

Note that the circular cross-correlation of and can be computed using FFTs via the formula

We will also use the notation for convenience.

We can now manipulate our formula for as follows

Therefore,

So to compute at all grid frequencies , we only need to compute , which can be done in operations using memory via three length- FFTs/IFFTs and a few pointwise operations on vectors of length . ∎

B.2 Approximations for general multitaper spectral estimates

Next, we present a lemma regarding approximations to spectral estimates which use orthonormal tapers.

Lemma 9.

Let be a vector of equispaced samples, and let be any orthonormal set of tapers in . For each , define a tapered spectral estimate

Also, let and be real coefficients, and then define a multitaper spectral estimate and an approximation by

Then, for any frequency , we have

Proof.

Let , and let , , and be diagonal matrices whose diagonal entries are , , and for . Then,

In a nearly identical manner,

Since is orthonormal, . Since is diagonal, and all the diagonal entries have modulus , . Hence, for any , we can bound

as desired. ∎

B.3 Proof of Theorem 6

Recall that the indices are partitioned as follows:

Using the partitioning above, the unweighted multitaper spectral estimate can be written as

and the approximate estimator can be written as

Thus, and can be written as

where

We now bound for all . For , we have , and thus,

For we have , i.e., . For , we have , and thus,

Hence, for all , and thus by Lemma 9,

for all frequencies .

B.4 Proof of Theorem 7

To evaluate the approximate multitaper estimate

at the grid frequencies one needs to:

-

•

For each , precompute the Slepian basis vectors and eigenvalues .

Computing the Slepian basis vector and the corresponding eigenvalue for a single index can be done in operations and memory via the method described in [53]. This needs to be done for values of , so the total cost of this step is operations and memory.

-

•

For , evaluate .

-

•

For each and each , evaluate .

Evaluating for all can be done by pointwise multiplying and , zeropadding this vector to length , computing a length- FFT, and then computing the squared magnitude of each FFT coefficient. This takes operations and memory. This needs to be done for values of , so the total cost of this step is operations and memory.

-

•

For each , evaluate the weighted sum above for .

Once and for are computed, evaluating requires multiplications and additions. This has to be done for each , so the total cost is operations.

Since , the total cost of evaluating the approximate multitaper estimate at the grid frequencies is operations and memory.