Thompson Sampling for Parameterized Markov Decision Processes with Uninformative Actions

Abstract

We study parameterized MDPs (PMDPs) in which the key parameters of interest are unknown and must be learned using Bayesian inference. One key defining feature of such models is the presence of “uninformative” actions that not provide no information about the unknown parameters. We contribute a set of assumptions for PMDPs under which Thompson sampling guarantees an asymptotically optimal expected regret bound of , which are easily verified for many classes of problems such as queuing, inventory control, and dynamic pricing.

Index Terms:

Bayesian Inference, Exploration–Exploitation, Markov Decision Process, Parameter Uncertainty, Regret Bound, Thompson SamplingI Introduction

Parameterized MDPs (PMDPs) are dynamic control problems described by parameters of interest whose values are typically unknown, arising in many applications such as queuing and supply chain control, maintenance, and network design. Such problems can be naturally formulated as POMDPs, in which a learnt posterior distribution over the model parameters is incorporated directly into the system state [1, 3, 5]. However, the corresponding dynamic programming equations are typically computationally or analytically intractable due to the so-called “curse of dimensionality”. To remedy this, a variety of approaches have been proposed to do approximately optimal control including myopic and lookahead policies [16], least-squares methods [7, 19], non-parametric approaches [10, 8] and reinforcement learning [12]

One particular approach, which we investigate in this work, is Thompson sampling (TS) [27, 26], which samples a parameter according to the current posterior distribution at each time step, and then solves the underlying MDP assuming that the sampled parameter is correct. The decoupling of the posterior update and sample phase from the optimization phase makes Thompson sampling a computationally and analytically tractable alternative for solving general PMDPs, and it has been applied in a variety of problems settings, notably multi-armed bandit problems [2, 25]. However, these models can be classified as purely Bayesian optimization problems since, when stripped of all parameter uncertainty, they are fundamentally single-stage optimization problems. On the other hand, much less is known about the theoretical performance of Thompson sampling for stochastic control problems with parameter uncertainty.

Our work builds upon the stream of literature on Thompson sampling for PMDPs [20, 6, 24, 15, 13]. Specifically, the closest work to ours is [20], which showed that Thompson sampling is asymptotically optimal assuming each action taken reveals some additional information about the unknown parameter(s). On the other hand, many important PMDPs contain “uninformative” actions that do not reveal information about the parameters, e.g. setting lower inventory levels in an inventory control problem may lead to stock-outs [18], preventative maintenance provides less statistical information about failures [21], and so-called “uninformative prices” in dynamic pricing problems [1]. To this end, Gopalan and Mannor [15] showed – under rather general assumptions – that the number of instants where sub-optimal actions are chosen scales logarithmically with time, with high probability. On the other hand, this paper provides a different set of assumptions and analysis, and contributes more precise regret and learning rate bounds for an important class of PMDPs with uninformative actions that hold in expectation. Our work contributes more general assumptions and regret analysis than found in Kim [20], extending the previous optimal regret guarantees for a broader classes of problems with uninformative actions that are of practical interest.

The remainder of the paper is structured as follows. Section II defines the Thompson sampling algorithm in the context of PMDPs. Section III provides a formal statement of the notion of an “uninformative” action, a set of general assumptions on the problem structure, and corresponding optimal regret and learning rate bounds for Thompson sampling. These results are proved in Section IV, and empirically validated on three important classes of problems, namely admission control, inventory control and dynamic pricing.

II Preliminaries

II-A Parameterized Markov Decision Process

Decision-making in this paper be summarized as a parameterized Markov decision process (PMDP), which consists of a finite set of possible states , a finite set of possible actions or controls , and a finite set of parameters (e.g. hypotheses) that specify both the reward and the state distribution. Specifically, given a control applied at time in state , and a parameter , the reward and the next state are sampled according to

We also define the reward function as , which is assumed to be uniformly bounded on .

An admissible policy is defined as a sequence of mappings , where maps the history of information observed up to time , , to a probabilitity distribution over the action space, and be the set of all admissible policies. On the other hand, a Markov policy is a sequence of mappings ; it is further a stationary policy if . Let denote the set of all stationary policies. Given a fixed parameter , the goal is to maximize over all , the long-run expected average reward starting from an initial state , given as

| (1) |

in which the expectation is computed with respect to and are sampled according to . We assume that an optimal policy exists, which must necessarily be a stationary Markov policy. Furthermore, the state process induced by constitutes a time-homogeneous Markov chain, and we assume that

Assumption 1.

The state process induced by is ergodic unichain.

II-B Thompson Sampling

The decision maker accounts for uncertainty in by modeling it as a random variable in each decision epoch. Starting with prior distribution , and given history , the decision maker updates his belief about by computing the posterior distribution using Bayes’ theorem:

| (2) |

where

is the likelihood. Note that is a function of and is therefore a random variable.

The agent selects controls that are consistent with the learned belief by following the Thompson sampling algorithm. Formally, this corresponds to an admissible (e.g. history-dependent) policy defined in Algorithm 1.

Intuitively, the posterior update (2) should only improve upon the decision maker’s belief about when is distinguishable for different values of . To see this, we can restate (2) as

from which we can see that precisely when the ratio of the two likelihoods does not change. Conceptually, the existence of such uninformative state-action pairs can significantly “stall” the posterior updates and prevent the decision maker from learning the correct PMDP parameters. On the other hand, recent analysis of posterior convergence [20] in general PMDPs precludes such scenarios, by imposing rather strong assumptions on the problem structure that do not always hold in practice. In the following section, we will formalize the notion of an uninformative action and provide an alternative and complementary set of assumptions that still provide an asymptotically optimal regret bound.

III Thompson Sampling for PMDPs with Uninformative Actions

III-A Uninformative Actions

In order to assess the convergence speed of more precisely, it is necessary to quantify the magnitude of the posterior update. In particular, we define as the joint probability distribution specified by and the KL-divergence

We require that is absolutely continuous with respect to , so that the above quantity is finite.

Formalizing the above intuition, an action is called informative in state if, for any two distinct ,

Assumption 2.

There exists such that is an informative action in state for all .

Note that Assumption 2 is easy to check in practice since, in the context of Algorithm 1, the policies are typically computed in advance and cached. Furthermore, it naturally partitions the state-action space into two classes. The first class consists of state-action pairs for which is informative in , and is non-empty since it contains for every . The second class is comprised of those state-action pairs for which are not informative with respect to . Thus, Assumption 2 is much weaker than the one required in [20], where it is simply assumed that .

Finally, to ensure that the posterior belief converges at the optimal asymptotic rate, it is necessary that the Markov chain induced by Thompson sampling eventually visits an informative state a positive fraction of the time. One way to guarantee this is to ensure that the induced Markov chain under Thompson sampling mixes with respect to the special state , which is now known to be informative. More formally, define as the set of controls that are informative in state , and the following random variables for every and :

Finally, for each , we define . We then define to be the set of policies for which holds for all , e.g. the set of policies whose controls are consistent with respect to the policy set .

Assumption 3.

There exists a policy , such that the Markov chain induced by is ergodic, and holds (with probability 1) for all and .

One way to interpret Assumptions 2 and 3 is to define another policy class , consisting of all policies for which . Clearly, according to Assumption 2, and by construction. Furthermore, by the design of Thompson sampling (Algorithm 1), we can also conclude that (although ). Finally, Assumption 3 requires that for all , including . These facts are summarized conceptually in Fig. 1. Note that can be any policy in the shaded region for which the induced Markov chain is ergodic. As illustrated later in our examples, this policy also often lies in , but this is not required under our assumptions in general.

In the next section, we will prove that Assumptions 1-3 are sufficient to achieve asymptotically optimal regret bounds for Thompson sampling with uninformative actions. However, these assumptions are not in general necessary. To see this, suppose Assumption 3 is relaxed by defining a secondary state instead of , that need not satisfy Assumption 2. Now, if we further assume that there is a non-zero probability of visiting between successive visits to under any policy in , then states and belong in the same communicating class, and the same convergence guarantees also extend to this setting. This generalization would allow us to establish convergence guarantees for learning the service rate parameters in the examples of the following paragraph.

We now provide several important classes of stochastic control problems that satisfy the required assumptions in this paper. Please note, however, that these examples do not satisfy the stronger assumption on the KL-divergence in [20], and thus the analysis in that paper cannot be applied to them.

Example 1 (Admission Control).

At the beginning of each decision epoch, a decision maker decides whether to open or close a server with a fixed capacity (no backlogging of orders is allowed). A single customer arrives in each period with unknown probability . If the server is open, the customer pays a toll and joins the end of the queue, while if the server is closed, the customer arrival is unobserved. For each customer waiting in the server in each epoch, the decision maker incurs a penalty of . Meanwhile at the end of each period, the first customer in queue completes service and leaves with probability . Opening (closing) the server corresponds to an informative (uninformative) action. It is also easy to check that, if (see, e.g., [23]), the optimal policy satisfies for every , e.g. an empty server should be open, and we set . Finally, let be the policy that always admits a customer unless the server is full. The Markov chain induced by is ergodic, and Assumption 3 holds for any admissible policy that admits when the server is empty. This example can also be generalized to multiple servers, multiple customer types, and general demand or service distributions.

Example 2 (Inventory Management).

A store sells different types of goods. At the beginning of each decision epoch , the store manager observes the amount of each type of good in stock, , and decides whether or not to fully restock the inventories for each type of good up to a level . The delivery time is negligible compared to the length of each decision epoch, and so goods are delivered instantaneously. The wholesale price of a good of type is and it is sold for . Meanwhile, the cost of holding a good of type is per item per period. Furthermore, the demand for each good is modeled as a Poisson random variable with mean , and stock-outs (e.g. demand exceeding the inventory) are unobserved. Since the manager profits from selling every type of good, it is always optimal to reorder inventory when a particular item is out of stock, and so requires that all types of goods are restocked. In this case, the inventory level is guaranteed to be positive at the beginning of each epoch and demand for each type of good is observed. Finally, let be the policy that chooses to restock every type of good in every decision epoch regardless of the inventory level. Since demand is unbounded, the induced Markov chain is clearly ergodic, and Assumption 3 holds for all admissible policies that reorder in state .

Example 3 (Dynamic Pricing).

Customer demand in each period follows a Poisson distribution with parameter . When customers arrive, they observe the firm’s posted price and the number of customers already in queue , and decide whether to join the queue or leave. Given that the value of the service to the customer is , the waiting cost per epoch is , and the service time per customer is geometric with parameter , the customer will only join the queue if (see, e.g., [9, 11]). At the beginning of each decision epoch, the firm fixes a price from the set , where without loss of generality we may assume . We also assume that so the firm can choose to reject customers, and also assume that so the problem is non-trivial. Clearly, the queue has an effective capacity, given by . Similar to the admission control problem, the cost incurred by the firm is per customer in queue per period. Finally, let and consider the policy that always sets the lowest price . The induced Markov chain is ergodic, and Assumption 3 holds for all admissible policies that fix an attractive price when the queue is empty.

III-B Asymptotically Optimal Convergence Rates

The main theoretical result of this paper establishes that Thompson sampling with uninformative actions achieves asymptotically optimal learning rates (as measured by expected posterior probability of sampling the incorrect parameter) and regret. Here, we sketch out the main reasoning necessary to establish both results, and provide formal proofs in the following sections.

First, it is necessary to understand how fast the time-homogeneous Markov chain induced by the policy mixes. In the following section, it will be shown that the Markov chain induced by the policy mixes at an exponential (e.g. geometric) rate, and so Assumption 3 ensures that the special state will be visited at a linear rate asymptotically.

Proposition 1.

For sufficiently small, there exists and such that

By making use of Proposition 1, we then prove that the posterior error converges to zero exponentially fast under our more general assumptions.

Theorem 2.

IV Proofs of Theoretical Results

IV-A Proof of Proposition 1

We first cite the following result.

Proposition 4 ([14]).

Let be a Markov chain taking values in . Suppose there exists a probability measure on , , and integer such that

| (3) |

Let , and define and , and suppose that . Then,

for .

The condition (3) is introduced earlier in [4], in which the authors provide sufficient conditions under which it holds for general Markov chains. In particular, if is discrete, then (3) holds if the -step transition matrix of has a column whose elements are uniformly bounded away from zero. Furthermore, if is finite, then (3) holds if is aperiodic and irreducible.

Let denote the ergodic Markov chain induced by policy specified in Assumption 3. First, Assumption 2 states that is an informative action for every . Since Thompson sampling (randomly) selects one of the optimal policies in each decision epoch, actions selected by Thompson sampling upon each visit to state must also be informative and thus . By replacing the abstract policy with Thompson sampling in Assumption 3, we have for all , or in other words:

which hold for all . Therefore, it remains to bound the rightmost quantity.

To this end, let . By Assumption 3, is time-homogeneous and ergodic, and hence a limiting distribution exists such that as for some . More precisely, for each there exists (dependent on ) such that for , in which case:

Now, choosing any and substituting , we obtain:

which is valid for all where . Finally, we apply Proposition 4 by setting , , and , so that there exists and dependent on and such that

Therefore, by setting , we have found and such that

This completes the proof.

IV-B Proof of Theorem 2

The proof begins in an identical manner to [20] but diverges after the second paragraph. We begin by writing as:

| (4) |

where , and where:

and where the processes and are understood to evolve under Thompson sampling.

We define the processes as

and for convenience, the filtration as the sequence of sigma algebras ., e.g, by concatenating to . We can now separate into a martingale and a predictable process using the Doob Decomposition theorem [22]:

where is an -martingale under by construction, with since .

Since is -measurable, for every , with , we have:

where the constants follow from the definition of an informative action. As a result, is a non-decreasing process with and:

where .

We now take expectation of the posterior in (IV-B):

For , we define the event and condition as follows:

For , we define the event and the scalar . Then:

which yields the lower bound

| (5) |

Next, we apply DeMorgan’s law and the union bound as follows:

| (6) |

Using conditional probability, the summand can be further simplified to

Next, we apply Proposition 1, so there exists (dependent on and ) such that for , and thus

Since for all , is an -martingale with bounded increments, and applying Azuma’s inequality [22] obtains:

which holds for large, small and . Plugging this into (IV-B):

and therefore (5) leads to:

for sufficiently large and some dependent on and . Finally:

for sufficiently large and some . It is easy to find so that holds for all . This completes the proof.

V Numerical Examples

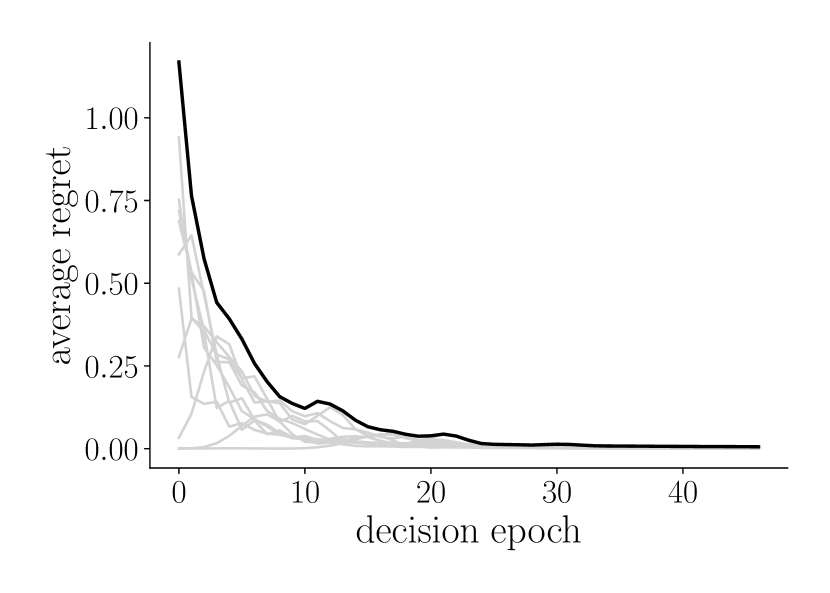

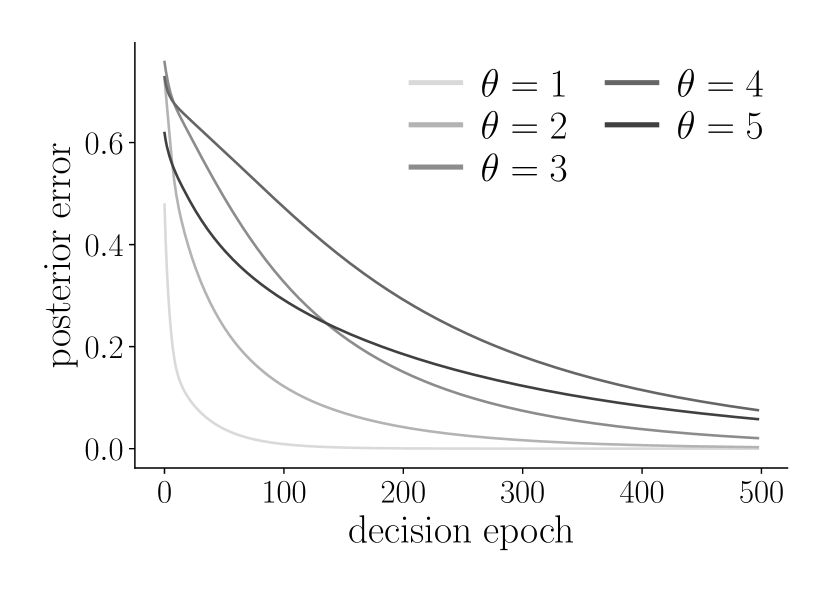

(a)-(c) empirically estimated average regret (top) and posterior error rate for admission control, inventory management and dynamic pricing problems. The dark curve in the topmost figures corresponds to regret of the true unknown parameter value, while the gray curves corresponds to regret of the other parameters. (d) inverse of the empirically estimated regret bounds, showing approximately linear growth for large .

In this section, we apply Thompson sampling (Algorithm 1) to three instantiations of PMDPs with arrival rate uncertainty and uninformative actions given in Section III-A. The goal of these experiments is to verify the asymptotically optimal regret bounds hold when Thompson sampling is applied in the online setting with uninformative actions.

The admission control problem assumes and . The inventory management problem assumes a single type of good and and . Finally, the dynamic pricing problem assumes , prices for , and . The prior is assigned to a uniform distribution for all problems, and relative value iteration is used to calculate for each . Finally, to estimate the learning rate and regret for each problem, Thompson sampling is run for sample paths, and the results across paths are averaged for each decision epoch .

The results are reported in Figure 2(d). As illustrated in plots (a)-(c), the empirical expected regret for the true parameter value (shown in black) tends to zero over decision epochs for all problems. To validate that the rate is indeed , (d) illustrates the corresponding inverse of the empirical regret values, which becomes linear for large and confirms Theorem 3. The analysis was conducted on an Intel quad core processor at 2.5 GHz with 8 GB ram, with an average running time of around seconds per sample path and parameter pair. Due to its low time complexity, Thompson sampling can be easily implemented for larger parameter spaces and longer planning horizons, which converging at the asymptotically optimal rate.

VI Conclusion

We studied parameterized MDPs described by a set of unknown parameters learned using Bayesian inference. A crucial feature of such models was the presence of “uninformative” actions, which do not provide any information about the unknown parameters and slow down the rate of learning. We contribute a set of assumptions for PMDPs under which Thompson sampling guarantees an asymptotically optimal expected regret bound of , which are easily verified for many classes of problems such as queuing, inventory control, and dynamic pricing. Numerical experiments validated the theory and showed that, when our assumptions can be verified, provides a computationally efficient algorithm for solving parameterized MDPs.

References

- Afèche and Ata [2013] Philipp Afèche and Barış Ata. Bayesian dynamic pricing in queueing systems with unknown delay cost characteristics. Manufacturing & Service Operations Management, 15(2):292–304, 2013.

- Agrawal and Goyal [2012] Shipra Agrawal and Navin Goyal. Analysis of thompson sampling for the multi-armed bandit problem. In Conference on learning theory, pages 39–1. JMLR Workshop and Conference Proceedings, 2012.

- Araman and Caldentey [2009] Victor F Araman and René Caldentey. Dynamic pricing for nonperishable products with demand learning. Operations research, 57(5):1169–1188, 2009.

- Asmussen et al. [1992] Søren Asmussen, Peter W Glynn, and Hermann Thorisson. Stationarity detection in the initial transient problem. ACM Transactions on Modeling and Computer Simulation (TOMACS), 2(2):130–157, 1992.

- Aviv and Pazgal [2005] Yossi Aviv and Amit Pazgal. A partially observed markov decision process for dynamic pricing. Management science, 51(9):1400–1416, 2005.

- Banjević and Kim [2019] Dragan Banjević and Michael Jong Kim. Thompson sampling for stochastic control: The continuous parameter case. IEEE Transactions on Automatic Control, 64(10):4137–4152, 2019. doi: 10.1109/TAC.2019.2895253.

- Bertsimas and Perakis [2001] Dimitris J Bertsimas and Georgia Perakis. Dynamic pricing: A learning approach. Massachusetts Institute of Technology, Operations Research Center, 2001.

- Besbes and Zeevi [2012] Omar Besbes and Assaf Zeevi. Blind network revenue management. Operations research, 60(6):1537–1550, 2012.

- Borgs et al. [2014] Christian Borgs, Jennifer T Chayes, Sherwin Doroudi, Mor Harchol-Balter, and Kuang Xu. The optimal admission threshold in observable queues with state dependent pricing. Probability in the Engineering and Informational Sciences, 28(1):101, 2014.

- Burnetas and Smith [2000] Apostolos N Burnetas and Craig E Smith. Adaptive ordering and pricing for perishable products. Operations Research, 48(3):436–443, 2000.

- Chen and Frank [2001] Hong Chen and Murray Z Frank. State dependent pricing with a queue. IIE Transactions, 33(10):847–860, 2001.

- Chowdhury et al. [2021] Sayak Ray Chowdhury, Aditya Gopalan, and Odalric-Ambrym Maillard. Reinforcement learning in parametric mdps with exponential families. In Arindam Banerjee and Kenji Fukumizu, editors, Proceedings of The 24th International Conference on Artificial Intelligence and Statistics, volume 130 of Proceedings of Machine Learning Research, pages 1855–1863. PMLR, 13–15 Apr 2021.

- Ferreira et al. [2018] Kris Johnson Ferreira, David Simchi-Levi, and He Wang. Online network revenue management using thompson sampling. Operations research, 66(6):1586–1602, 2018.

- Glynn and Ormoneit [2002] Peter W Glynn and Dirk Ormoneit. Hoeffding’s inequality for uniformly ergodic markov chains. Statistics & probability letters, 56(2):143–146, 2002.

- Gopalan and Mannor [2015] Aditya Gopalan and Shie Mannor. Thompson sampling for learning parameterized markov decision processes. In Conference on Learning Theory, pages 861–898, 2015.

- Harrison et al. [2012] J Michael Harrison, N Bora Keskin, and Assaf Zeevi. Bayesian dynamic pricing policies: Learning and earning under a binary prior distribution. Management Science, 58(3):570–586, 2012.

- Huang et al. [1976] Cheng-Chi Huang, Dean Isaacson, and B Vinograde. The rate of convergence of certain nonhomogeneous markov chains. Zeitschrift für Wahrscheinlichkeitstheorie und Verwandte Gebiete, 35(2):141–146, 1976.

- Jain et al. [2015] Aditya Jain, Nils Rudi, and Tong Wang. Demand estimation and ordering under censoring: Stock-out timing is (almost) all you need. Operations Research, 63(1):134–150, 2015.

- Keskin and Zeevi [2014] N Bora Keskin and Assaf Zeevi. Dynamic pricing with an unknown demand model: Asymptotically optimal semi-myopic policies. Operations Research, 62(5):1142–1167, 2014.

- Kim [2017] Michael Jong Kim. Thompson sampling for stochastic control: The finite parameter case. IEEE Transactions on Automatic Control, 62(12):6415–6422, 2017.

- Kim and Makis [2012] Michael Jong Kim and Viliam Makis. Optimal control of a partially observable failing system with costly multivariate observations. Stochastic Models, 28(4):584–608, 2012.

- Klenke [2013] A. Klenke. Probability Theory: A Comprehensive Course. Universitext. Springer London, 2013. ISBN 9781447153610.

- Naor [1969] Pinhas Naor. The regulation of queue size by levying tolls. Econometrica: journal of the Econometric Society, pages 15–24, 1969.

- Osband et al. [2013] Ian Osband, Daniel Russo, and Benjamin Van Roy. (more) efficient reinforcement learning via posterior sampling. In Advances in Neural Information Processing Systems, pages 3003–3011, 2013.

- Russo and Van Roy [2014] Daniel Russo and Benjamin Van Roy. Learning to optimize via posterior sampling. Mathematics of Operations Research, 39(4):1221–1243, 2014.

- Russo et al. [2018] Daniel J Russo, Benjamin Van Roy, Abbas Kazerouni, Ian Osband, Zheng Wen, et al. A tutorial on thompson sampling. Foundations and Trends® in Machine Learning, 11(1):1–96, 2018.

- Thompson [1933] William R Thompson. On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika, 25(3/4):285–294, 1933.