The training response law explains how deep neural networks learn

Abstract

Deep neural network is the widely applied technology in this decade. In spite of the fruitful applications, the mechanism behind that is still to be elucidated. We study the learning process with a very simple supervised learning encoding problem. As a result, we found a simple law, in the training response, which describes neural tangent kernel. The response consists of a power law like decay multiplied by a simple response kernel. We can construct a simple mean-field dynamical model with the law, which explains how the network learns. In the learning, the input space is split into sub-spaces along competition between the kernels. With the iterated splits and the aging, the network gets more complexity, but finally loses its plasticity.

I Introduction

Our intelligence or nervous system changes its own shape through our experience. In other words, the nervous system has a dynamics driven by external stimuli. In the field of artificial intelligence, we implement such a dynamical system with artificial neural networks. In a few decades, we actually have an astonishing progress in the performance of the deep neural networks (Ciresan et al., 2012; Krizhevsky et al., 2012; Bengio et al., 2013; LeCun et al., 2015; Schmidhuber., 2015; Goodfellow et al., 2016). In theory, the deep neural networks can approximate any functions in some conditions (K. Hornik, 1989; Liang and Srikant., 2016; Kratsios and Bilokopytov., 2020). If we can optimize the network appropriately, we get the network which captures the relation not only in the training data set but also in unknown ones as the result of generalization (Zhang et al., 2017; Arthur et al., 2018; Lee et al., 2019). In spite of many such applications, our understanding on the mechanism behind the deep neural network is very limited. We study the mechanism of the training with very simple networks here.

In the training, the network parameters are adjusted along the input and output relation in the given data set. In the framework of supervised learning, we usually have a fixed and finite data set, the pairs of input and output, . The network realizes a function, , expected to predict the given output. In general, we optimize the network parameters, , to minimize the loss function, , which evaluates the difference between the predicted value, , and the given output, . This optimization is called as training. In the optimization, we usually rely on the gradient-based method, where the gradient of the loss against the network parameter, , gives the training step. Actually, we can write the step as,

| (1) |

where the parameter, , is a parameter for adjusting the size of each learning step. This is called as a learning rate.

In the optimization, non-gradient-based algorithms, like random search, are usually not adopted. It is known that such algorithms are effective to avoid local optima, but it is believed that we do not have serious problems on the point (Goodfellow et al., 2015). Under such an assumption, we can say gradient-based method is effective enough. We call this optimizing process as learning dynamics.

As the simplest training step, we can consider a pair of data set, . With an optimizing step of the parameters, the predicted value, , is shifted a little, . We should note this shift has the effect on any predictions, . We call the shift, , as a learning response. As the accumulation of the learning responses, the network realizes the generalized solution, if it works well. We can consider on a famous image classification problem, CIFAR10(Alex., 2009). As an example, the problem is convenient for the explanation. In the classification problem, we have dataset with the pairs of an image and a label. Each image includes an object from 10 classes, airplane, car, bird, cat, deer and so on. The label shows the class and the network should give the answer for an input image. When we train a network with an image, , of a car, the learning response, , is expected to have the similar effects on predictions for other images of a car as well. On the contrary, prediction for images without a car should not have similar one.

We know deep neural networks can nicely do that in some ways, but we do not know so much on the process. In a few years, we have intensive studies on the neural tangent kernel theory(Arthur et al., 2018; Lee et al., 2019; Seleznova and Kutyniok, 2021, 2022). The theory says we can solve the learning response in an ideal case, where we have infinite neural units in a layer(Arthur et al., 2018; Lee et al., 2019). In general, deep neural networks consist of layers with the units and the number of layers and units are finite. The theory cannot fully explain the real networks, therefore, but we should note some points here. In the ideal case, the response can be solved and even is constant during the training. On the contrary, the response is not constant with the finite network and it depends on the initial condition as well(Seleznova and Kutyniok, 2021, 2022).

We focus on the finite case as well, but another ideal problem is considered here. Since the real world data, like CIFAR10, is too complex to analyze the data space, we start with a much simpler one. For easier analysis, random problems are convenient, as other problems, e.g. TSP and k-SAT(Schneider and Kirkpatrick., 2006; Percus et al., 2005). We know the structure of the data space and have some techniques, like mean field approximation, in the random cases. By considering the ensemble of such random problems, we expect to get the fundamental understanding independent of a specific dataset instance. As a random problem, we consider random bit encoders, RBE. The input is 1d bit-string and the output is a binary value, 0 or 1. We can generate random problems in the setting and study its statistical features.

In this paper, we show the law of learning response in a simple form. In the form, we can confirm a kind of aging. The response gradually diminishes along the training and varies its specificity to the input, . With the law, we can construct a simple learning dynamics model for the dynamical understanding. It tells us that the dynamics is not a straight forward relaxation to the optimal solution. As we can notice, the prediction often shows back and forth dynamics. Our model gives an explanation for such a complex one. As a typical scenario, we can describe the dynamics as the iterated splitting process of the input space, . The split regions are encoded differently into the output space, . We also discuss on the impact of our findings on the machine learning in general.

II Model

We study the training response of the neural network, , with the loss function, . The network parameter, , should be optimized through the training. In the gradient-based optimization, we can write the update process as the following,

| (2) |

As the training step, , we can use of the gradient,

| (3) |

where the parameter, , is the learning rate. The network is usually trained with training dataset, , at each step. However, we focus on a training response, , for simplicity. Once we understand its behaviour, we should understand the whole training dynamics as the sum of them.

The shift of the prediction, training response, can be described like,

| (4) |

The term, , is called as neural tangent kernel, NTK, and it can be asymptotically solvable if the layer of the neural network has infinite neurons in each layer(Arthur et al., 2018; Lee et al., 2019).

If we can assume the loss function is just the mean squared error, the training response can be written like,

| (5) |

To study the structure of the training response or NTK, we use a very simple neural network with layers,

| (6) |

where each layer is described as a function, . In general, we have input channels and output ones in each layer. We can write the layer function with the input, , and the output, ,

| (7) |

where the function, , is a non-linear one and we call it as activation function. As the function, here, we use ELU or Relu(Clevert et al., 2016). As for the function, , we can use a linear transformation, , or a linear convolution. To reduce the number of parameters, we usually use the convolution and it is called as convolutional neural network(Fukushima., 1980; Zhang., 1988; Zhang. et al., 1990). Here, we use a simple network with convolution layers and fix the number of channels, , for simplicity. Finally, we should output prediction, , and use a fully connected linear transformation with the sigmoidal activation as the last layer.

As the training dataset, we use a pair of 1D bit-string and a binary output. In other words, we want the network to learn the encoding, relation between the bit-string and the output. As a random problem, we can randomly sample a bit-string with the fixed-size, . The output is usually a deterministic value in practical applications, but we assumue an encoding distribution, , and sample a binary value at each training step. This means the distribution can be written like, . To be noted, we can interprete this type of encoding as noised training. If either of one, or , is 0 or 1, it means pure signal without any noise. We call this type of encoding problem as random bit-string encoder, RBE, here.

We firstly study the structure of the training response with the case of single training data point and show a simple form which describes the response. Then the interaction between the training responses is studied with the case of two points.

The simple form of training response tells us that the learning dynamics can be described with very simple mean-field equations in our case, RBE. We also demonstrate the learning dynamics and compare with the simple dynamical model.

III Results

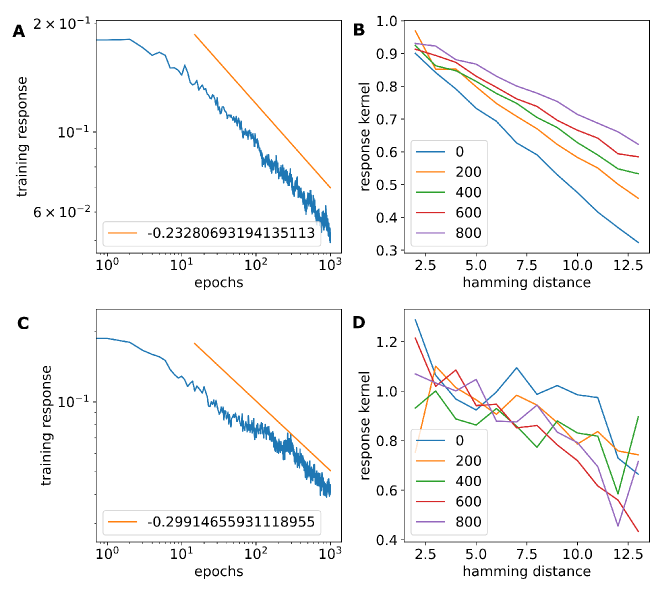

In Fig. 1, we show the averaged response, , along the Hamming distance from the input, . The Hamming distance, , can be described as, . In other words, it means the sum of different bits between the 2 bit-strings.

The network consists of layers and each layer has convolutions filters. Here we set the parameters, and . The learning rate, , is . We used SGD as the optimizer and implemented it with keras (Robbins and Monro., 1951; LeCun et al., 2012; Toulis and Airoldi., 2017; Sutskever et al., 2016; Polyak and Juditsky., 1992; Duchi et al., 2011). We call this as the standard setting in this paper. The averaged response can be expressed with a simple linear kernel and its size. After the initial training phase, the size shows the power law like decay. We can summarize this response in the following,

| (8) |

where K is the response kernel. The dynamics is driven along the epoch, , and the power law term, , means the decay along the training epochs. The kernel is almost linear against the Hamming distance between the training input, , and the affected one, . The slope varies along the training epoch, , depending on the training distribution. If the training is non-biased, , the kernel slope is gradually diminished along the training. On the contrary, the slope is enhanced in the case of biased training, .

We also tested the various conditions, shown in Fig. 2, and the results can be expressed with the same law. We tested the numbers of layers, and , and the number of convolution filters, and , with the standard setting. In addition, we tested one more activation function, Relu (Nair and Hinton., 2010). For all of the cases, the results with non-biased and biased training are shown. We can confirm the same tendency in those results and the simple expression can be applied for the cases as well. The aging, decay in the size of the response, shows clearer power law like behavior with ELU rather than Relu.

As a more complicated response, we show the results with the dropout effect for each convolutional layer, in Fig. 3(Srivastava et al., 2014; Baldi and Sadowski., 2013; Li et al., 2016). The network is the standard one, but the outputs from all of the mid-layers are dropout with the rate, . We should note the slopes of the response kernels are smaller than the cases without dropout. Since the dropout has the effect of coarse graining, the specificity of the response kernels should be reduced and the results are reasonable.

We show the case with multiple training data points for understanding the interaction of the responses, in Fig. 4. As the simplest case, we consider RBE with the two points training distribution, . As the encoding, , we use the non-biased distribution, , and biased one, . We can confirm different aging along the Hamming distances between the inputs, and . This means a training effect for the input, , is not restricted to the training response, , but also to others, like , depending on the distance between the inputs. If the distance between the points is small, the agings are enhanced with each other, but the enhancement is very limited in the distant case. This shows the training with an input, , has a strong effect on the points around that, not only in the prediction, , but also in the training response, , as well. On the other hand, the interaction effect is very limited if the points are distant enough.

As the demonstration of the training response law, we consider another simple situation,

| (9) |

We have two groups of training dataset, and . In the group, , training inputs, , are encoded into and inputs, , are encoded into in the group, .

The short term learning dynamics of the prediction can be written in the following,

| (10) |

We can simplify it with the mean-field approximation under the sparse condition,

| (11) |

where the mean-field densities, and , and averaged predictions, and , are used. The responses would have non-linear interaction with each other in the dense condition, but we can treat it as just the linear summation in this case. The aging effect is ignored in the case of short-term mean-field dynamics, but the equation is not so different except for the time and spatial scale of the dynamics even in the case requiring the original equation. The mean-field model means the dynamics is determined just along the ratio between the densities, and . The loss of majority decreases in the first and the other one decreases later.

We show the corresponding cases with the neural network, in Fig. 5. The network has the standard setting. The training data set consists of 20 pairs, . As the inputs, random bit-strings are generated and those are assigned into or with the given ratio, . In the plot, we show the time series of each prediction, and , with the light color. The bold ones show the average of those dynamics. As we can confirm, all of the lines can be divided into two phases. In the first, all of them go to to the majority side and then the minorities turn its direction into the other side. In other words, the dynamics is determined just along the ratio, .

We also show hierarchically distributed case, in Fig. 6, as an example of a more complicated one. The training data set consists of three groups, , and , and those are hierarchically distributed. All of the input bit-strings have the length, , and those are randomly distributed, but the second and third groups, and , are localized,

| (12) |

where the Hamming distance, , is used. Furthermore, the bit-strings the group, , are localized in a narrower area,

| (13) |

In the fig. 6, the restricted areas have the sizes, and . Among those groups, two ones, and , are encoded as 0 and the other one, is encoded as 1.

In the figure, we show all of the averaged predictions, , in the light color and bold ones show the average of them within the each group. We iterated this for 20 times and the results are shown. Since the encoding majority is 0, all curves show a decrease at the first. In the second, the minor encoding group, , turns its direction, but the last group, , is accompanied with the movement. This is because the group is the majority in the localized groups. Finally, after some epochs, the last one, , gets released from the group, , because the loss of the group, , is reduced enough and the training response, , is diminished as well. In sum, the input space is classified as the same one at the first and the space is split into two classes in the second. The newly split class is split again along its encoding finally.

IV Discussion

In supervised learning, we train a model with the given data. The model is optimized to satisfy the input-output relations in the data and it is generalized in someway. To understand the process, we studied the response function, , and studied its structure with a very simple problem, RBE. As a model network, we studied a very simple convolutional neural network and found a training response law. That consists of a power law like decay and the response kernel. This gives NTK a simple form. It tells us the training dynamics with the finite sized network.

Since our model network is very simple, the response kernel is very simple linear one. However, the kernel can have a more complicated non-linear form, if the network architecture is more sophisticated. In reality, we can show the kernel for the network with drop-out layers, in fig. 3. It shows broader peak around the input, . We believe the response kernel analysis is effective for more complicated networks, in the similar way.

We know NTK can be analytically solved with the central limit theorem, if each layer has infinite units (Lee et al., 2019). It has no dependence on its dynamic state. On the contrary, we can confirm aging in its dynamics, but the form is still kept simple, in our cases. Since our network is finite one, the parameter fluctuates toward the area with less training response, driven by kinesis (Kendeigh., 1961). The shape of response kernel is very similar with NTK of infinite linear network, but it shows some dependence on the dynamics. In the short term, we can derive much simpler dynamical model with mean-field approximation. We can easily understand how the network learns with our simple situation, RBE. Initially the whole input space is encoded into the majority. After the initial encoding, minority clusters turn over its encoding. In other words, the input space is roughly split into the majority and minorities. This type of space split can happen in an iterated manner. Finally, we get to an optimal split, if the network has enough degree of freedom.

Our view tells us how deep neural networks can get to generalized and non-overfit solutions. The network is optimized to minimize the loss, which shows the error only for the training data set. In other words, we have no explicit loss for inputs in general. Rather, all we can know is the loss for training data set. We hope the generalization is achieved in a implicit manner. The training response shows the implicit effect. Since the training is usually done with a lot of training data points, the generalization can be seen as a result of the competition between the training responses of them.

As we noticed, the dynamics is similar with that of kernel machines (Shawe-Taylor and Cristianini., 2004; Koutroumbas and Theodoridis., 2008; Hofmann et al., 2007). In it, we optimize the linear network,

| (14) |

where the activation function, , outputs the prediction along the network parameter, and . In this model, the vector of kernel similarity, or , constitutes the training input. Since this network is very simple linear one, the response kernel would be a linear one, as we shown. In the kernel machine, we need sophisticated design of the kernel similarity, therefore. On the other hand, in the case of deep neural networks, we do not need such a sophisticated kernel design. Instead, the network architecture would realize it as a response kernel and the training dynamics. As we shown, the dynamics is not so complex, if we can ignore the aging or interaction of the kernels. However, it may be much more complex, if those effects cannot be ignored.

In our results, the response kernels are shown with non-deterministic encoding, and , but we already tested the case with deterministic encoding, in Fig. 7. Especially, the aging of the response size shows a clear power law. However, it was hard to confirm the convergence of the response kernels. In this deterministic case, the aging exponent is larger than non-deterministic ones. The kernels seem to be divergent as the training proceeds. The diverged kernel would have no significant effects on the dynamics therefore. Rather, the dynamics would be determined with mainly non-diverged one.

We studied a simple problem, RBE, but the same results can be observed in a real problem. We tested the training response with a known dataset, MNISTDeng (2012). We want to classify images of hand-written digit, , along their digits. To focus on the training response against such real images, we trained the same type of convolutional network with a pair of randomly sampled MNIST image and encoding distribution, , in the similar way with the RBE. The results are shown in FIG. 8 and 9. We can confirm both the power law decay and the distance dependent response kernel. The kernel is not so clear linear one but monotonically decreasing one. To be noted, we can observe some isolated responses at the same time. This suggests a chaotic phase depending on the initial condition(Seleznova and Kutyniok, 2021, 2022). On the contrary, the aging seems to be more stable, but we need more tests to have a clear conclusion.

In our problem setting, RBE, we originally intended to analyse the thermal equilibrium under a noised training. In other words, we expected to describe the training dynamics as a quasistatic process, which can be characterized with the noise ratio. However, we unexpectedly found the aging process instead of the equilibrium. As we discussed, the training dynamics should be directed to zero-response state against the training stimuli. That is because the residence time should be maximized under such a stochastic dynamics. We guess the network is redundant enough to realize not only the input output relation, but also such a no response state.

We studied the non-linear complex dynamics of the neural network with the simple situation. As we know, such a complex system often has a very simple description in spite of the complexity in its appearance (Bar-yam., 1999; Newman., 2011). As one more example of compex system, we found the simple law in the neural network. This type of simplicity suggests the universality of the mechanism in the variety of complex phenomena. We may find the same type of algorithmic aging in the nervous system or complex dynamical networks in general (Haken, 1988). As other studies on complex systems, the algorithmic matters should be fruitful subjects to promote understanding of complexity in general (Schneider and Kirkpatrick., 2006).

Appendix

The training responses for the deterministic encoding, and , are shown in the Fig. 7. The network is the standard one. We show the results for the activation functions, ELU and Relu. In the size, we can confirm the power law, but the kernels do not show convergence. Since the network has the sigmoid function as the activation function for the output, convergence in the output does not mean that of network parameters. This result suggests the fragility of the response kernel and the prediction in the end.

As an realistic example, we show the training response of the same type of network, which is trained with MNIST(Deng, 2012). As the encoding distribution, we used the ratio, . In the FIG. 8, the aging is shown and we can observe a power law decay. In this case, the network has 2 dimensional convolution along the input image size. The activation function is ELU and the other network parameters are the same as the standard one. In the FIG. 9, the response kernels are shown. The horizontal axis is the mean distance between two images, . In other words, the plot shows the response kernel, . After the training of 500 epochs, we randomly sampled 1000 from 60000 images and plotted the response.

Acknowledgements.

This work was originally started from the discussions with M. Takano and H. Kohashiguchi and partially supported by PS/PJ-ETR-JP.References

- Ciresan et al. (2012) U. Ciresan, D. Meier, and J. Schmidhuber., CVPR pp. 3642–3649 (2012).

- Krizhevsky et al. (2012) I. Krizhevsky, A. Sutskever, and G. Hinton., NIPS pp. 1097–1105 (2012).

- Bengio et al. (2013) A. Bengio, Y. Courville, and P. Vincent., IEEE Transactions on Pattern Analysis and Machine Intelligence 35(8), 1798 (2013).

- LeCun et al. (2015) Y. LeCun, Y. Bengio, and G. Hinton., nature 521, 436 (2015).

- Schmidhuber. (2015) J. Schmidhuber., Neural Networks 61, 85 (2015).

- Goodfellow et al. (2016) I. Goodfellow, Y. Bengio, and A. Couville., Deep Learning (MIT Press, 2016).

- K. Hornik (1989) H. W. K. Hornik, M. Stinchcombe, Neural Networks 2, 359 (1989).

- Liang and Srikant. (2016) S. Liang and R. Srikant., ICLR 13 (2016).

- Kratsios and Bilokopytov. (2020) A. Kratsios and E. Bilokopytov., Advances in Neural Information Processing Systems 33, 10635 (2020).

- Zhang et al. (2017) C. Zhang, S. Bengio, M. Hardt, B. Recht, and O. Vinyals., ICLR (2017).

- Arthur et al. (2018) J. Arthur, F. Gabriel, and C. Hongler., Advances in neural information processing systems (2018).

- Lee et al. (2019) J. Lee, L. Xiao, S. Schoenholz, Y. Bahri, R. Novak, J. Sohl-Dickstein, and J. Pennington., Advances in neural information processing systems (2019).

- Goodfellow et al. (2015) I. J. Goodfellow, O. Vinyals, and A. M. Saxe., ICLR (2015).

- Alex. (2009) K. Alex., Tech. Rep. Univ. of Toronto (2009).

- Seleznova and Kutyniok (2021) M. Seleznova and G. Kutyniok, Proc. of Machine Learning Research 145, 1 (2021).

- Seleznova and Kutyniok (2022) M. Seleznova and G. Kutyniok, arXiv:2202.00553 (2022).

- Schneider and Kirkpatrick. (2006) J. Schneider and S. Kirkpatrick., Stochastic Optimization (Springer, 2006).

- Percus et al. (2005) A. Percus, G. Istrate, and C. Moore., Computational Complexity and Statistical Physics (Oxford University Press, 2005).

- Clevert et al. (2016) D. Clevert, T. Unterthiner, and S. Hochreiter., ICLR (2016).

- Fukushima. (1980) K. Fukushima., Biological Cybernetics 36(4), 193 (1980).

- Zhang. (1988) W. Zhang., Proceedings of Annual Conference of the Japan Society of Applied Physics (1988).

- Zhang. et al. (1990) W. Zhang., K. Itoh., J. Tanida., and Y. Ichioka., Applied Optics 29(32), 4790 (1990).

- Robbins and Monro. (1951) H. Robbins and S. Monro., Annals. of Mathematical Statistics 22(3), 400 (1951).

- LeCun et al. (2012) Y. LeCun, L. Bottou, G. Orr, and K. Muller., Efficient BackProp. (Neural Networks: Tricks of the Trade) (Springer, Berlin, Heidelberg, 2012).

- Toulis and Airoldi. (2017) P. Toulis and E. Airoldi., Annals. of Statistics 45(4), 1694 (2017).

- Sutskever et al. (2016) I. Sutskever, J. Martens, G. Dahl, and G. Hinton., ICML 28, 1139 (2016).

- Polyak and Juditsky. (1992) B. Polyak and A. Juditsky., SIAM J. Control Optim. 30(4), 838 (1992).

- Duchi et al. (2011) J. Duchi, E. Hazan, and Y. Singer., JMLR 12, 2121 (2011).

- Nair and Hinton. (2010) V. Nair and G. Hinton., ICML (2010).

- Srivastava et al. (2014) N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov., JMLR 15(1) (2014).

- Baldi and Sadowski. (2013) P. Baldi and P. Sadowski., NIPS (2013).

- Li et al. (2016) Z. Li, B. Gong, and T. Yang., NIPS (2016).

- Kendeigh. (1961) S. Kendeigh., Animal Ecology (Prentice-Hall, Inc., 1961).

- Shawe-Taylor and Cristianini. (2004) J. Shawe-Taylor and N. Cristianini., Kernel Methods for Pattern Analysis (Cambridge University Press, 2004).

- Koutroumbas and Theodoridis. (2008) K. Koutroumbas and S. Theodoridis., Pattern Recognition (Academic Press, 2008).

- Hofmann et al. (2007) T. Hofmann, B. Scholkopf, and A. J. Smola., The Annals of Statistics 36(3) (2007).

- Deng (2012) L. Deng, IEEE Signal Processing Magazine 29, 141 (2012).

- Bar-yam. (1999) Y. Bar-yam., Dynamics of Complex Systems (CRC Press, 1999).

- Newman. (2011) M. Newman., Am. J. Phys. 79, 800 (2011).

- Haken (1988) H. Haken, Information and Slef-Organization (Springer, 1988).