The Study of Highway for Lifelong Multi-Agent Path Finding

Abstract

In modern fulfillment warehouses, agents traverse the map to complete endless tasks that arrive on the fly, which is formulated as a lifelong Multi-Agent Path Finding (lifelong MAPF) problem. The goal of tackling this challenging problem is to find the path for each agent in a finite runtime while maximizing the throughput. However, existing methods encounter exponential growth of runtime and undesirable phenomena of deadlocks and rerouting as the map size or agent density grows. To address these challenges in lifelong MAPF, we explore the idea of highways mainly studied for one-shot MAPF (i.e., finding paths at once beforehand), which reduces the complexity of the problem by encouraging agents to move in the same direction. We utilize two methods to incorporate the highway idea into the lifelong MAPF framework and discuss the properties that minimize the existing problems of deadlocks and rerouting. The experimental results demonstrate that the runtime is considerably reduced and the decay of throughput is gradually insignificant as the map size enlarges under the settings of the highway. Furthermore, when the density of agents increases, the phenomena of deadlocks and rerouting are significantly reduced by leveraging the highway.

Index Terms:

Path Planning for Multiple Mobile Robots or Agents, Multi-Robot Systems, Motion and Path PlanningI Introduction

Multi-Agent Path Finding (MAPF) is the problem of planning collaborative paths for a team of agents while avoiding collisions. MAPF has been widely used in applications like video games [1], traffic management [2], and delivery policies [3]. Several MAPF solvers has been proposed in the past years, such as CA* [4], CBS [5], ECBS [6], which consider the path planning problem in a one-shot manner, assuming each agent has only one pair of start and goal locations. Generally, these methods are not suitable for applications in online scenarios without reasonable modification.

Considering applications in the real world, especially for robots in fulfillment warehouses [7], instead of having only one pair of start and goal locations, new goals are assigned on the fly. This scenario is referred to as lifelong MAPF. Although current solutions for lifelong MAPF [8, 9, 10, 11] can handle a sequence of goals, they present poor computational efficiency or low throughput (i.e, finished tasks per timestep). Therefore, Rolling-Horizon Collision Resolution (RHCR) [12] incorporates the idea of windowed MAPF [4] into the lifelong MAPF framework, separating a lifelong MAPF problem into a sequence of one-shot MAPF problems, which gains computational efficiency without sacrificing throughput. Nevertheless, the approach still encounters undesirable phenomena of deadlocks and rerouting [4, 12].

In order to solve such challenges in lifelong MAPF, we study the methods of highway typically utilized in one-shot MAPF [13, 14, 15]. First, we leverage a directed map [13, 14] by enforcing agents to move along specific directions. This simple yet effective strategy decreases the complexity of the problem, which translates into higher efficiency. Additionally, instead of enforcing a movement direction, we leverage [15] to calculate heuristic values that penalize movements against the highway direction during planning. In short, both strategies encourage agents to move in the same direction and thus effectively avoid face-to-face conflicts. Subsequently, we further discuss the properties after incorporating highway into the lifelong MAPF framework, which can effectively minimize the undesirable phenomena of deadlocks and rerouting, as shown in Fig. 1.

Through several experiments, we present the benefits of utilizing highway in the lifelong MAPF framework and the trade-off between runtime and throughput. The experimental results show that the runtime can be accelerated dozens of times with less than 10% throughput decay in warehouse-like maps with more than 50% obstacles. Furthermore, when the density of agents is high, the throughput even increases after utilizing highway, because the severe impacts of deadlocks and rerouting are significantly reduced by highway.

II Background and Related Work

II-A One-shot MAPF

MAPF is an NP-hard problem [16, 17] and is typically solved in one-shot and offline, where each agent has exactly one goal known beforehand and paths of agents are found at once, respectively. The solution of one-shot MAPF solvers is typically evaluated by sum-of-costs (the sum of the arrival times of each agent) or makespan (the time span for all agents to reach their goals).

There are various one-shot MAPF methods [18], including compilation-based solvers [19, 20], rule-based solvers [21, 22], A*-based solvers [23, 24], and prioritized planning [25], etc. Especially, the search-based solvers [26, 6] and their variants are common. Most of the search-based solvers are two-level MAPF solvers. The low-level solver plans the paths for each agent and the high-level solver handles the conflicts of these paths to guarantee no collisions. A* [27] is a typical algorithm as the low-level solver of search-based solvers. As for the high-level solvers, Conflict-Based Search (CBS) [5] is a popular MAPF solver that is complete and optimal, and its variants [6, 28] sacrifice the optimality for faster computation time. Besides, Priority-Based Search (PBS) [29], which solves the conflicts by priority orderings, shows prominent computational efficiency but is incomplete and not optimal.

II-B Lifelong MAPF

In contrast to one-shot MAPF, lifelong MAPF solves problems that an agent may be assigned more than one task, which is always accompanied by the online scenario. In online MAPF, the tasks will be generated on the fly during execution [3]. Therefore, the solution can not be planned in advance and should be evaluated by throughput (the number of tasks finished per timestep) because the execution time and the tasks assigned to agents can be endless. For instance, endless tasks come at any time in warehouses, and agents are assigned new tasks after they finished the previous ones. Therefore, the path solver needs to consider the solution constantly in real-time and the problems should be solved as lifelong MAPF. In addition, we should consider not only the throughput but also the runtime (computing time of planning) that makes sure the planning of paths can be completed on time to effectively utilize agents.

II-C Lifelong Solutions

Firstly, if all the tasks are known in advance, a lifelong MAPF problem can be solved as a one-shot MAPF problem [10]. Otherwise, the second type of methods [9, 11] replans paths for all the agents at each timestep to handle new tasks on the fly. Nonetheless, these two types of methods are really time-consuming in solving the whole problem offline or constantly replan at each timestep, respectively. Therefore, the third type of method [8] can be followed in that new paths are replanned for the agents which get new goals only, but it still encounters poor throughput without cooperative planning among all agents.

To retain the efficiency of runtime while maximizing the throughput, RHCR [12] incorporated windowed MAPF [4] into lifelong MAPF. The idea is to plan the entire paths to goals and solve conflicts only for the first timesteps and the represents the time horizon. In addition to the time horizon , RHCR has another user-specified parameter that specifies the replanning period. Every timesteps as an episode, the new goals are assigned to the agents which have reached their goals, and the conflict-free paths in subsequent timesteps are planned for the future when the agents are following the planning results from the previous episode. After timesteps pass, agents follow these new conflict-free paths, and the paths for the next episode should be planned. However, due to the incompleteness and the lack of guarantee in the optimality, the windowed MAPF solver encounters deadlocks (i.e., agents idle at their current locations waiting for each other to pass first), which rely on a potential function to evaluate the progress of the agents and will be increased to address deadlocks [12]. Besides, because windowed MAPF [4] only solves conflicts in the first few timesteps and replans paths constantly, it causes agents to revise their path direction or revisit locations that they had previously visited, which also happens in RHCR [12].

II-D Highway

In one-shot MAPF, the highway is an add-on setting on the map to speed up the runtime, which builds a global rule of the moving direction to encourage or enforce MAPF solvers to search paths in a consistent direction. Forbidding agents to move against the highway direction is a simple strategy to reduce computational complexity and avoid collisions. For instance, a one-shot solution [14] used directed maps to reduce the complexity of MAPF for faster computational efficiency. Additionally, another one-shot solution [15] increases the cost on the paths against the highway direction when calculating the heuristic values, which implicitly encourages solvers to explore the path along the highway direction. However, these methods are proposed for one-shot MAPF where undesirable phenomena in lifelong MAPF such as deadlocks and rerouting do not occur. Even if RHCR [12] (a lifelong solution) used directed maps in its experimental section to reduce the planning complexity, it still lacked further discussions and experiments on how highway affects the planning results in lifelong scenarios.

III Problem Definition

The multi-agent path finding (MAPF) problem is formulated as a directed graph of the map and a set of agents . The graph represents the locations and the edges connecting pairs of these locations. For each agent , it starts from its start location and aims at its own goal location . Assuming that time is discretized into timesteps, should decide to move to a neighboring location through the connected edge or wait at its current location at each timestep, and the movement will be completed within the timestep.

However, a collision may occur when two agents arrive at the same location or move through the same edge at the same time, which is referred to as a conflict [30]. Therefore, the objective of MAPF is to find collision-free paths for all the agents to reach their goals jointly, where is the path of agent as a sequence of neighboring locations.

We assume the scenario as lifelong and online MAPF. The agents start from their locations without knowing their task (i.e., moving to a goal location) in advance, and a new task will be assigned to the agent after it finishes its task (i.e., reaching its goal location). The goal of the MAPF solver is to constantly plan the path for each agent and maximize the throughput (the number of tasks finished per timestep).

IV Lifelong MAPF with Highways

IV-A Highway Definition

In one-shot MAPF with highways [15], highway was represented as a subgraph of the given graph of the map , where contained locations involved in the highway and represented the edges following the highway direction.

In this work, we focus on warehouse-like maps [30], the highway settings can be defined by specifying the direction of movement for each corridor. A sequence of locations is called a corridor with length iff is connected to exact two locations and according to for (i.e., only one way in and one way out at each location). The and can be the same location as a loop-corridor. For each corridor, there exists a direction , which is a bool-value representing the direction of the corridor, shown in Fig. 2. Besides, any location as an intersection, which connects two or more corridors, will not belong to any corridors. Finally, given a set of directions (m is the number of corridors), the highway can be represented as a subgraph and the edges that against the highway direction .

IV-B Lifelong MAPF Framework

We follow RHCR [12] as our lifelong MAPF framework. Given user-specified time horizon and replanning period (), the conflict-free paths in the first timesteps will be planned for agents every timesteps, and the new goals will be assigned to the agents which have reached their goals. As for the high-level solver, we use PBS [1], which shows prominent computational efficiency with RHCR.

In our method, we use A* as the low-level solver with the true shortest distance heuristic. The shortest-path heuristic values, which ignore dynamic constraints (i.e., moving agents), can be calculated by shortest-path algorithms in advance. In this way, the precise values of the distances between nodes can guide the low-level solver to traverse fewer locations to the goal than the heuristic values of Manhattan distance. Besides, given a highway setting, the calculated heuristic can influence the low-level solver to find paths following the direction of highway, thereby resulting in fewer conflicts, and the complexity of low-level planning is also relaxed because choices to move against the highway direction are trimmed away.

IV-C Strict-limit Highway and Soft-limit Highway

There are two methods to set up the highway for the low-level solver. Firstly, the movement across edges is allowed for only one direction [14], which we call strict-limit highway in this work. The second one is to increase heuristic values only except following the direction of the highway [15] that encourages low-level solvers to search paths along the edges of the highway, which we call soft-limit highway here.

IV-C1 Strict-limit Highway

While using the strict-limit highway, will be replaced with the subgraph , where . Therefore, the connectivity of the neighboring locations is restricted strictly under the strict-limit setting, which means the movement against the highway is impossible here, as shown in Fig. 3. The strict rule forces agents to follow the directions of the highway but limit the flexibility to use idle locations, because the low-level solver may no longer explore neighboring locations that avoid the highway direction. To utilize the highway with the strict limitation in RHCR, the heuristic values based on the all-pair shortest paths ignoring moving agents should be calculated and stored for usage in advance. The heuristic computed with is defined as:

| (1) |

where is a feasible path from a start location to the goal location and is the length of the path. Hence, represents the shortest distance moving from start to goal . Then, the lower-level solver follows the guidelines from the precise highway heuristic to make agents move along the direction of the highway.

IV-C2 Soft-limit Highway

The limitation is added indirectly to the heuristic while low-level planning. There is a user-specified parameter greater than one. During the calculation of the heuristic values, the cost of crossing an edge along the direction of the highway is normally one, and the cost is increased to when moving in the opposite direction of highway, which is defined as:

| (2) |

where is a feasible path from a start location to the goal location . When is close to one, the heuristic values are close to the shortest paths without any limitation. On the contrary, if the is increasing to infinity, the heuristic values are similar to the shortest paths computed under the highway setting. The highway heuristic values encourage the agents to move in the same direction to avoid collisions. For instance, an example with is shown in Fig. 3, and the heuristic values on the path moving against the highway direction are higher (i.e., 8, 6, 4, 2). However, because of the lack of strict limitations, the movement against the highway direction will still happen, which means there exist conflicts that should be solved. To use the soft-limit highway in RHCR, the heuristic values should be calculated according to the chosen beforehand, and then the lower-level solver will follow the heuristic to find paths.

IV-D Highway Behaviors

Under the soft-limit highway, the low-level solver is planning with the highway heuristic. Because the path against the highway direction has higher heuristic values, the neighboring locations along the highway direction have higher priority to be taken out from the open-set of A* for the lower expected costs. Hence, the direction of paths found by the low-level solver shows consistency in the direction. The consistency of paths for agents causes fewer face-to-face collisions, which decreases the nodes the high-level solver should generate to solve conflicts. When is small, the results are close to the ones without the highway. As increases, the planning results are gradually close to the ones using the shortest paths of the highway as the heuristic values. However, even if is set to infinity, the movement against the highway direction happens when the locations along the shortest path are blocked by the dynamic obstacle, which shows the flexibility for agents to choose the direction of their movement but may cause conflicts that take time to solve. Using the strict-limit highway, the consistent direction of paths of agents can be guaranteed by blocking the edges to neighboring locations during planning, as shown in Fig. 4.

The purpose of utilizing highway is to ensure the paths of agents can share a collaborative rule locally, which relieves the congestion by solving conflicts as well as keeps the consistency of the planning results in neighboring time horizons. Benefiting from the consistent direction of paths, the strict-limit highway has the following properties addressing the issues of deadlocks and rerouting. Additionally, we show that the soft-limit highway can exploit these properties depending on the given in our experimental section.

IV-E Property of Avoiding Deadlocks

Deadlock is a phenomenon caused by the windowed MAPF without considering the entire time horizon [12]. A deadlock happens when two agents move face-to-face with their goals on opposite sides. With a small , neither they can go directly because of the swapping conflict nor go along the reverse direction because the high-level solver prefers the agents waiting at the original location for less sum-of-costs instead of taking a longer path. For example, if time horizon , replanning period and CBS is used as the high-level solver, the windowed MAPF solver returns the paths in Fig. 5-(a), which lets agents stay at the location without moving for timesteps and start to move after that to the goal location. This situation causes by the limited cooperative planning of the windowed MAPF solvers, which only solves the conflicts in the first timesteps and ignores the conflicts behind that. Hence, the low-level solver is not able to consider the further locations because the sum-of-costs is smaller while waiting at the original location. To make one agent move along the top side and the other move along the bottom side, should be set to at least to let the solver consider the further path instead of a cheating solution that waiting across the time horizon to avoid conflicts like in Fig. 5-(b). Worse yet, the bigger value of should be checked to find the solution if the corridor is longer or the goals are further, which increases the runtime.

Generally, a deadlock happens when the MAPF solver can not find feasible moving paths for agents with lower sum-of-costs compared with paths of waiting. That is, the MAPF solver can not find the new locations for agents in the next timesteps that have a lower sum of distances to their goals. Given represents a subset of agents which may cause a deadlock. An agent asks for (, where is the time horizon), which is a feasible path from its current location to the next location in the next timesteps without collisions. With the shortest path heuristic values that represent the distances to the goal locations in the strict-limit highway, a situation that a deadlock may happen can be simplified as:

| (3) |

where is the goal location of .

In strict-limit highway, if an agent moves from any location to its neighboring location and always, then if , where is its goal location. Thus, Eq. (3) can be rewritten as:

| (4) |

where is the set of unique locations in , and reflects the number of unique locations in .

Eq. (4) is not held when . Namely, in the strict-limit highway, if all agents move along the edges , deadlocks will not happen unless every agent has no available neighboring locations to move to.

IV-F Property of Avoiding Rerouting

Rerouting is a phenomenon that results from the lack of long-term consideration of windowed MAPF [4], which causes agents to revise their path direction or revisit locations that they had previously visited. The idea of the windowed MAPF used in RHCR is to separate the lifelong MAPF problem into a sequence of subproblems by time, which is referred to as episode. In each episode, the low-level solver plans the entire path for each agent, and then the high-level solver solves the conflicts of the paths for the finite time horizon . After planning, each agent follows the path of the first (smaller than or equal to ) timesteps. However, although planning the entire path to the goal, only the partial path in the first timesteps is followed by each agent. Therefore, each agent may stop at a location whose heuristic value is higher than the original location, namely waiting is a wiser choice rather than moving. Besides, in most search-based MAPF solvers, special mechanisms are followed to determine the priority orderings in high-level planning to decide which agent should go first when a conflict happens (e.g. CBS [5] adds the constraint to the certain agent as a CT-node, and PBS [29] maintains priority ordering pairs). However, the consistency of the priority orderings of agents is not guaranteed in the subsequent high-level planning, and changes in the priority orderings may lead to an obvious difference in the planning results. These cases may ask agents to reroute or even move backward, resulting in longer distances for agents to reach their goals.

We formally defined that an agent is rerouting when it is assigned a path by the MAPF solver that makes move away from its goal , which can be represented by the shortest path heuristic:

| (5) |

where is the current location of and represents the location where will arrive after timesteps ( is the replanning period smaller than or equal to the time horizon ). In strict-limit highway, given an agent and its goal location , , if . Therefore, when moves along the edges , Eq. (5) is not held because . In summary, using strict-limit highway, these properties guarantee that there are no deadlocks and rerouting as agents move across the edges that are parts of the highway.

V Experiments

Our experiments focus on the comparison between before and after incorporating highway into lifelong MAPF and the analysis of strengths and drawbacks. Firstly, we evaluated the changes in the throughput and runtime when leveraging highway. Secondly, we analyzed the advantages of using highway when map sizes or agent densities grow larger.

V-A Environment

We implement RHCR with , using PBS as the lifelong MAPF solver with a standard location-time A* as the low-level solver in Python and ran all experiments on Intel Core i9-9980XE with 16 GB memory. Before each round of experiments, the start locations and goal locations of agents are selected randomly from the different empty locations on the map. When an agent arrives at its goal location, a location that is not the goal location of any other agents will be assigned. Besides, we randomly initialize 100 episodes for each experiment and simulate 100 iterations of planning in each episode. Each iteration has its time limit of 60 seconds, and the episode will be labeled as a fail case if any iteration times out. All metrics shown are calculated as averages which exclude the fail cases.

Generally, throughput and runtime are the two main metrics for lifelong MAPF. For evaluating that highway can effectively deal with existing phenomena of deadlocks and rerouting, moving timesteps, idle timesteps, and rerouting rate are recorded during the experiments. Rerouting rate is the percentage of the agents who reroute in an iteration. Besides, moving timesteps reflects the number of timesteps that an agent moves during a task and idle timesteps is the number of timesteps that an agent stays at the same location without moving during a task. For observing how increasing c influences agents, highway avoidance rate shows the chance that an agent moves against the highway direction. Similar to [5] and [15], generated nodes is used to explain the reason why our method has lower runtime, which is the number of high-level nodes generated by the MAPF solver before finding the solution during one planning.

Given that the heuristic values are calculated from the shortest paths (e.g. strict-limit highway and soft-limit highway with or ), a trick mentioned in the previous work [4] can be applied to simplify planning. Namely, in windowed MAPF, instead of planning the entire path to the goal, we can directly ignore the planning after timesteps, which yields the same result but saves the time to plan entire paths. We include this trick in Table II for comparison.

V-B Fulfillment Warehouse

In autonomous warehouses, a set of inventory pods are placed closely into a rectangle block, and the blocks with corridors in between are placed into a grid, like in Fig. 7. In our experiments, we follow the settings of the warehouse map in Fig. 7-(a) [30]: (1) each block contains 102 pods, and (2) the corridors are single-rowed. For simplicity, we test maps with NN blocks, where N is an odd number (i.e., 3, 5, 7, etc.), and inventory pods are considered obstacles for the agents. For instance, the smallest 33 version of the maps is shown in Fig. 7-(b). In addition, the percentage of obstacles over the entire map indicates how crowded the map is. Our smallest map (Fig. 7-(b)) has more than 50% of obstacles, and the percentage of obstacles gradually increases as the map grows with the larger N. The high obstacle density and neighboring corridors are suitable for testing highway.

V-C From No Highway to Highway

The parameter in soft-limit highway determines how much the map is influenced by the highway directions. Therefore, we evaluate the influence of highway through the map in Fig. 7-(b) with the increasing and fixed 5% density of agents (i.e., the ratio of agents to empty locations of the map). Fig. 6 shows the relative throughput, runtime, and generated nodes with the , which are compared to the result of .

When the map is small, the value of throughput drops when the value of rises in Fig. 6-(a), though the runtime decreases slightly in Fig. 6-(b). However, in the case of the larger map, the drop in throughput is much slower, and the drop in runtime is significant as increases. Surprisingly, the relative throughput when even surpasses the throughput without highway. Furthermore, a trend shows that the gap in the throughput between no-highway () and highway () narrows as the map size grows larger.

The speedup on the runtime mainly benefits from the more efficient planning under highway, because the global rule of the direction should be followed, and thus planning results are more consistent, which causes fewer conflicts before the solution is found. Referring to Fig. 6, if a higher is used, fewer nodes should be generated to find a solution, and the benefit is even more pronounced in larger maps.

In Table I, the results verified that using highway resulted in fewer average idle timesteps (timesteps that an agent stays at the same point during a task), which reflects the consistent direction of paths and fewer deadlocks, and the average idle timesteps reduce more when a larger map. Also, through c increases, the chance that an agent moves against the highway direction and the rerouting problem decreases, shown as the highway avoidance rate (the chance of an agent moving against the highway direction) and the rerouting rate (the percentage of the rerouting agents in an iteration of planning) in Table I. Besides, following the highway causes agents to have more moving timesteps (timesteps that an agent needs to arrive at its goal). Nevertheless, using highway in a larger map causes fewer extra moving timesteps, which explains another reason that a larger map can benefit more while using highway. For instance, in Table I, using highway () in the 3x3 map causes 69.1% extra moving timesteps while using highway () in the 7x7 map only causes 23.9% extra moving timesteps.

| c | Map Size | |||

|---|---|---|---|---|

| 33 | 55 | 77 | ||

| 1 | 2.49 | 2.66 | 2.83 | |

| Idle | 2 | 2.42 (-2.5%) | 2.55 (-4.1%) | 2.61 (-7.9%) |

| Timesteps | 5 | 2.32 (-6.8%) | 2.38 (-10.5%) | 2.42 (-14.7%) |

| 50 | 2.22 (-10.8%) | 2.29 (-13.8%) | 2.33 (-17.9%) | |

| 1 | 17.65 | 27.76 | 37.69 | |

| Moving | 2 | 18.27 (3.5%) | 27.49 (-0.9%) | 36.42 (-3.4%) |

| Timesteps | 5 | 20.90 (18.4%) | 30.61 (10.3%) | 39.96 (6.0%) |

| 50 | 29.84 (69.1%) | 38.45 (38.5%) | 46.72 (23.9%) | |

| Highway | 1 | 43.2% / 3.8% | 44.3% / 4.5% | 44.9% / 4.7% |

| Avoidance / | 2 | 32.3% / 2.5% | 28.3% / 1.9% | 25.5% / 1.5% |

| Rerouting | 5 | 18.7% / 0.7% | 14.9% / 0.4% | 12.5% / 0.3% |

| Rate | 50 | 2.2% / 0.0% | 2.0% / 0.0% | 1.8% / 0.0% |

-

For comparing how much idle timesteps decrease and moving timesteps increase after using highway (c 1), percentages of difference when c 1 compared to no-highway are represented in parenthesis.

| Types Map Size | 33 | 55 | 77 | 99 | 1111 | 1313 | 1515 | |

|---|---|---|---|---|---|---|---|---|

| Throughput (tasks/step) | Strict-limit | 0.23 (-41.4%) | 0.45 (-28.7%) | 0.69 (-20.5%) | 0.93 (-14.1%) | 1.17 (-10.6%) | 1.43 (-8.3%) | 1.68 (-3.8%) |

| Soft-limit | 0.24 (-38.0%) | 0.47 (-25.9%) | 0.71 (-17.8%) | 0.96 (-11.5%) | 1.21 (-7.9%) | 1.46 (-6.2%) | 1.71 (-2.2%) | |

| w/o Highway | 0.39 | 0.63 | 0.87 | 1.09 [20] | 1.31 [47] | 1.56 [77] | 1.75 [95] | |

| Runtime (seconds) | Strict-limit (P) | 0.002 (6.7) | 0.010 (13.2) | 0.032 (20.4) | 0.069 (32.1) | 0.130 (30.4) | 0.284 (34.0) | 0.553 (39.8) |

| Strict-limit | 0.007 (1.8) | 0.034 (3.9) | 0.097 (6.7) | 0.241 (9.1) | 0.339 (11.7) | 0.681 (14.2) | 1.323 (16.6) | |

| Soft-limit | 0.008 (1.5) | 0.041 (3.2) | 0.111 (5.8) | 0.321 (6.9) | 0.439 (9.0) | 0.870 (11.1) | 2.016 (10.9) | |

| w/o Highway | 0.012 | 0.133 | 0.646 | 2.208 | 3.955 | 9.638 | 21.983 |

-

Each is an average calculated from test cases excluding fail cases (i.e, time out). The results using strict-limit highway, soft-limit highway () and the baseline result without highway are shown respectively, and the result using the partial planning trick (mentioned in Section V-A) to speed up is marked as ”(P)”. Percentages of throughput decay using the highway compared to no-highway are represented in parenthesis, and numbers of fail cases are marked in square brackets in the top column if any (out of 100 test cases). The ratios of speedups using the highway compared to no-highway are represented in parenthesis in the bottom column.

V-D Scaling up

To ensure the runtime is efficient enough to assign the paths on time without idling the agents, the question is whether the advantages of highway are kept after the map of a warehouse scales up. Referring to the results mentioned previously, the answer is clearly yes. Subsequently, we discuss and quantify the benefits of highway as the map size scales up and the agent density grows larger.

V-D1 Map Size

To scale up the size of the map, the block is kept the same as in Fig. 7-(b), which contains 102 pods in each block, and corridors are still single-rowed. Then, the number of blocks are increasing from 33, 55, 77 blocks … to 1515 blocks, and the density of agents is fixed at 5%. The results using strict-limit highway, soft-limit highway (), and the baseline without highway are shown in Table II. When the map grows larger, the gap of throughputs between highway and the baseline becomes smaller, and the speedup gained from using highway keeps increasing. The main reason for the decrease in the throughput gap is that, as the map gets larger, the number of extra moves taken for the highway becomes insignificant relative to the total number of moves. Furthermore, in contrast to the setting using highway, the fail cases appear on larger maps without highway.

Besides, the gap between throughputs of strict-highway and soft-highway is also decreasing in Fig. 8. Therefore, it becomes more cost-effective to convert soft-highway to strict-highway for faster runtime (see Table II).

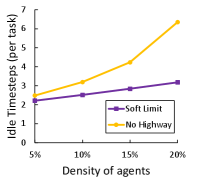

V-D2 Number of Agents

To test the influence of different agent densities on the map, the density of agents is increasing from 5% to 20% with the 33 map in Fig. 7-(b). Referring to the result in Fig. 9, the throughput of using highway increases constantly. However, the trend of the throughput without using highway starts to decline when the density increases. Therefore, the rerouting rate and average idle timesteps are evaluated. Under the setting of highway, rerouting rarely happens and the fewer average idle timesteps potentially indicate that deaklocks and the phenomena of waiting for each other are significantly reduced because of the consistent direction of paths for agents.

VI Conclusion

In this work, we studied the highway idea (previously proposed for one-shot MAPF) in the lifelong MAPF scenario for the trade-off between runtime and throughput. Furthermore, we discussed the properties of combining highway with the lifelong MAPF framework that minimizes the existing problems of deadlocks and rerouting. Finally, we evaluated highway through a sequence of experiments. According to the experimental results, using highway can significantly speed up the runtime, and the decay of throughput gradually diminishes when the map size or agent density grows larger.

References

- [1] H. Ma, J. Yang, L. Cohen, T. S. Kumar, and S. Koenig, “Feasibility study: Moving non-homogeneous teams in congested video game environments,” in Thirteenth Artificial Intelligence and Interactive Digital Entertainment Conference, 2017.

- [2] F. Ho, A. Goncalves, A. Salta, M. Cavazza, R. Geraldes, and H. Prendinger, “Multi-agent path finding for uav traffic management: Robotics track,” 2019.

- [3] H. Ma, J. Li, T. Kumar, and S. Koenig, “Lifelong multi-agent path finding for online pickup and delivery tasks,” arXiv preprint arXiv:1705.10868, 2017.

- [4] D. Silver, “Cooperative pathfinding,” in Proceedings of the aaai conference on artificial intelligence and interactive digital entertainment, vol. 1, no. 1, 2005, pp. 117–122.

- [5] G. Sharon, R. Stern, A. Felner, and N. R. Sturtevant, “Conflict-based search for optimal multi-agent pathfinding,” Artificial Intelligence, vol. 219, pp. 40–66, 2015.

- [6] M. Barer, G. Sharon, R. Stern, and A. Felner, “Suboptimal variants of the conflict-based search algorithm for the multi-agent pathfinding problem,” in Seventh Annual Symposium on Combinatorial Search, 2014.

- [7] P. R. Wurman, R. D’Andrea, and M. Mountz, “Coordinating hundreds of cooperative, autonomous vehicles in warehouses,” AI magazine, vol. 29, no. 1, pp. 9–9, 2008.

- [8] M. Čáp, J. Vokřínek, and A. Kleiner, “Complete decentralized method for on-line multi-robot trajectory planning in well-formed infrastructures,” in Proceedings of the international conference on automated planning and scheduling, vol. 25, 2015, pp. 324–332.

- [9] Q. Wan, C. Gu, S. Sun, M. Chen, H. Huang, and X. Jia, “Lifelong multi-agent path finding in a dynamic environment,” in 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV). IEEE, 2018, pp. 875–882.

- [10] V. Nguyen, P. Obermeier, T. C. Son, T. Schaub, and W. Yeoh, “Generalized target assignment and path finding using answer set programming,” in Twelfth Annual Symposium on Combinatorial Search, 2019.

- [11] F. Grenouilleau, W.-J. van Hoeve, and J. N. Hooker, “A multi-label a* algorithm for multi-agent pathfinding,” in Proceedings of the International Conference on Automated Planning and Scheduling, vol. 29, 2019, pp. 181–185.

- [12] J. Li, A. Tinka, S. Kiesel, J. W. Durham, T. S. Kumar, and S. Koenig, “Lifelong multi-agent path finding in large-scale warehouses,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2021.

- [13] M. R. J. M. R. Jansen and N. Sturtevant, “Direction maps for cooperative pathfinding,” in Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, vol. 4, no. 1, 2008, pp. 185–190.

- [14] K.-H. C. Wang, A. Botea, et al., “Fast and memory-efficient multi-agent pathfinding.” in ICAPS, 2008, pp. 380–387.

- [15] L. Cohen, T. Uras, and S. Koenig, “Feasibility study: Using highways for bounded-suboptimal multi-agent path finding,” in International Symposium on Combinatorial Search, vol. 6, no. 1, 2015.

- [16] P. Surynek, “An optimization variant of multi-robot path planning is intractable,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 24, no. 1, 2010, pp. 1261–1263.

- [17] J. Yu and S. M. LaValle, “Structure and intractability of optimal multi-robot path planning on graphs,” in Twenty-Seventh AAAI Conference on Artificial Intelligence, 2013.

- [18] A. Felner, R. Stern, S. Shimony, E. Boyarski, M. Goldenberg, G. Sharon, N. Sturtevant, G. Wagner, and P. Surynek, “Search-based optimal solvers for the multi-agent pathfinding problem: Summary and challenges,” in International Symposium on Combinatorial Search, vol. 8, no. 1, 2017.

- [19] P. Surynek, “Unifying search-based and compilation-based approaches to multi-agent path finding through satisfiability modulo theories,” in International Symposium on Combinatorial Search, vol. 10, no. 1, 2019.

- [20] E. Lam, P. Le Bodic, D. Harabor, and P. J. Stuckey, “Branch-and-cut-and-price for multi-agent path finding,” Computers & Operations Research, vol. 144, p. 105809, 2022.

- [21] R. J. Luna and K. E. Bekris, “Push and swap: Fast cooperative path-finding with completeness guarantees,” in Twenty-Second International Joint Conference on Artificial Intelligence, 2011.

- [22] B. De Wilde, A. W. Ter Mors, and C. Witteveen, “Push and rotate: cooperative multi-agent path planning,” in Proceedings of the 2013 international conference on Autonomous agents and multi-agent systems, 2013, pp. 87–94.

- [23] M. Goldenberg, A. Felner, R. Stern, G. Sharon, N. Sturtevant, R. C. Holte, and J. Schaeffer, “Enhanced partial expansion a,” Journal of Artificial Intelligence Research, vol. 50, pp. 141–187, 2014.

- [24] G. Wagner, “Subdimensional expansion: A framework for computationally tractable multirobot path planning,” 2015.

- [25] K. Okumura, M. Machida, X. Défago, and Y. Tamura, “Priority inheritance with backtracking for iterative multi-agent path finding,” Artificial Intelligence, vol. 310, p. 103752, 2022.

- [26] G. Sharon, R. Stern, M. Goldenberg, and A. Felner, “The increasing cost tree search for optimal multi-agent pathfinding,” Artificial intelligence, vol. 195, pp. 470–495, 2013.

- [27] P. E. Hart, N. J. Nilsson, and B. Raphael, “A formal basis for the heuristic determination of minimum cost paths,” IEEE transactions on Systems Science and Cybernetics, vol. 4, no. 2, pp. 100–107, 1968.

- [28] J. Li, W. Ruml, and S. Koenig, “Eecbs: A bounded-suboptimal search for multi-agent path finding,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 14, 2021, pp. 12 353–12 362.

- [29] H. Ma, D. Harabor, P. J. Stuckey, J. Li, and S. Koenig, “Searching with consistent prioritization for multi-agent path finding,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, no. 01, 2019, pp. 7643–7650.

- [30] R. Stern, N. R. Sturtevant, A. Felner, S. Koenig, H. Ma, T. T. Walker, J. Li, D. Atzmon, L. Cohen, T. S. Kumar, et al., “Multi-agent pathfinding: Definitions, variants, and benchmarks,” in Twelfth Annual Symposium on Combinatorial Search, 2019.