The structure of the heavily doped impurity band in a crystalline host

Abstract

We study the properties of the impurity band in heavily doped non-magnetic semiconductors using the Jacobi-Davidson algorithm and supervised deep learning. The disorder-averaged inverse participation ratio (IPR) and the Thouless number reveal a rich structure inside the impurity band. A convolutional neural network (CNN) model, trained to distinguish the extended and localized phases of the Anderson model with high accuracy, yields results in good agreement with the conventional approach. Together, these methods show that there are three mobility edges in the impurity band for a specific on-site impurity potential, implying that extended states are present as the impurity band is filled.

PACS: 71.23.-k, 71.55.-i, 02.60.Cb

I Introduction

The effect of disorder has been studied extensively since Anderson’s seminal paper Anderson (1958). Diluted magnetic semiconductors (DMS), doped with a small concentration of charged impurities, constitute an interesting magnetic system with a number of novel features that can be studied by numerical simulation Avérous and Balkanski (1991). Much of the research has focused on II-VI (such as CdTe or ZnSe) and III-V (such as GaAs) compound semiconductors doped with a low concentration () of manganese (Mn) impurities. Of particular interest in this field is Ga$_{1-x}$Mn$_x$As, which has been shown to exhibit ferromagnetic behavior above 100 K Ohno (1998). In these samples, Mn substitutes for Ga and acts as an acceptor (donating one hole to the crystal), so the material is p-type. The holes bind to the impurities with an energy of around 130 meV Beschoten et al. (1999). Since , the overlap between different impurity states can be ignored, and thus the interaction between the charge carriers can be neglected, so the system can be described by a noninteracting tight-binding model. When the system contains only one impurity and the binding energy is large enough, an impurity state appears below the conduction band (we assume the impurity potential is attractive). This state is localized near the impurity within a localization length . As the concentration  increases, the overlap between different impurity states broadens the single-impurity level into an impurity band in the density of states (DOS), which eventually merges with the conduction band. At the same time, the states in the impurity band are expected to become more and more extended and ultimately regain their bandlike character Cook and Berciu (2012). However, the structure inside the impurity band has rarely been studied.

One reason for the lack of such studies is the computational difficulty, even in the non-interacting case. At the concentrations we are interested in, the states in the impurity band make up only about  of the total number of states. Taking a 3-dimensional Anderson model with lattice size  as an example, about 3000 states in the impurity band are needed. Exact diagonalization Weiße and Fehske (2008) of such a system is very demanding because of the large matrix dimension, and on top of that a large number of disorder samples must be averaged. Sparse matrix diagonalization methods, such as the Lanczos method OJALVO and NEWMAN (1970), can be adapted to obtain a few of the lowest-lying states or a few states near a specified energy (the simplest way being the original Lanczos diagonalization method).

Machine learning methods have recently emerged as a valuable tool for studying quantum many-body problems Carleo and Troyer (2017); Carrasquilla and Melko (2017); Ch’Ng et al. (2017); Van Nieuwenburg et al. (2017); Venderley et al. (2018); Wetzel (2017); Rodriguez-Nieva and Scheurer (2019); Lidiak and Gong (2020); Hsu et al. (2018); Hendry et al. (2021); Choo et al. (2019); Pfau et al. (2020); Sharir et al. (2020); Hendry et al. (2022); Chen et al. (2022). Their ability to process high-dimensional data and recognize complex patterns has been used to determine phase diagrams and phase transitions Wang (2016); Ohtsuki and Ohtsuki (2017); Tanaka and Tomiya (2017); Mano and Ohtsuki (2017); Broecker et al. (2017); Schindler et al. (2017); Li et al. (2019); Dong et al. (2019); Kotthoff et al. (2021); Zhang et al. (2019a, b); Käming et al. (2021). In particular, the convolutional neural network (CNN) Krizhevsky et al. (2012), initially designed for image recognition, has been widely used to study various phase transitions, including those of the Bose-Hubbard model Bohrdt et al. (2021), the spin-1/2 Heisenberg model Théveniaut and Alet (2019), and the quantum transverse-field Ising model Zhang et al. (2019a). The power of machine learning in recognizing quantum states lies in its ability to perform such tasks without knowledge of the underlying physics or of the Hamiltonian of the system. Even if the neural network is trained in a small energy region of the system, it can be used to obtain the whole phase diagram Ohtsuki and Ohtsuki (2017); Mano and Ohtsuki (2017). It can also discriminate quantum states with high accuracy even when trained on a totally different Hamiltonian. This feature of machine learning inspired us to try to identify the delocalized states in the “impurity band”.

In this paper, we develop a method to obtain the correct density of states (DOS) and other localization properties, such as the inverse participation ratio (IPR) Brndiar and Markoš (2006) and the Thouless number Edwards and Thouless (1972), by combining Jacobi-Davidson sparse matrix diagonalization Bollhöfer and Notay (2007) with an importance-sampling scheme. In addition, we train a 3-dimensional CNN model on data generated from the Anderson model and then use the trained model to identify extended states in the impurity band. This manuscript is organized as follows: Sec. II describes the tight-binding model on the cubic lattice and the numerical methods; Sec. III studies the effect of heavy doping through the IPR and the Thouless number; Sec. IV describes the implementation of the deep learning approach and the results of the trained neural network model; finally, we close with conclusions.

II Model and Methods

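We consider a tight-binding model on a d-dimensional hypercubic lattice with nearest-neighbor hopping t and on-site energies $\varepsilon_i$:

$$ H = -t\sum_{\langle ij\rangle}\left(c_i^{\dagger}c_j + \mathrm{h.c.}\right) + \sum_i \varepsilon_i\, c_i^{\dagger}c_i \tag{1} $$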

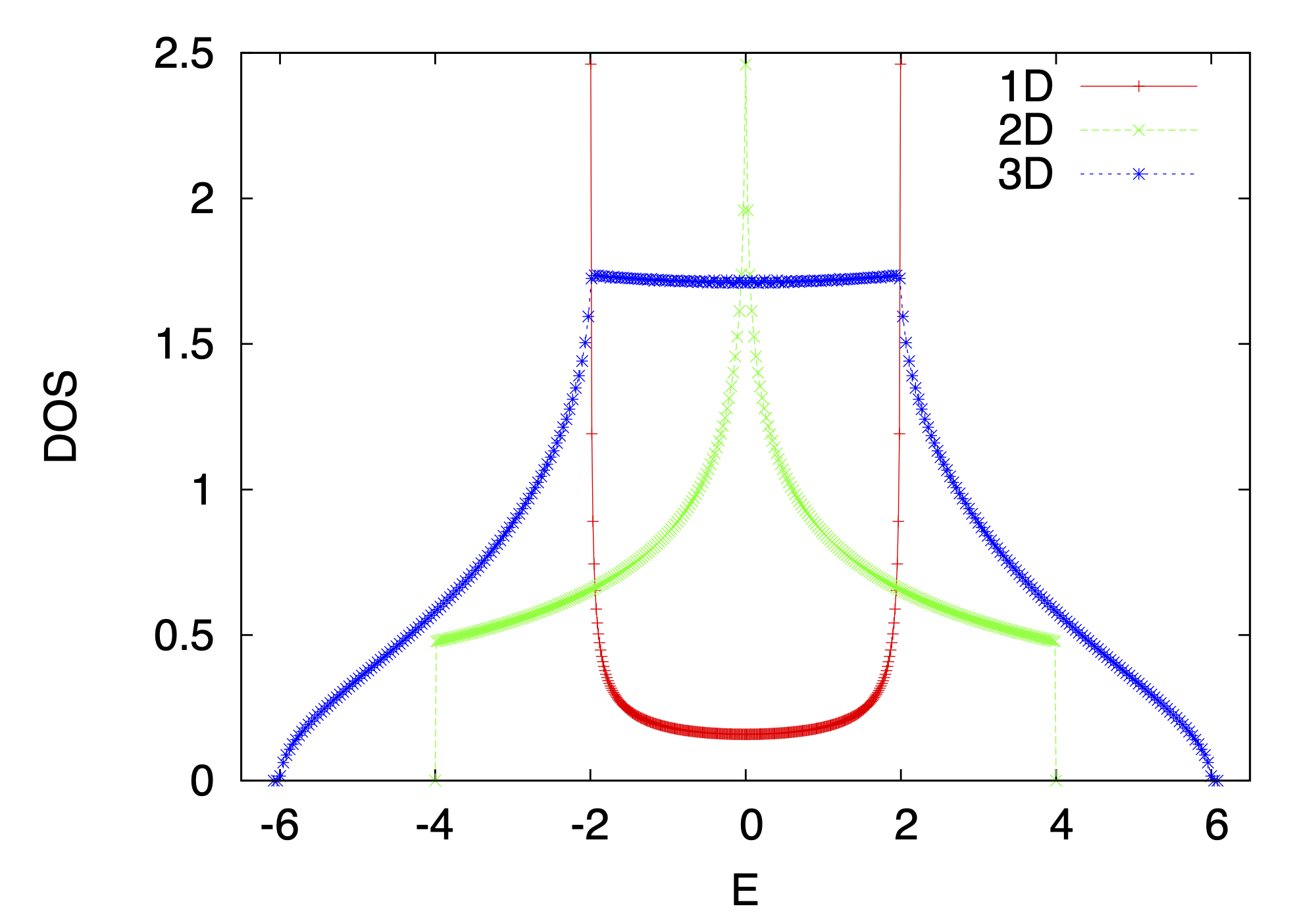

The hopping term describes itinerant electrons, and the on-site energy has a bimodal distribution: it equals the impurity potential with probability  and the host value with probability  (). This models a host lattice with a single relevant band, with a fraction of the sites occupied by substitutional impurities. For one-dimensional (d = 1) free electrons, the energy-momentum dispersion relation is $E(k) = -2t\cos k$ (lattice constant set to 1), and the DOS follows from the formula

$$ \rho(E) = \int_{-\pi}^{\pi}\frac{dk}{2\pi}\,\delta\big(E - E(k)\big) \tag{2} $$

The result for 1D is:

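$$ \rho(E) = \begin{cases} \dfrac{1}{\pi\sqrt{4t^{2}-E^{2}}}, & |E| < 2t,\\[4pt] 0, & |E| > 2t. \end{cases} \tag{3} $$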
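As a quick consistency check of the 1D result, the sketch below compares the fraction of exact-diagonalization eigenvalues below $E$ with the integrated density of states $\int_{-2t}^{E}\rho(E')\,dE' = \tfrac12 + \tfrac1\pi\arcsin(E/2t)$ that follows from $\rho(E) = 1/(\pi\sqrt{4t^2-E^2})$. It assumes a periodic chain with hopping $-t$; the function names are hypothetical. Comparing cumulative counts rather than a histogram avoids binning noise near the van Hove singularities at $E = \pm 2t$.

```python
import numpy as np


def chain_eigenvalues(N, t=1.0):
    """Eigenvalues of a clean periodic 1D chain with hopping -t (dense ED)."""
    H = np.zeros((N, N))
    for i in range(N):
        j = (i + 1) % N
        H[i, j] = H[j, i] = -t
    return np.linalg.eigvalsh(H)  # returned in ascending order


def integrated_dos_1d(E, t=1.0):
    """Fraction of states below E from rho(E) = 1 / (pi * sqrt(4 t^2 - E^2))."""
    E = np.clip(np.asarray(E, dtype=float), -2 * t, 2 * t)
    return 0.5 + np.arcsin(E / (2 * t)) / np.pi


N = 1000
ev = chain_eigenvalues(N)
energies = np.linspace(-1.9, 1.9, 39)
numeric = np.searchsorted(ev, energies) / N  # number of eigenvalues below each E, over N
exact = integrated_dos_1d(energies)
```

The discrepancy between `numeric` and `exact` is set only by the discreteness of the allowed momenta, so it shrinks like $1/N$.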

For higher-dimensional systems there is no such simple closed-form result; however, an approximation that is accurate to roughly  was given by Andres et al. (1981). Alternatively, the DOS can be calculated numerically by exact diagonalization, as shown in Fig. 1, where t is set to unity. After impurities are introduced, all states become localized in 1D and 2D according to the scaling theory of localization Abrahams et al. (1979). In 3D, part of the states become localized and develop into an impurity band at the edge of the conduction band. To distinguish localized from extended states, i.e., to locate the mobility edge, we calculate the inverse participation ratio (IPR) Brndiar and Markoš (2006)

$$ \mathrm{IPR} = \sum_{i=1}^{N} |\psi_i|^{4} \tag{4} $$

for each state, where $\psi_i$ is the amplitude of a normalized eigenfunction on the $i$’th site. Heuristically, compare two trivial states of an $N$-site system:

$$ \psi_{\mathrm{ext}} = \frac{1}{\sqrt{N}}\,(1, 1, \ldots, 1)^{T} \tag{5} $$

and

$$ \psi_{\mathrm{loc}} = (0, \ldots, 0, 1, 0, \ldots, 0)^{T} \tag{6} $$

where $\psi_{\mathrm{ext}}$ is an extended state with equal weight on every site and $\psi_{\mathrm{loc}}$ is a localized state with weight only on the $j$’th site. Their IPRs are $1/N$ and $1$, respectively: the IPR of the extended state vanishes as the system grows, whereas that of the localized state stays constant. The Thouless number Edwards and Thouless (1972), on the other hand, is defined as:

$$ g(E) = \frac{\Delta E}{\delta E} \tag{7} $$

where $\Delta E$ is the shift of an energy level when the boundary condition changes from periodic (PBC) to antiperiodic (APBC), and $\delta E$ is the mean level spacing around $E$. Since only extended states are sensitive to a change of boundary condition, $g$ grows linearly with system size for extended states and, conversely, decreases for localized states. In this work, we determine the localization properties by systematically studying the IPR and the Thouless number for different system sizes; the crossing points of the Thouless-number curves indicate the approximate locations of the mobility edges.
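Both diagnostics can be sketched in a few lines. This is a minimal sketch under illustrative assumptions that are ours, not the paper's: a 1D ring (chosen only because it is cheap; the same logic applies to the 3D lattice), hopping $-t$, APBC implemented by flipping the sign of the single boundary bond, levels paired by their index after sorting, and the mean spacing $\delta E$ estimated from a hypothetical window of neighbouring levels.

```python
import numpy as np


def ipr(psi):
    """Inverse participation ratio sum_i |psi_i|^4 (the state is normalized first)."""
    p = np.abs(psi) ** 2
    p = p / p.sum()
    return float(np.sum(p ** 2))


def ring_hamiltonian(eps, t=1.0, bc_sign=1.0):
    """Dense 1D ring with on-site energies eps; bc_sign=+1 gives PBC, -1 gives APBC."""
    N = len(eps)
    H = np.diag(np.asarray(eps, dtype=float))
    for i in range(N - 1):
        H[i, i + 1] = H[i + 1, i] = -t
    H[N - 1, 0] = H[0, N - 1] = -t * bc_sign  # only the boundary bond changes sign
    return H


def thouless_numbers(eps, t=1.0, window=5):
    """g_n = |E_n(PBC) - E_n(APBC)| / (mean level spacing near E_n), level by level."""
    e_p = np.linalg.eigvalsh(ring_hamiltonian(eps, t, +1.0))
    e_a = np.linalg.eigvalsh(ring_hamiltonian(eps, t, -1.0))
    n = np.arange(len(e_p))
    lo = np.clip(n - window, 0, len(e_p) - 1)
    hi = np.clip(n + window, 0, len(e_p) - 1)
    spacing = (e_p[hi] - e_p[lo]) / (hi - lo)  # average spacing over the window
    return e_p, np.abs(e_p - e_a) / spacing


N = 200
rng = np.random.default_rng(0)
_, g_clean = thouless_numbers(np.zeros(N))                    # extended states
_, g_disordered = thouless_numbers(rng.uniform(-10, 10, N))   # strongly localized states
```

For the clean ring the level shifts are comparable to the level spacing, so $g$ is of order one, while for strong disorder the shifts are exponentially small in the system size.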

For a three-dimensional cubic lattice of size $L\times L\times L$, the Hamiltonian matrix has dimension $L^3\times L^3$. General full exact diagonalization routines, such as those of the LAPACK library Anderson et al. (1999), can only handle small system sizes, because the cost of diagonalizing a dense matrix grows as the cube of its dimension, i.e., as $L^9$. Fig. 2 shows the DOS for a system of size  with doping concentration , averaged over thousands of samples, for different doping energies. A peak gradually emerges near the band edge as the doping energy  increases. This peak becomes more prominent around , where a clear depletion develops at the junction between the impurity band and the conduction band.
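A minimal sketch of the Hamiltonian of Eq. (1) on an $L\times L\times L$ lattice, together with the disorder-averaged DOS from full diagonalization, might look as follows. It assumes periodic boundaries, hopping $-t$, and on-site energies equal to a hypothetical parameter `V_imp` with probability `x` and zero otherwise; all function names are hypothetical. Here `numpy.linalg.eigvalsh` (backed by LAPACK) plays the role of the full diagonalization.

```python
import numpy as np
import scipy.sparse as sp


def doped_cubic_hamiltonian(L, V_imp, x, rng, t=1.0):
    """Sparse H = -t sum_<ij> (c_i^dag c_j + h.c.) + sum_i eps_i n_i on an L^3 lattice.

    Illustrative assumptions: periodic boundaries (L >= 3), and
    eps_i = V_imp with probability x, eps_i = 0 otherwise.
    """
    N = L ** 3
    idx = np.arange(N).reshape(L, L, L)
    rows = np.concatenate([idx.ravel()] * 3)
    # Neighbour one step along each axis, wrapping around the boundary (PBC).
    cols = np.concatenate([np.roll(idx, -1, axis=a).ravel() for a in range(3)])
    hop = sp.coo_matrix((np.full(rows.size, -t), (rows, cols)), shape=(N, N))
    eps = np.where(rng.random(N) < x, V_imp, 0.0)  # bimodal on-site energies
    return (hop + hop.T + sp.diags(eps)).tocsr()


def averaged_dos(L, V_imp, x, n_samples, bins, seed=0):
    """Disorder-averaged DOS from full (dense) diagonalization of every sample."""
    rng = np.random.default_rng(seed)
    hist = np.zeros(len(bins) - 1)
    for _ in range(n_samples):
        H = doped_cubic_hamiltonian(L, V_imp, x, rng).toarray()
        hist += np.histogram(np.linalg.eigvalsh(H), bins=bins)[0]
    # Normalize so the DOS integrates to one over the binned energy range.
    return hist / (n_samples * L ** 3 * np.diff(bins))
```

The dense `toarray()` call is exactly what limits this route to small $L$; the sparse matrix itself can be handed to an iterative eigensolver instead.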

Only the impurity band is of interest here. Its states make up roughly the lowest  of all states in the band, so there is no need to diagonalize the Hamiltonian fully; we only need the DOS, IPR, and Thouless number of these lowest states, averaged over thousands of samples. For this purpose we use sparse matrix diagonalization with the Jacobi-Davidson (JADA) method Bollhöfer and Notay (2007), which efficiently finds a few (10 to 20) eigenstates near a specified target energy. For a given sample at fixed doping strength, we randomly distribute 30-50 reference points in the impurity band; taking  as an example, the reference points are picked randomly in the region , and JADA yields about 10-20 states around each reference point. The reference points can also be chosen by importance sampling based on the DOS of a small system obtained from full diagonalization. We collect the energies found around all reference points of a sample. After averaging over thousands of samples, we recover the same DOS as full diagonalization gives for a small system. The JADA method therefore easily goes beyond the limit of full exact diagonalization: for the same computation time, we can nearly double the system size compared with the LAPACK approach. In this work, we calculate the properties for system sizes up to  sites using the JADA method.
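The reference-point strategy can be illustrated with SciPy. JADA itself is not used here: as a stand-in, this sketch uses shift-invert Lanczos (`scipy.sparse.linalg.eigsh` with `sigma`), which likewise returns the `k` eigenpairs closest to a target energy. The model parameters, the interval of reference points, the function names, and the de-duplication of overlapping windows are all assumptions for illustration.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import eigsh


def cubic_hamiltonian(L, V_imp, x, rng, t=1.0):
    """Sparse L^3 tight-binding Hamiltonian (PBC, hopping -t, bimodal on-site energies)."""
    N = L ** 3
    idx = np.arange(N).reshape(L, L, L)
    rows = np.concatenate([idx.ravel()] * 3)
    cols = np.concatenate([np.roll(idx, -1, axis=a).ravel() for a in range(3)])
    hop = sp.coo_matrix((np.full(rows.size, -t), (rows, cols)), shape=(N, N))
    eps = np.where(rng.random(N) < x, V_imp, 0.0)
    return (hop + hop.T + sp.diags(eps)).tocsc()


def states_near_reference_points(H, e_lo, e_hi, n_ref, k, rng):
    """Collect k eigenpairs nearest each of n_ref random reference energies in [e_lo, e_hi].

    Shift-invert Lanczos (eigsh with sigma) is used here as a stand-in for JADA.
    """
    energies, iprs = [], []
    for sigma in rng.uniform(e_lo, e_hi, size=n_ref):
        vals, vecs = eigsh(H, k=k, sigma=sigma)
        energies.append(vals)
        # eigsh returns unit-norm eigenvectors, so sum |psi_i|^4 is the IPR.
        iprs.append(np.sum(np.abs(vecs) ** 4, axis=0))
    energies = np.concatenate(energies)
    iprs = np.concatenate(iprs)
    # Windows around nearby reference points overlap; keep each level once.
    _, keep = np.unique(np.round(energies, 10), return_index=True)
    return energies[keep], iprs[keep]


rng = np.random.default_rng(1)
H = cubic_hamiltonian(6, V_imp=-4.0, x=0.1, rng=rng)  # hypothetical parameters
E, P = states_near_reference_points(H, -9.0, -6.0, n_ref=5, k=6, rng=rng)
```

A reference point that falls exactly on an eigenvalue would make the shift-invert factorization singular; with randomly drawn reference points this is very unlikely.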

III The effect of heavy doping

As discussed in the previous section, at a typical doping concentration  a clear impurity band develops in the DOS at about . Fig. 3 shows the DOS and IPR together for different system sizes. The DOS curves for different system sizes collapse onto a single curve that coincides with the ED result of Fig. 2, indicating that we have already captured the essential information of the impurity band. As the system size increases, the IPR at the band edge does not change, which means that the states at the edge of the whole band are localized. In the bulk, the IPR decreases as the system grows; at the center of the impurity band () it drops to zero, just as in the bulk of the band (). However, there is a small peak near , at the right edge of the impurity band. In the vicinity of this point, the IPR tends to saturate to a fixed value as the system size increases. This nonzero saturation suggests another possible mobility edge near the junction between the conduction band and the impurity band.

To test this conjecture, we systematically study the IPR for several system sizes. As shown in Fig. 4(a), we choose four points based on the DOS and IPR. (1) is in the bulk of the conduction band, where the state is extended. (2) is at the right edge of the impurity band; according to our conjecture, the state here is localized. (3) is in the bulk of the impurity band, where the state is extended according to its vanishing IPR in the large-size limit. (4) is on the left edge of the impurity band and thus at the edge of the whole energy band, where the state is expected to be localized. In Fig. 4(b) we again compare the DOS from JADA with that from LAPACK, which shows convergence at large system size. By construction, points (1) and (3) should behave similarly as the system size grows, and likewise points (2) and (4). Fig. 4(c) shows the IPR at these four energies for different system sizes. We plot the data on a logarithmic scale and fit it with the function

| (8) |

The sign of the curvature indicates whether a state is localized. For (1) and (3), the fitted curvature  shows that they are extended states, whereas the opposite sign at points (2) and (4) indicates localized states.
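The exact fit function of Eq. (8) is not reproduced in this text, so the following is only a sketch assuming a quadratic in $\ln L$, $\ln \mathrm{IPR} = a(\ln L)^2 + b\ln L + c$, whose coefficient $a$ measures the curvature. The sizes and IPR values below are synthetic, not data from the paper. A pure power law such as $L^{-3}$ is a straight line on a log-log plot ($a \approx 0$), while an IPR that saturates at a nonzero value bends upward ($a > 0$); how this sign maps onto the criterion quoted above depends on the precise form of Eq. (8).

```python
import numpy as np


def log_log_curvature(L, ipr):
    """Fit ln(IPR) = a (ln L)^2 + b ln L + c and return (a, b).

    The quadratic form is an assumed stand-in for the fit function of Eq. (8).
    """
    a, b, _ = np.polyfit(np.log(L), np.log(ipr), deg=2)
    return a, b


L = np.array([8.0, 10.0, 12.0, 14.0, 16.0])  # hypothetical system sizes
a_pow, b_pow = log_log_curvature(L, L ** -3.0)      # synthetic pure power-law decay
a_sat, _ = log_log_curvature(L, 1e-3 + L ** -3.0)   # synthetic IPR saturating at 1e-3
```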

As a second criterion, we calculate the Thouless number for different system sizes. Fig. 5 shows the results together with the DOS on a common energy axis. The crossing points of the Thouless-number curves for different sizes divide the impurity band into several regions, which we label “L” (localized) and “E” (extended) to indicate their different behavior. With increasing system size, the Thouless number clearly increases in the “E” regions and decreases in the “L” regions. The vertical lines mark the mobility edges, i.e., the boundaries between localized and extended states.
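Locating the crossings can be automated. The sketch below assumes the Thouless numbers of two system sizes are available on a common energy grid (in practice after disorder averaging, since raw curves are noisy); it finds sign changes of their difference and refines each by linear interpolation. The function name and the synthetic test curves are illustrative.

```python
import numpy as np


def crossing_energies(E, g_small, g_large):
    """Energies where Thouless-number curves of two sizes cross, by linear interpolation.

    Where g_large > g_small the states are extended-like ("E" region);
    where g_large < g_small they are localized-like ("L" region).
    """
    E = np.asarray(E, float)
    d = np.asarray(g_large, float) - np.asarray(g_small, float)
    i = np.nonzero(d[:-1] * d[1:] < 0)[0]  # strict sign change between neighbours
    return E[i] - d[i] * (E[i + 1] - E[i]) / (d[i + 1] - d[i])


E = np.linspace(0.0, 1.0, 101)
# Synthetic curves whose difference changes sign at E = 0.305 and E = 0.705.
crossings = crossing_energies(E, np.zeros_like(E), (E - 0.305) * (E - 0.705))
```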

IV Deep learning approach

The convolutional neural network (CNN), originally designed for 2D image recognition, has been widely adopted in studies of phase transitions and achieves high recognition accuracy. A standard image-recognition model can be applied to a 3D electron system by integrating the electron density along one direction, but the information about the density along that direction is then lost. We therefore design a 3D CNN model for our 3D lattice model. To distinguish localized and delocalized states, the CNN model returns two real numbers representing the probabilities of the extended and localized states () for a given wave function. If the probability of the extended state is larger than 0.5, we classify the eigenstate as delocalized; otherwise, as localized. Owing to the limited graphics memory (8 GB) of our graphics card (NVIDIA GTX 1080), we consider a  3D lattice. The hidden layers of the CNN model consist of convolutional layers, max-pooling layers, and fully connected layers. The loss function is the cross entropy . During training, we minimize the loss function with the RMSPropOptimizer solver in TensorFlow Abadi et al. (2015), a variant of stochastic gradient descent. Details of the neural network model are given in Appendix A.
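The output layer and decision rule described above amount to a softmax over two logits, a cross-entropy loss, and a 0.5 threshold. The NumPy sketch below assumes column 0 holds the extended-state probability and column 1 the localized one; this ordering and the function names are our assumptions, and in the actual TensorFlow model these steps are handled by the framework.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax; subtracting the row maximum avoids overflow."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cross_entropy(p, y_onehot, eps=1e-12):
    """Batch-averaged cross entropy -sum_k y_k log p_k (eps guards against log(0))."""
    return float(-np.mean(np.sum(y_onehot * np.log(p + eps), axis=-1)))


def classify(p):
    """Assumed layout: column 0 = P(extended), column 1 = P(localized)."""
    return np.where(p[:, 0] > 0.5, "extended", "localized")


p = softmax(np.array([[2.0, 0.0], [0.0, 2.0]]))
labels = np.array([[1.0, 0.0], [0.0, 1.0]])  # true classes: extended, localized
print(classify(p), cross_entropy(p, labels))
```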

The training data for the different phases are sampled from the 3-dimensional Anderson model with different disorder parameters. It is well known that the critical disorder of the 3D Anderson model at the band center (E = 0) is $W_c = 16.54 \pm 0.01$ MacKinnon and Kramer (1981, 1983); Kramer and MacKinnon (1993). When the disorder strength exceeds the critical value, the wave functions are exponentially localized and the system behaves as an insulator; otherwise, the wave functions are delocalized and the system behaves as a metal. This phenomenon is known as the metal-insulator transition (MIT) Anderson (1958). We take 4000 eigenstates from  as the delocalized phase and 4000 eigenstates from  as the localized phase, in steps of 0.1. For each W, we prepare 40 different disorder realizations, and for each realization we take five eigenstates around the band center (E = 0). For the validation data set, we take another 600 eigenstates from  and 600 eigenstates from , in steps of 0.1. At each training step, we randomly select 256 eigenstates from the training set as input, calculate the gradient of the loss function with respect to the parameters of the CNN model, and update them. Every 50 steps, we test the prediction accuracy on the validation set and save the model with the highest prediction accuracy.
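A sketch of the training-set generation follows. It assumes periodic boundaries, hopping $-t$, on-site energies drawn uniformly from $[-W/2, W/2]$ (the convention for which $W_c \approx 16.5$), and network inputs given by the density $|\psi|^2$ reshaped to the lattice. The disorder windows used in the paper are not recoverable from this text, so the `W_values` passed in are placeholders; shift-invert Lanczos (`eigsh` with `sigma=0.0`) stands in for whichever eigensolver was actually used, and the function names are hypothetical.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import eigsh

W_C = 16.54  # critical disorder quoted in the text (box distribution, band center)


def anderson_3d(L, W, rng, t=1.0):
    """3D Anderson model on an L^3 lattice: PBC, on-site energies uniform in [-W/2, W/2]."""
    N = L ** 3
    idx = np.arange(N).reshape(L, L, L)
    rows = np.concatenate([idx.ravel()] * 3)
    cols = np.concatenate([np.roll(idx, -1, axis=a).ravel() for a in range(3)])
    hop = sp.coo_matrix((np.full(rows.size, -t), (rows, cols)), shape=(N, N))
    eps = rng.uniform(-W / 2, W / 2, size=N)
    return (hop + hop.T + sp.diags(eps)).tocsc()


def make_dataset(L, W_values, n_real, n_states, rng):
    """Densities |psi|^2 of the n_states eigenstates nearest E = 0; label 1 = localized."""
    X, y = [], []
    for W in W_values:
        for _ in range(n_real):
            _, vecs = eigsh(anderson_3d(L, W, rng), k=n_states, sigma=0.0)
            for v in vecs.T:
                X.append((np.abs(v) ** 2).reshape(L, L, L))  # one 3D "image" per state
                y.append(int(W > W_C))
    return np.stack(X), np.array(y)


# Tiny placeholder run: one weak- and one strong-disorder value on a 5^3 lattice.
X, y = make_dataset(5, [5.0, 25.0], n_real=2, n_states=3, rng=np.random.default_rng(0))
```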

To assess the prediction accuracy at different disorder strengths W, we generate another 16000 eigenstates from the Anderson model using  and . The prediction accuracy as a function of disorder strength is shown in Fig. 6(a); the overall accuracy is . The accuracy is lowest near the critical disorder, where it is about . We further test the trained model by reproducing the phase diagram of the 3D Anderson model. The test data are sampled from  in steps of 0.1. For each realization at a given W, we feed the 5 eigenstates closest to the band center (E = 0) to the network and take the average of their delocalized probabilities as the delocalized probability of that realization. We then average this probability over 5 random realizations for each W. The phase diagram obtained with the trained CNN model is shown in Fig. 6(b), which shows that the model successfully captures the MIT.
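The averaging procedure for the phase diagram can be sketched as follows, together with one simple way to read off a transition: the disorder at which the averaged extended-state probability first drops below 0.5. The array layout, the threshold estimate, and the synthetic logistic test curve are assumptions made for illustration.

```python
import numpy as np


def phase_curve(p_ext):
    """p_ext[w, r, s]: P(extended) for disorder index w, realization r, eigenstate s.

    Average over the eigenstates of each realization, then over realizations.
    """
    return p_ext.mean(axis=2).mean(axis=1)


def estimate_wc(W, curve, threshold=0.5):
    """Disorder at which the averaged P(extended) first drops below threshold."""
    below = np.nonzero(curve < threshold)[0]
    if below.size == 0 or below[0] == 0:
        return None  # no crossing inside the scanned window
    i = below[0]
    # Linear interpolation between the last point above and the first point below.
    return W[i - 1] + (threshold - curve[i - 1]) * (W[i] - W[i - 1]) / (curve[i] - curve[i - 1])


W = np.linspace(15.0, 18.0, 31)               # steps of 0.1, as in the text
p = 1.0 / (1.0 + np.exp(W - 16.53))           # synthetic network output, not real data
p_ext = np.broadcast_to(p[:, None, None], (31, 5, 5))
curve = phase_curve(p_ext)
wc = estimate_wc(W, curve)
```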

Given its high classification accuracy, the trained neural network model can now be used to search for extended states in the impurity band. We generate 1000 random realizations of the Hamiltonian in Eq. (1) with doping probability  and disorder parameter , and obtain all eigenstates by exact diagonalization with LAPACK. These eigenstates are fed to the trained CNN model to compute their delocalized probability. Averaging the probability over the 1000 realizations gives the result shown in Fig. 7. The CNN model confirms that delocalized states exist in the impurity band, in good agreement with the results obtained from the IPR and the Thouless number.
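Because the eigenvalues differ from realization to realization, averaging the CNN output over realizations requires grouping states by energy. A minimal sketch, assuming the averaging is done in fixed energy bins (the binning scheme, the function name, and the toy numbers are our assumptions):

```python
import numpy as np


def binned_average(energies, p_ext, bins):
    """Average P(extended) over all eigenstates, from all realizations, in each energy bin."""
    energies = np.asarray(energies, float).ravel()
    p_ext = np.asarray(p_ext, float).ravel()
    which = np.digitize(energies, bins) - 1  # bin index, -1 or len(bins)-1 if outside
    valid = (which >= 0) & (which < len(bins) - 1)
    sums = np.bincount(which[valid], weights=p_ext[valid], minlength=len(bins) - 1)
    counts = np.bincount(which[valid], minlength=len(bins) - 1)
    with np.errstate(invalid="ignore", divide="ignore"):
        return sums / counts  # NaN marks bins that received no states


# Toy input: four eigenstates with their (made-up) CNN extended-state probabilities.
avg = binned_average([-7.1, -7.05, -6.5, -6.4], [1.0, 0.8, 0.2, 0.0], [-7.5, -7.0, -6.0, -5.0])
```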

V Conclusions

In this work, we numerically investigate the properties of the states in the “impurity band” of heavily doped non-magnetic semiconductors. Using full exact diagonalization and sparse matrix diagonalization with the Jacobi-Davidson (JADA) method, we find that at a typical doping probability  a well-developed impurity band appears in the DOS at about . We calculate the IPR, Thouless number, and DOS together for different system sizes and study the relations between them. Fitting the size dependence of the IPR at four representative energies suggests the existence of extended states in the impurity band. The Thouless number calculation supports the same conclusion and locates the mobility edges.

We also use supervised deep learning, a state-of-the-art approach to pattern recognition, to distinguish the extended and localized states in the impurity band. We train a 3D CNN model on data generated from the Anderson model and then apply the trained network to classify the states in the “impurity band”. Our trained neural network achieves high accuracy () in classifying the states of the Anderson model. Its predictions for the “impurity band” also support the conclusions drawn from the size dependence of the IPR and the Thouless number, although the predicted locations of the mobility edges differ slightly. Our calculation gives direct evidence that there are three mobility edges in the impurity band for a specific on-site impurity potential in heavily doped non-magnetic semiconductors.

Acknowledgements.

Z-X. Hu is supported by the National Natural Science Foundation of China Grant No. 11974064 and 12147102, the Chongqing Research Program of Basic Research, and Frontier Technology Grant No. cstc2021jcyjmsxmX0081, Chongqing Talents: Exceptional Young Talents Project No. cstc2021ycjh-bgzxm0147, and the Fundamental Research Funds for the Central Universities Grant No. 2020CDJQY-Z003. HC acknowledges the U.S. Department of Energy, Office of Science, Basic Energy Sciences under Award No. DE-SC0022216.

Appendix A Neural network model architecture and hyperparameters

The 3D CNN model used in this paper has an architecture similar to “AlexNet” Krizhevsky et al. (2012) and “VGGNet” Simonyan and Zisserman (2014), but with fewer convolutional, max-pooling, and fully connected layers, because the linear size of our 3D lattice is much smaller than the side length of typical images. The architecture of our model is shown in Fig. 8, which also lists the input and output dimensions of each layer.

The convolution kernels in the first and second convolutional layers have sizes  and , respectively. A ReLU (rectified linear unit) activation Nair and Hinton (2010) is applied after each convolutional and fully connected layer except the last, which uses the softmax function Bridle (1989). All artificial neurons include bias parameters. Dropout Srivastava et al. (2014) with probability  is applied after the first fully connected layer to reduce overfitting and improve the evaluation accuracy.

References

- Anderson (1958) P. W. Anderson, Phys. Rev. 109, 1492 (1958), URL https://link.aps.org/doi/10.1103/PhysRev.109.1492.

- Avérous and Balkanski (1991) M. Avérous and M. Balkanski, in Semimagnetic semiconductors and diluted magnetic semiconductors (Springer, 1991).

- Ohno (1998) H. Ohno, Science 281, 951 (1998), URL https://www.science.org/doi/abs/10.1126/science.281.5379.951.

- Beschoten et al. (1999) B. Beschoten, P. A. Crowell, I. Malajovich, D. D. Awschalom, F. Matsukura, A. Shen, and H. Ohno, Phys. Rev. Lett. 83, 3073 (1999), URL https://link.aps.org/doi/10.1103/PhysRevLett.83.3073.

- Cook and Berciu (2012) A. M. Cook and M. Berciu, Phys. Rev. B 85, 235130 (2012), URL https://link.aps.org/doi/10.1103/PhysRevB.85.235130.

- Weiße and Fehske (2008) A. Weiße and H. Fehske, in Computational many-particle physics (Springer, 2008), pp. 529–544.

- OJALVO and NEWMAN (1970) I. U. Ojalvo and M. Newman, AIAA Journal 8, 1234 (1970), URL https://doi.org/10.2514/3.5878.

- Carleo and Troyer (2017) G. Carleo and M. Troyer, Science 355, 602 (2017), ISSN 0036-8075, URL http://science.sciencemag.org/content/355/6325/602.

- Carrasquilla and Melko (2017) J. Carrasquilla and R. G. Melko, Nature Physics 13, 431 (2017).

- Ch’Ng et al. (2017) K. Ch’Ng, J. Carrasquilla, R. G. Melko, and E. Khatami, Physical Review X 7, 031038 (2017).

- Van Nieuwenburg et al. (2017) E. P. Van Nieuwenburg, Y.-H. Liu, and S. D. Huber, Nature Physics 13, 435 (2017).

- Venderley et al. (2018) J. Venderley, V. Khemani, and E.-A. Kim, Phys. Rev. Lett. 120, 257204 (2018), URL https://link.aps.org/doi/10.1103/PhysRevLett.120.257204.

- Wetzel (2017) S. J. Wetzel, Phys. Rev. E 96, 022140 (2017), URL https://link.aps.org/doi/10.1103/PhysRevE.96.022140.

- Rodriguez-Nieva and Scheurer (2019) J. F. Rodriguez-Nieva and M. S. Scheurer, Nature Physics 15, 790 (2019).

- Lidiak and Gong (2020) A. Lidiak and Z. Gong, Phys. Rev. Lett. 125, 225701 (2020), URL https://link.aps.org/doi/10.1103/PhysRevLett.125.225701.

- Hsu et al. (2018) Y.-T. Hsu, X. Li, D.-L. Deng, and S. D. Sarma, Physical Review Letters 121, 245701 (2018).

- Hendry et al. (2021) D. Hendry, H. Chen, P. Weinberg, and A. E. Feiguin, Phys. Rev. B 104, 205130 (2021), URL https://link.aps.org/doi/10.1103/PhysRevB.104.205130.

- Choo et al. (2019) K. Choo, T. Neupert, and G. Carleo, Phys. Rev. B 100, 125124 (2019), URL https://link.aps.org/doi/10.1103/PhysRevB.100.125124.

- Pfau et al. (2020) D. Pfau, J. S. Spencer, A. G. Matthews, and W. M. C. Foulkes, Physical Review Research 2, 033429 (2020).

- Sharir et al. (2020) O. Sharir, Y. Levine, N. Wies, G. Carleo, and A. Shashua, Physical Review Letters 124, 020503 (2020).

- Hendry et al. (2022) D. Hendry, H. Chen, and A. Feiguin, Phys. Rev. B 106, 165111 (2022), URL https://link.aps.org/doi/10.1103/PhysRevB.106.165111.

- Chen et al. (2022) H. Chen, D. G. Hendry, P. E. Weinberg, and A. Feiguin, in Advances in Neural Information Processing Systems, edited by A. H. Oh, A. Agarwal, D. Belgrave, and K. Cho (2022), URL https://openreview.net/forum?id=qZUHvvtbzy.

- Wang (2016) L. Wang, Phys. Rev. B 94, 195105 (2016), URL https://link.aps.org/doi/10.1103/PhysRevB.94.195105.

- Ohtsuki and Ohtsuki (2017) T. Ohtsuki and T. Ohtsuki, Journal of the Physical Society of Japan 86, 044708 (2017), URL https://doi.org/10.7566/JPSJ.86.044708.

- Tanaka and Tomiya (2017) A. Tanaka and A. Tomiya, Journal of the Physical Society of Japan 86, 063001 (2017).

- Mano and Ohtsuki (2017) T. Mano and T. Ohtsuki, Journal of the Physical Society of Japan 86, 113704 (2017), URL https://doi.org/10.7566/JPSJ.86.113704.

- Broecker et al. (2017) P. Broecker, J. Carrasquilla, R. G. Melko, and S. Trebst, Scientific Reports 7, 1 (2017).

- Schindler et al. (2017) F. Schindler, N. Regnault, and T. Neupert, Physical Review B 95, 245134 (2017).

- Li et al. (2019) Z. Li, M. Luo, and X. Wan, Phys. Rev. B 99, 075418 (2019), URL https://link.aps.org/doi/10.1103/PhysRevB.99.075418.

- Dong et al. (2019) X.-Y. Dong, F. Pollmann, and X.-F. Zhang, Phys. Rev. B 99, 121104 (2019), URL https://link.aps.org/doi/10.1103/PhysRevB.99.121104.

- Kotthoff et al. (2021) F. Kotthoff, F. Pollmann, and G. De Tomasi, Physical Review B 104, 224307 (2021).

- Zhang et al. (2019a) W. Zhang, L. Wang, and Z. Wang, Physical Review B 99, 054208 (2019a).

- Zhang et al. (2019b) W. Zhang, J. Liu, and T.-C. Wei, Phys. Rev. E 99, 032142 (2019b), URL https://link.aps.org/doi/10.1103/PhysRevE.99.032142.

- Käming et al. (2021) N. Käming, A. Dawid, K. Kottmann, M. Lewenstein, K. Sengstock, A. Dauphin, and C. Weitenberg, Machine Learning: Science and Technology 2, 035037 (2021), URL https://doi.org/10.1088/2632-2153/abffe7.

- Krizhevsky et al. (2012) A. Krizhevsky, I. Sutskever, and G. E. Hinton, in Advances in Neural Information Processing Systems, edited by F. Pereira, C. Burges, L. Bottou, and K. Weinberger (Curran Associates, Inc., 2012), vol. 25, URL https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf.

- Bohrdt et al. (2021) A. Bohrdt, S. Kim, A. Lukin, M. Rispoli, R. Schittko, M. Knap, M. Greiner, and J. Léonard, Physical Review Letters 127, 150504 (2021).

- Théveniaut and Alet (2019) H. Théveniaut and F. Alet, Physical Review B 100, 224202 (2019).

- Brndiar and Markoš (2006) J. Brndiar and P. Markoš, Physical Review B 74, 153103 (2006).

- Edwards and Thouless (1972) J. Edwards and D. Thouless, Journal of Physics C: Solid State Physics 5, 807 (1972).

- Bollhöfer and Notay (2007) M. Bollhöfer and Y. Notay, Comput. Phys. Commun. 177, 951 (2007).

- Andres et al. (1981) K. Andres, R. N. Bhatt, P. Goalwin, T. M. Rice, and R. E. Walstedt, Phys. Rev. B 24, 244 (1981), URL https://link.aps.org/doi/10.1103/PhysRevB.24.244.

- Abrahams et al. (1979) E. Abrahams, P. W. Anderson, D. C. Licciardello, and T. V. Ramakrishnan, Phys. Rev. Lett. 42, 673 (1979), URL https://link.aps.org/doi/10.1103/PhysRevLett.42.673.

- Anderson et al. (1999) E. Anderson, Z. Bai, C. Bischof, S. Blackford, J. Demmel, J. Dongarra, J. Du Croz, A. Greenbaum, S. Hammarling, A. McKenney, et al., LAPACK Users’ Guide (Society for Industrial and Applied Mathematics, Philadelphia, PA, 1999), 3rd ed., ISBN 0-89871-447-8 (paperback).

- Abadi et al. (2015) M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S. Corrado, A. Davis, J. Dean, M. Devin, et al., TensorFlow: Large-scale machine learning on heterogeneous systems (2015), software available from tensorflow.org, URL https://www.tensorflow.org/.

- MacKinnon and Kramer (1981) A. MacKinnon and B. Kramer, Physical Review Letters 47, 1546 (1981).

- MacKinnon and Kramer (1983) A. MacKinnon and B. Kramer, Zeitschrift für Physik B Condensed Matter 53, 1 (1983).

- Kramer and MacKinnon (1993) B. Kramer and A. MacKinnon, Reports on Progress in Physics 56, 1469 (1993).

- Simonyan and Zisserman (2014) K. Simonyan and A. Zisserman, arXiv preprint arXiv:1409.1556 (2014).

- Nair and Hinton (2010) V. Nair and G. E. Hinton, in ICML (2010).

- Bridle (1989) J. Bridle, Advances in Neural Information Processing Systems 2 (1989).

- Srivastava et al. (2014) N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, Journal of Machine Learning Research 15, 1929 (2014).