The Role of Positive and Negative Citations in Scientific Evaluation

Abstract

Quantifying the impact of scientific papers objectively is crucial for research output assessment, which subsequently affects institution and country rankings, research funding allocations, academic recruitment and national/international scientific priorities. While most of the assessment schemes based on publication citations may potentially be manipulated through negative citations, in this study, we explore Conflict of Interest (COI) relationships and discover negative citations and subsequently weaken the associated citation strength. PANDORA (Positive And Negative COI- Distinguished Objective Rank Algorithm) has been developed, which captures the positive and negative COI, together with the positive and negative suspected COI relationships. In order to alleviate the influence caused by negative COI relationship, collaboration times, collaboration time span, citation times and citation time span are employed to determine the citing strength; while for positive COI relationship, we regard it as normal citation relationship. Furthermore, we calculate the impact of scholarly papers by PageRank and HITS algorithms, based on a credit allocation algorithm which is utilized to assess the impact of institutions fairly and objectively. Experiments are conducted on the publication dataset from American Physical Society (APS) dataset, and the results demonstrate that our method significantly outperforms the current solutions in Recommendation Intensity of list R at top-K and Spearman’s rank correlation coefficient at top-K.

Index Terms:

Conflict of Interest, Negative Citations, Impact Evaluation.I Introduction

With the development of scholarly big data, quantifying research output plays an important role in ranking institutions and countries, allocating research grants, making hiring decisions and planning scientific priorities [1, 2, 3]. For instance, it is observed that the institutional appraisal system has been consistently evolving to address the various needs in scientific impact evaluation [4, 5, 6]. While self-citations are meant for reflecting the research progress or knowledge diffusion which is a standard and acceptable procedure [7], the act could, however, be abused to artificially inflate research impact [8] or introduce excessive self-advertisement [9]. In this paper, the scope of self-citations is extended to cover co-authors citations (note: co-author citations are not limited to co-authors of the citing paper, but co-authors in all papers over a pre-defined time frame, such as over the past three years), as well as colleague citations (i.e. co-workers belong to the same institute). The act of the extended scope of self-citation, which is referred as COI citations in this paper, may either be necessary or negative as discussed above. Prior study shows that self-citation accounts for under a three-year citation window [10], and this figure is expected higher under COI as it’s an extended scope of self-citation. Unfortunately, there s lack of universal ground truth to differentiate legitimate citations among all COI citations. Thus, there’s a need to develop a mechanism to model COI citations, and associate different weights to different type of citations. It should be noted that, legitimate self-citations should be treated as regular citations with standard weights (usually 1); whereas negative citations should be given a lower weight (i.e. this weight may be less than 1) to reduce their inflated impact.

Current approaches of institution appraisal fall in two primary categories: full counting and fractional counting. The full counting based methods assume that one scholarly paper contributes equally to all authors’ institutions, so the impact of the paper could be counted multiple times [11]. The fractional counting based methods consider the rate indicators of a selected list of top journals and the best papers or focus only on highly-cited papers [12]. Fractional counting may also suffer from potential distortion in institutional impact evaluation. This is because diversity of negative citations [13, 14] is neglected. A feasible solution to the problem is to design a better assessment metric, which can identify the negative citations, assesses the impact of academic papers objectively and allocates fair shares to contributing institutions.

The evaluation techniques for the impact of scientific outputs have evolved dramatically in recent years, from citation-based indicators [15] to rank-based metrics [13, 16, 17]. A nonlinear PageRank algorithm was proposed to improve the effectiveness of reference ranking [13]. FutureRank was presented to rank scientific articles by citation, authors and time [16]. Based on FutureRank, Wang et al. [17] proposed a CAJTRank to fairly evaluate scientific articles by considering citation, authors, journals and time information. Due to the rapid development of impact metrics, some researchers appealed to exploiting a measured method for metrics [18, nature2013beware]. Unfortunately, most existing metrics regard all citations with equal weights, and it is likely that these metrics do not properly reflect accurate impact of authors, journals and institutions. Therefore, there is a crucial need for an improved metric to fairly assess academic papers.

There are two technical challenges for fair appraisal of academic affiliations: (1) identifying negative citations; and (2) distinguishing the citation strength, and allocating the impact of scientific paper to the affiliation(s) of each author. Dissecting the citation relationship is a feasible way for solving the first problem, and we define positive/negative COI relationships and positive/negative suspected COI relationships as follows:

Positive/negative COI relationships: for two papers with existing citing relationship, if the authors of the two papers are ever co-authors, and if there are one or more papers citing the two papers at the same time, the citation behavior is viewed as positive COI relationships. Otherwise, if no independent papers (papers from different authors) co-cite the two papers, the citation is considered as a negative COI relationship.

Positive/negative suspected COI relationships: similar to the definition of Positive/negative COI, which leverages citation behavior among coauthors, suspected COI leverages the citation behaviors for authors from the same affiliation. Likewise, if there are independent papers (papers without authors from the same institute) recognizing the correlation of papers from the same affiliation, the citation is viewed as an positive suspected COI, otherwise the citation is considered as a negative suspected COI.

We propose PANDORA to model both the COI and suspected COI relationships for fairly measuring the impact of papers. We leverage the credit allocation algorithm [19] to capture coauthor s contributions of a paper, and adjusted weighting is applied to distribute the impact of a publication to affiliation(s) of each author.

In this paper, we differentiate positive and negative COI relationships, positive and negative suspected COI relationships according to citation patterns, as illustrated in Fig. 1. The measurements of negative COI and negative suspected COI relationships between researchers employ the following four factors: collaboration times, collaboration time span, citation times and citation time span. It should be noted that if the authors of paper citing paper is a positive COI, the citation is regarded normal, and the citation strength is set as 1. Extensive experiments are conducted on two subsets of American Physical Society (APS), i.e. PRC and PRE. The results demonstrate that our method outperforms the existing approaches in the Recommendation Intensity at top-K and Spearman s rank correlation coefficient at top-K.

(Note: where and are the list of papers and authors, respectively. The red line and blue line denote the citing relationship. The figure illustrates four cases: (A) Before cites , the authors of and have co-authored one or multiple publications, and and are co-cited by other publications. Just like cites , author and author ever collaborated , and and were co-cited by , makes up a positive COI author pair. (B) Compared with (A), and are not co-cited by other publications, just like and have not been cited by other papers simultaneously, composes a negative COI author pair. (C) Before cites , between authors of and have not collaborated each other, but and belong to the same affiliation, and and are co-cited by other papers, just like the relationship of and , is a positive suspect COI author pair. (D) Compared with (C), and are not co-cited by other publications, as and , composes a negative suspect COI author pair.)

II Design of PANDORA

II-A Dataset

Our experiments are conducted on the APS dataset, which contains 71,287 publications published in two different subsets of APS: Physical Review C and Physical Review E (http://publish.aps.org/datasets), between 1970 and 2013 (43 years). Each record in the dataset includes the paper s title, DOI, author(s), date of publication, affiliation(s) of authors and publisher. A list of citations is provided by an independent dataset within the APS dataset.

II-B Impact of a scholarly paper

The structure of PANDORA is illustrated in Fig. 2. PANDORA quantifies publication impact by differentiating positive COI, negative COI, positive suspected COI and negative suspected COI between the citing and cited publications. Furthermore, PANDORA computes the authoritative score of each paper based on CAJTRank [17]. CAJTRank was a graph-based ranking method and used PageRank [20] algorithm and HITS [21] algorithm simultaneously to rank scientific papers by relying on the four factors: citations, authors, journals (conferences) and publication time. PANDORA adopts the weighted PageRank to fairly evaluate the impact of scholarly papers, and it also leverages the credit allocation algorithm [19] to allocate the impact of a paper to its signed authors. In this paper, we consider citations, authors, journals and time factors, mainly because these factors can influence the impact of papers.

II-C Identification of positive COI

If the authors of a citing paper and a cited paper have been co-authors of one or more papers, COI relationships exist. Although the COI citations may distort the appraisal of research impact, some of these citations are positive and necessary. Consider a scenario where the authors of the citing paper and the cited paper have published papers together. If and are co-cited by the papers from different authors, we consider the citation of and is reasonable, and we refer the citation as a positive COI, with its citation strength set as 1.

II-D Identification of negative COI

If COI relationships exist between the authors of a citing paper and a cited paper , and no paper cites and simultaneously, we define cases like this as negative COI. The citing strength is quantified by the correlation strength between the authors, and we introduce two factors: collaboration times, and collaboration time span, for adjusting the weight of citation strength. The negative COI strength of co-authors in PANDORA is defined as follows:

| (1) |

where is the negative COI strength of co-authors between the th author and the th author. indicates the cumulative number of papers coauthored by the th author and the th author. , where is a set of papers published by the th author, and is a set of papers published by the th author. is the last year of the th author citing the th author. is the initial year of the th author starts citing the th author. indicates the number of year between the first and the last collaborations of authors and .

The relationship strength of each two publications based on the co-author’s negative COI is calculated by:

| (2) |

where is the negative COI strength of the th paper and the th paper. It is the sum of negative COI between each two authors of citing paper and cited paper. indicates the cumulative number of papers coauthored between each two signed authors in the th paper and the th paper. , is the set of all papers with signed authors of the th paper. is the all papers set of signed authors of the th paper. The citation strength of each two publications is defined as follows:

| (3) |

where an exponentially decaying formula is proposed to calculate the citation strength between paper and paper . We consider the citing strength ranges from 0 to 1. As scholars usually intend to cite recent work, if a paper fails to attract citations in early years after being disseminated, the chance of attracting more citations over time will be limited. is the citation strength of the th paper citing the th paper. is defined within a range between 0 and 1 in our work, instead of assuming the citation strength as 1 (i.e. without regarding the COI relationships) in previous works. The value of citation strength is reasonable with the range between 0 and 1, and the formula favors current citation. is a constant value illustrating predefined decay parameter [17]. stands for the current time, is the time of paper citing paper , and represents the time duration of paper citing paper .

II-E Identification of positive suspected COI

Given paper cites paper , although the authors between the two papers have not collaborated in producing any joint paper, the authors of and belong to the same affiliation, we consider that suspected COI relationship exists between them. If the two publications are co-cited by one or more publications (i.e. demonstrated relevance), we regard paper citing paper as positive suspected COI, and the citing strength is set as 1.

II-F Identification of negative suspected COI

Given paper cites paper , the authors between the two papers have not co-authored any paper, and the authors of and belong to the same affiliation, however the two publications are not co-cited by other publications (i.e. without demonstrated relevance), we regard paper citing paper as negative suspected COI. The citing strength is quantified by introducing the two factors: citing times and citing time span. The strength of negative suspected COI relationship of each two authors is defined as follows:

| (4) |

where is the negative suspected COI strength of co-authors of the th author and the th author. is the cumulative number of papers of the th author citing the th author. indicates the number of years between the first and the last citing of authors and . The strength of suspected COI relationship of each two articles is calculated by:

| (5) |

where is the negative suspected COI strength of the th paper and the th paper. It is a summation of all the negative suspected COI between authors of paper and paper . is the cumulative citing number between the authors of the th paper and the th paper. The strength of citation relationship of each two articles is defined as:

| (6) |

II-G The authority score of a paper

In CAJTRank, all citing weights are set as 1, and the abnormal citations are ignored, which leads to the potential issues in article impact evaluation. PANDORA is proposed to address these issues to improve objective and accurate impact assessment of research articles. The core idea of PANDORA is that the prestige of a publication is quantified by the scores of its weighted-PageRank, authors, journal published and references, namely the impact of a publication. Compared to CAJTRank algorithm, PANDORA method constructs a weighted-PageRank to capture the authority score of each publication in citation networks. While the initial score of each paper is set as , indicates the total numbers of scholarly papers in the experiment. Meanwhile, PANDORA also considers the authority scores of each author, journal and references, which are calculated by HITS algorithm. Particularly, CAJTRank algorithm assumed all co-authors’ contributions are equal to a paper. The CAJTRank algorithm neglects a fact that contributions of different authors of a paper are never equal. In order to resolve this problem, credit allocation is introduced in order to reasonably distribute the influential score of different authors of individual paper [19]. Concrete process of computing the prestige score of each publication is demonstrated as follows:

The weighted PageRank score of paper is computed by

| (7) |

where indicates all the papers of linking to paper , is the total number of publication linking out. illustrates the citation strength of paper citing paper . is the original score of paper before iteration is updated.

The authors’ authority scores of single publication are determined by the influence score of each author, while the prestige score of individual author is related to the impact scores of his/her published papers, and can be calculated by the HITS algorithm. When computing the hub score of each author, credit allocation algorithm is used to distribute the proportion of impact of single paper to different authors.

Specifically, the authors’ prestige scores of each paper, , is calculated by

| (8) |

where indicates a summation of all authors’ hub scores in the experimental data. denotes the co-authors’ list of paper , shows all the papers published by author , illustrates the proportion of publication impact score by different authors of . is the prestige score of paper , is the number of publications of author .

Likewise, the prestige score of a journal paper is computed by the HITS algorithm. Specific formula is shown as follows:

| (9) |

where demonstrates the prestige score of publication transmitted from its published journal, and the hub score of each journal includes the prestige scores of all the papers published in the journal. is the sum of all journals’ hub scores, is the journal published by paper , and each paper belongs to one journal. is the set of papers published by journal , is the total number of publications in .

The reference scores of each publication are also computed by the HITS algorithm:

| (10) |

where shows the publication score collected from the authority scores of its references. indicates the total scores transmitted from all the hub papers. contains all the publications that links to. is the sum of publication references .

The authority score of each article contains the four components: PageRank score of the paper, scores of authors, journal and references of this work. The authority score is defined as the weighted sum of these components plus a normalize term:

| (11) |

In our experiment, if the score gap between the present and previous prestige of each paper is less than 0.0001, we consider the presented PANDORA iterative algorithm converges. indicates the authority score of publication . , , and are constant parameters, ranging from 0 to 1. The probability of random jump is set as 0.15 experimentally; meanwhile, the summation of is set as 0.85 for obtaining good experimental results. Currently, the typical approaches for parameter estimation include simple linear regression, multivariate linear regression and support vector machine regression [22]. Based on our experimental characteristic, multivariate linear regression is employed to estimate the parameters of PANDORA, CAJTRank and FutureRank, due to the characters of different factors and linear evaluation. We set the sum of all parameters as 0.85 in the three algorithms, and we find that a parameter captures relative high value, and other parameters receive relative low values by multivariate linear regress. We then estimate three groups of optimal parameters to compare the accuracy of RI and Spearman s rank correlation coefficients of the above mentioned three algorithms. More details can be found in reference [23].

II-H Impact of institution and country

In order to assess the impact of each institution objectively, the computing process is divided into the two parts in this section. The first part is to objectively allocate the impact of each publication to its co-authors by PANDORA. If a publication is only signed by an author, the prestige score of the paper belongs to the single author and this situation is relatively popular in decades ago. With the development of Internet, the collaboration among multiple authors also starts to prevail. Correspondingly, fair distribution of publication impact to multiple authors becomes an important practice to evaluate the impact of different scholars and their institutions.

To fairly distribute the impact of a paper, credit allocation algorithm is leveraged to resolve the coauthors’ contributions to a paper. In this algorithm, the contributive proportion of each author to a paper is determined by the total credit from all of his/her co-cited papers. The second part is to calculate the impact of different institutions. The impact of a scholar is defined as follows:

| (12) |

where refers to the set of individual scholar’s impact captured from his/her publications. refers to the proportion of credit allocation to different authors. indicates the prestige score of a scholarly paper.

The institutional impact is determined by the impact of all scholars’ publications in this institution:

| (13) |

where indicates the set of institutional impact, containing the impact of all scholars in this institution. denotes a scholar’s impact. For the authors with multiple affiliations, we consider the first affiliation as the primary institution.

Furthermore, the impact of a country is determined by the impact of publications of all institutions in this country. Given a country with institutions, the impact of a country is defined as follows:

| (14) |

where represents the set of country impact. denotes the institutional impact.

III Results

III-A Measuring the impact of papers

In order to objectively evaluate the impact of institutions, we first explore the COI relationships in citation networks. 71,287 papers with 755,902 author pairs are analyzed in our experiment. The author pairs are divided into the four categories: positive COI, negative COI, positive suspected COI and negative suspected COI. It is interesting to note that the author pairs of positive COI relationships and negative COI relationships are 59,388 pairs and 48,377 pairs respectively. There are 25,803 papers with positive COI, and 24,294 papers about with negative COI. We also find that there are 3,196 author pairs exist suspected COI relationships, in which author pairs of positive suspected COI relationships and negative suspected COI relationships are 1,645 and 1,551 respectively.

We conduct the experiments on two subsets of APS to compare PANDORA with FutureRank [16] and CAJTRank [17]. The experiments use two metrics: [23] and Spearman s rank correlation coefficient [24]. Due to the lack of ground truth for ranking papers, previous research adopted the future PageRank score [16] or the future citations [17] as the ground truth. In our study, citations without COI are used as the ground truth instead. The reason is that both future PageRank scores and future citations contain negative citations. Therefore, the ground truth may be biased, and it likely results in the inaccurate appraisal of the impact of academic publications.

The concept of is described as follows: let indicate the list of top-K returned papers of a ranking method and represent the list of ground truth. For any scholarly paper in with the ranked order , the of at is defined as:

| (15) |

The above formula implies that for any article of the top-K ground truth list, if it is ranked higher, of the article will be higher. Correspondingly, we are able to gain the of the list at . According to the of each article, the of the list at could be defined as follows:

| (16) |

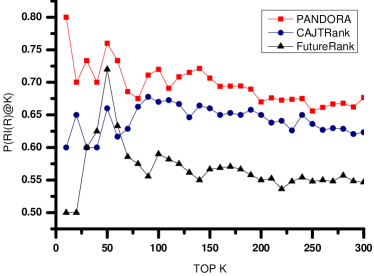

To better understand the impact that PageRank, authors, journal, citations and time factors have on the prestige scores of each article, a multivariate linear regression is implemented to estimate the parameters of PANDORA, FutureRank and CAJTRank, to optimize the ranking results in terms of performance. Fig. 3 shows different accuracy rates of the three algorithms on top-K papers, and ranges from 10 to 300. The accurate rates of CAJTRank and FutureRank are between 0.6 and 0.677, and between 0.5 and 0.633, respectively. In comparison, the accurate rates of PANDORA are between 0.656 and 0.8. As shown in Fig. 3, PANDORA consistently outperforms CAJTRank and FutureRank in terms of for all values.

Moreover, the Spearman s rank correlation coefficient is utilized to assess the similarity of these algorithms.

| (17) |

where and indicate the position of publication in the ground truth rank list and the corresponding algorithm rank list, respectively. and are the average rank positions of all publications in the two ranks lists, respectively.

In Fig. 4, we observe that Spearman’s rank correlation coefficients of PANDORA and CAJTRank are at around 0.5 and 0.42 respectively, while the coefficient changes from 0.267 to 0.519 for FutureRank. The best result could be obtained by considering all kinds of information: PageRank, authors, journal, references and time factors. PANDORA outperforms the other state-of-art methods on two subsets of APS.

By comparing and Spearman’s rank correlation coefficients of CAJTRank and FutureRank, we find that journal factor is beneficial to assess the impact of papers. At the same time, by comparing PANDORA and CAJTRank, the preceding results indicate that by the using COI relationships, weighted PageRank could improve the performance of PANDORA. Next, we take dynamic evolutionary nature of citation networks, time information and COI relationships of authors into consideration, and jointly use them to determine the citing strength, which have important effect on generating a better and Spearman’s rank correlation coefficient. Moreover, PANDORA’s author credit allocation scheme helps generating a fair and objective ranking list.

III-B Quantifying the impact of institutions

In order to capture the existence of COI citations at the institution-level and at the country-level, several characteristics are investigated for institution and country : the institution size (), represents the total number of authors from institution who published at least one publication. shows the number of publications published from institution . is the cumulative COI citations derived from all publications in institution . The country size (), denotes the total number of authors from country who published at least a publication. represents the number of publications published from country ; shows the cumulative COI citations derived from all publications in country .

Figs. 5a and 5b show the correlation between the institution size and both the average COI citations and the average publications COI citations . Fig. 5a indicates that most institutions have a small number of scholars publishing on APS journals. It could be observed that COI citations exist in institution with different scales, and the larger the institution is, the more COI citations are likely to be. Fig. 5b shows the correlation between institution size and the average COI citations of each paper . According to Fig. 5b, we can observe that COI citations of each paper range from , and the average COI citations of each paper range from 0.529 to 1.022. Figs. 5c and 5d show the correlation between the country size and both the average COI citations and the average publications COI citations . Fig. 5c indicates that most countries have a small number of scholars in the APS publications, except for a few countries. For example, American scholars are the highest contributors to PRC and PRE, with 20,468 scholars. We also find that the country size positively correlates with COI citations, which indicates that large countries are likely to result in COI citations according to the analysis of each author’s COI citations. The potential cause of this phenomenon is that large institutions or large countries have more international and internal collaborations. According to Fig. 5c, we also observe that when country size falls in between ranks 60 and 100, presents a sudden fall. To obtain a better understanding of the decrease, we investigate these countries and their adjacent countries in the following three aspects: the number of papers, the number of co-authors and COI citations. We observe an interesting phenomenon: small COI citations exist in these countries, and the number of co-authors (about 3 authors) of each paper is less than their adjacent countries (around 4 authors). This may be the reason why impact of these countries is relatively small compared with other countries. Fig. 5d shows the correlation between the country size and the average COI citations of each paper . It indicates that each publication contains more COI citations in large countries compared to small countries, COI citations of each paper range from 0.156 to 0.883, while the lower values are similar to the ones in Fig. 5c.

In order to investigate the impact of average institution and average scholarly papers in different institution sizes and country sizes, several external characteristics are examined. The institution impact () represents the total scores of all authors collected by all papers in this affiliation. The country impact (), denotes the total summation of all the institutions collected by all the authors in this country. In Figs. 6a and 6b, the correlation between the institution size and both average institution impact and average scholarly papers impact are shown. We find that institution size influences the impact of each author in his/her affiliation, that is, scholars in large institution obtain higher average impact (Fig. 6a). We also notice that the institution size has little influence on the average impact of publications in institutions on the whole (Fig. 6b). In Figs. 6c and 6d, the correlation between the country size and both country average impact and average country scholarly papers impact are demonstrated, which indicates country size has some positive influence on the impact of country, however, the country size has little influence on the average impact of publications in countries on the whole. According to Figs. 5c and 6c, we observe that if the impact of country is small, its COI citations are small.

To investigate the relationship of COI citations and impact of papers evolving at the institution-level and at the country-level, previous studies have been focusing on citation distributions, yet little is concerned about the evolution of COI citations of individual institution and country. The trends of COI citations variations in different institutions and countries are well illustrated by the COI citations history of publications extracted from PRC and PRE subsets of APS corpus (Figs. 7a and 7b). In Fig. 7b, we analyze the relationship between the impact of top 100 institutions and their COI citations. The relationship distribution indicates that the higher the impact of institution is, the more COI citations it may contain. For example, the impact and COI citations of the top ranked institution are far beyond the other institutions. Most institutions with lower COI citations have a relative smaller impact than other institutions with higher COI citations. According to Fig. 7b, we observe that the top 20 institutions are from American national laboratory and university by the impact of institutions rank. In Fig. 7a, we observe that the countries with higher impact likely have more COI citations than the countries with lower impact. By COI citations of each country rank, top 20 countries from high to low in order are: America, Germany, France, Japan, Italy, China, UK, Spain, Australia, Canada, India, Netherlands, Russia, Belgium, Brazil, Israel, Poland, Switzerland, Denmark and Sweden.

According to Fig. 7c, we observe that the COI citations of some countries suddenly increase since 1993 year, the trend of high COI citations continues until 2005. After that, COI citations of country present decreased trend. The reason behind is that COI citations of a paper are a cumulative process. The published time of papers becomes shorter, and possible COI citations are relatively smaller, therefore, we observe that around 2013, the COI citations of country become less. Fig. 7d characters the dynamic impact of scholarly papers in country-level. We observe that the impact of countries presents a sudden increase since 1993, which is consistent with Fig. 7c. What fueled this increase in the impact of country? From the statistics of the yearly summation of national publications, we observe that the number of publications grows rapidly in 1993, and some countries even increase to 10 times, compared to 1992. We believe this shift has close relationship with the ”information superhighway” strategy of the United States originated from the Clinton period. In September 1993, shortly after Clinton became the president of the United States, he officially launched the cross-century national information infrastructure project plan. This program not only had a very broad impact on the world, but also created a brilliant future for the United States information economy. In addition, a journal paper published in Nature 2013 also reported that international collaboration increased more than ten-fold since the mid-1990s, which coincides with our experimental results from another aspect [25]. Top 20 countries from high to low in order are: America, Germany, France, China, Japan, India, Italy, UK, Spain, Brazil, Russia, Canada, Netherlands, Australia, Israel, Belgium, Poland, Argentina, Korea and Switzerland by impact of country rank. Specially, America is the most prominent country, and its impact is about 3.5 times of Germany. Through such sorting results, we observe that the ranking order of country impact correlates with scientific and technological competitiveness and more details for scientific competitiveness of nations can be found in the reference [6].

IV Discussion

Our analysis about the citation relationship in APS dataset by time evolution indicates that regardless of the institution size, the COI relationships in scientific evolution networks are a common phenomenon and COI citations present variable trends in different institutions and countries. Institutions with the highest COI citations are found in USA, followed by Australia, Germany, Israel, Finland and Spain. While USA and Germany belong to G8 countries, Australia and Israel are the countries with high investments in research [26]. This finding suggests that leading countries in the world are not insulated from negative citations behaviors. Understanding how the COI relationships affect the appraisal of scholarly papers is of great importance for scientific community. We investigate the criteria of fair assessment of the scientific impact, and the proposed PANDORA technique helps identifying highly influential papers, scholars, and academic institutions.

There are several potential explanations for why COI relationships exist in citation networks. Firstly, the cooperation under the competition mechanism may play a crucial role. Specially, an extremely strong collaboration relationship (super ties) has remarkably positive impact on citations [27]. For example, pursuing collaborative research in a close community tends to be more beneficial than working alone, and negative COI citations could increase at the same time. Secondly, some researchers may cite deliberately each other s work to boost citation count, hence, the implied academic reputations [13], i.e., researchers may cite their friends’ newly published papers. Lastly, the same scientific affiliation may also contribute to negative COI citations, i.e., they likely cite their colleagues just because they belong to a scientific team instead of actual research relevance. Although we have stated several possible reasons of COI citations generation, the behavior in real scientific community are more complicated and further investigating in COI citations is required to fairly evaluate the scientific impact.

In summary, we have proposed a COI-based method to give the audiences a deeper insight into the impact of papers, institutions and countries. Our method has several distinct features over conventional approaches: (i) The citing strength is determined based on the COI relationships in citation networks, and the four categories of COI relationships are investigated for adjusting the citation weights. To this end, a co-citation mechanism is used to identify positive COI, negative COI, positive suspected COI and negative suspected COI relationships in citation networks. In our method, a homogeneous and heterogeneous fusion perspective is adopted so that the scores of PageRank, authors, journals, references fit together into the corresponding impact of articles calculation. To quantify the impact of papers, the well-known approach PageRank treats all citation weights as 1, making all citations with equal importance. However, as COI relationships exist, our approach adopts a weighted PageRank to identify different citation relationships to fairly evaluate the impact of papers. (ii) PANDORA performs better than the current methods in terms of evaluating the impact of institutions. We adopt a credit allocation algorithm for fairly distributing the impact of each publication to its authors, which could guarantee accurate appraisal of authors’ impact. Then we reasonably assign the authority score of each scholar to his/her affiliation and country. Previous research equally allocated the impact of single paper to its all authors, or distributed them according to the sequence order of authors. Thus some papers are computed twice or more when calculating the total publications of a country [28]. In our method, we take the first author s first affiliation to resolve these issues. (iii) Our approach could be utilized to improve some established metrics for scientific impact by considering the COI relationships, such as JIF and H-index. Current evaluation methods of citation-based impact, from JIF to H-index, contain anomalous citations inevitably leading to the inaccuracy of evaluation. However, PANDORA can effectively discover such abnormal citations. If the abnormal citations can be eliminated before calculating JIF and H-index, these metrics can reflect true impact more effectively.

Our proposed method has few limitations: (i) Our experiments covered a descent number of research papers (71,287), these papers come from APS dataset and the study is limited to the physics discipline. (ii) Dependence of the data quality: for example, we extracted the affiliation details from APS dataset, thus relied on the mechanism that APS chosen to combine multiple affiliations that are variants of the same institution. (iii) COI relationships are identified through co-author and same institution, whereas other forms of COI relationships might also exist.

Our analysis provides guidance on quantifying the impact of the academic publications, institutions and countries using a metrics-based system. As such, understanding how research papers trajectories shift in affiliation-level and country-level is valuable for career planning and selection of collaborative opportunities. Furthermore, better understanding of the COI relationships in citation networks is also significant for assessing efficiency and productivity of scientific research.

Acknowledgment

The authors extend their appreciation to the International Scientific Partnership Program ISPP at King Saud University for funding this research work through ISPP0078. This work was supported in part by the National Natural Science Foundation of China under Grant 61502075, China Postdoctoral Science Foundation Funded Project (2015M580224), and Liaoning Province Doctor Startup Fund (201501166).

References

- [1] J. Ypersele, “The maze of impact metrics,” Nature, vol. 502, no. 7472, pp. 423–423, 2013.

- [2] F. Xia, W. Wang, T. M. Bekele, and H. Liu, “Big scholarly data: A survey,” vol. 3, pp. 18–35, 2017.

- [3] X. Bai, H. Liu, F. Zhang, Z. Ning, X. Kong, I. Lee, and F. Xia, “An overview on evaluating and predicting scholarly article impact,” Information, vol. 8, no. 3, p. 73, 2017.

- [4] A. Aragón, “A measure for the impact of research.” Scientific reports, vol. 3, pp. 1649–1649, 2012.

- [5] L. Bornmann, “Ranking institutions by the handicap principle,” Scientometrics, vol. 100, no. 2, pp. 603–604, 2014.

- [6] L. Bornmann, M. Stefaner, F. Anegón, and R. Mutz, “What is the effect of country-specific characteristics on the research performance of scientific institutions? using multi-level statistical models to rank and map universities and research-focused institutions worldwide,” Journal of Informetrics, vol. 8, no. 3, pp. 581–593, 2014.

- [7] R. G lvez, “Assessing author self-citation as a mechanism of relevant knowledge diffusion,” Scientometrics, pp. 1–12, 2017.

- [8] C. Bartneck and S. Kokkelmans, “Detecting h-index manipulation through self-citation analysis.” Scientometrics, vol. 87, no. 1, pp. 85–98, 2011.

- [9] P. Seglen, “The skewness of science,” Journal of the Association for Information Science and Technology, vol. 43, no. 9, pp. 628 C–638, 1992.

- [10] D. Aksnes, “A macro study of self-citation,” Scientometrics, vol. 56, no. 2, pp. 235–246, 2003.

- [11] P. Vinkler, “The evaluation of research by scientometric indicators,” Chandos Pub, 2010.

- [12] L. Bornmann, M. Stefaner, F. Anegón, and R. Mutz, “Ranking and mapping of universities and research-focused institutions worldwide based on highly-cited papers: A visualisation of results from multi-level models,” Online Information Review, vol. 38, no. 1, pp. 43–58, 2014.

- [13] L. Yao, T. Wei, A. Zeng, Y. Fan, and Z. Di, “Ranking scientific publications: the effect of nonlinearity,” Scientific reports, vol. 4, 2014.

- [14] X. Bai, F. Xia, I. Lee, J. Zhang, and Z. Ning, “Identifying anomalous citations for objective evaluation of scholarly article impact,” Plos One, vol. 11, no. 9, p. e0162364, 2016.

- [15] E. Garfield, “The history and meaning of the journal impact factor,” Jama, vol. 295, no. 1, pp. 90–93, 2006.

- [16] H. Sayyadi and L. Getoor, “Futurerank: Ranking scientific articles by predicting their future pagerank.” in SDM. SIAM, 2009, pp. 533–544.

- [17] Y. Wang, Y. Tong, and M. Zeng, “Ranking scientific articles by exploiting citations, authors, journals, and time information,” in Twenty-Seventh AAAI Conference on Artificial Intelligence, 2013, pp. 933–939.

- [18] J. Wilsdon, “We need a measured approach to metrics,” Nature, vol. 523, no. 7559, p. 129, 2015.

- [19] H. Shen and A. Barabási, “Collective credit allocation in science,” Proceedings of the National Academy of Sciences, vol. 111, no. 34, pp. 12 325–12 330, 2014.

- [20] L. Page, “The pagerank citation ranking : Bringing order to the web,” Stanford Digital Libraries Working Paper, vol. 9, no. 1, pp. 1–14, 1998.

- [21] J. M. Kleinberg, “Authoritative sources in a hyperlinked environment,” in Acm-Siam Symposium on Discrete Algorithms, 1998, pp. 668–677.

- [22] H. Purwins, B. Barak, A. Nagi, R. Engel, U. Hockele, A. Kyek, S. Cherla, B. Lenz, G. Pfeifer, and K. Weinzierl, “Regression methods for virtual metrology of layer thickness in chemical vapor deposition,” Mechatronics, IEEE/ASME Transactions on, vol. 19, no. 1, pp. 1–8, 2014.

- [23] X. Jiang, X. Sun, and H. Zhuge, “Towards an effective and unbiased ranking of scientific literature through mutual reinforcement,” in Proceedings of the 21st ACM international conference on Information and knowledge management. ACM, 2012, pp. 714–723.

- [24] J. Myers and A. Well, Research design and statistical analysis. L. Erlbaum Associates, 2010.

- [25] J. Adams, “Collaborations: The fourth age of research,” Nature, vol. 497, no. 7451, pp. 557–560, 2013.

- [26] G. Cimini, A. Gabrielli, and F. S. Labini, “The scientific competitiveness of nations,” PloS one, vol. 9, no. 12, p. e113470, 2014.

- [27] A. Petersen, “Quantifying the impact of weak, strong, and super ties in scientific careers,” Proceedings of the National Academy of Sciences, vol. 112, no. 34, pp. E4671–E4680, 2015.

- [28] D. King, “The scientific impact of nations,” Nature, vol. 430, no. 6997, pp. 311–316, 2004.