The reflectionless properties of Toeplitz waves and Hankel waves: an analysis via Bessel functions

Abstract

We study reflectionless properties at the boundary for the wave equation in one space dimension and time, in terms of a well-known matrix that arises from a simple discretisation of space. It is known that all matrix functions of the familiar second difference matrix representing the Laplacian in this setting are the sum of a Toeplitz matrix and a Hankel matrix. The solution to the wave equation is one such matrix function. Here, we study the behaviour of the corresponding waves that we call Toeplitz waves and Hankel waves. We show that these waves can be written as certain linear combinations of even Bessel functions of the first kind. We find exact and explicit formulae for these waves. We also show that the Toeplitz and Hankel waves are reflectionless on even, respectively odd, traversals of the domain. Our analysis naturally suggests a new method of computer simulation that allows control, so that it is possible to choose — in advance — the number of reflections. An attractive result that comes out of our analysis is the appearance of the well-known shift matrix, and also other matrices that might be thought of as Hankel versions of the shift matrix. By revealing the algebraic structure of the solution in terms of shift matrices, we make it clear how the Toeplitz and Hankel waves are indeed reflectionless at the boundary on even or odd traversals. Although the subject of the reflectionless boundary condition has a long history, we believe the point of view that we adopt here in terms of matrix functions is new.

keywords

Toeplitz waves, Hankel Waves, One–way waves, Bessel functions, Matrix functions

MSC 65N06, 15A60, 65F30, 65F60

1 Introduction

“One–way waves” are similar to solutions of wave equations but they move in only one direction, and exhibit no propagation in the opposite direction. They are important in the subject of absorbing boundary conditions [4]. Trefethen & Halpern study the well-posedness of one–way wave equations, and they give a nice overview of the history [16]. The literature on reflectionless boundary conditions, and on applications of Bessel function expansions to waves, is extensive. We do not attempt a survey here. An incomplete list of authors includes: Grote, Keller, Givoli, Bayliss & Turkel, Higdon, Ting & Miksis, Lubich & Schadle, Alpert, Greengard & Hagstrom. Particular attention is paid to the issue of whether or not the proposed reflectionless boundary conditions are exact or approximate, and whether or not the conditions are local or global. There are also important connections to the Kirchoff formula and to the Dirichlet–to–Neumann map, and to Born series, that we do not consider here.

We distinguish three settings:

-

•

Fully continuous: both time and space are continuous. The reflectionless boundary condition is the Sommerfeld condition, which in our setting might be applied by imposing

(1) on the solution at the left and right boundaries. The choice of the plus or minus sign depends on whether the wave is traveling left or right. Notice this condition (1) is local in time and local in space.

-

•

Fully discrete: Both time and space have been discretized. Engquist & Majda [5, Equation (5.3)] address the issue of the nature of the condition in this setting. Their reflectionless boundary condition is not a simple expression. It is not local in time and it is not local in space.

-

•

Semi-discrete: time remains continuous and space is discretized. Intuitively, we expect the conditions to be local in time, and non-local in space. This situation has been studied by Halpern [7, Section 3].

A well-known model in these settings is a set of masses connected by linear springs, which can be generalised to higher dimensions through an array of springs. The resulting framework is then a linear system of ordinary differential equations.

The focus of our article is on the semi-discrete setting, where time remains continuous. However, from equation (LABEL:eq:eq6) onwards we find it more convenient to evaluate our expressions at certain discrete time points. (Note that our evaluations here are exact for the semi-discrete setting, as opposed to what is commonly done in the literature in the ‘fully-discrete’ setting where some further approximation is introduced.) In our semi-discrete setting, the solution to the wave equation can be thought of as a matrix function [15]. Very recently Nadukandi & Higham have shown how to efficiently compute these wave–kernel matrix functions [11].

It is important to note that our purpose in this article is not to study the reflectionless boundary condition per se, nor to study the big field of inverse problems to which it relates; these topics already have an extensive literature. The boundary condition in particular, is an old problem that has been studied by many authors; among the early literature, perhaps the work of Halpern [7] is closest to our own framework. Instead, our purpose is to reveal the properties of the Toeplitz waves and the Hankel waves that we will introduce later. During the course of our analysis, it transpires that these Toeplitz and Hankel waves possess some attractive reflectionless properties.

We revisit the problem with a new perspective, by carefully revealing the algebraic structure of those matrix functions. We find particularly explicit and exact formulas. An attractive outcome of our perspective is to show that the travelling wave can be considered as the sum of two waves that we call the Toeplitz wave and the Hankel wave. We show that these waves can be written as certain linear combinations of even index Bessel functions of the first kind. These expansions, as we will explain, make it clear that the Toeplitz wave and Hankel wave are reflectionless on even, respectively odd, traversals of the domain.

In section 2, we give some background on Toeplitz and Hankel matrix functions. In section 3, we show how to write a travelling wave as the sum of two waves that we call a Toeplitz wave and a Hankel wave. In section 4, we give analytic expressions for the Toeplitz and Hankel waves in terms of certain sums of even index Bessel functions of the first kind. In section 5, we show simulations of these waves using the methods of Nadukandi & Higham [11], and also using methods inspired by our analytic expressions. With our analysis, we are now able to algebraically explain interesting behaviours that have previously been observed in numerical simulations in the literature [9]. We also showcase another benefit of our analysis, namely that it suggests a method of simulation that allows us to choose, in advance, the number of reflections. The paper concludes in section 6 with some observations and discussion for future work.

2 Toeplitz–plus–Hankel matrix functions

This section collects together some of the key results from [15] concerning Toeplitz-plus-Hankel matrix functions that we will need later.

The central difference approximation to the Laplacian, which in one space dimension is simply the second derivative, , is , where is the tridiagonal, symmetric positive definite Toeplitz matrix:

| (2) |

and

| (3) |

We can diagonalize the matrix where the eigenvalues appear in the diagonal matrix and where the eigenvectors form the columns of . Given a function of a scalar argument, we define a matrix function [8] via diagonalization:

| (4) |

The eigenvalues of the matrix in (2) are:

| (5) |

and the eigenvectors are:

| (6) |

With these explicit expressions, the entry of the matrix function is

| (7) |

Recall that Toeplitz matrices are those with constant diagonals, whereas Hankel matrices have constant anti-diagonals. We now define the strong Toeplitz-plus-Hankel property (as in [15]): a matrix has the strong Toeplitz-plus-Hankel property when all matrix functions are the sum of a Toeplitz matrix and a Hankel matrix. For the purpose of this definition and this article, we only consider matrices that are diagonalizable, and by all matrix functions, we only consider those functions that come via diagonalization, as defined above. The strong Toeplitz-plus-Hankel property is equivalent to requiring that each rank one projection matrix coming from the eigenvectors, can be written as a sum of a Toeplitz matrix and a Hankel matrix. The matrix has this strong property, as we will now demonstrate.

Entries of the rank one symmetric matrices, denoted that project onto eigenspaces, are products of sines, of the form . Recall the trigonometric identity

| (8) |

Thus each entry in can be written as the sum of a term of the form that leads to a Toeplitz matrix, and another term of the form that leads to a Hankel matrix. Thus, for , the rank one matrix

| (9) |

is the sum of a Toeplitz matrix, with entries

| (10) |

and a Hankel matrix

| (11) |

recalling that .

A sum of Toeplitz matrices is again a Toeplitz matrix, and similarly Hankel matrices are closed under addition. Recalling (4), (7) and (9), we see that as has the strong Toeplitz-plus-Hankel property, all matrix functions of are the sum of a Toeplitz matrix and a Hankel matrix:

| (12) |

A few observations are noteworthy. In general, a matrix cannot be written exactly as the sum of a Toeplitz matrix and a Hankel matrix.

When it is possible to express a matrix exactly as the sum of a Toeplitz matrix and a Hankel matrix, the Toeplitz-plus-Hankel splitting is not unique. The reason is that there is a two dimensional space of matrices that are simultaneously both Toeplitz and Hankel (examples of such matrices are displayed in (35)). One possible basis for that space is formed by two matrices: the all ones matrix, in which every entry is 1, and the checkerboard matrix in which every entry is of magnitude 1, together with an alternating pattern of signs. Thus there is a somewhat arbitrary choice as to where to include these matrices (they could go into either the Toeplitz part or the Hankel part, possibly also with some weighting). In this article we always make the particular choice that is implicit when we use (10) and (11).

It is helpful to consider the action of the Toeplitz and Hankel parts on a vector ‘input.’ To illustrate this action, consider the so-called shift matrix, which is an example of a Toeplitz matrix. In this particular example, we see the action is a ‘forward shift’ that preserves the orientation of the input:

| (13) |

Define shift matrices where corresponds to the matrix with 1 on the th upper subdiagonal and 0 elsewhere. We will need these matrices later in (4). Let be the identity matrix. These matrices and their transposes form a natural basis, , for the space of Toeplitz matrices. That is, an arbitrary Toeplitz matrix , with first row and first column , is a linear combination of these shift matrices: . Thus, we can think of the action of the Toeplitz matrix as a linear combination of these shifts [6]. Likewise, to illustrate the action of a Hankel matrix, consider the ‘Hankel version of the shift matrix.’ In this example, we see a ‘backward shift’ (which is also known as a reversal permutation) that reverses the orientation of the input:

| (14) |

We define a set of such matrices, with ones on a backwards diagonal and which we denote by , and which are depicted later in (39). These matrices are related to the shift matrices by multiplying by the anti-identity matrix , that is for , , and for . The set of matrices form a natural basis for the space of Hankel matrices. That is, an arbitrary Hankel matrix can be written as a linear combination , where the weights in the combination come from the backward diagonals of the Hankel matrix.

3 The wave equation is Toeplitz–plus–Hankel

This section summarizes observations from [9] that we will need later. It transpires that the Toeplitz part of the solution to the wave equation can be thought of as a type of solution that does not feel the boundaries on even traversals of the domain, whereas the Hankel part of the solution does not feel the boundaries on odd traversals of the domain.

Consider the wave equation

| (15) |

on the domain with zero Dirichlet boundary conditions . In the semi-discrete setting, the continuous wave equation (15) is approximated on an equally spaced grid of points , with spacing , by the linear system of ordinary differential equations

| (16) |

with the matrix from (2). It can be quickly checked by differentiating twice that a solution to our semi-discrete model in (16) is the matrix function

| (17) |

Here we are also using the square root matrix function, and we restrict attention to the special case of a wave equation involving a symmetric graph Laplacian, of which the matrix in (2) is an example. This solution (17) could be compared with the representations of the solutions presented by Nadukandi & Higham [11], who are able to efficiently compute solutions to the wave equation, even in the more general situation where the graph Laplacian matrix in question is not symmetric.

A second order differential equation such as (16) requires two initial conditions. In our solution (17), we are assuming an initial condition , and we are also assuming zero initial velocity. If the initial velocity, , is not zero then the solution involves an extra term:

| (18) |

Our article focuses on the solution in (17).

To connect this solution (17) to the matrix function point of view let us make the particular choice of function

| (19) |

Define

| (20) |

with as in (10) but now , and

| (21) |

with as in (11). The spacing is chosen so that the mesh consists of equally-spaced points, including the endpoints -1 and 1. Now via (12), the solution (17) to the wave equation is the sum of a Toeplitz wave, , and a Hankel wave, . That is,

| (22) |

Next, we seek to to obtain explicit expressions for the Toeplitz and Hankel waves in terms of certain finite sums of Bessel functions of the first kind.

4 Expressions for the Toeplitz and Hankel waves as certain sums of Bessel functions

In this section we derive explicit representations of the Toeplitz and Hankel waves on a symmetric one dimensional domain with a symmetric initial condition. We will show that the nonconstant Toeplitz wave traverses the domain on odd traversals of the domain (the first traversal is a half traversal), while the nonconstant Hankel wave appears on even traversals. These explicit expressions are given as a finite sum of even Bessel functions of the first kind and the number of traversals appears as a parameter on the total number of Bessel functions required. In order to construct the Toeplitz and Hankel waves we need some preliminary lemmas and definitions, some of which we collect from section 2, but here we specialise the expressions to a more convenient form.

Lemma 4.1.

Let be the tridiagonal matrix with 2 on the diagonal and -1 on the first upper and lower subdiagonals as displayed in (2); then the eigenvalues of satisfy

| (23) |

Proof.

We now consider the spatially discretised wave equation based on the central difference approximation on with constant discretisation , is odd. Note that in the case is odd, then 0 is always in the set of mesh points. Hence the discretised spatial operator is and the travelling wave is given by

| (24) |

where denotes the matrix function . We note that the eigenvalues of this matrix are

| (25) |

We can now define the Toeplitz and Hankel components of this matrix function. They are

Hence the Toeplitz and Hankel waves are

| (27) |

where the full wave is

The choice of notation and indicates the close relationship to the matrices appearing in (20), (21), and (22).

In what follows, we will sample time at equally-spaced time points with , a positive integer. We are now discretising in time, but note that we are still evaluating the semi-discrete model (where time remains continuous) exactly at these chosen discrete time points. This is as opposed to what is usually termed a ‘fully discrete’ model, where some additional approximation is introduced. We will also assume without loss of generality that .

Now the Toeplitz and Hankel waves are

The expressions in (LABEL:eq:eq6) lead us to consider Bessel functions of the first kind. In the following, we list some lemmas which yield some important properties of the Bessel functions.

Lemma 4.2.

The Bessel functions of the first kind, real, are

For integer values of the index , the following properties hold.

For non-negative integers , has an infinite number of zeros and Siegel’s Theorem states that for any integers and , and have no common zeros other than . (See, for example, Abramowitz and Stegun [1]). The following Lemma 4.3 gives us the well-known Bessel relation, which will be fundamental for our analysis.

Lemma 4.3.

The Bessel relation is given by

As a consequence of Lemma 4.3,

| (29) |

It is clear that we can use (29) in simplifying the expressions for the Toeplitz and Hankel waves in (LABEL:eq:eq6). In particular we can rewrite (LABEL:eq:eq6) as

Using the definition of the Toeplitz and Hankel matrices in (10) and (11) and the trigonometric relation

then we can write that component , of the vectors and are

| (30) | |||||

| (31) | |||||

A reminder about notation may be helpful here: denotes component of the time-varying vector that is the Toeplitz wave at time that was introduced in (LABEL:eq:eq6), whereas is the matrix defined in (10).

In order to make further progress we use the following lemma, which is known as the Lagrange identity, and a subsequent lemma.

Lemma 4.4.

Let

If the angle is not an integer multiple of , i.e. , an integer, then

Otherwise, if , then .

Proof.

Consider . Then use De Moivre’s Theorem and equate real and imaginary parts. ∎

Lemma 4.5.

For , with an integer, the following identity holds true:

Proof.

If with , then and clearly . Otherwise using the relationship

gives

which is or according to the parity of . ∎

We will use Lemma 4.5 to simplify the Toeplitz wave and the Hankel wave formulation given in (30) and (31). In the case of the Toeplitz wave, can take on the values , , so or . Therefore we must take care when these values of are of the form , . Similarly, in the case of the Hankel wave, the corresponding values are , or .

We now introduce a parameter . The integer will be used as an upper limit for the number of terms in a sum, in the expressions below. We will separate the cases in which the indices take the values , , from the other values of .

We note can take on values , say, where . Hence for with then

and the corresponding in these cases. We will now simplify the expression in vector form.

If then there is a component of the Toeplitz wave

Similarly, if , , then we can use the shift matrices where introduced earlier.

Introducing the vectors , , where

leads to the first part of the Toeplitz wave as

| (32) |

We will write the factor at the front as . Subtracting off this term and reincorporating into (30) and then using Lemma 6 with either 0 or -1 and finally dividing throughout by leads to

where

| (34) |

where and are the matrices (as an aside, notice that these matrices have the special property that they are simultaneously both Toeplitz and Hankel)

| (35) |

The expression for the term above in eq. 34 is complicated. It will be helpful to simplify this expression for because this will simplify the expression we obtain later for the Toeplitz wave. This simplification is made possible by Lemma 4.6 and Lemma 4.7, as follows.

Lemma 4.6.

The following relationships hold:

Proof.

From Lemma 4.3, the Bessel relations, we have

The relationships claimed in the lemma are obtained by addition and subtraction of these two equalities, and noticing cancelation of terms. ∎

We can now simplify by applying Lemma 4.6.

Lemma 4.7.

where .

We can now write the characterisation of the Toeplitz wave over the traversals in two ways: either in terms of the vectors (Theorem 4.1) or increasing values of the Bessel indices (Corollary 4.1.1). A reason to seek results such as these is that they allow us to express the wave as a sum over the number of traversals, which is useful later in computer simulations when we want to control the number of reflections, for example.

Theorem 4.1.

The Toeplitz wave over traversals is

| (36) | |||||

where and

We can rewrite (36) in the ascending index of the Bessel function.

Corollary 4.1.1.

The Toeplitz wave over traversals is

| (37) | |||||

We can observe from (37) that the term is repeated twice and there are always three missing terms when moving to the next traversal - e.g. , , or , , , etc.

We can now construct the Hankel wave based on (31). We must consider the cases where where now or . Let ; then can take values . Hence

so

| (38) |

For these values .

Let be the Hankel matrices in which 1’s are on the th backwards subdiagonal of , and all other entries are zero. That is,

| (39) |

Then we can construct the vector for these values of given in (38). It is easy to show that this leads to the term

By the same argument as for the Toeplitz wave, the remaining component is

This leads to Theorem 4.2.

Theorem 4.2.

The Hankel wave over traversals is

| (40) | |||||

In a way that is analogous to what we did for the Toeplitz wave, we rewrite (40) for the Hankel wave in the ascending index of the Bessel function.

Corollary 4.2.1.

The Hankel wave over traversals is

| (41) | |||||

where

and so .

In the case of the Hankel wave we can see that similarly to the Toeplitz wave there are three missing terms when moving to the next traversal - e.g. , , , but the indices are shifted by from the Toeplitz case, which of course corresponds to a shift by the length of the domain.

5 Simulations: The reflectionless properties of the Toeplitz and Hankel waves

We can now perform computer simulations of the semi-discrete model (16) by computing the solution in (17). That solution (17) and thus a simulation are computed via the wave-kernel matrix function software of Nadukandi & Higham [11]. For reference, the solution coming from d’Alembert’s formula (as if the problem were on the whole real line with no boundaries) is also included. Separately, we can also simulate the Toeplitz waves in two different ways, using either (20) or the Bessel expansions (36). Likewise, we can simulate the Hankel waves in two different ways, using either (21) or (40). The sum of the Toeplitz wave and the Hankel wave is always exactly equal to the solution to the wave equation with Dirichlet conditions, as in (22), and this property is consistent with results of the simulations, as expected.

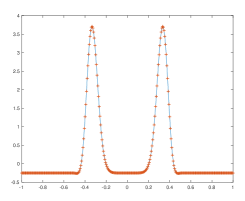

Consider the simulation in fig. 1. The simulation begins with a smooth symmetric Gaussian, centered on as an initial condition. Figure 1 shows the solution at three time-points: before, during, and after the first moment at which the wave reaches the boundary. The following points are notable.

-

•

Before the wave reaches the boundary (this corresponds to ), the solution is “purely Toeplitz” and the Hankel part of the solution is a spatial and temporal constant that is nearly zero – this constant is discussed in section 6.

-

•

After the wave reaches the boundary, the opposite is true: the solution is “purely Hankel,” and the Toeplitz part of the solution is a constant. (And this constant is of the opposite sign to the constant in the dot point above.) This corresponds to .

-

•

If the simulation is run for larger time values, then this behaviour is repeated, in the sense that on any even traversal the Toeplitz wave is constant, while on any odd traversal the Hankel wave is constant.

Naively, one might be tempted to incorporate the condition (1) into simulations in the semi-discrete setting, in the hope of achieving a reflectionless boundary condition. Unfortunately this leads to disappointment; such a simulation does show reflections at the boundary (albeit small reflections that can be reduced by refining the discretization). The issue is that the condition (1) is only reflectionless in the fully continuous setting, whereas in computer simulations, there must be some type of discretization (for example, in the semi-discrete setting, we might try a finite difference approximation). With this in mind, the reflectionless behaviour that can be achieved with the Toeplitz and Hankel waves on even or odd traversals of the domain (fig. 1) for which we have derived exact methods of simulation, and an accompanying analysis to explain the behaviour, is especially satisfying.

In fig. 2 we show the Toeplitz wave (top left) computed via (20) or (36) with and on the third traversal, and the Hankel wave computed via (21) or (40) with and on the fourth traversal (top right). The good agreement that we see numerically between these two different methods of computing the solution is a check that the theorems and formulas that we present are correct. The bottom left panel shows the Toeplitz wave computed via (20) or (36) with and . This purpose of this example is to illustrate that the formulas (36) we derive are a constant, near zero, for all , whereas the exact solution to the semi-discrete problem may be complicated for large . This panel also showcases our ability to control and choose in advance the number (through the choice of ) of reflections in a simulation. The effect of reducing the spatial discretization error can be seen by comparing the top right panel () with the bottom right panel (). (Note that our formulas for the semi-discrete problem are exact, and the computations of the solution to the semi-discrete problem shown here are so accurate that they may be regarded as exact solutions. The phrase discretization error here is used to indicate the comparison of the solution of the continuous problem to the semi-discrete solution.) With a smaller , and thus a larger dicretization error, we see that the semi-discrete problem becomes more oscillatory.

The integer appears in our formulas (36) and (40) as the number of terms in a sum. That number can be chosen by the user. The figures illustrate that can be thought of as the number of full wave traversals of the whole domain. For example, the choice would allow simulation of the top panel of fig. 1, starting from an initial condition that is symmetric about , and simulating the first half traversal of the domain. Whereas to use the formulas to simulate the bottom panel of fig. 1, would require choosing .

Two benefits that our analysis brings to these simulations are noteworthy. First, we are able to choose in advance the number of reflections. For example, we could ask for a simulation that allows exactly two reflections, by choosing . In such a simulation, after the second reflection, the Toeplitz waves and the Hankel waves (computed using our formulas that involve summation to an upper limit ) vanish for all larger values of time. This is not possible with existing methods of simulation, such as via the software for the wave-kernel matrix functions [11] that we have also included for comparison here. Second, we are able to explain why the Toeplitz wave is reflection-less (on even traversals of the domain) in simulations. For example, we are able to explain why the Toeplitz wave is perfectly reflection-less the very first time it hits the boundary. This would not be possible without the expressions for the Toeplitz wave that we derived.

|

|

|

|

|

|

|

6 Discussion and Future Work

Based on our previous analysis, we can make a number of observations.

-

•

The term is a function of the spatial discretisation error in the sense that as increases, this term converges linearly to some limit that depends on the initial condition.

-

•

For the initial condition

then we can show

and is essentially 0 for even modest values of , e.g. . Thus is which converges to as . It is interesting that this term is not zero.

-

•

Note that the sum of the Toeplitz wave and the Hankel wave is the full wave and the term cancels. The constant component of the Toeplitz and Hankel waves as becomes large is or , respectively.

- •

- •

-

•

For the Toeplitz wave, the matrices and act as shift matrices that allow the wave to move. In the case of the Hankel wave, the corresponding matrices are the Hankel matrices . Thus one way to think about wave propagation is through these elementary Toeplitz and Hankel matrices.

-

•

There is a rich theory on the sums of Bessel functions of the form

(42) These are called Neumann series [12]. Here

is an appropriate contour, and the are Neumann polynomials given by

There are generalisations of (42) (see Watson [17]) of the form

More recently Pogany and Süli [14] have given integral representations for certain Neumann series including

Al-Jarrah et al. [2] have considered Neumann series in the context of the Fourier cosine transform

| (43) |

and derived formulas for

for a given . We note that Bessel functions can be cast in the form of (43).

Since the Toeplitz and Hankel waves, defined over a finite number of traversals, can be considered as certain finite sums of even Bessel functions of the first kind, these are then finite Neumann series and some of this theory could be of interest in this context. We speculate that generalisations of these ideas might be applicable to other applications of Bessel function expansions to waves [10], or to the analysis of time series data arising in wave phenomena [13].

It is worth making some final remarks about the subject of inverse problems. As mentioned in the Introduction, it is not the purpose of our article to study inverse problems. That would require extending our analysis here to a wave equation with variable coefficients, and also extending to higher dimensions. Nonetheless, if we view our analysis here as a first step towards inverse problems, then we can make the following observations about how such an extension, in one dimension, may be carried out in future work. Commonly, a wave propagates at different speeds, , at different spatial locations. In an inverse problem, this speed might initially be unknown, and the goal is to estimate at many points , based on known observational data about the wave. One tool in that process of estimation, is called a forward solver, which given at all locations, is then able to simulate the waves.

As a simple example, suppose that the domain consists of two halves, and that the wave speed is in the first half of the domain and in the second half. One possible model of this situation can come from scaling our finite matrix by a diagonal matrix that encodes the wave speed. For example, and . It is tempting to apply our ‘Toeplitz-plus-Hankel’ analysis to this situation. However, the matrix cannot be written exactly as the sum of a Toeplitz matrix and a Hankel matrix. Thus, it is impossible to write all matrix functions exactly as a sum of a Toeplitz part and a Hankel part. We can still compute the solution to this wave equation with variable speed as a matrix function by, for example, employing the wave-kernel matrix functions of Nadukandi & Higham [11], but we should not expect that matrix function to be simply Toeplitz-plus-Hankel. In other words, we cannot expect to repeat our previous analysis for the constant coefficient case verbatim in this new variable coefficient case.

Nevertheless, it seems likely that the analysis that we have presented can be generalised to handle this setting of variable coefficients. The starting point will be to again examine the algebraic structure of the solutions, and in analogy with the way we investigated here, particularly to examine the rank one projection matrices coming from the spectral decomposition of the operator . Encouragingly, the non-symmetric matrix in is an example of a tridiagonal –Toeplitz matrix (in our simple example, it is a –Toeplitz matrix), for which exact expressions for the eigenvalues and for the eigenvectors are known [3]. The first step is to compute expressions for the rank one projection matrices coming from those eigenvectors, and this will be the subject of future work.

Acknowledgments

This work was completed at the University of Oxford. We would like to thank Professors Endre Süli and Nick Trefethen of the Mathematical Institute at the University of Oxford for their comments. The second author would like to thank Professor Nick Trefethen for hosting her visit at the Mathematical Institute in November and December 2018.

References

- [1] M Abramowitz and I A Stegun. Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover, New York, ninth Dover printing, tenth GPO printing edition, 1964.

- [2] A Al-Jarrah, K M Dempsey, and M L Glasser. Generalised series of Bessel functions. JCAM, 143(1):1 – 8, 2002.

- [3] R Álvarez-Nodarse, J Petronilho, and NR Quintero. On some tridiagonal k-toeplitz matrices: Algebraic and analytical aspects. applications. Journal of Computational and Applied Mathematics, 184(2):518–537, 2005.

- [4] Bjorn Engquist and Andrew Majda. Absorbing boundary conditions for numerical simulation of waves. Proc Natl Acad Sci U S A, 74:765–1766, 1977.

- [5] Bjorn Engquist and Andrew Majda. Radiation boundary conditions for acoustic and elastic wave calculations. Communications on Pure and Applied Mathematics, 32(3):313–357, 1979.

- [6] Robert M Gray et al. Toeplitz and circulant matrices: A review. Foundations and Trends® in Communications and Information Theory, 2(3):155–239, 2006.

- [7] Laurence Halpern. Absorbing boundary conditions for the discretization schemes of the one-dimensional wave equation. Mathematics of Computation, 38(158):415–429, 1982.

- [8] N. J. Higham. Functions of Matrices. SIAM, 2008.

- [9] Shev MacNamara and Gilbert Strang. Operator splitting. In Splitting Methods in Communication, Imaging, Science, and Engineering, pages 95–114. Springer, 2016.

- [10] NV Movchan, RC McPhedran, AB Movchan, and CG Poulton. Wave scattering by platonic grating stacks. In Proceedings of the Royal Society of London A: Mathematical, Physical and Engineering Sciences, page rspa20090301. The Royal Society, 2009.

- [11] Prashanth Nadukandi and Nicholas J Higham. Computing the wave-kernel matrix functions. SIAM J. SCI. COMPUT., 2018.

- [12] C G Neumann. Theorie der Besselschen Funktionen. B G Teubner, Verlag, Leipzig, 1867.

- [13] Ravindra Pethiyagoda, Scott W McCue, and Timothy J Moroney. Spectrograms of ship wakes: identifying linear and nonlinear wave signals. Journal of Fluid Mechanics, 811:189–209, 2017.

- [14] T K Pogany and E Süli. Integral representation for Neumann series of Bessel functions. Proc. Am. Maths. Soc., 137(7):2363–2368, 2009.

- [15] G. Strang and S. MacNamara. Functions of difference matrices are Toeplitz plus Hankel. SIAM Review, 56(3):525–546, 2014.

- [16] Lloyd N. Trefethen and Laurence Halpern. Well-posedness of one-way wave equations and absorbing boundary conditions. Mathematics of Computation, 47:421–435, 1986.

- [17] G N Watson. A Treatise on the Theory of Bessel Functions. Cambridge University Press, Cambridge, UK, 1922.