The Random Feature Method for Time-dependent Problems

Abstract.

We present a framework for solving time-dependent partial differential equations (PDEs) in the spirit of the random feature method. The numerical solution is constructed using a space-time partition of unity and random feature functions. Two different ways of constructing the random feature functions are investigated: feature functions that treat the spatial and temporal variables (STC) on the same footing, or functions that are the product of two random feature functions depending on spatial and temporal variables separately (SoV). Boundary and initial conditions are enforced by penalty terms. We also study two ways of solving the resulting least-squares problem: the problem is solved as a whole or solved using the block time-marching strategy. The former is termed “the space-time random feature method” (ST-RFM). Numerical results for a series of problems show that the proposed method, i.e. ST-RFM with STC and ST-RFM with SoV, have spectral accuracy in both space and time. In addition, ST-RFM only requires collocation points, not a mesh. This is important for solving problems with complex geometry. We demonstrate this by using ST-RFM to solve a two-dimensional wave equation over a complex domain. The two strategies differ significantly in terms of the behavior in time. In the case when block time-marching is used, we prove a lower error bound that shows an exponentially growing factor with respect to the number of blocks in time. For ST-RFM, we prove an upper bound with a sublinearly growing factor with respect to the number of subdomains in time. These estimates are also confirmed by numerical results.

Key words and phrases:

time-dependent PDEs, partition of unity method, random feature method, collocation method, separation-of-variables random features2010 Mathematics Subject Classification:

Primary: 65M20,65M55,65M70.1. Introduction

Time-dependent partial differential equations (PDEs), such as diffusion equation, wave equation, Maxwell equation, and Schrödinger equation, are widely used for modeling the dynamic evolution of physical systems. Numerical methods, including finite difference method [11], finite element methods [17], and spectral methods [15], have been proposed to solve these PDEs. Despite the great success in theory and application, these methods still face some challenges, to name a few, complex geometry, mesh generation, and possibly high dimensionality.

Along another line, the success of deep learning in computer vision and natural language processing [8] attracts great attention in the community of scientific computing. As a special class of functions, neural networks are proved to be universal approximators to continuous functions [3]. Many researchers seek for solving ordinary and partial differential equations with neural networks [6, 9, 5, 16, 19, 14, 7]. Since the PDE solution can be defined in the variational (if exists), strong, and weak forms, deep Ritz method [5], deep Galerkin method [16] and physics-informed neural networks [14], and weak adversarial network [19] are proposed using loss (objective) functions in the variational, strong, and weak forms, respectively. Deep learning-based algorithms have now made it fairly routine to solve a large class of PDEs in high dimensions without the need for mesh generation of any kind.

For low-dimensional problems, traditional methods are accurate, with reliable error control, stability analysis and affordable cost. However, in practice, coming up with a suitable mesh is often a highly non-trivial task, especially for complex geometry. On the contrary, machine-learning methods are mesh-free and only collocation points are needed. Even for low-dimensional problems, this point is still very attractive. What bothers a user is the lackness of reliable error control in machine-learning methods. For example, without an exact solution, the numerical approximation given by a machine-learning method does not show a clear trend of convergence as the number of parameters increases.

There are some efforts to combine the merits of traditional methods and deep-learning based methods. The key ingredient is to replace deep neural networks by a special class of two-layer neural networks with the inner parameters fixed, known as random features [12, 13] or extreme learning machine [10]. Random feature functions are proved to be universal approximators as well, meanwhile only the parameters of the output layer need to be optimized, leading to a convex optimization problem. Extreme learning machines are employed to solve ordinary and partial differential equations in [18] and [1], respectively. Spectral accuracy is obtained for problems with analytic solutions, and the simplicity of network architectures reduces the training difficulty in terms of execution time and solution accuracy, compared to deep neural networks. In [4], a special kind of partition of unity (PoU), termed as domain decomposition, is combined with extreme learning machines to approximate the PDE solution and the block time-marching strategy is proposed for long time simulations. Spectral accuracy is obtained in both space and time for analytic solutions, but the error grows exponentially fast in most cases as the simulation time increases. In [2], combining PoU and random feature functions, the random feature method (RFM) is proposed to solve static PDEs with complex geometries. An automatic rescaling strategy is also proposed to balance the weights of equations and boundary conditions, which is found to work well for linear elasticity and Stokes flow over complex geometrices.

The objective in this article is to propose a methodology for solving time-dependent PDEs that shares the merits of both traditional and machine learning-based algorithms. This new class of algorithms can be made spectrally accurate in both space and time. Meanwhile, they are also mesh-free, making them easy to use even in settings with complex geometry. Our starting point is based on a combination of rather simple and well-known ideas: We use space-time PoU and random feature functions to represent the approximate solution, the collocation method to take care of the PDE as well as the boundary conditions in the least-squares sense, and a rescaling procedure to balance the contributions from the PDE and initial/boundary conditions in the loss function. This method is called ST-RFM. For time-dependent problems, a random feature function can depend on both spatial and temporal variables, i.e. space-time concatenation input (STC), or is the product of two random feature functions depending on spatial and temporal variables separately (SoV). STC can be viewed as natural extensions of random feature method for static problems [2], while SoV may be a better choice for some time-dependent PDEs. Both STC and SoV are proved to be universal approximators. For long time intervals, PoU for the temporal variable, i.e. domain decomposition along the time direction, is propsed to solve time-dependent problems. Error estimates of the ST-RFM and the block-time marching strategy are provided. ST-RFM yields spectrally accurate results with slowly growing error in terms of the number of subdomains in time, while the error generated by the block time-marching strategy in [4] grows exponentially fast in terms of the number of blocks. These findings are confirmed by numerical results in one and two dimensions.

This article is organized as follows. In Section 2, we present the ST-RFM and prove the approximation property of STC and SoV. Upper bound error estimate for ST-RFM and lower bound error estimate for the block time-marching strategy are provided. In Section 3, numerical experiments in one and two dimensions for heat, wave, and Schrödinger equations are conducted to show the spectral accuracy of the proposed method, and confirm the error estimates for long time simulations. Application of ST-RFM to a two-dimensional wave equation over a complex geometry is also provided. Conclusions are drawn in Section 4.

2. Random Feature Method for Solving Time-dependent PDEs

For completeness, we first recall the RFM for solving static problems. We then introduce the ST-RFM for solving time-dependent PDEs, and prove the universal approximation property and the error estimate.

2.1. Random Feature Method for Static Problems

Let , where is the dimension of , and let be the dimension of output. Consider the following boundary-value problem

| (1) |

where and are known functions, and are differential and boundary operators, respectively. is the boundary of .

The RFM has the following components. First, points are chosen from , typically uniformly distributed. Then, is decomposed to subdomains with . Thus, we have . For each , a PoU function with support , i.e. , is constructed. In one dimension, two PoU functions are commonly used:

| (2a) | ||||

| (2b) | ||||

The first PoU is discontinuous while the second one is continuously differentiable. In high dimensions, the PoU function can be constructed as a tensor product of one-dimensional PoU functions , i.e. .

Then, for each , RFM constructs random features by a two-layer neural network with random but fixed parameters and , i.e.

| (3) |

where the nonlinear activation function is chosen as tanh or trigonometric functions in [2]. In (3), each component of and is independently sampled from , where controls the magnitude of parameters. In particular, both the weights and the biases are fixed in the optimization procedure. Moreover, is a linear transformation to transform inputs in to . The approximate solution in RFM is the linear combination of these random features together with the PoU

| (4) |

where are unknown coefficients to be sought and denotes the degree of freedom. For vectorial solutions, we approximate each component of the solution by (4), i.e.

| (5) |

To find the optimal set of parameters , RFM evaluates the original problem on collocation points and formulates a least-squares problem. To be specific, RFM samples collocation points in each , and computes the rescaling parameters and for , , and satisfying . Let and . Then, the random feature method minimizes

| (6) |

where is of the form (5) and is the -th coefficient of the random feature .

The above problem (6) is a linear least-squares problem when and are linear operators. Moreover, when the discontinuous PoU is used, continuity conditions between adjacent subdomains must be imposed by adding regularization terms in (6), while no regularization is required when is used for second-order equations. By minimizing (6), the optimal coefficients are obtained and the numerical solution is constructed by (5).

2.2. Space-Time Random Feature Method

Now, we consider time-dependent PDEs of the following form with the final time

| (7) |

where , and are known functions and is the initial operator.

Following the same routine as in RFM, we construct a partition of . First, we decompose the spatial domain to subdomains where each subdomain contains a central point . We then decompose the temporal interval to subdomains, i.e. , where each subdomain contains a central point . The product of the PoUs in space and in time results in the space-time PoU, i.e.

| (8) |

where and are indices for spatial and temporal subdomains, respectively.

Next, we generalize spatial random features (3) to space-time random features. There are two options. The first can be viewed as a natural extension of (3), where the concatenation of spatial and temporal variables is fed into as the input and the output is a random feature function, i.e.

| (9) |

Here and are the weights associated with spatial and temporal inputs, respectively. is the bias, and are linear transformations from to and from to , respectively. (9) is called STC.

The second option is to use separation of variables, which mimics the technique of separation of variables for solving PDEs

| (10) |

In this formulation, the space-time random feature is a product of the spatial random feature and the temporal random feature, thus we term it as SoV. For both random features, the degrees of freedom .

The combination of these space-time random features and PoU leads to the following approximate solution in the scalar case

| (11) |

where is the unknown coefficient to be sought. A vectorial solution can be formulated in the same way as that in (5), i.e.

| (12) |

For the -th component of , we denote the coefficient associated to by .

By substituting (11) or (12) into (7), we define the loss function on collocation points. Denote spatial collocation points in the -th spatial subdomain and temporal collocation points in the -th temporal subdomain, respectively. In RFM, we define the rescaling parameters , and for , , , and satisfying . Then, the ST-RFM minimizes

| (13) | ||||

to find the numerical solution of the form (12). Moreover, when or and is used, continuity conditions between adjacent spatial (temporal) subdomains must be imposed by adding regularization terms in (13), while no regularization is required when is used for second-order equations.

For better illustration of this method, we consider an example with , the initial operator being identity and rescaling parameters being one. For convenience, we set and relabel by without confusion. Under these settings, we introduce two matrix-valued functions , and three induced matrices as follows:

where is the row index and is the column index. Then, we construct the matrix as follows:

Define the following vectors

then we construct the vector , where

Let , then the optimal coefficient by ST-RFM is obtained by

| (14) |

For time-dependent partial differential equations, Dong et al. [4] proposed the block time-marching strategy. Block time-marching strategy solves Eq. (7) in each time block individually and applies the numerical results in -th block at the terminal time as the initial conditions of -th block. Let be the number of time blocks, and let for simplicity. For first-order equations in time, we present the detailed process of block time-marching strategy in Algorithm 1, and the optimal coefficient is the concatenation of optimal coefficients in all time blocks.

2.3. Approximation Properties

Both concatenation random features and separation-of-variables random features are universal approximators. To prove these, we first recall the definition of sigmoidal functions in [3].

Definition 2.1.

is said to be sigmoidal if

The approximation property of STC is given in the following theorem.

Theorem 2.1.

Let be any continuous sigmoidal function. Then finite sums of

| (15) |

are dense in . In other words, given any and , there exists a sum , such that

Proof.

It is a direct consequence of in [3, Theorem 2]. ∎

Corollary 2.2.

Proof.

Consider a continuous sigmoidal function , then . From Theorem 2.1, there is a sum , such that

Define . If , then the finite sum satisfies

If , since is compact and thus is bounded and closed, there exist , and such that

holds for all . Then, the finite sum satisfies

for all . ∎

For SoV, we first extend the definition of discriminatory functions in [3] as follows.

Definition 2.2.

is said to be discriminatory if for a measure

for all , , and implies .

Lemma 2.3.

Any bounded, measurable sigmoidal function is discriminatory. In particular, any continuous sigmoidal function is discriminatory.

Proof.

For any , we have

Thus, converges in the pointwise and bounded sense to

as . Similarly, we have converges in the pointwise and bounded sense to

as .

Denote and . Let be the hyperline defined by and be the space defined by . Then by the Lesbegue Bounded Convergence Theorem, we have

for all .

We now show that the measure of all quarter spaces being zero implies that the measure itself must be zero. This would be trivial if was a positive measure but here it is not.

For a fixed and a bounded measurable function , we define a linear functional as

Note that is a bounded functional on since is a finite signed measure. Set the indicator function of the interval , i.e. if and if , then

Similarly, if is the indicator function of the open interval . By linearity, holds for the indicator function of any interval and for any simple function (sum of indicator functions of intervals). Since simple functions are dense in , .

In particular, a substitution of bounded measurable functions and gives

for all . The Fourier transform of is zero and we have . Hence, is discriminatory. ∎

Remark 2.4.

Following the same routine, we can prove the approximation property of SoV. For any continuous sigmoidal function , the finite sums

| (16) |

are dense in .

Corollary 2.5.

Proof.

The proof is similar to that of Corollary 2.2. ∎

2.4. Error Estimates of Space-time Random Feature Method

For convenience, we set to analyze the error of block time-marching strategy and the ST-RFM. Let and be exact solutions in Eq. (14) and the block time-marching strategy in Algorithm 1, and and be numerical solutions by a numerical solver, such as direct or iterative methods, respectively. Then we have the following results.

Theorem 2.6.

Assume that for any linear least-squares problem in ST-RFM and block time-marching strategy, there exists such that . Then .

Proof.

Under the assumption, for each subproblem in Algorithm 1, the optimal solution satisifies

Recalling the definition of in ST-RFM, we have

After some algebraic calculations, we have

Since the optimal solution of linear least-squares problem is unique under the assumption, we have . ∎

From Theorem 2.6, solving the time-dependent PDEs by the block time-marching strategy is equivalent to solving the same problem by the ST-RFM. In practice, however, the numerical solution is different from the optimal solution , and we denote the difference by . For long time intervals, when and increases by one, the solution error at block or subdomain is expected to be greater than previous blocks or subdomains due to error accumulation. To quantitatively analyze the error propagation in terms of and , we first introduce a matrix as

For simplicity, random feature functions are set to be the same over different time subdomains, i.e. for all . Then, all are the same and can be denoted by . To proceed, we need the following assumption for .

Assumption 2.1.

is diagonalizable.

Remark 2.7.

Although this assumption cannot be proved, we find that numerically is diagonalizable for all the numerical examples we have tested. This is not a bad assumption since it provides a lower bound error estimate of the block time-marching strategy and is verified numerically as well.

Under Assumption 2.1, there exists such that is the eigenvector of with eigenvalue and is a unitary orthogonal basis in . Denote the eigenvalue with the largest modulus by , i.e. . Since forms a basis, there exists such that , where , are independent and identically distributed random variables with , and .

We need the following lemma to bound a geometric series from above and from below.

Lemma 2.8.

For any with and , there exists such that

holds for , where .

Proof.

When , we have

Therefore, there exists such that

holds for . Similarly, when , we have

Therefore, there exists such that

Thus, the conclusion holds when . ∎

Now we are ready to characterize the difference between and under the perturbation .

Lemma 2.9.

Under a perturbation to the solution, there exists and such that when , the solution difference by block time-marching strategy satisfies

where

Proof.

The difference between and satisfies

where due to . Notice that is an orthonormal basis of , thus

| (Unitary orthogonality of ) | ||||

The lower bound can be estimated for four different cases.

-

(1)

When , we have

where .

-

(2)

When and , let , where and , then we have

where .

-

(3)

When and , we have

where .

-

(4)

When , we have

where .

∎

If different random feature functions are chosen over different time subdomains, we observe that also satisfies the estimation in Lemma 2.9.

Lemma 2.10.

For the space-time random feature method, there exists and such that

Proof.

where . ∎

Lemma 2.11.

Let two functions and be of the form (11) with and the coefficients being , , respectively, then there exists such that

Proof.

Notice that

where , and

where . Moreover, for all , we have . ∎

Theorem 2.12 (Lower bound).

Denote the exact solution. Let be the error between the numerical solution and the exact solution to the least-squares problem. Given , we assume that

Then, there exists and such that when ,

| (18) |

Proof.

Notice that

∎

Theorem 2.13 (Upper bound).

Denote the exact solution. Let be the error between the numerical solution and the exact solution to the least-squares problem. Given , we assume that

Then, there exists and such that when ,

| (19) |

Proof.

Notice that

∎

3. Numerical Results

In this section, we present numerical results for one-dimensional and two-dimensional problems with simple geometry and a two-dimensional problem with complex geometry to demonstrate the effectiveness of the ST-RFM and confirm theoretical results.

3.1. One-dimensional Problems

3.1.1. Heat Equation

Consider the following problem

| (20) |

where , , and . The exact solution is chosen to be

| (21) |

We choose the initial condition by restricting Eq. (21) to , and the boundary conditions and by restricting Eq. (21) to and , respectively.

Set the default hyper-parameters , , , , and . Numerical solutions and errors of STC (Eq. (9)) and SoV (Eq. (10)) are plotted in Figure 1. The error in the ST-RFM is small (), which indicates that both random feature functions have strong approximation properties.

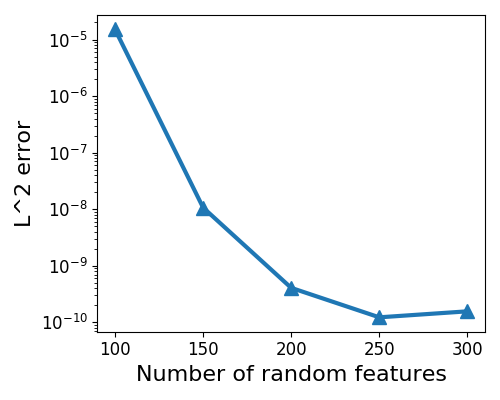

Next, we report the convergence behavior with respect to different parameters in Figure 2(a)-(d). In Figure 2(a), we set , , , and to verify the convergence with respect to . In Figure 2(b), we set , , , and to verify the convergence with respect to . In Figure 2(c), we set , , , and to verify the convergence with respect to . In Figure 2(d), we set , , , and to verify the convergence with respect to /. A clear trend of spectral accuracy is observed for the ST-RFM in both spatial and temporal directions.

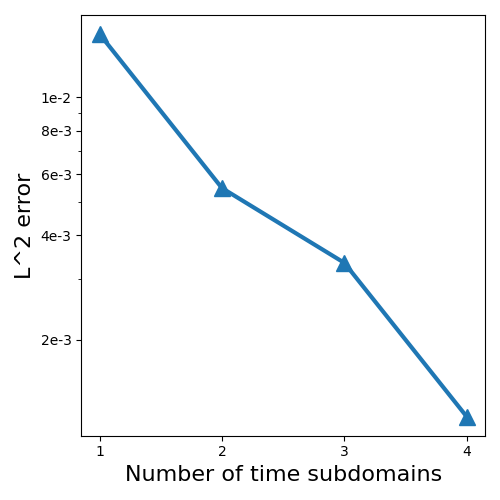

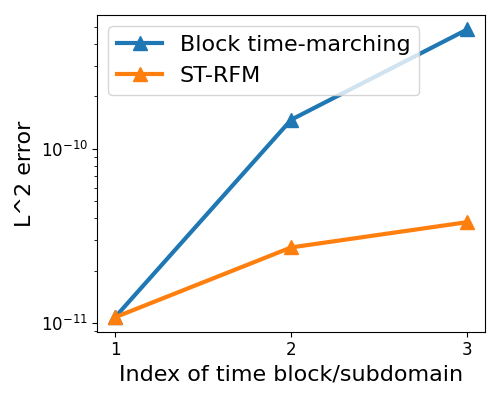

Now, we compare STC and SoV in Figure 2(e), where the default hyper-parameter setting is used. For this example, STC performs better than SoV. The comparison between the block time-marching strategy and the ST-RFM is plotted in Figure 2(f), where we set and for the block time-marching strategy and and for the ST-RFM. The error of solution by the block time-marching strategy increases exponentially fast with respect to the number of blocks, while the error in the ST-RFM remains almost flat over all time subdomains.

3.1.2. Heat Equation with Nonsmooth Initial Condition

Consider the heat equation (20) with , and the nonsmooth initial condition as follows:

| (22) |

It is easy to check that only belongs to .

Since an exact solution is not analytically available for the problem under consideration, we employ a set of hyper-parameters, namely , , , , , and , to obtain a reference solution. Numerical convergence of ST-RFM is evaluated in terms of the relative error, which is depicted in Figure 3. We use STC to implement the basis function of ST-RFM here. Apparently, spectral accuracy is observed. However, the accuracy is only around , two orders of magnitude larger than that in the smooth case (20), which is attributed to the regularity deficiency.

3.1.3. Wave Equation

Consider the following problem

| (23) |

where , , and . The exact solution is chosen to be

| (24) |

Initial conditions and are chosen accordingly.

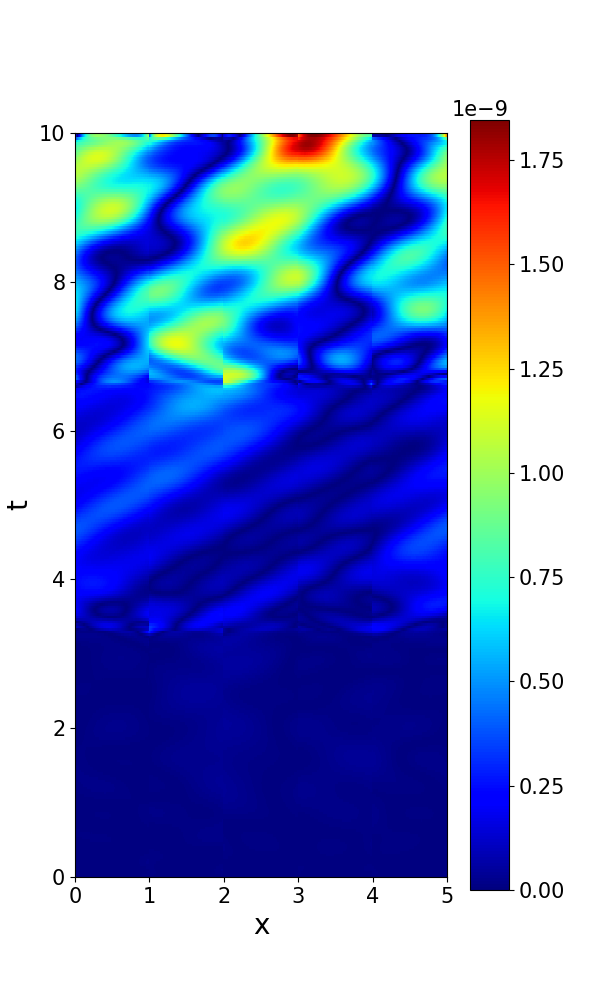

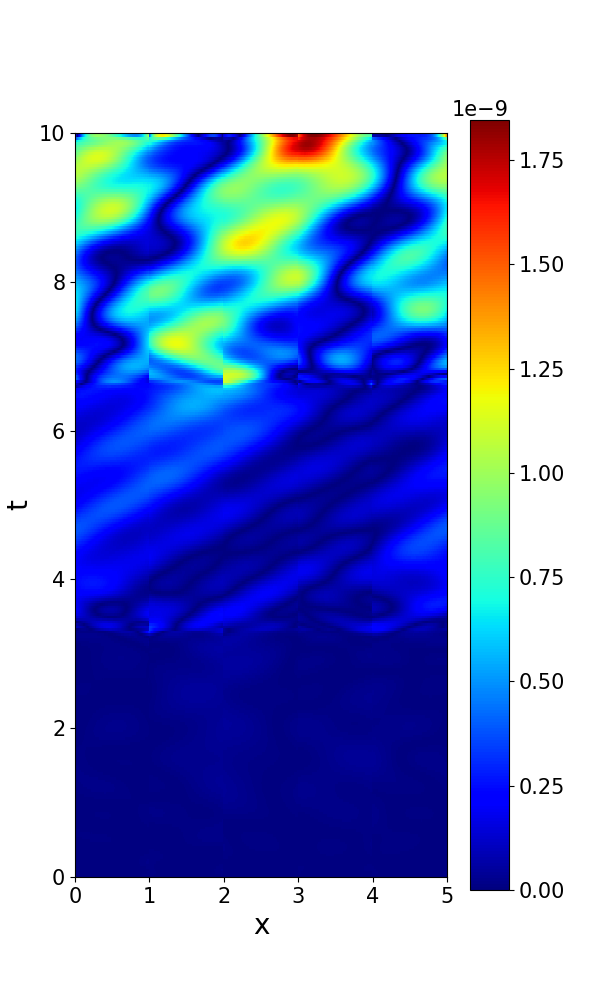

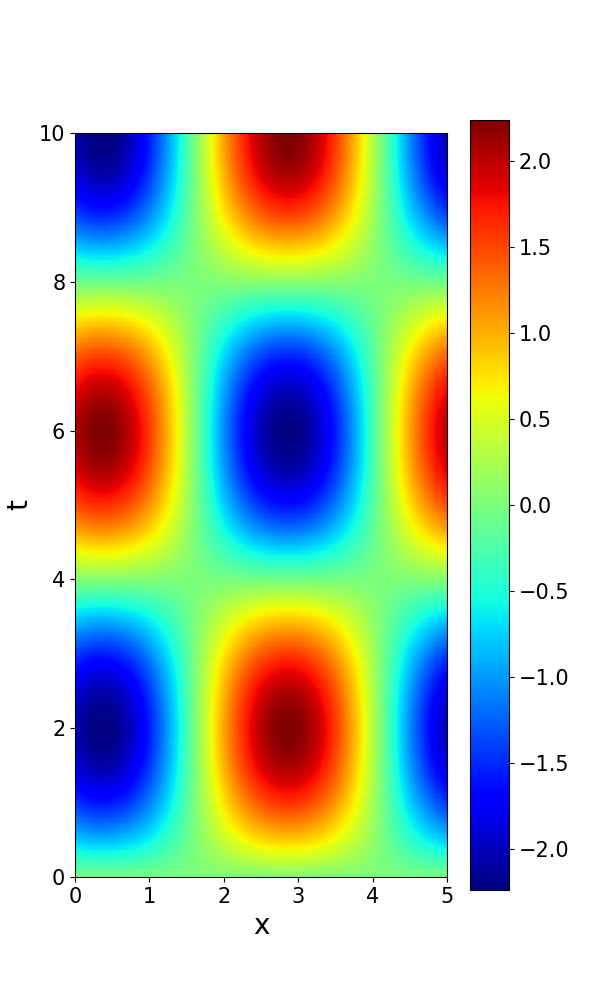

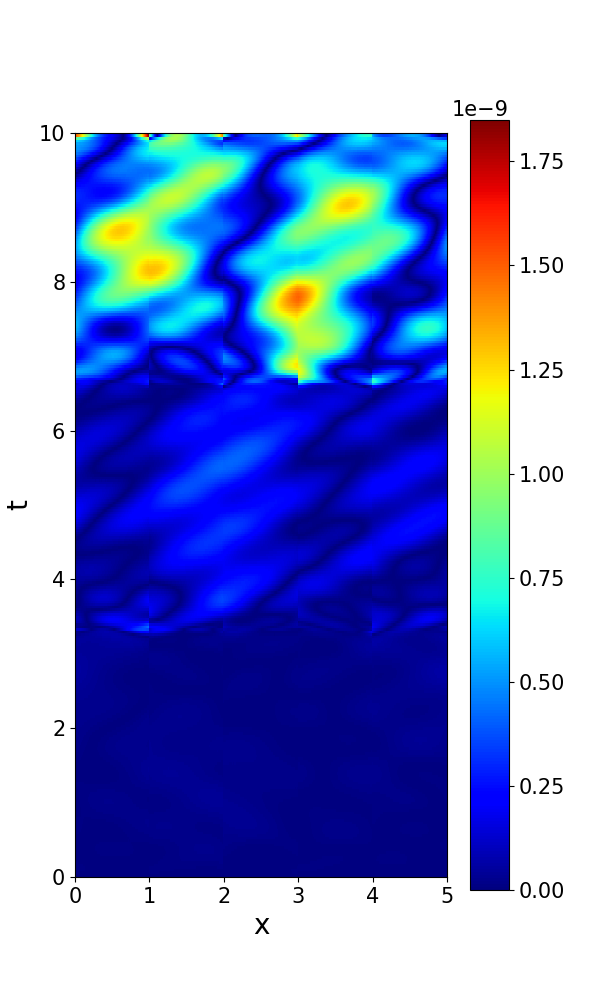

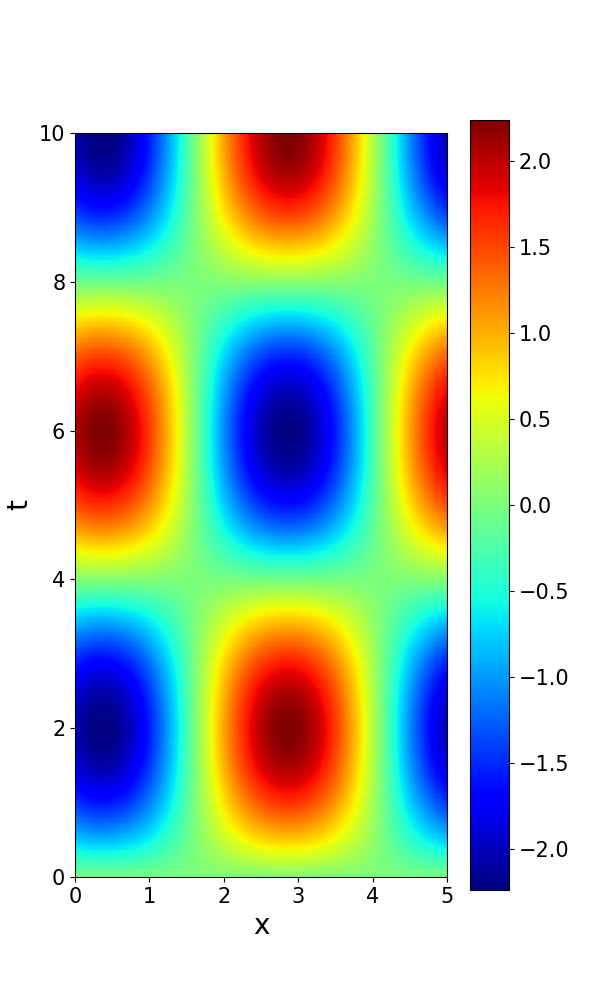

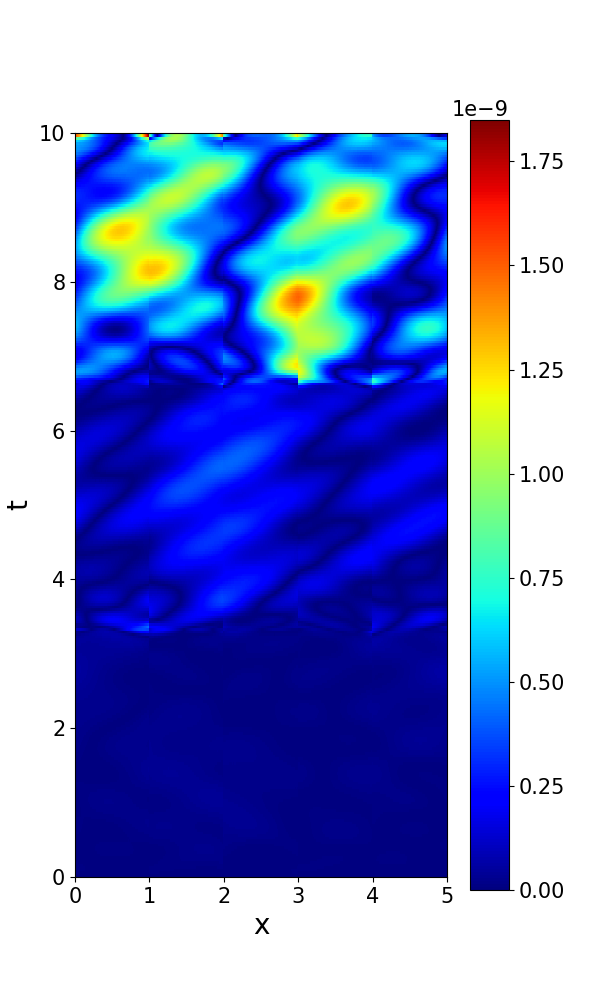

Set the default hyper-parameters , , , , and . Numerical solutions and errors of STC and SoV are plotted in Figure 4. The error is smaller than .

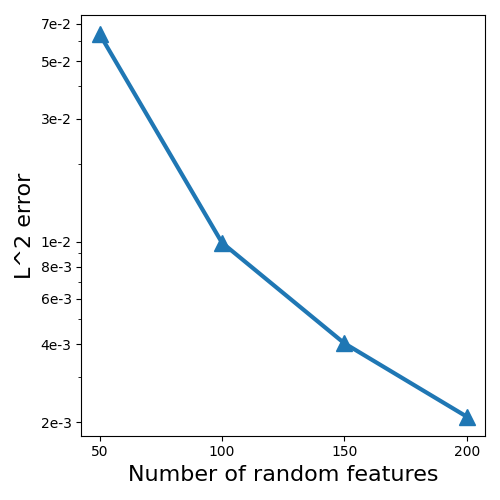

Next, we report the convergence behavior with respect to different parameters in Figure 5(a-d). In Figure 5(a), we set , , , and to verify the convergence with respect to . In Figure 5(b), we set , , , and to verify the convergence with respect to . In Figure 5(c), we set , , , and to verify the convergence with respect to . In Figure 5(d), we set , , , and to verify the convergence with respect to /. A clear trend of spectral accuracy is observed for the ST-RFM in both spatial and temporal directions.

Now, we compare STC and SoV in Figure 5(e), where we set , , , and . For this example, SoV performs better than STC. The comparison between the block time-marching strategy and the ST-RFM is plotted in Figure 5(f), where we set and for block time-marching strategy and and for ST-RFM. The error of the solution by the block time-marching strategy increases exponentially fast with respect to the number of blocks, while the error in the ST-RFM remains almost flat over all time subdomains.

3.1.4. Schrödinger Equation

Consider the following problem

| (25) |

where , and . The exact solution is chosen to be

| (26) |

and is chosen accordingly.

Set the default hyper-parameters , , , , and . Numerical solutions and errors of STC and SoV are plotted in Figure 6. The error in the ST-RFM is smaller than .

Next, we report the convergence behavior with respect to different parameters in Figure 7(a-d) (real part) and Figure 8(a-d) (imaginary part). In Figure 7(a) and Figure 8(a), we set , , , and to verify the convergence with respect to . In Figure 7(b) and Figure 8(b), we set , , , and to verify the convergence with respect to . In Figure 7(c) and Figure 8(c), we set , , , and to verify the convergence with respect to . In Figure 7(d) and Figure 8(d), we set , , , and to verify the convergence with respect to /. A clear trend of spectral accuracy is observed for the ST-RFM in both spatial and temporal directions.

Now, we compare STC and SoV in Figure 7(e) and Figure 8(e), where we set , , , and . For this example, SoV performs better than STC. The comparison between the block time-marching strategy and the ST-RFM is plotted in Figure 7(f) and Figure 8(f), where we set and for block time-marching strategy and and for ST-RFM. The error of the solution by the block time-marching strategy increases exponentially fast with respect to the number of blocks, while the error in the ST-RFM increases slower over all time subdomains.

3.1.5. Verification of Theoretical Results

First, we verify Assumption 2.1 for all one-dimensional problems using the sufficient condition that the number of different eigenvalues of , denoted by #, equals to the number of random features . Results are recorded in Table 1. The number of unique eigenvalues of equals to for all one-dimensional problems and Assumption 2.1 is verified.

| Heat | Wave | Schrödinger | |||

|---|---|---|---|---|---|

| #unique | #unique | #unique | |||

| 100 | 100 | 100 | 100 | 200 | 200 |

| 150 | 150 | 150 | 150 | 300 | 300 |

| 200 | 200 | 200 | 200 | 400 | 400 |

| 250 | 250 | 250 | 250 | 500 | 500 |

| 300 | 300 | 300 | 300 | 600 | 600 |

| 350 | 350 | 350 | 350 | 700 | 700 |

| 400 | 400 | 400 | 400 | 800 | 800 |

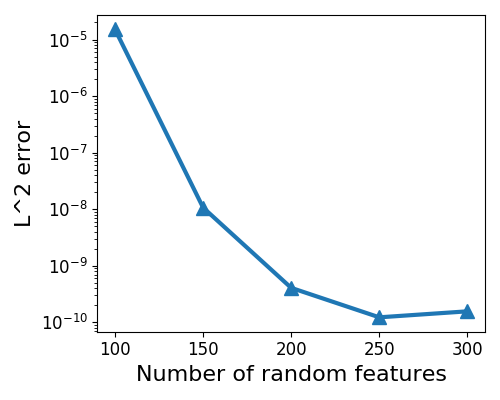

Next, we use the wave equation to verify the error estimate in Theorem 2.12. For the block time-marching strategy, we set , and for ST-RFM, we set . Eigenvalues of are plotted in Figure 9(a), and the error is plotted in Figure 9(b).

The largest modulus of eigenvalues of from Figure 9(a) is , indicating that , while the error in the block time-marching strategy increases exponentially with the rate from Figure 9(b). Therefore, the lower bound estimate in Theorem 2.10 is verified. In addition, the error in the ST-RFM remains almost flat, which is mainly constrolled by the approximation power of space-time random features.

3.2. Two-dimensional problems

3.2.1. Membrane Vibration over a Simple Geometry

Consider the following problem

| (27) |

where , and . The exact solution is chosen to be

| (28) |

and and are chosen accordingly.

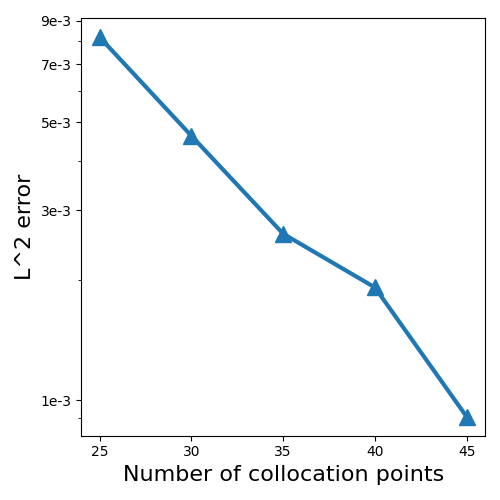

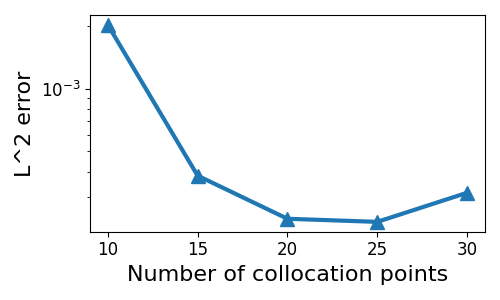

Set the default hyper-parameters , , , and . We report the convergence behavior with respect to different parameters in Figure 10(a-c). In Figure 10(a), we set , , , and to verify the convergence with respect to . In Figure 10(b), we set , , and to verify the convergence with respect to . In Figure 10(c), we set , , and to verify the convergence with respect to the number of collocation points. A clear trend of spectral accuracy is observed for the ST-RFM in both spatial and temporal directions. Now, we compare STC and SoV in Figure 10(d), when , , , and . Performances of STC and SoV are close.

3.2.2. Membrane vibration over a Complex Geometry

Consider a complex geometry in Figure 11

and the following the membrane vibration problem

| (29) |

where , . The same and are used as in Section 3.2.1. Set the default hyper-parameters , , , , and . Numerical solutions of SoV at different times are plotted in Figure 12.

No exact solution is available and numerical convergence is shown here. errors of solution with respect to different parameters is recorded in Table 2, Table 3 and Table 4. The solution with the largest degrees of freedom is chosen as the reference solution. As the parameter varies, the numerical solution converges to the reference solution, indicating the robustness of the ST-RFM in solving time-dependent partial differential equations over a complex geometry.

| # Time Blocks | / | // | Solution error | ||

|---|---|---|---|---|---|

| 1 | 2 | 1 | 400 | 30 | 5.98e-1 |

| 1 | 2 | 2 | 400 | 30 | 7.07e-2 |

| 1 | 2 | 3 | 400 | 30 | 4.57e-2 |

| 1 | 2 | 4 | 400 | 30 | 3.89e-2 |

| 1 | 2 | 5 | 400 | 30 | 3.31e-2 |

| 1 | 2 | 6 | 400 | 30 | Reference |

| # Time Blocks | / | // | Solution error | ||

|---|---|---|---|---|---|

| 5 | 2 | 2 | 400 | 10 | 7.71e-2 |

| 5 | 2 | 2 | 400 | 15 | 2.16e-2 |

| 5 | 2 | 2 | 400 | 20 | 1.44e-2 |

| 5 | 2 | 2 | 400 | 25 | 1.25e-2 |

| 5 | 2 | 2 | 400 | 30 | Reference |

| # Time Blocks | / | // | Solution error | ||

|---|---|---|---|---|---|

| 5 | 2 | 2 | 100 | 30 | 1.05e-1 |

| 5 | 2 | 2 | 150 | 30 | 5.25e-2 |

| 5 | 2 | 2 | 200 | 30 | 3.96e-2 |

| 5 | 2 | 2 | 250 | 30 | 2.82e-2 |

| 5 | 2 | 2 | 300 | 30 | 1.80e-2 |

| 5 | 2 | 2 | 350 | 30 | 1.46e-2 |

| 5 | 2 | 2 | 400 | 30 | Reference |

4. Concluding Remarks

In this work, we study numerical algorithms for solving time-dependent partial differential equations in the framework of the random feature method. Two types of random feature functions are considered: space-time concatenation random feature functions (STC) and space-time separation-of-variables random feature functions (SoV). A space-time partition of unity is used to piece together local random feature functions to approximate the solution. We tested these ideas for a number of time-dependent problems. Our numerical results show that ST-RFM with both STC and SoV has spectral accuracy in space and time. The error in ST-RFM remains almost flat as the number of time subdomains increases, while the error grows exponentially fast when the block time-marching strategy is used. Consistent theoretical error estimates are also proved. A two-dimensional problem over a complex geometry is used to show that the method is insensitive to the complexity of the underlying domain.

Acknowledgments

The work is supported by National Key R&D Program of China (No. 2022YFA1005200 and No. 2022YFA1005203), NSFC Major Research Plan - Interpretable and General-purpose Next-generation Artificial Intelligence (No. 92270001 and No. 92270205), Anhui Center for Applied Mathematics, and the Major Project of Science & Technology of Anhui Province (No. 202203a05020050).

References

- [1] Francesco Calabrò, Gianluca Fabiani, and Constantinos Siettos, Extreme learning machine collocation for the numerical solution of elliptic pdes with sharp gradients, Computer Methods in Applied Mechanics and Engineering 387 (2021), no. 1, 114–188.

- [2] Jingrun Chen, Xurong Chi, Weinan E, and Zhouwang Yang, Bridging traditional and machine learning-based algorithms for solving pdes: The random feature method, Journal of Machine Learning 1 (2022), no. 3, 268–298.

- [3] George Cybenko, Approximation by superpositions of a sigmoidal function, Mathematics of control, signals and systems 2 (1989), no. 4, 303–314.

- [4] Suchuan Dong and Zongwei Li, Local extreme learning machines and domain decomposition for solving linear and nonlinear partial differential equations, Computer Methods in Applied Mechanics and Engineering 387 (2021), no. 1, 114–129.

- [5] Weinan E and Yu Bing, The deep ritz method: A deep learning-based numerical algorithm for solving variational problems, Communications in Mathematics and Statistics 6 (2018), no. 1, 1–12.

- [6] Weinan E, Jiequn Han, and Arnulf Jentzen, Deep learning-based numerical methods for high-dimensional parabolic partial differential equations and backward stochastic differential equations, Communications in Mathematics and Statistics 5 (2017), no. 4, 349–380.

- [7] Weinan E, Jiequn Han, and Arnulf Jentzen, Algorithms for solving high dimensional pdes: from nonlinear monte carlo to machine learning, Nonlinearity 35 (2021), no. 1, 278.

- [8] Ian Goodfellow, Yoshua Bengio, and Aaron Courville, Deep learning, MIT Press, 2016.

- [9] Jiequn Han, Arnulf Jentzen, and Weinan E, Solving high-dimensional partial differential equations using deep learning, Proceedings of the National Academy of Sciences 115 (2018), no. 34, 8505–8510.

- [10] Guang-Bin Huang, Qin-Yu Zhu, and Chee-Kheong Siew, Extreme learning machine: Theory and applications, Neurocomputing 70 (2006), no. 1, 489–501.

- [11] Randall J LeVeque, Finite difference methods for ordinary and partial differential equations: steady-state and time-dependent problems, SIAM, 2007.

- [12] Radford M. Neal, Bayesian learning for neural networks, Ph.D. thesis, University of Toronto, 1995.

- [13] Ali Rahimi and Benjamin Recht, Random features for large-scale kernel machines, Advances in Neural Information Processing Systems (NIPS), vol. 20, Curran Associates, Inc., 2007.

- [14] M. Raissi, P. Perdikaris, and G.E. Karniadakis, Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations, Journal of Computational Physics 378 (2019), no. 1, 686–707.

- [15] Jie Shen, Tao Tang, and Li-Lian Wang, Spectral methods: algorithms, analysis and applications, vol. 41, Springer Science & Business Media, 2011.

- [16] Justin Sirignano and Konstantinos Spiliopoulos, Dgm: A deep learning algorithm for solving partial differential equations, Journal of Computational Physics 375 (2018), no. 1, 1339–1364.

- [17] Vidar Thomée, Galerkin finite element methods for parabolic problems, vol. 25, Springer Science & Business Media, 2007.

- [18] Yunlei Yang, Muzhou Hou, and Jianshu Luo, A novel improved extreme learning machine algorithm in solving ordinary differential equations by legendre neural network methods, Advances in Difference Equations 2018 (2018), no. 1, 1–24.

- [19] Yaohua Zang, Gang Bao, Xiaojing Ye, and Haomin Zhou, Weak adversarial networks for high-dimensional partial differential equations, Journal of Computational Physics 411 (2020), no. 1, 109409.