11email: [email protected]

The Price of Pessimism for Automated Defense ††thanks: Funded by the Auerbach Berger Chair in Cybersecurity held by Spiros Mancoridis, at Drexel University

Abstract

The well-worn George Box aphorism “all models are wrong, but some are useful” is particularly salient in the cybersecurity domain, where the assumptions built into a model can have substantial financial or even national security impacts. Computer scientists are often asked to optimize for worst-case outcomes, and since security is largely focused on risk mitigation, preparing for the worst-case scenario appears rational. In this work, we demonstrate that preparing for the worst case rather than the most probable case may yield suboptimal outcomes for learning agents. Through the lens of stochastic Bayesian games, we first explore different attacker knowledge modeling assumptions that impact the usefulness of models to cybersecurity practitioners. By considering different models of attacker knowledge about the state of the game and a defender’s hidden information, we find that there is a cost to the defender for optimizing against the worst case.

1 Introduction

Cybersecurity incidents continue to grow in frequency and volume each year, and are estimated to cause $8 billion worth of damage in 2023 [6]. While cybersecurity awareness continues to grow and companies continue to invest in their security functions, the majority of threat response functions are still carried out manually by cybersecurity analysts. As a result, there is a move to automate parts of cyber threat response – something clearly illustrated by the wide availability and marketing of security orchestration, automation, and response (SOAR) systems. These systems, however, are largely rule-based – they take some specific action when some specific criterion is met. This is because SOAR lacks any direct knowledge of the network system it is responsible for helping defend. We instead consider the use of attacker simulation to train defensive agents and aim to answer the question of what assumptions about attacker information should be made to train the most robust defensive agents.

Abstractions of complex systems generally trade accuracy for tractability because there is some use in modeling that system in different scenarios. In cybersecurity, we use modeling to consider potential future states of the system we are tasked with defending and how different threats and risks [28] may be realized. As an additional constraint in cybersecurity modeling, accuracy and timeliness are constantly in tension. Responding inaccurately to a potentially malicious event that was triggered by benign behavior can have a significant cost, but not responding fast enough to a malicious event can be even more expensive. In the automation of these responses, there is a tendency to optimize for the worst-case. However, as we know from e.g. linear programming [16], there are cases where a solution that is worst-case optimal is empirically suboptimal in practice. Our work seeks to understand the “price of pessimism” – that is, the cost to a computer network defender for overestimating the knowledge or capability of an attacker.

While a number of different game types have been used across the security games space [30, 20], a small handful comprise the preeminent models used in the space – The Bayesian leader-follower game111Also known as the Stackelberg game, the stochastic Bayesian game, and the two player zero sum game. When training a learning agent for cyberdefense, decisions about attacker modeling directly impact the policy learned by the defending agent. We concern ourselves primarily with the two Bayesian game variants under differing prior knowledge and observability assumptions where the attacker is an actor who seeks to deploy ransomware across a target network. While the impact of altering a game’s state or action space is clear and the implications are well-understood, there are other modeling assumptions that are often made implicitly. These assumptions are critical parts of the game design that impact its usefulness to cybersecurity practitioners. In this work, we make assumptions explicit about the presence of attackers and noise, and consider the impact of assumptions about attacker knowledge.

Our work begins with a presentation of related work in the field of security game theory. We then define the stochastic Bayesian game model and setting we use as an abstraction of attacker-defender interaction on a computer network before presenting a theoretical model of how defender belief about attacker knowledge is liable to influence their behavior. In this manuscript, we are particularly interested in the case we deem most realistic – one where the attacker has limited knowledge of the target network. Our model, being built on partially observable stochastic Bayesian games which have no known general solution concept [14], requires that we develop a decision theory for the players. To overcome the limitations of an attacker to optimize against a particular defender, we introduce the use of the restricted Bayes/Hurwicz criterion [11] for decision making under uncertainty. In order to validate our theoretical findings, we leverage reinforcement learning in a YAWNING-TITAN [5] environment modified to allow attackers and defenders to act as independent learning agents. We leverage the proximal policy optimization reinforcement learning algorithm of Schulman et al. [26], an on-policy deep reinforcement learning algorithm with generally good performance that is used in the default implementation of YAWNING-TITAN222https://github.com/dstl/YAWNING-TITAN/tree/main. We conclude with a discussion of our results and avenues for further work.

Contributions

This work evaluates the “price of pessimism”, a phenomenon wherein the a priori assumption about an adversary’s knowledge of a system results in a suboptimal response pattern. In particular, this manuscript contributes the following:

-

1.

For reinforcement learning agents in a stochastic Bayesian game, optimizing against a worst-case adversary leads to suboptimal policy convergence.

-

2.

Defending agents trained against attacking agents that learn are also highly capable against algorithmic attackers even when they have not seen those algorithmic attackers during training.

-

3.

An extension of the YAWNING-TITAN [5] reinforcement learning framework for training independent attacking and defending agents

-

4.

A novel use of the Bayes-Hurwicz criterion for parameterizing attacker decision making under uncertainty

2 Related Work

Security game theory is a broad field informed by cybersecurity, decision theory, and game theory. Recent challenges like CAGE [33] have encouraged development of models like CybORG [13] and YAWNING-TITAN [5] that use reinforcement learning to train autonomous agents that defend against cyber attacks. The Ph.D thesis of Campbell [7] also considers a similar problem space to our work and leverages the same game theoretic model. These works address a similar problem space to our work: the development of a defensive agent that disrupts an adversary while minimizing impact to network users. This paper builds on prior work by the authors [14] that uses a simple state and action space for Stochastic Bayesian Games (SBG) as introduced by Albrecht and Ramamoorthy [3]. The partial observability of the proposed SBG relates closely to the work of Tomášek, Bošanský, and Nguyen [32] on one-sided partially observable stochastic games. Their work considers sequential attacks on some target and develops scalable algorithms for solving zero-sum security games in this setting and present algorithms to compute upper and lower value bounds on a subgame. By contrast, our work seeks to understand how the defender’s beliefs about the attacker impacts the rewards and outcomes for defenders. Additionally, the aforementioned works and other related works like Khouzani et al. [17] and Chatterjee et al. [8] consider the attacker as either a deterministic operator or leverage epidemic modeling techniques to describe an attacker’s movements through a network. Our work here is unique in the respect that we model the attacker as a learning agent, a phenomenon more in line with real-world attackers.

The work of Thakoor et al. [31] and subsequent work by Aggarwal et al. [2] informs our point of view on how attackers respond to risk. In this work, we implicitly assume bounded rationality and account for risk and uncertainty within our model. While Thakoor et al. and Aggarwal et al. use cumulative prospect theory [34] to address deception as a source of uncertainty, we instead consider it one component of a larger overall framework.

A key component of this work is the assumptions about information available to the players. Specifically, the defending player’s beliefs about an attacker’s knowledge. In the security games context, this dynamic is well-captured by existing literature on deception and counter-deception [24]. Our work extends this research area by exploring this dynamic from a game design perspective concerning beliefs defending players hold about the attackers independent of in-game actions and how that impacts the learning of defensive agents.

3 Modeling Assumptions

Since our work is concerned with modeling assumptions, we aim to make our own assumptions as explicit and general as possible. We extend the state and action space used in prior work [14], but operate under the same assumption that attackers and defenders choose their next action simultaneously at each time step. We maintain, without loss of generality, that there is a single attacker and a single defender present in the game.

3.1 State Space

The state space of this game consists of a network graph , where each is a defender-owned computer and each edge is a tuple indicating a network connection between two nodes . The state of each machine is a tuple .

-

•

is the “vulnerability” of a node: the probability that a basic attack will be successful

-

•

is the “true” state of compromise and is visible only to the attacker

-

•

is the defender-visible state of compromise

At each time step, with probability , an “alert” is generated independent of attacker or defender action that sets even if the true compromise state , corresponding to a false positive alert. This phenomenon is justified and described in further depth in Section 3.4.

3.2 Attackers

The attacker’s action space, consists of actions on elements of subject to visibility constraints. We define a “compromised” node as a node that the attacker has gained access to and thus has . An “accessible” node is any node with some edge connecting it to any compromised node. The observable state space of an attacker, consists of all compromised nodes and all accessible nodes. The attacker’s action space consists of the following actions, which each incur some cost :

-

•

Basic Attack: Compromise an accessible with probability .

-

•

Zero-day Attack: Compromise an accessible as if .

-

•

Move: Move from some compromised to another compromised

-

•

Do Nothing: Take no action

-

•

Execute: End the game and realize rewards for all compromised

Attacker types inform what exactly their objectives are and may influence the categories of malware used e.g., coin miner, ransomware, backdoor. Moreover, the attacker’s type informs the utility function of the attacker and the cost of each action. For some attacker types, e.g., cybercriminals using ransomware, there is a clear utility: the ransom paid by the victim. However, other attacker types may aim to steal private information that is not be directly convertible to currency. The estimation of these utility functions is thus type-specific, though any compromised machine will confer nonzero utility to the attacker.

For our purposes, we assume that the attacker is a ransomware attacker and aims to compromise as many machines as possible and end the game before the defender can remove them from the system. Assuming unit reward for each node, this means that for a network of size , the attacker’s reward at any time if they take the Execute action or control an attacker-defined percentage of the network is:

Where is the final timestep of the game and is the cost of the action taken at time . We note that our reward function in Section 5 uses a scaling factor for the value of in lieu of unit value, and that this function also holds in cases where each node has a different value.

3.3 Defenders

The defender’s action space, consists of actions on and . Although the defender can take only one action at each time step, they may take that action on a set of nodes or edges. The defender’s observable space, consists of the entirety of and the number of alerts, , for all at all times. Specifically, the defender may:

-

•

Reduce Vulnerability: For some , slightly decrease the probability that a basic attack will be successful

-

•

Make Node Safe: For some , reduce the probability that a basic attack will be successful to 0.01

-

•

Restore Node: For some , reset the node to its initial, uncompromised state, including the probability that a basic attack will be successful

-

•

Scan: With some probability, detect the true compromised status of each

-

•

Do Nothing: Take no action

The objective of cybersecurity, broadly, is to maintain the confidentiality, availability, and integrity [4] of a system. The defender’s utility thus arises from the availability of resources. Each action has some cost associated with it, where the impact of the action being taken on the availability of that resource on the system dictates . For example, the Reduce Vulnerability and Make Node Safe actions are very similar, but the Reduce Vulnerability action incurs a much smaller cost under default YAWNING-TITAN settings. The defender’s utility is a fixed-value reward for eliminating the adversary or withstanding the attack minus the sum of all costs associated with the actions taken during the course of the game.

3.4 Presence of Noise

Security detections are not infallible, and some number of both false positives – detections that alert on benign behavior, and false negatives – failures to detect malicious behavior, must be expected. Attackers are incentivized to and have adopted techniques like using cloud infrastructure and software as a service (SaaS) providers to conduct attacks [15] and the use of legitimate executables or “lolbins” for malicious purposes [19]. Attackers seek to blend in, so detection of malicious behavior that is similar to benign behavior is important for defenders. Since there is no way to definitively determine whether or not a program is malicious, these rules and algorithms yield some number of false positive alerts. The empirical rate of these false positive alerts, according to surveys, appears to be somewhere between 20% [27] and 32% [18]. In cases where some number of alerts are not an indicator of actual attack activity, any probabilistic approach to network security must grapple with this noise. The security game setting has modeled this sort of behavior in the realm of deception and counter-deception. Work by Nguyen and Yadav [23] shows that while attacker payoffs are improved by deception, learning defenders can reduce the value of this deception.

Assuming that an attacker’s behavior is detected with some probability , then there is an independent probability of false positives . Letting and characterize two independent Bernoulli processes that may each yield an alert, we can treat the emission of an alert as the joint probability of these two processes. The probability of an alert occurring at all is thus , as described in earlier work by the authors [14]. The expected probability that a particular alert is attributable to benign activity is . For simplicity, we assume that and are the same across all nodes.

In the absence of the noise assumption, attacker-defender interaction becomes a game of Cops and Robbers on a graph [29] where a defender can eliminate the attacker by finding the “cop number” – the number of nodes required to “surround” the attacker and eliminate all of their access at once – for the subgraph the attacker has explored. This is still an extremely challenging problem, since even without noise, the defender only has a belief about the extremal edges of that subgraph and finding the attacker’s possible subgraphs has exponential complexity. As a result, the importance of an assumption about noise relates with assumptions about under what circumstances a defender realizes a reward.

3.5 Presence of Attackers

In non-cooperative game theory, two players are playing a game and each seeks to optimize against some utility function. This comes, of course, with the implicit assumption that both players know they are playing a game. In cybersecurity games, when modeling the beliefs of the defender, we frequently imply that the defender knows an attacker is present a priori. In reality, attackers are not always present in our system, and this has a substantial impact on defender expectations. Clearly, any response taken when an attacker is not present incurs a cost and yields no reward.

Let be the probability that an attacker is present in a system. If our game has alert probability and no noise – that is, – then although we know the probability of an alert is , the occurrence of an alert allows us to set and the defender can directly pursue the attacker as described in Section 3.4. Assuming noise is present in the system, the probability of an alert occurring at any time step is then

| (1) |

In this setting, since an attacker may not be present, the probability of a false positive event occurring at any time step is

4 Attacker Knowledge

As one might predict and as was demonstrated in prior work [14], attacker utility increases monotonically with the knowledge available to them. Knowing the parameters of a simplified game allows defenders to set a threshold of alerts in expectation – that is, if they know the values of , , and , they can compute the number of alerts that might be expected at time and shut down any system that has generated more than the threshold number of alerts. However, considering only the value in expectation can lead to poor outcomes for the defender, as even for small values of , any deviation from expectation can lead to suboptimal outcomes for the defender e.g. shutting down systems when no attacker is present. As such, the defender’s threshold for taking an action should instead be influenced by the level of deviation from their expectation.

As in Section 3.5, our per-node expectation of alerts, conditioned on the presence of an attacker, is . The defender establishes some prior and at each time step , observes some number of alerts across the nodes of the network, where . The total number of alerts, , expected at time is therefore , which can be treated as a random sample drawn from a Beta-binomial distribution. Given , the defender performs a Bayesian update on . The posterior distribution of is a beta distribution and so at time , the defender updates as follows:

| (2) |

Where for brevity, is the beta function, and are empirically derived parameters such that:

This yields the following for deriving and from observed alerts:

Setting , the defender should observe, on average, alerts at each time step. As the defender observes more than alerts at each time step, their confidence that an attacker is present grows. In lieu of simply setting an alert threshold, the defender sets some threshold for according to their risk tolerance, which may be calculated given the value of the network compared to costs or given exogenously. Once this empirical posterior estimate of is exceeded, remediation action should be taken.

In the full-knowledge scenario, however, the attacker has access to all of the defender’s information and can see the threshold for . Furthermore, they can directly compute how an action they will take will update and choose an action in accordance with that update, subject only to the condition that due to the presence of noise, some triggering event may occur even if they take no action. Thus, just as in prior work [14], the attacker should take the Execute action to end the game and collect the current reward when taking any other action may push over the threshold.

4.1 Zero Knowledge

In real-world settings, attackers are unaware of parameters like and , so they cannot ex ante optimize their actions and must instead seek to achieve their goal by balancing the risk of being caught with the need to achieve their goal. Thus, the “optimistic”, from the defender’s perspective, zero knowledge setting is the most consequential for generating real security impacts. In the zero knowledge setting, the defender is still armed with full knowledge and chooses some threshold for to take an action. However, the attacker does not know any of the parameters of the network and must balance exploration and exploitation given only the state, as each action they take may alert the defender of their presence. Given their limited information, they can use the Restricted Bayes/Hurwicz criterion [11] to choose their action.

Definition 1

The Restricted Bayes/Hurwicz criterion is a procedure for decision making under uncertainty parameterized by and defined by:

| (3) |

where is the decision-maker’s confidence in their distribution, is the coefficient of pessimism, is the prior distribution over the decision-maker’s actions, is the best case probability distribution over actions, and is the worst case probability distribution over actions

The attacker has some initial probability distribution over their actions indicating the probability they will take an action at a given time step given a state. The coefficient of pessimism corresponds to the attacker’s belief about the value of , which we write . Due to the limited signal available to the attacker, the confidence parameter should be monotonically decreasing over time as they observe likely defender actions. For notational simplicity, we set and write the criterion as follows:

At the start of the game, the attacker must estimate the probability of an alert occurring when they take an action. The attacker knows that false positive alerts occur and can infer that they need to establish some value that reflects Equation 1. In the absence of any information about the defender’s configuration, the attacker must sample some value . The use of the PERT distribution [9], a special case of the Beta distribution, is well-motivated from operations research. We allow the minimum and maximum value in the range and set the parameter of the distribution to the midpoint . The initial value of can be similarly sampled.

When the attacker observes a signal from a defender – that is, when the defender takes some action on an attacker-controlled node, the attacker updates their belief about , and reduces , since they are less confident in their initial distribution over their actions. This update process follows Algorithm 1. In order to conduct this update, the attacker estimated alert generation probability is used alongside their estimate of the defender’s belief about an attacker’s presence, since the attacker has no knowledge of the number of alerts and must instead construct a Bayesian estimate given these parameters.

In the full-knowledge case with thresholding, the best response dynamics are determined exactly by expectation and the parameters of the network [14]. However, this work considers a significantly expanded state space where both attackers and defenders have a richer action space. In both the full-knowledge and zero-knowledge case, the attacker’s choice of action depends on the state and any actions observed from the defender. The zero-knowledge case in particular is highly dependent on the establishment of good prior distributions. Prior distributions can be given exogenously or can be learned. In our case, we elect to learn via simulation. The results of this simulation are described in Section 5.1.

5 Empirical Evaluation

Game theoretic proofs about behavior in cybersecurity environments can provide powerful tools for thinking about how attacker-defender interaction occurs in practice. However, they do not always carry over to real-world environments. To gain empirical insight into the way these assumptions manifest in practice, we modify the YAWNING-TITAN (YT) framework [5] to include noise and allow two independent agents – one attacker and one defender – to be trained simultaneously. To do this, we create a new multiagent environment with a single state space where an attacking agent and a defending agent both operate but have their own separate observation and action spaces. This environment extends the functionality of YT by providing the ability to treat the attacking agent as a learner, rather than following a fixed algorithm for determining attacker actions. Moreover, since our zero-knowledge training case involves training both an attacker and a defender with different observation spaces, methods like Multi-Agent DDPG [21] are not suitable, as such methods would disclose hidden information about the environment to each agent. Despite issues of known overfitting to suboptimal policies due to non-stationarity [22], we opt to use two distinct instances of proximal policy optimization (PPO) [26] – one each for the attacker and defender – to train our agents, as this the algorithm generally performs well on a variety of tasks and has been used in prior, related work by others [5].

In this environment, we associate a cost to each attacker and defender action in accordance with those included in YT, and associate a positive reward for the agent that “wins” the episode along with a negative reward for the agent that “loses” the episode. To improve learning, we scale the negative reward for the defender such that they achieve a lesser negative reward for closer failures. Specifically, this scaling factor is the number of timesteps that the game has taken divided by the maximum number of timesteps required for a defender victory. We find that our defensive agent trained to expect the adversary has complete knowledge of the system will make worse decisions by overestimating the adversary when less information is available. For the sake of evaluating defensive agents against a programmatic, non-learning attacker, we adapt YT’s NSARed agent [25], based on a description of cyber attack automation, to work within our environment. The implementation of the NSARed agent is purely algorithmic – there is no machine learning component – and defensive agents are not exposed to the agent at training time, making it a stable baseline for comparison. The full code for our training and evaluation implementation is available on GitHub333https://github.com/erickgalinkin/pop_rocks/.

5.1 Establishment of Priors

The attacker must consider three distributions when playing the game: . For the “best case” distribution , we train an attacking agent against a defender whose only action is to do nothing. For the “worst case” distribution $̱P$, we train an attacking agent against a defender who has access to both attacker and defender observation spaces and thus has full-knowledge of the attacker’s moves and the network. The remainder of this subsection describes the training of the model is drawn from.

Our setting assumes an adversary who uses ransomware, where the attacking player “wins” when they control more than 80% of the network. For each training episode, we instantiate a random entrypoint for the attacker on a 50-node network whose edges are randomly generated to ensure the network has 60% connectivity, and that there are no unconnected nodes. We leverage proximal policy optimization (PPO) [26] for our learning agents in two settings:

-

1.

Optimistic (zero-knowledge): The attacking agent can see only the nodes they control and adjacent nodes. They cannot see the vulnerability status of any nodes.

-

2.

Pessimistic (full-knowledge): the attacking agent has access to the same information and observation space as the defending agent.

In accordance with our modeling assumptions, the attacking and defending player simultaneously decide their moves for timestep from their action space. Each agent is trained in either the optimistic or pessimistic environment for 3000 episodes and evaluated for 500 episodes across randomly generated environments in both the optimistic and pessimistic setting. Based on empirical results from experiments in the environment, the actor learning rate is set to 0.0002 and the critic learning rate is set to 0.0005. Higher learning rates were tried and led to fast convergence to suboptimal policies, as PPO assumes full observability to achieve globally optimal policies and our environment is only partially observable. Values for all hyperparameters and action costs for both players were fixed across all settings and are included in Tables 2 and 2.

| Parameter | Value |

|---|---|

| Actor Learning Rate | 0.0002 |

| Critic Learning Rate | 0.0005 |

| Training Epochs | 3500 |

| 0.99 | |

| Update Epochs | 5 |

| Batch Size | 64 |

| Win Reward | 5000 |

| Lose Reward | -100 |

| Attacker | Cost | Defender | Cost |

|---|---|---|---|

| Basic | 2 | Reduce Vuln | 1.5 |

| Zero Day | 6 | Make Safe | 4 |

| Move | 0.5 | Restore | 6 |

| Do Nothing | 0 | Scan | 0.5 |

| Execute | 0 | Do Nothing | 0 |

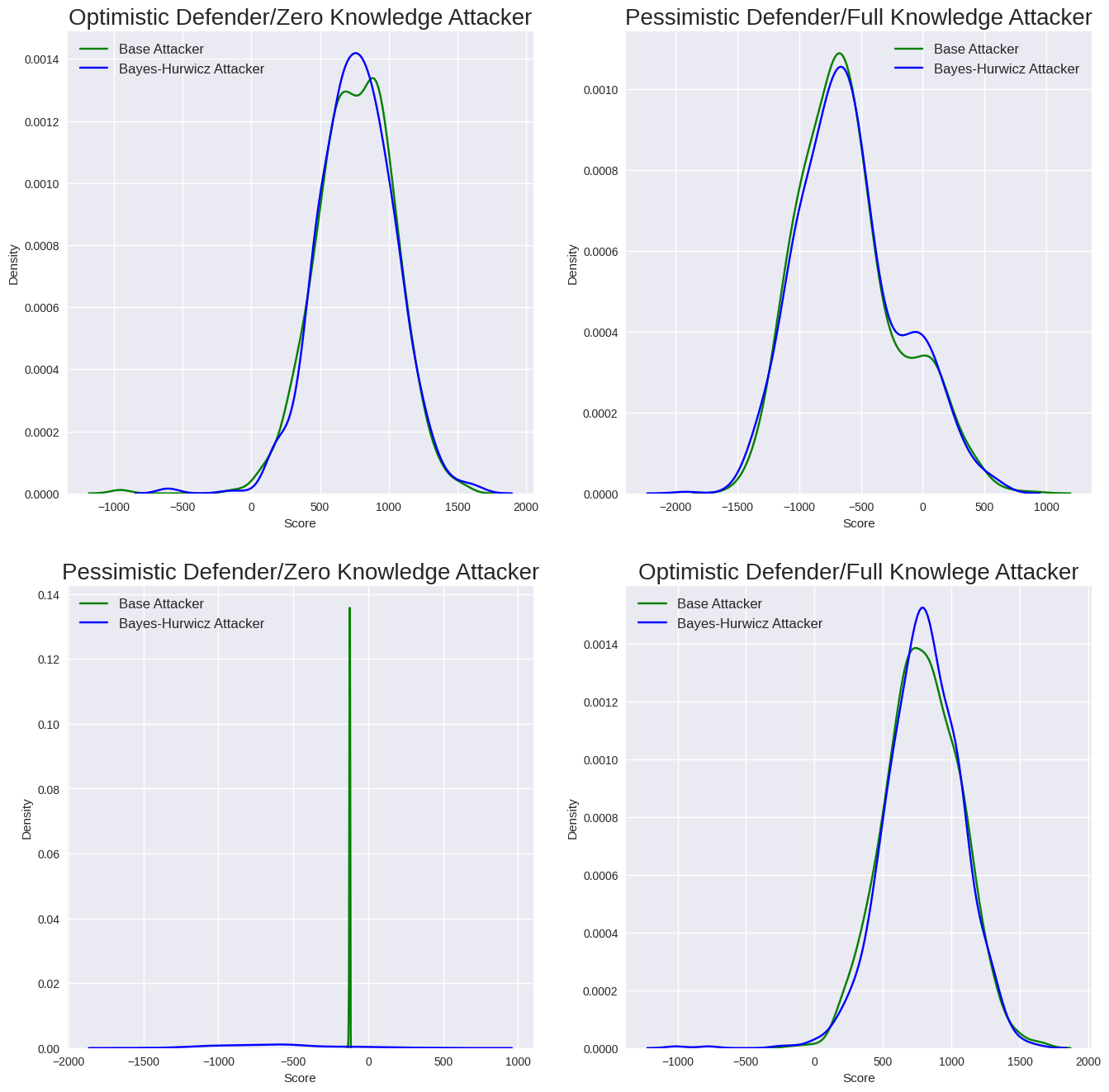

Evaluating reinforcement learning findings is notoriously difficult. Therefore, in line with Agarwal et al. [1], we look to more robust measurements that capture the uncertainty in results. Specifically, in addition to standard evaluation metrics, we consider also score distributions and the interquartile mean across evaluation runs. These metrics help capture the stochasticity in the task and normalize our results. Smoothed training curves in the optimistic setting are shown in Figure 2; the pessimistic setting is shown in Figure 2. The average rewards and interquartile mean for evaluation of the defending agents trained in the optimistic and pessimistic settings achieved each of the two evaluation settings are shown in Figure 3 and Figure 4. Score distributions are captured in Figure 5.

We observe in Figures 2 and 2 that the attacker generally starts out with a poor reward, but learns how to attack the target within 500 epochs. In the optimistic setting, the defender initially starts out with a very high level of reward but once the attacker begins winning more often, they must adapt their strategy, with rewards for both agents converging around epoch 3000. In the pessimistic setting, the defender similarly starts with a reasonably high reward, but the attacker quickly learns how to overcome the defender’s strategy. In this setting, the defender does not rebound and instead settles into a local optimum – minimizing the magnitude of loss rather than continuing to explore for a strategy that eliminates the attacker. Longer runs – up to 10000 training epochs – were attempted, but the pessimistic defender’s policy in those cases achieved even worse evaluation results than the defender trained for 3000 epochs. Note that the reward scale for attackers and defenders is not the same and defenders cannot achieve the extreme rewards that attackers do.

What we find from our evaluation is that the defending agent trained in the pessimistic setting performs worse on average than the defending agent trained in the optimistic setting. Across both evaluation settings, the optimistic defender is more robust in general and performs significantly better on average across all settings, as we can observe from Figures 3 and 4. Note that a negative score implies that the attacker is winning more often than the defender, while a positive score implies that the defender is winning more often. Each defender performs relatively better in the setting they were trained in and experiences less variance, as we can see the relative stability between the mean and interquartile mean for in-domain settings. Interestingly, both optimistic and pessimistic defenders perform well against the NSARed attacker, suggesting that training against learning agents offers substantial benefit over training against more “static” algorithmic attackers.

The score distributions in Figure 5 demonstrate the impact of the pessimistic defender’s convergence. While the optimistic defender has a fairly broad, relatively normal distribution in both settings, the pessimistic defender’s probability density is highly concentrated just below zero in domain and widely distributed across highly negative and highly positive out of distribution. This underscores the robustness of the optimistic defender and indicates that in training, the pessimistic defender converges to a policy that expects to achieve a negative reward and seeks to minimize that negative reward, rather than a policy that expects a positive reward and seeks to maximize it. Since the defender’s win condition depends on eliminating the attacker or surviving 500 episodes, this “loss minimization” policy leads to suboptimal performance against learning attackers.

To explain the difference in outcomes and what is learned in training, we can examine the differences in actions taken by the optimistic and pessimistic defenders. We find that the pessimistic defender agent uses the more expensive “restore node” action at a higher frequency than the optimistic agent, while the optimistic agent spends more turns on reducing the vulnerability of nodes and making nodes safe. This is likely an artifact of the attacker in the pessimistic setting having access to significantly more information, requiring a more aggressive response to forestall an attacker win. The distribution of action usage for both agents across all evaluations is shown in Table 3.

| Action | Pessimistic | Optimistic | Difference |

|---|---|---|---|

| Do Nothing | 0% | 1.4% | 1.4% |

| Scan | 3.22% | 3.19% | 0.03% |

| Reduce Vulnerability | 16.1% | 20.68% | 4.58% |

| Make Node Safe | 38.83% | 41.33% | 2.5% |

| Restore Node | 41.85% | 33.4% | 8.45% |

In the interest of ensuring our action costs do not have an undue influence on our results, we perform our same reinforcement learning evaluation for our pessimistic setting. We vary two parameters – “connectivity” and “security” – over the range , with a floor of 0.000001 to avoid division by zero. To establish the robustness of the model, we multiply the cost of each action by the ratio of connectivity and security such that the importance of security is nearly zero, the cost of actions becomes very high and when the value of connectivity is nearly zero, the cost of actions approaches zero, given fixed security value. We find that there is a nearly linear pattern relating the cost of actions to rewards, but the learned policy remains stable, provided the relative costs of actions is fixed and is not degenerate even near extremal values.

5.2 Use of Bayes-Hurwicz Decision Criterion for Attackers

As mentioned in Section 4.1, attackers in the real world do not have the luxury of training their priors to convergence against a target and must combine their prior knowledge with what is observed during an attack. Since the attacker is making decisions under uncertainty, some criterion must be used to allow them to do that subject to their own parameters. Using the pretrained, frozen models from Section 5.1, we consider how the application of the Restricted Bayes/Hurwicz criterion for the attacker as defined in Equation 3 impacts the outcomes of the attacking player. Aside from the use of the Restricted Bayes/Hurwicz criterion for the attackers, all of the defender and environmental evaluation settings remain the same.

The attacking agent draws independent prior and values from PERT distributions in accordance with Algorithm 1 at the start of each round and updates their at each timestep if defender activity is observed – that is, if the defender takes an action that removes access to an attacker controlled node. For each evaluation scenario, the relevant trained attacker model – zero knowledge or full knowledge – is used to establish , , and $̱P$ for the observed state of the game. Specifically, each policy model accepts an attacker-observed state and outputs a distribution over the attacker’s action space such that , , and . The attacker leverages these model outputs and the values of , and with Equation 3 to determine their next best action.

Interquartile mean evaluation rewards for the attacker, shown in Figure 6, illustrate the impact of restricted Bayes/Hurwicz. Against the optimistic defender, the use of restricted Bayes/Hurwicz yields marginally worse performance for the zero-knowledge (in-domain) attacker, but marginally better performance for the full-knowledge (out-of-domain) attacker. Against the pessimistic defender, the pure zero-knowledge attacker performs incredibly well, and using Bayes/Hurwicz somewhat negatively impacts the attacker’s interquartile mean reward. While the full-knowledge (in-domain) attacker performs worse overall against the pessimistic defender, the pure strategy is better than using Bayes-Hurwicz, as we would expect.

Results for defenders against both base attackers and those using restricted Bayes/Hurwicz are shown in Figure 7, and reflect the information from Figure 4. The optimistic defender experiences a marginal improvement against the restricted Bayes/Hurwicz attackers in both the zero knowledge and full knowledge case. Meanwhile, the pessimistic defender performs slightly better against the restricted Bayes/Hurwicz attacker in the full knowledge (in-domain) case, but significantly worse against the restricted Bayes/Hurwicz attacker in the zero knowledge (out-of-domain) case.

6 Conclusion and Future Work

The game design assumptions made about an attacker’s capabilities and knowledge grow increasingly important as we seek to develop improved mitigation capabilities for cyber defenders. In this work, we have demonstrated a phenomenon we call “the price of pessimism” – the cost incurred by a defender by assuming a more pessimistic view of what an attacker is likely to know. Practically, this suggests that the development of defensive agents necessitates careful consideration of what real-world attackers are likely to know, and model those assumptions correctly. Specifically, assumptions made about attacker knowledge considerably influence what defenders learn and the efficacy of their response. In future work, we aim to define more precisely how environmental factors should be determined and the impact of per-node and subnet variability of true and false positive alert rates on both attacker and defender performance.

Our results demonstrate that a defender’s assumptions about a priori attacker knowledge of an environment have a measurable impact on how that defender responds to potential intrusions. An assumption that overestimates an attacker’s knowledge and the concomitant learned response dynamics from this assumption leads to overreaction to false positives on the part of defending agents, incurring unnecessary costs and leading to poor convergence in reinforcement learning settings. We conclude that future work in this space seeking to have impact on systems in the real world should account for the likely knowledge and learning dynamics of attackers in addition to those of defenders and should aim to more accurately capture the learning behavior of attackers.

In future work, we aim to apply these findings to automated threat response by incorporating human factors, threat modeling, and using more complex simulation frameworks. Although our agents are learning agents, attacks both today and in the foreseeable future are conducted not by pure utility maximizing agents, but by human beings. As a result, we look to incorporate prospect theory [34] in future work similar to how such models have been used to align large language models [12]. We also aim to explore how incorporating threat intelligence information about sequences of attacker actions and how they lead to different outcomes can constrain the defender’s action space, allowing for threat-informed defense. Finally, given the restricted node states of the YT reinforcement learning environment, research incorporating threat intelligence may need to leverage a simulation framework more similar to real-world environments, like CybORG [10].

References

- [1] Agarwal, R., Schwarzer, M., Castro, P.S., Courville, A.C., Bellemare, M.: Deep reinforcement learning at the edge of the statistical precipice. Advances in neural information processing systems 34, 29304–29320 (2021)

- [2] Aggarwal, P., Thakoor, O., Jabbari, S., Cranford, E.A., Lebiere, C., Tambe, M., Gonzalez, C.: Designing effective masking strategies for cyberdefense through human experimentation and cognitive models. Computers & Security 117, 102671 (2022)

- [3] Albrecht, S.V., Ramamoorthy, S.: A game-theoretic model and best-response learning method for ad hoc coordination in multiagent systems. In: Proceedings of the 2013 international conference on Autonomous agents and multi-agent systems. pp. 1155–1156 (2013)

- [4] Anderson, R.: Security engineering: a guide to building dependable distributed systems. John Wiley & Sons (2020)

- [5] Andrew, A., Spillard, S., Collyer, J., Dhir, N.: Developing optimal causal cyber-defence agents via cyber security simulation. In: Workshop on Machine Learning for Cybersecurity (ML4Cyber) (07 2022)

- [6] Brooks, C.: Cybersecurity trends & statistics for 2023; what you need to know. https://www.forbes.com/sites/chuckbrooks/2023/03/05/cybersecurity-trends–statistics-for-2023-more-treachery-and-risk-ahead-as-attack-surface-and-hacker-capabilities-grow/ (2023)

- [7] Campbell, R.G.: Autonomous Network Defense Using Multi-Agent Reinforcement Learning and Self-Play. Ph.D. thesis, San Jose State University (2022)

- [8] Chatterjee, S., Tipireddy, R., Oster, M., Halappanavar, M.: Propagating Mixed Uncertainties in Cyber Attacker Payoffs : Exploration of Two-Phase Monte Carlo Sampling and Probability Bounds Analysis. In: IEEE International Symposium on Technologies for Homeland Security. IEEE (2016)

- [9] Clark, C.E.: The pert model for the distribution of an activity time. Operations Research 10(3), 405–406 (1962)

- [10] Cyber operations research gym. https://github.com/cage-challenge/CybORG (2022), created by Maxwell Standen, David Bowman, Son Hoang, Toby Richer, Martin Lucas, Richard Van Tassel, Phillip Vu, Mitchell Kiely, KC C., Natalie Konschnik, Joshua Collyer

- [11] Ellsberg, D.: Risk, ambiguity and decision. Routledge (2015)

- [12] Ethayarajh, K., Xu, W., Muennighoff, N., Jurafsky, D., Kiela, D.: Kto: Model alignment as prospect theoretic optimization. arXiv preprint arXiv:2402.01306 (2024)

- [13] Foley, M., Hicks, C., Highnam, K., Mavroudis, V.: Autonomous network defence using reinforcement learning. In: Proceedings of the 2022 ACM on Asia Conference on Computer and Communications Security. pp. 1252–1254 (2022)

- [14] Galinkin, E., Pountourakis, E., Carter, J., Mancoridis, S.: Simulation of attacker defender interaction in a noisy security game. In: AAAI-23 Workshop on Artificial Intelligence for Cyber Security (2023)

- [15] Galinkin, E., Singh, A., Vamshi, A., Hwong, J., Estep, C., Canzanese, R.: The Future of Cyber Attacks and Defense is in the Cloud. In: Proceedings - IEEE MALCON (2019), https://www.researchgate.net/publication/336592029

- [16] Illés, T., Terlaky, T.: Pivot versus interior point methods: Pros and cons. European Journal of Operational Research 140(2), 170–190 (2002)

- [17] Khouzani, M.H., Sarkar, S., Altman, E.: Saddle-point strategies in malware attack. IEEE Journal on Selected Areas in Communications 30(1), 31–43 (2012). https://doi.org/10.1109/JSAC.2012.120104

- [18] Kohgadai, A.: Alert fatigue: 31.9% of it security professionals ignore alerts (2017), https://virtualizationreview.com/articles/2017/02/17/the-problem-of-security-alert-fatigue.aspx

- [19] Kumar, S., et al.: An emerging threat fileless malware: a survey and research challenges. Cybersecurity 3(1), 1–12 (2020)

- [20] Liang, X., Xiao, Y.: Game theory for network security. IEEE Communications Surveys and Tutorials 15(1), 472–486 (2013). https://doi.org/10.1109/SURV.2012.062612.00056

- [21] Lowe, R., Wu, Y.I., Tamar, A., Harb, J., Pieter Abbeel, O., Mordatch, I.: Multi-agent actor-critic for mixed cooperative-competitive environments. Advances in neural information processing systems 30 (2017)

- [22] Moalla, S., Miele, A., Pascanu, R., Gulcehre, C.: No representation, no trust: Connecting representation, collapse, and trust issues in ppo. arXiv preprint arXiv:2405.00662 (2024)

- [23] Nguyen, T.H., Yadav, A.: The risk of attacker behavioral learning: Can attacker fool defender under uncertainty? In: International Conference on Decision and Game Theory for Security. pp. 3–22. Springer (2022)

- [24] Pawlick, J., Zhu, Q.: Game Theory for Cyber Deception. Springer (2021)

- [25] Ridley, A.: Machine learning for autonomous cyber defense. The Next Wave: The National Security Agency’s Review of Emerging Technologies (2018)

- [26] Schulman, J., Wolski, F., Dhariwal, P., Radford, A., Klimov, O.: Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347 (2017)

- [27] Security, O.: 2022 cloud security alert fatigue report (2022), https://orca.security/lp/sp/2022-cloud-security-alert-fatigue-report-thank-you/

- [28] Shostack, A.: Threat Modeling. Wiley, first edn. (2014)

- [29] Simard, F., Desharnais, J., Laviolette, F.: General cops and robbers games with randomness. Theoretical Computer Science 887, 30–50 (2021)

- [30] Sokri, A.: Game theory and cyber defense. Games in Management Science: Essays in Honor of Georges Zaccour pp. 335–352 (2020)

- [31] Thakoor, O., Jabbari, S., Aggarwal, P., Gonzalez, C., Tambe, M., Vayanos, P.: Exploiting bounded rationality in risk-based cyber camouflage games. In: International Conference on Decision and Game Theory for Security. pp. 103–124. Springer (2020)

- [32] Tomášek, P., Bošanskỳ, B., Nguyen, T.H.: Using one-sided partially observable stochastic games for solving zero-sum security games with sequential attacks. In: International Conference on Decision and Game Theory for Security. pp. 385–404. Springer (2020)

- [33] TTCP: cage-challenge. https://github.com/cage-challenge (2021)

- [34] Tversky, A., Kahneman, D.: Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and uncertainty 5(4), 297–323 (1992)