[1]\fnmRandy \surDavila

[1]\orgdivDepartment of Computational Applied Mathematics & Operations Research, \orgnameRice University , \orgaddress\street6100 Main Street, \cityHouston, \postcode77005, \stateTX, \countryUSA

The Optimist: Towards Fully Automated

Graph Theory Research

Abstract

This paper introduces the Optimist, an autonomous system developed to advance automated conjecture generation in graph theory. Leveraging mixed-integer programming (MIP) and heuristic methods, the Optimist generates conjectures that both rediscover established theorems and propose novel inequalities. Through a combination of memory-based computation and agent-like adaptability, the Optimist iteratively refines its conjectures by integrating new data, enabling a feedback process with minimal human (or machine) intervention. Initial experiments reveal the Optimist’s potential to uncover foundational results in graph theory, as well as to produce conjectures of interest for future exploration. This work also outlines the Optimist’s evolving integration with a counterpart agent, the Pessimist (a human or machine agent), to establish a dueling system that will drive fully automated graph theory research.

keywords:

Automated conjecturing, automated reasoning, graph theory, TxGraffiti1 Introduction

This paper introduces the Optimist, an autonomous agent for generating conjectures in graph theory that iteratively adapts its output based on feedback. The Optimist builds upon the principles of TxGraffiti [1] with enhancements in mixed-integer programming, heuristic search, and a memory-based structure that allow it to efficiently produce and refine conjectures. Through its agent-based framework, the Optimist can systematically generate inequalities involving graph invariants and filter results based on empirical strength, novelty, and known mathematical knowledge. The system, implemented in Python, is open-source and accompanied by Jupyter notebooks, enabling accessibility and reproducibility available at this papers companion GitHub repository111https://github.com/RandyRDavila/The-Optimist/tree/main.

A key feature of the Optimist is its dynamic memory structure, which stores computed graph invariants and theorems, facilitating rapid retrieval and incremental updates as new graphs are introduced. This adaptive structure allows the system to refine its conjectures with minimal human intervention, moving toward a form of fully automated reasoning in graph theory. Additionally, the Optimist is designed to interact with a complementary (human or machine) agent, the Pessimist, which will challenge the Optimist’s conjectures by identifying counterexamples. Together, these agents form a dueling framework, termed GraphMind, enabling continuous conjecture generation, verification, and refinement in a closed feedback loop.

The remainder of this paper is organized as follows: Section 2 reviews prior work in automated conjecture generation, situating the Optimist within this field. Section 3 details the system’s architecture, heuristics, and optimization methods. Section 4 presents the conjectures generated by the Optimist, highlighting both rediscovered known results and new inequalities. Finally, Section 5 discusses the future work and the development of the Optimist in tandem with Pessimist and the potential impact of GraphMind in advancing automated reasoning in graph theory.

2 Related Work

The concept of intelligent machines contributing to mathematical research dates back to Turing’s 1948 proposal, which envisioned machines capable of high-level reasoning with minimal external data [2]. This vision set the stage for early computer-assisted mathematics. Among the first systems to embody this idea was Newell and Simon’s Logic Theorist, developed in the 1950s, which demonstrated the potential for machines to prove theorems in first-order logic. Newell and Simon anticipated that computers would one day play a central role in mathematical discovery [3]. While early systems like the Logic Theorist were focused on theorem proving, concurrent efforts in computer-assisted conjecturing emerged with Wang’s Program II [4], designed to generate statements interpretable as conjectures in logic. A significant challenge in Wang’s work, however, was filtering meaningful statements from the overwhelming volume of output. As Wang noted:

“The number of theorems printed out after running the machine for a few hours is so formidable that the writer has not even attempted to analyze the mass of data obtained” [4].

Refining large lists of generated statements into meaningful conjectures became a focal challenge in automated conjecture systems, prompting later programs to develop heuristics for identifying statements of mathematical significance.

2.1 Fajtlowicz’s Graffiti and the Dalmatian Heuristic

A major advancement in filtering meaningful conjectures emerged with Fajtlowicz’s Graffiti program in the 1980s. Graffiti generated inequalities between real-valued functions (invariants) on mathematical objects, primarily within graph theory. The program operated on a database of graphs, aiming to identify inequalities of the form:

where is a target invariant and is an expression involving other invariants, often in the form of sums, products, or more complex combinations constructed through an arithmetic expression tree. For example, Graffiti might conjecture an inequality such as , where represents the independence number of a graph, and denotes the graph’s order (number of vertices).

The challenge of distinguishing nontrivial conjectures from trivial ones persisted. To address this, Graffiti introduced the innovative Dalmatian heuristic, a technique for refining conjectures based on their significance and validity across known examples. The Dalmatian heuristic operates with two primary criteria:

The Dalmatian Heuristic

-

•

Truth Test: The conjectured inequality must hold for all graphs in the database.

-

•

Significance Test: The conjecture must provide a stronger bound for at least one graph compared to previously generated conjectures.

Fajtlowicz described the heuristic’s process as follows:

“The program keeps track of conjectures made in the past, and when it encounters a new candidate for a conjecture, it first verifies whether an example in the database shows that the conjecture does not follow from earlier conjectures. If no such example exists, the conjecture is rejected as non-informative. If one exists, the program proceeds with testing the conjecture’s correctness, and then checks whether the conjecture should be rejected by other heuristics. If accepted, the list of conjectures is revised, and less informative conjectures are removed from the list and stored separately in case the new conjecture is refuted later.” [5]

The Dalmatian heuristic not only reduced the number of conjectures but also enhanced their relevance by prioritizing conjectures that advanced current knowledge. When applied to identifying bounds on invariants, particularly those of active research interest, Graffiti produced conjectures where existing theory offered insufficient predictions for invariant values. Such conjectures, if extending current understanding, were considered significant. Indeed, Graffiti generated numerous conjectures that contributed substantially to both graph theory [6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31] and mathematical chemistry [32, 33, 34, 35, 36, 37, 38, 39]. For further details on Graffiti and the Dalmatian heuristic, see [1, 40].

2.2 Extensions of the Dalmatian Heuristic: Graffiti.pc and Conjecturing

Building upon the Dalmatian heuristic, DeLaViña’s Graffiti.pc and Larson’s Conjecturing programs extended automated conjecturing in graph theory and beyond. Developed during DeLaViña’s PhD studies under Fajtlowicz, Graffiti.pc retained the original focus on graph theory while significantly expanding computational capabilities. Unlike its predecessor, which handled datasets with a few hundred graphs, Graffiti.pc was implemented in C++ to work with much larger datasets, often comprising millions of graphs, and sometimes required computational clusters for efficient conjecture generation and testing. With applications in both education and research, Graffiti.pc notably contributed to domination theory, leading to important results in this field [41, 42, 43]. Despite these advances, increasing the dataset size did not always yield higher quality conjectures, as both Graffiti and Graffiti.pc were limited by the absence of certain small, special graphs in their datasets, which could serve as counterexamples to conjectures.

Recognizing the importance of selecting relevant datasets, Larson’s Conjecturing program introduced a more flexible framework capable of generating conjectures across various mathematical disciplines, while still implementing the Dalamatian heuristic. Although the focus remained on inequalities involving graph invariants, Conjecturing allowed for user-defined objects, invariants, and relations, expanding beyond graph theory into fields such as number theory and algebra. This adaptability enabled researchers to tailor the conjecture generation process for specific problems and customize parameters to guide the program towards nontrivial results.

2.3 TxGraffiti

TxGraffiti [1] is an automated conjecturing program maintained by the author that focuses on generating meaningful conjectures in graph theory by leveraging principles similar to Fajtlowicz’s Graffiti, particularly the emphasis on the mathematical strength of statements. However, TxGraffiti applies linear optimization models to a precomputed tabular dataset of graph invariants, generating both upper and lower bounds for target invariants by solving optimization problems that minimize or maximize the discrepancy between a target invariant and an expression involving other invariants. Specifically, to establish an upper bound, TxGraffiti minimizes a linear objective subject to constraints that enforce the inequality across all graphs meeting certain boolean conditions. This approach results in inequalities of the form , where is the target invariant, represents other graph properties, and and are optimized scalars. Similarly, lower bounds are generated by maximizing this objective under reversed constraints, offering a dual approach to bounding invariants within the dataset.

To prioritize conjectures, TxGraffiti employs the touch number heuristic, which measures how often an inequality holds as an equality across different graphs, thus reflecting the conjecture’s strength and relevance. Conjectures with high touch numbers are prioritized, as they suggest a tighter relationship between invariants. Additionally, TxGraffiti uses the static-Dalmatian heuristic, a refinement of the original Dalmatian heuristic, to eliminate redundant conjectures by filtering out those that can be derived from others via transitivity. This heuristic operates on a fixed set of conjectures, iteratively discarding any conjecture that does not introduce new information relative to previously accepted ones. Together, the optimization methods and heuristics used by TxGraffiti have led to several published results in graph theory (see [44, 45, 46, 47, 48, 49]).

For other notable automated conjecturing systems that have contributed to various mathematical domains, see Lenat’s AM [50, 51, 52], Epstein’s Graph Theorist [53], Colton’s HR system [54, 55, 56], Hansen and Caporossi’s AutoGraphiX (AGX) [57, 58], and Mélot’s GraPHedron and its successor PHOEG [59, 60].

3 The Optimist: An Automated Conjecturing Agent

The Optimist is an automated conjecturing agent dedicated to conjecture generation in graph theory, designed to construct, evaluate, and refine conjectures systematically. Drawing inspiration from the principles underlying TxGraffiti, Optimist implements a range of computational techniques aimed at producing conjectures with mathematical rigor and relevance. The Optimist incorporates foundational components, including graph data, invariants, and a repository of known theorems, which serve as the basis for its conjecture generation.

Optimist distinguishes itself from static conjecturing tools through its adaptability and agent-like autonomy. By employing iterative refinement and adaptive updates, Optimist dynamically integrates new information, such as additional theorems or counterexamples, which allows it to iteratively enhance the quality and accuracy of its conjectures. This capability to evolve its knowledge base and refine its conjectures in response to new data characterizes Optimist as a continuously learning system within automated reasoning, with the potential to contribute robustly to the development of theoretical insights in graph theory.

3.1 Initial Knowledge and Setup

At the core of the Optimist agent’s framework is an initial set of structured inputs that constitute its foundational knowledge, forming the basis for conjecture formulation. This setup includes three primary components: a collection of graphs, a dictionary of computable graph invariant functions, and a repository of known theorems. Each graph in the collection provides a distinct context in which relationships between invariants can be examined, thereby supplying diverse examples to inform potential conjectures.

The dictionary of invariants encompasses functions that compute essential properties of each graph, such as independence number, vertex count, and bipartiteness, which serve as key components in constructing conjectures. These invariants provide quantifiable data points that the Optimist utilizes in identifying relationships and generating bounds.

Furthermore, Optimist references a repository of known theorems to prevent the generation of redundant conjectures that merely replicate established results. This enables the system to focus on conjectures that extend beyond current knowledge, prioritizing relationships that are novel or have yet to be formally proven.

To facilitate conjecture generation and analysis, Optimist employs several internal data structures. The framework organizes conjectures into separate lists of upper and lower bounds for each target invariant, consolidating these conjectures into a comprehensive collection. This approach allows for efficient retrieval and streamlined analysis, supporting a systematic examination of conjectures across various graph invariants.

3.2 Constructing a Knowledge Base from Graph Invariants

To systematically investigate relationships between graph invariants, the Optimist framework constructs a tabular dataset, termed its knowledge base, or knowledge table (implemented as a Pandas DataFrame). In this structure, each row corresponds to a unique graph instance, while each column represents a specific invariant or boolean property. This organization enables efficient data access and facilitates conjecture generation by allowing the framework to process invariant data in a manner akin to feature-based analysis in machine learning models. Analogous to how learning models identify patterns across features, Optimist identifies potential conjectures by exploring relationships among these stored invariants.

A primary advantage of this knowledge base is that it stores all invariant values upon initial computation, obviating the need for recomputation during conjecture generation and validation. Given that calculating graph invariants can be computationally intensive, this storage approach significantly reduces the overhead of conjecture generation, streamlining the exploration process. By storing these invariant values centrally, Optimist can efficiently retrieve relationships among invariants in real time, thereby facilitating more dynamic conjecture formulation.

This knowledge base thus functions as a structured “memory” for the Optimist agent, enabling it to effectively leverage previously computed data in the same way a reasoning system might recall facts to inform subsequent decision-making. Through this design, Optimist not only enhances computational efficiency but also supports a more comprehensive exploration of graph-theoretic relationships.

3.3 Mixed-Integer Programming for Conjecture Generation

The Optimist framework employs a mixed-integer programming (MIP) approach to generate conjectures establishing upper and lower bounds on a target invariant. Unlike TxGraffiti, which typically relates a single invariant to the target invariant, Optimist formulates conjectures by considering linear combinations of multiple invariants under various boolean conditions. The Optimist agent simultaneously seeks both upper and lower bounds, and in cases where bounds converge, it identifies exact equalities.

To produce meaningful bounds on a target invariant, Optimist solves two MIP formulations that optimize for tight upper and lower bounds. The framework emphasizes maximizing instances where each bound holds as an equality, ensuring conjectures that are not only accurate but also sharp. This focus on equality instances aligns with the goal of producing conjectures that provide informative bounds on extreme values of the target invariant.

Let denote the target invariant, and let represent a linear combination of other invariants. The bounds we seek can be expressed as:

where is a weighted sum of invariants with weights that maximize equality conditions across a dataset derived from the Optimist agent’s knowledge base. Let represent the target invariant for each graph , and let represent the selected invariants of graph . The MIP seeks weights and an intercept to optimize the following constraints:

For the upper bound, we solve:

Binary variables are introduced to indicate equality, expressed by the constraint:

where is a large constant that relaxes the equality requirement when .

Similarly, for the lower bound, we solve:

Binary variables are introduced, with constraints defined as:

The objective function maximizes the total number of equality instances:

This formulation identifies optimal weights and intercepts for the bounds, yielding:

When the solutions for upper and lower bounds converge, we obtain an equality:

Otherwise, the inequalities define a bounded range for , delineating the behavior of the target invariant over the graph dataset.

The MIP is implemented in Python using the PuLP library, providing a flexible and efficient platform for solving these models; see Appendix A or our GitHub repository222https://github.com/RandyRDavila/The-Optimist/tree/main. Through this optimization approach, Optimist generates conjectures that not only align with observed data but also enhance interpretability by prioritizing equality instances, thus identifying sharp bounds for the target invariant.

3.4 Systematic Generation of Conjectures

The Optimist framework explores diverse combinations of invariants systematically, aiming to generate a comprehensive set of conjectures for each target invariant. For each target invariant, Optimist constructs bounds by iterating through pairs of explanatory invariants, each conditioned by a single boolean property. This process is managed through the function make_all_linear_conjectures, which organizes these combinations to generate upper and lower bounds; see Listing 1.

The function make_all_linear_conjectures performs the following operations:

-

•

For each pair of invariants, it applies all specified boolean properties, ensuring that the conjecture generation covers a broad space of possible relationships.

-

•

Within each pair, the target invariant is constrained to avoid redundancies, ensuring that neither explanatory invariant directly corresponds to the target.

-

•

The function calls the MIP-based make_linear_conjectures function to compute bounds on the target invariant, yielding a candidate set of upper and lower conjectures.

The output of this procedure is a set of candidate conjectures for each target invariant, reflecting a systematic approach to exploring multi-invariant relationships under varied boolean conditions.

This function thus enables Optimist to generate bounds across a systematic range of invariant combinations, producing a foundational set of conjectures that can then be refined and prioritized based on empirical support and mathematical relevance.

3.5 The Hazel Heuristic

After invoking the make_all_linear_conjectures function on a target invariant, the Optimist agent typically produces extensive lists of potential conjectures—often in the hundreds—depending on the numerical and boolean properties considered. To identify and prioritize conjectures with strong empirical backing, Optimist applies the Hazel Heuristic, a filtering process centered on each conjecture’s touch number.

The touch number of a conjectured inequality is defined as the number of graphs in the Optimist knowledge base for which the inequality holds as an equality. A high touch number suggests that the conjectured bound is not only generally valid but also sharp for a significant subset of graphs, indicating a potentially fundamental relationship between the target invariant and the explanatory invariants. High-touch conjectures are, therefore, more likely to capture structural properties of graph invariants and yield insights beyond loose or incidental bounds.

The Hazel Heuristic applies three successive steps:

-

1.

Deduplication: Removes duplicate conjectures to ensure the uniqueness of bounds.

-

2.

Filtering: Discards conjectures with touch numbers below a specified threshold, removing bounds that lack consistent sharpness across the dataset.

-

3.

Sorting: Orders conjectures in descending order of touch number, bringing conjectures with the highest empirical support to the forefront.

By prioritizing conjectures with the highest touch numbers, the Hazel Heuristic ensures that the most empirically significant conjectures are advanced for further analysis. This ranking approach confers several advantages:

-

•

Relevance: Conjectures with high touch numbers are more likely to represent tight bounds, making them strong candidates for formal validation or proof.

-

•

Robustness: A high touch number indicates that a conjecture’s bound is consistently representative across diverse graph structures, enhancing its robustness and potential generalizability.

-

•

Informational Value: High-touch conjectures highlight areas where graph invariants exhibit strong interactions, offering insights into fundamental invariant relationships.

Through the Hazel Heuristic, Optimist focuses on conjectures with maximal empirical evidence, refining the conjecture set to emphasize inequalities with the greatest potential for meaningful mathematical contribution.

3.6 The Morgan Heuristic

The Morgan Heuristic identifies and removes redundant conjectures, prioritizing the most general conjectures with broad applicability. Specifically, a conjecture is flagged as redundant if it shares the same conclusion as another but is based on a more specific (less general) hypothesis. By focusing on generality, this heuristic ensures that the final set of conjectures includes only the most powerful and widely applicable statements.

For example, consider the two conjectures in Listing 3. Every tree is a bipartite graph, but not every bipartite graph is a tree. Therefore, Conjecture 4, which applies to all connected bipartite graphs, is more general than Conjecture 2, which applies only to trees. As such, Conjecture 2 is discarded in favor of Conjecture 4.

In practice, the generality of a conjecture’s hypothesis is determined by counting the number of graphs in the Optimist knowledge base that satisfy the hypothesis. If one hypothesis applies to a larger subset of graphs than another, it is considered more general. This count-based approach, though heuristic, provides a practical method for determining generality even when relationships between graph classes are not fully defined; see the implementation in Listing 4.

The key steps in the Morgan Heuristic are as follows:

-

1.

Identify Redundancy: Conjectures are flagged as redundant if they share identical conclusions but differ in hypothesis generality.

-

2.

Assess Generality: For each pair of conjectures with the same conclusion, the conjecture with a hypothesis that applies to a larger subset of graphs is considered more general, based on empirical counts from the Optimist dataset.

-

3.

Comparison and Removal: If one conjecture is a generalization of another, the less general conjecture is removed.

-

4.

Refinement: This process iteratively refines the conjecture set, ensuring that only unique, maximally general conjectures remain.

Applying the Morgan Heuristic yields a refined list of conjectures that are maximally general and devoid of redundancy. This reduction not only simplifies the conjecture set but also enhances its theoretical strength, highlighting statements with the broadest potential applicability.

3.7 The Strong-Smokey and Weak-Smokey Heuristics

To further refine the conjecture set, the Optimist agent employs two selective heuristics: the weak-smokey and strong-smokey heuristics. Both heuristics reduce redundancy by examining the set of sharp graphs—instances where each conjectured inequality holds as an equality—though each applies distinct criteria to retain only conjectures that offer unique insights.

The weak-smokey heuristic iterates through conjectures in descending order of touch number, retaining conjectures that introduce new sharp graphs not already covered by previously selected conjectures. This approach prioritizes conjectures with complementary equality instances, achieving a balance between inclusiveness and informativeness. By focusing on unique sharp instances, weak-smokey substantially reduces the initial conjecture set while maintaining broad empirical relevance.

In contrast, the strong-smokey heuristic applies a stricter criterion, requiring that each retained conjecture covers a strict superset of sharp graphs compared to any previously selected conjecture. This process begins with the conjecture having the highest touch number, then adds conjectures only if they introduce strictly new equality instances. By requiring that each new conjecture expands the set of sharp graphs, strong-smokey yields a minimal set of conjectures, often halving the number retained by weak-smokey. This minimality ensures that only conjectures providing the most unique insights are retained.

Applying either the weak-smokey or strong-smokey heuristic significantly reduces the conjecture set for a given target invariant, typically retaining only four or five conjectures from an initially large list. As a stricter filter, strong-smokey produces a subset of the conjectures selected by weak-smokey. It is employed when Optimist seeks to retain only the most general and minimal set of conjectures, emphasizing statements that provide the greatest informational value.

3.8 Conjecture Generation, Filtering, and Adaptive Knowledge Update

The Optimist agent is designed as an iterative system for conjecture generation, filtering, and continuous refinement based on new information. At the core of this process is the use of mixed-integer programming (MIP) to generate conjectures. Using combinations of graph invariants, Optimist generates potential upper and lower bounds for a target invariant. Each conjecture is initially stored in memory, organized by target invariant.

Given the potentially large number of conjectures generated, Optimist applies a multi-stage filtering process to ensure that only the most informative and robust conjectures are retained. The first phase employs the Hazel heuristic, which prioritizes conjectures with the highest empirical relevance by sorting them according to touch number—a measure of how frequently each conjecture holds as an equality across the dataset. This step ensures that Optimist focuses on conjectures that provide tight bounds for the target invariant.

Next, the Morgan heuristic is applied to remove redundant conjectures with more restrictive hypotheses that do not contribute new insights over more general conjectures with the same conclusion. This heuristic ensures the final conjecture set retains only the most widely applicable statements.

A final layer of filtering applies either the strong-smokey or weak-smokey heuristic, depending on the desired strictness. The weak-smokey heuristic favors inclusiveness, retaining conjectures that add new sharp instances, while the strong-smokey heuristic strictly selects conjectures that cover strictly broader sets of sharp graphs. This stage produces a minimal, highly informative set of conjectures, prioritizing those with the broadest empirical applicability – essentially equivalent to an unpublished version of TxGraffiti called TxGraffiti II, which is available as an interactive website333https://txgraffiti.streamlit.app/Generate_MIP_Conjectures.

Knowledge Update and Counterexample Handling

The Optimist agent is designed to adapt dynamically to new data, allowing it to refine its conjectures and avoid rediscovering known results. Before retaining any conjecture, Optimist references a repository of established theorems, removing any conjecture implied by these known results. This approach focuses the agent’s attention on novel insights.

The agent further adapts based on counterexamples provided by users. If a counterexample graph contradicts an existing conjecture, Optimist incorporates the new graph into its knowledge base and updates all conjectures to ensure consistency with observed data. This self-improvement process enables the agent to maintain accurate and reliable conjectures, continuously refining its knowledge and conjecture quality with each iteration. By iterating through these stages of generation, filtering, and adaptive updates, Optimist exemplifies a robust approach to autonomous reasoning and knowledge refinement in graph theory.

4 Case Study: Conjecture Generation for the Independence Number

This section presents a case study to evaluate the Optimist agent’s ability to generate conjectures that are both empirically grounded and theoretically insightful. Our objective is to demonstrate the Optimist’s effectiveness in autonomously rediscovering classical bounds on the independence number, , and its adaptability in refining conjectures in response to iterative user feedback.

Optimist was assessed on its capacity to conjecture bounds on , a fundamental but computationally challenging graph invariant. Beginning with a minimal dataset, we examined whether the agent could autonomously derive meaningful bounds on and subsequently refine its conjectures through feedback and new information.

4.1 Experimental Setup and Initial Conjecture Generation

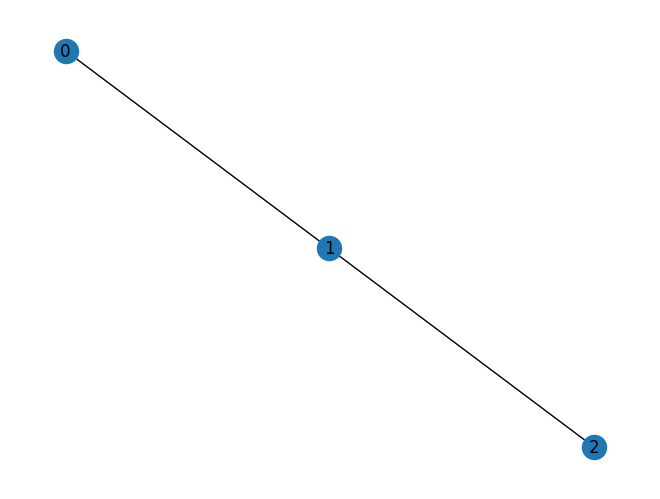

The initial setup for Optimist included three of the smallest nontrivial connected graph structures: the complete graphs and and the path graph , as shown in Figure 2. The agent was configured with a selection of invariants relevant to conjecturing , including the order , minimum degree , maximum degree , matching number , and minimum maximal matching number , among others. With this setup, Optimist could explore a variety of relationships between and these properties.

From this minimal configuration, Optimist generated an initial series of conjectures aimed at establishing upper and lower bounds for . The resulting conjectures were broad, reflecting the limited dataset and covering a variety of possible inequalities. This initial series included nearly 60 conjectures, illustrating the heuristic’s dependency on dataset size and the need for further refinement to isolate the most significant bounds.

4.2 Iterative Knowledge Refinement through User Feedback

Following the initial conjecture set, the user identified conjectures that could be invalidated by counterexamples. For instance, the conjecture presented in Listing 7 was shown to be false for the path graph .

Noting this counterexample, the user informed the Optimist agent of , prompting the agent to update its knowledge base and regenerate its conjecture set.

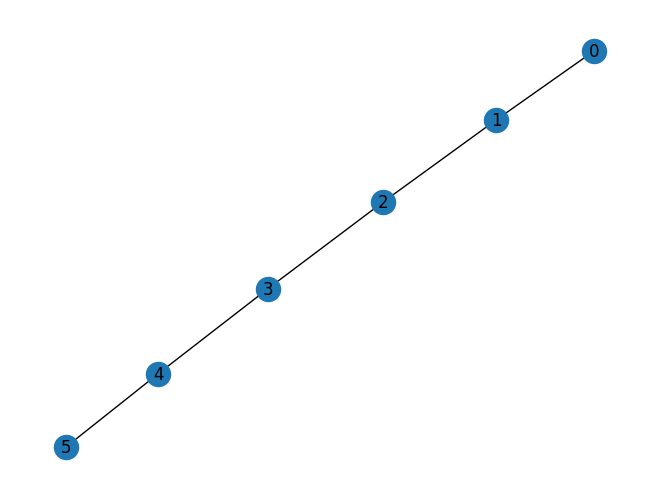

With each new graph added, Optimist refined its conjecture set, retaining inequalities that consistently held across the expanded dataset and discarding or modifying those that failed to generalize. This interactive process continued over multiple iterations, with Optimist generating conjectures and the user acting as a Pessimist by providing counterexamples. Each update expanded the agent’s knowledge base, enabling it to generate more reliable conjectures. Figure 3 shows the set of counterexample graphs introduced during this process.

This iterative refinement process allowed Optimist to progressively narrow down the set of plausible bounds on , adapting its conjectures in response to new data. The user’s counterexamples acted as a form of hypothesis testing, whereby each graph introduced was a probe challenging Optimist’s emerging hypotheses. Through this feedback loop, the agent evolved toward a more reliable and theoretically grounded set of conjectures.

Occasionally, the Optimist agent generated conjectures that aligned with known bounds or equations for the independence number, often appearing at the top of its conjecture lists. When these familiar relationships were recognized by the user, they were communicated to the agent, enabling Optimist to store this knowledge and exclude known results from its conjecture set going forward. This process allowed Optimist to focus on unexplored relationships. See Listing 9 for an example of updating the agent with known results.

By the conclusion of the experiment, after providing nine counterexamples, the Optimist agent had learned several key theorems, including well-known and classical results in graph theory. These learned theorems are shown in Listing 10.

The emergence of these theorems with minimal user intervention highlights Optimist’s empirical robustness and adaptability, demonstrating its potential to align autonomously with established mathematical knowledge. This adaptive capacity reflects the system’s strength in identifying both classical and novel bounds, reinforcing its value as a tool for automated reasoning in graph theory. Please see our GitHub repository444https://github.com/RandyRDavila/The-Optimist/tree/main for the complete experiment available as a Colab and Jupyter notebook.

4.3 The Evolution of the Optimist Agent and the Concept of GraphMind

The Optimist agent begins as a knowledge-based system, equipped with a foundational set of graphs and a suite of functions for computing graph invariants. From this initial setup, the agent constructs a tabular dataset, representing each graph as a unique instance and each function as a feature. This tabular structure serves as a dynamic memory, enabling Optimist to generate conjectures based on observed relationships across invariants, initially producing bounds and identities for specific graph properties.

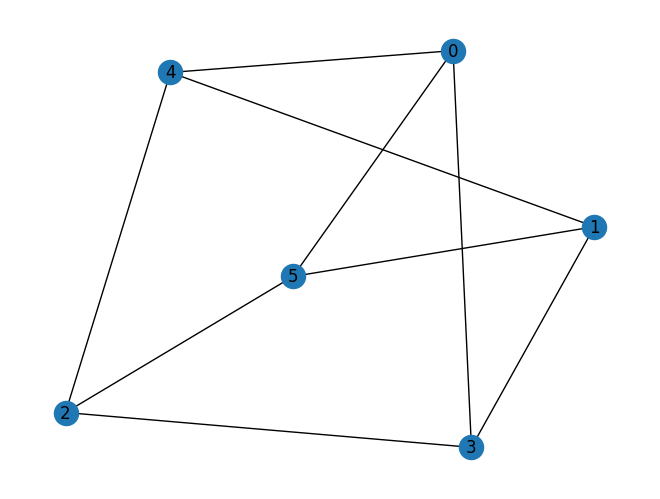

Through interaction with a counterexample generator—referred to as the Pessimist, which can be either a human user or an autonomous agent—Optimist adapts its conjectures and knowledge base. When a counterexample is found that disproves a conjecture, the Pessimist introduces a new graph, prompting Optimist to re-evaluate its existing conjectures. This iterative feedback loop allows Optimist to refine its dataset, accumulate new graph structures, and distill empirically valid conjectures. Over time, Optimist builds a repository of both new conjectures and established theorems, increasing its capability to generate stronger, more general conjectures that remain consistent with an ever-growing dataset; effectively increasing its intelligence; see Figure 4 for an illustration.

In a future implementation where the Pessimist is also an autonomous agent, this feedback loop could operate without human intervention. The Optimist would continuously propose conjectures, while the Pessimist systematically seeks counterexamples. This interaction forms an automated cycle of discovery, where conjecture generation and counterexample search evolve concurrently, refining both conjectures and the underlying graph structures in the dataset. This setup, while unable to formally prove conjectures, would create a self-sustaining environment for empirical discovery in graph theory—a system we refer to as GraphMind.

GraphMind represents a conceptual leap toward a fully automated graph theorist. While it lacks formal proof capabilities, its ability to autonomously discover conjectures and graph structures could drive meaningful advances in graph theory. By automating the processes of hypothesis generation and empirical verification, GraphMind would serve as a powerful tool for mathematical exploration, potentially uncovering new classes of graphs, invariants, and relationships that extend beyond human intuition.

5 Conclusion and Future Directions

In this paper, we presented the Optimist agent, a framework designed for automated conjecture generation in graph theory. Through the use of mixed-integer programming, heuristic filtering, and iterative refinement, Optimist has demonstrated the ability to autonomously derive empirically strong and mathematically relevant conjectures. In particular, our case study on the independence number highlighted Optimist’s capability to rediscover established results, such as Kőnig’s theorem and classical bounds, while dynamically refining its conjectures based on iterative user feedback. Notably, the Optimist agent often conjectures published conjectures of TxGraffiti (see [44, 45, 46, 47, 48, 49]) as a subset of other conjectures which remain open and under investigation.

The Optimist framework represents an important step toward an automated approach to hypothesis generation in discrete mathematics. By integrating a counterexample-searching counterpart—the Pessimist—we envision a collaborative system, GraphMind, where Optimist proposes conjectures and Pessimist seeks counterexamples. This dueling-agent framework creates a feedback loop, allowing both agents to iteratively test, refine, and adapt conjectures without human intervention.

GraphMind represents a vision of a fully automated graph theorist. While current implementations do not have formal proof capabilities, the ability to generate, validate, and refine conjectures could lead to significant advancements in empirical discovery within graph theory. As both agents operate in tandem, they provide a balanced and rigorous exploration of graph invariants, potentially uncovering novel relationships and classes of graphs that might remain hidden to human intuition.

Future work will focus on developing an autonomous Pessimist agent to enable a continuous cycle of conjecture and counterexample generation. Early results suggest that deep reinforcement learning, combined with Monte Carlo Tree Search, can empower the Pessimist agent to construct counterexample graphs on a small number of vertices. Additionally, integrating formal proof techniques, such as automated theorem proving, could further enhance GraphMind, allowing it not only to propose conjectures but also to rigorously verify or disprove them. Furthermore, leveraging language models to identify and cross-reference known theorems in the literature could effectively eliminate the need for human intervention in GraphMind. By fully automating this exploratory process, GraphMind could serve as a powerful tool for mathematical discovery, providing researchers with a novel and efficient approach to exploring complex structures in graph theory.

Appendix A Python Code for Inequality Generation

Appendix B Python Code Implementing Heuristics

Appendix C Python Code for the Optimist Python class

References

- \bibcommenthead

- Davila [2024] Davila, R.: Automated conjecturing in mathematics with TxGraffiti. Discov. Artif. 359, 290–302 (2024)

- Turing [2004] Turing, A.: Intelligent machinery. The Essential Turing, 395–432 (2004)

- Simon and Newell [1958] Simon, H.A., Newell, A.: Heuristic problem solving: the next advance in operations research. Oper. Res. 6, 1–10 (1958)

- Wang [1990] Wang, H.: Computer theorem proving and artificial intelligence. Computation, Logic, Philosophy, 63–75 (1990)

- Fajtlowicz [1995] Fajtlowicz, S.: On conjectures of graffiti, part V. In: 7th Int. Quadrennial Conf. Graph Theory, Comb. Appl., vol. 1, pp. 367–376 (1995)

- Fajtlowicz and Waller [1986] Fajtlowicz, S., Waller, W.: On two conjectures of graffiti. Congr. Numer. (1986)

- Chung [1988] Chung, F.: The average distance is not more than the independence number. J. Graph Theory 12, 229–235 (1988)

- Alon and Seymour [1989] Alon, N., Seymour, P.: A counter-example to the rank-coloring conjecture. J. Graph Theory 13, 523–525 (1989)

- Fajtlowicz [1989] Fajtlowicz, S.: On conjectures and methods of graffiti. In: 4th Proceedings Clemson Miniconference (1989)

- Beezer et al. [1989] Beezer, R.A., Riegsecker, J., Smith, B.A.: On conjectures of graffiti concerning regular graphs. Technical report, University of Puget Sound Dept. of Mathematics and Computer Science (1989). Technical Report No. 89-1

- Favaron et al. [1990] Favaron, O., Maheo, M., Sacle, J.-F.: Some results on conjectures of graffiti - 1. Ars Comb. 29, 90–106 (1990)

- Favaron et al. [1991a] Favaron, O., Maheo, M., Sacle, J.-F.: Some results on conjectures of graffiti - iii. Technical report, Universite de Paris-Sud (1991). Research Report No. 670, LRI

- Favaron et al. [1991b] Favaron, O., Maheo, M., Sacle, J.-F.: On the residue of a graph. J. Graph Theory 15, 39–64 (1991)

- Favaron et al. [1993] Favaron, O., Maheo, M., Sacle, J.-F.: Some eigenvalue properties in graphs (conjectures of graffiti - ii). Discrete Math. 111, 197–220 (1993)

- Griggs and Kleitman [1994] Griggs, J.R., Kleitman, D.J.: Independence and the havel-hakimi residue. Discrete Math. 127, 209–212 (1994)

- Fajtlowicz et al. [1995] Fajtlowicz, S., McColgan, T., Reid, T.J., Staton, W.: Ramsey numbers of induced regular subgraphs. Ars Comb. 39, 149–154 (1995)

- Wang [1997] Wang, L.-X.: On one of graffiti’s conjecture 583. Appl. Math. Mech. 18(4), 357–360 (1997)

- Firby and Haviland [1997] Firby, P., Haviland, J.: Independence and average distance in graphs. Discrete Appl. Math. 75, 27–37 (1997)

- Bollobas and Erdos [1998] Bollobas, B., Erdos, P.: Graphs of extremal weights. Ars Comb. 50, 255–233 (1998)

- Bollobas and Riordan [1998] Bollobas, B., Riordan, O.M.: On some conjectures of graffiti. Discrete Math. 179, 223–230 (1998)

- Caro [1998] Caro, Y.: Colorability, frequency and graffiti-119. J. Comb. Math. Comb. Comput. 27, 129–134 (1998)

- Dankelmann et al. [1998] Dankelmann, P., Swart, H., Oellermann, O.: On three conjectures of graffiti. J. Comb. Math. Comb. Comput. 26, 131–137 (1998)

- Jelen [1999] Jelen, F.: -independence and the -residue of a graph. J. Graph Theory 32, 241–249 (1999)

- Codenotti et al. [2000] Codenotti, B., Del Corso, G., Manzini, G.: Matrix rank and communication complexity. Linear Algebra Appl. 304, 193–200 (2000)

- Beezer et al. [2001] Beezer, R.A., Riegsecker, J., Smith, B.A.: Using minimum degree to bound average distance. Discrete Math. 226, 365–371 (2001)

- Favaron et al. [2003] Favaron, O., Maheo, M., Sacle, J.-F.: The randic index and other graffiti parameters of graphs. MATCH Commun. Math. Comput. Chem. 47, 7–23 (2003)

- Zhang [2004] Zhang, X.-D.: On the two conjectures of graffiti. Linear Algebra Appl. 385, 369–379 (2004)

- Dankelmann et al. [2005] Dankelmann, P., Dlamini, G., Swart, H.C.: Upper bounds on distance measures in k3,3-free graphs. Util. Math. 67, 205–221 (2005)

- Hansen et al. [2009] Hansen, P., Hertz, A., Kilani, R., Marcotte, O., Schindl, D.: Average distance and maximum induced forest. J. Graph Theory 60(1), 31–54 (2009)

- Cygan et al. [2012] Cygan, M., Pilipczuk, M., Skrekovski, R.: On the inequality between radius and rankic index for graphs. MATCH Commun. Math. Comput. Chem. 67, 451–466 (2012)

- Yue et al. [2019] Yue, J., Zhu, Y., Klavzar, S.: The annihilation number does not bound the 2-domination number from the above. Discrete Math. (2019)

- Fowler [1997] Fowler, P.: Fullerene graphs with more negative than positive eigenvalues; the exceptions that prove the rule of electron deficiency. J. Chem. Soc. Faraday 93, 1–3 (1997)

- Fowler et al. [1998] Fowler, P., Hansen, P., Rogers, K.M., Fajtlowicz, S.: C60br24 as a chemical illustration of graph theoretical independence. J. Chem. Soc. Perkin Trans. 2 (1998)

- Fowler et al. [1999] Fowler, P., Rodgers, K., Fajtlowicz, S., Hansen, P., Caporossi, G.: Facts and conjectures about fullerene graphs. leapfrog, cylinder and fullerene graphs. In: Proc. Euroconf. ALCOMA (1999)

- Fajtlowicz and Larson [2003] Fajtlowicz, S., Larson, C.: Graph-theoretical independence as a predictor of fullerene stability. Chem. Phys. Lett. 377, 485–490 (2003)

- Stevanovic and Caporossi [2005] Stevanovic, D., Caporossi, G.: On the (1,2)-spectral spread of fullerenes. In: Graphs and Discovery DIMACS: Ser. Discrete Math. Theor. Comput. Sci. vol. 69, pp. 395–370 (2005)

- Fajtlowicz [2005] Fajtlowicz, S.: On representation and characterization of buckminsterfullerene c60. In: Graphs and Discovery DIMACS: Ser. Discrete Math. Theor. Comput. Sci. vol. 69, pp. 127–135 (2005)

- Fajtlowicz et al. [2005] Fajtlowicz, S., John, P., Sach, H.: On maximum matchings and eigenvalues of benzenoid graphs. Croat. Chem. Acta 78, 195–201 (2005)

- Doslic and Reti [2011] Doslic, T., Reti, T.: Spectral properties of fullerene graphs. MATCH Commun. Math. Comput. Chem. 66, 733–742 (2011)

- Larson and Van Cleemput [2016] Larson, C.E., Van Cleemput, N.: Automated conjecturing i: Fajtlowicz’s dalmatian heuristic revisited. Artif. Intell. 231, 17–38 (2016)

- Henning and Yeo [2013] Henning, M.A., Yeo, A.: Total domination and matching numbers in graphs with all vertices in triangles. Discrete Math. 313, 174–181 (2013)

- Henning and Yeo [2014] Henning, M.A., Yeo, A.: A new lower bound for the total domination number in graphs proving a graffiti.pc conjecture. Discrete Appl. Math. 173, 45–52 (2014)

- Henning and Wash [2017] Henning, M.A., Wash, K.: Matchings, path covers and domination. Discrete Math. 340, 3207–3216 (2017)

- Brimkov et al. [2024] Brimkov, B., Davila, R., Schuerger, H., Young, M.: Computer assisted discovery: Zero forcing vs vertex cover. Discrete Appl. Math. 359, 290–302 (2024)

- Caro et al. [2020] Caro, Y., Davila, R., Pepper, R.: New results relating matching and independence. Discuss. Math. Graph Theory 42, 921–935 (2020)

- Caro et al. [2022] Caro, Y., Davila, R., Henning, M.A., Pepper, R.: Conjectures of TxGraffiti: Independence, domination, and matchings. Australas. J. Comb. 84(2), 258–274 (2022)

- Davila and Henning [2021] Davila, R., Henning, M.A.: Zero forcing versus domination in cubic graphs. J. Comb. Optim. 41, 553–577 (2021)

- Davila and Henning [2019] Davila, R., Henning, M.A.: Total forcing versus total domination in cubic graphs. Appl. Math. Comput. 354, 385–395 (2019)

- Davila and Henning [2020] Davila, R., Henning, M.A.: Zero forcing in claw-free cubic graphs. Bull. Malays. Math. Sci. Soc. 43, 673–688 (2020)

- Lenat [1977] Lenat, D.B.: The ubiquity of discovery. Artif. Intell. 9, 257–285 (1977)

- Lenat [1979] Lenat, D.B.: On automated scientific theory formation: A case study using the am program. Mach. Intell. 9, 251–286 (1979)

- Lenat [1982] Lenat, D.B.: The nature of heuristics. Artif. Intell. 9, 189–249 (1982)

- Epstein [1988] Epstein, S.L.: Learning and discovery: One system’s search for mathematical knowledge. Comput. Intell. 4(1), 42–53 (1988)

- Colton et al. [1999] Colton, S., Bundy, A., Walsh, T.: Automated concept formation in pure mathematics. In: Proceedings of the 16th International Joint Conference on Artificial Intelligence, vol. 2, pp. 786–791. Morgan Kaufmann Publishers, San Francisco, CA (1999)

- Colton [1999] Colton, S.: Refactorable numbers—a machine invention. J. Integer Seq. 2, 99–12 (1999)

- Colton [2002] Colton, S.: Automated Theory Formation in Pure Mathematics. Springer, Heidelberg (2002). https://doi.org/10.1007/978-1-4471-0147-5

- Caporossi and Hansen [2000] Caporossi, G., Hansen, P.: Variable neighborhood search for extremal graphs: 1 the autographix system. Discrete Math. 212(1–2), 29–44 (2000)

- Caporossi and Hansen [2004] Caporossi, G., Hansen, P.: Variable neighborhood search for extremal graphs: 5 three ways to automate finding conjectures. Discrete Math. 276(1–3), 81–94 (2004)

- Mélot [2008] Mélot, H.: Facet defining inequalities among graph invariants: The system graphedron. Discrete Appl. Math. 156, 1875–1891 (2008)

- Devillez et al. [2019] Devillez, G., Hauweele, P., Mélot, H.: PHOEG Helps to Obtain Extremal Graphs. In: Operations Research Proceedings 2018: Selected Papers of the Annual International Conference of the German Operations Research Society, pp. 251–257. Springer, Cham, Switzerland (2019)