The Limitations of Limited Context for Constituency Parsing

Abstract

Incorporating syntax into neural approaches in NLP has a multitude of practical and scientific benefits. For instance, a language model that is syntax-aware is likely to be able to produce better samples; even a discriminative model like BERT with a syntax module could be used for core NLP tasks like unsupervised syntactic parsing. Rapid progress in recent years was arguably spurred on by the empirical success of the Parsing-Reading-Predict architecture of (Shen et al., 2018b), later simplified by the Order Neuron LSTM of (Shen et al., 2019). Most notably, this is the first time neural approaches were able to successfully perform unsupervised syntactic parsing (evaluated by various metrics like F-1 score).

However, even heuristic (much less fully mathematical) understanding of why and when these architectures work is lagging severely behind. In this work, we answer representational questions raised by the architectures in (Shen et al., 2018b; 2019), as well as some transition-based syntax-aware language models (Dyer et al., 2016): what kind of syntactic structure can current neural approaches to syntax represent? Concretely, we ground this question in the sandbox of probabilistic context-free-grammars (PCFGs), and identify a key aspect of the representational power of these approaches: the amount and directionality of context that the predictor has access to when forced to make parsing decision. We show that with limited context (either bounded, or unidirectional), there are PCFGs, for which these approaches cannot represent the max-likelihood parse; conversely, if the context is unlimited, they can represent the max-likelihood parse of any PCFG.

1 Introduction

Neural approaches have been steadily making their way to NLP in recent years. By and large however, the neural techniques that have been scaled-up the most and receive widespread usage do not explicitly try to encode discrete structure that is natural to language, e.g. syntax. The reason for this is perhaps not surprising: neural models have largely achieved substantial improvements in unsupervised settings, BERT (Devlin et al., 2019) being the de-facto method for unsupervised pre-training in most NLP settings. On the other hand unsupervised syntactic tasks, e.g. unsupervised syntactic parsing, have long been known to be very difficult tasks (Htut et al., 2018). However, since incorporating syntax has been shown to improve language modeling (Kim et al., 2019b) as well as natural language inference (Chen et al., 2017; Pang et al., 2019; He et al., 2020), syntactic parsing remains important even in the current era when large pre-trained models, like BERT (Devlin et al., 2019), are available.

Arguably, the breakthrough works in unsupervised constituency parsing in a neural manner were (Shen et al., 2018b; 2019), achieving F1 scores 42.8 and 49.4 on the WSJ Penn Treebank dataset (Htut et al., 2018; Shen et al., 2019). Both of these architectures, however (especially Shen et al., 2018b) are quite intricate, and it’s difficult to evaluate what their representational power is (i.e. what kinds of structure can they recover). Moreover, as subsequent more thorough evaluations show (Kim et al., 2019b; a), these methods still have a rather large performance gap with the oracle binary tree (which is the best binary parse tree according to F1-score) — raising the question of what is missing in these methods.

We theoretically answer both questions raised in the prior paragraph. We quantify the representational power of two major frameworks in neural approaches to syntax: learning a syntactic distance (Shen et al., 2018b; a; 2019) and learning to parse through sequential transitions (Dyer et al., 2016; Chelba, 1997). To formalize our results, we consider the well-established sandbox of probabilistic context-free grammars (PCFGs). Namely, we ask:

When is a neural model based on a syntactic distance or transitions able to represent the max-likelihood parse of a sentence generated from a PCFG?

We focus on a crucial “hyperparameter” common to practical implementations of both families of methods that turns out to govern the representational power: the amount and type of context the model is allowed to use when making its predictions. Briefly, for every position in the sentence, syntactic distance models learn a distance to the previous token — the tree is then inferred from this distance; transition-based models iteratively construct the parse tree by deciding, at each position , what operations to perform on a partial parse up to token . A salient feature of both is the context, that is, which tokens is a function of (correspondingly, which tokens can the choice of operations at token depend on)?

We show that when the context is either bounded (that is, only depends on a bounded window around the -th token) or unidirectional (that is, only considers the tokens to the left of the -th token), there are PCFGs for which no distance metric (correspondingly, no algorithm to choose the sequence of transitions) works. On the other hand, if the context is unbounded in both directions then both methods work: that is, for any parse, we can design a distance metric (correspondingly, a sequence of transitions) that recovers it.

This is of considerable importance: in practical implementations the context is either bounded (e.g. in Shen et al., 2018b, the distance metric is parametrized by a convolutional kernel with a constant width) or unidirectional (e.g. in Shen et al., 2019, the distance metric is computed by a LSTM, which performs a left-to-right computation).

This formally confirms a conjecture of Htut et al. (2018), who suggested that because these models commit to parsing decision in a left-to-right fashion and are trained as a part of a language model, it may be difficult for them to capture sufficiently complex syntactic dependencies. Our techniques are fairly generic and seem amenable to analyzing other approaches to syntax. Finally, while the existence of a particular PCFG that is problematic for these methods doesn’t necessarily imply that the difficulties will carry over to real-life data, the PCFGs that are used in our proofs closely track linguistic intuitions about difficult syntactic structures to infer: the parse depends on words that come much later in the sentence.

2 Overview of Results

We consider several neural architectures that have shown success in various syntactic tasks, most notably unsupervised constituency parsing and syntax-aware language modeling. The general framework these architectures fall under is as follows: to parse a sentence with a trained neural model, the sentence is input into the model, which outputs at each step , and finally all the outputs are utilized to produce the parse.

Given unbounded time and space resources, by a seminal result of Siegelmann and Sontag (1992), an RNN implementation of this framework is Turing complete. In practice it is common to restrict the form of the output in some way. In this paper, we consider the two most common approaches, in which is a real number representing a syntactic distance (Section 2.1) (Shen et al., 2018b; a; 2019) or a sequence of parsing operations (Section 2.2) (Chelba, 1997; Chelba and Jelinek, 2000; Dyer et al., 2016). We proceed to describe our results for each architecture in turn.

2.1 Syntactic distance

Syntactic distance-based neural parsers train a neural network to learn a distance for each pair of adjacent words, depending on the context surrounding the pair of words under consideration. The distances are then used to induce a tree structure (Shen et al., 2018b; a).

For a sentence , the syntactic distance between and () is defined as , where is the context that takes into consideration 111Note that this is not a conditional distribution—we use this notation for convenience.. We will show that restricting the surrounding context either in directionality, or in size, results in a poor representational power, while full context confers essentially perfect representational power with respect to PCFGs.

Concretely, if the context is full, we show:

Theorem (Informal, full context).

For sentence generated by any PCFG, if the computation of has as context the full sentence and the position index under consideration, i.e. and , then can induce the maximum likelihood parse of .

On the flipside, if the context is unidirectional (i.e. unbounded left-context from the start of the sentence, and even possibly with a bounded look-ahead), the representational power becomes severely impoverished:

Theorem (Informal, limitation of left-to-right parsing via syntactic distance).

There exists a PCFG such that for any distance measure whose computation incorporates only bounded context in at least one direction (left or right), e.g.

the probability that induces the max likelihood parse is arbitrarily low.

In practice, for computational efficiency, parametrizations of syntactic distances fall into the above assumptions of restricted context (Shen et al., 2018b). This puts the ability of these models to learn a complex PCFG syntax into considerable doubt. For formal definitions, see Section 4.2. For formal theorem statements and proofs, see Section 5.

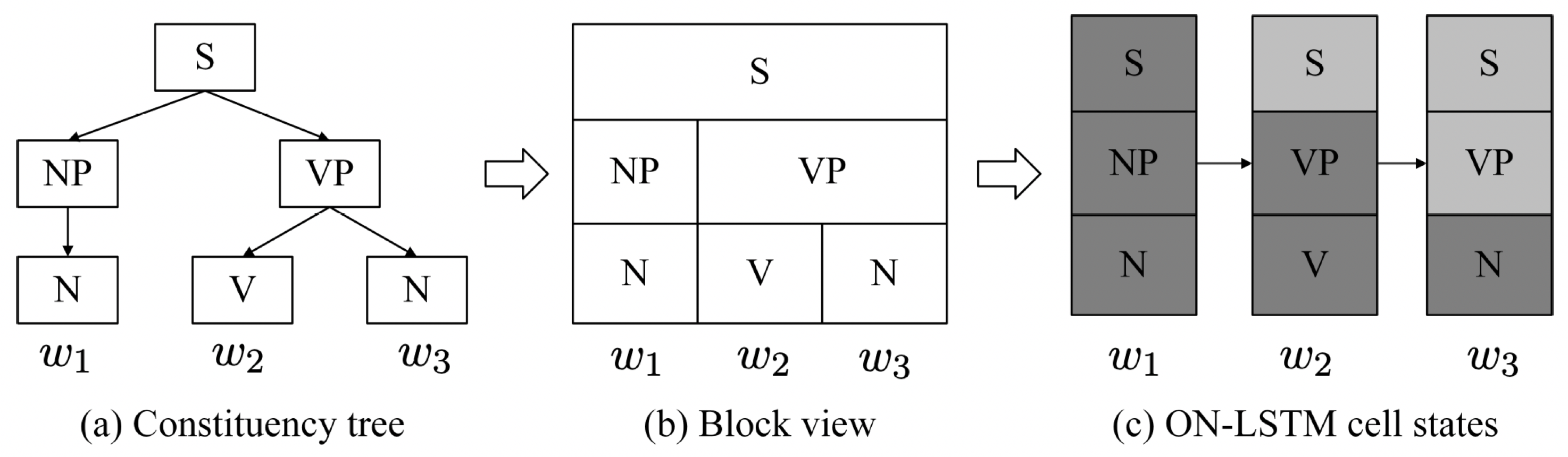

Subsequently we consider ON-LSTM, an architecture proposed by Shen et al. (2019) improving their previous work (Shen et al., 2018b), which also is based on learning a syntactic distance, but in (Shen et al., 2019) the distances are reduced from the values of a carefully structured master forget gate (see Section 6). While we show ON-LSTM can in principle losslessly represent any parse tree (Theorem 3), calculating the gate values in a left to right fashion (as is done in practice) is subject to the same limitations as the syntactic distance approach:

Theorem (Informal, limitation of syntactic distance estimation based on ON-LSTM).

There exists a PCFG for which the probability that the syntactic distance converted from an ON-LSTM induces the max likelihood parse is arbitrarily low.

2.2 Transition-based parsing

In principle, the output at each position of a left-to-right neural models for syntactic parsing need not be restricted to a real-numbered distance or a carefully structured vector. It can also be a combinatorial structure — e.g. a sequence of transitions (Chelba, 1997; Chelba and Jelinek, 2000; Dyer et al., 2016). We adopt a simplification of the neural parameterization in (Dyer et al., 2016) (see Definition 4.7).

With full context, Dyer et al. (2016) describes an algorithm to find a sequence of transitions to represent any parse tree, via a “depth-first, left-to-right traversal” of the tree. On the other hand, without full context, we prove that transition-based parsing suffers from the same limitations:

Theorem (Informal, limitation of transition-based parsing without full context).

There exists a PCFG , such that for any learned transition-based parser with bounded context in at least one direction (left or right), the probability that it returns the max likelihood parse is arbitrarily low.

Remark.

There is no immediate connection between the syntactic distance-based approaches (including ON-LSTM) and the transition-based parsing framework, so the limitations of transition-based parsing does not directly imply the stated negative results for syntactic distance or ON-LSTM, and vice versa.

2.3 The counterexample family

Most of our theorems proving limitations on bounded and unidirectional context are based on a PCFG family (Definition 2.1) which draws inspirations from natural language already suggested in (Htut et al., 2018): later words in a sentence can force different syntactic structures earlier in the sentence. For example, consider the two sentences: “I drink coffee with milk.” and “I drink coffee with friends.” Their only difference occurs at their very last words, but their parses differ at some earlier words in each sentence, too, as shown in Figure 1.

To formalize this intuition, we define the following PCFG.

Definition 2.1 (Right-influenced PCFG).

Let be positive integers. The grammar has starting symbol , other non-terminals

and terminals

The rules of the grammar are

in which means that the left expands into the right through a sequence of rules that conform to the requirements of the Chomsky normal form (CNF, Definition 4.4). Hence the grammar is in CNF.

The language of this grammar is

The parse of an arbitrary is shown in Figure 2. Each corresponds to a unique parse determined by the choice of . The structure of this PCFG is such that for the parsing algorithms we consider that proceed in a “left-to-right” fashion on , before processing the last token , it cannot infer the syntactic structure of any better than randomly guessing one of the possibilities. This is the main intuition behind Theorems 2 and 5.

Remark.

While our theorems focus on the limitation of “left-to-right” parsing, a symmetric argument implies the same limitation of “right-to-left” parsing. Thus, our claim is that unidirectional context (in either direction) limits the expressive power of parsing models.

3 Related Works

Neural models for parsing were first successfully implemented for supervised settings, e.g. (Vinyals et al., 2015; Dyer et al., 2016; Shen et al., 2018a). Unsupervised tasks remained seemingly out of reach, until the proposal of the Parsing-Reading-Predict Network (PRPN) by Shen et al. (2018b), whose performance was thoroughly verified by extensive experiments in (Htut et al., 2018). The follow-up paper (Shen et al., 2019) introducing the ON-LSTM architecture simplified radically the architecture in (Shen et al., 2018b), while still ultimately attempting to fit a distance metric with the help of carefully designed master forget gates. Subsequent work by Kim et al. (2019a) departed from the usual way neural techniques are integrated in NLP, with great success: they proposed a neural parameterization for the EM algorithm for learning a PCFG, but in a manner that leverages semantic information as well — achieving a large improvement on unsupervised parsing tasks.222By virtue of not relying on bounded or unidirectional context, the Compound PCFG (Kim et al., 2019a) eschews the techniques in our paper. Specifically, by employing a bidirectional LSTM inference network in the process of constructing a tree given a sentence, the parsing is no longer “left-to-right”.

In addition to constituency parsing, dependency parsing is another common task for syntactic parsing, but for our analyses on the ability of various approaches to represent the max-likelihood parse of sentences generated from PCFGs, we focus on the task of constituency parsing. Moreover, it’s important to note that there is another line of work aiming to probe the ability of models trained without explicit syntactic consideration (e.g. BERT) to nevertheless discover some (rudimentary) syntactic elements (Bisk and Hockenmaier, 2015; Linzen et al., 2016; Choe and Charniak, 2016; Kuncoro et al., 2018; Williams et al., 2018; Goldberg, 2019; Htut et al., 2019; Hewitt and Manning, 2019; Reif et al., 2019). However, to-date, we haven’t been able to extract parse trees achieving scores that are close to the oracle binarized trees on standard benchmarks (Kim et al., 2019b; a).

Methodologically, our work is closely related to a long line of works aiming to characterize the representational power of neural models (e.g. RNNs, LSTMs) through the lens of formal languages and formal models of computation. Some of the works of this flavor are empirical in nature (e.g. LSTMs have been shown to possess stronger abilities to recognize some context-free language and even some context-sensitive language, compared with simple RNNs (Gers and Schmidhuber, 2001; Suzgun et al., 2019) or GRUs (Weiss et al., 2018; Suzgun et al., 2019)); some results are theoretical in nature (e.g. Siegelmann and Sontag (1992)’s proof that with unbounded precision and unbounded time complexity, RNNs are Turing-complete; related results investigate RNNs with bounded precision and computation time (Weiss et al., 2018), as well as memory (Merrill, 2019; Hewitt et al., 2020). Our work contributes to this line of works, but focuses on the task of syntactic parsing instead.

4 Preliminaries

In this section, we define some basic concepts and introduce the architectures we will consider.

4.1 Probabilistic context-free grammar

First recall several definitions around formal language, especially probabilistic context free grammar:

Definition 4.1 (Probabilistic context-free grammar (PCFG)).

Formally, a PCFG (Chomsky, 1956) is a 5-tuple in which is the set of terminals, is the set of non-terminals, is the start symbol, is the set of production rules of the form , where , is of the form , , and . Finally, is the rule probability function, in which for any

is the conditional probability

Definition 4.2 (Parse tree).

Let denote the set of parse trees that can derive. Each is associated with , the sequence of terminals composed of the leaves of and , the probability of the parse tree, defined by the product of the probabilities of the rules in the derivation of .

Definition 4.3 (Language and sentence).

The language of is

Each is called a sentence in , and is associated with the set of parses , the set of max likelihood parses, , and its probability .

4.2 Syntactic distance

The Parsing-Reading-Predict Networks (PRPN) (Shen et al., 2018b) is one of the leading approaches to unsupervised constituency parsing. The parsing network (which computes the parse tree, hence the only part we focus on in our paper) is a convolutional network that computes the syntactic distances (defined in Section 2.1) based on the past words. A deterministic greedy tree induction algorithm is then used to produce a parse tree as follows. First, we split the sentence into two constituents, and , where and form the left and right subtrees of . We recursively repeat this procedure for the newly created constituents. An algorithmic form of this procedure is included as Algorithm 1 in Appendix A.

Note that, due to the deterministic nature of the tree-induction process, the ability of PRPN to learn a PCFG is completely contingent upon learning a good syntactic distance.

4.3 The ordered neuron architecture

Building upon the idea of representing the syntactic information with a real-valued distance measure at each position, a simple extension is to associate each position with a learned vector, and then use the vector for syntactic parsing. The ordered-neuron LSTM (ON-LSTM, Shen et al., 2019) proposes that the nodes that are closer to the root in the parse tree generate a longer span of terminals, and therefore should be less frequently “forgotten” than nodes that are farther away from the root. The difference in the frequency of forgetting is captured by a carefully designed master forget gate vector , as shown in Figure 3 (in Appendix B). Formally:

Definition 4.5 (Master forget gates, Shen et al., 2019).

Given the input sentence and a trained ON-LSTM, running the ON-LSTM on gives the master forget gates, which are a sequence of -dimensional vectors , in which at each position , . Moreover, let represent the -th dimension of . The ON-LSTM architectures requires that , , and

4.4 Transition-based parsing

In addition to outputting a single real numbered distance or a vector at each position , a left-to-right model can also parse a sentence by outputting a sequence of “transitions” at each position , an idea proposed in some traditional parsing approaches (Sagae and Lavie, 2005; Chelba, 1997; Chelba and Jelinek, 2000), and also some more recent neural parameterization (Dyer et al., 2016).

We introduce several items of notation:

-

•

: the -th transition performed when reading in , the -th token of the sentence

-

•

: the number of transitions performed between reading in the token and reading in the next token .

-

•

: the sequence of transitions after reading in the prefix of the sentence.

-

•

: the parse of the sentence . .

We base our analysis on the approach introduced in the parsing version of (Dyer et al., 2016), though that work additionally proposes a generator version. 333Dyer et al. (2016) additionally proposes some generator transitions. For simplicity, we analyze the simplest form: we only allow the model to return one parse, composed of the parser transitions, for a given input sentence. Note that this simplified variant still confers full representational power in the “full context” setting (see Section 7).

Definition 4.6 (Transition-based parser).

A transition-based parser uses a stack (initialized to empty) and an input buffer (initialized with the sentence ). At each position , based on a context , the parser outputs a sequence of parsing transitions , where each can be one of the following transitions (Definition 4.7). The parsing stops when the stack contains one single constituent, and the buffer is empty.

Definition 4.7 (Parser transitions, Dyer et al., 2016).

A parsing transition can be one of the following three types:

-

•

NT(X) pushes a non-terminal X onto the stack.

-

•

SHIFT: removes the first terminal from the input buffer and pushes onto the stack.

-

•

REDUCE: pops from the stack until an open non-terminal is encountered, then pops this non-terminal and assembles everything popped to form a new constituent, labels this new constituent using this non-terminal, and finally pushes this new constituent onto the stack.

In Appendix Section C, we provide an example of parsing the sentence “I drink coffee with milk” using the set of transitions given by Definition 4.7.

The different context specifications and the corresponding representational powers of the transition-based parser are discussed in Section 7.

5 Representational Power of Neural Syntactic Distance Methods

In this section we formalize the results on syntactic distance-based methods. Since the tree induction algorithm always generates a binary tree, we consider only PCFGs in Chomsky normal form (CNF) (Definition 4.4) so that the max likelihood parse of a sentence is also a binary tree structure.

To formalize the notion of “representing” a PCFG, we introduce the following definition:

Definition 5.1 (Representing PCFG with syntactic distance).

Let be any PCFG in Chomsky Normal Form. A syntactic distance function is said to be able to -represent if for a set of sentences in whose total probability is at least , can correctly induce the tree structure of the max likelihood parse of these sentences without ambiguity.

Remark.

Ambiguities could occur when, for example, there exists such that . In this case, the tree induction algorithm would have to break ties when determining the local structure for . We preclude this possibility in Definition 5.1.

In the least restrictive setting, the whole sentence , as well as the position index can be taken into consideration when determining each . We prove that under this setting, there is a syntactic distance measure that can represent any PCFG.

Theorem 1 (Full context).

Let . For each PCFG in Chomsky normal form, there exists a syntactic distance measure that can 1-represent .

Proof.

For any sentence , let be its max likelihood parse tree. Since is in Chomsky normal form, is a binary tree. We will describe an assignment of such that their order matches the level at which the branches split in . Specifically, , let denote the lowest common ancestor of and in . Let denote the shortest distance between and the root of . Finally, let . As a result, induces . ∎

Remark.

Since any PCFG can be converted to Chomsky normal form (Hopcroft et al., 2006), Theorem 1 implies that given the whole sentence and the position index as the context, the syntactic distance has sufficient representational power to capture any PCFG. It does not state, however, that the whole sentence and the position are the minimal contextual information needed for representability nor does it address training (i.e. optimization) issues.

On the flipside, we show that restricting the context even mildly can considerably decrease the representational power. Namely, we show that if context is bounded even in a single direction (to the left or to the right), there are PCFGs on which any syntactic distance will perform poorly 444In Theorem 2 we prove the more typical case, i.e. unbounded left context and bounded right context. The other case, i.e. bounded left context and unbounded right context, can be proved symmetrically.. (Note in the implementation (Shen et al., 2018b) the context only considers a bounded window to the left.)

Theorem 2 (Limitation of left-to-right parsing via syntactic distance).

Let be the sentence start symbol. Let the context

, there exists a PCFG in Chomsky normal form, such that any syntactic distance measure cannot -represent .

Proof.

Let be a positive integer. Consider the PCFG in Definition 2.1.

For any , consider the string . Note that in the parse tree of , the rule is applied. Hence, and are the unique pair of adjacent non-terminals in whose lowest common ancestor is the closest to the root in the parse tree of . Then, in order for the syntactic distance metric to induce the correct parse tree for , must be the unique maximum in .

However, is restricted to be in the form

Note that , the first tokens of and are the same, which implies that the inferred syntactic distances

are the same for and at each position . Thus, it is impossible for to induce the correct parse tree for both and . Hence, is correct on at most one , which corresponds to probability at most . Therefore, cannot -represent . ∎

Remark.

In the counterexample, there are only possible parse structures for the prefix . Hence, the proved fact that the probability of being correct is at most means that under the restrictions of unbounded look-back and bounded look-ahead, the distance cannot do better than random guessing for this grammar.

Remark.

The above Theorem 2 formalizes the intuition discussed in (Htut et al., 2018) outlining an intrinsic limitation of only considering bounded context in one direction. Indeed, for the PCFG constructed in the proof, the failure is a function of the context, not because of the fact that we are using a distance-based parser.

Note that as a corollary of the above theorem, if there is no context () or the context is both bounded and unidirectional, i.e.

then there is a PCFG that cannot be -represented by any such .

6 Representational Power of the Ordered Neuron Architecture

In this section, we formalize the results characterizing the representational power of the ON-LSTM architecture. The master forget gates of the ON-LSTM, in which each , encode the hierarchical structure of a parse tree, and Shen et al. (2019) proposes to carry out unsupervised constituency parsing via a reduction from the gate vectors to syntactic distances by setting:

| (1) |

First we show that the gates in ON-LSTM in principle form a lossless representation of any parse tree.

Theorem 3 (Lossless representation of a parse tree).

For any sentence with parse tree in any PCFG in Chomsky normal form, there exists a dimensionality , a sequence of vectors in which each , such that the estimated syntactic distances via (1) induce the structure of .

Proof.

By Theorem 1, there is a syntactic distance measure that induces the structure of (such that ).

For each , set if is the -th smallest entry in , breaking ties arbitrarily. Then, each , and also induces the structure of .

Let . For each , let whose lower dimensions are 0 and higher dimensions are 1. Then,

Therefore, the calculated induces the structure of . ∎

Although Theorem 3 shows the ability of the master forget gates to perfectly represent any parse tree, a left-to-right parsing can be proved to be unable to return the correct parse with high probability. In the actual implementation in (Shen et al., 2019), the (real-valued) master forget gate vectors are produced by feeding the input sentence to a model trained with a language modeling objective. In other words, is calculated as a function of , rather than the entire sentence.

As such, this left-to-right parser is subject to similar limitations as in Theorem 2:

Theorem 4 (Limitation of syntactic distance estimation based on ON-LSTM).

For any , there exists a PCFG in Chomsky normal form, such that the syntactic distance measure calculated with (1), , cannot -represent .

7 Representational Power of Transition-Based Parsing

In this section, we analyze a transition-based parsing framework inspired by (Dyer et al., 2016; Chelba and Jelinek, 2000; Chelba, 1997).

Again, we proceed to say first that “full context” confers full representational power. Namely, using the terminology of Definition 4.6, we let the context at each position be the whole sentence and the position index . Note that any parse tree can be generated by a sequence of transitions defined in Definition 4.7. Indeed, Dyer et al. (2016) describes an algorithm to find such a sequence of transitions via a “depth-first, left-to-right traversal” of the tree.

Proceeding to limited context, in the setting of typical left-to-right parsing, the context consists of all current and past tokens and all previous parses . We’ll again prove even stronger negative results, where we allow an optional look-ahead to input tokens to the right.

Theorem 5 (Limitation of transition-based parsing without full context).

For any , there exists a PCFG in Chomsky normal form, such that for any learned transition-based parser (Definition 4.6) based on context

the sum of the probabilities of the sentences in for which the parser returns the maximum likelihood parse is less than .

Proof.

Let be a positive integer. Consider the PCFG in Definition 2.1.

Note that is applied to yield string . Then in the parse tree of , and are the unique pair of adjacent terminals in whose lowest common ancestor is the closest to the root. Thus, different requires a different sequence of transitions within the first input tokens, i.e. .

For each , before the last token is processed, based on the common prefix , it is equally likely that , w. prob. each.

Moreover, when processing , the bounded look-ahead window of size does not allow access to the final input token .

Thus, , it is impossible for the parser to return the correct parse tree for both and without ambiguity. Hence, the parse is correct on at most one , which corresponds to probability at most . ∎

8 Conclusion

In this work, we considered the representational power of two frameworks for constituency parsing prominent in the literature, based on learning a syntactic distance and learning a sequence of iterative transitions to build the parse tree — in the sandbox of PCFGs. In particular, we show that if the context for calculating distance/deciding on transitions is limited at least to one side (which is typically the case in practice for existing architectures), there are PCFGs for which no good distance metric/sequence of transitions can be chosen to construct the maximum likelihood parse.

This limitation was already suspected in (Htut et al., 2018) as a potential failure mode of leading neural approaches like (Shen et al., 2018b; 2019) and we show formally that this is the case. The PCFGs with this property track the intuition that bounded context methods will have issues when the parse at a certain position depends heavily on latter parts of the sentence.

The conclusions thus suggest re-focusing our attention on methods like (Kim et al., 2019a) which have enjoyed greater success on tasks like unsupervised constituency parsing, and do not fall in the paradigm analyzed in our paper. A question of definite further interest is how to augment models that have been successfully scaled up (e.g. BERT) in a principled manner with syntactic information, such that they can capture syntactic structure (like PCFGs). The other question of immediate importance is to understand the interaction between the syntactic and semantic modules in neural architectures — information is shared between such modules in various successful architectures, e.g. (Dyer et al., 2016; Shen et al., 2018b; 2019; Kim et al., 2019a), and the relative pros and cons of doing this are not well understood. Finally, our paper purely focuses on representational power, and does not consider algorithmic and statistical aspects of training. As any model architecture is associated with its distinct optimization and generalization considerations, and natural language data necessitates the modeling of the interaction between syntax and semantics, those aspects of considerations are well beyond the scope of our analysis in this paper using the controlled sandbox of PCFGs, and are interesting directions for future work.

References

- Bisk and Hockenmaier [2015] Yonatan Bisk and Julia Hockenmaier. Probing the linguistic strengths and limitations of unsupervised grammar induction. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 1395–1404, Beijing, China, July 2015. Association for Computational Linguistics. doi: 10.3115/v1/P15-1135. URL https://www.aclweb.org/anthology/P15-1135.

- Chelba [1997] Ciprian Chelba. A structured language model. In 35th Annual Meeting of the Association for Computational Linguistics and 8th Conference of the European Chapter of the Association for Computational Linguistics, pages 498–500, Madrid, Spain, July 1997. Association for Computational Linguistics. doi: 10.3115/976909.979681. URL https://www.aclweb.org/anthology/P97-1064.

- Chelba and Jelinek [2000] Ciprian Chelba and Frederick Jelinek. Structured language modeling. Computer Speech & Language, 14(4):283 – 332, 2000. ISSN 0885-2308. doi: https://doi.org/10.1006/csla.2000.0147. URL http://www.sciencedirect.com/science/article/pii/S0885230800901475.

- Chen et al. [2017] Qian Chen, Xiaodan Zhu, Zhen-Hua Ling, Si Wei, Hui Jiang, and Diana Inkpen. Enhanced LSTM for natural language inference. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1657–1668, Vancouver, Canada, July 2017. Association for Computational Linguistics. doi: 10.18653/v1/P17-1152. URL https://www.aclweb.org/anthology/P17-1152.

- Choe and Charniak [2016] Do Kook Choe and Eugene Charniak. Parsing as language modeling. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 2331–2336, Austin, Texas, November 2016. Association for Computational Linguistics. doi: 10.18653/v1/D16-1257. URL https://www.aclweb.org/anthology/D16-1257.

- Chomsky [1956] N. Chomsky. Three models for the description of language. IRE Transactions on Information Theory, 2(3):113–124, 1956. doi: 10.1109/TIT.1956.1056813.

- Chomsky [1959] Noam Chomsky. On certain formal properties of grammars. Information and Control, 2(2):137 – 167, 1959. ISSN 0019-9958. doi: https://doi.org/10.1016/S0019-9958(59)90362-6. URL http://www.sciencedirect.com/science/article/pii/S0019995859903626.

- Devlin et al. [2019] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota, June 2019. Association for Computational Linguistics. doi: 10.18653/v1/N19-1423. URL https://www.aclweb.org/anthology/N19-1423.

- Dyer et al. [2016] Chris Dyer, Adhiguna Kuncoro, Miguel Ballesteros, and Noah A. Smith. Recurrent neural network grammars. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 199–209, San Diego, California, June 2016. Association for Computational Linguistics. doi: 10.18653/v1/N16-1024. URL https://www.aclweb.org/anthology/N16-1024.

- Gers and Schmidhuber [2001] F. Gers and J. Schmidhuber. Lstm recurrent networks learn simple context-free and context-sensitive languages. IEEE transactions on neural networks, 12 6:1333–40, 2001.

- Goldberg [2019] Yoav Goldberg. Assessing bert’s syntactic abilities, 2019.

- He et al. [2020] Qi He, Han Wang, and Yue Zhang. Enhancing generalization in natural language inference by syntax. In Findings of the Association for Computational Linguistics: EMNLP 2020, pages 4973–4978, Online, November 2020. Association for Computational Linguistics. doi: 10.18653/v1/2020.findings-emnlp.447. URL https://www.aclweb.org/anthology/2020.findings-emnlp.447.

- Hewitt and Manning [2019] John Hewitt and Christopher D. Manning. A structural probe for finding syntax in word representations. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4129–4138, Minneapolis, Minnesota, June 2019. Association for Computational Linguistics. doi: 10.18653/v1/N19-1419. URL https://www.aclweb.org/anthology/N19-1419.

- Hewitt et al. [2020] John Hewitt, Michael Hahn, Surya Ganguli, Percy Liang, and Christopher D. Manning. RNNs can generate bounded hierarchical languages with optimal memory. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1978–2010, Online, November 2020. Association for Computational Linguistics. doi: 10.18653/v1/2020.emnlp-main.156. URL https://www.aclweb.org/anthology/2020.emnlp-main.156.

- Hopcroft et al. [2006] John E. Hopcroft, Rajeev Motwani, and Jeffrey D. Ullman. Introduction to Automata Theory, Languages, and Computation (3rd Edition). Addison-Wesley Longman Publishing Co., Inc., USA, 2006. ISBN 0321462254.

- Htut et al. [2018] Phu Mon Htut, Kyunghyun Cho, and Samuel Bowman. Grammar induction with neural language models: An unusual replication. In Proceedings of the 2018 EMNLP Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP, pages 371–373, Brussels, Belgium, November 2018. Association for Computational Linguistics. doi: 10.18653/v1/W18-5452. URL https://www.aclweb.org/anthology/W18-5452.

- Htut et al. [2019] Phu Mon Htut, Jason Phang, Shikha Bordia, and Samuel R. Bowman. Do attention heads in bert track syntactic dependencies? ArXiv, abs/1911.12246, 2019.

- Kim et al. [2019a] Yoon Kim, Chris Dyer, and Alexander Rush. Compound probabilistic context-free grammars for grammar induction. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 2369–2385, Florence, Italy, July 2019a. Association for Computational Linguistics. doi: 10.18653/v1/P19-1228. URL https://www.aclweb.org/anthology/P19-1228.

- Kim et al. [2019b] Yoon Kim, Alexander Rush, Lei Yu, Adhiguna Kuncoro, Chris Dyer, and Gábor Melis. Unsupervised recurrent neural network grammars. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 1105–1117, Minneapolis, Minnesota, June 2019b. Association for Computational Linguistics. doi: 10.18653/v1/N19-1114. URL https://www.aclweb.org/anthology/N19-1114.

- Kuncoro et al. [2018] Adhiguna Kuncoro, Chris Dyer, John Hale, Dani Yogatama, Stephen Clark, and Phil Blunsom. LSTMs can learn syntax-sensitive dependencies well, but modeling structure makes them better. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1426–1436, Melbourne, Australia, July 2018. Association for Computational Linguistics. doi: 10.18653/v1/P18-1132. URL https://www.aclweb.org/anthology/P18-1132.

- Linzen et al. [2016] Tal Linzen, Emmanuel Dupoux, and Yoav Goldberg. Assessing the ability of LSTMs to learn syntax-sensitive dependencies. Transactions of the Association for Computational Linguistics, 4:521–535, 2016. doi: 10.1162/tacl˙a˙00115. URL https://www.aclweb.org/anthology/Q16-1037.

- Merrill [2019] William Merrill. Sequential neural networks as automata. In Proceedings of the Workshop on Deep Learning and Formal Languages: Building Bridges, pages 1–13, Florence, August 2019. Association for Computational Linguistics. doi: 10.18653/v1/W19-3901. URL https://www.aclweb.org/anthology/W19-3901.

- Pang et al. [2019] Deric Pang, Lucy H. Lin, and Noah A. Smith. Improving natural language inference with a pretrained parser, 2019.

- Reif et al. [2019] Emily Reif, Ann Yuan, Martin Wattenberg, Fernanda B Viegas, Andy Coenen, Adam Pearce, and Been Kim. Visualizing and measuring the geometry of bert. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 32, pages 8594–8603. Curran Associates, Inc., 2019. URL https://proceedings.neurips.cc/paper/2019/file/159c1ffe5b61b41b3c4d8f4c2150f6c4-Paper.pdf.

- Sagae and Lavie [2005] Kenji Sagae and Alon Lavie. A classifier-based parser with linear run-time complexity. In Proceedings of the Ninth International Workshop on Parsing Technology, pages 125–132, Vancouver, British Columbia, October 2005. Association for Computational Linguistics. URL https://www.aclweb.org/anthology/W05-1513.

- Shen et al. [2018a] Yikang Shen, Zhouhan Lin, Athul Paul Jacob, Alessandro Sordoni, Aaron Courville, and Yoshua Bengio. Straight to the tree: Constituency parsing with neural syntactic distance. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1171–1180, Melbourne, Australia, July 2018a. Association for Computational Linguistics. doi: 10.18653/v1/P18-1108. URL https://www.aclweb.org/anthology/P18-1108.

- Shen et al. [2018b] Yikang Shen, Zhouhan Lin, Chin wei Huang, and Aaron Courville. Neural language modeling by jointly learning syntax and lexicon. In International Conference on Learning Representations, 2018b. URL https://openreview.net/forum?id=rkgOLb-0W.

- Shen et al. [2019] Yikang Shen, Shawn Tan, Alessandro Sordoni, and Aaron Courville. Ordered neurons: Integrating tree structures into recurrent neural networks. In International Conference on Learning Representations, 2019. URL https://openreview.net/forum?id=B1l6qiR5F7.

- Siegelmann and Sontag [1992] Hava T. Siegelmann and Eduardo D. Sontag. On the computational power of neural nets. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, COLT ’92, page 440–449, New York, NY, USA, 1992. Association for Computing Machinery. ISBN 089791497X. doi: 10.1145/130385.130432. URL https://doi.org/10.1145/130385.130432.

- Suzgun et al. [2019] Mirac Suzgun, Yonatan Belinkov, Stuart Shieber, and Sebastian Gehrmann. LSTM networks can perform dynamic counting. In Proceedings of the Workshop on Deep Learning and Formal Languages: Building Bridges, pages 44–54, Florence, August 2019. Association for Computational Linguistics. doi: 10.18653/v1/W19-3905. URL https://www.aclweb.org/anthology/W19-3905.

- Vinyals et al. [2015] Oriol Vinyals, Ł ukasz Kaiser, Terry Koo, Slav Petrov, Ilya Sutskever, and Geoffrey Hinton. Grammar as a foreign language. In C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 28, pages 2773–2781. Curran Associates, Inc., 2015. URL https://proceedings.neurips.cc/paper/2015/file/277281aada22045c03945dcb2ca6f2ec-Paper.pdf.

- Weiss et al. [2018] Gail Weiss, Yoav Goldberg, and Eran Yahav. On the practical computational power of finite precision RNNs for language recognition. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 740–745, Melbourne, Australia, July 2018. Association for Computational Linguistics. doi: 10.18653/v1/P18-2117. URL https://www.aclweb.org/anthology/P18-2117.

- Williams et al. [2018] Adina Williams, Andrew Drozdov, and Samuel R. Bowman. Do latent tree learning models identify meaningful structure in sentences? Transactions of the Association for Computational Linguistics, 6:253–267, 2018. doi: 10.1162/tacl˙a˙00019. URL https://www.aclweb.org/anthology/Q18-1019.

Appendix A Tree Induction Algorithm Based on Syntactic Distance

The following algorithm is proposed in [Shen et al., 2018b] to create a parse tree based on a given syntactic distance.

Appendix B ON-LSTM Intuition

See Figure 3 below, which is excerpted from [Shen et al., 2019] with minor adaptation to the notation.

Appendix C Examples of parsing transitions

Table 1 below shows an example of parsing the sentence “I drink coffee with milk” using the set of transitions given by Definition 4.7, which employs the parsing framework of [Dyer et al., 2016]. The parse tree of the sentence is given by

| \Tree[.S [.NP [.N I ] ] [.VP [.V drink ] [.NP [.NP [.N coffee ] ] [.PP [.P with ] [.N milk ] ] ] ] ] |

| Stack | Buffer | Action |

|---|---|---|

| I drink coffee with milk | NT(S) |

|

| (S | I drink coffee with milk | NT(NP) |

| (S (NP | I drink coffee with milk | NT(N) |

| (S (NP (N | I drink coffee with milk | SHIFT |

| (S (NP (N I | drink coffee with milk | REDUCE |

| (S (NP (N I)) | drink coffee with milk | NT(VP) |

| (S (NP (N I)) (VP | drink coffee with milk | NT(V) |

| (S (NP (N I)) (VP (V | drink coffee with milk | SHIFT |

| (S (NP (N I)) (VP (V drink | coffee with milk | REDUCE |

| (S (NP (N I)) (VP (V drink) | coffee with milk | NT(NP) |

| (S (NP (N I)) (VP (V drink) | ||

| (NP | coffee with milk | NT(NP) |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP | coffee with milk | NT(N) |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N | coffee with milk | SHIFT |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee | with milk | REDUCE |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) | with milk | NT(PP) |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) (PP | with milk | NT(P) |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) (PP (P | with milk | SHIFT |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) (PP (P with | milk | REDUCE |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) (PP (P with) | milk | NT(N) |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) (PP (P with) (N | milk | SHIFT |

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) (PP (P with) (N milk | REDUCE |

|

| (S (NP (N I)) (VP (V drink) | ||

| (NP (NP (N coffee)) (PP (P with) (N milk))))) |