The KiTS21 Challenge: Automatic segmentation of kidneys, renal tumors, and renal cysts in corticomedullary-phase CT

Abstract

This paper presents the challenge report for the 2021 Kidney and Kidney Tumor Segmentation Challenge (KiTS21) held in conjunction with the 2021 international conference on Medical Image Computing and Computer Assisted Interventions (MICCAI). KiTS21 is a sequel to its first edition in 2019, and it features a variety of innovations in how the challenge was designed, in addition to a larger dataset. A novel annotation method was used to collect three separate annotations for each region of interest, and these annotations were performed in a fully transparent setting using a web-based annotation tool. Further, the KiTS21 test set was collected from an outside institution, challenging participants to develop methods that generalize well to new populations. Nonetheless, the top-performing teams achieved a significant improvement over the state of the art set in 2019, and this performance is shown to inch ever closer to human-level performance. An in-depth meta-analysis is presented describing which methods were used and how they faired on the leaderboard, as well as the characteristics of which cases generally saw good performance, and which did not. Overall KiTS21 facilitated a significant advancement in the state of the art in kidney tumor segmentation, and provides useful insights that are applicable to the field of semantic segmentation as a whole.

1 Introduction

1.1 Kidney Tumor Background

With the utilization of cross-sectional imaging now as high as it’s ever been, kidney tumors are now most often discovered incidentally, rather than on the basis of symptoms [37, 8]. There is growing evidence that large numbers of renal tumors are either benign or indolent, especially when they are small and incidentally discovered, and they might therefore be best managed with surveillance rather than intervention [42, 50, 25]. However, metastatic renal cancer remains highly lethal, so the rare instances in which a small and/or incidentally-discovered renal tumor progresses to metastatic disease are unacceptable, and their risk must be weighed against overtreatment and its associated cost and morbidity [46]. Some argue that renal mass biopsy has the potential to resolve this treatment decision dilemma, but others argue that its relative lack of sensitivity hinders its ability to convince physicians and patients that their disease won’t progress [48], and ultimately, its utilization remains relatively low [32]. There remains a significant unmet need for tools to reliably differentiate between benign/indolent renal tumors and those with metastatic potential.

1.2 Kidney Tumor Radiomics

Increasingly, the so-called “radiome” is revealing itself as a powerful quantitative predictor of clinically-meaningful outcomes in cancer [15]. In renal tumors, radiomic features have shown exciting potential for predicting histologic subtype [4], nuclear grade [40], somatic tumor mutations [27], and even cancer-specific and overall survival [17]. In surgical oncology, a number of “nephrometry” scores such as R.E.N.A.L. [30] and PADUA [13] have been developed which synthesize various manually-extracted radiomic features to produce scores which have been shown to robustly correlate with perioperative and oncologic outcomes. Of these, the R.E.N.A.L. score has recently been approximated in terms of segmentation-based radiomic features, and was shown to be noninferior to human-derived scores at predicting clinical outcomes [22], and the others are sure to follow in short order.

At the heart of most radiomics approaches is the need for the spatial delineation of which structures occupy what space in a given image. As one might imagine, manually delineating each region of interest is a very time consuming activity, and is subject to significant interobserver variability [20] to which, radiomics algorithms are sometimes quite sensitive [34]. There is thus significant interest in developing highly-accurate automatic methods for semantic segmentation.

Modern deep learning approaches have achieved impressive performance on a wide variety of semantic segmentation tasks [18], but their need for large and high-quality training datasets has hindered their development in problems such as kidney cancer for which little annotated data is publicly available. Further, the development of deep learning algorithms requires a huge number of design decisions, not only about their structure but also about procedures during training and validation. There remains little consensus in the computer vision community about which algorithms are truly optimal for a given semantic segmentation task.

1.3 The KiTS21 Challenge

Machine learning competitions, or “challenges” as they are often called, have become a mainstay in the medical image analysis research community [45]. In a challenge, a central organizing team takes responsibility for defining a clinically important problem and collecting a large labeled dataset. They then split this dataset into a public “training set” which is disseminated to the larger research community, and a “test set” which is kept secret. Teams are invited to train their favorite machine learning models on the training set, and the organizing team is responsible for measuring and ranking how well these models perform on the secret test set.

Challenges serve as an excellent model for interdisciplinary collaboration: The organizing team, which is ostensibly most interested in the domain-specific nuances of the clinical problem, benefits from top research groups from around the world turning their attention to their problem and proposing solutions. And the participants, who are ostensibly most interested in machine learning methodology, benefit from a new and high quality dataset carefully tailored to a clinically meaningful problem by domain experts. In a way, these challenges play a role analogous to that played by “model organisms” and “cell lines” in the biological sciences – that is, they allow researchers to make the sort of head to head comparisons that would otherwise be impossible if everyone was working only on their own private datasets – or their own private breed of organism, or their own private line of immortalized cells.

One of the first machine learning competitions to use this format was the Critical Assessment of protein Structure Prediction or “CASP” competition which has been followed by a sequel CASP event every even-numbered year since 1994 [39]. CASP is in its 15th iteration at the time of writing, and it has served as an invaluable resource to the protein structure prediction community over the last 30 years as it has progressed through several generations of computational biochemistry [29], with the latest being dominated by deep learning methods such as DeepMind’s ”AlphaFold” [26]. One might wonder whether DeepMind would have shown such strong interest in this important problem if it had not been so carefully and painstakingly curated into a challenge format as it was by the organizers of CASP. It is the authors’ strong belief that the same applies to most challenges: they attract interest and attention to their chosen problem by individuals and research groups that otherwise might never have spent time on them.

Many excellent challenges have been organized in the medical image analysis community over the last two decades, and the semantic segmentation of cross-sectional images is one of the most popular subjects [36]. These segmentation challenges have asked participants to segment things such as specific anatomical structures like bones and organs [33, 19, 28, 47, 35], organs at risk in radiation therapy planning [31], and, like KiTS, lesions and the organs they affect [1, 5, 2].

Of particular interest is the recent QUBIQ challenge 111https://qubiq21.grand-challenge.org/ which provided participants with multiple independent annotations per region of interest. Here, participants were asked to train a model not only to segment the region accurately, but also to estimate the pixelwise uncertainty in their segmentations in the hope that model uncertainty would be highest in the areas where different annotators disagreed.

The challenge described in this report, “The 2021 Kidney Tumor Segmentation Challenge” or “KiTS21” is the second challenge in the “KiTS” series after its 2019 iteration, “KiTS19” [21]. KiTS19 represented the first large-scale publicly available dataset of kidney tumor Computed Tomography (CT) images with associated semantic segmentations. It attracted submissions from more than 100 teams from around the world, and saw the winning team surpass the previous state of the art in kidney tumor segmentation, while nearly matching human-level performance in segmenting the affected kidneys. KiTS21 builds upon KiTS19, but differs from it in several important ways, enumerated below:

-

1.

Data annotation was performed in public view

-

2.

Like QUBIQ, multiple annotations were released per ROI

-

3.

Renal cysts were segmented as an independent class

-

4.

The test set images came from a separate institution in a different geographical area

-

5.

Teams were required to submit a paper summarizing their method for review and approval before participating

The following section will explain each of these new design features in detail, while also providing an in-depth description of the cohort. The remainder of this report proceeds as follows: Sec 2 describes the KiTS21 dataset and annotation process. In section 3, the results of the KiTS21 challenge are discussed, including a statistical analysis of the leaderboard and the methods used by the 3 highest-performing teams. Section 4 concludes with the a discussion of the lessons learned from organizing this challenge and possible future directions for KiTS.

2 Materials and Methods

2.1 The KiTS21 Dataset

The dataset used for KiTS21 consisted of two distinct cohorts for training and test sets collected at separate time points for different purposes. Ultimately, they were both annotated with segmentation labels in a single unified effort, but the processes to identify the patients and collect their images are described in separate sections below.

2.1.1 Training Set Collection

After receiving approval from the University of Minnesota institutional review board (study 1611M00821), a query was designed to identify patients from the institution’s electronic medical record system who met the following criteria:

-

1.

Underwent partial or radical nephrectomy between January 1, 2011 and June 15, 2019

-

2.

Were diagnosed with a renal mass prior to the nephrectomy

-

3.

Underwent a CT scan within the 80 days prior to their nephrectomy

This returned a collection of 962 patients. Manual chart review of each of these 962 patients was used to identify those who specifically underwent nephrectomy for fear of renal malignancy. The resulting 799 patients were reviewed in random order to identify those who had a CT scan available which showed the entirety of all kidneys and kidney tumors, and were in the corticomedullary contrast phase. After reviewing 544 cases in this way, 300 were identified for use as the training set for this study. It is important to note that these 300 cases were the same 300 that were split between the training (210) and test (90) sets for the KiTS19 challenge, [21].

2.1.2 Test Set Collection

The test set used for the KiTS21 challenge consisted of 100 cases of patients who had been treated with partial or radical nephrectomy for fear of renal malignancy at the Cleveland Clinic. Preoperative CT images were obtained from all patients for whom they were available, and these patients were reviewed in random order until 100 patients with a scan in the corticomedullary phase were identified for use as the test set of the KiTS21 challenge.

2.1.3 Overall Dataset Characteristics

The characteristics of the patients comprising the training and test sets can be found in table 1. Of note is a stark gender imbalance in which men outnumber women by roughly a 2:1 ratio. This imbalance is consistent between the training and test sets, and is, in fact, a well established phenomenon in the epidemiology of renal cell carcinoma [7]. The median age of patients in the training set was 60 years, and the median age of patients in the test set was 63 years. The median BMI of patients in the training set was 29.82 kg/, and the median BMI of patients in the test set was 29.7 kg/. The median tumor diameter in the training set was 4.2 cm, and the median tumor diameter in the test set was 3.8 cm.

While the patients used for the training set were all treated at a single academic health center, the preoperative scans used in this dataset were often captured at a variety of community hospitals and clinics prior to referral. This endows the dataset with significant heterogeneity in terms of which scanners were used and with what protocol. A map depicting the geographic locations of all of the scanning institutions represented in this dataset is shown in figure 2.

| Attribute | Training (N=300) | Testing (N=100) |

| Age (years) | 60 (51, 68) | 63 (55, 60) |

| BMI (kg/) | 29.82 (26.16, 35.28) | 29.7 (25.7, 33.5) |

| Tumor Diameter* (cm) | 4.2 (2.6, 6.1) | 3.8 (2.9, 5.4) |

| Gender | ||

| Male | 180 (60%) | 63 (63%) |

| Female | 120 (40%) | 37 (37%) |

| pT Stage | ||

| pT1a | 146 (48.7%) | 55 (55%) |

| pT1b | 59 (19.7%) | 16 (16%) |

| pT2a | 15 (5%) | 3 (3%) |

| pT2b | 5 (1.7%) | 0 (0%) |

| pT3a | 70 (23.3%) | 26 (26%) |

| pT4 | 5 (1.7%) | 0 (0%) |

| Subtype | ||

| Clear Cell RCC | 203 (67.7%) | 70 (70%) |

| Papillary RCC | 28 (9.3%) | 11 (11%) |

| Chromophobe RCC | 27 (9%) | 8 (8%) |

| Oncocytoma | 16 (5.3%) | 4 (4%) |

| Other | 26 (8.7%) | 7 (7%) |

| Grade | ||

| 1 | 33 (11%) | 4 (4%) |

| 2 | 119 (39.7%) | 37 (37%) |

| 3 | 66 (22%) | 40 (40%) |

| 4 | 26 (8.7%) | 7 (7%) |

| N/A | 56 (18.7%) | 12 (12%) |

2.2 Data Annotation Process

Based on the prior experience and feedback collected during and after the KiTS19 challenge, KiTS21 features a unique purpose-built annotation process.

2.2.1 Public Annotation Platform

There has recently been some discussion about the need for greater clarity about how annotations are produced for medical image analysis research [43]. All too often, papers simply report which structures were segmented along with the credentials of the researcher or group of researchers who supervised and or carried out the annotations. This approach fails to capture important nuances about the annotation process such as how the regions of interest were specifically defined, what tool was used to produce the annotations themselves, and specific instructions that were given to the annotators, if any, regarding uncertainty and quality control. This information is crucial for making informed and fair comparisons regarding the performance of models on a given task.

In an attempt to provide as much clarity as possible regarding the annotation process, the training set was annotated in such a way that any member of the public could view the annotation process as it took place. A website was developed222https://kits21.kits-challenge.org which offered a dashboard showing every training case and its status in the annotation process. For an example of this, see Fig. 3. Each region of interest is denoted with a set of clickable icons that the user can use to view the annotations it represents in the same tool that the annotators used to produce them333https://github.com/SenteraLLC/ulabel. Further still, the exact set of instructions provided to the group of annotators is documented in a webpage that is available for anyone to view444https://kits21.kits-challenge.org/instructions.

2.2.2 Multiple Annotations per Region of Interest

No semantic segmentation dataset is exactly correct, nor will one ever be. For much the same reason that surgeons will excise some ‘margin’ of healthy tissue with a tumor, a radiologist cannot always identify the exact extent of a tumor’s border with 100% certainty. This issue is further complicated by artifacts such as partial volume averaging in cross-sectional imaging.

On top of genuine uncertainty, there is a second factor which contributes to error in semantic segmentation datasets: mistakes. This is akin to ‘coloring outside the lines’ in a coloring book. When one is asked to precisely delineate hundreds of structures with dozens of axial frames to delineate per structure, they can quickly grow tired and the extent to which their delineations match their intentions will degrade.

The KiTS21 challenge aimed to explicitly address this latter issue of delineation mistakes. To do this, the annotation process consisted of three distinct phases:

-

1.

Localization: A medical trainee places a 3d bounding box around the ROI

-

2.

Guidance: A medical trainee places a small number of t-shaped pins along the intended delineation path surrounding the ROI in some sample of axial slices (see Fig. 5)

-

3.

Delineation: A layperson (e.g., crowd worker) uses the localization and guidance to produce a delineation that matches the trainee’s intentions

In the above paradigm, localization and guidance were performed once for each region of interest, reviewed and refined by an expert if needed, and then three separate delineations were collected from laypeople. This allowed for quantifying and controlling for mistakes by collecting three independent delineations which were all guided by the same annotation intent.

2.2.3 Changes To Intended Segmentation Classes and Dataset Size

One might notice the presence of three additional ROI types referenced in Fig. 3: ‘Ureter’, ‘Artery’, and ‘Vein’. The original intention when planning this challenge was to segment these structures in the same way as the ‘Kidney’, ‘Tumor’, and ‘Cyst’ structures that were ultimately included in the dataset. Regrettably, during the annotation process it was discovered that it would not be feasible to ensure that the segmentation labels for all six region types would be of sufficiently quality before the training set release deadline, especially with the inordinate amount of time that these complex structures took to correct and refine. The annotation team thus decided to prioritize the quality of the kidney, tumor, and cyst regions, while leaving the ureter, artery, and vein regions for future work.

2.3 Challenge Design Decisions

2.3.1 Use of a Separate Institution for Test Set

One commen criticism of single-institutional cohorts in medical image analysis is the possibility that any model trained only on that cohort might show inflated performance when validated on that cohort, as compared to its true performance on some random collection of images sampled from the true distribution of images that it is meant to be applied to. It is therefore generally recommended that data from a separate institution should be used for external validation [6]. As described in section 2.1, the KiTS21 training set is unique in that while all nephrectomy procedures took place at a single institution, patients most commonly underwent imaging at a different institution prior to being referred to the Fairview University of Minnesota Medical Center for treatment. One might therefore argue that the KiTS19 dataset already represents sufficient diversity in imaging institutions to prevent overfitting to site-specific characteristics. Nonetheless, it is much more convincing to use a properly separate cohort for the test set, and for that reason, the KiTS21 test set was built on a cohort of patients treated at a separate institution: the Cleveland Clinic.

2.3.2 Peer Review Requirement

One of the most important contributions a challenge can make to the research community is to elucidate which approaches work best for a particular problem. This depends on challenge participants taking the time to document their approach in a detailed publication. Unfortunately, the reports produced to accompany challenge submissions are often woefully lacking in detail and clarity, and this severely hinders a challenge’s impact [24].

In an attempt to prevent this, KiTS21 instituted a policy that short papers accompanying submissions to the challenge would undergo a peer review process in which they would be reviewed for clarity and completeness. After the challenge, these papers were then published as MICCAI satellite event proceedings, similar to a typical workshop. A template paper555https://www.overleaf.com/read/nfbqmtkcyzdp was provided to teams to help guide them in what information was expected to be provided, and what a typical structure might look like. Teams were required to submit this paper at least a week before test set submissions opened, and their submissions would not be considered until the paper had been approved. Ultimately 27 out of 28 papers submitted were approved, but most had to undergo a round of revisions with repeat review.

2.3.3 Metrics and Ranking

In KiTS19, a simple average Sørensen-Dice metric was used to rank teams. Sørensen-Dice is an attractive option because it is very widely-used and well-understood by the community. However, recent research has revealed some of its shortcomings [44], such as its agnosticism to how many objects were ‘detected’ when multiple objects exist within the same image – resulting in a case where smaller objects are given much more weight, when in reality, a smaller tumor on the contralateral kidney, for example, might be even more important to detect. To address this, the Sørensen-Dice metric was supplemented with the Surface Dice metric, as described in [41].

Another factor taken under consideration for KiTS21 was the natural hierarchical relationship between the target segmentation classes. Masses (i.e., tumors and cysts) are naturally part of the overall kidney region, and tumors are naturally a subset of a particular patient’s collection of masses. Further, since the most difficult tasks for models to learn in this problem are (1) differentiating between tumors and cysts, and (2) determining the boundary between masses and healthy kidney, the problem was framed in terms of what we’re calling ‘Hierarchical Evaluation Classes’ (HECs) where the first class is the union of all regions, the second class is the union of the tumor and cyst regions, and the third class is the tumor alone. This prevents penalizing a model twice for mislabeling a tumor for a cyst, or part of a mass as healthy kidney, etc.

Since three independent annotations per region of interest were collected, each case consisted of possible composite segmentation masks, where is the number of regions of interest for that case. ranged from 3, in the simplest case (two kidneys and a tumor), to well over 10 in some cases with several cysts and tumors. At test time, a random sample of these composite segmentations for each case was used for evaluation.

Ultimately, both the Sørensen-Dice and the Surface Dice were computed for each HEC of each randomly sampled composite segmentation in the test set. Average total scores were computed for each metric, and then a rank-then-aggregate approach was used to determine the final leaderboard rankings. In the case of a tie, the average Sørensen-Dice score on the tumor region was used as a tiebreaker.

2.3.4 Incentive and Prize

Every team which had their manuscript approved for publication in the KiTS21 proceedings and made a valid submission to the challenge was invited to present their work in a short format at the KiTS21 session of the 2021 MICCAI conference. Teams that placed in the top 5 were given the option to give a longer presentation, and were also invited to participate on the challenge report as coauthors. The first place team was also awarded a cash prize of $5,000 USD.

2.3.5 Changes to Intended Submission Procedure

The testing phase of machine learning competitions generally proceeds in one of two ways. In the first way, teams are given access to the images (but not labels) of the test set for a limited period of time, during which they download the data, run inference on it with the model that they have developed, and send their predictions back to the organizers for evaluation. This was the method used by KiTS19. The second approach is to ask teams to package their model in such a way that it can be sent to the organizers and run on the test set on a private server, thereby preventing the participating team from ever having direct access to the test set images.

The second approach is generally thought to be preferable, since it eliminates all possibility that a team might ‘cheat’ by manually intervening in the predictions made by their model to improve them unfairly. The downside to this approach, however, is that teams are limited to the computational resources made available to them by the organizers, which depending on the level of funding, might be quite limited. The sorts of large ensembles of models that often win machine learning competitions require significant time and resources to run, and might not be feasible in a challenge using the latter approach. Further still, the latter approach adds significant complexity to the tasks of both the organizers and the participants, with both parties having to build and maintain systems for these inference tasks. Containerization solutions such as Docker have made tasks like this easier, but they come with a learning curve, and not everyone in the research community has extensive experience with them.

KiTS21 originally planned to ask teams to prepare Docker containers in which to submit their models for fully private evaluation. Regrettably, soon into the submission period it became clear that this would not be practical with the resources available. More than half of the teams who submitted their containers exhausted either the time or memory constraints imposed by the cloud-based submission system that had graciously been made available by the https://grand-challenge.org platform. Ultimately the responsibility for this unfortunate debacle lies with the organizers for failing to clearly communicate the resource limitations to participants. Since teams had already invested considerable effort in solutions that exceeded the resource limitations, it was decided the most fair thing to do was to pivot to the former approach in which teams were provided with a limited 48 hour window during which to download the test imaging, run inference on their own computing hardware, and return their predictions to the organizers.

3 Results

3.1 Performance and Ranking

Overall, 29 teams submitted papers to the KiTS21 challenge proceedings, and in-so-doing, registered their intention to submit predictions. One of these teams was not able to be reached after submitting their paper, and so was excluded. Another signed up to receive a copy of the test set images, but did not return predictions, and so was also excluded. A further team withdrew prior to receiving the test set images. This left 26 teams who submitted their predictions to the challenge. Once the results were announced, a further one team asked to have their paper retracted and their results removed from the leaderboard. All told, 25 teams were included in the final leaderboard with corresponding manuscripts. The top-5 teams were invited to give long-form oral presentations at the MICCAI KiTS21 workshop. The remaining 20 teams were given the option to give a shorter talk, and 9 of them accepted.

Shown in figure 6 is a series of box plots for each team’s tumor segmentation performance on the 100 test set cases. For reference, the inter-rater agreement in tumor segmentation for this task was previously shown to be 0.88 [21], whereas the top-5 teams achieved values of 0.86, 0.83, 0.83, 0.82, and 0.81 respectively. The top-9 teams all appear to have very similar performance profiles with a tight cluster around 0.8 and then a low-density uniform distribution of scores on one or two dozen cases. Interestingly, the top 5 teams had no ”complete misses” among them, with a nonzero dice score on all 100 cases. This stands in contrast to the KiTS19 leaderboard in which every team missed at least one tumor completely.

The variety in predictions for a single case can be qualitatively examined by plotting the sum of those predictions as a heatmap. This is what is shown in figure 7. Clearly, the vast majority of teams concentrated their predictions on the correct region of the kidney. However, there is significant variation in the exact delineation of the boundary between the lesion and the kidney. This is consistent with expert opinion that this tumor-kidney delineation is much more difficult than simply detecting the tumor itself. That said, a precise and accurate boundary delineation is very important for downstream applications of these segmentations, such as for surgical planning, where a misjudgement of the boundary could lead to the unnecessary removal of too much healthy parenchyma, leading to poor renal functional outcomes, and the removal of too little tumor could lead to positive surgical margins and a greater risk for avoidable recurrence at the primary site.

The final leaderboard ranking was determined with a rank-then-aggregate procedure using the respective means across HECs of the two chosen varieties of dice scores. A static final ranking is, of course, necessary in order to award prizes and to determine which teams are invited to present at the MICCAI KiTS21 workshop. However, it is important to note that the final ranking does not necessarily represent an unimpeachable truth about which teams are better than others. The test set has a finite size, and so inferential statistics must be used to make claims about differences in performance. The final results of this pairwise analysis, along with descriptive plots supporting these conclusions are shown in figure 8. All analyses were performed with and corrections for multiple hypothesis testing were made using the Holm-Bonferroni procedure [23].

As shown, the first-place team was not statistically superior to any of the top-5 teams at a family-wise error rate of , but it was suprior to nearly every team thereafter – with the interesting exception of the 9th place team. This lack of statistical significance is often used to criticise challenges as being “unfair” or “unreliable”. In the context of the nominal cash prize awarded to the “winning” team, perhaps this is true. However, it can be argued that the true value in machine learning competitions is not in the pairwise testing between individual methods, but rather in the population-level meta-analyses that they enable regarding the design decisions that were made by each team. Challenges, therefore, might not be the best way to determine which method is “best”, but they are an excellent way to determine which methods are currently “better” than others. It is therefore of great importance that the submissions be accompanied by detailed manuscripts describing which methods were used. KiTS21 not only requested, this, but enforced it using a peer review process. After the challenge, discrete data about each method were collected by a manual review of each paper, and a brief discussion of this data is provided in the following section.

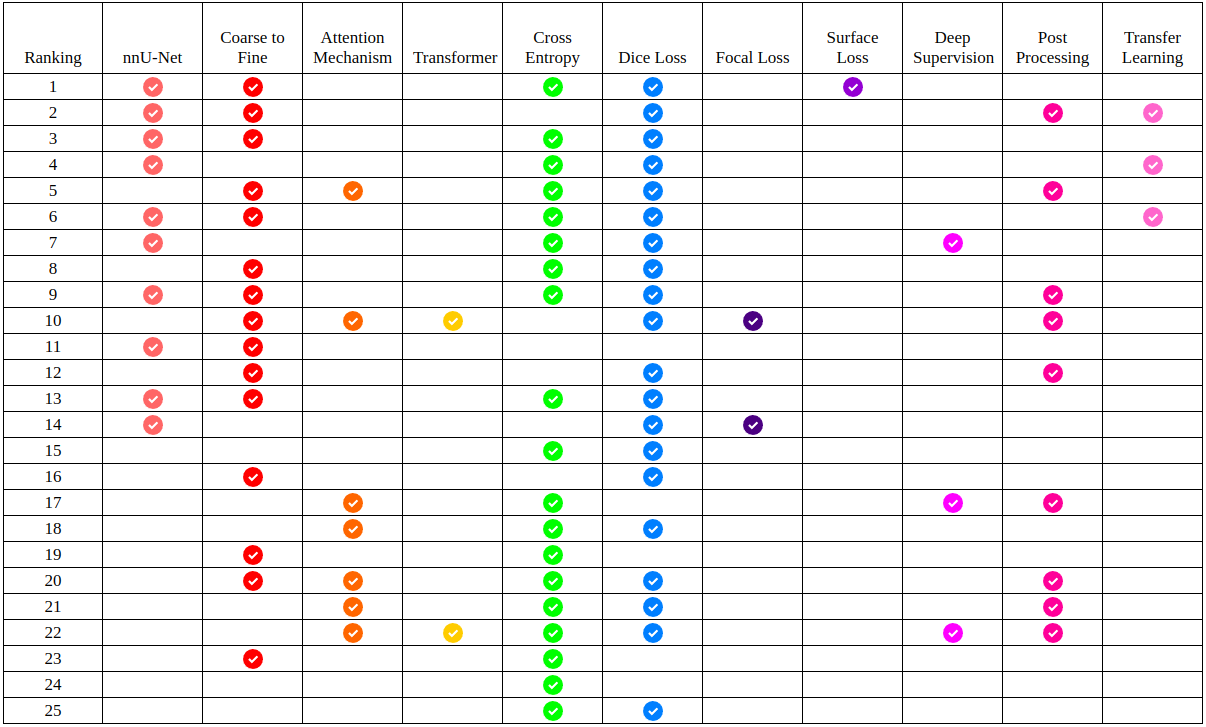

3.2 Methods Used

In the course of manually reviewing each team’s manuscript, 11 specific binary data points were extracted from each paper, corresponding to 11 commonly-used methods for this problem. Which teams used which of these methods is presented in tabular form in figure 9. As can be seen, the nnU-Net approach dominates the top half of the leaderboard, with ”coarse-to-fine” frameworks and transfer learning both also being overrepresented among high-performing teams. The most popular loss function was the sum of cross entropy and dice loss, but notably, it was an nnU-Net trained with a sum of cross entropy and surface loss that ultimately won the competition. Many of the teams who chose not to use nnU-Net instead opted for architectures which incorporated some form of attention mechanism. Among these, two teams used a visual transformer network, but in general, these attention mechanisms underperformed in comparison to nnU-Net.

3.3 Hidden Strata Analysis

It’s important to understand how the performance of these models varies on subpopulations within the greater population of patients they might be applied to. Existing health care disparities are well-documented [3, 10, 11], and there is a significant concern that the proliferation of predictive models in medicine will serve to exacerbate these disparities [9].

One aspect of machine learning problems that heightens the risk of disparate performance on different populations is underrepresentation in the training set. In the case of KiTS21, the training set was drawn from patients treated at the University of Minnesota, which is a considerably more caucasian population than most other parts of the United States. Further still, given that the subject matter is kidney cancer, which has an intrinsic higher prevalence in males, females also make up a relative minority of the dataset (see table 1). The following sections present an exploration of this issue using both hypothesis-driven and unsupervised methodologies.

3.3.1 Hypothesis-Driven Analysis

Due to substantial underrepresentation of non-white and female patients in the KiTS21 training set, a natural hypothesis is that segmentation performance could vary on the basis of race and/or sex. A multivariate linear regression analysis was performed using both race and gender as predictors with two additional covariates in order to determine whether these variables independently associate with the mean model performance of the top-5 teams. The results, shown in table 2 reveal that non-white patients do in fact see signficantly worse performance compared to white patients. Surprisingly, however, women actually see significantly better performance than men. This speaks to the inherent unpredictability of hidden strata analysis, and how training set characteristics alone are not sufficient to predict how a model will perform on a given subpopulation.

| Variable | Coefficient | P-value |

| Tumor Size (cm)* | ||

| Clear Cell Subtype | ||

| Female Gender* | ||

| Non-Caucasian Race* | ||

| Intercept* |

Interestingly, for the two teams who submitted transformer networks, the results look quite different (table 3). In fact, both gender and race fall out of significance, whereas tumor size appears to play a much larger role. This could suggest that transformer networks are more robust to hidden strata than the nnU-Net dominated top-5 submissions. However, it should be noted that the top-5 teams still outperform the transformer networks even on those subpopulations where it performs worst.

| Variable | Coefficient | P-value |

| Tumor Size (cm)* | ||

| Clear Cell Subtype | ||

| Female Gender | ||

| Non-Caucasian Race | ||

| Intercept* |

3.3.2 Unsupervised Analysis

Deep neural networks are very effective at extracting high-level semantic information from high-dimensional data such as images. Researchers often exploit this property in conjunction with a variety of nonparametric dimensional reduction techniques [49, 38] in order to visualize the clusters of input samples which appear to be represented by the network in similar ways. This has proven to be a useful tool for discovering structure in high-dimensional datasets. By a similar argument, the performance of a variety of deep neural networks on a given dataset can also be conceived as a high-dimensional representation of high-level semantic information about each instance. The same techniques can therefore be applied to visualize the clusters of patients which appear to have similar signatures in terms of semantic segmentation performance.

Figure 10 shows the results of hierarchical clustering performed on the set of test set cases using the performance metrics from each team as a feature vector. This reveals certain interesting clusters of cases, such as that on the far right in which virtually every team performed poorly. This stands in contrast to that cluster near the middle on which nearly every team performed well. Perhaps the most distinctive is the cluster of three cases on the far left on which nearly every teams performed poorly, except for a select few teams who performed well. Interestingly, the teams that performed well on these cases were not necessarily the teams who ranked near the top of the leaderboard.

3.4 Methods Used by Top 3 Teams

The three subsections that follow are brief overviews of the methods used by the three highest-performing teams who submitted to the challenge.

3.4.1 First Place: A Coarse-to-Fine Framework for the 2021 Kidney and Kidney Tumor Segmentation Challenge

This submission [52] was made by Zhongchen Zhao, Huai Chen, and Lisheng Wang from the Shanghai Jiao Tong University, China.

Data use and preprocessing

This submission made use of the KiTS21 dataset alone, and used random weight initializations for their network. The images were preprocessed by resampling to an isotropic 0.78125 mm pixel size using third order spline interpolation.

Architectures

As shown in figure 11, a coarse-to-fine approach was used which first roughly segmented the entire kidney region. This coarse kidney segmentation was used to generate a cropped region around each kidney, which was fed to a finer kidney segmentation network. The result of that network was fed to two additional networks as inputs, along with the cropped image, to produce fine tumor and ”mass” segmentations, where masses refer to the union of the tumor and cyst regions. The predictions of each of these networks were aggregated to produce a final composite prediction. Each of the four networks in use were trained using the nnU-Net framework [24].

Training

The networks were originally trained using a sum of the dice loss and the cross entropy loss, but once these objective functions plateaued, a novel “surface loss” was used for further fine-tuning. The aim of this was to optimize the predictions in such a way that was in line with the surface dice metric, which was used in addition to the volumetric dice for the final leaderboard ranking. This surface loss term is defined below:

Where is the surface of the ground truth, and are the sets of false-positive and false-negative points, respectively, and is a constant.

Postprocessing

Once raw predictions by the network had been made, connected component analysis was used to clean up extraneous predictions. Size thresholds of 20,000 voxels, 200 voxels, and 50 voxels were used to eliminate kidney, tumor, and cyst predictions that were too small to be realistic. Cysts and tumors that were not touching regions that were predicted to be kidney were also excluded.

Results

This method achieved the place rank on the leaderboard of the KiTS21 challenge with an average volumetric dice score of 0.908 and an average surface dice score of 0.826. Of note, this method achieved a volumetric dice score for the kidney region of 0.86, which is quite close to the previously reported interobserver agreement for the KiTS19 challenge of 0.88 [21].

3.4.2 Second Place: An Ensemble of 3D U-Net Based Models for Segmentation of Kidney and Masses in CT Scans

This submission [16] was made by Alex Golts, Daniel Khapun, Daniel Shats, Yoel Shoshan and Flora Gilboa-Solomon of IBM Research - Israel.

Data use and preprocessing

This submission did not make direct use of any data other than the official training set. One of the models in the final ensemble used by this method was initialized with weights of a model pretrained on the publicly available Liver Tumor Segmentation (LiTS) dataset [5]. Other models in the ensemble were initialized randomly. This method used both low and high resolution architectures. For the former, the data was resampled to a common spacing of mm, and for the latter, mm. The labeled annotations maps used during training were sampled randomly per slice from different plausible annotations per region based on the existing multiple human annotators. This was done to improve the robustness of the trained models and make them better suited to the official KiTS21 evaluation protocol.

Architectures

A single-stage 3D U-Net and a two-stage 3D U-Net Cascade architecture were used by this method, as implemented in the nnU-Net framework [24]. The latter consists of first applying a 3D U-Net on low resolution data, and then using the low-res segmentation results to augment the input to another 3D U-Net applied to high resolution data. This serves the purpose of increasing the spatial contextual information that the network sees, while keeping a feasible input patch size with regards to available GPU memory. The final model ensemble used by this submission consists of three single-stage 3D U-Net models and one two-stage cascaded model.

Training

Patches of size were sampled and input to the network. All models were trained with a combination of Dice and cross-entropy losses [24]. One of the models in the final ensemble was additionally trained with a regularized loss which encouraged smoothness in the network predictions. Training was done for 250,000 iterations of Stochastic Gradient Descent. Training a single-stage 3D U-Net model took 48 hours on a single Tesla V100 GPU. All models were trained on 5 cross-validation splits with 240 cases used for training and the remaining 60 for validation.

Postprocessing

Custom postprocessing was applied to the segmentation results, removing rarely occuring implausible findings: tumor and cyst findings positioned outside of kidney findings, and small kidney fragment findings surrounded by another class. Fig. 4 shows prediction examples for two slices, with and without the proposed postprocessing.

| input | without | with | ground truth | |

|---|---|---|---|---|

| postprocessing | postprocessing | |||

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/image_60.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/raw_pred_60.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/postprocessed_pred_60.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/label_60.png) |

|

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/image_105.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/raw_pred_105.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/postprocessed_pred_105.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c444b0ce-c08c-4104-8dd8-a1a3e9d8fe69/label_105.png) |

Results

The final submission was an ensemble of four models as follows: (1) 3D U-Net trained with an added regularized loss, (2) 3D U-Net trained with a different random seed for the training label generation process, (3) 3D U-Net and (4) 3D U-Net cascade, both trained with weights initialized from a model trained on the LiTS dataset. This submission scored 0.896 mean Dice and 0.816 mean Surface Dice, resulting in second place. For a more detailed description of this submission, see [16].

3.4.3 Third Place: A Coarse-to-Fine 3D U-Net Network for Semantic Segmentation of Kidney CT Scans

This submission [14] was made by Yasmeen George from the Monash University, Australia.

Network architecture.

The proposed coarse-to-fine cascaded U-Net approach is based on 3D U-Net architecture and has two stages. In the first stage, a 3D U-Net model is trained on downsampled images to roughly delineate kidney region. In the second stage, a 3D U-Net model is trained to have more detailed segmentation of the three classes (kidney, tumor, cyst) using the full resolution images guided by the first stage segmentation maps. The 3D U-Net architecture had an encoder and a decoder path each with five resolution steps. The encoder part was performed using strided convolutions starting with 30 feature maps then doubling up each level to a maximum of 320. The decoder part was based on transposed convolutions. Each layer consists 3D convolution with kernel and strides of 1 in each dimension, leaky ReLU activations, and instance normalization. For more details please refer to our paper [14].

Data preprocessing.

The CT intensities (HU) were transformed by subtracting mean and dividing by standard deviation. In the first stage, each CT scan was resampled using third order spline interpolation to a spacing of mm resulting in median volume dimensions of voxels. While in the second stage, a spacing of mm was used with median volume dimensions of voxels. Data augmentation methods including random rotations, gamma transformation, and random cropping were used during training.

Training and validation.

The proposed models were implemented using nnU-Net framework [24] with Python 3.6 and PyTorch framework on NVIDIA Tesla V100 GPUs. Majority aggregation ground truth was used for training and validation. All models were trained from scratch using 5-fold cross-validation with a patch size of that was randomly sampled from the input resampled volumes. The models were trained using stochastic gradient descent (SGD) optimizer for 1000 epochs using a batch of size 2 with 250 batches per epoch. The training objective was to minimize the sum of cross-entropy and dice loss.

|

|

Results.

The model achieved the place in the leaderboard of KiTS21 challenge with a mean sampled average dice score of 0.8944 and a mean sampled average surface dice score of 0.8140 using a test set of 100 CT scans. The proposed approach scored 0.9748, 0.8813, 0.8710 average dice for kidney, tumor and cyst using 3D cascade U-Net model. Figure 12.visualizes the segmentation results for the trained model.

4 Conclusions

This paper presented the results of the 2021 Kidney Tumor Segmentation Challenge (KiTS21). The challenge featured many innovations in terms of challenge design, including a novel annotation scheme to produce multiple volumetric annotations per ROI and a fully transparant web-based annotation process. The challenge attracted 25 full submissions from teams around the world, and the top-performing team surpassed the prior state of the art performance set with the predecessor KiTS19 challenge, despite the use of a test set from an entirely different institution and geographic area. A meta-analysis of the methods used by participating teams showed the continued popularity and dominant performance of the nnU-Net framework, although significant interest by teams in developing transformer or other attention-based methods was also observed. A hidden strata analysis was presented, which revealed that the top-performing teams were not necessarily the ones who had the most uniform performance on subpopulations within the test set.

The continued goals with KiTS are to continue to expand and augment the quality of the dataset to facilitate even better performance, while also continuing to challenge participants with additional hetereogeneity and complexity, such that the community can continue to move towards a more realistic real-world setting for this problem. The upcoming KiTS23 edition achieves this by incorporating the venous contrast phase in addition to the corticomedullary phase which both KiTS19 and KiTS21 were based on. As organizers, our hope is that the community will continue to find KiTS to be a useful resource for advancing the state of the art in kidney tumor segmentation.

Acknowledgements

Research reported in this publication was supported in part by the National Cancer Institute of the National Institutes of Health under Award Number R01CA225435. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Additional support for research activities including developing the annotation procedure, performing image annotations, and analyzing the submission data was provided by The Intutive Foundation, Cisco, and by research scholarships from the Climb 4 Kidney Cancer Foundation. The monetary prize for the winning team was graciously sponsored by Histosonics, Inc.

Finally, we would like to thank the urology departments at the University of Minnesota and Cleveland Clinic for graciously allowing us to use their collections of patient data for this purpose.

References

- [1] Vincent Andrearczyk, Valentin Oreiller, Mario Jreige, Martin Vallières, Joel Castelli, Hesham Elhalawani, Sarah Boughdad, John O Prior, and Adrien Depeursinge. Overview of the hecktor challenge at miccai 2020: automatic head and neck tumor segmentation in pet/ct. In 3D Head and Neck Tumor Segmentation in PET/CT Challenge, pages 1–21. Springer, 2021.

- [2] Spyridon Bakas, Mauricio Reyes, Andras Jakab, Stefan Bauer, Markus Rempfler, Alessandro Crimi, Russell Takeshi Shinohara, Christoph Berger, Sung Min Ha, Martin Rozycki, et al. Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the brats challenge. arXiv preprint arXiv:1811.02629, 2018.

- [3] Joseph R Betancourt, Alexander R Green, J Emilio Carrillo, and Elyse R Park. Cultural competence and health care disparities: key perspectives and trends. Health affairs, 24(2):499–505, 2005.

- [4] Abhishta Bhandari, Muhammad Ibrahim, Chinmay Sharma, Rebecca Liong, Sonja Gustafson, and Marita Prior. Ct-based radiomics for differentiating renal tumours: a systematic review. Abdominal Radiology, 46(5):2052–2063, 2021.

- [5] Patrick Bilic, Patrick Christ, Hongwei Bran Li, Eugene Vorontsov, Avi Ben-Cohen, Georgios Kaissis, Adi Szeskin, Colin Jacobs, Gabriel Efrain Humpire Mamani, Gabriel Chartrand, et al. The liver tumor segmentation benchmark (lits). Medical Image Analysis, page 102680, 2022.

- [6] David A Bluemke, Linda Moy, Miriam A Bredella, Birgit B Ertl-Wagner, Kathryn J Fowler, Vicky J Goh, Elkan F Halpern, Christopher P Hess, Mark L Schiebler, and Clifford R Weiss. Assessing radiology research on artificial intelligence: a brief guide for authors, reviewers, and readers—from the radiology editorial board, 2020.

- [7] Umberto Capitanio, Karim Bensalah, Axel Bex, Stephen A Boorjian, Freddie Bray, Jonathan Coleman, John L Gore, Maxine Sun, Christopher Wood, and Paul Russo. Epidemiology of renal cell carcinoma. European urology, 75(1):74–84, 2019.

- [8] Umberto Capitanio and Francesco Montorsi. Renal cancer. The Lancet, 387(10021):894–906, 2016.

- [9] Leo Anthony Celi, Jacqueline Cellini, Marie-Laure Charpignon, Edward Christopher Dee, Franck Dernoncourt, Rene Eber, William Greig Mitchell, Lama Moukheiber, Julian Schirmer, Julia Situ, et al. Sources of bias in artificial intelligence that perpetuate healthcare disparities—a global review. PLOS Digital Health, 1(3):e0000022, 2022.

- [10] Elizabeth N Chapman, Anna Kaatz, and Molly Carnes. Physicians and implicit bias: how doctors may unwittingly perpetuate health care disparities. Journal of general internal medicine, 28:1504–1510, 2013.

- [11] Rumi Chunara, Yuan Zhao, Ji Chen, Katharine Lawrence, Paul A Testa, Oded Nov, and Devin M Mann. Telemedicine and healthcare disparities: a cohort study in a large healthcare system in new york city during covid-19. Journal of the American Medical Informatics Association, 28(1):33–41, 2021.

- [12] Manuel JA Eugster, Torsten Hothorn, and Friedrich Leisch. Exploratory and inferential analysis of benchmark experiments. 2008.

- [13] Vincenzo Ficarra, Giacomo Novara, Silvia Secco, Veronica Macchi, Andrea Porzionato, Raffaele De Caro, and Walter Artibani. Preoperative aspects and dimensions used for an anatomical (padua) classification of renal tumours in patients who are candidates for nephron-sparing surgery. European urology, 56(5):786–793, 2009.

- [14] Yasmeen George. A coarse-to-fine 3d u-net network for semantic segmentation of kidney ct scans. In International Challenge on Kidney and Kidney Tumor Segmentation, pages 137–142. Springer, 2022.

- [15] Robert J Gillies, Paul E Kinahan, and Hedvig Hricak. Radiomics: images are more than pictures, they are data. Radiology, 278(2):563, 2016.

- [16] Alex Golts, Daniel Khapun, Daniel Shats, Yoel Shoshan, and Flora Gilboa-Solomon. An ensemble of 3d u-net based models for segmentation of kidney and masses in ct scans. In International Challenge on Kidney and Kidney Tumor Segmentation, pages 103–115. Springer, 2022.

- [17] Dong Han, Nan Yu, Yong Yu, Taiping He, and Xiaoyi Duan. Performance of ct radiomics in predicting the overall survival of patients with stage iii clear cell renal carcinoma after radical nephrectomy. La radiologia medica, 127(8):837–847, 2022.

- [18] Shijie Hao, Yuan Zhou, and Yanrong Guo. A brief survey on semantic segmentation with deep learning. Neurocomputing, 406:302–321, 2020.

- [19] Tobias Heimann, Bryan J Morrison, Martin A Styner, Marc Niethammer, and Simon Warfield. Segmentation of knee images: a grand challenge. In Proc. MICCAI Workshop on Medical Image Analysis for the Clinic, volume 1. Beijing, China, 2010.

- [20] Nicholas Heller, Joshua Dean, and Nikolaos Papanikolopoulos. Imperfect segmentation labels: How much do they matter? In Intravascular Imaging and Computer Assisted Stenting and Large-Scale Annotation of Biomedical Data and Expert Label Synthesis, pages 112–120. Springer, 2018.

- [21] Nicholas Heller, Fabian Isensee, Klaus H Maier-Hein, Xiaoshuai Hou, Chunmei Xie, Fengyi Li, Yang Nan, Guangrui Mu, Zhiyong Lin, Miofei Han, et al. The state of the art in kidney and kidney tumor segmentation in contrast-enhanced ct imaging: Results of the kits19 challenge. Medical image analysis, 67:101821, 2021.

- [22] Nicholas Heller, Resha Tejpaul, Fabian Isensee, Tarik Benidir, Martin Hofmann, P Blake, Zachary Rengal, Keenan Moore, Niranjan Sathianathen, Arveen Adith Kalapara, et al. Computer-generated renal nephrometry scores yield comparable predictive results to those of human-expert scores in predicting oncologic and perioperative outcomes. The Journal of urology, 207(5):1105–1115, 2022.

- [23] Sture Holm. A simple sequentially rejective multiple test procedure. Scandinavian journal of statistics, pages 65–70, 1979.

- [24] Fabian Isensee, Paul F Jaeger, Simon AA Kohl, Jens Petersen, and Klaus H Maier-Hein. nnu-net: a self-configuring method for deep learning-based biomedical image segmentation. Nature methods, 18(2):203–211, 2021.

- [25] Michael AS Jewett, Kamal Mattar, Joan Basiuk, Christopher G Morash, Stephen E Pautler, D Robert Siemens, Simon Tanguay, Ricardo A Rendon, Martin E Gleave, Darrel E Drachenberg, et al. Active surveillance of small renal masses: progression patterns of early stage kidney cancer. European urology, 60(1):39–44, 2011.

- [26] John Jumper, Richard Evans, Alexander Pritzel, Tim Green, Michael Figurnov, Olaf Ronneberger, Kathryn Tunyasuvunakool, Russ Bates, Augustin Žídek, Anna Potapenko, et al. Highly accurate protein structure prediction with alphafold. Nature, 596(7873):583–589, 2021.

- [27] Christoph A Karlo, Pier Luigi Di Paolo, Joshua Chaim, A Ari Hakimi, Irina Ostrovnaya, Paul Russo, Hedvig Hricak, Robert Motzer, James J Hsieh, and Oguz Akin. Radiogenomics of clear-cell renal cell carcinoma: associations between ct imaging features and mutations. Radiology, 270(2):464, 2014.

- [28] A Emre Kavur, N Sinem Gezer, Mustafa Barış, Sinem Aslan, Pierre-Henri Conze, Vladimir Groza, Duc Duy Pham, Soumick Chatterjee, Philipp Ernst, Savaş Özkan, et al. Chaos challenge-combined (ct-mr) healthy abdominal organ segmentation. Medical Image Analysis, 69:101950, 2021.

- [29] Andriy Kryshtafovych, Torsten Schwede, Maya Topf, Krzysztof Fidelis, and John Moult. Critical assessment of methods of protein structure prediction (casp)—round xiv. Proteins: Structure, Function, and Bioinformatics, 89(12):1607–1617, 2021.

- [30] Alexander Kutikov and Robert G Uzzo. The renal nephrometry score: a comprehensive standardized system for quantitating renal tumor size, location and depth. The Journal of urology, 182(3):844–853, 2009.

- [31] Zoé Lambert, Caroline Petitjean, Bernard Dubray, and Su Kuan. Segthor: segmentation of thoracic organs at risk in ct images. In 2020 Tenth International Conference on Image Processing Theory, Tools and Applications (IPTA), pages 1–6. IEEE, 2020.

- [32] John T Leppert, Janet Hanley, Todd H Wagner, Benjamin I Chung, Sandy Srinivas, Glenn M Chertow, James D Brooks, Christopher S Saigal, Urologic Diseases in America Project, et al. Utilization of renal mass biopsy in patients with renal cell carcinoma. Urology, 83(4):774–780, 2014.

- [33] Geert Litjens, Robert Toth, Wendy van de Ven, Caroline Hoeks, Sjoerd Kerkstra, Bram van Ginneken, Graham Vincent, Gwenael Guillard, Neil Birbeck, Jindang Zhang, et al. Evaluation of prostate segmentation algorithms for mri: the promise12 challenge. Medical image analysis, 18(2):359–373, 2014.

- [34] Rongjie Liu, Hesham Elhalawani, Abdallah Sherif Radwan Mohamed, Baher Elgohari, Laurence Court, Hongtu Zhu, and Clifton David Fuller. Stability analysis of ct radiomic features with respect to segmentation variation in oropharyngeal cancer. Clinical and translational radiation oncology, 21:11–18, 2020.

- [35] Jun Ma, Yao Zhang, Song Gu, Xingle An, Zhihe Wang, Cheng Ge, Congcong Wang, Fan Zhang, Yu Wang, Yinan Xu, et al. Fast and low-gpu-memory abdomen ct organ segmentation: The flare challenge. Medical Image Analysis, 82:102616, 2022.

- [36] Lena Maier-Hein, Matthias Eisenmann, Annika Reinke, Sinan Onogur, Marko Stankovic, Patrick Scholz, Tal Arbel, Hrvoje Bogunovic, Andrew P Bradley, Aaron Carass, et al. Why rankings of biomedical image analysis competitions should be interpreted with care. Nature communications, 9(1):1–13, 2018.

- [37] Robert J McDonald, Kara M Schwartz, Laurence J Eckel, Felix E Diehn, Christopher H Hunt, Brian J Bartholmai, Bradley J Erickson, and David F Kallmes. The effects of changes in utilization and technological advancements of cross-sectional imaging on radiologist workload. Academic radiology, 22(9):1191–1198, 2015.

- [38] Leland McInnes, John Healy, and James Melville. Umap: Uniform manifold approximation and projection for dimension reduction. arXiv preprint arXiv:1802.03426, 2018.

- [39] John Moult. A decade of casp: progress, bottlenecks and prognosis in protein structure prediction. Current opinion in structural biology, 15(3):285–289, 2005.

- [40] Mostafa Nazari, Isaac Shiri, Ghasem Hajianfar, Niki Oveisi, Hamid Abdollahi, Mohammad Reza Deevband, Mehrdad Oveisi, and Habib Zaidi. Noninvasive fuhrman grading of clear cell renal cell carcinoma using computed tomography radiomic features and machine learning. La radiologia medica, 125(8):754–762, 2020.

- [41] Stanislav Nikolov, Sam Blackwell, Alexei Zverovitch, Ruheena Mendes, Michelle Livne, Jeffrey De Fauw, Yojan Patel, Clemens Meyer, Harry Askham, Bernardino Romera-Paredes, et al. Deep learning to achieve clinically applicable segmentation of head and neck anatomy for radiotherapy. arXiv preprint arXiv:1809.04430, 2018.

- [42] Srinivasa R Prasad, Venkateswar R Surabhi, Christine O Menias, Abhijit A Raut, and Kedar N Chintapalli. Benign renal neoplasms in adults: cross-sectional imaging findings. American Journal of Roentgenology, 190(1):158–164, 2008.

- [43] Tim Rädsch, Annika Reinke, Vivienn Weru, Minu D Tizabi, Nicholas Schreck, A Emre Kavur, Bünyamin Pekdemir, Tobias Roß, Annette Kopp-Schneider, and Lena Maier-Hein. Labeling instructions matter in biomedical image analysis. arXiv preprint arXiv:2207.09899, 2022.

- [44] Annika Reinke, Matthias Eisenmann, Minu Dietlinde Tizabi, Carole H Sudre, Tim Rädsch, Michela Antonelli, Tal Arbel, Spyridon Bakas, M Jorge Cardoso, Veronika Cheplygina, et al. Common limitations of performance metrics in biomedical image analysis. 2021.

- [45] Annika Reinke, Minu D Tizabi, Matthias Eisenmann, and Lena Maier-Hein. Common pitfalls and recommendations for grand challenges in medical artificial intelligence. European Urology Focus, 7(4):710–712, 2021.

- [46] Giuseppe Rosiello, Alessandro Larcher, Francesco Montorsi, and Umberto Capitanio. Renal cancer: overdiagnosis and overtreatment, 2021.

- [47] Anjany Sekuboyina, Malek E Husseini, Amirhossein Bayat, Maximilian Löffler, Hans Liebl, Hongwei Li, Giles Tetteh, Jan Kukačka, Christian Payer, Darko Štern, et al. Verse: A vertebrae labelling and segmentation benchmark for multi-detector ct images. Medical image analysis, 73:102166, 2021.

- [48] Jeffrey J Tomaszewski, Robert G Uzzo, and Marc C Smaldone. Heterogeneity and renal mass biopsy: a review of its role and reliability. Cancer biology & medicine, 11(3):162, 2014.

- [49] Laurens Van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(11), 2008.

- [50] Arvind Vasudevan, Robert J Davies, Beverley A Shannon, and Ronald J Cohen. Incidental renal tumours: the frequency of benign lesions and the role of preoperative core biopsy. BJU international, 97(5):946–949, 2006.

- [51] Manuel Wiesenfarth, Annika Reinke, Bennett A Landman, Manuel Jorge Cardoso, Lena Maier-Hein, and Annette Kopp-Schneider. Methods and open-source toolkit for analyzing and visualizing challenge results. arXiv preprint arXiv:1910.05121, 2019.

- [52] Zhongchen Zhao, Huai Chen, and Lisheng Wang. A coarse-to-fine framework for the 2021 kidney and kidney tumor segmentation challenge. In International Challenge on Kidney and Kidney Tumor Segmentation, pages 53–58. Springer, 2022.