The inverse Kalman filter

Abstract

We introduce the inverse Kalman filter (IKF), which enables exact matrix-vector multiplication between a covariance matrix from a dynamic linear model and any real-valued vector with linear computational cost. We integrate the IKF with the conjugate gradient algorithm, which substantially accelerates the computation of matrix inversion for a general form of covariance matrix, where other approximation approaches may not be directly applicable. We demonstrate the scalability and efficiency of the IKF approach through applications in nonparametric estimation of particle interaction functions, using both simulations and real cell trajectory data.

1 Introduction

Dynamic linear models (DLMs) or linear state space models are ubiquitously used in modeling temporally correlated data [37, 5]. Each observation in DLMs is associated with a -dimensional latent state vector , defined as:

| (1) | ||||

| (2) |

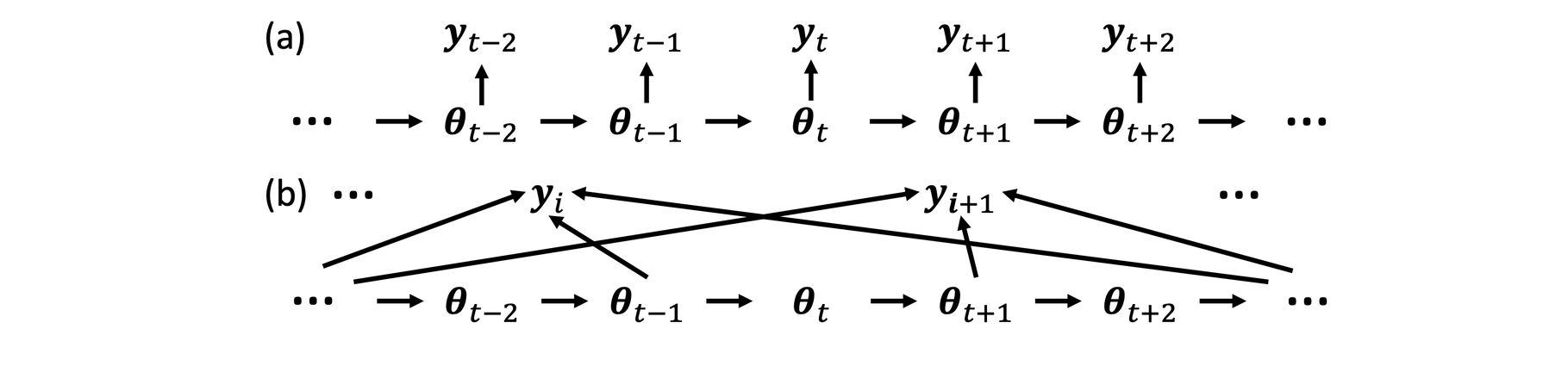

where and are matrices of dimensions and , respectively, is a covariance matrix for , and the initial state vector with assumed herein. The dependent structure of DLMs is illustrated in Fig. 1(a), where each observation is associated with a single latent state .

DLMs are a flexible class of models that include many widely used processes, such as autoregressive and moving average processes [26, 27]. Some Gaussian processes (GPs) with commonly used kernel functions, including the Matérn covariance with a half-integer roughness parameter [12], can also be represented as DLMs [38, 13], with closed-form expressions for , and . This connection, summarized in the Supplementary Material, enables differentiable GPs within the DLM framework, allowing efficient estimation of smooth functions from noisy observations.

The Kalman filter (KF) provides a scalable approach for estimating latent state and computing likelihood in DLMs, which scales linearly with the number of observations [16], as reviewed in Appendix A. In particular, the KF enables efficient computation of for any -dimensional vector in operations, where is the Cholesky factor of the covariance matrix , summarized in Lemma 6 in the Appendix.

Computational challenges remain for high-dimensional state space models and scenarios where KF cannot be directly applied, such as the dependent structure shown in Fig. 1(b), where each observation can be associated with multiple latent states. This interaction structure, introduced in Section 3, is common in physical models, such as molecular dynamics simulation [28]. One way to overcome these challenges is to efficiently compute for any -dimensional vector and utilize optimization methods such as the conjugate gradient (CG) algorithm for computing the predictive distribution [15]. This strategy has been used to approximate the maximum likelihood estimator of parameters in GPs [32, 25]. Yet, each CG iteration requires matrix-vector multiplication, which involves operations and storage, making it prohibitive for large .

To address this issue, we introduce the inverse Kalman filter (IKF), which computes and with operations without approximations, where is the Cholesky factor of any DLM-induced covariance and is an -dimensional vector. The complexity is significantly smaller than in direct computation. The latent states dimension is not larger than 3 in our applications, and it includes many commonly used models such as twice differentiable GPs with a Matérn covariance in (15), often used as a default choice in GP surrogate models.

The IKF algorithm can be extended to accelerate matrix multiplication and inversion, a key computational bottleneck in many problems. We integrate the IKF into a CG algorithm to scalably compute the predictive distribution of observations with a general covariance structure:

| (3) |

where is a DLM-induced covariance matrix, is sparse, and is a diagonal matrix. This structure appears in nonparametric estimation of particle interactions, which will be introduced in Section 3. Both real and simulated studies involve numerous particles over a long time period, where conventional approximation methods [34, 19, 3, 7, 10] are not applicable. This covariance structure also appears in other applications, including varying coefficient models [14] and estimating incomplete lattices of correlated data [31]. We apply our approach to the latter in Section S5 and provide numerical comparisons with various approximation methods in Section S9 of the Supplementary Material.

2 Inverse Kalman filter

In this section, we introduce an exact algorithm for computing with , where is an -dimensional real-valued vector and is an covariance matrix of observations induced by a DLM with latent states in Eqs. (1)-(2). This algorithm is applicable to any DLM specified in Eqs. (1)-(2). Denote , where the Cholesky factor does not need to be explicitly computed in our approach. We provide fast algorithms to compute and by Lemma 1 and Lemma 2, respectively, each with operations. Detailed proofs of these lemmas are available in the Supplementary Material. In the following, , , denote the th element of the vector of , , and , respectively, and denotes the th element of for and .

Lemma 1 (Compute with linear computational cost).

Lemma 2 (Compute with linear computational cost).

The algorithm in Lemma 1 is unconventional and nontrivial to derive. In contrast, Lemma 2 has direct connection to the Kalman filter. Specifically, the one-step-ahead predictive distribution in step (ii) of the Kalman filter, given in Appendix A, enables computing for any vector (Lemma 6). Lemma 2 essentially reverses this operation, computing for any vector , leading to the term Inverse Kalman filter (IKF).

The IKF algorithm, outlined in Algorithm 1, sequentially applies Lemmas 1 and 2 to reduce the computational cost and storage for from to without approximations. This approach applies to all DLMs, including GPs that admit equivalent DLM representations, such as GPs with Matérn kernels where roughness parameters and correspond to and , respectively. Details are provided in the Supplementary Material.

While Algorithm 1 can be applied to DLM regardless of the noise variance, we do not recommend directly computing in scenarios when the noise variance is zero or close to zero in Eq. (1). This is because when , in Eq. (27), the variance of the one-step-ahead predictive distribution, could be extremely small, which leads to large numerical errors in Eq. (28) due to unstable inversion of . To ensure robustness, we suggest computing with a positive scalar and outputting as the results of . Here, can be interpreted as the covariance matrix of a DLM with noise variance . Essentially, we introduce artificial noise to ensure that computed in KF is at least for numerical stability. The result remains exact and independent of the choice of .

We compare the IKF approach with the direct matrix-vector multiplication of in noise-free scenarios in Fig. 2. The experiments utilize with uniformly sampled from and the Matérn covariance with unit variance, roughness parameter , and range parameter [12]. Panels (a) and (b) show that IKF significantly reduces computational costs compared to direct computation, with a linear relationship between computation time and the number of observations. IKF achieves covariance matrix-vector multiplication for a -dimensional output vector in about 2 seconds on a desktop, making it highly efficient for iterative algorithms like the CG algorithm [15] and the randomized log-determinant approximation [29]. Fig. 2(c) compares the maximum absolute error between robust IKF with and non-robust IKF with . The non-robust IKF exhibits large numerical errors due to instability when approaches zero, while robust IKF remains stable by ensuring is no smaller than , even with near-singular covariance matrices .

Lemmas 1 and 2 facilitate the computation of each element in using DLM parameters and enable the linear computation of . These results are summarized in Lemmas 3 and 4, respectively, with proofs provided in the Supplementary Material.

Lemma 3 (Cholesky factor from the inverse Kalman filter).

Lemma 4 (Compute with linear computational cost).

3 Nonparametric estimation of particle interaction functions

The IKF algorithm is motivated by the problem of estimating interactions between particles, which is crucial for understanding complex behaviors of molecules and active matter, such as migrating cells and flocking birds. Minimal physical models, such as the Vicsek model [35] and their extensions [1, 9], provide a framework for explaining the collective motion of active matter.

Consider a physical model encompassing multiple types of latent interactions. Let be a -dimensional output vector representing the th particle, which is influenced by distinct interaction types. For the th type of interaction, the th particle interacts with a subset of particles rather than all particles, typically those within a radius . This relationship is expressed as a latent factor model:

| (13) |

where denotes a Gaussian noise vector. The term is a -dimensional known factor loading that links the th output to the th neighbor in the th interaction, with the unknown interaction function evaluated at a scalar-valued input , such as the distance between particles and , for , , and . For a dataset of particles over time points, the total number of observations is .

An illustrative example of this framework is the Vicsek model, a seminal approach for studying collective motion [35] (see Fig. S1(a) in the Supplementary Material). In this model, the 2-dimensional velocity of the th particle at time , , has a constant magnitude , where denotes the norm, and and are the components of the velocity along two orthogonal directions for and . The velocity angle is updated as

| (14) |

where is a zero-mean Gaussian noise with variance . The set of neighbors includes particles within a radius of from particle at time , i.e., , where and are 2-dimensional position vectors of particle and its neighbor , respectively, and denotes the total number of neighbors of the th particle, including itself, at time .

The Vicsek model in Eq. (14) is a special case of the general framework in Eq. (13) with one-dimensional output , i.e. , with the index of the th observation being a function of time and particle . The Viscek model contains a single interaction, i.e. , with linear interaction function , where being the velocity angle of the neighboring particle, and the factor loading being .

Numerous other physical and mathematical models of self-propelled particle dynamics can also be formulated as in Eq. (13) with a parametric form of a particle interaction function [1]. Nonparametric estimation of particle interactions is preferred when the physical mechanism is unknown [17, 23], but the high computational cost limits its applicability to systems with large numbers of particles over long time periods.

In this work, we model each latent interaction function nonparametrically using a GP. By utilizing kernels with an equivalent DLM representation, we can leverage the proposed IKF algorithms to significantly expedite computation. This includes commonly used kernels such as Matérn kernel with a half-integer roughness parameter [12], often used as the default setting of the GP models for predicting nonlinear functions, as the smoothness of the process can be controlled by its roughness parameters [11].

For each interaction , we form the input vector , where ‘u’ indicates that the input entries are unordered, with for any tuple , , , , and . The marginal distribution of the th factor follows , where the th entry of the covariance matrix is , with , and being the variance parameter and the correlation function, respectively. We employ the Matérn correlation with roughness parameters and . For , the Matérn correlation is the exponential correlation , while for , the Matérn correlation follows:

| (15) |

where denotes the range parameter.

By integrating out latent factor processes , the marginal distribution of the observation vector , with dimension , follows a multivariate normal distribution:

| (16) |

where is a sparse block diagonal matrix, such that the th diagonal block is a matrix for . The posterior predictive distribution of the latent variable for any given input is also a normal distribution:

| (17) |

with the predictive mean and variance given by

| (18) | ||||

| (19) |

where , , and represents additional system parameters.

The main computational challenge in calculating the predictive distribution (17) lies in inverting the covariance matrix , where and range between and in applications. Though various GP approximation methods have been proposed [34, 30, 8, 2, 22, 10, 3, 18], they typically approximate the parametric covariance rather than , thus not directly applicable for computing the distribution in Eq. (17).

To address this, we employ the CG algorithm [15] to accelerate the computation of the predictive distribution. Each CG iteration needs to compute for an -dimensional vector . To employ the IKF, we first need to rearrange the input vector into a non-decreasing input sequence . Denote the covariance with . The covariance of the observations can be written as a weighted sum of by introducing a permutation matrix for each interaction: , where with being a permutation matrix such that . After this reordering, the computation of can be broken into four steps: (1) , (2) , (3) , and (4) . Here is a sparse matrix with non-zero entries. The IKF algorithm is used in step (2) to accelerate the most expensive computation, with all computations performed directly using terms in the KF algorithm without explicitly constructing the covariance matrix.

We refer to the resulting approach as the IKF-CG algorithm. This approach reduces the computational cost for computing the posterior distribution from operations to pseudolinear order with respect to , as shown in Table S1 in the Supplementary Material. Furthermore, the IKF-CG algorithm can accelerate the parameter estimation via both cross-validation and maximum likelihood estimation, with the latter requiring an additional approximation of the log-determinant [29]. Details of the CG algorithm, the computation of the predictive distribution, parameter estimation procedures, and computational complexity are discussed in Sections S3, S4, S6, and S7 of the Supplementary Material, respectively.

4 Numerical results for particle interaction function estimation

4.1 Evaluation criteria

We conduct simulation studies in Sections 4.2-4.3 and analyze real cell trajectory data in Section 4.4 to estimate particle interaction functions in physical models. The code and data to reproduce all numerical results are available at https://github.com/UncertaintyQuantification/IKF. Simulation observations are generated at equally spaced time frames with interval , though the proposed approach is applicable to both equally and unequally spaced time frames. For simplicity, the number of particles is assumed constant across all time frames during simulations.

For each of the latent factors, predictive performance is assessed using normalized root mean squared error (NRMSEj), the average length of the posterior credible interval (), and the proportion of interaction function values covered within the 95% posterior credible interval (), based on test inputs :

| (20) | ||||

| (21) | ||||

| (22) |

Here, represents the predicted mean at , is the average of the th interaction function, and denotes the posterior credible interval of , for . A desirable method should have a low NRMSEj, small , and close to .

To account for variability due to initial particle positions, velocities, and noise, each simulation scenario is repeated times to compute the average performance metrics. Unless otherwise specified, parameters are estimated by cross-validation, with of the data used as a training set and the rest as the validation set. All computations are performed on a macOS Mojave system with an 8-core Intel i9 processor running at 3.60 GHz and 32 GB of RAM.

4.2 Vicsek model

We first consider the Vicsek model introduced in Section 3. For each particle at time , after obtaining its velocity angle in Eq. (14), its position is updated as:

| (23) |

where is the particle speed and is the time step size. Particles are initialized with velocity , where is drawn from and . Initial particle positions are uniformly sampled from to keep consistent particle density across experiments. The goal is to estimate the interaction function nonparametrically, without assuming linearity. The interaction radius is estimated alongside other parameters, with results detailed in the Supplementary Material.

We first compare the computational cost and accuracy of IKF-CG, CG, and direct computation for calculating the predictive mean in Eq. (18) and the marginal likelihood in Eq. (16) using the covariance in Eq. (15). Simulations are conducted with particles and noise variance . Panel (a) of Fig. 3 shows that the IKF-CG approach substantially outperforms both direct computation and the CG algorithm in computational time when predicting test inputs with varying time lengths , ranging from 4 to 200. Fig. 3(b) compares the NRMSE of the predictive mean between direct computation and the IKF-CG method as the number of observations ranges from 400 to 2,000, corresponding to from 4 to 20. The two methods yield nearly identical predictive errors, with negligible differences in NRMSE. Fig. 3 (c) shows a comparison of log-likelihood values computed via direct computation and IKF-CG, where the latter employs the log-determinant approximation detailed in the Supplementary Material. Both methods produce similar log-likelihood values across different sample sizes.

Next, we evaluate the performance of the IKF-CG algorithm across 12 scenarios with varying particle numbers (), time frames (), and noise variances (). The predictive performance is evaluated using test inputs evenly spaced across the domain of the interaction function, , averaged over experiments.

Panels (a) and (b) of Figs. 4-5 show the predictive performance of particle interactions using the Matérn covariance with roughness parameter and the exponential covariance (Matérn with ), respectively, for noise variance . Results for are provided in the Supplementary Material. Across all scenarios, NRMSEs remain low, with improved accuracy for larger datasets and smaller noise variances. The decrease in the average length of the posterior interval with increasing sample size, along with the relatively small interval span compared to the output range , indicates improved prediction confidence with more observations. Moreover, the coverage proportion for the posterior credible is close to the nominal level in all cases, validating the reliability of the uncertainty quantification. By comparing panels (a) and (b) in Figs. 4-5, we find that the Matérn covariance in Eq. (15) yields lower NRMSE and narrower posterior credible intervals than the exponential kernel. This improvement is due to the smoother latent process induced by Eq. (15), which is twice mean-squared differentiable, while the process with an exponential kernel is not differentiable.

Figure 6(a) shows close agreement between predicted and true interaction functions over domain . The 95% interval is narrow yet covers approximately 95% of the test samples of the interaction function. Figure 6(b) compares particle trajectories over time steps using the predicted mean of one-step-ahead predictions and the true interaction function. The trajectories are visually similar.

4.3 A modified Vicsek model with multiple interactions

Various extensions of the Vicsek model have been studied to capture more complex particle dynamics [1, 9]. For illustration, we consider a modified Vicsek model with two interaction functions. The 2-dimensional velocity , corresponding to the output in Equation (13), is updated according to:

| (24) |

where is a Gaussian noise vector with variance . The first term in (24) models velocity alignment with neighboring particles, and the second term introduces a distance-dependent interaction . The definitions of neighbor sets, 2-dimensional vector , and the form of are provided in Section S8.2 of the Supplementary Material.

We simulate 12 scenarios, each replicated times, using the same number of particles, time frame, and noise level as in the original Vicsek model in Section 4.2. The predictive performance of the latent factor model is evaluated using test inputs evenly spaced across the training domain of each interaction function. We focus on the model with the Matérn kernel in Eq. (15) and results for the exponential kernel are detailed in the Supplementary Material. Consistent with the results of the Vicsek model, we find models with the Matérn kernel in Eq. (15) are more accurate due to the smooth prior imposed on the interaction function.

| First Interaction | Second Interaction | ||||||

|---|---|---|---|---|---|---|---|

| NRMSE | NRMSE | ||||||

Table 1 summarizes the predictive performance for ; results for are included in the Supplementary Material. While both interaction functions exhibit relatively low NRMSEs, the second interaction function has a higher NRMSE than the first because the repulsion at short distances creates fewer training samples with close-proximity neighbors. This discrepancy can be mitigated by increasing the number of observations. The average length of the posterior credible interval for the second interaction decreases as the sample size increases, and coverage proportion remains close to the nominal 95% level across all scenarios.

In Fig. 7, we show the predictions of the interaction functions, where the shaded regions represent the 95% posterior credible intervals. The predictions closely match the true values, and the credible intervals, while narrow relative to the output range and nearly invisible in the plots, mostly cover the truth. These results suggest high confidence in the predictions.

4.4 Estimating cell-cell interactions on anisotropic substrates

We analyze video microscopy data from [24], which tracks the trajectories of over 2,000 human dermal fibroblasts moving on a nematically aligned, liquid-crystalline substrate. This experimental setup encourages cellular alignment along a horizontal axis, with alignment order increasing over time, though the underlying mechanism remains largely unknown. Cells were imaged every 20 minutes over a 36-hour period, during which the cell count grew from 2,615 to 2,953 due to proliferation. Our objective is to estimate the latent interaction function between cells. Given the vast number of velocity observations (), direct formation and inversion of the covariance matrix is impractical.

We apply the IKF-CG algorithm to estimate the latent interaction function. Due to the anisotropic substrate, cellular velocities differ between horizontal and vertical directions, so we independently model the velocity of the th cell in each direction by

| (25) |

where is the cell count at time , correspond to horizontal and vertical directions, respectively, and denotes the noise. Inspired by the modified Vicsek model, we set . To account for velocity decay caused by increasing cell confluence, we model the noise variances as , where is the sample velocity variance at time , and is a parameter estimated from data for . The neighboring set , where denotes the interaction radius of direction for , excludes particles moving in opposite directions, reflecting the observed gliding and intercalating behavior of cells [36].

We use observations from the first half of the time frames as the training set and the latter half as the testing set. The predicted interaction functions in Fig. 8 show diminishing effects in both directions, likely due to cell-substrate interactions such as friction. The estimated uncertainty (obtained via residual bootstrap) increases when the absolute velocity of neighboring cells in the previous time frame is large, which is attributed to fewer observations at the boundaries of the input domain. Furthermore, the interaction in the vertical direction is weaker than in the horizontal direction, due to the confinement from the anisotropic substrate in the vertical direction.

Our nonparametric estimation of the interaction functions is compared with two models for one-step-ahead forecasts of directional velocities: the original Vicsek model introduced in Section 4.2 and the anisotropic Vicsek model, which predicts velocities using the average velocity of neighboring cells from the previous time frame. As shown in Table 2, our model outperforms both Vicsek models in RMSE for one-step-ahead forecasts of directional velocities, despite the large inherent stochasticity in cellular motion. Moreover, our model has notably shorter average interval lengths that cover approximately of the held-out observations. These findings underscore the importance of the IFK-CG algorithm in enabling the use of large datasets to overcome high stochasticity and capture the underlying dynamics of alignment processes.

| Horizontal direction | Vertical direction | |||||

|---|---|---|---|---|---|---|

| RMSE | L(95%) | P(95%) | RMSE | L(95%) | P(95%) | |

| Baseline Vicsek | 0.011 | 80% | 0.011 | 95% | ||

| Anisotropic Vicsek | 0.024 | 98% | 0.020 | 99% | ||

| Nonparametric estimation | 0.015 | 95% | 0.0092 | 95% | ||

5 Concluding remarks

Several future directions are worth exploring. First, computing large matrix-vector products is ubiquitous, and the approach can be extended to different models, including some large neural network models. Second, it is an open topic to scalably compute the logarithm of the determinant of the covariance in Eq. (3) without approximation. Third, the new approach can be extended to estimate latent functions when forward equations are unavailable or computationally intensive in nonlinear or non-Gaussian dynamical systems, where ensemble Kalman filter [6, 33] and particle filter [20] are commonly used. Fourth, the proposed approach can be extended for estimating and predicting multivariate time series [21] and generalized factor processes for categorical observations [4].

Acknowledgement

Xinyi Fang acknowledges partial support from the BioPACIFIC Materials Innovation Platform of the National Science Foundation under Award No. DMR-1933487. We thank the editor, associate editor, and three anonymous referees for their comments that substantially improved this article.

Supplementary Material

The Supplementary Material contains (i) proofs of all lemmas; (ii) a summary of the connection between GPs with Matérn covariance (roughness parameters 0.5 and 2.5) and DLMs, with closed-form , and ; (iii) the conjugate gradient algorithm; (iv) procedures for the scalable computation of the predictive distribution in particle dynamics; (v) an application of the IKF-CG algorithm for predicting missing values in lattice data; (vi) the parameter estimation method; (vii) computational complexity analysis; (viii) additional numerical results for particle interaction; and (ix) numerical results for predicting missing values in lattice data.

Appendix A. Kalman Filter

Lemma 5.

Let . Recursively for :

-

(i)

The one-step-ahead predictive distribution of given is

(26) with and .

-

(ii)

The one-step-ahead predictive distribution of given is

(27) with and .

-

(iii)

The filtering distribution of given follows

(28) with and .

References

- [1] Hugues Chaté, Francesco Ginelli, Guillaume Grégoire, Fernando Peruani, and Franck Raynaud. Modeling collective motion: variations on the Vicsek model. The European Physical Journal B, 64(3):451–456, 2008.

- [2] Noel Cressie and Gardar Johannesson. Fixed rank kriging for very large spatial data sets. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 70(1):209–226, 2008.

- [3] Abhirup Datta, Sudipto Banerjee, Andrew O Finley, and Alan E Gelfand. Hierarchical nearest-neighbor Gaussian process models for large geostatistical datasets. Journal of the American Statistical Association, 111(514):800–812, 2016.

- [4] Daniele Durante and David B Dunson. Nonparametric Bayes dynamic modelling of relational data. Biometrika, 101(4):883–898, 2014.

- [5] James Durbin and Siem Jan Koopman. Time series analysis by state space methods, volume 38. OUP Oxford, 2012.

- [6] Geir Evensen. Data assimilation: the ensemble Kalman filter. Springer Science & Business Media, 2009.

- [7] Andrew O. Finley, Abhirup Datta, and Sudipto Banerjee. spNNGP R Package for Nearest Neighbor Gaussian Process Models. Journal of Statistical Software, 103(5):1–40, 2022.

- [8] Reinhard Furrer, Marc G Genton, and Douglas Nychka. Covariance tapering for interpolation of large spatial datasets. Journal of Computational and Graphical Statistics, 15(3):502–523, 2006.

- [9] Francesco Ginelli, Fernando Peruani, Markus Bär, and Hugues Chaté. Large-scale collective properties of self-propelled rods. Physical review letters, 104(18):184502, 2010.

- [10] Robert B Gramacy and Daniel W Apley. Local Gaussian process approximation for large computer experiments. Journal of Computational and Graphical Statistics, 24(2):561–578, 2015.

- [11] Mengyang Gu, Xiaojing Wang, and James O Berger. Robust Gaussian stochastic process emulation. Annals of Statistics, 46(6A):3038–3066, 2018.

- [12] Mark S Handcock and Michael L Stein. A Bayesian analysis of kriging. Technometrics, 35(4):403–410, 1993.

- [13] Jouni Hartikainen and Simo Sarkka. Kalman filtering and smoothing solutions to temporal Gaussian process regression models. In Machine Learning for Signal Processing (MLSP), 2010 IEEE International Workshop on, pages 379–384. IEEE, 2010.

- [14] Trevor Hastie and Robert Tibshirani. Varying-coefficient models. Journal of the Royal Statistical Society: Series B (Methodological), 55(4):757–779, 1993.

- [15] Magnus R Hestenes and Eduard Stiefel. Methods of conjugate gradients for solving linear systems. Journal of research of the National Bureau of Standards, 49(6):409, 1952.

- [16] Rudolph Emil Kalman. A new approach to linear filtering and prediction problems. Journal of basic Engineering, 82(1):35–45, 1960.

- [17] Yael Katz, Kolbjørn Tunstrøm, Christos C Ioannou, Cristián Huepe, and Iain D Couzin. Inferring the structure and dynamics of interactions in schooling fish. Proceedings of the National Academy of Sciences, 108(46):18720–18725, 2011.

- [18] Matthias Katzfuss and Joseph Guinness. A general framework for Vecchia approximations of Gaussian processes. Statistical Science, 36(1):124–141, 2021.

- [19] Matthias Katzfuss, Joseph Guinness, and Earl Lawrence. Scaled Vecchia approximation for fast computer-model emulation. SIAM/ASA Journal on Uncertainty Quantification, 10(2):537–554, 2022.

- [20] Genshiro Kitagawa. Monte Carlo filter and smoother for non-Gaussian nonlinear state space models. Journal of computational and graphical statistics, 5(1):1–25, 1996.

- [21] Clifford Lam, Qiwei Yao, and Neil Bathia. Estimation of latent factors for high-dimensional time series. Biometrika, 98(4):901–918, 2011.

- [22] Finn Lindgren, Håvard Rue, and Johan Lindström. An explicit link between Gaussian fields and Gaussian Markov random fields: the stochastic partial differential equation approach. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 73(4):423–498, 2011.

- [23] Fei Lu, Ming Zhong, Sui Tang, and Mauro Maggioni. Nonparametric inference of interaction laws in systems of agents from trajectory data. Proceedings of the National Academy of Sciences, 116(29):14424–14433, 2019.

- [24] Yimin Luo, Mengyang Gu, Minwook Park, Xinyi Fang, Younghoon Kwon, Juan Manuel Urueña, Javier Read de Alaniz, Matthew E Helgeson, Cristina M Marchetti, and Megan T Valentine. Molecular-scale substrate anisotropy, crowding and division drive collective behaviours in cell monolayers. Journal of the Royal Society Interface, 20(204):20230160, 2023.

- [25] Suman Majumder, Yawen Guan, Brian J Reich, and Arvind K Saibaba. Kryging: geostatistical analysis of large-scale datasets using Krylov subspace methods. Statistics and Computing, 32(5):74, 2022.

- [26] Giovanni Petris, Sonia Petrone, and Patrizia Campagnoli. Dynamic linear models. In Dynamic linear models with R. Springer, 2009.

- [27] Raquel Prado and Mike West. Time series: modeling, computation, and inference. Chapman and Hall/CRC, 2010.

- [28] Dennis C Rapaport. The art of molecular dynamics simulation. Cambridge university press, 2004.

- [29] Arvind K Saibaba, Alen Alexanderian, and Ilse CF Ipsen. Randomized matrix-free trace and log-determinant estimators. Numerische Mathematik, 137(2):353–395, 2017.

- [30] Edward Snelson and Zoubin Ghahramani. Sparse Gaussian processes using pseudo-inputs. Advances in neural information processing systems, 18:1257, 2006.

- [31] Michael L Stein. Interpolation of spatial data: some theory for kriging. Springer Science & Business Media, 2012.

- [32] Michael L Stein, Jie Chen, and Mihai Anitescu. Stochastic approximation of score functions for Gaussian processes. The Annals of Applied Statistics, 7(2):1162–1191, 2013.

- [33] Jonathan R Stroud, Michael L Stein, Barry M Lesht, David J Schwab, and Dmitry Beletsky. An ensemble Kalman filter and smoother for satellite data assimilation. Journal of the american statistical association, 105(491):978–990, 2010.

- [34] Aldo V Vecchia. Estimation and model identification for continuous spatial processes. Journal of the Royal Statistical Society: Series B (Methodological), 50(2):297–312, 1988.

- [35] Tamás Vicsek, András Czirók, Eshel Ben-Jacob, Inon Cohen, and Ofer Shochet. Novel type of phase transition in a system of self-driven particles. Physical review letters, 75(6):1226, 1995.

- [36] Elise Walck-Shannon and Jeff Hardin. Cell intercalation from top to bottom. Nature Reviews Molecular Cell Biology, 15(1):34–48, 2014.

- [37] M. West and P. J. Harrison. Bayesian Forecasting & Dynamic Models. Springer Verlag, 2nd edition, 1997.

- [38] Peter Whittle. On stationary processes in the plane. Biometrika, pages 434–449, 1954.