The Dialog Must Go On:

Improving Visual Dialog via Generative Self-Training

Abstract

Visual dialog (VisDial) is a task of answering a sequence of questions grounded in an image, using the dialog history as context. Prior work has trained the dialog agents solely on VisDial data via supervised learning or leveraged pre-training on related vision-and-language datasets. This paper presents a semi-supervised learning approach for visually-grounded dialog, called Generative Self-Training (GST), to leverage unlabeled images on the Web. Specifically, GST first retrieves in-domain images through out-of-distribution detection and generates synthetic dialogs regarding the images via multimodal conditional text generation. GST then trains a dialog agent on the synthetic and the original VisDial data. As a result, GST scales the amount of training data up to an order of magnitude that of VisDial (1.2M 12.9M QA data). For robust training of the synthetic dialogs, we also propose perplexity-based data selection and multimodal consistency regularization. Evaluation on VisDial v1.0 and v0.9 datasets shows that GST achieves new state-of-the-art results on both datasets. We further observe the robustness of GST against both visual and textual adversarial attacks. Finally, GST yields strong performance gains in the low-data regime. Code is available at https://github.com/gicheonkang/gst-visdial.

1 Introduction

Recently, there has been extensive research towards developing visually-grounded dialog systems [12, 13, 41, 37] due to their significance in many real-world applications (e.g., helping visually impaired person). Notably, Visual Dialog (VisDial) [12] has provided a testbed for studying such systems, where a dialog agent should answer a sequence of image-grounded questions. For instance, the agent is expected to answer open-ended questions like “What color is it?” and “How old does she look?”. This task requires a holistic understanding of visual information, linguistic semantics in context (e.g., it and she), and most importantly, the grounding of these two.

Most of the previous approaches in VisDial [55, 79, 90, 40, 61, 76, 22, 19, 33, 96, 10, 29, 28, 60, 9, 34] have trained the dialog agents solely on VisDial data via supervised learning. More recent studies [59, 89, 8] have employed self-supervised pre-trained models such as BERT [14] or ViLBERT [54] and finetuned them on VisDial data. The models are typically pre-trained to recover masked inputs and predict the semantic alignment between two segments. This pretrain-then-transfer learning strategy has shown promising results by transferring knowledge from the models pre-trained on large-scale data sources [83, 4, 97] to VisDial.

Our research question is the following: How can the dialog agent expand its knowledge beyond what it can acquire via supervised learning or self-supervised pre-training on the provided datasets? Some recent studies have shown that semi-supervised learning and pre-training have complementary modeling capabilities in image [98] and text classification [16]. Inspired by them, we consider semi-supervised learning (SSL) as a way to address the above question.

Let us assume that large amounts of unlabeled images are available. SSL for VisDial can be applied to generate synthetic conversations for the unlabeled images and train the agent with the synthetic data. However, there are two critical challenges to this approach. First, the target output for VisDial (i.e., multi-turn visual QA data) is more complex than that of the aforementioned studies [98, 16]. Specifically, they have addressed the classification problems, yielding class probabilities as pseudo labels [45]. In contrast, SSL for VisDial should generate a sequence of pseudo queries (i.e., visual questions) and pseudo labels (i.e., corresponding answers) in natural language to train the answering agent. It further indicates that the target output should be generated while considering the multimodal and sequential nature of the visual dialog task. Next, even if SSL yields synthetic dialogs via text generation, there may be noise, such as generating irrelevant questions or incorrect answers to given contexts. A robust training method is required to leverage such noisy synthetic dialog datasets.

In this paper, we study the above challenges in the context of SSL, especially self-training [98, 16, 45, 6, 84, 91, 92, 23, 77, 50, 71, 58, 35, 31, 85], where a teacher model trained on labeled data predicts the pseudo labels for unlabeled data. Then, a student model jointly learns on the labeled and the pseudo-labeled datasets. Unlike existing studies in self-training that have mainly studied uni-modal, discriminative tasks such as image classification [92, 98, 84] or text classification [16, 58, 35], we extend the idea of self-training to the task of multimodal conditional text generation.

To this end, we propose a new learning strategy, called Generative Self-Training (GST), that artificially generates multi-turn visual QA data and utilizes the synthetic data for training. GST first trains the teacher model (answerer) and the visual question generation model (questioner) using VisDial data. It then retrieves a set of unlabeled images from a Web image dataset, Conceptual 12M [7]. Next, the questioner and the teacher generate a series of visual QA pairs for the retrieved images. Finally, the student is trained on the synthetic and the original VisDial data. We also propose perplexity-based data selection (PPL) and multimodal consistency regularization (MCR) to effectively train the student with the noisy dialog data. PPL is to selectively utilize the answers whose perplexity of the teacher is below a threshold. MCR encourages the student to yield consistent predictions when the perturbed multimodal inputs are given. As a result, GST successfully augments the synthetic VisDial data (11.7M QA pairs), thus mitigating the need to scale up the size of the human-annotated VisDial data, which is prohibitively expensive and time-consuming.

Our key contributions are three-fold. First, we propose Generative Self-Training (GST) that generates multi-turn visual QA data to leverage unlabeled Web images effectively. Second, experiments show that GST achieves new state-of-the-art performance on VisDial v1.0 and v0.9 datasets. We further demonstrate two important results: (1) GST is indeed effective when the human-annotated visual dialog data is extremely scarce (improving up to 11.09 absolute points on NDCG), and (2) PPL and MCR are effective when training the noisy synthetic dialog data. Third, to validate the robustness of GST, we evaluate our proposed method under three different visual and textual adversarial attacks, i.e., FGSM, coreference, and random token attacks. We observe that GST significantly improves the performance compared with the baseline models against all adversarial attacks, especially boosting NDCG scores from 21.60% to 45.43% in the FGSM attack [20].

2 Related work

Visual dialog. Visual Dialog (VisDial) [12] has been proposed as an extended version of Visual Question Answering (VQA) [4, 3, 36], where a dialog agent should answer a series of interdependent questions using an image and the dialog history. Prior work has developed a variety attention mechanisms [55, 79, 90, 40, 61, 76, 22, 19, 33, 60] considering the interactions among the image, dialog history, and question. Some studies [96, 34] have attempted to discover the semantic structures of the dialog in the context of graph neural networks [74] using the soft attention mechanisms [5]. From the learning algorithm perspective, all of them have relied on supervised learning on VisDial data. More recently, a line of research [59, 89, 8] has employed self-supervised pre-training to leverage the knowledge of related vision-and-language datasets [83, 4, 97]. However, our approach is based on semi-supervised learning and produces the task-specific data (i.e., visual dialogs) for unlabeled images to train the dialog agent.

Sequence generation in vision-and-language tasks. Many studies have generated natural language for the visual inputs such as image captioning [93, 3], video captioning [26, 63], visual question generation (VQG) [32, 42, 18, 53, 64, 27], visual dialog (VisDial) [12, 19], and video dialog [2, 44]. Furthermore, recent studies [94, 46] have produced text data for vision-and-language pre-training. GST is similar to these studies in that the model generates the text data, but our focus is on studying the effect of semi-supervised learning (SSL) on top of such pre-training approaches. To the best of our knowledge, GST is the first approach to show the efficacy of SSL throughout a wide range of visual QA tasks.

Neural dialog generation. In NLP literature, extensive studies have been conducted regarding neural dialogue generation for both open-domain dialogue [95, 82, 47, 80, 73, 48] and task-oriented dialogue [88, 25]. Our approach is similar to neural dialogue generation in that the model should generate a corresponding response based on the dialog history and the current utterance. However, we aim to produce visually-grounded dialogs, and thus the image-groundedness of the question and the semantic correctness of the answer are important. On the other hand, neural dialogue generation considers many different aspects: specificity, response-relatedness [78], interestingness [56], and diversity [47].

3 Approach

3.1 Preliminaries

Self-training. We have a labeled dataset = and an unlabeled dataset = . Typically, self-training trains a teacher model on the labeled dataset . The teacher then predicts the pseudo label for the unlabeled data , constructing the pseudo-labeled dataset = . Finally, a student model is trained on . Many variants have been studied on this setup: (1) selecting the subset of the pseudo-labeled dataset [23, 92, 84], (2) adding noise to inputs [98, 23, 92, 91, 84], and (3) iterating the above setup multiple times [23, 92].

Visual dialog. The visual dialog (VisDial) dataset [12] contains an image and a visually-grounded dialog = where denotes an image caption. is the number of rounds for each dialog. At round , a dialog agent is given a triplet as an input, consisting of the image, the dialog history, and a visual question. denotes all dialog rounds before the -th round. The agent is then expected to predict a ground-truth answer . There are two broad classes of methods in VisDial: generative and discriminative. Generative models aim to generate the ground-truth answer by maximizing the log-likelihood of . In contrast, discriminative models are trained to retrieve the ground-truth answer from a list of answer candidates . Our main focus is the generative models since they do not need pre-defined answer candidates and are thus more practical to be deployed in real-world applications.

3.2 Generative Self-Training (GST)

This subsection describes our approach, called GST, which generates multi-turn visual QA data and utilizes the generated data for training. An overview of GST is shown in Figure 1. We have a human-labeled VisDial dataset where is a given image, and each dialog consists of an image caption and rounds of QA pairs. In the following, we omit the superscript in the ground-truth answer for brevity. GST first trains a teacher and a questioner with the labeled dataset via supervised learning. It then retrieves unlabeled images from the Conceptual 12M dataset [7] using a simple outlier detection model, the multivariate normal distribution. Next, the questioner and the teacher generate the visually-grounded dialog for the unlabeled image via multimodal conditional text generation, finally yielding a synthetic dialog dataset . We call this dataset the silver VisDial data to distinguish it from the human-labeled VisDial dataset [12] (short for the gold VisDial data). Finally, a student is trained on a combination of the gold and the silver VisDial data while applying perplexity-based data selection (PPL) and multimodal consistency regularization (MCR) to the silver VisDial data. We describe the details of each process in the following parts.

Teacher & questioner training. First, a series of question-and-answer pairs for the unlabeled images should be generated to train the answering agent. Accordingly, GST first trains the answer generator, the teacher model , on the gold VisDial dataset. Specifically, the teacher learns to generate the ground-truth answer’s word sequence , given the context triplet , consisting of the image, the dialog history, and the question. It is optimized by minimizing the negative log-likelihood of the ground-truth answer. Formally,

| (1) |

where , , and denote the number of data tuples in gold VisDial data, dialog rounds, and the sequence length of the ground-truth answer, respectively. indicates all word tokens before the -th token in the answer sequence. Similar to the teacher, the questioner is trained to generate the question at round , given the image and the dialog history until round (i.e., ). The questioner is also optimized by minimizing the negative log-likelihood of the follow-up question. Note that the teacher and the questioner are trained separately to prevent possible unintended co-adaptation [37]. Both the teacher and the questioner are based on encoder-decoder architecture, where an encoder aggregates the context triplet, and a decoder generates the target sentence. We implement the models by integrating a pre-trained vision-and-language encoder, ViLBERT [54], with the transformer decoder [72]. We refer readers to Appendix A for a detailed architecture.

Unlabeled in-domain image retrieval (IIR). Inspired by the work [16] that highlighted the importance of using in-domain data, GST retrieves in-domain image data from the Conceptual 12M dataset [7] with an out-of-distribution (OOD) detection model. Specifically, we extract the dimensional feature vector for each image in the gold VisDial dataset by using the Vision Transformer (ViT) [15] in the CLIP model [67], yielding a feature matrix for the entire images . Based on the matrix, we build the multivariate normal distribution whose dimension is , i.e., . We regard this normal distribution as the empirical distribution of the gold VisDial images and perform OOD detection by identifying the probability of each feature vector for the unlabeled image. Consequently, the top- unlabeled images are retrieved out of 12 million Web images ( million).

Visually-grounded dialog generation. This step mimics a scenario where two people have a conversation about the given images. Given the retrieved images , our goal is to generate the visually-grounded dialogs where each dialog consists of the image caption and rounds of QA pairs. In an actual implementation, we use the image captions in the Conceptual 12M dataset [7] and thus do not generate the captions. The QA pairs are sequentially generated. Concretely, the image , the caption , and the generated QA pairs until round are used as inputs when the questioner generates the question at round (i.e., ). After then, the teacher produces the corresponding answer based on the image , the dialog history , and the question . Finally, GST produces the silver VisDial dataset .

Student training with noisy data. In Figure 1, the student is trained on the combination of the silver and the gold VisDial data. According to many studies [92, 23, 84, 98] in self-training, selectively utilizing the samples in the pseudo-labeled dataset is a common approach since the confidence of the teacher model’s predictions varies from sample to sample. To this end, we introduce a simple yet effective data selection method for the sequence generation problem, perplexity-based data selection (PPL). PPL is to utilize the answers whose perplexity of the teacher is below a certain threshold. Perplexity is defined as the exponentiated average negative log-likelihood of a sequence; the lower, the better. We hypothesize that PPL, albeit noisy, can be an indicator of whether the generated answer is correct or not, as in [81]. Furthermore, inspired by the consistency regularization [91, 84] widely utilized in recent SSL algorithms, we also propose the multimodal consistency regularization (MCR) to improve the generalization capability of the student. MCR encourages the student to yield predictions similar to the teacher’s predictions even when the student is provided with perturbed multimodal inputs. Finally, we design a loss function for the student as:

| (2) |

where , , and denote the number of data tuples in silver VisDial data, indicator function, and selection threshold, respectively. denotes the context for the silver VisDial data. The loss function is the sum of the losses for the silver and the gold VisDial data. PPL and MCR are applied to compute the loss of the silver VisDial data. PPL is used in the indicator function above, selecting the synthetic answers whose perplexity of the teacher is below the threshold . It implies that the unselected answers are ignored during training. The teacher’s perplexity of each answer is computed in the dialog generation step above. Next, denotes the stochastic function for MCR that injects perturbations to the input space of the student. Inspired by ViLBERT [54], we implement the stochastic function by randomly masking 15% of image regions and word tokens. Specifically, masked image regions have their image features zeroed out, and the masked word tokens are replaced with a special [MASK] token. The intuition behind MCR is minimizing the distance between the perturbed (i.e., masked) predictions from the student and the unperturbed predictions (i.e., ) from the teacher. It indicates that the perturbation is not injected when the teacher generates the synthetic answers. We believe MCR makes the student robust to the input noise, and PPL encourages the student to maintain a low entropy (i.e., confident) in noisy data training. The student and the teacher have the same model capacity and are based on the same model architecture.

4 Experiments

4.1 Experimental setup

VisDial datasets. We evaluate our proposed approach on the VisDial v1.0 and v0.9 datasets [12], collected by the AMT chatting between two workers about MS-COCO [52] images. Each dialog consists of a caption from COCO and a sequence of ten QA pairs. The VisDial v0.9 dataset has 83k dialogs on COCO-train and 40k dialogs on COCO-validation images. More recently, Das et al. [12] released additional 10k dialogs on Flickr images to use them as validation and test splits for the VisDial v1.0 dataset. As a result, the VisDial v1.0 dataset contains 123k, 2k, and 8k dialogs as train, validation, and test split. This dataset is licensed under a Creative Commons Attribution 4.0 International License.

Evaluation protocol. We follow the standard evaluation protocol established in the work [12] for evaluating visual dialog models. The visual dialog models for both generative and discriminative tasks have been evaluated by the retrieval-based evaluation metrics: mean reciprocal rank (MRR), recall@k (R@k), mean rank (Mean), and normalized discounted cumulative gain (NDCG). Specifically, all dialogs in VisDial contain a list of 100 answer candidates for each visual question, and there is one ground-truth answer in the answer candidates. The model sorts the answer candidates by the log-likelihood scores and then is evaluated by the four different metrics. MRR, R@k, and Mean consider the rank of the single ground-truth answer, while NDCG111https://visualdialog.org/challenge/2019#evaluation considers all relevant answers from the 100-answers list by using the densely annotated relevance scores for all answer candidates. The community regards NDCG as the primary evaluation metric.

The size of synthetic data. The size of the silver VisDial data (i.e., ) is 3.6M which is 30x larger than that of the gold VisDial data (M). Note that the silver VisDial data contains approximately 36M QA pairs since each dialog contains 10 QA pairs. 11.7M QA pairs out of 36M (32%) are actually utilized after applying perplexity-based data selection when we set the selection threshold to 50. Consequently, the total amount of the training data is nearly 12.9M QA pairs, combining the silver data (11.7M QA pairs) with the original gold data (1.2M QA pairs).

Iterative training. We introduce the concept of iterative training [92, 23], which iterates the self-training algorithm a few times. The iterative training treats the student model at -th iteration as a teacher model at (+1)-th iteration to generate a new synthetic silver data and train a new student. Specifically, the iterative training repeats the third and fourth steps in Figure 1, where the silver VisDial data accumulates as the iteration proceeds. The student model at each iteration is trained with the accumulated silver and gold data by following the previous studies [92, 23]. We iterate GST up to three times. Unless stated otherwise, the student model is trained with three iterations.

4.2 Visual dialog results

Comparison with state-of-the-art. We compare GST with the state-of-the-art approaches on the validation set of the VisDial v1.0 and v0.9 datasets, consisting of UTC [8], MITVG [9], VD-BERT [89], LTMI [60], KBGN [28], DAM [29], ReDAN [19], DMRM [10], Primary [22], RvA [61], CorefNMN [40], CoAtt [90], HCIAE [55], and MN [12]. We decided to use the validation splits since all previous studies benchmarked the models on those splits. In Table 1, GST significantly outperforms all compared methods on all evaluation metrics. Compared with the state-of-the-art model, the student model improves MRR 3.20% (56.83 60.03) and R@1 3.26% (47.14 50.40) on the VisDial v0.9 dataset. The improvement is consistently observed on the VisDial v1.0 dataset, boosting NDCG 1.61% (63.86 65.47) and MRR 0.97% (52.22 53.19). Moreover, it is noticeable that recent strong models (i.e., UTC, MITVG, and VD-BERT) are also built based on the pre-trained weights of ViLBERT [54], transformer [87], and BERT [14], respectively. Our proposed method also achieves new state-of-the-art results on the discriminative VisDial models. Details can be found in Appendix B.

| VisDial v0.9 (val) | VisDial v1.0 (val) | ||||||||||

| Model | MRR | R@1 | R@5 | R@10 | Mean | NDCG | MRR | R@1 | R@5 | R@10 | Mean |

| MN [12] | 52.59 | 42.29 | 62.85 | 68.88 | 17.06 | 51.86 | 47.99 | 38.18 | 57.54 | 64.32 | 18.60 |

| HCIAE [55] | 53.86 | 44.06 | 63.55 | 69.24 | 16.01 | 59.70 | 49.07 | 39.72 | 58.23 | 64.73 | 18.43 |

| CoAtt [90] | 55.78 | 46.10 | 65.69 | 71.74 | 14.43 | 59.24 | 49.64 | 40.09 | 59.37 | 65.92 | 17.86 |

| CorefNMN [40] | 53.50 | 43.66 | 63.54 | 69.93 | 15.69 | - | - | - | - | - | - |

| RvA [61] | 55.43 | 45.37 | 65.27 | 72.97 | 10.71 | - | - | - | - | - | - |

| Primary [22] | - | - | - | - | - | - | 49.01 | 38.54 | 59.82 | 66.94 | 16.60 |

| DMRM [10] | 55.96 | 46.20 | 66.02 | 72.43 | 13.15 | - | 50.16 | 40.15 | 60.02 | 67.21 | 15.19 |

| ReDAN [19] | - | - | - | - | - | 60.47 | 50.02 | 40.27 | 59.93 | 66.78 | 17.40 |

| DAM [29] | - | - | - | - | - | 60.93 | 50.51 | 40.53 | 60.84 | 67.94 | 16.65 |

| KBGN [28] | - | - | - | - | - | 60.42 | 50.05 | 40.40 | 60.11 | 66.82 | 17.54 |

| LTMI [60] | - | - | - | - | - | 63.58 | 50.74 | 40.44 | 61.61 | 69.71 | 14.93 |

| VD-BERT [89] | 55.95 | 46.83 | 65.43 | 72.05 | 13.18 | - | - | - | - | - | - |

| MITVG [9] | 56.83 | 47.14 | 67.19 | 73.72 | 11.95 | 61.47 | 51.14 | 41.03 | 61.25 | 68.49 | 14.37 |

| UTC [8] | - | - | - | - | - | 63.86 | 52.22 | 42.56 | 62.40 | 69.51 | 15.67 |

| Student (ours) | 60.03.18 | 50.40.15 | 70.74.09 | 77.15.13 | 12.13.18 | 65.47.14 | 53.19.11 | 43.08.10 | 64.09.05 | 71.51.13 | 14.34.15 |

| NDCG | |||||

| Model | 1% | 5% | 10% | 20% | 30% |

| Teacher | 27.64 | 50.04 | 54.46 | 57.14 | 60.67 |

| Student | 38.73 (+11.09) | 56.60 (+6.56) | 58.62 (+4.16) | 60.92 (+3.78) | 63.09 (+2.42) |

GST in the low-data regime. Is GST also helpful when gold data is scarce? We investigate this question to identify the effect of GST in the low-data regime. We assume that only a small subset of the gold VisDial data (1%, 5%, 10%, 20%, and 30%) is available. Therefore, the size of the gold data is 0.01, 0.05, 0.1, 0.2, and 0.3, respectively. We first train the teacher and the questioner on such scarce data, and then these two agents generate a new silver VisDial data for unlabeled images in the Conceptual 12M dataset [7] with size . The student is then trained on the newly generated silver VisDial data and the small amount of the gold VisDial data. The student is based on a single iterative training, and PPL and MCR are still applied in this experiment. In Table 2, GST yields huge improvements on both metrics, especially NDCG, boosting up to 11.09 absolute points compared with the teacher. We observe that the smaller the amount of gold data, the larger the performance gap between the teacher and the student on NDCG. It implies that GST is helpful, especially when gold data is scarce. We speculate the results in the low-data regime are particularly remarkable in other dialog-based tasks [86, 2, 69, 51] since they are based on relatively small-scaled datasets, and scaling up the size of the human-dialog datasets is laborious and expensive.

Question type analysis. We conduct a question-type analysis to identify what type of questions obtain benefits from GST. We divided the question type into six categories, Yes/No, Color, Objects, Counting, Time/Place, and Others. In Table 3, the student model obtains more gains compared with the teacher model in less frequent question types (e.g., Counting and Time / Place).

4.3 Adversarial robustness results

We introduce a comprehensive evaluation setup for adversarial robustness in VisDial. Specifically, we propose three different adversarial attacks: (1) the FGSM attack, (2) a coreference attack, and (3) a random token attack. The FGSM attack perturbs input visual features, and the others attack the dialog history (i.e., textual inputs).

Baselines. We compare our student model against two ablative baselines: (1) the teacher model and (2) the student model utilizing the entire CC12M images without applying the in-domain image retrieval (i.e., student-iter1-full). We propose the student-iter1-full model to study the effect of the discarded images and the corresponding synthetic dialog data on adversarial robustness.

| Model | Question Type | |||||

| Yes / No | Color | Objects | Counting | Time / Place | Others | |

| (60.4%) | (14.8%) | (5.1%) | (3.1%) | (8.5%) | (9.0%) | |

| Teacher | 66.87 | 60.61 | 53.67 | 49.44 | 69.36 | 61.32 |

| Student | 67.41 (+0.54) | 61.85 (+1.24) | 55.25 (+1.58) | 51.76 (+2.32) | 71.38 (+2.02) | 63.02 (+1.70) |

| Model | No Attack | Coreference Attack | Random Token Attack | |||

| 10% | 20% | 30% | 40% | |||

| Teacher | 56.55 | 52.60 | 54.691.12 | 52.860.79 | 49.412.09 | 45.042.28 |

| Student (iter1, full) | 58.53 | 54.26 | 56.591.37 | 54.551.15 | 50.982.06 | 46.561.96 |

| Student (iter1) | 58.63 | 54.34 | 55.590.88 | 54.261.54 | 51.042.39 | 47.042.03 |

| Student (iter2) | 56.92 | 52.69 | 55.590.88 | 53.571.40 | 49.951.91 | 46.822.02 |

| Student (iter3) | 59.30 | 55.44 | 57.250.91 | 55.101.50 | 52.112.75 | 48.002.90 |

Adversarial robustness against the FGSM attack. The Fast Gradient Signed Method (FGSM) [20] is a white-box attack that perturbs the visual inputs based on the gradients of the loss with respect to the visual inputs. Formally,

| (3) |

where and denote the visual inputs and the corresponding ground-truth labels, respectively. is a hyperparameter that adjusts the intensity of perturbations. However, different from the above setup, each question in VisDial can have one or more relevant answers in the list of answer candidates. We thus define the FGSM attack for VisDial as follows:

| (4) |

where and denote the number of answer candidates and a function that returns the human-annotated relevance scores for each answer candidate, respectively. The relevance scores range from 0 to 1. and are the context triplet (i.e., ) and the -th answer candidate, respectively. The Equation 4 indicates that the gradients of the loss for all relevant answers are considered for the FGSM attack.

As shown in Figure 2, we validate the models with four different epsilon values . The student model shows very significant improvements in NDCG compared with the teacher model. Specifically, the performance gap between the student model with three iterations (i.e., student-iter3) and the teacher model widens up to 23.83 absolute points (21.60 45.43) when is 0.1. It illustrates that GST makes the visual dialog model robust against the FGSM attack even though the student model is not optimized for adversarial robustness. Furthermore, we can clearly identify the efficacy of the iterative training as the intensity of the perturbations increases. The NDCG scores are boosted from 37.82% (iter1) to 45.43% (iter3) at . Finally, the student-iter1 model shows better performance than the student-iter1-full model. It implies that the additional use of the discarded images along with the synthetic dialog does not bring any gains in the FGSM attack.

Adversarial robustness against the textual attacks. We also study the adversarial robustness against textual attacks to illustrate the effect of GST. We decide to perturb the dialog history because it contains useful information to answer the given question (e.g., cues for pronoun). However, according to recent studies [1, 34] in VisDial, not all questions require the dialog history to respond with the correct answers. So the work [1] has proposed a challenging subset of the VisDial validation dataset called VisDialConv. The VisDialConv dataset only contains questions that necessarily require the dialog history to answer (e.g., can you tell what it is for?). The crowd-workers conducted a manual inspection to select such context-dependent questions.

Based on the VisDialConv dataset, we apply two different black-box attacks. First, we propose the coreference attack, which substitutes the noun phrases or pronouns in the dialog history with their synonyms to fool the VisDial models. Specifically, we leverage the off-the-shelf neural coreference resolution tool222https://github.com/huggingface/neuralcoref based on the work [11]. and find words in the dialog history that refer to objects such as those mentioned in a given question. We also borrow the counter-fitting word embeddings [57] similar to textfooler [30] to retrieve the synonyms. We greedily substitute the words with the synonyms with a minimum cosine distance in the embedding space since we observe that the other synonyms harm the original semantics of the dialog history. In Table 4, the student-iter3 model outperforms the teacher model on NDCG by a large margin (2.84%, 52.60 55.44) in the coreference attack. Furthermore, we do not see any merit in utilizing the entire CC12M [7] images and the corresponding synthetic dialog data, comparing the student-iter1-full with the student-iter1.

The random token attack randomly replaces the word or sub-word tokens in the dialog history with a special [MASK] token. The pre-trained BERT model [14] then recovers the masked tokens with masked language modeling (MLM) similar to BERT-ATTACK [49]. Finally, the perturbed dialog history is fed into the visual dialog models. We conduct this experiment by adjusting the probability of random masking up to 40%. As shown in Table 4, we evaluate each model with five random seeds and report the arithmetic mean and the standard deviations. The results demonstrate that GST is relatively robust against the random token attack compared with the baseline models.

4.4 Analysis of the silver VisDial data

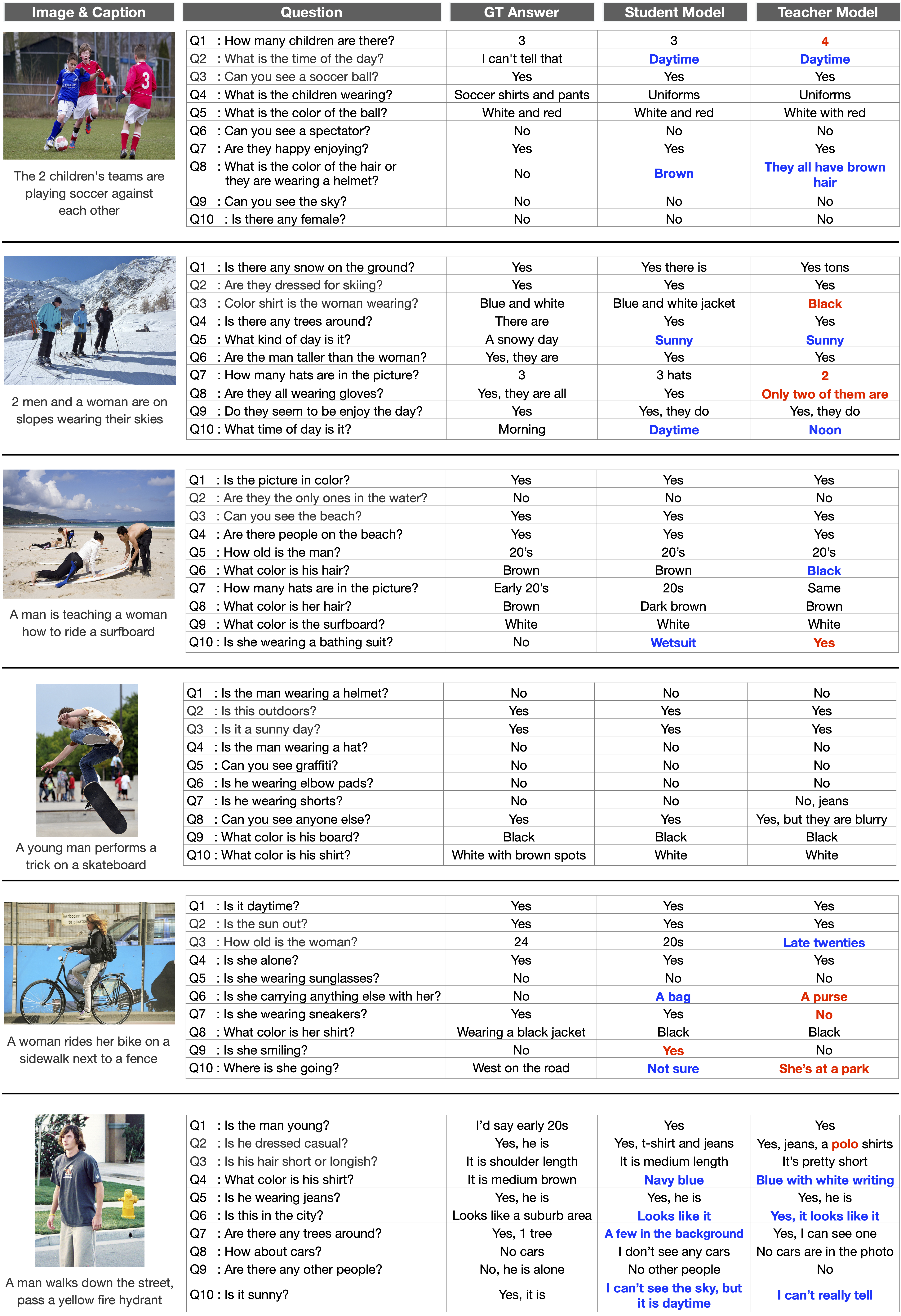

Comparison between silver and gold data. For qualitative analysis of the silver data, we visualize the generated conversations from our proposed models and the ones from humans. We excerpt the human conversation from the VisDial v1.0 validation dataset, and the questioner and the student generate the machine conversation using the image and the caption in the validation data. As shown in Figure 3, diverse visual questions are generated in the silver VisDial data. For example, in D10 of the last example, the questioner asks about “a car” not mentioned by the human questioner and not even presented in the image caption. The student responds correctly to the question. Likewise, from D3 to D6 in the first example, the questioner deals with “a cell phone,” whereas the human questioner deals with different topics. However, we identify that the student sometimes fails to generate correct answers (i.e., the red-colored text), showing the importance of more precise visual grounding.

The diversity of silver questions. We further quantify the generated question’s diversity by comparing the gold questions with the silver ones for the same images in the VisDial v1.0 validation dataset. We extract N-grams for every ten questions (i.e., per image) in the gold and silver data and compare the N-grams between the two. We define the question diversity as the percentage of unique silver N-grams not observed in the gold N-grams. We identify the question diversity by adjusting N from one to four. We generate three silver datasets and report the mean and standard deviations of the question diversity since the questioner performs stochastic decoding (see Appendix D). In Table 5, the diversity significantly increases as N increases (92.80% at N=4). It indicates that the questioner mainly generates different and distinctive 4-grams compared with the human questioner.

| Model | N-gram Diversity | No Match | |||

| N=1 | N=2 | N=3 | N=4 | ||

| Questioner | 28.06 0.14 | 56.46 0.09 | 76.98 0.08 | 92.80 0.08 | 95.38 0.15 |

Furthermore, as shown in No Match at Table 5, the questioner rarely generates the same questions that belong to gold questions. We analyze the answer diversity in Appendix C.

4.5 Ablation study

The results of an ablation study are in Appendix B.2.

5 Conclusion

We propose a semi-supervised learning approach for VisDial, called GST, that generates a synthetic visual dialog dataset for unlabeled Web images via multimodal conditional text generation. GST achieves the new state-of-the-art performance on the VisDial v1.0 and v0.9 datasets. Moreover, we demonstrate the efficacy of GST in low-data regime and adversarial robustness analysis. Finally, GST produces diverse dialogs compared with the human dialog. We believe the idea of GST is generally applicable to other multimodal generative domains and expect GST to open the door to leveraging unlabeled images in many visual QA tasks.

Acknowledgements. This work was supported by the SNU-NAVER Hyperscale AI Center and the Institute of Information & Communications Technology Planning & Evaluation (IITP) (2021-0-01343-GSAI/40%, 2022-0-00953-PICA/30%, 2022-0-00951-LBA/20%, 2021-0-02068-AIHub/10%) grant funded by the Korean government.

References

- [1] Shubham Agarwal, Trung Bui, Joon-Young Lee, Ioannis Konstas, and Verena Rieser. History for visual dialog: Do we really need it? In ACL, 2020.

- [2] Huda Alamri, Vincent Cartillier, Abhishek Das, Jue Wang, Anoop Cherian, Irfan Essa, Dhruv Batra, Tim K Marks, Chiori Hori, Peter Anderson, et al. Audio visual scene-aware dialog. In CVPR, 2019.

- [3] Peter Anderson, Xiaodong He, Chris Buehler, Damien Teney, Mark Johnson, Stephen Gould, and Lei Zhang. Bottom-up and top-down attention for image captioning and visual question answering. In CVPR, 2018.

- [4] Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C Lawrence Zitnick, and Devi Parikh. Vqa: Visual question answering. In ICCV, 2015.

- [5] Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. Neural machine translation by jointly learning to align and translate. In ICLR, 2014.

- [6] David Berthelot, Nicholas Carlini, Ian Goodfellow, Nicolas Papernot, Avital Oliver, and Colin A Raffel. Mixmatch: A holistic approach to semi-supervised learning. In NeurIPS, 2019.

- [7] Soravit Changpinyo, Piyush Sharma, Nan Ding, and Radu Soricut. Conceptual 12m: Pushing web-scale image-text pre-training to recognize long-tail visual concepts. In CVPR, 2021.

- [8] Cheng Chen, Yudong Zhu, Zhenshan Tan, Qingrong Cheng, Xin Jiang, Qun Liu, and Xiaodong Gu. Utc: A unified transformer with inter-task contrastive learning for visual dialog. In CVPR, 2022.

- [9] Feilong Chen, Fandong Meng, Xiuyi Chen, Peng Li, and Jie Zhou. Multimodal incremental transformer with visual grounding for visual dialogue generation. In ACL, 2021.

- [10] Feilong Chen, Fandong Meng, Jiaming Xu, Peng Li, Bo Xu, and Jie Zhou. Dmrm: A dual-channel multi-hop reasoning model for visual dialog. In AAAI, 2020.

- [11] Kevin Clark and Christopher D Manning. Deep reinforcement learning for mention-ranking coreference models. In ACL, 2016.

- [12] Abhishek Das, Satwik Kottur, Khushi Gupta, Avi Singh, Deshraj Yadav, José MF Moura, Devi Parikh, and Dhruv Batra. Visual dialog. In CVPR, 2017.

- [13] Harm De Vries, Florian Strub, Sarath Chandar, Olivier Pietquin, Hugo Larochelle, and Aaron Courville. Guesswhat?! visual object discovery through multi-modal dialogue. In CVPR, 2017.

- [14] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. In NAACL, 2019.

- [15] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. In ICLR, 2021.

- [16] Jingfei Du, Edouard Grave, Beliz Gunel, Vishrav Chaudhary, Onur Celebi, Michael Auli, Ves Stoyanov, and Alexis Conneau. Self-training improves pre-training for natural language understanding. In NAACL, 2021.

- [17] Angela Fan, Mike Lewis, and Yann Dauphin. Hierarchical neural story generation. In ACL, 2018.

- [18] Zhihao Fan, Zhongyu Wei, Piji Li, Yanyan Lan, and Xuanjing Huang. A question type driven framework to diversify visual question generation. In IJCAI, 2018.

- [19] Zhe Gan, Yu Cheng, Ahmed EI Kholy, Linjie Li, Jingjing Liu, and Jianfeng Gao. Multi-step reasoning via recurrent dual attention for visual dialog. In ACL, 2019.

- [20] Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. In ICLR, 2015.

- [21] Yves Grandvalet and Yoshua Bengio. Semi-supervised learning by entropy minimization. In NIPS, 2005.

- [22] Dalu Guo, Chang Xu, and Dacheng Tao. Image-question-answer synergistic network for visual dialog. In CVPR, 2019.

- [23] Junxian He, Jiatao Gu, Jiajun Shen, and Marc’Aurelio Ranzato. Revisiting self-training for neural sequence generation. In ICLR, 2020.

- [24] Ari Holtzman, Jan Buys, Maxwell Forbes, Antoine Bosselut, David Golub, and Yejin Choi. Learning to write with cooperative discriminators. In ACL, 2018.

- [25] Xinting Huang, Jianzhong Qi, Yu Sun, and Rui Zhang. Mala: Cross-domain dialogue generation with action learning. In AAAI, 2020.

- [26] Vladimir Iashin and Esa Rahtu. Multi-modal dense video captioning. In CVPR Workshops, 2020.

- [27] Unnat Jain, Ziyu Zhang, and Alexander G Schwing. Creativity: Generating diverse questions using variational autoencoders. In CVPR, 2017.

- [28] Xiaoze Jiang, Siyi Du, Zengchang Qin, Yajing Sun, and Jing Yu. Kbgn: Knowledge-bridge graph network for adaptive vision-text reasoning in visual dialogue. In Proceedings of the 28th ACM International Conference on Multimedia, pages 1265–1273, 2020.

- [29] Xiaoze Jiang, Jing Yu, Yajing Sun, Zengchang Qin, Zihao Zhu, Yue Hu, and Qi Wu. Dam: Deliberation, abandon and memory networks for generating detailed and non-repetitive responses in visual dialogue. In IJCAI, 2020.

- [30] Di Jin, Zhijing Jin, Joey Tianyi Zhou, and Peter Szolovits. Is bert really robust? a strong baseline for natural language attack on text classification and entailment. In AAAI, 2020.

- [31] Hwiyeol Jo and Ceyda Cinarel. Delta-training: Simple semi-supervised text classification using pretrained word embeddings. In EMNLP, 2019.

- [32] Shen Kai, Lingfei Wu, Siliang Tang, Yueting Zhuang, Zhuoye Ding, Yun Xiao, Bo Long, et al. Learning to generate visual questions with noisy supervision. In NeurIPS, 2021.

- [33] Gi-Cheon Kang, Jaeseo Lim, and Byoung-Tak Zhang. Dual attention networks for visual reference resolution in visual dialog. In EMNLP, 2019.

- [34] Gi-Cheon Kang, Junseok Park, Hwaran Lee, Byoung-Tak Zhang, and Jin-Hwa Kim. Reasoning visual dialog with sparse graph learning and knowledge transfer. In EMNLP, 2021.

- [35] Giannis Karamanolakis, Subhabrata Mukherjee, Guoqing Zheng, and Ahmed Hassan Awadallah. Self-training with weak supervision. In NAACL, 2021.

- [36] Jin-Hwa Kim, Jaehyun Jun, and Byoung-Tak Zhang. Bilinear attention networks. In NeurIPS, volume 31, 2018.

- [37] Jin-Hwa Kim, Nikita Kitaev, Xinlei Chen, Marcus Rohrbach, Byoung-Tak Zhang, Yuandong Tian, Dhruv Batra, and Devi Parikh. Codraw: Collaborative drawing as a testbed for grounded goal-driven communication. In ACL, 2019.

- [38] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In ICLR, 2014.

- [39] Guillaume Klein, Yoon Kim, Yuntian Deng, Jean Senellart, and Alexander M Rush. Opennmt: Open-source toolkit for neural machine translation. In ACL, 2017.

- [40] Satwik Kottur, José MF Moura, Devi Parikh, Dhruv Batra, and Marcus Rohrbach. Visual coreference resolution in visual dialog using neural module networks. In ECCV, 2018.

- [41] Satwik Kottur, José M. F. Moura, Devi Parikh, Dhruv Batra, and Marcus Rohrbach. Clevr-dialog: A diagnostic dataset for multi-round reasoning in visual dialog. In NAACL, 2019.

- [42] Ranjay Krishna, Michael Bernstein, and Li Fei-Fei. Information maximizing visual question generation. In CVPR, 2019.

- [43] Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A Shamma, et al. Visual genome: Connecting language and vision using crowdsourced dense image annotations. In ICCV, 2017.

- [44] Hung Le, Doyen Sahoo, Nancy F Chen, and Steven CH Hoi. Multimodal transformer networks for end-to-end video-grounded dialogue systems. In ACL, 2019.

- [45] Dong-Hyun Lee et al. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In ICML Workshop on challenges in representation learning, 2013.

- [46] Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. arXiv preprint arXiv:2201.12086, 2022.

- [47] Jiwei Li, Will Monroe, Alan Ritter, Michel Galley, Jianfeng Gao, and Dan Jurafsky. Deep reinforcement learning for dialogue generation. In EMNLP, 2016.

- [48] Jiwei Li, Will Monroe, Tianlin Shi, Sébastien Jean, Alan Ritter, and Dan Jurafsky. Adversarial learning for neural dialogue generation. In EMNLP, 2017.

- [49] Linyang Li, Ruotian Ma, Qipeng Guo, Xiangyang Xue, and Xipeng Qiu. Bert-attack: Adversarial attack against bert using bert. In EMNLP, 2020.

- [50] Xinzhe Li, Qianru Sun, Yaoyao Liu, Qin Zhou, Shibao Zheng, Tat-Seng Chua, and Bernt Schiele. Learning to self-train for semi-supervised few-shot classification. In NeurIPS, volume 32, 2019.

- [51] Yanran Li, Hui Su, Xiaoyu Shen, Wenjie Li, Ziqiang Cao, and Shuzi Niu. Dailydialog: A manually labelled multi-turn dialogue dataset. In IJCNLP, 2017.

- [52] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft coco: Common objects in context. In ECCV, 2014.

- [53] Feng Liu, Tao Xiang, Timothy M Hospedales, Wankou Yang, and Changyin Sun. ivqa: Inverse visual question answering. In CVPR, 2018.

- [54] Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan Lee. Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In NeurIPS, 2019.

- [55] Jiasen Lu, Anitha Kannan, Jianwei Yang, Devi Parikh, and Dhruv Batra. Best of both worlds: Transferring knowledge from discriminative learning to a generative visual dialog model. In NIPS, 2017.

- [56] Shikib Mehri and Maxine Eskenazi. Unsupervised evaluation of interactive dialog with dialogpt. In SIGDIAL, 2020.

- [57] Nikola Mrkšić, Diarmuid O Séaghdha, Blaise Thomson, Milica Gašić, Lina Rojas-Barahona, Pei-Hao Su, David Vandyke, Tsung-Hsien Wen, and Steve Young. Counter-fitting word vectors to linguistic constraints. In NAACL, 2016.

- [58] Subhabrata Mukherjee and Ahmed Hassan Awadallah. Uncertainty-aware self-training for text classification with few labels. In NeurIPS, 2020.

- [59] Vishvak Murahari, Dhruv Batra, Devi Parikh, and Abhishek Das. Large-scale pretraining for visual dialog: A simple state-of-the-art baseline. In ECCV, 2020.

- [60] Van-Quang Nguyen, Masanori Suganuma, and Takayuki Okatani. Efficient attention mechanism for visual dialog that can handle all the interactions between multiple inputs. In ECCV, 2020.

- [61] Yulei Niu, Hanwang Zhang, Manli Zhang, Jianhong Zhang, Zhiwu Lu, and Ji-Rong Wen. Recursive visual attention in visual dialog. In CVPR, 2019.

- [62] Vicente Ordonez, Girish Kulkarni, and Tamara Berg. Im2text: Describing images using 1 million captioned photographs. In NIPS, 2011.

- [63] Yingwei Pan, Ting Yao, Houqiang Li, and Tao Mei. Video captioning with transferred semantic attributes. In CVPR, 2017.

- [64] Badri N Patro, Sandeep Kumar, Vinod K Kurmi, and Vinay P Namboodiri. Multimodal differential network for visual question generation. In EMNLP, 2018.

- [65] Romain Paulus, Caiming Xiong, and Richard Socher. A deep reinforced model for abstractive summarization. In ICLR, 2018.

- [66] Jiaxin Qi, Yulei Niu, Jianqiang Huang, and Hanwang Zhang. Two causal principles for improving visual dialog. In CVPR, 2020.

- [67] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In ICML, 2021.

- [68] Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever, et al. Language models are unsupervised multitask learners. OpenAI blog, 1(8):9, 2019.

- [69] Hannah Rashkin, Eric Michael Smith, Margaret Li, and Y-Lan Boureau. Towards empathetic open-domain conversation models: A new benchmark and dataset. In ACL, 2019.

- [70] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. In NIPS, 2015.

- [71] Chuck Rosenberg, Martial Hebert, and Henry Schneiderman. Semi-supervised self-training of object detection models. In IEEE Workshops on Application of Computer Vision, 2005.

- [72] Sascha Rothe, Shashi Narayan, and Aliaksei Severyn. Leveraging pre-trained checkpoints for sequence generation tasks. In Transactions of the Association for Computational Linguistics, 2020.

- [73] Abdelrhman Saleh, Natasha Jaques, Asma Ghandeharioun, Judy Shen, and Rosalind Picard. Hierarchical reinforcement learning for open-domain dialog. In AAAI, 2020.

- [74] Franco Scarselli, Marco Gori, Ah Chung Tsoi, Markus Hagenbuchner, and Gabriele Monfardini. The graph neural network model. In IEEE Transactions on Neural Networks. IEEE, 2008.

- [75] Christoph Schuhmann, Richard Vencu, Romain Beaumont, Robert Kaczmarczyk, Clayton Mullis, Aarush Katta, Theo Coombes, Jenia Jitsev, and Aran Komatsuzaki. Laion-400m: Open dataset of clip-filtered 400 million image-text pairs. arXiv preprint arXiv:2111.02114, 2021.

- [76] Idan Schwartz, Seunghak Yu, Tamir Hazan, and Alexander G Schwing. Factor graph attention. In CVPR, 2019.

- [77] Henry Scudder. Probability of error of some adaptive pattern-recognition machines. In IEEE Transactions on Information Theory, 1965.

- [78] Abigail See, Stephen Roller, Douwe Kiela, and Jason Weston. What makes a good conversation? how controllable attributes affect human judgments. In NAACL, 2019.

- [79] Paul Hongsuck Seo, Andreas Lehrmann, Bohyung Han, and Leonid Sigal. Visual reference resolution using attention memory for visual dialog. In NIPS, 2017.

- [80] Iulian Serban, Alessandro Sordoni, Ryan Lowe, Laurent Charlin, Joelle Pineau, Aaron Courville, and Yoshua Bengio. A hierarchical latent variable encoder-decoder model for generating dialogues. In AAAI, 2017.

- [81] Siamak Shakeri, Cicero Nogueira dos Santos, Henry Zhu, Patrick Ng, Feng Nan, Zhiguo Wang, Ramesh Nallapati, and Bing Xiang. End-to-end synthetic data generation for domain adaptation of question answering systems. In EMNLP, 2020.

- [82] Lifeng Shang, Zhengdong Lu, and Hang Li. Neural responding machine for short-text conversation. In ACL, 2015.

- [83] Piyush Sharma, Nan Ding, Sebastian Goodman, and Radu Soricut. Conceptual captions: A cleaned, hypernymed, image alt-text dataset for automatic image captioning. In ACL, 2018.

- [84] Kihyuk Sohn, David Berthelot, Nicholas Carlini, Zizhao Zhang, Han Zhang, Colin A Raffel, Ekin Dogus Cubuk, Alexey Kurakin, and Chun-Liang Li. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. In NeurIPS, 2020.

- [85] Nandan Thakur, Nils Reimers, Johannes Daxenberger, and Iryna Gurevych. Augmented sbert: Data augmentation method for improving bi-encoders for pairwise sentence scoring tasks. In NAACL, 2021.

- [86] Jesse Thomason, Michael Murray, Maya Cakmak, and Luke Zettlemoyer. Vision-and-dialog navigation. In CoRL, 2020.

- [87] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In NIPS, 2017.

- [88] Kai Wang, Junfeng Tian, Rui Wang, Xiaojun Quan, and Jianxing Yu. Multi-domain dialogue acts and response co-generation. In ACL, 2020.

- [89] Yue Wang, Shafiq Joty, Michael R Lyu, Irwin King, Caiming Xiong, and Steven CH Hoi. Vd-bert: A unified vision and dialog transformer with bert. In EMNLP, 2020.

- [90] Qi Wu, Peng Wang, Chunhua Shen, Ian Reid, and Anton Van Den Hengel. Are you talking to me? reasoned visual dialog generation through adversarial learning. In CVPR, 2018.

- [91] Qizhe Xie, Zihang Dai, Eduard Hovy, Thang Luong, and Quoc Le. Unsupervised data augmentation for consistency training. In NeurIPS, 2020.

- [92] Qizhe Xie, Minh-Thang Luong, Eduard Hovy, and Quoc V Le. Self-training with noisy student improves imagenet classification. In CVPR, 2020.

- [93] Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhudinov, Rich Zemel, and Yoshua Bengio. Show, attend and tell: Neural image caption generation with visual attention. In ICML, 2015.

- [94] Antoine Yang, Antoine Miech, Josef Sivic, Ivan Laptev, and Cordelia Schmid. Just ask: Learning to answer questions from millions of narrated videos. In ICCV, 2021.

- [95] Yizhe Zhang, Siqi Sun, Michel Galley, Yen-Chun Chen, Chris Brockett, Xiang Gao, Jianfeng Gao, Jingjing Liu, and Bill Dolan. Dialogpt: Large-scale generative pre-training for conversational response generation. In ACL, 2020.

- [96] Zilong Zheng, Wenguan Wang, Siyuan Qi, and Song-Chun Zhu. Reasoning visual dialogs with structural and partial observations. In CVPR, 2019.

- [97] Yukun Zhu, Ryan Kiros, Rich Zemel, Ruslan Salakhutdinov, Raquel Urtasun, Antonio Torralba, and Sanja Fidler. Aligning books and movies: Towards story-like visual explanations by watching movies and reading books. In ICCV, 2015.

- [98] Barret Zoph, Golnaz Ghiasi, Tsung-Yi Lin, Yin Cui, Hanxiao Liu, Ekin Dogus Cubuk, and Quoc Le. Rethinking pre-training and self-training. In NeurIPS, 2020.

Supplementary Materials

The supplementary materials are organized as:

Appendix A Details of model architecture

A detailed architecture of our proposed model is presented in Figure 4. We use the encoder-decoder model, where the encoder aggregates the multimodal context, and the decoder generates the target sentence using the hidden states of the encoder. The answerer models (i.e., the student and the teacher) utilize the given image, the dialog history, and the question as the context. On the other hand, the questioner uses the given image and the dialog history as context to generate the question.

We employ the ViLBERT model [54] as our encoder. We employ the BERT model [14] for sequence generation [72] as our autoregressive decoder. The decoder has 12 layers of transformer blocks, with each block having 12 attention heads and a hidden size of 768. We present a detailed view of the encoder in (b) for Figure 4. The encoder consists of the vision stream and the language stream. The language stream is the same model as the decoder (i.e., BERT), which has 12 layers of transformer blocks. The vision stream has 6 layers of transformer blocks, with each block having 8 attention heads with a hidden size of 1024. The co-attention layers connect the 6 transformer layers in the vision stream to the last 6 transformer layers in the language stream. The encoder concatenates the hidden states of each stream and passes them to the decoder. The decoder generates the target sentence by using them.

Appendix B Further quantitative analysis

B.1 Experiments on the discriminative models

In this subsection, we discuss the details regarding GST for the discriminative visual dialog. We first describe how we can adapt GST to the discriminative models and then show the results on VisDial v1.0 test-standard split.

Model architecture. Although our main focus is the generative model, we conduct additional experiments to identify the effect of GST in the discriminative VisDial model. Our proposed models (i.e., the student, the teacher, and the questioner) are based on encoder-decoder architecture where the encoder is based on the vision-and-language encoder model [54], and the decoder is the transformer decoder [72]. In this experiment, we remove the decoder model, so the student is based on the encoder-only architecture, the same model architecture as the ViLBERT model [54]. We describe more details in the following subsection.

| VisDial v1.0 (test-std) | ||||||

| Model | NDCG | MRR | R@1 | R@5 | R@10 | Mean |

| CorefNMN [40] | 54.70 | 61.50 | 47.55 | 78.10 | 88.80 | 4.40 |

| RvA [61] | 55.59 | 63.03 | 49.03 | 80.40 | 89.83 | 4.18 |

| Synergistic [22] | 57.32 | 62.20 | 47.90 | 80.43 | 89.95 | 4.17 |

| ReDAN [19] | 61.86 | 53.13 | 41.38 | 66.07 | 74.50 | 8.91 |

| DAN [33] | 57.59 | 63.20 | 49.63 | 79.75 | 89.35 | 4.30 |

| FGA [76] | 52.10 | 63.70 | 49.58 | 80.97 | 88.55 | 4.51 |

| VD-BERT [89] | 59.96 | 65.44 | 51.63 | 82.23 | 90.68 | 3.90 |

| VisDial-BERT [59] | 63.87 | 67.50 | 53.85 | 84.68 | 93.25 | 3.32 |

| Student (ours) | 64.91 | 68.44 | 55.05 | 85.18 | 93.35 | 3.23 |

| P1+P2 [66] | 71.60 | 48.58 | 35.98 | 62.08 | 77.23 | 7.48 |

| MCA [1] | 72.47 | 37.68 | 20.67 | 56.67 | 72.12 | 8.89 |

| SGL+KT [34] | 72.60 | 58.01 | 46.20 | 71.01 | 83.20 | 5.85 |

| VD-BERT [89] | 74.54 | 46.72 | 33.15 | 61.58 | 77.15 | 7.18 |

| UTC [8] | 74.32 | 50.24 | 37.12 | 63.98 | 79.88 | 6.48 |

| VisDial-BERT [59] | 74.47 | 50.74 | 37.95 | 64.13 | 80.00 | 6.28 |

| Student (ours) | 71.76 | 68.09 | 55.18 | 83.68 | 91.93 | 3.57 |

Tricks for adapting to a discriminative task. The goal of the discriminative task is to retrieve the ground-truth answer from a list of answer candidates. Therefore, it implies that the gold VisDial dataset [12] contains the pre-defined answer candidates for each question to train and evaluate the discriminative models. However, the silver VisDial dataset generated by our proposed models does not include the answer candidates since the dataset is generated to train the generative models that do not need the answer candidates. To circumvent this issue, GST first trains the student model for the generative task, i.e., the encoder-decoder model, on the silver VisDial data. Then, we extract the trained weights of the encoder in the student and initialize the encoder-only model with the weights. Finally, the encoder-only model is trained to retrieve the ground-truth answer from the list of answer candidates using the gold VisDial dataset. This trick circumvents the need for the answer candidates when training the silver VisDial data.

Results on VisDial v1.0 test split. We compare the student model with the state-of-the-art approaches in the discriminative task, consisting of VisDial-BERT [59], UTC [8], VD-BERT [89], SGL+KT [34], P1+P2 [66], MCA [1], FGA [76], ReDAN [19], DAN [33], Synergistic [22], RvA [61], and CorefNMN [40]. As shown in the upper part of Table 6, GST outperforms the state-of-the-art approaches on all evaluation metrics in the VisDial v1.0 test-standard split. It is worth noticing that GST boosts NDCG 1.04% (63.87 64.91) compared with the VisDial-BERT model, whose configuration is almost the same as the student except for the use of the silver VisDial data. Furthermore, recent studies finetune the discriminative VisDial models on the densely annotated labels333https://visualdialog.org/challenge/2019#evaluation in the validation dataset and evaluate the models on the test set to boost NDCG. The dense annotation finetuning yields considerable improvements on NDCG and counter-effect on other metrics (i.e., MRR, R@k, and Mean) due to the trade-off relationship [59] between NDCG and the others. To mitigate such performance polarization, we follow the knowledge transfer technique in SGL+KT [34] when using the dense labels. In the below part of Table 6, the student model still shows competitive performance on NDCG, maintaining powerful performance on other metrics.

| Model | PPL | MCR | IIR | Iteration | VisDial v1.0 (val) | ||||||

| NDCG | MRR | R@1 | R@5 | R@10 | Mean | ||||||

| Teacher | 0 | 64.50 | 52.06 | 42.04 | 62.92 | 71.06 | 14.54 | ||||

| Teacher (w/ CPT) | ✓ | 0 | 63.59 | 51.70 | 41.99 | 61.88 | 68.62 | 16.21 | |||

| Student (iter1, w/o PPL) | ✓ | ✓ | 1 | 63.96 | 52.33 | 42.68 | 62.52 | 69.47 | 15.56 | ||

| Student (iter1, w/o MCR) | ✓ | ✓ | 1 | 63.71 | 52.49 | 42.56 | 62.87 | 70.00 | 15.21 | ||

| Student (iter1, w/o IIR) | ✓ | ✓ | 1 | 64.57 | 52.33 | 42.10 | 63.46 | 71.54 | 14.31 | ||

| Student (iter1) | ✓ | ✓ | ✓ | 1 | 65.06 | 52.84 | 42.74 | 63.66 | 71.30 | 14.60 | |

| Student (iter2) | ✓ | ✓ | ✓ | 2 | 65.46 | 53.04 | 43.15 | 63.63 | 71.00 | 14.73 | |

| Student (iter3) | ✓ | ✓ | ✓ | 3 | 65.47 | 53.19 | 43.08 | 64.09 | 71.51 | 14.34 | |

Results on VisDial v1.0 validation split. We also compare GST with the state-of-the-art vision-and-language pre-training model, BLIP [46]. The BLIP model is trained on the large-scale image-text datasets, such as Laion-400M [75], CC12M [7], CC3M [83], COCO [52], Visual Genome [43], and SBU captions [62]. Then, the model is finally fine-tuned on VisDial data. GST trains the student model on nearly 6.7M images, including 3.1M images (CC3M [83] and VQA [4]) to pretrain ViLBERT [54] and 3.6M images filtered from CC12M [7] to generate and train synthetic dialog data. As shown in Table 8, GST shows competitive performance on the VisDial v1.0 validation split, outperforming BLIP on MRR. It is noticeable that the BLIP model utilizes nearly twenty times more images than GST. It indicates that GST is effective and sample-efficient.

B.2 Ablation study

We perform an ablation study to illustrate the effect of each component in GST. We report the performance of four ablative models: student w/o PPL, student w/o MCR, student w/o IIR, and teacher w/ CPT. Student w/o PPL denotes the model that utilizes all generated QA pairs without applying the perplexity-based data selection. Student w/o MCR does not inject noises into the inputs of the student model. Student w/o IIR utilizes the entire CC12M [7] images to generate the silver VisDial data without applying in-domain image retrieval. It is the same model as the student-iter1-full in Section 4.3. Lastly, the teacher with continued pre-training (CPT) continues to perform pre-training with image-caption pairs in the silver VisDial data. CPT is proposed to identify the effect of utilizing additional vision-and-language data. Specifically, masked language modeling loss and masked image region loss are optimized by following ViLBERT [54].

In Table 7, we observe all components (i.e., PPL, MCR, and IIR) play a significant role in boosting the performance. Notably, by comparing the student model with the student w/o IIR, we find that utilizing the entire Web images does not contribute to an accurate answer prediction. Moreover, we observe that CPT results in a considerable drop in performance. We conjecture that it is due to low-precision image captions in the CC12M dataset, as mentioned in the paper [7]. But the student still shows competitive performance even if it also utilizes the captions in the dialog history. Finally, the iterative training monotonically improves the performance, similar to the robustness results in Section 4.3.

B.3 Do performance improvements come from a larger computational cost?

It takes more computational costs to train the student model than to train the teacher model due to the silver VisDial data. Accordingly, we perform an analysis to prove that the performance improvements do not merely come from larger computational costs. The training time of the teacher model is about 1 day with one NVIDIA A100 GPU. It takes 5 days to train the student model with three iterations (i.e., iter3). Accordingly, we compare the ensemble of 5 teacher models with the student model with the iter3. We ensemble 5 teacher models with different weight initialization and average logits for 5 teacher models to predict the answer. The results are shown in Table 9. The student model outperforms the ensembles of 5 teacher models on both metrics. It indicates that the improvements from GST do not merely come from increased computational costs.

| VisDial v1.0 (val) | ||

| Model | NDCG | MRR |

| Teacher (single model) | 64.50 | 52.06 |

| Teacher (5 ensembles) | 64.82 | 52.51 |

| Student (single model) | 65.47 | 53.19 |

| Model | QA Utilization |

| Student (iter1) | 32.52% |

| Student (iter2) | 39.06% |

| Student (iter3) | 46.40% |

B.4 The QA utilization across different iterations

We identify how many QA pairs in the silver VisDial data are actually utilized after applying perplexity-based data selection (i.e., PPL). Accordingly, we define QA utilization as the proportion of utilized QA pairs in the silver VisDial data. The QA utilization across different iterations is shown in Table 10. We observe that the QA utilization increases as the iteration proceeds. It implies that the student model leverages more data as the iteration proceeds, and more importantly, the average perplexity of the generated answers gradually decreases. We argue that the drop of the answer perplexity is closely related to the student model being more confident and remaining low-entropy [21, 84].

Appendix C Further qualitative analysis

C.1 More visualization of silver data

We visualize more silver data based on the image-caption pairs in the Conceptual Captions (CC12M) [7] dataset. As shown in Figure 6, the questioner and the student models generate diverse and correct visual dialog data, although the image caption data is noisy. For instance, the image caption in the fourth example (i.e., Luckily the woman s daughter adopted a puppy from litter so that poppy can keep in touch with it) is not well grounded with the given image. Still, our proposed models produce the visually-grounded QA samples. Finally, the student sometimes fails to generate correct answers (the red-colored text), similar to Figure 3.

C.2 Analysis of silver and gold answers.

We visualize the ground-truth answer (i.e., the gold answer) and the answer predictions from the student and the teacher models given the same context. As shown in Figure 5, the student model indeed produces correct answers compared with the teacher model. Moreover, both models produce many correct or plausible answers, although the predicted answers differ from the gold answers (see the blue-colored text). For instance, for the last question in the third example (i.e., Is she wearing a bathing suit?), the student answers “wetsuit” to the question, although the ground-truth answer is “no”. We conjecture that the ability to generate such different yet correct answers is evaluated as a high NDCG performance; NDCG considers all relevant responses in the answer candidates.

Appendix D Implementation details

We integrate the vision-and-language encoder [54] with the transformer decoder for sequence generation (i.e., [72]) to train the teacher, the questioner, and the student. The decoder has 12 layers of transformer blocks, with each block having 12 attention heads and a hidden size of 768. The maximum sequence length of the encoder and the decoder is 256 and 25, respectively. We extract the feature vectors of the input images by using the Faster R-CNN [70, 3] pre-trained on Visual Genome [43]. The number of bounding boxes for each image is fixed to 36. We set the threshold for PPL to 50. We train on one A100 GPU with a batch size of 72 for 70 epochs. Training time takes about 3 days. We use the Adam optimizer [38] with an initial learning rate 1e-5. The learning rate is warmed up to 2e-5 until 10k iterations and linearly decays to 1e-5. In visually-grounded dialog generation, the questioner and the teacher decode the sequences using the top- sampling [17, 24, 68] with and the temperature of 0.7. We use the top- sampling since its computation is cheap yielding accurate and diverse sequences. Furthermore, we apply the 4-gram penalty [65, 39] when generating visual questions to ensure that no 4-gram appears twice in the questions for each dialog.

Appendix E Discussion

E.1 Relationship between self-supervised pre-training and generative self-training.

We develop the teacher, the questioner, and the student models on top of ViLBERT [54] which leverages vision-and-language pre-training. Thus, the teacher can be understood as a typical model that follows the pretrain-then-transfer learning strategy mentioned in the introduction, whereas the student leverages both pre-training and generative self-training. By comparing the student with the teacher, we identify that self-supervised pre-training and GST are complementary modeling capabilities.

E.2 Limitations and future work.

One of the major limitations of our approach is the learning efficiency of the student model. We demonstrate the effectiveness of our proposed method, but there can be more efficient ways to improve the visual dialog model. For example, our method generates the dialog data without considering the difficulty of the question. We believe that the competency-aware or curriculum-based visual dialog generation can make our proposed self-training algorithm more efficient and powerful. We will leave it as a future work.

E.3 Ethical considerations.

Since GST generates the visually-grounded dialogs, our proposed models have the potential to produce biased and offensive language, although arguably to a lesser extent than the open-domain dialog [95, 82, 47, 80, 73, 48]. We attempt to mitigate ethical concerns such as biases against people of a certain gender, race, age, and ethnicity or the use of offensive content. Our proposed method utilizes the images and the captions in the Conceptual 12M dataset [7], where several data cleansing processes (e.g., the offensive content filtering or replacing each person name with the special <PERSON> token) have been conducted. At least, we could not find any conversation violating the ethical considerations in a manual inspection by visualizing 100 synthetic dialogs.