The Curious Case of Integrator Reach Sets, Part I: Basic Theory

Abstract

This is the first of a two part paper investigating the geometry of the integrator reach sets, and the applications thereof. In this Part I, assuming box-valued input uncertainties, we establish that this compact convex reach set is semialgebraic, translated zonoid, and not a spectrahedron. We derive the parametric as well as the implicit representation of the boundary of this reach set. We also deduce the closed form formula for the volume and diameter of this set, and discuss their scaling with state dimension and time. We point out that these results may be utilized in benchmarking the performance of the reach set over-approximation algorithms.

Keywords: Reach set, integrator, convex geometry, set-valued uncertainty.

I Introduction

Integrators with bounded controls are ubiquitous in systems-control. They appear as Brunovsky normal forms for the feedback linearizable nonlinear systems. They also appear frequently as benchmark problems to demonstrate the performance of the reach set computation algorithms. Despite their prominence, specific results on the geometry of the integrator reach sets are not available in the systems-control literature. Broadly speaking, the existing results come in two flavors. On one hand, very generic statements are known, e.g., these reach sets are compact convex sets whenever the set of initial conditions is compact convex, and the controls take values from a compact (not necessarily convex) set [1]. On the other hand, several numerical toolboxes [2, 3] are available for tight outer approximation of the reach sets over computationally benign geometric families such as ellipsoids and zonotopes. The lack of concrete geometric results imply the absence of ground truth when comparing the efficacy of different algorithms, and one has to content with graphical or statistical (e.g., Monte Carlo) comparisons.

Building on the preliminary results in [4], this paper undertakes a systematic study of the integrator reach sets. In particular, we answer the following basic questions:

We consider the integrator dynamics having states and inputs with relative degree vector (vector of positive integers). In other words, we consider a Brunovsky normal form with integrators where the th integrator has degree for . The dynamics is

| (1) |

where , the set is compact, and

| (2a) | |||

| (2b) | |||

In (2a), the symbol denotes a block diagonal matrix whose arguments constitute its diagonal blocks. In (2b), the notation stands for the column vector of zeros, and denotes the th basis (column) vector in for . The symbol denotes horizontal concatenation.

Notice that an integrator that replaces the ones appearing in the system matrices with arbitrary nonzero reals, is always reducible to the normal form (1)-(2) by renaming the variables. For instance, for any , the system is equivalent to where , .

Let denote the forward reach set of (1) at time , starting from a given compact convex set of initial conditions , i.e.,

| (3) |

In words, is the set of all states that the controlled dynamics (1)-(2) can reach at time , starting from the set at , with measurable control compact. Formally,

| (4) |

where denotes the Minkowski sum. The set-valued integral [5] in (4) is defined for any point-to-set function , as

| (5) |

where the summation symbol denotes the Minkowski sum, and is the floor operator; see e.g., [1]. Our objective is to study the geometry of (4) in detail.

This paper significantly expands our preliminary works [4, 6]: here we consider multi-input integrators as opposed to the single input case considered in [4]. Even for the single input case, while [4, Thm. 1] derived an exact formula for the volume of the reach set, that formula involved limit and nested sums, and in that sense, was not really a closed-form formula [7] – certainly not amenable for numerical computation. In this paper, we derive closed-form formula for the general multi-input case when the input uncertainty is box-valued, i.e., is hyperrectangle. In the same setting, the present paper addresses previously unexplored directions: the scaling laws for the volume and diameter of integrator reach sets, exact parametric and implicit equations for the boundary, and the classification of these sets.

The paper is structured as follows. After reviewing some preliminary concepts in Sec. II, we consider the integrator reach set resulting from box-valued input set uncertainty in Sec. III. The results on taxonomy and the boundary of the corresponding reach set are provided in Sec. IV. The results on the size of this set are collected in Sec. V. The application of these results for benchmarking the reach set over-approximation algorithms are discussed in Sec. VI. All proofs are deferred to the Appendix. Sec. VII summarizes the paper, and outlines the directions pursued in its sequel Part II.

II Preliminaries

In the following, we summarize some preliminaries which will be useful in the main body and in the Appendix.

II-1 State transition matrix

II-2 Support function

The support function of a compact convex set , is given by

| (7) |

where denotes the standard Euclidean inner product. Geometrically, gives the signed distance of the supporting hyperplane of with outer normal vector , measured from the origin. Furthermore, the supporting hyperplane at is , and we can write

For compact ,

| (8) |

The support function is convex in . For more details on the support function, we refer the readers to [8, Ch. V].

The support function uniquely determines the set . Given matrix-vector pair , the support function of the affine transform is

| (9) |

Given a function , its Legendre-Fenchel conjugate is

| (10) |

From (7)-(10), it follows that is the Legendre-Fenchel conjugate of the indicator function

Since the indicator function of a convex set is a convex function, the biconjugate . This will be useful in Section IV.

To proceed further, we introduce some notations. Since is compact, let

| (11) |

that is, and are the component-wise minimum and maximum, respectively, of the input vector. Furthermore, let

| (12) |

and introduce

| (13) |

for . Also, let

| (14) |

for . When , we simplify the notations as

| (15) |

Using (13) and following (7), we deduce Proposition 1 stated next (proof in Appendix -A).

Proposition 1.

(Support function for compact ) For compact convex , and compact , the support function of the reach set (4) is

| (16) |

where denotes the convex hull.

II-3 Polar dual

The polar dual of any non-empty set is given by

| (17) |

From this definition, it is immediate that contains the origin, and is a closed convex set. The bipolar . Thus, if is compact convex and contains the origin, then we have the involution . From (7) and (17), notice that is the unit support function ball, i.e., . In Sec. IV-D, we will mention some properties of the polar dual of the integrator reach set.

II-4 Vector measure

Let be a -field of the subsets of a set. A countably additive mapping is termed a vector measure. Here, “countably additive” means that for any sequence of disjoint sets in such that their union is in , we have . Some of the early investigations of vector measures were due to Liapounoff [9] and Halmos [10]; relatively recent references are [11, 12].

II-5 Zonotope

A zonotope is a finite Minkowski sum of closed line segments or intervals where these intervals are imbedded in the ambient Euclidean space . Explicitly, for some positive integer , we write

Thus, a zonotope is the range of an atomic vector measure. Alternatively, a zonotope can be viewed as the affine image of the unit cube. A compact convex polytope is a zonotope if and only if all its two dimensional faces are centrally symmetric [13, p. 182]. For instance, the cross polytope , is not a zonotope. Standard references on zonotope include [14, 15], [16, Ch. 2.7].

The set of zonotopes is closed under affine image and Minkowski sum, but not under intersection. In the systems-control literature, a significant body of work exists on computationally efficient over-approximation of reach sets via zonotopes [17, 18, 19] and its variants such as zonotope bundles [20], constrained zonotopes [21], complex zonotopes [22], and polynomial zonotopes [23, 24].

II-6 Variety and ideal

Let , the vector space of real-valued -variate polynomials. The (affine) variety is the set of all solutions of the system . Given , the set

is called the ideal generated by . We write this symbolically as . Roughly speaking, is the set of all polynomial consequences of the given system of polynomial equations in indeterminates. We refer the readers to [25, Ch. 1] for detailed exposition of these concepts.

III Box-valued Input Uncertainty

In the remaining of this paper, we characterize the exact reach set (3) when input set is box-valued, and remark on the quality of approximation for the same when is arbitrary compact.

When is box-valued, denote the reach set (3) as , i.e., with a box superscript***For the single input () case, we drop the box superscript.. In this case, each of the single input integrator dynamics with dimensional state subvectors for , are decoupled from each other. Then is the Cartesian product of these single input integrator reach sets: for , i.e.,

| (18) |

In what follows, we will sometimes exploit that (18) may also be written as†††In general, the Minkowski sum of a given collection of compact convex sets is not equal to their Cartesian product. However, the “factor sets” in (18) belong to disjoint mutually orthogonal dimensional subspaces, , which allows writing this Cartesian product as a Minkowski sum. a Minkowski sum . Notice that the decoupled dynamics also allows us to write a Minkowski sum decomposition for the set of initial conditions

and accordingly, the initial condition subvectors for . Thus .

Since the support function of the Minkowski sum is equal to the sum of the support functions, we have

| (19) |

This leads to the following result (proof in Appendix -B) which will come in handy in the ensuing development.

Theorem 1.

(Support function for box-valued ) For compact convex , and box-valued input uncertainty set given by

| (20) |

the support function of the reach set (4) is

| (21) |

The formula (21) upper bounds (16) resulting from the same initial condition and arbitrary compact with related to via (11). Thus, from (8), the reach set with box-valued input uncertainty will over-approximate the reach set associated with arbitrary compact , at any given , provided are defined as (11).

When is compact but not box-valued, then we can quantify the quality of the aforesaid over-approximation in terms of the two-sided Hausdorff distance metric between the convex compact sets , expressible [13, Thm. 1.8.11] in terms of their support functions as

| (22) |

Thanks to (8), the absolute value in (22) can be dispensed since with set equality if is box, in which case and .

It is known that [26, Prop. 6.1] the set resulting from a linear time invariant dynamics such as (1)-(2) remains invariant under the closure of convexification of the input set . Therefore, it is possible that and even when the compact set is nonconvex. For instance, the reach set resulting from some compact convex and dynamics (1)-(2) with the nonconvex input uncertainty set , is identical to resulting from the same , same dynamics, and the box-valued input uncertainty set (20) with for all .

Likewise, for the same compact convex , the reach set resulting from (1)-(2) with the nonconvex input set , , is the same as that resulting from the cross-polytope . More generally, for , suppose results from the unit norm ball input uncertainty set . Let . If results from the same , same dynamics, and input uncertainty set (20) with for all , then using [27, Thm. 1], (22) simplifies to

| (23) |

where is the Hölder conjugate of , i.e., , and . In this case, the positive value (23) quantifies the quality of strict over-approximation for . The objective in (23) being positive homogeneous, admits lossless constraint convexification , and the corresponding maximal value‡‡‡As such, (23) has a difference of convex objective, and by the Weierstrass extreme value theorem, the maximum is achieved. for moderate dimensions , can be found by direct numerical search.

IV Taxonomy and Boundary

For compact convex, it is well-known [1, Sec. 2] that the reach set given by (3) is compact convex for all provided is compact. However, it is not immediate what kind of convex set is, even for singleton .

In this Section, we examine the question “what type of compact convex set is” when is box-valued uncertainty set of the form (20). In the same setting, we also derive the equations for the boundary .

Notice that for non-singleton , the taxonomy question is not well-posed since the classification then will depend on . Also, setting in (4), it is apparent that is a translation of the set-valued integral in (4). Thus, classifying amounts to classifying the second summand in (4).

IV-A is a Zonoid

A zonoid is a compact convex set that is defined as the range of an atom free vector measure (see Sec. II-4). Affine image of a zonoid is a zonoid. Minkowski sum of zonoids is also a zonoid. We refer the readers to [28, 29, 30],[31, Sec. I] for more details on the properties of a zonoid. By slight abuse of nomenclature, in this paper we use the term zonoid up to translation, i.e., we refer to the translation of zonoids as zonoids (instead of using another term such as “zonoidal translates”).

Let us mention a few examples. Any compact convex symmetric set in is a zonoid. In dimensions three or more, all norm balls for are zonoids.

An alternative way to think about the zonoid is to view it as the limiting set (convergence with respect to the two-sided Hausdorff distance, see e.g., [4, Appendix B]) of the Minkowski sum of line segments, i.e., the limit of a sequence of zonotopes [28, 14, 15]. Formally, given a Hausdorff convergent sequence of zonotopes , the zonoid is

for some (element-wise vector inequality), and a suitable mapping . Our analysis will make use of this viewpoint in Sec. V-A. Our main result in this subsection is the following.

To appreciate Theorem 2 via the limiting viewpoint mentioned before, let us write

| (24) |

where all summation symbols denote Minkowski sums. The first term in (24) denotes a translation. In the second term, the outer summation over index arises by writing the Cartesian product (18) as the Minkowski sum . Furthermore, uniformly discretizing into subintervals , , we write as the limit of the Minkowski sum over index . Geometrically, the innermost summands in the second term denote non-uniformly rotated and scaled line intervals in . In other words, the second term in (24) is a Minkowski sum of sets, each of these sets being the limit of a sequence of sets comprising of zonotopes

which are the Minkowski sum of line segments. Since is a zonoid, the second term in (24) is a Minkowski sum of zonoids, and is therefore a zonoid [28, Thm. 1.5]. The entire right hand side of (24), then, is translation of a zonoid, and hence a zonoid.

Remark 1.

In the following, we derive formulae for the boundary (Proposition 2 and Sec. IV-C) and volume (Theorem 5) of the integrator reach set with (singleton set). From (25), it is clear that one cannot expect similar closed form formulae for arbitrary compact (or even arbitrary compact convex) . In this sense, our closed form formulae are as general as one might hope for. For a specific non-singleton , one can use these formulae to first derive the boundary (resp. volume) of , and then use (25) to get numerical estimates for the boundary (resp. volume) of (cf. Remark 2).

IV-B is Semialgebraic

A set in is called basic semialgebraic if it can be written as a finite conjunction of polynomial inequalities and equalities, the polynomials being in . Finite union of basic semialgebraic sets is called a semialgebraic set. A semialgebraic set need not be basic semialgebriac; see e.g., [32, Example 2.2].

Semialgebraic sets are closed under finitely many unions and intersections, complement, topological closure, polynomial mapping including projection [33, 34], and Cartesian product. For details on semialgebraic sets, we refer the readers to [35, Ch. 2]; see [36, Appendix A.4.4] for a short summary.

In Proposition 2 below, we derive a parametric representation of , the boundary of the reach set. Then we use this representation to establish semialgebraicity of in Theorem 4 that follows.

Proposition 2.

For relative degree vector , and fixed comprising of subvectors where , consider the reach set (4) with singleton and given by (20). For , define and as in (11)-(12). Let the indicator function for , and otherwise. Then the components of

admit parametric representation in terms of the parameters satisfying . This parameterization is given by

| (26) |

where denotes the th component of the th subvector for .

The following is a consequence of the appearing in (26).

Corollary 3.

The single input integrator reach set has two bounding surfaces for each . In other words, there exist such that

with boundary .

During the proof of Theorem 4 below, it will turn out that in fact for all . In words, are real algebraic hypersurfaces for all .

Let us exemplify the parameterization (26) for the case . In this case,

| (27) |

| (28) |

In (27), taking plus (resp. minus) signs in each of component gives the parametric representation of the curve (resp. ). These curves are as in [4, Fig. 1(a)], and their union defines . We note that the parameterization (27) appeared in [37, p. 111].

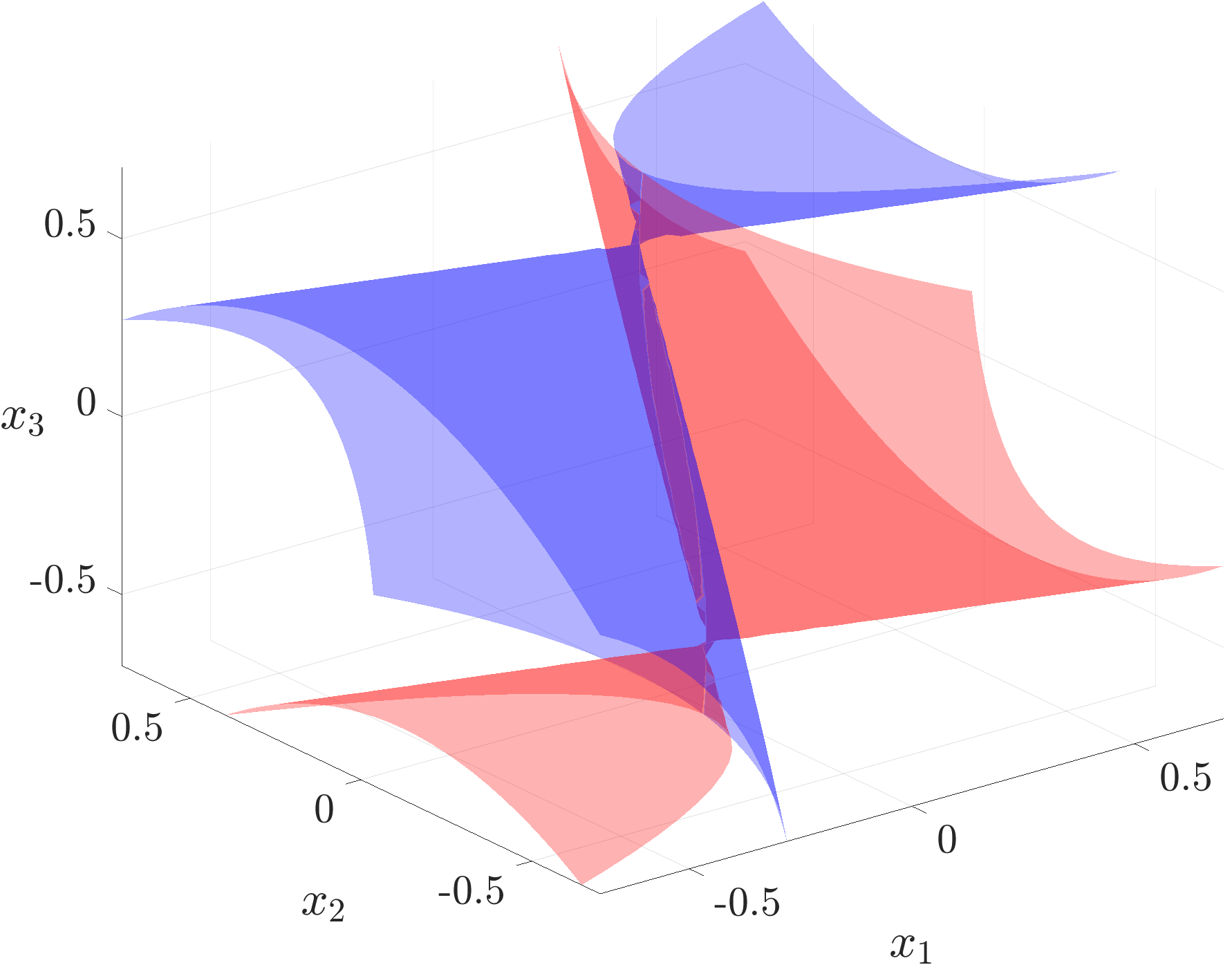

Likewise, in (28), taking plus (resp. minus) signs in each of component gives the parametric representation of the surface (resp. ). The resulting set is the triple integrator reach set, and is shown in Fig. 1.

Now we come to the main result of this subsection.

Let us illustrate the bounding curves and surfaces for (27) and (28) respectively, in the implicit form. Eliminating the parameter from (27) reveals that are parabolas. In particular,

| (29) |

and the formula for follows mutatis mutandis.

Similarly, eliminating the parameters from (28) reveals that are quartic polynomials. In particular,

| (30) |

and again, the formula for follows mutatis mutandis.

IV-C Implicitization of

To derive the implicit equations for the bounding algebraic hypersurfaces for all , we need to eliminate the parameters from (26). For this purpose, it is helpful to write (26) succinctly as

| (31) |

where

| (32) |

To simplify the rather unpleasant notation , we will only address the case. In (31), this allows us to replace by , and to drop the subscript from the ’s. This does not invite any loss of generality in terms of implicitization since post derivation, we can replace by to recover the respective ’s.

With slight abuse of notation, we will also drop the superscript from the ’s in (31). Recall that the plus (resp. minus) superscript in the ’s indicates (resp. ). From (32), it is clear that in either case, the is affine in , which is the th coordinate of the boundary point for the th block. Importantly, for , the quantity does not depend on any other component of the boundary point than the th component. Again, the plus-minus superscripts can be added back post implicitization.

Thus, the notationally simplified version of (31) that suffices for implicitization, is

| (33) |

which is a system of homogeneous polynomials in variables . The objective is to derive the implicitized polynomial associated with (33).

When , the parameterization (33) becomes

and we get degree 2 implicitized polynomial

| (34) |

For , substituting for the in (34) from (32) with appropriate plus-minus signs recovers (29).

When , the parameterization (33) becomes

elementary algebra gives degree 4 implicitized polynomial

| (35) |

As before, for , substituting for the in (35) from (32) with appropriate plus-minus signs recovers (30). However, for or higher, it is practically impossible to derive the implicitization via brute force algebra.

A principled way to implicitize (33) is due to G. Zaimi [38], and starts with defining for . Introduce the sequence via the generating function (see e.g., [39, Ch. 1])

| (36) |

Taking the logarithmic derivative of (36), and then using the generating functions for all , yields

| (37) |

Integrating (37) with respect to , we obtain

| (38) |

Equating (36) and (38) allows us to compute as a degree polynomial of the ’s.

On the other hand, since the generating function (36) is a rational function with denominator polynomial of degree , the following Hankel determinant vanishes§§§This result goes back to Kronecker [40]. See also [41, p. 5, Lemma III].

| (39) |

Substituting the ’s obtained as degree polynomials of the ’s into (39) gives an implicit polynomial in indeterminate of degree . Finally, reverting back the ’s to the ’s result in the desired implicit polynomial , which is also of degree .

For instance, when , the relation (39) becomes

| (40) |

In this case, equating (36) and (38) gives

Substituting these back in (40) yields the quartic polynomial , which under the mapping recovers (35), and thus (30).

In summary, (39) is the desired implicitization of the bounding hypersurfaces of the single input integrator reach set (up to the change of variables). The Cartesian product of these implicit hypersurfaces gives the implicitization in the multi input case.

IV-D Dual of

From convex geometry standpoint, it is natural to ask what kind of characterization is possible for the polar dual (see Sec. II-3) of the integrator reach set or . We know in general that will be a closed convex set. Depending on the choice of and , the set may not contain the origin, and thus the bipolar

that is, we do not have the involution in general.

Furthermore, since is semialgebraic from Sec. IV-B, so must be its polar dual ; see e.g., [36, Ch. 5, Sec. 5.2.2].

IV-E Summary of Taxonomy

So far we explained that the compact convex set is semialgebraic, and a translated zonoid. Two well-known subclasses of convex semialgebraic sets are the spectrahedra and the spectrahedral shadows. The spectrahedra, a.k.a. linear matrix inequality (LMI) representable sets are affine slices of the symmetric positive semidefinite cone. The spectrahedral shadows, a.k.a. lifted LMI or semidefinite representable sets are the projections of spectrahedra. The spectrahedral shadows subsume the class of spectrahedra; e.g., the set is a spectrahedral shadow but not a spectrahedron. The polar duals of spectrahedra are spectrahedral shadows [36, Ch. 5, Sec. 5.5].

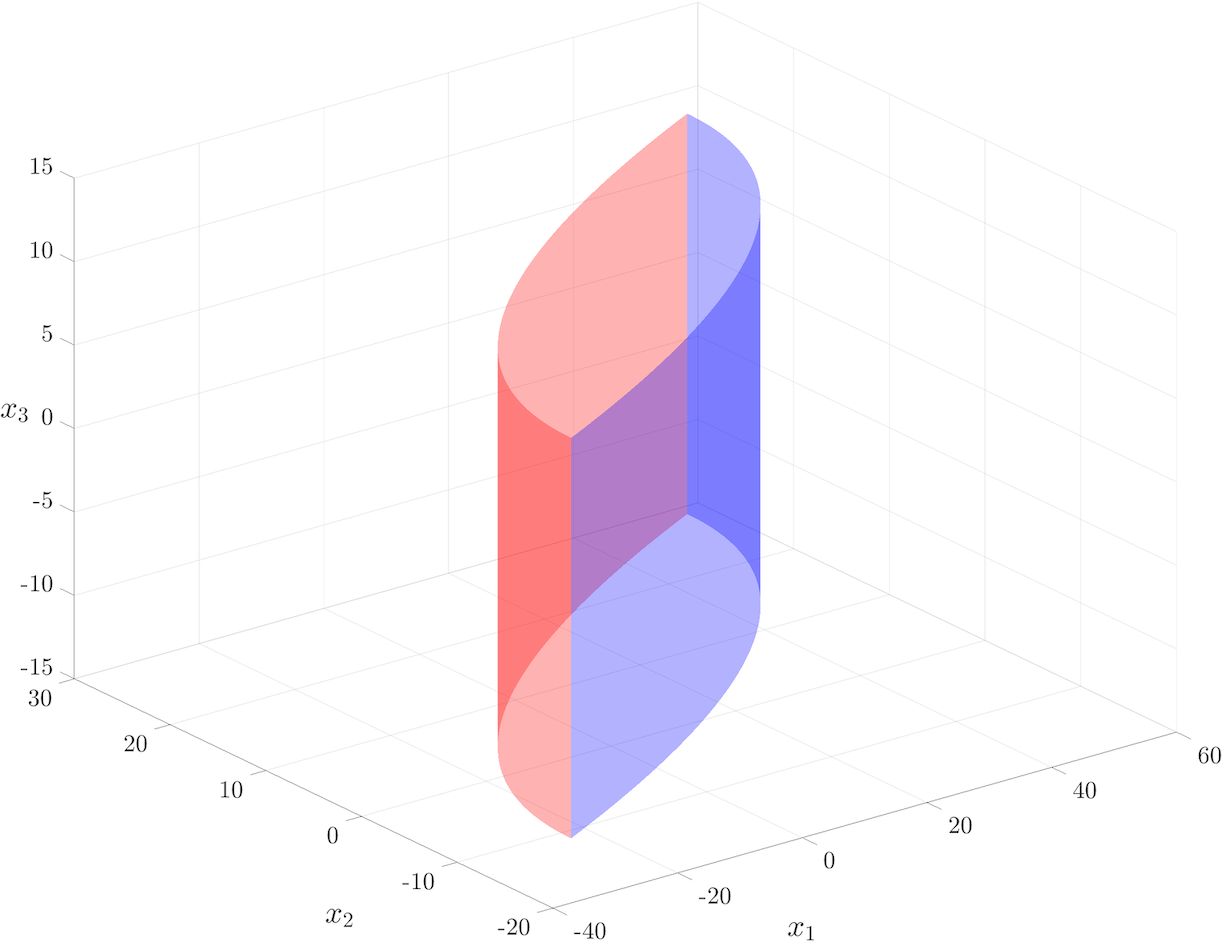

We note that is not a spectrahedron. To see this, we resort to the contrapositive of [44, Thm. 3.1]. Specifically, the number of intersections made by a generic line passing through an interior point of the -dimensional reach set with its real algebraic boundary is not equal to the degree of the bounding algebraic hypersurfaces, the latter we know from Sec. IV-C to be . In other words, the is not rigidly convex, see [44, Sec. 3.1 and 3.2]. Fig. 3 helps visualize this for . From Fig. 3(a), we observe that a generic line for has intersections with the bounding real algebraic curves whereas from (29), we know that are degree polynomials. Likewise, Fig. 3(b) reveals that a generic line for has intersections with the bounding real algebraic surfaces whereas from (30), we know that the polynomials in this case, are of degree .

Could the reach set be spectrahedral shadow? Some calculations show that sufficient conditions as in [45] do not seem to hold. However, this remains far from conclusive. We summarize our taxonomy results in Fig. 4; the highlighted region shows where the integrator reach set belongs. To answer whether this highlighted region can be further narrowed down, seems significantly more challenging.

V Size

We next quantify the “size” of the reach set by computing two functionals: its -dimensional volume (Sec. V-A), and its diameter or maximum width (Sec. V-B). In Sec. V-C, we discuss how these functionals scale with the state dimension .

V-A Volume

The following result gives the volume formula for the integrator reach set .

Theorem 5.

For a simple illustration of Theorem 5, consider , with . The corresponding reach set at is shown in Fig. 5 for , . Here and .

This reach set, being a direct product of the double integrator reach set (cf. Fig. 2) and the single integrator reach set , is a cylinder¶¶¶Here, the notation stands for the third component of vector .. In [4], we explicitly derived that , and therefore, the volume of this cylindrical set must be equal to “height of the cylinder cross sectional area”, i.e.,

Indeed, a direct application of the formula (41) recovers the above expression.

Remark 2.

If the initial set is not singleton, then computing the volume of the requires us to compute the volume of a Minkowski sum. Notice that

since from (2b), for all . Therefore, combining (4), (41) with the classical Brunn-Minkowski inequality, we obtain a lower bound for as

The above bound holds for any compact , not necessarily convex.

V-B Diameter

We now focus on another measure of “size” for the integrator reach set , namely its diameter, or maximal width.

By definition, the width [13, p. 42] of , is

| (42) |

where (the unit sphere imbedded in ), and the support function is given by (21). In other words, (42) gives the width of in the direction .

For singleton , combining (21) and (42), we have

| (43) |

where the last equality follows from the fact that in (13) is component-wise nonnegative for all .

The diameter of the reach set is its maximal width:

| (44) |

Notice that (43) is a convex function of ; see e.g., [46, p. 79]. Thus, computing (44) amounts to maximizing a convex function over the unit sphere. We next derive a closed form expression for (44).

Theorem 6.

To illustrate Theorem 6, consider the triple integrator with and . In this case, , , and we can parameterize the unit vector as

Thus (44) reduces to

Furthermore, , and we obtain

where means that either all components are plus or all minus. Thus, the maximizing tuples are given by

| (47) |

Hence, the diameter of the triple integrator reach set at time is equal to .

Fig. 6 shows how the width of the integrator reach set for , varies over , which parameterize the unit sphere . The location of the maximizers are given by (47), and are depicted in Fig. 6 via filled black circle and filled black square.

For a visualization of the width and diameter for the double integrator, see [4, Fig. 2].

V-C Scaling Laws

We now turn to investigate how the volume and the diameter of the integrator reach set scale with time and the state dimension. While scaling laws reveal limits of performance of engineered systems (see e.g., [47, 48]), they have not been formally investigated in the context of reach sets even though empirical studies are common [20], [49, Table 1], [50, Fig. 4].

For clarity, we focus on the single input case and hence do not notationally distinguish between and .

V-C1 Scaling of the volume

Fig. 7 plots the volume (41) for the single input () case against time for varying state space dimension . In this case, , and therefore . As expected, the volume of the reach set increases with time for any fixed .

Let us now focus on the scaling of the volume with respect to the state dimension . For , using the known asymptotic [51] for , we find the asymptotic for the volume:

where is the Glaisher-Kinkelin constant [52, Sec. 2.15].

Fig. 7 shows that when is small, the volume of the larger dimensional reach set stays lower than its smaller dimensional counterpart. In particular, given two state space dimensions with , and all other parameters kept fixed, there exists a critical time when the volume of the dimensional reach set overtakes that of the dimensional reach set.

In particular, for , we get

| (49) |

For instance, when , , , we have . When , , , we have . The dashed vertical lines in Fig. 7 show the critical times given by (49).

Applying Stirling’s approximation , we obtain the asymptotic for (49):

where denotes asymptotic equivalence [53, Ch. 1.4], and is the Euler number.

V-C2 Scaling of the diameter

Fig. 8 plots the diameter of (45) for the single input () case against time for varying state space dimension . As earlier, , . As expected, the diameter of the reach set increases with time for any fixed .

As , the diameter approaches a limiting curve shown by the dotted line in Fig. 8. To derive this limiting curve, notice that for , the formula (45) gives

| (50) |

We write the partial sum

| (51) |

and by ratio test, note that both the sums converge. In particular, converges to , where is the zeroth order modified Bessel function of the first kind. This follows from the very definition of the th order modified Bessel function of the first kind, given by

where denotes the Gamma function.

On the other hand, using the definition of the generalized hypergeometric function∥∥∥Here, denotes the Pochhammer symbol [54, p. 256] or rising factorial.

we find that

Therefore, (51) evaluates to

| (52) |

Combining (50), (51), (52), and using the continuity of the square root function on , we deduce that

| (53) |

That exists and equals to zero, follows from (51) and the continuity of the square:

To see the last equality, let . By the ratio test, , hence is a Cauchy sequence and .

VI Benchmarking Over-approximations of Integrator Reach Sets

In practice, a standard approach for safety and performance verification is to compute “tight” over-approximation of the reach sets of the underlying controlled dynamical system. Several numerical toolboxes such as [3, 2] are available which over-approximate the reach sets using simple geometric shapes such as zonotopes and ellipsoids. Depending on the interpretation of the qualifier “tight”, different optimization problems ensue, e.g., minimum volume outer-approximation [55, 56, 57, 58, 59, 60, 61, 62].

One potential application of our results in Sec. V is to help quantify the conservatism in different over-approximation algorithms by taking the integrator reach set as a benchmark case. For instance, Fig. 9 shows the conservatism in zonotopic over-approximations of the single input integrator reach sets for with and , computed using the CORA toolbox [2, 63]. To quantify the conservatism, we used the volume formula (41) for computing the ratio of the volumes . The results shown in Fig. 9 were obtained by setting the zonotope order in the CORA toolbox, which means that the number of zonotopic segments used by CORA for over-approximation was . As expected, increasing the zonotope order improves the accuracy at the expense of computational speed, but among the different dimensional volume ratio curves, trends similar to Fig. 9 remain. It is possible [31, Thm. 1.1, 1.2] to compute the optimal zonotope order as function of the desired approximation accuracy (i.e., desired Hausdorff distance from the zonoid).

For the numerical results shown in Fig. 9, we found the diameters of the over-approximating zonotopes for , to be the same as that of the true diameters given by (45) for all times.

Fig. 10 depicts the conservatism in ellipsoidal over-approximations of the single input integrator reach sets for with and , following the algorithms in ellipsoidal toolbox [3]. Specifically, the reach set at time , is over-approximated by the intersection of a carefully constructed parameterized family of ellipsoids defined as

for unit vectors , . The choice of determines , which in turn parameterizes the symmetric positive definite shape matrix ; we refer the readers to [64, Ch. 3.2], [37, Ch. 3] where the corresponding evolution equations were derived using optimal control. The center vectors , and the shape matrices for this parameterized family of ellipsoids are constructed such that is guaranteed to be a superset of the reach set at time for any finite , and for , recovers the reach set at that time.

For the results shown in Fig. 10, we used randomly chosen unit vectors . Ideally, one would like to compute the (unique) minimum volume outer ellipsoid (MVOE), a.k.a. the Löwner-John ellipsoid [65, 66] of the convex set , which is a semi-infinite programming problem [46, Ch. 8.4.1], and has no known exact semidefinite programming (SDP) reformulation. We computed two different relaxations of this problem: one based on the S procedure [67, Ch. 3.7.2], and the other by homothetic scaling of the maximum volume inner ellipsoid (MVIE) [65, Thm. III] of the set . Both of these lead to solving SDP problems, and both are guaranteed to contain the Löwner-John ellipsoid of the intersection of the parameterized family of ellipsoids. These suboptimal (w.r.t. the MVOE criterion) solutions, computed using cvx [68], are shown in Fig. 10.

Fig. 10 shows that the S procedure entail less conservatism compared to the MVIE scaling, in terms of volume. While the volume ratio trends in Fig. 10 are similar to that observed in Fig. 9, the approximation quality is lower. In light of the results in Sec. IV-A, this is not surprising: the integrator reach sets being zonoids (i.e., Hausdorff limit of zonotopes), the zonotopic outer-approximations are expected to perform better than other over-approximating shape primitives.

The main point here is that our results in Sec. V provide the ground truth for the size of the integrator reach set, thereby help benchmarking the performance of reach set approximation algorithms.

VII Epilogue

Recap

The present paper initiates a systematic study of integrator reach set. When the input uncertainty set is hyperrectangle, we showed that the corresponding compact convex reach set is in fact semialgebraic (Sec. IV-B) as well as a zonoid (range of an atom free vector measure) up to translation (Sec. IV-A). We derived the equation of its boundary in both parametric (Proposition 2) and implicit form (Sec. IV-C). We obtained the closed form formula for the volume (Sec. V-A) and diameter (Sec. V-B) of these reach sets. We also derived the scaling laws (Sec. V-C) for these quantities. We pointed out that these results may be used to benchmark the performance of set over-approximation algorithms (Sec. VI).

What Next

In the sequel Part II, we will show how the ideas presented herein enable computing the reach sets for feedback linearizable systems. The focus will be in computing the reach set in transformed state coordinates associated with the normal form, and to map that set back to original state coordinates under diffeomorphism. This, however, requires nontrivial extension of the basic theory presented here (especially those in Proposition 2 and Sec IV-C) since we will need to handle time-dependent set-valued uncertainty in transformed control input even when the original control takes values from a set that is not time-varying.

-A Proof of Proposition 1

Since support function is distributive over sum, we have

| (54) |

The block diagonal structure of the matrix in (2) implies

| (55) |

Following the definition of support function and [4, Proposition 1], we then have

| (56) |

The last equality in (-A) follows from (13), and from the fact [26, Prop. 6.1] that the reach set remains invariant under the closure of convexification of the input set . Substituting (55) and (-A) in (54) yields (16).

-B Proof of Theorem 1

Since the uncertainties in (20) along different input co-ordinate axes are mutually independent, the support function of the reach set is of the form (19). Therefore, in this case, (16) takes the form

| (57) |

The optimizer of the integrand in the RHS of (57), for , can be written in terms of the Heaviside unit step function as

where denotes the signum function. Therefore,

| (58) |

for . Substituting (58) back in (57) and integrating over completes the proof.

-C Proof of Theorem 2

-D Proof of Proposition 2

From Sec. II-2, the supporting hyperplane at any with outward normal is , and the Legendre-Fenchel conjugate

| (59) |

For , let comprise of subvectors . Since the Cartesian product (18) is equivalent to the Minkowski sum , and the support function of Minkowski sum is the sum of support functions of the summand sets [13, p. 48], we have

| (60) |

wherein the last line follows from the property that the Legendre-Fenchel conjugate of a separable sum equals to the sum of the Legendre-Fenchel conjugates [46, p. 95].

Therefore, combining (59) and (60), we obtain

| (61) |

For , since each objective in (61) involves an integral of the absolute value of a polynomial in of degree , that polynomial can have at most roots in the interval , i.e., can have at most sign changes in that interval. If all roots of the aforesaid polynomial are in , we denote these roots as , and write

| (62) |

Notice that even if the number of roots in is strictly less than******this may happen either because there are repeated roots in , or because some real roots exist outside , or because some roots are complex conjugates, or a combination of the previous three. , the expression (62) is generic in the sense the corresponding summand integrals become zero. Thus, combining (61) and (62), we arrive at

| (63) |

-E Proof of Corollary 3

From (26), we get two different parametric representations of in terms of . One parametric representation results from the choice of positive sign for the appearing in (26), and another for the choice of negative sign for the same. Denoting the implicit representation corresponding to the parametric representation (26) with (resp. ) sign as (resp. ), the result follows.

-F Proof of Theorem 4

We notice that (26) gives polynomial parameterizations of the components of for all . In particular, for each , the right hand side of (26) is a homogeneous polynomial in parameters of degree . By polynomial implicitization [25, p. 134], the corresponding implicit equations (when fixing plus sign for in (26)) and (when fixing minus sign for in (26)), must define affine varieties in .

Specifically, denote the right hand sides of (26) as for all , where the superscripts indicate that either all ’s are chosen with plus signs, or all with minus signs. Then write (26) as

Now for each , consider the ideal

and let be the th elimination ideal of . Then for each , the variety

Likewise, the variety .

Thus, the algebraic boundary (i.e., the Zariski closure of the Euclidean boundary) of is

Therefore, is semialgebraic for all .

Since the Cartesian product of semialgebraic sets is semialgebraic, the statement follows from (18).

-G Proof of Theorem 5

We organize the proof in three steps.

Step 1: From (18), we have

| (65) |

Step 2: Motivated by (65), we focus on deriving the -dimensional volume of . For this purpose, we proceed as in [4] by uniformly discretizing the interval into subintervals , , with breakpoints , where for .

From (24), we then have

| (66) |

where was defined in (13). We recognize that the set in (66) is a Minkowski sum of intervals, i.e., is a zonotope imbedded in , wherein each interval is rotated and scaled in via different linear transformations , .

Using the formula for the volume of zonotopes [15, eqn. (57)], [69, exercise 7.19], we can write (66) as

| (67) |

To compute the summand determinants in (67), let

where . In the matrix list notation, let us use the vertical bars to denote the absolute value of determinant. From (13), equals

| (68) |

where we used the properties of elementary row operations.

Notice that the determinant appearing in the last step of (68) is the Vandermonde determinant (see e.g., [70, p. 37])

| (69) |

Combining (67), (68) and (69), we obtain

| (70) |

Step 3: Our next task is to simplify (70). Observe that the sum

| (71) |

returns a polynomial in of degree , and hence the limit in (70) is always well-defined. Specifically, the limit extracts the leading coefficient of this polynomial.

Let us denote the leading coefficient of the sum (71) as . By the Euler-Maclaurin formula [71], [72, Chap. II.10]:

| (72) |

One way to unpack (72) is to write it as a sum over the symmetric permutation group of the finite set , i.e.,

where , , and stands for “the number of”. We will now prove that

| (73) |

To this end, we write as an integral over :

| (74) |

In 1955, de Bruijn [73, see toward the end of Sec. 9] used certain Pfaffians to evaluate

-H Proof of Theorem 6

From (13), the subvector , where , is component-wise nonnegative for all .

Therefore, by triangle inequality, we have

| (76) |

where denotes the th subvector with component-wise absolute values. Let us call as the “absolute unit vector”.

The upper bound in (76) is convex in , and is maximized by an absolute unit vector collinear with given by

| (77) |

i.e., the unit vectors associated with up to plus-minus sign permutations among its components.

References

- [1] P. Varaiya, “Reach set computation using optimal control,” in Verification of Digital and Hybrid Systems. Springer, 2000, pp. 323–331.

- [2] M. Althoff, “An introduction to CORA 2015,” in Proc. of the Workshop on Applied Verification for Continuous and Hybrid Systems, 2015.

- [3] A. A. Kurzhanskiy and P. Varaiya, “Ellipsoidal toolbox (ET),” in Proceedings of the 45th IEEE Conference on Decision and Control. IEEE, 2006, pp. 1498–1503.

- [4] S. Haddad and A. Halder, “The convex geometry of integrator reach sets,” in 2020 American Control Conference (ACC). IEEE, 2020, pp. 4466–4471.

- [5] R. J. Aumann, “Integrals of set-valued functions,” Journal of Mathematical Analysis and Applications, vol. 12, no. 1, pp. 1–12, 1965.

- [6] S. Haddad and A. Halder, “Boundary and taxonomy of integrator reach sets,” in 2022 American Control Conference (ACC). IEEE, 2022, pp. 4133–4138.

- [7] J. M. Borwein and R. E. Crandall, “Closed forms: what they are and why we care,” Notices of the AMS, vol. 60, no. 1, pp. 50–65, 2013.

- [8] J.-B. Hiriart-Urruty and C. Lemaréchal, Convex analysis and minimization algorithms I: Fundamentals. Springer Science & Business media, 2013, vol. 305.

- [9] A. A. Liapounoff, “Sur les fonctions-vecteurs completement additives,” Izvestiya Rossiiskoi Akademii Nauk. Seriya Matematicheskaya, vol. 4, no. 6, pp. 465–478, 1940.

- [10] P. R. Halmos, “The range of a vector measure,” Bulletin of the American Mathematical Society, vol. 54, no. 4, pp. 416–421, 1948.

- [11] J. Diestel and B. Faires, “On vector measures,” Transactions of the American Mathematical Society, vol. 198, pp. 253–271, 1974.

- [12] N. Dinculeanu, Vector measures. Elsevier, 2014.

- [13] R. Schneider, Convex bodies: the Brunn–Minkowski theory. Cambridge University Press, 2014, no. 151.

- [14] P. McMullen, “On zonotopes,” Transactions of the American Mathematical Society, vol. 159, pp. 91–109, 1971.

- [15] G. Shephard, “Combinatorial properties of associated zonotopes,” Canadian Journal of Mathematics, vol. 26, no. 2, pp. 302–321, 1974.

- [16] H. S. M. Coxeter, Regular polytopes. Courier Corporation, 1973.

- [17] A. Girard, “Reachability of uncertain linear systems using zonotopes,” in International Workshop on Hybrid Systems: Computation and Control. Springer, 2005, pp. 291–305.

- [18] A. Girard and C. Le Guernic, “Zonotope/hyperplane intersection for hybrid systems reachability analysis,” in International Workshop on Hybrid Systems: Computation and Control. Springer, 2008, pp. 215–228.

- [19] M. Althoff, O. Stursberg, and M. Buss, “Computing reachable sets of hybrid systems using a combination of zonotopes and polytopes,” Nonlinear Analysis: Hybrid Systems, vol. 4, no. 2, pp. 233–249, 2010.

- [20] M. Althoff and B. H. Krogh, “Zonotope bundles for the efficient computation of reachable sets,” in 2011 50th IEEE Conference on Decision and Control and European Control Conference. IEEE, 2011, pp. 6814–6821.

- [21] J. K. Scott, D. M. Raimondo, G. R. Marseglia, and R. D. Braatz, “Constrained zonotopes: A new tool for set-based estimation and fault detection,” Automatica, vol. 69, pp. 126–136, 2016.

- [22] A. S. Adimoolam and T. Dang, “Using complex zonotopes for stability verification,” in 2016 American Control Conference (ACC). IEEE, 2016, pp. 4269–4274.

- [23] M. Althoff, “Reachability analysis of nonlinear systems using conservative polynomialization and non-convex sets,” in Proceedings of the 16th International Conference on Hybrid Systems: Computation and Control, 2013, pp. 173–182.

- [24] N. Kochdumper and M. Althoff, “Sparse polynomial zonotopes: A novel set representation for reachability analysis,” IEEE Transactions on Automatic Control, 2020.

- [25] D. Cox, J. Little, and D. OShea, Ideals, varieties, and algorithms: an introduction to computational algebraic geometry and commutative algebra. Springer Science & Business Media, 2013.

- [26] J. Yong and X. Y. Zhou, Stochastic controls: Hamiltonian systems and HJB equations. Springer Science & Business Media, 1999, vol. 43.

- [27] S. Haddad and A. Halder, “Certifying the intersection of reach sets of integrator agents with set-valued input uncertainties,” IEEE Control Systems Letters, 2022.

- [28] E. D. Bolker, “A class of convex bodies,” Transactions of the American Mathematical Society, vol. 145, pp. 323–345, 1969.

- [29] R. Schneider and W. Weil, “Zonoids and related topics,” in Convexity and its Applications. Springer, 1983, pp. 296–317.

- [30] P. Goodey and W. Wolfgang, “Zonoids and generalisations,” in Handbook of Convex Geometry. Elsevier, 1993, pp. 1297–1326.

- [31] J. Bourgain, J. Lindenstrauss, and V. Milman, “Approximation of zonoids by zonotopes,” Acta Mathematica, vol. 162, no. 1, pp. 73–141, 1989.

- [32] C. Vinzant, “The geometry of spectrahedra,” in Sum of Squares: Theory and Applications. American Mathematical Society, 2020, pp. 11–36.

- [33] A. Tarski, “A decision method for elementary algebra and geometry,” in Quantifier elimination and cylindrical algebraic decomposition. Springer, 1998, pp. 24–84.

- [34] A. Seidenberg, “A new decision method for elementary algebra,” Annals of Mathematics, pp. 365–374, 1954.

- [35] J. Bochnak, M. Coste, and M.-F. Roy, Real algebraic geometry. Springer Science & Business Media, 2013, vol. 36.

- [36] G. Blekherman, P. A. Parrilo, and R. R. Thomas, Semidefinite optimization and convex algebraic geometry. SIAM, 2012.

- [37] A. B. Kurzhanski and P. Varaiya, Dynamics and Control of Trajectory Tubes: Theory and Computation. Springer, 2014, vol. 85.

- [38] G. Zaimi, “A polynomial implicitization,” MathOverflow. [Online]. Available: https://mathoverflow.net/q/381335

- [39] H. S. Wilf, generatingfunctionology. CRC Press, 2005.

- [40] L. Kronecker, Zur Theorie der Elimination einer Variabeln aus zwei algebraischen Gleichungen. Buchdruckerei der Königl. Akademie der Wissenschaften (G. Vogt), 1881.

- [41] R. Salem, Algebraic numbers and Fourier analysis. Wadsworth Publishing Company, 1983.

- [42] R. Schneider, “Zonoids whose polars are zonoids,” Proceedings of the American Mathematical Society, vol. 50, no. 1, pp. 365–368, 1975.

- [43] Y. Lonke, “On zonoids whose polars are zonoids,” Israel Journal of Mathematics, vol. 102, no. 1, pp. 1–12, 1997.

- [44] J. W. Helton and V. Vinnikov, “Linear matrix inequality representation of sets,” Communications on Pure and Applied Mathematics, vol. 60, no. 5, pp. 654–674, 2007.

- [45] J. W. Helton and J. Nie, “Semidefinite representation of convex sets,” Mathematical Programming, vol. 122, no. 1, pp. 21–64, 2010.

- [46] S. P. Boyd and L. Vandenberghe, Convex optimization. Cambridge University Press, 2004.

- [47] L.-L. Xie and P. R. Kumar, “A network information theory for wireless communication: Scaling laws and optimal operation,” IEEE transactions on information theory, vol. 50, no. 5, pp. 748–767, 2004.

- [48] F. Xue, P. R. Kumar et al., “Scaling laws for ad hoc wireless networks: an information theoretic approach,” Foundations and Trends® in Networking, vol. 1, no. 2, pp. 145–270, 2006.

- [49] C. Fan, J. Kapinski, X. Jin, and S. Mitra, “Locally optimal reach set over-approximation for nonlinear systems,” in 2016 International Conference on Embedded Software (EMSOFT). IEEE, 2016, pp. 1–10.

- [50] Y. Meng, D. Sun, Z. Qiu, M. T. B. Waez, and C. Fan, “Learning density distribution of reachable states for autonomous systems,” in Conference on Robot Learning. PMLR, 2022, pp. 124–136.

- [51] OEIS Foundation Inc., “The On-Line Encyclopedia of Integer Sequences,” http://oeis.org/A107254, 2019.

- [52] S. R. Finch, Mathematical constants. Cambridge University Press, 2003.

- [53] N. G. De Bruijn, Asymptotic methods in analysis. Courier Corporation, 1981, vol. 4.

- [54] M. Abramowitz and I. A. Stegun, Handbook of mathematical functions with formulas, graphs, and mathematical tables. US Government Printing Office, 1970, vol. 55.

- [55] F. C. Schweppe, Uncertain dynamic systems. Prentice Hall, 1973.

- [56] F. Chernousko, “Optimal guaranteed estimates of indeterminacies with the aid of ellipsoids, I,” Engineering Cybernetics, vol. 18, no. 3, pp. 1–9, 1980.

- [57] ——, “Guaranteed ellipsoidal estimates of uncertainties in control problems,” IFAC Proceedings Volumes, vol. 14, no. 2, pp. 869–874, 1981.

- [58] D. Maksarov and J. Norton, “State bounding with ellipsoidal set description of the uncertainty,” International Journal of Control, vol. 65, no. 5, pp. 847–866, 1996.

- [59] C. Durieu, E. Walter, and B. Polyak, “Multi-input multi-output ellipsoidal state bounding,” Journal of Optimization Theory and Applications, vol. 111, no. 2, pp. 273–303, 2001.

- [60] T. Alamo, J. M. Bravo, and E. F. Camacho, “Guaranteed state estimation by zonotopes,” Automatica, vol. 41, no. 6, pp. 1035–1043, 2005.

- [61] A. Halder, “On the parameterized computation of minimum volume outer ellipsoid of Minkowski sum of ellipsoids,” in 2018 IEEE Conference on Decision and Control (CDC). IEEE, 2018, pp. 4040–4045.

- [62] ——, “Smallest ellipsoid containing p-sum of ellipsoids with application to reachability analysis,” IEEE Transactions on Automatic Control, 2020.

- [63] “CORA: A tool for continuous reachability analysis,” https://tumcps.github.io/CORA/, accessed: 2021-02-14.

- [64] A. Kurzhanskiĭ and I. Vályi, Ellipsoidal calculus for estimation and control. Nelson Thornes, 1997.

- [65] F. John, “Extremum problems with inequalities as subsidiary conditions,” Studies and Essays: Courant Anniversary Volume, presented to R. Courant on his 60th Birthday, pp. 187–204, 1948.

- [66] M. Henk, “Löwner-John ellipsoids,” Documenta Math, vol. 95, p. 106, 2012.

- [67] S. Boyd, L. El Ghaoui, E. Feron, and V. Balakrishnan, Linear matrix inequalities in system and control theory. SIAM, 1994.

- [68] M. Grant and S. Boyd, “CVX: Matlab Software for Disciplined Convex Programming, version 2.1,” http://cvxr.com/cvx, Mar 2014.

- [69] G. M. Ziegler, Lectures on polytopes. Springer Science & Business Media, 2012, vol. 152.

- [70] R. A. Horn and C. R. Johnson, Matrix analysis. Cambridge University Press, 2012.

- [71] T. M. Apostol, “An elementary view of Euler’s summation formula,” The American Mathematical Monthly, vol. 106, no. 5, pp. 409–418, 1999.

- [72] E. Hairer and G. Wanner, Analysis by its History. Springer Science & Business Media, 2006, vol. 2.

- [73] N. De Bruijn, “On some multiple integrals involving determinants,” J. Indian Math. Soc, vol. 19, pp. 133–151, 1955.