The CSIRO Crown-of-Thorn Starfish Detection Dataset

Abstract.

Crown-of-Thorns Starfish (COTS) outbreaks are a major cause of coral loss on the Great Barrier Reef (GBR), and substantial surveillance and control programs are underway in an attempt to manage COTS populations to ecologically sustainable levels. We release a large-scale, annotated underwater image dataset from a COTS outbreak area on the GBR to encourage research on Machine Learning and AI-driven technologies that improve the detection, monitoring, and management of COTS populations at reef scale. The dataset is released and hosted in a Kaggle competition (https://www.kaggle.com/c/tensorflow-great-barrier-reef) that challenges the international Machine Learning community with the task of detecting COTS in these underwater images.

1. Introduction

Australia’s Great Barrier Reef (GBR) is a national icon and a World Heritage Site, where tiny corals build continental-scale underwater structures inhabited by thousands of other marine creatures. While it is no secret that the GBR is under threat, it may be less widely known that a species of starfish, the Crown-of-Thorns Starfish (COTS), is one of the main causes of coral loss on the GBR.

Hat-sized and covered with poisonous spines, COTS are a common reef species that feed on corals and have been an integral part of coral reef ecosystems for millennia. However, their populations can explode to thousands of starfish on individual reefs, eating most of the coral and leaving behind a white-scarred reef that takes years to recover. For centuries the reef was resilient enough to recover from such natural cycles, and affected coral was given time to eventually grow back. This is no longer the case, as a combination of stress factors now threatens the health of the GBR. The World Wide Fund for Nature (WWF) lists five key threats to the GBR (https://www.wwf.org.au/what-we-do/oceans/great-barrier-reef#gs.8nv5fh), and COTS outbreaks, together with their links to increased farm pollution, rank near the top of the list, second only to the massive bleaching events that have impacted the reef in recent years.

Australian agencies are running a major monitoring and management program in an effort to track and control COTS populations to ecologically sustainable levels. One of the key methods used for COTS and coral cover surveillance is the Manta Tow method, in which a snorkel diver is towed behind a boat to visually assess the underwater habitat. The boat stops after every transect (typically every 200 meters) so that variables observed during the tow can be recorded on a datasheet. Information from such surveys is used to identify early COTS outbreaks or characterize existing ones, and provides key information for the decision support systems that target the deployment of COTS control teams. While effective in general, the survey method has limitations related to its operational scalability, data resolution, reliability, and traceability.

One way of improving the survey method is to deploy underwater imaging devices to collect data from the reefs and employ deep learning algorithms to analyze the imagery, for example, to find COTS and characterize coral cover. AI-driven environmental surveys could significantly improve the efficiency and scale at which marine scientists and reef managers survey for COTS, offering great benefits to the COTS control program.

The dataset presented in this paper aims to make a bold statement about the capabilities of Machine Learning and AI technologies applied to broadscale surveillance of underwater habitats. In partnership with the international community, we want to demonstrate a step change in delivering accurate, timely, and reliable assessments of reef-scale ecosystems. We are releasing a large annotated underwater imagery dataset collected on the GBR in collaboration with COTS Control teams in a COTS outbreak area. We have worked with domain experts and marine scientists to validate the dataset annotations, and are sharing the data with the international community through a Kaggle competition.

2. Data Collection Method

We collected the data with GoPro Hero9 cameras adapted for use in the Manta Tow method. Specifically, the camera was attached to the bottom of the manta tow board that the snorkel diver holds onto during the surveys. The camera provides an oblique field of view of the reef below the diver, and the distance to the reef changes dynamically as the diver explores the reef. This distance is typically several meters, but can be as close as a few tens of centimeters or as far as 10 meters or more. The survey boat moves at speeds of up to 5 knots and tows the diver for two minutes, which equates to a transect of approximately 200 meters. The boat then stops to let the diver record the data observed during the transect on a sheet of paper. We set the GoPro cameras to record video continuously at 24 frames per second and 3840×2160 resolution, and manually removed the periods of no activity between transects.
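The survey figures in this paragraph can be cross-checked with a little arithmetic. The Python sketch below uses only the numbers stated here (two-minute tows, transects of about 200 m, 24 frames per second, a maximum speed of 5 knots); the helper names are illustrative, and constant boat speed is assumed. It shows that a 200 m transect in two minutes implies an average speed of about 3.2 knots, below the 5-knot maximum (which would cover roughly 309 m), and that each transect yields 2,880 frames, or roughly one frame every 7 cm along the track.

```python
# Back-of-envelope figures for one manta tow transect, using the numbers in
# the text. Constant boat speed is an assumption made for illustration.
KNOT_IN_M_PER_S = 1852.0 / 3600.0  # 1 knot = 1 nautical mile (1852 m) per hour

TOW_DURATION_S = 2 * 60      # two-minute tow
TRANSECT_LENGTH_M = 200.0    # approximate transect length
FPS = 24                     # GoPro recording frame rate
MAX_SPEED_KNOTS = 5.0        # stated upper bound on boat speed


def average_speed_knots(length_m: float, duration_s: float) -> float:
    """Average speed needed to cover length_m in duration_s, in knots."""
    return (length_m / duration_s) / KNOT_IN_M_PER_S


def frames_per_transect(duration_s: float, fps: int) -> int:
    """Number of video frames recorded during one tow."""
    return int(duration_s * fps)


speed = average_speed_knots(TRANSECT_LENGTH_M, TOW_DURATION_S)
frames = frames_per_transect(TOW_DURATION_S, FPS)
spacing_m = TRANSECT_LENGTH_M / frames
max_length_m = MAX_SPEED_KNOTS * KNOT_IN_M_PER_S * TOW_DURATION_S

print(f"average speed: {speed:.1f} knots")                        # 3.2 knots
print(f"frames per transect: {frames}")                           # 2880
print(f"distance between frames: {spacing_m * 100:.1f} cm")       # 6.9 cm
print(f"distance at 5 knots for 2 minutes: {max_length_m:.0f} m") # 309 m
```

At this frame spacing, a COTS on the reef stays in view for many consecutive frames, which is why the recorded imagery forms dense sequences.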

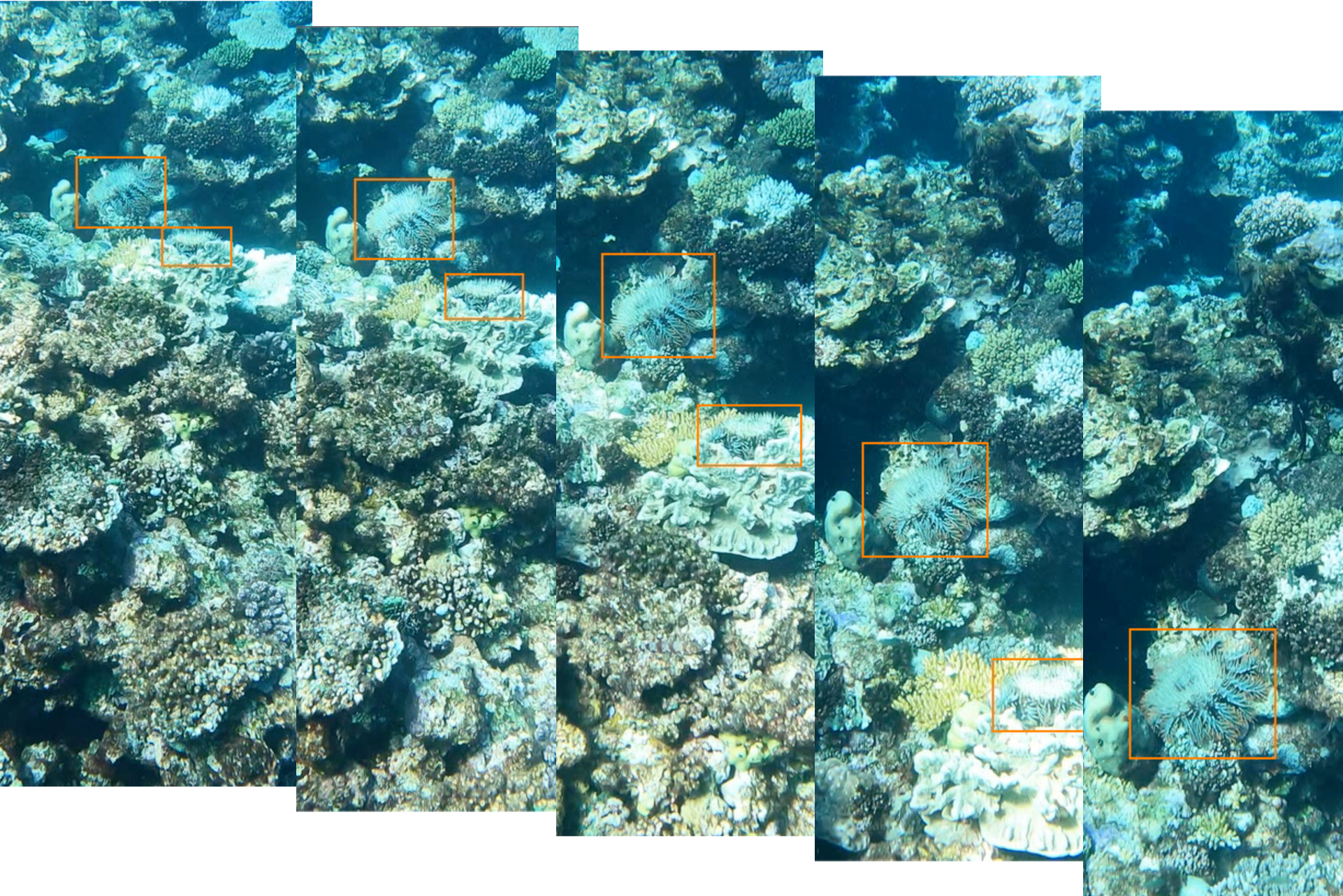

The dataset then underwent an AI-assisted annotation and quality assurance process, in which expert annotators, aided by pre-trained COTS detection models, identified all COTS in the images. Each COTS in an image was marked with a rectangular bounding box using our annotation software. The data were collected over a single day in October 2021 at a reef in the Swain Reefs region of the GBR. The images vary in lighting, visibility, coral habitat, depth, distance from the bottom, and viewpoint.

3. Dataset Characterization

The released dataset consists of sequences of underwater images collected at five different areas on a reef in the GBR. It contains more than 35k images, with hundreds of individual COTS visible. The dataset is split into training and test sets; for the purposes of the competition, we are releasing the training set, which consists of images annotated with bounding boxes around each COTS (e.g., Fig. 2).

Several key factors differentiate this dataset from conventional object detection datasets:

- There is only one class, COTS, in this object detection dataset.
- The dataset naturally exhibits sequence-based annotations, as multiple images are taken of the same COTS as the boat moves past it. Note that the evaluation is still based on individual detections, similar to the COCO challenge.
- There can be multiple COTS in the same image, and COTS can overlap each other. COTS are cryptic animals and like to hide, so only part of the animal might be visible in some images.
- The key objective of surveys conducted by the monitoring and control program is to find all visible COTS along the defined transect paths, hence recall is prioritized. The evaluation is therefore based on the average F2-score (see next section for details).

4. Evaluation Protocol

For a single image, the IoU of a proposed set of object pixels $A$ and a set of true object pixels $B$ is calculated as:

$$\mathrm{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|}.$$
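Because annotations and predictions in this dataset are rectangular boxes, the pixel-set definition reduces to a computation on box coordinates. The following Python sketch assumes a hypothetical (x, y, width, height) box layout in pixels with a top-left origin, and treats boxes as continuous rectangles rather than discrete pixel sets; it illustrates the formula and is not the competition's scoring code.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixels: top-left corner (x, y), width and height.

    This (x, y, width, height) layout is a hypothetical choice for illustration.
    """
    x: float
    y: float
    width: float
    height: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union, |A ∩ B| / |A ∪ B|, of two boxes."""
    # Overlap along each axis; zero if the boxes do not intersect.
    inter_w = max(0.0, min(a.x + a.width, b.x + b.width) - max(a.x, b.x))
    inter_h = max(0.0, min(a.y + a.height, b.y + b.height) - max(a.y, b.y))
    intersection = inter_w * inter_h
    # Union = sum of both areas minus the doubly counted overlap.
    union = a.width * a.height + b.width * b.height - intersection
    return intersection / union if union > 0 else 0.0


# Two 10x10 boxes offset by 5 pixels horizontally overlap in a 5x10 strip.
print(iou(Box(0, 0, 10, 10), Box(5, 0, 10, 10)))  # 50 / 150 -> 0.3333333333333333
```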

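The procedure that counts True Positives (TP), False Positives (FP), and False Negatives (FN) at a given IoU threshold $t$ matches predicted boxes to ground-truth boxes within each image: a prediction is a TP if it is matched to a ground-truth box with an IoU greater than $t$, where each ground-truth box can be matched at most once; a prediction left unmatched is an FP; and a ground-truth box left unmatched is an FN.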
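A common way to implement this counting for one image is sketched below in Python. The sketch makes assumptions the text does not specify: boxes use a hypothetical (x, y, width, height) layout, predictions are (confidence, box) pairs matched greedily in descending confidence order (as in COCO-style evaluation), each prediction claims the still-unmatched ground-truth box with the highest IoU, and a match requires IoU strictly above the threshold.

```python
from typing import List, Sequence, Tuple

# Hypothetical box layout: (x, y, width, height) in pixels, top-left origin.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def count_matches(
    preds: Sequence[Tuple[float, Box]],  # (confidence, box) pairs
    truths: Sequence[Box],
    threshold: float,
) -> Tuple[int, int, int]:
    """Return (TP, FP, FN) for one image at one IoU threshold.

    Assumption: greedy matching in descending confidence; each prediction
    takes the unmatched ground-truth box with the highest IoU above threshold.
    """
    unmatched: List[int] = list(range(len(truths)))
    tp = fp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, threshold  # only IoU > threshold can match
        for j in unmatched:
            overlap = iou(box, truths[j])
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            tp += 1
            unmatched.remove(best_j)  # each ground-truth box matches at most once
        else:
            fp += 1
    fn = len(unmatched)  # ground-truth boxes that no prediction claimed
    return tp, fp, fn


truths = [(0, 0, 10, 10), (50, 50, 10, 10)]
preds = [(0.9, (1, 0, 10, 10)), (0.8, (0, 0, 10, 10)), (0.4, (100, 100, 5, 5))]
print(count_matches(preds, truths, 0.5))  # (1, 2, 1)
```

In this toy example, the highest-confidence prediction claims the first COTS (IoU ≈ 0.82), the second prediction duplicates that already-matched COTS and counts as an FP, the third overlaps nothing, and the second COTS is missed.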

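Given an IoU threshold $t$, the $F_\beta$-score (Sasaki, 2007) is calculated from the number of true positives $TP(t)$, false negatives $FN(t)$, and false positives $FP(t)$:

$$F_\beta(t) = \frac{(1+\beta^2)\,TP(t)}{(1+\beta^2)\,TP(t) + \beta^2\,FN(t) + FP(t)} \qquad (1)$$

We use $\beta = 2$ for the competition (F2-score).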
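Eq. (1) translates directly into code. The Python sketch below (the function name is illustrative) computes the score from raw counts. In the worked example, with 8 true positives, trading 4 false positives and 2 false negatives for 2 false positives and 4 false negatives lowers the F2-score from about 0.769 to about 0.690: with $\beta = 2$, each false negative weighs four times as much as a false positive in the denominator, which is how the metric prioritizes recall.

```python
def f_beta(tp: int, fp: int, fn: int, beta: float = 2.0) -> float:
    """F-beta score from TP/FP/FN counts, as in Eq. (1); beta=2 gives F2."""
    b2 = beta * beta
    denom = (1 + b2) * tp + b2 * fn + fp
    # Assumed convention: return 0.0 when TP, FP and FN are all zero,
    # since the score is undefined in that case.
    return (1 + b2) * tp / denom if denom > 0 else 0.0


# Same number of TPs, errors shifted from FPs to FNs.
print(f"{f_beta(8, 4, 2):.3f}")  # 0.769  (40 / 52)
print(f"{f_beta(8, 2, 4):.3f}")  # 0.690  (40 / 58)
```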

The F2-score is calculated for IoU thresholds ranging from 0.3 to 0.8 at 0.05 increments (11 thresholds), and their average is used as the metric for the main leaderboard.
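Putting the pieces together, the leaderboard metric can be sketched as follows. This self-contained Python illustration rests on several assumptions not stated in the text: hypothetical (x, y, width, height) boxes and (confidence, box) predictions grouped by a hypothetical image id, greedy matching in descending confidence with IoU strictly above the threshold, and TP/FP/FN counts summed over all images before each F2-score is computed. The official competition scorer may differ in these details.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical layout: boxes are (x, y, width, height) in pixels and each
# prediction is a (confidence, box) pair; images are keyed by an image id.
Box = Tuple[float, float, float, float]
Pred = Tuple[float, Box]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def counts(preds: Sequence[Pred], truths: Sequence[Box], t: float) -> Tuple[int, int, int]:
    """(TP, FP, FN) for one image; greedy matching by confidence (assumed)."""
    unmatched = list(range(len(truths)))
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        # Best still-unmatched ground-truth box for this prediction.
        best = max(((iou(box, truths[j]), j) for j in unmatched), default=(0.0, -1))
        if best[0] > t:
            tp += 1
            unmatched.remove(best[1])
    return tp, len(preds) - tp, len(unmatched)


def mean_f2(
    preds: Dict[str, List[Pred]], truths: Dict[str, List[Box]], beta: float = 2.0
) -> float:
    """Average F2 over IoU thresholds 0.30, 0.35, ..., 0.80 (11 values).

    Assumption: TP/FP/FN are summed over all images before computing F2.
    """
    thresholds = [round(0.3 + 0.05 * i, 2) for i in range(11)]
    b2 = beta * beta
    scores = []
    for t in thresholds:
        tp = fp = fn = 0
        for image_id in truths.keys() | preds.keys():
            c = counts(preds.get(image_id, []), truths.get(image_id, []), t)
            tp, fp, fn = tp + c[0], fp + c[1], fn + c[2]
        denom = (1 + b2) * tp + b2 * fn + fp
        scores.append((1 + b2) * tp / denom if denom > 0 else 0.0)
    return sum(scores) / len(scores)


truths = {"img0": [(0, 0, 10, 10)], "img1": [(20, 20, 10, 10)]}
preds = {"img0": [(0.9, (0, 0, 10, 10))], "img1": [(0.7, (20, 20, 10, 6.4))]}
print(round(mean_f2(preds, truths), 4))  # 0.8182
```

In this toy example, the second prediction has IoU 0.64 with its COTS, so it is a TP at the seven thresholds up to 0.60 (F2 = 1.0) and an FP plus an FN at the remaining four (F2 = 5 / 10 = 0.5), giving (7 × 1.0 + 4 × 0.5) / 11 ≈ 0.818.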

Acknowledgements

We thank Dan Godoy and the Blue Planet Marine COTS Control Team and research vessel crew, who helped plan voyage logistics and collected GoPro video alongside their manta tow surveys. We also thank Dave Williamson at GBRMPA for providing data and planning support.

We thank Google for supporting the project in various ways, and the Google/Kaggle team, including Scott Riddle, Danial Formosa, Taehee Jeong, Glenn Cameron, Addison Howard, Will Cukierski, Sohier Dane, Ryan Holbrook, and others.

References


- Yutaka Sasaki. 2007. The truth of the F-measure. Teach Tutor Mater (01 2007).