The Beatbots: A Musician-Informed Multi-Robot Percussion Quartet

Abstract

Artistic creation is often seen as a uniquely human endeavor, yet robots bring distinct advantages to music-making, such as precise tempo control, unpredictable rhythmic complexities, and the ability to coordinate intricate human and robot performances. While many robotic music systems aim to mimic human musicianship, our work emphasizes the unique strengths of robots, resulting in a novel multi-robot performance instrument called the Beatbots, capable of producing music that is challenging for humans to replicate using current methods. The Beatbots were designed using an “informed prototyping” process, incorporating feedback from three musicians throughout development. We evaluated the Beatbots through a live public performance, surveying participants () to understand how they perceived and interacted with the robotic performance. Results show that participants valued the playfulness of the experience, the aesthetics of the robot system, and the unconventional robot-generated music. Expert musicians and non-expert roboticists demonstrated especially positive mindset shifts during the performance, although participants across all demographics had favorable responses. We propose design principles to guide the development of future robotic music systems and identify key robotic music affordances that our musician consultants considered particularly important for robotic music performance.

Index Terms:

robotic music, multi-robot systems, informed prototyping

I Introduction

Creating art is often seen as a uniquely human venture, tied to emotion, creativity, and expression [1]. Yet the intersection of robotics and art is expanding [2, 3, 4], challenging traditional ideas about art and sparking both excitement and skepticism about robots’ artistic role. In this work, we focus on robotic music, an area where robots offer distinct advantages, such as the creation of unpredictable rhythms [5, 6] and precise tempo control [7], expanding possibilities in musical performance.

While many current robotic music systems are designed to replicate human playing, often being trained on human musicians to sound as “human” as possible [8, 9, 10, 11], our approach emphasizes robotic capabilities. We explore using robots as an alternative means of music creation, focusing on performing music that would be challenging for humans to replicate alone. By integrating human-robot co-creation principles [12, 13] and utilizing robotic abilities like the addition of randomness [14] and coordination between multiple robots within a system [15], we showcase how robots can contribute to music in novel ways, while still including meaningful human interaction [16] and, importantly, centering human musicians’ values [17].

For this work, we chose percussion music because it aligns well with known robotic strengths. Opting for a multi-robot system also allowed us to explore more complex rhythmic coordination between musicians, as seen in several well-known contemporary percussion compositions [18, 19]. We specifically chose the recognized percussion quartet form [20].

Our robotic percussion quartet, called the Beatbots, represents a novel artistic system for producing and performing robotic music. To ensure alignment with human musicianship values, we collaborated with three musicians through informed prototyping [21] in the design of our robotic music system. Our work was driven by the following research questions:

- R1) Through informed prototyping with musicians, can we develop a novel robotic system that utilizes robots’ unique strengths in percussion music performance?

- R2) How do experts and non-experts in music and robotics perceive robotic percussion music performance?

- R3) What do participants value in a multi-robot percussion music experience? How does interaction with robotic percussion performers affect that experience?

We also present a public demonstration and evaluation of the Beatbots system, providing insights into how different demographics—experts and non-experts in percussion music and robotics—perceive the performance. Our work also introduces five design principles for robotic percussion systems based on insights from the demonstration and discusses musical robot affordances that emerged from our musician-informed design process. We aim to offer reusable strategies and insights for future robot music performance development.

II Related Work

II-A Algorithmic & Generative Percussion Music

Percussion music originated from instinct, with early percussionists relying on innate musicality. Over time, techniques were codified and passed down over generations [22, 23], with rhythmic repetition and rule-based phrasing forming a foundation for intricate patterns [24]. This enabled the creation of new, complex patterns derived from traditional phrases, much like certain procedural approaches to music composition [25], which range from medieval to contemporary works [26]. Additionally, contemporary percussion composers sometimes move away from strict rules, introducing elements of randomness to add further musical complexity [27, 28].

Steve Reich is known for repetitive rhythms and phasing [29], where phrases are played at different tempos to create desynchronized textures, as seen in Drumming and Clapping Music [18, 30]. Similarly, Philip Glass uses overlapping rhythms to build complex layers from simple patterns, as in his String Quartet 6 [31, 19]. John Cage, in works like his Composed Improvisations, pioneered rule-driven compositions [32] that use randomness, allowing performers to improvise within structured frameworks—such as having musicians roll dice to decide their next pattern [33].

Our work takes inspiration from contemporary percussion compositions by incorporating phasing, rhythmic layering, and randomness, implemented by our robotic music system. These techniques, pioneered by composers like Reich, Glass, and Cage, are particularly well-suited for our approach emphasizing unique robot strengths, as robots can easily execute random or complex patterns requiring difficult coordination, like phasing and overlapping rhythms, which would require extensive training for human musicians to perform accurately.

II-B Robot Musicianship

As robots become increasingly prevalent [34, 35], their applications in creative fields extend beyond automation [2, 3, 4, 8]. Musical robots have been developed for various instruments [36, 37], ranging from string [11, 38], to wind [39, 40], to percussion [41, 42].

Recent developments in robotic music tend to emphasize anthropomorphism, with systems mimicking human appearance or trained on human movements [8, 41, 10]. Examples include the Waseda Flutist Robot [40], Toyota’s violin-playing robot [11], and others [43, 44, 45]. Robot systems also leverage physical presence and visual cues, which are important elements of live performance [46], in ways that digital musicians cannot [6, 47].

While robotic music systems are often viewed as novel instruments [48], musicians emphasize that human control—either through programming or real-time interaction—is essential for classification as a new instrument [6]. Systems like Shimon [41, 9] or GuitarBot, in performance with violinist Mari Kimura [49], demonstrate real-time interaction through their ability to synchronize and adapt to human musicians.

Robots also possess capabilities that transcend human limitations, such as increased accuracy [50], improved ability to follow instructions while introducing unpredictable variations [6, 14], and the ability to perform feats of speed or scale that would require complex coordination of multiple humans [5, 36]. For example, Haile, a robot that plays a Native American drum, exceeds human speed [5], while other systems utilize three-dimensional space to achieve orchestral effects [36, 51]. However, these systems typically employ instrument actuation methods that mimic human movements, still aiming to produce music that sounds as human-like as possible.

Our work diverges from prior approaches by explicitly leveraging robots’ unique capabilities rather than emulating humans. Through the Beatbots, we explore new artistic possibilities enabled by our algorithmic percussion music and novel actuation method. While this approach may produce unconventional musical output, we believe it will lead to new insights toward distinctly robotic music systems and musical culture of the future [16]. Given this divergence, we prioritized input from human musicians [52] throughout our design process to ensure our system maintains artistic integrity while pushing the boundaries of traditional musicianship.

III Design Method

This section outlines the design process of the Beatbots, a four-robot system that leverages robot strengths by playing algorithmic percussion music using whole-body kinetic movement. Robot behaviors are inspired by leader-follower rules, and users can interact with the system by moving the robots and arenas or by controlling robot behavior via a keyboard interface.

III-A Informed Prototyping

When developing the Beatbots, our primary objective was to center the values of its ideal users: musicians [53, 54]. Unlike technology-focused research that typically prioritizes system accuracy and efficiency [55], designing novel musical systems that prioritize user needs requires a deep understanding of what matters most to users [56], which often diverges significantly from designers’ initial goals [55]. As robotic systems become increasingly sophisticated, incorporating user values into the design process becomes even more important [57].

We therefore involved musicians as key consultants in defining the robotic system through informed prototyping [21]. This approach enabled a nuanced integration of human creativity and musician values with machine potential, ensuring the system was grounded in users’ artistic and ethical values [58].

We sought feedback from three musicians during the design process: one classically trained percussionist (M1), one guitarist, composer, and instrument designer (M2), and one pianist formally trained in both classical and jazz piano (M3). All three have some level of experience with percussion playing, are practicing live performers, and compose music. Additionally, M2 earned a doctoral degree in a music domain and M3 earned a Bachelor’s degree from a music conservatory.

We gathered feedback from our musician consultants at various stages of the design process, with detailed results presented in the following sub-sections. Specifically, we sought feedback for robot choice, programmatic music composition, instrument selection, arena design, autonomous robot behaviors, and methods of human control over the robots. We collected this feedback by presenting the current prototype to the musicians in-person as a live performance, allowing them to share their thoughts verbally without any guiding questions. This approach helped identify what aspects were most important to the musicians without external influence. After hearing initial insights, researchers asked follow-up questions to clarify and ensure a thorough understanding, enabling us to effectively and accurately integrate their feedback into the system.

III-B Robot Choice

An early design choice by the research team was to make the robots identical in appearance and sensors, creating a cohesive look and enabling future scalability. Additionally, rather than using conventional stationary actuators, like a robot arm, our musicians advocated for leveraging the robots’ capacity for dynamic, whole-body movement and using kinetic motion to strike instruments, a distinctly non-anthropomorphic approach. They specifically favored Sphero (https://sphero.com) robots over more traditional vehicle-like designs, citing their novel, futuristic aesthetic. Using rolling balls to hit drums along the arena’s sides was also likely to be more visually engaging, especially for non-technical viewers. We chose the Sphero BOLT (https://sphero.com/collections/all/products/sphero-bolt) for its built-in sensors, including a gyroscope and motor encoder, and its programmable display lights, which added visual interest and interactivity. We hypothesized that these features would enhance the experience for non-technical audiences.

III-C Percussion Music

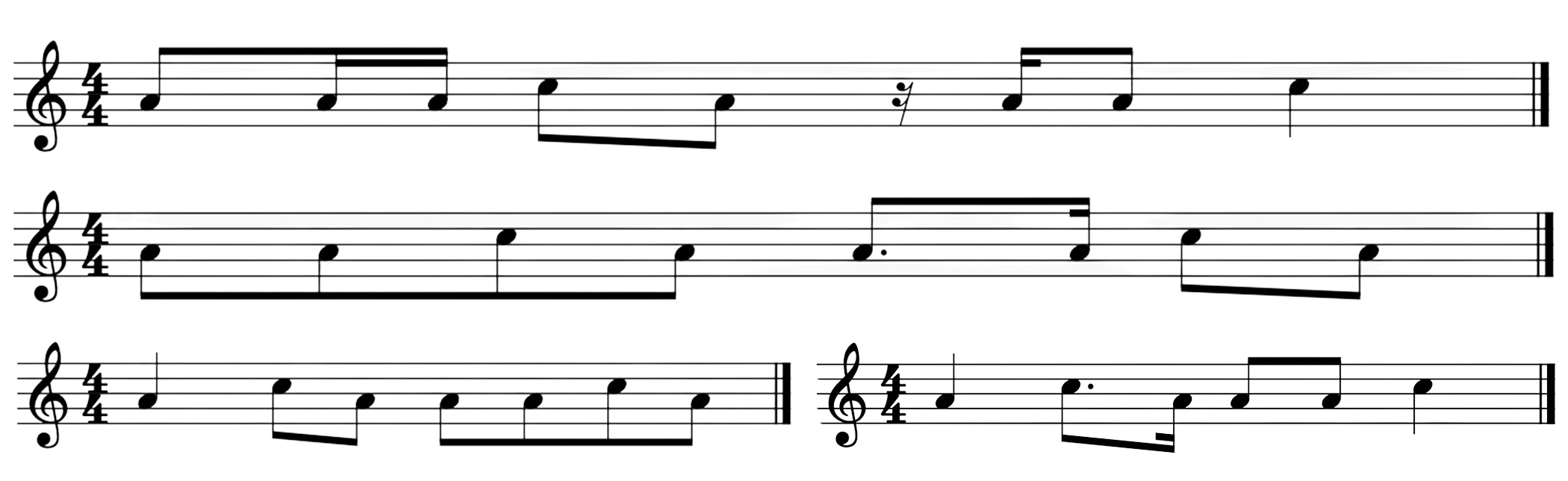

Based on feedback from our musician consultants, we prioritized the robots’ capability to play authentic percussion rhythms. Similar to Cage’s Composed Improvisations [33] and other works illustrating the divisible nature of music [59], the system’s music was built from pre-written, rule-based percussive patterns selected randomly. These patterns were informed by interviews with our musician consultants, particularly M1, through which we gathered twenty-five percussive rhythms which the Beatbots could combine to create a rich and complex musical performance (example rhythms in Fig. 1).

With the help of our musician consultants, particularly M1, we categorized these patterns. Uneven groupings, such as long, short, short, shortest, long, shortest, short, were categorized as “uneven patterns”, while even groupings like long, long were “even patterns”. We also differentiated between quicker and slower patterns—“slower” featured mostly longer note values, while “quicker” contained more shorter note values.
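The two-way categorization described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that a pattern is stored as a list of note durations in beats, that "long", "short", and "shortest" correspond to 1.0, 0.5, and 0.25 beats, and that a one-beat cutoff separates "longer" from "shorter" notes. The function name `categorize` and the constant `LONG_NOTE_BEATS` are hypothetical and do not come from the Beatbots implementation.

```python
from typing import List, Tuple

# Hypothetical cutoff: durations of at least one beat count as "longer" notes.
LONG_NOTE_BEATS = 1.0


def categorize(pattern: List[float]) -> Tuple[str, str]:
    """Return (grouping, speed) labels for a pattern of note durations in beats."""
    if not pattern:
        raise ValueError("pattern must contain at least one note")
    # "Even" patterns repeat a single note value; anything else is "uneven".
    grouping = "even" if len(set(pattern)) == 1 else "uneven"
    # "Slower" patterns are mostly longer notes; ties are treated as "slower"
    # here, which is an arbitrary choice made for this sketch.
    long_notes = sum(1 for d in pattern if d >= LONG_NOTE_BEATS)
    short_notes = len(pattern) - long_notes
    speed = "slower" if long_notes >= short_notes else "quicker"
    return grouping, speed


# long, long
even_example = categorize([2.0, 2.0])
# long, short, short, shortest, long, shortest, short (durations assumed)
uneven_example = categorize([1.0, 0.5, 0.5, 0.25, 1.0, 0.25, 0.5])
```

Under these assumed durations, the "long, long" grouping is labeled even and slower, while the seven-note grouping from the text is labeled uneven and quicker.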

Another key concept gleaned from our musicians was the distinction between single and double strokes. A single stroke occurs when one downward motion creates a single sound, while a double stroke uses the momentum of the first hit to bounce and produce a second sound. A single stroke is heard as a longer sound, whereas a double stroke is heard more as two shorter sounds. The percussion patterns our musicians suggested included both single and double strokes, requiring additional considerations in robot behavior.

Instrument choice was another area where we sought input from musicians, piloting several instruments with them before making a final decision. We tested pitched (chimes, kalimba) and unpitched instruments (frame drums, shakers, cymbals). While pitched instruments added melody, their shape often caused the robots to veer off-course after impact, and incorrect pitches were far more sonically disruptive than mistimed unpitched sounds. Ultimately, we chose unpitched instruments, finding frame drums were the best-sounding option and could also easily function as walls for the four-sided robot arena.

We pilot-tested several drum sizes and found the ten-inch drums produced the best sound when struck by the robots. Frame drums also offered the advantage of producing two distinct tones: a deeper “bass” tone when hit in the center and a sharper “slap” tone when struck near the edge. By positioning the drums accordingly, both sounds could be played despite the robots’ small size. The original plan was to make all four arena walls out of ten-inch frame drums. However, the robots often accidentally hit drums that were not their direct target while moving toward their “goal” drum, making it difficult for audiences to distinguish between what we dubbed “purposeful” and “accidental” sounds.

Our musicians noted that percussionists in traditional quartets often play multiple instruments, inspiring our solution: we introduced ten-inch tambourines to the arena, positioning identical instruments across from each other. The tambourine’s metal jingles added a distinct sound, differentiating “accidental” hits on tambourines from “purposeful” ones on frame drums. This also enabled robots to switch instruments mid-performance, adding a dynamic element to the composition.

III-D “Arena” Design

The robots’ enclosure, or “arena,” played a key role in shaping the rhythms the robots could produce with kinematic motion and whether single or double strokes could be played.

From the start, instruments were positioned along arena walls to optimize acoustics, as drums placed perpendicular to the robots produced the loudest sound. Each robot started the performance in the center of its own arena, with instrument placement and arena size refined through pilot testing. After testing sizes up to two feet by two feet, we settled on ten-inch by ten-inch arenas, with each wall made almost entirely of a frame drum or tambourine, supported by small wooden bars.

This compact design allowed for flexible arrangements, with easily movable arenas to accommodate different spaces. For instance, arenas could be arranged as shown in Fig. 2 for a clear view of all robots at once or positioned to surround the audience for an immersive experience. Our musicians suggested using distinct colors to help audiences identify individual “performers”. We therefore assigned unique colors to each robot-arena pair, helping viewers recognize each distinct unit in the quartet despite a uniform physical appearance.

III-E Robot Behaviors

We implemented two distinct roles within our quartet, designating one robot as “leader” and the others as “followers.” This structure is common in group music settings, such as with lead singers, conductors, and concertmasters [23], and it guided the selection of rhythmic patterns. At every step of the performance, each robot had a list of potential patterns from which it randomly selected its next pattern. This list was based on the robot’s previous instruction and, if it was a follower, the leader’s current instruction. This approach mirrors contemporary techniques, as in Cage’s Composed Improvisations [33], where the score is actually a set of instructions. These leader-follower rules were developed in collaboration with our musician consultants. Fig. 3 contains a diagram depicting how the robots chose their next pattern.
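As a rough illustration of this selection step, the sketch below builds a candidate list from a robot's previous pattern and, for followers, narrows it by the leader's current pattern before drawing at random. The pattern names and both rule tables (`NEXT_AFTER`, `FOLLOWS_LEADER`) are placeholders invented for this example; the actual rules were developed with the musician consultants and are summarized in Fig. 3. The fallback when no pattern satisfies both tables is also an assumption.

```python
import random
from typing import Dict, List, Optional

# Hypothetical rule tables: which patterns may follow a robot's previous pattern,
# and which patterns are compatible with what the leader is currently playing.
NEXT_AFTER: Dict[str, List[str]] = {
    "even_slow": ["even_slow", "even_quick", "uneven_slow"],
    "even_quick": ["even_slow", "uneven_quick"],
    "uneven_slow": ["even_slow", "uneven_quick"],
    "uneven_quick": ["even_quick", "uneven_slow"],
}
FOLLOWS_LEADER: Dict[str, List[str]] = {
    "even_slow": ["even_slow", "even_quick"],
    "even_quick": ["even_quick", "uneven_quick"],
    "uneven_slow": ["uneven_slow", "even_slow"],
    "uneven_quick": ["uneven_quick", "even_quick"],
}


def candidates(previous: str, leader_current: Optional[str] = None) -> List[str]:
    """List the patterns a robot may play next.

    A leader passes leader_current=None and is constrained only by its own
    previous pattern; a follower is additionally constrained by the leader.
    """
    options = NEXT_AFTER[previous]
    if leader_current is None:
        return list(options)
    allowed = FOLLOWS_LEADER[leader_current]
    filtered = [p for p in options if p in allowed]
    # Assumed fallback: if the two rule sets do not overlap, keep the robot's own options.
    return filtered or list(options)


def choose_next(previous: str, leader_current: Optional[str] = None,
                rng: Optional[random.Random] = None) -> str:
    """Randomly pick the next pattern from the candidate list."""
    rng = rng or random.Random()
    return rng.choice(candidates(previous, leader_current))
```

For example, `choose_next("even_slow")` models the leader's choice, while `choose_next("even_slow", leader_current="even_quick")` models a follower reacting to the leader.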

To ensure the robots played basic rhythms as accurately as possible, we implemented a global “metronome-clock” synchronized to real-world time. This clock maintained a tempo of 60 beats per minute (BPM), guiding the robots when playing their selected pattern. We found that, despite the slow 60 BPM tempo, the robots struggled to play rhythms accurately due to their imprecise movement control. Even with periodic re-synchronization using the global “metronome-clock,” the robots quickly fell out of sync. For example, even small deviations in timing when aiming to spend 0.25 seconds on a note became quite noticeable within one or two patterns.
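A short worked calculation shows why small timing deviations become audible so quickly. At 60 BPM one beat lasts one second, so a four-second pattern made entirely of 0.25-second notes contains 16 notes. The 20 ms per-note error used below is a hypothetical figure chosen for illustration, not a measurement from the Beatbots, and the sketch assumes the error always has the same sign and that no re-synchronization occurs within the pattern.

```python
def beat_seconds(bpm: float) -> float:
    """Length of one beat in seconds at the given tempo."""
    return 60.0 / bpm


def notes_in_pattern(pattern_seconds: float, note_seconds: float) -> int:
    """Number of equal-length notes that fit in one pattern."""
    return round(pattern_seconds / note_seconds)


def accumulated_drift(per_note_error_s: float, notes: int) -> float:
    """Total timing offset if every note runs long (or short) by the same amount."""
    return per_note_error_s * notes


PATTERN_SECONDS = 4 * beat_seconds(60)  # four beats at 60 BPM, i.e. four seconds
NOTE_SECONDS = 0.25                     # note length mentioned in the text
ASSUMED_ERROR_S = 0.02                  # hypothetical per-note timing error

notes = notes_in_pattern(PATTERN_SECONDS, NOTE_SECONDS)
drift = accumulated_drift(ASSUMED_ERROR_S, notes)
```

Under these assumptions the robot ends a single pattern roughly a third of a second away from the clock, which is longer than the 0.25-second note it was trying to play.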

While these instabilities initially sounded jarring, they created interesting patterns when played in a group—reminiscent of the phasing seen in Reich’s Clapping Music [29, 18] and the sound layering of Glass’ String Quartet 6 [19]. When presented with the robot music, our musicians encouraged us to embrace these sonic complexities as the timing inconsistencies introduced a unique desynchronous element—one that would be difficult for humans to consistently replicate, which was our design goal. Unlike humans, robots could add or subtract truly random intervals between notes, even doing so while playing in a group, without being influenced by other performers.

Through experimentation, we also found that the robots’ spherical shape helped produce both single and double strokes. A single stroke occurred when the robot maintained forward motion after hitting the drum, whereas for a double stroke, cutting motor power immediately after impact caused the robot to bounce back then forward again for a second, softer hit. While unintended double strokes sometimes occurred (for instance, if power was cut slightly too early), this unexpected behavior further added sonic complexity, which our musician consultants saw as a positive contribution to the composition.

Finally, to address situations where the robots failed to detect collisions after hitting the walls, we implemented a fail-safe. If no collision was detected after four seconds (the length of one pattern), the robot would automatically turn around and begin its next pattern. This sometimes led to unintended silences, but our musicians felt that having moments of rest added a natural musical element.
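One way to express such a fail-safe is a polling loop with a deadline, sketched below. The function `wait_for_collision` and its parameters are hypothetical; in particular, `collision_detected` stands in for whatever sensor check the robot provides, and no Sphero API call is shown or implied. The clock and sleep functions are injectable so the logic can be exercised without real delays.

```python
import time
from typing import Callable

PATTERN_SECONDS = 4.0  # length of one pattern, as stated in the text


def wait_for_collision(collision_detected: Callable[[], bool],
                       timeout_s: float = PATTERN_SECONDS,
                       clock: Callable[[], float] = time.monotonic,
                       sleep: Callable[[float], None] = time.sleep,
                       poll_s: float = 0.01) -> bool:
    """Poll for a collision until one is seen or the timeout elapses.

    Returns True if a collision was detected in time and False if the
    fail-safe should trigger (turn around and begin the next pattern).
    """
    deadline = clock() + timeout_s
    while clock() < deadline:
        if collision_detected():
            return True
        sleep(poll_s)
    return False
```

A caller would treat a `False` result as the cue to turn the robot around and start its next pattern, which is also where the occasional unintended silence comes from.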

III-F User Interface

Our musician consultants emphasized the need for incorporating human interaction into the robotic system’s behavior. This would not only enhance audience engagement but also help musicians feel actively involved in making the music. They suggested that interacting with the system felt like programming a synthesizer or creating a DJ set—while robots physically made the music, musicians saw the system as a new method of music creation under their artistic control.

The initial design allowed users to press buttons at different points in the performance to control the robots’ movements, preserving the generative composition while offering some user control. Pilot testing revealed that using a digital piano keyboard for this interaction made participants feel more musically engaged, transforming the interaction from a distant performance to a more personal musical experience.

Implemented human interactions fell into two categories: changing the robots’ lights and their movements. By default, the robots’ display lights shifted to a new hue of their assigned color every second. However, when different piano keys were pressed, the robots synchronously changed their lights to various new colors, allowing users to control an important visual element of the performance. Pilot testing showed visual control was particularly influential for non-musician users.

Pressing different piano keys also allowed users to control various aspects of robot movement. Users could make the robots spin in place, move in circles around their arena, switch primary instruments—changing between frame drum and tambourine by turning 90 degrees—or return to the center of the arena. Users could also stop and restart the robots’ default behavior at any time. A sequence of stopping, re-centering, and restarting helped manage desynchronization and any other minor differences in the global “metronome-clock,” allowing users to adjust the performance’s musical complexity.
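The key-to-behavior mapping can be pictured as a simple lookup table. In the sketch below, the action names follow the movement controls listed above, but the specific key assignments (given as MIDI note numbers) and all identifiers are assumptions made for illustration; the text does not say which keys triggered which behaviors.

```python
from enum import Enum
from typing import Dict, List, Optional


class Action(Enum):
    SPIN = "spin in place"
    CIRCLE = "move in circles around the arena"
    SWITCH_INSTRUMENT = "turn 90 degrees to the other instrument"
    RECENTER = "return to the center of the arena"
    STOP = "stop default behavior"
    RESTART = "restart default behavior"


# Hypothetical assignment of piano keys (MIDI note numbers) to actions.
KEY_TO_ACTION: Dict[int, Action] = {
    60: Action.SPIN,
    62: Action.CIRCLE,
    64: Action.SWITCH_INSTRUMENT,
    65: Action.RECENTER,
    67: Action.STOP,
    69: Action.RESTART,
}

# The stop, re-center, restart sequence the text describes for managing desynchronization.
RESYNC_SEQUENCE: List[Action] = [Action.STOP, Action.RECENTER, Action.RESTART]


def action_for_key(note: int) -> Optional[Action]:
    """Return the action bound to a key, or None if the key is unassigned."""
    return KEY_TO_ACTION.get(note)
```

Keeping the bindings in one table makes it easy to add controls, such as the light-color changes, without touching the dispatch logic.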

IV User Study

To investigate different populations’ perceptions of the robotic quartet, we conducted a user study inviting university students and community members to view and interact with the robots at a local arts space, as seen in Fig. 4.

Study activities and surveys were reviewed by a university Institutional Review Board.

IV-A Participants

community members attended with completing our survey. The performance was advertised via university mailing lists and bulletin boards. All survey respondents were students of the university where the performance was located. Each participant was asked to rate their familiarity with percussion music and robots on a five-point Likert scale, with average familiarity with percussion music being () and familiarity with robots being ().

IV-B Study Design

Participants were invited to first watch the Beatbots perform live, with the option to control parts of the performance afterwards. Almost all participants () chose to try controlling parts of the robot musical performance.

IV-B1 Robotic Performance

The Beatbots played a generative percussion composition which participants would first watch for one to three minutes without interacting with the robots.

IV-B2 Non-Performance Interaction

Participants were invited to pick up the robots, play with the robots outside the arenas, and move the robot arenas around the room as they saw fit.

IV-B3 Performance Interaction

Participants were then invited to control the Beatbots in another performance. They were encouraged to try all available methods of controlling the performance, as described in section III-F: changing the robots’ colors, switching the robots’ primary instrument, making the robots spin in place, making the robots travel in circles around the arena, stopping then restarting the robots’ movements, and re-centering the robots. These contributions were all mediated by pressing keys on a small piano keyboard.

IV-B4 User Survey

At the end of the experience, participants were asked to fill out an optional user survey which was disseminated anonymously through a nearby QR code. The survey asked them to rate their familiarity with percussion music and robots each on a five-point Likert scale. Additionally, they were asked three free-response questions: “Did you enjoy the experience? What made it positive or negative?”, “Do you believe a human quartet could play what you just heard?”, and “Did you enjoy the human interaction component? How did it add or detract from the experience?”

IV-C Data Collection & Analysis

Researchers at the performance took detailed observational notes, and survey responses were collected at the scene.

| Themes | Sub-themes | Definitions |
| --- | --- | --- |
| Playful Robot Engagement | Robot Control Enjoyment | Enjoyed controlling the robots |
| | Robot Interaction Enjoyment | Enjoyed interacting with robots in non-control ways |
| | Robot Movement Enjoyment | Enjoyed how the robots moved around the arena |
| Robot Aesthetic Appeal | Visual Engagement | Engaged by the robot visuals (e.g., lights or colors) |
| | Found Robots “Cute” & “Funny” | Appreciated robots’ friendly and humorous behavior |
| | Calming Experience | Described the experience as calming or peaceful |
| Musical Appreciation | Percussion Music Enjoyment | Enjoyed how the percussion music sounded |
| | Appreciation of Complex Rhythms | Specifically appreciated the complexity of rhythms |

In our survey analysis, we conducted inductive analysis [60] of participants’ free responses to generate a list of relevant themes. Two researchers independently reviewed the responses, then inductively identified and finalized the themes. Two independent researchers then coded the survey responses with these themes, and we calculated Cohen’s Kappa to measure inter-rater reliability, achieving a “substantial” agreement score of (“substantial” agreement referring to ) [61].
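For readers unfamiliar with the statistic, Cohen’s Kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance, p_e, as kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for two coders’ labels over the same set of responses. It is a generic illustration with made-up labels, not the analysis script used in this study, and the function name `cohens_kappa` is our own.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    p_e = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Made-up example: 1 = theme present in a response, 0 = theme absent.
coder_1 = [1, 1, 1, 0]
coder_2 = [1, 1, 0, 0]
kappa = cohens_kappa(coder_1, coder_2)
```

In this toy example the coders agree on three of four responses (p_o = 0.75) while chance agreement is p_e = 0.5, giving a kappa of 0.5.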

V Results

Analysis of participant responses revealed several key findings about user experience. We first present themes identified through analysis of survey free responses (see Table I), followed by patterns observed across different levels of expertise.

V-A Playful Robot Engagement

Both the performance and interactive aspects of the Beatbots resulted in an engaging experience characterized by what several participants described as “playfulness” in robot interaction, control, and autonomous movement. P23 remarked, “I found it to be childlike… I enjoyed the aspect of play.”

The performance evoked strong positive responses, many of which cited robots’ rolling movement as a reason for enjoyment. P6 wrote “I enjoyed seeing them roll around,” while P16 appreciated “that [the robots] roll around in little tambourine cages.” The coordinated movement between robots especially impressed viewers, with P11 noting they “liked that each of the robots worked together to create a cohesive piece.”

Most participants did not immediately approach the interactive opportunities. However, after researchers mentioned the possibility for interaction, nearly all respondents tested the human-robot interactions. Of those, the vast majority reported positive experiences, with only two expressing reservations, stating that control made the experience feel “less magical” (P24) or “more predictable” (P23).

The piano keyboard interface for controlling the robots proved particularly engaging. P2 wrote “I loved the interactions I had with robot by using the keyboard,” while P3 remarked that “it was interesting seeing how the piano was able to control the pattern.” During the interactions, several participants also remarked that using the keyboard made them feel like fellow musicians within the ensemble. P21 expressed the playful nature of controlling the ensemble, writing “It made me feel like the director of my own symphony!”

Physical, non-controlling interactions also emerged as a key element of engagement. Many participants valued being able to pick up and move the robots, which seemed to help them connect with the robots as entities rather than just machines. P13 captured this sentiment, saying it was “fun to interact with the little robots, really brought joy.” P1 echoed this: “It was fun to play around with how the robots acted.”

V-B Robot Aesthetic Appeal

The visual design elements of the Beatbots helped craft a distinct aesthetic experience that participants found appealing. The combination of lights, movement, and physical design contributed to both the robots’ perceived personality and the overall atmosphere of the performance.

The robots’ lights were particularly compelling, featured in several responses. P1 noted how the colored lights contributed to giving the robots personality, observing that they “seemed to have some personality when they spinned around and changed color.” P21 also expressed that they “enjoyed the colors and rhythms. It gives rhythm and blues a whole new meaning!”

Participants also responded well to the overall charming aesthetic character of the spherical robots. Multiple participants used endearing descriptors, with P4 calling them “so cute” and P8 finding them “so fun.” This aesthetic encouraged engagement, evidenced by several participant comments, such as P5 noting they “like the funny noises and robots.”

The combined aesthetic effects of the robot system created a calming atmosphere. P14 characterized the experience as “very zen,” while P25 appreciated it as “an engaging sensory experience.” The careful integration of visuals and other aspects of physical form with the generated music seemed to transform what could have been a purely rhythmic performance into a comprehensive multi-sensory performance.

V-C Musical Appreciation

Participants’ engagement with the musical aspects centered around two distinct elements: enjoyment of the percussion music itself and the complexity of the generated rhythms.

Those who praised the music output often described it as unconventional but still appealing. P14 observed that “though the sounds sound a bit off tempo, it sounds very calming,” suggesting an appreciation for the atypical rhythms. P27 expressed surprise at the musicality of the system, noting that “it was cool that it actually produced a tune.” These responses indicate that participants found value in the robots’ musical capabilities, even when—and sometimes because—the output deviated from traditional percussion performance.

The rhythmic complexity also surfaced as an engaging element separate from music enjoyment. When asked whether humans could replicate the performance, participants’ responses revealed a fascination with its technical and rhythmic complexity. P26 noted this, suggesting “All rhythms are unique and there’s probably some 127-th tuplet that would be almost impossible to recreate,” referring to an exceptionally precise musical note value. P22 also pointed to the irregular timing, stating that “the rhythms seem a little too erratic/unstable/stop-and-go for a normal human.” Yet rather than viewing these characteristics negatively, participants appreciated them as unique features of robotic musicianship, comparing the music to “rave” or “experimental” music that moves in unexpected but still engaging ways.

V-D Patterns Across Expertise

A particularly striking finding was the contrast between expert and non-expert responses to the robotic performance. While nearly all survey respondents () reported a favorable opinion (the single respondent reporting a neutral opinion had a previous aversion to percussion music), their reasoning varied based on expertise level.

The robot music sharply divided participants along the lines of musical expertise—experts tended to not enjoy the music while non-experts consistently expressed positive reactions. Among our participants, non-experts ( participants who rated themselves or on a five-point Likert scale) provided all positive reviews that specifically mentioned music enjoyment in the free responses, indicating a strong enjoyment of the music despite not having the technical background to understand the rhythmic patterns behind the music.

In contrast, percussion experts ( participants who self-rated as a or , and whom researchers identified as music students) followed a different trajectory. Researchers who spoke with expert musicians before the performance noted that they had far lower expectations for the experience than non-experts did. However, by the end of the performance, all four expert musicians who completed the survey reported positive reactions to the complete audio-visual experience. Although no music experts reported enjoying the music itself, they came to appreciate the performance through its non-musical aspects. Their expertise also led them to recognize technical complexities that non-experts missed. For instance, all experts thought the performance would be impossible for humans to replicate exactly, while all five participants who strongly believed it could be reproduced were non-experts.

Robotics expertise also influenced engagement with the system, albeit differently compared to musical expertise. Among our participants, we had 4 robotics experts (rated or on the Likert scale) and 20 non-experts (rated or on the Likert scale) based on self-reported expertise.

Robotics experts focused more on control and coordination: all of them highlighted their experience controlling the robots in the survey, usually expressing a desire for more granular control. This reflects a deeper technical understanding of the robots’ movement capabilities. Additionally, researchers at the performance who spoke with expert and non-expert roboticists noted that experts had higher expectations for what the robots would be able to do than non-experts, who were initially more skeptical.

Despite this initial skepticism, non-expert roboticists ultimately showed more enthusiasm for the robots’ movements and physical presence in their responses. Notably, all participants who explicitly enjoyed the robot movement were non-experts, indicating a strong mindset shift regarding robot movements. They also frequently compared the robotic sounds to familiar musical genres, potentially indicating a focus on the overall experience rather than its technical aspects. This focus may also explain why the keyboard interface proved particularly effective at engaging non-experts: by letting them directly control and interact with the robots, it gave them a hands-on way to connect with the performance and appreciate the robots’ capabilities without needing to understand the underlying technical complexities.

VI Discussion

The complex, unconventional rhythms generated by the Beatbots demonstrate the potential for robotic music systems to push artistic boundaries while centering musician values. The positive response to the Beatbots’ unique robotic rhythms suggests that there is a receptive audience for experimental robotic music. However, the differences in how surveyed experts and non-experts perceived the performance show that, to fully realize the potential of robotic music as an emerging art form, designers must strike a balance between pushing creative boundaries and ensuring that their work resonates with both musicians and audience members of all demographics.

VI-A The Role of Expertise

Regarding R2, the differences in how music and robotics experts versus non-experts experienced the robotic performance suggest that expertise significantly shapes user perceptions.

These differences highlight the complex role of expertise in shaping audience perceptions of robot music. Initial skepticism of musical experts, stemming from attunement to precision and conventional rhythms, contrasts with the openness of non-experts to unconventional music. Similarly, the expectation of robotics experts regarding technical aspects, like granularity of control, differed from non-experts’ initial skepticism and eventual captivation with the “magical” robot movements.

Our findings reveal how different aspects of the performance shifted different expert mindsets: hands-on interaction transformed robotics non-experts from skeptical to enthusiastic, while multi-sensory elements won over music experts despite their continued skepticism about the musical output. This demonstrates that expertise does not determine audience responses in a uniform way, with various performance elements creating unique pathways to appreciation. Ultimately, the fact that both experts and non-experts found value in the Beatbots performance, even if through different routes, highlights the inclusive potential of robotic musicianship. This suggests that by centering the values of both experts and non-experts, designers can create experiences that push artistic boundaries while still fostering broad engagement and appreciation.

VI-B Musician-Informed Robot Affordances

During our design process, our musicians provided valuable insight into what types of capabilities are most important to musicians for inclusion in future robot music systems. To address R1, we provide a list of the musical robot affordances in our system inspired by our musicians’ feedback.

VI-B1 Authentic rhythmic patterns

The actual rhythmic or melodic content used by robotic music systems should be derived from real musical practices, even if methods of playing the music are dissimilar to human methods. This was the most important affordance to our musician consultants.

VI-B2 Music-informed decision-making architecture

The system’s decision-making process should reflect how musicians make choices in musical settings—and if there are multiple robots in the system, they should coordinate in ways inspired by music ensembles, such as leader-follower [23].

VI-B3 Movement-music integration

Robot movements should be grounded in musical principles, even when exploring novel capabilities. Our musicians valued how robots’ unique movements could parallel traditional musical techniques—like stopping motions mirroring musical rests and the robots’ rolling movements enabling single and double strokes.

VI-B4 Visual identity system

VI-B5 Musical control interfaces

Systems should provide meaningful human interaction and control that encourage musical participation. Prior work indicates that interaction is crucial to robot music systems being considered as true instruments [6], a sentiment shared by our musician consultants.
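As a purely illustrative aid, the following minimal Python sketch shows one way the rhythmic-pattern and leader-follower affordances (VI-B1 and VI-B2) could be combined with randomness. It is not the Beatbots implementation: the rhythm bank, the one-leader and three-follower split, and the names `RHYTHM_BANK`, `rotate`, and `perform_bar` are all hypothetical assumptions made for this example.

```python
import random

# Hypothetical rhythm bank (an assumption, not the rhythms used by the Beatbots).
# Each pattern is one bar: 1 = strike, 0 = rest, one entry per subdivision.
RHYTHM_BANK = [
    [1, 0, 0, 0, 1, 0, 0, 0],
    [1, 0, 1, 0, 1, 0, 1, 0],
    [1, 1, 0, 1, 0, 1, 1, 0],
]


def rotate(pattern, shift):
    """Return a copy of the pattern delayed by `shift` steps, wrapping around."""
    shift %= len(pattern)
    if shift == 0:
        return list(pattern)
    return pattern[-shift:] + pattern[:-shift]


def perform_bar(rng, n_followers=3):
    """Generate one bar: the leader picks a pattern from the bank,
    and each follower echoes it with its own random delay."""
    leader = list(rng.choice(RHYTHM_BANK))
    followers = [
        rotate(leader, rng.randrange(len(leader))) for _ in range(n_followers)
    ]
    return leader, followers


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so that repeated runs give the same bar
    leader, followers = perform_bar(rng)
    print("leader:  ", leader)
    for i, follower in enumerate(followers, start=1):
        print(f"follower {i}:", follower)
```

In this sketch the followers never invent material. Each follower plays a randomly shifted copy of the leader's pattern, so every strike traces back to the rhythm bank, while the random offsets produce interlocking combinations that differ from bar to bar.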

VI-C Design Principles for Robot Music Systems

Addressing R3, we offer five design principles for future robotic percussion systems, based on what participants valued across all elements (non-interactive and interactive) of the robotic performance.

VI-C1 Create a multi-sensory experience

Robot artists have an ability to integrate multiple senses in art-making [50], and it is important to leverage that strength. Our work demonstrates that participants appreciated visual elements, such as movement and lights. Incorporating multi-sensory stimuli can create a more engaging and enjoyable experience.

VI-C2 Incorporate elements of playfulness

Our findings show that participants responded particularly positively to playful elements, describing the experience as “cute” and “funny”. As robots are often stereotyped as cold and mechanical, playful behaviors can create a more engaging, joyful atmosphere.

VI-C3 Design the system to encourage user interaction

Interactive elements enhance the musical experience by helping participants feel like active contributors and engaging non-experts in music. However, participants initially hesitated due to unclear interaction opportunities. Robot music systems should naturally invite interaction through clear, intuitive cues.

VI-C4 Emphasize unique strengths of robot performers

Our robots used whole-body movement and randomness [5, 6] to perform music in a uniquely robotic way. Unlike humans, who rely on sensory cues for synchronization [62], robots can more easily perform complex rhythms. Participants appreciated these diverse capabilities, noting how robots generated rhythmic complexities and coordinated their movements.

VI-C5 Consider the target audience background

We observed that expertise significantly shaped how participants perceived the robotic quartet, with different elements engaging experts and non-experts in unique ways. System design should thoughtfully incorporate elements that can engage diverse audiences through different routes to appreciation.

VI-D Limitations & Future Work

Because we recruited via open call, most participants were likely already interested in robotic music. Additionally, most participants were laypeople in both music and robotics, and even “experts” were university students studying those fields. Future research should aim to recruit participants with more diverse opinions and more expertise in these two fields.

Additionally, our one-day study could not account for the potential novelty effect, and some survey questions were phrased in a way that may have elicited positively biased responses. Future work should investigate the longer-term impact of robotic music and use more neutral question framing.

To improve the system, future research should focus on experimenting with different robots or different control methods for more granular control, as suggested by participants. Future investigations could also modify the arena and incorporate a wider range of instruments, including unpitched (e.g., shakers, cymbals) and pitched percussion instruments (e.g., xylophones) to introduce new melodic and rhythmic elements.

Lastly, we propose continued discussion and collaboration with musicians for iterative refinement. This extended partnership could lead to more sophisticated performances that better align with musician values and expectations.

VII Conclusion

In this paper, we present the Beatbots, a multi-robot percussion quartet co-designed with three musicians. The system uses true percussive rhythms gathered from percussionists, leader-follower dynamics, and randomness to generate unique robotic musical pieces. We held a public performance and surveyed participants (). Results indicated that participants were receptive to the robot performance, citing common themes of playful engagement, the system’s aesthetic appeal, and appreciation of the music and complex rhythms. Responses varied by expertise, with expert musicians and non-expert roboticists having the greatest mindset shifts. Finally, we discussed affordances inspired by feedback from our musicians for future robot music systems and proposed five design principles for human-interactive robotic music systems.

Acknowledgment

The authors gratefully thank Radhika Nagpal for her guidance and support throughout this project.

References

- [1] J. D. Hoffmann, Z. Ivcevic, and N. Maliakkal, “Emotions, creativity, and the arts: Evaluating a course for children,” Empirical Studies of the Arts, vol. 39, no. 2, pp. 123–148, 2021.

- [2] P. Gemeinboeck and R. Saunders, “Creative machine performance: Computational creativity and robotic art.” in ICCC. Citeseer, 2013, pp. 215–219.

- [3] M. Jeon, “Robotic arts: Current practices, potentials, and implications,” Multimodal Technologies and Interaction, vol. 1, no. 2, p. 5, 2017.

- [4] O. Thörn, P. Knudsen, and A. Saffiotti, “Human-robot artistic co-creation: a study in improvised robot dance,” in 2020 29th IEEE International conference on robot and human interactive communication (RO-MAN). IEEE, 2020, pp. 845–850.

- [5] G. Weinberg and S. Driscoll, “Toward robotic musicianship,” Computer Music Journal, pp. 28–45, 2006.

- [6] G. Weinberg, “Robotic musicianship-musical interactions between humans and machines,” in Human Robot Interaction. IntechOpen, 2007.

- [7] C. Crick, M. Munz, and B. Scassellati, “Synchronization in social tasks: Robotic drumming,” in ROMAN 2006-The 15th IEEE international symposium on robot and human interactive communication. IEEE, 2006, pp. 97–102.

- [8] R. Savery, L. Zahray, and G. Weinberg, “Shimon sings-robotic musicianship finds its voice,” Handbook of Artificial Intelligence for Music: Foundations, Advanced Approaches, and Developments for Creativity, pp. 823–847, 2021.

- [9] G. Hoffman and G. Weinberg, “Interactive improvisation with a robotic marimba player,” Autonomous Robots, vol. 31, pp. 133–153, 2011.

- [10] A. Kapur, E. Singer, M. S. Benning, G. Tzanetakis, and Trimpin, “Integrating hyperinstruments, musical robots & machine musicianship for north indian classical music,” in Proceedings of the 7th international conference on New interfaces for musical expression, 2007, pp. 238–241.

- [11] Y. Kusuda, “Toyota’s violin-playing robot,” Industrial Robot: An International Journal, vol. 35, no. 6, pp. 504–506, 2008.

- [12] L. Candy, E. Edmonds, and M. Quantrill, “Integrating computers as explorers in art practice,” Explorations in Art and Technology, pp. 225–230, 2002.

- [13] C. Gomez Cubero, M. Pekarik, V. Rizzo, and E. Jochum, “The robot is present: Creative approaches for artistic expression with robots,” Frontiers in Robotics and AI, vol. 8, p. 662249, 2021.

- [14] E. P. Bruun, I. Ting, S. Adriaenssens, and S. Parascho, “Human–robot collaboration: a fabrication framework for the sequential design and construction of unplanned spatial structures,” Digital Creativity, vol. 31, no. 4, pp. 320–336, 2020.

- [15] A. Albin, G. Weinberg, and M. Egerstedt, “Musical abstractions in distributed multi-robot systems,” in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2012, pp. 451–458.

- [16] R. Rowe, Machine musicianship. MIT press, 2004.

- [17] E. Poirson, J.-F. Petiot, and J. Gilbert, “Integration of user perceptions in the design process: application to musical instrument optimization,” 2007.

- [18] J. Colannino, F. Gómez, and G. T. Toussaint, “Analysis of emergent beat-class sets in Steve Reich’s ‘Clapping Music’ and the Yoruba bell timeline,” Perspectives of New Music, vol. 47, no. 1, pp. 111–134, 2009. [Online]. Available: http://www.jstor.org/stable/25652402

- [19] J. Liberatore. Music in all directions: An examination of style in music of Philip Glass. [Online]. Available: https://performingarts.nd.edu/news-announcements/music-in-all-directions-an-examination-of-style-in-music-of-philip-glass/

- [20] S. P. Ice, The percussion quartet: A chronological listing and performance guide of six selected works. The University of Oklahoma, 2012.

- [21] B. Camburn, V. Viswanathan, J. Linsey, D. Anderson, D. Jensen, R. Crawford, K. Otto, and K. Wood, “Design prototyping methods: state of the art in strategies, techniques, and guidelines,” Design Science, vol. 3, p. e13, 2017.

- [22] R. Hartenberger, The Cambridge companion to percussion. Cambridge University Press, 2016.

- [23] W. L. Benzon, “Stages in the evolution of music,” Journal of Social and Evolutionary Systems, vol. 16, no. 3, pp. 273–296, 1993.

- [24] C. Adler, “Mathematics, automation and intuition in signals intelligence for percussion,” System, vol. 23, no. 2, pp. 19–30, 1999.

- [25] P. Langston, “Six techniques for algorithmic music composition,” in Proceedings of the International Computer Music Conference, vol. 60. Citeseer, 1989, p. 59.

- [26] K. McAlpine, E. Miranda, and S. Hoggar, “Making music with algorithms: A case-study system,” Computer Music Journal, vol. 23, no. 2, pp. 19–30, 1999.

- [27] Z. Tucker, “Emergence and complexity in music,” 2017.

- [28] A. Popoff, “Indeterminate music and probability spaces: the case of John Cage’s Number Pieces,” in Mathematics and Computation in Music: Third International Conference, MCM 2011, Paris, France, June 15-17, 2011. Proceedings 3. Springer, 2011, pp. 220–229.

- [29] K. R. Schwarz, “Steve Reich: Music as a gradual process: Part I,” Perspectives of New Music, vol. 19, no. 1/2, pp. 373–392, 1980. [Online]. Available: http://www.jstor.org/stable/832600

- [30] R. Hartenberger, Performance practice in the music of Steve Reich. Cambridge University Press, 2016.

- [31] I. Isac, “Repetitive minimalism in the work of Philip Glass. Composition techniques,” Bulletin of the Transilvania University of Braşov, Series VIII: Performing Arts, vol. 13, no. 2-Suppl, pp. 141–148, 2020.

- [32] S. M. Feisst, G. Solis, and B. Nettl, “John Cage and improvisation: an unresolved relationship,” Musical improvisation: Art, education, and society, vol. 2, no. 5, 2009.

- [33] S. Z. Solomon. John Cage, composed improvisation for snare drum (1987). [Online]. Available: http://szsolomon.com/john-cage-composed-improvisation-snare-drum-1987/

- [34] E. Cone and J. Lambert, “How robots change the world,” 2019.

- [35] J. F. Jimeno, “Fewer babies and more robots: economic growth in a new era of demographic and technological changes,” SERIEs, vol. 10, no. 2, pp. 93–114, 2019.

- [36] M. Bretan and G. Weinberg, “A survey of robotic musicianship,” Communications of the ACM, vol. 59, no. 5, pp. 100–109, 2016.

- [37] A. Kapur, “A history of robotic musical instruments,” in ICMC, vol. 10, no. 1.88, 2005, p. 4599.

- [38] S. Jordà, “Afasia: the ultimate homeric one-man-multimedia-band,” in Proceedings of the 2002 conference on New interfaces for musical expression, 2002, pp. 1–6.

- [39] R. B. Dannenberg, H. B. Brown, and R. Lupish, “Mcblare: a robotic bagpipe player,” Musical Robots and Interactive Multimodal Systems, pp. 165–178, 2011.

- [40] J. Solis, K. Chida, K. Taniguchi, S. M. Hashimoto, K. Suefuji, and A. Takanishi, “The waseda flutist robot wf-4rii in comparison with a professional flutist,” Computer Music Journal, pp. 12–27, 2006.

- [41] G. Hoffman and G. Weinberg, “Shimon: an interactive improvisational robotic marimba player,” in CHI’10 Extended Abstracts on Human Factors in Computing Systems, 2010, pp. 3097–3102.

- [42] G. Weinberg and S. Driscoll, “Robot-human interaction with an anthropomorphic percussionist,” in Proceedings of the SIGCHI conference on Human Factors in computing systems, 2006, pp. 1229–1232.

- [43] J. Uchiyama, T. Hashimoto, H. Ohta, Y. Nishio, J.-Y. Lin, S. Cosentino, and A. Takanishi, “Development of an anthropomorphic saxophonist robot using a human-like holding method,” in 2023 IEEE/SICE International Symposium on System Integration (SII). IEEE, 2023, pp. 1–6.

- [44] A. Zhang, M. Malhotra, and Y. Matsuoka, “Musical piano performance by the act hand,” in 2011 IEEE international conference on robotics and automation. IEEE, 2011, pp. 3536–3541.

- [45] Y. Wu, P. Kuvinichkul, P. Y. Cheung, and Y. Demiris, “Towards anthropomorphic robot thereminist,” in 2010 IEEE International Conference on Robotics and Biomimetics. IEEE, 2010, pp. 235–240.

- [46] M. Schutz, “Seeing music? what musicians need to know about vision,” 2008.

- [47] T. R. P. Pessanha, H. Camporez, J. Manzolli, B. S. Masiero, L. Costalonga, and T. F. Tavares, “Virtual robotic musicianship: Challenges and opportunities,” in Proceedings of the Sound and Music Computing Conference (SMC’21). Sound and Music Computing Network, 2021.

- [48] J. Solis and K. Ng, “Musical robots and interactive multimodal systems: An introduction,” in Musical Robots and Interactive Multimodal Systems. Springer, 2011, pp. 1–12.

- [49] P. Auslander, “Lucille meets guitarbot: Instrumentality, agency, and technology in musical performance,” Theatre Journal, vol. 61, no. 4, pp. 603–616, 2009.

- [50] F. Zhuo, “Human-machine co-creation on artistic paintings,” in 2021 IEEE 1st International Conference on Digital Twins and Parallel Intelligence (DTPI). IEEE, 2021, pp. 316–319.

- [51] A. B. Flø and H. Wilmers, “Doppelgänger: A solenoid-based large scale sound installation.” in NIME, 2015, pp. 61–64.

- [52] C. Vear, A. Hazzard, S. Moroz, and J. Benerradi, “Jess+: Ai and robotics with inclusive music-making,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, 2024, pp. 1–17.

- [53] S. Kujala, “User involvement: a review of the benefits and challenges,” Behaviour & information technology, vol. 22, no. 1, pp. 1–16, 2003.

- [54] T. Zamenopoulos and K. Alexiou, Co-design as collaborative research. Bristol University/AHRC Connected Communities Programme, 2018.

- [55] L. Turchet, “Smart musical instruments: vision, design principles, and future directions,” IEEE Access, vol. 7, pp. 8944–8963, 2018.

- [56] B. Friedman, “Value-sensitive design,” interactions, vol. 3, no. 6, pp. 16–23, 1996.

- [57] A. Sellen, Y. Rogers, R. Harper, and T. Rodden, “Reflecting human values in the digital age,” Communications of the ACM, vol. 52, no. 3, pp. 58–66, 2009.

- [58] J. Halloran, E. Hornecker, M. Stringer, E. Harris, and G. Fitzpatrick, “The value of values: Resourcing co-design of ubiquitous computing,” CoDesign, vol. 5, no. 4, pp. 245–273, 2009.

- [59] S. Benford, P. Tolmie, A. Y. Ahmed, A. Crabtree, and T. Rodden, “Supporting traditional music-making: designing for situated discretion,” in Proceedings of the ACM 2012 conference on Computer Supported Cooperative Work, 2012, pp. 127–136.

- [60] G. Guest, K. MacQueen, and E. Namey, Applied Thematic Analysis. Thousand Oaks, CA: SAGE Publications, Inc., 2012. [Online]. Available: https://methods.sagepub.com/book/applied-thematic-analysis

- [61] M. L. McHugh, “Interrater reliability: the kappa statistic,” Biochemia medica, vol. 22, no. 3, pp. 276–282, 2012.

- [62] C. Drake, M. R. Jones, and C. Baruch, “The development of rhythmic attending in auditory sequences: attunement, referent period, focal attending,” Cognition, vol. 77, no. 3, pp. 251–288, 2000.