The BACCO Simulation Project: Exploiting the full power of large-scale structure for cosmology

Abstract

We present the BACCO project, a simulation framework specially designed to provide highly-accurate predictions for the distribution of mass, galaxies, and gas as a function of cosmological parameters. In this paper, we describe our main suite of gravity-only simulations (Gpc and particles) and present various validation tests. Using a cosmology-rescaling technique, we predict the nonlinear mass power spectrum over the redshift range and over scales for points in an -dimensional cosmological parameter space. For an efficient interpolation of the results, we build an emulator and compare its predictions against several widely-used methods. Over the whole range of scales considered, we expect our predictions to be accurate at the level for parameters in the minimal CDM model and to when extended to dynamical dark energy and massive neutrinos. We make our emulator publicly available under http://www.dipc.org/bacco

keywords:

large-scale structure – numerical methods – cosmological parameters1 Introduction

Over the last two decades, our understanding of the Universe has grown tremendously: the accelerated expansion of the Universe and the existence of dark matter are firmly supported; there are strong constraints on the micro-physical properties of dark matter and neutrinos; and the statistics of the primordial cosmic fluctuations are measured with increasing precision (e.g. Alam et al., 2017; Planck Collaboration et al., 2020; Gilman et al., 2020).

Despite the progress, there are several tensions among current data when interpreted within the context of the CDM model. For instance, the value of the Hubble parameter inferred from local supernovae is significantly larger than that inferred from the analysis of the CMB (e.g. Riess, 2019; Freedman et al., 2019). Another example is that the amplitude of fluctuations, , as determined from low-redshift lensing measurements, appears smaller than that inferred from CMB (e.g. Asgari et al., 2020).

In the current era of precision cosmology, the large amount of available large-scale structure (LSS) data promises accurate measurements of cosmological parameters with small systematic errors. These future measurements could shed light on the aforementioned tensions, confirming or ruling out the CDM paradigm (e.g. Weinberg et al., 2013). Many observational campaigns that seek to obtain this data are under construction or with an imminent start (e.g. Euclid111https://sci.esa.int/web/euclid, DESI222https://www.desi.lbl.gov/, J-PAS333http://j-pas.org).

To fully exploit the upcoming LSS data and obtain these cosmological constraints, extremely accurate theoretical models are required. This is an active area of research with different approaches being adopted in the literature. On the one hand, recent advances in perturbation theory have increased considerably the range of scales that can be treated analytically (e.g. Desjacques et al., 2018). These scales are, however, still in the quasi-linear regime. On the other hand, cosmological numerical simulations have also steadily increased their robustness and accuracy (e.g. Kuhlen et al., 2012). Currently, simulations are the most accurate method to model smaller and non-linear scales (where, in principle, much more additional constraining power resides).

Traditionally, numerical simulations were very expensive computationally and suffered from large cosmic-variance errors, thus they were only used to calibrate fitting functions or combined with perturbation theory to provide predictions for nonlinear structure as a function of cosmology (e.g. Smith et al., 2003; Takahashi et al., 2012). This has changed recently, as the available computational power keeps increasing and algorithms become more efficient, it is now possible carrying out large suites of simulations spanning different cosmological models. The so-called emulators have become more popular, which interpolate simulation results to provide predictions in the nonlinear regime and for biased tracers (e.g. Heitmann et al., 2014; Nishimichi et al., 2019; Liu et al., 2018; DeRose et al., 2019; Giblin et al., 2019; Wibking et al., 2019).

To keep the computational cost affordable, emulators are typically restricted to a small region in parameter space which is sampled with a small number of simulations (). Additionally, each individual simulation is low resolution or simulates a relatively small cosmic volume. This limits their usability in actual data analyses and/or add a significant source of uncertainty.

Here we take advantage of several recent advances to solve these limitations. First, we employ a very efficient -body code to carry out a suite of 6 large-volume high-resolution simulations, which allows to resolve all halos and subhalos with mass , together with their merger histories. Second, we employ initial conditions with suppressed variance, which allows to predict robustly even scales comparable to our simulated volume. Third, these simulations are combined with cosmology-rescaling algorithms, so that predictions can be obtained for any arbitrary set of cosmological parameters. As a result, this approach allows us to make highly accurate predictions for the large-scale phase-space structure of dark matter, galaxies, and baryons.

Our approach has many advantages over others in the literature. Firstly, we can predict the matter distribution over a broad range of scales, with high force accuracy and over a large cosmic volume. This allows for detailed modellings of the distribution of gas and the impact of “baryonic effects” (van Daalen et al., 2011; Schneider et al., 2016; Chisari et al., 2018, 2019; Aricò et al., 2020b; van Daalen et al., 2020). We can also resolve collapsed dark matter structures and their formation history, which enables sophisticated modelling of the galaxies that they are expected to host (e.g. Henriques et al., 2020; Moster et al., 2018; Chaves-Montero et al., 2016). In addition, the cosmological parameter space is large and densely sampled, so that emulator uncertainties are kept under a desired level. Finally, the parameter space includes non-standard CDM parameters, dynamical dark energy and massive neutrinos.

As an initial application of our framework, we have used our suite of specially-designed simulations to predict the nonlinear mass power spectrum over the range for different cosmologies within an 8-dimensional parameter space defined by a volume around Planck’s best-fit values. From these, we construct and present an emulator so that these predictions are easily accessible to other researchers. Overall, we reach a few percent accuracy over the whole range of parameters considered.

Our paper is structured as follows. Section 2 is devoted to presenting our numerical simulations and to the description and validation of numerical methods. In Section 3 we describe the construction of an emulator for the nonlinear power spectrum. We highlight Section 3.1.1 where we discuss our strategy for selecting training cosmologies, Section 3.1.4 which discusses our power spectra measurements, and Section 3.2.3 that compares our predictions to other approaches. We summarise our results and discuss the implications of our work in Section 4.

2 Numerical methods

2.1 The BACCO Simulations

The “BACCO simulations” is a suite of 6 -body simulations that follow the nonlinear growth of dark matter structure within a cubic region of L= on a side. These calculations are performed for three different sets of cosmological parameters with two realizations each. The matter distribution is represented by ( billion) particles.

The three cosmologies adopted by our BACCO simulations are provided in Table 1. We dub these cosmologies narya, nenya, and vilya444narya, vilya, and nenya are the most powerful rings after Sauron’s “One Ring” in “The Lord of the Rings” mythology.. These sets are inconsistent with the latest observational constraints, but they were intentionally chosen to so that they can be efficiently combined with cosmology-rescaling algorithms (Angulo & White, 2010). Specifically, Contreras et al. (2020) showed that these 3 cosmologies are optimal, in terms of accuracy and computational cost, to cover all the cosmologies within a region of around the best values found by a recent analysis of the Planck satellite (Planck Collaboration et al., 2014a).

For narya and vilya we stored snapshots, equally log-spaced in expansion factor, , and adopt a Plummer-equivalent softening length of . As pointed out by Contreras et al. (2020), most of the cosmological parameter volume is covered by rescaling nenya, thus we have increased the force resolution and frequency of its outputs to and snapshots, respectively. All simulations were started at using 2nd-order Lagrangian Perturbation theory, and were evolved up to so that they can be accurately scaled to cosmologies with large amplitude of fluctuations.

For each of the three cosmologies, we carry out two realizations with an initial mode amplitude fixed to the ensemble mean, and opposite Fourier phases (Angulo & Pontzen, 2016; Pontzen et al., 2016). These “Paired-&-Fixed” initial conditions allow for a significant reduction of cosmic variance in the resulting power spectrum in the linear and quasi-linear scales (which are the most affected by cosmic variance), as it has been tested extensively in recent works (Chuang et al., 2019; Villaescusa-Navarro et al., 2018; Klypin et al., 2020).

The BACCO simulations were carried out in the Summer of 2019 at Marenostrum-IV at the Barcelona Supercomputing Center (BSC) in Spain. We ran our -body code in a hybrid distributed/shared memory setup employing cores using MPI tasks. The run time was 7.2 million CPU hours, equivalent of days of wall-clock time. The total storage required for all data products is about TB.

To compare against recent emulator projects, we notice these simulations have times better mass resolution, times better force resolution, and the volume of those used by the AEMULUS project (DeRose et al., 2019); 50 times the volume and 3 times better mass resolution than MassiveNuS (Liu et al., 2018); times better mass resolution, 50% larger volume, and twice the spatial resolution than those in the EUCLID emulator project (Euclid Collaboration et al., 2019); times more particles and slightly better force resolution than the runs of the DarkEmulator (Nishimichi et al., 2019); times better mass resolution and similar force resolution and volume to the simulations in the Mira-Titan Universe project (Heitmann et al., 2014). All this, of course, is mostly a result of our project being able to focus computational resources on only 6 simulations, but that is precisely the advantage of the cosmology rescaling approach.

In Fig. 1 we display the projected matter density field of one of our simulations, nenya at . Each panel shows a region of the simulated volume, the top panel a region wide – the full simulation side-length –, whereas middle and bottom panels zoom in regions and wide, respectively. We display the density field as estimated via a tri-cubic Lagrangian tessellation using only particles. Note that thanks to this interpolation, no particle discreteness is visible and filaments and voids become easily distinguishable.

| [] | [] | [] | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Vilya | 0.9 | 0.27 | 0.06 | 0.92 | 0.65 | 0.0 | -1.0 | 0.0 | 1440 | 6.7 | ||

| Nenya | 0.9 | 0.315 | 0.05 | 1.01 | 0.60 | 0.0 | -1.0 | 0.0 | 1440 | 5 | ||

| Narya | 0.9 | 0.36 | 0.05 | 1.01 | 0.70 | 0.0 | -1.0 | 0.0 | 1440 | 6.7 |

2.2 The simulation code

The -body code we employ is an updated version of L-Gadget3 (Springel, 2005; Angulo et al., 2012). This code was originally developed for the Millennium-XXL project and was successfully executed with more than 10,000 CPUs employing 30Tb of RAM. Compared with previous versions of Gadget, L-Gadget3 features an hybrid OpenMP/P-thread/MPI parallelisation strategy and improved domain decomposition algorithms.

In addition to these improvements, our updated L-Gadget3 version stores all outputs in the HDF5 format, implements the possibility of simulating massive neutrinos via the linear response approach (Ali-Haïmoud & Bird, 2013), and features an improvement in the Tree-PM force split and in Kick-Drift operators. The output data structure is such to allow a straightforward reconstruction of the full phase-space distribution via tri-cubic Lagrangian interpolation (Hahn & Angulo, 2016; Stücker et al., 2020).

The code carries out a large fraction of the required post-processing on the fly. Specifically, this includes the 2LPT initial conditions generator, group finding via Friends-of-Friends algorithms and an improved version of SUBFIND (Springel et al., 2001) that is also able to track tidally-disrupted structures, and a descendant finder and merger tree construction (c.f. §2.2.1).

Thanks to the in-lining of these tools, it is not necessary to store the full particle load at every snapshot, which significantly reduces the I/O and long-term storage requirements. The dark matter distribution is, however, very useful in many applications, thus L-Gadget3 is able to store a subset of particles sampling homogeneously the initial Lagrangian distribution.

2.2.1 SubFind and Group finders

The identification of bound structures is a key aspect of -body simulations, thus L-Gadget3 features a version of the SUBFIND algorithm with several improvements.

The first improvement is the ability to track subhalos on-the-fly across snapshots – defining progenitor and descendants –, computing various additional quantities such as peak halo mass, peak maximum circular velocity, infall subhalo mass, and mass accretion rate, among others. These properties become useful when modelling galaxy formation within gravity-only simulations (e.g. Chaves-Montero et al., 2016; Moster et al., 2018).

The second improvement of our updated version of SUBFIND is the use of the subhalo catalogue in the previous snapshot to better identify structures. In the original algorithm, particles are first sorted according to the local density, then when a saddle point is detected, the most massive group at that point is considered as the primary structure. This, however, can cause inconsistencies across time, as small changes can lead to fluctuations in the structure considered as primary. In our version of SUBFIND instead, when a saddle point is detected, we consider as primary the substructure whose main progenitor is the most massive. This has proven to return more stable merger trees and quantities that are not local in time (e.g. peak maximum circular velocity).

Finally, during their evolution, substructures can disappear from a simulation due to artifacts in the structure finder, finite numerical resolution, or because its mass falls below the resolution limit owing to tidal stripping. The last of our improvements to SUBFIND is the ability to track all subhalos with no recognizable descendant, keeping the position and velocity of their most bound particle. This is a indispensable feature to correctly model the small-scale galaxy clustering in dark matter simulations (Guo & White, 2014).

At every output time, we store FoF groups and SUBFIND subhalos with more than 10 particles. In total, there are approximately billion groups and billion subhalos in our outputs. This means our simulations are able to resolve halos with mass , and subhalos with a number density of at .

2.3 Validation

2.3.1 Time and force resolution

In order to quantify the accuracy of our results, we have carried out a suite of small, box, simulations where we systematically vary the numerical parameters around those adopted in the BACCO simulations. Specifically, we consider the time-integration accuracy, force calculation accuracy, softening length, and mass resolution. We adopt the parameters of the nenya simulation as the reference point for these tests.

In Fig. 2 we compare the nonlinear power spectrum between these test runs and one that adopts the same numerical parameters and mean interparticle separation as our main BACCO simulations. Solid and dashed lines denote the results at and , respectively. In all panels the grey shaded region indicates agreement.

In the top panel we display simulations with different mass resolutions, . The main effect of mass resolution is how well small non-linear structures are resolved. We see no significant effect up to and a mild increase, of about 1% in the power at when improving the mass resolution.

The main source of inaccuracies in the force calculation are the terms neglected in the oct-tree multipole expansion. Specifically, Gadget considers only the monopole contribution down to tree-nodes of mass and size that fulfill for a particle at a distance and acceleration . Thus, the accuracy in the force calculation is controlled by the parameter . In the second panel we vary this parameter and see that the power spectrum is converged at a sub-percent level.

As in previous versions of Gadget, time-steps in L-Gadget3 are computed individually for each particle as , where is the softening and is the magnitude of the acceleration in the previous timestep. The parameter therefore controls how accurately orbits are integrated. In the third panel we vary this parameter increasing/decreasing it by a factor of 4/2 with respect to our fiducial value, . We see that the power spectrum varies almost negligibly with less than a impact up to .

Perhaps the most important degree of freedom in a numerical simulation is the softening length, , a parameter that smooths two-body gravitational interactions (note that in Gadget, forces become Newtonian at a distance ). In the fourth panel we compare three simulations with 50% higher and lower values of to values equal to and of the mean interparticle separation. On small scales we see that the amount of power increases systematically the lower the value of the softening length. In particular, our fiducial configuration underestimates the power by % at .

In summary, our results appear converged to better than 1% up to , and to up to . The main numerical parameter preventing better convergence is the softening length. To mitigate its impact, we have adopted the following empirical correction to our power spectrum results

| (1) |

where is the softening length in units of , and is the complementary error function. We found this expression by fitting the effect seen in the bottom panel of Fig. 2. We will apply this correction by default which brings the expected nominal accuracy of our power spectrum predictions to on all scales considered.

2.3.2 The EUCLID comparison project

To further validate our -body code and quantify the accuracy of our numerical simulations, we have carried out the main simulation of the “Euclid code comparison project”, presented in Schneider et al. (2016). This simulation consists of particles of mass in a box, and has been carried out with several -body codes: RAMSES (Teyssier, 2002), PkdGrav3 (Potter et al., 2017), Gagdet3 , and recently with ABACUS by Garrison et al. (2019).

Our realisation of this simulation adopts the same force and time-integration accuracy parameters as of main BACCO simulations, and the same softening length as nenya, . The full calculation required timesteps and took CPU hours employing MPI Tasks.

In Fig. 3 we compare the resulting power spectra at . We display the ratio with respect to our L-Gadget3 results including the correction provided in Eq. 1. For comparison, we display the uncorrected measurement as a blue dashed line. Note the spikes on large scales are caused by noise due to a slightly different -binning in the spectra.

Our results, PkdGrav, RAMSES, and that of ABACUS agree to a remarkable level – they differ by less than 1% up to . This is an important verification of the absolute accuracy of our results, but it is also an important cross-validation of all these 4 -body codes. Interestingly, the Gadget3 result presented in Schneider et al. (2016) is clearly in tension with the other codes. Since the underlying core algorithms in our code and in Gadget3 are the same, the difference is likely a consequence of numerical parameters adopted by Schneider et al. (2016) not being as accurate as those for the other runs. In the future, it will be important to conduct code comparison projects were numerical parameters are chosen so that each code provides results converged to a given degree.

2.3.3 The BACCO simulations

Having validated our numerical setup, we now present an overview of the results of our BACCO simulations at in Fig. 4.

In the left panel we show the nonlinear power spectrum in real space. Firstly, we see the low level of random noise in our predictions owing to the “Paired-&-Fixed” method. On large scales, we have checked that our results agree at the level with respect to the linear theory solution, which we compute for each cosmology using the Boltzmann code CLASS (Lesgourgues, 2011). Also on large scales, we see that the three simulations display significantly different power spectra, despite the three of them having identical values for . This is mostly a consequence of their different primordial spectral index, . In contrast, on scales smaller than , the spectra become much more similar as their linear spectra also do (shown by dotted lines).

In the middle panel we show the monopole of the redshift-space correlation functions for subhalos with a spatial number density equal to selected according to their peak maximum circular velocity. This is roughly analogous to a stellar mass selection above . We see a significant difference in the correlation amplitude among simulations. This hints at the potential constraining power of LSS if a predictive model for the galaxy bias is available. We display each of the two “Paired-&-Fixed” simulations in each cosmology as solid and dashed lines. Unlike in the power spectrum plot, the effect of pairing the initial phase fields is visible.

Finally, in the right panel of Fig. 4 we display the mass function of halos identified by the FoF algorithm. As in previous cases, there are clear differences among cosmologies. The minimum halo mass in our simulations is , which should suffice to model galaxies with star formation rates above /year at (Orsi & Angulo, 2018), as expected to be observed by surveys like EUCLID, DESI, or J-PAS (Favole et al., 2017).

In the next section we will employ our BACCO simulations to predict the nonlinear mass power spectrum as a function of cosmology.

3 Nonlinear mass power spectrum Emulator

Our aim is to make fast predictions for the nonlinear power spectrum over the whole region of currently-viable cosmologies. In this section we describe how we achieve this by building (§3.1) and testing of a matter power spectrum emulator (§3.2).

Our basic strategy is the following:

- •

- •

-

•

Finally, employ those measurements to build an emulator for the power spectra based on either Gaussian Processes or Neural Networks (§3.1.7).

We test the performance and accuracy of our emulator (§3.2.2) and compare it against widely-used methods to predict the nonlinear power spectrum (§3.2.3).

3.1 Building the emulator

3.1.1 The parameter space

In this paper we aim to cover 8 parameters of the CDM model extended with massive neutrinos and dynamical dark energy. Specifically, we consider the parameter range:

| (2) | |||||

where is the total mass in neutrinos, is the r.m.s. linear cold mass (dark matter plus baryons) variance in spheres, and and define the time evolution of the dark energy equation of state: . The parameter range we consider for (, , , ) corresponds to a region around the best-fit parameters of the analysis of Planck Collaboration et al. (2014b). For the dimensionless Hubble parameter, , we expand the range to cover a region around current low-redshift measurements from supernovae data (Riess, 2019).

We assume a flat geometry, i.e. and . We keep fixed the effective number of relativistic species to , and the temperature of the CMB K and neglect the impact of radiation (i.e. ). Note, however, that it is relatively straightforward to relax these assumptions and include in our framework varying curvature, relativistic degrees of freedom, or other ingredients.

For comparison, we notice that the range of parameters covered by our emulator is approximately twice as large as that of the Euclid emulator (with the exception of , which is similar), which implies a parameter space volume times larger, and that it covers cosmological parameters beyond the minimal CDM: dynamical dark energy and massive neutrinos. In contrast, our parameter space is similar to that considered by the Mira-Titan Universe project (Heitmann et al., 2016; Lawrence et al., 2017), however, as we will discuss next, we cover the space with approximately 20 times the number of sampling points.

3.1.2 Training Cosmology Set

We now define the cosmologies with which we will train our emulator. This is usually done by sampling the desired space with a Latin-Hypercube (e.g. Heitmann et al., 2006). We adopt a slightly different strategy based on the idea of iterative emulation of Pellejero-Ibañez et al. (2020) (see also Rogers et al., 2019), where we preferentially select training points in regions of high emulator uncertainty.

Let us first define the quantity we will emulate:

| (3) |

where is the measured nonlinear power spectrum of cold matter (i.e. excluding neutrinos). is the linear theory power spectrum where the BAO have been smeared out according to the expectations of perturbation theory. Specifically, we define:

| (4) |

where is a version of the linear theory power spectrum without any BAO signal. Operationally, this is obtained by performing a discrete sine transform, smooth the result, and return to Fourier space by an inverse transform (Baumann et al., 2018). , with .

Emulating the quantity , rather than the full nonlinear power spectra, reduces significantly the dynamical range of the emulation, simplifying the problem and thus delivering more accurate results (see e.g. Euclid Collaboration et al., 2019; Giblin et al., 2019). We emphasise, however, that these transformations are simply designed to improve the numerical stability of our predictions – our results regarding large scales and BAO are still uniquely provided by our simulation results.

To build our training set, we first construct a Latin-Hypercube with 400 points. We then build a Gaussian Process (GP) emulator for , which provides the expectation value and variance for the emulated quantity (c.f. §3.1.7 for more details). We then evaluate the GP emulator over a Latin-Hypercube built with 2,000 points, and select the 100 points expected to have the largest uncertainty in their predictions. We add these points to our training set and re-build the GP emulator. We repeat this procedure 4 times.

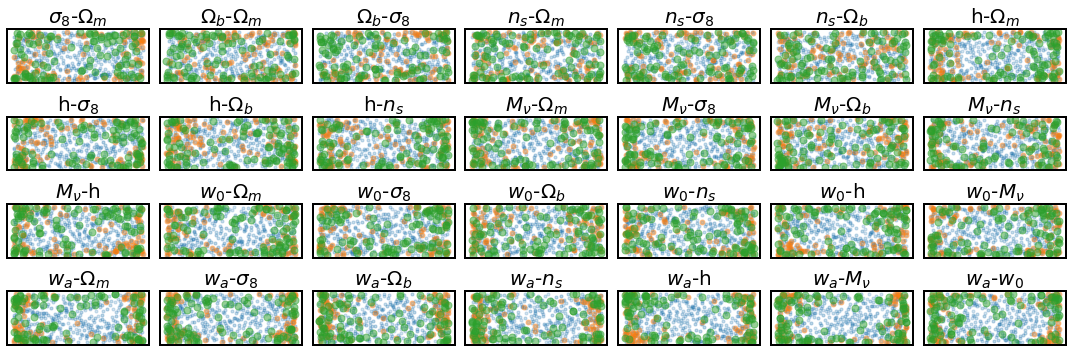

We display the final set of training cosmologies, comprised of 800 points, in Fig. 5. Blue symbols indicate the initial training cosmologies, whereas orange and green symbols do so for the 2nd and 4th iteration, respectively. We can appreciate that most points in the iterations are located near the boundaries of the cosmological space, which minimise extrapolation.

It is worth mentioning that we sample our space significantly better than typical emulators. For instance, the Mira-Titan and Euclid Emulator projects employ and simulations, respectively. This implies that we can keep the emulator errors under any desired level, which could be problematic otherwise, as parameter-dependent uncertainties in models, could in principle bias parameter estimates. On the other hand, sampling points can be designed optimally so that emulation errors are much smaller than a naive Latin Hypercube (Heitmann et al., 2014; Rogers et al., 2019), however, this can be only be done optimally for a single summary statistic: what could be optimal for the matter power spectrum, is not necessarily the best for, e.g, the quadrupole of the galaxy power spectrum.

3.1.3 Cosmology rescaling

Our next step is to predict nonlinear structure for each of our training cosmologies. For this, we employ the latest incarnation of the cosmology-rescaling algorithm, originally introduced by Angulo & White (2010).

In short, the cosmology-rescaling algorithm seeks a coordinate and time transformation such that the linear rms variance coincides in the original and target cosmologies. This transformation is motivated by extended Press-Schechter arguments, and returns highly accurate predictions for the mass function and properties of collapsed objects. On large scales, the algorithm uses 2nd-order Lagrangian Perturbation Theory to modify the amplitude of Fourier modes as consistent with the change of cosmology. On small scales, the internal structure of halos is modified using physically-motivated models for the concentration-mass-redshift relation (e.g. Ludlow et al., 2016).

The accuracy of the cosmology algorithm has been tested by multiple authors (Ruiz et al., 2011; Angulo & Hilbert, 2015; Mead & Peacock, 2014; Mead et al., 2015; Renneby et al., 2018; Contreras et al., 2020), and it has been recently extended to the case of massive neutrinos by Zennaro et al. (2019). Specifically, by comparing against a suite of -body simulations, Contreras et al. (2020) explicitly showed that the cosmology rescaling achieves an accuracy of up to over the same range of cosmological parameter values we consider here (c.f. Eq. 3.1.1). The largest errors appear on small scales and for dynamical dark energy parameters but, when restricted to the 6 parameters of the minimal CDM model, the rescaling returns accurate predictions. Note that the level of accuracy is set by the performance of current models for the concentration-mass-redshift relation (which usually are not calibrated for beyond CDM parameters), and future progress along those lines should feedback into higher accuracy for our emulator.

For our task at hand, we first split the parameter space into three disjoint regions where nenya, narya, or vilya will be employed (Contreras et al., 2020). Then, we load in memory a given snapshot of a given simulation and then rescale it to the corresponding subset of the 800 cosmologies. We employ 10 snapshots per simulation.

The full rescaling algorithm takes approximately 2 minutes (on 12 threads using OpenMP parallelization) per cosmology and redshift. Thus, all the required computations for building the emulator required approximately CPU hours – a negligible amount compared to that employed to run a single state-of-the-art -body simulation.

3.1.4 Power Spectrum Measurements

We estimate the power spectrum in our rescaled simulation outputs using Fast Fourier Transforms. We employ a cloud-in-cells assignment scheme over two interlaced grids (Sefusatti et al., 2016) of points. To achieve efficient measurements at higher wavenumbers, we repeat this procedure after folding the simulation box times in each dimension. We measure the power spectrum in evenly-spaced bins in , from to , and transition from the full to the folded measurement at half the Nyquist frequency. We have explicitly checked that this procedure returns sub-percent accurate power spectrum measurements over the full range of scales considered.

Although our BACCO simulations have high mass resolution, for computational efficiency, hereafter we will consider a subset of particles (uniformly selected in Lagrangian space) as our dark matter catalogue. This catalogue, however, is affected by discreteness noise. For a Poisson sampling of points over a box of side-length , the power spectrum receives a contribution equal to . However, this might not be an accurate estimate for our discreteness noise since our sampling is homogeneous in Lagrangian coordinates. We have estimated its actual contribution as a third-order polynomial by comparing the spectra of the full and diluted samples at different redshifts. We found that the amplitude is proportional to times the Poisson noise at , and that it progressively decreases in amplitude at higher redshifts, to reach times the Poisson noise at .

All our power spectrum measurements will be corrected by discreteness noise by subtracting the term described above, and further corrected for finite numerical accuracy following section §2.3.1. However, this procedure is not perfect, and we still detect % residuals at at . This will contribute to uncertainties in our emulator, which, however, are smaller than systematic uncertainties induced by the cosmology scaling.

3.1.5 Emulator Data

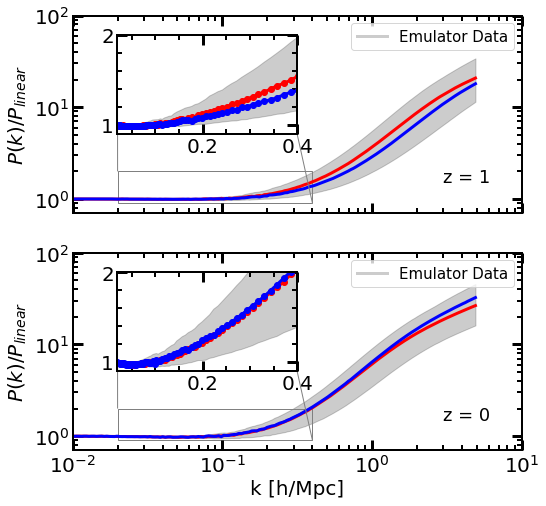

In total, we employ more than 16,000 power spectrum measurements; training cosmologies at approximately 10 different cosmic times for two paired simulations. Grey shaded regions in Fig. 6 show the range covered by this data at and , with the inset focusing on the range of wavemodes where baryonic acoustic oscillations are found. Blue and red lines show two randomly-chosen cosmologies.

On the largest scales, we can see that our rescaled simulations agree almost perfectly with linear theory. Although expected, it provides further validation of the dataset. On intermediate scales, we can see a lack of any oscillatory behaviour – better appreciated in the figure insets. This is a consequence of our correctly capturing the nonlinear smearing of baryonic acoustic oscillations caused mostly by large-scale flows. This is true at both and , which confirms the correct redshift dependence of . On small and nonlinear scales, we see an increase of more than one order of magnitude with different cosmologies differing by even factors of 3-4.

3.1.6 Principal Component Analysis

To reduce the dimensionality of our power spectrum measurements, we have performed a principal components (PC) analysis over our whole dataset, after subtracting the mean. We have kept the 6 -vectors with the highest eigenvalues, which together can explain all but of the data variance.

In the top panel of Fig. 7 we show the amplitude of these PCs, as indicated by the legend. The most important vector is a smooth function of wavenumber and roughly captures the overall impact of nonlinear evolution. Subsequent vectors describe further modifications, but none of them shows significant oscillations, which indicates that accurately models the BAO as a function of cosmology and redshift. It is worth noting that only the 6th vector displays any noticeable noise, owing to the highly precise input dataset.

In the bottom panel of the Fig. 7 we display the ratio of the full over that reconstructed using the first PCs aforementioned. We show the results for a random 2% of the power spectrum in our training data. We can see that almost all of them are recovered to better than . It is interesting to note that the residuals, although increase on intermediate scales, are mostly devoid of structure, which suggests that including additional PCs will simply recover more accurately the intrinsic noise in our dataset rather than systematic dependencies on cosmology.

To confirm this idea, we have repeated our emulation but keeping twice as many PCs. Although the description of the values of Q in our dataset gained accuracy (decreasing the differences down to ), the performance of the emulator at predicting other cosmologies did not increase. This supports the idea that PCs beyond the 6-th are simply capturing particular statistical fluctuations in the training set, rather than cosmology-induced features. It is interesting to note that this also means that all nonlinear information of the power spectrum beyond the BAO can be described by only numbers.

3.1.7 Emulation

To interpolate between our training data, and thus predict for any cosmology, we will construct two emulators: one built by employing Gaussian Processes Regression, and another one built by training a Neural Network.

Gaussian Processes

In short, Gaussian processes assume that every subset of points in a given space is jointly Gaussian distributed with zero mean and covariance . The covariance is a priori unknown but it can be estimated from a set of observations (e.g. our training set). Once the covariance is specified, the Gaussian process can predict the full probability distribution function anywhere in the parameter space.

In our case, we measure the amplitude associated to each PC in each training set. We then build a separate Gaussian Process for each of our PCs, using the package GPy (GPy, 2012). We assume the covariance kernel to be described by a squared exponential, with correlation length and variance set to the values that best describe the correlation among our data, found by maximising the marginal likelihood.

Neural Network

For regression tasks, neural networks perform remarkably well, serving as a highly-precise approximation to almost any continuous function. Thus, they provide an alternative for emulating (see e.g. Agarwal et al., 2014; Kobayashi et al., 2020).

In our case, we found that a feed-forward neural network with a simple architecture allowed us to obtain an accurate emulation. Specifically, we employed a fully-connected network with 2 hidden layers, each with 2000 neurons. For the activation function – which takes our input vectors and transforms them non-linearly – we use of Rectified Linear Units (ReLUs) which are commonly used in machine learning. The training of the network is done by using the adaptive stochastic optimization algorithm Adam (Kingma & Ba, 2014), with a default learning rate of . For the implementation of the neural network we made use of the open-source neural-network python library, Keras (Chollet et al., 2015).

We employ the PCA-reconstructed as our training data for the neural network (NN), so we can compare its results to those of the Gaussian process in equal terms. We randomly selected 5% of our power spectra as our validation set, and employ the remaining 95% as our training data. We define the loss function as the mean squared error function (MSE), and employed 2000 epochs for the training, after which our results appeared converged. At this point, the value of the loss function evaluated in the training and validation sets differed by less than , which suggest no overfitting. We note that we also tried the so-called batch-normalization and data-dropouts, without finding significant improvements in our predictions.

For a given target cosmology in our parameter space, we can predict the amplitude associated to each PC and then reconstruct the full vector. We found both emulators displaying similar performances: we compared their predictions at 1000 random locations within our parameter space, and differences were typically smaller than percent. Both emulators display similar computational performances, about milliseconds for predicting on any cosmology.

3.2 Testing the emulator

3.2.1 A first example

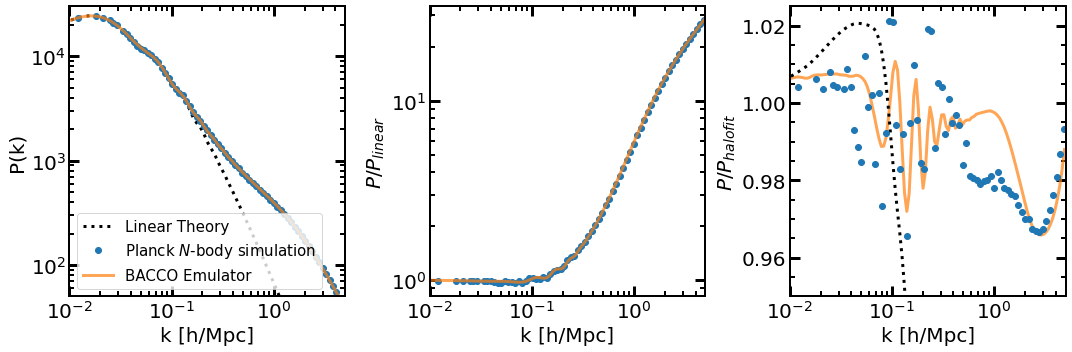

We present a first look at our emulator results in Fig. 8, where we show the nonlinear power spectrum at for a cosmology consistent with a recent analysis of Planck data. Specifically, we consider: , , , , , , , . The left panel shows the full power spectrum, the middle panel do so relative to linear theory, and the rightmost panel relative to HaloFit calibrated by Takahashi et al. (2012).

For comparison, we include as orange circles, the results of a full -body simulation with the same mass resolution and numerical parameters as those of our main BACCO suite, but in a smaller box, . Also as in our BACCO suite, the initial conditions have been “Paired-&-Fixed” for this simulation.

Overall, we can see that the BACCO emulator and the -body simulation agree to a remarkable level, being indistinguishable by eye in the leftmost and middle panels. In particular, on large scales, both agree with linear theory (which only can be appreciated thanks to the “Paired-&-Fixed” initial conditions); on intermediate scales, both also predict a BAO featured smeared out compared to linear theory.

In the rightmost panel we can see these aspects in more detail. Firstly, we note that the simulation and emulator results agree to about 1% on all the scales considered. This is consistent with the expected accuracy of the cosmology rescaling method, but also note that due to its somewhat small volume, the cosmic variance in the -body simulation results are not negligible. It is also interesting to note the systematic disagreement with the predictions from halofit. In following subsections we will explore these differences further.

3.2.2 Accuracy

To start testing the accuracy of our emulator, we have defined 10 cosmologies distributed over the target parameter space (c.f.§3.1.1) using a Latin hypercube. We then rescale our BACCO outputs to those parameters and compare the results against our emulation predictions. This essentially tests how accurate the PCA decomposition and emulation via Gaussian Process Regression are.

In Fig. 9 we show the ratio of the emulated to the rescaled nonlinear power spectrum at and for the 10 cosmologies mentioned before (we recall that neither nor were explicitly included in the emulator). We can see that on all scales our results are better than percent, and that most of the cosmologies are predicted to better that 1% at . Although this is already a high accuracy, we note that more and more rescaled results can be added over time to progressively improve the quality of the emulation. We recall that this level of uncertainty is comparable to the accuracy expected by the cosmology rescaling algorithm: for parameters of the minimal CDM and when considering massive neutrinos and dynamical dark energy.

3.2.3 Comparison with HaloFit, EuclidEmulator, and NgenHaloFit

We now compare our emulation results against four widely-used methods to predict the nonlinear evolution: HaloFit (Takahashi et al., 2012), the Euclid Emulator (Euclid Collaboration et al., 2019), NGenHalofit (Smith & Angulo, 2019), and Mira-Titan (Lawrence et al., 2017). Since not all of them have been calibrated over the whole parameter space covered by our emulator, we have restricted the comparison to the volume covered by the Euclid Emulator. We note that of our 800 training cosmologies, only 2 of them fall within this parameter volume.

In Fig. 10 we display the ratio of those predictions to that of our BACCO emulator. Coloured lines show 10 cosmologies set by a latin hypercube inside the Euclid Emulator parameter space. In addition, we show as a heavy line the Euclid reference cosmology: , , , , , , , , employed by (Euclid Collaboration et al., 2019).

On large scales, , our emulator agrees almost perfectly with NGenHalofit and the Euclid Emulator. Halofit, on the other hand shows a small constant power deficit, whereas the Mira-Titan displays a weakly scale-dependent offset. Over this range of scales, Mira-Titan is given by TimeRG perturbation theory (Pietroni, 2008; Upadhye et al., 2014), which might indicate inaccuracies in that approach.

On intermediate scales, , our results agree very well with those of NGenHalofit; for almost all cosmologies the differences are within the expected accuracy of our emulator. In contrast, we see that Halofit overestimates the amount of power by about 2% at and it does not correctly captures the BAO nonlinear smearing (as was already noted by Euclid Collaboration et al., 2019). On the other hand, MiraTitan underestimates the power by up to 2.5% at , and displays a strong feature at at . Over this range of scales MiraTitan employs a suite of low-resolution, large volume simulations, thus the disagreement could be due to an insufficient numerical accuracy in their simulations.

On small scales, , we continue to see differences among the three methods. For halofit, NGenHaloFit and Mira-Titan, they are systematic, roughly independent of cosmology, and decrease at higher redshift. A possibility is that this could be originated by the different numerical accuracy of the underlying simulations. Specifically, the simulations used by Smith & Angulo (2019) employ a softening length , which according to Eq. 1 is expected to produce an underestimation of 1.5% at . In addition, the transition between their high and low-resolution runs occurs at which might be related to the deficit we observe at at .

In contrast, the differences with respect to Euclid Emulator vary significantly for different cosmologies. Specifically, for the Euclid Reference Cosmology, the agreement is sub-percent on all scales. However, for our other test cosmologies, differences have a spread of %, even at . We do not see this behavior with other methods, which might suggest that there are significant uncertainties in the emulated power spectra of the Euclid Emulator beyond their quoted precision.

Finally, we note that there is a 1% “bump” at in all panels, which is originated by our imperfect shot-noise correction.

The previous comparison was done in a rather restricted cosmological parameter volume, which served as a strong test of our accuracy. However, one of the biggest advantages of our method is the ability to predict much more extreme cosmologies even with non-standard ingredients. We provide an example of this next.

In Fig. 11 we show the predictions for the effects of massive neutrinos on the cold matter (baryons plus dark matter) power spectrum. We display the ratio between cases with various neutrino masses, , relative to that without massive neutrinos . In all cases we use and fix all the other cosmological parameters to those of the “Euclid reference cosmology”. We only display the predictions of HaloFit and Mira-Titan, since NGenHaloFit nor the Euclid Emulator have been calibrated in the case of massive neutrinos.

We can see that on large scales, our predictions agree with both linear theory and HaloFit. For very massive neutrino cases, there is also a good agreement with MiraTitan, but there is a significant disagreement for , this might be caused by the scale-dependent features on large scales seen in the previous figure. On intermediate scales, all predictions also agree on the broadband shape of the neutrino-induced suppression, but our emulator is also able to capture the slightly different BAO suppression expected when massive neutrinos are present. On small scales, all three methods describe the well-known neutrino-induced spoon-like suppression, disagreeing slightly on the magnitude of the maximum suppression. Note however, we expect our emulator to predict more precisely the full shape of the nonlinear power spectrum, owing to the systematic uncertainties in HaloFit and Mira-Titan discussed before.

4 Summary

In this paper we have presented the BACCO simulation project: a framework that aims at delivering high-accuracy predictions for the distribution of dark matter, galaxies, and gas as a function of cosmological parameters.

The basic idea consists in combining recent developments in numerical cosmology – -body simulations, initial conditions with suppressed variance, and cosmology-rescaling methods – to quickly predict the nonlinear distribution of matter in a cosmological volume. The main advantage of our approach is that it requires only a small number of -body simulations, thus they can be of high resolution and volume. This in turn allows sophisticated modelling of the galaxy population (for instance in terms of subhalo abundance matching, semi-empirical or semi-analytic galaxy formation model), and of baryons (including the effects of cooling, star formation and feedback) in the mass distribution.

In this paper we have presented the main suite of gravity-only simulations of the BACCO project. These consist in 3 sets of “Paired-&-Fixed” simulations, each of them of a size and with billion particles. Their cosmologies were carefully chosen so that they maximise the accuracy of our predictions (Fig. 1 and Table 1) while minimising computational resources. We have validated the accuracy of our numerical setup with a suite of small -body simulations (Fig. 2) and by presenting a realization of the Euclid comparison project (Fig. 3). These tests indicate our simulations have an accuracy of 1% up to .

We have employed our BACCO simulations to predict more than 16,000 nonlinear power spectra at various redshifts and for 800 different cosmologies (Figs. 5 and 6). These cosmologies span essentially all the currently allowed region of parameter space of CDM extended to massive neutrinos and dynamical dark energy. Using these results, we built an emulator for the 6 most important principal components of the ratio of the nonlinear power spectrum over the linear expectation (Fig. 7). We show our emulation procedure to be accurate at the level over and (Figs. 8 and 9). Therefore, our accuracy is currently limited by that of cosmology rescaling methods. We compared our predictions against four popular methods to quickly predict the power spectrum in the minimal CDM scenario (Fig. 10) and in the presence of massive neutrinos (Fig. 11).

Since predicting a given cosmology requires an almost negligible amount of CPU time in our BACCO framework, we foresee the accuracy of our emulator to continuously improve as we include more cosmologies in the training set. Extensions to more parameters should also be possible, as, for instance, the number of relativistic degrees of freedom or curvature, can be easily incorporated in cosmology-rescaling methods. Additionally, there are several aspects of such methods that are likely to improve in the future, which should feedback into more accurate predictions and emulated power spectra.

On the other hand, effects induced by baryons on the shape of the nonlinear mass power spectrum can be of 10-30% (e.g. Chisari et al., 2019). Thus, they are much larger than current uncertainties in our emulation, cosmology-rescaling, or even shotnoise. These effects of star formation, gas cooling, and feeback from supermassive black holes are quite uncertain and differ significantly between different hydrodynamical simulations. However, they can be accurately modelled in postprocessing using dark-matter only simulations (Schneider & Teyssier, 2015). Specifically, Aricò et al. (2020b) showed that the effects of 7 different state-of-the-art hydrodynamical simulations could all be modelled to better than 1% within simple but physically-motivated models. In the future, we will implement such models and extend our matter emulation to simultaneously include cosmological and astrophysical parameters (Aricò et al., 2020a).

Acknowledgments

The authors acknowledge the support of the ERC-StG number 716151 (BACCO). SC acknowledges the support of the “Juan de la Cierva Formación” fellowship (FJCI-2017-33816). We thank Simon White, Auriel Schneider, Volker Springel for useful discussions. We thank Aurel Schneider also for making public the initial conditions of the EUCLID comparison project, and to Lehman Garrison for providing us with the respective power spectra of the ABACUS, Ramses, PkdGrav, and Gadget simulations. The authors acknowledge the computer resources at MareNostrum and the technical support provided by Barcelona Supercomputing Center (RES-AECT-2019-2-0012).

Data Availability

The data underlying this article will be shared on reasonable request to the corresponding author.

References

- Agarwal et al. (2014) Agarwal S., Abdalla F. B., Feldman H. A., Lahav O., Thomas S. A., 2014, MNRAS, 439, 2102

- Alam et al. (2017) Alam S., et al., 2017, MNRAS, 470, 2617

- Ali-Haïmoud & Bird (2013) Ali-Haïmoud Y., Bird S., 2013, MNRAS, 428, 3375

- Angulo & Hilbert (2015) Angulo R. E., Hilbert S., 2015, MNRAS, 448, 364

- Angulo & Pontzen (2016) Angulo R. E., Pontzen A., 2016, MNRAS, 462, L1

- Angulo & White (2010) Angulo R. E., White S. D. M., 2010, MNRAS, 405, 143

- Angulo et al. (2012) Angulo R. E., Springel V., White S. D. M., Jenkins A., Baugh C. M., Frenk C. S., 2012, MNRAS, 426, 2046

- Aricò et al. (2020a) Aricò G., Angulo R. E., Contreras S., Ondaro-Mallea L., Pellejero-Ibañez M., Zennaro M., 2020a, arXiv e-prints, p. arXiv:2011.15018

- Aricò et al. (2020b) Aricò G., Angulo R. E., Hernández-Monteagudo C., Contreras S., Zennaro M., Pellejero-Ibañez M., Rosas-Guevara Y., 2020b, MNRAS, 495, 4800

- Asgari et al. (2020) Asgari M., et al., 2020, A&A, 634, A127

- Baumann et al. (2018) Baumann D., Green D., Wallisch B., 2018, J. Cosmology Astropart. Phys., 2018, 029

- Chaves-Montero et al. (2016) Chaves-Montero J., Angulo R. E., Schaye J., Schaller M., Crain R. A., Furlong M., Theuns T., 2016, MNRAS, 460, 3100

- Chisari et al. (2018) Chisari N. E., et al., 2018, MNRAS, 480, 3962

- Chisari et al. (2019) Chisari N. E., et al., 2019, The Open Journal of Astrophysics, 2, 4

- Chollet et al. (2015) Chollet F., et al., 2015, Keras, https://github.com/fchollet/keras

- Chuang et al. (2019) Chuang C.-H., et al., 2019, MNRAS, 487, 48

- Contreras et al. (2020) Contreras S., Angulo R. E., Zennaro M., Aricò G., Pellejero-Ibañez M., 2020, MNRAS, 499, 4905

- DeRose et al. (2019) DeRose J., et al., 2019, ApJ, 875, 69

- Desjacques et al. (2018) Desjacques V., Jeong D., Schmidt F., 2018, Phys. Rep., 733, 1

- Euclid Collaboration et al. (2019) Euclid Collaboration et al., 2019, MNRAS, 484, 5509

- Favole et al. (2017) Favole G., Rodríguez-Torres S. A., Comparat J., Prada F., Guo H., Klypin A., Montero-Dorta A. D., 2017, MNRAS, 472, 550

- Freedman et al. (2019) Freedman W. L., et al., 2019, ApJ, 882, 34

- GPy (2012) GPy since 2012, GPy: A Gaussian process framework in python, http://github.com/SheffieldML/GPy

- Garrison et al. (2019) Garrison L. H., Eisenstein D. J., Pinto P. A., 2019, MNRAS, 485, 3370

- Giblin et al. (2019) Giblin B., Cataneo M., Moews B., Heymans C., 2019, MNRAS, 490, 4826

- Gilman et al. (2020) Gilman D., Birrer S., Nierenberg A., Treu T., Du X., Benson A., 2020, MNRAS, 491, 6077

- Guo & White (2014) Guo Q., White S., 2014, MNRAS, 437, 3228

- Hahn & Angulo (2016) Hahn O., Angulo R. E., 2016, MNRAS, 455, 1115

- Heitmann et al. (2006) Heitmann K., Higdon D., Nakhleh C., Habib S., 2006, ApJ, 646, L1

- Heitmann et al. (2014) Heitmann K., Lawrence E., Kwan J., Habib S., Higdon D., 2014, ApJ, 780, 111

- Heitmann et al. (2016) Heitmann K., et al., 2016, ApJ, 820, 108

- Henriques et al. (2020) Henriques B. M. B., Yates R. M., Fu J., Guo Q., Kauffmann G., Srisawat C., Thomas P. A., White S. D. M., 2020, MNRAS, 491, 5795

- Kingma & Ba (2014) Kingma D. P., Ba J., 2014, Adam: A Method for Stochastic Optimization (arXiv:1412.6980)

- Klypin et al. (2020) Klypin A., Prada F., Byun J., 2020, MNRAS,

- Kobayashi et al. (2020) Kobayashi Y., Nishimichi T., Takada M., Takahashi R., Osato K., 2020, Phys. Rev. D, 102, 063504

- Kuhlen et al. (2012) Kuhlen M., Vogelsberger M., Angulo R., 2012, Physics of the Dark Universe, 1, 50

- Lawrence et al. (2017) Lawrence E., et al., 2017, ApJ, 847, 50

- Lesgourgues (2011) Lesgourgues J., 2011, ArXiv e-prints 1104.2932

- Liu et al. (2018) Liu J., Bird S., Zorrilla Matilla J. M., Hill J. C., Haiman Z., Madhavacheril M. S., Petri A., Spergel D. N., 2018, J. Cosmology Astropart. Phys., 2018, 049

- Ludlow et al. (2016) Ludlow A. D., Bose S., Angulo R. E., Wang L., Hellwing W. A., Navarro J. F., Cole S., Frenk C. S., 2016, MNRAS, 460, 1214

- Mead & Peacock (2014) Mead A. J., Peacock J. A., 2014, MNRAS, 440, 1233

- Mead et al. (2015) Mead A. J., Peacock J. A., Lombriser L., Li B., 2015, MNRAS, 452, 4203

- Moster et al. (2018) Moster B. P., Naab T., White S. D. M., 2018, MNRAS, 477, 1822

- Nishimichi et al. (2019) Nishimichi T., et al., 2019, ApJ, 884, 29

- Orsi & Angulo (2018) Orsi Á. A., Angulo R. E., 2018, MNRAS, 475, 2530

- Pellejero-Ibañez et al. (2020) Pellejero-Ibañez M., Angulo R. E., Aricó G., Zennaro M., Contreras S., Stücker J., 2020, MNRAS, 499, 5257

- Pietroni (2008) Pietroni M., 2008, J. Cosmology Astropart. Phys., 2008, 036

- Planck Collaboration et al. (2014a) Planck Collaboration et al., 2014a, A&A, 571, A16

- Planck Collaboration et al. (2014b) Planck Collaboration et al., 2014b, A&A, 571, A16

- Planck Collaboration et al. (2020) Planck Collaboration et al., 2020, A&A, 641, A6

- Pontzen et al. (2016) Pontzen A., Slosar A., Roth N., Peiris H. V., 2016, Phys. Rev. D, 93, 103519

- Potter et al. (2017) Potter D., Stadel J., Teyssier R., 2017, Computational Astrophysics and Cosmology, 4, 2

- Renneby et al. (2018) Renneby M., Hilbert S., Angulo R. E., 2018, MNRAS, 479, 1100

- Riess (2019) Riess A. G., 2019, Nature Reviews Physics, 2, 10

- Rogers et al. (2019) Rogers K. K., Peiris H. V., Pontzen A., Bird S., Verde L., Font-Ribera A., 2019, J. Cosmology Astropart. Phys., 2019, 031

- Ruiz et al. (2011) Ruiz A. N., Padilla N. D., Domínguez M. J., Cora S. A., 2011, MNRAS, 418, 2422

- Schneider & Teyssier (2015) Schneider A., Teyssier R., 2015, J. Cosmology Astropart. Phys., 12, 049

- Schneider et al. (2016) Schneider A., et al., 2016, J. Cosmology Astropart. Phys., 4, 047

- Sefusatti et al. (2016) Sefusatti E., Crocce M., Scoccimarro R., Couchman H. M. P., 2016, MNRAS, 460, 3624

- Smith & Angulo (2019) Smith R. E., Angulo R. E., 2019, MNRAS, 486, 1448

- Smith et al. (2003) Smith R. E., et al., 2003, MNRAS, 341, 1311

- Springel (2005) Springel V., 2005, MNRAS, 364, 1105

- Springel et al. (2001) Springel V., White S. D. M., Tormen G., Kauffmann G., 2001, MNRAS, 328, 726

- Stücker et al. (2020) Stücker J., Hahn O., Angulo R. E., White S. D. M., 2020, MNRAS, 495, 4943

- Takahashi et al. (2012) Takahashi R., Sato M., Nishimichi T., Taruya A., Oguri M., 2012, ApJ, 761, 152

- Teyssier (2002) Teyssier R., 2002, A&A, 385, 337

- Upadhye et al. (2014) Upadhye A., Biswas R., Pope A., Heitmann K., Habib S., Finkel H., Frontiere N., 2014, Phys. Rev. D, 89, 103515

- Villaescusa-Navarro et al. (2018) Villaescusa-Navarro F., et al., 2018, ApJ, 867, 137

- Weinberg et al. (2013) Weinberg D. H., Mortonson M. J., Eisenstein D. J., Hirata C., Riess A. G., Rozo E., 2013, Physics Reports, 530, 87

- Wibking et al. (2019) Wibking B. D., et al., 2019, MNRAS, 484, 989

- Zennaro et al. (2019) Zennaro M., Angulo R. E., Aricò G., Contreras S., Pellejero-Ibáñez M., 2019, MNRAS, 489, 5938

- van Daalen et al. (2011) van Daalen M. P., Schaye J., Booth C. M., Dalla Vecchia C., 2011, MNRAS, 415, 3649

- van Daalen et al. (2020) van Daalen M. P., McCarthy I. G., Schaye J., 2020, MNRAS, 491, 2424