TFBEST: Dual-Aspect Transformer with Learnable Positional Encoding for Failure Prediction

Abstract

Hard Disk Drive (HDD) failures in datacenters are costly - from catastrophic data loss to a question of goodwill, stakeholders want to avoid it like the plague. An important tool in proactively monitoring against HDD failure is timely estimation of the Remaining Useful Life (RUL). To this end, the Self-Monitoring, Analysis and Reporting Technology employed within HDDs (S.M.A.R.T.) provide critical logs for long-term maintenance of the security and dependability of these essential data storage devices. Data-driven predictive models in the past have used these S.M.A.R.T. logs and CNN/RNN based architectures heavily. However, they have suffered significantly in providing a confidence interval around the predicted RUL values as well as in processing very long sequences of logs. In addition, some of these approaches, such as those based on LSTMs, are inherently slow to train and have tedious feature engineering overheads. To overcome these challenges, in this work we propose a novel transformer architecture - a Temporal-fusion Bi-encoder Self-attention Transformer (TFBEST) for predicting failures in hard-drives. It is an encoder-decoder based deep learning technique that enhances the context gained from understanding health statistics sequences and predicts a sequence of the number of days remaining before a disk potentially fails. In this paper, we also provide a novel confidence margin statistic that can help manufacturers replace a hard-drive within a time frame. Experiments on Seagate HDD data show that our method significantly outperforms the state-of-the-art RUL prediction methods during testing over the exhaustive 10-year data from Backblaze (2013-present). Although validated on HDD failure prediction, the TFBEST architecture is well-suited for other prognostics applications and may be adapted for allied regression problems.

Index Terms:

Failure Prediction, Remaining Useful Life, Transformers, Hard Disk Drive Health, Encoder-Decoder ModelsI Introduction

Condition-based maintenance (CBM) of equipment in large-scale cyber-physical systems (CPS) aims to maximize the reliability of these systems by selectively replacing parts that are predicted to fail[1]. The underlying assumption is that the degradation model these systems follow show up as trends in data that can be effectively used to predict the Remaining Useful Life (RUL) of such systems. Hard disk drives (HDD) are an important storage component of many systems, from personal computers to distributed data-centers. An HDD failure in a data-center can lead to catastrophic data loss if pertinent backup plans are not maintained. It is important to keep in mind that electro-mechanical devices are prone to failure owing to varied operational conditions and aging, therefore HDDs are no exception. However, early sensing of triggers and out-of-distribution signatures in the data can greatly help plan for failure, in the event it occurs. One common measure used for fault quantification in such cases is the Annualized Failure Rate (AFR). AFR represents the likelihood, expressed as a percentage, of a drive experiencing failure within a year. This probability is derived from the performance patterns of comparable drives.

The informed reader might be aware that prognostication techniques, as of this date, are broadly divided into three categories: model-based, data-driven and hybrid. Model-based techniques require precise and thorough dynamic modeling of electro-mechanical machinery or of individual components therein. This becomes an extremely difficult task if there is non-linearity in the system dynamics. Data-driven approaches, on the other hand, exploit the wealth of sensor data features to understand complex interrelationships between these features and use it to estimate the likelihood of failure in future. In the case of HDDs, data-driven approaches look at S.M.A.R.T. sensor features to model the dependencies and look for patterns of interest. Of course, one might look at hybrid approaches as well, where the Physics of Failure is captured by a joint modeling using dynamical system equations and machine learning models [2].

The S.M.A.R.T. monitoring system is essential for protecting HDDs over the course of their useful lives. Temperature, operation hours, on/off cycles, damaged sectors, and read/write errors are just a few of the many variables that it meticulously tracks. Continuous comparisons are made between these metrics and predetermined thresholds established by the HDD manufacturers. The system warns the user proactively when a particular S.M.A.R.T. parameter exceeds its set threshold. A laudable safety measure, this prompt notification enables users to take preventive actions like data backup and prompt drive replacement, preventing potential data loss. But relying on thresholds can lead to very premature drive change. In the literature, machine learning algorithms have been used for RUL prediction, such as Support Vector Machines (SVM) [3], Hidden Markov-models (HMM) [4] and Long short term memory networks (LSTM)[5]. These techniques, however, rely on time-consuming feature engineering. Transformer [6] based approaches, in comparison, can automatically extract useful features from the data and achieve significantly superior prediction performance.

Another notable issue is that more attention should be paid to the essential features providing significant information on degradation. Transformer architectures [6] using the attention mechanism [7] can learn the degradation mechanics without feature selection and can tend to long input sequences. Very recently, the Dual Aspect Self-Attention Transformer (DAST) [8] has been proposed, which adds an additional encoder over the vanilla transformer [6] to extract more information about how different sensors affect the system rather than attending to only the weights of different time steps. However, the DAST network uses an absolute positional encoding which can not effectively model the timesteps. To overcome this, we propose and extensively validate a novel transformer architecture: Temporal-Fusion Bi-Encoder Self-attention Transformer (TFBEST) that uses a learnable positional encoding to encode position of the timesteps in the encoder.

Contributions: The contributions of this work are significant in the following ways:

-

1.

A novel transformer architecture for remaining useful life prediction: We propose a new Transformer architecture which employs a learnable positional encoding to encode position of the timesteps in the encoder and two encoders (sensor and time) to extract information in parallel which avoids mutual influence of information from two aspects. To the best of our knowledge, transformers has not been implemented before for HDD RUL prediction using the comprehensive 10-year quarter-by-quarter health data from Backblaze [9]. We show that our new network outperforms state-of-the-art architectures and produces highly accurate point estimates of the RUL.

-

2.

Attention Mechanism instead of Feature Engineering: Our contribution also lies in using an attention mechanism for the encoder-decoder netowrk, This removes the need of feature selection as explored in [10].

-

3.

Confidence interval and error margin evaluation: We propose a new confidence interval evaluation to provide a robust range of RUL for a hard-drive. This instils confidence around when a hard-drive will fail.

The paper is organized in the following way. In Section II, we go over related work in the field. Section III provides an overview of why feature engineering is tedious and the shift to attention mechanisms. Section IV talks about the details and architecture of the Vanilla Transformer. Section V introduces the proposed method and Section VI divulges into the different configurations and experimentation done with the dataset. Lastly, Section VIII looks at potential avenues for future research.

II Related Work

Self-Monitoring, Analysis and Reporting Technology (S.M.A.R.T. ) is a monitoring system included in hard disk drives (HDDs). Primarily, it senses and creates logs of various health indicators of drives thereby enabling proactive planning against imminent hardware failures.

Many data-driven approaches use S.M.A.R.T. features as their input data and provide predictions on the RUL of the hard-drives. The architectures based on deep learning can automatically collect the key feature information from the original data to perform end-to-end prediction by modeling the functional relationship between the hard-drive degradation process and the S.M.A.R.T. monitoring data.

To date, many deep-learning architectures have been proposed for RUL predictions: TCNN [11], LSTM [11], Stacked LSTM [12], Bi-directional LSTM [13], Spatio-temporal Anomaly Detection LSTM Networks [1], Encoder-Decoder LSTM [10], and Ensemble Learning approaches [14]. All of these architectures have modeled the dataset in a specific way and have applied feature engineering to sanitize the data. However, the literature does not contain a case where attention mechanisms and transformers have been used for remaining useful life (RUL) prediction of hard disk drives. This opens up an avenue to apply transformers for prognosticating impending faults in HDDs and reporting the same.

Transformers are appealing to time series problems such as RUL estimation as they have demonstrated excellent modeling capability for long-range dependencies and interactions in sequential data. The vanilla transformer has undergone many iterations that have been used for a variety of time series activities to meet unique issues in forecasting.

In recent studies, there have been examples of different variants of Transformers employed for specific tasks like forecasting [15, 16] and anomaly detection [17, 18]. Specifically, seasonality or periodicity is an important feature of time series observations [19] leveraged by transformers for generating predictions[8].

III Caveats of feature engineering & Shift towards attention mechanism

III-A Problems with feature selection

Feature engineering plays a fundamental role in the process of getting data ready for machine learning and data analysis. It entails generating novel features or transforming existing ones to boost the performance of a machine learning model, or allowing greater insight from the data. In our prior work [10], we used a subset of features for the same task in order to make things simpler and to filter out redundant attributes. But feature selection is a tedious process: (a) It requires understanding data and its trends. (b) It can be somewhat unreliable, since minute alterations to either the dataset or how the features are chosen can lead to a completely different group of features being chosen. (c) It also becomes extremely challenging to be aware of the consequences of maximizing information gain during feature selection, such as potential overfitting particularly when dealing with noisy data such as the one from Backblaze. We must take into account the trade-off between reducing bias and increasing variance for selecting features in order to make an informed decision.

III-B Attention Mechanism can replace feature selection

Transformers have revolutionized natural language processing (NLP) [20] and have found applications in other research areas. Because of their ability to automatically learn useful properties from data, Transformers’ attention mechanism, particularly in models such as Bidirectional Encoder Representations from Transformers (BERT) [21] and Generative Pre-trained Transformer (GPT) [22], enable them to capture intricate correlations in data [23].

IV Preliminaries of the Transformer

IV-A The Vanilla Transformer

The vanilla Transformer [6] was the first to introduce the attention mechanism. It has been very successful in NLP tasks. As shown in the Fig. 2, it follows an encoder-decoder structure where both the encoder and the decoder are made up of numerous identical blocks. Each encoder block is made up of a multi-head self-attention module and a position-wise feed-forward network, while each decoder block is made up of cross-attention models that are inserted between the multi-head self-attention module and the position-wise feed-forward network.

The vanilla Transformer, unlike an LSTM or RNN, has no recurrence. Instead, it models the sequence information using the positional encoding included in the input embeddings. This can be beneficial for NLP and Neural Machine Translation (NMT) but can be detrimental for hard-drive prognosis as we will see in the next sections.

IV-A1 Positional Encoding

In the vanilla Transformer, for each position , encoding is as follow:

| (1) |

| (2) |

IV-A2 Multi-head Attention

This multi-head attention mechanism allows the model to attend to different parts of the input sequence simultaneously, capturing various patterns and relationships within the data.

The transformer produces the self attention as visualized in Fig. 1(a). The self-attention can be be formulated as:

| (3) |

We will delve into what , and mean:

-

•

Query (Q): It’s the representation of a token (word or position) that you want to calculate the attention scores for concerning other tokens in the sequence. These query vectors are used to determine how much attention each token should pay to other tokens in the sequence.

-

•

Key (K): It’s the representation of a token that provides context for computing the attention scores. These key vectors capture information about the other tokens in the sequence.

-

•

Value (V): These value vectors hold the information that is weighted and combined based on the attention scores.

The model attends to data from several representational subspaces at various places with multi-head attention. It also exploits parallelism to increase model training and performance.

| (4) |

where is a learnable weight matrix used to linearly combine the outputs of all attention heads and

IV-A3 Feed-forward network

The feed-forward network in a Transformer is an important component that captures complicated patterns in the input data. It is made up of numerous fully-connected layers and non-linear activation functions that allow the model to process and convert information gained via attention mechanisms, boosting its ability to learn and express intricate relationships within sequences.

IV-A4 Encoder-Decoder Structure

Using self-attention mechanisms, the encoder analyses the input sequence, acquiring contextual information. The decoder then constructs the output sequence, using contextual information from the encoder and previously created tokens to make coherent translations or predictions. Using self-attention mechanisms, the encoder analyses the input sequence, acquiring contextual information. The decoder then constructs the output sequence, using contextual information from the encoder and previously created tokens to make coherent translations or predictions.

IV-B DAST: Dual Aspect Self-Attention Transformer

The DAST [8] architecture is a recently proposed unique adaptation of the transformer for Turbofan Engine health prognostics on the NASA Commercial Modular Aero-Propulsion System Simulation (CMAPSS) [24] and PHM 2008 data [25]. As shown in Fig. 3, it employs two encoders that function in parallel to extract features from various sensors and time steps. DAST encoders are more effective at processing extended data sequences based solely on self-attention and are capable of adaptively learning to focus on more relevant regions of input. Furthermore, the parallel feature extraction approach prevents information from two aspects from influencing each other.

V TFBEST: Temporal-fusion Bi-encoder Self-attention Transformer

Time-stamped data from sensors are the backbone of fault prognosis in industrial electro-mechanical components. It makes possible the identification of temporal patterns, including seasonality or cyclical behaviors, which are crucial for predicting future conditions or occurrences whilst sensors continuously collect data on various aspects of the physical system in real-time. DAST exploits these two features to extract valuable contextual information. TFBEST is a novel transformer that builds on the DAST architecture by replacing the encoding with a learnable positional encoding based on LSTM.

V-A Limitations of absolute positional encoding

Despite having significant gains in [8], absolute position encoding (APE) has limitations for encoding time series data in Transformers. For each time step in the sequence, APE designates a distinct fixed positional embedding. As a result, the model comes to link particular positions with particular embeddings. The significance of various time steps can change in time series data from the actual world, and the fixed embeddings might not adequately capture this dynamic character. Several studies have revealed that learnable positional embeddings from time series data can be much more effective compared to fixed APE [26, 27].

V-B LSTM based positional encoding

As highlighted, we use a learnable positional encoding using LSTMs. This allows the model to learn the position of the sensor data across time-steps adaptively during training, making it more flexible and effective at collecting positional information. We also notice a signifact performance gain using this positional encoding compared to APE.

V-C Bi-encoders for sensor and time

Building on the DAST architecture, we employ 2 encoder layers. The sensor encoder applies the multi-head self-attention on the sensor dimension to extract useful information. This layer is an additional layer on the vanilla transformer that focuses on the S.M.A.R.T. features and extracts useful context without feature selection. As shown in Fig. 4, we also add a time step encoder layer. The time step encoder layer collects features along the time step dimension, allowing the TFBEST model to focus on the time steps that are more essential for RUL prediction. The positional encoding layer processes the time step encoder’s input data, which is the transpose of inputs.

V-C1 Sensor Encoder Layer

The sensor encoder layer is based on vanilla transformer’s encoder layer with minor modifications to fit the problem at hand. For every HDD log , we define the senor input across time-steps as . We also define as the transpose of the inputs passed to the sensor encoder. The process of self-attention mechanism is visualized in Fig. 1. We generate the Q, K and V matrices by multiplying with , , and (trainable weights) respectively.

| (5) |

Then we calculate the dot product of and (scaled by ), and apply a softmax function along the sensor dimension to obtain the weights of different sensors. Then self-attention can be computed as:

| (6) |

We now employ a multi-head attention mechanism. By separating the attention mechanism into many heads, it allows the model to focus on diverse parts of the input sequence at the same time, allowing it to capture complex dependencies and enhance performance on tasks like machine translation and text synthesis. Each head discovers new relationships in the data, improving the model’s capacity to handle a wide range of patterns and extract relevant information.

| (7) |

where .

V-C2 Time Encoder Layer

The time encoder layer is very similar to the sensor encoder layer. We directly pass the inputs to a learnable positional encoding as described in section V-B.

V-C3 Concatenation of encoder contexts

Both the sensor encoder and the time step encoder are built by stacking identical sensor or time step encoder layers. For simplicity, we utilize the same number of stacks for both encoders. The model concatenates information from the two contexts from the encoders. The output from the encoders can be represented:

| (8) |

where and are the context outputs from the sensor and time step encoders. Because the time step encoder and sensor encoder are positioned in parallel, features of the sensor dimension and time step dimension are retrieved concurrently. This approach eliminates the mutual influence of information from the two elements and increases performance by exploiting parallelism.

V-D Decoder

As we have modeled the problem at hand as a regression task, the decoder takes the inputs as is. The decoder block is similar to the one used in [6]. It calculates the source-target attention. An input embedding layer, decoder layers, an add and normalization layer, and a fully-connected feed forward layer comprise the decoder. The decoder layer consists mostly of two multi-head self-attention sublayers: mask multi-head self-attention and cross-attention sublayer.

VI Experiments

This section introduces a new dataset format, associated experimental settings, and trials on 10-years of Backblaze data to assess TFBEST performance in comparison to cutting-edge RUL prediction techniques and to confirm the benefit of the new architecture.

VI-A Dataset

Backblaze is a cloud-based data storage and backup service provider. To support it’s day-to-day requirements, it monitors and deploys over 240,000 hard-drives [28]. Every quarter, Backblaze publishes daily logs over the quarter. These logs contain information like S.M.A.R.T. features and whether or not a HDD failed on a given day. If it failed, it it marked with a 1 and removed from the subsequent snapshots.

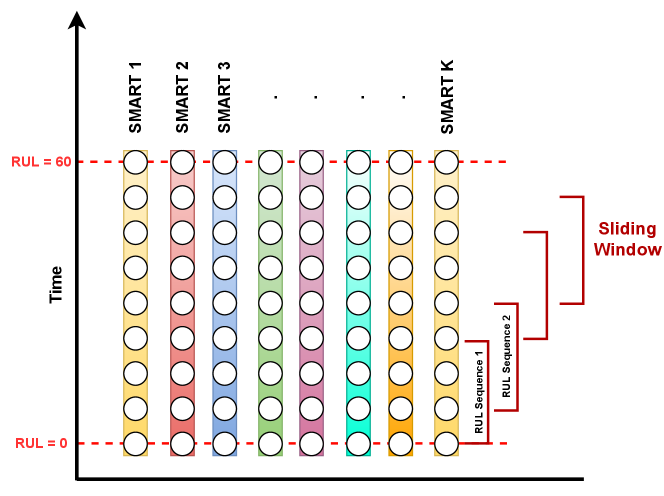

We look at 10-years of worth of data for a particular model of Seagate ST4000DM000 since it is the most failing hard-drive in the cluster [28]. We build the dataset by looking at different serial numbers of the model. We gather previous 60 days for a particular failing serial number. Then it is concatenated in a sliding window described in the next subsection. For each log, we calculate the RUL. RUL is calculated as follows:

| (9) |

If a failed device was discovered, for instance, the RUL column would have a value of 1 the day before, 2 the day before that, and so on. We can now treat this as a regression problem to predict the RUL given S.M.A.R.T. features.

VI-B RUL Sequence Generation

Using a similar approach in [13], we employ a different RUL sequence generation compared to [10] which helps us in providing robust RUL predictions with a confidence interval. The detailed description of the sequence generation is outlined in Fig. 5.

VI-C Experimental Setting

The length of the rolling time frame is set at 30. For training, we employ the Adam optimizer with an maximum epochs of 100. The training loss function is RMSE. The learning rate is set to 0.001 with a batch size of 256. Dropout is used for each encoder and decoder layer, with a dropout rate of 0.1. The training loss function can be formulated as:

| (10) |

where is the actual RUL and is the predicted RUL from the model.

We look at 2013-present data of Seagate ST4000DM000 and build the training, validation and test set as follows:

-

•

Training: January 2013 - December 2019

-

•

Validation: January 2020 - December 2020

-

•

Test: January 2021 - present

In this work we employ parallel attention layers, or heads. Across the models, we use encoder layers and decoder layer. For the encoder-decoder LSTM, we use units LSTM cell and units feed-forward for the transformers.

VI-D Comparison to other state-of-the-art models

Here we compare the performance of TFBEST with state of-the-art deep learning based RUL prediction methods. We consider 3 baselines and compare our model against baselines. The baselines are:

-

•

Encoder-Decoder LSTM

-

•

Vanilla Transformer

-

•

Vanilla Transformer with adjusted APE [29]

-

•

DAST

| Model |

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|---|

|

14.46 | 22.19 | 15.25 | ||||||

|

9.46 | 29.12 | 29.14 | ||||||

|

9.44 | 28.23 | 28.22 | ||||||

| DAST [8] | 9.42 | 13.1 | 13.1 | ||||||

| TFBEST | 7.75 | 9.6 | 9.54 |

In Table I, we can see that LSTMs with feature selection [10] cannot predict well for long sequences and are very time consuming. It takes approximately 2 minutes per epoch and additional time for pre-processing the data. While transformers are fast for training (3̃0 seconds per epoch), TFBEST outperforms Vanilla Transformers and DAST by a big margin. This experiment shows the superiority of the model over state-of-the-art architectures. During experimentation, we also can visualize the predictions of one of the failing hard-drive in the Seagate ST4000DM000 models. Fig. 6 shows that TFBEST approach understands the underlying sequence in time that is changing based on time steps. The Encoder-Decoder LSTM proves that LSTMs are not suited to handle log sequences whilst predictions by the Vanilla transformer and DAST show that using learnable positional encoding in TFBEST gives near-perfect predictions.

VI-E Confidence margin statistic

As we have observed with LSTM-based approaches that are modeled as a regression problem [31, 13, 12], the models output only a single RUL value based on a sequence of data. This may be effective in producing a lower RMSE but confidence from the model may not be adequate. We propose a novel confidence margin metric for RUL prediction. This gives us a error margin and a closed interval of confidence that the RUL will lie in that range.

| (11) |

where, represents the point estimate of the RUL and represents the margin-of-error.

| True RUL |

|

|

DAST | TFBEST | ||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

|

|

|

|

|

|||||||||||||||||||||

| 60 | 37.98 0.00 | (37.98, 37.98) | 3.72 0.01 | (3.73, 3.87) | 29.98 0.00 | (29.98, 29.98) | 45.13 0.00 | (45.13, 45.13) | ||||||||||||||||||||

| 59 | 38.31 1.24 | (30.46, 46.15) | 3.80 0.01 | (3.73, 3.87) | 29.96 0.01 | (29.92, 30.00) | 44.42 0.29 | (42.59, 46.26) | ||||||||||||||||||||

| 58 | 39.36 1.59 | (34.73, 44.00) | 3.82 0.01 | (3.80, 3.84) | 29.99 0.07 | (29.77, 30.20) | 43.40 0.28 | (42.59, 44.22) | ||||||||||||||||||||

| 57 | 41.42 2.56 | (35.39, 47.45) | 3.71 0.01 | (3.70, 3.73) | 29.91 0.09 | (29.70, 30.12) | 43.57 0.25 | (42.99, 44.15) | ||||||||||||||||||||

| 56 | 42.69 2.59 | (37.17, 48.21) | 3.79 0.00 | (3.78, 3.79) | 29.92 0.06 | (29.80, 30.04) | 43.34 0.68 | (41.89, 44.79) | ||||||||||||||||||||

| 55 | 43.61 2.55 | (38.47, 48.76) | 3.77 0.01 | (3.76, 3.79) | 29.89 0.07 | (29.76, 30.03) | 42.74 0.77 | (41.19, 44.29) | ||||||||||||||||||||

| 54 | 42.92 1.96 | (39.10, 46.73) | 3.79 0.01 | (3.77, 3.80) | 29.88 0.07 | (29.75, 30.02) | 42.29 0.78 | (40.77, 43.80) | ||||||||||||||||||||

| 53 | 42.20 1.62 | (39.12, 45.28) | 3.77 0.01 | (3.76, 3.78) | 29.84 0.08 | (29.68, 29.99) | 41.57 0.74 | (40.16, 42.98) | ||||||||||||||||||||

| 52 | 42.42 1.59 | (39.46, 45.39) | 3.72 0.01 | (3.71, 3.73) | 29.75 0.09 | (29.58, 29.91) | 40.71 0.76 | (39.30, 42.12) | ||||||||||||||||||||

| 51 | 43.46 2.15 | (39.52, 47.40) | 3.77 0.01 | (3.76, 3.78) | 29.77 0.07 | (29.64, 29.90) | 39.75 0.98 | (37.96, 41.54) | ||||||||||||||||||||

| 50 | 43.99 2.20 | (40.01, 47.97) | 3.80 0.01 | (3.79, 3.80) | 29.78 0.08 | (29.64, 29.93) | 39.53 1.09 | (37.55, 41.51) | ||||||||||||||||||||

| 49 | 43.14 1.80 | (39.90, 46.38) | 3.72 0.01 | (3.71, 3.73) | 29.67 0.10 | (29.49, 29.85) | 39.61 1.11 | (37.62, 41.59) | ||||||||||||||||||||

| 48 | 42.19 1.55 | (39.42, 44.95) | 3.72 0.01 | (3.70, 3.73) | 29.54 0.13 | (29.31, 29.77) | 38.94 1.17 | (36.86, 41.02) | ||||||||||||||||||||

| 47 | 41.48 1.36 | (39.07, 43.89) | 3.69 0.01 | (3.68, 3.70) | 29.49 0.13 | (29.25, 29.73) | 38.26 1.24 | (36.06, 40.46) | ||||||||||||||||||||

| 46 | 41.81 1.54 | (39.09, 44.53) | 3.73 0.01 | (3.72, 3.75) | 29.55 0.10 | (29.37, 29.74) | 37.77 1.32 | (35.44, 40.10) | ||||||||||||||||||||

| 45 | 41.21 1.35 | (38.86, 43.57) | 3.71 0.01 | (3.70, 3.73) | 29.48 0.12 | (29.28, 29.69) | 37.51 1.28 | (35.27, 39.75) | ||||||||||||||||||||

| 44 | 40.66 1.22 | (38.52, 42.79) | 3.73 0.01 | (3.72, 3.74) | 29.45 0.13 | (29.21, 29.68) | 37.04 1.31 | (34.75, 39.32) | ||||||||||||||||||||

| 43 | 41.15 1.55 | (38.45, 43.85) | 3.75 0.01 | (3.74, 3.77) | 29.40 0.16 | (29.13, 29.67) | 36.56 1.33 | (34.24, 38.88) | ||||||||||||||||||||

| 42 | 40.43 1.39 | (38.02, 42.84) | 3.78 0.01 | (3.76, 3.79) | 29.44 0.13 | (29.22, 29.67) | 35.80 1.46 | (33.26, 38.33) | ||||||||||||||||||||

| 41 | 40.43 1.40 | (38.00, 42.85) | 3.76 0.01 | (3.74, 3.77) | 29.21 0.19 | (28.88, 29.55) | 35.50 1.39 | (33.11, 37.90) | ||||||||||||||||||||

| 40 | 40.40 1.40 | (37.98, 42.83) | 3.79 0.01 | (3.78, 3.80) | 29.39 0.15 | (29.13, 29.65) | 34.69 1.59 | (31.95, 37.43) | ||||||||||||||||||||

| 39 | 40.08 1.34 | (37.78, 42.38) | 3.68 0.01 | (3.67, 3.69) | 28.99 0.22 | (28.61, 29.37) | 34.59 1.56 | (31.91, 37.27) | ||||||||||||||||||||

| 38 | 39.52 1.28 | (37.32, 41.73) | 3.73 0.01 | (3.72, 3.74) | 29.15 0.18 | (28.85, 29.45) | 34.41 1.63 | (31.61, 37.22) | ||||||||||||||||||||

| 37 | 39.50 1.31 | (37.26, 41.74) | 3.77 0.01 | (3.76, 3.77) | 29.13 0.18 | (28.81, 29.44) | 34.03 1.70 | (31.12, 36.94) | ||||||||||||||||||||

| 36 | 39.46 1.33 | (37.19, 41.73) | 3.76 0.01 | (3.75, 3.77) | 29.00 0.20 | (28.66, 29.35) | 33.91 1.70 | (30.99, 36.82) | ||||||||||||||||||||

| 35 | 39.25 1.30 | (37.03, 41.46) | 3.71 0.01 | (3.70, 3.73) | 28.73 0.25 | (28.31, 29.16) | 33.22 1.66 | (30.39, 36.06) | ||||||||||||||||||||

| 34 | 39.90 1.71 | (36.97, 42.82) | 3.74 0.01 | (3.73, 3.76) | 28.78 0.24 | (28.37, 29.19) | 32.88 1.73 | (29.93, 35.83) | ||||||||||||||||||||

| 33 | 39.94 1.70 | (37.04, 42.83) | 3.75 0.01 | (3.74, 3.76) | 28.81 0.22 | (28.44, 29.19) | 32.31 1.80 | (29.26, 35.37) | ||||||||||||||||||||

| 32 | 39.50 1.58 | (36.81, 42.18) | 3.68 0.01 | (3.67, 3.69) | 28.11 0.33 | (27.55, 28.66) | 31.87 1.75 | (28.89, 34.84) | ||||||||||||||||||||

| 31 | 39.32 1.54 | (36.70, 41.93) | 3.70 0.01 | (3.69, 3.71) | 28.00 0.32 | (27.44, 28.55) | 30.21 1.62 | (27.46, 32.96) | ||||||||||||||||||||

| 30 | 39.34 1.52 | (36.76, 41.93) | 3.72 0.01 | (3.71, 3.73) | 28.07 0.29 | (27.58, 28.57) | 29.92 1.55 | (27.29, 32.55) | ||||||||||||||||||||

| 29 | 38.83 1.50 | (36.29, 41.37) | 3.79 0.07 | (3.67, 3.91) | 27.35 0.45 | (26.58, 28.12) | 29.77 1.46 | (27.30, 32.25) | ||||||||||||||||||||

| 28 | 38.13 1.53 | (35.52, 40.73) | 3.76 0.08 | (3.63, 3.89) | 27.41 0.45 | (26.64, 28.18) | 28.62 1.42 | (26.20, 31.03) | ||||||||||||||||||||

| 27 | 38.40 1.33 | (36.13, 40.67) | 3.80 0.08 | (3.66, 3.93) | 27.38 0.46 | (26.59, 28.17) | 28.05 1.38 | (25.71, 30.39) | ||||||||||||||||||||

| 26 | 38.15 1.36 | (35.83, 40.48) | 3.90 0.08 | (3.76, 4.04) | 27.64 0.46 | (26.85, 28.43) | 27.75 1.46 | (25.27, 30.23) | ||||||||||||||||||||

| 25 | 37.66 1.44 | (35.21, 40.12) | 3.82 0.08 | (3.68, 3.97) | 27.60 0.47 | (26.79, 28.41) | 27.49 1.39 | (25.11, 29.86) | ||||||||||||||||||||

| 24 | 36.93 1.50 | (34.36, 39.50) | 3.87 0.09 | (3.72, 4.02) | 27.63 0.49 | (26.79, 28.47) | 27.09 1.35 | (24.79, 29.39) | ||||||||||||||||||||

| 23 | 36.23 1.59 | (33.52, 38.95) | 3.81 0.09 | (3.65, 3.97) | 27.11 0.51 | (26.24, 27.99) | 26.08 1.25 | (23.95, 28.22) | ||||||||||||||||||||

| 22 | 36.00 1.67 | (33.12, 38.87) | 3.76 0.10 | (3.59, 3.92) | 27.28 0.52 | (26.38, 28.18) | 25.63 1.20 | (23.57, 27.69) | ||||||||||||||||||||

| 21 | 36.05 1.72 | (33.10, 39.00) | 3.79 0.10 | (3.62, 3.97) | 27.22 0.55 | (26.28, 28.15) | 25.36 1.24 | (23.22, 27.50) | ||||||||||||||||||||

| 20 | 36.54 1.58 | (33.82, 39.26) | 3.82 0.11 | (3.64, 4.01) | 27.09 0.57 | (26.11, 28.07) | 24.85 1.25 | (22.69, 27.00) | ||||||||||||||||||||

| 19 | 35.78 1.68 | (32.88, 38.68) | 3.78 0.11 | (3.59, 3.98) | 26.60 0.59 | (25.57, 27.62) | 23.54 1.14 | (21.57, 25.51) | ||||||||||||||||||||

| 18 | 35.49 1.83 | (32.32, 38.66) | 3.76 0.12 | (3.56, 3.97) | 26.25 0.63 | (25.17, 27.34) | 23.02 1.10 | (21.11, 24.93) | ||||||||||||||||||||

| 17 | 34.77 1.97 | (31.34, 38.20) | 3.83 0.13 | (3.61, 4.04) | 26.57 0.66 | (25.42, 27.72) | 23.10 1.14 | (21.12, 25.09) | ||||||||||||||||||||

| 16 | 34.99 2.06 | (31.40, 38.58) | 3.79 0.13 | (3.56, 4.02) | 26.00 0.69 | (24.79, 27.21) | 21.86 1.15 | (19.85, 23.88) | ||||||||||||||||||||

| 15 | 34.13 2.21 | (30.26, 38.00) | 3.77 0.14 | (3.52, 4.02) | 25.54 0.72 | (24.28, 26.81) | 21.77 1.15 | (19.76, 23.78) | ||||||||||||||||||||

| 14 | 33.70 2.43 | (29.41, 37.98) | 3.85 0.15 | (3.58, 4.12) | 25.80 0.77 | (24.44, 27.16) | 20.87 1.24 | (18.69, 23.05) | ||||||||||||||||||||

| 13 | 33.73 2.65 | (29.03, 38.43) | 3.89 0.16 | (3.60, 4.17) | 25.51 0.82 | (24.06, 26.97) | 20.20 1.29 | (17.91, 22.49) | ||||||||||||||||||||

| 12 | 32.97 2.91 | (27.78, 38.16) | 3.91 0.18 | (3.60, 4.22) | 25.63 0.90 | (24.03, 27.23) | 20.00 1.32 | (17.64, 22.36) | ||||||||||||||||||||

| 11 | 31.22 2.87 | (26.06, 36.37) | 3.84 0.19 | (3.50, 4.18) | 24.28 0.94 | (22.60, 25.96) | 18.86 1.51 | (16.15, 21.58) | ||||||||||||||||||||

| 10 | 30.92 3.22 | (25.08, 36.76) | 3.90 0.21 | (3.53, 4.28) | 23.99 1.01 | (22.17, 25.82) | 17.44 1.59 | (14.55, 20.32) | ||||||||||||||||||||

| 9 | 29.22 3.33 | (23.12, 35.32) | 3.99 0.23 | (3.57, 4.41) | 24.36 1.12 | (22.31, 26.41) | 17.06 1.92 | (13.54, 20.57) | ||||||||||||||||||||

| 8 | 27.93 3.66 | (21.13, 34.73) | 3.96 0.25 | (3.48, 4.43) | 23.27 1.20 | (21.03, 25.51) | 15.62 1.89 | (12.10, 19.13) | ||||||||||||||||||||

| 7 | 29.16 3.80 | (21.96, 36.35) | 4.10 0.29 | (3.56, 4.64) | 23.19 1.32 | (20.68, 25.70) | 14.67 2.12 | (10.64, 18.69) | ||||||||||||||||||||

| 6 | 28.40 4.45 | (19.76, 37.05) | 4.09 0.33 | (3.45, 4.73) | 22.57 1.52 | (19.62, 25.51) | 13.24 2.41 | (8.55, 17.94) | ||||||||||||||||||||

| 5 | 30.34 4.62 | (21.04, 39.64) | 4.10 0.38 | (3.33, 4.87) | 21.08 1.63 | (17.79, 24.37) | 11.06 2.40 | (6.22, 15.90) | ||||||||||||||||||||

| 4 | 32.83 4.62 | (22.98, 42.68) | 4.19 0.46 | (3.20, 5.17) | 19.93 1.65 | (16.41, 23.44) | 8.79 2.35 | (3.78, 13.80) | ||||||||||||||||||||

| 3 | 34.63 5.46 | (21.78, 47.48) | 4.31 0.58 | (2.95, 5.67) | 13.98 0.54 | (12.72, 15.24) | 5.53 0.40 | (4.59, 6.48) | ||||||||||||||||||||

| 2 | 36.79 7.09 | (16.09, 57.48) | 4.44 0.77 | (2.19, 6.70) | 14.86 0.59 | (13.15, 16.57) | 4.99 0.23 | (4.32, 5.65) | ||||||||||||||||||||

| 1 | 42.34 7.54 | (-5.29, 89.97) | 4.88 1.16 | (-2.47, 12.22) | 15.43 0.88 | (9.88, 20.97) | 4.23 0.07 | (3.78, 4.69) | ||||||||||||||||||||

| 0 | 34.71 0.00 | (nan, nan) | 6.03 0.00 | (nan, nan) | 6.93 0.00 | (nan, nan) | 3.72 0.00 | (nan, nan) | ||||||||||||||||||||

The confidence margin is calculated as we use a RUL sequence with overlapping values. These overlapping sequences can give a confidence-based estimate of the RUL. A point estimate, on the other hand can be misleading if the model is not perfect. As we can see from the Table II, TFBEST produces near perfect point estimates with lower margin-of-error compared to the rest of the models.

VII Discussion

We propose TFBEST, a novel transformer architecture for prediction of of hard-drive failures and highlight its advantages over the vanilla Transformer and DAST. To begin with, existing deep learning-based RUL prediction approaches rely heavily on the RNN/CNN architecture. While the use of LSTM has provided very good results in previous works, most architectures have to depend on careful feature engineering to extract the useful S.M.A.R.T. features. In contrast to preceding architectures, our solution is based on the Transformer architecture, which relies solely on the self-attention mechanism to analyze S.M.A.R.T. features. Our architecture is also superior to the DAST architecture by incorporating time-step positions via a learnable encoding technique. This has improved performance of predictions significantly.

We also use a new confidence-margin interval to provide a more reliable RUL estimate. RUL predictions can be very far from real truth if we only rely on a single output from the model. It is also more useful to predict a range of RUL for a particular log so that the datacenter can evaluate when to change the drive in a timeframe. Compared to previous literature, where either deep-learning models are used as a binary classifier or predicting a single value, our metric is inspired by confidence around failure. We rely on RUL sequences which create an overlap across 60 days by a rolling window. Experimental studies with the above RUL prediction method validate the advantage of our method and model.

VIII Conclusion and Future Work

We propose TFBEST, a novel transformer architecture for HDD RUL estimation. TFBEST uses a learnable positional encoding based on LSTMs and the self-attention mechanism to process the whole sequence of CBM data. It uses a sensor encoder and a time step encoder to simultaneously record the weighted characteristics of thee data. The TFBEST model adaptively learns the significance of various sensors and time steps without the requirement for feature selection through attention mechanism. The performance of our method for RUL prediction is better than state-of-the-art deep RUL prediction methods, according to experimental findings on actual hard-drive health data spanning 10 years. We also propose a new statistic - a confidence margin which produces a point estimate, error-margin and confidence interval that gives a range of RUL values during which the hard-drive may fail. In a future study, we intend to train and understand the transformer’s performance on other manufacturers. Another potential direction we want to explore is to make the transformer more resilient against cyber attacks. Deep learning models are vulnerable to adversarial attacks such as data poisoning attacks or tampering of weights. Architectural hardening is one way to make these models robust against such adversaries.

Acknowledgment

The research reported in this publication was supported by the Division of Research and Innovation at San José State University under Award Number 23-UGA-08-044. The content is solely the responsibility of the author(s) and does not necessarily represent the official views of San José State University.

References

- [1] S. Basak, S. Sengupta, S.-J. Wen, and A. Dubey, “Spatio-temporal ai inference engine for estimating hard disk reliability,” Pervasive and Mobile Computing, vol. 70, p. 101283, 2021. [Online]. Available: https://doi.org/10.1016/j.pmcj.2020.101283

- [2] B. D. Strom, S. Lee, G. W. Tyndall, and A. Khurshudov, “Hard disk drive reliability modeling and failure prediction,” IEEE Transactions on Magnetics, vol. 43, no. 9, pp. 3676–3684, 2007.

- [3] N. Aussel, S. Jaulin, G. Gandon, Y. Petetin, E. Fazli, and S. Chabridon, “Predictive models of hard drive failures based on operational data,” in 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), 2017, pp. 619–625.

- [4] Y. Zhao, X. Liu, S. Gan, and W. Zheng, “Predicting disk failures with hmm- and hsmm-based approaches,” in Advances in Data Mining. Applications and Theoretical Aspects, P. Perner, Ed. Berlin, Heidelberg: Springer Berlin Heidelberg, 2010, pp. 390–404.

- [5] S. Basak, S. Sengupta, and A. Dubey, “Mechanisms for integrated feature normalization and remaining useful life estimation using lstms applied to hard-disks,” in 2019 IEEE International Conference on Smart Computing (SMARTCOMP), 2019, pp. 208–216.

- [6] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. u. Kaiser, and I. Polosukhin, “Attention is all you need,” in Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, Eds., vol. 30. Curran Associates, Inc., 2017. [Online]. Available: https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

- [7] D. Bahdanau, K. Cho, and Y. Bengio, “Neural machine translation by jointly learning to align and translate,” 2016.

- [8] Z. Zhang, W. Song, and Q. Li, “Dual-aspect self-attention based on transformer for remaining useful life prediction,” IEEE Transactions on Instrumentation and Measurement, vol. 71, pp. 1–11, 2022. [Online]. Available: https://doi.org/10.1109%2Ftim.2022.3160561

- [9] “Hard Drive Test Data — backblaze.com,” https://www.backblaze.com/cloud-storage/resources/hard-drive-test-data, [Accessed 04-09-2023].

- [10] R. Mohapatra, A. Coursey, and S. Sengupta, “Large-scale end-of-life prediction of hard disks in distributed datacenters,” in 2023 IEEE International Conference on Smart Computing (SMARTCOMP), 2023, pp. 261–266.

- [11] S. Lu, B. Luo, T. Patel, Y. Yao, D. Tiwari, and W. Shi, “Making disk failure predictions SMARTer!” in 18th USENIX Conference on File and Storage Technologies (FAST 20). Santa Clara, CA: USENIX Association, Feb. 2020, pp. 151–167. [Online]. Available: https://www.usenix.org/conference/fast20/presentation/lu

- [12] F. D. d. S. Lima, G. M. R. Amaral, L. G. d. M. Leite, J. P. P. Gomes, and J. d. C. Machado, “Predicting failures in hard drives with lstm networks,” in 2017 Brazilian Conference on Intelligent Systems (BRACIS), 2017, pp. 222–227. [Online]. Available: https://doi.org/10.1109/BRACIS.2017.72

- [13] A. Coursey, G. Nath, S. Prabhu, and S. Sengupta, “Remaining useful life estimation of hard disk drives using bidirectional lstm networks,” in 2021 IEEE International Conference on Big Data (Big Data). Los Alamitos, CA, USA: IEEE Computer Society, dec 2021, pp. 4832–4841. [Online]. Available: https://doi.ieeecomputersociety.org/10.1109/BigData52589.2021.9671605

- [14] M. Zhang, W. Ge, R. Tang, and P. Liu, “Hard disk failure prediction based on blending ensemble learning,” Applied Sciences, vol. 13, no. 5, 2023. [Online]. Available: https://www.mdpi.com/2076-3417/13/5/3288

- [15] S. Li, X. Jin, Y. Xuan, X. Zhou, W. Chen, Y.-X. Wang, and X. Yan, “Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting,” in Advances in Neural Information Processing Systems, H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, Eds., vol. 32. Curran Associates, Inc., 2019. [Online]. Available: https://proceedings.neurips.cc/paper_files/paper/2019/file/6775a0635c302542da2c32aa19d86be0-Paper.pdf

- [16] T. Zhou, Z. Ma, Q. Wen, X. Wang, L. Sun, and R. Jin, “FEDformer: Frequency enhanced decomposed transformer for long-term series forecasting,” in Proceedings of the 39th International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, K. Chaudhuri, S. Jegelka, L. Song, C. Szepesvari, G. Niu, and S. Sabato, Eds., vol. 162. PMLR, 17–23 Jul 2022, pp. 27 268–27 286. [Online]. Available: https://proceedings.mlr.press/v162/zhou22g.html

- [17] J. Xu, H. Wu, J. Wang, and M. Long, “Anomaly transformer: Time series anomaly detection with association discrepancy,” 2022.

- [18] S. Tuli, G. Casale, and N. R. Jennings, “Tranad: Deep transformer networks for anomaly detection in multivariate time series data,” Proc. VLDB Endow., vol. 15, no. 6, p. 1201–1214, feb 2022. [Online]. Available: https://doi.org/10.14778/3514061.3514067

- [19] Q. Wen, K. He, L. Sun, Y. Zhang, M. Ke, and H. Xu, “Robustperiod: Robust time-frequency mining for multiple periodicity detection,” in Proceedings of the 2021 International Conference on Management of Data, ser. SIGMOD ’21. New York, NY, USA: Association for Computing Machinery, 2021, p. 2328–2337. [Online]. Available: https://doi.org/10.1145/3448016.3452779

- [20] T. Lin, Y. Wang, X. Liu, and X. Qiu, “A survey of transformers,” AI Open, vol. 3, pp. 111–132, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2666651022000146

- [21] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of deep bidirectional transformers for language understanding,” in Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers). Minneapolis, Minnesota: Association for Computational Linguistics, Jun. 2019, pp. 4171–4186. [Online]. Available: https://aclanthology.org/N19-1423

- [22] A. Radford and K. Narasimhan, “Improving language understanding by generative pre-training,” 2018. [Online]. Available: https://api.semanticscholar.org/CorpusID:49313245

- [23] F. Lin, T. Wu, S. Wu, S. Tian, and G. Guo, “Feature selective transformer for semantic image segmentation,” 2022.

- [24] A. Saxena and K. Goebel, “Turbofan engine degradation simulation data set.” NASA Prognostics Data Repository, 2008, [Accessed 04-09-2023].

- [25] ——, “Phm08 challenge data set.” NASA Prognostics Data Repository, 2008, [Accessed 04-09-2023].

- [26] B. Lim, S. O. Arik, N. Loeff, and T. Pfister, “Temporal fusion transformers for interpretable multi-horizon time series forecasting,” 2020.

- [27] Q. Wen, T. Zhou, C. Zhang, W. Chen, Z. Ma, J. Yan, and L. Sun, “Transformers in time series: A survey,” 2023.

- [28] A. Klein, “Backblaze Drive Stats for Q1 2023 — backblaze.com,” https://www.backblaze.com/blog/backblaze-drive-stats-for-q1-2023/, [Accessed 02-09-2023].

- [29] N. M. Foumani, C. W. Tan, G. I. Webb, and M. Salehi, “Improving position encoding of transformers for multivariate time series classification,” 2023.

- [30] I. Sutskever, O. Vinyals, and Q. V. Le, “Sequence to sequence learning with neural networks,” in Advances in Neural Information Processing Systems, Z. Ghahramani, M. Welling, C. Cortes, N. Lawrence, and K. Weinberger, Eds., vol. 27. Curran Associates, Inc., 2014. [Online]. Available: https://proceedings.neurips.cc/paper/2014/file/a14ac55a4f27472c5d894ec1c3c743d2-Paper.pdf

- [31] P. Anantharaman, M. Qiao, and D. Jadav, “Large scale predictive analytics for hard disk remaining useful life estimation,” in 2018 IEEE International Congress on Big Data (BigData Congress), 2018, pp. 251–254. [Online]. Available: https://doi.org/10.1109/BigDataCongress.2018.00044