Testing Autonomous Systems with Believed Equivalence Refinement

Abstract

Continuous engineering of autonomous driving functions commonly requires deploying vehicles in road testing to obtain inputs that cause problematic decisions. Although the discovery leads to producing an improved system, it also challenges the foundation of testing using equivalence classes and the associated relative test coverage criterion. In this paper, we propose believed equivalence, where the establishment of an equivalence class is initially based on expert belief and is subject to a set of available test cases having a consistent valuation. Upon a newly encountered test case that breaks the consistency, one may need to refine the established categorization in order to split the originally believed equivalence into two. Finally, we focus on modules implemented using deep neural networks where every category partitions an input over the real domain. We present both analytical and lazy methods to suggest the refinement. The concept is demonstrated in analyzing multiple autonomous driving modules, indicating the potential of our proposed approach.

1 Introduction

Mainstream approaches for engineering autonomous vehicles follow a paradigm of continuous improvement. It is widely conceived in industry that unconsidered engineering issues such as edge cases may appear when validating the autonomous vehicles on road with sufficiently many miles. The autonomy function, upon a newly encountered edge case, will be evaluated against the impact and function modification may be performed. While discovering the previously unconsidered issues leads to an improvement of the engineered system, it also challenges the previously stated assumptions being established in design and in test time.

In this paper, we formally address the impact of newly discovered issues over the previously stated test-completeness claims based on equivalence classes and test coverage. Intuitively, equivalence classes are situations where the system demonstrates similar behavior, and test coverage is a relative completeness measure to argue that the testing efforts have covered the entire input space with sufficient diversity. While the concept of equivalence class may be straightforward in theory, to apply it in complex autonomous driving settings, we mathematically formulate a revision called believed equivalence class. The initial categorization used to build equivalence classes is initially based on expert judgements (e.g., all images having sunny weather and straight roads form a believed equivalence class for front-vehicle detection, as experts think that a front vehicle will be detected) and is subject to available test cases demonstrating similar behavior. So long as any newly discovered test cases in the same class violate the behavior similarity (e.g., on sunny weather but the straight road is covered with snow, the perception will fail to detect a white vehicle in the front), there is a need to refine the believed equivalence class. Regarding the impact of refinement over test coverage, while the number of equivalence classes is known to be exponential (aka combinatorial explosion), under the restricted setting of achieving full coverage using -way combinatorial testing [16], the maximum number of additional test cases required to return to full coverage (due to refinement) is bounded by a polynomial with degree.

Finally, we look into the testing of functions implemented using deep neural networks (DNNs). When the equivalence class is based on partitioning the input domain over real numbers, guiding the construction of refined equivalence classes can utilize the known approach of adversarial perturbation [20, 15]. This avoids creating a believed equivalence class that only contains a single point. For deep neural networks, we prove that finding an optimal cut can be encoded using mixed-integer linear programming (MILP). Due to the scalability considerations of purely analytical methods, we also utilize the concept of -nearest neighbors among the existing test data to guide the perturbed direction, in order to find feasible cuts that at least consistently separate points along all perturbed directions.

To demonstrate the validity of the approach, we have applied the technique on DNN-based modules for trajectory following and adaptive cruise control in autonomous driving. Our research prototype continuously refines the believed equivalence upon newly encountered test cases that lead to inconsistency. Altogether our proposal (equivalence classes based on currently available test cases) provides a rigorous framework to understand better the concept of continuous testing and its impact on the completeness of verification of autonomous systems using test coverage.

The rest of the paper is structured as follows. After Section 2 comparing our results with existing work, Section 3 formulates the concept of believed equivalence, coverage, and the refinement of believed equivalence. Section 4 discusses how believed equivalence can be applied when the module under test is a deep neural network. Finally, we summarize our evaluation in Section 5 and conclude in Section 6.

2 Related Work

The concept of equivalence class has been widely used in analyzing complex systems. For example, region construction used in timed automata [3] establishes equivalence classes over infinitely many states regarding the ordering of clock variables reaching integer values. This (finite number of regions) serves as a basis of decidability results for verifying properties of timed automata. State-of-the-art guidelines for safety engineering autonomous driving systems such as ISO TR 4804 [2]111An early version (aka SaFAD) drafted by multiple automotive manufacturers and suppliers is publicly available at https://www.daimler.com/documents/innovation/other/safety-first-for-automated-driving.pdf admit the need for continuous engineering; ISO TR 4804 also explicitly mentions equivalence class as a method to avoid excessive testing efforts. Nevertheless, it is not mathematically defined and lacks formal guarantees compared to the classical equivalence class concept. Finally, there are proposals such as micro-ODD [11] in the safety engineering domain to fine-grain the allowed driving conditions. Still, these proposals lack a formal treatment in terms of an equivalence class. All the above results provide a strong motivation to our work: Our formulated believed equivalence class only demonstrates equivalent behavior on the currently available test cases and may be subject to refinement in a continuous engineering paradigm.

For testing DNN-based autonomous systems, researchers proposed structural coverage metrics to achieve a certain level of “relative completeness” [17, 14, 19]. Still, recent results have indicated their limited applicability [13]. Utilizing scenario description languages [9, 1], one can naturally partition the input space for testing [5, 12], but there is no guarantee that all inputs within the partition demonstrate similar behavior. Therefore, one may only process a weaker guarantee (equivalence by currently available test cases), as reflected by our proposal.

Finally, for deep neural networks, our construction of refining the believed equivalence integrates the idea of local robustness (see [10, 18, 7] for recent surveys) as used in deep learning. Due to the scalability issues related to exact analytical methods such as MILP, it is realistic to generate a believed equivalence class that contains local robustness region within a reasonable time budget; one can perform refinement upon newly encountered test cases. Our adoption of -nearest neighbors serves for this purpose.

3 Believed Equivalence and Refinement

3.1 Believed Equivalence

Let be the function under analysis, where and are the input and the output domain respectively. Let be an evaluation function that assigns an input-output pair with a value in the evaluation domain . Let be the set of categories, where for , each category has a set of elements with the set size being . In our example, we may use to clearly indicate the element and its belonging category. Each element evaluates any input in the domain to true or false. We assume that for any input , for every there exists exactly one () such that , i.e., for each category, every input is associated to exactly one element.

In the following, we define believed equivalence over the test set. Intuitively, for any two data points in the test set, so long as their corresponding element information are the same, demonstrating believed equivalence requires that the results of the evaluation function are also the same.

Definition 1

Given a set of test cases and categories , function demonstrates believed equivalence over under the set and evaluation function , denoted as , if for any two test cases the following condition holds:

|

|

Consider the example illustrated in Figure 1. Given to be all possible pixel values in an image from the vehicle’s mounted camera, we build a highly simplified input categorization in the autonomous driving perception setup. Let , where Time = , Front-Vehicle = {exist, not-exist} indicates whether in the associated ground truth, there exists a vehicle in the front. Consider to be a DNN that performs front-vehicle detection. The first output of , denoted as , generates 1 for indicating “DETECTED” (a front vehicle is detected, as shown in Figure 1 with dashed rectangles) and 0 for “UNDETECTED”. Based on the input image, let be the function that checks if the detection result made by the DNN is consistent with the ground truth, i.e., iff .

As illustrated in Figure 1, let . For and , one can observe from Figure 1 that . Then demonstrates believed equivalence over under , provided that the following conditions also hold.

-

•

,

-

•

,

-

•

.

(Remark) It is possible to control the definition of such that demonstrating believed equivalence is independent of the computed value , thereby creating a partitioning of the input space and its associated properties (i.e., labels). For example, consider the following function. where iff . This evaluation function assigns every input with its associated element index for each category, thereby being independent of .

3.2 Coverage based on Combinatorial Testing

Test coverage can measure the degree that a system is tested against the provided test set. In the following lemma, we associate our formulation of believed equivalence with the combinatorial explosion problem of achieving full test coverage. Precisely, the size of the test set to demonstrate believed equivalence while completely covering all combinations as specified in the categories is exponential to the number of categories.

Lemma 1

Let . Provided that , the minimum size of to satisfy the below condition is larger or equal to .

| (1) |

Proof 1

Recall in Definition 1, requires any “two” test cases where , equals . Therefore, for Equation 1, if there exists only one element in each element combination (in Eq. 1), it still satisfies Definition 1. The number of all possible element combinations is . One needs to cover each element combination with one test case, and a test case satisfying one combination cannot satisfy another combination, due to our previously stated assumption that . Therefore the lemma holds. ∎

As the number of categories can easily achieve , even when each category has only elements, achieving full coverage using the condition in Eq. 1 requires at least different test cases; this is in most applications unrealistic. Therefore, we consider asserting full coverage using -way combinatorial testing [16], which only requires the set of test cases to cover, for every pair () or triple () of categories, all possible combinations. The following lemma states the following: Provided that is a constant, the number of test cases to achieve full coverage can be bounded by a polynomial with being its highest degree.

Lemma 2 (Coverage using -way combinatorial testing)

Let . Provided that , the minimum size of to satisfy -way combinatorial testing, characterized by the below condition, is bounded by .

| (2) |

Proof 2

To satisfy Equation 2, the first universal term creates choices, where for each choice, the second universal term generates at most combinations. Therefore, the total number of combinations induced by two layers of universal quantification equals , and a careful selection of test cases in , with each combination covered by exactly one test case, leads to the size of bounded by . Finally, similar to Lemma 1, covering each combination with only one test case suffices to satisfy . Therefore the lemma holds. ∎

Figure 2 illustrates an example for coverage with -way combinatorial testing, where with each () having and accordingly. Let (i.e., excluding and ). Apart from demonstrating believed equivalence, also satisfies the condition as specified in Lemma 2. By arbitrary picking categories among , for instance and , all combinations are covered by elements in (; ; ; ).

3.3 Encountering

As the definition of believed equivalence is based on the test set , upon new test cases outside the test set, i.e., , the function under analysis may not demonstrate consistent behavior. We first present a formal definition on consistency.

Definition 2

A test case is consistent with the believed equivalence when the following condition holds for all test cases :

Otherwise, is inconsistent with the believed equivalence .

The following result show that, under a newly encountered consistent test case, one can add it to the existing test set while still demonstrating believed equivalence.

Lemma 3

If a test case is consistent with the believed equivalence , then .

Consider again the example in Figure 2. Provided that , one can observe that is consistent with (as for , there exists no test case from ), while is inconsistent (the evaluated result for is not the same as and ).

Encountering a new test case that generates inconsistency, there are two methods to resolve the inconsistency depending on whether is implemented correctly.

-

•

(When is wrong - function modification) Function modification refers to the change of to such that the evaluation over in’ turns consistent; it is used when is engineered wrongly. For the example illustrated in Figure 2, a modification of shall ensure that , , and have the same evaluated value (demonstrated using the same color).

-

•

(When is correct - refining the believed equivalence class) When is implemented correctly, it implies that our believed equivalence, based on the currently defined category , is too coarse. Therefore, it is necessary to refine such that the created believed equivalence separates the newly introduced test case from others. To ease understanding, consider again the example in Figure 1. When one encounters an image where the front vehicle is a white trailer truck, and under heavy snows, we envision that the front vehicle can be undetected. Therefore, the currently maintained categories shall be further modified to

-

–

differentiate images by snowy conditions (preferably via proposing a new category), and

-

–

differentiate images having front vehicles in white from front vehicles having non-white colors (preferably via refining an existing element in a category).

-

–

In the next section, we mathematically define the concept of refinement to cope with the above two types of changes in categories.

3.4 Believed Equivalence Refinement

Definition 3

Given and , a refinement-by-cut generates a new set of categories by first removing in , followed by adding two elements and to , where the following conditions hold.

-

•

For any test case such that , exclusively either or .

-

•

For any test case such that , and .

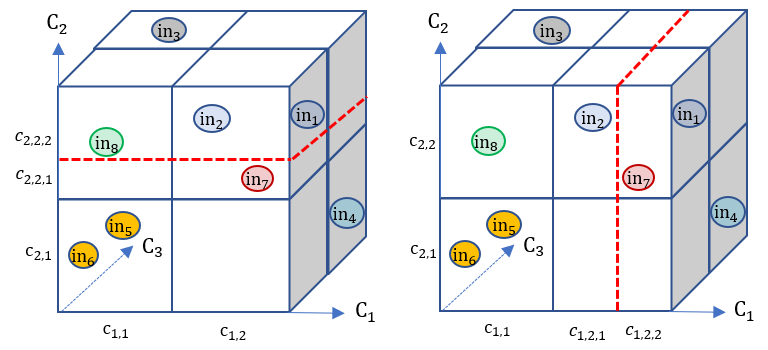

To ease understanding, Figure 3 shows the visualization for two such cuts.222Note that Figure 3, the cut using a plane is only for illustration purposes; the condition as specified in Definition 3 allows to form cuts in arbitrary shapes. The cut in the left refines to and , and the cut in the right refines to and . One can observe that the left cut can enable a refined believed equivalence, as the cut has separated from and . This brings us to the definition of effective refinement cuts.

Definition 4

Given , , where , and a new test case , a refinement-by-cut is effective over in’, if .

Apart from cuts, the second type of refinement is to add another category.

Definition 5

Given , and a new test case , an refinement-by-expansion generates a new set of categories by adding to , where with elements satisfying the following conditions.

-

•

For any test case , and .

-

•

and .

In contrast to refinement cuts where effectiveness is subject to how cuts are defined, the following lemma paraphrases that refinement-by-expansion is by-definition effective.

Lemma 4

Given , , where , and a new test case , a refinement-by-expansion is effective over in’, i.e., .

Proof 4

-

1.

By introducing the new category following the condition as specified in Definition 4, consider where , then as and . We can expand the first universal quantifier such that also holds. As , so .

- 2.

(Coverage under Refinement) Although introducing refinement over categories can make in’ consistent with , it may also make the previously stated coverage claim (using -way combinatorial testing) no longer valid. An example can be found in the left of Figure 3, where a full coverage -way combinatorial testing no longer holds due to the cut. By analyzing category pairs and , one realizes that achieving coverage requires an additional test case where and .

For -way combinatorial testing, in the worst case, the newly introduced element ( in the refinement-by-expansion case; or in the refinement-by-cut case) will only be covered by the newly encountered test case . As one element has been fixed, the number of additional test cases to be introduced to recover full coverage can be conservatively polynomially bounded by , where . Under the case of being a constant, the polynomial is one degree lower than the one as constrained in Lemma 2.

Finally, we omit technical formulations, but as refinement is operated based on the set of categories, it is possible to define the hierarchy of coverage accordingly utilizing the refinement concept. As a simple example, consider the element vehicle being refined to , and to reflect different types of vehicle and their different performance profile. The coverage on vehicle can be an aggregation over the computed coverage from three refined elements.

4 Refinement-by-cut for Inputs over Reals

4.1 Refinement-by-cut as Optimization

Finally, we address the special case where the refinement can be computed analytically. Precisely, we consider the input domain being , i.e., the system takes inputs over reals, where matches the size of the category set. We also consider that every category is characterized by ascending values , such that performs a simple partitioning over the -th input, i.e., for , iff . Figure 4 illustrates the idea. The above formulation fits well for analyzing systems such as deep neural networks where each input is commonly normalized to a value within the interval .

Using Definition 3, a refinement-by-cut implies to find a value such that (1) iff and (2) iff . Provided that being the newly encountered test case, we present one possible analytical approach for finding such a cut, via solving the following optimization problem:

| (3) |

subject to:

| (4) |

| (5) |

| (6) |

In the above stated optimization problem, apart from constraints as specified in Equation 4 which follows the standard definition as regulated in Definition 4 (illustrated in Figure 5a), we additionally impose two optional constraints Eq. 5 and Eq. 6.

-

•

First, we wish to regulate the size of the newly created element such that the new believed equivalence class generalizes better by keeping a minimal distance to the boundary (illustrated in Figure 5b).

-

•

Secondly, we also specify that all inputs within the -ball centered by in’ demonstrate consistent behavior, thereby demonstrating robustness via behavioral equivalence (illustrated in Figure 5c).

The following lemma states the condition where the optimization problem can be solved analytically using integer linear programming. For general cases, nonlinear programming is required.

Lemma 5

Let be a ReLU network and be a linear function over input and output of . Provided that performs a simple partitioning over the -th input, and every input is bounded by ( and ), the optimization problem as stated using Equation 3, 4, 5, and 6 can be encoded using mixed-integer linear programming (MILP).

Proof 5

Finding the maximum for constraint systems using Equation 3, 4, 5 can be trivially by finding the separation plane (illustrated in Figure 5b). Therefore, the key is to independently compute over constraints as specified in Equation 6, and the resulting is the minimum of the computed two. If is a ReLU network with all its inputs being bounded by closed intervals, it is well known that the as well as the local robustness condition can be encoded using MILP [6, 8, 4] due to the piecewise linear nature of ReLU nodes. As long as is a linear function, it remains valid to perform encoding using MILP. ∎

(Extensions) Here we omit technical details, but it is straightforward to extend the above constraint formulation to (1) integrate multiple cuts within the same element and to (2) allow multiple cuts over multiple input variables.

4.2 Using -nearest Neighbors to Generate Robust Refinement Proposals

Finally, as the local robustness condition in Equation 6 is computationally hard to be estimated for state-of-the-art neural networks, we consider an alternative proposal that checks robustness using the direction as suggested by -nearest neighbors. The concept is illustrated using Figure 5d, where one needs to find a cut when encountering in’. The method proceeds by searching for its closest neighbors , and (i.e., ), and builds three vectors pointing towards these neighbors. Then perturb in’ alongside the direction as suggested by these vectors using a fixed step size , until the evaluated value is no longer consistent (in Figure 5d; all rectangular points have consistent evaluated value like in’). Finally, decide the cut () by taking the minimum distance as reflected by perturbations using these three directions.

The generated cut may further subject to refinement (i.e., newly encountered test cases may lead to further inconsistency), but it can be viewed as a lazy approach where (1) we take only finite directions (in contract to local robustness that takes all directions) and (2) we take a fixed step size (in contract to local robustness that has an arbitrary step size). By doing so, the granularity of refinement shrinks on-the-fly when encountering new-yet-inconsistent test cases.

Integrating the above mindset, we present in Algorithm 1 lazy cut refinement for constructing believed equivalence from scratch. Line 3 builds an initial categorization for each variable by using the corresponding bound. We use the set to record all data points that have been considered in building the believed equivalence, where is initially set to empty (line 4). For every new data in to be considered, it is compared against all data points in ; if exists such that the believed equivalence is violated (line 7), then find the nearest neighbors in (line 8) and pick one dimension to perform cut by refinement (line 8 mimicking the process in Figure 5(d)). Whenever the generated cut proposal satisfies the distant constraint as specified in Equation 5 (line 12), the original is replaced by . Otherwise (lines 18-20), every proposed cut can not satisfy the distant constraint as specified in Equation 5 so the algorithm raises a warning (line 19). Finally, the data point in is inserted to , representing that it has been considered. The presentation of the algorithm is simplified to ease understanding; in implementation one can employ multiple optimization methods (e.g., use memory hashing to avoid running the inner loop at line 6 completely) to speed up the construction process.

5 Implementation and Evaluation

We have implemented the concept of believed equivalence and the associated refinement pipeline using Python, focusing on DNN-based modules. The tool searches for feasible cuts on new test cases that lead to inconsistency. As the goal is to demonstrate full automation of the technique, we applied the description in Section 4 such that each category matches exactly one input variable. Therefore, cuts can be applied to the domain of any input variable. In our implementation, we maintain a fixed ordering over categories/variables to be cut whenever refinement is triggered. For the evaluation in automated driving using public examples, we use the lane keeping assist (LKA) module333Details of the example is available at https://www.mathworks.com/help/reinforcement-learning/ug/imitate-mpc-controller-for-lane-keeping-assist.html and the adaptive cruise control (ACC) module444Details of the example is available at https://ch.mathworks.com/help/mpc/ug/adaptive-cruise-control-using-model-predictive-controller.html made publically available by Mathworks to demonstrate the process of refinement on its believed equivalences.

The LKA subsystem takes six inputs, including lateral velocity (LV), yaw angle rate (YAR), lateral deviation (LD), relative yaw angle (RYA), previous steering angle (PSA), and measured disturbance (MD) to decide the steering angle of the vehicle. We construct a DNN for the implementation of the lane keeping assist. The DNN contains three fully connected hidden layers and adopts ReLU as activation functions. The model is trained with the given dataset provided by Mathworks, and the accuracy reaches . The output of the DNN ranges from to , meaning that the steering angle should be between the degree of . We divide the range of its output into five classes in our evaluation function, indicating that the output (steering angle) can be one of the following classes: strong left, left turn, straight, right turn, or strong right. Initially, each category has one element being set as the range of the corresponding input. The categories are gradually refined using cuts when inconsistent test cases appear.

| test cases | LV | YAR | LD | RYA | PSA | MD |

|---|---|---|---|---|---|---|

| 0 | 1 | 1 | 1 | 1 | 1 | 1 |

| 1000 | 28 | 14 | 13 | 5 | 2 | 1 |

| 5000 | 29 | 16 | 13 | 10 | 4 | 2 |

| 10000 | 31 | 17 | 13 | 11 | 6 | 2 |

| 20000 | 32 | 19 | 14 | 12 | 9 | 3 |

| 30000 | 35 | 20 | 15 | 13 | 10 | 3 |

| 40000 | 39 | 21 | 18 | 13 | 12 | 3 |

| 50000 | 40 | 21 | 18 | 13 | 14 | 3 |

| 60000 | 41 | 22 | 18 | 14 | 14 | 3 |

| 70000 | 41 | 22 | 18 | 15 | 15 | 3 |

| 80000 | 41 | 22 | 18 | 15 | 16 | 3 |

Table I provides a trend summary on the number of cuts applied in each category, subject to the number of test cases being encountered. After the first test cases, LV has been refined to intervals, while YAR has been refined to intervals. The searching sequence for feasible cuts is from the first category to the last. From the table, we observe that after the number of explored test cases exceeds , the number of cuts increases slowly. The reason is that the test cases and their evaluated values diverse in the beginning, so the categories are frequently updated. However, with the increasing number of explored test cases, most of the new test cases are consistent with existing ones.

Note that the number of cuts in every category is affected by the ordering how cuts are applied. As the search always starts from the first category (LV), the number of cuts for LV is larger than those of the others. When we change the searching sequence and start from the last category to the first one (i.e., to start the cut from MD), the number of cuts after the first test cases becomes , , , , and , respectively. Though the number of cuts varies with different searching sequence, the number of cuts for the last category (MD) is more stable. The reason being that the number of different valuations of MD in the dataset is small.

The ACC module takes five inputs, including driver-set velocity (), time gap (), velocity of the ego car (), relative distance to the lead car (), and relative velocity to the lead car () to decide the acceleration of the ego car. The first two inputs are assigned with fixed valuations. By setting the range of initial velocities and positions for lead and ego cars, we obtain a set of inputs and outputs from the ACC module using model predictive control lateral velocity (LV). We then construct a DNN for the implementation of the module. The structure of the DNN is similar to that of LKA. The model is trained with the obtained set, and the accuracy reaches 98.0%. The output of the DNN ranges from to 2, for the physical limitation of the vehicle dynamics. We also divide the range of its output into five classes, indicating the velocity can be strong braking, slow braking, braking, slow accelerating, or strong accelerating. The categories of inputs are gradually refined when inconsistent test cases appear.

| test cases | |||

|---|---|---|---|

| 0 | 1 | 1 | 1 |

| 1000 | 221 | 27 | 1 |

| 2000 | 230 | 34 | 1 |

| 3000 | 261 | 43 | 3 |

| 4000 | 262 | 53 | 3 |

| 5000 | 284 | 55 | 4 |

| 6000 | 285 | 57 | 4 |

| 7000 | 289 | 58 | 5 |

| 8000 | 290 | 62 | 5 |

| 9000 | 305 | 67 | 5 |

| 10000 | 307 | 70 | 5 |

Table II lists the trend summary on the number of cuts applied in each category of three inputs (except for the first two), subject to the number of encountered test cases. The searching sequence of feasible cuts is from the first to the last. From the table, we observe that the number of cuts for the velocity of ego increases rapidly when the number of explored test cases is less than 1000. However, the number of cuts for the last input increases slowly. The reason is that the velocity of the ego and the relative distance between the ego and lead car may be the main factors to decide the acceleration valuation of the ego car.

The refined categories show that the granularity for most of the cuts is less than one for the three inputs. For input , the cut granularity is larger when the velocity of the lead car is faster than the ego car. We guess that the model is more sensitive when the velocities of the lead and ego cars are similar.

6 Concluding Remarks

In this paper, we considered the practical problem of using the equivalence class in testing autonomous vehicles. Starting with a set of categorizations, we realized that any combination is only an equivalence class “candidate” and may be further subject to refinement. We formulated this idea using believed equivalence, established its connection with coverage criterion using combinatorial testing, and discussed analytical solutions for finding a refinement using optimization. As guided by the newly discovered test cases, the refinement offers traceability on why such a believed equivalence is formed.

Currently, our initial evaluation is conducted using publicly available autonomous driving functions. We plan to integrate this technique in one of our research projects on automated valet parking to refine the process further and bring the concept into standardization bodies.

(Acknowledgement) This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 956123.

References

- [1] Measurable scenario description language (m-sdl). https://www.foretellix.com/open-language/, 2018.

- [2] Iso/tr 4804:2020 road vehicles — safety and cybersecurity for automated driving systems — design, verification and validation. https://www.iso.org/standard/80363.html, 2020.

- [3] R. Alur. Timed automata. In International Conference on Computer Aided Verification (CAV), pages 8–22. Springer, 1999.

- [4] R. Anderson, J. Huchette, W. Ma, C. Tjandraatmadja, and J. P. Vielma. Strong mixed-integer programming formulations for trained neural networks. Mathematical Programming, pages 1–37, 2020.

- [5] C.-H. Cheng, C.-H. Huang, and H. Yasuoka. Quantitative projection coverage for testing ML-enabled autonomous systems. In International Symposium on Automated Technology for Verification and Analysis (ATVA), pages 126–142. Springer, 2018.

- [6] C.-H. Cheng, G. Nührenberg, and H. Ruess. Maximum resilience of artificial neural networks. In International Symposium on Automated Technology for Verification and Analysis (ATVA), pages 251–268. Springer, 2017.

- [7] T. Dreossi, S. Ghosh, A. Sangiovanni-Vincentelli, and S. A. Seshia. A formalization of robustness for deep neural networks. arXiv preprint arXiv:1903.10033, 2019.

- [8] M. Fischetti and J. Jo. Deep neural networks and mixed integer linear optimization. Constraints, 23(3):296–309, 2018.

- [9] D. J. Fremont, T. Dreossi, S. Ghosh, X. Yue, A. L. Sangiovanni-Vincentelli, and S. A. Seshia. Scenic: a language for scenario specification and scene generation. In ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI), pages 63–78, 2019.

- [10] X. Huang, M. Kwiatkowska, S. Wang, and M. Wu. Safety verification of deep neural networks. In International Conference on Computer Aided Verification (CAV), pages 3–29. Springer, 2017.

- [11] P. Koopman, B. Osyk, and J. Weast. Autonomous vehicles meet the physical world: RSS, variability, uncertainty, and proving safety. In International Conference on Computer Safety, Reliability, and Security (SAFECOMP), pages 245–253. Springer, 2019.

- [12] Y. Li, J. Tao, and F. Wotawa. Ontology-based test generation for automated and autonomous driving functions. Information and Software Technology, 117:106200, 2020.

- [13] Z. Li, X. Ma, C. Xu, and C. Cao. Structural coverage criteria for neural networks could be misleading. In IEEE/ACM International Conference on Software Engineering: New Ideas and Emerging Results (ICSE-NIER), pages 89–92. IEEE, 2019.

- [14] L. Ma, F. Juefei-Xu, F. Zhang, J. Sun, M. Xue, B. Li, C. Chen, T. Su, L. Li, Y. Liu, et al. Deepgauge: Multi-granularity testing criteria for deep learning systems. In ACM/IEEE International Conference on Automated Software Engineering (ASE), pages 120–131. ACM, 2018.

- [15] S.-M. Moosavi-Dezfooli, A. Fawzi, O. Fawzi, and P. Frossard. Universal adversarial perturbations. In IEEE conference on computer vision and pattern recognition (CVPR), pages 1765–1773, 2017.

- [16] C. Nie and H. Leung. A survey of combinatorial testing. ACM Computing Surveys (CSUR), 43(2):1–29, 2011.

- [17] K. Pei, Y. Cao, J. Yang, and S. Jana. Deepxplore: Automated whitebox testing of deep learning systems. In ACM Symposium on Operating Systems Principles (SOSP), pages 1–18, 2017.

- [18] S. A. Seshia, A. Desai, T. Dreossi, D. J. Fremont, S. Ghosh, E. Kim, S. Shivakumar, M. Vazquez-Chanlatte, and X. Yue. Formal specification for deep neural networks. In International Symposium on Automated Technology for Verification and Analysis (AVTA), pages 20–34. Springer, 2018.

- [19] Y. Sun, M. Wu, W. Ruan, X. Huang, M. Kwiatkowska, and D. Kroening. Concolic testing for deep neural networks. In ACM/IEEE International Conference on Automated Software Engineering (ASE), pages 109–119. ACM, 2018.

- [20] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus. Intriguing properties of neural networks. In International Conference on Learning Representations (ICLR), 2014.