TempGNN: Temporal Graph Neural Networks for Dynamic Session-Based Recommendations

Abstract.

Session-based recommendations which predict the next action by understanding a user’s interaction behavior with items within a relatively short ongoing session have recently gained increasing popularity. Previous research has focused on capturing the dynamics of sequential dependencies from complicated item transitions in a session by means of recurrent neural networks, self-attention models, and recently, mostly graph neural networks. Despite the plethora of different models relying on the order of items in a session, few approaches have been proposed for dealing better with the temporal implications between interactions. We present Temporal Graph Neural Networks (TempGNN), a generic framework for capturing the structural and temporal dynamics in complex item transitions utilizing temporal embedding operators on nodes and edges on dynamic session graphs, represented as sequences of timed events. Extensive experimental results show the effectiveness and adaptability of the proposed method by plugging it into existing state-of-the-art models. Finally, TempGNN achieved state-of-the-art performance on two real-world e-commerce datasets.

1. Introduction

In the current era of information explosion, recommender systems have been widely adopted in most online e-commerce platforms, news portals, social media, etc. (Guy et al., 2010; Li et al., 2017b; Das et al., 2017; Hwangbo et al., 2018) by providing personalized suggestions, so that users can easily find the desired items. However, users’ personal information may not always be accessible in some application settings, such as when they browse websites anonymously without logging in. In such cases, only a large number of browsing event sequences from anonymous users can be used. For this, a session-based recommendation (SBR) has gained increasing attention as a method for predicting subsequent items that an anonymous user is likely to interact with given a sequence of previous items consumed in the ongoing session.

Recent SBR methods have attempted to model how user preferences change over the course of sequential interactions in a session based on deep learning techniques. Recurrent neural network (RNN)-based methods model the series of events in a session as a sequence (Hidasi et al., 2015). In addition, neighbor session information is utilized for augmenting the information on an ongoing session because the information from an ongoing session may be quite sparse (Wang et al., 2019). To determine the effectiveness of long sequences, an attention method has been used for identifying the relevance of each item in a session and capturing the user’s main intention (Liu et al., 2018; Li et al., 2017a). However, blindly feeding the entire series of events into either an RNN or attention-based model makes it difficult to understand the nuanced and intricate structure both within and across sessions, which results in inaccurate modeling. As the most utilized model in recent years, graph neural network (GNN)-based methods convert each session into a directed graph and calculate the degree of information flow between items during information propagation to generate item and session representations (Wu et al., 2019; Gupta et al., 2019; Pan et al., 2020a).

Existing methods achieve considerably high performance by identifying pairwise ordered item transition patterns. However, they ignore an important factor, that is, the temporal implications caused by the time difference between events in a session. Most methods assume that all historical interactions have the same importance as the user’s current choice, but this may not always be the case. Choices almost always have time-sensitive contexts. The selection of an item by a user is influenced by both short-term and current contexts. Even if it is a click event for the same item, its significance may change depending on when a user clicks on it. Therefore, considering ordered item transitions only without the temporal patterns of the item transition relations is suboptimal for accurately capturing the dynamic changes in user preference.

Recent efforts have been devoted to considering the temporal implications of events in a sequential recommendation field. (Li et al., 2020) models the time intervals between items in a sequence. (Zhou et al., 2018) bucketizes a time feature with an exponentially increasing time range. (Ye et al., 2020) jointly learns user interests and two typical temporal patterns: an absolute time pattern and a relative time pattern. However, such temporal encoding strategies have rarely been considered in SBRs, which restricts their ability to capture the significance of interactions at different times. We conjecture that there are two reasons for the difficulties in inferring temporal meaning from session data. Unlike sequential recommendations, as a session usually has a very short length with a short expiration period, the time differences between interactions in a session are very small. Moreover, because user information is not clearly provided for security reasons, it can be extracted only within the limited time of a session unit.

To tackle the aforementioned problems, we propose Temporal Graph Neural Networks (TempGNN), a generic framework for capturing the structural and temporal dynamics in complex item transitions on dynamic session graphs, represented as sequences of timed events. To facilitate GNNs, we propose a temporal embedding method for nodes and edges. The embedding on nodes models the time differences between the prediction time and timestamps of items, whereas the embedding on edges models the time differences between events in a session. In addition, the proposed temporal encoding approach considers time frequency to avoid biased learning towards a specific time range with high popularity. We also propose a novel method for combining temporal information with an item through a gate network, which allows the model to consider the degree of dependence of time and an item on each other. Extensive experimental results using two real-world e-commerce datasets show that our method outperforms various existing state-of-the-art models and this confirms the effectiveness and adaptability of the proposed temporal embedding.

2. Related Work

Session-Based Recommendations. A session is formally represented as a variable-length event sequence with clear boundaries (Wang et al., 2021). To build better-performing SBRs, a GNN has been widely adopted in recent years for modeling the complex item transitions within and across sessions by transforming a session into a graph structure. SR-GNN (Wu et al., 2019) adopted a gated graph neural network (GGNN) (Li et al., 2015) and an attention mechanism for predicting the next item in given a session. NISER+ (Gupta et al., 2019) extended SR-GNN to address popularity bias by introducing L2 normalization. SGNN-HN (Pan et al., 2020a) introduced a virtual node that considers unconnected items and a highway gate to reduce overfitting.

However, previous studies still do not perform well in comprehending complicated transition relationships because they do not consider the time differences between the transitions at all. Unlike other methods, our model can capture item transition patterns better by injecting temporal information from a session into GNNs.

Temporal Embeddings in Session-based Recommendations. TA-GNN (Guo et al., 2020) utilized time interval information between nodes by introducing time-aware adjacency matrices. This method assumes that two interactions are more relevant when the time interval between them is short. TiRec (Zhang et al., 2020) also modeled a session using a time interval. This method adopted a fixed set of sinusoid functions as a basis. KSTT (Zhang et al., 2021) introduced three types of time embedding methods that utilize the time difference between each behavior and the prediction time: time bucket embedding, time2vec (Kazemi et al., 2019), and Mercer time embedding (Xu et al., 2019). These multiple temporal embeddings were expected to capture different time patterns.

The above methods attempted to capture a user’s intention more accurately using either time intervals between interactions or time differences with regards to the prediction timing, whereas our model adopts both to maximize the effect.

Temporal Embeddings in Sequential Recommendations. Many attempts have been made to encode temporal information in Sequential Recommendations. PosRec (Qiu et al., 2021) fully exploited positional information through dual-positional encoding with position-aware GGNNs. TGSRec (Fan et al., 2021) unified sequential patterns and temporal collaborative signals based on Bochner’s theorem (Loomis, 2013). However, the small number of learnable vectors of these methods was insufficient to capture a large amount of time information. Thus, temporal embedding through bucketizing has been widely used. TiSASRec (Li et al., 2020) modeled the time intervals between items in a sequence. ATRank (Zhou et al., 2018) bucketized a time feature with an exponentially increasing time range. TASER (Ye et al., 2020) jointly learned user interests and two typical temporal patterns in absolute and relative times. However, learning with these methods could be skewed in favor of several buckets with high popularity, because the buckets are divided without considering their frequency of appearance.

In contrast, our time encoding approach additionally considers the time frequency, which ensures that each bucket has the same amount of temporal information to avoid biased learning. This is effective in preventing the overfitting of temporal embeddings, regardless of the distribution of time. In addition, we propose a novel method combining temporal information with an item through a gate network that adjusts each weight by considering the relationship between time and an item.

3. Problem Definition

A dynamic session-based recommendation predicts the next item based on an ongoing session taking into account the recommendation timing, as shown in Figure 1. In other words, the next click may change according to the predicted timing. We formulate this task as follows. Let denote all unique items in all sessions, where is the number of unique items. A session is a sequence of items and their timestamps, where and are the -th clicked item and timestamp in , and is the length of the session. Given a session and prediction timestamp , we aim to predict the next clicked item . Items with the highest top-K scores are recommended by estimating a probability vector corresponding to the relevance scores for the unique items.

4. Method

Our workflow consists of three main parts: graph construction, information propagation in a GNN, and attention and prediction, as shown in Figure 3. A detailed description of each process is given in the following sections.

4.1. Graph Construction

We construct a graph from each session to better capture user behavior. The vertices of the graph denote unique nodes from the combinations of items and times. Edges are obtained through the temporal embedding of edges. The dimensions of the embeddings can be set differently, but here they are all set to for convenience.

4.1.1. Item Embedding

Each unique item is mapped onto a trainable vector in . We apply normalization to each embedding during training and inference to reduce the effect of the popularity of items on the model because items with a high frequency during training have a high norm, whereas less popular items do not. There are detailed descriptions and experiments in (Gupta et al., 2019). Therefore, our model uses normalized item embedding as

| (1) |

4.1.2. Temporal Embedding for Nodes

We define temporal embedding for nodes (TN) as a feature of the difference between the prediction timing and click timestamp of each item in a session. This includes information on how much time information should be utilized in predictions, taking into consideration how long ago the interaction was.

Specifically, we first bucketize the time difference. This is a prerequisite for utilizing continuous temporal information, which is difficult to learn. If there are too few buckets, utilizing temporal information has little effect, whereas if the number of buckets is too high, there is a waste of memory with no performance boost. After preparing the appropriate number of buckets, we use a quantile function for the time differences to ensure that each bucket has the same amount of information. This is, of course, performed using the training data. Therefore, the time differences that are not observed during the training process belong to one of the buckets at both ends. After each TN is normalized, it passes through a leaky ReLU function for nonlinearity and a linear layer. In summary, we formulate the -th TN of a session as

| (2) | ||||

where and are learnable parameters, is a leaky ReLU function, means normalization, is a function used to obtain a specific bucket index of , and is a lookup function that takes one embedding vector corresponding to the index.

4.1.3. Temporal Node Aggregation

The nodes used in our GNN reflect the time information to items. Conventional models have used the addition of two embedding vectors as a combination method. The problem with this is that the same temporal information is reflected if the time buckets are the same, regardless of the type of item. However, the relationship between an item and time is more complex. In reality, the degree of sensitivity to time differs depending on the item, even if the temporal embedding is the same. To capture this complex relationship between an item and time, we propose a novel method for controlling the degree of reflection through a gate network when the two embeddings are aggregated, where the weight is calculated by considering the relationship as

| (3) | ||||

where is an element-wise multiplication, is a sigmoid function, denotes a concatenation, and and are trainable parameters.

4.1.4. Temporal Embedding for Edges

Our model exchanges information with neighboring nodes by considering their time intervals. This temporal information is very important when exchanging information they have. For example, a time difference between two nodes that is too long might mean they are not adjacent. In addition, a time interval that is too short could mean a miss click within a session. Therefore, we add temporal embedding for edges (TE) to consider temporal information during propagation between adjacent nodes.

We take the timestamp differences of interactions within a session in two directions: incoming and outgoing, and then feature them in the same way as TN in 4.1.2. The formula is

| (4) | ||||

where .

4.2. Information Propagation in a GNN

Many GNN-based models have been developed for SBRs. Our model advances the previous studies and is particularly based on SGNN-HN and NISER+ (Gupta et al., 2019; Pan et al., 2020a). In addition, we utilize temporal information as an additional feature, which can be applied in any recommendation model.

4.2.1. Star Node

A star node is a virtual node connected to all nodes in a graph with bidirectional edges. Non-adjacent nodes can also propagate information using the star node as an intermediate node (Pan et al., 2020a). At the same time, it has information that integrates the graph. It is updated like other nodes and initialized to the average value of all nodes in the graph as

| (5) |

where denotes the star node and is the number of nodes in the graph.

4.2.2. Message Passing

GNNs typically go through step message passing and neighbor aggregation in order to update a node (Li et al., 2015; Wu et al., 2020; Niepert et al., 2016). Unlike previous GNN-based methods, we designed this exchange of information by considering time intervals between nodes in the message passing phase. Subsequently, a GGNN is applied as a method for updating node information (Li et al., 2015; Wu et al., 2019).

Message passing and aggregation proceed in both directions for incoming and outgoing edges. Among them, we obtain an aggregated message for the -th node from the neighbors through the incoming edges as

| (6) | ||||

where and are trainable parameters for incoming message passing, is the set of incoming neighbors for the -th node, denotes the temporal embedding of the edge from to , and are learnable parameters, and the gate considers the characteristics of the two nodes and the time interval between them (i.e., an incoming edge) in order to adjust the transmitted information. The formula for outgoing message passing is similar to Equation 6 as

| (7) | ||||

where and are trainable parameters and is the set of outgoing neighbors for the -th node. Then, both directional messages are concatenated to update the node as

| (8) |

4.2.3. Updating a Node

Updating nodes proceeds by applying the aggregated message vector and star node. First, the message updates the previous information of a node with a gate as

| (9) | ||||

where , , and are learnable parameters, denotes the -th layer of the GNN, and is a hyperbolic tangent function. After the propagation of adjacent nodes, the star node is reflected in the update, which considers the overall information in the graph. A gate network helps how much information from the previous star node should be propagated as

| (10) | ||||

where is the star node of the previous layer in the GNN and denotes a scaling factor. A non-parametric mechanism is applied for efficient learning unlike SGNN-HN (Pan et al., 2020a). Then, the star node is also updated for continuous graph learning using a non-parametric attention mechanism (i.e., a scaled dot product) as

| (11) | ||||

where is the number of nodes in a graph and denotes a matrix that includes all nodes in the graph.

4.2.4. Highway Gate

This propagation proceeds iteratively with layers through adjacent and intermediate nodes, which consist of shared parameters. This allows the model to obtain more distant information over multiple propagations. However, the individuality of each node can be diluted if it is excessive. Thus, a highway gate (Pan et al., 2020a) is applied to take advantage of both as

| (12) | ||||

where denotes the final node after the highway gate, and are the node after the propagation of the -th layer and initial node, respectively, and and are trainable parameters.

4.3. Attention and Prediction

4.3.1. Obtaining a Preference

Nodes that have completed all propagations are transformed back to a session format as

| (13) |

where is the length of the session and denotes a node arranged in the original order of the sequence. A representation is obtained by reflecting all nodes in different proportions determined by a soft attention mechanism considering the last and overall information (i.e., a star node) as

| (14) | ||||

where , , and are learnable parameters, is the last node, and is the star node after layers. Because the last node could be a decisive clue for estimating a user’s next interaction, a preference vector is formulated as

| (15) |

where and are trainable parameters.

4.3.2. Prediction

We obtain the normalized probabilities for the next click by measuring the similarities between the preference and all candidate items. To solve the long-tail problem of a recommendation (Gupta et al., 2019), cosine similarity is applied as

| (16) |

where is the -th item embedding and , an element of the vector , denotes the similarity between the -th item and a user preference. Then, the similarity vector is normalized by a scaled softmax function, which addresses the convergence problem (Gupta et al., 2019; Pan et al., 2020a) as

| (17) |

where is a scaling factor, is the number of candidate items, and denotes the probability that the next click of a user is the -th candidate item. Then, the items with the highest top-K probabilities in are recommended.

4.3.3. Objective Function

We adopt a cross-entropy loss function as an objective function for the probabilities. Our model is trained by minimizing the loss, which is formulated as

| (18) |

where is a target that indicates whether the next click is the -th item or not. In other words, is a one-hot vector corresponding to the candidate items.

| Yoochoose 1/64 | Yoochoose 1/4 | Diginetica | |

|---|---|---|---|

| # of clicks | 557,248 | 8,326,407 | 982,961 |

| # of train sessions | 369,859 | 5,917,745 | 719,470 |

| # of test sessions | 55,898 | 55,898 | 60,858 |

| # of items | 17,745 | 30,470 | 43,097 |

| Avg. of session lengths | 6.16 | 5.71 | 5.13 |

| Yoochoose 1/64 | Yoochoose 1/4 | Diginetica | |||||||||||

| R@20 | M@20 | R@5 | M@5 | R@20 | M@20 | R@5 | M@5 | R@20 | M@20 | R@5 | M@5 | ||

| RNN-based | GRU4Rec | 62.03 | 23.34 | 37.04 | 20.74 | 67.63 | 27.32 | 42.69 | 24.71 | 34.25 | 9.45 | 14.71 | 7.58 |

| CSRM | 70.20 | 29.77 | 46.05 | 27.20 | 70.50 | 29.23 | 45.37 | 26.58 | 51.51 | 17.20 | 26.55 | 14.76 | |

| Attention-based | STAMP | 68.64 | 29.89 | 45.65 | 27.47 | 70.62 | 30.36 | 46.53 | 27.83 | 47.66 | 15.54 | 24.16 | 13.25 |

| SR-IEM | 70.86 | 31.59 | 47.95 | 29.16 | 71.02 | 30.49 | 46.69 | 27.92 | 51.70 | 17.14 | 26.46 | 14.66 | |

| GNN-based | SR-GNN | 70.38 | 30.71 | 47.08 | 28.26 | 71.39 | 30.96 | 47.07 | 28.40 | 51.46 | 17.54 | 26.94 | 15.11 |

| NISER+ | 71.36 | 31.91 | 48.21 | 29.46 | 72.74 | 32.09 | 48.82 | 29.55 | 54.39 | 19.20 | 29.15 | 16.70 | |

| SGNN-HN | 71.88 | 31.94 | 48.40 | 29.46 | 72.92 | 32.69 | 48.78 | 30.13 | 55.56 | 19.44 | 29.72 | 16.88 | |

| TempGNN | 72.60 | 33.58 | 48.88 | 31.09 | 73.52 | 34.19 | 49.62 | 31.67 | 56.08 | 19.96 | 30.25 | 17.39 | |

5. Experiments

In this section, we first describe the experimental settings, followed by four experimental results and analyses. All experiments were averaged over five replicates.

5.1. Experimental Settings

5.1.1. Datasets

-

•

Yoochoose was released by RecSys Challenge 2015111https://recsys.acm.org/recsys15/challenge/, and contains click streams from an e-commerce website from 6 months.

-

•

Diginetica was used as a challenge dataset for CIKM Cup 2016222https://competitions.codalab.org/competitions/11161. We only adopt the transaction data.

Our preprocessing of these datasets followed previous studies (Wu et al., 2019; Gupta et al., 2019; Pan et al., 2020a) for fairness. We filtered out sessions with only one item and items that occurred fewer than five times. The sessions were split for training and testing, where the last day of Yoochoose and the last week of Diginetica were used for testing. Items that were not included in the training set were excluded from the testing set. Finally, we split the sessions into several sub-sequences. Specifically, for a session , where denotes a pair of items and timestamps, we generated sub-sequences and the corresponding next interaction as for the training and testing sets. As Yoochoose is too large, we only utilized the recent 1/64 and 1/4 fractions of the training set, which are denoted as Yoochoose 1/64 and Yoochoose 1/4, respectively. Statistics for the three datasets are shown in Table 1.

5.1.2. Baselines

-

•

GRU4Rec (Hidasi et al., 2015) applied gated recurrent units (GRUs) to model sequential information in an SBR.

-

•

CSRM (Wang et al., 2019) employed GRUs to model sequential behavior with an attention mechanism and utilized neighbor sessions as auxiliary information.

-

•

STAMP (Liu et al., 2018) applied an attention mechanism to obtain the general preference.

-

•

SR-IEM (Pan et al., 2020b) utilized a modified self-attention mechanism to estimate item importance and recommended the next item based on the global preference and current interest.

-

•

SR-GNN (Wu et al., 2019) adopted GGNNs to obtain item embeddings and recommended by generating a session representation with an attention mechanism.

-

•

NISER+ (Gupta et al., 2019) extended SR-GNN by introducing normalization, positional embedding, and dropout.

-

•

SGNN-HN (Pan et al., 2020a) extended SR-GNN by introducing a highway gate to avoid overfitting and a star node, which is a virtual node connected with all nodes.

5.1.3. Evaluation Metrics

Following previous studies (Wu et al., 2019; Gupta et al., 2019; Pan et al., 2020a), we used the same evaluation metrics R@K (recall) and M@K (mean reciprocal rank), where K is 20 and 5. R@K represents the proportion of test instances that have the target items in the top-K recommended items. M@K is the average of the reciprocal ranks of the target items in the recommendation list.

5.1.4. Parameter Setup

We used the recent 10 items in a session to ensure fairness across all models. An Adam optimizer was adopted, where the initial learning rate is 0.001 with a decay factor of 0.1 for every 3 epochs, , and . In addition, the regularization rate was set to . The batch size was 100. All trainable parameters were initialized using a uniform distribution with a range of according to the dimension of each model. For our model, the dimension of the embeddings was set to 256, the scaling factor was 12, and the number of layers was 6. The numbers of buckets of TN and TE were 40 and 50, respectively. For the other settings of the baselines, we referred to the corresponding paper and official code.

5.2. Overall Performance

Overall performance comparison of the baselines on the three datasets was summarized in Table 2. This was measured using R@20, M@20, R@5, and M@5. First, if we analyze the difference in the performance of the datasets, it seems that Diginetica is more difficult to predict than Yoochoose. In addition, better performance is obtained when we use a larger training set for Yoochoose. However, the difference of 16 times the learning time and memory usage seems to be not as noticeable.

From among RNN-based models, CSRM outperforms GRU4Rec in all measures due to the addition of an attention mechanism to the GRU. It has better performance than STAMP, except for Yoochoose 1/4, for which both show similar results. Although SR-IEM based on a self-attention mechanism performs similarly to CSRM on Diginetica, it usually outperforms the previous models. SR-GNN, the first GNN-based method to be proposed, has similar performance to SR-IEM overall. NISER+, an extended version of SR-GNN, outperforms all previous results and shows a remarkable performance improvement, especially on Diginetica. SGNN-HN has the second rank performance in most of the measures in this experiment and shows the best performance among the baselines. However, our model outperforms the previous studies in all results. In particular, the improvements in terms of M@20 are notable, which is a measure that is difficult to improve compared to R@20 based on other results. Compared with SGNN-HN, the improvement rates of M@20 on the three datasets are 5.13%, 4.59%, and 2.67%, whereas those of R@20 are 1%, 0.82%, and 0.94%, respectively. Because mean reciprocal rank is a measure that considers the recommendation rank, we can recommend item lists with more sophisticated priorities by adding temporal information.

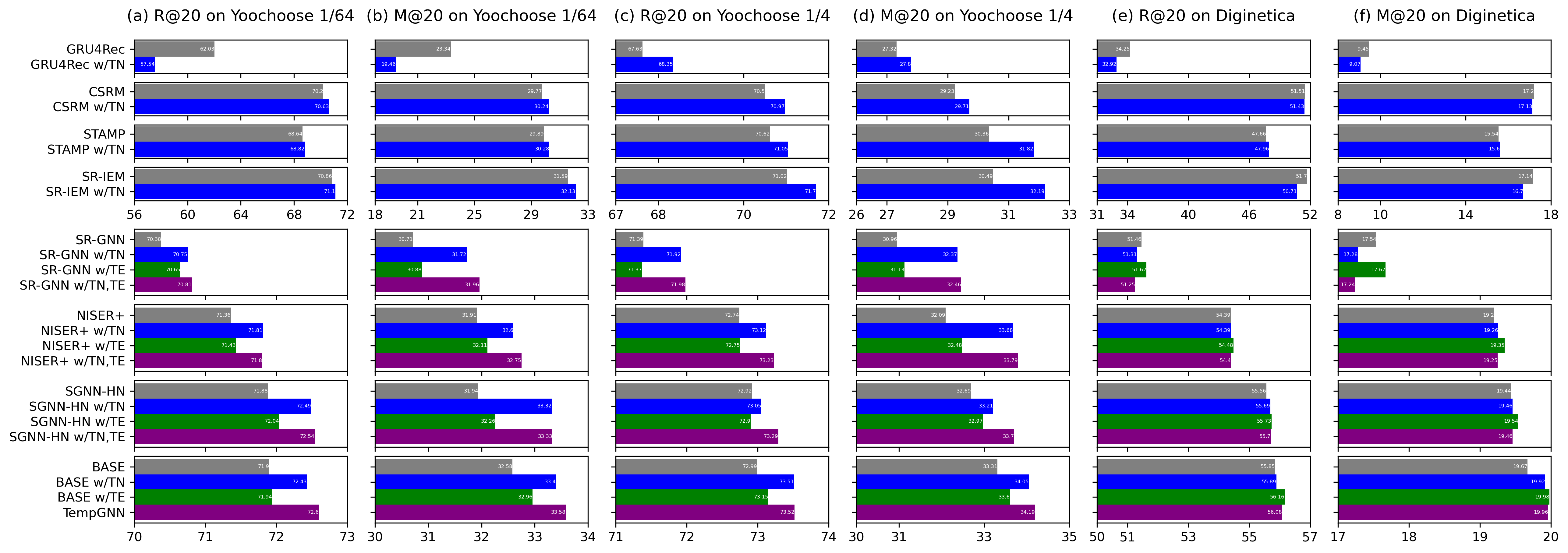

5.3. Models with Temporal Embeddings

The proposed temporal embedding method can easily be adopted in any SBR model. Figure 4 shows the results of utilizing TN, TE, and both together on our model and the baselines described above. Because GRU4Rec, CSRM, STAMP, and SR-IEM among the models are not GNN-based, only TN can be used. The results for the three datasets show different aspects as time information is added.

First, according to the result graphs for Yoochoose 1/64, TN induces an improvement in all models, except for GRU4Rec, which is a pure RNN-based model. This fairly large drop indicates that the model is not trained harmoniously with TN. In addition, although TE does not improve as much as TN, it improves the performance of all GNN-based models. Therefore, the inclusion of temporal information with TN and TE for Yoochoose 1/64 is a very important factor for recommendations.

The results for Yoochoose 1/4 are generally similar to those for Yoochoose 1/64, but even GRU4Rec shows a performance improvement with TN. It appears that a large amount of temporal information leads to improved performance. In addition, TN also shows better results in improvement than TE like the results for Yoochoose 1/64. Interestingly, looking at the results of SR-GNN, NISER+, and SGNN-HN, using only TE does little to improve the performance of R@20, but it helps improve M@20. This means that exploiting the time differences between interactions helps in more sophisticated predictions. In addition, even if TE alone cannot improve R@20, TE with TN yields better results. The results of using the two sets of temporal information together show better performance than those of using TN alone, leading to significant improvements.

The results for Diginetica are different from those for the other two datasets, where the temporal embeddings lead to high improvement rates. In most of the baselines, TN does not help improve, but rather seems to hinder efficient learning. In contrast, TE is helpful for improving all models, which means that the time intervals between interactions in Diginetica provide more clues for accurate predictions. Even the results of GNN-based models show that adding TE alone is better than using both temporal information together.

| Yoochoose 1/64 | Yoochoose 1/4 | Diginetica | ||||

| R@20 | M@20 | R@20 | M@20 | R@20 | M@20 | |

| Base | 71.90 | 32.58 | 72.99 | 33.31 | 55.85 | 19.67 |

| Position | 71.86 | 31.84 | 72.88 | 32.24 | 55.68 | 19.43 |

| Constant | 72.27 | 32.57 | 73.38 | 33.75 | 55.85 | 19.76 |

| Bucket | 72.49 | 32.93 | 73.42 | 33.46 | 55.94 | 19.83 |

| Q | 72.57 | 33.44 | 73.53 | 34.08 | 55.90 | 19.83 |

| Q+A | 72.56 | 33.50 | 73.47 | 34.07 | 56.00 | 19.82 |

| Q+G | 72.43 | 33.43 | 73.52 | 34.08 | 56.07 | 19.91 |

| Q+A+G | 72.60 | 33.58 | 73.52 | 34.19 | 56.08 | 19.96 |

5.4. Comparison of Temporal Embedding Methods

Table 3 shows the results of comparing methods using temporal information for an SBR. Base is a basic version that removes both temporal embeddings (i.e., TN and TE) from TempGNN. Position refers to the model in which positional embedding (Vaswani et al., 2017; Qiu et al., 2021) is added to Base. Because only the most recent 10 items are used in our experiment, a maximum of 10 position information can be used. Constant utilizes one learnable vector by multiplying the constant times, which are normalized between 0 and 1. This is similar to the method used in TGSRec (Fan et al., 2021) except for the periodicity. Bucket means that each bucket is split according to specific intervals after clipping both by 2% to prevent an outward range. This method has been adopted by many previous models (Zhou et al., 2018; Li et al., 2020; Ye et al., 2020), which do not consider time frequency, whereas our method splits the information into groups of equal size.

The performance after adding positional embedding shows a rather small decrease in the three datasets. The result of Constant shows a slight improvement overall. Although fewer trainable parameters are used compared with Position, the performance is rather improved. This means that clues time differences can provide are more helpful in predicting user behavior from a session than positional differences. The result of Bucket is better than that of Constant, except for M@20 on Yoochoose 1/4, and shows a fairly good improvement compared with Base. Q, which changes the method of allocating buckets from Bucket, shows a high improvement on Yoochoose 1/64 and 1/4, particularly for M@20. Adding an activation function (i.e., a leaky ReLU) results in little change from Q, shown as Q+A, whereas a gate network leads to an improvement on Diginetica, shown as Q+G. Finally, our model’s method, Q+A+G, has the best performance, except for R@20 on Yoochoose 1/4. Even so, it exhibits the second-best performance, which is almost equal to the best.

5.5. Number of Buckets

To maximize the use of time clues in session data, an appropriate number of buckets should be set. This depends on the characteristics of the dataset. If the number of buckets is set too small, the temporal information contained is insufficient. This is because even timestamps with different meanings can be classified into the same group. Conversely, if too many buckets are set, there is a waste of memory due to unnecessary splitting. Even if two timestamps belong to different groups, they would have shown no significant differences. Figure 5 shows the number of buckets suitable for TN and TE for the two datasets.

These graphs have three common characteristics. First, too few buckets (e.g., 1 or 2) may degrade the performance compared with a base version without any temporal embedding. This is because unnecessary clues are provided, which actually hinder effective learning. Second, a convergence pattern is exhibited as a certain number is exceeded. This indicates that preparing only a certain number of groups that distinguish times is sufficient. Looking at the converged performance, on Yoochoose 1/64, using TN leads to a meaningful improvement, and utilizing TE does so on Diginetica. Interestingly, both embeddings contribute to improving M@20, which implies that more sophisticated predictions are made. Finally, TN with 10-quantiles always shows higher performance than positional embedding represented by a gray dotted line in the graph. Even if the same number of parameters are used, the difference lies in how the buckets are allocated. Interactions with only one positional gap may be grouped into the same time bucket or there may be a large time difference. Our method shows that it can capture the subtle differences in user behavior that cannot be known by positions.

6. Conclusion

We introduced TempGNN, a generic framework for capturing the structural and temporal patterns in complex item transitions through temporal embedding operators on nodes and edges on dynamic session graphs represented as sequences of timed events. State-of-the-art results were obtained for several datasets. Extensive experimental results confirm that even if a session has relatively short-length interactions, the temporal relationship between items in the session and the prediction point are important factors in predicting the next item and can improve performance. Meanwhile, although our discrete buckets somewhat reflect continuous distribution over time, as shown in Figure 6, we plan to investigate how to fully capture this in future work.

References

- (1)

- Das et al. (2017) Debashis Das, Laxman Sahoo, and Sujoy Datta. 2017. A survey on recommendation system. International Journal of Computer Applications 160, 7 (2017).

- Fan et al. (2021) Ziwei Fan, Zhiwei Liu, Jiawei Zhang, Yun Xiong, Lei Zheng, and Philip S Yu. 2021. Continuous-time sequential recommendation with temporal graph collaborative transformer. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management. 433–442.

- Guo et al. (2020) Yupu Guo, Yanxiang Ling, and Honghui Chen. 2020. A time-aware graph neural network for session-based recommendation. IEEE Access 8 (2020), 167371–167382.

- Gupta et al. (2019) Priyanka Gupta, Diksha Garg, Pankaj Malhotra, Lovekesh Vig, and Gautam M Shroff. 2019. NISER: normalized item and session representations with graph neural networks. arXiv preprint arXiv:1909.04276 (2019).

- Guy et al. (2010) Ido Guy, Naama Zwerdling, Inbal Ronen, David Carmel, and Erel Uziel. 2010. Social media recommendation based on people and tags. In Proceedings of the 33rd international ACM SIGIR conference on Research and development in information retrieval. 194–201.

- Hidasi et al. (2015) Balázs Hidasi, Alexandros Karatzoglou, Linas Baltrunas, and Domonkos Tikk. 2015. Session-based recommendations with recurrent neural networks. arXiv preprint arXiv:1511.06939 (2015).

- Hwangbo et al. (2018) Hyunwoo Hwangbo, Yang Sok Kim, and Kyung Jin Cha. 2018. Recommendation system development for fashion retail e-commerce. Electronic Commerce Research and Applications 28 (2018), 94–101.

- Kazemi et al. (2019) Seyed Mehran Kazemi, Rishab Goel, Sepehr Eghbali, Janahan Ramanan, Jaspreet Sahota, Sanjay Thakur, Stella Wu, Cathal Smyth, Pascal Poupart, and Marcus Brubaker. 2019. Time2vec: Learning a vector representation of time. arXiv preprint arXiv:1907.05321 (2019).

- Li et al. (2017a) Jing Li, Pengjie Ren, Zhumin Chen, Zhaochun Ren, Tao Lian, and Jun Ma. 2017a. Neural attentive session-based recommendation. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management. 1419–1428.

- Li et al. (2020) Jiacheng Li, Yujie Wang, and Julian McAuley. 2020. Time interval aware self-attention for sequential recommendation. In Proceedings of the 13th international conference on web search and data mining. 322–330.

- Li et al. (2017b) Weimin Li, Zhengbo Ye, Minjun Xin, and Qun Jin. 2017b. Social recommendation based on trust and influence in SNS environments. Multimedia Tools and Applications 76, 9 (2017), 11585–11602.

- Li et al. (2015) Yujia Li, Daniel Tarlow, Marc Brockschmidt, and Richard Zemel. 2015. Gated graph sequence neural networks. arXiv preprint arXiv:1511.05493 (2015).

- Liu et al. (2018) Qiao Liu, Yifu Zeng, Refuoe Mokhosi, and Haibin Zhang. 2018. STAMP: short-term attention/memory priority model for session-based recommendation. In Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery & data mining. 1831–1839.

- Loomis (2013) Lynn H Loomis. 2013. Introduction to abstract harmonic analysis. Courier Corporation.

- Niepert et al. (2016) Mathias Niepert, Mohamed Ahmed, and Konstantin Kutzkov. 2016. Learning convolutional neural networks for graphs. In International conference on machine learning. PMLR, 2014–2023.

- Pan et al. (2020a) Zhiqiang Pan, Fei Cai, Wanyu Chen, Honghui Chen, and Maarten de Rijke. 2020a. Star graph neural networks for session-based recommendation. In Proceedings of the 29th ACM international conference on information & knowledge management. 1195–1204.

- Pan et al. (2020b) Zhiqiang Pan, Fei Cai, Yanxiang Ling, and Maarten de Rijke. 2020b. Rethinking item importance in session-based recommendation. In Proceedings of the 43rd International ACM SIGIR conference on research and development in Information Retrieval. 1837–1840.

- Qiu et al. (2021) Ruihong Qiu, Zi Huang, Tong Chen, and Hongzhi Yin. 2021. Exploiting positional information for session-based recommendation. ACM Transactions on Information Systems (TOIS) 40, 2 (2021), 1–24.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in neural information processing systems 30 (2017).

- Wang et al. (2019) Meirui Wang, Pengjie Ren, Lei Mei, Zhumin Chen, Jun Ma, and Maarten de Rijke. 2019. A collaborative session-based recommendation approach with parallel memory modules. In Proceedings of the 42nd international ACM SIGIR conference on research and development in information retrieval. 345–354.

- Wang et al. (2021) Shoujin Wang, Longbing Cao, Yan Wang, Quan Z Sheng, Mehmet A Orgun, and Defu Lian. 2021. A survey on session-based recommender systems. ACM Computing Surveys (CSUR) 54, 7 (2021), 1–38.

- Wu et al. (2019) Shu Wu, Yuyuan Tang, Yanqiao Zhu, Liang Wang, Xing Xie, and Tieniu Tan. 2019. Session-based recommendation with graph neural networks. In Proceedings of the AAAI conference on artificial intelligence, Vol. 33. 346–353.

- Wu et al. (2020) Zonghan Wu, Shirui Pan, Fengwen Chen, Guodong Long, Chengqi Zhang, and S Yu Philip. 2020. A comprehensive survey on graph neural networks. IEEE transactions on neural networks and learning systems 32, 1 (2020), 4–24.

- Xu et al. (2019) Da Xu, Chuanwei Ruan, Evren Korpeoglu, Sushant Kumar, and Kannan Achan. 2019. Self-attention with functional time representation learning. Advances in neural information processing systems 32 (2019).

- Ye et al. (2020) Wenwen Ye, Shuaiqiang Wang, Xu Chen, Xuepeng Wang, Zheng Qin, and Dawei Yin. 2020. Time matters: Sequential recommendation with complex temporal information. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval. 1459–1468.

- Zhang et al. (2021) Rongzhi Zhang, Yulong Gu, Xiaoyu Shen, and Hui Su. 2021. Knowledge-enhanced Session-based Recommendation with Temporal Transformer. arXiv preprint arXiv:2112.08745 (2021).

- Zhang et al. (2020) Youjie Zhang, Ting Bai, Bin Wu, and Bai Wang. 2020. A Time Interval Aware Approach for Session-Based Social Recommendation. In International Conference on Knowledge Science, Engineering and Management. Springer, 88–95.

- Zhou et al. (2018) Chang Zhou, Jinze Bai, Junshuai Song, Xiaofei Liu, Zhengchao Zhao, Xiusi Chen, and Jun Gao. 2018. Atrank: An attention-based user behavior modeling framework for recommendation. In Thirty-Second AAAI Conference on Artificial Intelligence.