by-nc-sa

TeachTune: Reviewing Pedagogical Agents Against Diverse Student Profiles with Simulated Students

Abstract.

Large language models (LLMs) can empower teachers to build pedagogical conversational agents (PCAs) customized for their students. As students have different prior knowledge and motivation levels, teachers must review the adaptivity of their PCAs to diverse students. Existing chatbot reviewing methods (e.g., direct chat and benchmarks) are either manually intensive for multiple iterations or limited to testing only single-turn interactions. We present TeachTune, where teachers can create simulated students and review PCAs by observing automated chats between PCAs and simulated students. Our technical pipeline instructs an LLM-based student to simulate prescribed knowledge levels and traits, helping teachers explore diverse conversation patterns. Our pipeline could produce simulated students whose behaviors correlate highly to their input knowledge and motivation levels within 5% and 10% accuracy gaps. Thirty science teachers designed PCAs in a between-subjects study, and using TeachTune resulted in a lower task load and higher student profile coverage over a baseline.

A figure comparing three educational methods: Direct Chat, Single-turn Test, and Automated Chat. Direct Chat offers breadth, benchmark dataset offers depth, and Automated Chat achieves both depth and breadth by using simulated agents.

1. Introduction

“A key challenge in developing and deploying Machine Learning (ML) systems is understanding their performance across a wide range of inputs.” (Wexler et al., 2019)

Large Language Models (LLMs) have empowered teachers to build Pedagogical Conversational Agents (PCAs) (Weber et al., 2021) with little programming expertise. PCAs refer to conversational agents that act as instructors (Graesser et al., 2004), peers (Matsuda et al., 2012), and motivators (Alaimi et al., 2020) with whom learners can communicate through natural language, used in diverse subjects, grades, and pedagogies. Teacher-designed PCAs can better adapt to downstream class environments (i.e., students and curriculum) and allow teachers to experiment with diverse class activities that were previously prohibitive due to limited human resources. While conventional chatbots require authoring hard-coded conversational flows and responses (Cranshaw et al., 2017; Choi et al., 2021), LLM-based agents need only a description of how the agents should behave in natural language, known as prompting (Dam et al., 2024). Prior research has proposed prompting techniques (Wu et al., 2022; Brown et al., 2020; Yao et al., 2023), user interfaces (Arawjo et al., 2024; Martin et al., 2024; Fiannaca et al., 2023), and frameworks (Kim et al., 2023; Bai et al., 2022) that make domain-specific and personalized agents even more accessible to build for end-users. With the lowered barrier and cost of making conversational agents, researchers have actively experimented with LLM-based PCAs under diverse pedagogical settings, such as 1-on-1 tutoring (Jurenka et al., 2024; Zamfirescu-Pereira et al., 2023a; Han et al., 2023), peer learning (Jin et al., 2024; Schmucker et al., 2024), and collaborative learning (Liu et al., 2024a; Wei et al., 2024; Nguyen et al., 2024a).

To disseminate these experimental PCAs to actual classes at scale, reviewing agents’ content and interaction qualities is necessary before deployment. Many countries and schools are concerned about the potential harms of LLMs and hesitant about their use in classrooms, especially K-12, despite the benefits (Johnson, 2023; Li et al., 2024c). LLM-based PCAs need robust validation against hallucination (Shoufan, 2023; Park and Ahn, 2024), social biases (Melissa Warr and Isaac, 2024; Wambsganss et al., 2023), and overreliance (Dwivedi et al., 2023; Mosaiyebzadeh et al., 2023). Moreover, since students vary in their levels of knowledge and learning attitudes in a class (Richardson et al., 2012), teachers must review how well their PCAs can cover diverse students in advance to help each student improve attitudes and learn better (Bloom, 1984; Tian et al., 2021; Pereira, 2016; Pereira and Díaz, 2021). For instance, teachers should check whether PCAs help not only poorly performing students fill knowledge gaps but also well-performing students build further knowledge through discussions. Regarding students’ personalities, teachers should check if PCAs ask questions to prompt inactive students and compliment active students to keep them motivated. These attempts contribute to improving fairness in learning, closing the growth gap between students instead of widening it (Memarian and Doleck, 2023).

However, existing methods for reviewing the PCAs’ coverage of various student profiles offer limited breadth and depth (Fig. 1). The current landscape of chatbot evaluation takes two approaches at large. First, teachers can directly chat with their PCAs and roleplay themselves as students (Petridis et al., 2024; Hedderich et al., 2024; Bubeck et al., 2023). Although interactive chats allow teachers to review the behaviors of PCAs over multi-turn conversations in depth, it is time-consuming for teachers to manually write messages and re-run conversations after revising PCA designs, restraining the breadth of reviewing different students. Second, teachers can simultaneously author many input messages as test cases (e.g., benchmark datasets) and assess the PCAs’ responses (Ribeiro and Lundberg, 2022; Wu et al., 2023; Zamfirescu-Pereira et al., 2023b; Cabrera et al., 2023; Kim et al., 2024). Single-turn test cases are scalable and reproducible, but teachers can examine only limited responses that do not capture multi-turn interactions (e.g., splitting explanations (Lee et al., 2023), asking follow-up questions (Shahriar and Matsuda, 2021)), restricting the depth of each review. Teachers may also need to create test cases manually if their PCAs target new curriculums and class activities.

To support efficient PCA reviewing with breadth and depth, we propose a novel review method in which teachers utilize auto-generated conversations between a PCA and simulated students. Recent research has found that LLMs can simulate human behaviors of diverse personalities (Li et al., 2024b; Park et al., 2023) and knowledge levels (Lu and Wang, 2024; Jin et al., 2024). We extend this idea to PCA review by simulating conversations between PCAs and students with LLM. We envision simulated conversations making PCA evaluation as reproducible and efficient as the test case approach while maintaining the benefit of reviewing multi-turn interactions like direct chat. Teachers can review the adaptivity of PCAs by configuring diverse simulated students as a unit of testing and examine the quality of interaction in depth by observing auto-generated conversations among them. We implemented this idea into TeachTune, a tool that allows teachers to design PCAs and review their robustness against diverse students and multi-turn scenarios through automated chats with simulated students. Teachers can configure simulated students by adding or removing knowledge components and adjusting the intensity of student traits, such as self-efficacy and motivation. Our LLM-prompting pipeline, Personalized Reflect-Respond, takes configurations on knowledge and trait intensity levels (5-point scale) as inputs and generates a comprehensive overview to instruct simulated students to generate believable responses.

To evaluate the performance of Personalized Reflect-Respond in simulating targeted student behaviors, we asked ten teachers to interact with nine simulated students of varying knowledge and trait levels in a blinded condition and to predict the simulated students’ configuration levels for knowledge and traits. We measured the difference between teacher-predicted and initially configured levels. Our pipeline showed a 5% median error for knowledge components and a 10% median error for student traits, implying that our simulated students’ behaviors closely align with the expectations of teachers who configure them. We also conducted a between-subjects study with 30 teachers to evaluate how TeachTune can help teachers efficiently review the interaction quality of PCAs in depth and breadth. Study participants created and reviewed PCAs for middle school science classes using TeachTune or a baseline system where PCA review was possible through only direct chats and single-turn test cases. We found that automated chats significantly help teachers explore a broader range of students within traits (large effect size, =0.304) at a lower task load (=0.395).

This paper makes the following contributions:

-

•

Personalized Reflect-Respond, an LLM prompting pipeline that generates an overview of a target student’s knowledge, motivation, and psychosocial context and follows the overview to simulate a believable student behavior.

-

•

TeachTune, an interface for teachers to efficiently review the coverage of PCAs against diverse knowledge levels and student traits.

-

•

Empirical findings showing that TeachTune can help teachers design PCAs at a lower task load and review more student profiles, compared to direct chats and test cases only.

2. Related Work

Our work aims to support the design and reviewing process of PCAs in diverse learning contexts. We outline the emergent challenges in designing conversational agents and how LLM-based simulation can tackle the problem.

2.1. Conversational Agent Design Process

Designing chatbots involves dedicated chatbot designers prototyping and then iteratively revising their designs through testing. Understanding and responding to a diverse range of potential user intents and needs is crucial to the chatbot’s success. Popular methods include the Wizard-of-Oz approach to collect quality conversation data (Klemmer et al., 2000) and co-design workshops to receive direct feedback from multiple stakeholders (Chen et al., 2020; Durall Gazulla et al., 2023). Involving humans to simulate conversations or collecting feedback can help chatbot designers understand human-chatbot collaborative workflow (Cranshaw et al., 2017), explore diverse needs of users (Potts et al., 2021; Candello et al., 2022), or iterate their chatbot to handle edge cases (Klemmer et al., 2000; Choi et al., 2021). Typical chatbot reviewing methods include conducting a usability study with a defined set of chatbots’ social characteristics (Chaves and Gerosa, 2021), directly chatting 1-on-1 with the designed chatbot (Petridis et al., 2024), and testing with domain experts (Hedderich et al., 2024). Such methods can yield quality evaluation but are costly as they need to be executed manually by humans. For more large-scale testing, designers can use existing test cases (Ribeiro and Lundberg, 2022; Bubeck et al., 2023) or construct new test sets with LLMs (Wu et al., 2023). However, such evaluations happen in big chunks of single-turn conversations, which limits the depth of conversation dynamics throughout multiple turns. To complement the limitations, researchers have recently proposed leveraging LLMs as simulated users (de Wit, 2023), role-players (Fang et al., 2024), and agent authoring assistant (Calo and Maclellan, 2024). TeachTune explores a similar thread of work in the context of education by utilizing simulated students to aid teachers’ breadth- and depth-wise reviewing of PCAs.

2.2. Simulating Human Behavior with LLMs

Recent advancements in LLM have led researchers to explore the capabilities of LLMs in simulating humans and their environments, such as simulating psychology experiments (Coda-Forno et al., 2024), individuals’ beliefs and preferences (Namikoshi et al., 2024; Jiang et al., 2024; Shao et al., 2023; Chuang et al., 2024; Choi et al., 2024), and social interactions (Park et al., 2023; Vezhnevets et al., 2023; Li et al., 2024a; Shaikh et al., 2024). In education, existing works have simulated student behaviors for testing learning contents (Lu and Wang, 2024; He-Yueya et al., 2024; Nguyen et al., 2024a), predicting cognitive states of students (Xu et al., 2024; Liu et al., 2024b), facilitating interactive pedagogy (Jin et al., 2024), and assisting teaching abilities of instructors (Markel et al., 2023; Zhang et al., 2024; Nguyen et al., 2024b; Radmehr et al., 2024). In deciding which specific attribute to simulate, existing simulation work has utilized either knowledge states (Lu and Wang, 2024; Jin et al., 2024; Huang et al., 2024; Nguyen et al., 2024a) or cognitive traits, such as personalities and mindset (Markel et al., 2023; Li et al., 2024b; Wang et al., 2024). However, simulating both knowledge states and personalities is necessary for authentic learning behaviors because cognitive traits, in addition to prior knowledge, are a strong indicator for predicting success in learning (Besterfield-Sacre et al., 1997; Astin and Astin, 1992; Richardson et al., 2012; Chen and Macredie, 2004; Chrysafiadi and Virvou, 2013). Liu et al. explored utilizing cognitive and noncognitive aspects, such as the student’s language proficiency and the Big Five personality, to simulate students at binary levels (e.g., low vs. high openness) for testing intelligent tutoring systems (Liu et al., 2024b). Our work develops this idea further by presenting an LLM-powered pipeline that can configure and simulate both learners’ knowledge and traits at a finer granularity (i.e., a five-point scale). Finer-grained control of student simulation will help teachers review PCAs against detailed student types, making their classes more inclusive.

3. Formative Interview and Design Goals

We conducted semi-structured interviews with five school teachers and observed how teachers review PCAs to investigate RQ1. More specifically, we aimed to gain a comprehensive understanding of what types of students teachers want PCAs to cover, what student traits (e.g., motivation level, stress) characterize those students, how teachers create student personas using those traits, and what challenges teachers have with existing PCA review methods (i.e., direct chat and test cases).

-

RQ1:

What are teachers’ needs in reviewing PCAs and challenges in using direct chats and test cases?

3.1. Interviewees

We recruited middle school science teachers through online teacher communities in Korea. We required teachers to possess either an education-related degree or at least one year of teaching experience. The teachers had diverse backgrounds (Table 1). The interview took place through Zoom for 1.5 hours, and interviewees were compensated KRW 50,000 (USD 38).

| Id | Period of teaching | Size of class | Familiarity | ||

| Chatbots | Chatbot design process | ChatGPT | |||

| I1 | 3 years | 20 students | Unfamiliar | Very unfamiliar | Familiar |

| I2 | 6 years | 20 students | Very familiar | Very familiar | Very familiar |

| I3 | 16 years | 21 students | Unfamiliar | Very unfamiliar | Familiar |

| I4 | 2 years | 200 students | Very familiar | Familiar | Very familiar |

| I5 | 1 year | 90 students | Familiar | Familiar | Unfamiliar |

A table with four columns and six rows. On the header row are identifiers, period of teaching, class size, and familiarity (Chatbots, Chatbot design process, ChatGPT). The following rows describe what each participant’s experiences were like for the headers.

A screenshot showing the interface used for the formative interview. In the Direct Chat tab, the chatbot starts the conversation by saying, ”Hi! Do you have any questions about phase transition?” The interviewee responded, ”Hi, I didn’t get what phase transition is,” and the chatbot explained three states with real-life examples. In the Test Cases tab, the interviewee tested ”I don’t want to study this,” ”This is too difficult for me,” and ”Could you give examples.”

3.2. Procedure

We began the interview by presenting the research background, ChatGPT, and its various use cases (e.g., searching, brainstorming, and role-playing). We requested permission to record their voice and screen throughout the session and asked semi-structured interview questions during and after sessions.

Interviewees first identified the most critical student traits that PCAs should cover when supporting diverse students in K-12. To do so, we gave interviewees a list of 42 traits organized under five categories—personality traits, motivation factors, self-regulatory learning strategies, student approaches to learning, and psychosocial contextual influence (Richardson et al., 2012). Interviewees ranked the categories by importance of reviewing and chose the top three traits from each category.

Interviewees then assumed a situation where they created PCAs for their science class to help students review the phase transition between solid, liquid, and gas. Interviewees reviewed the interaction quality and adaptivity of a given tutor-role PCA by chatting with it directly and authoring test case messages, playing the role of students. Interviewees could revisit the list of 42 traits for their review. Interviewees used the interfaces in Fig. 2 for 10 minutes each and were asked to find as many limitations of the PCA as possible. The PCA was a GPT-3.5-based agent with the following system prompt: You are a middle school science teacher. You are having a conversation to help students understand what they learned in science class. Recently, students learned about phase transition. Help students if they have difficulty understanding phase transition.

Subsequently, interviewees listed student profiles whose conversation with the PCA would help them review its quality and adaptivity. A student profile is distinguished from student traits as it is a combination of traits describing a student. Interviewees wrote student profiles in free form, using knowledge level and earlier 42 student traits to describe them (e.g., a student with average science grades but an introvert who prefers individual learning over cooperative learning).

| Category | Student Trait | Definition |

| Motivation factors | Academic self-efficacy | Self-beliefs of academic capability |

| Intrinsic motivation | Inherent self-interest, and enjoyment of academic learning and tasks | |

| Psychosocial contextual influence | Academic stress | Overwhelming negative emotionality resulting directly from academic stressors |

| Goal commitment | Commitment to staying in school and obtaining a degree |

Table displaying four traits by two categories with each definition: Academic self-efficacy, Intrinsic Motivation, Academic stress, and Goal commitment.

3.3. Findings

3.3.1. Teachers deemed students’ knowledge levels, motivation factors, and psychosocial contextual influences as important student traits to review.

Interviewees thought that PCAs should support students with low motivation and knowledge, and hence, it is crucial to review how PCAs scaffold these students robustly. All five interviewees started their reviewing of the PCA with knowledge-related questions to assess the correctness and coverage of its knowledge. They then focused on how the PCA responds to a student with low motivation and interest (Table 2). Motivational factors (i.e., academic self-efficacy and intrinsic motivation) are important because students with low motivation often do not pay attention to class activities, and learning with a PCA would not work at all if the PCA cannot first encourage those students’ participation (I1, I2, and I5). Interviewees also considered psychosocial factors (i.e., academic stress and goal commitment) important as they significantly affect the learning experience (I1). I3 remarked that she tried testing if the PCA could handle emotional questions because they take up most students’ conversations.

3.3.2. Multi-turn conversations are crucial for review, but writing messages to converse with PCAs requires considerable effort and expertise.

Follow-up questions and phased scaffolding are important pedagogical conversational patterns that appear over several message turns. Interviewees commented that it is critical to check how PCAs answer students’ serial follow-up questions, use easier words across a conversation for struggling students, and remember conversational contexts because they affect learning and frequently happen in student-tutor conversations. Interviewees typically had 15 message turns for a comprehensive review of the PCA. Interviewees noted that these multi-turn interactions are not observable in single-turn test cases and found direct chat more informative. However, interviewees also remarked on the considerable workload of writing messages manually (I1), the difficulty of repeating conversations (I4), and the benefits of test cases over direct chats in terms of parallel reviewing (I2). I2 also commented that teachers would struggle to generate believable chats if they have less experience or teach humanities subjects whose content and patterns are diverse.

3.3.3. Teachers’ mental model of review is based on student profiles, but they lack systematic approaches to organize and incorporate diverse types and granularities of student traits.

Interviewees created test cases and conversational patterns with specific student personas in mind and referred to them when explaining their rationale for test cases. For example, I4 recalled students with borderline intellectual functioning and tested if the PCA could provide digestible explanations and diagrams. However, interviewees tend to review PCAs on the fly without a systematic approach; interviewees mix different student personas (e.g., high and low knowledge, shy and active) in a single conversation instead of simulating each persona in a separate chat. I4 and I5 remarked that they had not conceived the separation, and single-persona conversations would have made the review more meaningful. I2 commented that creating student profiles first would have prepared her to organize more structural test cases. Interviewees also commented on the difficulty of describing students with varying levels within a trait (I4) and reflecting diverse traits in free-form writing (I1).

3.4. Design Goals

Based on the findings from the formative interview, we outline the design goals to help teachers efficiently review their PCAs’ limitations against diverse students and improve their PCAs iteratively. The design goals are 1-to-1 mapped to each finding in §3.3 and aim to address teachers’ needs and challenges.

-

DG1.

Support the reviewing of PCAs’ adaptivity to students with varying knowledge levels, motivation factors, and psychosocial contexts.

-

DG2.

Offload the manual effort to generate multi-turn conversations for quick and iterative reviews in the PCA design process.

-

DG3.

Provide teachers with structures and interactions for authoring separate student profiles and organizing test cases.

4. System: TeachTune

We present TeachTune, a web-based tool where teachers can build LLM-based PCAs and quickly review their coverage against simulated students with diverse knowledge levels, motivation factors, and psychosocial contexts before deploying the PCAs to actual students. We outline the user interfaces for creating PCAs, configuring simulated students of teachers’ needs as test cases, and reviewing PCAs through automatically generated conversations between PCAs and simulated students. We also introduce our novel technical pipeline to simulate students behind the scenes.

Diagram showing how a state diagram and conversation are controlled by two LLM agents: PCA and Master Agent. On the left, the state machine diagram includes a root node and two child nodes. The root node represents an initial message generated by the PCA agent, which says, ”Let’s review the phase transition between solid, liquid, and gas.” The student’s message begins with ”Sure! I know that […],” followed by an explanation of the three states of matter. Based on the student’s message, the master agent decides which child node to move to, selecting the node labeled ”the student explains the three states well.” This node contains an instruction: ”Praise the student and ask them to explain with a real-life example.” Following this, the final message generated by the PCA agent says, ”Great job! Can you give me some real-life examples of each state of matter?”

The interface of an automated chat showing a state machine for PCA development on the left and a chat interface on the right. On the right top is an ”Add a profile” button for creating student profiles, with a conversation generated by a student named ”Low motivation” displayed below it. Beneath the student’s message, the ”Check knowledge state” button has been pressed, highlighting the newly acquired knowledge elements in a different color. On the left side, the state diagram displays nodes with two text inputs each: the top text represents student behavior, such as ”The student explains the three states well,” and the bottom text represents instructions, such as ”Praise the student and ask them to explain with real-life examples.” At the left corner of the final node, a robot icon indicates the current active state.

4.1. PCA Creation Interface

Teachers can build PCAs with a graph-like state machine representation (Fig. 3) (Choi et al., 2021; Hedderich et al., 2024). The state machine of a PCA starts with a root node that consists of the PCA’s start message to students and the instruction it initially follows. For example, the PCA in Fig. 3 starts its conversation by saying: “Let’s review the phase transitions between solid, liquid, and gas!” and asks questions about phase transitions to a student (Fig. 3 A) until the state changes to other nodes. The state changes to one of the connected nodes depending on whether or not the student answers the questions well (Fig. 3 B). When the state changes to either node, PCA receives a new instruction, described in the nodes, to behave accordingly (Fig. 3 C). The PCA is an LLM-based agent prompted conditionally with the state machine, whose state is determined by a master LLM agent. The master agent monitors the conversation between the PCA and a student and decides if the state should remain in the same node or transit to one of its child nodes. The prompts used to instruct the master agent and PCA are in Appendix A.1 and A.2.

4.1.1. Authoring graph-based state machines

TeachTune provides a node-based interface to author the state machine of PCAs (Fig. 4 left). Teachers can drag to move nodes, zoom in, and pan the state diagram. They can add child nodes by clicking the “Add Behavior” button on the parent node. Teachers can also add directed edges between nodes to indicate the sequence of instructions PCAs should follow. In each node, teachers describe a student behavior for PCAs to react to (Fig. 4 E: “if the student …”) and instructions for PCAs to follow (Fig. 4 F: “then, the chatbot …”). Student behaviors are written in natural language, allowing teachers to cover a diverse range and granularity of cases, such as cases where students do not remember the term sublimation or ignore PCA’s questions. Instructions can also take various forms, from prescribed explanations about sublimation to abstract ones, such as creating an intriguing question to elicit students’ curiosity. To help teachers understand how the state machine works and debug it, TeachTune visualizes a marker (Fig. 4 D) on the state machine diagram that shows the current state of PCA along conversations during reviews. The node-based interface helps teachers design and represent conversation flows that are adaptive to diverse cases.

Interface for creating a student profile in an automated chat. At the top, there are six knowledge components about phase transitions with checkbox inputs. After that, there are 12 slider inputs, each rated from 1 to 5, for rating four student traits with three questions per trait. Below the sliders is a ”Generate initial draft” button. After clicking this button, a profile description is generated based on the selected knowledge components and student traits.

A diagram illustrating a 3-step prompting pipeline for simulating student responses: Interpret, Reflect, and Respond. The Interpret step runs independently before runtime, creating a trait overview with the text, ”This student has low motivation and interest in the topic,” based on the student’s trait values. During runtime, the previous knowledge state and conversation history flow into the Reflect step, where the knowledge state is updated. In the Respond step, the updated knowledge state, conversation history, and trait overview are used to generate the simulated student’s response, shown as ”Boring. Can I learn something else?”

4.2. PCA Review Interface

Teachers can review the robustness of their PCAs by testing different edge cases with three methods—direct chat, single-turn test cases, and automated chat. The user interface for direct chat and test cases are identical to the ones used in the formative study (Fig. 2); teachers can either directly talk to their PCAs over multi-turn or test multiple pre-defined input messages at once and observe how PCAs respond to each. The last and our novel method, review through automated chats, involves two steps—creating student profiles and observing simulated conversations.

4.2.1. Templated student profile creation

Teachers should first define what types of students they review against. TeachTune helps teachers externalize and develop their evaluation space with templated student profiles. Our interface (Fig. 5) provides knowledge components and student trait inventories to help teachers recognize possible combinations and granularities of different knowledge levels and traits and organize them effectively (DG3). When creating each student profile, teachers can specify the student’s initial knowledge by check-marking knowledge components (Fig. 5 A) and configure the student’s personality by rating the trait inventories on a 5-point Likert scale (Fig. 5 B). TeachTune then generates a natural language description of the student, which teachers can freely edit to correct or add more contextual information about the student (Fig. 5 C). This description, namely trait overview, is passed to our simulation pipeline.

Once teachers have created a pool of student profiles to review against, they can leverage it over their iterative PCA design process, like how single-turn test cases are efficient for repeated reviews. We decided to let teachers configure their student pools instead of automatically providing all possible student profiles because it is time-consuming for teachers to check student profiles who might not even exist in their classes.

TeachTune populates knowledge components pre-defined in textbooks and curricula. Teachers can also add custom (e.g., more granular) knowledge components. For the trait inventories, we chose the top three statements from existing inventories (Sun et al., 2011; Klein et al., 2001; Gottfried, 1985; May, 2009) based on their correlation to student performance (see Appendix B.4). We present three statements for each trait, considering the efficiency and preciseness in authoring student profiles, heuristically decided from our iterative system design.

4.2.2. Automated chat

Teachers then select one of the student profiles to generate a lesson conversation between the profile’s simulated student and their PCAs (Fig. 4 A). PCAs start conversations, and the state marker on the state diagram transits in real-time throughout the conversation. Simulated students initially show unawareness as prescribed by their knowledge states in profiles and acquire knowledge from PCAs in mock conversations. Simulated students also actively ask questions, show indifference, or exhibit passive learning attitudes according to their student traits. TeachTune generates six messages (i.e., three turns) between PCAs and simulated students at a time, and teachers can keep generating further conversation by clicking the “Generate Conversation” button. When teachers change the state machine diagram, TeachTune prompts teachers to re-generate conversations from the beginning. Teachers can use automated chats to quickly review different PCA designs on the same students without manually typing messages (DG2). When teachers find corner cases that their PCA design did not cover, they can add a node that describes the case and appropriate instruction for PCAs. For example, with the state machine in Fig. 3, teachers may find the PCA stuck in the root state when it chats with a simulated student who asks questions. To handle the case, teachers can add a node that reacts to students’ questions and instruct PCA to answer them.

4.3. Personalized Reflect-Respond

We propose a Personalized Reflect-Respond LLM pipeline that simulates conversations with specific student profiles. Our pipeline design is inspired by and extended from Jin et al.’s Reflect-Respond pipeline (Jin et al., 2024); we added a personalization component that prompts LLMs to incorporate prescribed student traits into simulated students (DG1).

Reflect-Respond is an LLM-driven pipeline that simulates knowledge-learning (Jin et al., 2024). It takes a simulated student’s current knowledge state and conversation history as inputs (Fig. 6). A knowledge state is a list of knowledge components that are either acquired or not acquired. The state dynamically changes throughout conversations to mimic knowledge acquisition. To generate a simulated student’s response, inputs pass through the Reflect and Respond steps. Reflect updates the knowledge state by activating relevant components, while Respond produces a likely reply based on the updated state and conversation history.

Our pipeline personalizes Reflect-Respond by giving an LLM additional instruction in the Respond step. Before the runtime of Reflect-Respond, Interpret step first translates trait scores into a trait overview that contains a comprehensive summary and reasoning of how the student should behave (Fig. 6 Step 1). Once teachers edit and confirm the overview through the interface (Fig. 5 C), it is passed to the Respond step so that the LLM takes the student traits into account in addition to the conversational context and knowledge state. We added the Interpret step because it produces student profiles that allow teachers to edit flexibly and prompt LLMs to reflect on student traits more cohesively (i.e., chain of thought (Wei et al., 2022)). The prompts for Interpret, Reflect, and Respond are available in Appendix A.3, A.4, and A.5.

We took an LLM-driven approach to personalize and implement the Reflect-Respond pipeline. We considered adopting student modeling methods that rely on more predictable and grounded Markov models (Tadayon and Pottie, 2020; Maqsood et al., 2022). Still, we decided to use a fully LLM-driven approach because we also target extracurricular teaching scenarios where large datasets to build Markov models may not be available.

5. Evaluation

We evaluated the alignment of Personalized Reflect-Respond to teachers’ perception of simulated students and the efficacy of TeachTune for helping teachers review PCAs against diverse student profiles. Our evaluation explores the following research questions:

-

RQ2:

How accurately does the Personalized Reflect-Respond pipeline simulate a student’s knowledge level and traits expected by teachers?

-

RQ3:

How do simulated students and automated chats, compared to direct chats and test cases, help teachers review PCAs?

The evaluation was twofold. To investigate RQ2, we created nine simulated students of diversely sampled knowledge and trait configurations and asked 10 teachers to predict their configurations through direct chats and pre-generated conversations. To answer RQ3, we ran a between-subjects user study with 30 teachers and observed how the student profile template and simulated students helped the design and reviewing of PCAs. We received approval for our evaluation study design from our institutional review board.

5.1. Technical Evaluation

Under controlled settings, we evaluated how well the behavior of a simulated student instructed by our pipeline aligns with teachers’ expectations of the student regarding knowledge level, motivation, and psychosocial contexts (RQ2).

5.1.1. Evaluators

We recruited ten K-12 science teachers as evaluators through online teacher communities. The evaluators had experience teaching 259.2-sized () classes (min: 8, max: 33) for 4.54.2 years (min: 0.5, max: 15). As compensation, evaluators received KRW 50,000 (USD 38).

5.1.2. Baseline Pipeline

We created a baseline pipeline to explore how the Interpret step affects the alignment gap. The Baseline pipeline directly takes raw student traits in its Respond step without the Interpret step. By comparing Baseline with Ours (i.e., Personalized Reflect-Respond), we aimed to investigate if explanation-rich trait overviews help an LLM reduce the gap between simulated students and teachers’ expectations. Pipelines were powered by GPT-4o-mini, with the temperature set to zero for consistent output. The prompt used for Baseline is available in Appendix B.1.

5.1.3. Setup

The phase transition between solid, liquid, and gas was the learning topic of our setup. We chose phase transition because it has varying complexities of knowledge components and applicable pedagogies. Simulated students could initially know and learn six knowledge components of varying complexity (see Appendix B.4); the first three components describe the nature of three phases, and the latter three are about invariant properties in phase transition with reasoning. The knowledge components were from middle school science textbooks and curricula qualified by the Korean Ministry of Education.

We prepared 18 simulated students for the evaluation (see Fig. 7). We first chose nine student profiles through the farthest-point sampling (Qi et al., 2017), where the point set was 243 possible combinations of different levels of knowledge and student traits to ensure the coverage and diversity of samples. Each student profile was instantiated into two simulated students instructed by Baseline and Ours.

Diagram summarizing key steps of the technical evaluation process. Overall, five traits (knowledge level, motivation, goal commitment, stress, and self-efficacy) are visualized as radar charts, representing student profiles. First, from an initial set of 243 points, 9 student profiles were derived using farthest-point sampling. These profiles were then used to generate 18 simulated students through two different pipeline designs: Baseline and Ours. Finally, 10 evaluators inferred the profiles of the simulated students under blind conditions. Bias is depicted as the difference between the radar charts representing the actual profiles from the pipeline and the evaluator-perceived profiles.

5.1.4. Procedure

We first explained the research background to the evaluators. The evaluators then reviewed 18 simulated students independently in a randomized order. To reduce fatigue from conversing with simulated students manually, we provided two pre-prepared dialogues—interview and lesson dialogues. In interview dialogues, simulated students sequentially responded to six quizzes about phase transition and ten questions about their student traits (Fig. 8). In lesson dialogues, simulated students received 12 instructional messages dynamically generated by an LLM tutor prompted to teach phase transitions (Fig. 9). Lesson dialogues show more natural teacher-student conversations in which teachers speak adaptively to students. Evaluators could also converse with simulated students directly if they wanted. Nine evaluators used direct chats at least once; they conversed with 54.5 students and exchanged 88.3 messages on average.

We gave evaluators a list of six knowledge components and three 5-point Likert scale inventory items for each student trait; they predicted each simulated student’s initial knowledge state, intensity level of the four student traits, and believability. The sampled student profiles, trait overviews, knowledge components, and inventory items used are available in Appendix B.2, B.3, and B.4.

5.1.5. Measures

We measured the alignment between simulated students’ behaviors and teachers’ expectations of them in two aspects—bias and believability. The bias is the gap between the teacher-perceived and system-configured student profiles. A smaller bias would indicate that our pipeline simulates student behaviors closer to what teachers anticipate. Believability (Park et al., 2023) is the perceived authenticity of simulated students regarding their response content and behavior patterns. We measured the bias and believability of each sampled student profile independently and analyzed the overall trend.

Evaluators’ marking on knowledge components was binary (i.e., whether a simulated student possesses this knowledge), and their rating on the four student traits was a scalar ranging from three to fifteen, summing 5-point Likert scale scores from three inventory items as originally designed (Sun et al., 2011; Klein et al., 2001; Gottfried, 1985; May, 2009). We used the two-sided Mann-Whitney U test per simulated student pairs to compare Baseline and Ours. We report the following measures:

-

•

Knowledge Bias (% error). We quantified the bias on knowledge level as the percentage of incorrectly predicted knowledge components. We report the average and median across the evaluators.

-

•

Student Trait Bias (0-12 difference). We calculated the mean absolute error between the evaluators’ Likert score and the configured value for each student trait. We report the average and median across the evaluators.

-

•

Believability (1-5 points). We directly compared evaluators’ ratings on the three statements about how authentic simulated behavioral and conversational responses are and how valuable simulated students are for teaching preparation (Fig. 11).

An interview conversation between an interviewer and a simulated student. The dialogue focuses on reviewing the characteristics of solids and liquids, changes of state, and personal science study goals. The student expresses nervousness but is willing to try a quiz and shares aspirations and concerns about persistence in science studies.

An interview conversation between an LLM tutor and a simulated student. They review the particle arrangements in solids, liquids, and gases, as well as particle motion and state changes. The tutor reassures the student and explains concepts not previously covered, while the student expresses uncertainty but finds the topics interesting.

5.2. Technical Evaluation Result

We report the descriptive statistics on the bias and believability of Personalized Reflect-Respond (Ours) and validate its design by comparing it with Baseline. Our results collectively show that Personalized Reflect-Respond can instruct an LLM to simulate a student’s behavior of a specific knowledge state and traits precisely.

5.2.1. The knowledge bias was small (median: 5%)

The gap between the configured and evaluator-perceived knowledge states was small (the last row of Table 3). Among the nine student profiles, evaluators unanimously and correctly identified the knowledge components of four profiles. The average accuracy across profiles was 93%, where the minimum was 78%. Profiles 4 and 7 achieved the lowest accuracy; evaluators underrated Profile 4 and overrated Profile 7. Student profile 4 describes a learner who knows all knowledge components but exhibits low confidence and interest. The corresponding simulated student tended to respond to the tutor’s questions half-heartedly. We speculate that this behavior might have confused evaluators to think the student was unaware of some of the knowledge components. Student profile 7 was a learner who knew only half of the knowledge but had high self-efficacy. Its confident response might have deluded evaluators that it knows more.

5.2.2. The trait bias was small (median: 1.3 out of 12)

The gap between the configured and perceived levels of student traits was also small (Fig. 10). The mean bias was 1.9, and the minimum and maximum were 0.4 and 4.9, respectively. Considering that we summed the bias from three 5-point scale questions for each trait, teachers can precisely set their simulated students within less than point error on each Likert scale input in our profile generation interface (Fig. 5 B). The average variance between the perceived traits was also small (), possibly indicating that simulated students manifested characteristics unique to their traits and led to a high agreement among teachers’ perceptions. Nevertheless, Profiles 3, 4, and 9 showed biases above four on the goal commitment trait. All of these student profiles had contrasting goal commitment and motivation ratings; for instance, the goal commitment rating of Profile 3 was low, while the motivation rating was high. We contemplate that since these two traits often correlate and go together (Sue-Chan and Ong, 2002; Mikami, 2017), evaluators might have misunderstood the motivational behaviors of simulated students as goal-related patterns.

5.2.3. Simulated students were believable (median: 3.5 out of 5)

Evaluators reported that simulated students behave as naturally as real students and are helpful for teacher training (Fig. 11). The average scores for each question (i.e., B1, B2, and B3) were , , and , respectively. The variance in the B1 scores was high in some of the profiles. For instance, the variance was 2.1 (min: 1, max: 5) for Profile 2, which describes a student with zero knowledge and the lowest goal commitment, motivation, and self-efficacy. Since the simulated student knew nothing, it repeatedly said “I do not know” in its interview and lesson dialogues as instructed by its prompt (Appendix A.5). Evaluators had different opinions on this behavior; low raters felt the repetitive messages were unnatural and artificial, while high raters thought unmotivated students tended to give short and sloppy answers in actual classes. B3 scores showed a similar trend and a high correlation to B1 scores (Pearson’s =0.96).

5.2.4. The Interpret step increased believability significantly

Our ablation study showed the tradeoff relationship between the bias and believability in our pipeline design. The Baseline pipeline showed minimal knowledge and trait bias compared to Ours (Table 3 and Fig. 10). Bias was minimal because Baseline students often revealed the raw trait values in the system prompt when responding to questions (e.g., “I have a low motivation” and “I strongly agree.”) However, these frank responses resulted in a statistically significant decrease in the believability of simulated students (Fig. 11). Evaluators felt artificiality towards the dry and repeated responses and perceived them as detrimental to being a pedagogy tester (B3). On the other hand, Ours students were better at incorporating multiple traits into responses. For example, Profile 5 is a student who has high goal commitment and stress levels at the same time. While Baseline generated “Thank you! But, I am stressed about my daily study.” for a tutor’s encouragement, Ours creates a multifaceted response: “Thank you! I am a bit stressed about my daily study, but I am trying hard.” The Interpret step can balance the tradeoff between bias and believability by prompting LLMs to analyze student profiles more comprehensively and generate more believable behaviors.

| Student Profiles | |||||||||||

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Mean | Median | |

| Baseline | 8.320.4 | 0.00.0 | 0.00.0 | 13.35.2 | 1.74.1 | 10.00.0 | 6.75.2 | 0.00.0 | 0.00.0 | 4.4 | 1.7 |

| Ours | 8.3.11.7 | 6.716.3 | 5.05.5 | 21.720.4 | 0.00.0 | 0.0.0.0 | 21.718.3 | 0.00.0 | 0.00.0 | 7.0 | 5.0 |

Table comparing knowledge bias for nine student profiles across two conditions: baseline and ours. Each row lists the mean and standard deviation values, with the last two columns showing the mean and median bias.

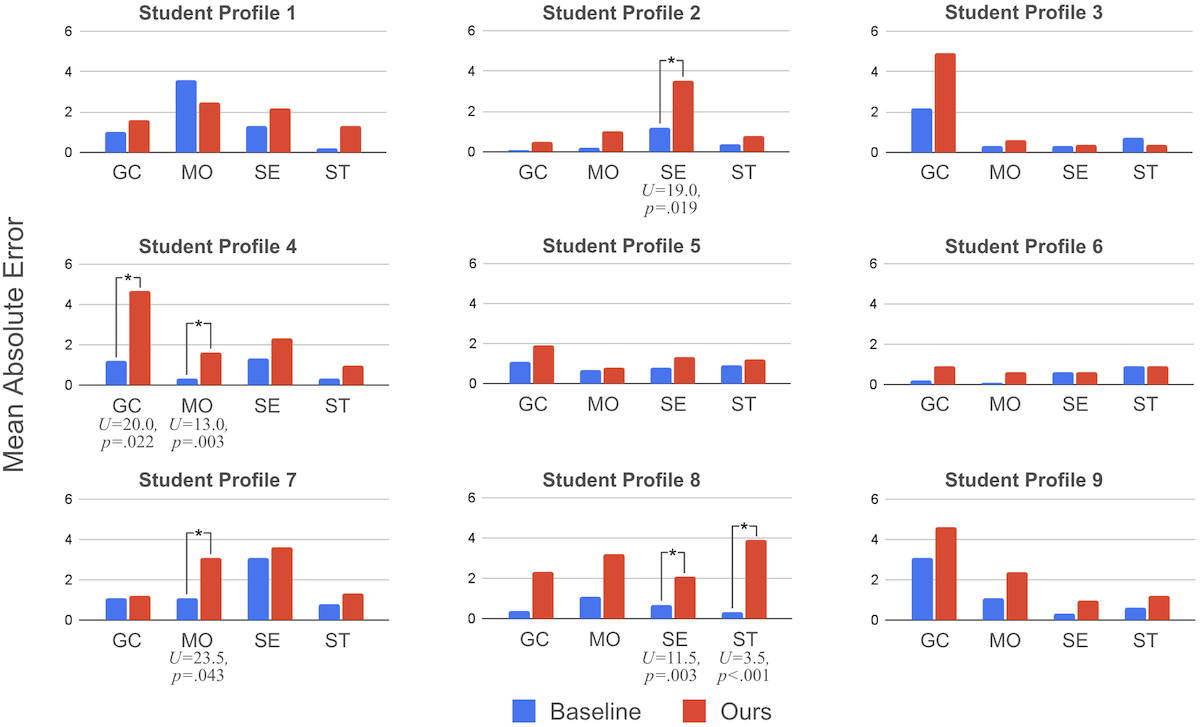

Bar graphs comparing the mean absolute error across two conditions, Baseline and Ours, for nine student profiles. The x-axis represents four traits (goal commitment, motivation, self-efficacy, and stress), and the y-axis ranges from 0 to 6. For Student Profile 2, the self-efficacy trait shows a significant difference in error, with a U-value of 19.0 and a p-value of 0.019. For Student Profile 4, both goal commitment and motivation traits show significant differences in error, with U-values of 20.0 and 13.0 and p-values of 0.022 and 0.003, respectively. For Student Profile 7, the motivation trait shows a significant difference in error, with a U-value of 23.5 and a p-value of 0.043. For Student Profile 8, both self-efficacy and stress traits show significant differences in error, with U-values of 11.5 and 3.5 and p-values of 0.003 and less than 0.001, respectively.

Bar graphs comparing the believability ratings measured on a 5-point Likert scale across two conditions, Baseline and Ours, for nine student profiles. The x-axis represents three questions, and the y-axis ranges from 1 to 5. For Student Profiles 4, 5, 6, 8, and 9, there are statistically significant differences in scores for all three questions, with p-values all below 0.05. For Student Profile 7, the score for the first question shows a significant statistical difference, with a U-value of 19.0 and a p-value of 0.016.

5.3. User Study

We ran a user study with 30 K-12 science teachers to explore how templated student profile creation and automated chats affect the PCA design process (RQ3). We designed a between-subjects study in which each participant created a PCA under one of the three conditions—Baseline, Autochat, and Knowledge. In Baseline, participants used a version of TeachTune without the automated chat feature; participants could access direct chat and single-turn test cases only. In Autochat, participants used TeachTune with all features available; they could generate student profiles with our template interface and use automated chats, direct chats, and test cases. In Knowledge, participants used another version of TeachTune where they could use all features but configure only the knowledge level of simulated students (i.e., no student traits and trait overview); this is analogous to using simulated students powered by the original Reflect-Respond pipeline.

By comparing the three conditions, we investigated the effect of having simulated students on PCA review (Baseline vs. Autochat) and how simulating student traits beyond their knowledge level affect the depth and breadth of the design process (Autochat vs. Knowledge). The Knowledge condition is the baseline for the automated chat feature. By looking into this condition, we investigate if the existing simulated student pipeline (i.e., Reflect-Respond) is enough to elicit improved test coverage and how Personalized Reflect-Respond can improve it further.

5.3.1. Participants

We recruited 36 teachers through online teacher communities in Korea and randomly assigned them to one of the conditions. Participants had varying teaching periods (3.34.7 years) and class sizes (1312 students). Thirteen participants are currently teaching at public schools. According to our pre-task survey (Appendix C.1), all participants had experience using chatbots and ChatGPT. They responded that they were interested in using AI (e.g., image generation AI and ChatGPT) in their classes. More than half of the participants reported they were knowledgeable about the chatbot design process, and five of them actually had experience making chatbots. There was no statistical difference in participants’ teaching experience, openness to AI technology, and knowledge about chatbot design among the conditions. Study sessions took place for 1.5 hours, and participants received KRW 50,000 (USD 38) as compensation.

We randomly assigned ten participants to each condition, and the study was run asynchronously, considering participants’ geographical diversity and daytime teaching positions. We also conducted additional sessions with six teachers in Autochat condition to complement our asynchronous study design by observing how teachers interact with TeachTune directly through Zoom screen sharing. We monitored the whole session and asked questions during and after they created PCAs. We excluded these six participants from our comparative analysis due to our intervention within the sessions. We only report their comments.

5.3.2. Procedure and Materials

After submitting informed consent, the participants received an online link to our system and completed a given task in their available time, following the instructions on the website. Participants first read an introduction about the research background and the purpose of this study and watched a 7-minute tutorial video about the features in TeachTune. Participants could revisit the tutorial materials anytime during the study.

We asked participants to use TeachTune to create a PCA that can teach “the phase transitions between solid, liquid, and gas” to students of as diverse knowledge levels and student traits as possible. Participants then used TeachTune in one of the Baseline, Autochat, and Knowledge conditions to design their PCAs for 30-60 minutes; participants spent 5015 minutes on average. All participants received a list of knowledge components for the topic and explanations of the four student traits to ensure consistency and prevent bias in information exposure. We encouraged participants to consider them throughout the design process. After completing their PCA design, participants rated their task load. Participants then revisited their direct chats, test cases, simulated students, and state diagrams to report the student profiles they had considered in a predefined template (Fig. 12). The study finished with a post-task survey asking about their PCA design experience. The study procedure is summarized in Table 4.

5.3.3. Materials and Setup

Participants received the six knowledge components used in our technical evaluation. We also gave participants an initial state diagram to help them start their PCA design. The knowledge components, initial state diagram, and survey questions are available in Appendix B.4, C.1, C.2, and C.3.

We also made a few modifications to our pipeline setup. Our technical evaluation revealed that repeated responses critically undermine simulated students’ perceived believability and usefulness. To prevent repeated responses and improve the efficacy of the automated chat, we set the temperature of the Respond step to 1.0 and added a short instruction on repetition at the end of the prompt (Appendix A.5 red text). The prompt and temperature for other pipeline components were the same as the technical evaluation.

5.3.4. Measures

We looked into how TeachTune affects the PCA design process as a review tool. An ideal review tool would help users reduce manual task loads, explore extensive evaluation space, and create quality artifacts. We evaluated each aspect with the following measures. Since we had a small sample size for each condition (n=10) and it was hard to assume the normality, we statistically compared the measures between the conditions through the Kruskal-Wallis test. We conducted Dunn’s test for post hoc analysis.

-

•

Task load (1-7 points). Participants responded to the 7-point scale NASA Task Load Index (Hart and Staveland, 1988) right after building their PCAs (Table 4 Step 3). We modified the scale to seven to make it consistent with other scale-based questionnaires. Participants answered two NASA TLX forms, each asking about the task load on PCA creation and PCA review tasks, respectively.

-

•

Coverage. We asked participants to report the student profiles they have considered in their design process (Table 4 Step 4). We gave a template where participants could indicate each of the knowledge levels and four student traits of a student profile into five levels (1: very low, 5: very high). Participants could access their usage logs of direct chats, single-turn test cases, automated chats, and state diagrams to recall all the student profiles covered in their design process (Fig. 12). We define coverage as the number of unique student profiles characterized by the combinations of levels. We focused only on the diversity of knowledge levels and four traits to compare the conditions consistently. We chose self-reporting because system usage logs cannot capture intended student profiles in Baseline and Knowledge.

-

•

Quality (3-21 points per trait). Although our design goals center around improving the coverage of student profiles, we also measured the quality of created PCAs. This was to check the effect of coverage on the final PCA design. We asked two external experts to rate the quality of the PCAs generated by the participants. Both experts were faculty members with a PhD in educational technologies and learning science and have researched AI tutors and pedagogies for ten years. The evaluators independently assessed 30 PCAs by conversing with them and analyzing their state machine diagrams. Evaluators exchanged a median of 2810 and 4520 messages per PCA. We instructed the evaluators to rate the heuristic usability of PCAs (Langevin et al., 2021) and their coverage for knowledge levels and student traits (Appendix C.4). The usability and coverage ratings were composed of three 7-point scale sub-items, and we summed them up for analysis. Evaluators exchanged their test logs and ratings for the first ten chatbots to reach a consensus on the criteria. If the evaluators rated a PCA more than 3 points apart, they rated the PCA again independently. We report their mean rating after conflict resolution.

- •

| Step (min.) | Activity |

| 1 (10) | Introduction on research background and user interface |

| 2 (60) | PCA design |

| 3 (5) | Task load measurement |

| 4 (10) | Student profile reporting |

| 5 (5) | Post-task survey |

Table outlining the steps and time allocation for each activity. Step 1 (10 minutes) involves an introduction to the research background and user interface. Step 2 (60 minutes) is for PCA design. Step 3 (5 minutes) covers task load measurement. Step 4 (10 minutes) involves student profile reporting. Step 5 (5 minutes) is for a post-task questionnaire.

An interface for collecting student profiles with three panels. The left panel provides instructions for the task. The middle panel shows the history, featuring two student profiles labeled ”Low motivation” and ”Low performer,” which are used for automated chat methods. The right panel is for reporting student profiles. It includes five sliders that can be checked or unchecked, representing traits such as knowledge level, goal commitment, motivation, self-efficacy, and academic stress. In the displayed profile, knowledge level, goal commitment, and motivation are set to 1, 3, and 2 points, respectively, while self-efficacy and academic stress are unchecked, indicating they were not considered. At the bottom of the right panel, two buttons are displayed: an ”Add a student type” button for adding additional profiles and a ”Submit” button for final submission.

5.4. User Study Result

Participants created PCAs with 156 nodes and 2110 edges in their state diagram on average. We outline the significant findings from the user study along with quantitative measures, participants’ comments, and system usage logs. Participants are labeld with B[1-10] for Baseline, A[1-10] for Autochat, K[1-10] for Knowledge, and O[1-6] for the teachers we directly observed.

5.4.1. Autochat resulted in a lower physical and temporal task load

There was a significant effect of simulating student traits beyond knowledge on the physical (=10.1, =.006) and temporal (=12.7, =.002) task load for the PCA creation task (Fig. 13 left). The effect sizes were large (Cohen et al., 2013): =0.301 and =0.395, respectively. A post-hoc test suggested Autochat participants had significantly lower task load than Knowledge participants (physical: =.002 and temporal: ¡.001). The same trend appeared in the PCA review task (=6.3, =.043) with a large effect size (=0.160) (Fig. 13 right).

The fact that having simulated students reduced teachers’ task load in Autochat and not in Knowledge may imply that automated chat is meaningful only when simulated students cover all characteristics (i.e., knowledge and student traits). Since participants were instructed to consider diverse knowledge levels and student traits, we surmise that the incomplete review support in Knowledge made automated chat less efficient than not having it. Knowledge participants commented that it would be helpful if they could configure the student traits mentioned in the instructions (K2 and K7).

In our observational sessions, automated chats alleviated teachers’ burden in ideation and repeated tests. O1 commented: “I referred to the beginning parts of automated chats [for starting conversations in direct chats]. I would spend an extra 20 to 30 minutes [to come up with my own] if I did not have automated chats.”

5.4.2. Autochat participants considered more unique student profiles

Participants submitted Baseline: 2.22.3, Autochat: 4.91.6, and Knowledge: 2.91.7 unique student profiles and the difference between conditions was significant (=10.2, =.006, =0.304). Autochat participants considered significantly more student profiles than Baseline (=.002) and Knowledge (=.036). Autochat participants also reported that they covered more levels of different knowledge and student traits (Fig. 14).

The result collectively shows that having simulated students helps teachers improve their coverage in general and significantly elicits extended coverage when simulated students support more characteristics. However, we did not observe a difference in participant-perceived coverage (Appendix C.2, Questions 7 and 8) among the conditions. This insignificant difference may indicate that teachers rated more conservatively after recognizing their unawareness of evaluation space. A1 remarked: “I became more interested in using chatbots to provide individualized guidance to students, and I would like to actually apply [TeachTune] to my classes in the future. During the chatbot test, I again realized that each student has different characteristics and academic performance, so the types of questions they ask are also diverse. Even if the learning content is the same for a class, students’ feedback can vary greatly, and a chatbot could help with this problem.” O3 also remarked that structurally separate student profiles helped her recognize individual students, which would not be considered in direct chats, and prompted her to test as many profiles as possible.

5.4.3. Direct chats, test cases, and automated chats complement each other

All participants reported that the systems were helpful in creating quality PCAs. For the question about future usage of systems (Appendix C.2, Question 10), Autochat participants reported the highest affirmation among the conditions (median: 6), despite the statistical difference to other conditions was not significant. We did not observe a significant preference for direct chats, test cases, and automated chats (Appendix C.2, Questions 1, 3, and 5). Still, participants’ comments showed that each feature has its unique role in a PCA design process and complements each other (see Fig. 15).

Direct chats were helpful, especially when participants had specific scenarios to review. Since participants could directly and precisely control the content of messages, they could navigate the conversational flow better than automated chats (A5), check PCAs’ responses to a specific question (A7), and review extreme student types and messages that automated chats do not support (A10 and K6). Thus, participants used direct chats during early design stages (B2 and K1) and for debugging specific paths in PCAs’ state diagrams in depth (B7, B8, and A6).

On the other hand, participants tend to use automated chats for later exploration stages and coverage tests. Autochat and Knowledge participants often took a design pattern in which they designed a prototypical PCA and tested its basic functionality with direct chats and improved the PCA further by reviewing it with automated chats (A1, A6, K1, and K5). Many participants pointed out that automated chats were efficient for reviewing student profiles in breadth and depth (A4, A5, A10, K2, K7, and K10) and helpful in finding corner cases they had not thought of (K4 and K7). Nevertheless, some participants complained about limited controllability and intervention in automated chats (A1 and A5) and the gap between actual students and our simulated students due to repeated responses (A2 and A3).

Test cases were helpful for node-oriented debugging of PCAs. Participants used them when they reviewed how a PCA at a particular node responds (B5) and when they tested single-turn interactions quickly without having lengthy and manual conversations (B1). Most participants preferred direct chats and automated chats to test cases for their review (Appendix C.2, Questions 1, 3, and 5, direct chat: 5.6, automated chat: 5.3, test cases: 4.5), indicating the importance of reviewing multi-turn interactions in education.

5.4.4. The difference in PCA qualities among conditions was insignificant

On average, Autochat scored the highest quality (Table 5), but we did not observe statistical differences among the conditions for knowledge (=1.75, =.416), motivation factor (=4.89, =.087), psychosocial contexts (=2.49, =.287), and usability (=1.32, =.517). PCA qualities also did not correlate with the size of the state diagram graphs (Spearman rank-order correlation, =.179, =.581, =.486, and =.533, respectively).

The result may suggest that even though Autochat participants could review more automated chats and student profiles during their design, they needed additional support to incorporate their insights and findings from automated chats into their PCA design. Participants struggled to write the instruction to PCAs for each node (A3 and K5) and wanted autosuggestions and feedback for the instruction (K1 and A9), which contributes to the quality of PCAs. The observations imply that the next bottleneck in the LLM-based PCA design process is debugging PCA according to evaluation results.

It is also possible that teachers may not have sufficient learning science knowledge to make the best instructional design decisions based on students’ traits (Harlen and Holroyd, 1997). For instance, O1 designed a PCA for the first time and remarked that she struggled to define good characteristics of PCAs until she saw automated chats as a starting point for creativity. O5 recalled an instance where she tested a student’s message, “stupid robot,” and her PCA responded, “Thank you! You are also a nice student […] Bye.” Although O5 found this awkward, she could not think of a better pedagogical response to stop students from bullying the PCA.

Future work could use well-established guidelines and theories (Koedinger et al., 2012; Schwartz et al., 2016) on personalized instructions to scaffold end-to-end PCA design. When a teacher identifies an issue with a simulated student with low self-efficacy, a system may suggest changes to PCA design for the teacher to add confidence-boosting strategies to PCAs.

Two sets of bar graphs comparing the task load on a 7-point Likert scale across three conditions: Baseline, Autochat, and Knowledge. The x-axis represents six factors: mental, physical, temporal, effort, performance, and frustration. The bar graph on the left shows the task load for the creation task, where statistically significant differences were observed between the Autochat and Knowledge conditions in the physical and temporal task loads, with p-values of 0.002 and less than 0.001, respectively. The bar graph on the right shows the task load for the review task, where similarly, statistically significant differences were found between the Autochat and Knowledge conditions in the physical and temporal task loads, with p-values of 0.042 and 0.015, respectively.

Bar graphs comparing the number of levels covered across three conditions: Baseline, Autochat, and Knowledge. The x-axis represents five traits: knowledge level, goal commitment, motivation, self-efficacy, and stress. The y-axis ranges from 0 to 5. The number of levels for all five traits shows statistically significant differences between the Autochat and Baseline conditions, with p-values of 0.002, 0.003, 0.005, 0.002, and 0.028, respectively. Additionally, for the traits of knowledge level, goal commitment, and motivation, significant differences were observed between the Autochat and Knowledge conditions, with p-values of 0.024, 0.016, and 0.035, respectively.

| Trait | Baseline | Autochat | Knowledge |

| Knowledge coverage | 16.51.4 | 17.00.9 | 16.31.5 |

| Motivation factor coverage | 15.61.2 | 17.41.8 | 16.21.0 |

| Psychosocial context coverage | 15.40.6 | 16.31.5 | 15.40.7 |

| Usability | 16.00.9 | 16.21.3 | 16.01.1 |

Each row presents scores of PCAs per trait (i.e., knowledge coverage, motivation factor coverage, psychosocial context coverage, and usability).

A diagram illustrating iterative PCA design examples from additional session participants. The diagram is organized into three rows, each showing how participants modified the state diagram using different features: direct chats, automated chats, and test cases. For early design, direct chat enabled O1 to address a specific scenario of students struggling with terminology memorization. O1 added a node instructing ”Interpret roots of the word” after monitoring the chatbot’s response using linguistic roots. For the coverage test, automated chat helped O5 refine the PCA for students with low self-efficacy. Instead of providing immediate explanations, O5 modified the instruction to ask about phase transitions step by step. Then, O5 added a new node to flow into another knowledge component when the chatbot paused for additional questions. Finally, for node debugging, O6 added an instruction to ask about real-world examples of phase transitions and confirmed the modification by retesting with the same input message.

6. Discussion

We revisit our research questions briefly and discuss how TeachTune contributes to augmenting the PCA design process.

6.1. Student Traits for Inclusive Education

Teachers expressed their need to review how PCAs adapt to students’ diverse knowledge levels, motivation factors, and psychosocial contextual influence. Prior literature on student traits (Richardson et al., 2012) provided us with extensive dimensions of student traits, and our interview complemented them with teachers’ practical priority and concern among them. Our approach may highlight that we might need a more holistic understanding that spans theories, quantitative analysis, and teacher interviews to identify key challenges teachers face and derive effective design goals.

Moreover, although TeachTune satisfied the basic needs for simulating these student traits, teachers wanted additional characteristics to include more diverse student types and teaching scenarios in actual class settings (A5, A8, A10, and K7). These additional needs should not only include the 42 student traits (Richardson et al., 2012) investigated in our formative interview but should also involve the traits of marginalized learners (Thomas et al., 2024; Manspile et al., 2021). For instance, students with cognitive disabilities need adaptive delivery of information, and immigrant learners would benefit from culturally friendly examples. Reviewing PCAs before deployment with simulated marginalized students will make classes inclusive and prevent technologies from widening skill gaps (Beane, 2024).

6.2. Tolerance for the Alignment Gap

We observed 5% and 10% median alignment gaps between our simulated students and teachers’ perceptions (RQ2). This degree of gap could be bearable in the context of simulating conversations because simulated students are primarily designed for teachers to review interactions, not to replicate a particular student precisely, and real students also often show discrepancies in their knowledge states and behaviors by making mistakes and guess answers (Baker et al., 2008). Recent research on knowledge tracing suggests that students make more than 10% of slips and guesses in a science examination, and the rate depends on students’ proficiency (Liao and Bolt, 2024). The individualized rate of slips and guesses per student profile (e.g., increasing the frequency of guesses for a highly motivated simulated student) may improve the believability of simulated students. Teachers will also need interfaces that transparently reveal the state of simulated students (e.g., Fig. 4 C) to distinguish system errors from intended slips.

6.3. Using Simulated Students for Analysis

Our user study showed that TeachTune helps teachers consider a broader range of students and can help them review their PCAs more robustly before deployment (RQ3). PCA design is an iterative process, and it continues after deploying PCAs to classes. Student profiles and simulated students can support teachers’ post-deployment design process by leveraging students’ conversation history with PCAs. For instance, teachers can group students by their predefined student profiles as a unit of analysis and compare learning gain among the groups to identify design issues in PCA. Simulated students can also serve as an interactive analysis tool. Teachers may fine-tune a simulated student with specific student-PCA conversation data and interactively replay (e.g., ask questions to gain deeper insight about the student) previous learning sessions with the simulated agent aligned with a particular student.

6.4. Profile-oriented Design Workflow

During formative interviews, we observed that teachers unfamiliar with reviewing PCAs often weave multiple student profiles into a single direct chat. To address the issue, TeachTune proposed a two-step profile-oriented workflow comprising steps for (1) organizing diverse student profiles defined by student traits and (2) observing the test messages generated from these profiles. Our user study showed that this profile-oriented review process could elicit diverse student profiles from teachers and help them explore extensive evaluation spaces. The effectiveness of this two-step workflow lies in its hierarchical structure, which first organizes the evaluation scope at the target user level and then branches into specific scenarios each user might encounter. Such a hierarchical approach can be particularly beneficial for laypeople who try making LLM-infused tools by themselves but are not familiar with reviewing them. For example, when a non-expert develops an LLM application, it will be easier to consider potential user groups than to think of corner cases immediately. The two-step workflow with simulated user groups can scaffold the creator to review the application step by step and generate user scenarios rapidly. We expect that the LLM-assisted profile-oriented design workflow is generalizable to diverse creative tasks, such as UX design (Wolff and Seffah, 2011), service design (Idoughi et al., 2012), and video creation (Choi et al., 2024), that require a profound and extensive understanding of target users.

6.5. Risks of Amplifying Stereotypes of Students

Our technical evaluation assumed teachers’ expectations of student behaviors as ground truth, considering that simulated students are proxies for automating testing teachers intend. However, in practical classes, there are risks of teachers having stereotypes or TeachTune amplifying their bias toward students over time.

During the observational sessions, we asked teachers’ perspectives, and teachers expressed varying levels of concern. O3 commented that private tutors would have limited opportunities to observe their students beyond lessons, making them dependent on simulated behaviors. Conversely, O1 was concerned about her possible stereotypes of student behaviors and relied on automated chat to confirm behaviors she expected. O4 stated that automated chats would not bias teachers as they know the chats are simulated and just a point of reference.

Teachers will need an additional feedback loop to close the gap between their expectations and actual students by deploying PCAs iteratively and monitoring student interaction logs as hypothesis testing. Future work may observe and support how teachers fill or widen the gap at a more longitudinal time scale (e.g., a semester with multiple lessons).

7. Limitations and Future Work

We outline the limitations of the work. First, we did not confirm the pedagogical effect of PCAs on students’ learning gain and attitude, as we only evaluated the quality of PCAs with experts. We could run lab studies in which middle school students use the PCAs designed by our participants, and we measure their learning gain on phase transitions through a pre-and post-test. Student-involved studies could also reveal the gap between teachers’ expectations and students’ actual learning; even though a teacher tests a student profile and designs a PCA to cover it, a student of the profile may not find it helpful. Our research focused on investigating the gap between simulated students’ behaviors and teachers’ expectations. Future work can explore the alignment gap between simulated and actual students and develop interactions to guide teachers in debugging their PCAs and closing the gap. Our preliminary findings will act as a foundational step to move on to safer student-involved studies.