Task-Oriented Image Transmission for Scene Classification in Unmanned Aerial Systems

Abstract

The vigorous developments of Internet of Things make it possible to extend its computing and storage capabilities to computing tasks in the aerial system with collaboration of cloud and edge, especially for artificial intelligence (AI) tasks based on deep learning (DL). Collecting a large amount of image/video data, Unmanned aerial vehicles (UAVs) can only handover intelligent analysis tasks to the back-end mobile edge computing (MEC) server due to their limited storage and computing capabilities. How to efficiently transmit the most correlated information for the AI model is a challenging topic. Inspired by the task-oriented communication in recent years, we propose a new aerial image transmission paradigm for the scene classification task. A lightweight model is developed on the front-end UAV for semantic blocks transmission with perception of images and channel conditions. In order to achieve the tradeoff between transmission latency and classification accuracy, deep reinforcement learning (DRL) is used to explore the semantic blocks which have the best contribution to the back-end classifier under various channel conditions. Experimental results show that the proposed method can significantly improve classification accuracy compared to the fixed transmission strategy and traditional content perception methods.

Index Terms:

Aerial image transmission, task-oriented communication, compressive sensing, reinforcement learning (RL).I Introduction

With the exponential growth of the number of mobile edge devices and the huge improvement of computing capabilities of edge devices, increasingly computing demand has been decentralized from the cloud to the edge [1, 2]. Under the coverage of mobile networks, UAVs can provide services for surrounding users through communication with base stations (BS), thereby creating an environment with high performance, low latency and high bandwidth [3]. Image transmission is one of the most essential tasks that drones need to perform, which can capture high-resolution (HR) images that are unreachable for humans beings in high-altitude environments. Due to the changes in flight area and unstable channel conditions, there are still many challenges for unmanned aerial vehicles (UAV) to maintain low latency in high-definition image transmission scenarios. Therefore, it is necessary to study the transmission strategy and its reliability based on aerial images.

Intelligent image analysis technologies such as image classification, image segmentation, and object detection assist in solving the problems in drone scenes [4]. A key to the artificial intelligence (AI) aided edge computing is how to efficiently implement the AI algorithms with the leverage of cloud and edge [5]. Particularly, if AI algorithms are deployed on front-end drones, high-performance computing cannot be guaranteed due to the limited storage space, computing power, and energy consumption. A task-oriented communication problem arises that the received bits convey the intention of the transmitter. Proper analysis of the semantics conveyed by the target task can guide the transmitter and receiver to achieve a common goal whenever communication occurs [6, 7]. A video coding for machines (VCM) framework for machine vision has been proposed in [8], where the image content positively contributing to model inference should be preferentially extracted to ensure that the accurate completion of AI tasks.

Existing MEC-based image transmission framework tends to either encode the images in terms of the change of channel conditions or compress the images on the basis of their content. However, these two schemes have their own deficiencies. Classical methods such as joint source-channel coding (JSCC) [9, 10] directly maps the image pixel values to the complex-valued channel input symbols, and jointly learns the encoding and decoding neural networks with feedback from channels. With particular focus on the quality of the reconstructed images, they did not consider the entire system as a whole to transmit the image semantics for the back-end machine vision tasks. Some studies extract semantic concepts from the texture structure in images to improve the semantic-preserving ability in down-stream vision tasks [11, 12]. Images are compressed by the global similarity within the context pixels in [13] or the block-based compressive sensing (CS) with different sampling rates based on saliencies of semantic blocks [14]. Nevertheless, these methods only encode the artifacts into bits, which are not tightly coupled with downstream tasks. It is critical to find out useful image pixels with the analysis of transmission tasks. After all, the machine vision system based on convolutional neural network (CNN) understands image concepts differently from humans.

Inspired by the idea of task-oriented communication, this article aims at building a new aerial image transmission paradigm, as illustrated in Fig. 1. We consider a remote sensing scenario in which the HR images captured by onboard cameras should be classified immediately. However, the computation resources of the drones are much less than that of the MEC server. There should be a tradeoff between the classification accuracy and transmission latency, which are two main factors determining system performance.

The contributions of this article are summarized as follows:

-

•

We propose a new aerial image transmission paradigm for the scene classification task. In the training stage, deep reinforcement learning (DRL) is used to explore the semantic blocks which have the best contribution to the back-end classifier under various channel conditions. During the testing stage, the model can independently compress the most essential blocks in the HR images for transmission.

-

•

Different from traditional task-oriented communications, the proposed algorithm can perceive channel environment by considering the channel gain as the state, which makes it possible to extract semantic blocks for compression under any channel condition. Moreover, the proposed algorithm is suitable for any target model due to the invariant state space and action space.

-

•

A good balance between transmission latency and classification accuracy is achieved by introducing both of them in the reward function. Experiments show that the system has the ability of making decisions under different channel conditions. Under the premise of transmitting the same amount of bits, the system performance is better than manual designed and traditional content perception methods.

The remainder of this paper is organized as follows. Related works are discussed in Section II. Section III gives a detailed description of the system model. Afterwards, the DRL-based system optimization algorithm is proposed in Section IV. Section V verifies the performance of the proposed algorithm through multiple simulations. Finally, Section VI concludes the paper.

II Related Works

In this section, we first review the related works on task-oriented communications. We then introduce the state-of-the-art in computer vision with deep reinforcement learning.

II-A Task-oriented Communication

In the era of Internet of Everything, communication plays an important role in the collaborative computing tasks of multiple sensors and computing resources. It is necessary to study the application of task-oriented communication in the future IoT paradigm, which dynamically adjusts the information transmission strategy adapted to the task objective and the underlying changes of the communication environments. The works in [6, 7] started from Shannon and Weaver’s original definition and categorization of communication, discussing the value of information while proposing the vision of task-oriented communication and its effectiveness from the perspectives of information, communication, control theory, and computer science.

With the rapid improvement of hardware performance, more and more deep learning models are applied in the communication scenarios, even in the task-oriented communication systems [15, 16, 17, 18, 19, 20]. Xie et al. proposed the deep semantic communication (DeepSC) method for wireless text transmission [16], and its variations, L-DeepSC [15], DeepSC-S [17], and MU-DeepSC [19] for complexity reduction, speech transmission, and multi-user transmission with multi-modal data. Particularly, L-DeepSC [15] extends DeepSC to a lite mode, which is affordable for power-constrained IoT devices. [17] is designed for speech signal transmission by realizing semantic information exchange through an attention mechanism. Moreover, [20] extends DeepSC from point to point transmission to a multi-user transmission system, in which the source encoder and channel encoder are both composed of deep networks. Particularly, L-DeepSC [15] extends DeepSC to a lite mode, which is affordable for power-constrained IoT devices. [19] is designed for speech signal transmission by realizing semantic information exchange through an attention mechanism. Moreover, [20] extends DeepSC from point to point transmission to a multi-user transmission system for supporting multimodal data transmission. [17] subsequently introduced Reed Solomon (RS) channel coding to enhance the reliability of transmission. Similarly, an adaptive circular transformer is applied to semantic communication for more flexible transmission [18].

II-B Computer Vision with Deep Reinforcement Learning

Reinforcement learning (RL) is a classic machine learning method which constructs a Markov decision process (MDP) where agents acquire rewards from environment to update the model parameters in a trial-and-error manner. Deep reinforcement learning (DRL) combines the perception ability of deep learning with the decision-making ability of reinforcement learning. In the high-dimensional continuous space of the state-action pair space, value-based learning will encounter difficulties, and the strategy-based reinforcement learning shows better convergence [21, 22].

A growing number of researchers are applying DRL to solve the perception-decision problem in computer vision tasks [23]. [24] applies a policy gradient based on random curiosity-driven exploration to image coding, resulting in good performance in Atari games. Uzkent et al. select HR image blocks with policy gradients to improve classification accuracy with LR images as states [25]. Yuan et al. control the compression of the target model with agent exploring the relationship between the input and output [26]. Hu et al. jointly optimize the offloading decision and video coding parameters of the MEC-based face recognition framework with Q-learning [27]. Li et al. improve the bit rate by selecting quantization parameters (QPs) for different coding units (CUs) with policy gradients [28]. Li et al. propose an image cropping model based on weakly-supervised DRL in [29]. In the same way, Zhang et al. construct two agents to move and zoom the bounding-box with the emotional and background information in the image [30]. By simulating an agent as a brush, Zhang et al. sequentially relight portraits by DRL and learned a coarse-to-fine policy with local interpretability [31]. Yarats et al directly construct a value function in the pixel domain and regularize the learned policy with model-free DRL, so that the image has better features and visual effects [32].

III System Model

In this section, we propose a task-oriented aerial image transmission framework, as shown in Fig. 2. The whole system is mainly composed of the front-end UAV and the back-end MEC server, where a semantic extraction network and a block-wise deep compressive sampling model are mainly deployed on the front-end UAV while a reconstruction network and the target model are grouped at the back-end MEC server. Assuming that the structure of the target scene classification classifier is agnostic, we can only optimize and adjust the entire system through the output of the target model, so that the proposed scheme can flexibly adapt to a variety of target models. With the perception of the channel conditions and the content in LR images before CS, the policy network selects reliable image regions for sampling. When compressed data is transmitted over a wireless channel, the latency is determined by the amount of data and the channel rate. Afterwards, the reconstruction network recovers the image blocks compressed by the semantic extraction network from the compressed data. The reward is calculated by the model prediction and the latency, so as to optimize the whole system. The detail will be introduced in the following.

III-A Semantic Extraction Module

Semantic blocks are defined as a collection of image blocks that contribute the most to the correct classification of the back-end model. The semantic extraction module is to extract effective semantic blocks for compression through the perception of image content and channel gain, which mainly includes a policy network. Due to the limited computing resources on the sensor, directly using HR images for semantic extraction will cause high energy consumption and latency. Aided by the principle of CS sampling, the front-end sensor can capture LR images in advance, which can be regarded as a prerequisite for semantic extraction. As a branch of joint perception, LR images can also provide semantic information about HR images to guide the model to find out critical pixel regions. The other channel sensing branches are able to provide information for channel gains, enabling the model to select semantic blocks at different scales. Then, the output policy network for semantic extraction is defined as follows:

| (1) |

where denotes the input LR image and channel gain respectively. is a ResNet18 for image feature extraction while is a multilayer perceptron (MLP). is also a MLP for joint decision-making. respectively indicates the corresponding parameters of these parts.

As shown in Fig. 2, it is assumed that the HR images are divided into 4 4 blocks, where each block provides different semantics for the back-end model. The semantic extraction module can choose any combination of them. Therefore, p in Eq. (1) defines sixteen Bernoulli distributions where indicates the probability of each block in the image being a semantic at each dimension . The actions performed by the policy network can be sampled at each dimension , where indicates that the -th semantic block is extracted for compression and otherwise. Therefore, the joint probability density function of the output of the policy network can be expressed as

| (2) |

Then we would model the policy network as a single-step MDP, and the detailed optimization process will be introduced in Sec. IV.

III-B Block-wise Deep CS

Compressed sensing, also called sparse sampling, is a technique for finding sparse solutions of underdetermined linear systems, which has been widely used in the field of signal processing in the past two decades. It maps a linear signal to the compressed domain through a sampling matrix. For high-dimensional image signals, the sampling procedure is performed block by block:

| (3) |

where is the vector form of ’th sub-block in pixel domain. is the compressed signal of . denotes a sampling matrix, which is implemented by a learnable convolution kernel in our system. Note that the sub-block in CS is different from the semantic blocks introduced in previous section.

Traditional methods of recovering the signal need to solve a convex optimization problem of -norm, which is usually of high complexity [33]. In recent years, with the rise of deep learning, some researchers have used CNN to reconstruct the signal [34]. Since the sampling matrix can also be implemented through a convolutional layer, both compression and reconstruction steps can be trained through an end-to-end CNN. In our system, is a vector reshaped from an image block with channels RGB, and denotes the height and width of the sampled sub-blocks. We use a kernel to convolve the image:

| (4) |

where is the convolution kernel without bias, , is the sampling rate. . indicates a convolution operation with a stride of k. means to stitch all image blocks into a whole image. is the total number of image blocks. The elements in the convolution kernel and the sampling matrix are one-to-one correspondence. The two procedures described by Eq. (3) and Eq. (4) are equivalent.

A denoising-based approximate message passing algorithm (D-AMP) was proposed in [33], which alternately iterates the residual variables and the reconstructed signal through a denoising function:

| (5) |

| (6) |

where is the iterative residual in the compressed domain, is a Gaussian denoising filter with parameter . is the divergence of the output Gaussian distribution for the correction of the residual. is the left inverse matrix of .

In order to use CNN to replace the residual correction term to avoid complicated divergence calculations, we can use a residual structure CNN to predict a new residual from the original term, as illustrated in Fig. 3. Suppose that the residual consists of iterations, in each iteration represents the reconstructed image output from the previous stage. The residuals for each sub-block in the compressed domain can be calculated through the sampling matrix. The residual iteration in the compressed domain may still cause serious blocking effects. Mapping the residuals back to the pixel domain with for correction can solve this problem:

| (7) |

| (8) |

where denotes the residual vector of ’th image block at ’th stage, is the residual image at ’th stage. represents the kernel corresponding to , indicates the transposed convolution with a stride of .

Then update the residual in the pixel domain

| (9) |

where is the parameters of the residual network with two convolutional layers. represents the updated residuals at -th stage.

Finally, the image recovered at this stage is output by the denoising network:

| (10) |

where indicates the parameters of the denoising network with three convolutional layers.

Since only the semantic part of the image is transmitted, but the reconstruction procedure is performed on the entire image, the compressed values of the non-semantic part is set to 0 to reduce the computational complexity of the reconstruction network. Therefore, the parameters of all layers do not contain bias.

III-C Image Transmission Model

The transmission latency of the system is mainly determined by the transmission rate and the number of bits transmitted. The transmission latency of the uplink is considered in the proposed system. We assume that the channel between the drones and the BS follows the stationary Rayleigh fading distribution, where the channel gain and transmission rate remain static within a small time interval. Then, the uplink transmission rate at time slot is as follows:

| (11) |

where denotes the BS bandwidth allocated to drones. The signal-to-interference-plus-noise ratio (SINR) for the signals received at the BS is expressed as , where is the transmission power of drones. The additive white Gaussian noise (AWGN) is . The channel gain for the signals received at the BS is for instantaneous perception of communication environment. The distance between drones and BS is the most important factor affecting the channel gain. We will consider the path loss exponent and Rayleigh distribution with unit mean. Generally, channel gain is an unlimited continuous variable. It can be quantified in {-30 dB, -20 dB, -10 dB, 0 dB, 10 dB, 20 dB, 30 dB}, making it easier for the model to discriminate.

Assuming that the policy network has extracted a total of semantic blocks, the number of bits required to be transmitted is given by

| (12) |

where and represent the height and width of the HR images. implies number of quantization bits in compressed domain.

Then the uplink transmission latency is given by

| (13) |

As mentioned above that the transmission rate in each time slot under a stationary channel is constant, the latency is approximately the ratio of the number of transmitted bits to .

IV System Optimization

In this section, we will give a detailed introduction of the optimization procedure of the policy network and the deep CS network for semantic extraction respectively.

IV-A Optimization of Policy Network

As described in Sec. III, the policy network is modeled as a single-step MDP. At any time, the image captured by the sensor and the channel gain sensed in real time are two independent variables. For the proposed model, the image is a high-dimensional continuous variable while the channel gain is a single discrete value. The output policy p implies discrete actions that may be performed by the agent. It is tricky to optimize our high-dimensional system with traditional reinforcement learning methods based on value functions. Policy gradient is not only suitable for our high-dimensional system, but also capable of leanring random policies that cannot be learned by the value function. The purpose is to optimize the parameters by maximizing the expected value of cumulative rewards on all policy trajectories:

| (14) |

where is a trajectory of states, actions, and rewards sampled from an interaction with our policy, and represents the sum of all rewards for this trajectory.

For our single-step MDP process, trajectory is composed of and a. The objective is to maximize the accuracy of the back-end target model while minimizing the uplink transmission latency of the system by maximizing a reward function:

| (15) |

where and respectively represent the total number of and . indicates the single-step reward from the system with the input of and , which is defined by the system’s transmission latency and the correctness of the target model

| (16) |

where denotes the -th output of the backend target model. is the label of input X, and is a function negatively correlated to . A penalty term is represented by . Two kinds of are tried in proposed system

| (17) |

where is a positive hyperparameter.

The gradients of can be updated along the gradient ascent. To avoid over-update of the network with great rewards and keep training stable, an offset need to be subtracted:

| (18) |

where is the system reward when performing a baseline action , which directly selects the image blocks according to the larger value of the output policy without sampling

| (19) |

Since the parameters of the policy network are divided into three parts, and the two input variables are independent of each other, we use the control variable method to train it. This optimization process is divided into three stages. First, the channel gain is kept changing and are kept constant in the outer loop, while and are updated with different LR images in the inner loop. In the middle stage, the LR images are kept changing and are kept constant in the outer loop, while and are updated with different channel gains in the inner loop. In the last stage, are updated synchronously with the LR images and channel gains being sampled randomly.

IV-B Optimization of Deep CS Model

As illustrated in Sec. III, the sampling and compression processes of CS are carried out simultaneously. By deploying the sampling matrix in the front-end UAV, the measured values of semantic blocks in compressed domain can be obtained quickly. The measurement matrices of traditional CS algorithms are usually Gaussian matrices or Hadamard matrices, which should be constructed to satisfy Restricted Isometry Property (RIP) conditions. In proposed system, can be implemented through a convolutional layer and an optimal parameter within the kernel can be learned over the data distribution. Because the deep reconstruction procedure requires the auxiliary calculation of the inverse matrix , it is necessary to pretrain the sampling matrix through an auxiliary layer due to the underivativity of the inversion operation. Assuming that the transposed convolution kernel of the auxiliary layer is with a stride of , an initial reconstruction process can be given by

| (20) |

where Eq. (4) and Eq. (20) compose a pair of compressing and preliminary reconstruction process. The pre-reconstructed image can be approximated by minimizing the mean square error (MSE) loss between X and :

| (21) |

Once the optimal sampling kernel is learned, can be calculated according to the inverse matrix . The pre-reconstructed image for each HR image can be calculated through this auxiliary, which can be used to optimize the deep reconstruction layers in the subsequent stages. Given the set of original images and reconstructed images , the parameters in multi-stage reconstruction layers can also be updated with MSE loss:

| (22) |

Although only semantic blocks are compressed and transmitted, in order to avoid the block effect caused by reconstruction, the training process of the entire reconstruction network is carried out on the full images, without any special operations for any macroblock. Since the reconstructed network does not contain bias, setting the measurement values of the non-semantic regions to 0 during reconstruction will not affect the reconstruction quality too much.

When optimizing the entire system, the two modules are updated in an alternate iterative manner. First, the deep CS model is trained with the full HR images. Afterwards, the semantic extraction model can be trained by this model, since the calculation of system rewards depends on the quality of compression reconstruction. Driven by policy network trained at the previous stage, the deep CS model can be finetuned with HR images.

V Simulation Results and Discussion

In this section, we evaluate the performance of the proposed task oriented algorithm for joint image compression and classification with computer simulations. In addition, we compare the proposed algorithm with other six manual designed and traditional content perception policies.

V-A Simulation Setup

In this section, the experimental setup for the proposed scheme has been introduced. The experiments are conducted in an Ubuntu operating system (CPU Intel core i7-10700 2.9 GHz; memory 64GB, GPU NVIDIA GeForce RTX 3090, which contains 10496 CUDA computing core units and 24GB graphics memory). We use Python and deep learning framework of Pytorch for simulation.

The transmission power of the drones, the noise of the channel and the bandwidth are fixed to 15 dBm, -97 dB, and 100 KHz, respectively. We divide the channel gains into 7 states as the input of the perception network: dB. As a result, their corresponding 7 uplink transmission rates are kbps.

We evaluated the performance of the proposed algorithm on the Aerial Image Dataset (AID) dataset [35] which has 10000 images with 30 different scene classes of size . The 30 aerial scene types are as follows: airport, bare land, baseball field, beach, bridge, center, church, commercial, dense residential, desert, farmland, forest, industrial, meadow, medium residential, mountain, park, parking, playground, pond, port, railway station, resort, river, school, sparse residential, square, stadium, storage tanks and viaduct. Each class contains 220 to 420 samples. samples are randomly selected as the training set with the other samples as the test set. These two sets are consistent during training and testing stages of all aforementioned modules. The target modeled deployed on the back-end server is a ResNet34 with the input size of . Therefore, all HR images are resized to this size. The size of each semantic block is . The receptive field of proposed deep CS model compress is . The sampling rate is set to 0.3 for all of the semantic blocks. All experiments use 8-bit quantization in the compressed domain by default.

V-B Simulation Results

In the experimental environment set up in the previous section, the performance of two reward functions have been tested, as shown in Tab. I. The first two rows of Tab. I indicate 7 channel states and their corresponding transmission rates respectively. The third row means the transmission latency of full HR images in the compressed domain. The two reward functions are and . represents the average number of semantic blocks extracted and transmitted by the two policies learned under different channel conditions. indicates the average semantic data transmitted by the two policies under different channel conditions. is the average transmission latency in these 7 states.

It can be seen from Tab. I that the proposed method can extract different amounts of blocks for transmission according to channel conditions. Additionally, the proposed policy learning method has been compared with 6 other policies under the premise of transmitting the same number of semantic blocks. Assuming that under the gain index , there are samples in the transmitted samples can be correctly classified by the back-end model by the proposed method, then its accuracy is calculated by . Assuming that under the channel condition , the number of semantic blocks transmitted by the -th test sample is , then other policies are controlled to also transmit semantic blocks, which can ensure the fairness of the experiment.

| (dB) | -30 | -20 | -10 | 0 | 10 | 20 | 30 | ||

|---|---|---|---|---|---|---|---|---|---|

| (kbps) | 105 | 355 | 675 | 1006 | 1338 | 1670 | 2003 | ||

| (ms) | 3438 | 1018 | 535 | 359 | 270 | 216 | 180 | ||

| 9.129 | 9.273 | 10.05 | 10.727 | 11.311 | 11.36 | 11.735 | |||

| (kB) | 25.766 | 26.172 | 28.365 | 30.276 | 31.924 | 32.062 | 33.121 | ||

| (ms) | 1962 | 590 | 336 | 241 | 191 | 154 | 132 | ||

| Accuracy | proposed | 0.822 | 0.831 | 0.867 | 0.893 | 0.912 | 0.912 | 0.919 | |

| policy1 | 0.709 | 0.72 | 0.757 | 0.794 | 0.825 | 0.826 | 0.847 | ||

| policy2 | 0.604 | 0.617 | 0.689 | 0.736 | 0.772 | 0.775 | 0.807 | ||

| policy3 | 0.786 | 0.801 | 0.839 | 0.863 | 0.884 | 0.886 | 0.9 | ||

| policy4 | 0.781 | 0.793 | 0.834 | 0.866 | 0.883 | 0.885 | 0.897 | ||

| policy5 | 0.314 | 0.307 | 0.419 | 0.488 | 0.529 | 0.546 | 0.582 | ||

| policy6 | 0.365 | 0.38 | 0.461 | 0.54 | 0.589 | 0.588 | 0.62 | ||

| 9.594 | 9.703 | 10.27 | 11.029 | 11.442 | 11.57 | 12.522 | |||

| (kB) | 27.077 | 27.384 | 28.986 | 31.128 | 32.294 | 32.654 | 35.342 | ||

| (ms) | 2061.38 | 617.019 | 343.174 | 247.368 | 192.976 | 156.336 | 141.146 | ||

| Accuracy | proposed | 0.833 | 0.839 | 0.87 | 0.901 | 0.911 | 0.914 | 0.931 | |

| policy1 | 0.717 | 0.72 | 0.765 | 0.811 | 0.837 | 0.844 | 0.884 | ||

| policy2 | 0.632 | 0.64 | 0.689 | 0.752 | 0.789 | 0.796 | 0.846 | ||

| policy3 | 0.802 | 0.805 | 0.846 | 0.875 | 0.892 | 0.894 | 0.92 | ||

| policy4 | 0.793 | 0.799 | 0.833 | 0.869 | 0.885 | 0.889 | 0.913 | ||

| policy5 | 0.376 | 0.389 | 0.457 | 0.535 | 0.58 | 0.597 | 0.691 | ||

| policy6 | 0.423 | 0.437 | 0.496 | 0.575 | 0.609 | 0.621 | 0.71 | ||

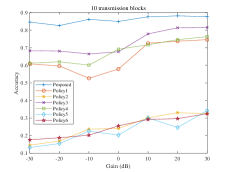

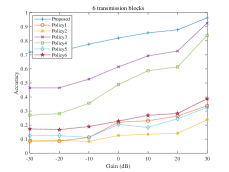

Now we will give a detailed introduction about several other comparative policies. selects semantic blocks from left to right in line order. extracts semantic blocks from top to bottom in the order of the columns. selects semantic blocks in a clockwise order from the center to the outside. On the contrary, extracts semantic blocks in a counterclockwise order from the center to the outside. randomly chooses semantic blocks that are the same as the proposed method for transmission each time. selects semantic blocks in order from largest to smallest in accordance with the saliency of semantic blocks. Tab. I reveals that the accuracy of proposed aerial image classification task offloading scheme is at least two percentage points higher than that of other traditional methods regardless of the channel conditions. Moreover, the proposed method can adaptively adjust the optimal policy of semantic extraction based on the change of channel conditions, focusing more on the semantic transmission latency when the channel conditions are poor while focusing on the completion of back-end classification task under better channel conditions.

Fig. 4 shows the number distribution of transmission blocks under different channel conditions with two different reward functions. The horizontal axis represents block numbers for transmission and the vertical axis represents number of samples that transmit semantic blocks , where indicates a 0-1 function being 1 when the condition in brackets is met, 0 otherwise. It is pretty obvious that the model tends to transmit more semantic blocks under high channel gain and tends to transmit fewer semantic blocks under low channel gain.

The back-end accuracies of target model with two reward functions compared to other policies under different channel conditions are shown in Fig. 5 and Fig. 6. The horizontal axis in each figure still shows 7 different channel gains, and the vertical axis denotes the classification accuracy of the back-end model under the constraints of transmission blocks. The number of samples successfully classified by the back-end model under the constraint of is . Therefore, the accuracy on the curves can be calculated as . The two reward functions of proposed system can make the back-end accuracy better than other policies under the constraints of the same channel gain and transmission volume, especially in a poor channel environment.

Deep reconstruction of full HR images at the final stage are shown in Fig. 7, where from left to right are the original image, reconstructed full image with . Fig. 8 shows the policies learned with two reward functions. From left to right, they are the semantic blocks reconstructed under 7 channel conditions.

VI Conclusion

In this article, we have studied the task oriented communication algorithm for image classification task offloading in aerial systems. In the scenario of aerial image transmission for scene classification task, a joint semantic extraction and compression model is proposed. Under the guidance of the policy gradient-based deep reinforcement learning algorithm, considering the system’s uplink transmission latency and the classification accuracy of the back-end target model as the optimization objective, the proposed system can make optimal semantic extraction decisions under different channel conditions. Simulation results have shown that the proposed joint perception for decision scheme is feasible that a optimal balance between uplink transmission latency and classification accuracy can be achieved. Compared to the traditonal methods, it significantly improves the classification accuracy under the same transmission condition.

References

- [1] W. Shi, J. Cao, Q. Zhang, Y. Li, and L. Xu, “Edge computing: Vision and challenges,” IEEE Internet Things J., vol. 3, no. 5, pp. 637–646, Oct. 2016.

- [2] Y. Mao, C. You, J. Zhang, K. Huang, and K. B. Letaief, “A survey on mobile edge computing: The communication perspective,” IEEE Commun. Surv. Tutor., vol. 19, no. 4, pp. 2322–2358, 4Q. 2017.

- [3] J. Chakareski, “UAV-IoT for next generation virtual reality,” IEEE Trans. Image Process., vol. 28, no. 12, pp. 5977–5990, Dec. 2019.

- [4] E. Karakose, “Performance evaluation of electrical transmission line detection and tracking algorithms based on image processing using UAV,” in 2017 Int. Artif. Intell. Data Process. Symp. (IDAP), Malatya, Turkey, 16–17 Sept. 2017, pp. 1–5.

- [5] B. Jiang, J. Yang, H. Xu, H. Song, and G. Zheng, “Multimedia data throughput maximization in internet-of-things system based on optimization of cache-enabled UAV,” IEEE Internet Things J., vol. 6, no. 2, pp. 3525–3532, Apr. 2019.

- [6] E. C. Strinati and S. Barbarossa, “6G networks: Beyond shannon towards semantic and goal-oriented communications,” Comput. Netw., vol. 190, p. 107930, 2021.

- [7] A. Mostaani, T. X. Vu, and S. Chatzinotas, “Task-oriented communication system design in cyber-physical systems: A survey on theory and applications,” arXiv preprint arXiv:2102.07166, 2021.

- [8] L. Duan, J. Liu, W. Yang, T. Huang, and W. Gao, “Video coding for machines: A paradigm of collaborative compression and intelligent analytics,” IEEE Trans. Image Process., vol. 29, pp. 8680–8695, 2020.

- [9] E. Bourtsoulatze, D. Burth Kurka, and D. Gunduz, “Deep joint source-channel coding for wireless image transmission,” IEEE Trans. Cogn. Commun. Netw., vol. 5, no. 3, pp. 567–579, Sept. 2019.

- [10] D. B. Kurka and D. Gunduz, “Deepjscc-f: Deep joint source-channel coding of images with feedback,” IEEE J. Sel. Areas Inf. Theory, vol. 1, no. 1, pp. 178–193, May 2020.

- [11] J. Chang, Z. Zhao, L. Yang, C. Jia, J. Zhang, and S. Ma, “Thousand to one: Semantic prior modeling for conceptual coding,” in 2021 Int. Conf. Multimedia Expo (ICME), Shenzhen, China, 5–9 July 2021, pp. 1–6.

- [12] J. Wang, Y. Duan, X. Tao, M. Xu, and J. Lu, “Semantic perceptual image compression with a laplacian pyramid of convolutional networks,” IEEE Trans. Image Process., vol. 30, pp. 4225–4237, Mar. 2021.

- [13] M. Li, K. Zhang, J. Li, W. Zuo, R. Timofte, and D. Zhang, “Learning context-based nonlocal entropy modeling for image compression,” IEEE Trans. Neural Netw. Learn. Syst, pp. 1–14, Aug. 2021.

- [14] S. Zhou, Y. He, Y. Liu, C. Li, and J. Zhang, “Multi-channel deep networks for block-based image compressive sensing,” IEEE Trans. Multimedia, vol. 23, pp. 2627–2640, Aug. 2021.

- [15] H. Xie and Z. Qin, “A lite distributed semantic communication system for internet of things,” IEEE J. Sel. Areas Commun., vol. 39, no. 1, pp. 142–153, Jan. 2021.

- [16] H. Xie, Z. Qin, G. Y. Li, and B.-H. Juang, “Deep learning enabled semantic communication systems,” IEEE Transactions on Signal Processing, vol. 69, pp. 2663–2675, Apr. 2021.

- [17] P. Jiang, C.-K. Wen, S. Jin, and G. Y. Li, “Deep source-channel coding for sentence semantic transmission with HARQ,” arXiv preprint arXiv:2106.03009, 2021.

- [18] Q. Zhou, R. Li, Z. Zhao, C. Peng, and H. Zhang, “Semantic communication with adaptive universal transformer,” arXiv preprint arXiv:2108.09119, 2021.

- [19] Z. Weng and Z. Qin, “Semantic communication systems for speech transmission,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 8, pp. 2434–2444, Aug. 2021.

- [20] H. Xie, Z. Qin, and G. Y. Li, “Task-oriented semantic communications for multimodal data,” arXiv preprint arXiv:2108.07357, 2021.

- [21] R. S. Sutton, D. McAllester, S. Singh, and Y. Mansour, “Policy gradient methods for reinforcement learning with function approximation,” in Adv. Neural Inf. Process. Syst. (NIPS), Denver, CO, USA, 29 Nov.–4 Dec. 1999, p. 1057–1063.

- [22] D. Silver, G. Lever, N. Heess, T. Degris, D. Wierstra, and M. Riedmiller, “Deterministic policy gradient algorithms,” in Proc. 31st Int. Conf. Mach. Learn., vol. 32, no. 1, Bejing, China, 22–24 Jun. 2014, pp. 387–395.

- [23] N. Le, V. S. Rathour, K. Yamazaki, K. Luu, and M. Savvides, “Deep reinforcement learning in computer vision: A comprehensive survey,” arXiv preprint arXiv:2108.11510, 2021.

- [24] J. Li, X. Shi, J. Li, X. Zhang, and J. Wang, “Random curiosity-driven exploration in deep reinforcement learning,” Neurocomputing, vol. 418, pp. 139–147, 2020.

- [25] B. Uzkent and S. Ermon, “Learning when and where to zoom with deep reinforcement learning,” in 2020 IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., Seattle, WA, USA, 13–19 June 2020, pp. 12 342–12 351.

- [26] X. Yuan, L. Ren, J. Lu, and J. Zhou, “Enhanced bayesian compression via deep reinforcement learning,” in 2019 IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. (CVPR), Long Beach, CA, USA, 16–20 June 2019, pp. 6946–6955.

- [27] H. Hu, H. Shan, C. Wang, T. Sun, X. Zhen, K. Yang, L. Yu, Z. Zhang, and T. Q. S. Quek, “Video surveillance on mobile edge networks—a reinforcement-learning-based approach,” IEEE Internet Things J., vol. 7, no. 6, pp. 4746–4760, June 2020.

- [28] X. Li, J. Shi, and Z. Chen, “Task-driven semantic coding via reinforcement learning,” IEEE Trans. Image Process., vol. 30, pp. 6307–6320, July 2021.

- [29] D. Li, H. Wu, J. Zhang, and K. Huang, “A2-RL: aesthetics aware reinforcement learning for image cropping,” in 2018 IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. (CVPR), Salt Lake City, UT, USA, 18–22 June 2018, pp. 8193–8201.

- [30] X. Zhang, Z. Li, and J. Jiang, “Emotion attention-aware collaborative deep reinforcement learning for image cropping,” IEEE Trans. Multimedia, vol. 23, pp. 2545–2560, Aug. 2021.

- [31] X. Zhang, Y. Song, Z. Li, and J. Jiang, “PR-RL:portrait relighting via deep reinforcement learning,” IEEE Trans. Multimedia, pp. 1–1, July 2021.

- [32] D. Yarats, I. Kostrikov, and R. Fergus, “Image augmentation is all you need: Regularizing deep reinforcement learning from pixels,” in 9th Int. Conf. Learn. Representations (ICLR), Virtual Event, Austria, 3–7 May 2021, pp. 1–22.

- [33] C. A. Metzler, A. Maleki, and R. G. Baraniuk, “From denoising to compressed sensing,” IEEE Trans. Inf. Theory, vol. 62, no. 9, pp. 5117–5144, Sept. 2016.

- [34] J. Zhang and B. Ghanem, “ISTA-Net: Interpretable optimization-inspired deep network for image compressive sensing,” in 2018 IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. (CVPR), Salt Lake City, UT, USA, 18-22 June 2018, pp. 1828–1837.

- [35] G.-S. Xia, J. Hu, F. Hu, B. Shi, X. Bai, Y. Zhong, L. Zhang, and X. Lu, “AID: A benchmark data set for performance evaluation of aerial scene classification,” IEEE Trans. Geosci. Remote Sens., vol. 55, no. 7, pp. 3965–3981, July 2017.