Task-oriented Embedding Counts: Heuristic Clustering-driven Feature Fine-tuning for Whole Slide Image Classification

Abstract

In the field of whole slide image (WSI) classification, multiple instance learning (MIL) serves as a promising approach, commonly decoupled into feature extraction and aggregation. In this paradigm, our observation reveals that discriminative embeddings are crucial for aggregation to the final prediction. Among all feature updating strategies, task-oriented ones can capture characteristics specifically for certain tasks. However, they can be prone to overfitting and contaminated by samples assigned with noisy labels. To address this issue, we propose a heuristic clustering-driven feature fine-tuning method (HC-FT) to enhance the performance of multiple instance learning by providing purified positive and hard negative samples. Our method first employs a well-trained MIL model to evaluate the confidence of patches. Then, patches with high confidence are marked as positive samples, while the remaining patches are used to identify crucial negative samples. After two rounds of heuristic clustering and selection, purified positive and hard negative samples are obtained to facilitate feature fine-tuning. The proposed method is evaluated on both CAMELYON16 and BRACS datasets, achieving an AUC of 97.13% and 85.85%, respectively, consistently outperforming all compared methods.

keywords:

\KWDComputational pathology , Whole slide image classification , Multiple instance learning , Task-oriented feature fine-tuning , Heuristic clustering1 Introduction

Recent advancements [57] in scanning systems, imaging technologies, and storage devices have enabled a substantial increase in the production of digital pathology slides, commonly known as whole slide images (WSI). This ever-increasing volume of WSI results in a manpower shortage dilemma in pathological analysis. To alleviate this dilemma, leveraging computerized algorithmic methods for analyzing vast quantities of WSI has emerged as a promising solution [59, 40]. The process of using artificial intelligence to analyze WSI is commonly referred to as computational pathology [1]. Computational pathology can provide patients and clinicians with more objective diagnostic and prognostic results, in the field of tumor regions detection[58], immunohistochemistry scores [44], cancer staging [64, 63], virtual staining [68], mitosis detection [53, 52], and glandular segmentation [13, 25, 56].

One of the central aims of computational pathology is to discern disease types, a task commonly referred to as WSI classification. Diverging from natural image classification tasks, one WSI possesses gigapixels, thus directly feeding a WSI into a convolutional neural network (CNN) would result in memory overflow. One of the typical solutions involves segmenting WSI into patches to reduce the processing burden. However, this approach brings another challenge: given the necessity for specialized domain knowledge in pathological image analysis and the extensive number of patches, acquiring patch-level annotations is impractical.

To tackle this challenge, multiple instance learning (MIL) [31, 41, 34, 73, 74, 35, 71, 76, 69, 60, 50, 36, 21, 14], a type of weakly supervised learning, is extensively utilized for WSI classification due to its capability [24, 23], where the whole slide image is treated as a bag of numerous unlabeled instances (patches). Originally designed for binary classification, MIL stipulates that a bag is considered positive if at least one instance within it is positive; otherwise, it is negative. Multiple instance learning networks typically comprise three components: a feature encoder that embeds patches into low-dimensional feature vectors, a MIL aggregator that integrates patch-level features to bag-level features, and a multi-layer perception (MLP) that predicts the category of the bag-level features. In natural image classification, MIL training is usually trained in an end-to-end paradigm. However, directly applying this paradigm to pathological images poses challenges [30, 55, 31, 41] resulting from the inability to store all intermediate feature maps in parallel reported in [71].

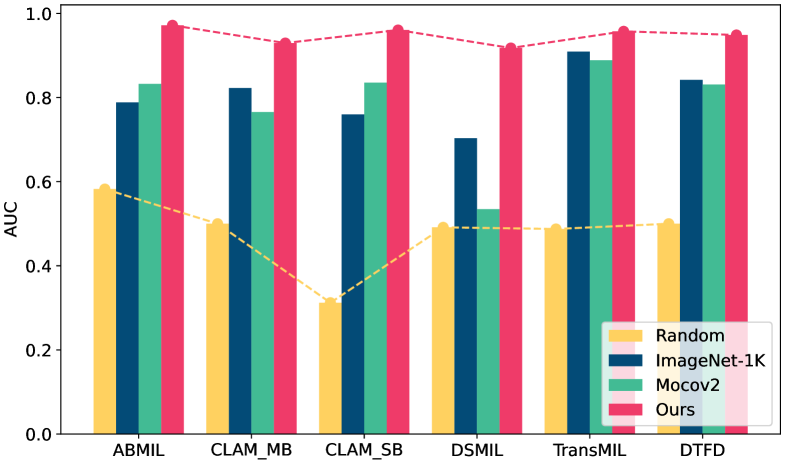

A common approach involves training the feature encoder and the aggregator separately. This typically entails using a pre-trained encoder to extract features, which is then held constant while solely optimizing the MIL aggregator. Currently, several strategies exist for updating the feature encoder, including initializing weights randomly, employing weights pre-trained on natural images through supervised learning, utilizing weights pre-trained on homogeneous images via self-supervised learning (SSL), and leveraging weights pre-trained on task-oriented images via supervised learning. Our observation on CAMELYON16, as depicted in Fig. 1, demonstrates that employing randomly initialized weights for the feature encoder yields unsatisfactory results. Conversely, utilizing pre-trained weights from ImageNet-1k tends to be a more effective choice. However, due to the inherent differences between natural and pathological images, these pre-trained weights may not be optimal for feature extraction in pathological contexts [35]. Alternatively, utilizing weights pre-trained via self-supervised learning helps bridge the domain gap, resulting in superior performance in ABMIL and CLAM_SB. Nonetheless, this approach may yield suboptimal results as it lacks task-specific information. Furthermore, these non-task-oriented methods are significantly influenced by the efficacy of the aggregator.

In response to these challenges, task-oriented feature fine-tuning has emerged as a profitable approach, which facilitates the optimization of the encoder using task-specific information. In this paradigm, the attention score introduced by [31] appears as a logical choice to distill patch-level information from bag-level predictions. However, [69] suggests that extreme attention distribution and imprecise focus can lead to model overfitting, particularly when updating the feature encoder based on samples with noisy supervision. To mitigate these issues, some methods identify instances with the lowest attention scores as negative samples for further training. While this method is limited by the inability to identify crucial hard negative samples.

To address the aforementioned challenges, we propose HC-FT which contains a heuristic clustering strategy that merges conventional clustering processes with the pseudo label strategy for task-oriented feature fine-tuning. This strategy allows us to clean positive samples and detect hard negative samples. The cleaning process tackles the issue of potential encoder overfitting to noisy data observed in previous methods. Simultaneously, the detection of hard negative samples enhances feature discrimination and mitigates the problem of overly concentrated attention. In conclusion, our contribution is as follows:

-

1.

In the realm of multiple instance learning for WSI classification, our observation indicates the significance of the encoder. We found that discriminative feature embeddings can yield effective results across various aggregators.

-

2.

To address potential performance degradation caused by noisy samples, we propose a heuristic clustering strategy aimed at ensuring the purity of information to update the encoder. Additionally, we incorporate hard negative samples to enhance the feature discrimination.

-

3.

Extensive experiments conducted on two public datasets demonstrate the superiority of our method. Furthermore, visualization results provide compelling evidence that our features possess considerable discrimination.

2 Related works

2.1 Multiple instance learning for WSI classification

In general, MIL approaches are categorized into instance-based and embedding-based methods. Instance-based methods focus on acquiring labels for individual instances and aggregating them for bag-level classification. Bag-level prediction is aggregated by the probability of all patches with Mean or Max-pooling [7]. As for embedding-based methods, they embed all patch-level features to form the feature set of bags, directly predicting the bag’s features. Due to their superior performance and enhanced interpretability, these methods are widely used in WSI classification.

In the realm of embedding-based MIL, a notable advancement is the attention-based MIL (ABMIL) introduced by [31]. This pioneering method leverages gated attention mechanisms to assign learnable weights to features at the patch level, marking a significant milestone in the evolution of MIL techniques. Concurrently, [41] have enhanced performance by employing attention mechanisms for the selective sampling of positive and negative instances, coupled with cluster constraints to mine patch-level information and integrate bag-level predictions. Furthermore, [34] identifies key instances and their relations with other instances for bag-level embedding, this approach also underscores the potential of self-supervised methods in enhancing model performance. [74] replaced the self-attention mechanism with a multi-head attention mechanism and a masking strategy is applied to patches with high attention to mitigate issues of excessive attention concentration and model overfitting.

However, several MIL models consider only the semantic information and neglect the spatial relationships between patches. This results in the loss of valuable positional information and overlooks the significance of context in pathological diagnosis. This oversight has been addressed by [34, 54, 37]. [34] have utilized multi-scale information to construct pyramid-structured data, thereby implicitly incorporating spatial information. Expanding upon this, [54] have proposed a model based on the transformer architecture to map the interrelations between patches, while [37] have employed backdoor adjustment for intervention training to mitigate biases introduced by contextual priors. Another method [46, 75, 2, 36] to represent the spatial relationships between different patches is through graph theory, where [75] have integrated MIL with deep graph convolutional networks, demonstrating superior performance in predicting lymph node metastasis. Similarly, [2] have applied graph neural networks to learn the relationships between patches, using graph pooling to infer patches of higher relevance automatically.

Other researches concentrate on the representation of bags, [4] and [33] identifying prominent patches to form bag embeddings. [73] introduced the concept of pseudo bags, developing a two-layer distillation framework that forms diverse bags through distinct distillation methods.

These approaches have to decouple the training of the feature encoder and the MIL aggregator to avoid the memory bottleneck [71], resulting in performance degradation.

2.2 Self-supervised learning

Self-supervised learning, an emergent training paradigm within representation learning [72, 28, 17, 27, 18, 11, 10]. Unlike supervised learning, which is constrained by the availability of labeled data, self-supervised learning can learn from a vast amount of unlabeled data [16, 42]. In the domain of computer vision, self-supervised learning is capable of generating universal visual features that can even surpass the performance of models trained on labeled data, even on highly competitive benchmarks like ImageNet-1K [62, 28]. It is predominantly employed in computational pathology to construct patch embeddings. Given its capacity to derive domain-specific patch embeddings without the need for annotated data, leveraging extensive pathological data for SSL to obtain pre-trained weights emerges as an effective strategy.

The proliferation of patch-level self-supervised training paradigms within computational pathology has been noteworthy [34, 22, 3]. For instance, contrastive learning facilitates the mapping of patches and their augmented versions [28] (typically through pathology-relevant transformations such as random rotation, cropping, and stain jitter) to analogous embeddings. By utilizing pretext tasks, such as discerning correspondences between global and local-level details [15] or reconstructing randomly masked areas within patches [27], as well as leveraging similarities between patches from the same whole slide image or those sharing labels [65]. Self-supervised training methods, which learn similar spatial semantic information, are increasingly used for pre-trained feature encoders. Despite their ability to extract valuable information without annotations, SSL approaches require massive data and computational resources. As a result, a common approach is to use publicly available pre-trained weights on pathological datasets. Yet, the effectiveness of this strategy is limited due to variations in feature spaces across different tasks.

2.3 End-to-end training and fine-tuning

Early research has explored end-to-end training of MIL methods to update features. One approach centered around optimizing the training workflow, [12] leveraged unified memory architectures, allowing GPUs direct access to host memory for streamlined end-to-end model training. [47] capitalized on the locality of convolution operations in CNN to diminish memory demands, [48] also introduced an enhanced streaming CNN technique, tailored to optimize whole slide images for end-to-end training. These strategies have not achieved widespread adoption due to their computationally demanding nature. An alternative perspective focuses directly on WSIs. [55] developed an end-to-end training framework utilizing patch clustering, and [61] applied unsupervised techniques to compress WSIs. While, in theory, end-to-end training models are posited to deliver optimal outcomes, their propensity for overfitting hampers their generalizability.

Fine-tuning is indispensable for acquiring task-oriented features while it is unavailable for direct use in WSI classification due to memory bottlenecks [71]. A pragmatic strategy involves updating the feature encoder with a subset of samples. This task-oriented method iteratively transfers supervised information from one round to the next to update feature representations. For example, [67, 66] sampled patches from WSIs during training. [51] proposed a weakly supervised knowledge distillation concept to update the feature encoder using soft labels while discarding easily classified samples to construct challenging pseudo bags. [71] amalgamated bayesian and collaborative learning principles, applying a quality-aware strategy to update the feature encoder with pseudo labels. [8] engaged in iterative sampling of patches from WSIs to train the feature pyramid encoder, and [60] employed masking on patches with high attention scores, compelling teacher-student models to learn more discriminative features. Although these methods successfully updated the feature encoder, the information used to update the feature encoder may be noisy, and the mask method to implicitly search for hard negative samples may cause the model to lose some key information from high attention patches.

3 Method

3.1 Problem statement

In a dataset of whole slide images with bag-level labels, denoted as , where represents a slide and represents its corresponding label. Our goal is to establish a mapping from the domain to the domain. However, learning direct mapping is extremely challenging due to the gigapixel nature of WSI. Thus, each slide is commonly segmented into patches for multiple instance learning, where denotes -th patch cut from -th slide, the number of patches in a slide is often different from others, and patch’s corresponding label remains unknown during training. In the binary MIL classification problem, the relationship between patch-level labels and bag-level labels typically follows the following pattern:

| (1) |

where represents the positive sample and represents the negative sample.

Multiple instance learning involves a three-step modeling process: (1) Instances are transformed into low-dimensional embeddings through a feature encoder:

| (2) |

(2) All instance embeddings are aggregated into a bag-level representation:

| (3) |

where the attention mechanism is often used for aggregation:

| (4) |

where represents the attention value of patch . In the case of ABMIL, a gated attention mechanism is adopted as follows:

| (5) |

(3) Predict the aggregated bag-level representation with an MLP as follows:

| (6) |

3.2 Heuristic clustering strategy

Task-oriented feature fine-tuning methods are usually based on the idea of distillation which is typically iterative. Take the first round of iteration as an example: (1) extract features with an encoder initialized with pre-trained weights which are trained usually on ImageNet-1K as Eq. 2; (2) train the aggregator and MLP following Eq. 3 and Eq. 6; (3) distill the patch-level information from the and ; (4) fine-tune the feature encoder by the patch-level information and update the features with the new encoder . Subsequently, the aforementioned steps can be repeatedly executed until reaching an optimal outcome or satisfying the termination criteria.

Taking the framework of task-oriented feature fine-tuning methods, a common distillation practice for feature encoder updating is the pseudo label strategy. In this context, a patch-level pseudo label is assigned to each selected instance . This supervised information is employed to update the feature encoder , making the selection of pseudo labels crucial. As learning too many noisy samples could devastate the fine-tuning process, we first introduce a pseudo label cleaning module. Furthermore, overly simplistic samples distilled may fail to provide the feature encoder with ample information, resulting in overfitting issues. Hence, we introduce a hard negative sample mining module, designed to furnish the training process with adversarial information, thereby enhancing the generalization ability.

Then we will introduce each component of the heuristic clustering-driven feature fine-tuning method (HC-FT) in Fig. 2.

3.2.1 Pseudo label initialization

In attention-based multiple instance learning, assigning each selected patch with the bag-level label based on the attention score seems a logical choice. However, as the attention score fails to reflect category information, we adopt a class-wise confidence-based pseudo label initialization as follows: (1) Given an optimal MIL model with , and , the attention score set and instance probability set for each slide can be obtained, where . Then a class-wise confidence score can be assigned to each patch as:

| (7) |

where denotes the class-wise probability, sharing the same dimension with . (2) By ranking the class-wise confidence score set in descending order for each slide, an ordered index set is obtained. (3) Assign patches with top confidences pseudo labels:

| (8) |

where represents the number of patches with highest confidence scores. Notably, increases with each iteration , following the dynamic adjustment scheme:

| (9) |

where is a hyper-parameter determined through experiments. Intuitively, the base number should dynamically change to accommodate bags containing varying numbers of patches. All patches are thus allocated into a high confidence score set and a low confidence score set .

3.2.2 Potential negative sample mining

Initially, clustering is conducted on all patches from the high-confidence set . The category of each cluster center is assigned based on the pseudo label content. As shown in Alg. 1, we first apply K-Means [39] to get clusters. In each cluster, we count the number of pseudo labels for each category, then assign the category pseudo label with the highest number to each cluster. We also introduce a threshold to eliminate those chaotic clusters.

For one class , we can obtain a set of clusters , where cluster centers represent centers of the class . Utilizing all class centers, we can mine more negative samples within the low confidence group . Specifically, for the cluster set , the distance between each patch in and class center can be calculated as:

| (10) |

The new pseudo label is assigned to each patch in by:

| (11) |

The WSI datasets have an inherent character in that the bag-level label is determined by the labels of the patches with the most severe lesions. In other words, all patch-level labels should be less than or equal to the bag-level label in a slide:

| (12) |

One patch that belongs to -th label cluster set while is bigger than its bag-level label is a potential negative sample, these patches are allocated in a set :

| (13) |

With Eq. 12, we can ensure the patches we mined are negative samples. We append a new category for these negative samples, hoping that the model will pay increased attention to them. For clarity, we summarize the process of heuristic clustering strategy in Alg. 2.

3.2.3 Pseudo label refinement

We integrate set and set as a new set, and repeat Alg. 1 to analyze the status of each new cluster.

A. Hard negative sample searching

Hard negative samples refer to negative samples that are difficult for the model to classify. We propose a hard sample mining module to identify hard negative samples for the model to learn, thereby enhancing the distinction ability of the encoder.

After the re-clustering process, a new set appears with filtering the as below:

| (14) |

The objective of re-clustering is to ascertain whether those identified as hard samples are still challenging to distinguish. The set is part of the final hard negative sample set, and the remaining portion originates from the high confidence set . Following the same step in Eq. 13 in high confidence set , we can get a hard negative samples set as:

| (15) |

Combined the two hard negative samples sets and , we can obtain:

| (16) |

where is the final hard negative sample set. In addition to containing negative samples with high attention scores, this set includes representative negative samples that are ignored by attention sorting, which provides more adversarial information for encoder fine-tuning.

B. Positive samples cleaning

The classification performance of the model mainly depends on the quality of positive samples. Incorrect pseudo labels can significantly cause the model to converge to a bad solution. Therefore, we undertake a cleaning process for pseudo labels derived from confidence scores. To be specific, given a center set and a high confidence set , patches whose pseudo labels align with the category represented by their cluster re-clustering are considered to be more accurate, Thus a filtering process is performed as below:

| (17) |

3.3 Task-oriented feature fine-tuning

Through the two aforementioned modules, we can obtain a patch-level dataset annotated with relatively clean pseudo labels for feature fine-tuning. Notably, the patch-level classification task typically encompasses classes, where initial classes consist of positive samples from each category, and the remaining classes represent the corresponding hard negative samples, excluding the non-tumor class.

4 Experiment

4.1 Datasets and metrics

Our experiments employ two publicly available whole slide image classification datasets, namely CAMELYON16 [26] and BRACS [5].

CAMELYON16 dataset is a binary classification dataset focused on the detection of early-stage breast cancer lymph node metastases. It consists of 399 hematoxylin and eosin (H&E) stained WSIs, sourced from two distinct medical institutions, with 270 allocated for training and 129 for testing. Additionally, this dataset provides detailed pixel-level manual annotations of metastatic tumor areas, thereby facilitating in-depth patch-level analysis and enhanced visualization capabilities. We conducted a three-fold cross-validation, where 270 training samples were divided into a training and a validation set at a ratio of 8:2. All slides were cut into non-overlapping patches of pixels at a magnification.

| Methods | Encoder weight | CAMELYON16 | BRACS | Average (%) | ||||||

| ACC (%) | AUC (%) | F1 (%) | ACC (%) | AUC (%) | F1 (%) | ACC | AUC | F1 | ||

| ABMIL [31] | w/Random | |||||||||

| w/ImageNet-1K | ||||||||||

| w/Barlow Twins | ||||||||||

| w/MoCo V2 | ||||||||||

| w/SWAV | ||||||||||

| w/WSI-FT | ||||||||||

| w/BCL | ||||||||||

| w/Ours | ||||||||||

| over others | ||||||||||

| CLAM [41] | w/Random | 45.27 | 48.25 | 29.15 | ||||||

| w/ImageNet-1K | 69.83 | 76.77 | 65.26 | |||||||

| w/Barlow Twins | 67.54 | 78.85 | 63.99 | |||||||

| w/MoCo V2 | 67.85 | 81.81 | 66.22 | |||||||

| w/SWAV | 72.11 | 88.44 | 71.99 | |||||||

| w/WSI-FT | 72.51 | 84.88 | 71.92 | |||||||

| w/BCL | 75.73 | 88.01 | 73.74 | |||||||

| w/Ours | ||||||||||

| over others | +2.84 | +2.28 | +2.88 | +3.83 | +1.00 | +0.04 | +4.10 | +1.64 | +4.22 | |

| DSMIL [34] | w/Random | 50.80 | 61.03 | 34.26 | ||||||

| w/ImageNet-1K | 64.87 | 74.50 | 63.30 | |||||||

| w/Barlow Twins | 62.21 | 69.80 | 57.13 | |||||||

| w/MoCo V2 | 60.61 | 67.61 | 58.11 | |||||||

| w/SWAV | 70.68 | 81.02 | 69.88 | |||||||

| w/WSI-FT | 70.15 | 84.21 | 67.44 | |||||||

| w/BCL | 75.17 | 87.71 | 73.65 | |||||||

| w/Ours | ||||||||||

| over others | +1.03 | +2.76 | +1.05 | +1.91 | +1.71 | +0.75 | +3.96 | +2.24 | +4.14 | |

| Methods | CAMELYON16 | BRACS | Average (%) | ||||||

| ACC (%) | AUC (%) | F1 (%) | ACC (%) | AUC (%) | F1 (%) | ACC | AUC | F1 | |

| ABMIL | |||||||||

| CLAM_SB | |||||||||

| CLAM_MB | |||||||||

| DSMIL | |||||||||

| ACMIL | |||||||||

| TransMIL | |||||||||

| DTFD | |||||||||

| WSI-FT | |||||||||

| BCL | |||||||||

| Ours | |||||||||

BReAst Carcinoma Subtyping (BRACS) dataset emerges as a promotion for the automated detection and classification of breast tumors, also constituted by H&E stained histopathological images. The dataset encompasses 547 whole slide images. Each WSI has been meticulously annotated according to consensus by a panel of three board-certified pathologists, categorizing different types of lesions. Specifically, this dataset includes three types of lesions: benign, malignant, and atypical, further divided into seven subtypes. In this paper, we focus on the three-class classification. We adhered to the official division and employed distinct seeds across three experimental trials. All slides were cut into non-overlapping patches of pixels at a magnification.

In our experiments, we employ several evaluation metrics in bag-level classification, including accuracy (ACC), the area under the receiver operating characteristic curve (AUC), and the harmonic mean of precision and recall (F1 score). For the multi-class dataset BRACS, AUC is computed using a one-versus-all mode. In addition, we also conducted a patch-level evaluation with another two evaluation metrics: the free-response receiver operating characteristic (FROC) [43] and the competition performance metric (CPM) score [45]. FROC is defined as the plot of sensitivity versus the average number of false positive patches per image (FPI) and CPM is defined as the average sensitivity at seven predefined false positive rates which seven values are 0.125, 0.25, 0.5, 1, 2, 4, and 8 in FROC curve and was calculated as:

| (18) |

4.2 Implementation details

All experiments were conducted on one NVIDIA RTX 3090 GPU which possesses 24 GB memory. Our methodology was implemented within the PyTorch v2.0 deep learning framework. In our experiments, we applied the resnet50 [29] backbone as the feature encoder, and Adam optimizer was utilized for training. For the MIL training, the batch size was set to 1, with an initial and minimum learning rate of and , respectively. For the encoder fine-tuning, the batch size was set to 64, with a fixed learning rate of . The maximum number of epochs for training both the MIL training and the encoder fine-tuning was established at 200. The early stopping strategy was implemented to prevent overfitting, which was monitored through the loss on the validation set.

4.3 Methods for comparison

In general, we evaluate the performance of different features under certain MIL models and the performance of certain features under different MIL models.

As our method aims at enhancing feature representation, we first evaluate features generated by different approaches: (1) Random initialization. (2) Pre-trained on natural images, Imagenet-1K [29]. (3) The weight from employing self-supervised methods on extensive WSI datasets, including Barlow Twins [72], MoCo V2 [17], and SWAV [10]. (4) Task-oriented feature fine-tuning methods, including WSI-FT [35] and BCL [71].

Additionally, as a multi-instance learning approach for WSI classification, we also evaluate other relevant MIL methods: (1) The classic ABMIL [31]. (2) Four variants of ABMIL, including single-attention-branch CLAM_SB [41], multi-attention-branch CLAM_MB [41], non-local attention pooling DSMIL [34] and muti-head attention ACMIL [74]. (3) Transformer-based MIL, TransMIL [54]. (6) Double-tier feature distillation MIL, DTFD [73]. (7) End-to-end feature encoder fine-tuning, WSI-FT [35]. (8) Bayesian collaborative learning-based MIL, BCL [71]. All results of these methods are conducted using their official codes under the same settings.

5 Results

5.1 Quantitative comparison

To validate the efficiency of the embedding generated by our approach, we conducted a comparative analysis of performance results obtained with various feature extraction techniques, as reported in Tab. 1. Initially, features derived from a randomly initialized feature encoder exhibited limited classification ability. Through supervised learning with ImageNet-1K, significant enhancements were observed in the representational capacity of embeddings, especially in the CAMELYON16 dataset. Self-supervision based methods facilitated fine-tuning of the feature encoder using extensive pathological images. Embeddings generated through Barlow Twins, MoCo V2, and SWAV failed to demonstrate superior performance in the CAMELYON16 dataset but exhibited improved performance in the BRACS dataset. This phenomenon could be attributed to the dataset’s inherent characteristics. The utilization of self-supervised weights may inadvertently complicate features, resulting in different MIL classification outcomes according to the category distinctions in different datasets. In task-oriented feature fine-tuning methods, BCL outperformed most of the other methods as it distills efficient patch-level information from MIL models for encoder fine-tuning. Notably, our method consistently outperformed other feature fine-tuning approaches in both datasets. This robust performance underscores the effectiveness and versatility of our approach. Furthermore, our results remained optimal even when applied to different MIL models, highlighting the generalizability of our approach.

| Methods | ACC (%) | AUC (%) | F1 (%) | CPM (%) |

| MeanMIL | ||||

| MaxMIL | ||||

| ABMIL | ||||

| CLAM | ||||

| DSMIL | ||||

| TransMIL | ||||

| DTFD | ||||

| BCL(bag) | ||||

| BCL(patch) | ||||

| Ours(bag) | ||||

| Ours(patch) |

To further validate the WSI classification performance of our approach, we evaluated results across various MIL methods, as summarized in Tab. 2. Our analysis reveals that our approach consistently outperforms others on both datasets. Most of the other methods primarily focus on the improvement of the aggregator, yet they fail to achieve comparable performance to ours. This can be attributed to their reliance on features extracted by weights pre-trained on natural images, which is not optimal for WSI classification tasks. WSI-FT, an end-to-end training framework with partial patches, tends to exhibit poor generalization of features. Although BCL offers an excellent foundational framework, the presence of noise in the pseudo labels may potentially lead to model overfitting.

Both as iterative methods, we demonstrated the performance difference between BCL and our approach across different rounds of iteration on the validation and test sets of CAMELYON16 and BRACS datasets. Our observations in Fig. 3 revealed that our method demonstrates a more rapid convergence compared to BCL on the CAMELYON16 dataset, accompanied by a notable improvement in effectiveness. On the BRACS dataset, although BCL’s performance exhibits fluctuations, our method consistently converges swiftly to superior outcomes.

Given the detailed annotations for tumor regions provided by the CAMELYON16 dataset, we evaluated the patch-level classification ability of various MIL methods for comparison. All MIL methods were assessed using their bag-level classification classifiers, while both BCL and our method included an additional patch-level classifier. As presented in Tab. 3, the performance results revealed that most methods exhibited high AUC scores alongside low F1 scores, which primarily stemmed from the inherent characteristics of the MIL principle. These methods tend to focus on a few instances within a bag, as a bag is labeled positive even if only one instance within it is positive. Consequently, this often leads to only a few patches being classified with very high confidence. In contrast, our method consistently achieved optimal results in both ACC and F1 scores. This can be attributed to our approach of selecting hard negative samples to mitigate overfitting. The performance of our bag-level classifier implicitly demonstrated that our method effectively addressed the tendency of MIL to excessively focus on a minority of patches. Furthermore, we provide free-response receiver operating characteristic curves using different MIL models, as depicted in Fig. 4. These curves illustrated that our method outperformed others across all FPIs, indicating the ability to detect tumor slides at a low false positive cost.

5.2 Visualization and interpretation

To provide an intuitive demonstration of the effectiveness of our method, we visualized representative results in Fig. 5. Specifically, we selected a macro-metastasis (’test001’) and a micro-metastasis (’test010’) WSIs from the CAMELYON16 dataset for visualization, generating classification heatmaps using attention scores derived from the MIL aggregator. For ’test001’, our heatmap accurately identifies the macro-metastatic tumor region in the slide, along with some micro-metastatic tumor regions in the surrounding area (e.g., the lower-left corner). Upon closer analysis of regions with low attention in the heatmap, we observed that patches containing a large number of fat cells (e.g., patch ()) were assigned lower attention. However, it is noteworthy that not all patches containing fat cells were dismissed as normal; for instance, in patches where only a small portion contains fat cells (e.g., patch ()), our model still identifies them as tumors, indicating its ability to distinguish between fat cells and tumor cells. Additionally, regions with low attention also contained some lymph cells, which our model treats as hard negative samples in the dataset (e.g., patch ()), leading to their lower attention assignment. For ’test010’, our model successfully identifies the small tumor region, in accord with the annotation. Even in regions with micrometastatic tumor cells (e.g., patch ()), our model demonstrates accurate identification. It’s noted that since the patches are in size, our heatmap slightly extends beyond the annotated tumor region.

| Method | POS | Components | CAMELYON16 | BRACS | Average (%) | |||||

| Mining | Searching | Cleaning | ACC (%) | AUC (%) | ACC (%) | AUC (%) | ACC | AUC | ||

| baseline | ✓ | 67.10 | 77.28 | |||||||

| Ours | ✓ | ✓ | ✓ | 69.43 | 79.88 | |||||

| ✓ | ✓ | 69.98 | 81.28 | |||||||

| ✓ | ✓ | 68.16 | 76.92 | |||||||

| ✓ | ✓ | ✓ | ✓ | 74.31 | 79.88 | |||||

5.3 Ablation study

To validate the effectiveness of key components within our method, we conducted ablation experiments on both the CAMELYON16 and BRACS datasets. As is depicted in Tab. 4, scrutinized three crucial components: potential negative sample mining, hard negative sample searching, and positive sample cleaning. For the potential negative sample mining component, compared to using only positive samples, a noticeable improvement was observed on the CAMELYON16 dataset. This can be attributed to the dataset’s simplicity, which facilitated more accurate category center selection, thus enhancing the mining module’s functionality. Conversely, on the BRACS dataset, the initial suboptimal MIL model generates less accurate category centers, thus consequently fewer useful negative samples are found for fine-tuning. In scenarios without hard negative sample searching, relying solely on high-confidence patches as hard negative samples showed mediocre performance on the CAMELYON16 dataset. In contrast, on the BRACS dataset, significant improvements were observed, as some negative samples were misclassified, thereby providing opportunities for enhancement. For experiments focusing solely on positive sample cleaning, both datasets exhibited slight improvements, indicating the presence of noise in the original positive samples. Notably, our method, although divided into three parts, operates synergistically: the negative sample mining module enables the model to identify missing information from low-confidence patches and maximize the utilization of all patches; the hard negative sample searching module aids the model in learning more adversarial information; and the positive sample cleaning module ensures the purity of positive samples, preventing the model from overfitting to noisy samples.

5.4 Hyper-parameter study

In this study, two critical hyper-parameters, namely the initial number of patches and the number of clusters are used. We conducted extensive experiments to set the best values for these two hyper-parameters. Limit our comparison to the outcomes following the initial iteration, as a selection of hyper-parameters. As depicted in Fig. 6, the curves all exhibit a trend of rising first and then declining. Therefore, we choose the parameters corresponding to the peak values as hyper-parameters. Specifically, we set to 10 on both datasets. As for the number of clusters , since it largely depends on the dataset itself, we set it to for the CAMELYON16 dataset and for the BRACS dataset respectively.

6 Discussion and conclusion

While multiple instance learning has been widely applied in WSI classification, most existing methods have hardly focused on updating the feature encoder. However, despite efforts to enhance the feature encoder, MIL models have often struggled to effectively aggregate suboptimal embeddings for final predictions.

To address this limitation, we introduced HC-FT which utilizes the heuristic clustering strategy for feature fine-tuning. This heuristic clustering strategy enables the identification of feature centers for each category. Leveraging these centers, we developed two modules: the potential negative sample mining module enables the extraction of valuable information from areas not prominently focused on by the model; the label refinement module aids in the identification of hard negative samples and the purification of positive samples. We further fine-tune the feature encoder using selected patches with pseudo labels. Our results demonstrate that features generated using our method consistently exhibit superior performance, even when applied to the simplest ABMIL method.

However, our method has several shortcomings. Firstly, the result of the initial round is crucial, a superior initial outcome enables an effective identify category center. Conversely, if the initial result is poor, clustering centers may be inaccurate, rendering poor performance of subsequent modules. Moreover, due to the task-oriented nature of our approach, directly transferring our results to similar datasets remains uncertain. Lastly, we solely utilized classification tasks to update the feature encoder, other pretext tasks could potentially serve to update the feature encoder, meriting further exploration.

Acknowledgments

This study was supported by Shenzhen Engineering Research Centre (XMHT20230115004), Science and Technology Research Program of Shenzhen City (KCXFZ20201221173207022), and Jilin Fuyuan Guan Food Group Co., Ltd.

References

- Abels et al. [2019] Abels, E., Pantanowitz, L., Aeffner, F., Zarella, M.D., van der Laak, J., Bui, M.M., Vemuri, V.N., Parwani, A.V., Gibbs, J., Agosto-Arroyo, E., et al., 2019. Computational pathology definitions, best practices, and recommendations for regulatory guidance: a white paper from the digital pathology association. The Journal of pathology 249, 286–294.

- Adnan et al. [2020] Adnan, M., Kalra, S., Tizhoosh, H.R., 2020. Representation learning of histopathology images using graph neural networks, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp. 988–989.

- Azizi et al. [2022] Azizi, S., Culp, L., Freyberg, J., Mustafa, B., Baur, S., Kornblith, S., Chen, T., MacWilliams, P., Mahdavi, S.S., Wulczyn, E., Babenko, B., Wilson, M., Loh, A., Chen, P.H.C., Liu, Y., Bavishi, P., McKinney, S.M., Winkens, J., Roy, A.G., Beaver, Z., Ryan, F., Krogue, J., Etemadi, M., Telang, U., Liu, Y., Peng, L., Corrado, G.S., Webster, D.R., Fleet, D., Hinton, G., Houlsby, N., Karthikesalingam, A., Norouzi, M., Natarajan, V., 2022. Robust and efficient medical imaging with self-supervision. arXiv:2205.09723.

- Bergner et al. [2023] Bergner, B., Lippert, C., Mahendran, A., 2023. Iterative patch selection for high-resolution image recognition. arXiv:2210.13007.

- Brancati et al. [2021] Brancati, N., Anniciello, A.M., Pati, P., Riccio, D., Scognamiglio, G., Jaume, G., Pietro, G.D., Bonito, M.D., Foncubierta, A., Botti, G., Gabrani, M., Feroce, F., Frucci, M., 2021. Bracs: A dataset for breast carcinoma subtyping in h&e histology images. arXiv:2111.04740.

- Brown et al. [2020] Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J.D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., Amodei, D., 2020. Language models are few-shot learners, in: Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H. (Eds.), Advances in Neural Information Processing Systems, Curran Associates, Inc.. pp. 1877--1901.

- Campanella et al. [2019] Campanella, G., Hanna, M.G., Geneslaw, L., Miraflor, A., Werneck Krauss Silva, V., Busam, K.J., Brogi, E., Reuter, V.E., Klimstra, D.S., Fuchs, T.J., 2019. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nature medicine 25, 1301--1309.

- Cao et al. [2023] Cao, L., Wang, J., Zhang, Y., Rong, Z., Wang, M., Wang, L., Ji, J., Qian, Y., Zhang, L., Wu, H., et al., 2023. E2efp-mil: End-to-end and high-generalizability weakly supervised deep convolutional network for lung cancer classification from whole slide image. Medical Image Analysis 88, 102837.

- Caron et al. [2019] Caron, M., Bojanowski, P., Joulin, A., Douze, M., 2019. Deep clustering for unsupervised learning of visual features. arXiv:1807.05520.

- Caron et al. [2021a] Caron, M., Misra, I., Mairal, J., Goyal, P., Bojanowski, P., Joulin, A., 2021a. Unsupervised learning of visual features by contrasting cluster assignments. arXiv:2006.09882.

- Caron et al. [2021b] Caron, M., Touvron, H., Misra, I., Jégou, H., Mairal, J., Bojanowski, P., Joulin, A., 2021b. Emerging properties in self-supervised vision transformers, in: Proceedings of the IEEE/CVF international conference on computer vision, pp. 9650--9660.

- Chen et al. [2021] Chen, C.L., Chen, C.C., Yu, W.H., Chen, S.H., Chang, Y.C., Hsu, T.I., Hsiao, M., Yeh, C.Y., Chen, C.Y., 2021. An annotation-free whole-slide training approach to pathological classification of lung cancer types using deep learning. Nature communications 12, 1193.

- Chen et al. [2016] Chen, H., Qi, X., Yu, L., Heng, P., 2016. DCAN: deep contour-aware networks for accurate gland segmentation. CoRR abs/1604.02677. arXiv:1604.02677.

- Chen et al. [2024] Chen, K., Sun, S., Zhao, J., 2024. Camil: Causal multiple instance learning for whole slide image classification, in: Proceedings of the AAAI Conference on Artificial Intelligence, pp. 1120--1128.

- Chen et al. [2022] Chen, R.J., Chen, C., Li, Y., Chen, T.Y., Trister, A.D., Krishnan, R.G., Mahmood, F., 2022. Scaling vision transformers to gigapixel images via hierarchical self-supervised learning, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 16144--16155.

- Chen et al. [2020a] Chen, T., Kornblith, S., Norouzi, M., Hinton, G., 2020a. A simple framework for contrastive learning of visual representations. arXiv:2002.05709.

- Chen et al. [2020b] Chen, X., Fan, H., Girshick, R., He, K., 2020b. Improved baselines with momentum contrastive learning. arXiv:2003.04297.

- Chen* et al. [2021] Chen*, X., Xie*, S., He, K., 2021. An empirical study of training self-supervised vision transformers. arXiv preprint arXiv:2104.02057 .

- Chen and Lu [2023] Chen, Y.C., Lu, C.S., 2023. Rankmix: Data augmentation for weakly supervised learning of classifying whole slide images with diverse sizes and imbalanced categories, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 23936--23945.

- Chikontwe et al. [2020] Chikontwe, P., Kim, M., Nam, S.J., Go, H., Park, S.H., 2020. Multiple instance learning with center embeddings for histopathology classification, in: Medical Image Computing and Computer Assisted Intervention--MICCAI 2020: 23rd International Conference, Lima, Peru, October 4--8, 2020, Proceedings, Part V 23, Springer. pp. 519--528.

- Chu et al. [2024] Chu, H., Sun, Q., Li, J., Chen, Y., Zhang, L., Guan, T., Han, A., He, Y., 2024. Retmil: Retentive multiple instance learning for histopathological whole slide image classification. arXiv:2403.10858.

- Ciga et al. [2021] Ciga, O., Xu, T., Martel, A.L., 2021. Self supervised contrastive learning for digital histopathology. arXiv:2011.13971.

- Das et al. [2020] Das, K., Conjeti, S., Chatterjee, J., Sheet, D., 2020. Detection of breast cancer from whole slide histopathological images using deep multiple instance cnn. IEEE Access 8, 213502--213511. doi:10.1109/ACCESS.2020.3040106.

- Dietterich et al. [1997] Dietterich, T.G., Lathrop, R.H., Lozano-Pérez, T., 1997. Solving the multiple instance problem with axis-parallel rectangles. Artificial intelligence 89, 31--71.

- Gertych et al. [2015] Gertych, A., Ing, N., Ma, Z., Fuchs, T., Salman, S., Mohanty, S., Bhele, S., Velasquez, A., Amin, M., Knudsen, B., 2015. Machine learning approaches to analyze histological images of tissues from radical prostatectomies. Computerized medical imaging and graphics : the official journal of the Computerized Medical Imaging Society 46P2. doi:10.1016/j.compmedimag.2015.08.002.

- Golden [2017] Golden, J.A., 2017. Deep Learning Algorithms for Detection of Lymph Node Metastases From Breast Cancer: Helping Artificial Intelligence Be Seen. JAMA 318, 2184--2186. doi:10.1001/jama.2017.14580.

- He et al. [2021] He, K., Chen, X., Xie, S., Li, Y., Dollár, P., Girshick, R., 2021. Masked autoencoders are scalable vision learners. arXiv:2111.06377.

- He et al. [2019] He, K., Fan, H., Wu, Y., Xie, S., Girshick, R.B., 2019. Momentum contrast for unsupervised visual representation learning. CoRR abs/1911.05722. arXiv:1911.05722.

- He et al. [2015] He, K., Zhang, X., Ren, S., Sun, J., 2015. Deep residual learning for image recognition. arXiv:1512.03385.

- Hou et al. [2016] Hou, L., Samaras, D., Kurc, T.M., Gao, Y., Davis, J.E., Saltz, J.H., 2016. Patch-based convolutional neural network for whole slide tissue image classification. arXiv:1504.07947.

- Ilse et al. [2018] Ilse, M., Tomczak, J.M., Welling, M., 2018. Attention-based deep multiple instance learning. CoRR abs/1802.04712. arXiv:1802.04712.

- Javed et al. [2022] Javed, S.A., Juyal, D., Padigela, H., Taylor-Weiner, A., Yu, L., Prakash, A., 2022. Additive mil: Intrinsically interpretable multiple instance learning for pathology. arXiv:2206.01794.

- Kong and Henao [2021] Kong, F., Henao, R., 2021. Efficient classification of very large images with tiny objects. arXiv:2106.02694.

- Li et al. [2021] Li, B., Li, Y., Eliceiri, K.W., 2021. Dual-stream multiple instance learning network for whole slide image classification with self-supervised contrastive learning, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 14318--14328.

- Li et al. [2023] Li, H., Zhu, C., Zhang, Y., Sun, Y., Shui, Z., Kuang, W., Zheng, S., Yang, L., 2023. Task-specific fine-tuning via variational information bottleneck for weakly-supervised pathology whole slide image classification. arXiv:2303.08446.

- Li et al. [2024] Li, J., Chen, Y., Chu, H., Sun, Q., Guan, T., Han, A., He, Y., 2024. Dynamic graph representation with knowledge-aware attention for histopathology whole slide image analysis. arXiv preprint arXiv:2403.07719 .

- Lin et al. [2023] Lin, T., Yu, Z., Hu, H., Xu, Y., Chen, C.W., 2023. Interventional bag multi-instance learning on whole-slide pathological images. arXiv:2303.06873.

- Liu et al. [2024] Liu, P., Ji, L., Zhang, X., Ye, F., 2024. Pseudo-bag mixup augmentation for multiple instance learning-based whole slide image classification. IEEE Transactions on Medical Imaging , 1–1doi:10.1109/tmi.2024.3351213.

- Lloyd [1982] Lloyd, S.P., 1982. Least squares quantization in pcm. IEEE Trans. Inf. Theory 28, 129--136.

- Louis et al. [2016] Louis, D.N., Feldman, M., Carter, A.B., Dighe, A.S., Pfeifer, J.D., Bry, L., Almeida, J.S., Saltz, J., Braun, J., Tomaszewski, J.E., Gilbertson, J.R., Sinard, J.H., Gerber, G.K., Galli, S.J., Golden, J.A., Becich, M.J., 2016. Computational Pathology: A Path Ahead. Archives of Pathology & Laboratory Medicine 140, 41--50. doi:10.5858/arpa.2015-0093-SA.

- Lu et al. [2021] Lu, M.Y., Williamson, D.F., Chen, T.Y., Chen, R.J., Barbieri, M., Mahmood, F., 2021. Data-efficient and weakly supervised computational pathology on whole-slide images. Nature Biomedical Engineering 5, 555--570.

- Misra and van der Maaten [2019] Misra, I., van der Maaten, L., 2019. Self-supervised learning of pretext-invariant representations. arXiv:1912.01991.

- Moskowitz [2017] Moskowitz, C., 2017. Using free-response receiver operating characteristic curves to assess the accuracy of machine diagnosis of cancer. JAMA 318, 2250. doi:10.1001/jama.2017.18686.

- Mungle et al. [2017] Mungle, T., Tewary, S., Das, D.K., Arun, I., BASAK, B., Kumar Agrawal, S., Ahmed, R., Chatterjee, S., Chakraborty, C., 2017. Mrf-ann: a machine learning approach for automated er scoring of breast cancer immunohistochemical images: Mrf-ann. Journal of Microscopy 267. doi:10.1111/jmi.12552.

- Niemeijer et al. [2011] Niemeijer, M., Loog, M., Abramhoff, M., Viergever, M., Prokop, M., van Ginneken, B., 2011. On combining computer-aided detection systems. IEEE Transactions on Medical Imaging 30, 215--223.

- Pati et al. [2022] Pati, P., Jaume, G., Foncubierta-Rodriguez, A., Feroce, F., Anniciello, A.M., Scognamiglio, G., Brancati, N., Fiche, M., Dubruc, E., Riccio, D., et al., 2022. Hierarchical graph representations in digital pathology. Medical image analysis 75, 102264.

- Pinckaers et al. [2021] Pinckaers, H., Bulten, W., van der Laak, J., Litjens, G., 2021. Detection of prostate cancer in whole-slide images through end-to-end training with image-level labels. IEEE Transactions on Medical Imaging 40, 1817--1826. doi:10.1109/TMI.2021.3066295.

- Pinckaers et al. [2022] Pinckaers, H., van Ginneken, B., Litjens, G., 2022. Streaming convolutional neural networks for end-to-end learning with multi-megapixel images. IEEE Transactions on Pattern Analysis and Machine Intelligence 44, 1581--1590. doi:10.1109/TPAMI.2020.3019563.

- Popel et al. [2020] Popel, M., Tomkova, M., Tomek, J., Kaiser, L., Uszkoreit, J., Bojar, O., Žabokrtský, Z., 2020. Transforming machine translation: a deep learning system reaches news translation quality comparable to human professionals. Nature Communications 11, 4381. doi:10.1038/s41467-020-18073-9.

- Qiehe et al. [2024] Qiehe, S., Jiang, D., Li, J., Yan, R., He, Y., Guan, T., Cheng, Z., 2024. Nciemil: Rethinking decoupled multiple instance learning framework for histopathological slide classification, in: Medical Imaging with Deep Learning.

- Qu et al. [2022] Qu, L., Luo, X., Wang, M., Song, Z., 2022. Bi-directional weakly supervised knowledge distillation for whole slide image classification. arXiv:2210.03664.

- Roux et al. [2013] Roux, L., Racoceanu, D., Lomenie, N., Kulikova, M., Irshad, H., Klossa, J., Capron, F., Genestie, C., Le Naour, G., Gurcan, M., 2013. Mitosis detection in breast cancer histological images an icpr 2012 contest. Journal of pathology informatics 4, 8. doi:10.4103/2153-3539.112693.

- Shah et al. [2016] Shah, M.A., Rubadue, C., Suster, D., Wang, D., 2016. Deep learning assessment of tumor proliferation in breast cancer histological images. CoRR abs/1610.03467. arXiv:1610.03467.

- Shao et al. [2021] Shao, Z., Bian, H., Chen, Y., Wang, Y., Zhang, J., Ji, X., Zhang, Y., 2021. Transmil: Transformer based correlated multiple instance learning for whole slide image classification. arXiv:2106.00908.

- Sharma et al. [2021] Sharma, Y., Shrivastava, A., Ehsan, L., Moskaluk, C.A., Syed, S., Brown, D.E., 2021. Cluster-to-conquer: A framework for end-to-end multi-instance learning for whole slide image classification. arXiv:2103.10626.

- Sirinukunwattana et al. [2017] Sirinukunwattana, K., Pluim, J., Chen, H., Qi, X., Heng, P.A., Guo, Y., Wang, L., Matuszewski, B., Bruni, E., Sanchez, U., Böhm, A., Ronneberger, O., Cheikh, B., Racoceanu, D., Kainz, P., Pfeiffer, M., Urschler, M., Snead, D., Rajpoot, N., 2017. Gland segmentation in colon histology images: The glas challenge contest. Medical Image Analysis 35, 489--502. doi:10.1016/j.media.2016.08.008.

- Song et al. [2023] Song, A.H., Jaume, G., Williamson, D.F.K., Lu, M.Y., Vaidya, A., Miller, T.R., Mahmood, F., 2023. Artificial intelligence for digital and computational pathology. Nature Reviews Bioengineering 1, 930--949. doi:10.1038/s44222-023-00096-8.

- Spanhol et al. [2016] Spanhol, F.A., Oliveira, L.S., Petitjean, C., Heutte, L., 2016. Breast cancer histopathological image classification using convolutional neural networks, in: 2016 International Joint Conference on Neural Networks (IJCNN), pp. 2560--2567. doi:10.1109/IJCNN.2016.7727519.

- Srinidhi et al. [2021] Srinidhi, C.L., Ciga, O., Martel, A.L., 2021. Deep neural network models for computational histopathology: A survey. Medical Image Analysis 67, 101813. doi:10.1016/j.media.2020.101813.

- Tang et al. [2023] Tang, W., Huang, S., Zhang, X., Zhou, F., Zhang, Y., Liu, B., 2023. Multiple instance learning framework with masked hard instance mining for whole slide image classification. arXiv:2307.15254.

- Tellez et al. [2021] Tellez, D., Litjens, G., van der Laak, J., Ciompi, F., 2021. Neural image compression for gigapixel histopathology image analysis. IEEE Trans. Pattern Anal. Mach. Intell. 43, 567–578. doi:10.1109/TPAMI.2019.2936841.

- Tomasev et al. [2022] Tomasev, N., Bica, I., McWilliams, B., Buesing, L., Pascanu, R., Blundell, C., Mitrovic, J., 2022. Pushing the limits of self-supervised resnets: Can we outperform supervised learning without labels on imagenet? arXiv:2201.05119.

- Wang et al. [2015] Wang, D., Foran, D.J., Ren, J., Zhong, H., Kim, I.Y., Qi, X., 2015. Exploring automatic prostate histopathology image gleason grading via local structure modeling, in: 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE. pp. 2649--2652.

- Wang et al. [2016] Wang, D., Khosla, A., Gargeya, R., Irshad, H., Beck, A.H., 2016. Deep learning for identifying metastatic breast cancer. arXiv:1606.05718.

- Wang et al. [2022] Wang, X., Yang, S., Zhang, J., Wang, M., Zhang, J., Yang, W., Huang, J., Han, X., 2022. Transformer-based unsupervised contrastive learning for histopathological image classification. Medical Image Analysis 81, 102559. doi:10.1016/j.media.2022.102559.

- Wulczyn et al. [2021] Wulczyn, E., Steiner, D.F., Moran, M., Plass, M., Reihs, R., Tan, F., Flament-Auvigne, I., Brown, T., Regitnig, P., Chen, P.H.C., et al., 2021. Interpretable survival prediction for colorectal cancer using deep learning. NPJ digital medicine 4, 71.

- Wulczyn et al. [2020] Wulczyn, E., Steiner, D.F., Xu, Z., Sadhwani, A., Wang, H., Flament-Auvigne, I., Mermel, C.H., Chen, P.H.C., Liu, Y., Stumpe, M.C., 2020. Deep learning-based survival prediction for multiple cancer types using histopathology images. PloS one 15, e0233678.

- Yan et al. [2023a] Yan, R., He, Q., Liu, Y., Ye, P., Zhu, L., Shi, S., Gou, J., He, Y., Guan, T., Zhou, G., 2023a. Unpaired virtual histological staining using prior-guided generative adversarial networks. Computerized Medical Imaging and Graphics 105, 102185.

- Yan et al. [2023b] Yan, R., Sun, Q., Jin, C., Liu, Y., He, Y., Guan, T., Chen, H., 2023b. Shapley values-enabled progressive pseudo bag augmentation for whole slide image classification. arXiv preprint arXiv:2312.05490 .

- Yang et al. [2022] Yang, J., Chen, H., Zhao, Y., Yang, F., Zhang, Y., He, L., Yao, J., 2022. Remix: A general and efficient framework for multiple instance learning based whole slide image classification, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 35--45.

- Yu et al. [2023] Yu, J.G., Wu, Z., Ming, Y., Deng, S., Wu, Q., Xiong, Z., Yu, T., Xia, G.S., Jiang, Q., Li, Y., 2023. Bayesian collaborative learning for whole-slide image classification. IEEE Transactions on Medical Imaging 42, 1809--1821. doi:10.1109/TMI.2023.3241204.

- Zbontar et al. [2021] Zbontar, J., Jing, L., Misra, I., LeCun, Y., Deny, S., 2021. Barlow twins: Self-supervised learning via redundancy reduction. CoRR abs/2103.03230. arXiv:2103.03230.

- Zhang et al. [2022] Zhang, H., Meng, Y., Zhao, Y., Qiao, Y., Yang, X., Coupland, S.E., Zheng, Y., 2022. Dtfd-mil: Double-tier feature distillation multiple instance learning for histopathology whole slide image classification. arXiv:2203.12081.

- Zhang et al. [2023] Zhang, Y., Li, H., Sun, Y., Zheng, S., Zhu, C., Yang, L., 2023. Attention-challenging multiple instance learning for whole slide image classification. arXiv:2311.07125.

- Zhao et al. [2020] Zhao, Y., Yang, F., Fang, Y., Liu, H., Zhou, N., Zhang, J., Sun, J., Yang, S., Menze, B., Fan, X., et al., 2020. Predicting lymph node metastasis using histopathological images based on multiple instance learning with deep graph convolution, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 4837--4846.

- Zhu et al. [2023] Zhu, L., Shi, H., Wei, H., Wang, C., Shi, S., Zhang, F., Yan, R., Liu, Y., He, T., Wang, L., et al., 2023. An accurate prediction of the origin for bone metastatic cancer using deep learning on digital pathological images. EBioMedicine 87.