TacIPC: Intersection- and Inversion-free FEM-based Elastomer Simulation For Optical Tactile Sensors

Abstract

Tactile perception stands as a critical sensory modality for human interaction with the environment. Among various tactile sensor techniques, optical sensor-based approaches have gained traction, notably for producing high-resolution tactile images. This work explores gel elastomer deformation simulation through a physics-based approach. While previous works in this direction usually adopt the explicit material point method (MPM), which has certain limitations in force simulation and rendering, we adopt the finite element method (FEM) and address the challenges in penetration and mesh distortion with incremental potential contact (IPC) method. As a result, we present a simulator named TacIPC, which can ensure numerically stable simulations while accommodating direct rendering and friction modeling. To evaluate TacIPC, we conduct three tasks: pseudo-image quality assessment, deformed geometry estimation, and marker displacement prediction. These tasks show its superior efficacy in reducing the sim-to-real gap. Our method can also seamlessly integrate with existing simulators. More experiments and videos can be found in the supplementary materials and on the website: https://sites.google.com/view/tac-ipc.

I Introduction

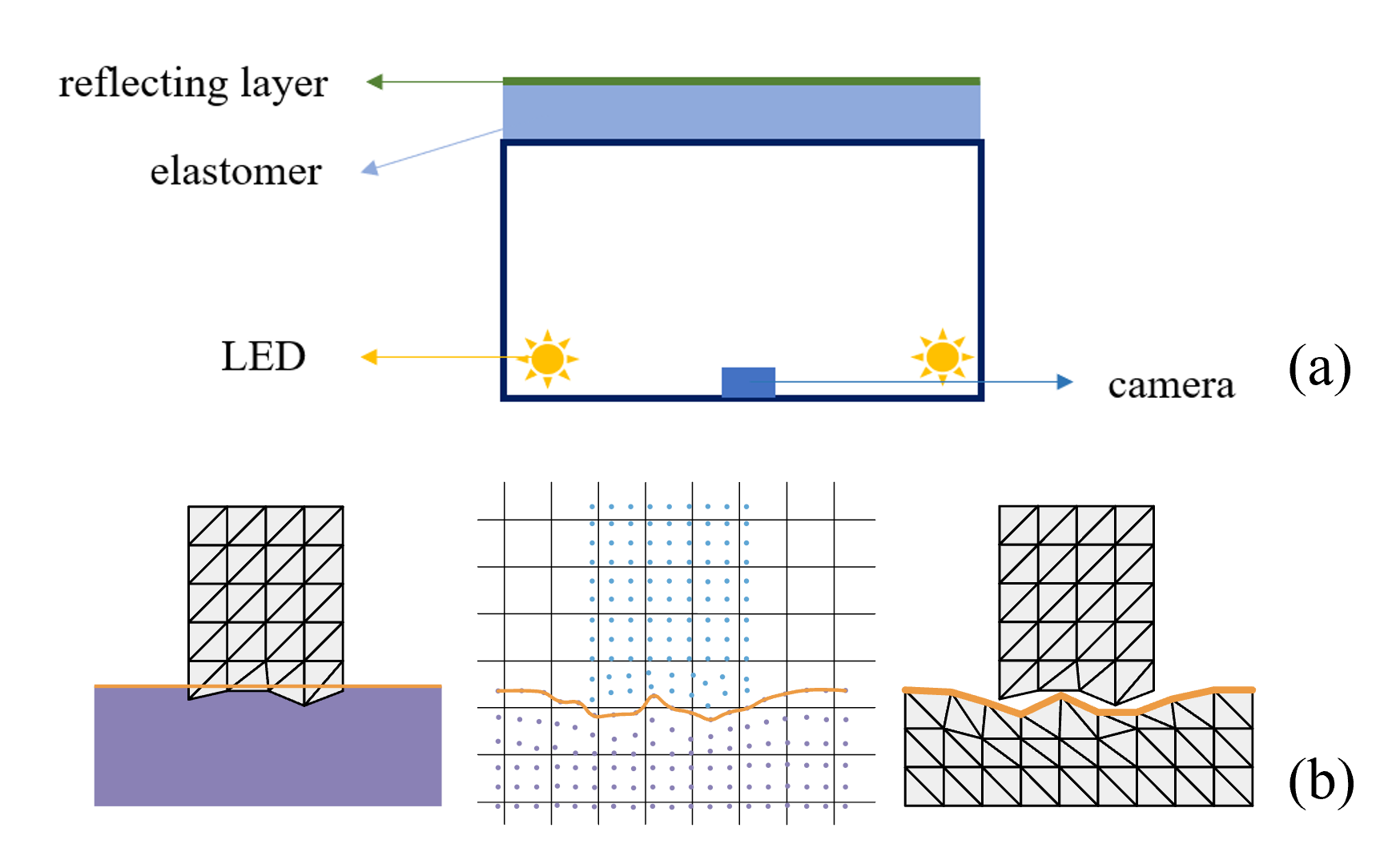

Tactile perception plays a pivotal role in enabling humans to discern and interact with their surrounding environment. Several methods have been explored to implement tactile sensors, including piezoelectric sensors [1], capacitive sensors [2], and optical sensors [3, 4, 5]. Recently, optical sensor-based approaches have become increasingly popular because they generate high-resolution tactile images (also known as pseudo images) and integrate seamlessly into learning pipelines. Optical tactile sensors typically use a camera to capture the deformation of a gel elastomer (as shown in Fig. 1). However, the tactile images produced by optical sensors do not directly correspond to force measurements. Previous sensors [3, 4, 5] have proposed many carefully designed light paths, but none can accurately and consistently reconstruct the deformed surface normals across various shapes. Consequently, optical tactile sensors are primarily used by feeding their images directly into a learning pipeline [6], and simulation is an integral component of this process.

A tactile simulator should support both the computation and the rendering of the gel elastomer deformation. Previous works treat gel deformation in one of two ways: non-physics-based or physics-based. As shown in Fig. 1, the non-physics-based way [7, 8] allows the object and the gel to penetrate each other and uses the penetration depth as the elastomer deformation. The physics-based way [9, 10] usually adopts the explicit material point method (MPM) as the physics simulation algorithm, modeling both the object and the gel as sets of material points. Explicit MPM benefits from Taichi [11], whose friendly APIs make it possible to build effective simulators with little code. However, explicit MPM has disadvantages in force simulation (especially frictional force) and in stability (particle penetration and numerical instability), and its rendering relies on interpolation between sample points, which can lead to over-smoothed results. Another way to achieve physics-based deformation is classic finite element method (FEM)-based modeling, which readily models friction and computes forces accurately. However, since FEM uses an explicit mesh for the deformed object (i.e., the elastomer), contact between the object and the gel can easily cause penetration and mesh distortion [12].

In this work, we take the FEM-based path to model the elastomer. We address the penetration and mesh distortion issues by incorporating the incremental potential contact (IPC) method [13], which guarantees that the simulation remains intersection-free and inversion-free during contact. In addition, IPC solves the dynamics equation implicitly, leading to numerically stable and accurate simulation results. We name the proposed simulator TacIPC. It models the elastomer with a tetrahedral mesh, and this explicit mesh modeling has three advantages: (1) rendering can be handled directly; (2) friction can be modeled directly; and (3) it can be naturally integrated with mesh-based robot simulators such as MuJoCo [14], Bullet [15], Isaac Sim [16], and RFUniverse [17].

For rendering, the real-world tactile image is formed by light reflected from the reflective layer of the elastomer. The typical Phong-based rendering with Gaussian blur [7, 18] does not replicate this mechanism; it only produces visually similar effects. In TacIPC, we adopt physics-based ray-tracing rendering and adjust the LED positions so that the simulated light paths mimic those in the real sensor. We calibrate the lighting color temperature in the real world and reproduce it in simulation. We also use reference images captured in the real world to introduce real-world sensory noise.

To evaluate the proposed tactile simulator, we conduct three tasks: pseudo-image quality assessment, marker displacement prediction, and deformed geometry estimation. These tasks demonstrate that, compared with previous optical tactile sensor simulators, our method significantly reduces the sim-to-real gap in both rendering and physics simulation, providing a more accurate and reliable way to design and optimize gel-based tactile sensors and to generate training data for learning methods. Furthermore, our approach can be easily integrated into existing simulation environments, where it improves the accuracy of the tactile simulation. We adopt RFUniverse [17] in our experiments.

We summarize our contribution as follows:

- We propose TacIPC, the first intersection- and inversion-free simulator for optical tactile sensors. It uses the physical properties of the sensor to simulate realistic tactile images in a numerically stable and physically accurate way.

- We use TacIPC to predict the marker displacement of tactile sensors under frictional contact, which is essential for contact-rich manipulation tasks. It significantly outperforms the MPM-based simulator.

- We train a depth estimation neural network in a sim-to-real fashion using pseudo images generated by TacIPC and validate the model on real-world data.

II Related Works

Our work proposes a physics-based simulator for optical tactile sensors and is therefore related to techniques for simulating both elastomer deformation and tactile sensors.

II-A Elastomer Simulation

Previous methods to simulate the elastomer effect in optical tactile sensors can be roughly divided into two categories: non-physics-based and physics-based approaches.

Non-physics-based approaches try to produce realistic tactile images in simulation without actually modeling the elastomer deformation caused by external contact. Some works [7, 8] reconstruct pseudo elastomer surfaces by allowing the object to intersect the elastomer and taking the depth of the intersected surface; they then smooth the depth maps with Gaussian kernels. We refer to these methods as “depth-based”. TACTO [18] is another depth-based method that renders tactile images with PyBullet [15], but it only adds shadows to the intersected surface. These non-physics-based approaches can produce seemingly plausible pseudo images, but they lack a physical basis.

The other line of work is the physics-based approach. Simulating elastomers, such as the gel layers in optical tactile sensors, is a complex task due to their deformable nature and high number of degrees of freedom. Numerous studies have adopted the finite element method (FEM) for deformation simulation [19, 20]. In [21], the authors proposed a robot interface to grasp deformable objects such as rubber spheres and foam cubes. Sferrazza et al. [19] use FEM to estimate force distributions. Although FEM is widely adopted for simulating deformation, it is considered computationally expensive and prone to mesh distortion (e.g., irregular mesh structures or negative element volumes) [12]. Recently, several works have leveraged the material point method (MPM) for physics-based simulation of elastomers [10, 9]. Such methods benefit from the friendly API provided by Taichi [11], which makes it easy to develop simulators with explicit MPM. However, explicit MPM has disadvantages in frictional force simulation and numerical stability, and its particle-based representation makes it hard to render tactile images directly. Weighing these trends, we consider physical accuracy more important and take the FEM path. In this work, we address the distortion issue of FEM simulation by introducing incremental potential contact (IPC) [13], which guarantees intersection- and inversion-free simulation.

II-B Sensor Simulation

Given an elastomer simulation technique, we need an interface to incorporate it into a sensor and robot system. For example, in the non-physics-based line of research, [7] first simulated optical tactile sensors by refining depth maps obtained from Gazebo [22]. In [18], the meshes of both the sensor and the object are first loaded into OpenGL [23] and subsequently updated according to the PyBullet simulation. In this setting, depth maps can be obtained from PyBullet or rendered in OpenGL together with the tactile images.

III IPC Preliminary

To leverage the advantages of FEM-based modeling and explicit mesh representation, the key challenge is to keep contact intersection-free and inversion-free. We address this challenge with incremental potential contact (IPC) [13], whose mechanism we briefly describe below.

For a dynamic system without contact and friction, Newton's second law for the mesh vertices can be written as

$$\dot{x} = v, \qquad M\dot{v} = f_e(x) + f_{\text{ext}}, \tag{1}$$

where $f_{\text{ext}}$ denotes the external forces applied to the vertices, $f_e(x)$ denotes the forces due to elastic deformation, $x$ and $v$ are the positions and velocities of the vertices, and $M$ is the mass matrix.

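Discretizing Eq. 1 with the implicit Euler scheme gives

$$x^{t+1} = x^t + h\,v^{t+1}, \qquad M v^{t+1} = M v^t + h\left(f_e(x^{t+1}) + f_{\text{ext}}\right), \tag{2}$$

which is equivalent to minimizing the incremental potential energy

$$E(x) = \frac{1}{2}\left(x - \hat{x}\right)^{\top} M \left(x - \hat{x}\right) + h^2\,\Psi(x), \tag{3}$$

that is, $x^{t+1} = \arg\min_x E(x)$. Here $t$ is the discretized time, $h$ is the time step size, $\Psi(x)$ is the hyper-elastic energy of the deformable objects, with $f_e(x) = -\nabla\Psi(x)$ by the principle of virtual work, and $\hat{x} = x^t + h\,v^t + h^2 M^{-1} f_{\text{ext}}$ is the predicted position. Indeed, setting $\nabla E(x) = M(x - \hat{x}) - h^2 f_e(x)$ to zero and substituting $v^{t+1} = (x^{t+1} - x^t)/h$ recovers Eq. 2. In other words, minimizing this energy yields the same evolution as Newton's law (Eq. 1) under implicit Euler time integration.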
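To make the equivalence between Eq. 2 and Eq. 3 concrete, the following sketch (not TacIPC's implementation) takes one implicit Euler step for a hypothetical single degree of freedom: a unit mass on a nonlinear spring with elastic energy $\Psi(x) = \frac{1}{2}kx^2 + \frac{1}{4}cx^4$ under a constant external force. It minimizes the incremental potential with Newton's method and then evaluates the residual of the momentum relation in Eq. 2. The spring model, parameter values, and function names are illustrative assumptions.

```python
"""One implicit Euler step obtained by minimizing the incremental potential (Eq. 3).

Hypothetical 1-DoF example: mass m on a nonlinear spring with
Psi(x) = 0.5*k*x**2 + 0.25*c*x**4 and a constant external force f_ext.
"""


def psi_grad(x: float, k: float, c: float) -> float:
    """dPsi/dx; the elastic force is f_e(x) = -psi_grad(x)."""
    return k * x + c * x**3


def psi_hess(x: float, k: float, c: float) -> float:
    """d^2 Psi / dx^2 (non-negative for k, c >= 0, so E is convex)."""
    return k + 3.0 * c * x**2


def ip_step(x_t, v_t, m, h, f_ext, k, c, tol=1e-12, max_iter=50):
    """Return (x_{t+1}, v_{t+1}) by Newton's method on E(x) from Eq. 3."""
    x_hat = x_t + h * v_t + h * h * f_ext / m  # predicted position
    x = x_t
    for _ in range(max_iter):
        grad = m * (x - x_hat) + h * h * psi_grad(x, k, c)  # dE/dx
        hess = m + h * h * psi_hess(x, k, c)                 # d^2E/dx^2 > 0
        dx = -grad / hess
        x += dx
        if abs(dx) < tol:
            break
    v = (x - x_t) / h  # first relation of Eq. 2
    return x, v


if __name__ == "__main__":
    m, h, f_ext, k, c = 1.0, 0.01, -9.8, 100.0, 1000.0
    x1, v1 = ip_step(0.1, 0.0, m, h, f_ext, k, c)
    # Residual of the momentum relation in Eq. 2: m*v1 - m*v_t - h*(f_e(x1) + f_ext)
    residual = m * v1 - m * 0.0 - h * (-psi_grad(x1, k, c) + f_ext)
    print(x1, v1, residual)
```

Because $E$ is convex here, plain Newton iterations converge; the vanishing residual confirms that the minimizer satisfies the implicit Euler update.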

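To prevent collisions, IPC adopts the barrier function

$$b(d, \hat{d}) = \begin{cases} -\left(d - \hat{d}\right)^2 \ln\left(\dfrac{d}{\hat{d}}\right), & 0 < d < \hat{d}, \\[4pt] 0, & d \ge \hat{d}, \end{cases} \tag{4}$$

where $d$ is the geometric distance between a pair of contact primitives (point-triangle or edge-edge) and $\hat{d}$ is a threshold parameter. The barrier is zero when the primitives are farther apart than $\hat{d}$ and grows to infinity as $d \to 0$.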

Using this barrier function, one can construct the barrier energy

$$B(x) = \kappa \sum_{k \in \mathcal{C}(x)} b\left(d_k(x), \hat{d}\right), \tag{5}$$

which induces the collision forces between contact primitive pairs. Here $\mathcal{C}(x)$ is the set of primitive pairs (i.e., edge-edge or point-triangle pairs in 3D) whose geometric distance $d_k(x)$ is less than $\hat{d}$, and $\kappa$ is the weight of the barrier energy term.
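The barrier of Eq. 4 and the energy of Eq. 5 are simple scalar functions once the pairwise distances are known. The sketch below evaluates them for a list of precomputed distances; computing point-triangle and edge-edge distances, and the exact way IPC turns barrier gradients into contact forces, are omitted, and all function names are hypothetical.

```python
import math


def barrier(d, d_hat):
    """IPC log barrier b(d, d_hat) of Eq. 4 for a primitive-pair distance d > 0."""
    if d <= 0.0:
        raise ValueError("d must be positive; d <= 0 means the primitives intersect")
    if d >= d_hat:
        return 0.0
    return -((d - d_hat) ** 2) * math.log(d / d_hat)


def barrier_grad(d, d_hat):
    """db/dd; negative inside d_hat, so descending the energy pushes primitives apart."""
    if d >= d_hat:
        return 0.0
    return -2.0 * (d - d_hat) * math.log(d / d_hat) - (d - d_hat) ** 2 / d


def barrier_energy(distances, d_hat, kappa):
    """B = kappa * sum of b(d_k) over the active set C (pairs closer than d_hat), Eq. 5."""
    return kappa * sum(barrier(d, d_hat) for d in distances if d < d_hat)


if __name__ == "__main__":
    d_hat = 0.5
    for d in (0.9, 0.4, 0.1, 1e-3, 1e-6):
        print(d, barrier(d, d_hat), barrier_grad(d, d_hat))
    print(barrier_energy([0.1, 0.3, 0.9], d_hat, kappa=2.0))
```

Both the barrier and its first derivative vanish at $d = \hat{d}$, so pairs entering or leaving the active set $\mathcal{C}(x)$ do not introduce jumps in the energy or the forces.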

To model friction, IPC uses a smooth approximation of the Coulomb friction model:

$$F_k(x) = -\mu\,\lambda_k\,T_k(x)\,f_1\!\left(\|u_k\|\right)\frac{u_k}{\|u_k\|}, \tag{6}$$

where $\mu$ is the coefficient of friction, $\lambda_k$ is the contact force magnitude, $T_k(x)$ is the sliding basis, and $u_k$ is the tangential relative displacement vector in the local orthogonal frame. Here $f_1$ is the smooth function

$$f_1(y) = \begin{cases} -\dfrac{y^2}{\epsilon_v^2 h^2} + \dfrac{2y}{\epsilon_v h}, & y \in (0, h\epsilon_v), \\[4pt] 1, & y \ge h\epsilon_v, \end{cases} \tag{7}$$

which makes the friction force integrable and characterizes the transition between static and dynamic friction. If we lag the sliding basis and contact force magnitude to the values $T^n$ and $\lambda^n$ solved in the previous optimization step, we can derive the variational frictional energy by integrating $F_k$:

$$D_k(x) = \mu\,\lambda_k^n\,f_0\!\left(\|u_k\|\right), \tag{8}$$

which satisfies $F_k(x) = -\nabla D_k(x)$, where $f_0' = f_1$ and $f_0(h\epsilon_v) = h\epsilon_v$. Here $\epsilon_v$ is a threshold parameter that handles the transition between dynamic and static friction. The total lagged variational frictional energy is therefore $D(x) = \sum_k D_k(x)$, and frictional contact is solved by alternately minimizing the total energy and updating the lagged sliding bases and contact force magnitudes.
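The smooth friction functions in Eqs. 7 and 8 can be checked numerically. The sketch below writes out $f_1$ and the antiderivative $f_0$ fixed by the conditions $f_0' = f_1$ and $f_0(h\epsilon_v) = h\epsilon_v$, evaluated on the scalar $y = \|u_k\|$. Tangent bases and the lagging loop are left out, and the function names are hypothetical.

```python
def f1(y, eps_v, h):
    """Smooth mollifier of Eq. 7; y = ||u_k|| is the tangential displacement norm."""
    if y >= eps_v * h:
        return 1.0
    return -y * y / (eps_v * eps_v * h * h) + 2.0 * y / (eps_v * h)


def f0(y, eps_v, h):
    """Antiderivative of f1 with f0(eps_v*h) = eps_v*h (used in Eq. 8)."""
    if y >= eps_v * h:
        return y
    return -(y**3) / (3.0 * eps_v**2 * h**2) + y * y / (eps_v * h) + eps_v * h / 3.0


def friction_energy(u_norm, mu, lam_lagged, eps_v, h):
    """Lagged variational friction energy D_k = mu * lambda_k^n * f0(||u_k||)."""
    return mu * lam_lagged * f0(u_norm, eps_v, h)


def friction_force_magnitude(u_norm, mu, lam_lagged, eps_v, h):
    """Scalar factor mu * lambda_k * f1(||u_k||) of Eq. 6; never exceeds mu * lambda_k."""
    return mu * lam_lagged * f1(u_norm, eps_v, h)


if __name__ == "__main__":
    eps_v, h = 1e-3, 0.01  # illustrative values only
    for y in (0.0, 2.5e-6, 5e-6, 1e-5, 1e-4):
        print(y, f1(y, eps_v, h), friction_force_magnitude(y, 0.5, 2.0, eps_v, h))
```

Below $h\epsilon_v$ the friction factor ramps smoothly from zero (static regime); above it, the factor saturates at the Coulomb limit $\mu\lambda_k$ (dynamic regime).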

After adding the friction energy $D(x)$ and the barrier energy $B(x)$ to the incremental potential energy $E(x)$, one can solve a dynamic system with contact and friction by minimizing the total energy $E(x) + B(x) + D(x)$ with the projected Newton method. In each Newton iteration, to ensure that no penetration occurs, IPC applies continuous collision detection (CCD) to compute the time of impact (TOI) along the search direction and uses it to bound the Newton step size. In this way, IPC guarantees that its simulation results are intersection-free and inversion-free.
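The following toy example (a hypothetical one-dimensional stand-in, not IPC's actual 3D solver) shows how CCD-based step filtering keeps every iterate feasible: a particle at height $z$ above a floor at $z = 0$ minimizes $E(z) + \kappa\, b(z, \hat{d})$. Each Newton direction is truncated to a fraction of the time of impact with the floor (0.8 here, an arbitrary choice), followed by backtracking until the energy decreases. The exact 1D TOI replaces IPC's 3D CCD, and no Hessian projection is needed because the 1D Hessian is already positive.

```python
import math


def barrier(d, d_hat):
    """IPC barrier b(d, d_hat) of Eq. 4."""
    if d >= d_hat:
        return 0.0
    return -((d - d_hat) ** 2) * math.log(d / d_hat)


def barrier_d1(d, d_hat):
    """First derivative db/dd."""
    if d >= d_hat:
        return 0.0
    return -2.0 * (d - d_hat) * math.log(d / d_hat) - (d - d_hat) ** 2 / d


def barrier_d2(d, d_hat):
    """Second derivative d2b/dd2 (positive for 0 < d < d_hat)."""
    if d >= d_hat:
        return 0.0
    r = d - d_hat
    return -2.0 * math.log(d / d_hat) - 4.0 * r / d + (r * r) / (d * d)


def time_of_impact(z, p):
    """Exact 1D CCD: step fraction at which z + alpha * p reaches the floor z = 0."""
    return z / -p if p < 0.0 else math.inf


def contact_step(z_t, v_t, m, h, g, kappa, d_hat, tol=1e-12, max_iter=100):
    """One implicit step for a particle above a floor: minimize E(z) + kappa * b(z)."""
    z_hat = z_t + h * v_t - h * h * g  # predicted position under gravity (-z)

    def energy(z):
        return 0.5 * m * (z - z_hat) ** 2 + kappa * barrier(z, d_hat)

    z = z_t  # must start strictly above the floor
    for _ in range(max_iter):
        grad = m * (z - z_hat) + kappa * barrier_d1(z, d_hat)
        hess = m + kappa * barrier_d2(z, d_hat)  # already positive in 1D
        p = -grad / hess                         # Newton direction
        if abs(p) < tol:
            break
        toi = time_of_impact(z, p)
        alpha = 1.0 if toi > 1.0 else 0.8 * toi  # CCD filter: never reach z = 0
        e0 = energy(z)
        while energy(z + alpha * p) > e0 and alpha > 1e-16:
            alpha *= 0.5                         # backtracking line search
        z += alpha * p
    return z, (z - z_t) / h


if __name__ == "__main__":
    # A fast-falling particle whose unconstrained prediction z_hat lies below the floor.
    print(contact_step(z_t=0.01, v_t=-1.0, m=1.0, h=0.02, g=9.8, kappa=1.0, d_hat=0.005))
```

Without the CCD filter, the first full Newton step from $z = 0.01$ would jump below the floor, where the barrier is undefined; with it, the particle settles inside the barrier's activation band while staying strictly above $z = 0$.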

IV TacIPC

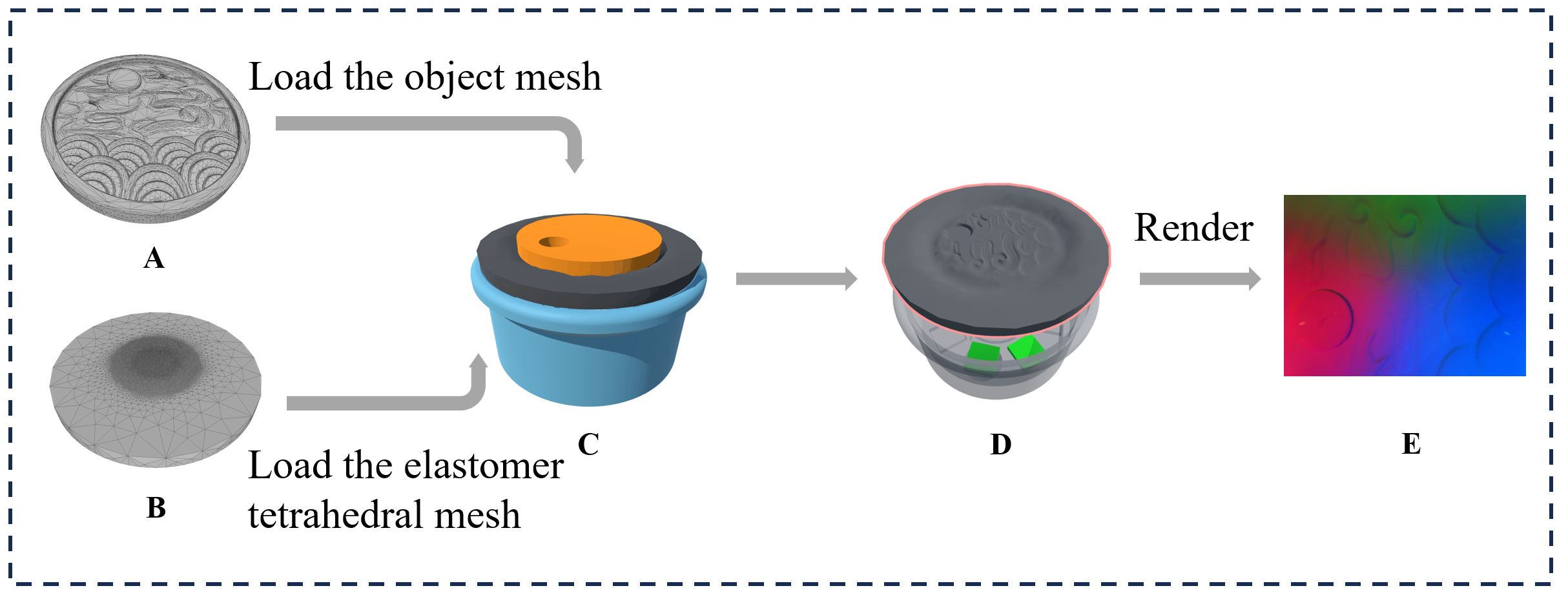

By incorporating IPC into tactile simulation, we construct a robot interface for tactile sensing and rendering. The overall pipeline is illustrated in Fig. 2.

IV-A Elastomer Simulation

In TacIPC, we apply FEM for elastomer simulation and represent objects with tetrahedral meshes. Previous work [9] has raised concerns about mesh distortion in FEM, which may produce irregular meshes or negative element volumes and therefore reduce the accuracy of the computed stress. IPC mitigates this problem by applying CCD and step-size filtering during optimization to prevent negative volumes and mesh intersections, resulting in higher simulation quality. Moreover, typical use cases of tactile sensors do not involve large elastomer deformation, so these problems are usually not significant.
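A common way to detect the negative element volumes mentioned above is to compute each tetrahedron's signed volume from its vertex positions; a non-positive value indicates an inverted or degenerate element. The sketch below is a generic check with hypothetical function names, not TacIPC code, and it assumes the vertex ordering of each tetrahedron gives a positive volume in the rest shape.

```python
import numpy as np


def signed_volumes(vertices, tets):
    """Signed volume of each tetrahedron.

    vertices: (N, 3) float array of positions; tets: (M, 4) int array of
    vertex indices. V = det([b - a, c - a, d - a]) / 6, negative if inverted.
    """
    v = np.asarray(vertices, dtype=np.float64)
    t = np.asarray(tets, dtype=np.int64)
    a, b, c, d = (v[t[:, i]] for i in range(4))
    # Scalar triple product (b - a) . ((c - a) x (d - a)) for every element.
    return np.einsum("ij,ij->i", b - a, np.cross(c - a, d - a)) / 6.0


def inverted_elements(vertices, tets, eps=0.0):
    """Indices of tetrahedra whose signed volume is <= eps (inverted or degenerate)."""
    return np.nonzero(signed_volumes(vertices, tets) <= eps)[0]


if __name__ == "__main__":
    verts = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 0, -1]], dtype=float)
    tets = np.array([[0, 1, 2, 3], [0, 1, 2, 4]])  # second tet is mirrored (inverted)
    print(signed_volumes(verts, tets), inverted_elements(verts, tets))
```

In an IPC-style solver, such a check is a diagnostic rather than a repair: the step filtering is what keeps these volumes from crossing zero in the first place.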

For elastomer simulation, we model the elastomer with a tetrahedral mesh with $N_v$ vertices and $N_t$ tetrahedra. When the elastomer is pressed by an external surface, which is also represented by a mesh, we apply IPC to handle the contact. The values of $N_v$ and $N_t$ depend on the mesh discretization strategy for the gel elastomer; we discuss different strategies in Sec. VI-D. The main experiments are conducted with the adaptive strategy, with $N_v = 23661$ and $N_t = 104675$: in the central region of the mesh, where most of the contact happens, the vertices and tetrahedral cells reach their highest density, with an average edge length of 0.1mm.

IV-B Connection With A Robot Simulator

Tacchi [9] shares only the velocity of the contact object with an external simulator such as MuJoCo [14]. Because Tacchi (particles for object representation, MPM for physics simulation) and MuJoCo (meshes for object representation, penalty-based optimization [25] for physics simulation) model contact differently, the tactile simulation and the robot simulation are independent. That is, the contact force between the object and the tactile sensor differs between the two simulators, and the particle locations of the gel elastomer in Tacchi do not align with the vertex locations in the external simulator.

In TacIPC, by contrast, we address these issues simply by sharing all vertex velocities and locations with an external simulator that supports mesh modeling, such as RFUniverse [17].

IV-C Rendering

Since rendering is related to the lighting design of the optical tactile sensors, different sensors may have different designs. We adopt the MC-Tac sensor [3] for the main experiments, as it is an open-source optical tactile sensor.

We load an MC-Tac sensor model in Unity, where the light source positions and parameters (e.g., lighting color temperature) are aligned with those of the real-world sensor. We also align the Unity camera pose and parameters with those of the camera in the real sensor. The deformed elastomer surface generated by TacIPC reflects the light emitted by these light sources, so tactile images can be captured by the Unity camera and rendered by the standard Unity physics-based rendering pipeline (e.g., real-time ray tracing). Finally, we subtract the simulated reference image (the no-contact image) from the rendered image and add the difference to the reference image collected in the real world. This step introduces real-world sensory noise and further reduces the sim-to-real gap.
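A minimal sketch of this reference-difference step, assuming all three images are aligned, same-sized floating-point arrays with values in [0, 1]. The clipping to [0, 1] and the function name are our assumptions and are not described above.

```python
import numpy as np


def composite_with_real_reference(sim_rendered, sim_reference, real_reference):
    """Transfer a simulated contact signal onto a real no-contact image.

    Computes real_reference + (sim_rendered - sim_reference) and clips the
    result to [0, 1]. Inputs are assumed to be aligned float images in [0, 1].
    """
    sim_rendered = np.asarray(sim_rendered, dtype=np.float64)
    sim_reference = np.asarray(sim_reference, dtype=np.float64)
    real_reference = np.asarray(real_reference, dtype=np.float64)
    if not (sim_rendered.shape == sim_reference.shape == real_reference.shape):
        raise ValueError("all three images must have the same shape")
    difference = sim_rendered - sim_reference  # contact-induced change in simulation
    return np.clip(real_reference + difference, 0.0, 1.0)  # real noise comes from real_reference


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real_ref = rng.uniform(0.2, 0.8, size=(4, 4, 3))
    sim_ref = rng.uniform(0.0, 1.0, size=(4, 4, 3))
    sim_img = sim_ref.copy()
    sim_img[1:3, 1:3] += 0.1  # pretend contact brightens a small patch
    print(composite_with_real_reference(sim_img, sim_ref, real_ref)[..., 0])
```

Only the simulated change is kept, so the background, vignetting, and sensor noise in the output come from the real no-contact image.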

V Experimental setup

In this section, we introduce the task settings to validate the ability of TacIPC in Sec. V-A, real-world setup in Sec. V-B, and simulation setup in Sec. V-C respectively.

V-A Benchmark Tasks

We collect both real-world and simulation data to evaluate TacIPC on three tasks. (1) Pseudo-image quality assessment: we compare the tactile images generated by each simulator with ground truth collected in the real world using the Structural Similarity (SSIM), Mean Absolute Error (MAE), and Peak Signal-to-Noise Ratio (PSNR) metrics. We evaluate TacIPC and two simulator baselines, the depth-based method [7] and Tacchi [9]. (2) Marker displacement prediction: we first press the contact object onto the tactile sensor elastomer and then use a robot gripper to either rotate or push the contact object, recording the movement of the markers on the elastomer throughout the process. We align the movements of the contact object in Tacchi and TacIPC with those in the real-world experiments and compare the marker displacements predicted by Tacchi and TacIPC with the ground truth collected in the real world. (3) Deformed geometry estimation: we train a U-Net [26] to predict depth images of contact objects from the corresponding tactile images. Two networks are trained on synthetic datasets generated by Tacchi [9] and TacIPC, respectively, and tested on real-world data. We assess the sim-to-real gap of their outputs with MAE and Mean Squared Error (MSE).
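The pixel-wise metrics used in tasks (1) and (3) follow their standard definitions, sketched below. The data range and any color handling used for the reported numbers are not stated, so the `data_range` default of 1.0 is an assumption and absolute values will depend on that convention. SSIM is usually computed with local windows (for example, `skimage.metrics.structural_similarity` in scikit-image) and is omitted here.

```python
import numpy as np


def mae(pred, target):
    """Mean absolute error over all pixels (and channels)."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    return float(np.mean(np.abs(diff)))


def mse(pred, target):
    """Mean squared error over all pixels (and channels)."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    return float(np.mean(diff * diff))


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the span of valid pixel values."""
    err = mse(pred, target)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(data_range**2 / err))


if __name__ == "__main__":
    sim = np.zeros((2, 2))
    real = np.full((2, 2), 0.1)
    print(mae(sim, real), mse(sim, real), psnr(sim, real))
```

Because all three reduce the whole image to one average, a blurred image with small per-pixel errors can score as well as a sharp one, which is one reason these metrics can miss over-smoothing.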

V-B Real-world Setup

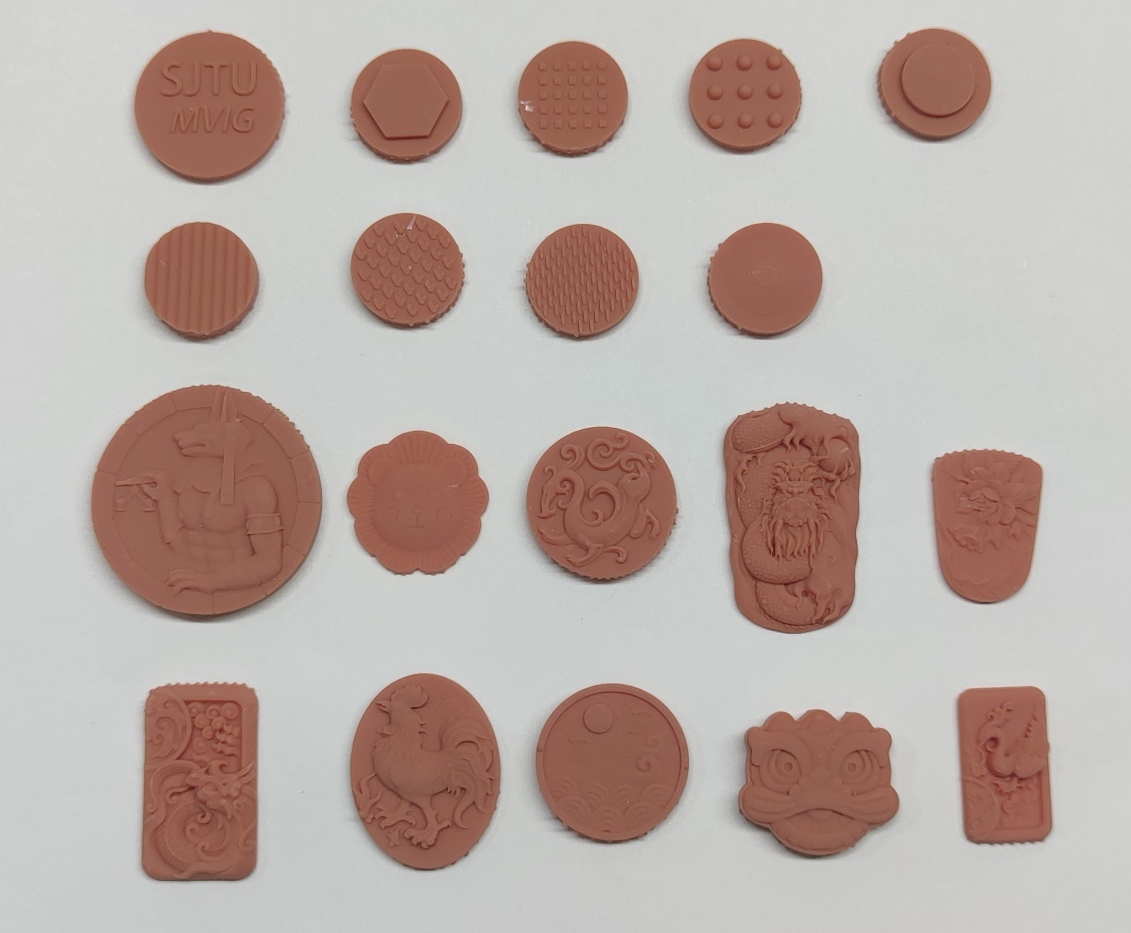

We built an object dataset by 3D printing 19 object meshes: 9 of them have regular patterns such as alphabet letters and polygons, and the remaining 10 have various detailed textures. As shown in Fig. 3, all of these objects are short, coin-like cylinders with the pattern printed on one side. During the experiments, we glued the other side of each object to a wooden cube. A Flexiv robot with an AG-95 gripper grasps the cube and moves it to the MC-Tac sensor, which is fixed on a horizontal tabletop. The gripper then moves down to press the object against the sensor elastomer while the MC-Tac camera captures tactile snapshots.

V-C Virtual-world Setup

In the TacIPC simulator, we first use ABAQUS [24] to generate a tetrahedral mesh from the triangle mesh of the MC-Tac elastomer, a cylinder with a radius of 15mm and a thickness of 2mm. The average edge length in the contact area is about 0.1mm, and the tetrahedral mesh has 23661 vertices and 104675 cells. Most of the original object meshes have more than 500,000 vertices, which would dramatically increase the computation cost without apparent quality improvement. Therefore, before loading the object meshes into the simulation scene, we simplify them with the quadric edge collapse decimation algorithm [27] in MeshLab [28], so that each has 8000 vertices. In our TacIPC simulation, the timestep is , the distance threshold for the barrier energy is set to times the diagonal length of the simulation scene, and the barrier energy weight is . We model the contact object as a rigid body with density . For the MC-Tac gel elastomer, we measure the material parameters in the real world and use them in the simulation: gel density , Young's modulus , and Poisson's ratio . In the simulation, we use the augmented Lagrangian algorithm both to fix the side of the elastomer that is glued to a horizontal surface in the real world and to control the motion of the contact object pressing the elastomer. We save the resulting elastomer deformation and subsequently use it to render tactile images in Unity.
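Measured gel parameters and the scene-relative barrier threshold are usually turned into solver inputs as sketched below. This assumes an isotropic hyperelastic model parameterized by Lamé constants, which is common in IPC-style FEM codes; the constitutive model TacIPC uses is not stated above. The numbers in the usage lines are placeholders, not the measured MC-Tac values, and the function names are hypothetical.

```python
import math


def lame_parameters(youngs_modulus, poisson_ratio):
    """Convert Young's modulus E and Poisson's ratio nu to Lamé (mu, lambda)."""
    E, nu = youngs_modulus, poisson_ratio
    if not -1.0 < nu < 0.5:
        raise ValueError("Poisson's ratio must lie in (-1, 0.5) for an isotropic solid")
    mu = E / (2.0 * (1.0 + nu))                     # shear modulus
    lam = E * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))  # diverges as nu -> 0.5
    return mu, lam


def barrier_threshold(points, ratio):
    """d_hat = ratio * diagonal of the axis-aligned bounding box of the scene."""
    xs, ys, zs = zip(*points)
    lo = (min(xs), min(ys), min(zs))
    hi = (max(xs), max(ys), max(zs))
    return ratio * math.dist(lo, hi)


if __name__ == "__main__":
    # Placeholder numbers for illustration only (not the measured MC-Tac values).
    print(lame_parameters(1.0e5, 0.45))
    print(barrier_threshold([(0.0, 0.0, 0.0), (30.0, 30.0, 12.0)], 1e-3))
```

Elastomers are often nearly incompressible, and the first Lamé parameter grows rapidly as Poisson's ratio approaches 0.5, so the measured ratio strongly affects the stiffness of the resulting system.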

VI Experiments

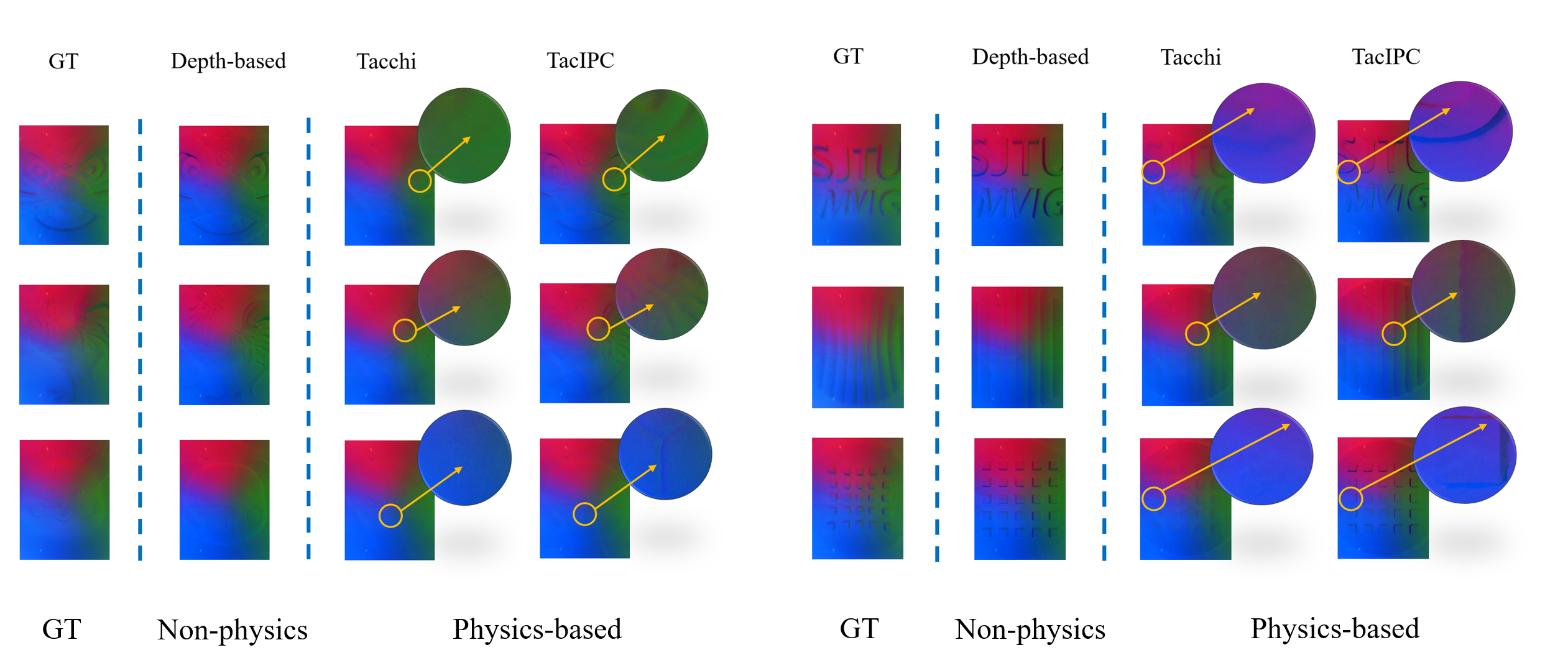

VI-A Pseudo-Image Quality Assessment

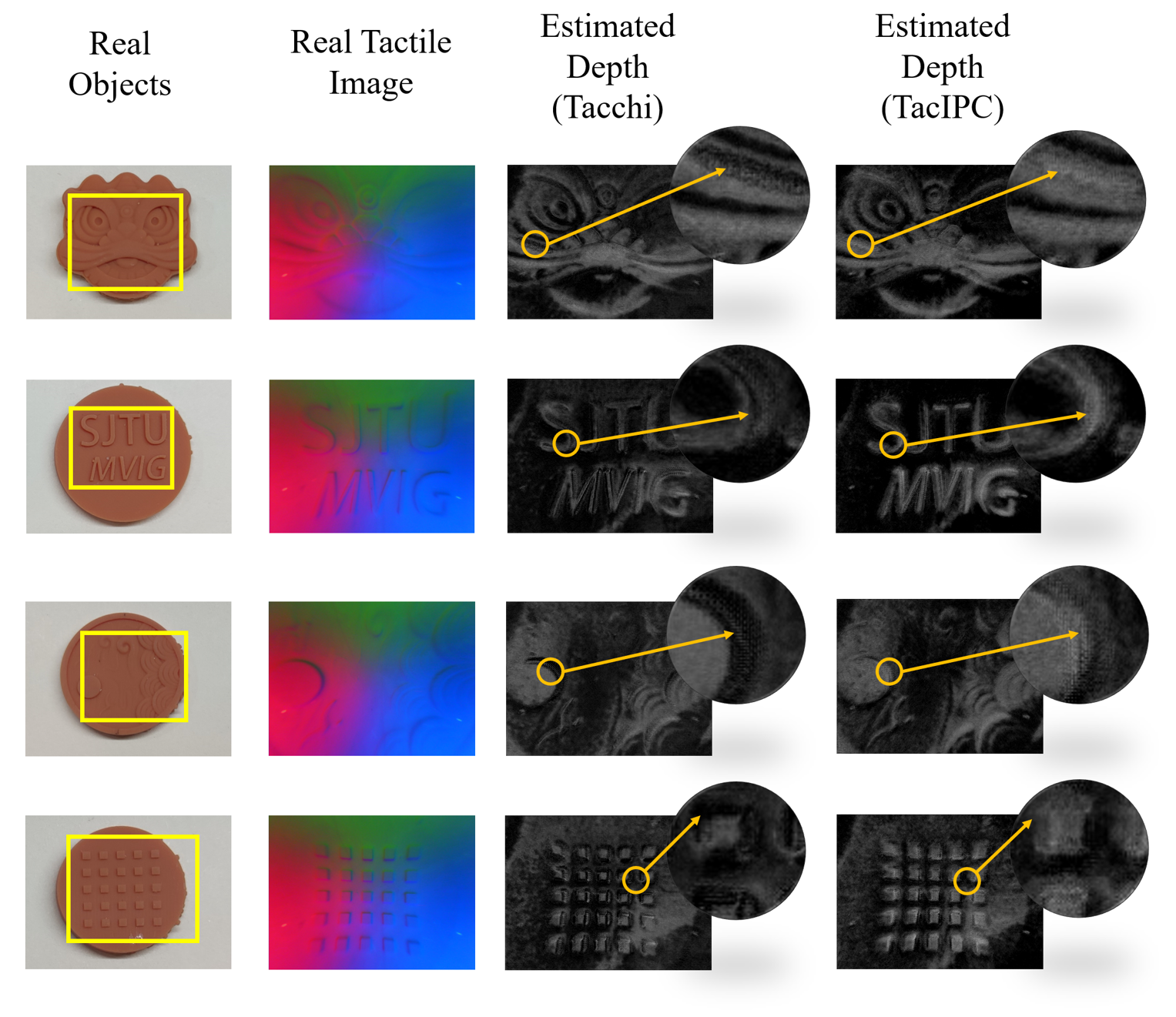

To evaluate the pseudo-image quality of our method, we collect real-world tactile images of 19 3D-printed objects with complex textures. The printed objects and their meshes are shown in Fig. 4. As Fig. 4 shows, Tacchi generates over-smoothed results due to its depth field interpolation step, whereas the pseudo images generated by TacIPC show detailed and clear textures of the contact objects. However, such over-smoothing may not be properly reflected in pixel-wise metrics such as MAE and PSNR, reported in TABLE I, where Tacchi scores slightly better than TacIPC. This motivates us to adopt physics-based metrics, as described in the next section.

| Simulation | SSIM | MAE | PSNR |
|---|---|---|---|
| depth-based | 0.86 | 0.043 | 55.92 |
| Tacchi | 0.90 | 0.034 | 59.17 |
| TacIPC | 0.90 | 0.037 | 57.72 |

VI-B Marker Displacement Prediction

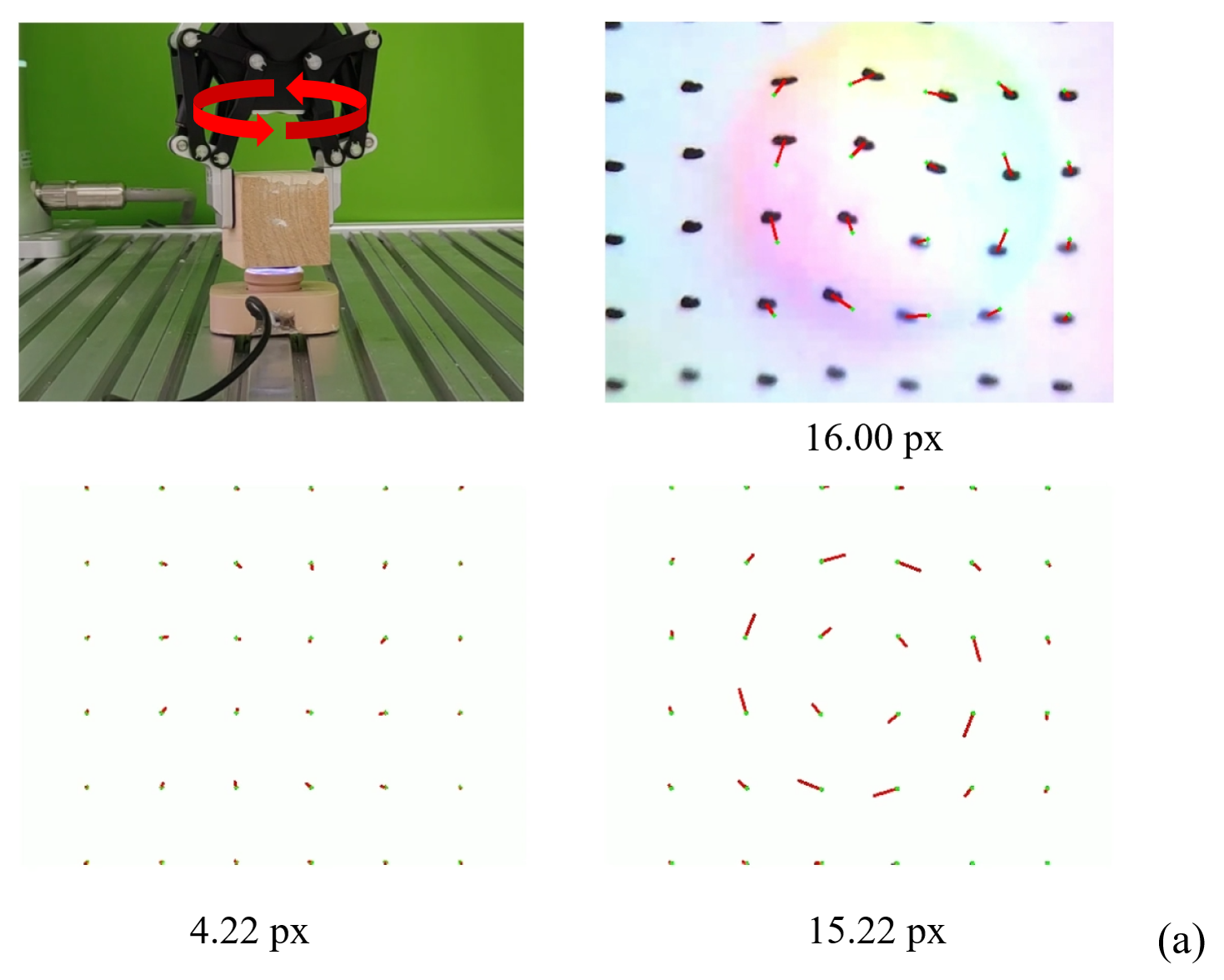

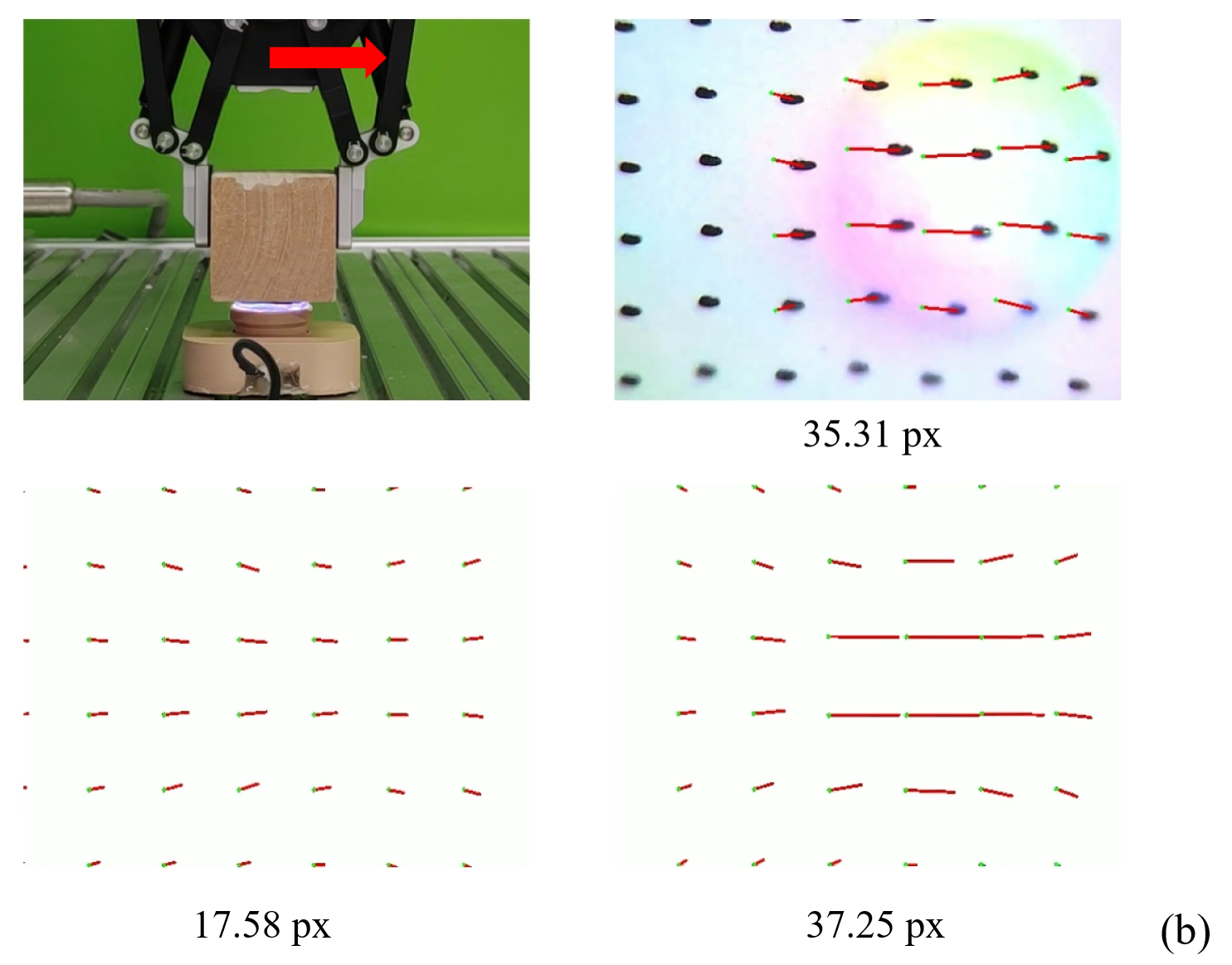

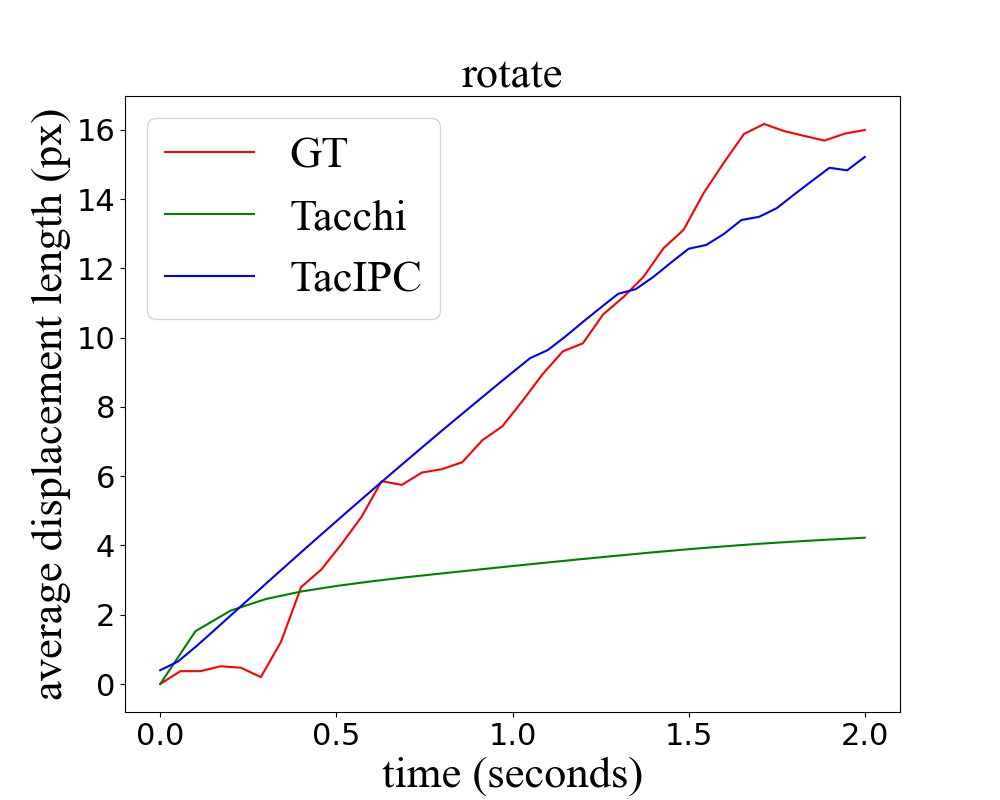

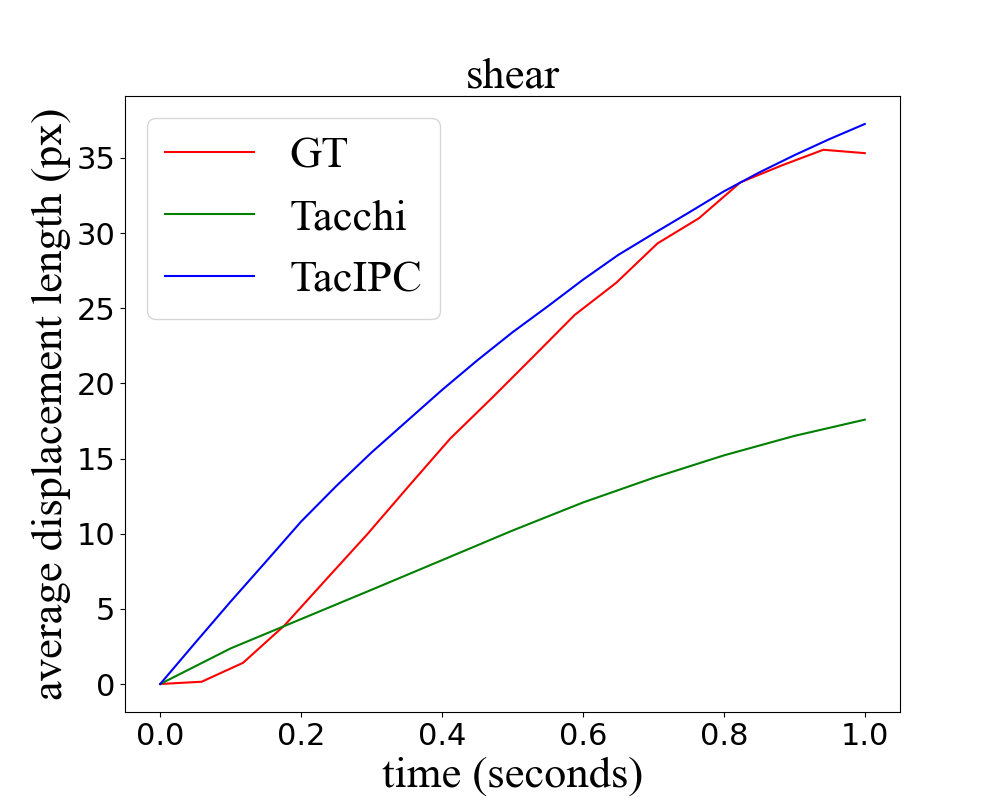

As [4] shows, the marker displacement of tactile sensors is crucial because it can be used to predict force distributions, contributing to more stable manipulation policies. Here we compare the marker displacements computed by different physics simulation methods with those in the real world. To test marker displacement accuracy in different cases, we conduct the experiment under both shearing and rotational frictional contacts. In both settings, the contact object first presses the elastomer to a depth of 0.5mm. Then, in the shearing setting, the gripper moves straight horizontally, shearing the gel elastomer, while in the rotational setting, the gripper rotates around a vertical axis through its center, rotating the gel elastomer surface.

We record the marker displacements in both settings in the real world, the Tacchi simulator, and the TacIPC simulator, as shown in Fig. 5. Taking the real-world displacement as ground truth, the marker displacements in Tacchi are much shorter than the ground truth, while those in TacIPC are much closer to the real world. Fig. 6 plots, for each frame, the accumulated average displacement length of the 20 markers at the center of the contact region in the real world, Tacchi, and TacIPC. It clearly shows that, over a long sequence, the marker displacement in TacIPC is much more consistent with the real world than that in the MPM-based Tacchi simulator.
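The exact computation behind the curve in Fig. 6 is not specified. One plausible reading is sketched below: select the 20 markers closest to the contact center in the first frame, average their frame-to-frame step lengths, and accumulate that average over time. The track layout (frames × markers × 2), the selection rule, the extra endpoint-error helper, and all function names are assumptions.

```python
import numpy as np


def central_marker_ids(initial_xy, center_xy, n=20):
    """Indices of the n markers closest to the contact center in the first frame."""
    d = np.linalg.norm(np.asarray(initial_xy, float) - np.asarray(center_xy, float), axis=1)
    return np.argsort(d, kind="stable")[:n]


def accumulated_mean_displacement(tracks, ids):
    """Accumulated per-frame mean step length of the selected markers.

    tracks: (T, N, 2) marker positions over T frames. Returns a (T,) curve
    starting at 0 whose t-th entry sums the mean frame-to-frame motion up to t.
    """
    p = np.asarray(tracks, float)[:, ids, :]
    steps = np.linalg.norm(np.diff(p, axis=0), axis=2)  # (T-1, n) step lengths
    return np.concatenate([[0.0], np.cumsum(steps.mean(axis=1))])


def mean_endpoint_error(sim_tracks, real_tracks, ids):
    """Per-frame mean distance between simulated and real marker positions."""
    sim = np.asarray(sim_tracks, float)[:, ids, :]
    real = np.asarray(real_tracks, float)[:, ids, :]
    return np.linalg.norm(sim - real, axis=2).mean(axis=1)


if __name__ == "__main__":
    grid = np.array([[x, y] for x in range(-3, 4) for y in range(-3, 4)], dtype=float)
    ids = central_marker_ids(grid, (0.0, 0.0), n=5)
    shear = np.stack([grid + np.array([0.5 * t, 0.0]) for t in range(4)])  # uniform shear
    print(accumulated_mean_displacement(shear, ids))
```

Comparing the simulated and real curves frame by frame, or using the endpoint error directly, turns the qualitative comparison in Fig. 5 into a single per-frame number.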

VI-C Deformed Geometry Estimation

We train a U-Net to estimate the depth map of the contact object from its tactile image. To build the training dataset, we use the TacIPC simulator to collect 20 tactile images with random poses for each of 24 object meshes. We first train the network on this dataset and then validate it on real-world images to examine the sim-to-real gap between TacIPC and the real world; the results, together with those of a network trained on Tacchi-generated data, are reported in Table II. The reference depth in the real world is obtained by aligning the flat part of the reconstructed object surface with that of the ground-truth contact object mesh in Unity. Qualitative results are shown in Fig. 7. The network trained on TacIPC data achieves lower MAE and MSE than the one trained on Tacchi data, and the high quality of its estimated depth images indicates that the accuracy of TacIPC partially bridges the gap between simulation and the real world.

| Simulation | MAE | MSE |
|---|---|---|
| Tacchi | 0.03072 | 0.001423 |
| TacIPC | 0.02708 | 0.001254 |

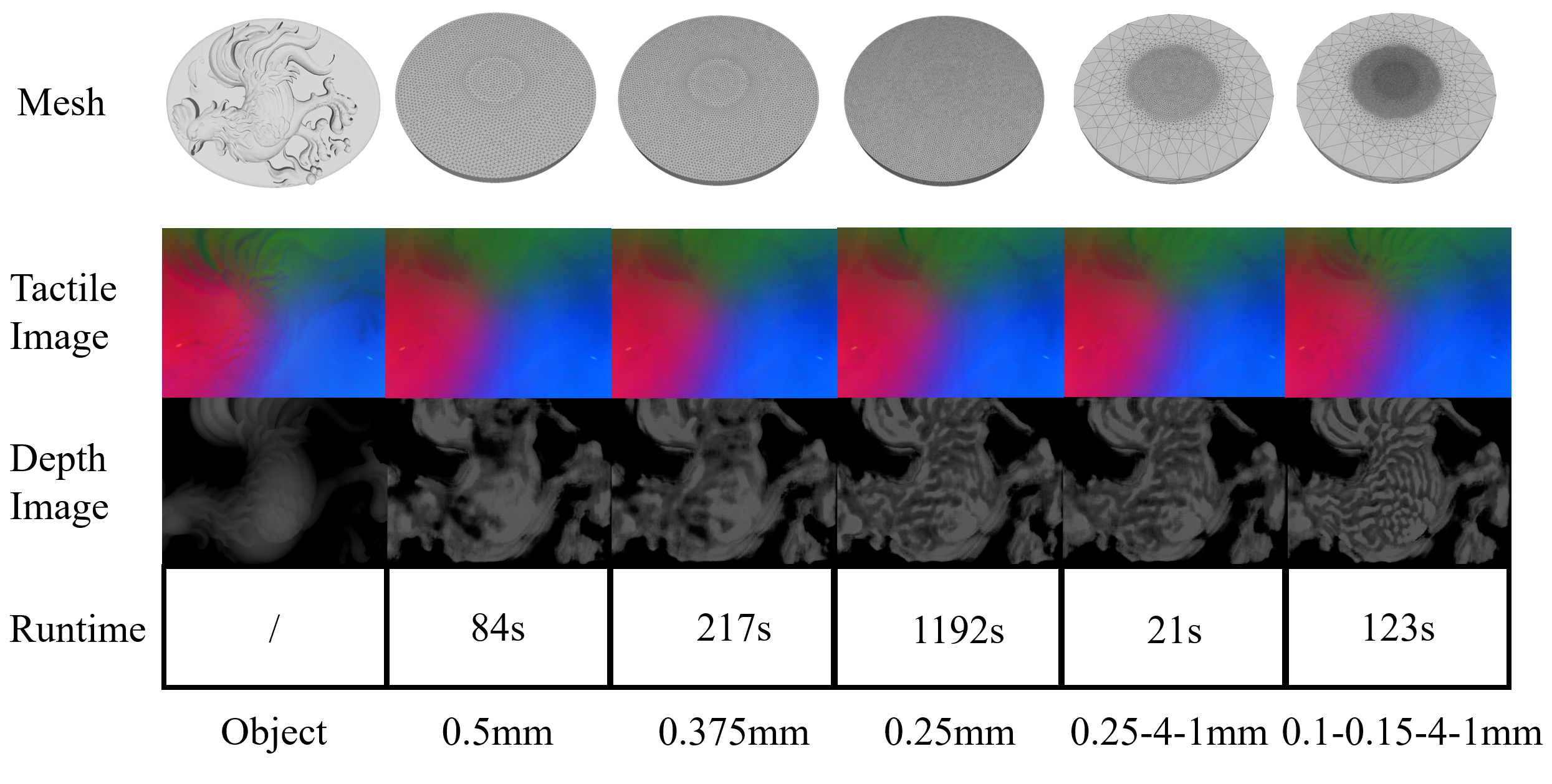

VI-D Ablation Study

Different Meshing Methods

We test different tetrahedral mesh discretizations of the tactile sensor elastomer, generated by uniform and adaptive meshing, by using each to generate tactile images and then estimating contact object depth maps from these images. Three uniformly discretized meshes and two adaptively discretized meshes are listed in the first row of Fig. 8. Uniform meshing discretizes the object with nearly uniform edge lengths; in the 2nd to 4th columns of Fig. 8, we use average edge lengths of 0.5mm, 0.375mm, and 0.25mm, respectively. Adaptive meshing makes the vertex density highest, that is, the average edge length smallest, around the contact-rich region, which is the central region of the elastomer's front surface. For the mesh in the 5th column of Fig. 8, the average edge lengths of the front central region, the front edge region, and the back side are 0.25mm, 4mm, and 1mm, respectively. For the adaptive mesh in the 6th column of Fig. 8, the average edge length gradually increases from 0.1mm to 0.15mm and then to 4mm as the region moves from the front central part to the front edge part, and the back side has an edge length of 1mm. Fig. 8 also shows the tactile images generated with these meshes and the corresponding depth maps estimated by the U-Net described in Sec. VI-C. We observe that, to achieve similar tactile image quality, adaptive meshing needs far fewer vertices and cells than uniform meshing. In all other experiments, we use the adaptive mesh discretization shown in the rightmost column of Fig. 8 for the MC-Tac elastomer.
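The following sketch illustrates why adaptive meshing is so much cheaper. It defines a hypothetical radial sizing field that mimics the 0.1mm to 0.15mm to 4mm progression described above (the breakpoint radii are guesses, not values from this paper) and gives a back-of-the-envelope element count for a uniform mesh of the 15mm-radius, 2mm-thick elastomer, assuming the volume were filled with regular tetrahedra. Regular tetrahedra cannot tile space exactly, so this is only an order-of-magnitude estimate.

```python
import math


def target_edge_length(r_mm, r_inner=2.0, r_outer=12.0):
    """Hypothetical sizing field (mm) on the elastomer front surface.

    Edge length grows linearly from 0.1 mm at the center to 0.15 mm at
    r_inner, then to 4 mm at r_outer, and stays at 4 mm up to the rim.
    r_inner and r_outer are illustrative assumptions.
    """
    if r_mm <= r_inner:
        return 0.1 + (0.15 - 0.1) * r_mm / r_inner
    if r_mm <= r_outer:
        return 0.15 + (4.0 - 0.15) * (r_mm - r_inner) / (r_outer - r_inner)
    return 4.0


def approx_uniform_tet_count(volume_mm3, edge_mm):
    """Rough element count if the volume were filled with regular tetrahedra."""
    regular_tet_volume = edge_mm**3 / (6.0 * math.sqrt(2.0))
    return volume_mm3 / regular_tet_volume


if __name__ == "__main__":
    gel_volume = math.pi * 15.0**2 * 2.0  # cylinder: radius 15 mm, thickness 2 mm
    for edge in (0.5, 0.375, 0.25, 0.1):
        print(f"uniform {edge} mm: ~{approx_uniform_tet_count(gel_volume, edge):.2e} tets")
    print([round(target_edge_length(r), 3) for r in (0.0, 2.0, 7.0, 15.0)])
```

Since the element count scales with the inverse cube of the edge length, a uniform 0.1mm mesh would need roughly 1.2 × 10^7 tetrahedra under these assumptions, about two orders of magnitude more than the 104675 cells of the adaptive mesh used in the experiments.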

Computational Cost

Admittedly, in exchange for higher accuracy and the modeling of frictional contact, IPC is much more computationally expensive than explicit MPM for simulating the tactile sensor elastomer deformation. Typically, TacIPC needs 6GB of GPU memory to compute the elastomer deformation over 5 large timesteps () when the elastomer is pressed by a simplified object mesh with 8000 vertices and 16000 edges. Here the elastomer mesh has 23661 vertices and 104675 tetrahedral cells. All simulation experiments were run on a GeForce RTX 4090 graphics card.

VII Conclusion And Discussion

We propose TacIPC, an intersection- and inversion-free FEM-based elastomer simulator for optical tactile sensors. TacIPC simulates the deformation of the gel elastomer to generate high-quality tactile images. By applying IPC to properly handle contact and friction, it can also accurately predict the marker displacement of tactile sensors. Additionally, a depth estimation model trained on a TacIPC-generated synthetic dataset reconstructs contact object geometry from real tactile images with a reduced sim-to-real gap. Moreover, TacIPC can be integrated with existing simulators that support mesh modeling.

This work uses a standard and efficient rendering model. Obtaining more realistic tactile images would require improving the rendering model and developing techniques to calibrate its parameters, which we leave for future work.

References

- [1] X. Chen, J. Shao, H. Tian, X. Li, C. Wang, Y. Luo, and S. Li, “Scalable imprinting of flexible multiplexed sensor arrays with distributed piezoelectricity-enhanced micropillars for dynamic tactile sensing,” Advanced Materials Technologies, vol. 5, no. 7, p. 2000046, 2020.

- [2] S. Lee, S. Franklin, F. A. Hassani, T. Yokota, M. O. G. Nayeem, Y. Wang, R. Leib, G. Cheng, D. W. Franklin, and T. Someya, “Nanomesh pressure sensor for monitoring finger manipulation without sensory interference,” Science, vol. 370, no. 6519, pp. 966–970, 2020.

- [3] J. Ren, J. Zou, and G. Gu, “Mc-Tac: Modular camera-based tactile sensor for robot gripper,” in The 16th International Conference on Intelligent Robotics and Applications (ICIRA), 2023.

- [4] W. Yuan, S. Dong, and E. H. Adelson, “Gelsight: High-resolution robot tactile sensors for estimating geometry and force,” Sensors, vol. 17, no. 12, p. 2762, 2017.

- [5] E. Donlon, S. Dong, M. Liu, J. Li, E. Adelson, and A. Rodriguez, “Gelslim: A high-resolution, compact, robust, and calibrated tactile-sensing finger,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 1927–1934.

- [6] W. Xu, Z. Yu, H. Xue, R. Ye, S. Yao, and C. Lu, “Visual-tactile sensing for in-hand object reconstruction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 8803–8812.

- [7] D. F. Gomes, P. Paoletti, and S. Luo, “Generation of gelsight tactile images for sim2real learning,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 4177–4184, 2021.

- [8] A. Agarwal, T. Man, and W. Yuan, “Simulation of vision-based tactile sensors using physics based rendering,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 1–7.

- [9] Z. Chen, S. Zhang, S. Luo, F. Sun, and B. Fang, “Tacchi: A pluggable and low computational cost elastomer deformation simulator for optical tactile sensors,” IEEE Robotics and Automation Letters, vol. 8, no. 3, pp. 1239–1246, 2023.

- [10] Y. Wang, W. Huang, B. Fang, F. Sun, and C. Li, “Elastic tactile simulation towards tactile-visual perception,” in Proceedings of the 29th ACM International Conference on Multimedia, 2021, pp. 2690–2698.

- [11] Y. Hu, T.-M. Li, L. Anderson, J. Ragan-Kelley, and F. Durand, “Taichi: a language for high-performance computation on spatially sparse data structures,” ACM Transactions on Graphics (TOG), vol. 38, no. 6, pp. 1–16, 2019.

- [12] N.-S. Lee and K.-J. Bathe, “Effects of element distortions on the performance of isoparametric elements,” International Journal for numerical Methods in engineering, vol. 36, no. 20, pp. 3553–3576, 1993.

- [13] M. Li, Z. Ferguson, T. Schneider, T. R. Langlois, D. Zorin, D. Panozzo, C. Jiang, and D. M. Kaufman, “Incremental potential contact: intersection-and inversion-free, large-deformation dynamics,” ACM Trans. Graph., vol. 39, no. 4, p. 49, 2020.

- [14] E. Todorov, T. Erez, and Y. Tassa, “Mujoco: A physics engine for model-based control,” in Intelligent Robots and Systems (IROS), 2012 IEEE/RSJ International Conference on. IEEE, 2012, pp. 5026–5033. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/6386109/

- [15] E. Coumans and Y. Bai, “Pybullet, a python module for physics simulation for games, robotics and machine learning,” http://pybullet.org, 2016–2021.

- [16] V. Makoviychuk, L. Wawrzyniak, Y. Guo, M. Lu, K. Storey, M. Macklin, D. Hoeller, N. Rudin, A. Allshire, A. Handa, and G. State, “Isaac gym: High performance gpu-based physics simulation for robot learning,” 2021.

- [17] H. Fu, W. Xu, R. Ye, H. Xue, Z. Yu, T. Tang, Y. Li, W. Du, J. Zhang, and C. Lu, “Demonstrating rfuniverse: A multiphysics simulation platform for embodied ai.”

- [18] S. Wang, M. Lambeta, P.-W. Chou, and R. Calandra, “Tacto: A fast, flexible, and open-source simulator for high-resolution vision-based tactile sensors,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 3930–3937, 2022.

- [19] C. Sferrazza, A. Wahlsten, C. Trueeb, and R. D’Andrea, “Ground truth force distribution for learning-based tactile sensing: A finite element approach,” IEEE Access, vol. 7, pp. 173438–173449, 2019.

- [20] S. Zhang, Z. Chen, Y. Gao, W. Wan, J. Shan, H. Xue, F. Sun, Y. Yang, and B. Fang, “Hardware technology of vision-based tactile sensor: A review,” IEEE Sensors Journal, 2022.

- [21] L. Zaidi, J. A. Corrales, B. C. Bouzgarrou, Y. Mezouar, and L. Sabourin, “Model-based strategy for grasping 3d deformable objects using a multi-fingered robotic hand,” Robotics and Autonomous Systems, vol. 95, pp. 196–206, 2017.

- [22] N. Koenig and A. Howard, “Design and use paradigms for gazebo, an open-source multi-robot simulator,” in 2004 IEEE/RSJ international conference on intelligent robots and systems (IROS)(IEEE Cat. No. 04CH37566), vol. 3. IEEE, 2004, pp. 2149–2154.

- [23] D. Shreiner et al., OpenGL programming guide: the official guide to learning OpenGL, versions 3.0 and 3.1. Pearson Education, 2009.

- [24] M. Smith, ABAQUS/Standard User’s Manual, Version 6.9. United States: Dassault Systèmes Simulia Corp, 2009.

- [25] T. Erez, Y. Tassa, and E. Todorov, “Simulation tools for model-based robotics: Comparison of bullet, havok, mujoco, ode and physx,” in 2015 IEEE international conference on robotics and automation (ICRA). IEEE, 2015, pp. 4397–4404.

- [26] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical image computing and computer-assisted intervention. Springer, 2015, pp. 234–241.

- [27] M. Garland and P. S. Heckbert, “Surface simplification using quadric error metrics,” in Proceedings of the 24th annual conference on Computer graphics and interactive techniques, 1997, pp. 209–216.

- [28] P. Cignoni, M. Callieri, M. Corsini, M. Dellepiane, F. Ganovelli, G. Ranzuglia, et al., “Meshlab: an open-source mesh processing tool,” in Eurographics Italian chapter conference, vol. 2008. Salerno, Italy, 2008, pp. 129–136.