\sys: A Prompt Programming Language for Harmonious Integration of Programs and Large Language Model Prompts

Abstract

Large Language Models (LLMs) have become increasingly capable of handling diverse tasks with the aid of well-crafted prompts and integration of external tools, but as task complexity rises, the workflow involving LLMs can be complicated and thus challenging to implement and maintain. To address this challenge, we propose APPL, A Prompt Programming Language that acts as a bridge between computer programs and LLMs, allowing seamless embedding of prompts into Python functions, and vice versa. APPL provides an intuitive and Python-native syntax, an efficient parallelized runtime with asynchronous semantics, and a tracing module supporting effective failure diagnosis and replaying without extra costs. We demonstrate that APPL programs are intuitive, concise, and efficient through three representative scenarios: Chain-of-Thought with self-consistency (CoT-SC), ReAct tool use agent, and multi-agent chat. Experiments on three parallelizable workflows further show that APPL can effectively parallelize independent LLM calls, with a significant speedup ratio that almost matches the estimation. 111Project website can be found in https://github.com/appl-team/appl

1 Introduction

Large Language Models (LLMs) have demonstrated remarkable capabilities in understanding and generating texts across a broad spectrum of tasks (Raffel et al., 2020; Brown et al., 2020; Chowdhery et al., 2023; Touvron et al., 2023a; b; OpenAI, 2023). Their success has contributed to an emerging trend of regarding LLMs as novel operating systems (Andrej Karpathy, 2023; Ge et al., 2023; Packer et al., 2023). Compared with traditional computer systems that precisely execute structured and well-defined programs written in programming languages, LLMs can be guided through flexible natural language prompts to perform tasks that are beyond the reach of traditional programs.

Meanwhile, there is a growing interest in harnessing the combined strengths of LLMs and conventional computing to address more complex challenges. Approaches like tree-of-thoughts (Yao et al., 2024), RAP (Hao et al., 2023) and LATS (Zhou et al., 2023) integrate search-based algorithms with LLM outputs, showing improved outcomes over using LLMs independently. Similarly, the creation of semi-autonomous agents such as AutoGPT (Richards, 2023) and Voyager (Wang et al., 2023) demonstrates the potential of LLMs to engage with external tools, like interpreters, file systems, and skill libraries, pushing the boundaries of what integrated systems can achieve. However, as task complexity rises, the workflow involving LLMs becomes more intricate, requiring more complex prompts guiding LLMs and more sophisticated programs implementing the workflow, which are both challenging to implement and maintain.

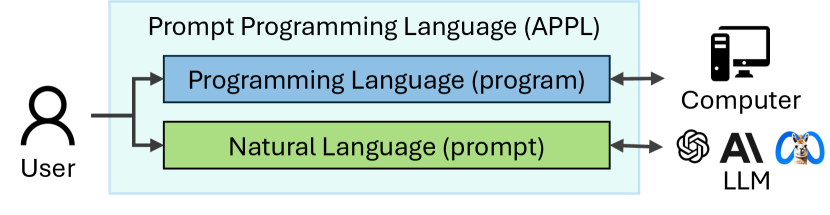

To address these challenges, as illustrated in Figure 1, we propose \sys, A Prompt Programming Language for harmonious integration of conventional programming and LLMs. More specifically, \sys’s key features include:

(1) Readability and maintainability via seamless integration with Python. As shown in Figure 2, \sys seamlessly embeds natural language prompts to Python programs, maintaining prompts’ readability while inheriting modularity, reusability, and dynamism from the host programming language.

(2) Automatic parallelization via asynchronous computation. \sys schedules LLM calls asynchronously, leveraging potential independence among them to facilitate efficient parallelization. This offloads the burden of users to manage synchronization manually, with almost no extra work as shown in Figure 2(a). Its effectiveness is validated in Section 5.2 where experiments show significant accelerations for three popular applications. (3) Smooth transition between structured data and natural language. \sys enables seamlessly converting program objects into prompts (namely promptify). For example, promptify-ing Python functions converts them into LLM-understandable tool specifications. In another direction, based on instructor (Liu, 2023), \sys enables the specification of output structures for LLMs, such as ensuring outputs conform to Pydantic’s BaseModel (Colvin et al., ). When tool specifications are provided, they can be used to constrain the LLM outputs to be a valid tool call.

strives to be a powerful, yet intuitive extension of Python and leverages the unique capabilities of LLMs while being compatible with Python’s syntax and ecosystem. It empowers developers to easily build sophisticated programs that harness the power of LLMs in a manner that’s both efficient and accessible.

2 Related Work

Features LMQL (Beurer-Kellner et al., 2023) Guidance (GuidanceAI, 2023) SGLang (Zheng et al., 2023) \sys Language Syntax Python-like Python Python Python Parallelization and Asynchrony Sub-optimal Manual Auto Auto Prompt Capturing Auto Manual Manual Auto Prompt Context Passing Limited Manual Manual Auto Promptify Tool Manual Manual Manual Auto Prompt Compositor No No No Yes Generation Output Retrieval Template variables str-index str-index Python-native Generation without Side-effects No No No Yes Failure Recovery No No No Yes

LLM programs. The programs involving LLMs continue evolving sophisticated to tackle more challenging tasks, from few-shot prompts (Brown et al., 2020) to elaborated thought process (Wei et al., 2022; Ning et al., 2023; Yao et al., 2024; Besta et al., 2023), from chatbot (OpenAI, 2022) to tool-use agents (Nakano et al., 2021; Ahn et al., 2022; Liu et al., 2022; Liang et al., 2023; Richards, 2023; Patil et al., 2023; Wang et al., 2023; Qin et al., 2023), virtual emulators (Ruan et al., 2024), operating systems (Packer et al., 2023), and multi-agent systems (Park et al., 2023; Hong et al., 2023; Qian et al., 2023). The increasing complexity necessitates powerful frameworks to facilitate the development of those programs.

LLM software stack. On the low level, LLM services are provided by high-performance backends (Gerganov, 2023; HuggingFace, 2023; Kwon et al., 2023) as APIs like OpenAI APIs (OpenAI, ). Building on this, modules with specific functionality are invented, like unifying APIs (BerriAI, 2023), constraining LLM outputs (Willard & Louf, 2023; Liu, 2023) and parallelizing function calls (Kim et al., 2023), where \sys utilizes some of them as building blocks. LMQL (Beurer-Kellner et al., 2023), Guidance (GuidanceAI, 2023), and SGLang (Zheng et al., 2023) are languages similar to \sys. These languages provide developers with precise tools for crafting sophisticated model interactions, enabling finer-grained control over LLM behaviors. We compare \sys with them by case studies in Section 5 and summarize the comparison in Table 1, where \sys provides more automatic supports for prompting and parallelizing LLM calls to streamline the whole workflow. There are also higher-level general-purpose frameworks (Okuda & Amarasinghe, 2024; Prefect, 2023; Khattab et al., 2023; Chase, 2022) and agent-specific frameworks (Team, 2023; Li et al., 2023; Wu et al., 2023). The pipelines in these frameworks can also be implemented using prompt languages like \sys.

3 Language Design and Features

3.1 Design Principles of \sys

(1) Readability and Flexibility. Natural language provides a readable and understandable human-LLM interface, while programming languages enable flexible controls, clear structures, modularization, reusability, and external tools. \sys aims to utilize both strengths.

(2) Easy Parallelization. Parallelization is a critical performance optimization for LLM text generation (Yu et al., 2022; Kwon et al., 2023). \sys aims to provide transparent support for parallelization with almost no extra code introduced.

(3) Convenient Conversion. \sys aims to facilitate easy conversion between structured data (including codes) used in programming and the natural language used in LLMs.

3.2 \sys Syntax and Semantics

As illustrated in the \sys program of Figure 2(a), the syntax and semantics of \sys are extended from Python with slight modifications to integrate natural language prompts and LLMs calls into the traditional programming constructs deeply.

functions are the fundamental building blocks of \sys, denoted by the @ppl decorator. Each \sys function has an implicit context to store prompt-related information. Within \sys functions, the expression statements are interpreted as “interactions” with the context, where the typical behavior is to append strings to the prompt.

Expression statements are standalone Python statements formed by an expression, for example, "str", while an assignment (e.g., a = "str") is not. Their evaluation result is handled differently according to the environment. For example, it causes no extra effect in standard Python while the result is displayed in the interactive environment in Jupyter. Similarly, \sys provides an environment where each \sys function contains a scratchpad of prompts (i.e. context), and the values of expression statements will be converted to prompts (via promptify) and appended to the prompt scratchpad. For example, a standalone string as line 7 or 8 in Figure 2(a) will be appended to the prompt scratchpad.

LLM generations (gen), when being called, implicitly use the accumulated prompt in the context of the \sys function. A special case is the standalone f-string, which is split into parts and they are captured as prompts in order, detailed in Appendix A.1. This provides a more readable way to combine prompts and LLM generations in one line. For example, line 10 of Figure 2(a) means start generation by first adding the prefix “The name of the author is ” to the prompt, which matches human intuition.

Context managers modify how prompt statements are accumulated to the prompt in the context. \sys provides two types of context managers:

(1) Role Changers (such as AIRole in Figure 2(a)) are used in chats to specify the owner of the message, and can only be used as the outmost one.

(2) Prompt Compositors (such as NumberedList in Figure 4) specify the delimiter, indention, indexing format, prolog, and epilog. These compositors allow for meticulously structured finer-grained prompts in hierarchical formatting through native Python syntax, greatly enhancing flexibility without losing readability.

Definition is a special base class for custom definitions. As shown in Figure 4, once declared, Definitions can be easily referred to in the f-string, or used to create an instance by completing its description. They are useful for representing concepts that occur frequently in the prompt and support varying the instantiation in different prompts. See more usage in Appendix D when building a long prompt.

3.3 \sys Runtime

runtime context. Similar to Python’s runtime context that contains local and global variables (locals() and globals()), the context of \sys functions contains local and global prompts that can be accessed via records() and convo(). The example in Figure 3 illustrates how the prompts in the context changed during the execution.

(a) new

(a) new

(b) copy

(b) copy

(c) same

(c) same

(d) resume

(d) resume

Context passing across functions.

When a \sys function (caller) calls another \sys function (callee), users might expect different behaviors for initializing the callee’s context depending on specific use cases.

\sys provides four ways as shown in Figure 5:

(1) new: Creates a clean context. This is the default and most common way.

(2) copy: Creates a copy of the caller’s context. This is similar to “call by value” where the modification to the copy will not influence the original context. This is useful for creating independent and asynchronize LLM calls as shown in Figure 2(a).

(3) same: Uses the same context as the caller. This is similar to “call by reference” where the modifications are directly applied to the original context.

(4) resume: Resumes callee’s context from the last run. This makes the function stateful and suitable for building interactive agents with history (see Figure 9(b) for an example).

When decomposing a long prompt into smaller modules, both new and same can be used as shown in Figure 11, but the default way (new) should be prioritized when possible.

Asynchronous semantics. To automatically leverage potential independence among LLM calls and achieve efficient parallelization, we borrow the idea of the asynchronous semantics from PyTorch (Paszke et al., 2019). Asynchronous semantics means the system can initiate operations that run independently of the main execution thread, allowing for parallelism and often improving performance. In \sys, LLM calls (gen) are asynchronous, which means the main thread proceeds without being blocked by gen until accessing the generation results is necessary. See implementation details in Section 4.2.

Tool calling. Tool calling is an indispensable ability for LLM agents to interact with external resources. To streamline the process of providing tools to LLMs, \sys automatically creates tool specifications from Python functions by analyzing their docstrings and signatures (detailed in Appendix A.3). As shown in Figure 6, the Python functions is_lucky and search can be directly utilized as tools for LLMs. Therefore, thanks to the well-written docstrings, the functions from Python’s rich ecosystem can be integrated into LLMs as tools with minimal effort. Moreover, \sys automatically converts LLMs’ outputs as function calls that can be directly executed to obtain results.

Tracing and caching. \sys supports tracing \sys functions and LLM calls to facilitate users to understand and debug the program executions. The trace is useful for reproducing (potentially partial) execution results by loading cached responses of the LLM calls, which enables failure recovery and avoids the extra costs of resending these calls. This also unlocks the possibility of debugging one LLM call conveniently. Users can also visualize the program execution to analyze the logic, expense, and runtime of the program. \sys provides two modes of tracing, namely strict and non-strict modes, corresponding to different reproduction requirements (perfect or statistical reproduction). We further explain the implementation details for handling the asynchronous semantics in Appendix C.1, and demonstrate the trace visualization in Appendix C.2.

4 Implementation

We elaborate on the implementation details of \sys, including compilation and asynchronous execution.

4.1 Compilation

To provide the runtime context for \sys functions more smoothly and make it transparent to users, we choose to compile (more specifically, transpile) the Python source code to insert the context into the code. We use Python’s ast package to parse the original function as AST, mainly apply the following code transformations on the AST, and compile back from the modified AST to a Python function.

-

•

Context management. We use the keyword _ctx to represent the context of the function, which is inserted in both the definition of \sys functions and the function calls that need the context. The functions that require the context as inputs are annotated by the attribute __need_ctx__, including all functions introduced in Section 3.2: \sys functions, gen and context manager functions.

-

•

Capture expression statements. We add an execution function as a wrapper for all expression statements within the \sys function and let them “interact” with the context. The execution behavior differs based on the value types, where the behavior for the most common str type is appending it to the prompt of the context. For a standalone f-string, we split it into several statements to let them be “executed” in order so that gen functions in the f-string can use the text before them. This procedure is detailed in Appendix A.1.

4.2 Asynchronous Execution and Future Objects

To implement the asynchronous semantics, we adopt the concept of Future introduced in the Python standard library concurrent.futures, which represents the asynchronous execution of a callable. When the result of Future is accessed, it will wait for the asynchronous execution to finish and synchronize the result.

We introduce StringFuture, which represents a string object, but its content may not be ready yet. StringFutures behave almost identically to the normal str objects in Python, so that users can use them as normal strings transparently and enjoy the benefits of asynchronous execution. Meanwhile, the StringFuture delays its synchronization when possible, ideally only synchronizing when the str method is called.

Formally, we define StringFuture to be a list of StringFutures: . Without loss of generality, we can regard a normal string as an already synchronized StringFuture. A StringFuture is synchronized when str() is called, and it collapses to a normal string by waiting and concatenating the results of str():

where operation means (string) concatenation. Such representation enables delaying the concatenation () of two StringFutures and as concatenating () two lists:

For other methods that cannot be delayed, like len(), it falls back to materializing to a normal string and then calls the counterpart method.

Similarly, we introduce BooleanFuture to represent a boolean value that may not be synchronized yet, which can be used to represent the comparison result between two StringFutures. A BooleanFuture is synchronized only when bool(B) is called, which enables using future objects in control flows.

5 Case Studies and Language Comparisons

In this section, we compare \sys with other prompt languages including LMQL (Beurer-Kellner et al., 2023), Guidance (GuidanceAI, 2023), and SGLang (Zheng et al., 2023) (whose syntax is mostly derived from Guidance) through usage scenarios, including parallelized LLM calls like the chain of thoughts with self-consistency (CoT-SC) (Wang et al., 2022), tool use agent like ReAct (Yao et al., 2023), and multi-agent chat. We further include a re-implementation of a long prompt (more than 1000 tokens) from ToolEmu (Ruan et al., 2024) using \sys in Appendix D, demonstrating the usage of \sys functions and prompt compositors for modularizing and structuring long prompts.

5.1 Chain of Thoughts with Self-Consistency (CoT-SC)

As shown in Figure 7, when implementing CoT-SC, \sys exhibits its conciseness and clarity compared with LMQL, SGLang, and Guidance. We mainly compare them from the following aspects:

Parallization. \sys performs automatic parallelization while being transparent to the programmer. In particular, since the returned value need not be immediately materialized, the different branches can execute concurrently. SGLang allows for parallelization between subqueries by explicitly calling the lm.fork() function. LMQL employs a conservative parallelization strategy, which waits for an async function immediately after it’s called. To support the same parallelization strategy, we use the asyncio library to manually launch LMQL queries in parallel at the top level. Guidance does not include asynchrony or parallelization as a core feature.

Generation and output retrieval. For LLM generation calls, \sys, SGLang, and Guidance use a Python function while LMQL uses inline variables (with annotations), which is less flexible for custom generation arguments. For the results of generations, both SGLang and Guidance store them as key-value pairs in a dictionary associated with the context object and are indexed with the assigned key when required. LMQL accesses the result by the declared inline variable, but the variable is not Python-native. In \sys, gen is an independent function call, whose return value can be assigned to a Python variable , which is Python-native and allows for better interoperability with IDEs. Moreover, \sys separates the prompt reading and writing for the generation. Users have the option to write generation results back to the prompt or not (called without side-effects in Table 1). This allows a simple replication of LLM generation calls sharing the same prompt. Otherwise, explicit copying or rebuilding of the prompt would be necessary for such requests.

Context management and prompt capturing. Both SGLang and Guidance need to explicitly manage context objects (s and lm) as highlighted as red in Figure 7. The prompts are captured manually using the += operation. The branching of the context is implemented differently, where SGLang uses the explicit fork method and Guidance returns a new context object in the overridden += operation. In contrast, \sys automatically captures standalone expression statements into the prompt context, similar to LMQL which captures standalone strings. The context management is also done automatically for nested function calls in \sys and LMQL, where \sys four patterns new, copy, same, and resume are supported in \sys (see Figures 5 and 2(a)) while LMQL only supports new and copy.

5.2 Parallelized LLM Calls

We validated the effectiveness of parallelizing LLM calls in \sys in three tasks: question answering with CoT-SC, content generation with skeleton-of-thought (SoT) (Ning et al., 2023), and hierarchical summarization with MemWalker (Chen et al., 2023). As shown in Table 2, the parallel version achieves significant speedup across different branching patterns, and the actual speedup ratios almost match the estimated ones. More experimental details can be found in Appendix B.

Time(s) CoT-SC (Wang et al., 2022) SoT (Ning et al., 2023) MemWalker (Chen et al., 2023) GPT-3.5 LLAMA-7b GPT-3.5 LLAMA-7b GPT-3.5 LLAMA-7b Sequential 27.6 17.0 227.2 272.3 707.6 120.6 Parallel 2.9 1.8 81.2 85.9 230.3 65.4 (estimated speedup*) 10 4.3 3.7 3.8 Speedup 9.49 9.34 2.79 3.17 3.07 1.84

5.3 ReAct Tool Use Agent

We use the ReAct (Yao et al., 2023) tool-use agent as an example to compare the accessibility of tool calls in different languages. As shown in Figure 8(a), the major workflow of ReAct unfolds over several cycles, each comprising three stages: (1) generating “thoughts” derived from existing information to steer subsequent actions; (2) selecting appropriate actions (tool calls) to execute; (3) retrieving the results of executing the actions. These results then serve as observations and influence the subsequent cycle.

LLM Tool calling can be divided into three steps: 1) encoding the external tools as specifications that are understandable by LLMs, 2) parsing generated tool calls, and 3) executing the tool calls. \sys provides out-of-the-box support for these steps based on the OpenAI’s API (OpenAI, ). As shown in Figure 8(a), documented Python functions like is_lucky defined in Figure 6 can directly be used as the tools argument without manual efforts. Differently, in Figure 8(b), Guidance (similarly for SGLang and LMQL) can generate tool calls and parse structured arguments via its select and other constraint primitives, but the tool specifications and format constraints need to be written manually. Guidance provides another way to automate this process but with limited support that only works for functions with string-typed arguments.

5.4 Complex context management: Multi-agent chat bot

We consider a simplified yet illustrative scenario of agent communities (Park et al., 2023; Hong et al., 2023; Qian et al., 2023), where two agents chat with each other, as demonstrated in Figure 9(c). During the chat, each agent receives messages from the counterpart, maintains their own chat history, and makes responses. \sys provides flexible context-passing methods for implementing such agents and we illustrate two ways in Figure 9 as examples.

(a) Explicit chat history: As shown in Figure 9(a), the conversation history is explicitly stored in self._history. The history is first initialized in the _setup function. In the chat function, the history is first retrieved and written into a new prompt context. At the end, self._history is refreshed with records() that represents updated conversation with new messages and responses.

(b) resume the context: As shown in Figure 9(b), each call to the chat method will resume its memorized context, allowing continually appending the conversation to the context. The context is initialization in the first call by copying the caller’s context. This approach provides a clear, streamlined, concise way to manage its own context.

5.5 Code Succinctness Comparison

As demonstrated in Figure 7, \sys is more concise than other compared languages to implement the Cot-SC algorithm. Quantitatively, we further introduce a new metric AST-size to indicate the succinctness of programs written in different languages, which counts the number of nodes in the abstract syntax tree (AST) of the code. For Python programs, we can obtain their AST using the standard library with ast.parse.

| \sys | LMQL | SGLang/Guidance | |||

| Tasks | AST-size | AST-size | vs. \sys | AST-size | vs. \sys |

| CoT-SC | 35 | 57 | 1.63 | 61 | 1.74 |

| ReAct | 61 | not support directly | 134 | 2.20 | |

| SoT | 59 | 120 | 2.03 | 107 | 1.81 |

| MemWalk | 36 | 64 | 1.78 | 60 | 1.67 |

As shown in Table 3, LMQL, SGLang, and Guidance need about twice AST nodes than \sys for implementing the same tasks, highlighting the succinctness of \sys.

6 Conclusion

This paper proposes APPL, a Prompt Programming Language that seamlessly integrates conventional programs and natural language prompts. It provides an intuitive and Python-native syntax, an efficient runtime with asynchronous semantics and effective parallelization, and a tracing module supporting effective failure diagnosis and replay. In the future, with the continuous advancement of generative AIs, designing new languages and efficient runtime systems that enhance AI with programmability and facilitate AI-based application development will become increasingly important.

Acknowledgement

We thank NingNing Xie, Chenhao Jiang, Shiwen Wu, Jialun Lyu, Shunyu Yao, Jiacheng Yang, the Xujie group and the Maddison group for their helpful discussions or feedback on the project. We thank Microsoft Accelerate Foundation Models Research (AFMR) for providing us with Azure credits for evaluating LLMs. This work was supported, in part, by Individual Discovery Grants from the Natural Sciences and Engineering Research Council of Canada, and the Canada CIFAR AI Chair Program.

References

- Ahn et al. (2022) Michael Ahn, Anthony Brohan, Noah Brown, Yevgen Chebotar, Omar Cortes, Byron David, Chelsea Finn, Chuyuan Fu, Keerthana Gopalakrishnan, Karol Hausman, et al. Do as i can, not as i say: Grounding language in robotic affordances. arXiv preprint arXiv:2204.01691, 2022.

- Andrej Karpathy (2023) Andrej Karpathy. LLM OS. Tweet, November 2023. URL https://twitter.com/karpathy/status/1723140519554105733. Accessed: 2024-03-22.

- BerriAI (2023) BerriAI. litellm. https://github.com/BerriAI/litellm, 2023.

- Besta et al. (2023) Maciej Besta, Nils Blach, Ales Kubicek, Robert Gerstenberger, Lukas Gianinazzi, Joanna Gajda, Tomasz Lehmann, Michal Podstawski, Hubert Niewiadomski, Piotr Nyczyk, et al. Graph of thoughts: Solving elaborate problems with large language models. arXiv preprint arXiv:2308.09687, 2023.

- Beurer-Kellner et al. (2023) Luca Beurer-Kellner, Marc Fischer, and Martin Vechev. Prompting is programming: A query language for large language models. Proceedings of the ACM on Programming Languages, 7(PLDI):1946–1969, 2023.

- Brown et al. (2020) Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901, 2020.

- Chase (2022) Harrison Chase. LangChain, October 2022. URL https://github.com/langchain-ai/langchain.

- Chen et al. (2023) Howard Chen, Ramakanth Pasunuru, Jason Weston, and Asli Celikyilmaz. Walking down the memory maze: Beyond context limit through interactive reading. arXiv preprint arXiv:2310.05029, 2023.

- Chowdhery et al. (2023) Aakanksha Chowdhery, Sharan Narang, Jacob Devlin, Maarten Bosma, Gaurav Mishra, Adam Roberts, Paul Barham, Hyung Won Chung, Charles Sutton, Sebastian Gehrmann, et al. Palm: Scaling language modeling with pathways. Journal of Machine Learning Research, 24(240):1–113, 2023.

- (10) Samuel Colvin, Eric Jolibois, Hasan Ramezani, Adrian Garcia Badaracco, Terrence Dorsey, David Montague, Serge Matveenko, Marcelo Trylesinski, Sydney Runkle, David Hewitt, and Alex Hall. Pydantic. URL https://docs.pydantic.dev/latest/.

- Ge et al. (2023) Yingqiang Ge, Yujie Ren, Wenyue Hua, Shuyuan Xu, Juntao Tan, and Yongfeng Zhang. Llm as os (llmao), agents as apps: Envisioning aios, agents and the aios-agent ecosystem. arXiv preprint arXiv:2312.03815, 2023.

- Gerganov (2023) Georgi Gerganov. llama.cpp. https://github.com/ggerganov/llama.cpp, 2023.

- GuidanceAI (2023) GuidanceAI. Guidance. https://github.com/guidance-ai/guidance, 2023.

- Hao et al. (2023) Shibo Hao, Yi Gu, Haodi Ma, Joshua Jiahua Hong, Zhen Wang, Daisy Zhe Wang, and Zhiting Hu. Reasoning with language model is planning with world model. arXiv preprint arXiv:2305.14992, 2023.

- Hong et al. (2023) Sirui Hong, Mingchen Zhuge, Jonathan Chen, Xiawu Zheng, Yuheng Cheng, Jinlin Wang, Ceyao Zhang, Zili Wang, Steven Ka Shing Yau, Zijuan Lin, et al. Metagpt: Meta programming for multi-agent collaborative framework. In The Twelfth International Conference on Learning Representations, 2023.

- HuggingFace (2023) HuggingFace. Text generation inference. https://github.com/huggingface/text-generation-inference, 2023.

- Khattab et al. (2023) Omar Khattab, Arnav Singhvi, Paridhi Maheshwari, Zhiyuan Zhang, Keshav Santhanam, Sri Vardhamanan, Saiful Haq, Ashutosh Sharma, Thomas T Joshi, Hanna Moazam, et al. Dspy: Compiling declarative language model calls into self-improving pipelines. arXiv preprint arXiv:2310.03714, 2023.

- Kim et al. (2023) Sehoon Kim, Suhong Moon, Ryan Tabrizi, Nicholas Lee, Michael W Mahoney, Kurt Keutzer, and Amir Gholami. An llm compiler for parallel function calling. arXiv preprint arXiv:2312.04511, 2023.

- Kwon et al. (2023) Woosuk Kwon, Zhuohan Li, Siyuan Zhuang, Ying Sheng, Lianmin Zheng, Cody Hao Yu, Joseph Gonzalez, Hao Zhang, and Ion Stoica. Efficient memory management for large language model serving with pagedattention. In Proceedings of the 29th Symposium on Operating Systems Principles, pp. 611–626, 2023.

- Li et al. (2023) Guohao Li, Hasan Abed Al Kader Hammoud, Hani Itani, Dmitrii Khizbullin, and Bernard Ghanem. Camel: Communicative agents for” mind” exploration of large scale language model society. arXiv preprint arXiv:2303.17760, 2023.

- Liang et al. (2023) Jacky Liang, Wenlong Huang, Fei Xia, Peng Xu, Karol Hausman, Brian Ichter, Pete Florence, and Andy Zeng. Code as policies: Language model programs for embodied control. In 2023 IEEE International Conference on Robotics and Automation (ICRA), pp. 9493–9500. IEEE, 2023.

- Liu (2023) Jason Liu. Instructor. https://github.com/jxnl/instructor, 2023.

- Liu et al. (2022) Ruibo Liu, Jason Wei, Shixiang Shane Gu, Te-Yen Wu, Soroush Vosoughi, Claire Cui, Denny Zhou, and Andrew M Dai. Mind’s eye: Grounded language model reasoning through simulation. arXiv preprint arXiv:2210.05359, 2022.

- Nakano et al. (2021) Reiichiro Nakano, Jacob Hilton, Suchir Balaji, Jeff Wu, Long Ouyang, Christina Kim, Christopher Hesse, Shantanu Jain, Vineet Kosaraju, William Saunders, et al. Webgpt: Browser-assisted question-answering with human feedback. arXiv preprint arXiv:2112.09332, 2021.

- Ning et al. (2023) Xuefei Ning, Zinan Lin, Zixuan Zhou, Zifu Wang, Huazhong Yang, and Yu Wang. Skeleton-of-thought: Large language models can do parallel decoding. In The Twelfth International Conference on Learning Representations, 2023.

- Okuda & Amarasinghe (2024) Katsumi Okuda and Saman Amarasinghe. Askit: Unified programming interface for programming with large language models. In 2024 IEEE/ACM International Symposium on Code Generation and Optimization (CGO), pp. 41–54. IEEE, 2024.

- (27) OpenAI. Openai api. https://platform.openai.com/docs/api-reference.

- OpenAI (2022) OpenAI. Introducing chatgpt, November 2022. URL https://openai.com/blog/chatgpt. Accessed: 2024-03-22.

- OpenAI (2023) OpenAI. Gpt-4 technical report, 2023.

- Packer et al. (2023) Charles Packer, Vivian Fang, Shishir G Patil, Kevin Lin, Sarah Wooders, and Joseph E Gonzalez. Memgpt: Towards llms as operating systems. arXiv preprint arXiv:2310.08560, 2023.

- Pang et al. (2022) Richard Yuanzhe Pang, Alicia Parrish, Nitish Joshi, Nikita Nangia, Jason Phang, Angelica Chen, Vishakh Padmakumar, Johnny Ma, Jana Thompson, He He, and Samuel Bowman. QuALITY: Question answering with long input texts, yes! In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 5336–5358, Seattle, United States, July 2022. Association for Computational Linguistics. URL https://aclanthology.org/2022.naacl-main.391.

- Park et al. (2023) Joon Sung Park, Joseph C O’Brien, Carrie J Cai, Meredith Ringel Morris, Percy Liang, and Michael S Bernstein. Generative agents: Interactive simulacra of human behavior. arXiv preprint arXiv:2304.03442, 2023.

- Paszke et al. (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems, 32, 2019.

- Patil et al. (2023) Shishir G Patil, Tianjun Zhang, Xin Wang, and Joseph E Gonzalez. Gorilla: Large language model connected with massive apis. arXiv preprint arXiv:2305.15334, 2023.

- Prefect (2023) Prefect. marvin. https://github.com/PrefectHQ/marvin, 2023.

- Qian et al. (2023) Chen Qian, Xin Cong, Wei Liu, Cheng Yang, Weize Chen, Yusheng Su, Yufan Dang, Jiahao Li, Juyuan Xu, Dahai Li, Zhiyuan Liu, and Maosong Sun. Communicative agents for software development, 2023.

- Qin et al. (2023) Yujia Qin, Shihao Liang, Yining Ye, Kunlun Zhu, Lan Yan, Yaxi Lu, Yankai Lin, Xin Cong, Xiangru Tang, Bill Qian, et al. Toolllm: Facilitating large language models to master 16000+ real-world apis. arXiv preprint arXiv:2307.16789, 2023.

- Raffel et al. (2020) Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. The Journal of Machine Learning Research, 21(1):5485–5551, 2020.

- Richards (2023) Toran Bruce Richards. Auto-gpt: Autonomous artificial intelligence software agent. https://github.com/Significant-Gravitas/Auto-GPT, 2023. URL https://github.com/Significant-Gravitas/Auto-GPT. Initial release: March 30, 2023.

- Ruan et al. (2024) Yangjun Ruan, Honghua Dong, Andrew Wang, Silviu Pitis, Yongchao Zhou, Jimmy Ba, Yann Dubois, Chris J Maddison, and Tatsunori Hashimoto. Identifying the risks of lm agents with an lm-emulated sandbox. In The Twelfth International Conference on Learning Representations, 2024.

- Team (2023) XAgent Team. Xagent: An autonomous agent for complex task solving, 2023.

- Touvron et al. (2023a) Hugo Touvron, Thibaut Lavril, Gautier Izacard, Xavier Martinet, Marie-Anne Lachaux, Timothée Lacroix, Baptiste Rozière, Naman Goyal, Eric Hambro, Faisal Azhar, et al. Llama: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971, 2023a.

- Touvron et al. (2023b) Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava, Shruti Bhosale, et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023b.

- Wang et al. (2023) Guanzhi Wang, Yuqi Xie, Yunfan Jiang, Ajay Mandlekar, Chaowei Xiao, Yuke Zhu, Linxi Fan, and Anima Anandkumar. Voyager: An open-ended embodied agent with large language models. arXiv preprint arXiv:2305.16291, 2023.

- Wang et al. (2022) Xuezhi Wang, Jason Wei, Dale Schuurmans, Quoc V Le, Ed H Chi, Sharan Narang, Aakanksha Chowdhery, and Denny Zhou. Self-consistency improves chain of thought reasoning in language models. In The Eleventh International Conference on Learning Representations, 2022.

- Wei et al. (2022) Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed Chi, Quoc V Le, Denny Zhou, et al. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35:24824–24837, 2022.

- Willard & Louf (2023) Brandon T Willard and Rémi Louf. Efficient guided generation for large language models. arXiv e-prints, pp. arXiv–2307, 2023.

- Wu et al. (2023) Qingyun Wu, Gagan Bansal, Jieyu Zhang, Yiran Wu, Shaokun Zhang, Erkang Zhu, Beibin Li, Li Jiang, Xiaoyun Zhang, and Chi Wang. Autogen: Enabling next-gen llm applications via multi-agent conversation framework. arXiv preprint arXiv:2308.08155, 2023.

- Yao et al. (2023) Shunyu Yao, Jeffrey Zhao, Dian Yu, Nan Du, Izhak Shafran, Karthik R Narasimhan, and Yuan Cao. React: Synergizing reasoning and acting in language models. In The Eleventh International Conference on Learning Representations, 2023.

- Yao et al. (2024) Shunyu Yao, Dian Yu, Jeffrey Zhao, Izhak Shafran, Tom Griffiths, Yuan Cao, and Karthik Narasimhan. Tree of thoughts: Deliberate problem solving with large language models. Advances in Neural Information Processing Systems, 36, 2024.

- Yu et al. (2022) Gyeong-In Yu, Joo Seong Jeong, Geon-Woo Kim, Soojeong Kim, and Byung-Gon Chun. Orca: A distributed serving system for Transformer-Based generative models. In 16th USENIX Symposium on Operating Systems Design and Implementation (OSDI 22), pp. 521–538, 2022.

- Zheng et al. (2023) Lianmin Zheng, Liangsheng Yin, Zhiqiang Xie, Jeff Huang, Chuyue Sun, Cody Hao Yu, Shiyi Cao, Christos Kozyrakis, Ion Stoica, Joseph E Gonzalez, et al. Efficiently programming large language models using sglang. arXiv preprint arXiv:2312.07104, 2023.

- Zhou et al. (2023) Andy Zhou, Kai Yan, Michal Shlapentokh-Rothman, Haohan Wang, and Yu-Xiong Wang. Language agent tree search unifies reasoning acting and planning in language models. arXiv preprint arXiv:2310.04406, 2023.

Appendix

Appendix A Implementation Details

A.1 Standalone f-string

Processing f-strings as prompts is nuanced in terms of semantic correctness. When users write a stand-alone f-string as in Figure 10, they usually expect the program to first insert prefix to the prompt context, then call the generation function, and finally add suffix to the context. However, if the whole f-string is processed as a single prompt statement, the generation call will be triggered first without having prefix added into the context. Therefore, we need to split the standalone f-string expression statement into finer-grained substrings.

A.2 Context Management

Figure 11 is an example of three different prompt context passing methods in \sys, namely new, same, and copy (resume is not shown here). ask_questions is the top-level function, which calls intro, addon, and query. When intro is called, a new empty context is created, and then the local prompt context is returned, which contains only one line of text – “Today is …”. This return value is appended into ask_questions’s prompt context. When calling addon, the same prompt context is shared across addon and ask_questions, so any modification to the prompt context by addon is also effective for ask_questions. For query, it can read the previous prompt history of ask_questions, but any change to the prompt context will not be written back.

A.3 Tool specification

can automatically extract information from the function signature and docstring to create a tool specification in JSON format from a Python function.

The docstring needs to follow parsable formats like Google Style. As shown in Figures 12 and 13, the tool specification in JSON that follows OpenAI’s API format is automatically created by analyzing the signature and docstring of the function is_python.

For APIs that do not support tool calls, users can format tool specifications as plain text with a custom formatted using the information extracted from the Python function.

Appendix B Parallelization Experiment Details

In the parallelization experiments, we test both OpenAI APIs and the locally deployed Llama-7B model running on SGLang’s backend SRT. The OpenAI model we use is gpt-3.5-turbo-1106. For local SRT benchmarking, we use one NVIDIA RTX 3090 with 24 GiB memory.

CoT-SC

Skeleton-of-Thought

Skeleton-of-Thought (SoT) (Ning et al., 2023) is a prompting technique used in generating long paragraphs consisting of multiple points. It first generates the outline and expands each point separately. The generation of each point can be parallelized. The dataset we use contains the top 20 data instances of the vicuna-80 dataset from the original SoT paper. The main part of SoT is shown in Figure 14.

Hierarchical Summarization

MemWalker (Chen et al., 2023) is a new technique addressing the challenge of extremely long contexts. Given a very long text, MemWalker will first divide it into multiple segments, summarize each segment, and further summarize the combination of multiple segments, forming a hierarchical summarization. This summarization will be used in future navigation. The steps without inter-dependency can be parallelized. In this experiment, we use the first 20 articles from the QuALITY dataset (Pang et al., 2022) and compute the average time required to summarize these articles. The summarization has three layers. Each leaf node summarizes 4,000 characters. Every four leaf nodes are summarized into one intermediate node, and two intermediate nodes are summarized into one top-level node. The implementation can be found in Figure 15.

B.1 Speedup Estimation

This section details the calculation of the estimated speedup shown in Table 2. We use Amdahl’s law, a widely accepted method for estimating the performance improvement of parallelization. It can be expressed as where is the estimated speedup, is the proportion of runtime that can be parallelized, is the speedup ratio for the parallelizable portion. In our scenarios, if a program can be divided into stages, each taking up of the total runtime and accelerated by times, the overall estimated speedup is given by

We approximate using the number of requests, which is profiled on the datasets used in the evaluation, and using the number of maximum parallelizable requests (e.g. the number of branches in CoT-SC).

Appendix C Tracing and Failure Recovery

C.1 Implementation

To implement tracing and failure recovery correctly, robustness (the program might fail at any time) and reproducibility must be guaranteed. Reproducibility can be further categorized into two levels, namely strict and non-strict.

Strict reproducibility requires the execution path of the program to be identical when being re-run. This can be achieved given that the main thread of \sys is sequential. We annotate each generation request with a unique identifier and record a SendRequest event when it’s created, and we record a FinishRequest event together with the generation results when it’s finished. Note that SendRequest are in order and FinishRequest can be out of order, so their correspondence depends on the request identifier.

Non-strict reproducibility requires the re-run generations to be statistically correct during the sampling process. For example, multiple requests sharing the same prompt and sampling parameters might have different generation outputs, and during re-run their results are exchangeable, which is still considered correct. Therefore, \sys groups cached requests according to their generation parameters, and each time a cache hit happens, one cached request is popped from the group. A nuance here is that when a generation request is created, its parameters might not yet be ready (e.g. its prompt can be a StringFuture). Therefore we add another type of event called CommitRequest to denote the generation requests are ready and record its parameters.

C.2 Visualization

provides two interfaces to visualize the execution traces for debugging and more analysis: (1) A timeline showing the start and end time of each LLM call (as shown in Figure 16; (2) A detailed hierarchical report showing the information of each function and LLM calls (as shown in Figure 17). The timeline is generated in JSON format and can be read by the tracing interface of the Chrome browser.

Appendix D Structured and Modularized Long Prompt

We re-implemented the long prompt for the agent in ToolEmu (Ruan et al., 2024) using \sys. This long prompt demonstrates how to structure the prompt using \sys functions with definitions for references and prompt compositors for format control.

The corresponding prompt is listed below as plain text. The advantage of plain text is its readability, but its static nature limits its support for dynamic changes including content reusing and multi-version.