[table]capposition=top \newfloatcommandcapbtabboxtable[][\FBwidth]

Synthetic Training for

Monocular Human Mesh Recovery

Abstract

Recovering 3D human mesh from monocular images is a popular topic in computer vision and has a wide range of applications. This paper aims to estimate 3D mesh of multiple body parts (e.g., body, hands) with large-scale differences from a single RGB image. Existing methods are mostly based on iterative optimization, which is very time-consuming. We propose to train a single-shot model to achieve this goal. The main challenge is lacking training data that have complete 3D annotations of all body parts in 2D images. To solve this problem, we design a multi-branch framework to disentangle the regression of different body properties, enabling us to separate each component’s training in a synthetic training manner using unpaired data available. Besides, to strengthen the generalization ability, most existing methods have used in-the-wild 2D pose datasets to supervise the estimated 3D pose via 3D-to-2D projection. However, we observe that the commonly used weak-perspective model performs poorly in dealing with the external foreshortening effect of camera projection. Therefore, we propose a depth-to-scale (D2S) projection to incorporate the depth difference into the projection function to derive per-joint scale variants for more proper supervision. The proposed method outperforms previous methods on the CMU Panoptic Studio dataset according to the evaluation results and achieves comparable results on the Human3.6M body and STB hand benchmarks. More impressively, the performance in close shot images gets significantly improved using the proposed D2S projection for weak supervision, while maintains obvious superiority in computational efficiency.

Index Terms:

3D mesh recovery, synthetic training, 3D-to-2D projection, multi-part estimation.I Introduction

Human 3D mesh recovery (reconstruction/modeling) is a hot and challenging research topic in the computer vision community, which has drawn more and more attention recently. Different from the general pose estimation that detects several 2D or 3D keypoints, the 3D human mesh contains thousands of vertices that can provide subtle cues for understanding human posture, behavior, and interaction. It is essential for many applications such as virtual try-on, human behavior understanding, human-scene interpretation, etc. The early works focused on integrating 3D body information collected from a multi-view or RGB-D camera system [1, 2] which is expensive and not easily available. Recently, more works try to directly recover the 3D human body mesh from a single image [3, 4, 5, 6, 7] to facilitate application. Besides, a more challenging problem has targeted 3D face [8, 9, 10] and hand [11, 12, 13] recovery from monocular face/hand images. Undoubtedly, the next break-through will be the integration of single-body-part 3D mesh recovery tasks within a unified framework, which leads to recover multiple body parts from a single image. We think it is much closer to the actual application scenario. Obviously, due to the image blur, occlusion, and truncation in real scenes, it is much more challenging.

Previous works mainly focus on the single-body-part mesh recovery from monocular images, while research for the multi-part body mesh recovery is still at a relatively early stage. Existing works [14, 15] adopt a multi-stage optimization-based framework. They separately estimate the 2D/3D keypoints of each part using the individual module, and then make iterative optimizations on the statistical body model (e.g.SMPL-X [15], Adam [16]) to fit the keypoint positions. They do not need much training data but rely on the multi-stage optimization strategy. Although the results seem promising, the iterative optimization process is very time-consuming. It takes tens of seconds to process an image, which makes it difficult to meet actual needs. Therefore, in this paper, we explore a neural network to reduce the run-time to milliseconds and keep the accuracy stand.

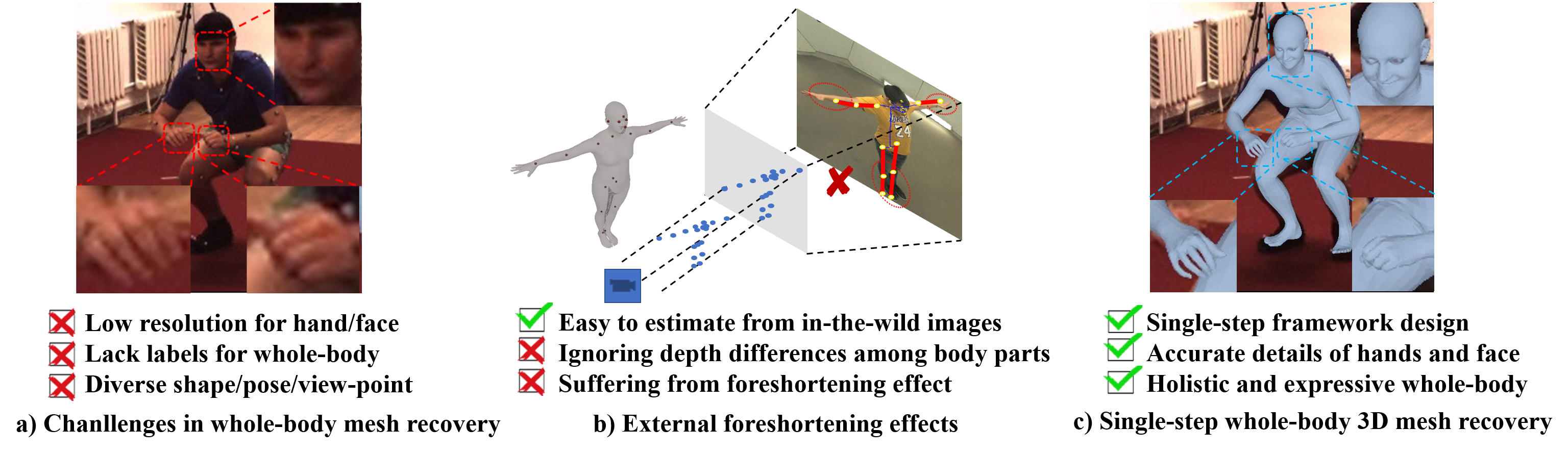

The challenges of applying a learning-based algorithm on this task mainly come from two aspects, data lacking and large scale differences, as shown in Fig. 2 (a). Firstly, we lack data with paired 2D images and 3D annotations for training. Due to the inherent physical size difference, it is difficult to precisely capture large torsos and small body parts at the same time. Therefore, there are merely datasets that contain 2D images with whole-body 3D mesh and motion annotations. In contrast, there are some single-body-part datasets of 3D body pose, hands gesture, and face expression available, which contain rich annotations of the individual parts in constrained experimental environments. In addition, due to the specific capturing configurations, sparse shape/pose space, large scale difference, et al., it is hard to directly combine them for training. To meet the need for data, we need to design a suitable framework that can be trained using the existing un-paired data. Secondly, how to deal with the large scale differences between different human parts in one network is challenging. Generally, we tend to recover the 3D hands/face/body from cropped high-resolution images with a centered target and most single-body-part 3D datasets [17, 18, 19, 20] are collected in this manner. However, as shown in Fig. 2, in general cases, the image patches of small body parts are likely to be blurred, partially invisible, and randomly placed in the whole-body image. The scale issue requires the network to handle different scale characteristics of each part. When recovering the whole-body 3D mesh, the body requires a large respective filed to learn the structure constraints, while the small parts, like hands, require a higher quality of input for complex pose prediction.

To address these problems, we design a multi-branch framework that can be trained using unpaired data in a synthetic training manner. Firstly, to deal with the large-scale differences between human parts, the network needs to provide a diverse receptive field for different body parts. The key idea is to disentangle the estimation of different body properties, such as body pose, shape, and hand pose, in the convenience of separate training. Therefore, we design the framework with two branches that can learn the global and partial representation respectively. The global branch estimates the body shape and camera parameters with the high-level image features, while the partial branch achieves the estimation for small body parts, like 3D hand pose, individually. Benefited from this decoupled architecture design, each individual human part along with the holistic information can be recovered.

Secondly, for the multi-branch framework training, we propose a synthetic training scheme (STS) to generate the paired data. Specifically, for the pose-related estimation in both branches, we employ the whole-body 2D pose predicted from the 2D image as an intermediate representation. In this way, the model gets trained to learn the mapping from 2D pose coordinates to 3D joint rotations. The training sample is supposed to contain a whole-body 2D pose along with the 3D rotation of each body joint. Since most existing datasets only contain 2D/3D pose of individual body parts that are scale different and captured in diverse configurations and shape/pose space, directly combing those individual data will cause weird poses. Instead, we propose STS to integrate them naturally and train the model via exploiting the consistency between the synthetic mesh and the regressed mesh. In this way, the STS helps to solve the lack of paired data for the directly supervised training, and meanwhile increases the diversity of the training data (e.g. full shooting angles, abundant shape/pose space, unified annotations) by combining datasets from multiple resources and generating data with controllable settings.

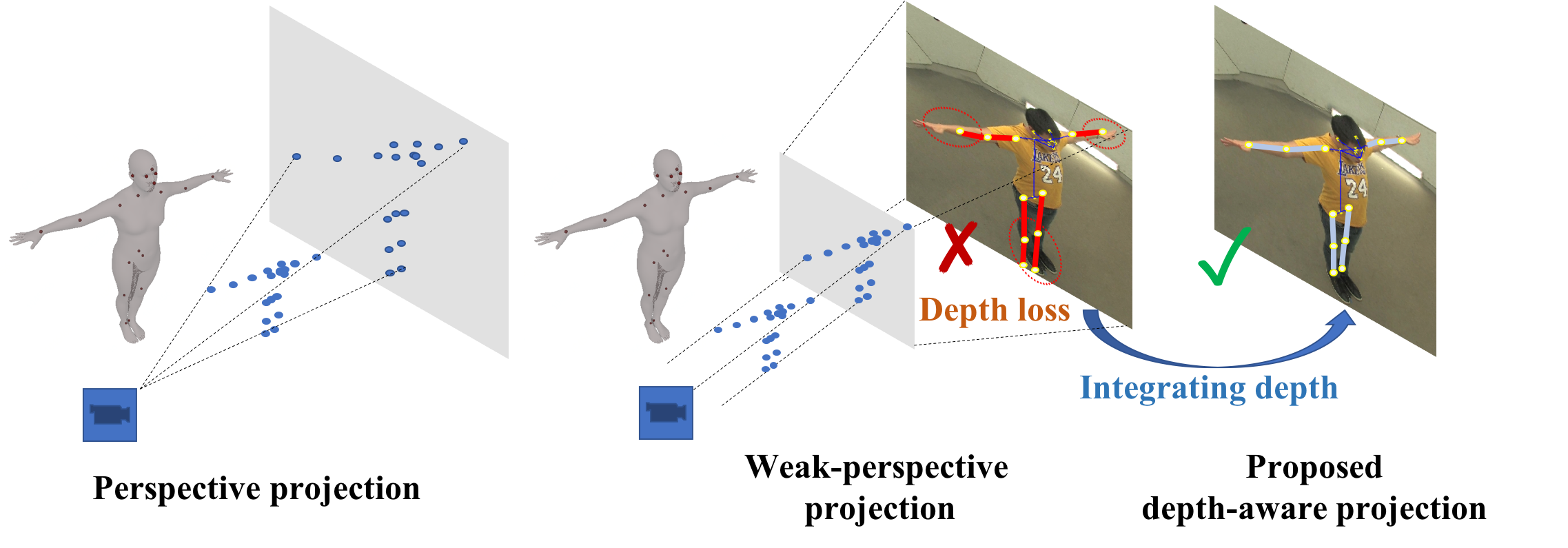

On the other hand, using synthetic data only for training brings the generalization problem. For instance, during training, we use synthetic data as input which are accurate and complete, while during inference the inputs are estimated 2D poses from in-the-wild images which are usually inaccurate and incomplete. The gap between synthetic and real may lead to poor in-the-wild performance. However, in-the-wild 3D datasets are hard to obtain. To solve them, existing methods [4, 5, 7, 9, 14] usually employ in-the-wild 2D pose datasets for better generalization. In this process, due to the in-the-wild 2D images that are un-calibrated, weak-perspective camera model is widely adopted in the 3D-to-2D projection for supervision. Nonetheless, in the weak-perspective model, the depth difference between different body parts is ignored. As shown in Fig. 2 (b), it may cause the failure of dealing with the foreshortening effects and makes the 3D-to-2D projection prone to misalignment. To cope with this problem, we design a D2S (D2S) projection function to calibrate the projection scale of each keypoint separately by involving the depth difference in the projection formulation. It can be regarded as a per-joint version of the weak-perspective model that can deal with the foreshortening effects and rectify the 2D pose supervision.

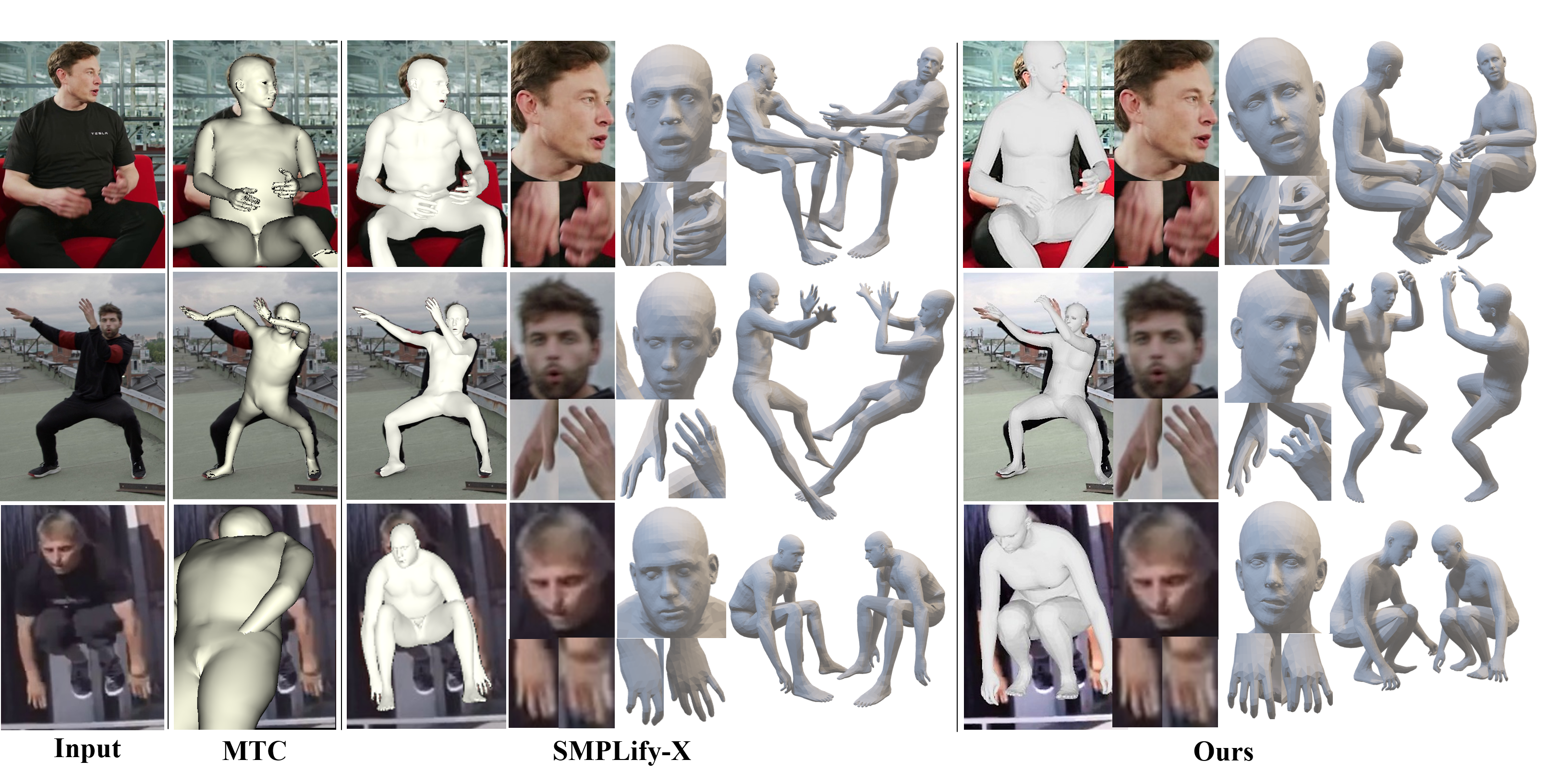

The proposed method is well evaluated both qualitatively and quantitatively. As shown in Fig. 1, compared with previous SOTA methods, MTC [14] and SMPLify-X [15] , the model trained with the proposed synthetic training method shows an obvious advance in the estimation of body pose, shape, and expression. Especially benefited from getting rid of the iterative optimization process, the computational efficiency of our proposed single-step framework gets greatly improved. Our network requires only one forward pass to obtain results, while previous methods [14, 15] need multiple iterative processes to optimize the complex expectation functions. We conduct experiments and evaluations on three benchmarks. State-of-the-art results are achieved on CMU Panoptic Studio dataset [14]. In addition, compared with single part 3D recovery, comparative results are achieved on Human3.6M [21] body and STB hand dataset [17]. Visualization results of the recovered expressive 3D human meshes also demonstrate the effectiveness of the proposed framework.

The main contributions of our paper are listed as follows:

-

•

We design a decoupled framework for monocular human mesh recovery, which can be trained using unpaired data in a synthetic training manner.

-

•

A novel D2S projection is designed to deal with the camera foreshortening effects that the weak-perspective camera ignores in un-calibrated conditions.

- •

II Related Work

Human Pose Estimation. With the development of deep learning, a great improvement has been made in estimating keypoints of human skeleton, hands or face. 2D human pose estimation [22, 23, 24, 25, 26] aims to detect 2D keypoint locations, while 3D human pose estimation [27, 28, 29, 30, 31, 32, 33] further infers the 3D locations from 2D image. 3D human pose estimation has shown the advantage of human spatial representation and there are two kinds of 3D human pose estimation approaches. One is the two-stage scheme that first detects 2D locations then infers 3D locations from the 2D keypoints input [27, 28, 29]. The other scheme directly predicts 3D human pose from images [30, 31, 32]. Human pose estimation can provide keypoint locations for skeleton description, however, the sparse location representation is not enough for understanding humans sufficiently. In our work, we use the 2D/3D keypoints descriptions as the intermediate supervision for our 3D mesh recovery framework.

3D Human Mesh Recovery. To reduce the complexity, many existing approaches regard this problem as recovering parameters of a statistical 3D human model. The 3D body statistical model SMPL [34] has been widely used because of its good performance and free access. Recently, plenty of ConvNet-based parameters recovery methods [4, 6, 35, 36, 37] are proposed. Compared with the optimization-based methods (e.g. SMPLify [3]), ConvNet-based methods have shown obvious advantages in both performance and computational efficiency. However, these learning-based methods are limited by lacking paired data for training. Therefore, many methods focus on developing various loss functions for supervising the model with data available. For example, HMR [4] trained a convolution neural network using unpaired data in a generative adversarial manner. They employ a discriminator to distinguish the rationality of the predicted SMPL parameters using 3D motion capture data. DenseRaC [38] rendered the estimated 3D mesh (textured with the part segmentation map) back to the 2D plane and utilized the DensePose results for supervision. TexturePose [39] utilized the appearance consistency of the person among multi-viewpoints (or even adjacent video frames) for supervision. Kundu et. al. [40] also employed the appearance consistency to make up the lack of paired training data but in a self-supervised manner. They have used the image pairs of the same person to disentangle the foreground human appearance from the background and map it to body mesh for supervising the consistency of vertex-level appearance. Besides, other methods seek to employ disentangled representation for intermediate supervision. For instance, Sun et al. [7] takes the 2D pose as an intermediate representation and develop a skeleton-disentangled representation to tackle the feature coupling problem and reduce the task complexity. More and more researchers realize the importance of 2D poses for better generalization. Choi et. al. [41] proposed a graph neural network to hierarchically regress the 3D human mesh from the 2D pose in a coarse-to-fine manner. In this work, we employ 2D pose as an intermediate representation to connect the 3D space and 2D image plane.

3D Hands and Face Mesh Recovery. Modeling hands and faces are independent research areas from human body recovery. Statistical models such as MANO [13] and FLAME [8] have been widely adopted to reduce complexity. Lacking a 3D dataset is still the main challenge. Kulon et al. [42] proposed to collect internet images with 3D hand mesh annotations via an iterative fitting hand model based on its 2D pose. Zhou et al. [43] developed a multi-stage framework for 3D hand mesh recovery, which can make use of all the sources of available training data, such as 2D/3D pose and 3D motion capture data. Especially, a model is trained to estimate MANO pose and shape parameters from the 3D pose position. Besides, face modeling is promoted by 3D scans models [10], which can represent various face shapes and expressions. FLAME [8] first models the whole head region instead of the face region solely, and outputs the head rotations along with the neck region. Based on FLAME, RingNet [9] designs an end-to-end 3D face and expression estimation network with a shape consistency loss to learn 2D-to-3D mapping. However, most existing methods for 3D hand/face mesh recovery only focus on estimating from the 2D high-resolution cropped images of face/hand, which is different from the blurred whole-body image we used.

3D Whole-body Recovery with Body, Hands, and Face. Different from the separate models, Joo et al. [16] introduced the Adam model to capture human motion by stitching the body, hands and, face model together. SMPL+H [13] integrates a 3D hand model into the SMPL body model while it does not consider face modeling. Xiang et al. [14] use separate CNN networks for the body, hand, and face, and then jointly fits the Adam model to the outputs of all body parts using an optimization-based algorithm. A part orientation field [22, 23] is adopted to encode 3D orientation of body parts w.r.t. 2D space. SMPL-X [15] adds the FLAME head model [8] to SMPL+H and learns the shape and pose-dependent blend shapes by fitting the model to 3D scans data. Furthermore, SMPLify-X [15] is proposed to recover the full human 3D mesh by iterative fitting SMPL-X model to 2D keypoints of face, hands, and body. Those previous works promote the 3D body recovery with hands and faces. However, they are all based on the iterative optimization algorithm, which is time-consuming and not optimal. In this paper, our work further explores the single-step model for the body, hands, and face recovery.

III Method

III-A Overview

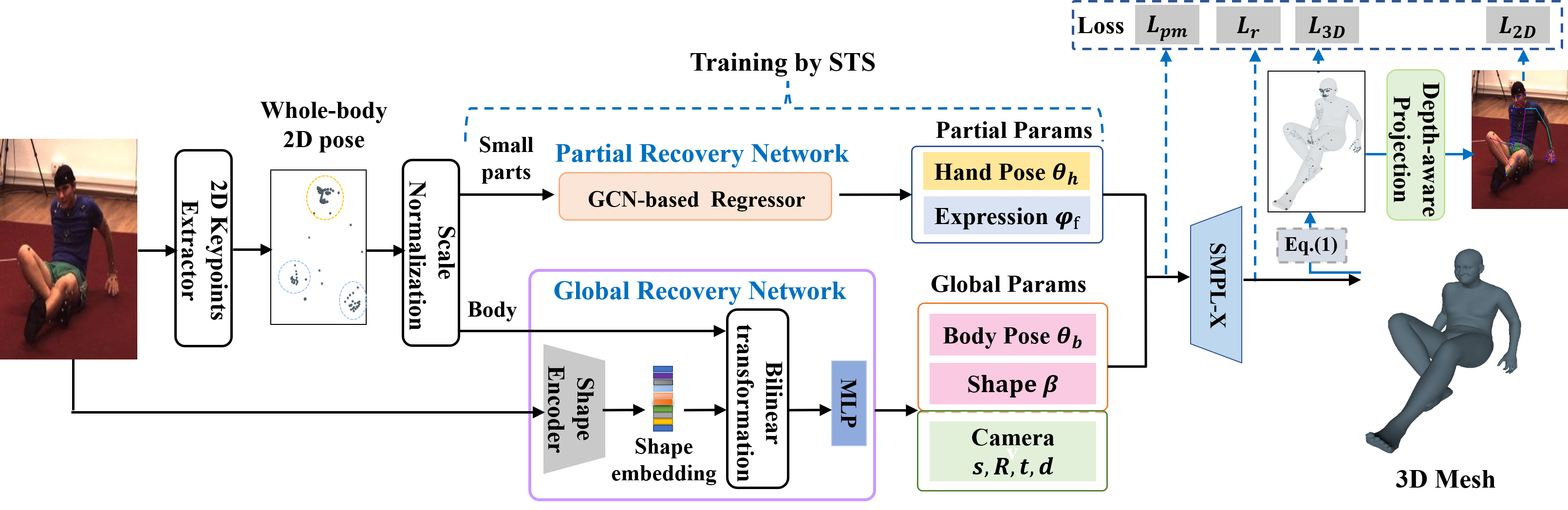

We aim to train a single-shot model for whole-body 3D human mesh recovery, including body, hands, and expression from a single uncalibrated image. By involving the statistical model SMPL-X [15] to encode the whole-body 3D human mesh, the task is converted to estimate the SMPL-X parameters from a 2D image. Fig. 3 shows an overview of the proposed framework. Take a single 2D image as input, the outputs of our network are global body/camera and partial hands/expression parameters for the SMPL-X model that derives the 3D mesh. In our proposed framework, a two-branch design is adopted to estimate parameters of 1) large-scale body and camera, 2) small-scale 3D hands pose, and facial expression, respectively. Since we lack the paired data to support the directly supervised training of the partial branch, we propose a synthetic training scheme (STS) to help regress hands and face parameters with the unpaired 3D data in a self-supervised manner. Besides, for better generalization and richer representation learning, we supervise the 3D pose of the estimated 3D mesh with 2D pose using the proposed D2S projection. We will introduce the network architecture and main modules of our framework in the following.

III-B Background

III-B1 SMPL-X Model

We employ a unified parametric model, SMPL-X [15], for recovering the 3D human mesh with our estimated body, hands, and expression parameters as input. In this part, we summarize the salient aspects of the model in our notation. SMPL-X can be regarded as the integration of the SMPL body model, FLAME head model, and MANO hand model [13]. In SMPL-X, statistical parameters of pose , shape , and expression are disentangled and used to control the human 3D mesh variations of different aspects. An efficient mapping is established to recover the 3D human mesh with vertices, where represents the statistical prior of human body mesh.

The pose parameter represents the relative 3D rotation of keypoints, including poses of body , each hand , jaw , each eye and global rotation . The shape parameter represents the joint shape of body, face and hands. The expression parameter represents the facial expression. Both and are PCA coefficients that denote the variations of first 10 principle components. Here, for simplification, we use a single vector to represent the combination of and . is a PCA coefficient for each hand to capture the pose variations of 15 keypoints (45 parameters). Besides, a linear regressor is developed to derive the 3D keypoint locations from vertices of human 3D mesh by

| (1) |

The SMPL-X model encodes the complex human mesh into a one-dimensional parameter vector and decouples different body part factors (e.g. shape, pose, facial expression) into individual parameters. We use the mapping to recover the total 3D human mesh and those statistical parameters are yielded by our proposed network.

III-B2 Perspective projection

In general, a perspective model is employed to carry out the 3D-to-2D projection, establishing the mapping from real 3D space to 2D image. The projection from the -th human 3D keypoint to image coordinates can be derived as

| (2) |

where is the camera intrinsic matrix, and are extrinsic parameters denoting the camera 3D rotation and translation respectively. For simplification, we integrate the camera rotation expression into the human body rotation parameter and ignore the camera distortion. In this way, the coordinates of can be derived as

| (3) |

| (4) |

where is the 3D translation from body center to camera. Besides, and where is the camera focal length, and are per-pixel physical sizes in the height and width dimension, respectively.

III-B3 Weak-perspective projection

For the convenience of supervising 3D human mesh with 2D pose labels, it is necessary to represent a 3D human body in camera coordinates. However, the input 2D images are un-calibrated and the complete perspective camera parameters are hard to retrieve. In this situation, weak-perspective camera model is widely used in most existing methods for calculating the 2D projection of 3D keypoints by

| (5) |

where is the global rotation parameter, is an orthographic projection operation, and represent translation and scale on the image plane, respectively. In detail, can be derived as

| (6) |

| (7) |

III-C 3D Human Parameters Recovery Network

As shown in Fig. 3, a two-branch framework is designed to predict the SMPL-X and camera parameters from a 2D image for whole-body 3D mesh recovery. Given 2D images, we use OpenPose [22] and a shape encoder to predict the whole-body 2D keypoints and shape embedding, respectively. Then, to balance the scale differences between different body parts, we develop a scale normalization layer to transform the 2D keypoints of the face, hands, and body into a standardized space. In detail, keypoints of different parts are first translated to their center by subtracting the mean value and then scaled by dividing the half size of their bounding boxes. Then the re-scaled 2D keypoints of body and hands/face are separately put into the two sub-networks for global and partial parameter retrieval.

In global branch, with the re-scaled 2D body keypoints and the extracted shape embedding (i.e, 512-D global feature vector from Resnet-50 [44]), we use the global recovery sub-network to estimate global parameters. In this process, following [7], we employ a bilinear transformation layer to re-organize the global features for better performance. Then, we put the yielded skeleton-disentangled representation into a Multi-Layer Perceptron (MLP) to regress the body shape , pose parameters , and the camera parameters . is the 3D rotation of the entire human mesh, and are translation and scale in image plane, respectively. is a distance factor of D2S projection, which will be introduced in Sec.III-E.

In partial branch, with the re-scaled 2D face and hand keypoints, we use the partial recovery sub-network to predict the small-scale 3D hands pose and facial expression parameters. Especially, since both face and hand has the structure prior (e.g., symmetry, connection), we leverage the GCNs to explore their structural and spatial relations. The design of the GCN-based face regressor follows [45] and consists of four semantic graph convolution layers. Similarly, the GCN-based hand regressor estimates hands pose parameters . The only difference is that the face regressor employs an additional MLP to estimate the expression from whole-face features.

With these estimated parameters from both the global and partial branches, we directly connect all these parameters and put into the SMPL-X model described in Sec.III-B to obtain the final 3D human mesh. In other word, the parameter vector is adopted to represent the 3D human whole-body in camera coordinates.

Loss function. The supervision of the proposed framework is conducted in both 3D and 2D to make full use of existing data. The total loss is the weighted sum of parameter , 3D keypoints , 2D projected keypoints and the rationality where are weights of these loss items. Since the ground truth parameters are not fully available in most datasets, the supervision is usually carried out on the different aspects of the recovered 3D human mesh. As stated in Eq.1, 3D keypoints can be derived from human mesh via a linear keypoint regressor. is employed to supervise the 3D keypoints of the estimated whole-body mesh using the loss.

In 3D level, we employ loss and to supervise the estimated parameters and the 3D poses (derived by Eq.1) of all human parts respectively. In detail, is employed to supervise the pose and expression . The angular pose is converted from axis-angle representation to rotation matrices via the Rodrigues formula for more stable training [4, 6]. However, due to the inherent scale difference, it is hard to simultaneously capture large torsos and small body parts. Therefore, no dataset has full whole-body 3D annotations. In this case, general supervised training is not feasible. In contrast, there are some single-body-part datasets of 3D body pose, hand gesture, and facial expression available, which are not paired. Therefore, we develop a self-supervised learning scheme (STS) in Sec. III-D to train the partial branch in a learning-from-synthetic manner.

The existed datasets are mostly captured in restricted environments. The model solely trained on them is hard to work well in the wild. To solve this generalization problem, in the 2D level, we project the 3D joints of the estimated 3D mesh onto the 2D image plane using the estimated camera parameters for better generalization. The loss is used to make 2D pose supervision. However, the widely used weak-perspective camera model ignores the foreshortening effects in 2D images, which makes it prone to fail when the images are captured closely. Therefore, we propose a novel D2S projection in Sec.III-E to make the projection scale adjustment for each keypoint in terms of the depth difference between them. Finally, following [4], we also supervise the rationality of the keypoint angles and whole-body shape for preventing physically impossible 3D human mesh, especially when we use the unpaired body, hands, and face data.

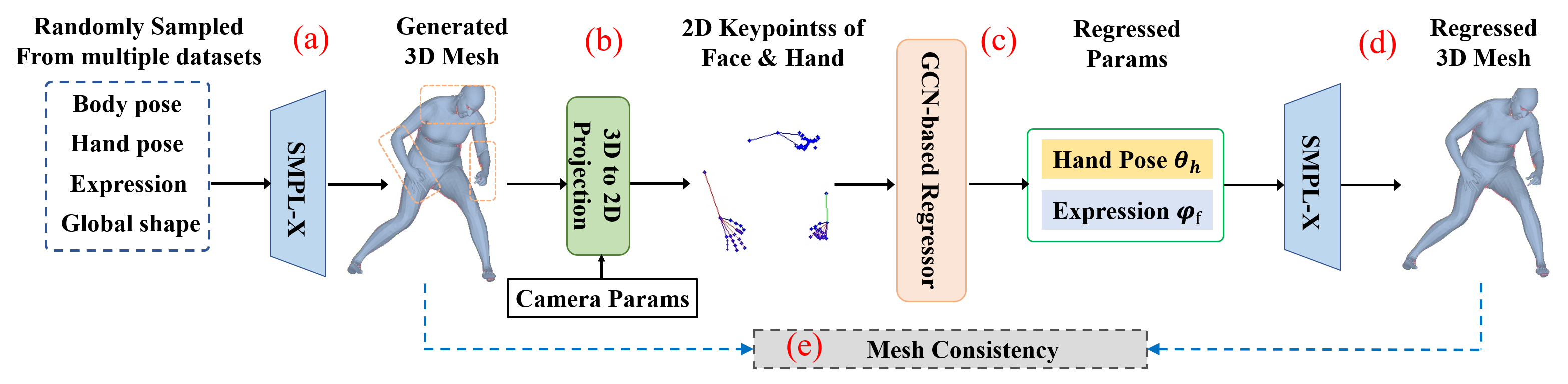

III-D STS: synthetic training Scheme

As we stated in Sec. I and Sec. 3.3, lacking complete annotations of whole-body parts makes it hard to directly train the network end-to-end. Therefore, we develop a synthetic training scheme (STS) to supervise the network under this circumstance. The STS is designed to get reasonable synthetic data from the original unpaired 3D data and learn the hands and face recovery in a self-supervised manner.

The illustration of STS is shown in Fig. 4. Firstly, we collect the 3D pose , shape and expression parameters from these unpaired datasets by fitting the statistical models to establish a reasonable parameter bank. Specifically, we get facial expression by fitting FLAME [8] model to 3D head mesh data [20] or 2D facial keypoints [46]. The body poses are brought from several Mocap datasets [47], and 3D hands poses are brought from MANO datasets [13, 18, 47]. Then, as shown in Fig. 4 (a) we randomly sample these parameters from the bank to form the complete parameter vector and put it into the SMPL-X model to derive the whole-body 3D mesh. Next (b), we map the joints of the driven 3D whole body mesh back to the 2D image plane to establish the mapping between 3D mesh and 2D pose. In this way, 2D pose along with its complete 3D annotations are generated. With these synthetic paired data, in (c) and (d), we exploit the GCN-based regression network for estimating hand gesture and facial expression respectively from their 2D landmarks. Finally in (e), the face/hand partial recovery sub-network can be trained by self-supervising the consistency between the generated 3D meshes and final regressed 3D mesh. Due to the full 3D annotations are available, we only supervise the and .

III-E D2S Projection

As we introduced in Sec.I and Sec. 3.3, available 3D datasets [18, 21] are always captured in a constrained environment with the fixed camera configurations. In this case, using in-the-wild 2D pose datasets [48, 49, 50] for training is a good choice for better generalization and adopted by many methods. To supervise the 3D pose of the estimated 3D human mesh with 2D pose, we need a camera model to make 3D-to-2D projection. In previous works [4, 5, 7, 14, 18], weak-perspective projection is widely adopted. Compared with the perspective projection that needs complex camera parameters (e.g. 3D translation , focal length , et al.), the weak-perspective one is much more simple. It only composes of two factors, scale and 2D translation in image plane. The 2D projection of the -th 3D keypoint (takes the body gravity center as the origin) can be simply derived in a weak-perspective manner using Eq.(6) and Eq.(7) while its perspective projection can be derived using Eq.(3) and Eq.(4).

We can notice that the weak-perspective projection shares the same scale among all keypoints, while the standard perspective projection provides an individual scale for each keypoint. As shown in Fig.5, the weak-perspective projection ignores the difference induced by the foreshortening effect and results in 3D-to-2D misalignment. In this case, even if the 3D pose of the estimated human mesh is correct, the weak-perspective projection may lead to the wrong 2D pose supervision. Therefore, we develop a novel D2S projection to provide an individual projection scale for each keypoint w.r.t their depth difference. To unify the different representations, we assume that and are approximating the projection factor of a virtual body center whose . In this way, the approximation relationship between the perspective and the weak-perspective projection can be represented as

| (8) |

| (9) |

Based on this assumption, the difference () between the real and the approximate factor can be derived as

| (10) |

| (11) |

From Eq.(8) and Eq.(9), we can know that, when is significantly larger than , the difference between the perspective and the weak-perspective projection is small. Otherwise, the difference is obvious. In other words, when a person is close to the camera, the weak-perspective camera is prone to fail especially for poses with a large . The difference is mainly brought by the depth . Therefore, we design a D2S projection that converts the depth differences to projection scale variances among body parts. The goal is to make up the and , meanwhile, reduce the parameters that need to be estimated.

Instead of using a unified projection scale and translation for all keypoints, the individual scale factor for the -th keypoint is estimated to re-scale the output of weak-perspective projection. So the coordinate of the projected 2D keypoint can be derived as

| (12) |

| (13) |

from which we can see that is a key coefficient converting the per-joint depth differences into the projection scale variation. In our framework, is estimated as a part of the camera parameters. Compared with the weak-perspective projection, we just need to estimate one more factor . For better generalization on in-the-wild images, large-scale 2D pose datasets [49, 50] are used to learn the estimation of . The D2S projection works better in the scenarios whose depth difference between different parts is obvious and the field of view is large. For example, taking a selfie with a handheld camera. On the contrary, when people are far away from the camera, it degrades to the weak-perspective model.

IV Experiments

We evaluate our proposed framework on three public large-scale benchmarks, CMU Panoptic Studio Dataset [14], Human3.6M [21] body, and STB hand dataset [17]. Following previous works [14, 15], we also provide the visual results on expression estimation. Quantitative experiments favorably compare to the state-of the-art methods. Our qualitative results show accurate and robust recovery performance.

IV-A Dataset

We firstly introduce the three benchmarks for experiment evaluation. We also introduce several datasets that we put together to establish the real distribution of whole-body parts for model training.

CMU Panoptic Studio Dataset: We use the “Monocular Mocap” part [14] that contains multi-view images (taken from 30 viewpoints) with 3D pose annotations. It contains subjects and body images with corresponding 3D body pose annotations. Following [14], we only focus on the evaluation of 3D body pose in this paper. We report both mean per joint position error (MPJPE) and Procrustes Aligned MPJPE (PA-MPJPE) that is MPJPE after rigid alignment of the predicted pose with the ground truth.

Human3.6M Dataset: It is a large-scale human MoCap dataset [21] with 3D pose annotations and has been widely used as the 3D pose benchmark. It contains videos of 7 actors performing 17 activities. Videos are captured in a controlled environment. For removing the redundancy, we down-sample all videos from 50fps to 10fps. We use the dataset to quantitatively evaluate the 3D body keypoint error of the recovered mesh and follow the same protocol as in [4] for evaluation. We evaluate both MPJPE and PA-MPJPE of the 3D body keypoints.

STB Hand Dataset: Stereo Hand Pose Tracking Benchmark (STB) [17] is a 3D hand pose dataset, containing 18,000 images with corresponding depth images. The 3D hand annotations involve 21 keypoints locations. Following [11], we split it into 15,000 samples for training and 3,000 samples for testing. Besides, for a fair comparison, we use Openpose [22] for bounding box detection. We measure the MPJPE for comparison.

Datasets for STS and Model Generalization: For rationally sampling the SMPL-X model space, we use multiple 3D datasets to obtain the real whole-body parameters. 1) 3D body poses are obtained from the AMASS dataset [47]. It contains 300 subjects, more than 11000 motions (with corresponding SMPL 3D pose parameters), which is significantly richer than existing 3D pose datasets. 2) 3D hand poses are obtained from the MANO [13], Freihand [18], and AMASS [47]. MANO and AMASS contain the 3D hand pose parameters. Freihand contains image data along with the 3D hand annotations. We split their training set into two sets for training (30000 samples) and validation (2560 samples) respectively. 3) Facial expression are extracted from D3DFACS 3D face mesh dataset [20] and LS3D [46] 2D facial dataset. Besides, we employ two in-the-wild 2D pose datasets, AI-CH [50] and MPII [49], to provide 2D pose supervision. Different from the 3D datasets captured in limited environments, they can greatly help the model generalize to various poses and camera configurations.

IV-B Implementation Details

Considering that only a part of the data has 3D hand pose annotations, the STS is used one time in every 5 iterations. The Adam [51] optimizer is adopted with betas=(0.9,0.999), momentum=0.9. The learning rate and batch size are set to 1e-4 and 16 respectively. The weights of loss items are set as . Training of the partial parameter retrieval branch consists of two phases. Firstly, we train the face and hand GCN-based regressor with the assistance of the FLAME head model and MANO hand model respectively. Then, pre-trained models are loaded and the entire branch is trained by STS.

IV-C Effect of D2S Projection.

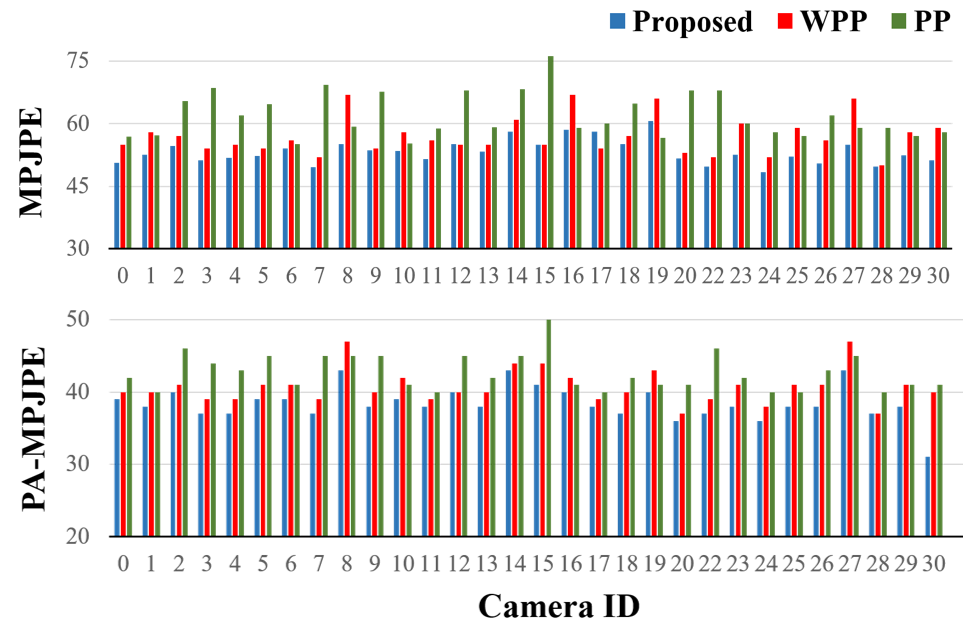

In this section, we introduce the comprehensive ablation study of D2S projection on the CMU Panoptic Studio dataset. To validate the superior of the proposed D2S projection under an uncalibrated condition, we compare it with other camera models, which are the perspective projection (PP) and the weak-perspective projection (WPP) introduced in Sec. III-B. For a fair comparison, the model is trained to estimate the camera parameters of these projection models based on the 2D pose only without using the ground truth parameters. Due to the complete camera parameters of perspective projection are too complex to predict, we simplify the parameters and estimate the in Eq.(3) and Eq.(4). For WPP, we just estimate the in Eq.(6) and Eq.(7).

As shown in Tab. I, PP achieves comparative results with MTC, while greatly outperformed by the proposed D2S projection 8.6mm MPJPE and 4.1mm PA-MPJPE, corresponding to and error reduction. Especially, the training of the proposed D2S projection is much more stable than the PP, which is prone to collapse. WPP is outperformed by 4.3mm MPJPE and 2.1mm PA-MPJPE, corresponding to and error reduction. We think the reason is that PP is too hard to estimate from a single monocular image, while the WPP is so rough that may misguide the model. In contrast, the proposed D2S projection finds a good balance of estimation difficulty and projection accuracy for proper supervision. It demonstrates that the proposed D2S projection is more suitable for the un-calibrated condition in this task.

Moreover, since the Panoptic Studio Dataset contains multi-view images, we further evaluate the MPJPE and PA-MPJPE in different viewpoints. As shown in Fig. 6, the recovery error of the WPP and PP dramatically changes along with the viewpoint. The proposed D2S projection can alleviate this phenomenon to some degree, demonstrating that the proposed D2S projection is effective to make up the depth loss of weak-perspective projection.

IV-D Comparisons to the State-of-the-arts

IV-D1 Evaluation on CMU Panoptic Studio Dataset.

We compare our framework with MTC (monocular total capture) [14] on all testing samples of CMU Panoptic Studio Dataset for evaluating 3D body pose accuracy. MTC deals with the same task as ours, while it makes the recovery of each human part independently by the combination of CNNs and optimization algorithms. As shown in the first two rows of Tab. I, our proposed method outperforms MTC by 9.1mm in terms of MPJPE, corresponding to error reduction. It indicates the superiority of our single-step model-based framework over the optimization-based method. The results demonstrate the effectiveness of the proposed synthetic training strategy. Moreover, our proposed D2S projection further boosts the 3D body recovery performance.

| Method | MPJPE | PA-MPJPE |

|---|---|---|

| MTC [14] | 63.0 | – |

| Proposed | 53.2 | 39.2 |

| - Weak-perspective proj. | 57.5 | 41.3 |

| - Perspective proj. | 61.8 | 43.3 |

| Method | MPJPE | PA-MPJPE |

|---|---|---|

| HMR [4] | 87.9 | 58.1 |

| GCMR [5] | 74.7 | 51.9 |

| MTC [14] | 58.3 | - |

| SMPLify-X [15] | - | 75.9 |

| Ours | 67.4 | 52.0 |

| Method | GraphHand [11] | FHGR | Ours | |

|---|---|---|---|---|

| MPJPE | 11.3 | 13.1 | 12.1 | 9.1 |

IV-D2 Evaluation on Human3.6M Dataset.

As shown in Tab.II, we compare our method with state-of-the-art methods on Human3.6M for 3D body keypoints estimation. Similar to us, the whole-body recovery method SMPLify-X [15] also fits the SMPL-X model, but it is an optimization-based algorithm. SMPLify-X is significantly outperformed by our single-step regressor method in terms of the PA-MPJPE. It demonstrates our performance. Furthermore, HMR [4] and GCMR [5] are end-to-end methods for sole 3D body mesh recovery only. As shown in Tab. II, our method achieves competitive performance in terms of both MPJPE and PA-MPJPE. It demonstrates that our proposed method preserves the stable performance of the skeleton pose recovery, meanwhile, can accomplish the whole-body recovery. Besides, we also show some 3D pose estimation methods in the left part of Tab. II, which perform extremely well on this dataset, since they directly predict the 3D body keypoint other than 3D mesh. Here, we focus on the 3D mesh recovery methods and our method achieves comparable results.

IV-D3 Evaluation on STB Hand Dataset.

We evaluate our method on the STB hand benchmark and present the MPJPE of hand keypoints. As shown in Tab. III, our whole-body method can achieve comparable performance with GraphHand [11] which is the most recently proposed state-of-the-art method specially designed for hand recovery only. It demonstrates that our framework can well deal with the partial part recovery along with the whole-body setting.

To further evaluate the effectiveness of the partial part recovery, we conduct ablation studies on the partial parameter retrieval branch of our framework. The face/hand GCN-based regressor for estimating partial parameters (see Fig. 3) is denoted as FHGR for simplification. In this dataset setting, we simply change the target of FHGR to estimate 3D hand pose from 2D hand landmarks. In Tab. III, the FHGR is solely trained on the STB dataset, while our method adds the synthetic training scheme (STS) to train the FHGR using synthetic data simultaneously. With the help of STS, FHGR moves towards GraphHand [11], indicating that STS is vital for recovering the partial parameters when only unpaired data (body and hand/face) available. Besides, the result using ground truth 2D pose in Tab. III shows that better 2D pose estimation leads to better performance.

IV-E Efficiency analysis

In Tab.IV, we compare the computational efficiency with the most related works SMPLify-X [15] and MTC [14]. For a fair comparison, we directly use the released official code and test on the same dataset [19] on the same device (Tesla P40 GPU). Considering that both SMPLify-X and our method employ the OpenPose to estimate the whole-body 2D keypoints, we only compare the time consuming of predicting the whole-body 3D mesh from a single 2D image and the corresponding OpenPose output. SMPLify-X takes 82.72 seconds per image, while the proposed single-step framework only consumes 0.09 seconds per image. The time consumption is mainly costed by the 5-stage iterative optimization strategy in their methods, which demonstrate our motivation. Based on their official code, MTC costs about 12 seconds per image. The single-step design enables us to enjoy parallel computation, which greatly accelerates the calculation. In contrast, the proposed single-step method can usually be dozens or hundreds of times faster than the existing optimization-based methods.

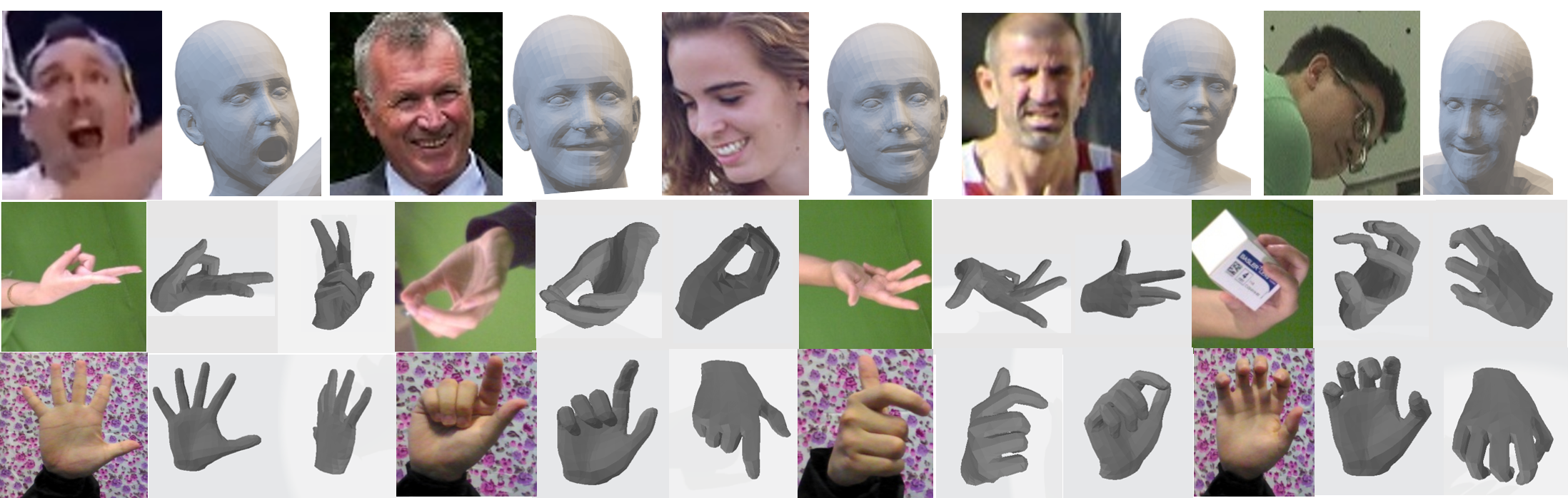

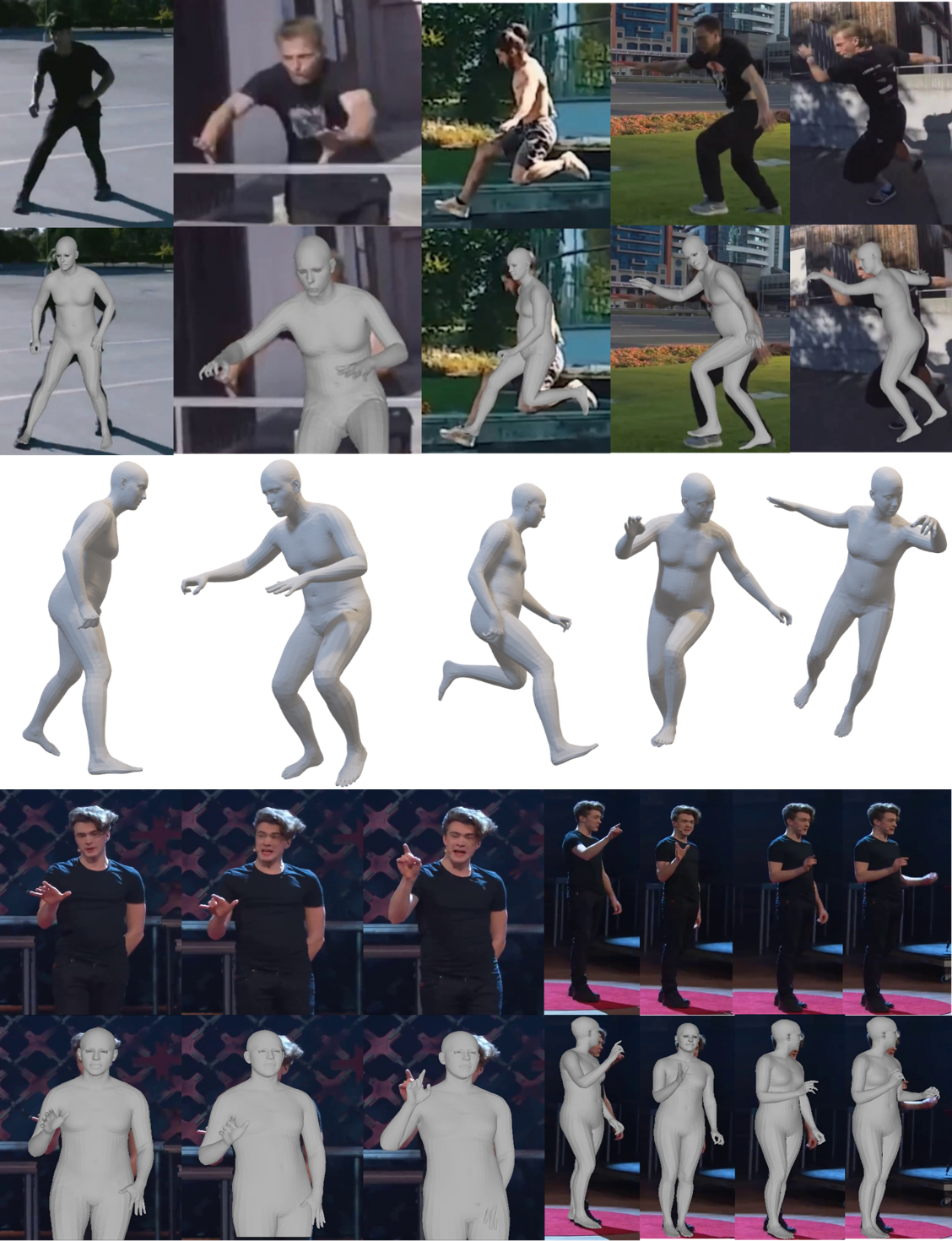

IV-F Qualitative Evaluation

We present qualitative results of our method on images for the whole-body mesh recovery and the face/hand partial recovery. To test the effectiveness and the stability of the proposed method (STS and D2S projection), we evaluate our model on internet images. The results of MTC [14], SMPLify-X [15] and the proposed method are presented in Fig.1. MTC and SMPLify-X can indeed align the human body in 2D images better, but their performance in depth and shape estimation is difficult to satisfy. Especially, when some body parts are invisible (such as the invisible feet at the first raw of Fig.1), the algorithm is prone to fail. The estimated body shape is weird and not coordinated. In contrast, the estimated body pose, shape, and expression of our method are much better. Benefited from the D2S projection, our method shows some prior in perceiving depth information. Considering most 3D datasets are captured in a close range, we deliberately select some videos where people are far from the camera. The results are presented in Fig.8. It demonstrates that the proposed D2S projection can handle a wide range of human-to-camera distance. Besides, qualitative results of face and hands are shown in Fig. 7. The top row presents the recovered meshes on face data for both in indoor images from [14] and in-the-wild images. The second and third rows show the recovered hand meshes on the Freihand dataset [18] and the STB dataset [17], respectively. These results demonstrate the effectiveness of the proposed method.

IV-G Discussion

The proposed method successfully reduces the network’s dependence on data and improves computational efficiency. However, there are still some limitations.

Reliance on 2D pose estimation. The performance relies on the quality of the 2D pose estimation. A stronger 2D pose estimation model will lead to better performance. Also, the representation learning of the body shape needs to be further enhanced. Besides, self-occlusion, such as hands overlapping, also affects the performance. We will investigate those problems in our further work.

About face shape. Besides, due to the limitation of the SMPL-X model, we only estimate the facial expression instead of the 3D face mesh. Because the face shape of SMPL-X is changed along with the body shape and cannot be controlled individually, which makes it hard to estimate the precise facial mesh. Therefore, we show the visualization results other than evaluating the facial keypoints. We will dig into developing a whole-body statistical model whose partial mesh could be preciously controlled.

V Conclusion

In this paper, we present a synthetic training framework for learning a single-step regression model that jointly recovers the mesh of multiple 3D human body parts from a single whole-body image using unpaired data. Compared with the previous optimization-based method, the proposed method shows an obvious advantage in computational efficiency. To deal with the large scale difference among different body parts, the network architecture is designed in a disentangled manner. It facilitates the individual learning of different body properties. Additionally, the depth loss of weak-perspective projection is also investigated and a D2S projection is proposed to learn the projection distortion. With the D2S projection, we exploit the 2D pose supervision for generalization and rich representation. Extensive experiments with ablation analysis show that our proposed method achieves superior performance on relevant benchmarks.

References

- [1] E. De Aguiar, C. Stoll, C. Theobalt, N. Ahmed, H.-P. Seidel, and S. Thrun, “Performance capture from sparse multi-view video,” in ACM SIGGRAPH, 2008, pp. 1–10.

- [2] D. Vlasic, I. Baran, W. Matusik, and J. Popović, “Articulated mesh animation from multi-view silhouettes,” in ACM SIGGRAPH, 2008.

- [3] F. Bogo, A. Kanazawa, C. Lassner, P. Gehler, J. Romero, and M. J. Black, “Keep it smpl: Automatic estimation of 3D human pose and shape from a single image,” in ECCV, 2016.

- [4] A. Kanazawa, M. J. Black, D. W. Jacobs, and J. Malik, “End-to-end recovery of human shape and pose,” in IEEE CVPR, 2018.

- [5] N. Kolotouros, G. Pavlakos, and K. Daniilidis, “Convolutional mesh regression for single-image human shape reconstruction,” in IEEE CVPR, 2019.

- [6] G. Pavlakos, L. Zhu, X. Zhou, and K. Daniilidis, “Learning to estimate 3D human pose and shape from a single color image,” in IEEE CVPR, 2018.

- [7] Y. Sun, Y. Ye, W. Liu, W. Gao, Y. Fu, and T. Mei, “Human mesh recovery from monocular images via a skeleton-disentangled representation,” in IEEE ICCV, 2019.

- [8] T. Li, T. Bolkart, M. J. Black, H. Li, and J. Romero, “Learning a model of facial shape and expression from 4D scans,” ACM SIGGRAPH Asia, 2017.

- [9] S. Sanyal, T. Bolkart, H. Feng, and M. J. Black, “Learning to regress 3d face shape and expression from an image without 3d supervision,” in IEEE CVPR, 2019.

- [10] M. Zollhöfer, J. Thies, P. Garrido, D. Bradley, T. Beeler, P. Pérez, M. Stamminger, M. Nießner, and C. Theobalt, “State of the art on monocular 3d face reconstruction, tracking, and applications,” in Computer Graphics Forum, 2018.

- [11] L. Ge, Z. Ren, Y. Li, Z. Xue, Y. Wang, J. Cai, and J. Yuan, “3d hand shape and pose estimation from a single rgb image,” in IEEE CVPR, 2019.

- [12] M. de La Gorce, D. J. Fleet, and N. Paragios, “Model-based 3d hand pose estimation from monocular video,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2011.

- [13] J. Romero, D. Tzionas, and M. J. Black, “Embodied hands: Modeling and capturing hands and bodies together,” ACM Transactions on Graphics, 2017.

- [14] D. Xiang, H. Joo, and Y. Sheikh, “Monocular total capture: Posing face, body, and hands in the wild,” in IEEE CVPR, 2019.

- [15] G. Pavlakos, V. Choutas, N. Ghorbani, T. Bolkart, A. A. Osman, D. Tzionas, and M. J. Black, “Expressive body capture: 3d hands, face, and body from a single image,” in IEEE CVPR, 2019.

- [16] H. Joo, T. Simon, and Y. Sheikh, “Total capture: A 3d deformation model for tracking faces, hands, and bodies,” in IEEE CVPR, 2018.

- [17] J. Zhang, J. Jiao, M. Chen, L. Qu, X. Xu, and Q. Yang, “3d hand pose tracking and estimation using stereo matching,” arXiv:1610.07214, 2016.

- [18] Z. Christian, C. Duygu, Y. Jimei, R. Bryan, A. Max, and B. Thomas, “Freihand: A dataset for markerless capture of hand pose and shape from single rgb images,” in IEEE ICCV, 2019.

- [19] R. Vemulapalli and A. Agarwala, “A compact embedding for facial expression similarity,” in IEEE CVPR, 2019, pp. 5683–5692.

- [20] D. Cosker, E. Krumhuber, and A. Hilton, “A facs valid 3d dynamic action unit database with applications to 3d dynamic morphable facial modeling,” in IEEE ICCV, 2011.

- [21] C. Ionescu, D. Papava, V. Olaru, and C. Sminchisescu, “Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014.

- [22] Z. Cao, T. Simon, S.-E. Wei, and Y. Sheikh, “Realtime multi-person 2d pose estimation using part affinity fields,” in IEEE CVPR, 2017.

- [23] G. Hidalgo, Y. Raaj, H. Idrees, D. Xiang, H. Joo, T. Simon, and Y. Sheikh, “Single-network whole-body pose estimation,” in IEEE ICCV, 2019.

- [24] X. C. Nie, J. S. Feng, J. L. Xing, S. T. Xiao, and S. C. Yan, “Hierarchical contextual refinement networks for human pose estimation,” IEEE Transactions on Image Processing, pp. 924–936, 2018.

- [25] Y. M. Luo, Z. T. Xu, P. Z. Liu, Y. Z. Du, and J. M. Guo, “Multi-person pose estimation via multi-layer fractal network and joints kinship pattern,” IEEE Transactions on Image Processing, pp. 142–155, 2018.

- [26] L. R. Fu, J. G. Zhang, and K. Q. Huang, “Orgm: occlusion relational graphical model for human pose estimation,” IEEE Transactions on Image Processing, pp. 927–941, 2016.

- [27] C.-H. Chen and D. Ramanan, “3d human pose estimation= 2d pose estimation+ matching,” in IEEE CVPR, 2017.

- [28] H.-S. Fang, Y. Xu, W. Wang, X. Liu, and S.-C. Zhu, “Learning pose grammar to encode human body configuration for 3d pose estimation,” in AAAI, 2018.

- [29] B. X. Nie, P. Wei, and S.-C. Zhu, “Monocular 3d human pose estimation by predicting depth on joints,” in IEEE ICCV, 2017.

- [30] D. C. Luvizon, D. Picard, and H. Tabia, “2d/3d pose estimation and action recognition using multitask deep learning,” in IEEE CVPR, 2018.

- [31] X. Sun, J. Shang, S. Liang, and Y. Wei, “Compositional human pose regression,” in IEEE ICCV, 2017.

- [32] X. Zhou, Q. Huang, X. Sun, X. Xue, and Y. Wei, “Towards 3d human pose estimation in the wild: a weakly-supervised approach,” in IEEE ICCV, 2017.

- [33] X. T. Zheng, X. M. Chen, and X. Q. Lu, “A joint relationship aware neural network for single-image 3d human pose estimation,” IEEE Transactions on Image Processing, pp. 4747–4758, 2020.

- [34] M. Loper, N. Mahmood, J. Romero, G. Pons-Moll, and M. J. Black, “SMPL: A skinned multi-person linear model,” ACM Transactions on Graphics, 2015.

- [35] Y. Yoshiyasu, R. Sagawa, K. Ayusawa, and A. Murai, “Skeleton transformer networks: 3d human pose and skinned mesh from single rgb image,” arXiv:1812.11328, 2018.

- [36] X. Zhou, M. Zhu, G. Pavlakos, S. Leonardos, K. G. Derpanis, and K. Daniilidis, “Monocap: Monocular human motion capture using a cnn coupled with a geometric prior,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018.

- [37] W. Xu, A. Chatterjee, M. Zollhöfer, H. Rhodin, D. Mehta, H.-P. Seidel, and C. Theobalt, “Monoperfcap: Human performance capture from monocular video,” ACM Transactions on Graphics, 2018.

- [38] Y.-L. Xu, S.-C. Zhu, and T. Tung, “Denserac: Joint 3d pose and shape estimation by dense render-and-compare,” in IEEE ICCV, 2019.

- [39] G. Pavlakos, N. Kolotouros, and K. Daniilidis, “Texturepose: Supervising human mesh estimation with texture consistency,” in IEEE ICCV, 2019.

- [40] K. Jogendra, Nath, R. Mugalodi, J. Varun, V. Rahul, Mysore, and B. R., Venkatesh, “Appearance consensus driven self-supervised human mesh recovery,” in ECCV, 2020.

- [41] C. Hongsuk, M. Gyeongsik, and L. Kyoung, Mu, “Pose2mesh: Graph convolutional network for 3d human pose and mesh recovery from a 2d human pose,” in ECCV, 2020.

- [42] D. Kulon, R. A. Guler, I. Kokkinos, M. M. Bronstein, and S. Zafeiriou, “Weakly-supervised mesh-convolutional hand reconstruction in the wild,” in IEEE CVPR, 2020.

- [43] Y. Zhou, M. Habermann, W. Xu, I. Habibie, C. Theobalt, and F. Xu, “Monocular real-time hand shape and motion capture using multi-modal data,” in IEEE CVPR, 2020.

- [44] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in IEEE CVPR, 2016.

- [45] L. Zhao, X. Peng, Y. Tian, M. Kapadia, and D. N. Metaxas, “Semantic graph convolutional networks for 3d human pose regression,” in IEEE CVPR, 2019.

- [46] A. Bulat and G. Tzimiropoulos, “How far are we from solving the 2d & 3d face alignment problem?” in IEEE ICCV, 2017.

- [47] N. Mahmood, N. Ghorbani, N. F. Troje, G. Pons-Moll, and M. J. Black, “AMASS: Archive of motion capture as surface shapes,” in IEEE ICCV, 2019.

- [48] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in ECCV, 2014.

- [49] M. Andriluka, L. Pishchulin, P. Gehler, and B. Schiele, “2d human pose estimation: New benchmark and state of the art analysis,” in IEEE CVPR, 2014.

- [50] J. Wu, H. Zheng, B. Zhao, Y. Li, B. Yan, R. Liang, W. Wang, S. Zhou, G. Lin, Y. Fu et al., “Ai challenger: A large-scale dataset for going deeper in image understanding,” arXiv:1711.06475, 2017.

- [51] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in ICLR, 2015.