Synthetic data generation method for data-free knowledge distillation in regression neural networks

Abstract

Knowledge distillation is the technique of compressing a larger neural network, known as the teacher, into a smaller neural network, known as the student, while maintaining the performance of the larger network as much as possible. Existing methods of knowledge distillation are mostly applicable to classification tasks, and many of them also require access to the data used to train the teacher model. To address knowledge distillation for regression tasks in the absence of the original training data, the existing method uses a generator model, trained adversarially against the student model, to generate synthetic data for training the student model. In this study, we propose a new synthetic data generation strategy that directly optimizes for a large but bounded difference between the student and teacher models. Our results on benchmark experiments demonstrate that the proposed strategy allows the student model to learn better and to emulate the performance of the teacher model more closely.

1 Introduction

In the past decade, advances in algorithms, computational hardware and data availability have enabled significant developments in artificial neural networks and deep learning [Lecun et al., 2015]. Neural network models are now state-of-the-art in many fields of application, including computer vision [O’Mahony et al., 2020, Chai et al., 2021], natural language processing [Otter et al., 2021], and signal processing [Purwins et al., 2019, Rim et al., 2020]. However, as models become larger, as measured by their number of parameters, they also become computationally expensive to store and to run inference with. Large neural networks can be unusable in real-world deployment scenarios where hardware is limited, such as on mobile devices or microcontrollers, or where they are deployed as a service to a large number of users, such as in web applications [Cheng et al., 2018, Deng et al., 2020, Liu et al., 2022].

Knowledge distillation is a class of methods that addresses this problem by distilling the predictive capabilities of a larger neural network into a smaller one, allowing faster inference and lower memory requirements [Gou et al., 2021]. Several knowledge distillation methods have been proposed, typically requiring the original data that was used to train the teacher model. However, in many real-world applications, the original data may not be available for distillation to student models for reasons such as data size and data privacy [Chen et al., 2019, Gou et al., 2021].

To deal with such situations, data-free knowledge distillation methods have been proposed that distill knowledge without the original training data [Hu et al., 2020, Lopes et al., 2017, Micaelli and Storkey, 2019, Ye et al., 2020, Yoo et al., 2019]. Data-free knowledge distillation works by generating synthetic data and training the student model on these data together with the labels predicted for them by the teacher model.

Much of the existing research on knowledge distillation has focused on classification tasks. However, regression tasks are common in many engineering applications [Guo et al., 2021, Schweidtmann et al., 2021, Tapeh and Naser, 2022, Thai, 2022], and few methods are available for knowledge distillation of regression neural networks. Real-world applications of knowledge distillation for regression include lightweight object detectors for remote sensing [Yang et al., 2022] and turbine-scale wind power prediction [Chen, 2022]. Recently, [Kang and Kang, 2021] proposed the first data-free knowledge distillation method for regression, in which a generator model is trained adversarially to generate synthetic data. Motivated by the need for data-free distillation of regression models in real-world applications, in this work we investigate the behavior of several synthetic data generation methods, including random sampling and adversarial generators.

The main contribution of this paper is an improved method for generating synthetic data for data-free knowledge distillation of regression neural networks. This is achieved by optimizing a loss function defined directly on the student and teacher model predictions, rather than implicitly through an additional generator model. Compared to existing methods, our synthetic data generation method produces larger differences between the student and teacher predictions while mimicking real data better. We demonstrate that this method can provide better performance than existing methods through experiments on 7 standard regression datasets, on the MNIST handwritten digit dataset adapted for regression, and on a real-world bioinformatics case study of protein solubility prediction. This study furthers the understanding of data-free distillation for regression and has potential applications in deploying distilled models for many practical regression tasks in engineering.

2 Related work

2.1 Knowledge distillation

As neural networks grow in number of parameters, deploying them becomes challenging in applications such as mobile devices and embedded systems because of limited computational resources and memory [Cheng et al., 2018, Deng et al., 2020]. To address this problem, model compression through knowledge distillation has become an active area of research in recent years [Liu et al., 2022, Wang et al., 2022]. Knowledge distillation is the technique by which knowledge learned by a larger teacher model is transferred to a smaller student model [Gou et al., 2021, Hinton et al., 2015, Wang and Yoon, 2022]. The main idea is that the student model mimics the teacher model to achieve similar or even superior performance.

Various methods of knowledge distillation define and focus on different forms of knowledge. Following the nomenclature in [Gou et al., 2021], these can be broadly grouped into response-based knowledge, feature-based knowledge, and relation-based knowledge. For response-based knowledge, the outputs of the teacher model are used to supervise the training of the student model. For example, [Hinton et al., 2015] uses soft targets from the logits of the teacher model to train the student. For feature-based knowledge, the outputs of intermediate layers, or feature maps learned by the teacher model, are used to supervise the training of the student model. For example, [Romero et al., 2014] trains the student model to match the feature activations of the teacher model. For relation-based knowledge, the relationships between different layers or data samples are used. For example, [Yim et al., 2017] uses the inner products between features from two layers to represent the relationship between those layers, while [Chen et al., 2021] trains the student model to preserve the similarity of samples' feature embeddings in the intermediate layers of the teacher model.

2.2 Data-free knowledge distillation

In some situations, the original data used to train the teacher model are not accessible because of privacy or legal restrictions. Data-free knowledge distillation methods have been proposed to allow model distillation in the absence of the original training data [Chawla et al., 2021, Chen et al., 2019, Hu et al., 2020, Lopes et al., 2017, Micaelli and Storkey, 2019, Nayak et al., 2021, Ye et al., 2020, Yoo et al., 2019]. This is achieved by generating synthetic data for training, and many methods do so using generative adversarial networks (GANs) [Chen et al., 2019, Hu et al., 2020, Micaelli and Storkey, 2019, Ye et al., 2020, Yoo et al., 2019]. For example, [Micaelli and Storkey, 2019] train a generator model to generate synthetic images that maximize the difference in prediction (measured by KL divergence) between the teacher and student models. The student model is then trained to minimize this difference on the synthetic images. On the SVHN and CIFAR-10 image classification datasets, they were able to train student models whose performance was close to that of distillation using the full training set.

Other methods, such as [Lopes et al., 2017], use metadata collected during training of the teacher model, in the form of its layer activation records, to reconstruct a dataset for training the student model.

2.3 Knowledge distillation for regression

Most existing methods in the knowledge distillation literature deal with classification problems. These methods are generally not directly applicable to regression problems, where the predictions are unbounded real values. For regression problems, [Chen et al., 2017] uses a teacher bounded regression loss, in which the teacher's prediction error serves as an upper bound for the student model rather than the teacher's prediction being used directly as a target. This method was the first successful application of knowledge distillation to the object detection problem and achieved strong results.
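As a rough illustration of the teacher bounded regression loss, the sketch below follows our reading of [Chen et al., 2017]: the student's squared error against the ground truth is counted only while it is not already at least a margin below the teacher's squared error. The function name, the NumPy setting and the default margin are our own assumptions, and in that work this term is combined with other regression loss terms.

```python
import numpy as np


def teacher_bounded_loss(student_pred, teacher_pred, target, margin=0.0):
    """Per-sample teacher bounded L2 loss (a sketch of our reading of Chen et al., 2017).

    student_pred, teacher_pred, target: arrays of shape (n, k).
    Returns an array of shape (n,).
    """
    student_err = np.sum((student_pred - target) ** 2, axis=-1)
    teacher_err = np.sum((teacher_pred - target) ** 2, axis=-1)
    # The student is penalized only while its error plus the margin exceeds the
    # teacher's error; once it is clearly better than the teacher, the term is zero.
    return np.where(student_err + margin > teacher_err, student_err, 0.0)


# Usage: the first student prediction is worse than the teacher's, the second is better.
losses = teacher_bounded_loss(
    np.array([[1.0], [0.0]]), np.array([[0.5], [0.5]]), np.zeros((2, 1))
)
```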

[Saputra et al., 2019] uses the teacher loss as a confidence score that assigns relative importance to the teacher prediction. This confidence score is then used in the main training task via an attentive imitation loss, which adaptively down-weights the loss between the teacher and student predictions when the teacher's prediction for a particular sample is unreliable. This method successfully performed knowledge distillation from a deep pose regression network, producing a student model whose predictions were close to the teacher's while reducing the number of parameters by more than 90%.

[Takamoto et al., 2020] uses a teacher outlier rejection loss, which rejects outliers among the training samples based on the teacher model predictions. This method was applied to predict gaze angles from images of human faces and was shown to be effective even with noisy labels.

[Xu et al., 2022] proposed the Contrastive Adversarial Knowledge Distillation (CAKD) approach for time-series regression tasks. CAKD uses adversarial adaptation to align feature distributions between student and teacher models. To achieve better alignment on fine-grained features, contrastive learning is also used to increase similarity between the features extracted by teacher and student models for the same sample. The teacher model prediction is used as soft labels to guide the training for the student.

[Kang and Kang, 2021] introduced the first, and to our knowledge the only, work addressing data-free knowledge distillation for regression, using a generator model, trained adversarially together with the student model, that generates synthetic datapoints. The method substantially outperformed the baseline methods of random sampling and data impression on various benchmark regression datasets. However, both the baseline methods and the generator method proposed there were found not to work well for deeper neural networks.

3 Material and methods

3.1 Overview of methods

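Given a trained teacher model $T$ and a student model $S$ parameterized by $\theta_S$, we generate synthetic data via some data generation method. The student model is trained by minimizing the student loss defined in equation 1 using gradient descent, where $x = \{x_i\}_{i=1}^{n}$ is a batch of (synthetic) inputs. This generic method is illustrated in Figure 1.

$$\mathcal{L}_S(x;\theta_S) = \frac{1}{n}\sum_{i=1}^{n}\big(T(x_i) - S(x_i;\theta_S)\big)^2 \tag{1}$$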

How well the student model mimics the teacher depends on the representational capacity of the student model, the data used to train it, and the optimization process used to minimize the student loss. Hence, for a fixed student model architecture and training process, the synthetic data generation process plays the key role in determining the performance of the student model.

3.2 Synthetic data generation methods

Three types of synthetic data generation methods are investigated in this study: random sampling, generative model, and direct optimization.

3.2.1 Random sampling

Synthetic data are generated by sampling randomly from an input distribution. For best results, the synthetic data should be drawn from a distribution similar to that of the actual data.

In the case where the actual input data have been standardized, random samples can be drawn from a Gaussian distribution $\mathcal{N}(0, I)$. In other cases, where the input has been scaled differently or has clearly defined input space boundaries, it is important to confirm the data distribution and input space boundaries, and to design the sampling method so that the samples conform to the actual input distribution as closely as possible. The input distribution and input space bounds may be defined using the available validation or test set, or based on prior knowledge. For example, for image input, the value of each pixel is bounded between 0 and 1, and each pixel position may have a different mean and variance. A random sampling strategy in this case could be to draw each pixel from a separate Gaussian distribution with mean and variance computed from the test set, and to clip the values to between 0 and 1.
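The clipped per-pixel sampling strategy described above can be sketched in NumPy as follows. This is a minimal illustration under our own assumptions: the function name, the use of `numpy.random.Generator`, and the uniform `reference` array standing in for a test set are all hypothetical.

```python
import numpy as np


def sample_clipped_gaussian(reference, n_samples, low=0.0, high=1.0, rng=None):
    """Draw each feature (e.g. pixel) from its own Gaussian, then clip to [low, high].

    reference: array of shape (N, ...) used only to estimate the per-feature
    mean and standard deviation (e.g. a validation or test set).
    """
    rng = np.random.default_rng() if rng is None else rng
    mean = reference.mean(axis=0)
    std = reference.std(axis=0)
    samples = rng.normal(loc=mean, scale=std, size=(n_samples,) + mean.shape)
    return np.clip(samples, low, high)


# Usage with a stand-in for 28 x 28 images whose pixels lie in [0, 1].
rng = np.random.default_rng(0)
reference = rng.random((200, 28, 28))
synthetic = sample_clipped_gaussian(reference, n_samples=50, rng=rng)
```

For standardized tabular inputs the same function reduces, in expectation, to sampling from $\mathcal{N}(0, I)$ with very wide clipping bounds.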

3.2.2 Generator model

Adversarially trained generator models (GANs) are used to generate realistic synthetic data with the same statistics as the training set in a wide range of applications. Common applications include image generation, 3D model generation, audio generation, and other signal generation for purposes such as machinery fault diagnosis [Aggarwal et al., 2021, Gui et al., 2023, Lou et al., 2022].

A generator model for producing synthetic data was proposed for data-free knowledge distillation in regression tasks by [Kang and Kang, 2021], following similar methods for classification tasks [Micaelli and Storkey, 2019]. In this method, a generator model $G$ parameterized by $\theta_G$ maps Gaussian noise $z$ to synthetic samples $\hat{x} = G(z;\theta_G)$ and is trained to output samples that result in a large difference between the student and teacher predictions. The generator is trained adversarially against the student model during the distillation process by minimizing the generator loss in equation 2.

$$\mathcal{L}_G(\theta_G) = -\frac{1}{n}\sum_{i=1}^{n}\big(T(G(z_i;\theta_G)) - S(G(z_i;\theta_G);\theta_S)\big)^2, \qquad z_i \sim \mathcal{N}(0, I) \tag{2}$$

The student is trained with the student loss to minimize the difference between the teacher's predictions and its own. The two opposing objectives are optimized alternately in an adversarial manner, and as training continues the student learns to match the predictions of the teacher model. This process is illustrated in Figure 2.

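In practice, regularization terms may be added to the generator loss to prevent complete deviation from the underlying data distribution, for example by adding the squared $\ell_2$-norm of the generated samples $\hat{x}_i = G(z_i;\theta_G)$ and of the student predictions $S(\hat{x}_i;\theta_S)$, weighted by coefficients $\lambda_1$ and $\lambda_2$, yielding:

$$\mathcal{L}_G(\theta_G) = -\frac{1}{n}\sum_{i=1}^{n}\big(T(\hat{x}_i) - S(\hat{x}_i;\theta_S)\big)^2 + \frac{\lambda_1}{n}\sum_{i=1}^{n}\|\hat{x}_i\|_2^2 + \frac{\lambda_2}{n}\sum_{i=1}^{n}S(\hat{x}_i;\theta_S)^2 \tag{3}$$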
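For concreteness, the value of the regularized generator loss in equation 3 can be computed as below from a batch of generated samples and the corresponding teacher and student predictions. This is only a sketch: in practice the batch comes from $G(z;\theta_G)$ and the loss is differentiated with respect to $\theta_G$ by an automatic differentiation framework, which this NumPy snippet does not do. The names `generator_loss`, `lam1` and `lam2` (standing for $\lambda_1$ and $\lambda_2$) are hypothetical.

```python
import numpy as np


def generator_loss(x_gen, teacher_pred, student_pred, lam1, lam2):
    """Value of the regularized generator loss (equation 3); the generator minimizes it.

    x_gen: generated batch of shape (n, ...); teacher_pred, student_pred: shape (n,).
    """
    n = x_gen.shape[0]
    student_loss = np.mean((teacher_pred - student_pred) ** 2)  # equation 1
    input_penalty = np.sum(x_gen.reshape(n, -1) ** 2) / n       # mean squared L2 norm of samples
    output_penalty = np.mean(student_pred ** 2)                  # mean squared student prediction
    return -student_loss + lam1 * input_penalty + lam2 * output_penalty


value = generator_loss(
    np.ones((2, 3)), np.array([1.0, 2.0]), np.array([0.0, 0.0]), lam1=0.1, lam2=0.5
)
```

Here the disagreement term is 2.5 and the input penalty is 3, so the loss evaluates to about -2.2.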

3.2.3 Direct optimization from random samples

The generator model approach attempts to train the generative model to implicitly approximate the inverse function of the student loss, in the sense that the generative model predicts $\hat{x}$ given the objective of a high student loss. It is not immediately clear whether the generative model can learn this inverse function easily, because generative adversarial models have been found empirically to be difficult to train well, for many reasons [Arjovsky and Bottou, 2017, Lucic et al., 2018, Salimans et al., 2016, Saxena and Cao, 2021]. Many design choices, such as the number of training steps of the generator model relative to those of the student model and the generator architecture, have large impacts on training stability and model performance and are difficult or tedious to tune. Generative models also often suffer from problems such as mode collapse and vanishing gradients, which make the generated synthetic data unsuitable for training the student model.

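Since the goal of the generative model approach is to generate samples that maximize the student loss, it is more straightforward to maximize the student loss directly, as formulated below.

$$\hat{x}^{*} = \arg\max_{\hat{x}}\ \mathcal{L}_S(\hat{x};\theta_S)$$

Or, following the convention of minimization:

$$\hat{x}^{*} = \arg\min_{\hat{x}}\ \big[-\mathcal{L}_S(\hat{x};\theta_S)\big] \tag{4}$$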

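In practice, following the generator method, regularization terms may be added as well, as in equation 5.

$$\hat{x}^{*} = \arg\min_{\hat{x}}\Big[-\mathcal{L}_S(\hat{x};\theta_S) + \frac{\lambda_1}{n}\sum_{i=1}^{n}\|\hat{x}_i\|_2^2 + \frac{\lambda_2}{n}\sum_{i=1}^{n}S(\hat{x}_i;\theta_S)^2\Big] \tag{5}$$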

Sections 3.5 and 3.6 later show that the methodology is flexible: any arbitrary loss function may be used, incorporating loss terms designed to capture important properties of the data.

This minimization can be done through various optimization algorithms. If both the student and teacher models are differentiable, gradient descent can be used. Black box metaheuristic optimization methods such as genetic algorithms and simulated annealing may also be used, especially if the teacher model gradients are unavailable. The method is illustrated in Figure 3.
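To make the gradient-based route concrete, the sketch below generates synthetic data by directly optimizing equation 4 for one-hidden-layer tanh networks of the kind used in Section 3.4, with input gradients derived by hand. It is an illustrative assumption rather than the authors' implementation: it takes plain gradient steps instead of RMSProp, uses randomly initialized networks instead of trained ones, and all function names and the step size are hypothetical.

```python
import numpy as np


def init_mlp(rng, d_in, hidden):
    """One-hidden-layer tanh network stored as (W1, b1, w2, b2)."""
    return (rng.normal(0, 1 / np.sqrt(d_in), (d_in, hidden)), np.zeros(hidden),
            rng.normal(0, 1 / np.sqrt(hidden), hidden), 0.0)


def mlp_forward(params, x):
    W1, b1, w2, b2 = params
    h = np.tanh(x @ W1 + b1)
    return h @ w2 + b2, h


def mlp_input_grad(params, h):
    """d(output)/d(input) for each row of the batch, given hidden activations h."""
    W1, _, w2, _ = params
    return ((1 - h ** 2) * w2) @ W1.T


def student_loss_and_grad(teacher, student, x):
    """Student loss of equation 1 and its gradient with respect to the inputs x."""
    t, ht = mlp_forward(teacher, x)
    s, hs = mlp_forward(student, x)
    loss = np.mean((t - s) ** 2)
    grad = (2.0 / x.shape[0]) * (t - s)[:, None] * (
        mlp_input_grad(teacher, ht) - mlp_input_grad(student, hs))
    return loss, grad


def optimize(teacher, student, x0, lr=0.5, steps=2):
    """Gradient descent on -L_S (equation 4), i.e. ascent on the student loss, from x0."""
    x = x0.copy()
    for _ in range(steps):
        _, grad = student_loss_and_grad(teacher, student, x)
        x = x + lr * grad  # same as x - lr * gradient of (-L_S)
    return x


rng = np.random.default_rng(0)
teacher = init_mlp(rng, d_in=8, hidden=500)
student = init_mlp(rng, d_in=8, hidden=25)
x0 = rng.normal(size=(50, 8))           # random samples from N(0, I)
x_hat = optimize(teacher, student, x0)  # synthetic data with a higher student loss
```

Because the inputs start from random samples and only a few small steps are taken, the optimized batch stays close to the sampling distribution while its student loss increases.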

When the student loss is optimized directly with gradient descent, it is possible to derive theoretical guarantees that (a) the generated samples are better than random samples and (b) the generated samples deviate from the underlying distribution by a bounded amount.

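Denoting by $\mathcal{L}_D$ the objective minimized in equation 4 (or 5), and starting from random samples $\hat{x}^{(0)} = x$, the gradient descent update with learning rate $\eta$ is:

$$\hat{x}^{(k+1)} = \hat{x}^{(k)} - \eta\,\nabla_{\hat{x}}\mathcal{L}_D\big(\hat{x}^{(k)}\big), \qquad k = 0, 1, \dots, K-1 \tag{6}$$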

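Assuming the neural networks are locally smooth (Lipschitz continuous), for a sufficiently small learning rate $\eta$ each update $\hat{x}^{(k+1)}$ always improves upon $\hat{x}^{(k)}$ in terms of the optimization objective (unless a stationary point has been reached), fulfilling guarantee (a). For a given learning rate $\eta$ and number of gradient descent steps $K$, the optimized samples $\hat{x}^{(K)}$ deviate from the random samples $\hat{x}^{(0)} = x$ drawn from the underlying distribution by at most a bound that can be made arbitrarily small through the choice of $\eta$ and $K$, fulfilling guarantee (b). The proof of guarantee (a) is provided in [Boyd and Vandenberghe, 2009], p. 466, and the proof of guarantee (b) in the supplementary materials.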
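One way to see why both guarantees hold is sketched below; it is not the supplementary proof. Let $\mathcal{L}_D$ be the objective minimized in equation 4 or 5 and $\hat{x}^{(k)}$ the iterates of the gradient update. For (b) we add the explicit assumption that $\mathcal{L}_D$ is $G$-Lipschitz, so $\|\nabla_{\hat{x}}\mathcal{L}_D\|_2 \le G$, and apply the triangle inequality to the $K$ updates. For (a) we use the standard descent lemma, which assumes $\nabla_{\hat{x}}\mathcal{L}_D$ is $\beta$-Lipschitz and $\eta \le 1/\beta$.

```latex
\begin{aligned}
\big\|\hat{x}^{(K)} - \hat{x}^{(0)}\big\|_2
  &= \Big\|\sum_{k=0}^{K-1} \eta\,\nabla_{\hat{x}}\mathcal{L}_D\big(\hat{x}^{(k)}\big)\Big\|_2
   \le \sum_{k=0}^{K-1} \eta\,\big\|\nabla_{\hat{x}}\mathcal{L}_D\big(\hat{x}^{(k)}\big)\big\|_2
   \le \eta K G, \\[4pt]
\mathcal{L}_D\big(\hat{x}^{(k+1)}\big)
  &\le \mathcal{L}_D\big(\hat{x}^{(k)}\big)
     - \frac{\eta}{2}\,\big\|\nabla_{\hat{x}}\mathcal{L}_D\big(\hat{x}^{(k)}\big)\big\|_2^2 .
\end{aligned}
```

The first line shows that the deviation from the random starting samples shrinks linearly with the step size and the number of steps; the second shows that each step cannot increase the objective.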

It is not obvious to us that the generator model method can fulfil guarantee (a), because $\hat{x}$ is generated from Gaussian noise $z$ of arbitrary dimension and is not related to random samples in the input space. For guarantee (b), a bound on the deviation of $\hat{x}$ from 0 exists only if a regularization term is applied to $\hat{x}$. The proof of the bound on the magnitude of $\hat{x}$ for the generator method with regularization is provided in the supplementary materials.

3.2.4 Proposed method for knowledge distillation

The proposed data-free knowledge distillation method generates training data through direct optimization of the student loss with gradient descent. In the synthetic data generation step, assuming the inputs are standardized, a batch of random samples $x$ is drawn from a Gaussian distribution $\mathcal{N}(0, I)$. Gradient descent is used to perturb these random samples in the direction that increases their student loss, obtaining $\hat{x}$. In the student training step, the student weights $\theta_S$ are updated to minimize the student loss on the synthetic data $\hat{x}$.

Following the method proposed in [Kang and Kang, 2021], the generated data are also supplemented with random samples $x$ drawn from the Gaussian distribution $\mathcal{N}(0, I)$. The relative weights of the generated samples and the random samples are controlled by a factor $\alpha$, which can be a fixed value or follow a schedule based on the training epoch.

$$\mathcal{L} = \alpha\,\mathcal{L}_S(\hat{x};\theta_S) + (1-\alpha)\,\mathcal{L}_S(x;\theta_S) \tag{7}$$

Setting $\alpha$ to 0 is equivalent to the random sampling strategy, and setting $\alpha$ to 1 gives a pure generative sampling strategy. Note that in both edge cases only one set of samples contributes to the training loss, so the number of training samples in each epoch must be doubled for a fair comparison with cases where $\alpha$ is between 0 and 1. In the experiments we investigate both a decreasing $\alpha$ schedule and a pure $\alpha = 1$ training strategy.
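The weighting of equation 7 and a decreasing $\alpha$ schedule can be sketched as below. The linear decay from 1 to 0 is purely a hypothetical example, since the exact schedule is not specified here, and the function names are our own.

```python
import numpy as np


def linear_alpha(epoch, n_epochs, alpha_start=1.0, alpha_end=0.0):
    """Hypothetical linearly decreasing alpha schedule over n_epochs epochs."""
    frac = epoch / max(n_epochs - 1, 1)
    return alpha_start + (alpha_end - alpha_start) * frac


def weighted_student_loss(t_gen, s_gen, t_rand, s_rand, alpha):
    """Equation 7: alpha-weighted student loss on generated and random samples."""
    loss_gen = np.mean((t_gen - s_gen) ** 2)     # student loss on generated samples
    loss_rand = np.mean((t_rand - s_rand) ** 2)  # student loss on random samples
    return alpha * loss_gen + (1.0 - alpha) * loss_rand


alphas = [linear_alpha(e, 5) for e in range(5)]  # 1.0, 0.75, 0.5, 0.25, 0.0
```

A decreasing schedule lets the hard, generated samples dominate early in training and gradually shifts weight to samples drawn directly from the input distribution.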

The proposed method is illustrated in the block diagram in Figure 4.

More formally, the training procedures are described in Algorithms 1 and 2. In the main procedure, Data-free model distillation, where the distillation training takes place, the number of training epochs for the student model is denoted $E$ and the number of batches per epoch is denoted $B$. In the sub-procedure Optimize, where synthetic data are generated by direct optimization via gradient descent, the number of gradient descent steps is denoted $K$.
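A compact sketch of the two procedures, for the one-hidden-layer tanh networks of Section 3.4, is given below, with $E$ epochs, $B$ batches per epoch and $K$ gradient steps in Optimize. It reflects our reading of the method and rests on several assumptions, also noted in the code: plain SGD replaces RMSProp, the teacher is a randomly initialized network rather than a trained one, the same Gaussian batch serves both as the starting point of Optimize and as the random samples $x$ of equation 7, $\alpha$ decays linearly, and all function names and step sizes are hypothetical.

```python
import numpy as np


def init_mlp(rng, d_in, hidden):
    """One-hidden-layer tanh network stored as [W1, b1, w2, b2]."""
    return [rng.normal(0, 1 / np.sqrt(d_in), (d_in, hidden)), np.zeros(hidden),
            rng.normal(0, 1 / np.sqrt(hidden), hidden), 0.0]


def forward(p, x):
    h = np.tanh(x @ p[0] + p[1])
    return h @ p[2] + p[3], h


def input_grad(p, h):
    """d(output)/d(input) for every row of the batch, given hidden activations h."""
    return ((1 - h ** 2) * p[2]) @ p[0].T


def optimize(teacher, student, x, lr, K):
    """Sub-procedure Optimize: K gradient steps that increase the student loss (eq. 4)."""
    n = x.shape[0]
    for _ in range(K):
        t, ht = forward(teacher, x)
        s, hs = forward(student, x)
        x = x + lr * (2.0 / n) * (t - s)[:, None] * (input_grad(teacher, ht) - input_grad(student, hs))
    return x


def student_step(teacher, student, x, weights, lr):
    """One SGD step on sum_i weights_i * (S(x_i) - T(x_i))^2, i.e. equation 7."""
    t, _ = forward(teacher, x)
    s, h = forward(student, x)
    g = 2.0 * weights * (s - t)                    # dLoss/dS for each sample
    dpre = np.outer(g, student[2]) * (1 - h ** 2)  # back-propagate through tanh
    student[0] -= lr * (x.T @ dpre)
    student[1] -= lr * dpre.sum(axis=0)
    student[2] -= lr * (h.T @ g)
    student[3] -= lr * g.sum()


def distill(teacher, student, d_in, rng, E=200, B=10, batch=50, K=2, lr_x=0.5, lr_s=0.1):
    """Main procedure: E epochs of B batches of generated plus random samples."""
    for epoch in range(E):
        alpha = 1.0 - epoch / max(E - 1, 1)        # hypothetical linear decay of alpha
        for _ in range(B):
            x = rng.normal(size=(batch, d_in))     # random samples from N(0, I)
            x_hat = optimize(teacher, student, x, lr_x, K)
            weights = np.concatenate([np.full(batch, alpha / batch),
                                      np.full(batch, (1 - alpha) / batch)])
            student_step(teacher, student, np.vstack([x_hat, x]), weights, lr_s)
    return student


def mse(a, b, x):
    return np.mean((forward(a, x)[0] - forward(b, x)[0]) ** 2)


rng = np.random.default_rng(0)
teacher, student = init_mlp(rng, 5, 500), init_mlp(rng, 5, 25)
x_test = rng.normal(size=(1000, 5))
before = mse(teacher, student, x_test)
distill(teacher, student, d_in=5, rng=rng)
after = mse(teacher, student, x_test)
```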

3.3 Regression datasets for experiments

To facilitate comparison with the previous work by [Kang and Kang, 2021], the experiments were conducted on the same datasets. These 7 datasets are regression problem sets available from the UCI machine learning repository [Dua and Graff, 2017] and the KEEL dataset repository [Alcala-Fdez et al., 2010]. ‘longitude’ was selected as the output variable for Indoorloc. Details of the datasets are provided in Table 1.

| Dataset | Number of features | Number of samples |
|---|---|---|
| Compactiv | 21 | 8192 |
| Cpusmall | 12 | 8192 |
| CTScan | 384 | 53500 |
| Indoorloc | 520 | 19337 |
| Mv | 10 | 40768 |
| Pole | 26 | 14998 |
| Puma32h | 32 | 8192 |

The data are split into training, validation and test sets. The training set consists of 5000 samples for each dataset; 10% of the remaining samples are placed in the validation set, and the other 90% form the test set. The validation set is used to periodically evaluate the training of the student model.

For data processing, all values were standardized to a mean of 0 and a standard deviation of 1. Two processing workflows were tested: in the first, the scaling factors were calculated on the training set only and then applied to the test set; in the second, scaling was applied to the whole dataset before splitting it into training and test data. No significant differences were observed between the two workflows, with the RMSE of the teacher models differing by less than 1.5%. The second workflow was therefore used for the results, for simplicity.
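The splitting and the second (whole-dataset) standardization workflow can be sketched as follows. The random shuffle, rounding the 10% validation share down to an integer, and the function names are our assumptions; with 8192 samples (e.g. Compactiv) this rounding gives 5000 training, 319 validation and 2873 test samples.

```python
import numpy as np


def standardize(reference, *arrays):
    """Scale each array with the mean and std of `reference` (zero mean, unit std)."""
    mean, std = reference.mean(axis=0), reference.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # guard against constant features
    return [(a - mean) / std for a in arrays]


def split_dataset(X, y, n_train=5000, val_frac=0.1, rng=None):
    """Shuffle, take n_train training rows, then val_frac of the rest for validation."""
    rng = np.random.default_rng() if rng is None else rng
    idx = rng.permutation(len(X))
    n_val = int((len(X) - n_train) * val_frac)
    tr, va, te = idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]
    return (X[tr], y[tr]), (X[va], y[va]), (X[te], y[te])


rng = np.random.default_rng(0)
X, y = rng.normal(2.0, 3.0, size=(8192, 12)), rng.normal(size=8192)
# Second workflow: standardize the whole dataset first, then split.
X_std, y_std = standardize(X, X)[0], standardize(y, y)[0]
train, val, test = split_dataset(X_std, y_std, rng=rng)
```

For the first workflow, one would instead split first and call `standardize(train_X, train_X, val_X, test_X)` so that only training statistics are used.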

3.4 Experiment setup for regression datasets

To facilitate comparison, we used the same experiment setup for the neural networks as was used in [Kang and Kang, 2021]. The teacher model is a fully connected feed-forward network containing 1 hidden layer of 500 units with the Tanh activation function. The student model is also a fully connected feed-forward network containing 1 hidden layer of either 25 or 50 units with the Tanh activation function.

The teacher model is trained with the training data, while the student models are trained without access to any real data from the training set. The RMSProp optimizer is used for gradient descent, with a learning rate of and weight decay regularization of . The batch size is set to 50, and the number of batches in each epoch, $B$, is set to 10. and are selected to be . The number of epochs $E$ is set to 2000. The model that performed best on the validation set was used for evaluation on the test set. For the direct optimization method to generate synthetic data, an RMSProp optimizer with a learning rate of and 2 epochs were used; how these two hyperparameters were selected is described in Section 4.1.

3.5 Experiments on MNIST dataset

To further test the applicability of our method on different types of inputs, and on deeper and more complex neural network architectures, we designed an experiment for data-free knowledge distillation for regression on the MNIST handwritten digits dataset.

The MNIST dataset was originally intended for classification. Following the method presented in [Wang et al., 2020], we adapt it to regression by training the neural network to predict a continuous number representing the value of the digit label of the input image. Model performance is measured by the mean absolute error (MAE) between the predicted value and the actual value of the digit. For example, for perfect performance the model should predict a value of 3.0 for an image of the handwritten digit 3; a prediction of 2.9 results in an absolute error of 0.1.

The input image in MNIST is a single-channel image of 28 by 28 pixels, each pixel taking a value between 0 and 1. The mean and standard deviation of each pixel position are calculated over the entire dataset and used to generate random datapoints from a normal distribution clipped between 0 and 1. This is done to match the distribution of the real data as closely as possible.

As proposed by [Wang et al., 2020], we used a multi-layer convolutional neural network with the architecture specified in Table 2. The teacher and student networks follow the same architecture, except that the base number of filters $f$ of the convolutional layers is higher in the teacher network than in the student network: $f$ is chosen to be 10 for the teacher network and 5 for the student network. Following [Wang et al., 2020], the log hyperbolic cosine (Log-Cosh) loss was used instead of the mean squared error as the loss function to improve training.
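The Log-Cosh loss and the MAE metric used in this experiment can be written as below. Log-Cosh behaves like half the squared error for small residuals and like the absolute error (minus log 2) for large ones; the rewritten form avoids overflow of `cosh` for large residuals. The function names are hypothetical, and the last line reproduces the 2.9 versus 3 example above.

```python
import numpy as np


def log_cosh(r):
    """Numerically stable log(cosh(r)) = |r| + log(1 + exp(-2|r|)) - log(2)."""
    a = np.abs(r)
    return a + np.log1p(np.exp(-2.0 * a)) - np.log(2.0)


def log_cosh_loss(pred, target):
    """Mean Log-Cosh loss over a batch."""
    return np.mean(log_cosh(pred - target))


def mae(pred, target):
    """Mean absolute error over a batch."""
    return np.mean(np.abs(pred - target))


error = mae(np.array([2.9]), np.array([3.0]))  # absolute error of about 0.1
```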

| Name | Filters/units | Activation function |
|---|---|---|
| Conv2D-1 | 3 x 3 x f | ReLU |
| Conv2D-2 | 3 x 3 x 2f | softplus |
| Maxpool2D-1 | 2 x 2 | |
| Conv2D-3 | 3 x 3 x 4f | softplus |
| Maxpool2D-2 | 2 x 2 | |
| Flatten | | |
| Fully connected-1 | 500 | softplus |
| Dropout-1 (0.5) | | |
| Fully connected-2 | 100 | softplus |
| Dropout-2 (0.25) | | |
| Fully connected-3 | 20 | softplus |
| Fully connected-4 | 1 | softplus |

Because of the different nature of the inputs, which are images rather than the standardized tabular data of the regression datasets, and of the outputs, which are whole numbers, we designed a different loss function for generating synthetic data. This loss function differs from equations 3 and 5 by replacing the mean squared error loss with the Log-Cosh loss and by changing the regularization terms to better capture the distribution of real data. Firstly, instead of penalizing the squared $\ell_2$ norm of $\hat{x}$, we penalize the $\ell_1$ norm of $\hat{x}$, because handwritten digit images tend to be sparse. Secondly, instead of penalizing the student prediction on $\hat{x}$, we randomly sample a whole number from 0 to 9 and penalize the distance of the teacher's prediction from this random whole number. The purpose of this penalty is to make the synthetically generated samples match the actual data distribution more closely, since real data should generally not be predicted too far from a whole number by the teacher model for this task. This strategy may also be helpful for other regression tasks where the output lies in a fixed set of discrete values.

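$$\hat{x}^{*} = \arg\min_{\hat{x}}\Big[-\frac{1}{n}\sum_{i=1}^{n}\log\cosh\big(T(\hat{x}_i) - S(\hat{x}_i;\theta_S)\big) + \frac{\lambda_1}{n}\sum_{i=1}^{n}\|\hat{x}_i\|_1 + \frac{\lambda_2}{n}\sum_{i=1}^{n}\big(T(\hat{x}_i) - y_i\big)^2\Big], \qquad y_i \in \{0, 1, \dots, 9\} \text{ sampled at random} \tag{8}$$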
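The value of this objective for a batch can be sketched as follows, reusing a stable Log-Cosh. The squared distance in the whole-number penalty, the per-sample draw of the random targets, and the names `mnist_generation_objective`, `lam1` and `lam2` (standing for $\lambda_1$ and $\lambda_2$) are our assumptions; in practice the gradient with respect to the images would come from automatic differentiation.

```python
import numpy as np


def log_cosh(r):
    """Numerically stable log(cosh(r))."""
    a = np.abs(r)
    return a + np.log1p(np.exp(-2.0 * a)) - np.log(2.0)


def mnist_generation_objective(x_gen, teacher_pred, student_pred, y_rand, lam1, lam2):
    """Objective minimized over synthetic images x_gen of shape (n, 28, 28)."""
    n = x_gen.shape[0]
    disagreement = np.mean(log_cosh(teacher_pred - student_pred))  # Log-Cosh student loss
    sparsity = np.sum(np.abs(x_gen)) / n                            # mean L1 norm of images
    target_penalty = np.mean((teacher_pred - y_rand) ** 2)         # assumed squared distance
    return -disagreement + lam1 * sparsity + lam2 * target_penalty


rng = np.random.default_rng(0)
y_rand = rng.integers(0, 10, size=2)  # random whole numbers from 0 to 9
value = mnist_generation_objective(np.zeros((2, 28, 28)), np.array([3.0, 5.0]),
                                   np.array([3.0, 5.0]), np.array([3.0, 4.0]), 0.01, 0.2)
```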

To train the student model, the RMSProp optimizer was used for gradient descent, with a learning rate of and weight decay regularization of . The batch size is set to 50, and the number of batches in each epoch, $B$, is set to 10. The number of epochs is set to 1000.

For the direct optimization method to generate synthetic data, RMSProp optimizer with a learning rate of , and 20 epochs were used. For the generator network, the number of rounds for training the generator per epoch was also set to 20.

3.6 Case study on protein solubility prediction

A bioinformatics problem, predicting a continuous protein solubility value from the protein's constituent amino acids [Han et al., 2019], was used as a case study to test the effectiveness of data-free knowledge distillation for regression on a real-world scientific problem. Predicting continuous solubility values is useful for in-silico screening and design of proteins for industrial applications [Han et al., 2020].

We also want to test how the method can be used when the gradients of the teacher model are not available. For example, many bioinformatics tools, such as protein solubility predictors, are hosted on servers that allow users to submit proteins and obtain predictions, while neither the model nor the data used to train it is available to the user. Recreating such a model requires data-free knowledge distillation without gradient access to the teacher model. If gradient information of the teacher model is unavailable, the generative network described in Section 3.2.2 cannot be trained directly. For direct optimization, however, metaheuristic optimization methods that do not require gradients can be used instead of gradient descent.

The dataset used contains 3148 proteins from the eSol database [Niwa et al., 2009], with solubility represented as a continuous value between 0 and 1. The input features are the proportions of each of the 20 amino acids within the protein sequence. 2500 proteins are selected for the training set, and the remaining 648 form the test set. The teacher model is a support vector machine, which represents a black-box teacher model with no gradient information, for which only the output prediction value is available.

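As in the MNIST example, we introduce diversity in the values predicted by the teacher model on $\hat{x}$ with a penalty term on their distance from a random value $y$ sampled for every batch.

$$\hat{x}^{*} = \arg\min_{\hat{x}}\Big[-\frac{1}{n}\sum_{i=1}^{n}\big(T(\hat{x}_i) - S(\hat{x}_i;\theta_S)\big)^2 + \frac{\lambda}{n}\sum_{i=1}^{n}\big(T(\hat{x}_i) - y\big)^2\Big] \tag{9}$$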

The student model is made up of a fully connected Gaussian kernel radial basis function layer with output size of 100, followed by a fully connected linear layer that outputs the prediction. For training the student models in both baseline and direct optimization method, RMSProp optimizer is used for gradient descent, with a learning rate of and weight decay regularization of . Batch size is set to be 50 with decreasing schedule.

Random sampling was used to train the baseline model and to provide initial points for the direct optimization method. The mean and standard deviation of each amino acid feature are calculated from the training dataset and used to generate random datapoints from a normal distribution clipped between 0 and 1. The feature values are then normalized so that they sum to 1, because the features, being the proportions of each of the 20 amino acids in the protein sequence, must sum to 1. For the direct optimization method, the differential evolution algorithm [Storn and Price, 1997] with 25 iterations of the best2bin strategy was used, with initial points generated by the random sampling just described.
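The random sampling of amino acid compositions described above (per-feature Gaussian, clipping to [0, 1], renormalization to sum to 1) can be sketched as follows. The function name and the Dirichlet-distributed stand-in for the training features are hypothetical, and the fallback to a uniform composition for a row clipped to all zeros is our own guard; the differential evolution step is not shown.

```python
import numpy as np


def sample_compositions(train_X, n_samples, rng):
    """Random amino acid compositions: every row is non-negative and sums to 1."""
    mean, std = train_X.mean(axis=0), train_X.std(axis=0)
    x = rng.normal(mean, std, size=(n_samples, train_X.shape[1]))
    x = np.clip(x, 0.0, 1.0)
    sums = x.sum(axis=1, keepdims=True)
    uniform = np.full_like(x, 1.0 / x.shape[1])
    # Normalize each row; an all-zero row (very unlikely) falls back to uniform.
    return np.where(sums > 0, x / np.where(sums > 0, sums, 1.0), uniform)


rng = np.random.default_rng(0)
train_X = rng.dirichlet(np.ones(20), size=2500)  # stand-in for the 20 composition features
samples = sample_compositions(train_X, 50, rng)
```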

4 Results

4.1 Properties of synthetic data generated

We first investigate the properties of the synthetic data generated by various methods, namely the student loss value of the synthetic data, and the distribution of the synthetic data.

4.1.1 Student loss value of synthetic data

Intuitively, the goal of the synthetic data generation process is to generate data that gives large differences in student and teacher prediction (i.e. student loss in equation 1) in the hope that by learning to correct these large mistakes, the student model is able to learn faster and better mimic the outputs of the teacher model.

To verify how well the various methods achieve this goal, we compare the student loss values of synthetic data generated by the various methods at different stages of training a student model with random samples: when the student is first randomly initialized (epoch 0), during the middle stage of training (epochs 50 and 100), and when the student model has converged (epoch 500). The results shown in Figure 5 are for the Indoorloc dataset.

As expected, it can be observed that the synthetic data generated by the generator method and the direct optimization method have higher student loss than random Gaussian samples at all stages of training. Compared to the direct optimization method, the generator method tends to generate data with smaller loss at the early stages of training, and larger loss at later stages of training.

Direct optimization with metaheuristic algorithms, in this case differential evolution, is also capable of generating synthetic data with high loss. However, metaheuristic algorithms run much more slowly than gradient descent and are not practical unless gradient information is unavailable.

4.1.2 Distribution of synthetic data

Synthetic data should overlap reasonably with the underlying distribution, since generated out-of-distribution data may be unhelpful or even detrimental to model performance on test data. Ideally, the synthetic datapoints should also be well spread out rather than clustered closely together, to give better coverage of the data distribution.

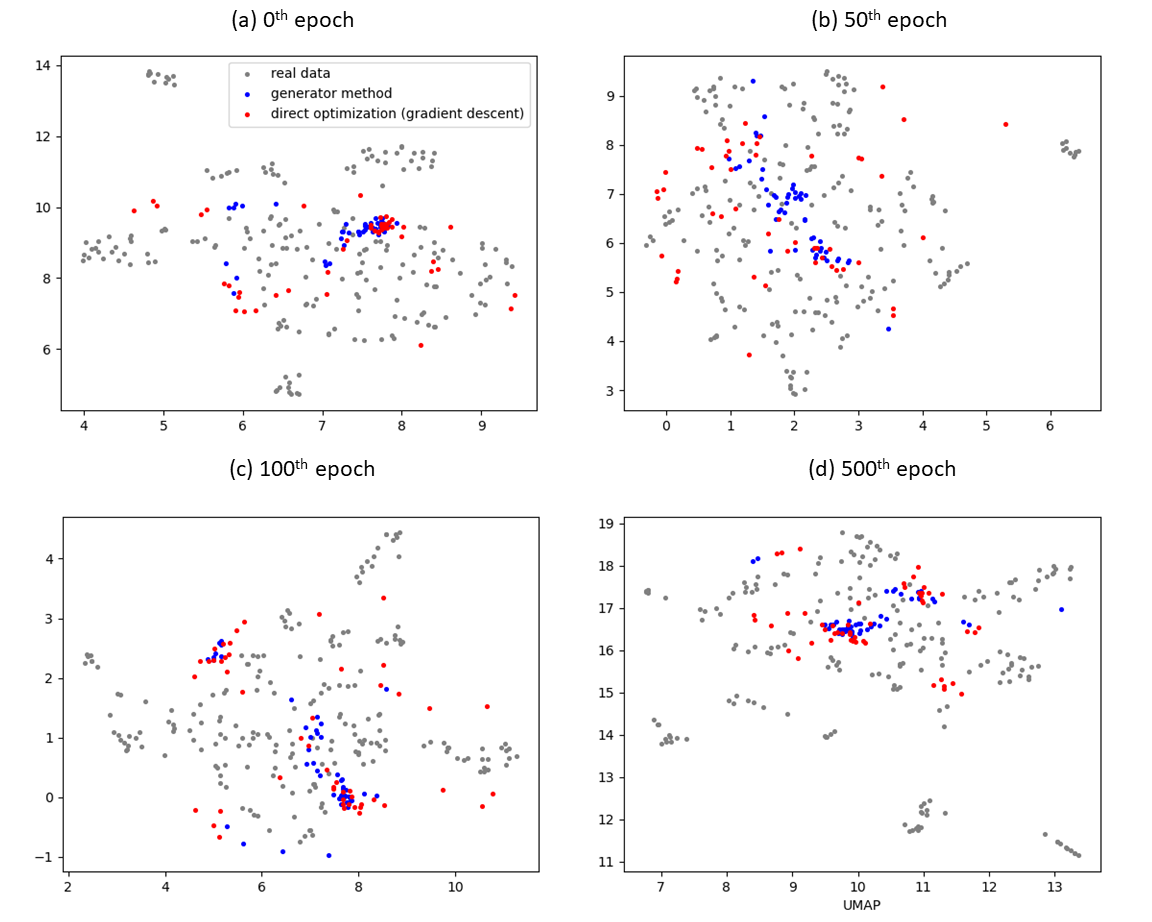

To verify how well the generator and direct optimization methods achieve this goal, we visualize the distribution of synthetic datapoints generated by the generator method and the direct optimization (gradient descent) method at different stages of training using 2D UMAP (Uniform Manifold Approximation and Projection) plots, shown in Figure 6.

We observe that the synthetic data generated by the generator approach tend to converge to one or two tight clusters, leaving the rest of the input space unexplored. Although the direct optimization approach also concentrates some datapoints in a few clusters, the remaining datapoints are much better spread out in the input space while still remaining similar to the real data. This suggests that the greater diversity of synthetic data generated with direct optimization should be helpful for training the student model.
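As a rough numeric complement to the UMAP plots, the spread of a synthetic batch can be summarized by its mean pairwise Euclidean distance, where tightly clustered samples give a small value. This is a minimal sketch and not part of the analysis reported here; the function name and the toy point sets are hypothetical.

```python
import itertools
import math


def mean_pairwise_distance(points):
    """Average Euclidean distance over all distinct pairs of points."""
    pairs = list(itertools.combinations(points, 2))
    if not pairs:
        return 0.0
    return sum(math.dist(p, q) for p, q in pairs) / len(pairs)


# Hypothetical toy batches: one tight cluster versus points spread apart.
clustered = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1)]
spread = [(0.0, 0.0), (3.0, 0.0), (0.0, 3.0)]
print(mean_pairwise_distance(clustered), mean_pairwise_distance(spread))
```

A single number like this hides where the points lie relative to the real data, so it can only complement, not replace, a visual check against real datapoints.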

We also observe that at the later stage of training, many more datapoints generated by the generator method cluster in regions containing no real datapoints than datapoints generated by the direct optimization method. This may explain the larger student loss of the generator method compared with the direct optimization method at the later stage of training. It also suggests that the decreasing schedule for the sample weight parameter α, which controls how much the generated data influence the training loss relative to random samples, likely plays a much more important role for the generator method: at the later stage of training, synthetic data generated by the generator method are more likely to deviate from the underlying distribution and lead to negative learning, which necessitates a smaller weight α.
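The role of α can be made concrete with a small sketch. It assumes (our assumption, not a detail stated in this section) that the training loss is a convex combination of the student loss on synthetic data and on random samples, and that α decays linearly over epochs; `linear_alpha`, `mixed_loss` and the default start and end values are hypothetical.

```python
def linear_alpha(epoch, num_epochs, alpha_start=1.0, alpha_end=0.0):
    """Hypothetical linearly decreasing sample weight alpha for a given epoch."""
    if num_epochs <= 1:
        return alpha_end
    # Fraction of training completed, clamped to [0, 1].
    frac = min(max(epoch / (num_epochs - 1), 0.0), 1.0)
    return alpha_start + (alpha_end - alpha_start) * frac


def mixed_loss(loss_synthetic, loss_random, alpha):
    """Weight the synthetic-data loss by alpha and the random-sample loss by 1 - alpha.

    alpha = 1 trains on the synthetic data only.
    """
    return alpha * loss_synthetic + (1.0 - alpha) * loss_random


for epoch in (0, 50, 100, 499):
    a = linear_alpha(epoch, num_epochs=500)
    print(epoch, round(a, 3), round(mixed_loss(2.0, 0.5, a), 3))
```

Under this assumed form, a decreasing α shifts the emphasis from synthetic data early in training toward random samples later, which is exactly when the generator's samples are observed to drift away from the real data.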

We found experimentally that directly optimizing for 2 steps with a step size of leads to synthetic data that do not deviate much from the underlying distribution while still providing a substantially higher student loss than random samples. Hence, these two hyperparameter values were selected for the direct optimization method.
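The fixed-step direct optimization can be sketched as follows. This is a minimal, self-contained illustration under stated assumptions, not the authors' implementation: the toy `teacher` and `student` functions, their hand-written gradients and `step_size=0.01` are hypothetical placeholders (real models would be neural networks differentiated automatically), while `steps=2` follows the setting described above. Each random Gaussian sample takes two gradient-ascent steps on the squared student-teacher difference, i.e. gradient descent on its negative.

```python
import math
import random


# Hypothetical toy teacher and student on 2-D inputs; stand-ins for real networks.
def teacher(x):
    return math.sin(x[0]) + math.cos(x[1])


def student(x):
    return 0.5 * x[0]


def student_loss(x):
    """Squared difference between student and teacher predictions."""
    return (student(x) - teacher(x)) ** 2


def grad_student_loss(x):
    """Analytic gradient of (s - t)^2, i.e. 2 (s - t) (grad s - grad t)."""
    diff = student(x) - teacher(x)
    grad_s = (0.5, 0.0)
    grad_t = (math.cos(x[0]), -math.sin(x[1]))
    return [2.0 * diff * (gs - gt) for gs, gt in zip(grad_s, grad_t)]


def direct_optimize(x0, steps=2, step_size=0.01):
    """Take a fixed number of gradient-ascent steps on the student loss."""
    x = list(x0)
    for _ in range(steps):
        g = grad_student_loss(x)
        x = [xi + step_size * gi for xi, gi in zip(x, g)]
    return x


rng = random.Random(0)
random_batch = [[rng.gauss(0.0, 1.0) for _ in range(2)] for _ in range(100)]
synthetic_batch = [direct_optimize(x) for x in random_batch]

mean_random = sum(map(student_loss, random_batch)) / len(random_batch)
mean_synthetic = sum(map(student_loss, synthetic_batch)) / len(synthetic_batch)
print(f"mean student loss: random {mean_random:.4f}, optimized {mean_synthetic:.4f}")
```

Because both the number of steps and the step size are fixed, each sample can move only a bounded distance from its random starting point, which is the property formalized in the Supplementary Materials.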

4.2 Comparison of different methods for data-free distillation on regression datasets

Table 3 and Table 4 show the comparison of root mean squared error (RMSE) for 5 methods of data-free distillation using student models with 25 and 50 hidden units, respectively. For the generator method and the direct optimization method, both a decreasing schedule for α and a fixed value of α = 1 are tested. A value of α = 1 means that training uses only the generated synthetic data, without any randomly sampled datapoints.

Comparing the results for student models with 25 and 50 hidden units, the RMSE is generally lower with the larger student model owing to its greater representational power. For most of the datasets tested, the direct optimization method achieves the lowest RMSE and most closely matches the performance of the teacher model. Compared with random sampling, direct optimization with decreasing α achieves lower RMSE on 6 out of 7 datasets for the 25-hidden-unit student (and on all 7 for the 50-hidden-unit student). Compared with the generator method with decreasing α, direct optimization with decreasing α achieves lower RMSE on 5 out of 7 datasets.

Table 3: RMSE of data-free distillation methods for a student model with 25 hidden units.

| Dataset | Teacher Model | Random Sampling | Generator; decreasing α | Generator; α = 1 | Direct optimizer; decreasing α | Direct optimizer; α = 1 |
|---|---|---|---|---|---|---|
| Compactv | 0.1441 ± 0.0039 | 0.1588 ± 0.0050 | 0.1606 ± 0.0061 | 0.1693 ± 0.0069 | 0.1562 ± 0.0043 | 0.1599 ± 0.0067 |
| Cpusmall | 0.1672 ± 0.0031 | 0.1840 ± 0.0065 | 0.1875 ± 0.0070 | 0.1918 ± 0.0101 | 0.1817 ± 0.0042 | 0.1822 ± 0.0048 |
| CTScan | 0.1058 ± 0.0060 | 0.2248 ± 0.0170 | 0.1601 ± 0.0044 | 0.2091 ± 0.0090 | 0.1649 ± 0.0058 | 0.1593 ± 0.0054 |
| Indoorloc | 0.0847 ± 0.0018 | 0.105 ± 0.0051 | 0.1034 ± 0.0034 | 0.1629 ± 0.0134 | 0.0944 ± 0.0015 | 0.0957 ± 0.0035 |
| Mv | 0.0236 ± 0.0022 | 0.0250 ± 0.0019 | 0.0255 ± 0.0016 | 0.0428 ± 0.0045 | 0.0252 ± 0.0016 | 0.0284 ± 0.0017 |
| Pole | 0.1549 ± 0.0064 | 0.2893 ± 0.0141 | 0.2748 ± 0.0161 | 0.3484 ± 0.0304 | 0.2836 ± 0.0198 | 0.3523 ± 0.0206 |
| Puma32h | 0.2589 ± 0.0055 | 0.2474 ± 0.0043 | 0.2499 ± 0.0035 | 0.2686 ± 0.0091 | 0.2464 ± 0.0034 | 0.2460 ± 0.0034 |

Table 4: RMSE of data-free distillation methods for a student model with 50 hidden units.

| Dataset | Teacher Model | Random Sampling | Generator; decreasing α | Generator; α = 1 | Direct optimizer; decreasing α | Direct optimizer; α = 1 |
|---|---|---|---|---|---|---|
| Compactv | 0.1450 ± 0.0062 | 0.15534 ± 0.0077 | 0.1551 ± 0.0060 | 0.1837 ± 0.0124 | 0.1514 ± 0.0068 | 0.1531 ± 0.0066 |
| Cpusmall | 0.1663 ± 0.0037 | 0.1760 ± 0.0043 | 0.1744 ± 0.0040 | 0.1842 ± 0.0079 | 0.1737 ± 0.0027 | 0.1737 ± 0.0049 |
| CTScan | 0.1032 ± 0.0048 | 0.1980 ± 0.0111 | 0.1458 ± 0.0058 | 0.2165 ± 0.0092 | 0.1320 ± 0.0047 | 0.1316 ± 0.0050 |
| Indoorloc | 0.0844 ± 0.0039 | 0.0965 ± 0.0043 | 0.1020 ± 0.0035 | 0.1549 ± 0.0076 | 0.0890 ± 0.0021 | 0.0913 ± 0.0027 |
| Mv | 0.0226 ± 0.0027 | 0.0237 ± 0.0023 | 0.0235 ± 0.0019 | 0.0441 ± 0.0059 | 0.0235 ± 0.0021 | 0.0271 ± 0.0022 |
| Pole | 0.1539 ± 0.0055 | 0.2163 ± 0.0143 | 0.1964 ± 0.0074 | 0.2094 ± 0.0059 | 0.2092 ± 0.0114 | 0.2324 ± 0.0165 |
| Puma32h | 0.2625 ± 0.0057 | 0.2521 ± 0.0035 | 0.2554 ± 0.0036 | 0.2639 ± 0.0123 | 0.2518 ± 0.0057 | 0.2495 ± 0.0045 |
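The win counts quoted for the 25-hidden-unit student can be recomputed from the mean RMSE values in Table 3. The short script below does this; it compares means only and ignores the reported ± spreads, so a "win" here is not a statistically tested difference.

```python
# Mean RMSE values copied from Table 3 (student with 25 hidden units).
datasets = ["Compactv", "Cpusmall", "CTScan", "Indoorloc", "Mv", "Pole", "Puma32h"]
random_sampling = [0.1588, 0.1840, 0.2248, 0.105, 0.0250, 0.2893, 0.2474]
generator_decreasing = [0.1606, 0.1875, 0.1601, 0.1034, 0.0255, 0.2748, 0.2499]
direct_decreasing = [0.1562, 0.1817, 0.1649, 0.0944, 0.0252, 0.2836, 0.2464]
direct_alpha_one = [0.1599, 0.1822, 0.1593, 0.0957, 0.0284, 0.3523, 0.2460]


def count_wins(candidate, baseline):
    """Number of datasets where the candidate has strictly lower mean RMSE."""
    return sum(c < b for c, b in zip(candidate, baseline))


print("direct (decreasing) vs random:", count_wins(direct_decreasing, random_sampling))
print("direct (decreasing) vs generator (decreasing):",
      count_wins(direct_decreasing, generator_decreasing))
print("direct (alpha = 1) vs generator (decreasing):",
      count_wins(direct_alpha_one, generator_decreasing))
```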

When α is set to 1, we observe a substantial increase in RMSE for the generator method. However, for the direct optimization method, setting α to 1 generally does not lead to much worse performance. This matches our hypothesis that a decreasing schedule is much more important for the generator method, as the synthetic datapoints it generates tend to deviate more from the underlying distribution at the later stage of training. Compared with the generator method with decreasing α, direct optimization with α = 1 (i.e. training on synthetic data only) achieves lower RMSE on 5 out of 7 datasets.

Figure 7 shows the RMSE on the validation set over the course of training for the student model with 50 hidden units. The direct optimization method shows a faster decrease in RMSE and generally more stable learning behavior than the generator method. (Plot values have been smoothed with a Savitzky–Golay filter with a window size of 15 epochs to reduce noise for better visualization.)
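For readers reproducing such curves, a Savitzky–Golay filter replaces each point by the value, at the window centre, of a low-order polynomial fitted by least squares over the window. A minimal pure-Python sketch for a 15-point window follows; the polynomial order of 2 and leaving the edge points unsmoothed are our assumptions, since only the window size is stated. In practice, `scipy.signal.savgol_filter` provides the same operation with more options.

```python
def savgol_quadratic(values, window=15):
    """Savitzky-Golay smoothing with a quadratic (polyorder 2) least-squares fit.

    Interior points are replaced by the fitted value at the window centre;
    the first and last window // 2 points are returned unchanged.
    """
    if window < 3 or window % 2 == 0:
        raise ValueError("window must be an odd integer >= 3")
    m = window // 2
    # Closed-form convolution weights for quadratic smoothing (they sum to 1).
    norm = (4 * m * m - 1) * (2 * m + 3)
    offsets = range(-m, m + 1)
    weights = [3 * (3 * m * m + 3 * m - 1 - 5 * j * j) / norm for j in offsets]
    smoothed = list(values)
    for i in range(m, len(values) - m):
        smoothed[i] = sum(w * values[i + j] for w, j in zip(weights, offsets))
    return smoothed
```

Because these weights reproduce any quadratic exactly, a smooth trend in the RMSE curve passes through unchanged while epoch-to-epoch oscillation is strongly damped.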

We also examine the student loss on the synthetic data for the generator and direct optimization methods. As seen in Figure 8, the generator method often produces unexpectedly large losses during training that could result in negative learning for the model, whereas the direct optimization method generally produces a stable and consistently decreasing loss.

4.3 Comparison of different methods for data-free distillation on MNIST

We experimented with different settings of , and weights for the various components in the loss function (equation 8) and found that a low value (), high value (set to 1), low value (set to ) and provides good regularization that encourages synthetic data generated to be diverse and resemble real data distribution more closely.

Table 5 below compares the mean absolute error (MAE) and the RMSE achieved by training the student model with synthetic data sampled randomly, generated by the generator method, and generated by the direct optimization method. MAE was used as the evaluation metric following the original study [Wang et al., 2020]. Results are averaged over 5 runs. Note that the best-performing constant model, which always outputs 4.5, would give an MAE of approximately 2.5 on a class-balanced test set.

Table 5: MAE and RMSE of data-free distillation methods on MNIST, averaged over 5 runs.

| Metrics | Teacher Model | Random Sampling | Generator | Direct Optimizer |
|---|---|---|---|---|
| MAE | 0.157 | | | |
| RMSE | 0.165 | | | |
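The constant-prediction baseline quoted above is simple arithmetic on the digit labels 0 to 9. The check below assumes one example per class (any class-balanced test set gives the same averages) and also reports the corresponding RMSE, which the text does not state.

```python
import math

labels = list(range(10))  # one example per digit: a class-balanced test set
constant = 4.5            # mean of the labels, and a median as well

mae = sum(abs(y - constant) for y in labels) / len(labels)
rmse = math.sqrt(sum((y - constant) ** 2 for y in labels) / len(labels))
print(f"MAE = {mae:.3f}, RMSE = {rmse:.3f}")  # MAE = 2.500, RMSE = 2.872
```

Since 4.5 is the mean of the labels (minimizing squared error) and also lies between the two middle labels (minimizing absolute error), no other constant does better on either metric.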

We observe that the generator method performs only slightly better than random prediction, whereas the direct optimization method provides a substantial improvement over the other methods. Samples of the synthetic data generated by each method are shown in Figure 9: the direct optimization method generates synthetic data that look closer to handwritten digits than those of the other methods. Figure 10 shows histograms of the teacher network's predicted values on a batch of 50 synthetic samples. The direct optimization method generates samples with the most diversity while keeping predictions close to integer values. This closer resemblance to the real data distribution is likely why the student model trained on these synthetic data distills more useful knowledge from the teacher model and outperforms the other methods.

This experiment demonstrates that the direct optimization method can be easily and effectively adapted to data-free knowledge distillation for regression on image inputs, with different types of student or generator loss functions, and for multilayer networks with non-MLP architectures. This potentially addresses the limitation raised in [Kang and Kang, 2021] regarding the poor applicability of the generator method to data-free knowledge distillation of multilayer networks for regression.

4.4 Case study of data-free distillation for protein solubility predictions

We experimented with different settings of value and found that a value of 0.05 encourages synthetic data generated to be diverse and provides the best training results.

Table 6 shows the comparison of RMSE for the teacher model, random sampling and the direct optimization method. The performance of the teacher model is comparable with that reported in the original study on regression models for predicting protein solubility [Han et al., 2019]. Results are averaged over 5 runs. Note that for this dataset, a random model that outputs values drawn uniformly from [0, 1] would give an RMSE of approximately 0.43.

Table 6: RMSE on the protein solubility dataset, averaged over 5 runs.

| Teacher Model (SVM) | Random Sampling | Direct optimizer |
|---|---|---|
| 0.250 | | |

We observe that the direct optimization method with differential evolution substantially outperforms random sampling and approaches the RMSE of the teacher model. This case study demonstrates that direct optimization can be easily and effectively applied when gradient information from the teacher model is unavailable, or when the teacher model is not a differentiable neural network at all, such as the support vector machine teacher model here. This is achieved simply by replacing gradient descent with a metaheuristic algorithm for direct optimization. In contrast, a conventional neural network generative model cannot be used for synthetic data generation in this setting, because training the generator relies on the gradients of both the teacher and student models.
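Swapping gradient descent for a metaheuristic can be sketched with a basic DE/rand/1/bin differential evolution loop [Storn and Price, 1997] that only ever calls the teacher as a black box. This is a hypothetical illustration, not the authors' code: the piecewise-constant `teacher`, the linear `student`, the box bounds, the population size and the settings `generations=5`, `f=0.5` and `cr=0.9` are all assumptions. In this sketch, running only a few generations inside fixed bounds stands in for the fixed number of steps used by the gradient-based variant.

```python
import random


def teacher(x):
    # Hypothetical non-differentiable black-box teacher (piecewise constant).
    return 1.0 if x[0] + x[1] > 0.0 else 0.0


def student(x):
    # Hypothetical student: a fixed linear model.
    return 0.25 * x[0] + 0.25 * x[1] + 0.5


def student_loss(x):
    return (student(x) - teacher(x)) ** 2


def differential_evolution(fitness, population, bounds, generations=5,
                           f=0.5, cr=0.9, rng=None):
    """DE/rand/1/bin that maximizes `fitness`, clipping samples to `bounds`."""
    rng = rng or random.Random(0)
    pop = [list(p) for p in population]
    scores = [fitness(p) for p in pop]
    n, d = len(pop), len(bounds)
    for _ in range(generations):
        for i in range(n):
            # Three distinct donors, all different from the target i.
            a, b, c = rng.sample([k for k in range(n) if k != i], 3)
            j_rand = rng.randrange(d)  # guarantees at least one mutated coordinate
            trial = []
            for j in range(d):
                if rng.random() < cr or j == j_rand:
                    v = pop[a][j] + f * (pop[b][j] - pop[c][j])
                else:
                    v = pop[i][j]
                lo, hi = bounds[j]
                trial.append(min(max(v, lo), hi))
            score = fitness(trial)
            if score >= scores[i]:  # greedy selection keeps the higher loss
                pop[i], scores[i] = trial, score
    return pop, scores


rng = random.Random(1)
bounds = [(-2.0, 2.0), (-2.0, 2.0)]
init = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(20)]
synthetic, losses = differential_evolution(student_loss, init, bounds, rng=rng)
print(f"mean loss: initial {sum(map(student_loss, init)) / 20:.4f}, "
      f"optimized {sum(losses) / 20:.4f}")
```

Because selection is greedy, each individual's student loss can only stay the same or increase from one generation to the next, and no gradient of either model is ever needed.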

The limitation, however, is that metaheuristic optimization methods tend to be much slower than gradient-based optimization and thus incur a significant increase in training runtime over the baseline method. This may be improved by using a faster metaheuristic algorithm, or one optimized to run on GPUs, but that is beyond the scope of this paper.

5 Conclusion

In this study, we investigated the behavior of various synthetic data generation methods, including random sampling and an adversarially trained generator. We propose a straightforward synthetic data generation strategy that directly optimizes the difference between the student and teacher model predictions, with the additional flexibility to incorporate arbitrary regularization terms that capture properties of the data. We show that synthetic data generated by an adversarially trained generator tend not to represent the underlying data distribution well, so training must be supplemented with random samples and the loss contributions balanced. Our proposed direct optimization strategy generates synthetic data with higher loss than random samples while deviating less from the underlying distribution than the generator method.

The proposed method allows the student model to learn better and emulate the performance of the teacher model more closely. This is demonstrated in the experiments, where the proposed method achieves lower RMSE than the random-sampling baseline and the generator method on most regression datasets tested. We also demonstrate the applicability and flexibility of the method on image inputs and deeper convolutional networks with the MNIST dataset, as well as for distilling a non-differentiable model in the protein solubility case study. Nevertheless, there may be many other applications of data-free knowledge distillation for regression with different input data types that we have not investigated. Future work could investigate the performance of this and previous methods on data types such as signals and time series, which are relevant for deployment on small mobile or microcontroller devices in industrial monitoring applications [Kumar et al., 2022, Lou et al., 2022]. We used fixed-step gradient descent and differential evolution for the direct optimization of the student loss; future work could also explore how different optimizers and their settings affect performance.

We hope that this study furthers the understanding of data-free distillation for regression and highlights the key role of the synthetic data generation process in allowing the student model to effectively distill the teacher model. All code and data used in this study are available at https://github.com/zhoutianxun/data_free_KD_regression.

References

- [Aggarwal et al., 2021] Aggarwal, A., Mittal, M., and Battineni, G. (2021). Generative adversarial network: An overview of theory and applications. International Journal of Information Management Data Insights, 1:100004.

- [Alcala-Fdez et al., 2010] Alcala-Fdez, J., Fernández, A., Luengo, J., Derrac, J., Garcia, S., Sanchez, L., and Herrera, F. (2010). KEEL data-mining software tool: Data set repository, integration of algorithms and experimental analysis framework. Journal of Multiple-Valued Logic and Soft Computing, 17:255–287.

- [Arjovsky and Bottou, 2017] Arjovsky, M. and Bottou, L. (2017). Towards principled methods for training generative adversarial networks. 5th International Conference on Learning Representations, ICLR 2017 - Conference Track Proceedings.

- [Boyd and Vandenberghe, 2009] Boyd, S. and Vandenberghe, L. (2009). Convex Optimization. Cambridge University Press, 7 edition.

- [Chai et al., 2021] Chai, J., Zeng, H., Li, A., and Ngai, E. W. (2021). Deep learning in computer vision: A critical review of emerging techniques and application scenarios. Machine Learning with Applications, 6:100134.

- [Chawla et al., 2021] Chawla, A., Yin, H., Molchanov, P., and Alvarez, J. (2021). Data-free knowledge distillation for object detection. Proceedings - 2021 IEEE Winter Conference on Applications of Computer Vision, WACV 2021, pages 3288–3297.

- [Chen et al., 2017] Chen, G., Choi, W., Yu, X., Han, T., and Chandraker, M. (2017). Learning efficient object detection models with knowledge distillation. Advances in Neural Information Processing Systems, 30.

- [Chen, 2022] Chen, H. (2022). Knowledge distillation with error-correcting transfer learning for wind power prediction.

- [Chen et al., 2021] Chen, H., Wang, Y., Xu, C., Xu, C., and Tao, D. (2021). Learning student networks via feature embedding. IEEE Transactions on Neural Networks and Learning Systems, 32:25–35.

- [Chen et al., 2019] Chen, H., Wang, Y., Xu, C., Yang, Z., Liu, C., Shi, B., Xu, C., Xu, C., and Tian, Q. (2019). Data-free learning of student networks. Proceedings of the IEEE International Conference on Computer Vision, 2019-October:3513–3521.

- [Cheng et al., 2018] Cheng, Y., Wang, D., Zhou, P., and Zhang, T. (2018). Model compression and acceleration for deep neural networks: The principles, progress, and challenges. IEEE Signal Processing Magazine, 35:126–136.

- [Deng et al., 2020] Deng, B. L., Li, G., Han, S., Shi, L., and Xie, Y. (2020). Model compression and hardware acceleration for neural networks: A comprehensive survey. Proceedings of the IEEE, 108:485–532.

- [Dua and Graff, 2017] Dua, D. and Graff, C. (2017). UCI Machine Learning Repository.

- [Gou et al., 2021] Gou, J., Yu, B., Maybank, S. J., and Tao, D. (2021). Knowledge distillation: A survey. International Journal of Computer Vision, 129:1789–1819.

- [Gui et al., 2023] Gui, J., Sun, Z., Wen, Y., Tao, D., and Ye, J. (2023). A review on generative adversarial networks: Algorithms, theory, and applications. IEEE Transactions on Knowledge and Data Engineering, 35:3313–3332.

- [Guo et al., 2021] Guo, K., Yang, Z., Yu, C. H., and Buehler, M. J. (2021). Artificial intelligence and machine learning in design of mechanical materials. Materials Horizons, 8:1153–1172.

- [Han et al., 2020] Han, X., Ning, W., Ma, X., Wang, X., and Zhou, K. (2020). Improving protein solubility and activity by introducing small peptide tags designed with machine learning models. Metabolic Engineering Communications, 11:e00138.

- [Han et al., 2019] Han, X., Wang, X., and Zhou, K. (2019). Develop machine learning-based regression predictive models for engineering protein solubility. Bioinformatics, 35:4640–4646.

- [Hinton et al., 2015] Hinton, G., Vinyals, O., and Dean, J. (2015). Distilling the knowledge in a neural network.

- [Hu et al., 2020] Hu, H., Xie, L., Hong, R., and Tian, Q. (2020). Creating something from nothing: Unsupervised knowledge distillation for cross-modal hashing. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 3120–3129.

- [Kang and Kang, 2021] Kang, M. and Kang, S. (2021). Data-free knowledge distillation in neural networks for regression. Expert Systems with Applications, 175:114813.

- [Kumar et al., 2022] Kumar, A., Parkash, C., Vashishtha, G., Tang, H., Kundu, P., and Xiang, J. (2022). State-space modeling and novel entropy-based health indicator for dynamic degradation monitoring of rolling element bearing. Reliability Engineering & System Safety, 221:108356.

- [Lecun et al., 2015] LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature, 521:436–444.

- [Liu et al., 2022] Liu, D., Kong, H., Luo, X., Liu, W., and Subramaniam, R. (2022). Bringing AI to edge: From deep learning’s perspective. Neurocomputing, 485:297–320.

- [Lopes et al., 2017] Lopes, R. G., Fenu, S., and Starner, T. (2017). Data-free knowledge distillation for deep neural networks.

- [Lou et al., 2022] Lou, Y., Kumar, A., and Xiang, J. (2022). Machinery fault diagnosis based on domain adaptation to bridge the gap between simulation and measured signals. IEEE Transactions on Instrumentation and Measurement, 71.

- [Lucic et al., 2018] Lucic, M., Kurach, K., Michalski, M., Bousquet, O., and Gelly, S. (2018). Are GANs created equal? A large-scale study. Advances in Neural Information Processing Systems, 31, pages 698–707.

- [Micaelli and Storkey, 2019] Micaelli, P. and Storkey, A. J. (2019). Zero-shot knowledge transfer via adversarial belief matching. Advances in Neural Information Processing Systems, 32.

- [Nayak et al., 2021] Nayak, G. K., Mopuri, K. R., and Chakraborty, A. (2021). Effectiveness of arbitrary transfer sets for data-free knowledge distillation. Proceedings - 2021 IEEE Winter Conference on Applications of Computer Vision, WACV 2021, pages 1429–1437.

- [Niwa et al., 2009] Niwa, T., Ying, B. W., Saito, K., Jin, W., Takada, S., Ueda, T., and Taguchi, H. (2009). Bimodal protein solubility distribution revealed by an aggregation analysis of the entire ensemble of Escherichia coli proteins. Proceedings of the National Academy of Sciences of the United States of America, 106:4201–4206.

- [Otter et al., 2021] Otter, D. W., Medina, J. R., and Kalita, J. K. (2021). A survey of the usages of deep learning for natural language processing. IEEE Transactions on Neural Networks and Learning Systems, 32:604–624.

- [O’Mahony et al., 2020] O’Mahony, N., Campbell, S., Carvalho, A., Harapanahalli, S., Hernandez, G. V., Krpalkova, L., Riordan, D., and Walsh, J. (2020). Deep learning vs. traditional computer vision. Advances in Intelligent Systems and Computing, 943:128–144.

- [Purwins et al., 2019] Purwins, H., Li, B., Virtanen, T., Schlüter, J., Chang, S. Y., and Sainath, T. (2019). Deep learning for audio signal processing. IEEE Journal on Selected Topics in Signal Processing, 13:206–219.

- [Rim et al., 2020] Rim, B., Sung, N. J., Min, S., and Hong, M. (2020). Deep learning in physiological signal data: A survey. Sensors, 20:969.

- [Romero et al., 2014] Romero, A., Ballas, N., Kahou, S. E., Chassang, A., Gatta, C., and Bengio, Y. (2014). FitNets: Hints for thin deep nets. 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings.

- [Salimans et al., 2016] Salimans, T., Goodfellow, I., Zaremba, W., Cheung, V., Radford, A., and Chen, X. (2016). Improved techniques for training GANs. Advances in Neural Information Processing Systems, 29.

- [Saputra et al., 2019] Saputra, M. R. U., Gusmao, P., Almalioglu, Y., Markham, A., and Trigoni, N. (2019). Distilling knowledge from a deep pose regressor network. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 2019-October:263–272.

- [Saxena and Cao, 2021] Saxena, D. and Cao, J. (2021). Generative adversarial networks (GANs). ACM Computing Surveys (CSUR), 54.

- [Schweidtmann et al., 2021] Schweidtmann, A. M., Esche, E., Fischer, A., Kloft, M., Repke, J. U., Sager, S., and Mitsos, A. (2021). Machine learning in chemical engineering: A perspective. Chemie Ingenieur Technik, 93:2029–2039.

- [Storn and Price, 1997] Storn, R. and Price, K. (1997). Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. Journal of Global Optimization, 11:341–359.

- [Takamoto et al., 2020] Takamoto, M., Morishita, Y., and Imaoka, H. (2020). An efficient method of training small models for regression problems with knowledge distillation. Proceedings - 3rd International Conference on Multimedia Information Processing and Retrieval, MIPR 2020, pages 67–72.

- [Tapeh and Naser, 2022] Tapeh, A. T. G. and Naser, M. Z. (2022). Artificial intelligence, machine learning, and deep learning in structural engineering: A scientometrics review of trends and best practices. Archives of Computational Methods in Engineering, 30:115–159.

- [Thai, 2022] Thai, H. T. (2022). Machine learning for structural engineering: A state-of-the-art review. Structures, 38:448–491.

- [Wang et al., 2022] Wang, C. H., Huang, K. Y., Yao, Y., Chen, J. C., Shuai, H. H., and Cheng, W. H. (2022). Lightweight deep learning: An overview. IEEE Consumer Electronics Magazine.

- [Wang and Yoon, 2022] Wang, L. and Yoon, K. J. (2022). Knowledge distillation and student-teacher learning for visual intelligence: A review and new outlooks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44:3048–3068.

- [Wang et al., 2020] Wang, Z., Wu, S., Liu, C., Wu, S., and Xiao, K. (2020). The regression of MNIST dataset based on convolutional neural network. Advances in Intelligent Systems and Computing, 921:59–68.

- [Xu et al., 2022] Xu, Q., Chen, Z., Ragab, M., Wang, C., Wu, M., and Li, X. (2022). Contrastive adversarial knowledge distillation for deep model compression in time-series regression tasks. Neurocomputing, 485:242–251.

- [Yang et al., 2022] Yang, Y., Sun, X., Diao, W., Li, H., Wu, Y., Li, X., and Fu, K. (2022). Adaptive knowledge distillation for lightweight remote sensing object detectors optimizing. IEEE Transactions on Geoscience and Remote Sensing, 60.

- [Ye et al., 2020] Ye, J., Ji, Y., Wang, X., Gao, X., and Song, M. (2020). Data-free knowledge amalgamation via group-stack dual-GAN. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 12513–12522.

- [Yim et al., 2017] Yim, J., Joo, D., Bae, J., and Kim, J. (2017). A gift from knowledge distillation: Fast optimization, network minimization and transfer learning. Proceedings - 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, 2017-January:7130–7138.

- [Yoo et al., 2019] Yoo, J., Cho, M., Kim, T., and Kang, U. (2019). Knowledge extraction with no observable data. Advances in Neural Information Processing Systems, 32.

Supplementary Materials

Guarantee (b) for direct optimization method with fixed steps:

Assuming a -dimensional -Lipschitz continuous loss function, direct optimization of with gradient descent in fixed number of steps on the loss function results in that is bounded in distance away from .

Proof:

Given a -dimensional -Lipschitz function, by definition, for all real and :

Let and taking the limits of :

For gradient descent, the update formula is:

The squared distance moved in the first step of gradient descent is bounded:

Therefore, the distance moved in steps of gradient descent where on a -dimensional -Lipschitz function is bounded by:
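The steps of this argument can be written out as the following sketch, under assumed notation: ℓ is the student loss as a function of the input x ∈ ℝ^d, L its Lipschitz constant, η the fixed step size, k the number of steps and x_0 the random starting sample. The per-coordinate route taken here yields the factor √d; bounding the Euclidean gradient norm directly would give the tighter ‖∇ℓ‖ ≤ L.

```latex
\begin{align*}
&|\ell(\mathbf{x}) - \ell(\mathbf{y})| \le L\,\|\mathbf{x} - \mathbf{y}\|
  \quad \forall\, \mathbf{x}, \mathbf{y} \in \mathbb{R}^d \\
&\mathbf{y} = \mathbf{x} + h\,\mathbf{e}_i,\; h \to 0:\quad
  \left|\frac{\partial \ell}{\partial x_i}(\mathbf{x})\right| \le L,
  \quad i = 1, \dots, d \\
&\mathbf{x}_{t+1} = \mathbf{x}_t - \eta\, \nabla_{\mathbf{x}}\bigl(-\ell(\mathbf{x}_t)\bigr)
  = \mathbf{x}_t + \eta\, \nabla_{\mathbf{x}} \ell(\mathbf{x}_t) \\
&\|\mathbf{x}_{t+1} - \mathbf{x}_t\|^2
  = \eta^2 \sum_{i=1}^{d} \left(\frac{\partial \ell}{\partial x_i}(\mathbf{x}_t)\right)^2
  \le \eta^2\, d\, L^2 \\
&\|\mathbf{x}_k - \mathbf{x}_0\|
  \le \sum_{t=0}^{k-1} \|\mathbf{x}_{t+1} - \mathbf{x}_t\|
  \le k\,\eta\,\sqrt{d}\,L
\end{align*}
```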

Guarantee (b) for generator method with L2 regularization:

Assuming a -dimensional -Lipschitz continuous loss function, generating synthetic data with a generator network trained with the regularized loss function [equation 3] will result in that is bounded in distance away from 0, i.e. norm of .

Proof: Given a -dimensional -Lipschitz continuous loss function, the gradient at any point is bounded by :

The generator network is trained to map random noise vector to to minimize the loss function with a regularization on , i.e

As we are minimizing the loss function, we only focus on the lower bound on

Then assuming sufficiently small learning rate, the solution will converge upon a point where gradient of the loss function is 0:

Therefore, the bound for the norm of is:
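Under the same assumed notation, and further assuming that equation 3 adds a squared L2 penalty λ‖x̂‖² (λ > 0, a hypothetical symbol here) to the negated student loss, and that the generator G is expressive enough for x̂ = G(z) to reach a stationary point, the bound follows from the first-order condition:

```latex
\begin{align*}
&\|\nabla_{\mathbf{x}} \ell(\mathbf{x})\| \le \sqrt{d}\,L \quad \forall\, \mathbf{x} \\
&\hat{\mathbf{x}} = G(\mathbf{z}), \qquad
  \min_{G}\; -\ell(\hat{\mathbf{x}}) + \lambda \|\hat{\mathbf{x}}\|^2 \\
&\nabla_{\hat{\mathbf{x}}}\bigl(-\ell(\hat{\mathbf{x}}) + \lambda \|\hat{\mathbf{x}}\|^2\bigr)
  = -\nabla_{\mathbf{x}} \ell(\hat{\mathbf{x}}) + 2\lambda\,\hat{\mathbf{x}} = \mathbf{0} \\
&\|\hat{\mathbf{x}}\| = \frac{\|\nabla_{\mathbf{x}} \ell(\hat{\mathbf{x}})\|}{2\lambda}
  \le \frac{\sqrt{d}\,L}{2\lambda}
\end{align*}
```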