Symbolic Music Playing Techniques Generation as a Tagging Problem

Abstract

Music generation has always been a hot topic. When discussing symbolic music, melody or harmonies are usually seen as the only generating targets. But in fact, playing techniques are also quite an important part of the music. In this paper, we discuss the playing techniques generation problem by seeing it as a tagging problem. We propose a model that can use both the current data and external knowledge. Experiments were carried out by applying the proposed model in Chinese bamboo flute music, and results show that our method can make generated music more lively.

1 Introduction

Music generation has always been a hot topic. As early as the classical music period, Mozart used the method of rolling dice to automatically generate music. In recent years, most of the automatic music generation methods are related to deep learning, various kinds of model such as the encoder-decoder framework Yang et al. (2017), generative adversarial networks (GAN) Dong et al. (2017), variational autoencoders (VAE) Hennig et al. (2017), long-short-term memory (LSTM) Hadjeres et al. (2017) and recurrent Boltzmann machines (RBM) Boulanger-Lewandowski et al. (2012) are used widely.

Unfortunately, previous studies about music generation hardly ever took playing techniques into account at the symbolic level. In fact, playing techniques are also quite an important part of the music. For example, In Chinese bamboo flute music, different music styles have different playing techniques, which is helpful to better show the features of different styles. Even in some kinds of music, the playing techniques are more important than the melody. For example, scores of Guqin, can have no stable tonality and no stable duration of the pitch but must have definite playing techniques recorded.

In this paper, we discuss the symbolic music playing techniques generation problem. We solve this problem by seeing it as a tagging problem. There is much discussion about tagging problem in natural language processing. Some sequence tagging models like Conditional Random Fields (CRF) Lafferty et al. (2001), Bidirectional LSTM (BiLSTM) Graves et al. (2013) and BiLSTM with a CRF layer (BiLSTM-CRF) Huang et al. (2015) perform well in many tagging tasks. However, they are only purely data-driven learning. In fact, especially for music, human perception of them depends not only on learning data from the current scene but also on some more general knowledge from the past. For example, when you try to study a new instrument, you can not only benefit from some knowledge from the current instrument which you are learning but also can benefit from some music knowledge which you have learned in the past. Therefore, we propose a model that can use both current data and external knowledge.

Our proposed framework is composed of three parts. The first part is studying the current data. In this part, a general sequence tagging model (like CRF, BiLSTM, BiLSTM-CRF, and so on) is used. The second part is studying external knowledge. In this part, external knowledge is first constructed into logic rules, then a weight matrix that implies logic rules is generated using an algorithm. The third part is to combine the previous two parts through matrix operations. We evaluate our model using a Chinese bamboo flute music dataset, and the results show that our method can make generated music more lively.

To the best of our knowledge, we are the first to explore playing techniques generation at the symbolic level, another contribution is that we propose a playing techniques generation model that can use both current data and external knowledge.

2 Task Description

In this paper, we focus on monophonic music, but it can also be extended to polyphonic music easily. We see the playing techniques generation problem as a tagging problem, which consists of two processes. The first process is training a tagging model from a training dataset, the second process is applying the trained tagging model into a testing dataset to generate playing techniques. The goal of the tagging problem is that, given an observation sequence input, a tagging sequence (a state sequence) output can be predicted. In the playing techniques generation problem, a note sequence represents an observation sequence and a playing technique sequence represents a tagging sequence (see Figure 1). Which is to say, playing techniques can be generated based on note sequences and a trained tagging model.

3 Data Representation

A monophonic melody can be seen as a note sequence. In this paper, each note is composed of the following features:

-

•

Pitch: Chromatic scale is used to measure pitch.

-

•

Duration: We use quarter length (ql) to measure the duration of a note. For example, a whole note is 4ql duration, and an eighth note is ql duration.

For example, a note whose pitch is C1 and duration is 4ql duration can be represented as “C14”. Another example is shown in Figure 1, the note sequence in this figure can be represented as a list of [a12, b12, c24, a12, e12], and the corresponding tagging sequence is [trills, none techniques, fermata, none techniques, mordent].

4 Model

The overview of the proposed model can be seen in Figure 2. Overall, this model is composed of three parts:

-

•

Part 1: Studying current data

Model studies from current data using some general sequence tagging models (like CRF, BiLSTM, BiLSTM-CRF, and so on), then a trained base model can be gotten. Applying this trained model into an observation sequence, a prediction matrix (The number of columns represents the length of the sequence, the number of rows represents the number of the tag) and a prediction sequence can be gotten. Besides, this prediction sequence and this observation sequence can be a part of the input of Part 2.

-

•

Part 2: Studying external knowledge

In this paper, we focus on external knowledge that can be constructed into some logic rules. Using prediction sequence and observation sequence from Part 1, and some logic rules constructed from external knowledge. a weight matrix (has the same number of rows and columns as ) is finally generated (More details are in section 5.1).

-

•

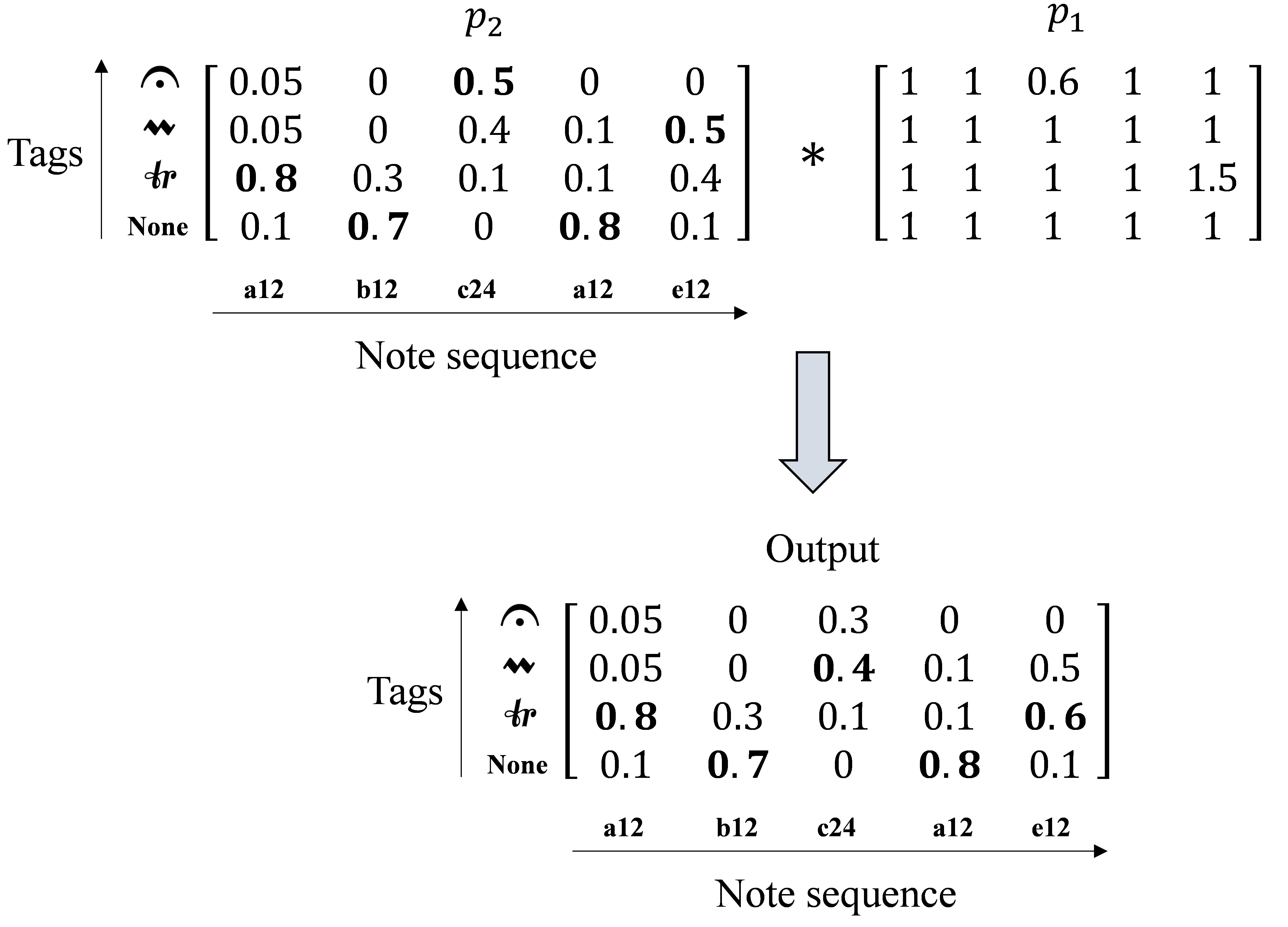

Part 3: Combination

By calculating the Hadamard product of and , the final output can be gotten. (More details are in section 5.2).

4.1 Studying External Knowledge

How to construct logic rules from external knowledge is different in different situations. Many methods have been discussed in discrete mathematics Rosen and Krithivasan (2012). In this paper, we mainly describe how to use logic rules to generate a weight matrix based on state sequence (prediction sequence) and observation sequence.

At first, we should make out what kinds of logic rules are there in this playing techniques generation problem. Let be the observation sequence of length , and be the corresponding state sequence. Then and can be represented as and . Let be a tag set with elements, it can be represented as . Let be a login rule set. There are two kinds of logic rules in :

-

•

Rule 1: Observation sequence constrains state sequence

Let and represent predicates in logic rules. The statement is the value of the propositional function at a observation sequence . The statement is the value of the propositional function at a state and a tag. The corresponding logic rule can be represented as:

(1) The confidence of this logic rule is set as an adjustable parameter. We take a specific logic rule as an example, it is:

(2) In this example, predicate refers to “has a note whose duration is greater than 3”, predicate refers to “The state corresponding with the note is”.

-

•

Rule 2: State sequence constrains state sequence

Let and represent predicates in logic rules. The statement is the value of the propositional function at a state sequence . The statement is the value of the propositional function at a state and a tag. The corresponding logic rule can be represented as:

(3) The confidence of this logic rule is set as an adjustable parameter, too.

Then, a new weight matrix can be generated using Algorithm 1. Before this algorithm, the weight matrix (has the same size as the prediction matrix ) is initialized to a matrix with each element being 1. and is set as parameters to reflect the confidence of logic rules.

| Algorithm 1: Generating a new weight matrix |

| Input: The old weight matrix , |

| The observation sequence , |

| The state sequence , |

| The rule set , |

| Parameters: , Measure confidence |

| 1: for each rule in : |

| 2: if == True: |

| 3: |

| 4: if == True: |

| 5: |

| Output: A new weight matrix , |

4.2 Combination

By calculating the Hadamard product of and , the final output can be gotten. An example is shown in Figure 3. Suppose this instrument has four kinds of tags. In this example, when external knowledge is not used, the prediction sequence is [trills, none techniques, fermata, none techniques, mordent]. When external knowledge is used, the prediction result is [trills, none techniques, mordent, none techniques, trills].

5 Experiments

5.1 Setup

Playing techniques can’t be generated without melodies. Therefore, our playing techniques generation experiment is based on the melodies that have been generated. We first used some music style transfer methods introduced in Zalkow et al. (2016) to generate melodies of a specific style, then used the model proposed in this paper to generate playing techniques. We let humans evaluate the similarity between the generated music and the target music style, to compare the effect of generating only the melody with the effect of generating the melody and playing techniques. We use Chinese bamboo flute music as the experiment subject, and the dataset is from Li (2003) and Yan and Yu (1994). The data we used to train the playing techniques generation model includes 7320 notes in total. The data we used to test includes 4 pieces of melody.

The parameter setting of the proposed model in this paper is as follows. We use BiLSTM as the base model and use the external knowledge summarized in Wang (2014), to construct 6 logic rules. We use a learning rate of 0.001. We set the dimension of the “word” vector to 256, and the hidden layer size to 128. A batch size of 32 is used. We trained the model for 30 epochs.

After generating symbolic music (melodies and playing techniques), we played them in Chinese bamboo flute to get audios, and used these audios to do the evaluation. Our evaluation was carried out with 35 participants. 12 of these participants have the experience of being a Chinese bamboo flute player, or have received formal education about Chinese bamboo flute music, or have work experience in this field. The other participants have other related music backgrounds. The evaluation score is in the scale from 1 to 10, where 1 represents the generated music is completely different from the target music style, 10 represents the generated music is very similar to the target music style.

5.2 Results

The experiment results are shown in Figure 4. We can see that in all examples, the score is higher when the playing techniques generation model is used. This result shows that using our model can make music more lively compared with only generating melodies.

5.3 A Generation Example

An example of generated music is shown in Figure 5. The generated music belongs to the style of the Northern school in Chinese bamboo flute. The generated playing techniques like tonguing and appoggiatura can make music style closer to the style of the Northern school.

6 Conclusion and Future Work

Seeing playing techniques generation as a tagging problem, we have developed a framework that can use both the current data and external knowledge to generate playing techniques. Experiment results have shown that our proposed model can make generated music more lively.

There is still a lot of work to be done in the future, which includes more experiments and more applications. As a general playing techniques generation framework, it can be used not only in more music categories but also in other fields of music technology (e.g., music style transfer and music synthesis).

References

- Boulanger-Lewandowski et al. (2012) Nicolas Boulanger-Lewandowski, Yoshua Bengio, and Pascal Vincent. 2012. Modeling temporal dependencies in high-dimensional sequences: Application to polyphonic music generation and transcription. In Proceedings of the 29th International Coference on International Conference on Machine Learning, ICML’12, page 1881–1888, Madison, WI, USA. Omnipress.

- Dong et al. (2017) Hao-Wen Dong, Wen-Yi Hsiao, Li-Chia Yang, and Yi-Hsuan Yang. 2017. Musegan: Symbolic-domain music generation and accompaniment with multi-track sequential generative adversarial networks. arXiv preprint arXiv:1709.06298.

- Graves et al. (2013) Alex Graves, Navdeep Jaitly, and Abdel-rahman Mohamed. 2013. Hybrid speech recognition with deep bidirectional lstm. In 2013 IEEE workshop on automatic speech recognition and understanding, pages 273–278. IEEE.

- Hadjeres et al. (2017) Gaëtan Hadjeres, François Pachet, and Frank Nielsen. 2017. Deepbach: a steerable model for bach chorales generation. In International Conference on Machine Learning, pages 1362–1371.

- Hennig et al. (2017) Jay A Hennig, Akash Umakantha, and Ryan C Williamson. 2017. A classifying variational autoencoder with application to polyphonic music generation. arXiv preprint arXiv:1711.07050.

- Huang et al. (2015) Zhiheng Huang, Wei Xu, and Kai Yu. 2015. Bidirectional lstm-crf models for sequence tagging. arXiv preprint arXiv:1508.01991.

- Lafferty et al. (2001) John Lafferty, Andrew McCallum, and Fernando CN Pereira. 2001. Conditional random fields: Probabilistic models for segmenting and labeling sequence data.

- Li (2003) Zhen Li. 2003. Anthology of Zhen Li’s Dizi music. People’s Music Publishing House.

- Rosen and Krithivasan (2012) Kenneth H Rosen and Kamala Krithivasan. 2012. Discrete mathematics and its applications: with combinatorics and graph theory. Tata McGraw-Hill Education.

- Wang (2014) He Wang. 2014. Research on Chinese traditional bamboo flute playing techniques. Master’s thesis, Shaanxi Normal University.

- Yan and Yu (1994) Niwen Yan and Yunfa Yu. 1994. Collection of famous Chinese bamboo flute music. Shanghai Music Publishing House.

- Yang et al. (2017) Li-Chia Yang, Szu-Yu Chou, and Yi-Hsuan Yang. 2017. Midinet: A convolutional generative adversarial network for symbolic-domain music generation. arXiv preprint arXiv:1703.10847.

- Zalkow et al. (2016) Frank Zalkow, Stephan Brand, and Bejamin Graf. 2016. Musical style modification as an optimization problem. In Proceedings of the International Computer Music Conference, pages 206–211.