SwinFi: a CSI Compression Method based on Swin Transformer for Wi-Fi Sensing

Abstract

Wi-Fi sensing is a transformative approach that enables a large of applications through CSI analysis. The challenge lies in the high computational and communication costs with the increasing granularity of CSI data. In this letter, we propose SwinFi, a pioneering solution that compresses CSI at the edge into a succinct feature image and reconstructs at the cloud for further processing. SwinFi employs a Swin Transformer-based autoencoder-decoder architecture that ensures SOTA performance in both CSI reconstruction and sensing tasks. We utilize a dataset for PIR task and conduct extensive experiments to evaluate SwinFi. The results show that SwinFi achieves the reconstruction quality with the NMSE of -37.74dB and the classification accuracy of 95.3% at the same time.

Index Terms:

CSI compression, Wi-Fi sensing, Swin Transformer, autoencoder-decoder.I Introduction

Wi-Fi sensing has emerged as a pivotal technology in a variety of applications, ranging from human activity recognition to object sensing and localization. Its widespread adoption is underpinned by the unique advantages it offers over traditional sensing methods, notably its cost-effectiveness, device-free nature, and privacy-preserving characteristics. This burgeoning field leverages the extraction of Channel State Information (CSI) to establish key mappings from channel states to recognition targets, facilitating a multitude of sensing capabilities [1]. The advancement of Multiple-Input Multiple-Output (MIMO) and Orthogonal Frequency-Division Multiplexing (OFDM) technologies endow CSI with higher granularity, providing robust support for Wi-Fi sensing technologies. This enhancement, however, brings a substantial increase in computational resource requirements. While cloud and edge computing capabilities offer viable solutions to alleviate computational burdens, they concurrently impose a considerable strain on communication systems due to the extensive data transmission involved. In response, CSI compression technology stands out for its ability to markedly reduce data processing and transmission requirements, thereby bolstering the efficiency and practicality of Wi-Fi sensing systems.

Recent developments in deep learning contribute to new methods in the field of CSI compression. [2] introduces CsiNet, which applies convolutional neural networks (CNN) for the learning and reconstruction of CSI features. Building on this, further studies incorporated temporal dynamics into CSI processing. The integration of CNN with Long Short-Term Memory (LSTM) networks in [3] enables the extraction of both spatial and temporal features from CSI data, leading to enhancements in reconstruction accuracy, particularly in environments with dynamic network conditions. Additionally, [4] explores the application of Transformer models, utilizing multi-head self-attention (MSA) mechanisms to adeptly handle long-range dependencies in CSI data.

However, the aforementioned works are primarily oriented towards 5G communication, where CSI feedback plays a crucial role in ensuring high-quality precoding and efficient communication transmission, with the emphasis on correct reconstruction. [5] presents a different perspective, asserting that CSI compression for Wi-Fi sensing fundamentally diverges from the approach in 5G communication. Although the tasks in both domains are similar, in Wi-Fi sensing, the compressed CSI data requires not only reconstructability but also discriminative feature to support various sensing applications. In other words, the compressed feature map should preserve essential characteristics for both recognition and sensing tasks.

In this letter, we propose an innovative method for CSI compression of Wi-Fi sensing. Inspired by [5], we focus on both reconstruction accuracy and classification features as key metrics. To capture the spatial and temporal dynamics of CSI, we treat a sequence of CSI matrices over time as a unique form image. Leveraging the principles of Swin Transformer, we design an autoencoder-decoder architecture tailored for Wi-Fi sensing applications. The contributions of this letter are summarized as follows:

-

1.

To reduce the communication and computational costs of Wi-Fi sensing, we propose SwinFi, a novel method that compresses CSI data into a compact feature image at the edge and reconstructs the original CSI data at the cloud for further processing.

-

2.

We design a multitask joint model that, building upon the SwinFi Encoder, integrates a linear classification head to enable the recognition of categorical features within CSI feature images.

-

3.

To evaluate the performance of SwinFi, we produce a dataset for personnel identity recognition (PIR) tasks based on Wi-Fi CSI. We compare SwinFi with several state-of-the-art (SOTA) methods and the results show that SwinFi achieves the best reconstruction quality with the Normalized Mean Squared Error (NMSE) of -37.74dB, while concurrently attaining a classification accuracy of 95.3%.

II Methodologies

We tend to adapt the latest computer vision (CV) methods to CSI processing. Therefore, in this section, we first introduce two SOTA methods based on Transformer. Then we delve into the architecture and details of our proposed SwinFi.

II-A Vision Transformer

Vision Transformer (ViT) is introduced in [6], representing a significant breakthrough in CV. ViT adapts the Transformer architecture, traditionally used in natural language processing (NLP), for image analysis tasks. This adaptation demonstrates remarkable success in CV, including classification, segmentation, and object detection.

The core idea of ViT is to treat an image as a sequence of fixed-size patches, analogous to the words in a text. The patches are then linearly embedded into a sequence of vectors. The model applies a Transformer encoder to these embeddings to capture the complex relationships between the patches. The encoder consists of multiple layers, each containing two main components: MSA and a fully connected feed-forward network. After the encoder, the output is leveraged for various downstream tasks.

II-B Swin Transformer

While ViT treats images as sequences of patches similar to words, this approach leads to exponential increases in computational cost with image size due to global MSA. Furthermore, the patch method may overlook the details within itself, leading to a loss of local structural information.

Based on ViT, Swin Transformer [7] proposes several key innovations that further adapt the Transformer architecture for CV. Swin Transformer incorporates the notion of local windows into ViT architecture, similar in spirit to the receptive fields of convolutional neural networks (CNN). The input image is divided into patches smaller than ViT does. Instead of processing all patches at once, Swin Transformer applies MSA within local windows. Another pivotal innovation is the shift window mechanism, which enables information to flow across the entire image by alternating the window positions across layers. This mechanism effectively achieves a global receptive field while maintaining a linear computational complexity with the image size. The complexity comparison between MSA and window MSA (W-MSA) is as follows:

| (1) | |||

where and are the patch number, is the dimension of patch, and is the window size.

II-C SwinFi

In the context of CSI compression and reconstruction, the data, characterized by its multi-dimensional nature (subcarriers, time, and multiple antennas), presents a formidable challenge. To adapt CV methods for CSI data, we consider each CSI matrix as an ”image” where each pixel represents the amplitude or phase of a subcarrier at a specific time.

Inspired by Swin Transformer, we propose SwinFi as shown in Fig 1, a novel method employing an end-to-end autoencoder-decoder architecture, specifically for CSI compression and PIR tasks. SwinFi is designed to capture the spatial and temporal dynamics of CSI data, enabling efficient and effective compression. The components of SwinFi are as follows:

1. Encoder: Encoder consists of a patch embedding block and an encoder block. The patch embedding block transforms the input into , where is the batch size, is the number of channels, is the number of subcarriers, is the number of time slots, is the number of patches, and is the patch embedding dimension. The patch focuses on the temporal dimension, with each patch being of size . The encoder block comprises several Swin Transformer Block layers and Patch Merge layers, each Swin Transformer Block including a W-MSA, a window position encoding layer, and an MLP layer.

2. Decoder: Decoder mirrors the architecture of Encoder but with Patch Merge layers replaced by Patch Split layers. It decodes the encoded CSI feature image back into the original space. During training, the parameters of Encoder and Decoder are jointly optimized to minimize the NMSELoss between the original CSI data and the reconstructed data.

3. Classifier: Classifier is a straightforward linear structure to identify the categorical features within feature images for PIR tasks. Training of Classifier utilizes the CrossEntropyLoss to update its parameters.

It is noteworthy that, unlike the square shapes commonly utilized in CV, both the patch and window sizes in SwinFi adopt rectangular dimensions, with a patch width of 1, meaning that each time slot occupies a separate patch. Similarly, the windows have a length of 1, as we aim to avoid computing MSA across patches that are neither adjacent in time slots nor subcarriers, a measure grounded in the belief that such quantities lack physical significance. The superiority of this approach will be substantiated in the experimental section.

III Experimental Results

In this section, we first present the CSI datasets made for PIR tasks. Then we introduce the experimental setup and evaluation of SwinFi.

III-A Datasets

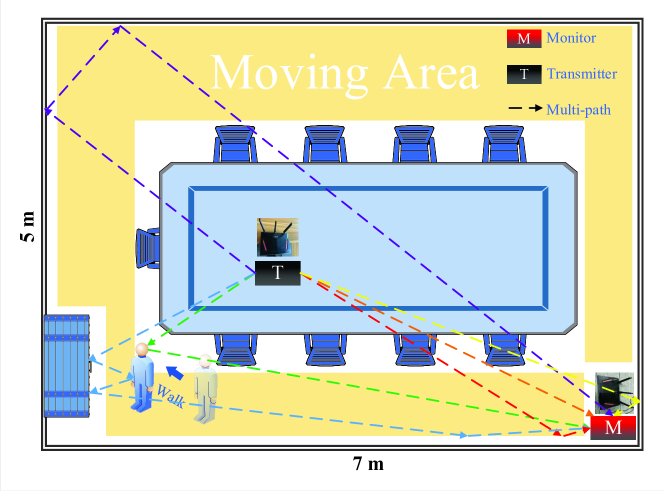

In this letter, we produce a specialized Wi-Fi CSI dataset designed for PIR tasks. The dataset is assembled using two 802.11ac routers equipped with Nexmon tools [8, 9], set up in a meeting room as depicted in Figure 2. We recorded the CSI data of 20 participants, each freely moving within the room. A single-antenna transmitter transmits signals at an 80MHz frequency every 10 milliseconds, while a receiver with four antennas captures the CSI data, which includes gait characteristics crucial for identifying individuals.

The raw communication cost of this setup, without any preprocessing, is calculated to be Bytes/s, equivalent to approximately 6.55Mbps. This corresponds to bytes per measurement, with each complex number (detailing amplitude or phase) stored using two floating points: 4 bytes each for the real and imaginary parts. Based on the aforementioned setup, we conduct experiments that involve collecting five minutes of CSI data for each of the 20 participants, as well as for an empty meeting room, resulting in a dataset that categorizes 21 different classes.

As identified in [10], phase errors in CSI measurements can stem from several sources related to hardware imperfections. These include packet detection delay (PDD), sampling frequency offset (SFO), carrier frequency offset (CFO), random initial phase offset, and phase ambiguity. Such errors compromise the accuracy of phase information.

To address these issues, we implement a two-step correction process, as shown in Fig 3. The initial step involves phase unwrapping along the subcarrier dimension, effectively mitigating discontinuities that arise due to the cyclic nature of phase measurements. This unwrapping process is described as:

| (2) |

where represents the phase of the -th subcarrier.

Following the unwrapping, the phase data is subjected to a linear fitting process. The process is governed as:

| (3) |

| (4) |

| (5) |

where denotes the index of the -th subcarrier, and and are the coefficients determined through the fitting. The real phase is then recalculated by subtracting the derived linear term from the measured phase , resulting in a corrected phase profile.

Here are the examples of CSI data, as shown in Fig 4.

III-B Experimental Setup

Criterion: For evaluating the performance of models, the primary factors we consider are the rate of compression, the quality of reconstruction, and the accuracy of classification. The compression rate is calculated as the ratio of the communication cost of the raw data to the communication cost of the compressed data, as follows:

| (6) | ||||

where is the number of feature dimensions, and are the patch sizes in the spatial and temporal dimensions, respectively.

The quality of reconstruction is measured by NMSE in decibels (dB), calculated as:

| (7) |

The classification accuracy is calculated as the percentage of correctly classified samples.

III-C Evaluation and Disscussion

We first evaluate the performance of SwinFi with the baseline methods mentioned above in Table II. From Equation 6, the compression rate is decided by several factors. Given our intention to maintain a finely-grained window receptive field through the use of small patch sizes, we are thus constrained to modulate the compression ratio by adjusting the feature dimensions and the configuration of Swin Transformer blocks. We design a series of experiments as shown in Table I, allowing the compression ratio to vary from 64 to 1024. Note that the mixed data contain amplitude and phase for each of the 4 channels. To maintain the same compression ratio, Dim is doubled.

| Input | Patch_size | Dim | Depth | |

| Amplitude or | 32 | [2,2,6,2] | 64 | |

| Phase | 16 | [2,2,6,2] | 128 | |

| 32 | [2,2,2,6,2] | 256 | ||

| 16 | [2,2,2,6,2] | 512 | ||

| 32 | [2,2,2,2,6,2] | 1024 | ||

| Mixed | 64 | [2,2,6,2] | 64 | |

| 32 | [2,2,6,2] | 128 | ||

| 64 | [2,2,2,6,2] | 256 | ||

| 32 | [2,2,2,6,2] | 512 | ||

| 64 | [2,2,2,2,6,2] | 1024 |

In Table II, it is observed that SwinFi outperforms the baseline methods in terms of the least reconstruction error with the NMSE of -37.74dB. Even at high compression rates, SwinFi maintains a high quality of reconstruction along with superior classification accuracy. This performance stands on par with, or even surpasses, that of single-task models.

| Method | NMSE (dB) | Accuracy (%) | |

| CSINet [2] | 4 | -29.18 | N/A |

| 16 | -26.18 | N/A | |

| 32 | -20.40 | N/A | |

| 64 | -18.07 | N/A | |

| WiWho [11] | N/A | N/A | 67.3 |

| AutoID [12] | N/A | N/A | 77.6 |

| SimpleViTFi [13] | N/A | N/A | 96.7 |

| EfficientFi [5] | 66.8 | -35.18 | 84.5 |

| 148.4 | -34.23 | 81.6 | |

| 334.0 | -30.19 | 82.7 | |

| 763.4 | -29.18 | 82.1 | |

| 1781.3 | -27.70 | 83.3 | |

| SwinFi | 64 | -37.74 | 95.3 |

| 128 | -37.56 | 93.8 | |

| 256 | -35.81 | 92.2 | |

| 512 | -31.47 | 90.4 | |

| 1024 | -29.19 | 87.3 |

The baseline methods focus exclusively on CSI amplitude. Accordingly, the comparative experiments of SwinFi also utilize only the amplitude. Subsequently, we conduct a series of experiments on both the phase and mixed data of CSI. These experiments aim to provide a comprehensive understanding of the extent to which SwinFi can effectively process and leverage the full spectrum of CSI signal components.

Table III provides a comparison of the performance across phase and mixed data. Combined with amplitude results in Table II, while SwinFi can handle phase data effectively, the reconstruction quality and classification accuracy are less satisfactory. However, by mixed data, SwinFi achieves the best classification accuracy. This suggests that the distinct features of human gait, used for classification, are distributed across both amplitude and phase components of the CSI data.

| Input | NMSE (dB) | Accuracy (%) | |

| Phase | 64 | -15.16 | 93.3 |

| 128 | -15.73 | 80.2 | |

| 256 | -16.85 | 91.4 | |

| 512 | -16.14 | 84.4 | |

| 1024 | -13.87 | 89.4 | |

| Mixed | 64 | -30.13 | 97.8 |

| 128 | -27.08 | 93.8 | |

| 256 | -25.24 | 98.3 | |

| 512 | -25.13 | 93.4 | |

| 1024 | -22.93 | 89.6 |

Finally, we investigate the impact of different patch shapes and window shapes on the performance of SwinFi. The results are shown in Table IV. It is observed that the rectangular shapes outperform the square shapes in both NMSE and classification accuracy under the similar . This result is consistent with the intuition that rectangular shapes can better capture the spatial and temporal dynamics of CSI data.

| Window_size | Patch_size | NMSE (dB) | Accuracy (%) | |

| 72 | -32.63 | 90.8 | ||

| 288 | -27.79 | 89.2 | ||

| 64 | -37.74 | 95.3 | ||

| 256 | -35.81 | 92.2 |

IV Conclusion

In this letter, we introduce SwinFi, leveraging Swin Transformer within an autoencoder-decoder architecture for the efficient compression and reconstruction of CSI data in Wi-Fi sensing. The approach not only reduces computational and communication demands but also demonstrates SOTA performance in reconstruction quality and classification accuracy. Through extensive experiments, SwinFi achieves satisfactory results and outperforms existing methods.

Anticipating future research, we will explore the integration of compressed feature images with Diffusion model as shown in Fig 1. Our aim is to investigate the potential of Diffusion model for generating synthetic CSI data to augment the existing datasets. Considering computational efficiency, we propose utilizing the compressed latent variables as inputs to Diffusion, where the output generated data are then reconstructed to achieve a lightweight generative process.

References

- [1] Y. Ma, G. Zhou, and S. Wang, “Wifi sensing with channel state information: A survey,” ACM Comput. Surv., vol. 52, no. 3, jun 2019. [Online]. Available: https://doi.org/10.1145/3310194

- [2] C.-K. Wen, W.-T. Shih, and S. Jin, “Deep learning for massive mimo csi feedback,” IEEE Wireless Communications Letters, vol. 7, no. 5, pp. 748–751, 2018.

- [3] T. Wang, C.-K. Wen, S. Jin, and G. Y. Li, “Deep learning-based csi feedback approach for time-varying massive mimo channels,” IEEE Wireless Communications Letters, vol. 8, no. 2, pp. 416–419, 2019.

- [4] X. Bi, S. Li, C. Yu, and Y. Zhang, “A novel approach using convolutional transformer for massive mimo csi feedback,” IEEE Wireless Communications Letters, vol. 11, no. 5, pp. 1017–1021, 2022.

- [5] J. Yang, X. Chen, H. Zou, D. Wang, Q. Xu, and L. Xie, “Efficientfi: Toward large-scale lightweight wifi sensing via csi compression,” IEEE Internet of Things Journal, vol. 9, no. 15, pp. 13 086–13 095, 2022.

- [6] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An image is worth 16x16 words: Transformers for image recognition at scale,” 2021.

- [7] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” in 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 2021, pp. 9992–10 002.

- [8] F. Meneghello, N. D. Fabbro, D. Garlisi, I. Tinnirello, and M. Rossi, “A csi dataset for wireless human sensing on 80 mhz wi-fi channels,” IEEE Communications Magazine, vol. 61, no. 9, pp. 146–152, 2023.

- [9] F. Gringoli, M. Schulz, J. Link, and M. Hollick, “Free your csi: A channel state information extraction platform for modern wi-fi chipsets,” ser. WiNTECH ’19. New York, NY, USA: Association for Computing Machinery, 2019, p. 21–28.

- [10] H. Zhu, Y. Zhuo, Q. Liu, and S. Chang, “-splicer: Perceiving accurate csi phases with commodity wifi devices,” IEEE Transactions on Mobile Computing, vol. 17, no. 9, pp. 2155–2165, 2018.

- [11] Y. Zeng, P. H. Pathak, and P. Mohapatra, “Wiwho: Wifi-based person identification in smart spaces,” in 2016 15th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), 2016, pp. 1–12.

- [12] H. Zou, Y. Zhou, J. Yang, W. Gu, L. Xie, and C. J. Spanos, “Wifi-based human identification via convex tensor shapelet learning,” in Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, ser. AAAI’18/IAAI’18/EAAI’18. AAAI Press, 2018.

- [13] J. Bian, M. Zheng, H. Liu, J. Mao, H. Li, and C. Tan, “Simplevitfi: A lightweight vision transformer model for wi-fi-based person identification,” IEICE Transactions on Communications, vol. E107-B, no. 4, 2024.