Swift: High-Performance Sparse Tensor Contraction for Scientific Applications

Abstract

In scientific fields such as quantum computing, physics, chemistry, and machine learning, high dimensional data are typically represented using sparse tensors. Tensor contraction is a popular operation on tensors to exploit meaning or alter the input tensors. Tensor contraction is, however, computationally expensive and grows quadratically with the number of elements. For this reason, specialized algorithms have been created to only operate on the nonzero elements. Current sparse tensor contraction algorithms utilize sub-optimal data structures that perform unnecessary computations which increase execution time and the overall time complexity. We propose Swift, a novel algorithm for sparse tensor contraction that replaces the costly sorting with more efficient grouping, utilizes better data structures to represent tensors, and employs more memory-friendly hash table implementation. Swift is evaluated against the state-of-the-art sparse tensor contraction algorithm, demonstrating up to 20 speedup in various test cases and being able to handle imbalanced input tensors significantly better.

keywords:

Tensor contraction, Sparsity, Algorithm, Scientific application1 Introduction

Tensors are multidimensional arrays which are used to represent data in the fields of quantum chemistry, quantum physics, machine learning, and more[1, 2, 3, 4, 5, 6]. In many scenarios it is common for the tensors used in these fields to have a small percentage of nonzero elements, in which case they are referred to as sparse tensors [7]. Tensor contraction (matrix multiplication in higher dimensions) is an operation that can be used to exploit meaning or alter the input tensors to better understand the data they represent. However, this operation is computationally expensive [8] and execution time increases quadratically with respect to the number of elements contained in the input tensors. This can slow down the progress of research which is why computer scientists have created specialized software algorithms and hardware modules specifically to perform the operation of sparse tensor contraction. Sparse tensor contraction is the contraction of two tensors where one or both of them are sparse, which can be performed orders of magnitude faster than traditional tensor contraction algorithms on sparse input tensors because the speed of the operation scales as a function of the nonzero elements rather than the shape.

Conventional tensor contraction algorithms do not take advantage of sparsity which results in redundant calculations being performed with zero-valued entries. Specialized algorithms [9, 10, 11, 12] attempt to overcome this by ignoring entries that have no value by doing element-wise operations on the remaining important elements. The main challenges facing sparse tensor contraction algorithms are: irregular memory access patterns, unknown output tensor size, and high amounts of intermediate data. Irregular memory access patterns arise from the fact that in sparse tensor contraction, elements are typically fetched from memory based on their coordinates in the tensor. The coordinate of a tensor element can be broken up into the contracting modes (i.e., dimensions) which are used to find what elements it should contract with, and free modes which are used to determine where the contracted element is located in the output tensor. Unknown output tensor size arises from the idea that even if it is known the density of the input tensors, it is impossible to know the density of the output tensor. This is because tensor contraction is dependent on where those elements are located in the input tensors which would be unknown without processing all the elements. Finally, high amounts of intermediate data arise from the fact that after the output entries are calculated there will be a large portion of them which share the same coordinates. These entries must be found and added together to have one output element representing that coordinate value.

Sparse tensor contraction hardware accelerators, including Tensaurus[13], Extensor[14], and Outerspace[15], are time consuming to physically design and are much more expensive compared to a software implementation. Consequently, high performance software contraction algorithms are naturally attractive, as they can be employed for noteworthy speedups over conventional software libraries but without requiring additional hardware.

Consequently, several popular machine learning software libraries, such as PyTorch [16] and TensorFlow [17], offer support for sparse tensor contraction specifically. However, due to the main challenges of sparse tensor contraction, there are limited high performance software algorithms. The most prominent is called the Sparta algorithm [9] which can be summarized into 4 main stages. The first stage involves sorting the input tensor by free mode(s) and hashing the input tensor by contracting mode(s). This will make the input tensors easier to process. The second step is the contraction stage which involves fetching elements from the two input tensors that share the same contracting modes, and saving an output entry which has its coordinates located at the concatenation of the free modes of both entries and its value is the product of the two input entries. The third step is to find entries which share the same coordinates and add their values together so only one entry remains to represent the value at that coordinate. Finally, the fourth step is to write all of the output elements to a data structure which represents the output tensor. Sparta also offers an optional fifth step to sort the output entries but it is unnecessary and won’t be focused on in this work.

There are three areas of possible improvement of this algorithm which this work aims to address. First, input tensor needs to have input entries that share the same free mode next to each other in memory. The use of sorting to achieve this goal is a very computationally expensive choice which increases the execution time and time complexity. Second, the method of using a hash table to efficiently fetch elements from an input tensor based on contracting mode is generally cache-unfriendly[18] and cause unnecessary searching through other entries which were hashed to the same slot. Third, Sparta’s use of chained hash tables leads to irregular memory access patterns, unnecessarily bloating memory access time.

We propose Swift, an improved algorithm for sparse tensor contraction that replaces the costly sorting with more efficient grouping, utilizes better data structures to represent tensors, and employs more memory-friendly hash table implementation. The choice of data structures is guided on what would experience the most cache-efficiency and how well it integrates into the following stages in the algorithm. For the input processing stage, our algorithm incorporates grouping, which ensures input tensor elements with the same free modes are located next to each other in memory, to both improve the execution time and time complexity compared to sorting the entries. This technique makes the time complexity of the input processing stage with respect to the number of entries in both input tensors, which is an improvement upon the time complexity of the state-of-the-art input processing stage. In our scheme, the second input tensor is represented as an array rather than a hash table which improves memory access time as elements are located next to each other in memory to utilize cache-locality, rather than using pointers to locate elements. Finally, for the accumulation stage a probing hash table is used to locate elements with the same contracting modes compared to a chaining hash table which leads to pointer chasing (following chains of pointers to distant locations in memory). The performance benefits of these changes are evaluated using the contraction time and peak memory consumption relative to the state-of-the-art sparse tensor contraction algorithm. A variety of different test cases were used that vary in entry count, order, and evenness of entries in both tensors.

The main contributions of this work are:

-

•

Analyzed state-of-the-art sparse tensor contraction algorithm and inefficiencies.

-

•

Proposed an improved algorithm with explanation of key aspects to achieve better performance while improving time complexity.

-

•

Evaluated the proposed algorithm versus state-of-the-art sparse tensor contraction program for various input tests cases, demonstrating 2-20 speedup in execution time.

2 Background & Related Work

2.1 Sparse Tensor Contraction

2.1.1 Tensors

Tensors are multidimensional arrays which have found applications in numerous scientific fields that work with higher dimensional data [19]. A mode is another title for dimension, so an -dimension tensor is alternatively known as an -mode tensor. The number of modes a tensor has is also referred to as the order of the tensor. For example, the temperature at any given point in a room could be represented as 3-mode tensor, which has order 3 because each point in the room could be addressed by 3D coordinates, and the temperature would be the value of the tensor at these coordinates.

It is standard notation to symbolize a tensor as , and individual tensor entry values as . denotes the length of the th mode of the tensor and denotes an index along this mode where . For example, tensor would have a maximum index of 5 in the first dimension, a maximum index of 2 in the second dimension, and a maximum index of 3 in the third dimension. would denote the value of the tensor entry located at .

2.1.2 Sparse Tensors

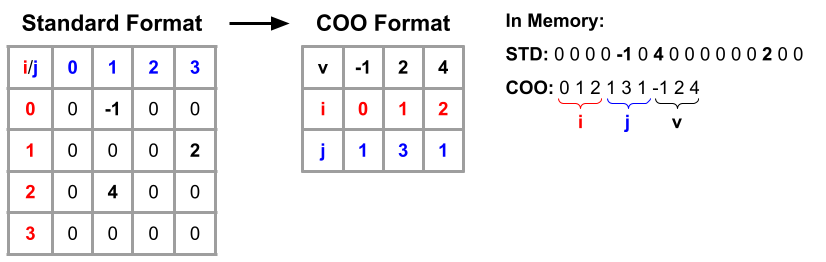

Sparse tensors have a majority of entries with the value of 0, where the percentage of nonzero elements is referred to as the density. Because of this, it would be inefficient to represent a sparse tensor with the standard method of listing the elements contiguously in memory, as the majority of the entries will have the value 0. Instead, the elements of a sparse tensor would be better represented using COOrdinate List format (COO) [17]. This methodology lists the elements as coordinate value pairs, ignoring pairs that have a value equal to zero. This ensures that sparse tensors are represented in such a way that only meaningful elements are accounted for. An example of this can be seen in Figure 1. In this example the density is , because there are 3 nonzero elements () and 16 total elements. In practical applications the density of sparse tensors can range from to [20, 21, 22, 23, 24, 25] which leads to much greater memory savings.

2.2 Tensor contraction

Tensor contraction can be expressed using the form shown in Equation 1, which can be expanded to Equation 2.

| (1) |

| (2) |

The tensor contraction operation takes input tensor , input tensor , the list of contracting modes (top), and the list of contracting modes (bottom), as exemplified in Equations 3 & 4. If a certain mode of tensor is contracted with another mode of tensor , both of these modes must have the same length, similar to how for matrix multiplication the number of columns in the first matrix must match the number of rows in the second matrix. The tensor contraction operation focuses on finding entries in and that share contracting indices, multiplying the values together, and adding that result to the output tensor . This can be seen in Equations 2 and 4 where a matching contracting index is denoted as c. All instances of matching contracting indices are summed with output coordinates which share the same free modes of and , hence the summation sign.

| (3) |

| (4) |

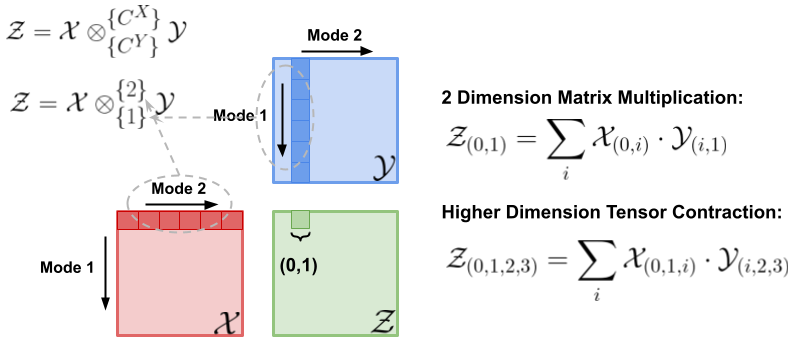

Tensor contraction is a generalization of matrix multiplication as shown in Figure 2. Note that the element at index in the output tensor is the dot product of the th row of tensor with the th column of tensor . The resulting element in tensor is the concatenation of the free modes (first mode from and second mode from , which correspond to indices 0 and 1, respectively), which mirrors Equation 2. The example shown in this figure can be applied to tensors with higher dimensionality which leads to the general concept of tensor contraction. Sparse tensor contraction occurs when one or both of the input tensors of a contraction operation are sparse [26].

2.3 Hash Tables

Hash tables are used repeatedly in both related work and our proposed algorithm. After the element-wise contraction stage, which will be explained in section 3, each of the output entries must be combined with entries that share the same coordinate. Hash tables are a common solution for this problem as they operate on key-value pairs. In this instance the key would be coordinate of the entry and the value would be the value of the entry. The key is mapped to a table slot using a hash function, and the key-value pair is placed at that slot. The hash table achieves search time derived from the fact that this hashing process described is theoretically invariant of how many elements are currently in the table [18]. A major design decision with this data structure is how to handle the scenario of two different keys being hashed to the same slot. The two most prominent solutions are probing and chaining.

2.3.1 Linear Probing Hash Tables

Linear probing hash tables handle collision by placing the key-value pair at the next available slot in the table by iterating down the table until it finds an empty location. If the value needs to be fetched, the key will be hashed to the original slot and iterated down until the key is found and the value is returned. One obvious downside of this approach is that as the table begins to fill and probing will take longer. This is why it is a common practice to make probing hash tables [27] the expected maximum number of elements that need to be stored.

2.3.2 Chaining Hash Tables

Chaining hash tables handle collision by treating each slot as if it were a linked list (i.e., the entire hash table is an array of linked lists) and adding the new entry by hashing to a slot and adding to the head of the linked list. If the value needs to be fetched, the key will hash to the correct slot and the linked list will be traversed until the desired key-value pair is found and the value will be returned. A disadvantage of this approach is that it leads to irregular memory accesses due to the pointer chasing involved in traversing a linked list which often consumes much more time than a probing hash table. A major advantage of a chaining hash table is that it can store an unknown number of elements because linked lists can grow indefinitely whereas the probing hash table is fixed in size.

In practice, neither of these methods will achieve constant hash time because the more entries in the same slot the longer it takes to fetch or add a new element. However, near constant hashing time will be achieved, so long as the number of elements is comparable to the number of slots.

2.4 Related Work

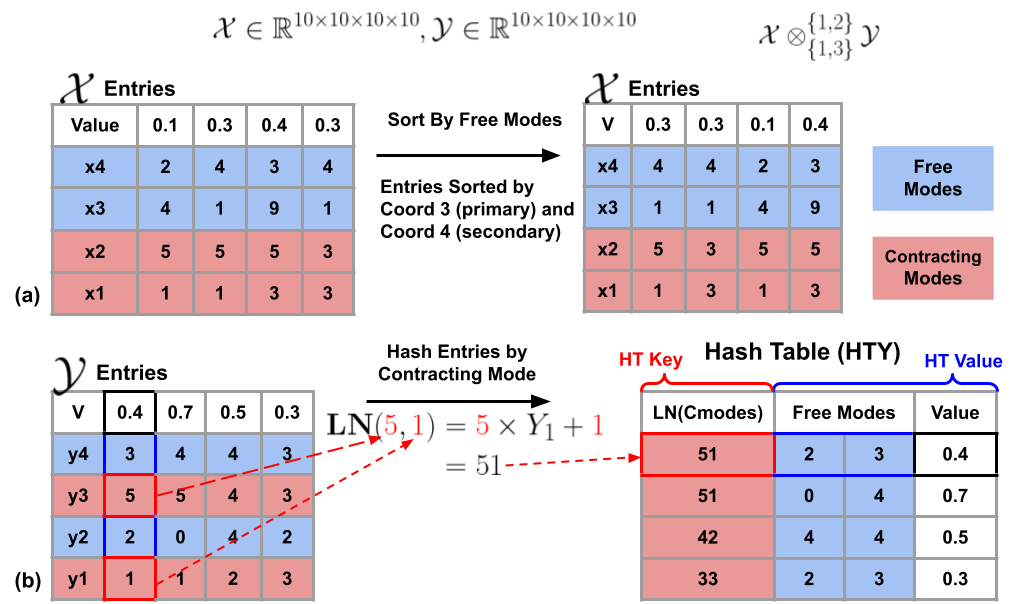

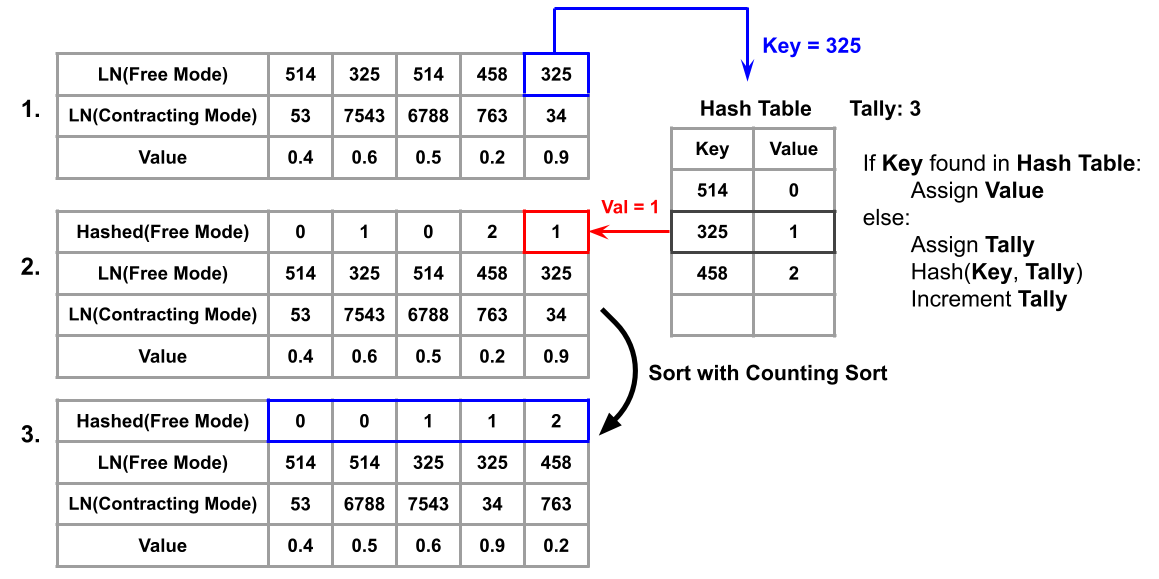

Acceleration of sparse tensor contraction has been approached using hardware accelerators and high performance software algorithms. Hardware accelerators [13, 14, 15, 28, 29] almost always outperform software algorithms, but there is extreme commitment in the creation of hardware. In the realm of high performance software, Sparta is a state-of-the-art sparse tensor contraction algorithm which operates on COO formatted tensors. This algorithm addresses the main concerns with sparse contraction which are: irregular memory access patterns, unknown output tensor size, and high amounts of intermediate data. Machine learning libraries such as PyTorch and TensorFlow offer support for sparse tensor contraction but they do not have comparable contraction time to Sparta. The Sparta algorithm can be broken down into the Input Processing stage (Figure 3), Contraction & Accumulation (Figure 4), and Writeback, which are explained below.

2.4.1 Input Processing

Input processing comprises of manipulating the input tensors and such that they can be more easily processed by the following stages. An example of this is shown in Figure 3. The objective is to have the entries of tensor with the same free modes to be located next to each other for easier data fetching and contradiction (more in the next subsection). Thus the tensor entries will be sorted by free mode. In this examples, coordinates 3 and 4 make up the free modes for this tensor so the entries will be sorted by coordinate 3 primarily and coordinate 4 secondarily.

For the contraction operation, the entries must be easily fetched from memory based on its contracting mode. To achieve this, Sparta converts tensor from a list of entries into a hash table representation which has O(1) access time given a search query. This is shown in Figure 3(b) where the key is the large number representation (LN; see the figure for calculation illustration) of the contraction modes, and the values of the hash table is the free modes and the value of the tensor at that coordinate. As you can see in this example, there are 2 coordinates which share the same key (51) in the hash table. If this key were to be requested, both entries would be fetched from the table. For the sake of clarity we show these two entries in the table as separate but in implementation they would both be contained in the same slot.

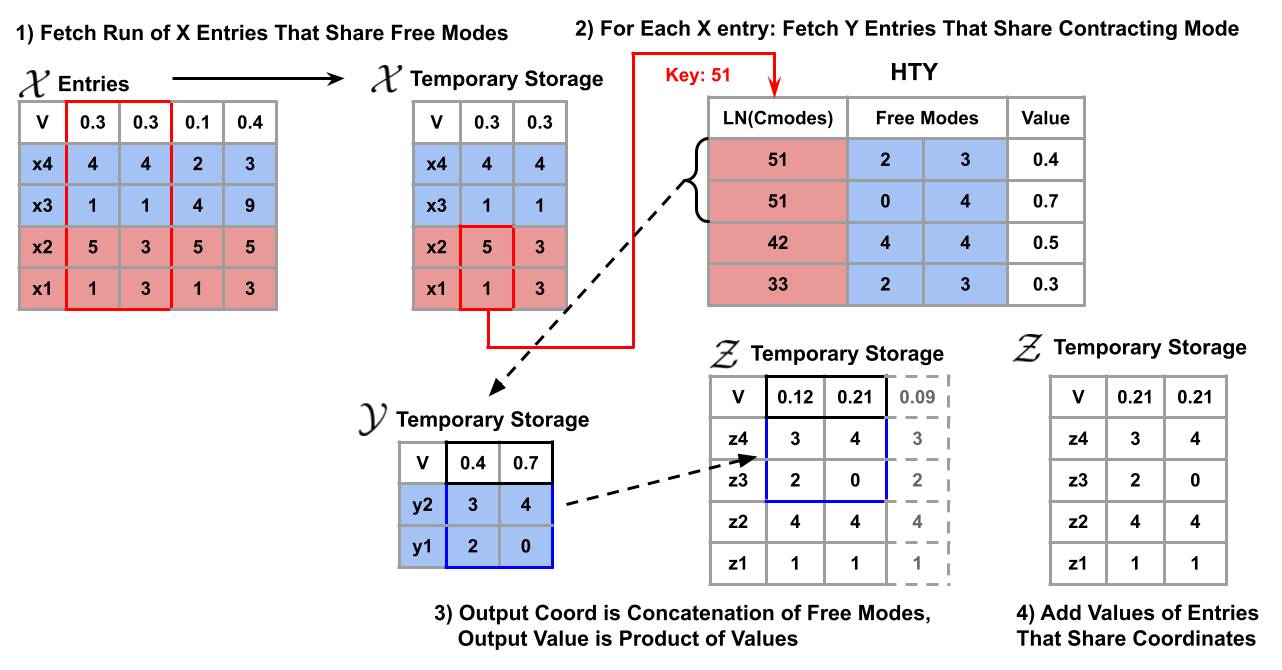

2.4.2 Contraction & Accumulation

Due to input sorting, a subset of entries that share the same free mode can be fetched from memory together. Each entry in this list will be iterated over during the contraction phase, and the process for each entry is as follows. First the contracting modes of the will be noted. Next, every entry from tensor that has the same contracting mode will be fetched from the hash table. The coordinate of the output tensor is the concatenation of the free modes of tensor with the free modes of tensor . The value of the output entry is simply the product of the value with the value. The output entry is then placed into an accumulation hash table.

During accumulation, there will be a large number of intermediate output entries, many of which have duplicate coordinates. Entries with matching coordinates must be located efficiently so their values can be added together. Because output entry coordinates are a concatenation of the tensor free mode and the tensor free mode, two output entries will certainly not have matching coordinates if the coordinate contribution from entry is not the same. This is why it would be advantageous to sort the entries of tensor in the input processing stage so that all of the intermediate output entries with matching free modes are located next to each other. Because the entries with matching coordinates are already next to each other in temporary storage, all that remains to be done is finding matching tensor coordinates to see if the overall output coordinates match. An example of this can be seen in Figure 4. In this case the free mode being contracted is so all entries that have this free mode pair are fetched from memory and placed into temporary storage. For each of the entries in temporary storage, the hash table HtY is used to fetch all that share the same contracting mode. In this example, the first entry is being contracted which has a contracting mode set of so all entries that share this contracting mode set are placed into temporary storage. Note that only the free modes must be saved for each entry fetched because the contracting modes of these entries are not present in the output tensor entries. As previously stated, the output entries are a concatenation of the free modes of and the free modes of so these free modes are concatenated with the free mode of and added to temporary storage. All output entries that share the same coordinate are then mapped to the same slot and their values are added together. In this case, two output entries share the same coordinate so their values and are added to get the final value for this coordinate which is . This Figure shows the accumulation happening after contraction for ease of understanding, but in the implementation entries are filtered as they enter the accumulation hash table for temporary storage of entries.

2.5 Writeback

After Contraction & Accumulation, because the algorithm was implemented using parallelism, each thread has a sub-collection of entries which belong to the output tensor. During Writeback, the thread-local collections of entries are iterated over and saved to a global data structure which represents the output tensor.

3 Motivation

3.1 Time Complexity

Time complexity of Sparta can be calculated by adding the time complexities of each individual stage in order to give an overview of how well the algorithm scales. Equation 3.1 shows the time complexity of the Sparta Algorithm where denotes the number of nonzero entries in tensor , and denotes the average number of entries associated with each contracting mode of .

The first term expresses the time complexity of the input processing stage. The input processing stage has time complexity with respect to, , the number of nonzero entries in tensor because its entries were sorted using quicksort. In contrast, the input processing stage has linear time complexity with respect to, , the number of nonzero entries in tensor because each was hashed into a table. The second term is the time complexity of the contraction and accumulation phases which must iterate over each nonzero entry, and for each entry the average mode length of must be iterated over to get the resulting output entry which is added to a hash table. Finally, the third term expresses the time complexity of the Writeback stage which is equal to the number of output entries because each entry must be saved to the output tensor.

| (5) | ||||

| where: | ||||

The time complexity of the Contraction & Accumulation stages cannot be improved upon as it is necessary to iterate over each entry and the corresponding entries to generate the correct output entries. Similarly, the time complexity of the Writeback stage cannot be improved upon as each entry must be exported. There is, however, potential to improve the time complexity of the input processing stage. It is also, of course, possible to improve the overall performance of each stage, even if the time complexity remains unchanged. Accessing the same number of elements sequentially in memory generally leads to performance improvement compared to random access, and two linear algorithms can have vastly different execution times based on the number of steps per element even if that number is constant.

3.2 Hash Table Leads to Increased Memory Access Time

Sparta represents the tensor as a hash table (HtY) which can be used to fetch a run of entries that share the same contracting mode (). Although the creation of the hash table can be done in time and is generally fast, there is room for improvement when it comes to the time it takes to fetch elements during the contraction stage of the algorithm.

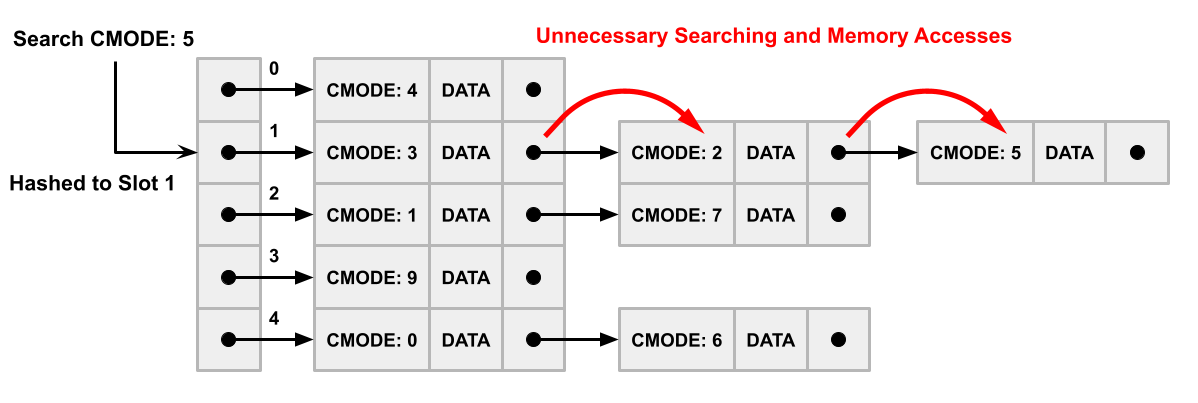

One aspect that makes this approach in Sparta slow compared to an array is the fact that chaining hash tables (the variant used in Sparta) leads to pointer chasing when traversing a linked list. This causes irregular memory accesses which harms cache performance. The second aspect that makes this approach slower is that during the hashing process the hash function commonly maps different keys to the same slot. This means if a contracting mode must be searched in the table, there will be undesired elements to filter through to access the desired entries. An example of this can be seen in Figure 5. In this case the requested contracting mode is 5, shown in top left, but two other nodes must be traversed to access that element because 3 entries were mapped to that slot of the table.

Combining the above two aspects, in a chaining implementation of a hash table, an additional pointer to the next entry must be fetched for each undesired contracting mode. This consumes a lot of time as there is a high probability a new line of memory must be fetched and placed into cache numerous times during this process.

Similarly, chaining hash tables are also used for the storage of output entries in the accumulation stage where an alternative data structure would have much better memory access time and cache-efficiency.

3.3 Input Sorting is Unnecessary for Input Processing

As previously stated, the input processing stage is composed mainly of the sorting of the entries and the hashing of the entries. Our experiments show that the sorting of vastly overshadows the hashing of which is reflected in the time complexity equation of Sparta. During the input processing stage of Sparta, tensor is sorted by the free modes of its coordinates in order to get matching free modes next to each other. Sorting also has the additional property of putting these entries in ascending order based on the free modes. If there is a way to remove the reliance on the ascending order while still maintaining the correctness of contraction, much time can be saved from not needing to sort . This is achieved by using our proposed methods described in the following section.

4 Approach

In this section, we present our proposed Swift, a novel and much improved algorithm for sparse tensor contraction. Our approach improves upon Sparta by replacing sorting with grouping, utilizes better data structures to represent tensors, and employs memory-friendly hash table implementation. In the following subsections, we describe the proposed improvement to the input processing, contraction, and accumulation stages in detail.

4.1 Perform Grouping at Input Processing Stage

If entries of tensor are fetched in chunks where free modes are shared amongst entries, fetching is rendered more efficient due to locality. Therefore, the input processing stage should arrange the entries of tensor such that entries which share free modes are placed contiguously in an array. This allows for a block of entries that share the same free mode to be fetched in constant time utilizing pointers to the start of runs. During the input processing stage, Swift utilizes grouping by free mode instead of sorting by free mode to manipulate the order of the input entries. Grouping by free mode simply means placing entries that share free mode next to each other without ensuring that entry free modes are in any particular order. While this might still take in general cases, a linear time version is possible here by assigning a small metric (numerical proxy) to represent each unique free mode and applying a less stringent sorting algorithm to this metric. In particular, we employ a relaxed counting sort in this work, which is an algorithm that can achieve linear time complexity when the sorting metric does not exceed the number of entries being sorted so it is ideal for this scenario.

4.1.1 Counting Sort for Small Value Ranges

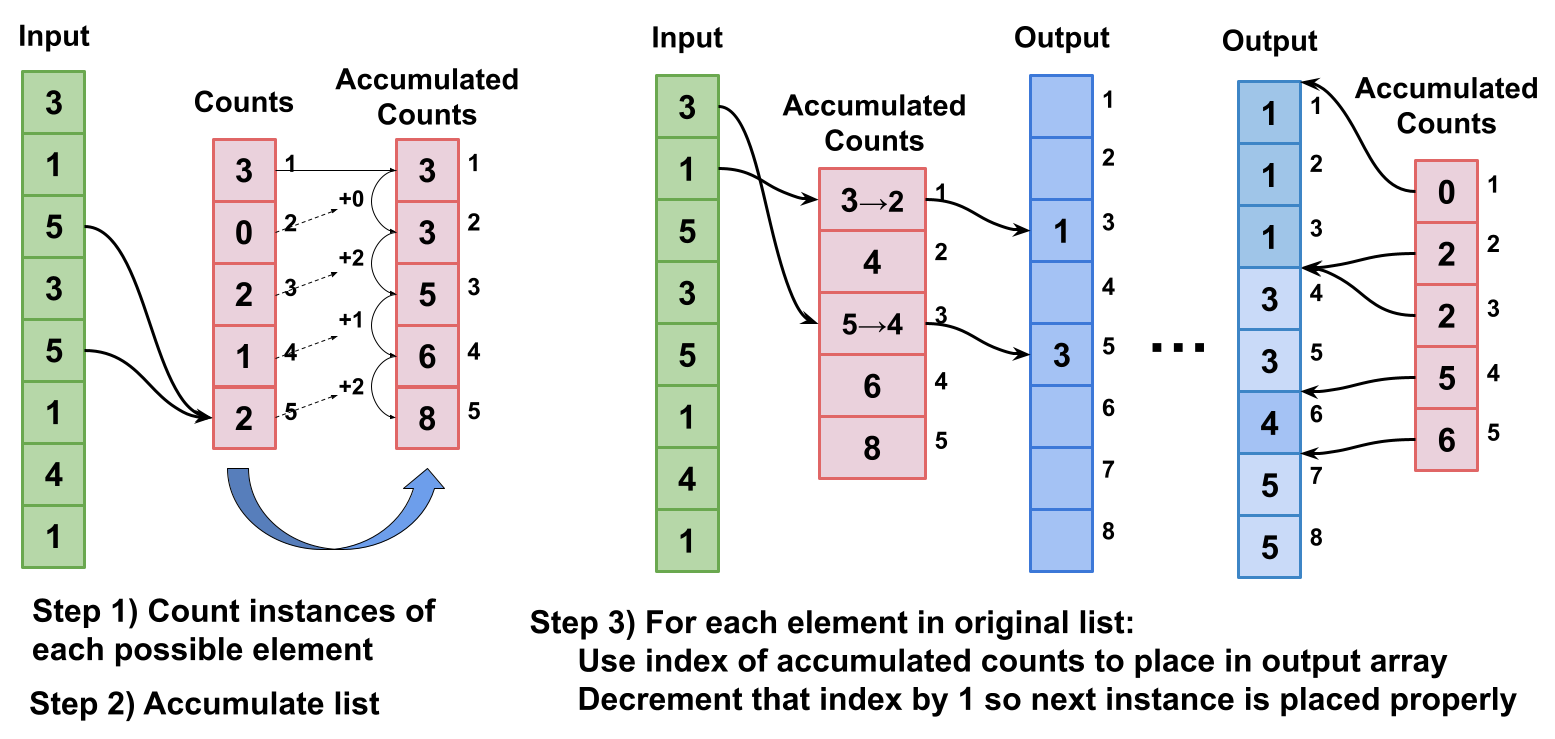

Counting sort is a sorting algorithm that achieves a time complexity of where is the number of elements and is the number of different values that elements can be assigned. An example of this algorithm can be seen in Figure 6. In the first step, the array of elements is iterated over and the total number of instances of each possible value is found and placed into a count array (e.g., element 1 appears 3 times). The algorithm then takes the cumulative sum of the Count Array, yielding dividers to where elements of each value should be placed. Finally, the input array is iterated over and placed at the corresponding pointer (e.g., element 3 is placed at index 5 in the output), and the accumulated count is then decremented. The final output is a sorted array. This algorithm is much faster than quicksort when is small as it only requires 2 iterations over the input array and 1 iteration over the Count Array. If can be guaranteed to be less than or equal to , this algorithm achieves sorting.

4.1.2 Small Numbers for Free Modes

In order to render counting sort more efficient than alternatives, the value of must be reduced, ideally to be less than for linear time complexity. Assignment of a metric with the smallest range of values can be done by hashing the free modes of each entry which is the process described in Figure 7. The first step is to go through each entry and map the free modes representation to a metric which has a minimum range of possible values. This can be done by using a hash table to see if the free modes have already been hashed. If so, the entry is assigned the smaller metric. If not, the entry is assigned the metric equal to the number of elements currently in the table. Using the example shown in Figure 7, the key was found in the hash table with the value , thus that entry was assigned the metric in the second array. If the key was , it would not be found in the hash table, and the entry would have been assigned metric because there are currently key value pairs in the hash Table. The reason for the hashing to smaller numbers is because Counting Sort has a time complexity dependent on the range of possible elements.

4.1.3 Fast Grouping

The mapping of free modes to a smaller metric described in the previous subsection has guaranteed the maximum sorting metric is no greater than the number of elements in the array. Thus Counting Sort can be applied to this sorting metric which will have a time complexity of , where , which means grouping the elements has a time complexity of . Note that after the sorting process the free modes are not in any particular order, i.e. ascending or descending, but that is of no importance for the contraction algorithm.

4.2 Array Representation of Tensor Y

A similar process to the one described in Section 4.1.3 above will be performed on the entries of tensor but with respect to the contracting modes instead of the free modes. The access time for a sequence of entries that share a desired contracting mode can be found in O(1) time. This can be done using the table of pointers to divisions in contracting modes which is given by Counting Sort results in an array of sorted elements with a corresponding vector of pointers to divisions of contracting modes, the access time for a sequence of entries that share a desired contracting mode can be found in O(1) time using the table of pointers to divisions in contracting modes.

When it comes to implementation, this data structure is significantly faster than the hash tables used in Sparta for three reasons. First, with a hash table it is very likely that two blocks of contracting modes will be hashed to the same slot. As a consequence, in order to get the desired run of contracting indices, the program must unnecessarily filter through the extraneous contracting modes that were hashed to the same slot. Second, the entries are stored contiguously in memory which greatly improves cache efficiency. Third, the performance of a hash table can vary based on its implementation. In the case of a chaining hash table, excessive time may be spent following pointers, while a probing hash table aiming for peak execution time is expected to consume around 1.3 times more memory compared to an array. For HtY, Sparta implements a chaining hash table whereas we use the faster probing hash table at the cost of slightly increased memory.

4.3 Probing Hash Table for Intermediate Entry Storage

Chaining tables are required for Sparta’s accumulation stage because the of the output tensor is unknown. However, the accumulation stage could be improved by using a probing hash table instead of a chaining hash table to store output entries from the contraction stage. Due to cache locality, it is much faster to iterate through a table in array format than to perform pointer chasing as would be done with a chaining table. The challenge lies in the fact that a probing hash table requires a predefined size, which can be difficult to estimate before the contraction process begins.

We address this challenge with the following clever method. During the grouping stage of tensor , a vector is available that records the number of entries corresponding to each contracting index. One could go through every entry that shares the same free mode and accumulate the number of entries associated with each of these operations. This can be used to assign an upper bound for the number of slots in the probing hash table such that dynamically allocated memory is not required. Using Figure 6 as an example, the contents of the array could represent the large number representation of the contracting modes of tensor , thus the counts array will store the count of each contracting mode set.

As an example, given a set of 4 entries that share the same free modes, the associated contracting modes could be . Thus, the total number of entries to be fetched (and therefore output entries to be allocated), would be equal to . For this sub-operation, a maximum of 9 slots is needed for the accumulation probing hash table and there is no need for dynamic allocation of additional memory.

4.4 Summary of Performance Improvement

The approach described has the following time complexity, which represents an improvement over that of Sparta. The input processing stage is linear with respect to the number of nonzero entries in tensor and all remaining stages have unaltered time complexity to Sparta. The reduced time complexity of the Swift algorithm leads to a reduction in execution time while maintaining the correctness of the contraction results 222An even faster time complexity could have been achieved by simply leaving the entries of tensor alone during the input processing stage. This would, however, force the accumulation stage to be performed after combining intermediate entries to a global array, which would cause a significant decrease in practical performance..

| (6) | ||||

| where: | ||||

5 Evaluation

5.1 Evaluation Methodology

To validate the potential of our proposed method, Swift, we evaluated the execution time and peak memory usage for sparse tensor contraction operations on input tensors with varying and order. For fair comparison, both Sparta and Swift were implemented in C and compiled using gcc with the optimization option -O3 and employed OpenMP for parallelism. Both programs used 8 threads maximum and were executed on a 11th Gen Intel(R) Core(TM) i7-11375H which runs @3.3GHz with 32GB of RAM. For execution time and peak memory measurements, two randomly generated tensor datasets were created with difference in mode length to observe the effect of change in sparsity. Additionally, we examined sparse tensor contraction operations between two tensors with a significant difference in to assess how the algorithms handle load imbalance.

. Name Shape NNZ Name Shape NNZ Set 1 A050 50K B050 50K A100 100K B100 100K A200 200K B200 200K A300 300K B300 300K A500 500K B500 500K Set 2 A050 50K B050 50K A100 100K B100 100K A200 200K B200 200K A300 300K B300 300K A500 500K B500 500K Set 3 A2190 198K B2190 81 A2178 162K B2178 81 A2177 134K B2177 95 A2164 157K B2164 81 A2163 130K B2163 95

| Name | Order | Shape | NNZ | Density |

|---|---|---|---|---|

| Enron [30] | 3 | 9838 | ||

| LBNL [31] | 3 | 27269 | ||

| Facebook [32] | 3 | 738K | ||

| Hubbard-1D-P [33] | 5 | 300K | ||

| Hubbard-1D-T [33] | 5 | 400K | ||

| Hubbard-1D-Z [33] | 5 | 100K | ||

| Hubbard-2D [33] | 5 | 300K | ||

| NIPS [9] | 4 | 3M |

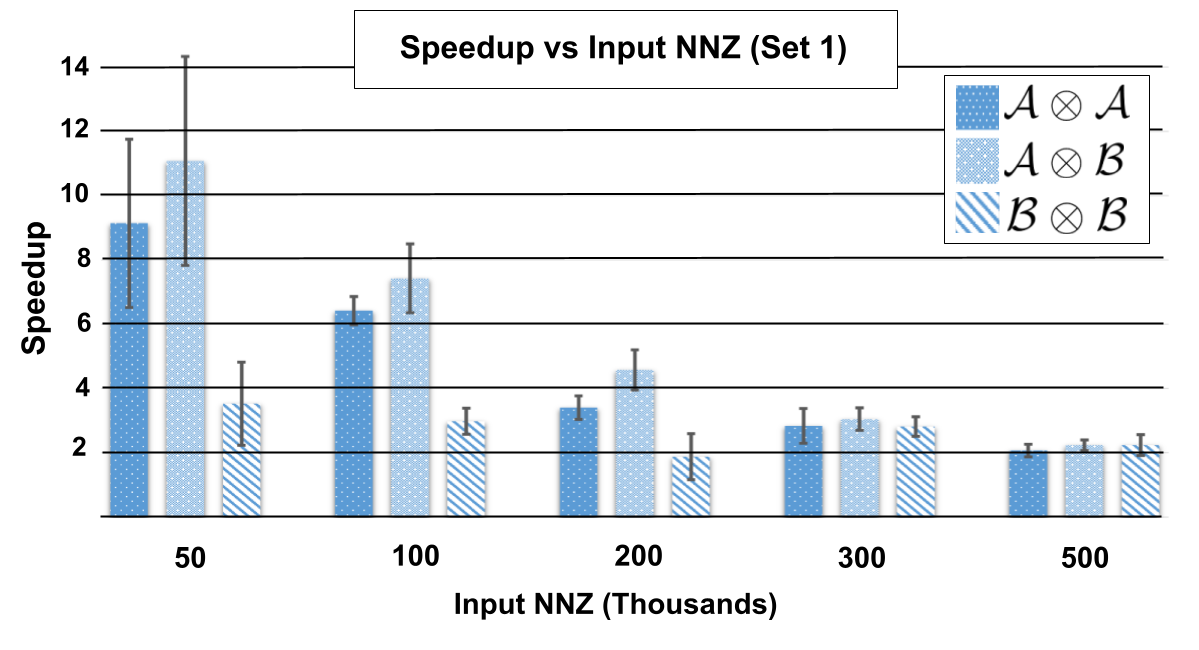

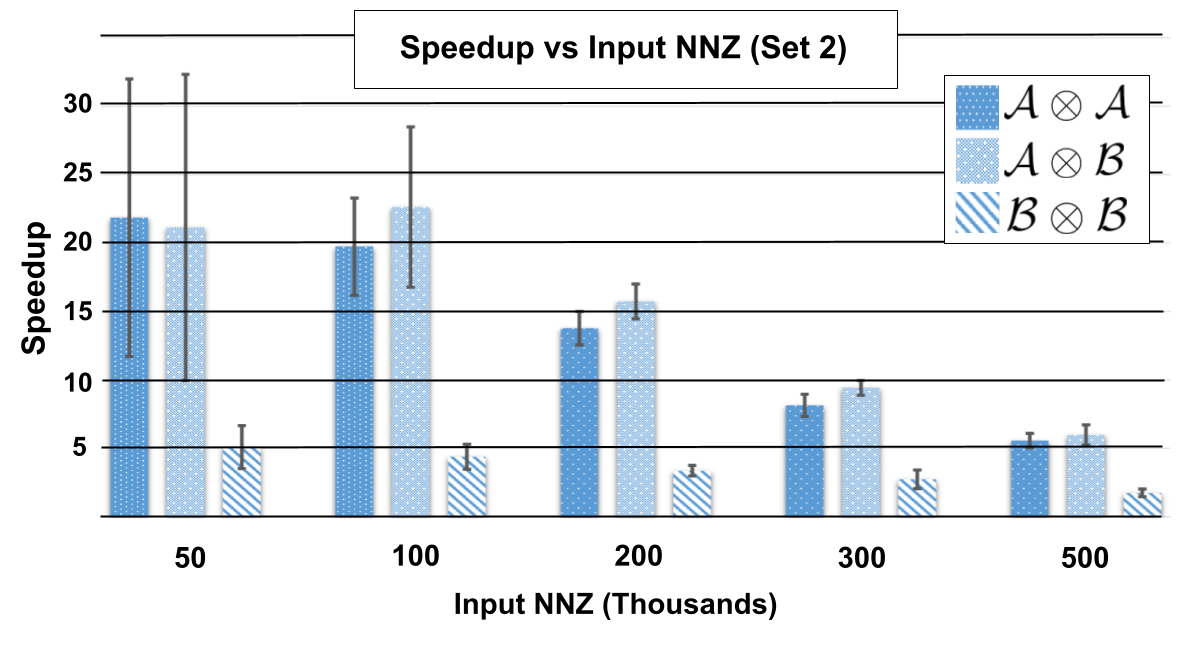

Figure 8 shows speedup in contraction time over the state-of-the-art as a function of the number of nonzero elements in the input tensors. The specifications of the tensors used in the operations can be seen in Table 1, Set 1, where tensors labeled are of order 5 and tensors labeled are of order 4. Testing different order combinations was done to show that both designs are flexible to shape. For these measurements, both & have all modes of equal length 50. Figure 9 similarly shows speedup as a function of using the tensors described in Table 1, Set 2, where modes are of equal length 100. Tensors have randomly generated entries and values to ensure that there is no general structure that would bias the results.

5.2 Contraction Time Versus Input Entry Counts

For Set 1, the minimum speedup from employing Swift sits at a solid 1.8x for large with small orders to a maximum of a blistering 7.7x observed speedup for small with higher orders. The speedup is much more extreme for experiments involving tensors from Set 2 which are orders of magnitude more sparse. The fastest speedup is 19.6x for small sparse tensors, and the lowest speedup is still a substantial factor of 2.3x for more dense operations.

This shows the effect sparsity can have on speedup, regardless if the of the input tensors is constant. Note the formulas for time complexity of Sparta, Equation 3.1, and Swift, Equation 4.4, both include the term which represents the average number of Y entries assigned the same contracting modes. As sparsity increases, will decrease which means a proportionately more amount of time is spent fetching entries from memory compared to processing them. Sparta and Swift perform the same amount of time processing entries, but Swift has much faster rate of fetching entries because of its cache-friendly storage of elements inside an array compared to a chaining hash-table. Keeping and mode length constant, decreasing the order by 1 will have profound increase in density, thus self-contractions between experience a lesser speedup. For reference,the sparsity for tensors from Set 1 range from 0.016% to 8% while tensors from Set 2 exhibit sparsity in the range of 0.0005% to 0.5%.

There is a visible decrease in speedup as the number of nonzero entries increases, despite having similar time complexities. As the number of output entries increases, most of the computation time will be concentrated in the Accumulation and Writeback stages, which are comparable between the two algorithms. Consequently, less time will be spent on the Input Processing and Contraction stages, where the primary differences between the algorithms lie. There are, however, many scientific applications that utilize tensors which fall into this range of . In such cases, Swift would provide significant speedup.

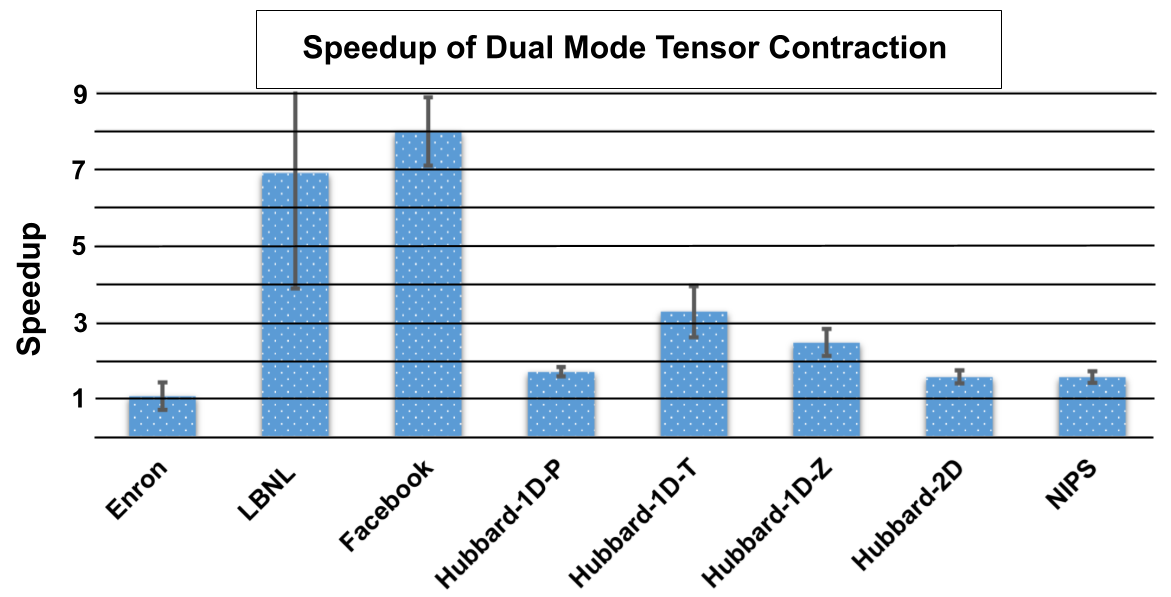

Figure 10 shows contractions between tensors found in scientific applications. Tensor specifications for these experiments can be found in Table 2. These examples further illustrate the strength of Swift when tensors are extremely sparse. When tensors have low density, a greater proportion of execution time will be devoted towards fetching entries compared to performing the contraction operation on the entries. Tensor LBNL has a significantly lower density () which means modes being fetched from memory will have very few elements and thus fetching must happen more often. Swift utilizes an array to store elements which has much faster access time compared to a chaining hash table. Tensors with such few elements, such as Enron, have little room for improvement because there are so few operations being performed and thus a reduced time complexity will have minimal impact.

5.3 Memory Consumption Comparison

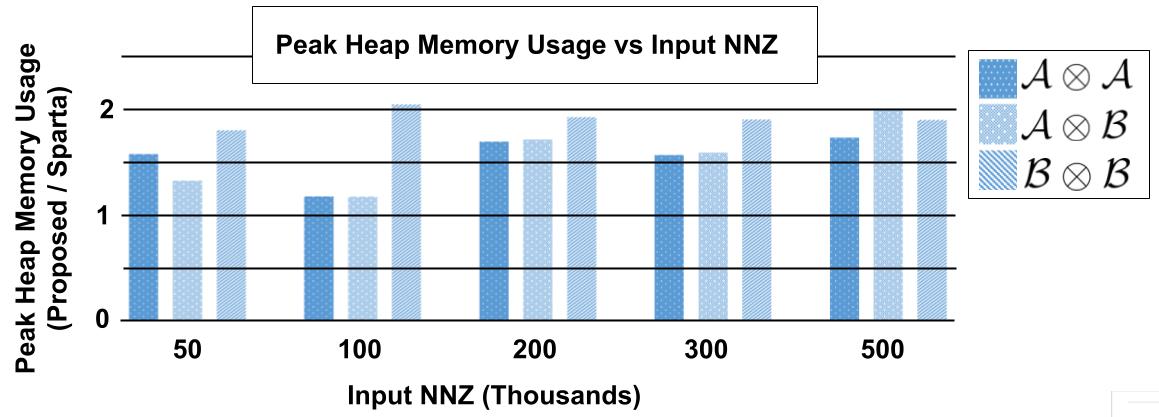

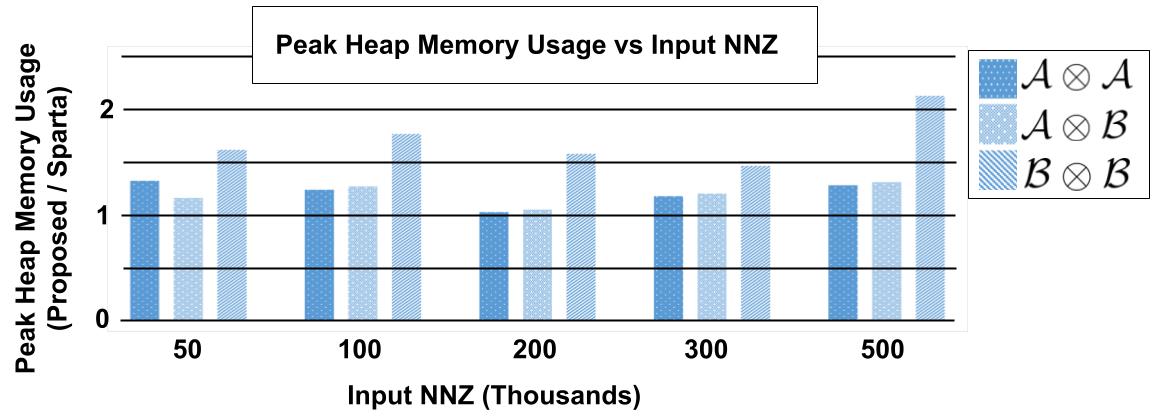

For each of the experiments performed in Section 5.2, the peak heap memory consumption was also measured using memusage on the executable files. The ratio of memory consumption from the proposed algorithm divided by that of Sparta is shown in Figures 11 and 12, for tensor contraction combinations found in Table 1 Set 1 and 2, respectively. There is a stable increase in memory consumption as the for input tensors increases, where tensors contractions between lower order tensors result in a higher ratio of memory being used. Factors which cause Swift to require more memory are 1) the added array needed to store the counts of each contracting mode set for tensor during the contraction phase, and 2) the use of upper bounds for the accumulation hash table compared to the bare minimum being used by a chaining hash table in Sparta’s accumulation stage. The peak memory allocations to the stack from both programs is constant with respect to the number of nonzero entries, both allocating a negligible 1.9KB peak to the stack.

5.4 Imbalanced Contractions

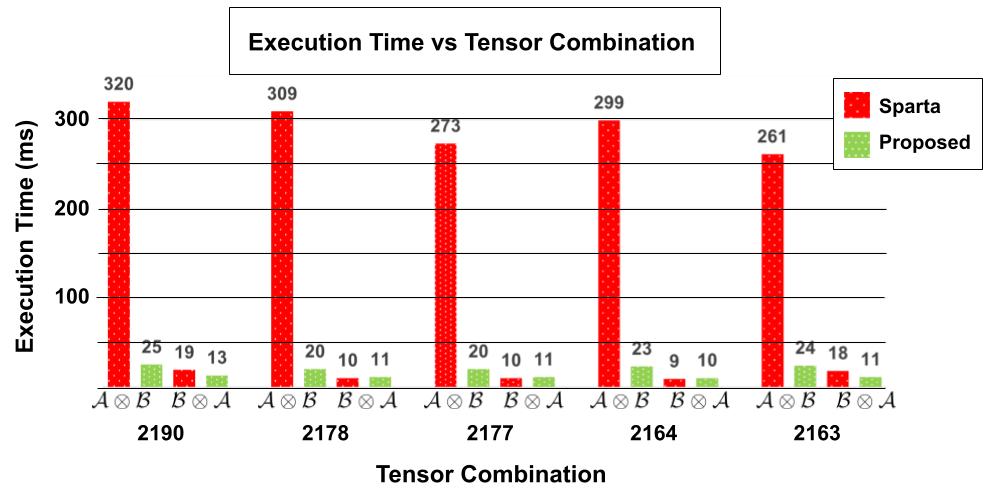

The third set of tensor contractions in Table 1 contains imbalanced operations in which the left tensor has vastly more non-zero elements compared to the right. Although both operations result in the same output tensor, this section compares the difference in contraction time when the order of the tensors in the operation are swapped. This can be important in the case of unknown tensor sizes such as the instance these algorithms are used in a chain of contractions where the output tensor of the previous layer has an unknown number of non-zero elements. The measurements are displayed in Figure 13. As can be seen, the operation is similar for both algorithms, but the operation is very imbalanced and Sparta has much worse performance. This imbalance should be due to the imbalanced time complexity and the difference in tensor representations based on the order of contraction for Sparta, versus Swift which represents both tensors in the same fashion and has a symmetric time complexity. For these extremely imbalanced cases, the Sparta execution time increases by a factor of 26x on average when the order of the operations is swapped, and Swift execution time increases by a factor of 0.96x when the order of operations is swapped.

6 Conclusion

Sparse Tensor Contraction is important for a large range of applications that work with higher dimensional data. There are numerous challenges, the most prominent of which are irregular memory access patterns, unknown output tensor size, and high amounts of intermediate data. The state-of-the-art handles these problems through the use of hash table based representation of tensor , sorting tensor , and using chaining hash tables to place intermediate entries into a dynamic array. This work presents alterations to key stages that are shown to improve both the performance of the operation and time complexity compared to the state-of-the-art sparse tensor contraction algorithm. The most important changes are the grouping of tensor to improve performance and time complexity, representing tensor as an array with pointers to divisions, and using a probing hash table at the output of accumulation for better memory access time. These improvements are shown to have up to 20 speedup over diverse test cases with a trade-off of slightly increased memory allocation.

7 Acknowledgment

This research was supported, in part, by the National Science Foundation grants 2217028 and 2316203.

References

- \bibcommenthead

- Aprà et al. [2020] Aprà, E., et al.: Nwchem: Past, present, and future. The Journal of Chemical Physics 152(18) (2020) https://doi.org/10.1063/5.0004997

- Fishman et al. [2022] Fishman, M., White, S., Stoudenmire, E.: The itensor software library for tensor network calculations. SciPost Physics Codebases (2022) https://doi.org/10.21468/scipostphyscodeb.4

- Köppl and Werner [2016] Köppl, C., Werner, H.-J.: Parallel and low-order scaling implementation of hartree–fock exchange using local density fitting. Journal of Chemical Theory and Computation 12 (2016) https://doi.org/10.1021/acs.jctc.6b00251

- Li et al. [2022] Li, L., Yu, W., Batselier, K.: Faster tensor train decomposition for sparse data. Journal of Computational and Applied Mathematics 405, 113972 (2022) https://doi.org/10.1016/j.cam.2021.113972

- Pavošević et al. [2016] Pavošević, F., Pinski, P., Riplinger, C., Neese, F., Valeev, E.: Sparsemaps—a systematic infrastructure for reduced-scaling electronic structure methods. iv. linear-scaling second-order explicitly correlated energy with pair natural orbitals. The Journal of Chemical Physics 144, 144109 (2016) https://doi.org/10.1063/1.4945444

- Roberts et al. [2019] Roberts, C., Milsted, A., Ganahl, M., Zalcman, A., Fontaine, B., Zou, Y., Hidary, J., Vidal, G., Leichenauer, S.: TensorNetwork: A Library for Physics and Machine Learning (2019)

- Choy [2020] Choy, C.: Sparse tensor basics¶ (2020). https://nvidia.github.io/MinkowskiEngine/tutorial/sparse_tensor_basic.html

- Kisil et al. [2021] Kisil, I., Calvi, G.G., Konstantinidis, K., Xu, Y.L., Mandic, D.P.: Reducing Computational Complexity of Tensor Contractions via Tensor-Train Networks (2021). https://arxiv.org/abs/2109.00626

- Liu et al. [2021] Liu, J., Ren, J., Gioiosa, R., Li, D., Li, J.: Sparta: high-performance, element-wise sparse tensor contraction on heterogeneous memory. In: Proceedings of the 26th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming. PPoPP ’21, pp. 318–333. Association for Computing Machinery, New York, NY, USA (2021). https://doi.org/10.1145/3437801.3441581 . https://doi.org/10.1145/3437801.3441581

- Xiao et al. [2024] Xiao, G., Yin, C., Chen, Y., Duan, M., Li, K.: Efficient utilization of multi-threading parallelism on heterogeneous systems for sparse tensor contraction. IEEE Transactions on Parallel and Distributed Systems 35(6), 1044–1055 (2024) https://doi.org/10.1109/TPDS.2024.3391254

- Xiao et al. [2022] Xiao, G., Yin, C., Chen, Y., Duan, M., Li, K.: Gsptc: High-performance sparse tensor contraction on cpu-gpu heterogeneous systems. In: 2022 IEEE 24th Int Conf on High Performance Computing & Communications; 8th Int Conf on Data Science & Systems; 20th Int Conf on Smart City; 8th Int Conf on Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys), pp. 380–387 (2022). https://doi.org/10.1109/HPCC-DSS-SmartCity-DependSys57074.2022.00080

- Liu et al. [2021] Liu, J., Li, D., Gioiosa, R., Li, J.: Athena: high-performance sparse tensor contraction sequence on heterogeneous memory. In: Proceedings of the 35th ACM International Conference on Supercomputing. ICS ’21, pp. 190–202. Association for Computing Machinery, New York, NY, USA (2021). https://doi.org/10.1145/3447818.3460355 . https://doi.org/10.1145/3447818.3460355

- Srivastava et al. [2020] Srivastava, N., Jin, H., Smith, S., Rong, H., Albonesi, D., Zhang, Z.: Tensaurus: A versatile accelerator for mixed sparse-dense tensor computations. In: 2020 IEEE International Symposium on High Performance Computer Architecture (HPCA), pp. 689–702 (2020). https://doi.org/10.1109/HPCA47549.2020.00062

- Hegde et al. [2019] Hegde, K., Asghari-Moghaddam, H., Pellauer, M., Crago, N., Jaleel, A., Solomonik, E., Emer, J., Fletcher, C.W.: Extensor: An accelerator for sparse tensor algebra. In: Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture. MICRO ’52, pp. 319–333. Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3352460.3358275 . https://doi.org/10.1145/3352460.3358275

- Pal et al. [2018] Pal, S., Beaumont, J., Park, D.-H., Amarnath, A., Feng, S., Chakrabarti, C., Kim, H.-S., Blaauw, D., Mudge, T., Dreslinski, R.: Outerspace: An outer product based sparse matrix multiplication accelerator. In: 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA), pp. 724–736 (2018). https://doi.org/10.1109/HPCA.2018.00067

- Paszke et al. [2019] Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., Desmaison, A., Kopf, A., Yang, E., DeVito, Z., Raison, M., Tejani, A., Chilamkurthy, S., Steiner, B., Fang, L., Bai, J., Chintala, S.: Pytorch: An imperative style, high-performance deep learning library. In: Wallach, H., Larochelle, H., Beygelzimer, A., Alché-Buc, F., Fox, E., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 32. Curran Associates, Inc., ??? (2019). https://proceedings.neurips.cc/paper_files/paper/2019/file/bdbca288fee7f92f2bfa9f7012727740-Paper.pdf

- Abadi et al. [2016] Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., Kudlur, M., Levenberg, J., Monga, R., Moore, S., Murray, D.G., Steiner, B., Tucker, P., Vasudevan, V., Warden, P., Wicke, M., Yu, Y., Zheng, X.: TensorFlow: A system for Large-Scale machine learning. In: 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), pp. 265–283. USENIX Association, Savannah, GA (2016). https://www.usenix.org/conference/osdi16/technical-sessions/presentation/abadi

- Bender et al. [2022] Bender, M.A., Farach-Colton, M., Kuszmaul, J., Kuszmaul, W., Liu, M.: On the optimal time/space tradeoff for hash tables. In: Proceedings of the 54th Annual ACM SIGACT Symposium on Theory of Computing. STOC 2022, pp. 1284–1297. Association for Computing Machinery, New York, NY, USA (2022). https://doi.org/10.1145/3519935.3519969 . https://doi.org/10.1145/3519935.3519969

- Cichocki [2014] Cichocki, A.: Era of Big Data Processing: A New Approach via Tensor Networks and Tensor Decompositions (2014). https://arxiv.org/abs/1403.2048

- Zhong et al. [2024] Zhong, K., Zhu, Z., Dai, G., Wang, H., Yang, X., Zhang, H., Si, J., Mao, Q., Zeng, S., Hong, K., Zhang, G., Yang, H., Wang, Y.: Feasta: A flexible and efficient accelerator for sparse tensor algebra in machine learning. In: Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 3. ASPLOS ’24, pp. 349–366. Association for Computing Machinery, New York, NY, USA (2024). https://doi.org/10.1145/3620666.3651336 . https://doi.org/10.1145/3620666.3651336

- Smith et al. [2015] Smith, S., Ravindran, N., Sidiropoulos, N.D., Karypis, G.: Splatt: Efficient and parallel sparse tensor-matrix multiplication. In: 2015 IEEE International Parallel and Distributed Processing Symposium, pp. 61–70 (2015). https://doi.org/10.1109/IPDPS.2015.27

- Li et al. [2016] Li, J., Ma, Y., Yan, C., Vuduc, R.: Optimizing Sparse Tensor Times Matrix on Multi-core and Many-Core Architectures (2016). https://doi.org/10.1109/IA3.2016.010

- Li et al. [2018] Li, J., Sun, J., Vuduc, R.: Hicoo: Hierarchical storage of sparse tensors. In: SC18: International Conference for High Performance Computing, Networking, Storage and Analysis, pp. 238–252 (2018). https://doi.org/10.1109/SC.2018.00022

- Nisa et al. [2019] Nisa, I., Li, J., Sukumaran-Rajam, A., Rawat, P.S., Krishnamoorthy, S., Sadayappan, P.: An efficient mixed-mode representation of sparse tensors. In: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis. SC ’19. Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3295500.3356216 . https://doi.org/10.1145/3295500.3356216

- Li et al. [2020] Li, J., Lakshminarasimhan, M., Wu, X., Li, A., Olschanowsky, C., Barker, K.: A sparse tensor benchmark suite for cpus and gpus. In: 2020 IEEE International Symposium on Workload Characterization (IISWC), pp. 193–204 (2020). https://doi.org/%****␣sn-article.bbl␣Line␣525␣****10.1109/IISWC50251.2020.00027

- Li et al. [2016] Li, J., Ma, Y., Yan, C., Vuduc, R.: Optimizing sparse tensor times matrix on multi-core and many-core architectures. In: Proceedings of the Sixth Workshop on Irregular Applications: Architectures and Algorithms, pp. 26–33. IEEE Press, ??? (2016)

- Cafiero [2023] Cafiero, C.: Hash collisions. The University of Vermont. Available at: https://uvm.edu/~cbcafier/cs2240/content/09_hashing/03_collisions.html (2023)

- Kulp et al. [2024] Kulp, G., Ensinger, A., Chen, L.: FLAASH: Flexible Accelerator Architecture for Sparse High-Order Tensor Contraction (2024). https://arxiv.org/abs/2404.16317

- Srivastava et al. [2020] Srivastava, N., Jin, H., Liu, J., Albonesi, D., Zhang, Z.: Matraptor: A sparse-sparse matrix multiplication accelerator based on row-wise product. In: 2020 53rd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), pp. 766–780 (2020). https://doi.org/10.1109/MICRO50266.2020.00068

- Cohen [2015] Cohen, W.W.: Enron Email Dataset. Available at: =https://www.cs.cmu.edu/ enron/, journal=Enron email dataset (2015)

- Pang et al. [2005] Pang, R., Allman, M., Bennett, M., Lee, J., Paxson, V., Tierney, B.: A first look at modern enterprise traffic. In: Proceedings of the 5th ACM SIGCOMM Conference on Internet Measurement. IMC ’05, p. 2. USENIX Association, USA (2005)

- Viswanath et al. [2009] Viswanath, B., Mislove, A., Cha, M., Gummadi, K.P.: On the evolution of user interaction in facebook. In: Proceedings of the 2nd ACM Workshop on Online Social Networks. WOSN ’09, pp. 37–42. Association for Computing Machinery, New York, NY, USA (2009). https://doi.org/10.1145/1592665.1592675 . https://doi.org/10.1145/1592665.1592675

- Esslinger [2010] Esslinger, T.: Fermi-hubbard physics with atoms in an optical lattice. Annual Review of Condensed Matter Physics 1(1), 129–152 (2010) https://doi.org/10.1146/annurev-conmatphys-070909-104059