11email: [email protected] 22institutetext: Department of Computer Science, Faculty of Fundamental Problems of Technology, Wrocław University of Science and Technology, Wrocław, Poland 33institutetext: Faculty of Electronics, Wrocław University of Science and Technology, Wrocław, Poland

SUPPNet: Neural network for stellar spectrum normalisation

Abstract

Context. Precise continuum normalisation of merged échelle spectra is a demanding task necessary for various detailed spectroscopic analyses. Automatic methods have limited effectiveness due to the variety of features present in the spectra of stars. This complexity often leads to the necessity of manual normalisation which is a time demanding task.

Aims. The aim of this work is to develop a fully automated normalisation tool that works with order-merged spectra and offers flexible manual fine-tuning, if necessary.

Methods. The core of the proposed method uses the novel fully convolutional deep neural network (SUPP Network) that was trained to predict a pseudo-continuum. The post-processing step uses smoothing splines that gives access to regressed knots useful for optional manual corrections. The active learning technique was applied to deal with possible biases that may arise from training with synthetic spectra and to extend the applicability of the proposed method to features absent in this kind of spectra.

Results. The developed normalisation method was tested with high-resolution spectra of stars having spectral types from O to G, and gave root mean squared (RMS) error over the set of test stars equal 0.0128 in the spectral range from 3900 Å to 7000 Å and 0.0081 in the range from 4200 Å to 7000 Å. Experiments with synthetic spectra give RMS of the order of 0.0050.

Conclusions. The proposed method gives results comparable to careful manual normalisation. Additionally, this approach is general and can be used in other fields of astronomy where background modelling or trend removal is a part of data processing. The algorithm is available online: https://git.io/JqJhf.

Key Words.:

Techniques: spectroscopic – Methods: numerical – Stars: general – Line: profiles1 Introduction

Electromagnetic spectra of astronomical objects such as stars, galaxies or exoplanets are a very abundant source of information and enable us to study the physics of the objects in detail. Low-resolution spectra are closely related to photometry and can be used to determine basic properties of stars, like effective temperatures, surface gravities or metallicities. High-resolution spectra allow us to investigate profiles of separate absorption and emission lines in detail, and make it possible to measure individual abundances of elements, understand the vertical structure of a stellar atmosphere, and study its velocity fields (e.g. microturbulence, macroturbulence, rotation, stellar oscillations, granulation).

The continuum-normalisation process is an important part of spectrum preprocessing because usually for the next steps of the analysis the pseudo-continuum should be correctly subtracted. The problem of continuum normalisation is non-trivial, as several factors are responsible for the shape of a spectrum, including real stellar continuum shape, the Earth atmosphere, characteristics of optical and electronic components of a spectrograph, presence of cosmic rays, and last but not least, residuals introduced by pipelines used by observatories to calibrate and reduce spectra, due to, for example, imperfect orders merging or blaze function removal. Several existing normalisation methods are worth mentioning, e.g. filtering methods (moving window maximum filtering, asymmetric sigma clipping, etc.) followed by low order polynomial fitting, smoothing in the frequency domain, and methods based on the concept of convex-hull and alpha-shape theories (Xu et al., 2019; Cretignier et al., 2020). They all try to remove spectral lines from the spectrum and then fit a low-order polynomial or spline function to the remaining pseudo-continuum, and they all contain some free parameters that need to be adjusted manually. For a broad overview of methods and problems present in the normalisation of stellar spectra we recommend the article of Cretignier et al. (2020).

Normalisation methods based on the polynomial fitting and frequency domain filtering suffer due to the trade-off between the treatment of rapidly changing parts of a continuum (e.g. ripples often present in merged échelle spectra) and the presence of wide spectral features (e.g. hydrogen lines or molecular bands). These limitations can be partially overcome by introducing some adaptive penalised least-squares terms in the minimisation objective. This approach is extensively used in the field of Raman spectroscopy (Cadusch et al., 2013). Methods based on the concept of convex hulls also have some limitations. They assume that the local maximum is a good indicator of a stellar continuum. In most cases, this is a reasonable attitude, but breaks for spectra with emission features present and in regions with extensively blended lines, where the continuum is well above the measured spectrum (e.g. in the optical spectra of G or later stars). All these methods share the problem that features located in regions covered with spectral lines are lost. This does not allow for the effective recovery of the pseudo-continuum shape in the parts of spectra mentioned above. The continuum in these parts is only smoothed to match the surroundings of the line. Additionally, those methods are particularly sensitive to the level of noise, projected rotational velocity, and resolution of the instrument used to observe the spectra.

These limitations can be overcome when we have a template for a given spectrum. Normalisation can then be reduced to dividing the spectrum by the model and then using the selected trend fitting tool, e.g. adaptive spline functions, to model a pseudo-continuum. In this case, the challenge is to successfully find a model for the spectrum, the parameters of which are unknown at the first stage of the analysis. In turn, the proposed approach utilising information from the entire spectrum, also from areas with spectral lines, is resistant to the above-mentioned problems and can be used in a fully automatic manner, offering, at the same time, the flexibility of manual corrections, if necessary.

Nonetheless, an astronomer experienced in spectrum normalisation is able to fit a pseudo-continuum taking most of the complexities into account, especially when normalisation is done iteratively during model fitting. One of the tools that make the fully manual continuum fitting and initial stellar parameters’ estimation straightforward is the application HANDY111https://rozanskit.com/HANDY/. However, manual normalisation has several important drawbacks. It is very time-consuming, prone to human biases, and not reproducible. The manual normalisation cannot be done reliably in regions covered by wide lines and line blends when synthetic spectra are not used as a reference. Manual normalisation strengths suggest that the key points are the understanding of a spectrum and the experience with features that may appear in the pseudo-continuum. These findings suggest that machine learning algorithms are promising tools to overcome mentioned limitations.

The use of machine learning algorithms becomes more and more frequent in physical sciences (Carleo et al., 2019), including astronomy (Ball & Brunner, 2010; Baron, 2019). This progress becomes possible in recent years due to important developments in the algorithms of machine learning, especially in the neural network field, and due to the constant and rapid increase of available computational power. On the other hand, astronomy delivers more numerous and complex astrometric (e.g. Gaia mission Gaia Collaboration et al., 2016), photometric (e.g. Zwicky Transient Facility and future Vera Rubin Observatory with Legacy Survey of Space and Time Mahabal et al., 2019; Ivezić et al., 2019) and spectroscopic (e.g. ESO database222https://archive.eso.org/cms/data-portal.html; APOGEE, Majewski et al. (2017); LAMOST, Zhao et al. (2012)) databases, which grow in size rapidly. Machine learning was applied for various tasks in the field of astronomy, e.g. real-time detection of gravitational waves and parameter estimation (George & Huerta, 2018), estimation of effective temperatures and metallicities of M-type stars (Antoniadis-Karnavas, A. et al., 2020), estimation of initial parameters for asteroseismic modelling (Hendriks & Aerts, 2019), classification of diffuse interstellar bands (Hendriks & Aerts, 2019) and morphological segmentation of galaxies (Farias et al., 2020).

We explore deep artificial neural networks (DNN) in search of architectures that can deal with a pseudo-continuum prediction task. We propose a new algorithm, based on the neural network SUPPNet, that achieves results comparable to human professionals.

2 Machine Learning and spectrum normalisation

Up to our knowledge, this is the first approach towards automated stellar spectrum normalisation using tools rooted in the deep learning field. From the above examples, it is clear that fundamental limitations of the previous methods are: an implicit assumption that a local maximum in a spectrum is a good indicator of a pseudo-continuum; the necessity of manual adjustments of several parameters; often poor results when the pseudo-continuum passes trough emission features; ripples that arise from orders merging (échelle spectra). Deep neural networks are natural candidates to overcome these limitations, as in principle they are capable to grasp complex priors and approximate any function. It means that a suitable neural network model after training on a representative dataset should learn to recognise a type of spectrum and a pseudo-continuum and be able to fit the pseudo-continuum correctly in most cases. In this approach, the quality of a spectrum normalisation algorithm is restricted by the generality and quality of an available training set.

To fit the normalisation problem in the deep learning framework we consider spectrum normalisation as filtering from the domain of spectrum measurement to the domain of possible pseudo-continua. As a filter, that is flexible enough to implement such a mapping, we used a fully convolutional neural network of novel architecture, which is mainly based on the work done in the field of computer vision, in particular in the semantic segmentation problem.

2.1 Training data description

A machine learning model can be as good as data used for its training but not any better. Because of that, much attention was paid to preparing the training data properly. We applied the active learning technique so we prepared two datasets: the first, composed of synthetic spectra only, and the second based on automatically normalised and manually corrected observational spectra. In this work, we denote supplementation of the training process with spectra and pseudo-continua from manual normalisation as active machine learning.

We started by preparing an extensive set of synthetic continuum-normalised spectra. We used SYNTHE/ATLAS (Kurucz, 1970) codes to compute 10000 spectra with randomly selected atmospheric parameters (effective temperature, , ranging from 3000 K to 30000 K and logarithm of surface gravity, , from 1.0 to 5.5). We also used BSTAR and OSTAR grids (Lanz & Hubeny, 2003, 2007), which together span effective temperature range from 15000 K to 55000 K, and logarithm of surface gravity from 1.75 to 4.75 (not in the whole range of temperatures). The main difference between these two sources of spectra is a different treatment of atomic levels populations. The ATLAS code solves the classical stellar atmosphere problem, and SYNTHE computes the synthetic spectrum assuming local thermodynamic equilibrium (LTE), which means that level populations are calculated using the Boltzmann distribution and the Saha ionisation equation. In the case of BSTAR and OSTAR grids, they were calculated using SYNSPEC/TLUSTY codes that explicitly solve rate equations for a chosen set of levels. This is especially important for hotter stars, where the radiative processes dominate the collisional transitions and non-LTE effects become prominent. Including non-LTE effects leads to changes of line depths and, what is more important, frequently results in the appearance of emission features.

Some families of analytical functions (sinusoidal, smoothed sawtooth, Akima spline with 5 knots) and also some continua coming from manual normalisation were used as artificial pseudo-continua shapes. To build the first training dataset the spectrum from the set of prepared synthetic spectra was sampled, part of it was randomly chosen. Next, it was convolved with a random broadening kernel and finally multiplied by an artificial continuum. This procedure repeated about 150000 times gave a large set composed of diverse training samples. The broadening kernel included rotation, macroturbulence and instrumental broadening. Rotation and macroturbulence velocities were chosen randomly in physically reasonable ranges. A projected rotation velocity () range depends on effective temperature and is drawn from a uniform distribution with the lower boundary equal to zero, and the upper one successively equal to 50, 100, 200, 300, 400 km s-1 in the ranges (¡ 5000), (5000, 6000), (6000, 7500), (7500, 10000) and (¿10000) K, respectively. This distribution is based on the discussion presented by Royer (2009). The macroturbulence velocity, , was drawn from the same uniform distribution in the range from 0 to 30 km s-1, regardless of effective temperature. The chosen instrumental resolution (from 40000 to 120000) covers a range of the most available high-resolution spectrographs.

The second stage of learning used the training set extended by the active dataset, which is composed of automatically normalised and manually corrected observational spectra (application and description of active learning in astronomical context can be found in the work by Škoda et al., 2020). First, the model trained on synthetic data was used to normalise spectra from UVES Paranal Observatory Project (UVES POP333https://www.eso.org/sci/observing/tools/uvespop.html; Bagnulo et al., 2003) (IC 2391, NGC 6475, and the brightest stars of southern sky, resolution equal 80000), and the set of FEROS444https://www.eso.org/sci/facilities/lasilla/instruments/feros.html spectra (153 spectra with without object duplicates, resolution equal 48000). Then normalisation was manually checked and carefully corrected for each automatically processed spectrum. That resulted in a set of around 250 normalised spectra and pseudo-continua fits, making up the active dataset.

2.2 Tasks description

Initial tests of deep learning methods in stellar spectrum processing focused on two distinct tasks: segmentation of spectrum into pseudo-continuum and non-pseudo-continuum parts, and pseudo-continuum prediction. The segmentation can be considered as a subclass of a classification problem. It aims to predict the class that a segment of input data belongs to. The segment can be as small as one pixel in the case of image segmentation or flux measurement at a given wavelength (sample) in the case of one-dimensional spectral data segmentation. In our case we classify spectrum sample-wise into two classes: ”continuum”, and ”non-continuum” (see the bottom panel of Fig. 1 for an example).

It can be given by a function , where , is the number of samples in the input and the output, corresponds to the pseudo-continuum class, and to the non-pseudo-continuum class. It is important to note that the result returned by the last layer of a neural network is composed of real numbers in the range from 0 to 1. Thresholding must be applied to obtain discrete classes, . The common choice used also in this work is . Spectrum segmentation was considered here as an auxiliary task and as a potential regularisation technique (Kukačka et al., 2017).

A functional form of continuum prediction, which is a multidimensional regression, differs slightly from the segmentation and is given by , with the same notation as above. The co-domain is here over the real numbers, instead of a discrete set of values that represent different classes. An exemplary result of such a prediction for both, segmentation and a pseudo-continuum prediction task is given in Fig. 1.

2.3 Loss functions, metrics and optimisers

A neural network learning needs an optimisation algorithm that adjusts well free parameters of the model to the approximate trained function. The quality of the obtained mapping can be evaluated using various loss functions and metrics. A loss function denotes a differentiable function whose value is optimised during the training process by the algorithm that iteratively adjusts free parameters of a neural network under the training. A metric is a function that is used to evaluate the performance of the model but is not directly used for optimisation during the training. Metric functions can be non-differentiable. The adopted naming convention comes from the TensorFlow machine learning library (Abadi et al., 2015).

The cross-entropy loss function is often used for segmentation:

where is a number of samples, is a vector of target classes for i-th sample, and is a vector of the model’s output for the same sample. equals only if i-th sample belongs to j-th class, so , where M is a number of classes. The cross-entropy equals zero if a model perfectly assigns classes to samples. In this work, the binary-cross-entropy loss was applied as there are only two distinct classes. The accuracy, which is given by the number of correct predictions divided by the number of all predictions was used as a metric. Here, in the case of the binary segmentation, the , where TP denotes the number of true positive predictions, TN corresponds to true negative, and gives the number of samples.

The mean squared error (MSE) was used as a loss function for the pseudo-continuum prediction. It is given by:

where denotes the number of samples, is a vector of target values, and is an estimated vector. MSE is a standard loss function for a regression problem. The mean absolute error (MAE) was used as a metric for this task. The functional form of MAE is given by:

All performed experiments use the Nesterov-accelerated Adaptive Moment Estimation optimiser (Nadam; Dozat, 2016), which iteratively adjusts free parameters of a neural network during a training process. It is based on two main concepts. The first is Nesterov’s momentum which is beneficial in dimensions of an objective function with small curvature that consistently points one direction (Nesterov, 1983). The second concept is the adaptive learning rate that is able to compute individual learning rates based on the first and the second moments of gradients (Adam; Kingma & Ba, 2017), and works well with sparse gradients and non-stationary objectives.

2.4 Exploratory neural network tests

Initial exploration of neural network architectures was focused on tasks of pseudo-continuum prediction and spectrum segmentation. As these problems are inherently one-dimensional (inputs and targets are sequential data), we have implemented and tested one-dimensional versions of several neural network architectures that were successfully used in the field of image segmentation. We expected that advances made in the two-dimensional domain, with necessary adaptations and some minor changes, could be adopted for the processing of one-dimensional signals that stellar spectra are. The purpose of these tests was to check this hypothesis. To some extent, the method to find the best neural network follows the work of Radosavovic et al. (2020).

The selection of potential architectures was based in particular on the paper of Hoeser & Kuenzer (2020), which gives a detailed overview of both, the historical development and current state-of-the-art solutions. The architectures selected for the experiments were: Fully Convolutional Network (FCN, Long et al., 2015), Deconvolution Network (DeconvNet, Noh et al., 2015), U-Net (Ronneberger et al., 2015), UNet++, (Zhou et al., 2018), Feature Pyramid Network (FPN, Lin et al., 2017; Kirillov et al., 2019), and Pyramid Scene Parsing Network (PSPNet, Zhao et al., 2017). Additionally, a new architecture – UPPNet, which is an extension of the U-Net architecture was proposed.

The same training and evaluation scheme was used for all tests to make the comparison informative. Each tested neural network is composed of a body and a prediction head. The body is the part sampled from the adopted parameters space, considering the type of architecture and its free hyper-parameters (e.g. a number of layers, etc.). As an input, it takes a one-dimensional spectrum (vector of the length equals 8192) and outputs several feature maps of the same length as the input spectrum (e.g. in the case of a body that return 8 feature maps, a matrix of size (8192,8) is an output). In the context of a one-dimensional spectrum, feature maps can represent different shapes of spectral lines. Schematic diagrams of all tested bodies can be found in Figs. 12-18 in Appendix A. As the main building block, all networks use residual bottleneck blocks with group convolution (Xie et al., 2016), referred here as residual blocks (RB). Hyper-parameters of RBs are: a number of feature maps, a group width and a bottleneck ratio (in all experiments the bottleneck ratio was fixed to one, see Radosavovic et al. (2020)). The head takes feature maps from the body part as an input and returns a final prediction. All heads share the same architecture, i.e. have three point-wise convolutional layers (LeCun et al., 1989) with the number of features equal 64, 32 followed by 1. In the first two layers the ReLU () activation function was used, while in the third one, it was the softmax function for segmentation and ReLU for pseudo-continuum prediction. The exception is the Fully Convolutional Network, whose architecture does not allow for this consistent approach. In this case, we skipped some of the tests (details are given in the architecture description below).

One hundred neural architecture realisations with a number of free parameters ranging from 200000 to 300000 were sampled from each architecture and trained in a low-data regime (5000 training samples from the synthetic training dataset, 30 epochs training, where epoch means single complete pass through the training data) in a pseudo-continuum prediction task. Networks’ validation was performed on the validation dataset separated at the beginning from the training set. The obtained distribution of models that can be found in Fig. 2, which shows the probability that the loss value on a validation set of a neural network sampled from a given architecture will be lower than a corresponding abscissa value. For example, approximately 60 percent of neural networks of the UPPNet (full) architecture have a loss function value lower than . This plot shows that neural networks of different architectures differ in the concentration of high-quality models in their parameters space and in the mean squared error of the best neural network. The UPPNet (full) can be thus considered the most promising architecture as it gives many relatively good models and also has the best model among all the trained networks.

In the second step of exploration, the best neural network from each architecture was picked and trained in segmentation/pseudo-continuum prediction, and also in both tasks simultaneously. In the case of the simultaneous training neural networks were equipped with two independent prediction heads. This training covered the entire synthetic dataset and lasted 150 epochs, where epoch means a single complete pass through the training data. The metrics were calculated on the same validation dataset as in the first step of exploration. The first 100 epochs used a learning rate equal and a learning rate equal was used for the remaining steps.

Brief summary of the results can be found in Table 1. Mean absolute error or accuracy is shown for each architecture and task. The best value in each column is given in bold. UPPNet (full), trained on both tasks simultaneously is leading in pseudo-continuum prediction with MAE equal 0.0110, while in segmentation the best is UPPNet (sparse) trained only in the segmentation task, with accuracy equal 0.9166. The best, when trained in pseudo-continuum prediction only, is the U-Net network, with MAE equal to 0.0112. Training in both tasks simultaneously results in a systematic decrease in the segmentation quality but has little effect on the pseudo-continuum estimation. An overview of original models used in the image semantic segmentation, with a short note about their one-dimensional versions with the results and the conclusions is provided below.

| Architecture | C | S | C&S | |

|---|---|---|---|---|

| C | S | |||

| FCN | 0.0580 | 0.8877 | – | – |

| DeconvNet | 0.0124 | 0.8991 | 0.0128 | 0.8763 |

| U-Net | 0.0112 | 0.9105 | 0.0119 | 0.8866 |

| UNet++ | 0.0146 | 0.8945 | 0.0133 | 0.8679 |

| FPN | 0.0125 | 0.9063 | 0.0119 | 0.8887 |

| PSPNet | 0.0115 | 0.9154 | 0.0119 | 0.8914 |

| UPPNet (sparse) | 0.0122 | 0.9166 | 0.0126 | 0.8857 |

| UPPNet (full) | 0.0116 | 0.9065 | 0.0110 | 0.9033 |

| SUPPNet (synth) | - | - | 0.0092 | 0.9132 |

2.4.1 Fully Convolutional Network

This type of convolutional neural network (CNN) was proposed by Long et al. (2015) and influenced the whole field of the image semantic segmentation – most of the following architectures used in the semantic segmentation are also fully convolutional neural networks. This end-to-end trainable model uses a VGG-16 network (Simonyan & Zisserman, 2015) as a backbone. This is a simple network that contains 16 trainable layers. The segmentation prediction is a function of feature maps previously upsampled by trained deconvolution layers from several intermediate representations, and merged by summation. This design gives spatial resolution and access to high level semantic classes. In the context of one-dimensional spectrum processing, high level semantic information can for example correspond to the presence of emission or wide absorption features in a spectrum. In all the following networks it is always the main point: to transfer somehow to the final segmentation result the information about precise localisation that the input image contains, but also to discover the class, that complex objects present in the input belong to. The schematic diagram of this architecture can be found in Appendix A in Fig. 12.

FCN architecture does not fit the comparison strategy used in our work, but we include it here as a prototype for all fully convolutional neural networks. It forms the low-resolution prediction, gradually refines it, and increases its resolution by upsampling and addition. This is contrary to other network architectures, that form high resolution features used by a neural network head for the final prediction. Due to these characteristics, the FCN network that predicts pseudo-continuum and segmentation simultaneously was not implemented.

In the context of stellar spectra processing, this network has the worst results among all tested architectures. MAE of the predicted pseudo-continuum equals 0.0580 and the accuracy of segmentation is 0.8877. It shows that strategy of upsampling low-resolution prediction is not the efficient approach to the investigated problems.

2.4.2 Deconvolution Network

This network (Noh et al., 2015) is an example of a so called encoder-decoder architecture. It is composed of two parts, encoder – narrowing part, which is the VGG-16 network, and decoder – widening part, the mirrored VGG-16 where pooling is replaced with unpooling layers that use spatial positions of elements pooled in the decoder. This means the localisation information is forwarded to the final segmentation output. The schematic diagram of this architecture can be found in Appendix A in Fig. 13.

One-dimensional version of this architecture gives moderate results (separate training: , ). Training in both tasks decreases prediction quality (C&S training: , ). To accurately preserve spatial location information, which is a key to accurately predict pseudo-continuum and segmentation, is the challenge with this architecture. We believe that this is the reason for the moderate performance of this network.

2.4.3 U-Net

The U-Net architecture proposed by Ronneberger et al. (2015) is a very successful and broadly exploited model. It is another encoder-decoder network. Its encoder is composed of five convolutional blocks that double the number of feature maps at each stage, and that are separated by maximum pooling layer (comparison of pooling methods can be found in Scherer et al., 2010) with size and stride. The decoder is also composed of five blocks and upsamples the low resolution – high semantic level feature maps, but in contrary to Deconvolution Network, blocks do not use only pooling indices but also concatenate the encoder feature map of the corresponding resolution. This is an example of a widely used concept of skipped connections, that helps to propagate the gradient in the training process, and helps the network to recover spatial localisation of objects in the input image. The blocks use convolution kernels, except the final layer which uses convolution for the final prediction. The schematic diagram of this architecture can be found in Appendix A in Fig. 14.

Tested implementation of one-dimensional U-Net architecture gives very good results in both segmentation and pseudo-continuum prediction (separate training: , ). Its pseudo-continuum prediction MAE is the lowest among networks with one output. Simultaneous training to predict both targets does not lead to any improvements (C&S training: , ). This simple architecture is a very strong baseline for any further experiments.

2.4.4 UNet++

UNet++ (Zhou et al., 2018), is an extension of U-Net architecture. It replaces the skipped connections with densely connected blocks and uses deep supervision for regularisation. The authors of the original article argue that the proposed connection scheme bridges a semantic gap between feature maps obtained in encoder and decoder part. The schematic diagram of this architecture can be found in Appendix A in Fig. 15.

In the original work, the authors used intermediate supervision, but we do not use it for the sake of fair comparison to other architectures. Although in theory UNet++ is superior to simpler U-Net architecture, tested one-dimensional UNet++ network shows poor results (separate training: , ). Training in both tasks simultaneously slightly improves pseudo-continuum quality () but degrades segmentation substantially (). Nonetheless, we suspect that this architecture could outperform U-Net in the regime of bigger networks, where the U-Net performance could potentially saturate.

2.4.5 Feature Pyramid Network

Feature Pyramid Network (FPN) was originally developed as a backbone for a two-stage object detection model and later was used in a panoptic segmentation task (the task that unifies image semantic and instance segmentation., Kirillov et al., 2019). The basic idea is to incorporate the construction of a feature pyramid as a part of neural network architecture. The feature pyramid is implemented as a path that upsamples the features using the nearest neighbour interpolation and uses lateral connections for better spatial localisation of high-resolution feature maps. It is visually similar to the U-Net architecture but conceptually implements a different idea. FPN propagates the same feature maps across different resolutions, while U-Net in principle may alter the feature maps towards the network output. The schematic diagram of this architecture can be found in Appendix A in Fig. 16.

FPN gives promising results in spectra processing (separate training: , ). Both-task training slightly improves the metrics of pseudo-continuum prediction () but worsens segmentation quality ().

2.4.6 Pyramid Scene Parsing Network

Pyramid Scene Parsing Network (PSPNet) (Zhao et al., 2017) is composed of three parts: a backbone network that delivers a feature map, Pyramid Pooling Module (PPM) that helps to introduce contextual information from different parts of an image to the final prediction, and an output convolutional network that is responsible for the final prediction. Usage of the PPM that pools the features on different scales, applies convolution, bilinear upsampling, and concatenate produced features to input the feature map of the module is the novelty. The authors experimentally show that such a lightweight module is able to introduce contextual information in the final prediction. The schematic diagram of this architecture can be found in Appendix A in Fig. 17.

One dimensional version of PSPNet uses PPM depicted schematically in Fig. 3. Although PSPNet does not use skipped connections in between its encoder and decoder, it gives great results (separate training: , ). Its quality slightly degrades when trained on both tasks (C&S training: , ). Its results in pseudo-continuum prediction are very close to results of the U-Net, while in segmentation it achieves results about 0.5% better in accuracy.

2.5 UPPNet

Insights from the previously mentioned architectures have encouraged us to experiment with a different placement of skipped connections together with the use of Pyramid Pooling Modules. This has led to U-Net with Pyramid Pooling Modules (UPPNet). First, we hypothesised that not all skipped connections are needed, but at the same time we suspected that PPMs included at some depths may result in better predictions. The proposed architecture is derived from U-Net by randomly dropping skipped connections or by replacing them with PPM. This version of UPPNet is denoted as sparse. U-Net, DeconvNet, and PSPNet are special cases of this architecture. Next, we decided to experiment with a version of UPPNet which is derived from the U-Net by replacing all skipped connections with PPMs, with an additional PPM module at the bottom of the network. This version of UPPNet is denoted as full. The schematic diagram of UPPNet (full) can be found in Fig. 4.

Figure 2 partially justifies the idea behind sparse UPPNet as there are relatively many networks giving loss values lower that , but Table 1 shows that in full training regime this additional degree of freedom in skipped connections arrangement not necessarily leads to results better than obtained with U-Net and PSPNet (separate training: , , C&S training: , ).

The regular UPPNet (full) architecture generally gives better results. Figure 2 shows that there are relatively many models with low loss value and that the best single network is of this kind. In the training on the full synthetic dataset (see Table 1) the advantage of this network decreases and the quality of pseudo-continuum prediction is comparable to those of U-Net and PSPNet models (separate training: , C&S training: ). Nonetheless, the best result in pseudo-continuum normalisation belongs to UPPNet (full) architecture when trained in both tasks. Additionally, visual inspection of the results of U-Net and UPPNet showed that the latter gives slightly smaller residuals on hydrogen H and H spectral lines which are important for atmospheric parameters’ estimation. The quality of segmentation is moderate in the case of training only in this task (), but is the best among models trained in both tasks simultaneously (). Because of these findings, the final model for pseudo-continuum prediction uses UPPNet (full) architecture as its main building block and is trained in both tasks simultaneously.

3 SUPP Network

Stacked U-Net with Pyramid Pooling Modules (SUPPNet) is a proposed neural network that gives the best results in the pseudo-continuum prediction task (see Table 1). This neural network was inspired by the three following well-known solutions present in machine learning literature: the U-Net architecture (Ronneberger et al., 2015), which is a basic block effective in tasks that combines precise localisation of features with complex semantic concepts, the Pyramid Scene Parsing Module (Zhao et al., 2017), which enables more effective share of contextual information across the whole receptive field, and the Stacked Hourglass Network (Newell et al., 2016), for which repetitive bottom-up processing, in conjunction with deep supervision, allows the network to learn fine-grained predictions.

3.1 Architecture details

SUPPNet is composed of two UPPNet (full) blocks and four prediction heads (Seg 1, Seg 2, Cont 1 and Cont 2; see Fig. 5). The UPPNet module was chosen as the main building block because of its high quality reviewed in Section 2.5. The heads compute final predictions from the high-resolution feature maps created by UPPNet blocks and have simple architecture, described in Section 2.4. The first block is responsible for the coarse prediction of a continuum/non-continuum segmentation mask (Seg 1) and pseudo-continuum (Cont 1). The mentioned outputs are used only while training, for deep supervision (intermediate supervision) (Newell et al., 2016; Zhou et al., 2018), which is beneficial in two ways. First, it helps to deal with the vanishing gradient problem. Second, the deep supervision regularises the neural network model. As an input, the second block takes spectrum, intermediate predictions (Seg 1 and Cont 1) and high-resolution feature maps from the first UPPNet block concatenated together. It outputs improved features that are used by the Seg 2 and Cont 2 heads for final predictions.

Both UPPNet blocks shared hyper-parameters (e.g. a depth, a number of residual blocks in each residual stage, etc.) but not their weights. The choice of hyper-parameters was based on a sampling of 400 UPPNet models that were trained in a low-data regime in a pseudo-continuum prediction task, following the procedure from Section 2.4. Subsequently, the best UPPNet was selected. The depth of this model equals 8 (its narrowing path has 8 pooling layers in total), with the number of residual blocks in residual stages successively equal 1, 1, 1, 2, 2, 5, 6, 7, 10, 7, 6, 5, 2, 2, 1, 1, 1 with the number of filters at each stage equal respectively 12, 16, 16, 20, 24, 32, 44, 44, 44, 44, 44, 32, 24, 20, 16, 16, 12. The narrowing and widening parts are symmetrical with respect to the bottom residual stage. PPM modules in SUPPNet have four filters and use residual stages that are composed of a single residual block. Their group width equals 64 so residual stages effectively use non-grouped convolutions. The total number of free parameters of the proposed SUPPNet approximately equals one million.

3.2 Training details

First, the SUPPNet model was trained on a synthetic dataset only. The training data was fed into the model using a data generator that augmented training examples by randomly cropping the spectra with ratio ranging from 0.7 to 2.5, reflecting the spectrum over the y-axis with 0.5 probability, and adding Gaussian noise to obtain a signal-to-noise ratio from 30 to 500. The ratio equals one means that an input sampling of 0.03 Å was kept. The ratio 0.7 corresponds to a sampling 0.021 Å and a spectral range Å (), and 2.5 to a sampling of 0.075 Å that corresponds to an Åinput window width. In this setting, the average sampling of the training data was equal Åand this is the value used later for prediction.

The Nadam optimiser was used for training as in all other experiments described in this work. A learning rate (lr) equals for the first 100 epochs and was decreased to for the remaining 50 epochs. After these 150 epochs, a learning curve flattened. We did not experiment with different parameters of the Nadam optimiser and left them equal to their default values (, ). A batch size equals 128. We denote this version SUPPNet (synth, S).

For active learning, the SUPPNet (synth) model was loaded and the learning continued for the next 100 epochs (for first 50 epochs , and later ), using the same augmentations but with 10 percent of the synthetic training, examples replaced with spectra and pseudo-continua acquired from manual normalisation. This resulted in SUPPNet (active, A) network.

All neural network outputs were used in the training process. As in previous experiments, a mean squared error was used as a loss for pseudo-continuum regression, and mean binary cross-entropy for segmentation. The MSE loss was multiplied by a factor of 400 to give it the same order of magnitude as loss used in segmentation branches.

3.3 Spectrum processing

The proposed spectrum normalisation method consists of three stages. First, the spectrum is re-sampled with the sampling of 0.05 Å, and the sliding window of the size equal 8192 samples with the shift of 256 samples is applied. This particular shift means that each sample is normalised times. The data prepared in this way is then re-scaled (min-max normalisation is applied). The second step is the application of the SUPP Network. As the post-processing step, the predicted pseudo-continua are scaled back. Finally, the result is calculated as a weighted average over all predictions for each sample. This is also used to obtain a qualitative measure of the model’s inherent uncertainty of both predictions (pseudo-continuum and segmentation).

The processing described above gives final pseudo-continuum and segmentation mask predictions. Because of the noisy pattern of the order of 0.001 of the relative magnitude present in the final result, we recommended an application of additional final post-processing. We used a smoothing spline available in SciPy Python module (Virtanen et al., 2020, function UnivariateSpline). A useful advantage of this approach toward smoothing is the possibility of incorporating the estimated pseudo-continuum errors in the smoothing process. A smoothing spline fit contains a knots arrangement that takes into account the uncertainty of the predicted pseudo-continuum. For example, it places fewer knots in the ranges of greater uncertainty. Example SUPPNet result can be found in Fig. 1.

4 Results

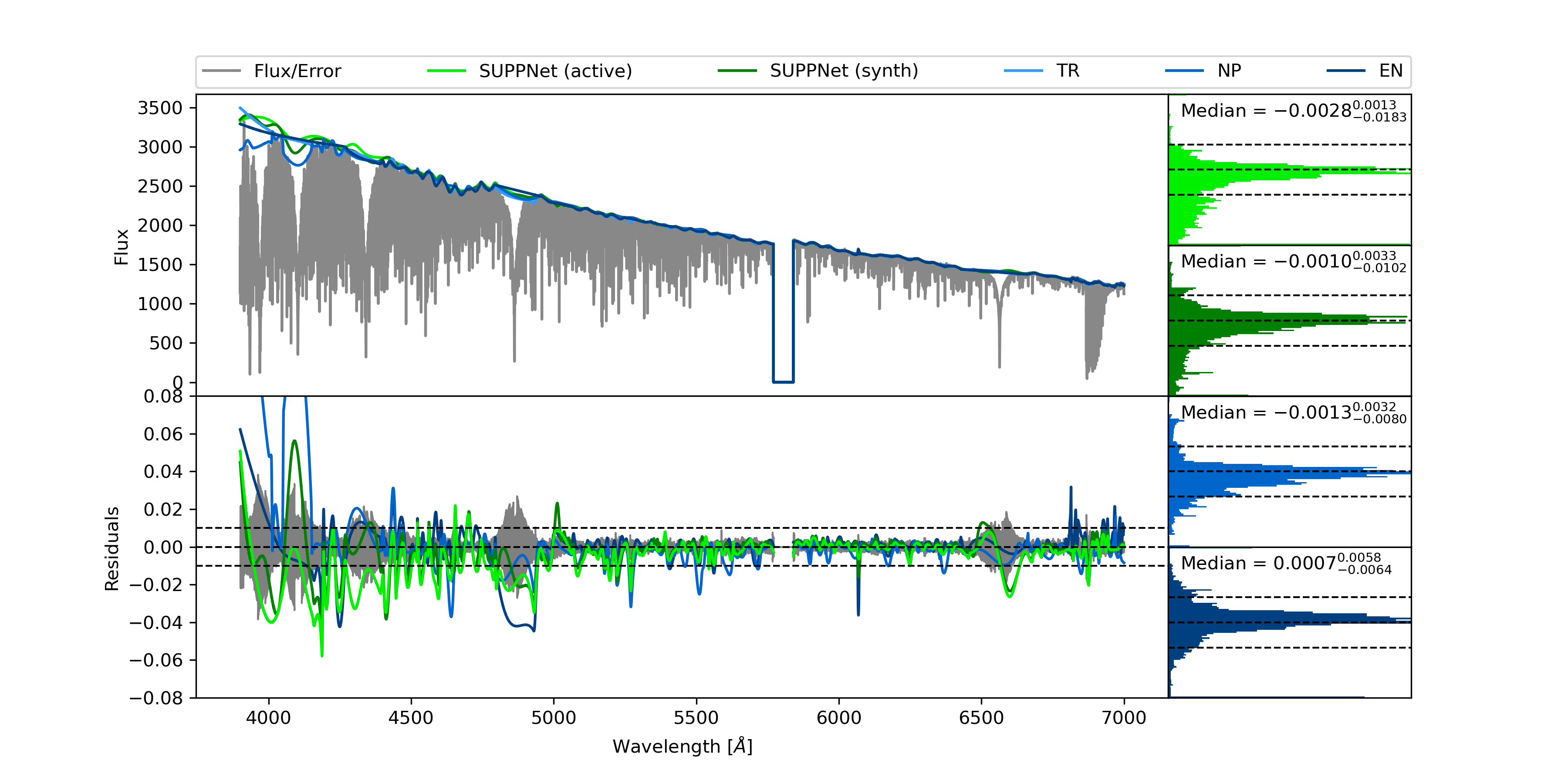

Several statistics were used for SUPPNet normalisation quality assessment. All were computed using residuals between the normalised spectrum (manually or automatically using SUPPNet) and the reference normalised spectrum. Either the synthetic or manually normalised spectrum served as the reference spectrum. Observed spectra analysed here come from UVES POP, while synthetic spectra were computed using ATLAS/SYNTHE codes. Among all normalised UVES POP spectra, six were chosen as representative and were normalised by three of us (TR, EN, and NP). These spectra show most features present in stellar spectra (for a detailed description see the following text and Table 2). Statistics mostly used in this section are the following percentiles: 2.28, 15.87, 50.00 (median), 84.13, and 97.73 and root-mean-square (RMS) error. In the case of normally distributed residuals 15.87–84.13 and 2.28–97.73 ranges would correspond to respectively one- and two-sigma bands.

The significance of the obtained results was tested using the bootstrap method. We tested the hypothesis that median and measure of spread, defined as a difference between 15.87 and 84.13 residuals, are equal in tested groups. In all statements regarding significance, we adopted 95% symmetrical confidence intervals.

| HD number | Name | Spectral type | V [mag] | [K] | [km s-1] | |

|---|---|---|---|---|---|---|

| 155806 | HR 6397 | O7.5 IIIe | 5.53 | - | - | 91 (1) |

| 90882 | Sex | B9.5 V | 5.18 | 10139 (2) | - | 152 (3) |

| 27411 | HR 1353 | A3m | 6.06 | 7600 (4) | 4.0 (4) | 20.5 (4) |

| 37495 | Col | F5 V | 5.31 | 6417 (5) | 3.79 (5) | 27.2 (6) |

| 59967 | HR 2882 | G3 V | 6.64 | 5836 (7) | 4.53 (7) | 3.76 (8) |

| 25069 | HR 1232 | K0 III | 5.83 | 4917 (9) | 3.24 (9) | 3.24 (9) |

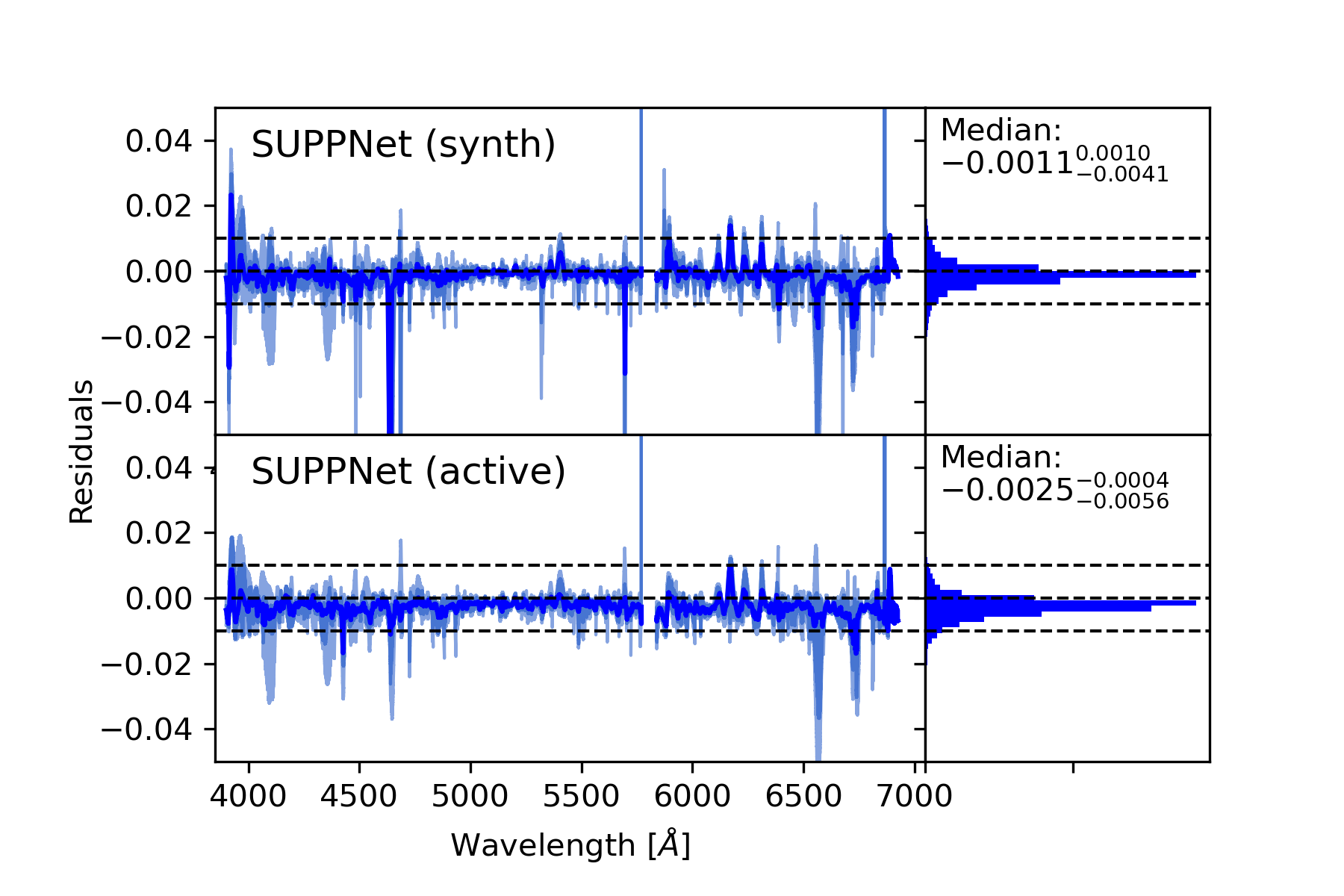

4.1 Synthetic spectra normalisation

To measure normalisation quality and minimise uncertainty introduced by the manual normalisation, the first test used only synthetic spectra. In the beginning, six chosen stars were modelled using ATLAS/SYNTHE codes. Parameters for synthetic spectra were taken from Table 2 and related articles. Missing parameters were manually estimated to be equal K, in the case of HD 155806 (O7.5 IIIe) star, and for HD 90882 (B9.5 V). Then, the synthetic normalised spectrum for each star was multiplied by about 200 different pseudo-continua derived from the manual normalisation. That gave about 1200 spectra in total, which were later normalised using the two versions of SUPPNet (synth and active), and used to calculate normalisation metrics.

| Star | HD 155806 | HD 90882 | HD 27411 | HD 37495 | HD 59967 | HD 25069 |

|---|---|---|---|---|---|---|

| Spectral type | O7.5 V | B9.5 V | A3m | F4 V | G4 V | K0 III |

| SUPPNet (synth) | ||||||

| SUPPNet (active) | ||||||

| SUPPNet (synth) | ||||||

| SUPPNet (active) | ||||||

| NP–TR | ||||||

| EN–TR |

The summary statistics of this experiment can be found in the top two rows of Table 3, in Fig. 6 and Fig. 19 in Appendix B. Medians of residuals, which measure a normalisation bias, are between (SUPPNet active, HD 25069, K0 III) and 0.0017 (SUPPNet synth, HD 27411, A3m). Residuals in the case of SUPPNet trained using active learning are systematically smaller than when trained using only synthetic dataset. This means that the latter places pseudo-continuum systematically lower. The dispersion of residuals measured as a difference between 84.13 and 15.87 percentiles are between 0.0022 (SUPPNet synth, HD 155806, O7.5 IIIe) and 0.0095 (SUPPNet synth, HD 27411, A3m). The residuals are often slightly smaller when active learning is applied. For SUPPNet trained using the active learning the dispersion of residuals is slightly but significantly smaller in the case of HD 27411 (A3m), HD 37495 (G4 V) and HD 25069 (K0 III), is significantly larger for HD 90882 (B9.5 V). There are no statistically significant differences between dispersions of residuals for HD 155806 (O7.5 V) and HD 37495 (F4 V). The detailed inspection of Fig. 6 shows that systematic errors vary significantly across both wavelength and spectra parameters. They are especially prominent for A3m (HD 27411) and F4V (HD 37495) stars, in wavelength shorter than 4500 Å, where they reach 0.03. Figure 7 is a close-up of the 3900–4500 Å wavelength range of the A3 V synthetic spectra with the mean normalisation result and residuals. It can be seen, that a significant normalisation bias arises in a range where the whole spectrum is below the continuum level. Average biases in other wavelength ranges of synthetic spectra’s residuals are generally below 0.01.

We consider this statistics to be close to the realistic normalisation uncertainty in wavelength ranges with spectral features well represented in synthetic models. Nonetheless, normalisation of the synthetic spectra does not allow us to draw conclusions about the normalisation quality of the observed spectra, as they contain additional features (e.g. complex emission spectral lines).

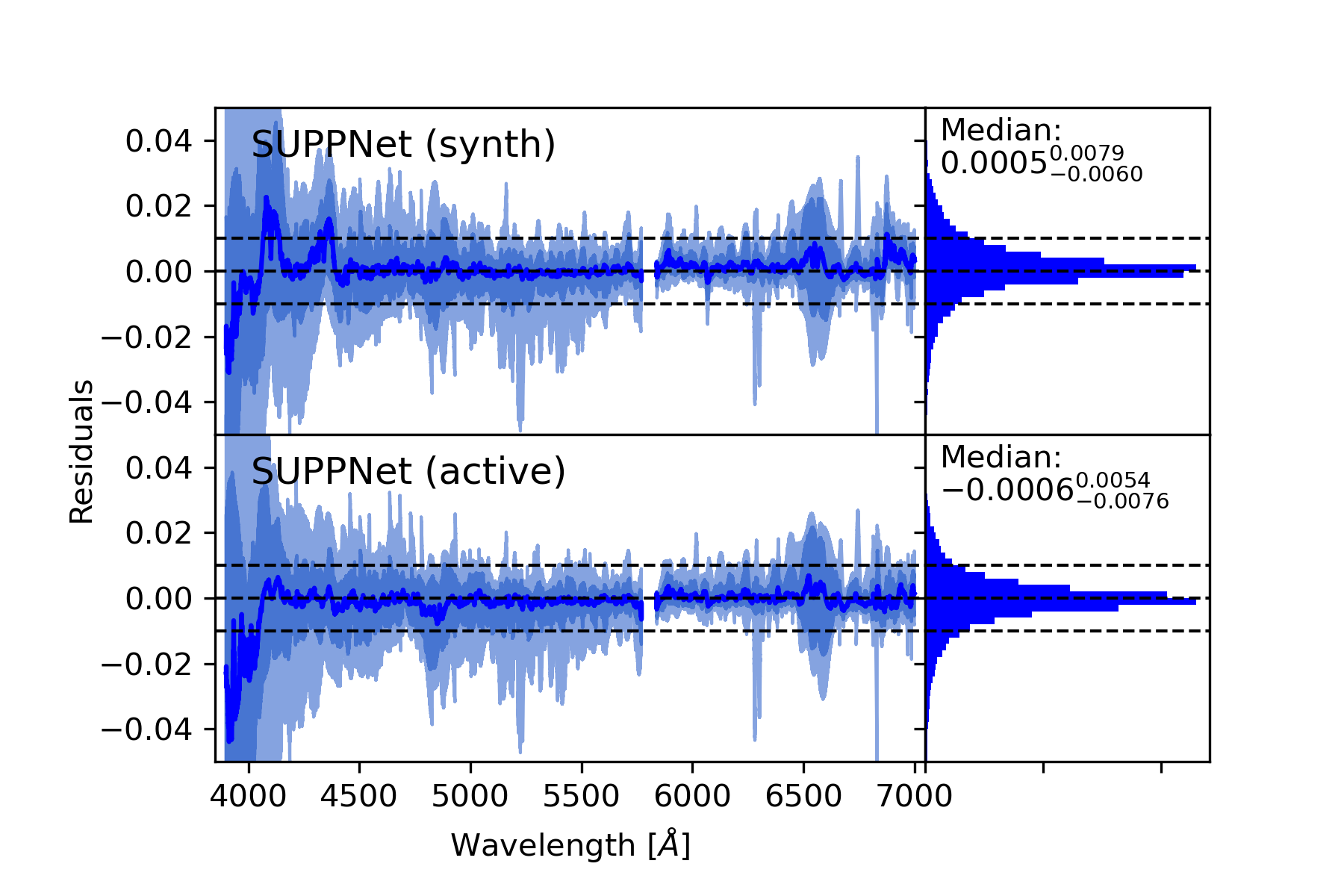

4.2 Summary UVES POP normalisation statistics

Approximately a hundred spectra from the UVES POP library were used to assess the quality of SUPPNet on observed stellar spectra. In the beginning, the majority of spectra from spectral type O to G were manually normalised. Then spectra were normalised using both versions of SUPPNet, and residuals’ statistics were calculated. The results are summarised in Fig. 8.

In the case of the model trained using only synthetic data some biases are prominent around 6750 Åand for wavelengths shorter than 4500 Å. Strong telluric lines at approximately 6870 Å, which were absent in synthetic spectra, are the cause of the first bias. The second problem appears because SUPPNet (synth) systematically places pseudo-continuum too low in wide hydrogen absorption lines of A and F spectra types. Check Figs. 20-24 in Appendix B for separate plots for each spectral type. The median of residuals is significantly different from 0 and equals , which means that the model places pseudo-continuum slightly above levels chosen by astronomers. The dispersion of residuals measured as a difference between 84.13 and 15.87 percentiles equals 0.0124. Root mean squared error equals 0.0122.

SUPPNet trained using an active learning approach shows slightly better characteristics. Dispersion of its residuals (0.0118, ) is not significantly different from the SUPPNet (synth), but most of the systematic effects that can be seen in Fig. 8 are reduced (compare bias in wavelengths shorter than 4500 Å) after training that included real spectra. Nonetheless, SUPPNet (active) shares the tendency of the model trained on a synthetic dataset, to place the pseudo-continuum higher than when a spectrum is manually normalised. This tendency is measured by a median of residuals which equals and is significantly (for a 95% confidence interval) different from 0. This effect can potentially be introduced by the human, who often model pseudo-continuum to lie lower than in reality. Differences in medians of residuals for both SUPPNet versions, although statistically significant, are at most comparable and often smaller than an intrinsic error of manual normalisation described in detail in Section 4.3.

Normalisation quality and active learning importance vary significantly across spectral types. In general the later the spectral type is the larger is the dispersion of normalisation residuals. Differences between residuals’ medians of both versions of SUPPNets for O, B, and A type stars are statistically significant. However, SUPPNet synth and active are not significantly different in terms of residuals’ dispersions and remaining medians. The results are summarised in Table 4 and summary plots for each spectral type can be found in Figs. 20-24 in Appendix B. The tendency to place pseudo-continuum higher than during manual normalisation holds for most spectral types, with a notable exception of G type stars. Inspection showed that in spectral ranges with substantial narrow absorption lines blending, where the continuum is absent across wide parts of a spectrum, the model tends to place pseudo-continuum below the correct level. A simple workaround for this SUPPNet limitation is to reduce default sampling from 0.05 Å to 0.03–0.04 Å, when working with spectral types later than G5.

| Spectral type | SUPPNet (synth) | SUPPNet (active) |

|---|---|---|

| O | ||

| B | ||

| A | ||

| F | ||

| G |

The second issue is the relatively high uncertainty in the H Balmer line, prominent especially in the case of F and A type stars. Closer examination showed that this can be explained by erroneous manual normalisation. For this spectral range, the manual normalisation is impossible as a wavy pattern introduced by imperfections of an instrument pipeline crosses and changes this spectral feature significantly (see Fig. 9 for details).

The positive influence of active learning on SUPPNet normalisation quality is the most prominent in the case of O type stars, where strong emission features are often present. A spectral range of the HD 148937 (O6.5) star, with H Balmer and He I 6678 Å lines in emission is shown in the Fig. 10 . SUPPNet (active) relatively well predicts pseudo-continuum across these features while SUPPNet, trained using synthetic data only, treats these features as a part of pseudo-continuum.

4.3 Detailed analysis of chosen stars

In the last step of the SUPPNet analysis, six stars were normalised automatically and manually by three of us (TR, EN and NP), and carefully compared. Their spectral types range from O7.5 V to K0 III and show most of the typical spectral features. The main characteristics of the selected stars are gathered in Table 2.

HD 155806 (HR 6397, mag) is the hottest Galactic Oe star (Fullerton et al., 2011). Its initial spectral classification O7.5 V[n]e (Walborn, 1973) was changed to O7.5 IIIe because of the strength of its metallic features (Negueruela et al., 2004). Stars of this type are rare and show a double-peaked or central emission in their Balmer lines.

HD 27411 (HR 1353, mag, A3m) is an example of a chemically peculiar (CP) star. Its atmospheric parameters and abundances were investigated in detail in the context of diffusion theory in the work of Catanzaro & Balona (2012).

HD 90882 (HR 4116, Sex, B9.5 V), HD 37495 (HR 1935, Col, F5 V), and HD 59967 (HR 2882, G3 V) are typical representatives of their spectral types. The first is a rapidly rotating B type star, and the last is a young ( Gyr), active, slowly rotating solar-twin star.

The last star for detailed analysis is HD 25069 (HR 1232, mag). In the UVES POP catalogue, its spectral type is G9 V, while SIMBAD’s database sources give K0 III or K0/1 III. Here K0 III is used. This star is a representative example of a late G and early K spectral type.

The results for all stars can be found in the four bottom rows of Table 3. Figure 11 contains detailed pseudo-continua and residuals for the HD 27411 (A3m) star. The results show that the differences between manually normalised spectra are between and in the median with typical dispersion, defined by a 15.87–84.13 percentiles band, ranging approximately from 0.0040 to 0.0330 (see NP–TR and EN–TR rows in Table 3). This is the scale of uncertainty inherent in manual normalisation. In terms of residual’s statistics, the quality of the SUPPNet normalisation method is superior in comparison to the quality of the manual normalisation.

The left panels of Fig. 11 and Figs. 25-29 in Appendix B convince why the pseudo-continuum fitting is a challenging task. In HD 27411 most of the spectrum is disturbed by a semi-periodic pattern that arises from imperfect orders merging and a blaze function removal. SUPPNet models this pseudo-continuum type relatively well for wavelengths longer than the Balmer H line and significantly worse in a spectral range from 4400 to 4800 Å, where the amplitude and frequency of this pattern significantly increase. Nonetheless, the error amplitude is of the order of 0.02, both for manual and SUPPNet normalisation. For wavelengths shorter than 4200 Å the dispersion between different normalised fluxes grows considerably. In this range, it is difficult to assess the normalisation quality without referring to the synthetic spectral model, which could potentially guide manual normalisation.

The uncertainty estimated by SUPPNets has only qualitative meaning and informs the users where they can expect normalisation results to be most uncertain, but not necessarily where the model failed in predicting pseudo-continuum. As can be seen in the bottom panel of Fig. 11 the estimated uncertainty is the highest in the wide absorption lines. However, in the spectral range from 4400 to 4800 Å the proposed method did not capture the ambiguous character of the predicted pseudo-continuum.

4.4 Resolution, rotational velocity, and noise

Stellar spectra present in databases have various levels of noise, resolutions, and projected rotational velocities. We tested the consistency of normalisation with respect to those parameters. For that purpose synthetic spectra calculated for parameters of HD 27411 (A3m) with a signal-to-noise equal 30 and 500, a projected rotational velocity equal 0, 50, 100, 200 km s-1, and resolution equal and were used.

Predicted pseudo-continuum is generally consistent for the tested signal-to-noise ratios. The differences between results increase for low-resolution spectra and in heavily blended line regions where there is no real continuum in the flux. The test with low-quality medium-resolution spectra showed that in this case, the proposed method places the pseudo-continuum significantly too high. This problem can be suppressed by increasing a sampling step or by training SUPPNet using low-resolution, noisy spectra.

For a spectrum broadened due to the projected rotational velocity, the differences between predicted pseudo-continua are generally smaller than 0.01, except for the wavelengths shorter than 4300 Å where a flux is well below expected pseudo-continuum.

5 Conclusions

Machine learning methods are becoming more and more important in science, particularly in astronomy. Here we had presented a method for spectrum normalisation that uses novel, one dimensional, fully convolutional, deep neural network architecture – SUPP Network. Its automatic character makes SUPPNet’s results reproducible and more homogeneous which is very important especially in the context of time series of spectra. Usage of synthetic spectra during the training makes the model aware of features present in spectra and helps to recover pseudo-continuum in regions where it cannot be done manually, e.g. in regions heavily blended by lines or across Balmer hydrogen lines with instrumental ripples. The accuracy of SUPPNet places it next to careful manual normalisation and makes it possible to omit human intervention in this step of spectrum pre-processing. It works well both with emission and absorption spectral features, with blended lines and spectral ranges where the real continuum is absent. If manual correction is necessary it can be done using a developed Python application or online, see Appendix D.

The main drawback of the proposed method is the fact that it uses a sliding window technique while in principle it is not necessary for fully convolutional neural networks, as they accept inputs of any length. Sliding window is necessary, as we used a min-max normalisation strategy for inputs. As a beneficial side effect, we are able to give some estimates of pseudo-continuum uncertainty.

Directions of future developments are the following: extension of the training set to include spectra from spectrographs other than UVES and FEROS, an extension of the set of empirical pseudo-continua to include fits from other astronomers, experiments with alternative input normalisation techniques that would eliminate the sliding window approach, and exploration of alternative ways to estimate prediction uncertainties. Other astronomers’ pseudo-continua and observations from different instruments are expected to reduce biases. The proposed technique can be extended in such a way that it uses coarse spectral type estimation and wavelength range information, which can further improve normalisation quality. Other possible development paths are exploitation of different neural network architectures and modules (e.g. attention module) and scaling the SUPPNet model up. The longstanding purpose is to use an automatically normalised spectrum for stellar parameters’ and abundances’ estimation that consistently includes normalisation errors.

The most important characteristic of the proposed method is its generality. It is straightforward to use the SUPP Network in similar tasks, like a trend or background modelling, and also use UPP modules in contexts other than related to one-dimensional signals processing, e.g. in image segmentation.

Acknowledgements.

T. Różański was financed from budgetary funds for science in 2018-2022 as a research project under the program ”Diamentowy Grant”, no. DI2018 024648. Research project partly supported by program ”Excellence initiative - research university” for years 2020-2026 for University of Wrocław.References

- Abadi et al. (2015) Abadi, M., Agarwal, A., Barham, P., et al. 2015, TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems, software available from tensorflow.org

- Aguilera-Gómez et al. (2018) Aguilera-Gómez, C., Ramírez, I., & Chanamé, J. 2018, A&A, 614, A55

- Antoniadis-Karnavas, A. et al. (2020) Antoniadis-Karnavas, A., Sousa, S. G., Delgado-Mena, E., et al. 2020, A&A, 636, A9

- Bagnulo et al. (2003) Bagnulo, S., Jehin, E., Ledoux, C., et al. 2003, Messenger, 114, 10

- Ball & Brunner (2010) Ball, N. M. & Brunner, R. J. 2010, International Journal of Modern Physics D, 19, 1049–1106

- Baron (2019) Baron, D. 2019, Machine Learning in Astronomy: a practical overview

- Cadusch et al. (2013) Cadusch, P. J., Hlaing, M. M., Wade, S. A., McArthur, S. L., & Stoddart, P. R. 2013, Journal of Raman Spectroscopy, 44, 1587–1595

- Carleo et al. (2019) Carleo, G., Cirac, I., Cranmer, K., et al. 2019, Reviews of Modern Physics, 91

- Catanzaro & Balona (2012) Catanzaro, G. & Balona, L. A. 2012, Monthly Notices of the Royal Astronomical Society, 421, 1222

- Cretignier et al. (2020) Cretignier, M., Francfort, J., Dumusque, X., Allart, R., & Pepe, F. 2020, Astronomy & Astrophysics, 640, A42

- dos Santos et al. (2016) dos Santos, L. A., Meléndez, J., do Nascimento, J.-D., et al. 2016, A&A, 592, A156

- Dozat (2016) Dozat, T. 2016

- Farias et al. (2020) Farias, H., Ortiz, D., Damke, G., Jaque Arancibia, M., & Solar, M. 2020, Astronomy and Computing, 33, 100420

- Fullerton et al. (2011) Fullerton, A. W., Petit, V., Bagnulo, S., Wade, G. A., & Wade. 2011, in Active OB Stars: Structure, Evolution, Mass Loss, and Critical Limits, ed. C. Neiner, G. Wade, G. Meynet, & G. Peters, Vol. 272, 182–183

- Gaia Collaboration et al. (2016) Gaia Collaboration, Prusti, T., de Bruijne, J. H. J., et al. 2016, A&A, 595, A1

- George & Huerta (2018) George, D. & Huerta, E. 2018, Physics Letters B, 778, 64–70

- Hendriks & Aerts (2019) Hendriks, L. & Aerts, C. 2019, PASP, 131, 108001

- Hoeser & Kuenzer (2020) Hoeser, T. & Kuenzer, C. 2020, Remote Sensing, 12, 1667

- Hojjatpanah et al. (2019) Hojjatpanah, S., Figueira, P., Santos, N. C., et al. 2019, A&A, 629, A80

- Howarth et al. (1997) Howarth, I. D., Siebert, K. W., Hussain, G. A. J., & Prinja, R. K. 1997, MNRAS, 284, 265

- Ivezić et al. (2019) Ivezić, v., Kahn, S. M., Tyson, J. A., et al. 2019, The Astrophysical Journal, 873, 111

- Kingma & Ba (2017) Kingma, D. P. & Ba, J. 2017, Adam: A Method for Stochastic Optimization

- Kirillov et al. (2019) Kirillov, A., Girshick, R., He, K., & Dollár, P. 2019, Panoptic Feature Pyramid Networks

- Kukačka et al. (2017) Kukačka, J., Golkov, V., & Cremers, D. 2017, arXiv e-prints, arXiv:1710.10686

- Kurucz (1970) Kurucz, R. L. 1970, SAO Special report, 309

- Lanz & Hubeny (2003) Lanz, T. & Hubeny, I. 2003, ApJS, 146, 417

- Lanz & Hubeny (2007) Lanz, T. & Hubeny, I. 2007, ApJS, 169, 83

- LeCun et al. (1989) LeCun, Y., Boser, B., Denker, J. S., et al. 1989, Neural Computation, 1, 541

- Lin et al. (2017) Lin, T.-Y., Dollár, P., Girshick, R., et al. 2017, Feature Pyramid Networks for Object Detection

- Long et al. (2015) Long, J., Shelhamer, E., & Darrell, T. 2015, Fully Convolutional Networks for Semantic Segmentation

- Mahabal et al. (2019) Mahabal, A., Rebbapragada, U., Walters, R., et al. 2019, PASP, 131, 038002

- Majewski et al. (2017) Majewski, S. R., Schiavon, R. P., Frinchaboy, P. M., et al. 2017, AJ, 154, 94

- Negueruela et al. (2004) Negueruela, I., Steele, I. A., & Bernabeu, G. 2004, Astronomische Nachrichten, 325, 749

- Nesterov (1983) Nesterov, Y. E. 1983, in Dokl. akad. nauk Sssr, Vol. 269, 543–547

- Newell et al. (2016) Newell, A., Yang, K., & Deng, J. 2016, Stacked Hourglass Networks for Human Pose Estimation

- Nissen et al. (2020) Nissen, P. E., Christensen-Dalsgaard, J., Mosumgaard, J. R., et al. 2020, A&A, 640, A81

- Noh et al. (2015) Noh, H., Hong, S., & Han, B. 2015, Learning Deconvolution Network for Semantic Segmentation

- Radosavovic et al. (2020) Radosavovic, I., Prateek Kosaraju, R., Girshick, R., He, K., & Dollár, P. 2020, arXiv e-prints, arXiv:2003.13678

- Ronneberger et al. (2015) Ronneberger, O., Fischer, P., & Brox, T. 2015, U-Net: Convolutional Networks for Biomedical Image Segmentation

- Royer (2009) Royer, F. 2009, On the Rotation of A-Type Stars, Vol. 765, 207–230

- Savitzky & Golay (1964) Savitzky, A. & Golay, M. J. E. 1964, Analytical Chemistry, 36, 1627

- Scherer et al. (2010) Scherer, D., Müller, A., & Behnke, S. 2010, in International conference on artificial neural networks, Springer, 92–101

- Schröder et al. (2009) Schröder, C., Reiners, A., & Schmitt, J. H. M. M. 2009, A&A, 493, 1099

- Simonyan & Zisserman (2015) Simonyan, K. & Zisserman, A. 2015, Very Deep Convolutional Networks for Large-Scale Image Recognition

- Swihart et al. (2017) Swihart, S. J., Garcia, E. V., Stassun, K. G., et al. 2017, AJ, 153, 16

- Virtanen et al. (2020) Virtanen, P., Gommers, R., Oliphant, T. E., et al. 2020, Nature Methods, 17, 261

- Škoda et al. (2020) Škoda, P., Podsztavek, O., & Tvrdík, P. 2020, A&A, 643, A122

- Walborn (1973) Walborn, N. R. 1973, AJ, 78, 1067

- Xie et al. (2016) Xie, S., Girshick, R., Dollár, P., Tu, Z., & He, K. 2016, arXiv e-prints, arXiv:1611.05431

- Xu et al. (2019) Xu, X., Cisewski-Kehe, J., Davis, A. B., Fischer, D. A., & Brewer, J. M. 2019, The Astronomical Journal, 157, 243

- Zhao et al. (2012) Zhao, G., Zhao, Y., Chu, Y., Jing, Y., & Deng, L. 2012, LAMOST Spectral Survey

- Zhao et al. (2017) Zhao, H., Shi, J., Qi, X., Wang, X., & Jia, J. 2017, Pyramid Scene Parsing Network

- Zhou et al. (2018) Zhou, Z., Siddiquee, M. M. R., Tajbakhsh, N., & Liang, J. 2018, UNet++: A Nested U-Net Architecture for Medical Image Segmentation

- Zorec & Royer (2012) Zorec, J. & Royer, F. 2012, A&A, 537, A120

Appendix A Diagrams of neural networks architectures

Block diagrams of neural network architectures included in exploratory tests.

Appendix B Plots

Detailed plots of the results.

Appendix C Resolution, rotational velocity, and noise

The results of resolution, rotation velocity, and noise influence on predicted pseudo-continuum are summarised in Fig. 30

Appendix D Codes

D.1 SUPPNet – online

An online version of the SUPPNet method is available: https://git.io/JqJhf. It is a fully front-end JavaScript application that gives access to the basic features of SUPPNet. It is a simple way to experiment with the proposed method and is intended to be the first choice for users who do not need all features of the full version of the code and for whom the performance offered by the website is sufficient. Additionally, the webpage contains interactive versions of plots gathered in this work.

Its basic limitations are a predetermined value of the sliding window shifts (equals 1024 samples), and a missing feature of adaptive spline smoothing which is replaced with Savicky-Golay filtering (Savitzky & Golay, 1964) in the online version. Users who are interested in automatised normalisation of big sets of spectra are encouraged to use the Python version of the code.

D.2 SUPPNet – Python version

The Python version of SUPPNet includes all features for spectrum normalisation, with relatively easy access to GPU acceleration for users who need top performance. It is available on GitHub https://github.com/RozanskiT/suppnet.

D.3 HANDY

HANDY555https://rozanskit.com/HANDY/ is an interactive tool for manual spectrum normalisation. Pseudo-continuum fitting in HANDY is based on manual selection of parts of the continuum, to which the Chebyshev polynomial of the selected order is then fitted in the defined areas. Akima spline functions are used between these areas. Additionally, HANDY includes the possibility to interpolate spectrum on the predefined grid of synthetic spectra, wraps SYNTHE/ATLAS codes (Kurucz, 1970), for spectrum synthesis that gives access to lines identification lists and includes radial velocity correction unit. Mentioned features make it a handy tool for initial spectrum exploration and/or manual normalisation.