Supervised and unsupervised learning of directed percolation

Abstract

Machine learning (ML) has been well applied to studying equilibrium phase transition models, by accurately predicating critical thresholds and some critical exponents. Difficulty will be raised, however, for integrating ML into non-equilibrium phase transitions. The extra dimension in a given non-equilibrium system, namely time, can greatly slow down the procedure towards the steady state. In this paper we find that by using some simple techniques of ML, non-steady state configurations of directed percolation (DP) suffice to capture its essential critical behaviors in both (1+1) and (2+1) dimensions. With the supervised learning method, the framework of our binary classification neural networks can identify the phase transition threshold, as well as the spatial and temporal correlation exponents. The characteristic time , specifying the transition from active phases to absorbing ones, is also a major product of the learning. Moreover, we employ the convolutional autoencoder, an unsupervised learning technique, to extract dimensionality reduction representations and cluster configurations of (1+1) bond DP. It is quite appealing that such a method can yield a reasonable estimation of the critical point.

I Introduction

The ability of modern machine learning (ML) [1] technology to classify, identify, or interpret large number of data sets, such as images, presupposes that it is suitable for providing similar analysis involved in exponentially large data sets of complex systems in physics. Indeed recently ML techniques have been widely applied to various branches of physics, including statistical physics [2, 3], condensed matter physics [4, 5, 6, 7], biophysics [8, 9], astrophysics [10], and many more fields in physics [11, 12]. ML techniques, especially the deep learning architecture [13, 14] equipped with deep neural networks, have become very powerful and have shown great potential in discovering basic physics laws.

ML has been explored as an important tool for statistical physics by converting the lengthy Monte Carlo simulations into time-saving pattern recognition process. In particular, ML has led to great success in the classification of phases and in identifying transition points of various protocol models such as the Ising model, potts model and XY model, etc[5, 6, 7, 15, 16]. Generally, in statistical physics we use ML techniques in conjunction with configurations, of small-sized systems, generated by Monte Carlo simulations [17]. For instance, the supervised ML method has been implemented to study the Ising model [5] of equilibrium phase transitions, in which both the fully connected network (FCN) and convolutional neural network (CNN) are used to train and learn raw configurations of phases. The results indicated that ML could predict the critical temperature and the spatial correlation exponent of the Ising model. In [16], the supervised ML method is also utilized to learn the percolation model and XY model with flying colors, and the critical threshold and the critical exponent are accurately predicted. These are just two typical examples of using the supervised ML method to study phase transitions. Of course, the study of unsupervised ML in phase transitions seems more appealing, despite its capability in lack of information [6, 18, 19, 20]. Unsupervised ML techniques, like principal component analysis (PCA) and autoencoder, have been applied to dimensionality reduction representations of the original data and identifying distinct phases of matter. These unsupervised ML techniques are efficient in capturing latent information that can describe phase transitions.

So far the discussion is only concerned about the equilibrium phase transitions, most of which has been very well studied in regards of critical features and henceforth universality class. Since the 1970’s, non-equilibrium critical phenomena [21, 22, 23, 24] have been attracting a lot of attention as they haven’t been studied thoroughly yet. Unlike the equilibrium phase transitions, the universality class of non-equilibrium phase transitions remains mysterious. Neither do we know what universality classes we have, nor do we know how many of them there are. Although it is generally believed that there exists a large number of systems whose phase transition behaviors belong to the university class of directed percolation (DP), according to the so-called DP conjecture. Moreover, the means to handle the non-equilibrium phase transitions is very limited. Even for the simplest and most well-known non-equilibrium phase transition model, the DP process, its (1+1)-dimensional analytic solution is yet to be achieved [22, 23]. Therefore, for low-dimensional systems (below the critical dimension ), the most reliable research method is still Monte Carlo simulations. However, the extra, namely time dimension extremely prolongs the procedure towards the steady state, which adds more difficulty in calculating the critical exponents and brings up more uncertainty in the measurements. Although some research methods for equilibrium critical phenomena [25, 26] can also be applied to non-equilibrium phase transitions but they are mostly model specific. Apparently, compared to the equilibrium phase transitions, the non-equilibrium phase transitions are harder to be tackled. Henceforth the question is: can we find an efficient method to deal with non-equilibrium phase transitions, in terms of the range of validity and the computation time? That is, the method should be both general and time saving.

In this work, we adopt both supervised learning and unsupervised learning to study the bond DP process. For the supervised learning techniques, the neural networks we use here are FCN and CNN [13], which are fit for 2-D image recognition. One of the findings is that the non-steady state configurations are sufficient for capturing the essential information of the critical states, in both (1+1)- and (2+1)-dimensional DP. Naturally, our neural networks can train and detect some latent parameters and exponents by sampling the configurations generated by Monte Carlo simulations [27, 28] of small-sized systems. For the unsupervised learning, we employ the convolutional autoencoder to extract dimensionality reduction representations and cluster configurations of (1+1) bond DP.

The main structure of this paper is as follows. In Sec.II.A, we briefly introduce the model of bond DP. Sec.II.B displays the basic architectures of two neural networks that will be used in the supervised learning. Sec.II.C is the data sets and ML results of (1+1)- and (2+1)-dimensional bond DP. Here, we successfully confirmed the critical points, the temporal and spatial correlation exponents, and the characteristic time exponent. In Sec.III, the autoencoder method of unsupervised ML is implemented to extract dimensionality reduction representations and cluster the generated configurations of (1+1)-dimensional bond DP, from which we can estimate the critical point. Finally, Sec.IV is a summary of this paper.

II Supervised Learning of Directed Percolation

II.1 The Model of DP

The non-trivial DP lattice model is simple and easy to simulate. The phenomenological scaling theory can be applied to describing DP, by which critical exponents are determined. Once the whole set of critical exponents of a model is complete, the universality class that the model belongs to establishes.

The DP model, exhibiting a continuous phase transition, is the most prominent universality class of absorbing phase transitions [29], which can describe a wide range of non-equilibrium critical phenomena such as contact processes [30, 31], forest fires [32, 33], epidemic spreadings [34], as well as catalytic reactions [35], etc. The conventional approaches for studying the scaling behaviors of DP are mean-field theory [36], renormalization groups [37], field theory approach [38] and numerical simulations [39] and so on. Generally, for DP, the mean-field approximation is valid above the upper critical dimension , as the particles diffusion mix exactly well. In contrast, the field theory method is applicable below the upper critical dimension. For low-dimension cases, numerical simulations are usually more convenient to implement. In the numerical simulation of DP, to obtain relatively accurate results, we usually take a large system size (for instance, ). This condition, in turn, causes the characteristic time [22] of the system being too large such that it takes extremely long to approach the steady states. This is the tricky thing that one has to deal with when simulating non-equilibrium systems. Given the advantages of ML techniques over small-sized systems, this paper will employ them to learn the bond DP model. For small-sized systems, in particular, it turns out that ML can quickly and accurately determine critical thresholds and critical exponents of many phase transition models.

The main work of this paper is to apply ML techniques to (1+1)- and (2+1)- dimensional bond DP [28, 40, 41] of non-equilibrium phase transitions. Before implementing ML, it is necessary to introduce the bond DP model. The model goes in two ways, either starting from a fully occupied initial state or from a single active seed (configurations are shown in Fig. 1). Then the model evolves through intermediate configurations according to the dynamic rules of the following equations,

| (1) |

where represents the state of node at time , are two random numbers. Configurations of two types of (1+1)-dimensional bond DP are shown in Fig. 1, where two adjacent sites are connected by a bond with probability . If the bond probability is large enough, a connected cluster percolates throughout the system.

The evolution of DP configurations is closely related to bond probability. In the case of a fully occupied lattice, for , the number of active particles will decay exponentially, and eventually the system reaches an absorbing phase. On the contrary, for , the number of active particles will reach a saturation value and we say the system is in an active phase. At the critical point of , the number of particles decreases slowly, following a power-law. On the other hand, how the bond DP starting from a single active seed evolves is shown in the bottom panel of Fig. 1. For , the number of active particles increases for a short time and then decays exponentially, while for the probability to form an infinite cluster is finite. At the critical point , an infinite cluster will be formed.

The critical behavior of DP can be described in two different ways, either by percolation probability, or via the order parameter of steady-state density,

| (2) |

where represents the probability that a site belongs to a percolating cluster, denotes particle density, and is resulted by rapidity-reversal symmetry. There are some other observations in the DP model, such as the number of active sites , the survival probability , and the average mean square distance of the spreading . Besides, we also attach importance to two non-independent correlation lengths, which are called temporal correlation length and spatial correlation length . At criticality, they exhibit the following asymptotical power-law behaviors

| (3) |

| (4) |

where and are the temporal correlation exponent and the spatial correlation exponent, respectively.

In non-equilibrium statistical mechanics, systems evolve over time which is an independent degree of freedom on equal footing with the spatial degrees of freedom. When a fully occupied lattice as an initial state is specified for a system, close to the critical state, the density of active sites decays algebraically as

| (5) |

where . Similarly, the spatial correlation length grows as

| (6) |

where . In finite-size systems, one finds deviations from these asymptotic power-laws. There will be finite-size effects after a typical time which grows with the system size. If is the lateral size of the system (and is the total number of sites), the absorbing state reaches at a characteristic time [21].

The critical exponents of DP are not independent and satisfy the following scaling relations,

| (7) |

Unlike isotropic percolation, the upper critical dimension of DP is [42, 43]. The scaling relation with the dimension holds only if . The critical thresholds and scaling exponents of DP can be checked in Table. 3.

II.2 Methods of Machine Learning

a

b

Usually, one uses ML methods, which include supervised learning, unsupervised learning, reinforcement learning, and so on, to recognize hidden patterns embedded in complex data. With the continuous improvement of computing power, neural network based algorithms play significant roles in ML. Extremely complex tasks usually require deep neural networks, which contain many hidden layers. The two main tasks of supervised learning are regression and classification. Before classification learning, we implemented a linear regression machine learning on DP’s critical configurations. To classify the phases of DP, all we need is a simple neural network. The neural networks we use here are the most common and widely used, FCN and CNN, as shown in Fig. 2. These two networks have excellent learning effects for image recognition and classification. In our neural networks, the input data is the bond DP configurations generated from Monte-Carlo simulations of small-sized systems.

In artificial neural networks, the structure of FCN generally consists of an input layer, a hidden layer, and an output layer. Its neurons are fully connected to the others of the previous layer and the next layer. For our FCN, the hidden layer contains neurons, and two neurons are in the output layer so that we can carry out a binary classification between active phases and absorbing phases.

Our CNN structure consists of two layers, convolutional layer and pooling layer. Actually we do not have to limit ourselves to two layers, and we could instead employ a stack of convolutional and pooling layers if needed. The images that are fed into CNN are DP configurations. For each of them, of an image represents the lattice length of DP, and is the time step. The convolution kernel for convolving the two-dimensional images is also two dimensional. The padding form in CNN takes the form of ”same”, which keeps the size of the feature map of the image after the convolution operation. As a two-dimensional convolution kernel can only extract one feature in the convolution operation of the whole two-dimensional image, henceforth the kernels share weights. So we can use multiple convolution kernels to extract multiple features. After the feature map processing through the convolutional layer and pooling layer, the data flow is flattened and then transmitted to a fully connected layer. Finally, we take a binary classification via the fully connected layer to produce the classification result. To prevent over-fitting [44], we add the L2-norm () to the loss function. The AdamOptimizer is used to speed up our neural networks. Our ML is implemented based on TensorFlow 1.15.

II.3 Data Sets and Results

In supervised learning, the data sets generated by Monte Carlo simulations include a training set, a validation set, a test set and their corresponding label sets. Among them, the generating mechanisms of the training, validation and test sets are the same. And the use of the validation set is to optimize our model parameters.

Before learning, the generated data of configurations need to be labeled, and some hyper-parameters of neural networks are defined. In the input layer, the number of neurons equals the dimensions of the input data, which is ( represents the percolation time step). An input configuration generated with the bond probability is labeled as ”0”, which means that the system reaches an absorbing phase. For , an input configuration is labeled as ”1”, and the system is thought of as percolating. Here, five different system sizes of the fully occupied initial state are used to generate data, where is 8, 16, 32, 48, and 64, respectively. For each size, in (1+1)-dimensional DP, the configuration is generated by selecting bond probabilities between with an interval of , and samples per probability. The ratio of training set, verification set and test set samples is . The bond probability range in the (2+1)-dimensional case is , where we also use a similar sampling mechanism.

In order to study the effect of input data transformation on supervised ML, we will use FCN and CNN respectively to learn the original data after transformation. The data transformation for DP is as follows. In the case of (1+1)-dimensional DP, we flatten the data of each time step into a one-dimensional array and then feed it to FCN. And CNN, on the other hand, is fed a two-dimensional array. In the case of (2+1) dimensions, the configuration data of DP is also flattened into a one-dimensional array and then fed to FCN. The data that CNN receives is still a two-dimensional array transformed from a three-dimensional image. In addition, in the case of (1+1) dimensions, a configuration is just an image or a two-dimensional array, and we do not need to remove or filter any input data, but intercept a part of it. Through testing, we find that even if we only select configurations corresponding to a part of the time series, we are able to learn the correct results. Namely, the characteristic time of DP is , which signifies the zone of steady-state, but we only choose (, ) to learn, where and respectively represent the length and width of the input data. This choice greatly reduces the computational effort.

Fig. 1 shows two configurations generated from two different initial conditions under the same system size. It is natural to think which one will be more applicable for the learning. After systematic tests, we found that configurations generated by a fully occupied initial state are more suitable for our algorithm. This is empirically evidenced by the testing accuracy of the initial condition with a single active seed as is also presented in Tab. 1. It can be clearly seen that the fully occupied initial state has higher testing accuracy than the single-seed one. We conjecture that the fully occupied initial state possesses more sites, which can provide much more information to the neural network so that the ML results obtained are more accurate. Therefore, the following discussions are based on configurations generated from a fully occupied initial state.

II.3.1 Learning DP’s critical configuration via linear regression

|

|

| (a) | (b) |

If one needs to understand the meaning of the time variable in DP, the particle density at a time step, namely the ratio of the occupied lattice divided to the total number of lattice points, could be measured.

The left panel of Fig. 3 shows the Monte Carlo simulation results of (1+1)-dimensional bond DP with a fully occupied initial state. According to Eq. 5, the critical exponent can be obtained by fitting the particle density versus . With this as a reference, we now perform a linear regression for the critical configuration of DP, as shown in the right panel of Fig. 3. Our original purpose is to predict at a certain moment by the linear regression method of machine learning. That is to say, fed an input of at an early time step, the regression can predict at a later time step. In (b) of Fig. 3, after iterations, the weight learned from the regression model is , that is, . As can be seen, a single time step can correspond to many different particle densities, so the exponent obtained by such a regression model is not accurate. The main reason is that the critical configuration fluctuates greatly. Therefore, for DP, we believe that linear regression is not a good tool.

Next, we move to other critical exponents of DP.

II.3.2 Learning DP phases via FCN

The main goal of using supervised ML in this article is to make phase classification of DP configurations generated by different bond probabilities, from which the critical properties, such as critical points and critical exponents, can be obtained. This target is very different from time series classification or prediction, for instance the studies given in Refs. [45, 46]. Although our model produces time series, we are dealing with phase classification rather than time series classification. Namely, all the configurations generated by a single bond percolation probability belongs to the same category. For example, in the case of (1+1)-d DP, the configuration corresponding to a certain time step is one-dimensional. We combine the configurations of the all-time series into a two-dimensional image for machine learning. Therefore, in our study time is just another dimension.

a

b

c

d

e

f

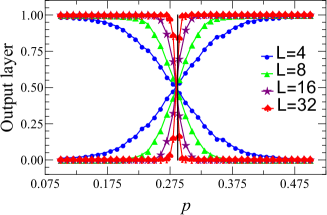

Fig. 4 shows the ML results of (1+1)-dimensional and (2+1)-dimensional bond DP by using FCN. In panel (a) of Fig. 4, the mean prediction of the output layer is given for (1+1)-dimensional DP, which distinguishes DP configurations in the range of for five different system sizes, with = 8, 16, 32, 48, and 64. We illustrate with a pair of blue curves for , which correspond to the two output predictions of the neural network. As , the bond probability, increases, the prediction probability that the configurations generated by the corresponding bond probability are in an active phase also increases. Whereas, the other curve indicates that with the increasing of , the prediction probability that the configuration will reach an absorbing phase decreases continuously. And the intersection point of these two curves is the phase transition point predicted by the neural network [5, 16]. Since all configurations are generated by finite-size lattices, the range of will restrict the length of connectedness, and no real phase transition occurs with limited degrees of freedom. So for the spatial correlation length, near the phase transition point we have,

| (8) |

In order to eliminate the influence of finite-size effects, the abscissa of Fig. 4 (a) is rescaled to , which yields Fig. 4 (b). By tuning to make all five pairs of curves collapse, we obtain . We notice that in Fig. 4 (b) the blue curves appear a little bit bumped compared to other curves, due to its small lattice size. Then, taking out the five crossing critical thresholds predicted in five different system sizes, we plot them as a function of to achieve finite-size scaling with extrapolation from being finite to . As shown in Fig 4 (c), the critical point is through finite-size scaling fitting.

By using the same output layer of the above five systems, one can also get the temporal correlation exponent in the (1+1)-dimensional bond DP, provided that the dynamic exponent , in Eq. 7, is known. The scaling governing the temporal correlation length is given by,

| (9) |

where the temporal correlation exponent can be obtained by the data collapse involving rescaling the abscissa into .

The critical threshold of (1+1)-dimensional bond DP, through learning via FCN, conforms to the counterpart given by the literature [22]. Furthermore, the spatial and temporal correlation exponents of DP can be obtained by the data collapse of finite-size scalings.

In addition, the FCN is also applied to learn and predict the (2+1)-dimensional bond DP, and the results are shown in the bottom row of Fig. 4. For the sake of calculation, the time steps are still selected to be (). It’s worth noting that in (1+1) dimensions we learn and predict the configurations of dimension , where , . But for the case of (2+1) dimensions, we need to transformed its configurations from into . The fact is that, we reshape and into a two-dimensional image as the input configuration. As expected, the results of the (2+1)-dimensional bond DP indicates that although the input data are reshaped, the FCN can still learn the trend of configurations.

II.3.3 Learning DP phases via CNN

In this part, we present the learning results of bond DP by using CNN, as shown in Fig. 5. For the configurations of (1+1)-dimensional DP, we convert the one-dimensional lattice of each time step into a two-dimensional image. And for the case of (2+1)-dimensional DP, we reshape into (). It turns out that the reshaped data still contain essential characteristics of the configurations from which CNN would explore.

a

b

c

d

e

f

| initial condition | dimension | Neural network | |||||

|---|---|---|---|---|---|---|---|

| single seed | FCN | 0.7403 | 0.7819 | 0.8183 | 0.8373 | 0.8888 | |

| fully occupied | FCN | 0.7698 | 0.8675 | 0.9137 | 0.9577 | 0.9683 | |

| fully occupied | FCN | 0.9060 | 0.9511 | 0.9779 | 0.9933 | 0.9966 | |

| fully occupied | CNN | 0.8236 | 0.8856 | 0.9261 | 0.9622 | 0.9743 | |

| fully occupied | CNN | 0.9201 | 0.9576 | 0.9840 | 0.9944 | 0.9980 |

|

|

| (a) | (b) |

|

|

| (a) | (b) |

|

|

| (c) | (d) |

In (1+1)-dimensional bond DP, we have verified through ML if configurations are generated only between or , this would lead to large uncertainty in predicating the critical threshold. It turns out that when using the supervised learning method in the DP model, the more dense labeled data we have in the training set, the more accurate the testing results we will achieve.

Besides, for ML of (1+1)-dimensional and (2+1)-dimensional bond DP, we also present their test accuracies in Table. 1. From Table. 1, it can be seen that with the same system size, the test accuracy of the (2+1)-dimensional bond DP is significantly higher than that of the (1+1)-dimensional one. When we use the same neural network to study the DP configuration, the absolute size of the higher dimensional system size will be larger, which could lead to more accurate results. This might be that in higher dimensions, particles have more degrees of freedom, and they diffuse better. In higher dimensions, even small ensemble averages can yield very accurate results. On the other hand, learning configurations of DP in the same dimension, CNN has a higher test accuracy than the FCN. It is mainly because, compared to the FCN, CNN shares only one parameter or weight during the training process.

II.3.4 Optimal range and transformation effect

So far, we have completed supervised ML for (1+1)-dimensional and (2+1)-dimensional bond DP. It seems that we could have some discussion over the optimal range of the bond probability and effect of the transformation of the input data.

In order to obtain the optimal sample value range for ML, we have conducted systematic and extensive experiments. There is unfortunately no theory about what range is optimal. The final bond probability range we select in (1+1)-dimensional DP is . Here is how we make the choice. Table 2 displays the test accuracies of six different ranges. It can be seen that the last two ranges, and , yield much higher accuracies than the rest of them. Fig. 8 demonstrates the learning curves by the same six different ranges and still the last two have the best over-all performance. So combining Table 2 and Fig. 8, would be optimal with higher accuracy, better performance and less computational cost. It is interesting to notice that the red curve in Fig. 8, representing the range of , which contains only 10 percent of the whole bond probability range . But its test accuracy of is only and the learning is very poor. This could be that neural networks do not have enough information to learn the trend of a series of configurations. Because close to the critical point, the configurations of the bond probability fluctuate greatly.

| range | [0.6,0.7] | [0.55,0.75] | [0.50,0.80] | [0.45,0.85] | [0.40,0.90] | [0.35,0.95] |

| test accuracy | 0.6913 | 0.8093 | 0.8686 | 0.9035 | 0.9137 | 0.9251 |

We have compared the learning curves for using FCN and CNN. In the case of (1+1) dimensions, the comparison of Fig. 4 (b) and Fig. 5 (b) show that FCN and CNN can lead to the same prediction results of spatial correlation exponent. Fig. 4 (c) and Fig. 5 (c) show that they also give the same values of critical points. The same comparisons in (2+1) dimensions have also been done and we find no significant difference of the critical points and critical exponent of interest. This indicates that the transformation on the input data, at least through FCN and CNN, has little effect on the final results.

II.3.5 ML characteristic time of DP

Conventionally, in equilibrium statistical mechanics, we can deal with statistical properties associated with static conditions. While in out-of-equilibrium cases, we have to process an explicit dynamical description, where time takes the form of an extra dimension. Non-equilibrium steady states do not satisfy detailed balance. DP displays a non-equilibrium phase transition from a fluctuating phase into an absorbing state, which we call an absorbing phase transition. Different from equilibrium phase transitions, absorbing phase transitions are characterised by at least three independent critical exponents , , (or ).

Since learning the critical threshold of bond DP has been successful, it is natural to ask whether the supervised ML method is also applicable to the prediction of the characteristic time of the same model. Here we fix the given critical bond probability and chose different time steps to generate configurations. For example, for a lattice size of and time steps of , the input data in the neural network is , and .

In ML of the characteristic time , for different system sizes, 2500 samples of configurations are selected for each time step. To avoid the data from being too large, for each system size, we only choose time steps adjacent to the characteristic time for ML. Nonetheless, we can achieve a test accuracy of at least . If we enlarge the interval of consecutive time steps, one can achieve a test accuracy above .

Fig. 6 shows the results of FCN and CNN in supervised ML of characteristic time . Here we only take FCN as an example. The intersection of the two curves gives the characteristic time. Configurations generated by time steps smaller than the characteristic time are labeled as ”0”, which indicates the system is still in an active state. Otherwise, configurations generated by time steps larger than the characteristic time are labeled as ”1”, which means that the system has reached an absorbing phase. We then take the intersections of the five different size results and present them on the versus in Fig. 7 (a). Finally, the power-law fitting yields the power exponent in (1+1) dimensions, which is consistent with the theoretical value. On this basis, we conclude that the supervised ML can also be applied to the learning and prediction of the characteristic time .

III Unsupervised ML of DP

In dimensionality reduction for data visualization of phase transitions, many methods such as PCA [6], T-SNE [16] and Autoencoder [18, 19] have been exploited. PCA is a linear dimensionality reduction method and is usually used only for processing linear data. Autoencoder, on the other hand, can be regarded as the generalization of PCA, which can learn nonlinear relations and has stronger ability to extract information. Autoencoder is based on network representation, so it can be used as a layer to construct deep learning networks. With proper dimensionality and sparse constraints, autoencoder can learn more interesting data projection than PCA and other methods. Here our purpose is mainly to extract latent features of the configuration data, so autoencoder is a suitable tool.

We regard a given configuration of (1+1)-dimensional bond DP as a two-dimensional image containing a time dimension. Through compression representations, the neural network non-linearly transforms and encodes input data, which is then transferred to the hidden neurons. Two hidden neurons are included in our setup of autoencoder, the structure schematic diagram of which is shown in Fig. 9. Once a set of DP configurations generated by a series of bond probabilities are fed in, the autoencoder starts to train weights using methods like back propagation to return the target values equal to the input one. In this work, we choose a two-dimensional convolutional neural network as the encoding and decoding network. After passing through two convolutional layers and pooling layers in the encoding network, the data flow will be compressed by a fully connected layer into one or two hidden neurons. The decoding process is the inverse process of the encoding process, which can be regarded as a generative network. Here we focus on the dimensionality reduction process of encoding.

In the case of (1+1)-dimensional bond DP, the lattice size we choose to learn is . According to , the corresponding characteristic time should be . In bond DP, if , the system could not reach an absorbing state until . Artificially, we choose the time step which is larger than . By doing so, the neural network can receive more information about the configurations of DP.

In the encoder neural network, we assign 200 hidden neurons to the latent variable. In Fig. 10, we can see that the details of the raw DP configurations are lost during the reconstruction process. But the basic information of the input configuration is preserved in the latent variable. More Importantly, the general picture of many areas may still exist, so the latent variable is able to save information of the original configuration.

First, we limit the autoencoder network to two hidden neurons and display the results in Fig. 11. Among them, the left part in Fig. 11 represents the clusters of ten kinds of configurations generated by different bond probabilities, which are from with an interval of , and each bond probability produces sets of configurations. We can clearly see that the configurations generated with the same probability are clustered in a close position. The right part of Fig. 11 is the clustering diagram of configurations generated by different bond probabilities between and with equal intervals, from which we can find that obvious transition phenomenon occurs near a certain point. It is worth noting that, without changing any parameters of the neural network every time running the autoencoder neural network we may get a different cluster diagram. In particular, the horizontal and vertical coordinates of the versus visualization of the neural network would change too.

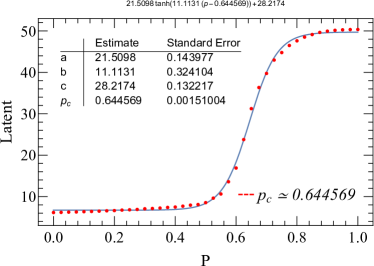

|

|

| (a) | (b) |

To find the transition point, we limit the latent layer of the two-dimensional convolutional autoencoder neural network to just one single hidden neuron whose results are displayed in the left part of Fig. 12. Let the hyper-parameters of the autoencoder neural network remain unchanged, we continue to execute the autoencoder program and get another similar result, as shown in the right part of Fig. 12. Take the left one as an example, red dots indicate that the single hidden neuron is regarded as a function of corresponding bond probabilities. To determine the critical point, we perform a non-linear fitting in the form of the hyperbolic tangent function (the blue line is the fitting curve), and the jumping location is exactly what we are looking for. The prediction of the critical point of the left figure is , while the right one is . They are very close to the value given by the literature [22] of (1+1)-dimensional bond DP, in which .

|

|

| (a) | (b) |

|

|

| (c) | (d) |

In order to capture the meaning of the information contained in the latent variables, we perform the following two studies. One is the reconstruction of the configurations shown in Fig. 12, which shows that the latent variable contains basic information about the original configurations. On the other hand, we implemented another Monte Carlo simulation method for (1+1)-dimensional DP, and monitored the corresponding particle density for each bond probability. As with autoencoder, this Monte Carlo simulation corresponds to a lattice size of and a time step of , respectively. The result is shown in Fig. 12 (c), where the jumping point is the critical point, yielding approximately . Fig. 12 (d) is the same as Fig. 12 (b), and the only difference is that the y-axis of the former is rescaled by the maximum value. Intuitively, we find that the Monte Carlo simulation results and the autoencoder results have very similar trends. For the same bond probability, the particle density and the single latent variable have nearly the same curve. So we believe that the latent variable should be at least numerically proportional to the particle density, which is the order parameter. And from this point of view, it is not surprising that the transition point in the latent variable versus bond probability is simply the critical point.

The findings of this study indicate that the convolutional autoencoder neural network can accurately capture the phase transition point, and compress essential information of bond DP configurations into one neuron or two.

We hope that this finding may shed some light on the study of non-equilibrium phase transitions. In general, the standard Monte Carlo simulations are costly, compared to the machine learning techniques. However, we notice that machine learning is not working for all the critical exponents. This is why we may consider combining the two techniques. ML can predict the critical points quickly and accurately whereas the Monte-Carlo simulations have its own advantages.

IV Conclusion

This paper mainly studied critical thresholds, spatial and temporal correlation exponents, and characteristic times of the bond DP model in both (1+1) and (2+1) dimensions by using artificial neural networks. Our conclusions are as follows.

Firstly, we successfully predicted critical thresholds and spatial and temporal correlation exponents of this model by using supervised ML. The results are in Tab. 3. Through this, we found that supervised ML can give a prediction result very close to the literature. Additionally, with the same size, the test accuracy of the (2+1)-dimensional bond DP is significantly higher than that of the (1+1)-dimensional one. Even by reshaping the configurations of the complex system, neural networks can still learn its trend very well. And we also found that learning configurations of DP in the same dimension, CNN has a slightly higher test accuracy than FCN.

| exponents | literature | prediction | literature | prediction |

|---|---|---|---|---|

| 0.6447 | ||||

| 1.733847 | ||||

| 1.096854 | ||||

| 0.276486 | 0.2728 |

Second, regarding time as an additional dimension of non-equilibrium phase transitions, we then studied the characteristic time of the bond DP from a fully occupied initial state to an absorbing state by supervised ML. As we can see, the predictions of the two neurons of the output layer by using FCN do not follow smooth curves, which implies that the system is greatly affected by finite-size effects and fluctuations. However, when CNN is employed, we can have much better curves. After training the configurations generated by different time steps, the neural network will identify whether the system has reached an absorbing state or not, as a new similar configuration is fed in. Then, the intersection of two output neuron curves is the predicted characteristic time.

Third, the (1+1)-dimensional bond DP is learned by using the unsupervised autoencoder method. We not only successfully made a clustering of its configurations generated by different bond probabilities but also obtained an estimation of the critical point.

When handling the problem of DP with Monte Carlo simulations, we need a sufficiently large lattice size and time steps to obtain a relatively accurate critical threshold of . However, ML works for much smaller systems. The research of phase transitions in statistical physics is greatly benefiting from ML algorithms. ML can not only learn and predict the critical point but also estimate critical exponents of correlation exponents when the system approaches the critical point. Analogous to the Ising model in equilibrium phase transitions, the raw configurations of DP in non-equilibrium phase transitions can also be learned and predicted by neural networks.

In summary, in this paper we argued that ML techniques can be applied to non-equilibrium phase transitions. It is optimistic that with the continuous advancement of computer hardware technology and ML algorithms, plenty of ML techniques will be extended to solving problems of non-equilibrium phase transitions in statistical physics.

V Acknowledgements

We wish to thank Juan Carrasquilla and Wanzhou Zhang for the helpful computer programs. We thank the two referees for their constructive suggestions and comments. This work was supported in part by the Fundamental Research Funds for the Central Universities, China (Grant No. CCNU19QN029), the National Natural Science Foundation of China (Grant No. 11505071, 61702207 and 61873104), and the 111 Project 2.0, with Grant No. BP0820038.

References

- Jordan and Mitchell [2015] M. I. Jordan and T. M. Mitchell, Science 349, 255 (2015).

- Engel and Van den Broeck [2001] A. Engel and C. Van den Broeck, Statistical mechanics of learning (Cambridge University Press, 2001).

- Mehta and Schwab [2014] P. Mehta and D. J. Schwab, arXiv preprint arXiv:1410.3831 (2014).

- Carleo and Troyer [2017] G. Carleo and M. Troyer, Science 355, 602 (2017).

- Carrasquilla and Melko [2017] J. Carrasquilla and R. G. Melko, Nature Physics 13, 431 (2017).

- Wang [2016] L. Wang, Physical Review B 94, 195105 (2016).

- Van Nieuwenburg et al. [2017] E. P. Van Nieuwenburg, Y.-H. Liu, and S. D. Huber, Nature Physics 13, 435 (2017).

- McKinney et al. [2006] B. A. McKinney, D. M. Reif, M. D. Ritchie, and J. H. Moore, Applied bioinformatics 5, 77 (2006).

- Schafer et al. [2014] N. P. Schafer, B. L. Kim, W. Zheng, and P. G. Wolynes, Israel journal of chemistry 54, 1311 (2014).

- VanderPlas et al. [2012] J. VanderPlas, A. J. Connolly, Ž. Ivezić, and A. Gray, in 2012 conference on intelligent data understanding (IEEE, 2012) pp. 47–54.

- Mehta et al. [2019] P. Mehta, M. Bukov, C.-H. Wang, A. G. Day, C. Richardson, C. K. Fisher, and D. J. Schwab, Physics reports 810, 1 (2019).

- Carleo et al. [2019] G. Carleo, I. Cirac, K. Cranmer, L. Daudet, M. Schuld, N. Tishby, L. Vogt-Maranto, and L. Zdeborová, Reviews of Modern Physics 91, 045002 (2019).

- Goodfellow et al. [2016] I. Goodfellow, Y. Bengio, and A. Courville, Deep learning (MIT press, 2016).

- Krizhevsky et al. [2012] A. Krizhevsky, I. Sutskever, and G. E. Hinton, in Advances in neural information processing systems (2012) pp. 1097–1105.

- Broecker et al. [2017] P. Broecker, J. Carrasquilla, R. G. Melko, and S. Trebst, Scientific reports 7, 1 (2017).

- Zhang et al. [2019] W. Zhang, J. Liu, and T.-C. Wei, Physical Review E 99, 032142 (2019).

- Hammersley [2013] J. Hammersley, Monte carlo methods (Springer Science & Business Media, 2013).

- Wetzel [2017] S. J. Wetzel, Physical Review E 96, 022140 (2017).

- Hu et al. [2017] W. Hu, R. R. Singh, and R. T. Scalettar, Physical Review E 95, 062122 (2017).

- Wang and Zhai [2017] C. Wang and H. Zhai, Physical Review B 96, 144432 (2017).

- Henkel et al. [2008] M. Henkel, H. Hinrichsen, S. Lübeck, and M. Pleimling, Non-equilibrium phase transitions, Vol. 1 (Springer, 2008).

- Hinrichsen [2000] H. Hinrichsen, Advances in physics 49, 815 (2000).

- Lübeck [2004] S. Lübeck, International Journal of Modern Physics B 18, 3977 (2004).

- Haken [1975] H. Haken, Reviews of Modern Physics 47, 67 (1975).

- Amit and Martin-Mayor [2005] D. J. Amit and V. Martin-Mayor, Field Theory, the Renormalization Group, and Critical Phenomena: Graphs to Computers Third Edition (World Scientific Publishing Company, 2005).

- Domb [1996] C. Domb, The critical point: a historical introduction to the modern theory of critical phenomena (CRC Press, 1996).

- Dhar and Barma [1981] D. Dhar and M. Barma, Journal of Physics C: Solid State Physics 14, L1 (1981).

- Grassberger [1989] P. Grassberger, Journal of Physics A: Mathematical and General 22, 3673 (1989).

- Grinstein and Muñoz [1997] G. Grinstein and M. A. Muñoz, in Fourth Granada Lectures in Computational Physics (Springer, 1997) pp. 223–270.

- Liggett [2012] T. M. Liggett, Interacting particle systems, Vol. 276 (Springer Science & Business Media, 2012).

- Dickman and Burschka [1988] R. Dickman and M. A. Burschka, Physics Letters A 127, 132 (1988).

- Clar et al. [1996] S. Clar, B. Drossel, and F. Schwabl, Journal of Physics: Condensed Matter 8, 6803 (1996).

- Albano [1994] E. V. Albano, Journal of Physics A: Mathematical and General 27, L881 (1994).

- Mollison [1977] D. Mollison, Journal of the Royal Statistical Society: Series B (Methodological) 39, 283 (1977).

- Ziff et al. [1986] R. M. Ziff, E. Gulari, and Y. Barshad, Physical Review Letters 56, 2553 (1986).

- De’Bell and Essam [1983] K. De’Bell and J. Essam, Journal of Physics A: Mathematical and General 16, 385 (1983).

- Kinzel and Yeomans [1981] W. Kinzel and J. Yeomans, Journal of Physics A: Mathematical and General 14, L163 (1981).

- Janssen and Täuber [2005] H.-K. Janssen and U. C. Täuber, Annals of Physics 315, 147 (2005).

- Hinrichsen and Koduvely [1998] H. Hinrichsen and H. M. Koduvely, The European Physical Journal B-Condensed Matter and Complex Systems 5, 257 (1998).

- Maslov and Zhang [1996] S. Maslov and Y.-C. Zhang, Physica A: Statistical Mechanics and its Applications 223, 1 (1996).

- Wang et al. [2013] J. Wang, Z. Zhou, Q. Liu, T. M. Garoni, and Y. Deng, Physical Review E 88, 042102 (2013).

- Obukhov [1980] S. Obukhov, Physica A: Statistical Mechanics and its Applications 101, 145 (1980).

- Cardy and Sugar [1980] J. L. Cardy and R. Sugar, Journal of Physics A: Mathematical and General 13, L423 (1980).

- Glassner [2018] A. Glassner, Seattle, WA, USA: The Imaginary Institute (2018).

- Frank et al. [2001] R. J. Frank, N. Davey, and S. P. Hunt, Journal of Intelligent and Robotic Systems 31, 91 (2001).

- Liu et al. [2018] C. L. Liu, H. Wen-Hoar, and Y. C. Tu, IEEE Transactions on Industrial Electronics PP, 1 (2018).