Superhuman Fairness

Abstract

The fairness of machine learning-based decisions has become an increasingly important focus in the design of supervised machine learning methods. Most fairness approaches optimize a specified trade-off between performance measure(s) (e.g., accuracy, log loss, or AUC) and fairness metric(s) (e.g., demographic parity, equalized odds). This begs the question: are the right performance-fairness trade-offs being specified? We instead re-cast fair machine learning as an imitation learning task by introducing superhuman fairness, which seeks to simultaneously outperform human decisions on multiple predictive performance and fairness measures. We demonstrate the benefits of this approach given suboptimal decisions.

1 Introduction

The social impacts of algorithmic decisions based on machine learning have motivated various group and individual fairness properties that decisions should ideally satisfy (Calders et al., 2009; Hardt et al., 2016). Unfortunately, impossibility results prevent multiple common group fairness properties from being simultaneously satisfied (Kleinberg et al., 2016). Thus, no set of decisions can be universally fair to all groups and individuals for all notions of fairness. Instead, specified weightings, or trade-offs, of different criteria are often optimized (Liu & Vicente, 2022). Identifying an appropriate trade-off to prescribe to these fairness methods is a daunting task open to application-specific philosophical and ideological debate that could delay or completely derail the adoption of algorithmic methods.

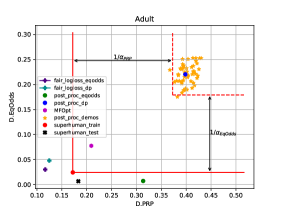

We consider the motivating scenario of a fairness-aware decision task currently being performed by well-intentioned, but inherently error-prone human decision makers. Rather than seeking optimal decisions for specific performance-fairness trade-offs, which may be difficult to accurately elicit, we propose a more modest, yet more practical objective: outperform human decisions across all performance and fairness measures with maximal frequency. We implicitly assume that available human decisions reflect desired performance-fairness trade-offs, but are often noisy and suboptimal. This provides an opportunity for superhuman decisions that Pareto dominate human decisions across predictive performance and fairness metrics (Figure 1) without identifying an explicit desired trade-off.

To the best of our knowledge, this paper is the first to define fairness objectives for supervised machine learning with respect to noisy human decisions rather than using prescriptive trade-offs or hard constraints. We leverage and extend a recently-developed imitation learning method for subdominance minimization (Ziebart et al., 2022). Instead of using the subdominance to identify a target trade-off, as previous work does in the inverse optimal control setting to estimate a cost function, we use it to directly optimize our fairness-aware classifier. We develop policy gradient optimization methods (Sutton & Barto, 2018) that allow flexible classes of probabilistic decision policies to be optimized for given sets of performance/fairness measures and demonstrations.

We conduct extensive experiments on standard fairness datasets (Adult and COMPAS) using accuracy as a performance measure and three conflicting fairness definitions: Demographic Parity (Calders et al., 2009), Equalized Odds (Hardt et al., 2016), and Predictive Rate Parity (Chouldechova, 2017)). Though our motivation is to outperform human decisions, we employ a synthetic decision-maker with differing amounts of label and group membership noise to identify sufficient conditions for superhuman fairness of varying degrees. We find that our approach achieves high levels of superhuman performance that increase rapidly with reference decision noise and significantly outperform the superhumanness of other methods that are based on more narrow fairness-performance objectives.

2 Fairness, Elicitation, and Imitation

2.1 Group Fairness Measures

Group fairness measures are primarily defined by confusion matrix statistics (based on labels and decisions/predictions produced from inputs ) for examples belonging to different protected groups (e.g., ).

We focus on three prevalent fairness properties in this paper:

-

•

Demographic Parity (DP) (Calders et al., 2009) requires equal positive rates across protected groups:

-

•

Equalized Odds (EqOdds) (Hardt et al., 2016) requires equal true positive rates and false positive rates across groups, i.e.,

-

•

Predictive Rate Parity (PRP) (Chouldechova, 2017) requires equal positive predictive value () and negative predictive value () across groups:

Violations of these fairness properties can be measured as differences:

| (1) | ||||

| (2) | ||||

| (3) |

2.2 Performance-Fairness Trade-offs

Numerous fair classification algorithms have been developed over the past few years, with most targeting one fairness metric (Hardt et al., 2016). With some exceptions (Blum & Stangl, 2019), predictive performance and fairness are typically competing objectives in supervised machine learning approaches. Thus, though satisfying many fairness properties simultaneously may be naïvely appealing, doing so often significantly degrades predictive performance or even creates infeasibility (Kleinberg et al., 2016).

Given this, many approaches seek to choose parameters for (probabilistic) classifier that balance the competing predictive performance and fairness objectives (Kamishima et al., 2012; Hardt et al., 2016; Menon & Williamson, 2018; Celis et al., 2019; Martinez et al., 2020; Rezaei et al., 2020). Recently, Hsu et al. (2022) proposed a novel optimization framework to satisfy three conflicting fairness metrics (demographic parity, equalized odds, and predictive rate parity) to the best extent possible:

| (4) | |||

2.3 Preference Elictation & Imitation Learning

Preference elicitation (Chen & Pu, 2004) is one natural approach to identifying desirable performance-fairness trade-offs. Preference elicitation methods typically query users for their pairwise preference on a sequence of pairs of options to identify their utilities for different characteristics of the options. This approach has been extended and applied to fairness metric elicitation (Hiranandani et al., 2020), allowing efficient learning of linear (e.g., Eq. (4)) and non-linear metrics from finite and noisy preference feedback.

Imitation learning (Osa et al., 2018) is a type of supervised machine learning that seeks to produce a general-use policy based on demonstrated trajectories of states and actions, . Inverse reinforcement learning methods (Abbeel & Ng, 2004; Ziebart et al., 2008) seek to rationalize the demonstrated trajectories as the result of (near-) optimal policies on an estimated cost or reward function. Feature matching (Abbeel & Ng, 2004) plays a key role in these methods, guaranteeing if the expected feature counts match, the estimated policy will have an expected cost under the demonstrator’s unknown fixed cost function weights equal to the average of the demonstrated trajectories:

| (5) | |||

where .

Syed & Schapire (2007) seeks to outperform the set of demonstrations when the signs of the unknown cost function are known, , by making the inequality,

| (6) |

strict for at least one feature. Subdominance minimization (Ziebart et al., 2022) seeks to produce trajectories that outperform each demonstration by a margin:

| (7) |

under the same assumption of known cost weight signs. However, since this is often infeasible, the approach instead minimizes the subdominance, which measures the -weighted violation of this inequality:

| (8) |

where is the hinge function and the per-feature margin has been reparameterized as . Previous work (Ziebart et al., 2022) has employed subdominance minimization in conjunction with inverse optimal control:

learning the cost function parameters for the optimal trajectory that minimizes subdominance. One contribution of this paper is extending subdominance minimization to the more flexible prediction models needed for fairness-aware classification that are not directly conditioned on cost features or performance/fairness metrics.

3 Subdominance Minimization for Improved Fairness-Aware Classification

We approach fair classification from an imitation learning perspective. We assume vectors of (human-provided) reference decisions are available that roughly reflect desired fairness-performance trade-offs, but are also noisy. Our goal is to construct a fairness-aware classifier that outperforms reference decisions on all performance and fairness measures on withheld data as frequently as possible.

3.1 Superhumanness and Subdominance

We consider reference decisions that are drawn from a human decision-maker or baseline method , on a set of M items, , where L is the number of attributes in each of items . Group membership attributes from vector indicate to which group item belongs.

The predictive performance and fairness of decisions for each item are assessed based on ground truth and group membership using a set of predictive loss and unfairness measures .

Definition 3.1.

A fairness-aware classifier is considered -superhuman for a given set of predictive loss and unfairness measures if its decisions satisfy:

If strict Pareto dominance is required to be -superhuman, which is often effectively true for continuous domains, then by definition, at most of human decision makers could be -superhuman. However, far fewer than may be superhuman if pairs of human decisions do not Pareto dominate one another in either direction (i.e., neither nor for pairs of human decisions and ). From this perspective, any decisions with superhuman performance more than of the time exceed the performance limit for the distribution of human demonstrators.

Unfortunately, directly maximizing is difficult in part due to the discontinuity of Pareto dominance (). The subdominance (Ziebart et al., 2022) serves as a convex upper bound for non-dominance in each metric and on in aggregate:

| (9) |

Given vectors of reference decisions as demonstrations, , the subdominance for decision vector with respect to the set of demonstrations is111For notational simplicity, we assume all demonstrated decisions correspond to the same items represented in . Generalization to unique for each demonstration is straightforward.

where is the predictions produced by our model for the set of items , and is the set of these prediction sets, . The subdominance is illustrated by Figure 2. Following concepts from support vector machines (Cortes & Vapnik, 1995), reference decisions that actively constrain the predictions in a particular feature dimension, k, are referred to as support vectors and denoted as:

3.2 Performance-Fairness Subdominance Minimization

We consider probabilistic predictors that make structured predictions over the set of items in the most general case, but can also be simplified to make conditionally independent decisions for each item.

Definition 3.2.

The minimally subdominant fairness-aware classifier has model parameters chosen by:

Hinge loss slopes are also learned from the data during training. For subdominance of feature, indicates the degree of sensitivity to how much the algorithm fails to sufficiently outperform demonstrations in that feature. When value is higher, the algorithm chooses that feature to minimize subdominance. In our setting, features are loss/violation metrics defined to measure the performance/fairness of a set of reference decisions.

We use the subgradient of subdominance with respect to and to update these variables iteratively, and after convergence, the best learned weights are used in the final model . A commonly used model like logistic regression can be used for .

Theorem 3.3.

The gradient of expected subdominance under with respect to the set of reference decisions is:

where the optimal for each is obtained from:

using to represent the value that would make the demonstration with the smallest feature, , a support vector with zero subdominance.

Using gradient descent, we update the model weights using an approximation of the gradient based on a set of sampled predictions from the model :

We show the steps required for the training of our model in Algorithm 1. Reference decisions from a human decision-maker or baseline method are provided as input to the algorithm. is set to an initial value. In each iteration of the algorithm, we first sample a set of model predictions from for the matching items used for reference decisions . We then find the new (and ) based on the algorithms discussed in Theorem 3.3.

Draw N set of reference decisions from a human decision-maker or baseline method . Initialize: while not converged do

such that Compute

3.3 Generalization Bounds

With a small effort, we extend the generalization bounds based on support vectors developed for inverse optimal control subdominance minimization (Ziebart et al., 2022).

Theorem 3.4.

A classifier trained to minimize on a set of iid reference decisions has the support vector set defined by the union of support vectors for any decision with support under . Such a classifier is on average -superhuman on the population distribution with: .

This generalization bound requires overfitting to the training data so that the has restricted support (i.e., for many ) or becomes deterministic.

4 Experiments

The goal of our approach is to produce a fairness-aware prediction method that outperforms reference (human) decisions on multiple fairness/performance measures. In this section, we discuss our experimental design to synthesize reference decisions with varying levels of noise, evaluate our method, and provide comparison baselines.

4.1 Training and Testing Dataset Construction

To emulate human decision-making with various levels of noise, we add noise to the ground truth data of benchmark fairness datasets and apply fair learning methods over repeated randomized dataset splits. We describe this process in detail in the following section.

Datasets

We perform experiments on two benchmark fairness datasets:

-

•

UCI Adult dataset (Dheeru & Karra Taniskidou, 2017) considers predicting whether a household’s income is higher than $50K/yr based on census data. Group membership is based on gender. The dataset consists of 45,222 items.

-

•

COMPAS dataset (Larson et al., 2016) considers predicting recidivism with group membership based on race. It consists of 6,172 examples.

Partitioning the data

We first split entire dataset randomly into a disjoint train (train-sh) and test (test-sh) set of equal size. The test set (test-sh) is entirely withheld from the training procedure and ultimately used solely for evaluation. To produce each demonstration (a vector of reference decisions), we split the (train-sh) set, randomly into a disjoint train (train-pp) and test (test-pp) set of equal size.

Noise insertion

We randomly flip of the ground truth labels and group membership attributes to add noise to our demonstration-producing process.

Fair classifier :

Using the noisy data, we provide existing fairness-aware methods with labeled train-pp data and unlabeled test-pp to produce decisions on the test-pp data as demonstrations . Specifically, we employ:

-

•

The Post-processing method of Hardt et al. (2016), which aims to reduce both prediction error and {demographic parity or equalized odds} at the same time. We use demographic parity as the fairness constraint. We produce demonstrations using this method for Adult dataset.

-

•

Robust fairness for logloss-based classification (Rezaei et al., 2020) employs distributional robustness to match target fairness constraint(s) while robustly minimizing the log loss. We use equalized odds as the fairness constraint. We employ this method to produce demonstrations for COMPAS dataset.

We repeat the process of partitioning train-sh times to create randomized partitions of train-pp and test-pp and then produce a set of demonstrations .

4.2 Evaluation Metrics and Baselines

Predictive Performance and Fairness Measures

Our focus for evaluation is on outperforming demonstrations in multiple fairness and performance measures. We use measures: inaccuracy (Prediction error), difference from demographic parity (D.DP), difference from equalized odds (D.EqOdds), difference from predictive rate parity (D.PRP).

Baseline methods

As baseline comparisons, we train five different models on the entire train set (train-sh) and then evaluate them on the withheld test data (test-sh):

-

•

The Post-processing model of (Hardt et al., 2016) with demographic parity as the fairness constraint (post_proc_dp).

-

•

The Post-processing model of (Hardt et al., 2016) with equalized odds as the fairness constraint (post_proc_eqodds).

-

•

The Robust Fair-logloss model of (Rezaei et al., 2020) with demographic parity as the fairness constraint (fair_logloss_dp).

-

•

The Robust Fair-logloss model of (Rezaei et al., 2020) equalized odds as the fairness constraint (fair_logloss_eqodds).

-

•

The Multiple Fairness Optimization framework of Hsu et al. (2022) which is designed to satisfy three conflicting fairness metrics (demographic parity, equalized odds and predictive rate parity) to the best extent possible (MFOpt).

| Adult | COMPAS | ||||||||

| Prediction error | DP diff | EqOdds diff | PRP diff | Prediction error | DP diff | EqOdds diff | PRP diff | ||

| 62.62 | 35.93 | 6.46 | 4.98 | 82.5 | 4.27 | 3.15 | 12.72 | ||

| -superhuman | 98% | 94% | 100% | 100% | 100% | 100% | 100% | 100% | |

| MinSub-Fair (ours) | 0.210884 | 0.025934 | 0.006690 | 0.183138 | 0.366806 | 0.040560 | 0.124683 | 0.171177 | |

| MFOpt | 0.195696 | 0.063152 | 0.077549 | 0.209199 | 0.434743 | 0.005830 | 0.069519 | 0.161629 | |

| post_proc_dp | 0.212481 | 0.030853 | 0.220357 | 0.398278 | 0.345964 | 0.010383 | 0.077020 | 0.173689 | |

| post_proc_eqodds | 0.213873 | 0.118802 | 0.007238 | 0.313458 | 0.363395 | 0.041243 | 0.060244 | 0.151040 | |

| fair_logloss_dp | 0.281194 | 0.004269 | 0.047962 | 0.124797 | 0.467610 | 0.000225 | 0.071392 | 0.172418 | |

| fair_logloss_eqodds | 0.254060 | 0.153543 | 0.030141 | 0.116579 | 0.451496 | 0.103093 | 0.029085 | 0.124447 | |

Hinge Loss Slopes

As discussed previously, value corresponds to the hinge loss slope, which defines by how far a produced decision does not sufficiently outperform the demonstrations on the feature. When the is large, the model chooses heavily weights support vector reference decisions for that particular when minimizing subdominance. We report these values in our experiments.

4.3 Superhuman Model Specification and Updates

We use a logistic regression model with first-order moments feature function, , and weights applied independently on each item as our decision model. During the training process, we update the model parameter to reduce subdominance.

Sample from Model

In each iteration of the algorithm, we first sample prediction vectors from for the matching items used in demonstrations . In the implementation, to produce the sample, we look up the indices of the items used in , which constructs item set . Now we make predictions using our model on this item set . The model produces a probability distribution for each item which can be sampled and used as a prediction .

Update model parameters

We update until convergence using Algorithm 1. For our logistic regression model, the gradient is:

where denotes the feature function and is the corresponding feature function for the set of reference decisions.

| Adult | COMPAS | ||||||||

| Prediction error | DP diff | EqOdds diff | PRP diff | Prediction error | DP diff | EqOdds diff | PRP diff | ||

| 29.63 | 10.77 | 5.83 | 13.42 | 29.33 | 4.51 | 3.34 | 57.74 | ||

| -superhuman | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 98% | |

| MinSub-Fair (ours) | 0.202735 | 0.030961 | 0.009263 | 0.176004 | 0.359985 | 0.031962 | 0.036680 | 0.172286 | |

| MFOpt | 0.195696 | 0.063152 | 0.077549 | 0.209199 | 0.459731 | 0.091892 | 0.039745 | 0.153257 | |

| post_proc_dp | 0.225462 | 0.064232 | 0.237852 | 0.400427 | 0.353164 | 0.087889 | 0.088414 | 0.160538 | |

| post_proc_eqodds | 0.224561 | 0.103158 | 0.010552 | 0.310070 | 0.351269 | 0.144190 | 0.158372 | 0.148493 | |

| fair_logloss_dp | 0.285549 | 0.007576 | 0.057659 | 0.115751 | 0.484620 | 0.005309 | 0.145502 | 0.183193 | |

| fair_logloss_eqodds | 0.254577 | 0.147932 | 0.012778 | 0.118041 | 0.487025 | 0.127163 | 0.011918 | 0.153869 | |

4.4 Experimental Results

After training each model, e.g., obtaining the best model weight from the training data (train-sh) for superhuman, we evaluate each on unseen test data (test-sh). We employ hard predictions (i.e., the most probable label) using our approach at time time rather than randomly sampling.

| Method | Adult() | Adult() | COMPAS() | COMPAS() |

|---|---|---|---|---|

| MinSub-Fair (ours) | 96% | 100% | 100% | 98% |

| MFOpt | 42% | 0% | 18% | 18% |

| post_proc_dp | 16% | 86% | 100% | 80% |

| post_proc_eqodds | 0% | 66% | 100% | 88% |

| fair_logloss_dp | 0% | 0% | 0% | 0% |

| fair_logloss_eqodds | 0% | 0% | 0% | 0% |

Noise-free reference decisions

Our first set of experiments considers learning from reference decisions with no added noise. The results are shown in Figure 3. We observe that our approach outperforms demonstrations in all fairness metrics and shows comparable performance in accuracy. The (post_proc_dp) performs almost as an average of demonstrations in all dimensions, hence our approach can outperform it in all fairness metrics. In comparison to (post_proc_dp), our approach can outperform in all fairness metrics but is slightly worse in prediction error.

We show the experiment results along with values in Table 1. Note that the margin boundaries (dotted red lines) in Figure 3 are equal to for feature , hence there is reverse relation between and margin boundary for feature . We observe larger values of for prediction error and demographic parity difference. The reason is that these features are already optimized in demonstrations and our model has to increase values for those features to sufficiently outperform them.

Noisy reference decisions

In our second set of experiments, we introduce significant amounts of noise () into our reference decisions. The results for these experiments are shown in Figure 4. We observe that in the case of learning from noisy demonstrations, our approach still outperforms the reference decisions. The main difference here is that due to the noisy setting, demonstrations have worse prediction error but regardless of this issue, our approach still can achieve a competitive prediction error. We show the experimental results along with values in Table 2.

Relationship of noise to superhuman performance

We also evaluate the relationship between the amount of augmented noise in the label and protected attribute of demonstrations, with achieving -superhuman performance in our approach. As shown in Figure 5, with slightly increasing the amount of noise in demonstrations, our approach can outperform of demonstrations and reach to -superhuman performance. In Table 3 we show the percentage of demonstrations that each method can outperform across all prediction/fairness measures (i.e., the superhuman value).

5 Conclusions

In this paper, we introduce superhuman fairness, an approach to fairness-aware classifier construction based on imitation learning. Our approach avoids explicit performance-fairness trade-off specification or elicitation. Instead, it seeks to unambiguously outperform human decisions across multiple performance and fairness measures with maximal frequency. We develop a general framework for pursuing this based on subdominance minimization (Ziebart et al., 2022) and policy gradient optimization methods (Sutton & Barto, 2018) that enable a broad class of probabilistic fairness-aware classifiers to be learned. Our experimental results show the effectiveness of our approach in outperforming synthetic decisions corrupted by small amounts of label and group-membership noise when evaluated using multiple fairness criteria combined with predictive accuracy.

Societal impacts

By design, our approach has the potential to identify fairness-aware decision-making tasks in which human decisions can frequently be outperformed by a learned classifier on a set of provided performance and fairness measures. This has the potential to facilitate a transition from manual to automated decisions that are preferred by all interested stakeholders, so long as their interests are reflected in some of those measures. However, our approach has limitations. First, when performance-fairness tradeoffs can either be fully specified (e.g., based on first principles) or effectively elicited, fairness-aware classifiers optimized for those trade-offs should produce better results than our approach, which operates under greater uncertainty cast by the noisiness of human decisions. Second, if target fairness concepts lie outside the set of metrics we consider, our resulting fairness-aware classifier will be oblivious to them. Third, our approach assumes human-demonstrated decision are well-intentioned, noisy reflections of desired performance-fairness trade-offs. If this is not the case, then our methods could succeed in outperforming them across all fairness measures, but still not provide an adequate degree of fairness.

Future directions

We have conducted experiments with a relatively small number of performance/fairness measures using a simplistic logistic regression model. Scaling our approach to much larger numbers of measures and classifiers with more expressive representations are both of great interest. Additionally, we plan to pursue experimental validation using human-provided fairness-aware decisions in addition to the synthetically-produced decisions we consider in this paper.

References

- Abbeel & Ng (2004) Abbeel, P. and Ng, A. Y. Apprenticeship learning via inverse reinforcement learning. In Proceedings of the International Conference on Machine Learning, pp. 1–8, 2004.

- Blum & Stangl (2019) Blum, A. and Stangl, K. Recovering from biased data: Can fairness constraints improve accuracy? arXiv preprint arXiv:1912.01094, 2019.

- Boyd & Vandenberghe (2004) Boyd, S. and Vandenberghe, L. Convex optimization. Cambridge University Press, 2004.

- Calders et al. (2009) Calders, T., Kamiran, F., and Pechenizkiy, M. Building classifiers with independency constraints. In 2009 IEEE International Conference on Data Mining Workshops, pp. 13–18. IEEE, 2009.

- Celis et al. (2019) Celis, L. E., Huang, L., Keswani, V., and Vishnoi, N. K. Classification with fairness constraints: A meta-algorithm with provable guarantees. In ACM FAT*, 2019.

- Chen & Pu (2004) Chen, L. and Pu, P. Survey of preference elicitation methods. Technical report, EPFL, 2004.

- Chouldechova (2017) Chouldechova, A. Fair prediction with disparate impact: A study of bias in recidivism prediction instruments. Big data, 5(2):153–163, 2017.

- Cortes & Vapnik (1995) Cortes, C. and Vapnik, V. Support-vector networks. Machine learning, 20:273–297, 1995.

- Dheeru & Karra Taniskidou (2017) Dheeru, D. and Karra Taniskidou, E. UCI machine learning repository, 2017. URL http://archive.ics.uci.edu/ml.

- Hardt et al. (2016) Hardt, M., Price, E., and Srebro, N. Equality of opportunity in supervised learning. Advances in Neural Information Processing Systems, 29:3315–3323, 2016.

- Hiranandani et al. (2020) Hiranandani, G., Narasimhan, H., and Koyejo, S. Fair performance metric elicitation. Advances in Neural Information Processing Systems, 33:11083–11095, 2020.

- Hsu et al. (2022) Hsu, B., Mazumder, R., Nandy, P., and Basu, K. Pushing the limits of fairness impossibility: Who’s the fairest of them all? In Advances in Neural Information Processing Systems, 2022.

- Kamishima et al. (2012) Kamishima, T., Akaho, S., Asoh, H., and Sakuma, J. Fairness-aware classifier with prejudice remover regularizer. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pp. 35–50. Springer, 2012.

- Kleinberg et al. (2016) Kleinberg, J., Mullainathan, S., and Raghavan, M. Inherent trade-offs in the fair determination of risk scores. arXiv preprint arXiv:1609.05807, 2016.

- Larson et al. (2016) Larson, J., Mattu, S., Kirchner, L., and Angwin, J. How we analyzed the compas recidivism algorithm. ProPublica, 9, 2016.

- Liu & Vicente (2022) Liu, S. and Vicente, L. N. Accuracy and fairness trade-offs in machine learning: A stochastic multi-objective approach. Computational Management Science, pp. 1–25, 2022.

- Martinez et al. (2020) Martinez, N., Bertran, M., and Sapiro, G. Minimax Pareto fairness: A multi objective perspective. In Proceedings of the International Conference on Machine Learning, pp. 6755–6764. PMLR, 13–18 Jul 2020.

- Menon & Williamson (2018) Menon, A. K. and Williamson, R. C. The cost of fairness in binary classification. In ACM FAT*, 2018.

- Osa et al. (2018) Osa, T., Pajarinen, J., Neumann, G., Bagnell, J. A., Abbeel, P., Peters, J., et al. An algorithmic perspective on imitation learning. Foundations and Trends® in Robotics, 7(1-2):1–179, 2018.

- Rezaei et al. (2020) Rezaei, A., Fathony, R., Memarrast, O., and Ziebart, B. Fairness for robust log loss classification. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pp. 5511–5518, 2020.

- Sutton & Barto (2018) Sutton, R. S. and Barto, A. G. Reinforcement learning: An introduction. MIT press, 2018.

- Syed & Schapire (2007) Syed, U. and Schapire, R. E. A game-theoretic approach to apprenticeship learning. Advances in neural information processing systems, 20, 2007.

- Vapnik & Chapelle (2000) Vapnik, V. and Chapelle, O. Bounds on error expectation for support vector machines. Neural computation, 12(9):2013–2036, 2000.

- Ziebart et al. (2022) Ziebart, B., Choudhury, S., Yan, X., and Vernaza, P. Towards uniformly superhuman autonomy via subdominance minimization. In International Conference on Machine Learning, pp. 27654–27670. PMLR, 2022.

- Ziebart et al. (2008) Ziebart, B. D., Maas, A. L., Bagnell, J. A., Dey, A. K., et al. Maximum entropy inverse reinforcement learning. In AAAI, volume 8, pp. 1433–1438, 2008.

Appendix A Proofs of Theorems

Proof of Theorem 3.3.

The gradient of the training objective with respect to model parameters is:

which follows directly from a property of gradients of logs of function:

| (10) |

We note that this is a well-known approach employed by policy-gradient methods in reinforcement learning (Sutton & Barto, 2018).

Next, we consider how to obtain the minimized subdominance for a particular tuple (,,,), , analytically.

First, we note that is comprised of hinged linear functions of , making it a convex and piece-wise linear function of . This has two important implications: (1) any point of the function for which the subgradient includes is a global minimum of the function (Boyd & Vandenberghe, 2004); (2) an optimum must exist at a corner of the function: or where one of the hinge functions becomes active:

| (11) |

The subgradient for the of these points (ordered by value from smallest to largest and denoted for the demonstration) is:

where the final bracketed expression indicates the range of values added to the constant value preceding it.

The smallest for which the largest value in this range is positive must contain the in its corresponding range, and is thus the provides the value for the optimal value. ∎

Proof of Theorem 3.4.

We extend the leave-one-out generalization bound of Ziebart et al. (2022) by considering the set of reference decisions that are support vectors for any learner decisions with non-zero probability. For the remaining reference decisions that are not part of this set, removing them from the training set would not change the optimal model choice and thus contribute zero error to the leave-one-out cross validation error, which is an almost unbiased estimate of the generalization error (Vapnik & Chapelle, 2000).

∎

Appendix B Additional Results

In the main paper, we only included plots that show the relationship of a fairness metric with prediction error. To show the relation between each pair of fairness metrics, in Figures 6 and 7 we show the remaining plots removed from Figures 3 and 4 respectively.

B.1 Experiment with more measures

Since our approach is flexible enough to accept wide range of fairness/performance measures, we extend the experiment on Adult to features. In this experiment we use Demographic Parity (D.DP), Equalized Odds (D.EqOdds), False Negative Rate (D.FNR), False Positive Rate (D.FPR) and Prediction Error as the features to outperform reference decisions on. The results are shown in Figure 8.