Super-Resolution Neural Operator

Abstract

We propose Super-resolution Neural Operator (SRNO), a deep operator learning framework that can resolve high-resolution (HR) images at arbitrary scales from the low-resolution (LR) counterparts. Treating the LR-HR image pairs as continuous functions approximated with different grid sizes, SRNO learns the mapping between the corresponding function spaces. From the perspective of approximation theory, SRNO first embeds the LR input into a higher-dimensional latent representation space, trying to capture sufficient basis functions, and then iteratively approximates the implicit image function with a kernel integral mechanism, followed by a final dimensionality reduction step to generate the RGB representation at the target coordinates. The key characteristics distinguishing SRNO from prior continuous SR works are: 1) the kernel integral in each layer is efficiently implemented via the Galerkin-type attention, which possesses non-local properties in the spatial domain and therefore benefits the grid-free continuum; and 2) the multilayer attention architecture allows for the dynamic latent basis update, which is crucial for SR problems to “hallucinate” high-frequency information from the LR image. Experiments show that SRNO outperforms existing continuous SR methods in terms of both accuracy and running time. Our code is at https://github.com/2y7c3/Super-Resolution-Neural-Operator

1 Introduction

Single image super-resolution (SR) addresses the inverse problem of reconstructing high-resolution (HR) images from their low-resolution (LR) counterparts. In a data-driven way, deep neural networks (DNNs) learn the inversion map from many LR-HR sample pairs and have demonstrated appealing performances [4, 20, 21, 40, 41, 29, 24]. Nevertheless, most DNNs are developed in the configuration of single scaling factors, which cannot be used in scenarios requiring arbitrary SR factors [37, 38]. Recently, implicit neural functions (INF) [5, 18] have been proposed to represent images at arbitrary resolution, paving a feasible way for continuous SR. These networks, as opposed to storing discrete signals in grid-based formats, represent signals with evaluations of continuous functions at specified coordinates, where the functions are generally parameterized by a multi-layer perceptron (MLP). To share knowledge across instances instead of fitting individual functions for each signal, encoder-based methods [5, 26, 15] are proposed to retrieve latent codes for each signal, and then a decoding MLP is shared by all the instances to generate the required output, where both the coordinates and the corresponding latent codes are taken as input. However, the point-wise behavior of the MLP in the spatial dimensions results in limited performance when decoding various objects, particularly for high-frequency components [32, 30].

Neural operator is a newly proposed neural network architecture in the field of computational physics [23, 19, 17] for numerically efficient solvers of partial differential equations (PDE). Stemming from the operator theory, neural operators learn mappings between infinite-dimensional function spaces, which makes them inherently capable of continuous function evaluations, and they have shown promising potential in various applications [27, 9, 13]. Typically, a neural operator consists of three components: 1) lifting, 2) iterative kernel integral, and 3) projection. The kernel integrals operate in the spatial domain, and thus can explicitly capture the global relationship constraining the underlying solution function of the PDE. The attention mechanism in transformers [36] is a special case of kernel integral where linear transforms are first applied to the feature maps prior to the inner product operations [17]. Tremendous successes of transformers in various tasks [6, 22, 34] have shown the importance of capturing global correlations, and this is also true for improving SR performance [8].

In this paper, we propose the super-resolution neural operator (SRNO), a deep operator learning framework that can resolve HR images from their LR counterparts at arbitrary scales. As shown in Fig.1, SRNO learns the mapping between the corresponding function spaces by treating the LR-HR image pairs as continuous functions approximated with different grid sizes. The key characteristics distinguishing SRNO from prior continuous SR works are: 1) the kernel integral in each layer is efficiently implemented via the Galerkin-type attention, which possesses non-local properties in the spatial dimensions and has been proved to be comparable to a Petrov-Galerkin projection [3]; and 2) the multilayer attention architecture allows for the dynamic latent basis update, which is crucial for SR problems to “hallucinate” high-frequency information from the LR image. When employing the same encoders to capture features, our method outperforms previous continuous SR methods in terms of both reconstruction accuracy and running time.

In summary, our main contributions are as follows:

- We propose the methodology of super-resolution neural operator that maps between finite-dimensional function spaces, allowing for continuous and zero-shot super-resolution irrespective of the discretization used on the input and output spaces.

- We develop an architecture for SRNO that first explores the common latent basis for the whole training set and subsequently refines an instance-specific basis by the Galerkin-type attention mechanism.

- Numerically, we show that the proposed SRNO outperforms existing continuous SR methods with less running time, and even generates better results on the resolutions for which the fixed scale SR networks were trained.

2 Related Work

Deep learning based SR methods. Although deep learning based SR methods [4, 20, 21, 40, 41, 29, 24] have achieved impressive performances, in multi-scale scenarios one has to train and store a separate model for each scale factor, which is infeasible when considering time and memory budgets. In recent years, several methods [11, 37, 31] have been proposed to achieve arbitrary-scale SR with a single model, but their performances are limited when dealing with out-of-distribution scaling factors. Inspired by INF, LIIF [5] takes continuous coordinates and latent variables as inputs, and employs an MLP to achieve outstanding performances for both in-distribution and out-of-distribution factors. In contrast, LTE [18] transforms input coordinates into the Fourier domain and uses the dominant frequencies extracted from latent variables to address the spectral bias problem [32, 30]. In a nutshell, treating images as RGB-valued functions and sharing the implicit function space are the keys to the success of LIIF-like works [5, 18]. Nevertheless, a purely local decoder, like an MLP, is not able to accurately approximate arbitrary images, although it is rather sensitive to the input coordinates.

Neural Operators. Recently, a novel neural network architecture, Neural Operator (NO), was proposed for discretization invariant solutions of PDEs via infinite-dimensional operator learning [19, 10, 23, 17]. Neural operators only need to be trained once and are capable of transferring solutions between differently discretized meshes while keeping a fixed approximation error. A valuable merit of NO is that it does not require knowledge of the underlying PDE, which allows us to introduce it by the following abstract form,

| (1) |

where is the solution function residing in the Banach space , and is an operator-valued functional that maps the coefficient function of the PDE to , the dual space of . As in many cases the inverse operator of even does not exist, NO seeks a feasible operator , directly mapping the coefficient to the solution within an acceptable tolerance.

The operator is numerically approximated by training a neural network , where are the trainable parameters. Suppose we have pairs of observations where the input functions are sampled from probability measure compactly supported on , and are used as the supervisory output functions. The infinitely dimensional operator learning problem thus is associated with the empirical-risk minimization problem [35]. In practice, we actually measure the approximation loss using the sampled observations and , which are the direct results of discretization:

| (2) |

Similar to classical feedforward neural networks (FFNs), the NO is of an iterative architecture. For ease of exposition, suppose is defined on the bounded domain , and the inputs and outputs of the intermediate layers are all vector-valued functions, with dimension . Then can be formulated as follows:

| (3) | ||||

| (4) | ||||

| (5) |

where , and are the local lifting and projection functions respectively , mapping the input to its first layer hidden representation and the last layer hidden representation back to the output function . is a point-wise linear transformation, and is the nonlinear activation function.

Although the PDE in (1) point-wisely defines the behavior of the solution function , the solution operator we are seeking should exhibit the non-local property such that can approximate everywhere rather than locally. For this purpose, NO may employ the kernel integral operators to maintain the continuum in the spatial domain, and one of the most adopted forms [19] is defined as

| (6) |

where the kernel matrix is parameterized by .
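The layer structure described above (a point-wise linear term plus a kernel integral, followed by a nonlinearity) can be sketched numerically. This is a minimal illustration and not the authors' implementation: the Gaussian form of the kernel, the matrix `A`, the ReLU activation, the sizes, and the names `kernel_integral` and `neural_operator_layer` are all assumptions made for the example. The integral over the domain is replaced by an average over the sampled points, i.e. a Monte Carlo estimate.

```python
import numpy as np

def kernel_integral(coords, z, A, length_scale=0.2):
    """Monte Carlo estimate of (K z)(x_i) = mean_j k(x_i, x_j) * A z(x_j).

    The Gaussian kernel k and the matrix A are illustrative assumptions.
    """
    # Pairwise squared distances between the n sample points, shape (n, n).
    diff = coords[:, None, :] - coords[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=-1)
    k = np.exp(-sq_dist / (2.0 * length_scale ** 2))
    # Averaging over the sample points replaces the integral over the domain.
    return (k @ (z @ A.T)) / coords.shape[0]

def neural_operator_layer(coords, z, W, A):
    """One hidden update: point-wise linear term plus kernel integral, then ReLU."""
    return np.maximum(z @ W.T + kernel_integral(coords, z, A), 0.0)

rng = np.random.default_rng(0)
n, d = 50, 8                                   # sample points, hidden channels
coords = rng.uniform(0.0, 1.0, size=(n, 2))    # points need not lie on a regular grid
z = rng.standard_normal((n, d))
W = rng.standard_normal((d, d)) / np.sqrt(d)
A = rng.standard_normal((d, d)) / np.sqrt(d)

z_next = neural_operator_layer(coords, z, W, A)
print(z_next.shape)
```

Because every output row averages over all sample points, changing the hidden state at a single point affects the output at the other points as well, which is the non-local behavior the text refers to.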

3 Super-resolution Neural Operator

All operations of NO are defined in function space and the training data are just samples at coordinates irrespective of the discretization sizes. This property inspires us to employ the NO’s architecture for continuous SR. Different from NO that aims at mapping between infinite-dimensional function spaces, we define SRNO in the sense of mappings between two approximation spaces of finite-dimensional but continuous functions. This is reasonable because imaging optics inevitably limit the highest possible frequency in the radiance field under observation. With this configuration, we can design the architecture of SRNO using a well-developed theoretical tool, the Galerkin-type method that is widely used in the field of finite elements [7].

Problem Formulation. Let denote a Hilbert space equipped with the inner-product structure, which is continuously embedded in the space of continuous functions , with a bounded domain. An image is defined as a vector-valued function . Assume we can access the function values of at the coordinates with the biggest discretization size . Note that ’s are not necessarily equidistant. Our goal is to learn a super-resolution neural operator between two Hilbert spaces with different resolutions: , where denotes the coarse and the fine grid sizes, respectively. Given function pairs , where and . Our SRNO parameterized by can be solved through the associated empirical-risk minimization problem:

| (7) |

Since our data and are functions, to work with them numerically, we assume access only to their point-wise evaluations. Let be an approximation space [3] associated with , such that for any , , where form a set of nodal basis functions for in the sense that . Similarly, is another approximation space associated with grid size . Note that our observed LR-HR image pairs may have different grid size pairs , which means they belong to different approximation spaces .
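To make the notion of a nodal basis concrete, the following one-dimensional sketch represents a function by its values at non-equidistant nodes. The piecewise-linear "hat" functions are an assumption chosen for illustration (the text does not fix a particular basis), and the names `hat_basis` and `interpolate` are hypothetical. The defining property is that each basis function equals one at its own node and zero at every other node, so the expansion coefficients are exactly the point-wise evaluations.

```python
def hat_basis(i, nodes, x):
    """Piecewise-linear nodal basis function attached to nodes[i], evaluated at x."""
    xi = nodes[i]
    if i > 0 and nodes[i - 1] <= x <= xi:
        # Rising edge between the left neighbour and the node itself.
        return (x - nodes[i - 1]) / (xi - nodes[i - 1])
    if i < len(nodes) - 1 and xi <= x <= nodes[i + 1]:
        # Falling edge between the node and its right neighbour.
        return (nodes[i + 1] - x) / (nodes[i + 1] - xi)
    return 1.0 if x == xi else 0.0

def interpolate(nodes, values, x):
    """Evaluate the expansion sum_i values[i] * hat_basis(i, nodes, x)."""
    return sum(v * hat_basis(i, nodes, x) for i, v in enumerate(values))

nodes = [0.0, 0.3, 0.5, 1.0]     # non-equidistant grid
values = [1.0, 2.0, 0.0, 4.0]    # point-wise evaluations at the nodes

# The expansion reproduces the samples at the nodes and is defined in between.
print([interpolate(nodes, values, x) for x in nodes])
print(interpolate(nodes, values, 0.4))
```

A finer grid gives a different, larger approximation space for the same underlying function, which is the sense in which LR and HR images are treated as samples of one continuous function.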

Lifting. The neural operators from PDEs usually use a simple pointwise function, in (3), to expand the input channels. But for the SR problem, a deep feature encoder (with trainable parameters ) and spatial interpolations should be considered in the lifting operation, due to the complexity of natural images and the discretization inconsistency between LR and HR image functions. Another critical ingredient in Lifting is the incorporation scheme of coordinates. A prior study [14] demonstrates that deep CNNs can implicitly learn to encode the information about absolute positions. As shown in Fig.2 (c), the position information of the grid points has been implicitly encoded by employing CNNs. Therefore, we only need to explicitly construct the coordinate features with the fractional part of the coordinate inside a grid. In order to reduce the blocky artifacts resulting from direct interpolation of the LR feature maps, we propose to concatenate the features weighted by the corresponding bilinear interpolation factors . Compared to the local ensemble trick in [5], our method runs faster and overcomes the over-smoothing problem. We reformulate the lifting operation in (3) as:

| (8) |

where are coordinates of HR image functions, are the coordinates of the four neighbors of , , represents a local area in HR images with and the scaling factors, and is a local linear transformation function, with the number of channels after .
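The geometric part of this lifting step can be sketched in a few lines. The code below is an illustration under stated assumptions, not the authors' implementation: LR pixel centers are assumed to sit at integer coordinates, the query is given in those LR coordinates, the order of the concatenated pieces is arbitrary, and the names `bilinear_neighbors` and `lift_query` are hypothetical. For one HR query point it returns the four neighboring LR grid points, their bilinear weights, and the fractional offsets, then concatenates the weighted neighbor features with the offsets.

```python
import math

def bilinear_neighbors(qx, qy, width, height):
    """Return [((ix, iy), weight, (dx, dy)), ...] for the four LR neighbours of (qx, qy)."""
    # Top-left neighbour, clamped so that all four neighbours stay inside the grid.
    x0 = min(max(math.floor(qx), 0), width - 2)
    y0 = min(max(math.floor(qy), 0), height - 2)
    tx = min(max(qx - x0, 0.0), 1.0)
    ty = min(max(qy - y0, 0.0), 1.0)
    out = []
    for iy, wy in ((y0, 1.0 - ty), (y0 + 1, ty)):
        for ix, wx in ((x0, 1.0 - tx), (x0 + 1, tx)):
            # Bilinear weight and the offset of the query from this grid point.
            out.append(((ix, iy), wx * wy, (qx - ix, qy - iy)))
    return out

def lift_query(features, qx, qy):
    """Concatenate the four weighted neighbour features and their coordinate offsets."""
    height, width = len(features), len(features[0])
    lifted = []
    for (ix, iy), w, (dx, dy) in bilinear_neighbors(qx, qy, width, height):
        lifted.extend(w * c for c in features[iy][ix])   # weighted feature vector
        lifted.extend((dx, dy))                          # fractional offset
    return lifted

# A toy 4x4 LR feature map with 3 channels per pixel.
features = [[[float(x + y), 1.0, 0.0] for x in range(4)] for y in range(4)]
lifted = lift_query(features, 1.25, 2.5)
print(len(lifted))
```

Each query is processed once with all four neighbors in a single vector, in contrast to evaluating a decoder separately per neighbor and blending the results afterwards.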

Kernel integral. The kernel integral operator, in (4), actually tells that we can identify the hidden representation of the underlying image function with distributions [28], inner products in the settings of SRNO. We first define the kernel with respect to the input pair , rather than like (6) depending on the spatial variables . Furthermore, in order to more efficiently explore we can use multiple sets of test functions defining the distributions, which may immediately remind one of a single-head self-attention [17]. Denote for . The kernel integral operator can be approximated by the Monte-Carlo method (omitting the layer index):

| (9) |

where

| (10) |

In the language of transformers, the matrices correspond to the queries, keys and values functions respectively. By allowing to be the sum of multiple functions with separate trainable parameters, the multi-head self-attention can also be rewritten like in (9). For every observation , this operation has a complexity of , which is unaffordable for the SR problem where the sampling number usually reaches to or beyond. To overcome this issue, we employ the Galerkin-type attention operator which has a linear complexity . The approximation capacity of a linearized Galerkin-type attention has been proved to be comparable to a Petrov-Galerkin projection [3].
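The complexity gap can be checked with a small numerical experiment. The sketch below is only an illustration with hypothetical sizes: it drops the softmax, in which case matrix multiplication is associative and the product can be evaluated either by forming the n-by-n matrix first (quadratic in the number of sample points n) or by forming the d-by-d matrix first (linear in n). Softmax attention does not admit this reordering because the normalization acts on the n-by-n matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 1024, 16                     # sample points and channels (hypothetical sizes)
Q = rng.standard_normal((n, d))
K = rng.standard_normal((n, d))
V = rng.standard_normal((n, d))

# Quadratic ordering: the (n, n) matrix Q K^T is formed explicitly.
quadratic = (Q @ K.T) @ V / n
# Linear ordering: the (d, d) matrix K^T V is formed first.
linear = Q @ (K.T @ V) / n

# Scalar multiplication counts of the two orderings.
mults_quadratic = 2 * n * n * d     # n*n*d for Q K^T, then n*n*d for (Q K^T) V
mults_linear = 2 * n * d * d        # n*d*d for K^T V, then n*d*d for Q (K^T V)

print(np.allclose(quadratic, linear))
print(mults_quadratic // mults_linear)   # the ratio equals n / d
```

The saving grows with the number of sample points, which is why the reordered form matters when every HR pixel is a sample point.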

We suppose that an image function is locally integrable, i.e., is measurable and for every compact . The idea behind distributions [28] is to identify with by suitably choosing a “test function” , rather than by a series of separate evaluations at coordinates . Let , and be the keys, queries and values functions respectively, which are dimensional vector-valued functions, with a subscript denoting the corresponding component. The kernel integral attention then can be written in a component-wise form ():

| (11) |

Denote the evaluations by . Let . The columns of contain the vector representations of the learned basis functions, spanning certain subspaces of the latent representation Hilbert spaces respectively.

| (12) |

where , with the layer normalization. The -th column of contains the coefficients for the linear combination of the basis vectors to form the output . Consequently, the global correlations are reflected through the components of . As a result, SRNO can utilize all information effectively without the feature unfolding [5] or local convolutions [18], significantly suppressing the discontinuous patterns that appear around feature boundaries.
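A single-head version of this attention can be sketched as follows. The code assumes the form used for Galerkin-type attention in [3], namely layer normalization applied to the keys and values followed by the product Q (K^T V) divided by the number of sample points; the learnable scale and shift of the layer normalization are omitted, and the function names and sizes are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each row (one sample point) to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def galerkin_attention(z, Wq, Wk, Wv):
    """Single-head Galerkin-type attention: Q (LN(K)^T LN(V)) / n."""
    n = z.shape[0]
    Q, K, V = z @ Wq, z @ Wk, z @ Wv
    # (d, d) coefficient matrix; each entry is an average over all sample points.
    coeffs = layer_norm(K).T @ layer_norm(V) / n
    # Every output column is a linear combination of the columns of Q.
    return Q @ coeffs

rng = np.random.default_rng(0)
n, d = 100, 16
z = rng.standard_normal((n, d))
Wq, Wk, Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))

out = galerkin_attention(z, Wq, Wk, Wv)
print(out.shape)
```

Since the coefficient matrix is a sum over sample points, it does not depend on the order or layout of those points, so reordering the inputs simply reorders the outputs. This is one way to see why the operation suits grid-free evaluation.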

In addition to providing a global aggregation for the output z at each sampling point, the linear Galerkin-type attention (12) has the ability to obtain a quasi-optimal approximation in the current approximation space spanned with the columns of [3]. However, the expressive capability of the current bases in , , and solely depends on the current input LR image through the latent representation z. Is there any chance that we can enrich the bases with extra information that is useful for SR reconstruction but not included in the input z? For this purpose, the point-wise FFN in Fig.2 (b) introduces nonlinearities on the one hand, and the positions concatenated in z enhance the bases on the other. In this way, the basis functions are constantly enriched, and we reformulate the iterative process as follows (see the supplementary for a principled discussion):

| (13) |

Network details. The network architecture is shown in Fig.2. As to the feature encoder , we employ EDSR-baseline [21] or RDN [41], both of which drop their upsampling layers, and their output channel dimensions . The CNNs in assist SRNO in capturing the common basis functions from the ensemble of training samples, while the Galerkin-type attention layers provide the instance-specific basis enhancement. We employ the multi-head attention scheme in [36] by dividing the queries, keys and values into parts with each of dimension . In our implementation, , yielding 16-dimensional output values. We only use two iterations in the kernel integral operator, which already outperforms previous works, while keeping the running time advantage. Note that we utilize convolutions to replace all the linear layers in SRNO, since they have a GPU-friendly data structure. The detailed network structures are listed in the supplementary.
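The head-splitting step can be illustrated with a short sketch. The channel count (64) and number of heads (4) below are hypothetical values chosen only so that each head has 16 channels; layer normalization is left out to keep the example short, and the function names are not taken from the released code.

```python
import numpy as np

def split_heads(x, num_heads):
    """Reshape (n, d) into (num_heads, n, d // num_heads)."""
    n, d = x.shape
    assert d % num_heads == 0, "channel count must be divisible by the number of heads"
    return x.reshape(n, num_heads, d // num_heads).transpose(1, 0, 2)

def multi_head_linear_attention(Q, K, V, num_heads):
    """Apply Q_h (K_h^T V_h) / n independently in each head, then merge the heads."""
    n, d = Q.shape
    Qh, Kh, Vh = (split_heads(m, num_heads) for m in (Q, K, V))
    coeffs = np.einsum("hnd,hne->hde", Kh, Vh) / n     # (heads, d_head, d_head)
    out = np.einsum("hnd,hde->hne", Qh, coeffs)        # (heads, n, d_head)
    return out.transpose(1, 0, 2).reshape(n, d)

rng = np.random.default_rng(0)
n, d, heads = 200, 64, 4            # hypothetical sizes: 64 / 4 = 16 channels per head
Q, K, V = (rng.standard_normal((n, d)) for _ in range(3))

out = multi_head_linear_attention(Q, K, V, heads)
print(out.shape, d // heads)
```

Each head works in its own low-dimensional subspace, so the per-head coefficient matrices stay small and the heads can be processed in parallel.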

[Figure: qualitative comparison on a DIV2K image and an Urban100 image. Columns: Bicubic | LIIF [5] | LTE [18] | SRNO (ours) | GT]

[Figure: qualitative comparison on two Urban100 images. Columns: Bicubic | LIIF [5] | LTE [18] | SRNO (ours) | GT]

PSNR (dB). Scales ×2 to ×4 are in-distribution; scales ×6 to ×30 are out-of-distribution.

| Method | ×2 | ×3 | ×4 | ×6 | ×12 | ×18 | ×24 | ×30 |
|---|---|---|---|---|---|---|---|---|
| Bicubic | 31.01 | 28.22 | 26.66 | 24.82 | 22.27 | 21.00 | 20.19 | 19.59 |
| EDSR-baseline [21] | 34.55 | 30.90 | 28.94 | - | - | - | - | - |
| EDSR-baseline-MetaSR [5, 11] | 34.64 | 30.93 | 28.92 | 26.61 | 23.55 | 22.03 | 21.06 | 20.37 |
| EDSR-baseline-LIIF [5] | 34.67 | 30.96 | 29.00 | 26.75 | 23.71 | 22.17 | 21.18 | 20.48 |
| EDSR-baseline-LTE [18] | 34.72 | 31.02 | 29.04 | 26.81 | 23.78 | 22.23 | 21.24 | 20.53 |
| EDSR-baseline-SRNO (ours) | 34.85 | 31.11 | 29.16 | 26.90 | 23.84 | 22.29 | 21.27 | 20.56 |
| RDN-MetaSR [5, 11] | 35.00 | 31.27 | 29.25 | 26.88 | 23.73 | 22.18 | 21.17 | 20.47 |
| RDN-LIIF [5] | 34.99 | 31.26 | 29.27 | 26.99 | 23.89 | 22.34 | 21.31 | 20.59 |
| RDN-LTE [18] | 35.04 | 31.32 | 29.33 | 27.04 | 23.95 | 22.40 | 21.36 | 20.64 |
| RDN-SRNO (ours) | 35.16 | 31.42 | 29.42 | 27.12 | 24.03 | 22.46 | 21.41 | 20.68 |

PSNR (dB). Scales ×2 to ×4 are in-distribution; scales ×6 and ×8 are out-of-distribution.

| Dataset | Method | ×2 | ×3 | ×4 | ×6 | ×8 |
|---|---|---|---|---|---|---|
| Set5 | RDN [41] | 38.24 | 34.71 | 32.47 | - | - |
| Set5 | RDN-MetaSR [11, 5] | 38.22 | 34.63 | 32.38 | 29.04 | 26.96 |
| Set5 | RDN-LIIF [5] | 38.17 | 34.68 | 32.50 | 29.15 | 27.14 |
| Set5 | RDN-LTE [18] | 38.23 | 34.72 | 32.61 | 29.32 | 27.26 |
| Set5 | RDN-SRNO (ours) | 38.32 | 34.84 | 32.69 | 29.38 | 27.28 |
| Set14 | RDN [41] | 34.01 | 30.57 | 28.81 | - | - |
| Set14 | RDN-MetaSR [11, 5] | 33.98 | 30.54 | 28.78 | 26.51 | 24.97 |
| Set14 | RDN-LIIF [5] | 33.97 | 30.53 | 28.80 | 26.64 | 25.15 |
| Set14 | RDN-LTE [18] | 34.09 | 30.58 | 28.88 | 26.71 | 25.16 |
| Set14 | RDN-SRNO (ours) | 34.27 | 30.71 | 28.97 | 26.76 | 25.26 |
| B100 | RDN [41] | 32.34 | 29.26 | 27.72 | - | - |
| B100 | RDN-MetaSR [11, 5] | 32.33 | 29.26 | 27.71 | 25.90 | 24.83 |
| B100 | RDN-LIIF [5] | 32.32 | 29.26 | 27.74 | 25.98 | 24.91 |
| B100 | RDN-LTE [18] | 32.36 | 29.30 | 27.77 | 26.01 | 24.95 |
| B100 | RDN-SRNO (ours) | 32.43 | 29.37 | 27.83 | 26.04 | 24.99 |
| Urban100 | RDN [41] | 32.89 | 28.80 | 26.61 | - | - |
| Urban100 | RDN-MetaSR [11, 5] | 32.92 | 28.82 | 26.55 | 23.99 | 22.59 |
| Urban100 | RDN-LIIF [5] | 32.87 | 28.82 | 26.68 | 24.20 | 22.79 |
| Urban100 | RDN-LTE [18] | 33.04 | 28.97 | 26.81 | 24.28 | 22.88 |
| Urban100 | RDN-SRNO (ours) | 33.33 | 29.14 | 26.98 | 24.43 | 23.02 |

4 Experiments

4.1 Training

Dataset. We used the DIV2K dataset [1] for network training, while the DIV2K validation set [1] and four benchmark datasets, including Set5 [2], Set14 [39], B100 [25] and Urban100 [12], for evaluation. Peak signal-to-noise ratio (PSNR) is used as the evaluation metric. Similar to [11, 5], we cropped image boundaries when computing PSNRs.
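For reference, PSNR with boundary cropping can be computed as in the sketch below. The formula is the standard one, 10 log10(MAX^2 / MSE); the width of the cropped border and the intensity range are left as parameters because the text does not state them here, and the function name `psnr` and the toy images are assumptions for illustration.

```python
import math

def psnr(pred, target, max_value=1.0, border=0):
    """PSNR in dB between two equally sized 2D images given as nested lists.

    `border` pixels are ignored on every side before the error is computed.
    """
    height, width = len(target), len(target[0])
    sq_err, count = 0.0, 0
    for y in range(border, height - border):
        for x in range(border, width - border):
            sq_err += (pred[y][x] - target[y][x]) ** 2
            count += 1
    if count == 0:
        raise ValueError("border is too large for the image size")
    mse = sq_err / count
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)

target = [[0.0] * 4 for _ in range(4)]
pred = [[0.1] * 4 for _ in range(4)]
print(round(psnr(pred, target), 2))            # uniform error of 0.1 gives about 20 dB
print(round(psnr(pred, target, border=1), 2))  # same value on the cropped 2x2 interior
```

A gain of 0.1 dB corresponds to a reduction of the mean squared error by roughly 2.3 percent, which helps put the differences between methods in the tables into perspective.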

Implementation Details. Numerically, we have to explore the underlying continuous image function through access to its point-wise evaluations on the HR and LR grids. Let be the batch size and be the LR sampling counts. We first sample random scales following a uniform distribution , and then crop patches of sizes from the HR training images (one per each). We find is sufficient for SRNO to represent most natural image patches and fix this choice in the sequel. The LR counterparts are downsampled using bicubic interpolation with the corresponding . In order to keep a consistent number of supervision points for the LR patches of different scales in a single batch, we randomly sample HR pixels and calculate the corresponding fractional coordinates on the coarse grid associated with , as is done in [5]. We use an L1 loss [21] and the Adam [16] optimizer with an initial learning rate and the maximum . All models are trained for 1000 epochs with batch size 64, and the learning rate decays by the cosine annealing after a warm-up phase of 50 epochs.
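The learning-rate schedule can be sketched as a function of the epoch. The warm-up length (50 epochs) and the total length (1000 epochs) come from the text; the linear shape of the warm-up, the final value `lr_min`, and the placeholder values of `lr_init` and `lr_max` are assumptions made for the example and are not the paper's settings.

```python
import math

def learning_rate(epoch, lr_init=1e-5, lr_max=1e-4, lr_min=0.0,
                  warmup_epochs=50, total_epochs=1000):
    """Warm-up from lr_init to lr_max, then cosine annealing down to lr_min.

    lr_init, lr_max and lr_min are placeholder values, and the linear
    warm-up shape is an assumption.
    """
    if epoch < warmup_epochs:
        return lr_init + (lr_max - lr_init) * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))

for epoch in (0, 25, 50, 525, 1000):
    print(epoch, learning_rate(epoch))
```

The schedule peaks at the end of the warm-up phase and reaches half of the peak value midway through the annealing phase.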

4.2 Evaluation

Quantitative result. On the DIV2K validation set, Table 1 presents a quantitative comparison among our SRNO and three existing arbitrary-scale SR methods, MetaSR [11, 5], LIIF [5], and LTE [18]. Results when EDSR-baseline [21] and RDN [41] are used as encoders are displayed in the top and bottom rows, respectively. One can observe that SRNO provides the best results over all scale factors, irrespective of the encoder employed. Additionally, on in-distribution scales (×2, ×3, ×4), our method outperforms the strongest prior method, LTE, by 0.09 to 0.13 dB.

As RDN facilitates better reconstruction accuracy than EDSR-baseline in Tab.1, we choose RDN as the encoder in the comparisons over the four benchmark datasets. The results are listed in Tab.2, where SRNO obtains all the best performances. Note that only SRNO produces substantial improvements over RDN on every in-distribution scale, although the latter trains separate models for each scale. As the experimental settings in Tab.1 and 2 only differ in the decoder part, we conclude that the Galerkin-type attention mechanism employed in SRNO does contribute to better function approximation capability.

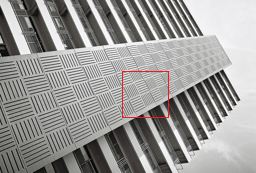

Qualitative result. Figure 3 illustrates the visual results obtained from two images in the Urban100 and DIV2K validation datasets. Both the regions marked with a rectangle are rich in high-frequency structures. The bicubic interpolations exhibit severe aliasing artifacts, which obviously have been transferred into the latent codes of LIIF [5] as it directly relies on the interpolation of the feature maps and the bilinear ensemble. Thanks to the local Fourier frequency estimation step, LTE [18] performs somewhat better in partial regions (e.g. the top-right corner of the building in the second row), but aliasing still dominates on the whole. In contrast, SRNO successfully recovers the fine structures, which again verifies the importance of the global integral kernels in capturing the correct overall structures.

Figure 4 shows two examples of scene text images. In the first row, only SRNO can clearly recover the word “ANTIQUES”. In the second row, only SRNO can consistently restore the two e’s. The Galerkin-type attention evidently contributes to this global consistency.

4.3 Ablation Study

Data sampling. The quantity of sampling points is crucial in our super-resolution operator learning architecture. Figure 5 shows that almost all scales perform better when using additional pixel samples. However, the benefit diminishes as the number of samples increases. or points would be good trade-offs between training time and performance. Figure 6 shows that more sampling points can more accurately represent an RGB-based function, which facilitates learning the map between two approximation spaces. Using the DIV2K (800 images) and Flickr2K (2650 images) datasets, we also train an EDSR-baseline-MLP with position embedding, denoted by MLPφ. We can see that our SRNO () obtains better performance for scales , trained with only images in DIV2K. The curve for MLP() in Fig.5 reflects the performance advantage of SRNO(), both trained with the DIV2K dataset. Random and sequential sampling results are presented in the supplementary.
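The random sampling of supervision points used during training can be sketched as follows. This is an illustration under assumptions rather than the released training code: the scale range, the patch size, the number of points, the pixel-center alignment between the HR and LR grids, and the name `sample_training_points` are all hypothetical. The point is that every patch contributes the same number of HR pixels regardless of its scale, so patches of different scales can share one batch.

```python
import random

def sample_training_points(lr_size, scale, num_points, rng):
    """Pick HR pixels at random and express them in LR grid coordinates."""
    hr_size = round(lr_size * scale)
    effective_scale = hr_size / lr_size        # scale actually realized by the patch
    picks = rng.sample(range(hr_size * hr_size), num_points)
    points = []
    for idx in picks:
        row, col = divmod(idx, hr_size)
        # Centre of the HR pixel mapped to continuous LR pixel coordinates.
        lr_x = (col + 0.5) / effective_scale - 0.5
        lr_y = (row + 0.5) / effective_scale - 0.5
        points.append((row, col, lr_x, lr_y))
    return points

rng = random.Random(0)
batch = []
for _ in range(4):
    scale = rng.uniform(1.0, 4.0)              # hypothetical scale range
    batch.append(sample_training_points(lr_size=16, scale=scale,
                                        num_points=64, rng=rng))

print([len(points) for points in batch])
```

Raising `num_points` gives a denser description of each target function at the cost of more computation per training step, which is the trade-off examined in this ablation.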

| Method | #Params. (M) | #FLOPs (G) |
|---|---|---|
| EDSR-baseline-LIIF | 1.6 | 85.0 |
| EDSR-baseline-LTE | 1.7 | 75.3 |
| EDSR-baseline-SRNO (ours) | 2.0 | 65.8 |
| RDN-LIIF | 22.4 | 765.2 |
| RDN-LTE | 22.5 | 755.5 |
| RDN-SRNO (ours) | 22.8 | 746.0 |

Scales ×2 to ×4 are in-distribution; scales ×6 and ×8 are out-of-distribution.

| Method | ×2 | ×3 | ×4 | ×6 | ×8 | Time (ms) |
|---|---|---|---|---|---|---|
| SRNO | 33.83 | 30.50 | 28.79 | 26.55 | 25.05 | 149 |
| SRNO (-i) | 33.81 | 30.47 | 28.76 | 26.55 | 25.00 | 156 |
| SRNO (-h) | 33.87 | 30.47 | 28.76 | 26.56 | 25.02 | 153 |
| SRNO (-w) | 33.80 | 30.45 | 28.75 | 26.53 | 25.00 | 124 |
| SRNO (-l) | 33.82 | 30.45 | 28.75 | 26.55 | 25.00 | 117 |
| LIIF [5] | 33.68 | 30.36 | 28.64 | 26.46 | 24.94 | 183 |
| LTE [18] | 33.72 | 30.37 | 28.65 | 26.50 | 24.99 | 205 |
| LTE+ [18] | 33.71 | 30.41 | 28.67 | 26.49 | 24.98 | 160 |

[Figure: panels Bicubic | SRNO () | SRNO () | SRNO () | SRNO () | GT]

[Figure: panels LTE | SRNO (-i) | SRNO | GT]

Other design choices. In Tab.4, we retrain the following models using EDSR-baseline [21]. All the tests were conducted using a single NVIDIA RTX 3090. In comparing our interpolation-free method to SRNO(-i) using nearest-neighbor interpolation, we find that it works better and faster. It should be noted that we only generate the image once, as opposed to employing an MLP four times as in LIIF [5] and LTE [18]. Figure 7 shows that SRNO, compared to SRNO(-i), alleviates the blocky artifact, and compared to the local ensemble [18], overcomes the over-smoothing problem. By comparing SRNO with SRNO(-h), it can be shown that cutting the number of heads from to causes an increase in time costs because GPU’s parallelism is reduced. We see that reducing the number of basis functions and the iterative updating layers results in a considerable performance drop by comparing SRNO with SRNO(-w) and SRNO(-l). The number of basis functions () and the number of subspaces () play an important role in the approximation power of our model.

4.4 Dynamic Basis

We investigate the dynamic basis updating phenomenon across the approximation subspaces in this section. We show two exemplar basis functions in the two consecutive Galerkin attention layers in Fig.8. The basis function in the second layer appears to be more structured than in the previous layer. The dynamic update of the approximation subspaces spanned by the column vectors of comes from two factors: the concatenated random coordinates and the nonlinearity introduced by the FFN. Without them, the Galerkin-type attention would just be a linear combination of the bases in the current approximation subspaces, and SRNO would have no opportunity to enrich the basis for each individual image through optimization. The increasing ranks of the latent representation matrix z, shown in Fig.9, provide evidence that the Galerkin-type attention is becoming more complex than a straightforward linear combination of the existing bases. Due to the space limitation, we have provided a principled discussion on the dynamic basis update processes in the supplementary material.
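The linear-algebra point behind this argument can be checked on a toy example. The sketch below is not the experiment of Fig.9; the sizes, the random matrices, and the use of ReLU as the point-wise nonlinearity are assumptions. It shows that a purely linear recombination cannot raise the rank of a latent matrix, whereas a point-wise nonlinearity can produce columns outside the original span.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, r = 64, 8, 2
# Latent matrix whose d columns span only an r-dimensional subspace.
Z = rng.standard_normal((n, r)) @ rng.standard_normal((r, d))
W = rng.standard_normal((d, d))

linear_only = Z @ W                           # recombines the existing basis vectors
with_nonlinearity = np.maximum(Z @ W, 0.0)    # point-wise ReLU applied afterwards

rank_before = np.linalg.matrix_rank(Z)
rank_linear = np.linalg.matrix_rank(linear_only)
rank_nonlinear = np.linalg.matrix_rank(with_nonlinearity)
print(rank_before, rank_linear, rank_nonlinear)
```

In this toy setting the rank stays at most `r` under the linear map and grows once the nonlinearity is applied, which mirrors the role the text attributes to the FFN in enriching the basis.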

5 Conclusion

In this paper, we proposed the Super-Resolution Neural Operator (SRNO) for continuous super-resolution. In SRNO, each image is seen as a function, and our method learns a map between finite-dimensional function spaces, which allows SRNO to be trained on and to generalize across different levels of discretization. First, in the Lifting, we use CNN-based encoders to capture feature maps from LR images and design a simple but efficient interpolation-free method, addressing the discretization inconsistency problem encountered in SR. Second, to approximate our target function in the Iterative Kernel Integration, we employ a linear attention operator that has been proved to be comparable to the Petrov-Galerkin projection. Finally, we map the last hidden representation to the output function. Experimental results show that our SRNO outperforms other arbitrary-scale SR methods in performance as well as computation time, and particularly in the capability of capturing the global image structures.

References

- [1] Eirikur Agustsson and Radu Timofte. Ntire 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pages 126–135, 2017.

- [2] Marco Bevilacqua, Aline Roumy, Christine Guillemot, and Marie line Alberi Morel. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the British Machine Vision Conference, pages 135.1–135.10. BMVA Press, 2012.

- [3] Shuhao Cao. Choose a transformer: Fourier or galerkin. Advances in Neural Information Processing Systems, 34:24924–24940, 2021.

- [4] Hanting Chen, Yunhe Wang, Tianyu Guo, Chang Xu, Yiping Deng, Zhenhua Liu, Siwei Ma, Chunjing Xu, Chao Xu, and Wen Gao. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12299–12310, 2021.

- [5] Yinbo Chen, Sifei Liu, and Xiaolong Wang. Learning continuous image representation with local implicit image function. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8628–8638, 2021.

- [6] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. In International Conference on Learning Representations, 2020.

- [7] Alexandre Ern and Jean-Luc Guermond. Theory and practice of finite elements, volume 159. Springer, 2004.

- [8] Jinjin Gu and Chao Dong. Interpreting super-resolution networks with local attribution maps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9199–9208, 2021.

- [9] John Guibas, Morteza Mardani, Zongyi Li, Andrew Tao, Anima Anandkumar, and Bryan Catanzaro. Efficient token mixing for transformers via adaptive fourier neural operators. In International Conference on Learning Representations, 2021.

- [10] Gaurav Gupta, Xiongye Xiao, and Paul Bogdan. Multiwavelet-based operator learning for differential equations. Advances in Neural Information Processing Systems, 34:24048–24062, 2021.

- [11] Xuecai Hu, Haoyuan Mu, Xiangyu Zhang, Zilei Wang, Tieniu Tan, and Jian Sun. Meta-sr: A magnification-arbitrary network for super-resolution. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 1575–1584, 2019.

- [12] Jia-Bin Huang, Abhishek Singh, and Narendra Ahuja. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5197–5206, 2015.

- [13] Rakhoon Hwang, Jae Yong Lee, Jin Young Shin, and Hyung Ju Hwang. Solving pde-constrained control problems using operator learning. Proceedings of the AAAI Conference on Artificial Intelligence, 36(4):4504–4512, 2022.

- [14] Md Amirul Islam, Sen Jia, and Neil DB Bruce. How much position information do convolutional neural networks encode? In International Conference on Learning Representations, 2019.

- [15] Chiyu "Max" Jiang, Soheil Esmaeilzadeh, Kamyar Azizzadenesheli, Karthik Kashinath, Mustafa Mustafa, Hamdi A Tchelepi, Philip Marcus, and Anima Anandkumar. Meshfreeflownet: a physics-constrained deep continuous space-time super-resolution framework. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, pages 1–15, 2020.

- [16] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In ICLR (Poster), 2015.

- [17] Nikola Kovachki, Zongyi Li, Burigede Liu, Kamyar Azizzadenesheli, Kaushik Bhattacharya, Andrew Stuart, and Anima Anandkumar. Neural operator: Learning maps between function spaces. arXiv preprint arXiv:2108.08481, 2021.

- [18] Jaewon Lee and Kyong Hwan Jin. Local texture estimator for implicit representation function. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1929–1938, 2022.

- [19] Zongyi Li, Nikola Borislavov Kovachki, Kamyar Azizzadenesheli, Kaushik Bhattacharya, Andrew Stuart, Anima Anandkumar, et al. Fourier neural operator for parametric partial differential equations. In International Conference on Learning Representations, 2020.

- [20] Jingyun Liang, Jiezhang Cao, Guolei Sun, Kai Zhang, Luc Van Gool, and Radu Timofte. Swinir: Image restoration using swin transformer. In 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), pages 1833–1844, 2021.

- [21] Bee Lim, Sanghyun Son, Heewon Kim, Seungjun Nah, and Kyoung Mu Lee. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pages 136–144, 2017.

- [22] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10012–10022, 2021.

- [23] Lu Lu, Pengzhan Jin, Guofei Pang, Zhongqiang Zhang, and George Em Karniadakis. Learning nonlinear operators via deeponet based on the universal approximation theorem of operators. Nature Machine Intelligence, 3(3):218–229, 2021.

- [24] Salma Abdel Magid, Yulun Zhang, Donglai Wei, Won-Dong Jang, Zudi Lin, Yun Fu, and Hanspeter Pfister. Dynamic high-pass filtering and multi-spectral attention for image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4288–4297, 2021.

- [25] David Martin, Charless Fowlkes, Doron Tal, and Jitendra Malik. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings Eighth IEEE International Conference on Computer Vision. ICCV 2001, volume 2, pages 416–423. IEEE, 2001.

- [26] Jeong Joon Park, Peter Florence, Julian Straub, Richard Newcombe, and Steven Lovegrove. Deepsdf: Learning continuous signed distance functions for shape representation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 165–174, 2019.

- [27] Jaideep Pathak, Shashank Subramanian, Peter Harrington, Sanjeev Raja, Ashesh Chattopadhyay, Morteza Mardani, Thorsten Kurth, David Hall, Zongyi Li, Kamyar Azizzadenesheli, et al. Fourcastnet: A global data-driven high-resolution weather model using adaptive fourier neural operators. arXiv preprint arXiv:2202.11214, 2022.

- [28] Walter Rudin. Functional analysis. McGraw-Hill, Inc., New York, 1991.

- [29] Wenzhe Shi, Jose Caballero, Ferenc Huszár, Johannes Totz, Andrew P Aitken, Rob Bishop, Daniel Rueckert, and Zehan Wang. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1874–1883, 2016.

- [30] Vincent Sitzmann, Julien Martel, Alexander Bergman, David Lindell, and Gordon Wetzstein. Implicit neural representations with periodic activation functions. Advances in Neural Information Processing Systems, 33:7462–7473, 2020.

- [31] Sanghyun Son and Kyoung Mu Lee. Srwarp: Generalized image super-resolution under arbitrary transformation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 7782–7791, 2021.

- [32] Matthew Tancik, Pratul Srinivasan, Ben Mildenhall, Sara Fridovich-Keil, Nithin Raghavan, Utkarsh Singhal, Ravi Ramamoorthi, Jonathan Barron, and Ren Ng. Fourier features let networks learn high frequency functions in low dimensional domains. Advances in Neural Information Processing Systems, 33:7537–7547, 2020.

- [33] Radu Timofte, Eirikur Agustsson, Luc Van Gool, Ming-Hsuan Yang, and Lei Zhang. Ntire 2017 challenge on single image super-resolution: Methods and results. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pages 114–125, 2017.

- [34] Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning, pages 10347–10357. PMLR, 2021.

- [35] V Vapnik. Statistical learning theory. Wiley-Interscience, New York, 1998.

- [36] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- [37] Longguang Wang, Yingqian Wang, Zaiping Lin, Jungang Yang, Wei An, and Yulan Guo. Learning a single network for scale-arbitrary super-resolution. In Proceedings of the IEEE/CVF international conference on computer vision, pages 4801–4810, 2021.

- [38] Jingyu Yang, Sheng Shen, Huanjing Yue, and Kun Li. Implicit transformer network for screen content image continuous super-resolution. Advances in Neural Information Processing Systems, 34:13304–13315, 2021.

- [39] Roman Zeyde, Michael Elad, and Matan Protter. On single image scale-up using sparse-representations. In International conference on curves and surfaces, pages 711–730. Springer, 2010.

- [40] Yulun Zhang, Kunpeng Li, Kai Li, Lichen Wang, Bineng Zhong, and Yun Fu. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European conference on computer vision (ECCV), pages 286–301, 2018.

- [41] Yulun Zhang, Yapeng Tian, Yu Kong, Bineng Zhong, and Yun Fu. Residual dense network for image super-resolution. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2472–2481, 2018.

Supplemental Material:

Super-Resolution Neural Operator

This document provides additional details and results.

Appendix A Dynamic basis update

In this section, we provide a principled discussion of the quasi-optimal property of the Galerkin-type attention mechanism, which shows that, in each attention layer, SRNO can achieve the approximation capability that the Petrov-Galerkin projection offers. Although some theorems have been proved in [1], we provide a systematic and complete discussion of the dynamic basis update processes in the query, test and value approximation spaces. Background on the Galerkin projection can be found in [4].

Let be a learnable map that is the composition of the Galerkin-type attention operator and the FFN used in SRNO, where is the current value space spanned by the column vectors of . Similarly, we can define the approximation spaces and . Suppose is the best approximation of image function in current value space , i.e., , and then the approximation error between and can be described as:

| (1) |

Let be a continuous bilinear form. Here we define, for , . Under the settings of Galerkin-type attention [1], for any given , we have

| (2) |

i.e., is coercive on the current key space with constant . (1) can be reformulated as

| (3) |

Our purpose is to minimize (3) by optimizing the trainable parameters ,

| (4) |

(3) and (4) show the approximation capacity of a Galerkin-type attention used in SRNO as the kernel integral operator. In SRNO, we are actually optimizing the basis functions of the current value space to approximate the best . By the Riesz representation theorem [3], there exists a value-to-key linear map such that . In order to reveal the interactions among the bases of the three approximation spaces, we introduce the second bilinear form to substitute the inner product . In practice, the FFN , as a universal approximator in , helps the bilinear form to approximate the inner product . We thus define the following problem to approximate the right hand side of (4) ():

| (5) |

which involves solving the following operator equation system (finding and ):

| (6) |

which is further equivalent to solving the following linear system:

| (7) |

where , is formed by sets of basis , , respectively. , , and . is the vector representation for . Similar to for . It is straightforward to verify that , , . And then we can get:

| (8) |

if , which is verified by our experiments. We multiply a permutation matrix to Q, such that ’s first columns form the value vector as the bases of . Then we multiply the permuted basis matrix with , yielding

| (9) |

where . The layer normalization scheme for is used to mimic the matrix .

The dynamic basis update rule, minimizer to (5), can be defined as:

| (10) |

where are the column vectors of layer normalized matrices .

The point-wise FFN introduces nonlinearities on the one hand, and the positions concatenated in z enhance the bases on the other. In this way, the basis functions not only approximate the functions in the current value space, but are also constantly enriched. Note that, in practice, we swap the matrix to make this process closer to self-attention in [7]. In summary, our iterative process consists of two steps: 1) the linear attention minimizes (5) in the current value space; 2) the point-wise FFN and position information for the latent representation enrich the basis functions. The dynamic basis updating phenomenon is demonstrated in Fig.1. We observe that the basis function in the second layer appears to be more structured than in the previous layer, which supports the validity of our method.
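To make the linear-attention step of this iteration concrete, the following is a minimal, dependency-free sketch of one Galerkin-type attention layer in the form given in [1]: keys and values are layer-normalized and the output is Q(LN(K)ᵀ LN(V))/n, which never forms an n × n matrix. The function names, the toy shapes, and the omission of the learnable projections, the multi-head split, the position concatenation and the FFN are assumptions made for illustration; this is not the SRNO implementation.

```python
import math

def layer_norm(rows, eps=1e-5):
    """Normalize each row (one point's feature vector) to zero mean and unit variance."""
    out = []
    for r in rows:
        mean = sum(r) / len(r)
        var = sum((x - mean) ** 2 for x in r) / len(r)
        out.append([(x - mean) / math.sqrt(var + eps) for x in r])
    return out

def matmul(a, b):
    """Plain (n x k) @ (k x m) matrix product on nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def galerkin_attention(q, k, v):
    """z = Q (LN(K)^T LN(V)) / n, with q, k, v of shape (n points, d channels)."""
    n = len(q)
    k_t = transpose(layer_norm(k))           # (d, n)
    kv = matmul(k_t, layer_norm(v))          # (d, d): cost O(n d^2)
    return [[x / n for x in row] for row in matmul(q, kv)]  # (n, d)

# Hypothetical toy input: n = 4 points, d = 2 channels.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
k = [[0.2, 0.1], [0.4, -0.3], [-0.1, 0.5], [0.3, 0.3]]
v = [[1.0, 2.0], [0.0, 1.0], [2.0, 0.0], [1.0, 1.0]]
z = galerkin_attention(q, k, v)
```

Because no softmax is applied, the output is linear in the queries: each output row is the corresponding query row expanded in the d basis vectors given by the rows of LN(K)ᵀ LN(V)/n, which is the sense in which the layer acts as a projection onto the current value space.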

Figure: panels labeled "random coordinates" and "sequential coordinates".

Figure: panels labeled "SRNO (-s)", "SRNO", and "GT".

Appendix B Network Architecture

The network architecture is shown in Fig.5. The input LR image undergoes three phases to output the HR image with the specified resolution: (a) lifting the LR pixel values on the set of coordinates to a higher dimensional feature space by a CNN-based encoder , constructing the latent representation , and linearly transforming into the first layer’s input ; (b) applying kernel integrals composed of layers of Galerkin-type attention; and (c) finally projecting to the RGB space. As to the feature encoder , we employ EDSR-baseline [6], or RDN [8], both of which drop their upsampling layers, and their output channel dimensions . We employ the multi-head attention scheme in [7] by dividing the queries, keys and values into parts with each of dimension . In our implementation, , yielding 16-dimensional output values. We only use two iterations in the kernel integral operator, which already outperforms previous works, while keeping the running time advantage. Note that we utilize convolutions to replace all the linear layers in SRNO, since they have a GPU-friendly data structure.
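The head splitting described above can be sketched as follows. The channel width of 256 and the head count of 16 are assumed values, chosen only so that each head is 16-dimensional as stated in the text; `split_heads` is a hypothetical helper, not a function from the SRNO code.

```python
def split_heads(features, num_heads):
    """Split each point's feature vector into `num_heads` equal contiguous parts.

    features: list of n vectors, each of length d (d divisible by num_heads).
    Returns a list of num_heads matrices, each of shape (n, d // num_heads).
    """
    d = len(features[0])
    if d % num_heads != 0:
        raise ValueError("channel width must be divisible by the number of heads")
    head_dim = d // num_heads
    return [
        [vec[h * head_dim:(h + 1) * head_dim] for vec in features]
        for h in range(num_heads)
    ]

# Assumed sizes for illustration: 256 channels, 16 heads -> 16 dimensions per head.
n_points, width, num_heads = 3, 256, 16
values = [[float(c + p * width) for c in range(width)] for p in range(n_points)]
heads = split_heads(values, num_heads)
```

Each head can then be passed through its own attention computation, and the per-head outputs concatenated back to the full channel width.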

Appendix C Random vs. Sequential sampling

For a single batch, we crop patches of sizes from the HR training images (one per image). The LR counterparts are downsampled using bicubic interpolation with the corresponding . To keep the dimensions of the LR patches consistent while sharing a common supervisory HR signal in a single batch, we sample HR pixels and calculate the corresponding fractional coordinates on the coarse grid associated with . Figure 2 shows two different ways to sample function values. Experiments in Tab.1, Tab.2 and Fig.4 verify that the random sampling method achieves better performance than sequential sampling. These results show that random sampling captures a more comprehensive representation of an image function, which is attributed to the fact that random coordinates, as demonstrated in Fig.3, contain some extra and useful high-frequency information for SR reconstruction.
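The two sampling strategies can be sketched as below. The patch size, the scale factor, the number of sampled pixels, the pixel-center coordinate convention, and the reading of "sequential" as a contiguous row-major run of pixels are all assumptions made for illustration, and the function names are hypothetical.

```python
import random

def hr_to_lr_coord(i, scale):
    """Fractional LR-grid coordinate of HR pixel index i (pixel centers at index + 0.5)."""
    return (i + 0.5) / scale - 0.5

def sample_random(hr_size, num_samples, rng):
    """Pick HR pixels uniformly at random without replacement."""
    flat = rng.sample(range(hr_size * hr_size), num_samples)
    return [divmod(idx, hr_size) for idx in flat]  # (row, col) pairs

def sample_sequential(hr_size, num_samples, start=0):
    """Pick a contiguous run of HR pixels in row-major order."""
    return [divmod(idx, hr_size) for idx in range(start, start + num_samples)]

# Assumed toy setting: a 12x12 HR patch, scale factor 3, 16 sampled pixels.
hr_size, scale, num_samples = 12, 3.0, 16
rng = random.Random(0)
random_pixels = sample_random(hr_size, num_samples, rng)
sequential_pixels = sample_sequential(hr_size, num_samples)

# Fractional coordinates of the sampled HR pixels on the coarse (LR) grid.
random_coords = [
    (hr_to_lr_coord(r, scale), hr_to_lr_coord(c, scale)) for r, c in random_pixels
]
```

Under these assumptions, the random samples are spread over the whole patch while the sequential samples cover only its first rows, which is one way to see why the random variant exposes the network to a wider range of fractional offsets within a batch.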

Appendix D Additional Results

We further compare our SRNO with LIIF and LTE on several images in Fig.6. It can be observed from the zoom-in regions that our SRNO consistently produces clearer and finer details than the others.

| Method | In-distribution | | | Out-of-distribution | | | | |
|---|---|---|---|---|---|---|---|---|
| | ×2 | ×3 | ×4 | ×6 | ×12 | ×18 | ×24 | ×30 |
| Bicubic | 31.01 | 28.22 | 26.66 | 24.82 | 22.27 | 21.00 | 20.19 | 19.59 |
| EDSR-baseline-LTE [5] | 34.72 | 31.02 | 29.04 | 26.81 | 23.78 | 22.23 | 21.24 | 20.53 |
| EDSR-baseline-SRNO | 34.85 | 31.11 | 29.16 | 26.90 | 23.84 | 22.29 | 21.27 | 20.56 |
| EDSR-baseline-SRNO (-s) | 34.79 | 31.07 | 29.09 | 26.84 | 23.80 | 22.26 | 21.24 | 20.54 |

| Dataset | Method | In-distribution | | | Out-of-distribution | |
|---|---|---|---|---|---|---|
| | | ×2 | ×3 | ×4 | ×6 | ×8 |
| Set5 | EDSR-baseline-LTE [5] | 38.03 | 34.48 | 32.27 | 28.96 | 27.04 |
| | EDSR-baseline-SRNO | 38.15 | 34.53 | 32.39 | 29.06 | 27.06 |
| | EDSR-baseline-SRNO (-s) | 38.12 | 34.50 | 32.37 | 28.96 | 27.04 |
| Set14 | EDSR-baseline-LTE [5] | 33.71 | 30.41 | 28.67 | 26.49 | 24.98 |
| | EDSR-baseline-SRNO | 33.83 | 30.50 | 28.79 | 26.55 | 25.05 |
| | EDSR-baseline-SRNO (-s) | 33.79 | 30.42 | 28.71 | 26.52 | 25.00 |
| B100 | EDSR-baseline-LTE [5] | 32.22 | 29.15 | 27.63 | 25.87 | 24.83 |
| | EDSR-baseline-SRNO | 32.28 | 29.20 | 27.68 | 25.91 | 24.88 |
| | EDSR-baseline-SRNO (-s) | 32.25 | 29.18 | 27.65 | 25.90 | 24.85 |
| Urban100 | EDSR-baseline-LTE [5] | 32.29 | 28.32 | 26.25 | 23.84 | 22.52 |
| | EDSR-baseline-SRNO | 32.60 | 28.56 | 26.50 | 24.08 | 22.70 |
| | EDSR-baseline-SRNO (-s) | 32.50 | 28.51 | 26.39 | 23.95 | 22.61 |

Figure 6: Visual comparison on four Urban100 images and two Set14 images. Column labels: Bicubic, LIIF [2], LTE [5], SRNO (ours), GT.

References

- [1] Shuhao Cao. Choose a transformer: Fourier or galerkin. Advances in Neural Information Processing Systems, 34:24924–24940, 2021.

- [2] Yinbo Chen, Sifei Liu, and Xiaolong Wang. Learning continuous image representation with local implicit image function. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8628–8638, 2021.

- [3] Philippe G Ciarlet. Linear and nonlinear functional analysis with applications, volume 130. Siam, 2013.

- [4] Alexandre Ern and Jean-Luc Guermond. Theory and practice of finite elements, volume 159. Springer, 2004.

- [5] Jaewon Lee and Kyong Hwan Jin. Local texture estimator for implicit representation function. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1929–1938, 2022.

- [6] Bee Lim, Sanghyun Son, Heewon Kim, Seungjun Nah, and Kyoung Mu Lee. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pages 136–144, 2017.

- [7] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- [8] Yulun Zhang, Yapeng Tian, Yu Kong, Bineng Zhong, and Yun Fu. Residual dense network for image super-resolution. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2472–2481, 2018.