SuPer Deep: A Surgical Perception Framework for Robotic Tissue Manipulation using Deep Learning for Feature Extraction

Abstract

Robotic automation in surgery requires precise tracking of surgical tools and mapping of deformable tissue. Previous works on surgical perception frameworks require significant effort in developing features for surgical tool and tissue tracking. In this work, we overcome the challenge by exploiting deep learning methods for surgical perception. We integrated deep neural networks, capable of efficient feature extraction, into the tissue tracking and surgical tool tracking processes. By leveraging transfer learning, the deep-learning-based approach requires minimal training data and reduced feature engineering efforts to fully perceive a surgical scene. The framework was tested on three publicly available datasets, which use the da Vinci® Surgical System, for comprehensive analysis. Experimental results show that our framework achieves state-of-the-art tracking performance in a surgical environment by utilizing deep learning for feature extraction.

I INTRODUCTION

In the field of health care and surgery, automation is on the horizon due to advancements in robotics. Improved patient outcomes are being achieved through increased precision in tissue manipulation and the development of minimally invasive robotics [1]. One avenue of research in automation using these platforms is through the advancements of control algorithms to move towards autonomy [2, 3]. These algorithms typically aim to automate specific surgical subtasks such as suturing [4], cutting [5], and multilateral debridement [6]. Another development is assistance to the teleoperating surgeon in real-time through virtual fixtures to avoid critical areas [7], augmented reality indicators [8], and motion scaling for finer control near tissue [9].

To utilize these automation endeavors in a real surgical scene, accurate perception of the environment and the agents is essential. There are two major challenges: tracking of the surgical tool to control and localize it in the camera frame, and tracking of the deformable environment for the surgical tool to plan and interact with. While these two problems have been solved outside of surgical robotics [10, 11], the domain-specific challenges are the narrow field of view endoscopes, poor lighting conditions, and the requirement of very high accuracy [12].

The surgical tool tracking community has largely focused on developing feature detection algorithms to update the pose of the surgical tool [12]. The algorithms need to be robust to the poor lighting conditions and the highly reflective tool surfaces. Examples of recent work include using the Canny-edge detector for silhouette extraction [13], online template matching [14], and classified features using classical image features such as the spatial derivatives [15], [16]. Deep neural networks have also achieved promising results in feature tracking for surgical tools [17], [18], but utilizing them for full 3D pose estimation still remains unexamined.

Simultaneously, efforts in tissue tracking have focused mainly on adaptions of 3D reconstruction techniques such as SurfelWarp for deformable tracking [11]. The lack of directly measurable depth information in endoscopes is a significant challenge in the adaptation. Hence, the common approach is to work with stereoscopic endoscopes and use stereo reconstruction techniques such as Efficient Large-Scale Stereo Matching (ELAS) to generate depth images [19]. From this depth estimation, deformable tracking techniques can be applied [20, 21]. Other tissue tracking techniques include tracking key-point features and registration [22] and dense SLAM methods, which use image features to localize the endoscope [23, 24].

A common theme across these two challenges is the need for high-quality image features. Surgical tool tracking mainly focuses on developing detectors for tool features, and recent works in tissue tracking have highlighted depth reconstruction from stereo matching as the most significant bottleneck [20, 25]. Deep learning has the advantage of learning features, which will eliminate the need for feature engineering. However, deep learning previously has not been a front runner in surgical perception due to the lack of large quantities of high-quality medical and surgical data [26].

In this paper, we use state-of-the-art deep neural networks (DNNs) that require minimal training data to explore its application in surgical perception. Our contributions can be summarized as follows:

-

1.

Using deep learning for high quality and robust surgical tool feature extraction,

-

2.

Investigative study of popular deep learning and traditional stereo matching algorithms to improve deformable tissue tracking,

-

3.

Complete integration of deep neural networks into the previously developed Surgical Perception (SuPer) framework [25] to fully perceive the entire surgical scene - SuPer Deep.

Experiments were ran on a tissue manipulation dataset we previously released [25], and two other publicly available datasets collected from the da Vinci® Surgical System, to evaluate the tool tracking and tissue tracking performances individually. SuPer Deep framework advances on SuPer [25], its predecessor, by eliminating the requirement for painted markers and the key-points association process in tool tracking. Moreover, our framework generates more realistic tissue reconstruction by improving the depth estimation. Finally, our work includes the first comparative study on deformable tissue tracking, whereas previous works have been hampered by a paucity of standard benchmarking datasets for surgical perception.

II METHODOLOGY

II-A The Surgical Perception Framework

Our surgical perception framework, as shown in Fig. 1, consists of a deformable tissue tracker and a surgical tool tracker to perceive the entire surgical scene, including the the deforming environment and robotic agent. Two deep neural networks are embedded into our framework for specific feature extractions: DNN(1) finds and matches features from stereo images to generate a depth map for tissue tracking, and DNN(2) extracts point features for surgical tool tracking.

To track the tissue deformation, we update tissue model by fusing the estimated depth maps using the previously developed model-free tissue tracker [25]. The pose of the surgical tool is estimated using a model-based tracker that utilizes a kinematic prior and fusing the encoder readings with the 2D detection from the images, which will be described in Section II-B. In order to separate the tools from the deformable tissue model, a mask of the surgical tool is generated by rendering the 3D CAD model into the endoscopic camera view and is removed from the depth map fed to the deformable tissue tracker. Finally, we combine the tissue point cloud and surgical tools into the camera frame to reconstruct the entire surgical scene.

II-B Surgical Tool Tracking

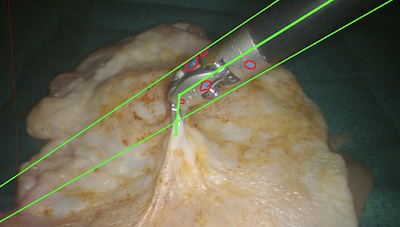

To localize the surgical tools on image frames, we use DeepLabCut [27], which employs DeeperCut [28] as the backbone for point feature detection. The DNN consists of variations of Deep Residual Neural Networks (ResNet) [29] for feature extraction and deconvolutional layers to up-sample the feature maps and produce spatial probability densities. The output estimation for each point feature is represented as a tuple , where is the image coordinate of the -th feature and is the corresponding confidence score. The DNN was fine-tuned with few training samples to adapt to surgical tool tracking by minimizing the cross-entropy loss. The samples were hand-labeled using the open-source DLC toolbox [30]. Fig. 2 shows examples of point features that were detected on surgical instruments.

To estimate the pose of the surgical tool in 3D space, the 2D detections are combined with the encoder readings from the surgical robot, and a particle filter is applied for estimation. Previous work has shown low-profile, cable driven surgical robots [31, 32] have inaccurate in joint angles [33] and challenges calibrating the transform between the camera and robotic base, also known as hand-eye [34]. To localize the surgical tool and overcome these uncertainties, we utilize our previously developed formulation and track the Lumped Error. [35]. Let the Lumped Error be defined as , which is parameterized by an axis-angle vector , and a translational vector . The Lumped Error compensates for both error in joint angles and hand-eye, for more details refer to our previous work [35]. To estimate and at time given time , a zero-mean Gaussian noise is assumed to model the uncertainty. Therefore, the motion model for the particle filter is defined as:

| (1) |

where is the covariance matrix.

Using this Lumped Error formulation, a detected feature point can be projected to the image plane by being first transformed by the kinematic chain and then the corrected hand-eye. More specifically, feature point on the -th link is projected onto the image plane by:

| (2) |

where is the -th joint transform generated by joint angle , is the initial hand-eye transform from the set-up joints or calibration, is the intrinsic camera matrix, and is the scaling factor that constraints the point on the image plane. Note that denotes the homogeneous representation of a point (e.g. ).

From here, an observation model can be defined by relating the predicted feature location with the detected features. Given a list of observations, , the observation model is defined to be:

| (3) |

where is a tuning parameter.

Since the deep neural network performs end-to-end 2D feature detection, the only parameters that need to be tuned for tool tracking are and . The direct association of the key-point features from the neural network also eliminates the need for explicit data association - a significant improvement for tool tracking over the previous method [25]. Furthermore, the feature detection from the deep neural network does not rely on the tracked states, which is a common technique in surgical tool tracking [14] and can lead to detrimental results when the tracking begins to fail.

II-C Depth Estimation for Deformable Tissue Tracking

Deformable tissue tracking relies heavily on the quality of depth estimation, as the deformable tracker uses the depth maps as the observation [20], [25]. To estimate the depth, a stereo matching algorithm is used to compute the disparity, and then inverted to obtain pixel-wise depth.

Traditional stereo matching algorithms, like [19] and [36], typically take a pair of rectified stereo images and as input, and estimate the disparity by matching image patches or features between and . Due to the complexity of the surgical setting, the image quality is not the sharpest, which makes finding pixel-level correspondence extremely challenging. Deep-learning-based algorithms, such as [37], [38], and [39], use a weight-sharing feature extractor to obtain feature maps and from and , respectively. Then a 4D matching cost volume is formed by concatenating the and , such that is the concatenation of and , where is the pixel location and is the disparity. The cost volume is regularized using 3D convolutional layers and reducing the dimension of the 4-th channel to 1. Finally, the resulting 3D tensor is used to estimate the disparity for each pixel as

| (4) |

where is the max disparity and denotes the softmax function. An investigation between these stereo matching algorithms will be presented in the Section III. After estimating the disparity , the depth value is obtained by the following transform

| (5) |

where is the horizontal offset (i.e., baseline) between the two cameras, and is the focal length, which can be obtained from camera calibration.

Various stereo-matching algorithms can be substituted in for DNN(1) of our framework shown in Fig. 1. To find the best one, we investigated several stereo matching algorithms by combining with the previously developed tissue tracker [25] for deformable tissue tracking. The state-of-the-art algorithm, Guided Aggregation Network (GA-Net) [39], was finally chosen for our framework. In comparison to previous works on deformable tissue tracking, which require a substantial amount of spatial and temporal filtering on the depth image [20], [25], our method employs a deep stereo matching network for accurate and dense depth estimation, which requires no post-processing on the depth image.

III Experiments

We evaluated the proposed framework on three open-source datasets for multiple tasks addressing the performance of the surgical tool tracking and deformable tissue tracking. We compared it with the state-of-the-art methods for analysis. The experiments were conducted on two identical computers, each containing an Intel® Core™ i9-7940X Processor and NVIDIA’s GeForce RTX 2080.

III-A Datasets and Evaluation Metrics

The Surgical Perception (SuPer) dataset***https://sites.google.com/ucsd.edu/super-framework/home mentioned in [25] is a recording of a repeated tissue manipulation experiment using the da Vinci Research Kit (dVRK) [32]. The dataset consists of a raw stereo endoscopic video stream and encoder readings from the surgical robot with ground-truth labels for the tool tracking tasks, which consists 50 hand-labeled surgical tool masks. The tool tracking performance was evaluated by calculating the Intersection-Over-Union (IoU, Jaccard Index) for the rendered tool masks, which are based on estimated tool poses.

The Hamlyn Centre Video Dataset [40] was used to evaluate the performance of deformable tissue tracking. It includes two video sequences of silicone heart phantom deforming with cardiac motion and consists of ex-vivo endoscopic stereo videos (resolution: 360288) with depth information generated from CT scans. The re-projected depth maps of the reconstructed tissue model are evaluated, which is the projection of the entire reconstructed point cloud to the image plane, with each pixel containing a depth value. We calculated the per-pixel root-mean-square (RMS) error of the depth map for every image:

| (6) |

where is the pixel position, is the estimated depth value, is the ground truth depth value, and is the total number of pixels for each image. We also reported the percentage of the valid (non-zero) pixels of the depth map.

The da Vinci tool tracking dataset used in [14] consists of a stereo video stream and the corresponding kinematic information of the da Vinci® surgical robot. The dataset is used to evaluate surgical tool feature detection and pose estimation. Note that the SuPer dataset has painted markers, and hence this additional experiment ensures that surgical tool feature detector learns surgical tool point features and is not dependent on colored markers. The performance of feature detection is evaluated by calculating the norm of the error in pixels, for the -th feature:

| (7) |

where is the total number of test images, is the predicted feature point location, and is the ground truth feature point location in the -th image. We experiment with varying amounts of hand-labeled training data to illustrate the data efficiency of the proposed surgical tool feature detection method.. Due to lack of ground-truth data for pose estimation, we only provide qualitative results for surgical robotic tool tracking on this dataset.

III-B Implementation Details

III-B1 Surgical Tool Tracking

For the SuPer dataset, the images were downsampled by 2 before passing to DeepLabCut for feature detection. The weights of DeepLabCut were pre-trained on ImageNet and fine-tuned by training on only 50 hand-labeled images for 7100 iterations with stochastic gradient descent of batch size 1 and learning rate . For the particle filter, we used 1000 particles with bootstrap approximation on the prediction step and stratified resampling when the number of effective particles dropped below 500. For initialization, the parameterized Lumped Error is set to with . For the motion model, the covariance is , and the is set to 0.1 for the observation model.

III-B2 Deformable Tissue Tracking

For depth map estimation, the raw stereo images were rectified, undistorted, and resized to (640, 480) before being passed into the stereo matching algorithm. Due to the lack of task-specific datasets for surgical environments, the pretrained weights of GA-Net were utilized, which was trained on the Scene Flow dataset from scratch for 10 epochs and fine-tuned on the KITTI2015 dataset for 640 epochs. The maximum disparity was set to 192. After inverting the disparity, the resulting depth map was fused into the tissue model after subtracting the rendered tool mask, which is dilated by 5 pixels.

For the comparative study, we implemented 3 deep-learning-based and 3 non-deep learning stereo matching algorithms for deformable tissue tracking. The stereoBM†††https://docs.opencv.org/4.2.0/d9/dba/classcv_1_1StereoBM.html and stereoSGBM [36] algorithms are implemented using OpenCV package. ELAS, which is utilized by [20] and [25], is implemented using the open-source library [19]. The Pyramid Stereo Matching Network (PSMNet) [38] and the Hierarchical Stereo Matching network (HSM) [42] were implemented using the off-the-shelf pretrained weights provided by their original implementations without any task-specific fine-tuning.

IV Results

Qualitative results of the environment mapping on the SuPer dataset are presented in Fig. 3. As highlighted in the figures, SuPer Deep provides a larger field of view of the unstructured environment while preserving better details on the reconstruction. In comparison to the SuPer framework, more detailed information is captured due to the lack of filtering and smoothing applied on the stereo reconstruction process, which was required in previous implementations of tissue tracking [25].

| Method | Video 1 | Video 2 | ||

|---|---|---|---|---|

| RMSE | Perc. valid | RMSE | Perc. valid | |

| stereoBM + deformable tissue tracker | 23.24 2.18 | 0.565 0.037 | 34.02 2.08 | 0.523 0.033 |

| stereoSGBM + deformable tissue tracker | 16.84 1.99 | 0.713 0.038 | 24.68 1.84 | 0.683 0.032 |

| ELAS + deformable tissue tracker | 16.12 2.22 | 0.716 0.044 | 22.03 2.89 | 0.719 0.049 |

| PSMNet + deformable tissue tracker | 5.64 1.48 | 0.940 0.023 | 8.23 1.32 | 0.939 0.014 |

| HSM + deformable tissue tracker | 5.33 1.36 | 0.938 0.023 | 6.73 1.28 | 0.946 0.020 |

| GA-Net + deformable tissue tracker | 4.87 1.55 | 0.947 0.026 | 6.20 1.57 | 0.957 0.024 |

IV-1 Deformable Tissue Tracking

Using the Hamyln Centre Video Dataset, the deformable tissue tracking results were compared by combining popular stereo matching algorithms with the deformable tissue tracker. We visualize the reconstruction results by fusing the first 10 estimated depth maps from each algorithm in Fig. 4. Deep-learning-based algorithms generally provide dense and consistent matches and result in realistic tissue reconstructions. We calculated the average per-pixel RMS error on the re-projected depth maps, and the quantitative results are shown in Table I. Deep-learning-based approaches achieve much lower per-pixel RMS error with the higher percentage of the valid pixel, which confirms the observations from the environment mapping results in Fig. 3 and Fig. 4.

IV-2 Surgical Tool Tracking

Fig. 6 shows the feature detection performance of the DeepLabCut with varying numbers of training samples. By leveraging transfer learning, the feature detector is able to achieve high performance on detecting surgical tool features using few training samples.

For the tool tracking task, SuPer Deep achieved mean IoU on the SuPer tool segmentation task, which is a significant improvement on the original method (SuPer: 82.8). Notably, SuPer Deep does marker-less tool tracking while the former utilizes painted markers. Qualitative results of the tool tracking are presented in Fig. 5, where we experimented with our tool tracker on both the SuPer dataset and the da Vinci tool tracking dataset. In the visualization, the Augmented Reality rendering from the estimated tool pose produces a near-perfect overlap with the tool on real images.

V Discussion

The experimental results show SuPer Deep achieves excellent performances in both surgical tool tracking and deformable tissue tracking. By utilizing deep neural networks, SuPer Deep produces more consistent depth maps and achieves accurate tool pose estimation. The latter also helps to reduce the dilation of the tool mask, which reduces the amount of information lost. As the visualizations shows in Fig. 3, SuPer Deep’s reconstruction has the surgical tool touching the point cloud (as opposed to just being above the point cloud).

There are occasional failures in feature detection on the surgical tool, owing mainly to the symmetry of the tool parts, for example, the Roll_1, Pitch_1 and Yaw_2 features. As shown in Fig. 6, detecting those features is more challenging compared to other ones. The misdetections are, however, of low confidence. Hence they are handled by the probability weighting of the detected points in the observation model of the particle filter. In Fig. 7, for instance, one of the grippers of the tools is misdetected, but has substantially lower confidence; meanwhile the correctly detected points have confidence scores higher than 70%. Similarly, in the second case, two features are detected on the wrong side of the shaft due to the surgical tools symmetry, but again with low confidence and hence is not detrimental to the pose estimation. Overall, the feature detection is robust and results in accurate perception.

VI Conclusion

Deep learning has not been utilized as a major tool in surgical robotic perception, with a lack of training data as the primary bottleneck [25]. The SuPer Deep framework, incorporating two deep neural networks as major components, shows that the challenge of insufficient data is surmountable. Using transfer learning, even on limited training data, the framework accomplishes excellent feature detection for surgical scene perception. Our comparative study on deformable tissue tracking, which utilizes the deep neural networks with only pretrained weights, shows that deep learning techniques can be applied for stereo reconstruction and gives a performance evaluation on these techniques applied to a surgical context.

Currently, we believe that the major limitation of the SuPer Deep framework is its high computation power. Running multiple deep neural networks in real-time requires multiple processing units, which limits the update rates of the trackers. Lightweight deep neural networks will be ideal for real-time surgical applications, if adapted without compromising on accuracy. As recent progress has been made on deep-learning-based reconstruction and rendering techniques [43], [44], a future direction could be utilizing a learnable tissue tracker and tool tracker to further optimize the perception framework. Another direction to pursue is surgical task automation. By using the perceived environment as feedback, controllers applied to the surgical tool will be able to accomplish tasks in unstructured, deforming surgical environments.

References

- [1] G. H. Ballantyne and F. Moll, “The da vinci telerobotic surgical system: the virtual operative field and telepresence surgery,” Surgical Clinics, vol. 83, no. 6, pp. 1293–1304, 2003.

- [2] M. Yip and N. Das, “Robot autonomy for surgery,” in Encyclopedia of Medical Robotics, ch. 10, pp. 281–313, World Scientific, 2017.

- [3] F. Richter, R. K. Orosco, and M. C. Yip, “Open-sourced reinforcement learning environments for surgical robotics,” arXiv preprint arXiv:1903.02090, 2019.

- [4] R. C. Jackson and M. C. Çavuşoğlu, “Needle path planning for autonomous robotic surgical suturing,” in ICRA, pp. 1669–1675, 2013.

- [5] B. Thananjeyan, A. Garg, S. Krishnan, C. Chen, L. Miller, and K. Goldberg, “Multilateral surgical pattern cutting in 2D orthotropic gauze with deep reinforcement learning policies for tensioning,” in ICRA, IEEE, 2017.

- [6] B. Kehoe et al., “Autonomous multilateral debridement with the raven surgical robot,” in ICRA, pp. 1432–1439, IEEE, 2014.

- [7] M. Selvaggio, G. A. Fontanelli, F. Ficuciello, L. Villani, and B. Siciliano, “Passive virtual fixtures adaptation in minimally invasive robotic surgery,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 3129–3136, 2018.

- [8] L. Qian, J. Y. Wu, S. DiMaio, N. Navab, and P. Kazanzides, “A review of augmented reality in robotic-assisted surgery,” IEEE Transactions on Medical Robotics and Bionics, 2019.

- [9] D. Zhang, B. Xiao, B. Huang, L. Zhang, J. Liu, and G.-Z. Yang, “A self-adaptive motion scaling framework for surgical robot remote control,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 359–366, 2018.

- [10] Y. Li, J. Zhu, S. C. Hoi, W. Song, Z. Wang, and H. Liu, “Robust estimation of similarity transformation for visual object tracking,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 8666–8673, 2019.

- [11] W. Gao and R. Tedrake, “Surfelwarp: Efficient non-volumetric single view dynamic reconstruction,” in Robotics: Science and System, 2018.

- [12] D. Bouget, M. Allan, D. Stoyanov, and P. Jannin, “Vision-based and marker-less surgical tool detection and tracking: a review of the literature,” Medical image analysis, vol. 35, pp. 633–654, 2017.

- [13] R. Hao, O. Özgüner, and M. C. Çavuşoğlu, “Vision-based surgical tool pose estimation for the da Vinci® robotic surgical system,” in Intl. Conf. on Intelligent Robots and Systems, IEEE, 2018.

- [14] M. Ye, L. Zhang, S. Giannarou, and G.-Z. Yang, “Real-time 3D tracking of articulated tools for robotic surgery,” in Intl. Conf. on Medical Image Computing and Computer-Assisted Intervention, pp. 386–394, Springer, 2016.

- [15] A. Reiter, P. K. Allen, and T. Zhao, “Feature classification for tracking articulated surgical tools,” in Intl. Conf. on Medical Image Computing and Computer-Assisted Intervention, pp. 592–600, Springer, 2012.

- [16] A. Reiter, P. K. Allen, and T. Zhao, “Appearance learning for 3d tracking of robotic surgical tools,” The International Journal of Robotics Research, vol. 33, no. 2, pp. 342–356, 2014.

- [17] T. Kurmann et al., “Simultaneous recognition and pose estimation of instruments in minimally invasive surgery,” Medical Image Computing and Computer-Assisted Intervention – MICCAI 2017, pp. 505–513, 2017.

- [18] E. Colleoni, S. Moccia, X. Du, E. De Momi, and D. Stoyanov, “Deep learning based robotic tool detection and articulation estimation with spatio-temporal layers,” IEEE Robotics and Automation Letters, vol. 4, pp. 2714–2721, July 2019.

- [19] A. Geiger, M. Roser, and R. Urtasun, “Efficient large-scale stereo matching,” in Asian Conf. on Computer Vision, pp. 25–38, 2010.

- [20] J. Song, J. Wang, L. Zhao, S. Huang, and G. Dissanayake, “Dynamic reconstruction of deformable soft-tissue with stereo scope in minimal invasive surgery,” RA-Letters, vol. 3, no. 1, pp. 155–162, 2017.

- [21] J. Song, J. Wang, L. Zhao, S. Huang, and G. Dissanayake, “Mis-slam: Real-time large-scale dense deformable slam system in minimal invasive surgery based on heterogeneous computing,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 4068–4075, 2018.

- [22] M. C. Yip, D. G. Lowe, S. E. Salcudean, R. N. Rohling, and C. Y. Nguan, “Tissue tracking and registration for image-guided surgery,” IEEE transactions on medical imaging, vol. 31, no. 11, pp. 2169–2182, 2012.

- [23] N. Mahmoud et al., “Live tracking and dense reconstruction for handheld monocular endoscopy,” IEEE transactions on medical imaging, vol. 38, no. 1, pp. 79–89, 2018.

- [24] A. Marmol, A. Banach, and T. Peynot, “Dense-arthroslam: Dense intra-articular 3-d reconstruction with robust localization prior for arthroscopy,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 918–925, 2019.

- [25] Y. Li, F. Richter, J. Lu, E. K. Funk, R. K. Orosco, J. Zhu, and M. C. Yip, “Super: A surgical perception framework for endoscopic tissue manipulation with surgical robotics,” IEEE Robotics and Automation Letters, vol. 5, pp. 2294–2301, April 2020.

- [26] Y. Kassahun, B. Yu, A. T. Tibebu, D. Stoyanov, S. Giannarou, J. H. Metzen, and E. Vander Poorten, “Surgical robotics beyond enhanced dexterity instrumentation: a survey of machine learning techniques and their role in intelligent and autonomous surgical actions,” International journal of computer assisted radiology and surgery, vol. 11, no. 4, pp. 553–568, 2016.

- [27] A. Mathis, P. Mamidanna, K. M. Cury, T. Abe, V. N. Murthy, M. W. Mathis, and M. Bethge, “Deeplabcut: markerless pose estimation of user-defined body parts with deep learning,” Nature neuroscience, vol. 21, no. 9, p. 1281, 2018.

- [28] E. Insafutdinov, L. Pishchulin, B. Andres, M. Andriluka, and B. Schiele, “Deepercut: A deeper, stronger, and faster multi-person pose estimation model,”

- [29] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” 2015.

- [30] T. Nath, A. Mathis, A. C. Chen, A. Patel, M. Bethge, and M. W. Mathis, “Using deeplabcut for 3d markerless pose estimation across species and behaviors,” Nature Protocols, 2019.

- [31] M. J. Lum et al., “The raven: Design and validation of a telesurgery system,” The International Journal of Robotics Research, vol. 28, no. 9, pp. 1183–1197, 2009.

- [32] P. Kazanzides, Z. Chen, A. Deguet, G. S. Fischer, R. H. Taylor, and S. P. DiMaio, “An open-source research kit for the da vinci® surgical system,” in 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 6434–6439, May 2014.

- [33] D. Seita, S. Krishnan, R. Fox, S. McKinley, J. Canny, and K. Goldberg, “Fast and reliable autonomous surgical debridement with cable-driven robots using a two-phase calibration procedure,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 6651–6658, IEEE, 2018.

- [34] O. Özgüner, T. Shkurti, S. Huang, R. Hao, R. C. Jackson, W. S. Newman, and M. C. Çavuşoğlu, “Camera-robot calibration for the da vinci robotic surgery system,” IEEE Transactions on Automation Science and Engineering, vol. 17, no. 4, pp. 2154–2161, 2020.

- [35] F. Richter, J. Lu, R. K. Orosco, and M. C. Yip, “Robotic tool tracking under partially visible kinematic chain: A unified approach,” arXiv preprint arXiv:2102.06235, 2021.

- [36] H. Hirschmuller, “Stereo processing by semiglobal matching and mutual information,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30, pp. 328–341, Feb 2008.

- [37] A. Kendall, H. Martirosyan, S. Dasgupta, and P. Henry, “End-to-end learning of geometry and context for deep stereo regression,” 2017 IEEE International Conference on Computer Vision (ICCV), Oct 2017.

- [38] J.-R. Chang and Y.-S. Chen, “Pyramid stereo matching network,” 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018.

- [39] F. Zhang, V. Prisacariu, R. Yang, and P. H. Torr, “Ga-net: Guided aggregation net for end-to-end stereo matching,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 185–194, 2019.

- [40] D. Stoyanov, M. V. Scarzanella, P. Pratt, and G.-Z. Yang, “Real-time stereo reconstruction in robotically assisted minimally invasive surgery,” in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2010, Springer Berlin Heidelberg, 2010.

- [41] F. Richter, Y. Zhang, Y. Zhi, R. K. Orosco, and M. C. Yip, “Augmented reality predictive displays to help mitigate the effects of delayed telesurgery,” in 2019 International Conference on Robotics and Automation (ICRA), May 2019.

- [42] G. Yang, J. Manela, M. Happold, and D. Ramanan, “Hierarchical deep stereo matching on high-resolution images,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [43] Y. Jiang, D. Ji, Z. Han, and M. Zwicker, “Sdfdiff: Differentiable rendering of signed distance fields for 3d shape optimization,” in The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [44] K. Park, A. Mousavian, Y. Xiang, and D. Fox, “Latentfusion: End-to-end differentiable reconstruction and rendering for unseen object pose estimation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020.