Subsurface Boundary Geometry Modeling: Applying Computational Physics, Computer Vision and Signal Processing Techniques to Geoscience

Abstract

This paper describes an interdisciplinary approach to geometry modeling of geospatial boundaries. The objective is to extract surfaces from irregular spatial patterns using differential geometry and obtain coherent directional predictions along the boundary of extracted surfaces to enable more targeted sampling and exploration. Specific difficulties of the data include sparsity, incompleteness, causality and resolution disparity. Surface slopes are estimated using only sparse samples from cross-sections within a geological domain with no other information at intermediate depths. From boundary detection to subsurface reconstruction, processes are automated in between. The key problems to be solved are boundary extraction, region correspondence and propagation of the boundaries via contour morphing. Established techniques from computational physics, computer vision and signal processing are used with appropriate modifications to address challenges in each area. To facilitate boundary extraction, an edge map synthesis procedure is presented. This works with connected component analysis, anisotropic diffusion and active contours to convert unordered points into regularized boundaries. For region correspondence, component relationships are handled via graphical decomposition. FFT-based spatial alignment strategies are used in region merging and splitting scenarios. Shape changes between aligned regions are described by contour metamorphosis. Specifically, local spatial deformation is modeled by PDE and computed using level-set methods. Directional predictions are obtained using particle trajectories by following the evolving boundary. However, when a branching point is encountered, particles may lose track of the wavefront. To overcome this, a curvelet backtracking algorithm has been proposed to recover information for boundary segments without particle coverage to minimize shape distortion.

Keywords Interdisciplinary Perspective

Active Contours

Backtracking

Contour Morphing

Directional Prediction

Particle Trajectories

Spatial Correspondence

Subsurface Boundaries

Wavefront Propagation.

CCS Concepts:

Computing methodologies Computer graphics Shape modeling Parametric curve and surface models;

Computing methodologies … Computer vision representations Shape representation;

Computing methodologies … Computer vision problems Tracking;

Applied computing Physical sciences Earth sciences.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/b7ab0b66-b7ba-4654-aa21-a69084353155/x1.png) |

1 Introduction

This paper considers the feasibility of modeling geospatial boundaries using differential geometry given sparse observations. The objective is to extract surfaces from spatial patterns and obtain coherent directional predictions along the boundary of the extracted surfaces. Modeling underground geological formations is challenging in general because the measurements are sparse and indirect. Due to operational constraints and the significant costs associated with data gathering111This includes operator cost, energy expenditure for drilling, replacement cost of mechanical parts, efficiency cost of coordinating dependent processes such as blasting and excavation, and the cost of performing chemical assays to determine the composition, material type or geological domain associated with each sample., the available observations may not paint a complete picture in terms of spatial coverage. These measurements, although point-based, differ from those encountered in computer vision or image processing in some signficant ways. The input consists of sparse spatial patterns in the form of irregularly spaced labeled drilled holes. Not only are the measurement locations sparse in the x-y plane, the sampling is less dense along the z-axis. Geo-assay data is typically collected sequentially on a bench-by-bench basis from the top down (each bench has a height of 10m) with significant time lapse in-between. In contrast, pixels are captured almost instantaneously using an image sensor array. The combination of these two factors means volumetric segmentation approaches that utilize 3D partial derivatives, and those that conduct minimal path search with respect to z, are not applicable as there are no voxels or fine-grain data available at intermediate depths between successive benches.

From a system perspective, the output provides a volumetric reconstruction of subterranean surfaces that conveys directional information. The motivation for predicting the slope of extracted boundaries is to provide guidance for more targeted drilling and exploration. The accuracy of this directional information needs only be commensurate with the resolution of the raw input (roughly 5m) for it to be useful in a mining context. However, the directional estimates need to be coherent. An example of what not to do is using the outward normals of a contour as a means for extrapolation which inevitably cross-over when non-convex boundaries are involved. Hence, partial differential equations (PDE) are used to describe boundary movement in a more principled manner.

The input data used in this work contrasts with dense data sources such as point cloud produced by terrain laser scanners (LIDAR) [1][2] and high-resolution slices generated by computed tomography [3] and presents its own challenges. Data incompleteness, sparsity, causality and resolution disparity are some of the issues to contend with and probable reasons for why differential geometry has not been more widely used in subterranean geology modeling. Most established PDE techniques for modeling surfaces operate on uniform grid data whereas our input samples are irregularly spaced. To overcome this, a bridging step based on boundary detection and edge map synthesis is described. This enables level-set methods to be applied to contours extracted from sparse non-uniform data points.

As an overview, Fig. 1 shows the processes for achieving the ultimate goal. Once a set of contours emerge following boundary extraction, the work flow next enters the spatial correspondence (reasoning) phase whose purpose is to associate ‘source’ regions with ‘target’ regions at successive intervals and estimate component displacements. This problem shares many similarities with object tracking in computer vision, but is made difficult by significant variations rather than gradual changes in contour shape. Often, the vertical resolution is low, whilst some regions may not be sampled adequately, or at all, due to operational constraints. A region association and translation estimation approach is proposed to deal with the complexity of region merging and splitting from a resource allocation perspective.

Each set of associated source–target contours define a region in a motion-compensated frame for which contour morphing (spatial warping) [3] is applied. The objective is to model the shape of the boundary in between two cross-sections as a propagating interface. The underlying premise is that local surface deformation can be described as an evolutionary process governed by some PDE. Although the exact form used in this work might not match reality in terms of ore genesis, it is a reasonable alternative to unconstrained warping approaches and does provide a continuous deformable model. Using level-set methods [4], topological changes can be handled seamlessly during the morphing process. To facilitate slope prediction, the evolving interface (contour boundaries) are tracked using particle trajectories. This works well along portions of the boundaries where differences in curvature between the source and target contours are small. It breaks down when branching occurs, i.e., when a curvelet emanates from a single point. To address this, a novel backtracking algorithm has been proposed to recover lost information during particle advection and minimize boundary distortion attributed to tracking failure.

1.1 Related works

This paper is distinguished from prior work through its attempt in fully automating a chain of processes required to reconstruct subterranean surfaces using sparse labeled data. These processes include boundary extraction, spatial correspondence and contour evolution, as outlined in Fig. 1. Although the contours for various geological domains (henceforth referred as geozones) can be specified interactively, our model basically requires only a set of unordered points, sampled non-uniformly across an orebody in multiple cross-sections as input.

In [5], Sprague and de Kemp presented a partially automated tool which uses Bézier and NURBS (non-uniform rational B-spline) curves to model non-planar 3D surfaces. Fitting under the guidance of a control frame, it makes use of 2D interpreted plan views provided by mine geologists, created using local slices of projected drill hole data at semi-regular spaced depths. The authors emphasized that in moving from 2D polygonal lines to 3D surface construction, the continuation of common features requires delicate spatial synchronization across neighboring plan-sections, and manual correspondence performed by the geologist was critical to the integrity of the modeled structure and preventing self-intersections. The term map trace was defined as a “geological interpretation delineating the intersection of a geologic boundary as it breaks through the surface…or as it intersects a given elevation plane”. This definition closely resembles our notion of active contour extracted boundaries which represent segmented regions in a geozone. Their technique were refined by imposing positional and orientation constraints using structural ribbons (expert knowledge), thus it may be categorized as a semi-supervised technique.

In [6], Mitášová and Hofierka applied differential geometry, more specifically, regularized spline with tension to topographic analysis of a watershed. This utilized convex/concave sections with smooth curvature for interpolation. However, the focus was to model the top surface (as opposed to sub-surfaces) using dense elevation data obtained via remote sensing (as opposed to sparse data).

In [7], Kaufmann and Martin built 3D subsurface models using a variety of sources (drill holes, cross-sections and geological maps) with different motivations. Their goal was to further understand subsoil characteristics such as hydrogeologic or geothermic properties of the geological bodies. Their surfaces were modeled using DSI (discrete smooth interpolation) which computes the location of nodes by balancing roughness and misfit constraints (see Mallet [8] and Frank [2]). This is representative of a class of information-rich GIS fusion approaches which utilize topographic, geological and structural data [9]. In contrast, our approach makes the most of the limited data in an information-poor environment where only blasthole locations and geozone labels are available.

In [10], Caumon et al. presented general guidelines for creating a 3D structural model made of faults and horizons using sparse field data. Their focus was natural resource evaluation and hazard assessment; triangulated surfaces with variable resolution was also discussed. The authors offered many insights, one notable comment is that “3D subsurface modeling is generally not an end, but a means of improving data interpretation through visualization…to generate support for numerical simulations of complex phenomena (i.e., earthquakes, fluid transport) in which structures [11] play an important role.” This is also true of subsurface models serving as decision support tools in mining and geological exploration.

In [12], Dirstein et al. demonstrated that automated surface extraction and segmentation of peak and troughs from seismic survey can provide insight into structural and sedimentary morphology. In particular, differential geometry was used to select objects of concave and convex curvature, these features can help identify subtle cues for fluid flow events that are perhaps over-looked by conventional interpretation methods. For an in-depth survey of past efforts and current interests in 3D geological mapping, readers are referred to [13], [14], [15] and [16]. Relevant techniques from computational physics and computer vision are presented in Appendixes A and B. As a quick overview, the major themes and related works are highlighted in Table 1.

| Description and referenced works | |

| A | Theoretical treatment of active contours & gradient vector field |

| (Kass et al., 1988) [17], (Caselles et al., 1997) [18], | |

| (Ivins and Porrill, 1995) [19], (Xu and Prince, 1998) [20], | |

| (Horn and Schunck, 1981) [21]. | |

| B | Foundations for contour metamorphosis |

| (Nilsson et al., 2005) [3], (Breen and Whitaker, 2001) [22], | |

| (Museth et al., 2005) [23], (Nielsen and Museth, 2006) [24], | |

| (Bertalmio et al., 2000) [25], (Osher and Sethian, 1988) [26], | |

| (Peng et al., 1999) [27], (Nagashima et al., 2007) [28] |

In both Dirstein’s and our work, curvature preserving spatial representations are used to good effect albeit the objectives and modalities are different. One aspect of Dirstein’s work is waveform analysis, where genetic fitness (similarity) relative to a genotype (waveform signature) can reveal relative stability of neighboring segments above and below a particular surface and confer understanding about its structure and stratigraphy. In our work, we obtain an essentially continuous representation for the slope of geozone subsurfaces from sparse boundary patterns through contour extraction and metamorphosis; this provides useful directions for subsequent drilling and exploration. Our motivation stems from an interest in integrating techniques from pattern analysis, computational physics, computer vision and signal processing, bringing these to bear on a subsurface reconstruction problem, solving it in unconventional ways.

1.2 Application Scenario

The target application is the modeling of subsurface boundaries in an open-pit mine of sedimentary iron ore deposits.222The most common minerals are hematite and goethite Fe3+O(OH). These deposits are believed to have formed as chemical precipitates on the floor of shallow marine basins in a highly oxidizing environment during the Proterozoic eon, circa 1.9–2.4 billion years ago. Here, the sparse spatial patterns derive from blasthole samples where each is labeled with a geozone. The geozone labels provide a classification of geological domains based on mineralization and stratigraphic units [29]. From a mining perspective, these are used to segregate high-grade minerals from low grade or unprofitable materials. With some effort, the geozones can be turned into coherent spatial clusters. However, as unorganized points, or even with a discretized block-based representation, there is no easy way of obtaining a coherent motion field, one that describes the direction a boundary moves in consistently without artifacts or discontinuities. This is one major reason for using a curve-based, deformable surface model.

At the outset, it is important to distinguish this work from 3D segmentation approaches [30, 31] that use energy functional for minimum path optimization given contours at specific depths which is a common scenario in computer tomography. These approaches often require 3D partial derivatives to be computed, our data simply do not have the requisite (vertical) resolution to facilitate that. Furthermore, as our data are literally mined through manual processes, they only become available progressively at periodic intervals. In our application, slope trends need to be estimated in a causal manner using cross-section samples from as little as two successive benches (at two particular depths). Our problem also differs from grade estimation or material classification for which a variety of probabilistic inference and machine learning approaches are known [32], [33], [34], [12], [29], [35], [36], [37]. The focus here is explicit spatial representation which encompasses boundary extraction, region correspondence and capturing changes to subsurfaces using differential geometry.

The proposed system takes input in one of two forms. The contours which describe geospatial regions are either given or derived. Fig. 2(a) depicts a common mining scenario where stratigraphic information are obtained by examining core specimen extracted from the drilled holes. Alternatively, geozone transitions (marker bands) are established from geochemical assays or geophysical measurements taken while drilling. The main characteristics of this data is high resolution in z and sparse sampling in the x-y plane. Geologists typically interpolate the boundary at locations (dotted lines) between the drilled holes based on an understanding of the geological setting. The time and effort required to “join up” these vertical slices to form a preliminary 3D surface model can be substantial. Fig 2(b) depicts a second scenario where horizontal cross-sections are taken. This scenario differs from the previous through denser sampling in the x-y plane and having relatively low z-resolution; blastholes are plentiful albeit non-uniformly sampled. In this case, the contours are not given — only the coordinates and geozone designations (classification labels) of the blastholes are known. This second scenario poses additional challenges with respect to boundary extraction which will be further considered in this paper. These pictures highlight the main objective of this work which is to model how a volumetric region evolves through the cross-sections using contours and estimate the direction in which the boundary moves in.

The proposed boundary geometry modeling framework (henceforth, abbreviated as BGM) consists of four sub-systems. The processing pipeline is shown in Fig. 1. The role of each subsystem is briefly described below.

-

•

Boundary extraction finds connected regions (components) from labeled blastholes. It locates boundary samples on each component and converts them into a closed contour.

-

•

Spatial correspondence automates the process of region association and component alignment. Having identified one or more components on each cross-section, the next goal is to match-up and motion-compensate contours, i.e., find the optimal translation which brings corresponding regions into alignment. By convention, contours found in the top and bottom of any two successive slices are referred as ‘source’ and ‘target’ components respectively.

-

•

Contour metamorphosis uses particle trajectories to facilitate slope estimation. The goal is to model residual differences after source–target components are aligned in a common frame. Contour boundaries are embedded in a 2D level-set function, by tracking the movement of the zero-interface, one establishes pathways for morphing the source region(s) into the target region(s) as the level-set evolves under the dynamics of a PDE.

-

•

Contour prediction reconstructs a 3D volume through interpolation or extrapolation of normalized trajectories.

1.3 Contributions

The main contributions are as follows.

-

•

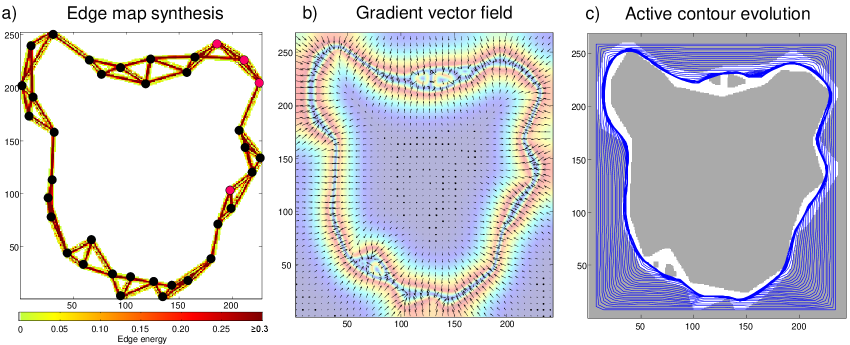

For boundary extraction, an edge map synthesis procedure is described which converts sparse non-uniform data points to an image representation. Gradient vector field and active contours are used to extract shapes (regularized contours) from unordered edge pixels.

-

•

For spatial correspondence, region association and component alignment problems are solved using FFT cross-correlation under spatial constraints. Obstacle avoidance is approached from a resource allocation view point, multiple-source multiple-target component relationships are explored using intersection graphs.

-

•

For contour metamorphosis, slope estimation is achieved using particle trajectories. A curvelet backtracking algorithm has been devised to overcome tracking failure caused by branching; a phenomenon that leads to boundary distortion. This occurs when there is a significant mismatch in shape (local curvature) between the corresponding boundary segments.

| Technique | Field of origin / inspiration |

| Boundary Extraction | |

| Connected component analysis | data analysis, clustering |

| Orientation selective gap closure | mathematical morphology |

| Synthesis of edge structures | image processing |

| GVF (anisotropic diffusion) | computational physics |

| Active contours (object localization) | computer vision |

| Spatial Correspondence | |

| Region association | probability / statistics |

| Cross-correlation/shift estimation | signal processing (FFT) |

| Component alignment strategies: | resource allocation, |

| resolve conflicts, avoid obstacles | perception |

| Source–target dependency tree | graphical decomposition |

| Land mass characterization | shape analysis |

| Contour Metamorphosis / Prediction | |

| Level-sets, signed distance function | applied mathematics |

| Wave propagation (hyperbolic PDE) | computational physics |

| Particle trajectories | object tracking |

| Boundary tracing | topology, computer vision |

| Surface reconstruction from slices | computer graphics |

Table 2 provides an overview of the techniques employed in each area. In the ensuing sections, the reasons for choosing these techniques will be elaborated as specific challenges are described. We discuss how standard approaches are modified to address particular needs. The solutions sought for different parts of the problem draw inspiration from different fields.

2 Boundary extraction

The first objective is to extract the boundary of contiguous spatial regions (connected components) given sparse data. For input, we have the spatial coordinates of blastholes for various horizontal cross-sections, and these blastholes have been classified as belonging to different geozones from prior analysis based on material properties or chemical composition. An example of this is shown in Fig. 2(b) where the blue, gray dots and orange squares represent blastholes sampled from three different geozones. An important point about these samples is that they are irregularly spaced and unordered. Consequently, efficient algorithms for labeling connected components [38, 39] that perform sequential scans on a uniform grid cannot be used here. A related problem is that attempts at forming an edge map by connecting peripheral samples often produce false edges and loops which make clean boundary extraction difficult. These issues will be addressed in due course. In preparation for what follows, the boundary extraction process is summarized by a series of steps.

-

•

Connected component analysis: For each cross-section, identify separate clusters within each geozone.

-

•

Blasthole boundary detection: Find samples located on the boundary of each cluster / connected component.

-

•

Edge map synthesis: Project the region (edges connecting the boundary samples) onto an image grid.

-

•

Gradient vector field computation: Drive the active contour (PDE solution) toward the boundary.

-

•

Active contours evolution: Extract the boundary as a closed contour described by control points.

2.1 Connected component analysis

The modification involves using a kD-tree for nearest neighbor search and adopts a region growing approach. All samples from the same geozone and same cross-section are numbered from 1 to , initially each belonging to the cluster of ‘self’. Neighboring samples within a radius of are merged with the current sample and labeled with the minimum cluster index amongst the group. Cluster membership information is propagated iteratively until no further changes occur and connected components remain.

2.2 Blasthole boundary detection

For each connected component, boundary samples are identified by thresholding the local entropy which is significantly non-zero at geozone transition points. Suppose a sample has neighbors within a radius of and the fraction of samples belonging to geozones and are and . The local entropy is computed as . Sample is marked as a boundary sample if where and [the median entropy in ’s neighborhood] is used to suppress “non-maximum” responses.

2.3 Automated gap closure

One limitation of this detector is the entropy tends to zero when the geozone labels no longer vary. This creates a problem as boundaries remain essentially open at the frontiers of surveyed regions which are not bordered by a different geozone. To remedy this situation, we perform orientation analysis to close these gaps. The objective is to recognize samples on the outskirts of a geozone as edges. The direction of the closest neighbors from are computed and sorted in ascending order. If a gap larger than radian is found, sample is deemed to be on an open edge that needs to be closed. Considering the blastholes are often sampled on a hexagonal lattice, we set and . Some intermediate results are shown in Fig. 3.

One observation from Fig. 3(b) is that there is often a large gap between [what humans perceive as] adjacent samples along the [as yet undetermined] component boundaries. Indeed, the variable gap size renders useless boundary tracing algorithms like ‘marching squares’ that rely on fixed separating distances. A more robust approach to boundary extraction is to formulate it as an energy functional minimization problem that provides a tradeoff between smoothness and fidelity. For this, projection of the boundary onto an image grid constitutes the first step.

2.4 Edge map synthesis

The main objective is to bridge the gap between adjacent samples located on the boundary. Each sample connects with its nearest neighbors by forming an edge between them. This edge structure comprising of line segments is then densely sampled and transferred over to the image plane. Specifically, edge energy is accumulated by pixels within some margin of each line segment.333Morphological closing is subsequently applied to give the edges adequate thickness. This procedure is further described in Algorithm 2.444Mapping real-world coordinates to the image domain (and vice-versa) involves scale changes. Uniform quantization is used implicitly throughout. For illustration, a synthesized edge map is shown in Fig. 4(a). Although it contains some false edges, the active-contour segmentation approach (to be described next) can tolerate small imperfection.

2.5 Active contour evolution in gradient vector field

The final goal is to obtain a contour (polygon of regularized boundary points) given the synthesized edge structure. This is achieved by performing region segmentation using Active Contour Evolution (ACE) in a Gradient Vector Field (GVF). The GVF is obtained from the synthesized edge structure following the procedure given in Appendix A. It may be visualized as a field of arrows (an external force) that pushes any given point in space toward the boundary (see Fig. 4(b)). Accordingly, an active contour which has been initialized as the component bounding box will evolve over time under the action of the GVF and be drawn inward until it converges at the region’s boundary. This is illustrated in Fig. 4(c) and the short video (see multimedia content). The computational aspects of GVF active contour evolution are described in Appendix A. This highlights the first connection with computational physics.

3 Spatial correspondence

The boundary extraction subsystem produces a collection of contours. For instance, a set of real component boundaries extracted from multiple cross-sections can be seen in Fig. 5. When given two successive cross-sections, we will henceforth refer to the upper and lower cross-sections (likewise for the components contained within them) as ‘source’ and ’target’ respectively. This section presents general strategies for establishing relationships between source and target contours (viz., region association) and estimating the optimal translation (performing component alignment) with and without spatial constraints. The devised strategies take into account unique characteristics such as incompleteness, causality and resolution disparity in the data as foreshadowed in the introduction.

In this paper, spatial correspondence is treated as a two-stage process. The spatial mapping is the result of combining component translation and contour metamorphosis (local surface deformation). The present goal is to account for the rigid movement of associated components — principally the translation observed in successive cross-sections. Subsequently, non-rigid movement will be considered in Section 4.

3.1 Region association

Given two cross-sections with source components and target components, the goal is compute the association matrix which describes the relationships between and . In the preliminary assessment, a ‘1’ in implies there is potential interaction between source and target region .

3.1.1 Formulation

A Gaussian pdf is used to model the likelihood of source-target association. In this setup, spatial proximity is measured by the difference of : an arbitrary location in the image and a fixed : the centroid position of source component . Design choices revolve around the hyper-parameters and which must capture relevant aspects to produce sensible results. The right formulation very much depends on the problem, the sampling characteristics and reliability of the data.555Examples of probabilistic reasoning and state estimation techniques are plentiful in the literature. Those that incorporate geometry information for visual tracking [40, 41, 42, 43, 44, 45, 46] generally rely on having rich and accurate information delivered at a high frame rate. e.g., solving spatial correspondence problems using optical flow or persistent templates. In our application, shape invariance is not something that can be reliably exploited. Topological changes, under-sampling (large spacing between successive cross-sections) and sampling uncertainty (mixture of material and poor resolution) often contribute to sudden and unexpected changes. In geology and mining exploration, operational constraints also limit the amount of observations that can be gathered, this creates a situation where fast changing dynamics may surpass the learning rate for a complex model. Given the few samples available, we resort to using the most basic measures like the magnitude, but not the directionality, of motion.

Apparent motion and object area represent two factors most relevant to our model. Apparent motion is inferred from the statistics and which are computed from observed distances between the sources and targets, as follows.

| (1) | ||||

| (2) |

Variability in the observations is given by the standard error . The association probability takes the form

| (3) | |||

| (4) |

The term relates to the uncertainty of the observations and is normalized by a reference value . The ‘noise’ parameter regulates the shape of the Gaussian. When variability is low () the association function contracts and becomes more concentrated around . Conversely, it dilates when there is a lack of consensus on ; this allows more distant targets to become potentially associated with the source.

3.1.2 Scale effects

Since the observations are noisy, component size should be compared with the apparent translation . When exceeds the object span , the policy should be adopted. This limits the scope of association for smaller components by shrinking .666The object span is approximated from the area as . To prevent from becoming too narrow — which would eliminate any source-target association potential when taken to the extreme — it would be prudent to also place a lower limit on .

The final form of the association likelihood is given by

| (5) | ||||

| (6) | ||||

| (7) |

where is related to the anticipated lateral movement. It basically dictates the minimum radius of association.

3.1.3 Decision function

The decision of whether to associate source with target is governed by a discriminant function

| (8) | ||||

| (9) |

-

•

The set notation in (8) refers only to points inside the support interval of target component .

-

•

The percentile in (9) depends on the source-to-target area ratio (). The rationale is as follows:

-

–

If , the source can (at best) fit itself wholly within the target with room to spare. Thus, a fair value for assessing spatial proximity (the association potential) is the median likelihood, offset by the fraction of which cannot overlap.

-

–

In the limit as , seeks out the maximum likelihood when is treated as a point object.

-

–

3.2 Component alignment strategies

Having obtained the association matrix , source–target relations can be represented by a graph. This gives rise to several possibilities: a 1-to-1 mapping, many-to-one, one-to-many, and many-to-many correspondence between the source and target components. These scenarios are shown in Fig. 6. For complex scenarios (e.g., multiple sources associated with multiple targets), the intersection graph is decomposed into subtrees (see Fig. 6 inset). This section is concerned with devising suitable strategies for each scenario, i.e., finding source translation estimates which maximize target alignment or component coverage. Graphically, each scenario is described by a subtree rooted at an s-node and its expansion includes all connected t-nodes and their children (any s-nodes connected to the t-nodes).

3.2.1 Displacement estimation: cross-correlation via FFT

A 1-to-1 mapping, or single-source single-target alignment, is the simplest situation encountered in region association. Conceptually, the best alignment is achieved by template matching; this is otherwise known as 2D cross-correlation. Using vectorized notation, e.g., , the problem may be posed as

| (10) |

where the matched filter response with lag is given by

| (11) |

These operations can be implemented efficiently as

| (12) |

using the Fast Fourier Transform (separable FFT) [47]. In this equation,777Caveats: Certain technical conditions need to be satisfied for this to work. Stated simply, the inputs need to have first quadrant spatial support and be sufficiently padded around the margins to ensure no shifted component ever steps outside the image border. Detailed guidance is given in [47] §4.6.4. represents the 2D cross-correlation between and , where is the source mask (a binary indicator function for the region occupied by the source component in the image plane) and is a signed distance function [48] (SDF) for the target component. The SDF (computed in Algorithm 3) gives a 2D map of the signed distance of any location from the contour boundary (zero-interface) and adheres to the convention where pixels inside and outside the target region have positive and negative values, respectively.

On the RHS, represents the inverse FFT. and denote the complex conjugate of the FFT coefficients for and FFT coefficients for . Finding the optimal shift (displacement ) is equivalent to locating the peak in . This lays the basic foundation for component alignment (see Algorithm 4).

3.2.2 Multiple-source single-target scenario

The proposed solution (10) requires certain modifications in a multiple-source single-target (many-to-one) scenario. Consider the situation depicted in Fig. 7(a). As it stands, if source acts selfishly and tries to maximize its correlation with the target with no regard for other source components, , and will be locked out of the region merging arrangement. To prevent this, the sources will participate in target sharing. This is posed as a resource allocation problem where the objective is to divide the target into sectors (or ports of varying sizes) and assign the ports in such a way as to minimize their angular difference with the affiliated source component (see Fig. 7(a)). The solution is presented in Algorithms 5–6. The port boundaries and target sector-to-source mapping so obtained allow very specific spatial constraints to be imposed. For instance, in Fig. 7(b), by imposing a penalty on target sectors reserved for other source components (see striped region), will refrain from stepping into other’s territory whilst maximizing its overlap with the target. This behavior can be realized by setting (initially, the target signed distance function) to large negative values at locations which are marked out-of-bounds.

3.2.3 Single-source multiple-target scenario

An issue with the current approach is that it is somewhat biased toward single-target alignment. There is no incentive for a source component to overlap with multiple targets since stepping outside the boundary of a target incurs a negative penalty in (11). This usually deters any ‘cross-over’ unless the source component is large enough to encompass both targets and the targets are reasonably close together that reward outweighs penalty. As motivation, Fig. 8(b) illustrates a situation where the source is encouraged to straddle two targets; this in turn maximizes component coverage and offers a more plausible explanation in a region splitting scenario. Within the current framework, this can be achieved by placing rewards at strategic locations in the value function to promote cross-over. This is further described in Algorithm 7.

3.2.4 Multiple-source multiple-target scenario

The cooperative and reward strategies described in §3.2.2–3.2.3 provide a unified way for dealing with complex relationships under the displacement estimation framework of §3.2.1. As an illustration, these techniques are applied to a multiple-source multiple-target scenario in Fig. 9. The initial position of the source and target components are shown in Fig. 9(a). This has the same graphical structure as the type C configuration in Fig. 6. Hence, it can be decomposed into 4 subtrees or expansion steps as shown in Fig. 9(b).

A compact way of writing these steps is \footnotesize1⃝: , \footnotesize2⃝: , \footnotesize3⃝: , \footnotesize4⃝: where the source component under consideration (to be moved) is typed in bold.

Within a subtree, each link describes a tentative association between an s-node and a t-node in Fig. 9(b). This simply means there is a potential for the source to intersect with the target. The objective is to eliminate edges where a connection does not exist — perhaps due to obstacles (the presence of other source components) which have prevented an overlap between and — and find the translation for source components that do overlap with targets (i.e., maximize correlation subject to spatial constraints).

For brevity, the subtree expansion steps are omitted here. Interested readers may refer to the worked example given in the Supplementary Material for a full description of the expansion steps. Through the lens of spatial reasoning (Algorithm 3–9 and Figure 4–9), Section 3 has outlined the main connections with signal processing, computer vision, resource allocation and graphical decomposition in this work.

In summary, the spatial correspondence subsystem produces an association matrix and translation estimates which describe the relationship between source and target components in two successive cross-sections. Specifically, the source contours associated with a given target define an instance for the next subsystem, contour metamorphosis, to work on.

4 Contour metamorphosis

The objective is to capture local spatial variation using differential geometry, to explain how source regions evolve into target regions using PDE. This problem may be visualized as a transformation from (a) to (b) in Fig. 10.

Given displacement estimates and translation operator , the starting point is a principal target contour , its associated (shift-compensated) source contours and any affiliated target contours connected to the source nodes. Fig. 10(a) shows a simple case where there is only one source and one target contour involved. It is this residual difference, the non-rigid changes in shape in the aligned common frame, that is being modeled.

In the PDE model, contours are represented by level-sets which embed region boundaries as the zero-interface, viz., . Initially, is set to , the signed distance function of . The basic objective is to model the evolutionary process from to . As the level-set evolves888A level-set may be defined as a collection of iso-contours or a family of embedded point sets each represented by ., the region boundary morphs from (a) to (b). The morphing process is described in Appendix B wherein (20) represents the key equation for level-set update. Visually, this computation describes a propagating wavefront as depicted in Fig. 10(c).

4.1 Iso-contours and wave speed adjustment

It is important to note that these level-set zero interfaces are functions of time. Ultimately, we need to obtain iso-contours at different elevations . However, no single (other than ) currently corresponds to a fixed elevation.

As a corollary, not all points on the advancing wavefront will reach the target contour at the same time since the wave propagates at different speeds depending on location.999The propagation speed depends on the geometric distance between the interface and target contour in accordance with (19). Therefore, particle trajectories (see Fig. 10(d)) are used to track the direction in which the zero-interface moves to facilitate slope estimation at a specific height. The relevant procedure called “particle advection” is described in Appendix B wherein (26) represents the key equation for particle update.

The particle speed (spacing) along each trajectory will eventually be adjusted to ensure each advances at a uniform rate and reaches the target boundary at the same time. This allows the zero interfaces to be converted into iso-contours of constant elevation. These ideas capture the essence of contour metamorphosis; Fig. 10(e) also serves as a prelude of the end goal.

4.2 Challenges

In principle, Appendix B provides all the necessary tools for capturing information on local spatial deformation. In practice, when the shape of the source and target regions are dissimilar, the scheme may fail. Specifically, particle trajectories may fail to track the wavefront in areas where complex topological changes occur especially when they coincide with large differences in local curvature. This can be seen in Fig. 11(a) where some target boundary segments have zero particle coverage. Fig. 11(b) highlights regions which end up being unmodeled despite being reached by the wavefront. This usually occurs due to undersampling when the cross-section spacing is large. The goal in this section is to determine its root cause and contribute a solution to this problem.

In each time step, wavefront segments which the particle trajectories had failed to track are archived. This produces a collection of time-stamped pixels (called curvelets) which identify areas of innovation, viz., locations where the wavefront and head of the particle trajectories have diverged. The window of interest in Fig. 11(c) is magnified in (d) to reveal its structure. It can be seen the waves emanate from two separate sources and merge in the middle, this describes a region splitting situation which can be reasonably expected based on the topology shown in (a). For other unmodeled regions shown in (c), the situation is less complex and the behavior can be attributed to significant differences in local curvature. The common theme is that the missing particle trajectories appear to emerge from a single branching point.

The color strands in Fig. 11(e) show the trajectory flow for new particles sitting on the curvelets. The arrows indicate the direction the backtracking algorithm proceeds in. The aim is to establish pathways from the target back toward the branching point. In Fig. 11(f), new pathways deduced from the curvelets are slotted into the unmodeled regions. Evidently, this recovers directional information between the source and target contours in areas where information had previously been lost.

4.3 Curvelet backtracking

The backtracking algorithm is formally described in Algorithm 10. The starting point is a collection of time-stamped pixels, these represent wavefront segments the particle trajectories had failed to track using the particle update equation (26) during contour metamorphosis. Backtracking comprises six primitive steps; these are described below and illustrated in Fig. 12.

-

(a)

Curvelet endpoint identification

Locate the start and endpoints of prospective curvelets within — the set of unordered pixels on the wavefront which have drifted away from the particle trajectories at time . -

(b)

Curvelet segmentation (clustering and ordering)

Extract individual curvelets by tracing the connecting pixels between relevant endpoints. -

(c)

Curvelet regularization

Fit pixels with a smoothing spline to remove jitter. Resample segment as point regularized curvelet . -

(d)

Curvelet track initialization / extension

The displacement of curvelet points between successive time steps describe possible pathways for the missing trajectories in unmodeled regions. -

(e)

Curvelet association

Given regularized points for curvelet at time , find a matching curvelet at time .101010Nearest neighbor search is employed to find a surjective mapping from to . Each point has at least one match at , but not every pixel on is matched with a point at . For this reason, as illustrated in Fig. 12(e), this is used only for curvelet association and not for point-wise correspondence. This is because inconsistent (jittery) movement has a detrimental effect on trajectory estimation. To eliminate the cumulative effects of drifting which would otherwise skew the tracks over time, the associated point set is constrained to lie on a regularized curvelet as shown in Fig. 12(f). A case of interest is depicted in Fig. 12(e) where the segmented pixels from two curvelets are merged from to . This is handled by considering interval mappings for multiple curvelet parts (). -

(f)

Curvelet correspondence

Once matching pixels are found, the curvelet at time is smoothed and resampled evenly with points. This curvelet is then collectively mapped onto , the current curvelet.111111This provides an interesting contrast to the wavefront tracking approach proposed by Tomek et al. [49] which represents two sets of time-stamped pixels with a complete oriented bipartite graph, poses the task of finding the best tracking arrows as a network flow problem, formulates and solves it as an integer program using branch and bound. Similarly, Singh et al. treated this as an optimization problem and employed dynamic programming in [50]. For a given point , the sequence [] subsequently defines a track which traverses the unmodeled region.

These steps capture the essence of the backtracking algorithm which produces particle tracks, see color strands in Fig. 11(e) and (f), for the unmodeled regions. The remaining tasks involve branching point identification which enables existing particle trajectories [the trunk portion extending from the source contour to the branching point] to be fused with the newly discovered tracks [from the branching point to the target contour] to complete the missing pieces. The details are described in Algorithms 11 and 12 and the notations are clarified in Fig. 13.

5 Results

The proposed boundary extraction technique was applied to blasthole patterns from a Pilbara iron ore mine in Western Australia. Fig. 14(a) illustrates one such pattern for a mineralized geozone before any modeling was done.

5.1 Visualization

Spatial correspondence and metamorphosis were applied to the segmented regions; culminating in the contours seen in Fig. 14 (b and e). These contours are triangulated to produce the surfaces shown in Fig. 14 (c and f). These results demonstrate that differential geometry can model subterranean boundaries, handling topological changes (e.g., region merging) satisfactorily. The proposed techniques essentially transform irregularly-spaced points of low resolution into continuously deformable surfaces that represent boundaries. Fig. 15 reveals the structure of the two geozones. The two vantage points show that lies above , such bedded planes (layered geological formations) are common for iron ore deposits at the mine site.

5.2 Predictive Value

To assess the utility of slope information conveyed by the model, precision and recall rates are computed for predicted contours and compared against ground truth boundaries. The terrain extends from 690m down to 610m and is partitioned into disjoint 10m intervals (called benches) and processed top-down. Predictions are made using only information above the current bench floor. Therefore, predicted contours represent extrapolations from the known interval. For each comparison, data at the predicted depths from the bench below are withheld (not used to inform the prediction model) and these contours are used only for validation purpose at a given bench. Precision and recall rates are defined using set notations. The general setup is shown in Fig. 16. For predicted region , precision is defined as . For ground truth region , recall is defined as . The overall precision (resp., recall rate) at depth is the area-weighted average precision (resp., recall rate) of all contours at the specified depth. The depth varies from 0 to 10m in steps of 1.25m. Precision and recall statistics are computed under two conditions: (i) not using slope information [prediction employs zero-order hold], (ii) using the gradient field from the proposed model to obtain displacement estimates, i.e., combining the translation with the local deformation component estimated during spatial correspondence and contour metamorphosis, respectively.

Fig. 17 demonstrates a consistent improvement in precision and recall rate when slope information is used in the model prediction. Significant gains in precision (12.7–19.2%) and recall (17.6–22.8%) are observed at depths of 5 to 10 meter. These statistics are summarized in Table 3.

| Depth (m) | Precision (%) | Recall (%) | ||||

|---|---|---|---|---|---|---|

| nil | model | gain | nil | model | gain | |

| 1.25 | 91.0 | 95.0 | +4.0 | 90.4 | 97.6 | +7.2 |

| 2.5 | 84.6 | 90.9 | +6.3 | 83.4 | 95.1 | +11.7 |

| 3.75 | 78.7 | 88.1 | +9.4 | 75.4 | 90.9 | +15.5 |

| 5.0 | 72.0 | 84.7 | +12.7 | 69.8 | 87.4 | +17.6 |

| 6.25 | 66.5 | 81.4 | +14.9 | 64.1 | 82.3 | +18.2 |

| 7.5 | 59.8 | 77.5 | +17.7 | 58.0 | 77.3 | +19.3 |

| 8.75 | 53.8 | 73.0 | +19.2 | 51.9 | 73.4 | +21.5 |

| 10.0 | 49.1 | 67.5 | +18.4 | 45.1 | 67.9 | +22.8 |

6 Discussion

These results demonstrate the feasibility of modeling subsurface boundary geometry using interdisciplinary techniques. In spite of the incomplete, sparse, causal and limited nature of the spatial patterns, computational physics (partial differential equations) and computer vision techniques can be combined to estimate directionality and produce a subsurface model that gives greater insight into the general structure of a geological domain. Modeling geological boundaries using differential geometry also offers other advantages. For ore reserve estimation, the volume of the triangulated surfaces may be computed easily from the vertices as outlined by Zhang [51]. Given coherent slope estimates, directional predictions made by the model may be used in adaptive sampling [52] to make drilling more targeted and cost-effective. Indeed, various forms of analysis performed on those samples (including chemical assays and material classification by experts) can further constrain or correct inaccurate predictions, when geological boundaries need to be updated or learned further afield. This may be appreciated as a form of active learning in an exploration/exploitation framework. The surface slopes can also serve as a decision support tool for multi-agent scheduling and planning activities that involve drilling and excavation.

Several observations about the BGM system should be noted. First, although the boundary segmentation process has been designed to extract contours from sparse spatial patterns, it can take as input any sensible closed contour generated by humans or computers, such as lithological boundary detection using rock face image segmentation [53] and 2D decision boundaries from Gaussian process implicit surfaces [54] or SVM. Second, for spatial correspondence, it is possible to have humans in the loop to provide interactive estimates and perform component alignment in difficult cases. Third, the contour metamorphosis step may be preceded by geometric operations such as affine transform. For instance, a rotation between the source and target components can be estimated in the Fourier domain using the technique described by Nagashima [28]. Fourth, the directional estimates generated by the model can feedback into the system and act as a prior during region association. Finally, when data becomes more abundant, machine learning techniques can potentially be used to estimate certain parameters relating to surface dynamics (e.g., the anticipated lateral movement for certain regions).

7 Conclusion

This paper presented a framework for modeling geological boundaries using differential geometry. The objective was to create subsurfaces from sparse spatial patterns and obtain coherent directional predictions along the boundary of the extracted surfaces. Under the model, the precision and recall rate for contour prediction improved on average by 15–20%. For boundary extraction, an edge map synthesis procedure was described. This converted sparse non-uniform data points to an image representation and facilitated the use of PDE-based techniques which operate on dense uniform 2D arrays. Gradient vector field and active contours were used to obtain regularized contours from unordered edge pixels. For spatial correspondence, region association and component alignment problems were examined, translation estimates were obtained using FFT cross-correlation under spatial constraints. Multi-source mult-target component relationships were explored using intersection graphs; strategies for obstacle avoidance were formulated from a resource allocation perspective. For contour metamorphosis, local surface deformation was modeled using PDE (described in Appendix B). Surface slopes were estimated using normalized particle trajectories. The branching phenomenon was described: branching occurs during contour morphing when there is a significant mismatch in curvature between the source and target boundary segments. A curvelet backtracking algorithm was proposed to recover information lost during particle advection and thus overcome tracking failure caused by branching. In essence, the overall solution dealt with sparsity (irregularity), spatial constraints, and branching-induced tracking failure.

In a Nature editorial, an opinion piece [55] characterized interdisciplinary research as a synthesis of different approaches into something unique. This captures the essence of this study which is to re-imagine how concepts from established areas can be synthesized and applied to a different field. This study had a narrow focus on estimating and exploiting the directionality of subsurface boundaries for more targeted drilling and exploration. Its uniqueness stems from applying differential geometry to sparse data. A different blend of technologies may be appropriate in a different setting where automation involves something different. Whilst research papers in engineering and the natural sciences have increasingly cited work outside their own disciplines [56], some areas remain unexplored. These areas can benefit from fresh perspectives and offer opportunities for meaningful collaboration [57]. It is hoped this work can encourage more people to engage in and apply their expertise to various emerging and non-traditional fields.

Appendixes

Appendixes are included as Supplementary Material. With open-access, this may be downloaded from https://ieeexplore.ieee.org/ielx7/6287639/8600701/8891690/supplementalmaterial.pdf?tp=&arnumber=8891690. The subject matters are outlined in Table 4.

| A | Theoretical treatment of active contours & gradient vector field |

|---|---|

| B | Foundations for contour metamorphosis |

Algorithms

Algorithms are also included as Supplementary Material. Table 5 lists the algorithms and referenced sections.

| Description | Ref. section | |

| 1 | Active contour evolution in GVF | Appendix A |

| 2 | Edge map synthesis | § 2.4 |

| 3 | Signed distance function | § 3.2.1 |

| 4 | Single-source single-target correspondence | § 3.2.1 |

| 5 | Port allocation to handle spatial contention | § 3.2.2 |

| 6 | Multi-source single-target correspondence | § 3.2.2 |

| 7 | Single-source multi-target correspondence | § 3.2.3 |

| 8 | Multi-source multi-target correspondence | § 3.2.4 |

| 9 | Unified spatial correspondence strategy | § 3.2.4 |

| 10 | Wavefront curvelets backtracking | § 4.3, Fig. 12 |

| 11 | Wavefront curvelets branching analysis | § 4.3, Fig. 13 |

| 12 | Assembling particle trajectories (merging | § 4.3, Fig. 13 |

| existing and new trajectories from curvelets) |

Acknowledgment

This work was supported by the Australian Centre for Field Robotics and the Rio Tinto Centre for Mine Automation. Professor Salah Sukkarieh and the reviewers are thanked for their suggestions and considered opinion which improved the quality of this manuscript.

References

- [1] Simon J Buckley, JA Howell, HD Enge, and TH Kurz. Terrestrial laser scanning in geology: data acquisition, processing and accuracy considerations. Journal of the Geological Society, 165(3):625–638, 2008.

- [2] Tobias Frank, Anne-Laure Tertois, and Jean-Laurent Mallet. 3D-reconstruction of complex geological interfaces from irregularly distributed and noisy point data. Computers & Geosciences, 33(7):932–943, 2007.

- [3] Ola Nilsson, David Breen, and Ken Museth. Surface reconstruction via contour metamorphosis: An Eulerian approach with Lagrangian particle tracking. In Visualization, 2005, pages 407–414. IEEE, 2005.

- [4] David Adalsteinsson and James A Sethian. A fast level set method for propagating interfaces. Journal of Computational Physics, 118(2):269–277, 1995.

- [5] Kevin B Sprague and Eric A De Kemp. Interpretive tools for 3-D structural geological modelling part II: Surface design from sparse spatial data. GeoInformatica, 9(1):5–32, 2005.

- [6] Helena Mitášová and Jaroslav Hofierka. Interpolation by regularized spline with tension: II. Application to terrain modeling and surface geometry analysis. Mathematical Geology, 25(6):657–669, 1993.

- [7] Olivier Kaufmann and Thierry Martin. 3D geological modelling from boreholes, cross-sections and geological maps, application over former natural gas storages in coal mines. Computers & Geosciences, 34(3):278–290, 2008.

- [8] Jean-Laurent Mallet. Discrete smooth interpolation in geometric modelling. Computer-aided design, 24(4):178–191, 1992.

- [9] Andrea Zanchi, Salvi Francesca, Zanchetta Stefano, Sterlacchini Simone, and Guerra Graziano. 3D reconstruction of complex geological bodies: Examples from the alps. Computers & Geosciences, 35(1):49–69, 2009.

- [10] G Caumon, P Collon-Drouaillet, C Le Carlier De Veslud, S Viseur, and J Sausse. Surface-based 3D modeling of geological structures. Mathematical Geosciences, 41(8):927–945, 2009.

- [11] Bert De Waele, Mathieu Lacorde, Michael Cunningham, and Benjamin Jupp. Understanding geology and structure: An essential part of mineral resource estimation. ASEG Extended Abstracts, 2018(1):1–8, 2018.

- [12] J.K. Dirstein, T. Rudge, R. Li, and A.J. Stanley. Insights from the automated extraction of surfaces from the Bunda 3D seismic survey. West Australian Basins Symposium, pages 18–21, August 2013.

- [13] Nat Voorhies, Scott Bowen, Tom Battenhouse, Rob Porges, and Tad Fox. From 2D cross-sections to 3D model: a toolset for integrated data management, modelling, and visualization. In Richard C Berg, Hazen AJ Russell, and L Harvey Thorleifson, editors, Three-Dimensional Geological Mapping: Workshop extended abstracts; Geological Society of America Annual Meeting. Illinois State Geological Survey, Open File Series 2009-4, Portland, Oregon, 2009.

- [14] Richard C Berg, Stephen J Mathers, Holger Kessler, and Donald A Keefer. Synopsis of current three-dimensional geological mapping and modeling in geological survey organizations. Ilinois State Geological Survey Circular, 578, 2011.

- [15] MD Lindsay, MW Jessell, L Ailleres, S Perrouty, E de Kemp, and PG Betts. Geodiversity: Exploration of 3D geological model space. Tectonophysics, 594:27–37, 2013.

- [16] Bingxian Lin, Liangchen Zhou, Guonian Lv, and A-Xing Zhu. 3D geological modelling based on 2D geological map. Annals of GIS, 23(2):117–129, 2017.

- [17] Michael Kass, Andrew Witkin, and Demetri Terzopoulos. Snakes: Active contour models. Int. Journal of Computer Vision, 1(4):321–331, 1988.

- [18] Vicent Caselles, Ron Kimmel, and Guillermo Sapiro. Geodesic active contours. International Journal of Computer Vision, 22(1):61–79, 1997.

- [19] Jim Ivins and John Porrill. Everything you always wanted to know about snakes (but were afraid to ask). Artificial Intelligence, 2000, 1995.

- [20] Chenyang Xu and Jerry L Prince. Snakes, shapes, and gradient vector flow. Image Processing, IEEE Transactions on, 7(3):359–369, 1998.

- [21] Berthold KP Horn and Brian G Schunck. Determining optical flow. Artificial Intelligence, 17(1-3):185–203, 1981.

- [22] David E. Breen and Ross T. Whitaker. A level-set approach for the metamorphosis of solid models. Visualization and Computer Graphics, IEEE Transactions on, 7(2):173–192, 2001.

- [23] Ken Museth, David E. Breen, Ross T. Whitaker, Sean Mauch, and David Johnson. Algorithms for interactive editing of level set models. In Computer Graphics Forum, volume 24, pages 821–841. Wiley Online Library, 2005.

- [24] Michael B Nielsen and Ken Museth. Dynamic tubular grid: An efficient data structure and algorithms for high resolution level sets. Journal of Scientific Computing, 26(3):261–299, 2006.

- [25] Marcelo Bertalmio, Guillermo Sapiro, and Gregory Randall. Morphing active contours. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 22(7):733–737, 2000.

- [26] Stanley Osher and James A Sethian. Fronts propagating with curvature-dependent speed: algorithms based on Hamilton-Jacobi formulations. Journal of Computational Physics, 79(1):12–49, 1988.

- [27] Danping Peng, Barry Merriman, Stanley Osher, Hongkai Zhao, and Myungjoo Kang. A PDE-based fast local level set method. Journal of Computational Physics, 155(2):410–438, 1999.

- [28] Sei Nagashima, Koichi Ito, Takafumi Aoki, Hideaki Ishii, and Koji Kobayashi. A high-accuracy rotation estimation algorithm based on 1D phase-only correlation. In Image Analysis and Recognition, pages 210–221. Springer, 2007.

- [29] Mehala Balamurali and Arman Melkumyan. t-SNE based visualisation and clustering of geological domain. In International Conference on Neural Information Processing, pages 565–572. Springer, 2016.

- [30] Roberto Ardon, Laurent D Cohen, and Anthony Yezzi. Fast surface segmentation guided by user input using implicit extension of minimal paths. Journal of Mathematical Imaging and Vision, 25(3):289–305, 2006.

- [31] Roberto Ardon, Laurent D Cohen, and Anthony Yezzi. A new implicit method for surface segmentation by minimal paths in 3d images. Applied Mathematics and Optimization, 55(2):127–144, 2007.

- [32] Ryan Goodfellow, Francisco Albor Consuegra, Roussos Dimitrakopoulos, and Tim Lloyd. Quantifying multi-element and volumetric uncertainty, Coleman McCreedy deposit, Ontario, Canada. Computers & Geosciences, 42:71–78, 2012.

- [33] Pejman Tahmasebi and Ardeshir Hezarkhani. A hybrid neural networks fuzzy logic genetic algorithm for grade estimation. Computers & Geosciences, 42:18–27, 2012.

- [34] Matthew J. Cracknell and Anya M. Reading. Geological mapping using remote sensing data: a comparison of five machine learning algorithms, their response to variations in the spatial distribution of training data and the use of explicit spatial information. Computers & Geosciences, 63:22–33, 2014.

- [35] Katherine L Silversides and Arman Melkumyan. Gaussian processes based fusion of multiple data sources for automatic identification of geological boundaries in mining. In International Conference on Neural Information Processing, pages 294–301. Springer, 2016.

- [36] Anuj Karpatne, Imme Ebert-Uphoff, Sai Ravela, Hassan Ali Babaie, and Vipin Kumar. Machine learning for the geosciences: Challenges and opportunities. IEEE Transactions on Knowledge and Data Engineering, 2018.

- [37] Bahram Jafrasteh, Nader Fathianpour, and Alberto Suárez. Comparison of machine learning methods for copper ore grade estimation. Computational Geosciences, 22(5):1371–1388, 2018.

- [38] L. Shapiro and G Stockman. Computer Vision. In Prentice Hall, chapter 3.4. 2002.

- [39] Kenji Suzuki, Isao Horiba, and Noboru Sugie. Linear-time connected-component labeling based on sequential local operations. Computer Vision and Image Understanding, 89(1):1–23, 2003.

- [40] Dieter Koller, Joseph Weber, and Jitendra Malik. Robust multiple car tracking with occlusion reasoning. Computer Vision – ECCV ’94, pages 189–196, 1994.

- [41] Michael Isard and Andrew Blake. Condensation — conditional density propagation for visual tracking. International Journal of Computer Vision, 29(1):5–28, 1998.

- [42] Christopher Rasmussen and Gregory D. Hager. Probabilistic data association methods for tracking complex visual objects. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(6):560–576, 2001.

- [43] Gavrill Tsechpenakis, Konstantinos Rapantzikos, Nicolas Tsapatsoulis, and Stefanos Kollias. A snake model for object tracking in natural sequences. Signal Processing: Image Communication, 19(3):219–238, 2004.

- [44] Oliver Williams, Andrew Blake, and Roberto Cipolla. Sparse bayesian learning for efficient visual tracking. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(8):1292–1304, 2005.

- [45] Björn Stenger, Arasanathan Thayananthan, Philip HS Torr, and Roberto Cipolla. Model-based hand tracking using a hierarchical bayesian filter. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(9):1372–1384, 2006.

- [46] Yogesh Rathi, Namrata Vaswani, Allen Tannenbaum, and Anthony Yezzi. Tracking deforming objects using particle filtering for geometric active contours. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(8):1470–1475, 2007.

- [47] Rafael C. Gonzalez and Richard E. Woods. Digital Image Processing. Prentice Hall, 2nd edition, 2002.

- [48] Pedro Felzenszwalb and Daniel Huttenlocher. Distance transforms of sampled functions. Theory of Computing, 8:415–428, 2012.

- [49] Jakub Tomek, Rebecca AB Burton, and Gil Bub. Ccoffinn: Automated wave tracking in cultured cardiac monolayers. Biophysical Journal, 111:1595–1599, (Supplementary Materials 1.3), 2016.

- [50] R Singh, I Pavlidis, and NP Papanikolopoulos. Recognition of 2D shapes through contour metamorphosis. In International Conference on Robotics and Automation, pages 1651–1656, 1997.

- [51] Cha Zhang and Tsuhan Chen. Efficient feature extraction for 2D/3D objects in mesh representation. In Image Processing, IEEE Proceedings International Conference on, volume 3, pages 935–938. IEEE, 2001.

- [52] Nasir Ahsan, Steven Scheding, Sildomar T. Monteiro, Raymond Leung, Charles McHugh, and Danielle Robinson. Adaptive sampling applied to blast-hole drilling in surface mining. International Journal of Rock Mechanics and Mining Sciences, 75:244–255, 2015.

- [53] Yathunanthan Vasuki, Eun-Jung Holden, Peter Kovesi, and Steven Micklethwaite. An interactive image segmentation method for lithological boundary detection: A rapid mapping tool for geologists. Computers & Geosciences, 100:27–40, 2017.

- [54] Oliver Williams and Andrew Fitzgibbon. Gaussian process implicit surfaces. Gaussian Proc. in Practice, pages 1–4, 2007.

- [55] Mind meld, in Interdisciplinary. Nature Editorial, 525(7569):289–290, 2015.

- [56] Richard Van Noorden. Interdisciplinary research by the numbers. Nature, 525(7569):306–307, 2015.

- [57] Rebekah R Brown, Ana Deletic, and Tony HF Wong. How to catalyse collaboration: turn the fraught flirtation between the social and biophysical sciences into fruitful partnerships with these five principles. Nature, 525(7569):315–318, 2015.